Abstract

Purpose

Patient-reported outcomes are increasingly used in routine outpatient cancer care to guide clinical decisions and enhance communication. Prior evidence suggests good patient compliance with reporting at scheduled clinic visits, but there is limited evidence about compliance with long-term longitudinal reporting between visits.

Patients and Methods

Patients receiving chemotherapy for lung, gynecologic, genitourinary, or breast cancer at a tertiary cancer center, with access to a home computer and prior e-mail experience, were asked to self-report seven symptomatic toxicities via the Web between visits. E-mail reminders were sent to participants weekly; patient-reported high-grade toxicities triggered e-mail alerts to nurses; printed reports were provided to oncologists at visits. A priori threshold criteria were set to determine whether this data collection approach merited further development, based on monthly compliance (≥ 75% of participants reporting at least once per month, on average) and weekly compliance (≥ 60% reporting at least once per week).

Results

Between September 2006 and November 2010, 286 patients were enrolled (64% were women; 88% were white; median age, 58 years). Mean follow-up was 34 weeks (range, 2 to 214). On average, monthly compliance was 83%, and weekly compliance was 62%, without attrition until the month before death. Greater compliance was associated with older age and higher education but not with performance status. Compliance was greatest during the initial 12 weeks. Symptomatic illness and technical problems were rarely barriers to compliance.

Conclusion

Monthly compliance with home Web reporting was high, but weekly compliance was lower, warranting strategies to enhance compliance in routine care settings.

INTRODUCTION

The use of patient-reported outcomes (PROs) in routine clinical practice has received recent interest from health care providers for its potential to improve symptom management, communication, and efficiency of clinical operations.1–6 Medical, surgical, and radiation oncology practices are integrating PROs into their clinical workflow, and several software vendors of electronic medical records are offering PRO assessment modules as optional packages.1 This movement follows the uptake of PRO use in cancer clinical trials in both the regulatory and cooperative group settings, in which PROs have become a mainstay of study design to capture the patient perspective.7–11

Recent interest in PROs in health care has evolved amid a broader milieu of patient-centeredness in the United States12,13 and is based on a growing body of evidence demonstrating that PROs improve quality of care and patient-provider communication.2,14–16 Moreover, PROs can flag symptoms that providers systematically miss or downgrade, such as nausea, neuropathy, fatigue, dyspnea, and anorexia; failure to note these symptoms may result in subsequent health problems that otherwise could have been prevented.17–20

Previous work has demonstrated that in industry-sponsored clinical trials, remote electronic self-reporting (ie, home reporting) is feasible and can have high levels of compliance.21–23 However, these trials were conducted in carefully selected and motivated patient populations with good baseline performance status, with close follow-up by data managers within a robust infrastructure. In contrast, routine-care patients include all comers—that is, patients of variable performance status who may have little or no interest in self-reporting. Moreover, outside the context of clinical trials, research staff are not in place to engage patients to self-report between visits.

The feasibility of patient self-reporting during routine chemotherapy care at clinic visits via waiting room tablet computers has also been reported, with mean compliance rates ranging from 75% to 85%, high patient satisfaction, and good usability of systems even among the non–Web avid (ie, non–Internet familiar), the elderly, and frail individuals.6,24,25 However, this work does not provide evidence about the feasibility of home reporting between visits. Between-visit reporting is of particular interest because it allows patients to be monitored when they are away from the clinic but experiencing symptoms that merit changes in management.3,26–31

Therefore, we sought to examine long-term patient compliance rates with self-reporting of seven common symptomatic toxicities associated with chemotherapy and to identify variables associated with greater or lesser compliance across multiple cancer types. Compliance rates for different frequencies of self-reporting (eg, at least once per month, per 2 weeks, and per week) were of interest to inform future implementation strategies, because different reporting frequencies may be appropriate in treatment contexts where more or less frequent symptom information is meaningful for decision making.

PATIENTS AND METHODS

Study Design and Patient Population

A preplanned feasibility study of self-reporting compliance was embedded within a larger, randomized controlled trial assessing the potential clinical benefits of PRO assessment in routine cancer care.32 The feasibility study comprised one of three trial arms, in which patients were assigned to regularly self-report a set of symptomatic toxicities from home between clinic visits and from clinic waiting areas on dates of visits via a Web survey composed of previously established PRO measures.6,25,33

Patients being treated at Memorial Sloan-Kettering Cancer Center (MSKCC) lung, gynecologic, breast, and genitourinary outpatient clinics were identified if they were starting a new chemotherapy regimen and were not currently in a clinical treatment trial. To be eligible for the feasibility study, patients had to be able to read and understand English and had to indicate Web avidity (ie, Internet familiarity) on a baseline questionnaire, defined as access to a computer and the Internet at home together with prior e-mail experience.

Eligible patients were approached in clinic waiting areas and invited to participate in this institutional review board–approved study. To be enrolled onto the study and analyzed, patients were required to complete informed consent and a baseline symptom survey.

Patients were discontinued from the study when they ceased systemic anticancer therapy at MSKCC or voluntarily disenrolled. Therefore, all patients were considered to be receiving active treatment during participation.

Symptom Tracking

Enrolled patients underwent a 15-minute training session on a tablet computer for a Web-based PRO software system called STAR (Symptom Tracking and Reporting), which has been described previously.33 Training included instructions on how to log into STAR and enter information about symptomatic toxicities. STAR was available only in English at the time of this study. Participants were told that although the STAR system would generate reports for clinicians to review at subsequent visits and would trigger automated e-mails to clinical staff for concerning or worsening symptoms, this information might not be reviewed in real time, so they should still contact clinical staff by telephone about concerning symptoms. This stipulation was requested by the institutional review board.

Patients were asked to self-report from home during weeks they did not have a clinic visit and were sent automated e-mails reminding them of the availability of the online questionnaire and providing a link to it. However, they could log in whenever and however often they wished, with no specific requirement for timing of reporting. During clinic visits, patients were asked to self-report using a clinic-based tablet computer or kiosk in waiting areas. Patients were offered no financial incentive to self-report.

Each time a patient logged into the STAR Web site, he or she was prompted to respond to seven plain-language questions about symptomatic toxicities corresponding to items in the National Cancer Institute Common Terminology Criteria for Adverse Events,34 namely pain, fatigue, nausea, vomiting, constipation, diarrhea, and appetite loss.19 Patients were also asked additional questions to assess health state (via the EuroQol EQ-5D) and patient-reported performance status.25,35,36 At each clinic visit, a STAR report showing patient responses was printed for review by the nurse and/or medical oncologist as part of standard care. In addition, real-time automatic e-mails were sent to nurses whenever a patient reported any grade 3 or 4 toxicity or a symptom that had worsened by ≥ two grade points since the prior visit.
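To make the alert logic concrete, the rule above (any grade 3 or 4 report, or worsening by two or more grade points since the prior visit) can be sketched as a small Python function. This is a hypothetical illustration, not STAR's implementation: the names `should_alert` and `symptoms_to_flag`, the dictionary layout, and the choice to skip the worsening check when no prior-visit grade exists are all our assumptions.

```python
from typing import Optional

# Hypothetical sketch of the nurse e-mail alert rule; not STAR's actual code.
# Grades follow the CTCAE scale (0 = none ... 4 = life-threatening).
ALERT_GRADE = 3          # any grade 3 or 4 report triggers an alert
WORSENING_THRESHOLD = 2  # worsening by >= 2 grade points since the prior visit


def should_alert(current_grade: int, grade_at_prior_visit: Optional[int]) -> bool:
    """Return True if one symptom report meets either alert criterion."""
    if current_grade >= ALERT_GRADE:
        return True
    # Assumption: with no prior-visit grade, only the absolute-grade rule applies.
    if grade_at_prior_visit is not None:
        return current_grade - grade_at_prior_visit >= WORSENING_THRESHOLD
    return False


def symptoms_to_flag(report: dict, prior_visit: dict) -> list:
    """List (alphabetically) the symptoms in one report that should trigger an alert."""
    return sorted(
        symptom
        for symptom, grade in report.items()
        if should_alert(grade, prior_visit.get(symptom))
    )


if __name__ == "__main__":
    prior = {"nausea": 0, "fatigue": 1, "pain": 1}
    today = {"nausea": 2, "fatigue": 1, "pain": 3}
    print(symptoms_to_flag(today, prior))  # ['nausea', 'pain']
```

Here nausea is flagged for worsening by two grades and pain for reaching grade 3, while unchanged fatigue is not.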

Patients were eligible to continue using the STAR system until their off-study date, which was defined as either the date of final chemotherapy treatment at MSKCC, the date of voluntary withdrawal from the study, or the date of overall trial closure. Baseline patient demographics, including age, sex, highest level of education completed, and race/ethnicity, were reported by the patient at time of enrollment. Stage of disease and Eastern Cooperative Oncology Group (ECOG) performance status37 were abstracted from the medical record.

Statistical Analysis

Longitudinal compliance rates were quantified as the proportion of patients who self-reported at least once during each period, using three period lengths: monthly, every 2 weeks, and weekly. For weekly compliance, for example, the proportion of patients reporting at least once was calculated for week 1 of enrollment, then week 2, week 3, and so on, and these proportions were then averaged over the observation period. The same analysis was repeated for every-2-week and monthly compliance rates.
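A minimal Python sketch of this period-based calculation follows. It assumes a hypothetical data layout (each patient as a pair: weeks enrolled, and the set of 0-based week indices containing at least one report), counts a patient toward a period only if enrolled for the whole period, and takes an unweighted mean of the per-period proportions; the text does not specify how partial periods or weighting were handled.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical layout: each patient is (weeks_enrolled, set of 0-based weeks with >= 1 report).


def period_compliance(patients, period_weeks):
    """Proportion of patients reporting at least once in each period.

    Periods are consecutive blocks of `period_weeks` weeks counted from each
    patient's own enrollment; a patient contributes to a period only if
    enrolled for the whole period (an assumption).
    """
    at_risk = defaultdict(int)
    reported = defaultdict(int)
    for weeks_enrolled, report_weeks in patients:
        for k in range(weeks_enrolled // period_weeks):
            window = range(k * period_weeks, (k + 1) * period_weeks)
            at_risk[k] += 1
            if any(w in report_weeks for w in window):
                reported[k] += 1
    return [reported[k] / at_risk[k] for k in sorted(at_risk)]


def average_compliance(patients, period_weeks):
    """Unweighted mean of the per-period proportions over the observation period."""
    return mean(period_compliance(patients, period_weeks))


patients = [(4, {0, 1, 2, 3}), (4, {0, 2}), (8, {5})]
for label, length in [("weekly", 1), ("every 2 weeks", 2), ("monthly", 4)]:
    print(label, round(average_compliance(patients, length), 3))
```

With `period_weeks` set to 1, 2, or 4, the same function yields weekly, every-2-week, and monthly (4-week) compliance. Because the mean is unweighted, late periods with few remaining patients count as much as early, fully populated ones; pooling (total periods with a report divided by total at-risk periods) is a reasonable alternative.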

A priori threshold criteria for compliance were set based on prior feasibility studies in related contexts6,25,31 to determine if this data collection approach merited further development. The feasibility threshold for monthly compliance was specified as 75% (ie, during each month of study enrollment, at least 75% of participants, on average, completed a self-report), and for weekly compliance, it was specified as 60%. Expert consensus determined that these thresholds were reasonable for this study, principally because these numbers represent compliance rates that conceivably could be improved with future interventions to support or encourage participation (to be based on findings in the study about barriers to self-reporting) and would indicate a data management strategy worth pursuing in future research. Separately, patient-level compliance was quantified, defined as the proportion of weeks during which a given patient self-reported at least once.
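Patient-level compliance and the two feasibility thresholds can likewise be sketched in Python. The threshold values come from the text; the function names, the data layout, and the reading that both thresholds must be met (rather than either one) are assumptions for illustration.

```python
# Hypothetical sketch: patient-level compliance and the prespecified feasibility check.
MONTHLY_THRESHOLD = 0.75  # from the text: >= 75% reporting at least once per month
WEEKLY_THRESHOLD = 0.60   # from the text: >= 60% reporting at least once per week


def patient_compliance(weeks_enrolled: int, report_weeks: set) -> float:
    """Fraction of a patient's enrolled weeks (0-based indices) with >= 1 report."""
    if weeks_enrolled <= 0:
        raise ValueError("weeks_enrolled must be positive")
    reported = sum(1 for w in range(weeks_enrolled) if w in report_weeks)
    return reported / weeks_enrolled


def meets_feasibility(avg_monthly: float, avg_weekly: float) -> bool:
    """Assumption: both cohort-level thresholds must be met."""
    return avg_monthly >= MONTHLY_THRESHOLD and avg_weekly >= WEEKLY_THRESHOLD


print(patient_compliance(10, {0, 1, 2, 5, 6, 9}))  # 0.6
print(meets_feasibility(0.83, 0.62))  # True
```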

A multivariate analysis was used to identify patient characteristics associated with higher and lower compliance rates. Baseline patient characteristics were examined in relationship to compliance via a population-averaged logit model using generalized estimating equations. Patient-level variables of interest included age, race, sex, education level, cancer type, cancer stage, baseline ECOG performance status, time since enrollment, and total duration of time in the study. Odds ratios (ORs) were calculated for each covariate. All reported P values were two sided, and P values < .05 were interpreted as being statistically significant.
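For orientation, a population-averaged logit model fitted by generalized estimating equations (GEE) can be written as below, where $Y_{it} = 1$ if patient $i$ self-reported at least once in week $t$, and $\mathbf{x}_{it}$ holds that patient's covariates for that week (including a leading 1 for the intercept and time-varying terms such as time since enrollment). The working correlation structure $\mathbf{R}(\alpha)$ is not reported in the text; an exchangeable structure is a common default but is an assumption here.

$$
\operatorname{logit} \mu_{it} = \mathbf{x}_{it}^{\top}\boldsymbol{\beta},
\qquad \mu_{it} = \Pr(Y_{it} = 1 \mid \mathbf{x}_{it}),
\qquad \mathrm{OR}_j = e^{\beta_j}
$$

$$
\sum_{i=1}^{N} \mathbf{D}_i^{\top} \mathbf{V}_i^{-1} \left( \mathbf{Y}_i - \boldsymbol{\mu}_i \right) = \mathbf{0},
\qquad \mathbf{D}_i = \frac{\partial \boldsymbol{\mu}_i}{\partial \boldsymbol{\beta}^{\top}},
\qquad \mathbf{V}_i = \mathbf{A}_i^{1/2} \mathbf{R}(\alpha) \mathbf{A}_i^{1/2}
$$

Here $\mathbf{A}_i = \operatorname{diag}\{\mu_{it}(1 - \mu_{it})\}$ is the diagonal matrix of binomial variances for patient $i$; exponentiating each fitted coefficient gives an adjusted OR of the kind reported in Table 3.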

Reasons for noncompliance were elicited from patients at subsequent logins or clinic visits and were summarized descriptively. In a separate analysis, compliance in the weeks before death was evaluated for all patients who died before the trial closure date to assess if proximity to death reduced compliance rates.

The size of the cohort was determined by the overall clinical trial, which was powered to detect differences in effectiveness between study arms. The a priori projected sample size for this feasibility substudy was 260 patients, which would provide a 95% CI of 69% to 80% around a point estimate of 75%, the predetermined monthly compliance threshold.
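As a rough check on the stated precision, the 95% interval for a proportion of 75% with n = 260 can be computed directly. The text does not name the interval method, so this Python sketch shows two standard choices: the Wilson score interval (about 69.4% to 79.9%) rounds to the reported 69% to 80%, whereas the simpler Wald interval (about 69.7% to 80.3%) is closer to 70% to 80%. Which one the investigators used is not stated.

```python
import math

# Sketch: precision of a compliance proportion for a given cohort size.
# The interval method used in the study is unknown; both are shown as assumptions.
Z_95 = 1.959963984540054  # two-sided 95% standard-normal quantile


def wald_interval(p: float, n: int, z: float = Z_95):
    """Normal-approximation (Wald) interval for a binomial proportion."""
    half = z * math.sqrt(p * (1 - p) / n)
    return p - half, p + half


def wilson_interval(p: float, n: int, z: float = Z_95):
    """Wilson score interval for a binomial proportion."""
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


for name, fn in [("Wald", wald_interval), ("Wilson", wilson_interval)]:
    lo, hi = fn(0.75, 260)
    print(f"{name}: {lo:.1%} to {hi:.1%}")
```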

RESULTS

Patient Characteristics

Between September 2006 and November 2010, 286 eligible patients were enrolled onto this feasibility study, of whom 64% were women and 88% were white; median age was 58 years (range, 30 to 85; Table 1). By design, all patients met the criteria for being Web avid (ie, Internet familiarity, based on self-reported access to a computer at home and prior e-mail experience). Patients were observed until the study closure date of November 21, 2011. Average enrollment duration was 34 weeks (range, 2 to 214), totaling 9,771 study weeks, and the median number of visits was 24 (range, two to 272). Fewer than 8% of patients (22 of 286) voluntarily withdrew from the study, and 30% (86 of 286) died during the study period. Overall, 381 patients were approached to participate (25% refusal rate); the primary reasons for refusal were “do not plan to follow-up here,” “not enough time,” “not interested in research,” and “enrolled in another study.”

Table 1.

Baseline Patient Demographics and Clinical Characteristics (N = 286)

| Characteristic | No. | % |
|---|---|---|
| Age, years | | |
| Median | 58 | |
| Range | 30-85 | |
| Female sex | 184 | 64 |
| Race | | |
| White | 253 | 88 |
| Black/African American | 20 | 7 |
| Asian/Far East/Indian | 12 | 4 |
| Native American | 1 | < 1 |
| Primary cancer site | | |
| Lung cancer | 69 | 24 |
| Gynecologic* | 67 | 23 |
| Genitourinary† | 78 | 27 |
| Breast | 72 | 25 |
| Educational attainment | | |
| High school or less | 45 | 16 |
| College | 144 | 51 |
| Professional degree | 96 | 34 |

*Gynecologic includes ovarian, cervical, uterine, and primary peritoneal cancers.

†Genitourinary includes prostate and bladder cancers.

Symptom Self-Reporting

During the 9,771 total study weeks, participants logged into self-report using STAR a total of 8,690 times (median, 17 logins per patient), for an average of 0.9 logins per patient per week. Of these self-reports, 71% were from home (ie, between visits), and 29% were from clinic.

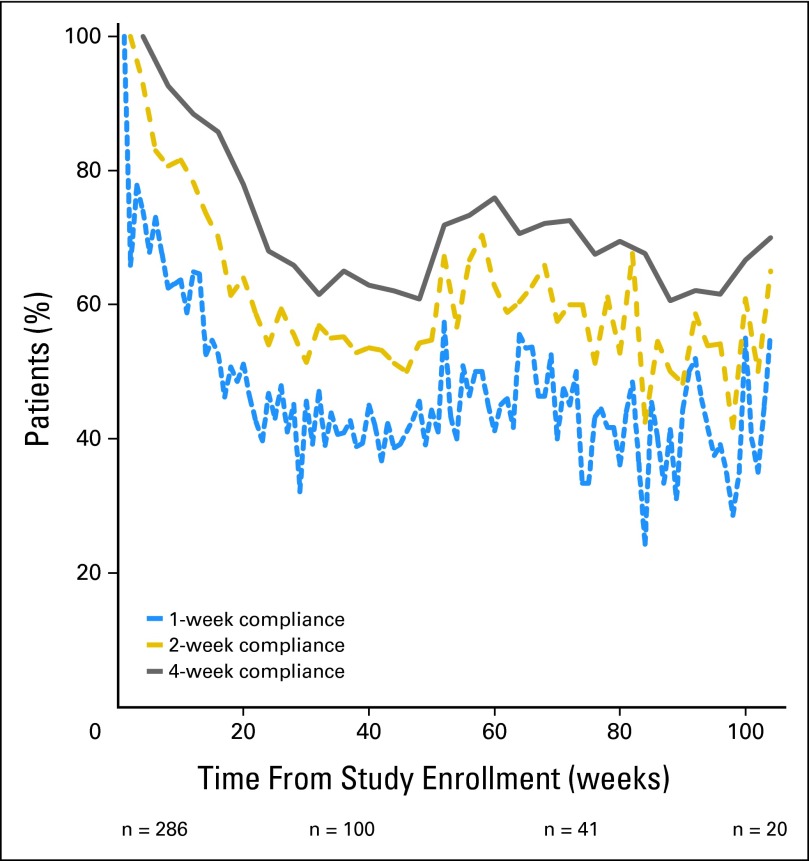

Figure 1 shows longitudinal compliance rates for the cohort using weekly, every-2-week, and every-4-week (monthly) time units. Average monthly compliance was 83% (standard deviation, 25%), whereas average weekly compliance was 62% (standard deviation, 30%), in both cases meeting the prespecified thresholds for feasibility. These analyses included reporting both from home and from clinic. Overall, compliance rates were highest during the initial 12 weeks of enrollment, after which they declined before stabilizing at approximately 24 weeks.

Fig 1.

Proportion of patients self-reporting symptomatic toxicities over time, measured using three different compliance definitions: at least one self-report per week, at least one self-report per 2-week period, or at least one self-report per month.

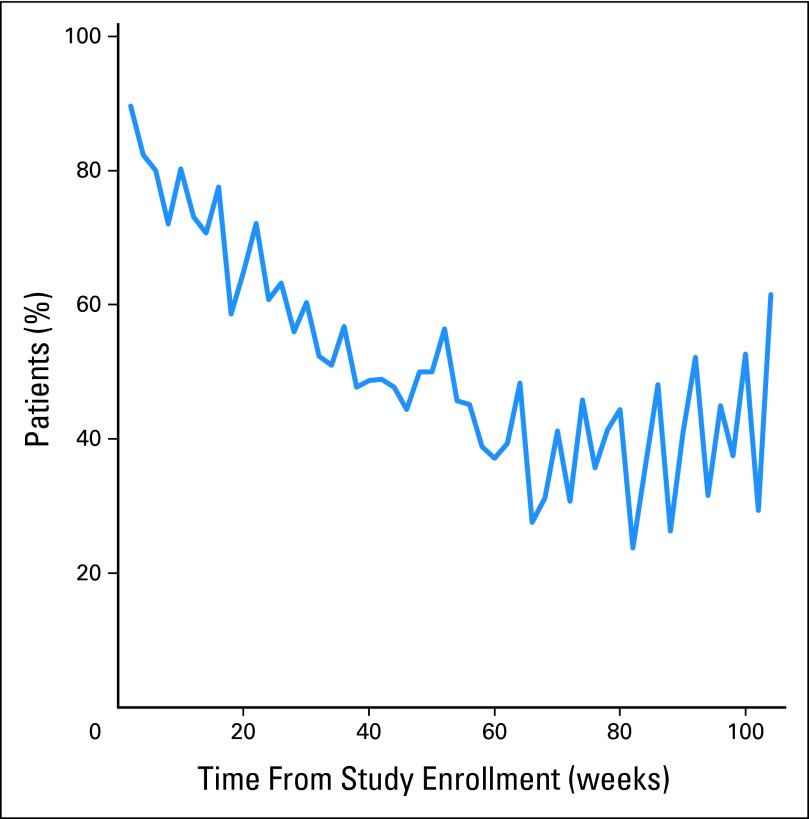

To evaluate patient compliance with reporting exclusively from home (ie, between visits), the weekly compliance analysis was repeated including only weeks during which patients did not have a clinic visit (Fig 2). The between-visit home compliance rates followed a pattern similar to the overall compliance rates, decreasing until a plateau at approximately 24 weeks.
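The home-only variant changes only which weeks count: weeks containing a clinic visit are dropped before the proportions are computed. A hypothetical Python sketch follows, assuming each patient is given as weeks enrolled, the set of 0-based weeks with a clinic visit, and the set of weeks with at least one report. Because clinic reporting took place at visits, any report in a week without a visit is treated as a home report.

```python
# Hypothetical layout per patient: (weeks_enrolled, visit_weeks, report_weeks),
# with 0-based week indices counted from enrollment.


def home_weekly_compliance(patients):
    """Return {week: proportion reporting}, counting only patients with no clinic visit that week."""
    at_risk, reported = {}, {}
    for weeks_enrolled, visit_weeks, report_weeks in patients:
        for w in range(weeks_enrolled):
            if w in visit_weeks:
                continue  # visit weeks are excluded from the home-only analysis
            at_risk[w] = at_risk.get(w, 0) + 1
            if w in report_weeks:
                reported[w] = reported.get(w, 0) + 1
    return {w: reported.get(w, 0) / at_risk[w] for w in sorted(at_risk)}


patients = [(3, {0}, {1, 2}), (3, {1}, {0})]
print(home_weekly_compliance(patients))  # {0: 1.0, 1: 1.0, 2: 0.5}
```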

Fig 2.

Proportion of patients self-reporting at least once per week from home during weeks when they did not have a clinic visit.

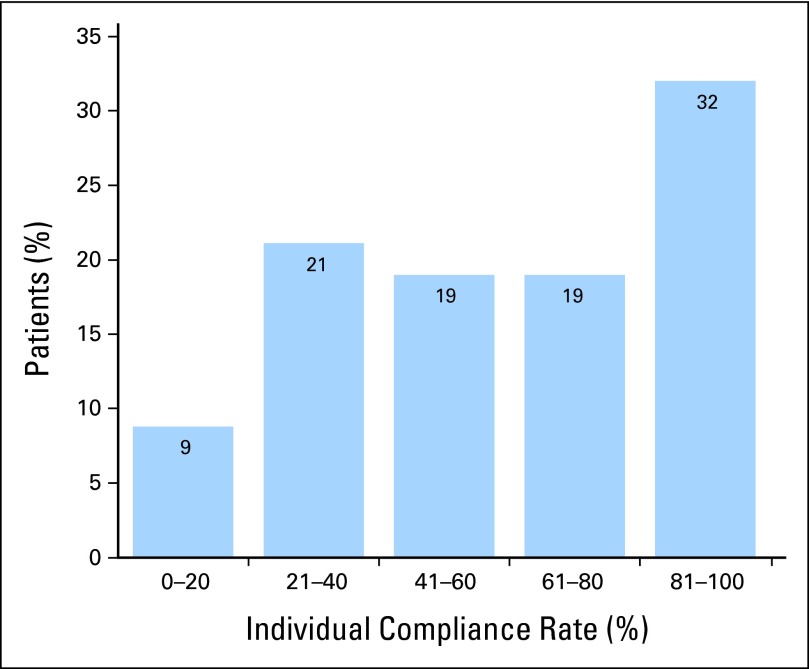

To determine the distribution of patient-level compliance, each participant's compliance over the duration of his or her enrollment was tabulated (Appendix Fig A1, online only); more than half of patients self-reported in > 60% of the weeks they were enrolled.

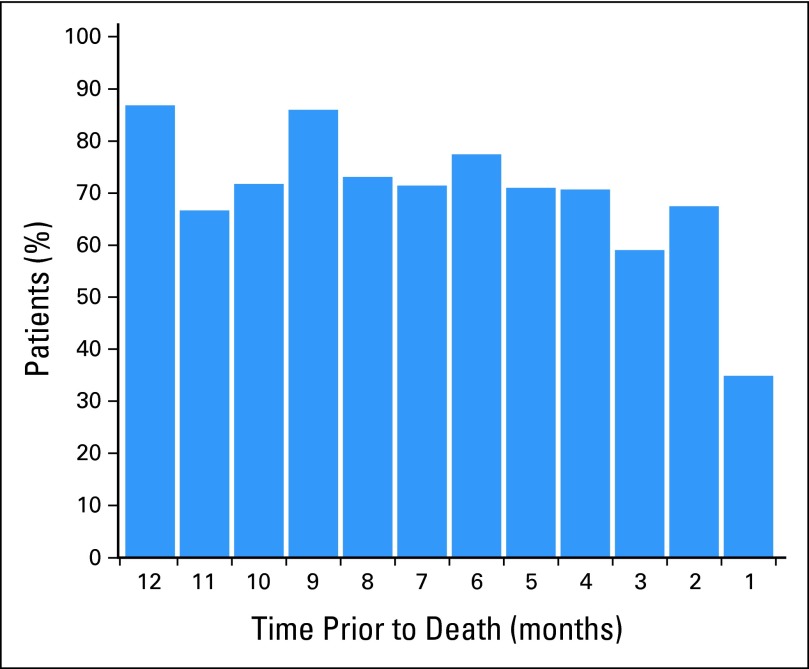

Figure 3 shows compliance rates in the months preceding death for the 86 patients who died during the study using the monthly compliance criterion. Patient compliance remained relatively stable until the last month before death, at which time it declined to 35%.
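Aligning each decedent's data to the date of death, rather than to enrollment, is what allows compliance to be read backward month by month. The following Python sketch is one way to do this under stated assumptions: days are numbered relative to death (day 0), a month is a 4-week window, and a patient contributes to a month only if on study for that entire window. The function name and data layout are hypothetical.

```python
# Hypothetical layout per decedent: (first_day, last_day, report_days), where all
# day numbers are relative to the date of death (day 0), so earlier days are negative.
DAYS_PER_MONTH = 28  # assumption: a "month" is a 4-week window


def compliance_before_death(decedents, months=12):
    """Return {m: proportion reporting}, where m = 1 is the final month before death."""
    result = {}
    for m in range(1, months + 1):
        start = -DAYS_PER_MONTH * m        # first day of the window (inclusive)
        end = -DAYS_PER_MONTH * (m - 1)    # end of the window (exclusive)
        at_risk = reported = 0
        for first_day, last_day, report_days in decedents:
            # Count the patient only if on study for the entire window.
            if first_day <= start and last_day >= end - 1:
                at_risk += 1
                if any(start <= d < end for d in report_days):
                    reported += 1
        if at_risk:
            result[m] = reported / at_risk
    return result


decedents = [(-100, -1, {-60, -20}), (-70, -1, {-40}), (-40, -10, {-35})]
print(compliance_before_death(decedents, months=4))  # {1: 0.5, 2: 0.5, 3: 1.0}
```

Requiring full-window enrollment keeps the denominator honest but means patients who went off study shortly before death drop out of the final months; a looser rule (any overlap with the window) would retain them at the cost of partially observed months.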

Fig 3.

Proportion of patients self-reporting at least once per month, shown for each month during the year before death.

Self-reported reasons for noncompliance are summarized in Table 2. Forgetting, being too busy, or not feeling like self-reporting accounted for 73% of the reasons given, whereas technical and illness-related barriers together accounted for 11%.

Table 2.

Primary Reasons Given by Patients for Missing a Self-Report

| Reason | % |
|---|---|
| I forgot | 42 |
| I was too busy | 21 |
| I didn't feel like it | 10 |
| It was inconvenient | 9 |
| I was too sick | 7 |
| I was feeling well | 5 |
| Technical problems | 4 |
| I didn't find it useful | 2 |

Associations of Variables With Compliance

Table 3 summarizes the associations between variables of interest and weekly self-reporting compliance. Older age at baseline, white race, and higher educational level were significantly associated with compliance, although the ORs were modest. Patients had more than three times the odds of being compliant during their first 12 weeks in the study than afterward (OR, 3.31; P < .001). Patients with lung and genitourinary cancers were more likely than patients with breast cancer to be compliant, which may reflect a sex effect seen in a separate model, in which men were more likely than women to be compliant (OR, 1.31; P < .001). Participants with later-stage cancers were also more likely to be compliant (OR, 1.19; P = .001). Baseline ECOG performance status was not associated with compliance.
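Because an odds ratio multiplies odds rather than probabilities, it can help to translate the estimates in Table 3 into more familiar terms. The Python sketch below assumes age was entered per year (the text does not state its units) and uses an arbitrary 50% baseline probability purely for illustration; neither value is study data.

```python
import math

# Illustrative only: mapping adjusted odds ratios onto more familiar quantities.
# The per-year age coding and the 50% baseline are hypothetical assumptions.


def scale_or(or_per_unit: float, units: float) -> float:
    """OR for a `units`-unit change in a covariate entered linearly on the logit scale."""
    return math.exp(units * math.log(or_per_unit))


def apply_or(baseline_p: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the baseline odds by `odds_ratio`."""
    odds = baseline_p / (1 - baseline_p) * odds_ratio
    return odds / (1 + odds)


print(round(scale_or(1.01, 10), 3))    # 1.105: a 10-year age difference
print(round(apply_or(0.50, 3.31), 3))  # 0.768: first 3 months vs later, from a 50% baseline
```

Under these assumptions a 10-year age difference corresponds to an OR of about 1.10, and an OR of 3.31 raises a 50% probability to roughly 77%, well short of a tripling.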

Table 3.

Multivariate Analysis of Associations of Variables With Patient Online Reporting Compliance

| Variable | Adjusted OR | P |
|---|---|---|
| Age | 1.01 | .029* |
| White race† | 1.38 | .001* |
| Higher education‡ | 1.20 | .028* |
| Breast clinic (reference clinic) | — | — |
| Gynecologic clinic | 1.07 | .437 |
| Lung clinic | 1.72 | < .001* |
| Genitourinary clinic | 1.46 | < .001* |
| Duration on study | 1.00 | .018* |
| First 3 months of data§ | 3.31 | < .001* |
| Baseline ECOG performance status | 0.95 | .468 |

Abbreviations: ECOG, Eastern Cooperative Oncology Group; OR, odds ratio.

*P < .05.

†Race was divided into two groups (white or nonwhite) because of a limited number of nonwhite patients in the study.

‡Education was divided into two groups: having a college degree or higher versus having less than a college degree.

§The first 3 months of data were compared with all data after that point.

Because this study began in 2006, when Web use and familiarity with technology were less prevalent than in later years of the study (patients were observed until late 2011), we assessed whether compliance rates differed between patients enrolled in earlier versus later years. Compliance rates did not differ significantly by year of enrollment.

DISCUSSION

This study suggests that long-term between-visit Web-based reporting of symptomatic toxicities is feasible for patients receiving cancer chemotherapy. Patients were observed for up to 4 years, with a mean follow-up of 8.5 months. Compliance rates were higher for longer timeframes of reporting (ie, monthly v weekly). The principal reasons given by patients for missing a report were that they forgot or were too busy. It was much less common for ill health to serve as a barrier (7% of missing data were attributed by patients to being too ill), and compliance rates were maintained until shortly before death, corroborating previously published results.25 Similarly, technical difficulties were rarely a barrier.

Prior work by our group and others has shown high rates of compliance for patient self-reporting in regulatory clinical trials.21–23 Similarly, good compliance has been seen in routine care contexts when patients self-report using tablet computers in waiting rooms—if staff members approach and remind them to log in.6,24,25 But there has been limited information about whether patients can be engaged to report their symptoms from home between visits on a regular basis, particularly over long periods of time. Information about this context of PRO use is increasingly important because various stakeholders consider using PROs for routine remote monitoring of symptoms, for longitudinal registries, and for use in quality assessment programs. This study therefore provides essential information for moving this field forward.

In particular, these findings offer insights toward developing future data collection strategies to increase compliance rates. Although the compliance rates in this study met the prespecified criteria for concluding feasibility and for meriting further development, there were still many patients who did not self-report during any given month or week. Because the principal reason for missing self-reports was that patients forgot (whereas impaired functional status or technical problems were not substantial barriers), better integration of PROs into regular workflow and improved reminder systems are likely to boost compliance rates in future work. Approaches that have been successful in increasing compliance in industry-sponsored clinical trials include reminder telephone calls from a central telephone bank as well as personalized automated reminder e-mails, telephone calls, or letters with the name of the patient's nurse or physician encouraging participation.21 Because this study was not a part of regular clinical workflow but rather was an add-on study, it did not have the feeling of being an expected part of care or clinical operations either to staff or patients.

Offering patients a menu of interfaces (ie, multiple modes of questionnaire administration), such as an automated telephone system (eg, interactive voice response system), handheld device, and computer, may also improve accessibility and compliance. Many contemporary electronic platforms outside of health care are accessible via multiple modes (eg, online banking).

Limitations of this study include its conduct at a single urban tertiary cancer center with a predominantly white, educated patient population who could read and understand English. By design, only patients with home Web access and e-mail experience were included (over the entire duration of this study, 27% of approached patients, approximately one in four, were deemed ineligible because they did not have home Internet access). This rarefied sample may have led to overestimates of the compliance rates achievable more broadly. Nonetheless, this study provides an initial understanding of challenges faced when translating PRO approaches from the regulatory context into routine care settings. This study started in 2006, when electronic communications were less developed than they are currently. More patients are now connected electronically (including with their health providers),38,39 and hence, the applicability of this work may be greater, and current eligibility and compliance may be superior to those observed in this study.

Although this study focused on remote self-reporting for the purposes of symptom monitoring and management, the strategies used here have broader applications. There is growing interest in the use of PROs for evaluating quality of care as well as in comparative effectiveness research. In both settings, patients who are not highly selected are asked to report their experiences with clinical care and treatment, and the barriers faced in this study are likely to arise there as well. Nonrandom missing data, particularly from the hardest-to-reach individuals, threaten the validity of findings in these contexts.

It has also been suggested that severe toxicities or functional status impairment may hinder self-reporting, but in this study, ECOG performance status was not associated with compliance, and patients rarely attributed noncompliance to being too sick. Nonetheless, compliance rates did decline in the month before death, suggesting reasons related to disease progression that may affect willingness or ability to self-report. Future research on barriers and strategies for minimizing missing PRO data in outpatient nontrial contexts is warranted.

In conclusion, this analysis is intended to provide insights for further integration of between-visit symptom reporting in routine care settings, leading to more patient-centered treatment, improved patient-provider communication, enhanced quality of care, and increased patient satisfaction.

Appendix

Fig A1.

Histogram of individual patient compliance. Individual compliance is defined for each patient as the number of weeks with at least one report divided by the total number of weeks enrolled onto the study.

Footnotes

Supported by National Cancer Institute Grant No. 5K07CA124851.

Authors' disclosures of potential conflicts of interest and author contributions are found at the end of this article.

AUTHORS' DISCLOSURES OF POTENTIAL CONFLICTS OF INTEREST

The author(s) indicated no potential conflicts of interest.

AUTHOR CONTRIBUTIONS

Conception and design: Mark G. Kris, Deborah Schrag, Ethan Basch

Administrative support: Lauren J. Rogak, Laura Sit, Allison Barz

Provision of study materials or patients: Mark G. Kris, Clifford A. Hudis, Howard I. Scher, Paul Sabbatini, Ethan Basch

Collection and assembly of data: Antonia V. Bennett, Lauren J. Rogak, Laura Sit, Allison Barz, Mark G. Kris, Clifford A. Hudis, Howard I. Scher, Paul Sabbatini, Deborah Schrag, Ethan Basch

Data analysis and interpretation: Timothy J. Judson, Antonia V. Bennett, Mark G. Kris, Clifford A. Hudis, Paul Sabbatini, Deborah Schrag, Ethan Basch

Manuscript writing: All authors

Final approval of manuscript: All authors

REFERENCES

1. Bennett AV, Jensen RE, Basch E. Electronic patient-reported outcome systems in oncology clinical practice. CA Cancer J Clin. 2012;62:337–347. doi: 10.3322/caac.21150.

2. Velikova G, Booth L, Smith AB, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. J Clin Oncol. 2004;22:714–724. doi: 10.1200/JCO.2004.06.078.

3. Cleeland CS, Wang XS, Shi Q, et al. Automated symptom alerts reduce postoperative symptom severity after cancer surgery: A randomized controlled clinical trial. J Clin Oncol. 2011;29:994–1000. doi: 10.1200/JCO.2010.29.8315.

4. Detmar SB, Muller MJ, Schornagel JH, et al. Health-related quality-of-life assessments and patient-physician communication: A randomized controlled trial. JAMA. 2002;288:3027–3034. doi: 10.1001/jama.288.23.3027.

5. Basch E. The missing voice of patients in drug-safety reporting. N Engl J Med. 2010;362:865–869. doi: 10.1056/NEJMp0911494.

6. Basch E, Artz D, Dulko D, et al. Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol. 2005;23:3552–3561. doi: 10.1200/JCO.2005.04.275.

7. Minasian LM, O'Mara AM, Reeve BB, et al. Health-related quality of life and symptom management research sponsored by the National Cancer Institute. J Clin Oncol. 2007;25:5128–5132. doi: 10.1200/JCO.2007.12.6672.

8. Bruner DW, Bryan CJ, Aaronson N, et al. Issues and challenges with integrating patient-reported outcomes in clinical trials supported by the National Cancer Institute-sponsored clinical trials networks. J Clin Oncol. 2007;25:5051–5057. doi: 10.1200/JCO.2007.11.3324.

9. Bruner DW. Should patient-reported outcomes be mandatory for toxicity reporting in cancer clinical trials? J Clin Oncol. 2007;25:5345–5347. doi: 10.1200/JCO.2007.13.3330.

10. US Food and Drug Administration: Patient-Reported Outcome Measures: Use in Medical Product Development to Support Labeling Claims. http://www.fda.gov/downloads/Drugs/Guidances/UCM193282.pdf.

11. Lipscomb J, Reeve BB, Clauser SB, et al. Patient-reported outcomes assessment in cancer trials: Taking stock, moving forward. J Clin Oncol. 2007;25:5133–5140. doi: 10.1200/JCO.2007.12.4644.

12. Methodology Committee of the Patient-Centered Outcomes Research Institute (PCORI). Methodological standards and patient-centeredness in comparative effectiveness research: The PCORI perspective. JAMA. 2012;307:1636–1640.

13. Selby JV, Beal AC, Frank L. The Patient-Centered Outcomes Research Institute (PCORI) national priorities for research and initial research agenda. JAMA. 2012;307:1583–1584. doi: 10.1001/jama.2012.500.

14. Eng TR, Gustafson DH, editors. Wired for Health and Well-Being: The Emergence of Interactive Health Communication. Washington, DC: US Department of Health and Human Services, US Government Printing Office; 1999.

15. Fowler FJ, Jr, Barry MJ, Lu-Yao G, et al. Patient-reported complications and follow-up treatment after radical prostatectomy: The national Medicare experience: 1988-1990 (updated June 1993). Urology. 1993;42:622–629. doi: 10.1016/0090-4295(93)90524-e.

16. Detmar SB, Muller MJ, Schornagel JH, et al. Health-related quality-of-life assessments and patient-physician communication: A randomized controlled trial. JAMA. 2002;288:3027–3034. doi: 10.1001/jama.288.23.3027.

17. Harrison PL, Pope JE, Coberley CR, et al. Evaluation of the relationship between individual well-being and future health care utilization and cost. Popul Health Manag. 2012;15:325–330. doi: 10.1089/pop.2011.0089.

18. Weingart SN, Gandhi TK, Seger AC, et al. Patient-reported medication symptoms in primary care. Arch Intern Med. 2005;165:234–240. doi: 10.1001/archinte.165.2.234.

19. Basch E, Jia X, Heller G, et al. Adverse symptom event reporting by patients vs clinicians: Relationships with clinical outcomes. J Natl Cancer Inst. 2009;101:1624–1632. doi: 10.1093/jnci/djp386.

20. Basch E, Iasonos A, McDonough T, et al. Patient versus clinician symptom reporting using the National Cancer Institute Common Terminology Criteria for Adverse Events: Results of a questionnaire-based study. Lancet Oncol. 2006;7:903–909. doi: 10.1016/S1470-2045(06)70910-X.

21. Meacham R, McEngart D, O'Gorman H, et al. Use and compliance with electronic patient reported outcomes within clinical drug trials. Presented at the 13th Annual International Meeting of the International Society for Pharmacoeconomics and Outcomes Research; May 3-7, 2008; Toronto, Ontario, Canada.

22. Verstovsek S, Mesa RA, Gotlib J, et al. A double-blind, placebo-controlled trial of ruxolitinib for myelofibrosis. N Engl J Med. 2012;366:799–807. doi: 10.1056/NEJMoa1110557.

23. Harrison C, Kiladjian JJ, Al-Ali HK, et al. JAK inhibition with ruxolitinib versus best available therapy for myelofibrosis. N Engl J Med. 2012;366:787–798. doi: 10.1056/NEJMoa1110556.

24. Abernethy AP, Herndon JE, 2nd, Wheeler JL, et al. Feasibility and acceptability to patients of a longitudinal system for evaluating cancer-related symptoms and quality of life: Pilot study of an e/Tablet data-collection system in academic oncology. J Pain Symptom Manage. 2009;37:1027–1038. doi: 10.1016/j.jpainsymman.2008.07.011.

25. Basch E, Iasonos A, Barz A, et al. Long-term toxicity monitoring via electronic patient-reported outcomes in patients receiving chemotherapy. J Clin Oncol. 2007;25:5374–5380. doi: 10.1200/JCO.2007.11.2243.

26. McCann L, Maguire R, Miller M, et al. Patients' perceptions and experiences of using a mobile phone-based advanced symptom management system (ASyMS) to monitor and manage chemotherapy related toxicity. Eur J Cancer Care (Engl). 2009;18:156–164. doi: 10.1111/j.1365-2354.2008.00938.x.

27. Dy SM, Roy J, Ott GE, et al. Tell Us: A Web-based tool for improving communication among patients, families, and providers in hospice and palliative care through systematic data specification, collection, and use. J Pain Symptom Manage. 2011;42:526–534. doi: 10.1016/j.jpainsymman.2010.12.006.

28. Gibson F, Aldiss S, Taylor RM, et al. Involving health professionals in the development of an advanced symptom management system for young people: The ASyMS-YG study. Eur J Oncol Nurs. 2009;13:187–192. doi: 10.1016/j.ejon.2009.03.004.

29. Maguire R, McCann L, Miller M, et al. Nurse's perceptions and experiences of using of a mobile-phone-based Advanced Symptom Management System (ASyMS) to monitor and manage chemotherapy-related toxicity. Eur J Oncol Nurs. 2008;12:380–386. doi: 10.1016/j.ejon.2008.04.007.

30. McCall K, Keen J, Farrer K, et al. Perceptions of the use of a remote monitoring system in patients receiving palliative care at home. Int J Palliat Nurs. 2008;14:426–431. doi: 10.12968/ijpn.2008.14.9.31121.

31. Snyder CF, Jensen R, Courtin SO, et al. PatientViewpoint: A website for patient-reported outcomes assessment. Qual Life Res. 2009;18:793–800. doi: 10.1007/s11136-009-9497-8.

32. Memorial Sloan-Kettering Cancer Center: Internet-Based System for Cancer Patients to Self-Report Toxicity. http://clinicaltrials.gov/ct2/show/NCT00578006.

33. Basch E, Artz D, Iasonos A, et al. Evaluation of an online platform for cancer patient self-reporting of chemotherapy toxicities. J Am Med Inform Assoc. 2007;14:264–268. doi: 10.1197/jamia.M2177.

34. National Cancer Institute: Common Terminology Criteria for Adverse Events, version 4.03. http://evs.nci.nih.gov/ftp1/CTCAE/CTCAE_4.03_2010-06-14_QuickReference_8.5x11.pdf.

35. Hauser K, Walsh D. Visual analogue scales and assessment of quality of life in cancer. J Support Oncol. 2008;6:277–282.

36. Rabin R, de Charro F. EQ-5D: A measure of health status from the EuroQol Group. Ann Med. 2001;33:337–343. doi: 10.3109/07853890109002087.

37. Buccheri G, Ferrigno D, Tamburini M. Karnofsky and ECOG performance status scoring in lung cancer: A prospective, longitudinal study of 536 patients from a single institution. Eur J Cancer. 1996;32A:1135–1141. doi: 10.1016/0959-8049(95)00664-8.

38. United States Census Bureau: Computer and Internet Use in the United States. 2003. http://www.census.gov/prod/2005pubs/p23-208.pdf.

39. United States Census Bureau: Computer and Internet Use in the United States. 2010. http://www.census.gov/hhes/computer/publications/2010.html.