Abstract

The notion of action simulation refers to the ability to re-enact foreign actions (i.e., actions observed in other individuals). Simulating others' actions implies a mirroring of their activities, based on one's own sensorimotor competencies. Here, we discuss theoretical and experimental approaches to action simulation and the study of its representational underpinnings. One focus of our discussion is on the timing of internal simulation and its relation to the timing of external action, and a paradigm that requires participants to predict the future course of actions that are temporarily occluded from view. We address transitions between perceptual mechanisms (referring to action representation before and after occlusion) and simulation mechanisms (referring to action representation during occlusion). Findings suggest that action simulation runs in real-time; acting on newly created action representations rather than relying on continuous visual extrapolations. A further focus of our discussion pertains to the functional characteristics of the mechanisms involved in predicting other people's actions. We propose that two processes are engaged, dynamic updating and static matching, which may draw on both semantic and motor information. In a concluding section, we discuss these findings in the context of broader theoretical issues related to action and event representation, arguing that a detailed functional analysis of action simulation in cognitive, neural, and computational terms may help to further advance our understanding of action cognition and motor control.

Keywords: action simulation, internal forward models, occlusion, point-light action, static matching, predictive coding

Representation of occluded action

In recent years, the concept of simulation has flourished within various fields of psychological research, ranging from agency (Ruby and Decety, 2001), action perception (Rizzolatti et al., 1996; Blakemore and Decety, 2001; Mukamel et al., 2010), imitation (Brass et al., 2001; Buccino et al., 2004), mind reading (Gordon, 1996, 2001; Goldman, 2005, 2006), and empathy (Chartrand and Bargh, 1999; Gallese et al., 2004) to the study of clinical issues like schizophrenia (Enticott et al., 2008). Across these domains and the majority of studies, the term simulation concordantly expresses the idea that humans possess non-conceptual and direct ways of understanding others' thoughts, feelings, intentions and actions by mirroring or re-enacting their mental states and physical activities (Blakemore and Decety, 2001; Rizzolatti et al., 2006).

The present paper focuses on action simulation. It aims to discuss theoretical and experimental approaches to action simulation and its cognitive underpinnings, broadly understood as internal representations that parallel external action events, like observing a friend making a cup of coffee or a couple dancing together. From a functional point of view, internal simulations of physical actions may improve our appraisal of actions that we plan to perform in collaboration with others and that require us to act in response to and in anticipation of the actions of others (e.g., Sebanz and Knoblich, 2009; Doerrfeld et al., 2012; Manera et al., 2013). A recent study illustrative of this demonstrated that judgments of the weight of an object varied according to whether the participants planned to lift the object by themselves or whether they planned to lift it together with a co-actor who was either healthy or injured (Doerrfeld et al., 2012).

Here, we use the term simulation to refer to the mental operations involved in internally representing actions during so-called visual action occlusions. In everyday life, when we watch other people moving around us, it often happens that they disappear from sight for a moment. However, in these situations, behaviours and physical actions observed immediately prior to occlusion do not just stop. Based on what we have seen before, we are usually capable of internally substituting (simulating) the invisible parts of an action and rendering quite precise predictions about its future course (i.e., what the agent will do, and when his/her action will take place).

Neurophysiological evidence in monkeys demonstrates that neurons continue to fire for some time in response to specific actions even after these actions disappear behind an occluding object (Umilta et al., 2001; Jellema and Perrett, 2003). In human experiments using action occlusion paradigms, participants typically watch familiar actions that are briefly occluded from view and then continued. A typical task is then for participants to indicate if the re-appearing action part (after occlusion) is an accurate continuation of the perceived action (seen prior to occlusion), or if it has changed in spatial angle (e.g., Graf et al., 2007; Springer et al., 2011) or has jumped in time (e.g., Stadler et al., 2012b). High accuracy on this task requires a precise internal representation (simulation) of the occluded action part, and factors that influence performance can then be measured. For instance, Stadler et al. (2012b) examined the accuracy of action simulation when the observed actors moved according to human-like vs. artificial motion profiles whose kinematics had been changed according to a non-human, constant velocity. Under conditions with temporary occlusions, observers were clearly more able to predict human-like actions compared to non-human actions (Stadler et al., 2012b), highlighting the fundamental susceptibility of the human action simulation system (Parkinson et al., 2012; Saygin and Stadler, 2012; see Gowen and Poliakoff, 2012, for a review).

Since temporary occlusions require switching back and forth between externally guided perception and internal representations (simulations), action occlusion paradigms, further, allow the study of these two phases separately and in terms of their interrelations. In an illustrative study by Prinz and Rapinett (2008), the participants observed a human hand transporting an object. After a moment, the hand with the object was briefly occluded from view. Participants were required to make a judgment about the time that they thought the transporting hand with the object would reappear from behind the occluding object (Figure 5). Results indicated a substantial positive time error (i.e., lag effect), meaning that the continuation of the action after occlusion was judged to be “just in time” when the point of reappearance was slightly shifted ahead (by 20–100 ms). This finding provided a first insight into the timing and nature of internal action representations (simulations) during occlusions, suggesting that the mental operations called up during occlusions involve the generation of novel action representations rather than just pure extrapolation of perceived movement trajectories (Prinz and Rapinett, 2008).

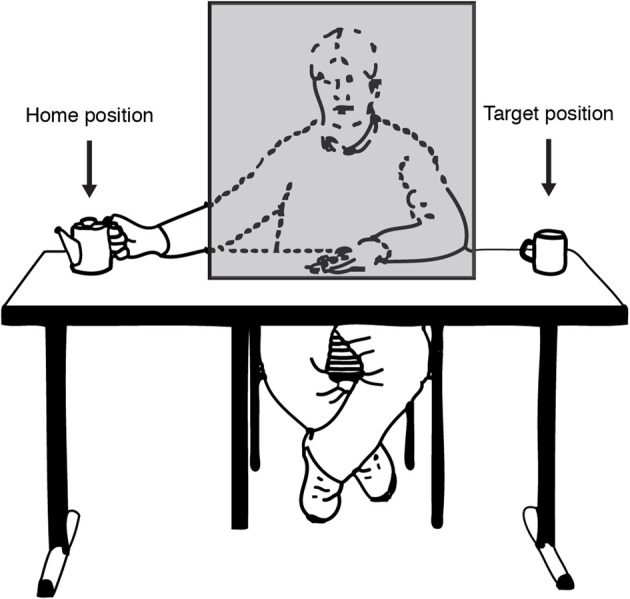

Figure 5.

Illustration of the experimental setting as seen through the eyes of the participant. On each trial the actor (sitting behind an occluding object) transported a teapot from a home position to a target position. Figure adapted from Prinz and Rapinett (2008) (p. 226). Copyright by IOS Press. Adapted with permission.

The authors of this study put forward that these issues are based on the assumption that unlike action perception, which naturally draws on external resources derived from actual stimulation, action simulation draws on internal resources derived from stored knowledge (cf. Prinz and Rapinett, 2008). In the following section, we focus on the temporal characteristics of action simulation. Based on the experimental evidence from different occlusion tasks, we propose that action simulation engages internal online processes operating in real-time, which act on newly created action representations rather than relying on continuous visual extrapolations of observed movement trajectories.

In Section Representational Mechanisms, we discuss another strand of experimental studies that have explored the procedural and representational characteristics of the processes engaged for solving action occlusion tasks. Two major findings emerge. Firstly, predicting occluded actions may engage two distinct processes: dynamic simulation and static matching. Both processes do not by themselves speak to the representational format in which they occur (e.g., simulation/matching in the motor and/or visual domain). Secondly, two different kinds of representational domains may be involved: sensorimotor processes (those involved in an observers' own physical activity) and semantic processes (those involved in understanding action-related verbal contents). In a concluding section, we propose that the concept of internal action simulation can be related to a predictive coding account of motor control (e.g., Kilner et al., 2007), in correspondence with the broader notion that humans can use their motor system to simulate and predict others' actions (Grèzes and Decety, 2001; Jeannerod, 2001). In line with this notion, action simulation may also be linked to embodied views of language, holding that processing verbal and conceptual action-related information is strongly linked to (and may even rely on) information processing in sensory and motor domains (e.g., Barsalou, 2003, 2008; Zwaan, 2004; Pulvermüller, 2005, 2008; Glenberg, 2008; Mahon and Caramazza, 2008, 2009).

Time course

Simulation in real time: the occluder paradigm

The first research into real-time action simulation used what we refer to as the occluder paradigm, first developed by Graf et al. (2007). This paradigm has been used, with some novel variations, in subsequent research on action simulation (Prinz and Rapinett, 2008; Parkinson et al., 2011; Sparenberg et al., 2012). The occluder paradigm is based on the hypothesis that when we observe a human moving, who is then occluded from view—perhaps he or she disappears behind a fence—an internal action simulation runs in real-time predicting the on-going motion. Then the person reappears in view at a point in the motion that either matches or does not match that internal real-time simulation. In this way, the spatiotemporal accuracy of the action simulation and the ability of people to use action simulation to aid their perception and prediction of human movement can be tested.

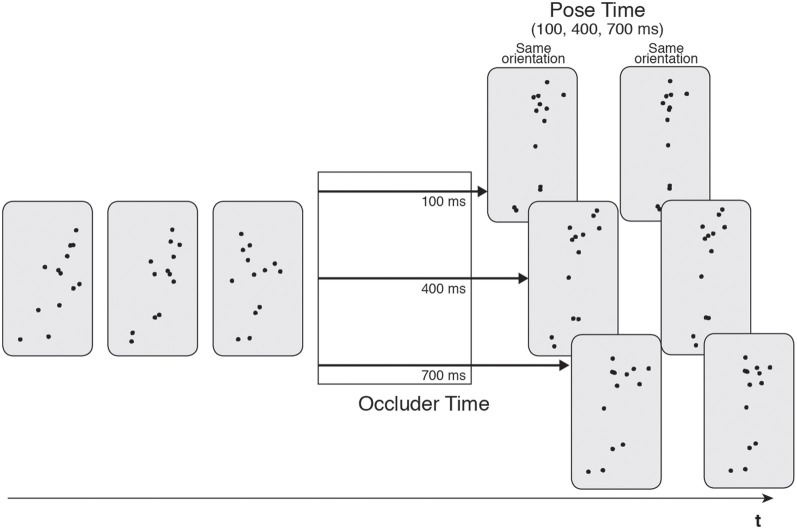

The original occluder paradigm (Graf et al., 2007) used representations of human motion known as point-light actors (PLAs; Johansson, 1973), which convey motion via a group of moving dots that track the motion of the major joints of the human body. These stimuli have been widely used to examine human movement processing (Johansson, 1973, 1976; Cutting et al., 1988). Graf et al.'s (2007) original studies used PLA stimuli of non-cyclical human motions, such as performing a basketball shot or hitting a tennis ball. The PLA was presented to the participant for a short period of 2–4 s before being occluded from view for a fixed amount of time (occluder time; 100, 400 or 700 ms, see Figure 1). Following this, a static test posture of the action was presented that was either rotated around the actor's vertical axis, as if he had suddenly spun to the left or the right, or in the correct orientation, as if he had continued smoothly on in his motion. The participants' task was to judge whether this rotation had occurred or not (yes/no response). Crucially, and independent of the spatial orientation, the test posture was either a congruent or incongruent continuation of the motion. That is, if the test posture was congruent, it was taken from the point in the motion that the actor would have reached if he had continued for the exact duration of the occluder. In this condition, the test posture should match the state of the internal real-time simulation. In the incongruent conditions, the test posture was from a point of the motion that was too early or too late with respect to the exact occlusion period. In this case, the test posture would not match the current state of the real-time action simulation. The occlusion period is referred to as the pose time (which, again, can take three values, 100, 400 or 700 ms, see Figure 1).

Figure 1.

Illustration of the stimuli utilized in Graf et al. (2007). Point light actions were presented and then occluded for a variable time (100, 400, or 700 ms). Occlusion was followed by a test pose that was rotated or in the correct orientation. Pose time was also varied (100, 400, or 700 ms).

The hypothesis was that if the test posture matched the current state of the action simulation, then the orientation judgment would be easier and more successful. This was indeed the case: error rates for congruent continuations were less than those for incongruent continuations across different occluded durations, a finding that was demonstrated across a number of different types of human motions. This was the first evidence that the real-time action simulation existed and that it could be tested by utilizing its beneficial effects in visual judgment tasks. Graf et al. (2007) conducted a further experiment using PLAs that were inverted on their vertical axis; it is well-known that it is difficult to perceive human motion under these conditions. The results showed that there was no judgment-benefit for the congruent test postures compared to the incongruent ones. This suggested that the effects seen in the earlier studies were specific to human motion itself and not some more generalized visual predictive system. That is, the real-time simulation is specifically concerned with predicting coherent examples of human actions.

Benefits of real-time simulation

Having described the occluder paradigm for testing action simulation, we will now review recent research that has used and further developed it to measure various aspects of action simulation, such as its precise time course, its susceptibility to online change, and its role in the direct visual perception of human motion.

Detection thresholds

First, we consider the advantageous role that real-time action simulation has in the visual processing of human motion. That is, can we show that the action simulation can provide a here-and-now, or predictive, benefit to visual perception? Imagine watching a football match on television. The TV is an old analogue set and is fed by a radio antenna on the roof. It's windy, the antenna is blown around, and the TV picture is occasionally replaced with “snow”: the random cascade of black and white dots that represent a lack of signal. A player is about to score when he disappears briefly under this visual snow and you are trying to keep track of him until he reappears on the screen in the haze of bad picture quality.

We argue that this is when action simulation might occur: you generate a real-time model of a player's movements whilst he is briefly occluded from view. This example also illustrates what may be an adaptive benefit of the real-time action simulation: if the internal model tracks the exact time-course of the player's movements, the model can then be used to provide a perceptual prediction—also in real-time—of how the player should look at any moment during occlusion. If the figure is not fully occluded but simply visually degraded, the perceptual prediction may provide real-time support to the visual system, aiding the detection and processing of the faintly seen player. Importantly, if the faintly reappearing player was not then moving in a way consistent with the real-time predictive simulation—the video had skipped forward or backwards, for example—the simulation would provide no such predictive benefit.

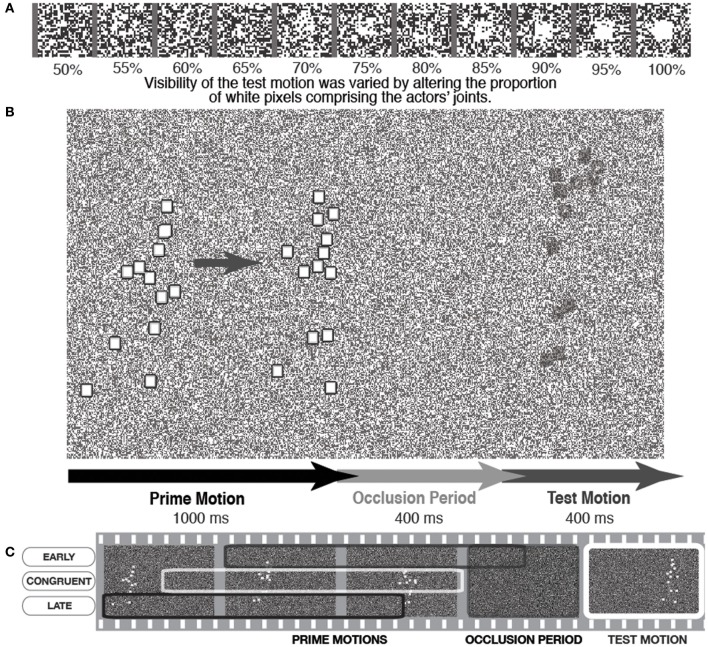

This was the rationale behind a recent set of experiments (Parkinson et al., 2011) in which PLAs were presented on top of a constantly changing random pattern of black and white pixels in a 50/50 ratio, resembling TV “noise.” The points of the actor were squares of pixels that could also be rendered as randomized patterns of black and white dots, with a variable ratio of white dots to black dots. If the ratio of white dots was 100%, the actor was clearly visible. However, as the white ratio was reduced the actor was less visible against the background noise, until a 50% ratio rendered him totally invisible (see Figure 2A).

Figure 2.

A schematic illustration of a trial in which the PLA was presented with variable visibility against the background. (A) Shows how varying white pixel ratio in the actor's joints increases visibility against the noise background, (B) shows a basic trial sequence, and (C) depicts a schematic showing how different sections of the action were shown as the prime motion to manipulate motion congruency with the same test motion section. Figure adapted from Parkinson et al. (2011, p. 1466). Copyright © The Experimental Psychology Society. Adapted with permission of Taylor and Francis Ltd., www.tandfonline.com on behalf of The Experimental Psychology Society, with permission from the authors.

In each trial, the participants were presented with background noise that continuously changed throughout the trials. Participants clearly saw the initial part of the action, which we refer to as the prime motion, as it was the section of motion that was assumed to prime the generation of the subsequent action simulation. Then the actor was occluded from view for a short period (400 ms) after which he reappeared, in motion, for 400 ms. This was the test motion that was presented with variable visibility against the background (see Figure 2B). The participants' task was to indicate whether they saw the reappearing actor or not, with test motion visibility adaptively altered to reach set detection rate targets. Thus, detection thresholds for the test motion could be measured in terms of the ratio of white pixels depicting the test motion actor. This was an indicator of how easy participants found it to detect the reappearing actor under difficult visual conditions.

It is important to note that this detection task is a simple, immediate “here-and-now” judgment, as opposed to the more postdictive one used in the original Graf et al. (2007) paradigm. That is, participants were not asked to make any judgment about the quality of the reappearing actor (e.g., whether he had turned or not), but quite simply whether he had reappeared at all. Thus, this paradigm made it possible to measure whether action simulation aided the basic visual prediction and subsequent detection of human motion (i.e., detection thresholds). An action simulation would be generated during the occlusion, which was a continuation of the prime motion seen prior to occlusion. In order to test the simulation's effect on test motion detection, the spatiotemporal relationship between the prime motion and the test motion was manipulated. This meant that the action simulation would be congruent with the test motion, or the test motion would be “too early” or “too late” to match the action simulation (incongruent conditions). To avoid confounds of test motion on detection thresholds, the same section of test motion was used in all congruent and incongruent conditions. Thus, the spatiotemporal manipulations were achieved by presenting different sections of prime motion, which would subsequently drive different action simulations in relation to the single test motion (see Figure 2C).

Detection thresholds were measured for a variety of actions in three conditions: action simulation-congruent test motions, incongruent early test motions, or incongruent late test motions. Congruent thresholds were consistently lower than those for either of the incongruent condition. Hence, if the currently generated action simulation was temporally congruent with the test motion, the latter was more easily detected. These experiments suggest that the action simulation can have a direct, immediate, here-and-now benefit for the perception of human movement, but only when the external stimulus of movement temporally matches the internal action simulation. This suggests some form of on-going, real-time, top-down effect of action simulation on ongoing visual processes, and supports the notion that internal forward models for biological motion and action can be used to directly supplement the perception of those actions (Wilson and Knoblich, 2005; Prinz, 2006).

Inserted motion

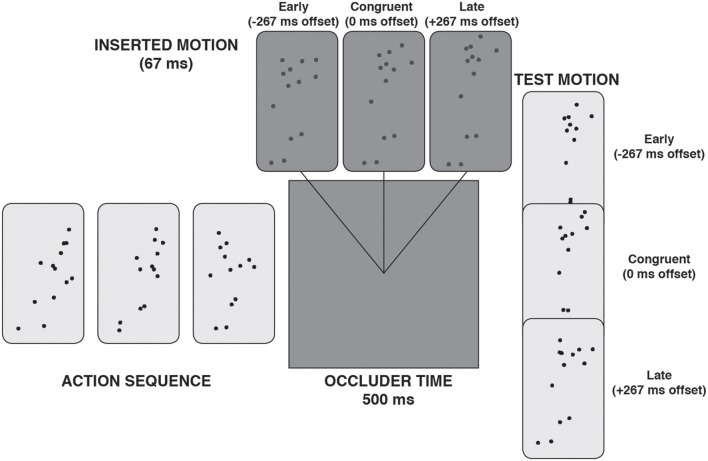

Another consideration in understanding action simulation is how stable the internal real-time model of motion is. In other words, is it possible to briefly bias, or indeed replace, the current ongoing action simulation by very briefly introducing human motion information that does not match the current state of the ongoing simulation process? Parkinson et al. (2012; Experiment 2) investigated this question by adding “inserted motion” in the occluder. Specifically, PLAs were presented that were briefly occluded for 500 ms and then reappeared in motion for 500 ms (i.e., test motions). These test motions were either temporally congruent with the ongoing action simulation at that point or were temporally incongruent, that is, offset 267 ms too early or too late in the motion sequences. The participants were asked to make an explicit 2-alternative forced-choice judgment as to whether the test motion was a correct continuation or not, that is, the judgment measured how well the test motion matched the action simulation that was being generated at that point in time.

Around the temporal halfway point of the occlusion period, 67 ms (4 frames) of low contrast PLA was presented, such that participants got the impression of a brief “flash” of motion within the occlusion period (see Figure 3). Crucially, the inserted motion was either temporally congruent with the action simulation or too early or too late by 267 ms. In the congruent instance, the inserted motion matched the action simulation, almost as if the ongoing action was seen very briefly through a slit in the occlusion period. In the two incongruent instances, the inserted motion would not match the ongoing action simulation. The hypothesis was that, despite the brevity of the inserted motion, it would nevertheless act to bias or replace the action simulation.

Figure 3.

A schematic illustration of a trial with “inserted motion” during the occlusion phase.

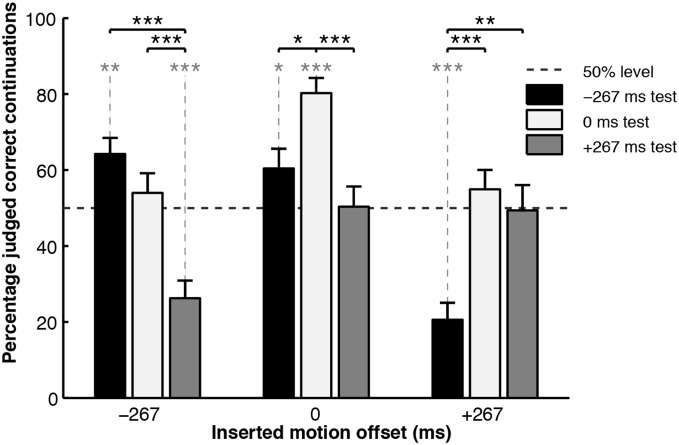

The experiment, thus, represented a 3 (test motion offset) × 3 (inserted motion offset) design, with the measurement being the percentage of trials in which the participants judged the test motion to be a “correct continuation.” The results are shown in Figure 4. When the inserted motion matched the action simulation (0 ms offset, central cluster), it did not interfere with the action simulation process and the results were as expected: participants were more likely to judge the congruent test motion as correct, as opposed to either of the incongruent test motions, showing that they could utilize the action simulation to correctly judge the veracity of the test motion. However, the pattern of results was distinctly different when the inserted motion was incongruent with the action simulation: when the inserted motion was offset in one temporal direction, judgments of what was a correct test motion also shifted in that temporal direction.

Figure 4.

Percentage correct judgments for the “inserted motion” experiment. Black asterisks connected with solid lines indicate significance levels of between-condition t-test comparisons. Asterisks in gray connected with dotted lines indicate significance levels of one-sample t-test comparisons to chance performance (50%), *p < 0.05, **p < 0.01, ***p < 0.001. Figure reproduced from Parkinson et al. (2012, p. 428). Copyright © Springer Science+Business Media. Reproduced with permission.

This suggests that the action simulation process can be updated “mid-flow” by new incoming motion information, no matter how briefly that new motion is perceived for. This is perhaps unsurprising because an action simulation process that remains immune to change may not be a very useful mechanism for predicting the ongoing movements of others. Two possibilities arise as to how the inserted motion effects this updating of the action simulation: firstly, the biasing hypothesis suggests that briefly presented motion that does not temporally match the current state of the action simulation acts to fluidly update it, temporally “pushing” the action simulation in that direction. The second hypothesis suggests that re-simulation occurs: perceiving even a short duration of inserted motion cancels the currently generated action simulation and generates a new one based on the new motion information. Naturally, if the inserted motion matches the old simulation, the new one will roughly match the old one, leading to the expected results, as seen in the 0 ms inserted offset condition described above. However, if the re-simulation is based upon temporally shifted motion, the newly generated simulation will perceptually support “incorrect” continuation test motions.

Both of these hypotheses are possible and the current evidence does not conclusively support one or the other (Parkinson et al., 2012). It might seem, on the face of it, that the re-simulation hypothesis is less intuitively sensible, since it would entail that an entirely new action simulation can be accurately generated from only 67 ms of a PLA motion. In fact, this is entirely feasible, as we will see later (Section The Lag Effect: Towards a Higher Temporal Resolution).

The lag effect: towards a higher temporal resolution

We have described recent research, which, in the first instance, shows the existence of real-time action simulation of human motion (Graf et al., 2007; Parkinson et al., 2011). Secondly, we have demonstrated an ecologically valid real-world benefit of action simulation, which can act in a top-down fashion to aid human motion detection (Parkinson et al., 2011). Finally, we went on to illustrate the fluid, updatable nature of the simulation (Parkinson et al., 2012). Now, we turn to the investigation of the spatiotemporal accuracy of the action simulation: the more fine-grained nature of how well the simulation can track human motion.

Spatial occlusion and the teapot experiment

Work by Prinz and Rapinett (2008) attempted to investigate the time-course and accuracy of action simulation by asking how it relates, at first, to simple visual linear extrapolation. They used a novel version of the occluder paradigm, which used actual video footage of people making simple goal-directed motions. The actors of these videos sat facing the viewer but hidden behind a permanently present cardboard occluder (see Figure 5). The motions they performed were simple, manual transport movements, such as reaching with their right hand for a teapot on their right (screen-left), picking it up, and moving it screen-right behind the occluder, to reappear screen-right of the occluder where a mug or cup awaited the teapot. Since the occluder was onscreen throughout, the moments and position of the start of the occlusion were highly predictable, as was the spatial position of reappearance considering the linear left-right trajectory of the transport motion. Therefore, the experiment was ideally suited to measure the spatiotemporal accuracy of the action simulation by examining participants' judgments of the time the teapot reappeared from the right-edge of the occluder.

The teapot could reappear either at the correct time, as if the motion had continued as normal behind the occluder, or too late or too early in steps of 40 ms. Participants judged whether the teapot appeared too late, just in time, or too early. When the “just in time” judgments were analyzed, it was found that there was a positive time error in the judgments. That is, the reappearances participants thought were correct were, in fact, too late. This suggests that the action simulation is not entirely accurate in tracking ongoing occluded motion; there is some temporal lag present. Figure 6A schematically illustrates these results on the assumption that the transport motion (solid black line) has a constant velocity as the teapot is moved from screen left to right. The black dotted line represents the occluded portion of the motion. This would also represent the trajectory of an accurate action simulation: one without lag. The gray line represents the perceived trajectory of the teapot after occlusion, with a positive time lag, which equates with the time lag in the “just in time” judgments.

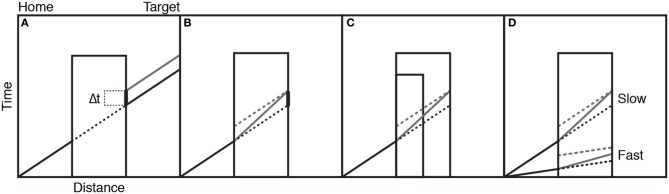

Figure 6.

Panel (A) shows the actual movement of an object behind the occluder (black lines) and the action simulation (gray line) illustrating lag error. Panel (B) shows two sources of the lag error: intercept (dotted gray) and slope (solid gray) lines. Panel (C) shows the different predictions of the two sources of lag error when occluder duration changes. Panel (D) shows the different predictions of the two sources of lag error when motion speed changes. See text for detailed explanations. Figure adapted from Prinz and Rapinett (2008) (p. 226). Copyright by IOS Press. Adapted with permission.

Retaining the assumption that an action simulation has a linear velocity profile like the action it represents, there are two possible sources for the judgment error. Firstly, the generated action simulation may be slower than the actual action. This is called the slope error, and is represented in Figure 6B as the solid gray line within the occluder. The second source of error comes from instances where the action simulation matches the real action in terms of speed, but there is a time-cost involved in generating an action simulation, which means it lags behind the action by a set amount from the start. This is represented as the dotted line in the occluder in Figure 6B, and is known as the intercept error. The two errors are not mutually exclusive.

In order to ascertain which of the two errors contributes to the lag in continuation judgments described above Prinz and Rapinett (2008), conducted a second experiment in which two different sizes of occluder and two different speeds of motion were used. Altering the occluder size changes both the distance over which the action is occluded and the time taken until reappearance (Figure 6C). Altering the speed of the motion alters the amount of time it takes the motion to cross the same occluder distance (Figure 6D). In both cases the slope and intercept error hypotheses make markedly different predictions. The simulations affected by intercept errors are shown in dotted gray lines, and those affected by the slope error are in solid gray. Because the time cost involved will occur from the start of simulation, this lag should be constant, irrespective of the occluder size, and so judgment lags will remain constant across occluder conditions. However, the slope error hypothesis implies that the longer the action is occluded for, the more the lag increases. Hence, a larger occluder should produce more error than a smaller occluder (Figure 6C). Similarly, increasing the speed of the action and, thus, decreasing occlusion time should, according to the slope hypothesis, decrease lag error, whilst again the intercept error suggests that lag will be the same irrespective of action speed (Figure 6D).

However, when an experiment was run combining two transport speeds with two occluder widths, the results were the opposite of the predictions of both the slope and the intercept error hypotheses, with lag error being smaller for slower action speeds, and smaller for longer occluders. This means, firstly that the constant cost (intercept) hypothesis must be rejected, because it predicted that error would be constant. Interestingly, however, it also suggests that the source of the lag error cannot be a slowing of a linear extrapolation (slope error) because the results are the opposite of what that hypothesis predicts.

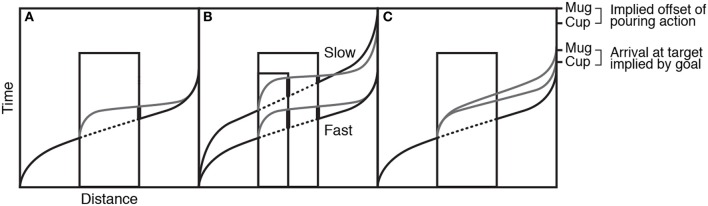

Since the available evidence did not support action simulation as a simple, linear extrapolation of the occluded motion, Prinz and Rapinett (2008) went on to reconsider the nature of the simulation: First, they included more details about the spatiotemporal properties of goal-directed movements, namely that a goal-directed transport movement tends to have a period of acceleration at the start and a deceleration toward the goal at the end. Second, they suggested that rather than being a simple extrapolation or continuation of the movement, the action simulation is actually an internally generated re-start of the motion. As the visual input of the goal-directed input is removed at the occluded edge, the action simulation may generate a model of a similar goal-directed action with the same target (end-point) but with a new start point that of the occluded edge. This means that the action simulation entails a period of acceleration from its own start, then moves and decelerates toward the exact same spatiotemporal target of the original action. Figure 7A shows the velocity profile of the action as it accelerates from the start and decelerates at the target (black solid line) with the occluded portion dotted. The re-generated action simulation is shown in gray, with a similar accelerating-decelerating profile. The thick line on the right side of the occluder highlights the magnitude of the lag error. Figure 7B shows how this re-generated simulation hypothesis can account for the previously puzzling results: faster actions produce more lag error than slower actions and larger occluders produce more error than smaller occluders.

Figure 7.

Panel (A) shows the velocity profile of the action as it accelerates from the start and decelerates at the target (black solid line) with the occluded portion dotted. The regenerated action simulation is shown in gray. Panel (B) shows how this regenerated simulation hypothesis provides different predictions when occluder duration and action speed change. Panel (C) shows how action simulations might be affected by the implied goal of the action. Figure adapted from Prinz and Rapinett (2008) (p. 226). Copyright by IOS Press. Adapted with permission.

In a final experiment, Prinz and Rapinett (2008) looked at the effects of implied goal duration and produced a remarkably effective demonstration that action simulation involves the internal modeling of goal-oriented human action and not merely visual prediction of kinematics: they used the same video-based paradigm involving a left–right teapot transport with two different occluder widths. In addition, they varied the visual identity of the target item between a small cup and a large mug. The large mug would take longer to fill than the small cup, so the length of time taken to achieve the action-goal should be longer for the mug than the cup. While the videos of the reappearing movement always stopped at the same point, just before the contents of the teapot were about to be poured, a greater positive lag error was observed in response to the mug compared to the cup targets, meaning that the greater amount of time implied for filling the mug had increased the target time for the generated action simulation (see Figure 7C).

This work by Prinz and Rapinett (2008) simply, but effectively, demonstrates a number of details regarding both the generation and the spatiotemporal details of action simulation. Firstly, the simulation is not merely a linear extrapolation or continuation of the perceptual information; indeed, it seems not to be a continuation at all. Instead, it may actually be that an entirely new model of the goal-directed action that has been occluded is generated, but starting from the point of occlusion, and this re-generation utilizes goal-directed kinematic information inherent in action systems. In this sense, the re-generation may, in fact, be more closely tied to motor systems than perceptual systems, in that it uses goal-directed motor information to supply the perceptual information, a notion put forward by Prinz (2006) and Wilson and Knoblich (2005).

Sparenberg et al. (2012) took a more detailed look at the lag error in action simulation measured by Prinz and Rapinett (2008). They used PLA stimuli and a 300 ms occluder period, after which they showed a static test posture, which could be offset earlier or later than the true posture of the actor immediately following occlusion. Participants were asked if the test posture was too late or too early to be the correct continuation of the motion. Results showed that test postures that were too early in the sequence were judged to be a correct continuation. That is, over a fixed period of occluder time, the action simulation lags behind the true motion. When Sparenberg et al. (2012) measured this lag over two different occluder durations, they found that the lag did not change but remained constant at 25 ms lag error. This contradicted the findings of Prinz and Rapinett (2008) that the error reduced with longer occlusion durations (and also movement speed, not manipulated by Sparenberg et al., 2012), and varied between 18 and 141 ms. Sparenberg et al. (2012) concluded that the stable lag error was a result of a constant time-cost when switching from perception of motion to internal action simulation.

It should be noted that, on closer inspection, it is very difficult to directly compare the two paradigms. For instance, in the stimuli used by Prinz and Rapinett (2008), the occluder was permanently visible, meaning that the point of occlusion was spatially and temporally predictable, and the point of reappearance was at least spatially predictable. In comparison, in Sparenberg et al. (2012) the “occluder square” would suddenly appear and then disappear on the screen, meaning that the spatiotemporal point of occlusion was less predictable, and the position of the reappearing test posture was also not predicted by any properties of the occluder. Secondly, the nature and complexity of the motions that were to be simulated differed greatly between paradigms: the Prinz and Rapinett (2008) transport movement is much more linear in nature than the full body motions used by Sparenberg et al. (2012). This action also only uses a single limb and the individual arm movement has a clearly defined (or implied) end-point or goal.

The combination of predictable occluder onset and offset, plus the simpler, linear quality of the motion in the Prinz and Rapinett (2008) paradigm may contribute to a greater ability to simulate a goal-directed end-point for those actions and thus produce an action simulation with the spatiotemporal properties shown in Figure 7. In this situation, lag error will vary according to occluder duration and action speed, as previously discussed (Figure 7B). On the other hand, it may not be possible to generate a simulation based on a goal-directed end-point for more complex full body motions, as used in Sparenberg et al. (2012). In this case, the action simulation may predict the ongoing complex motion in a more linear fashion, meaning constant lag costs irrespective of occluder duration. Further experiments are needed to tackle this issue.

Still, the findings from both paradigms are informative regarding the nature of action simulation and its underlying dynamic processes, suggesting that, whilst the precise temporal nature of the simulation may vary with the type of action being simulated, the existence of temporal lag is common.

Motion information required for action simulation generation

As described earlier, Prinz and Rapinett (2008) suggested that action simulation is not merely a perceptual–continuation mechanism but is instead a generative internal modeling that uses information about the perceived motion—and perhaps also motoric knowledge regarding that action—to produce a new goal-directed simulation of the action. If the simulation is generated using motor as well as perceptual information, a pertinent question to ask is: exactly how much visual motion information is required to generate the action simulation?

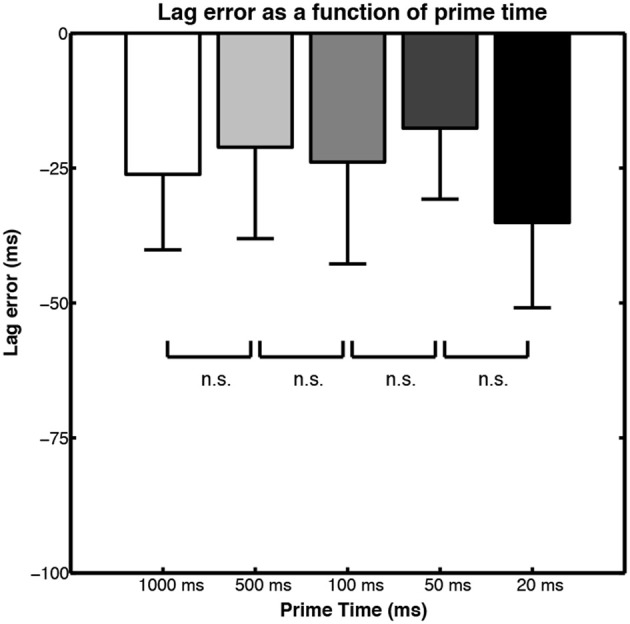

To test this issue, Parkinson et al. (2012; Experiment 1) used a PLA version of the paradigm, in which the initial prime motion of the PLA was occluded for 500 ms followed by 500 ms of the reappearing actor in motion. The crucial manipulation was the duration of the pre-occluder motion: with each frame of the PLA animation lasting 10 ms, the prime duration was varied to be either 20, 50, 100, 500, or 1000 ms (i.e., the last condition only presented 2 frames of PLA motion before the occluder). Different sections of test motion were presented in method of constant stimulus (MOCS) fashion and participants were asked to judge if the test motion was too early or too late to be a correct continuation. This allowed the accuracy of the action simulations in terms of its temporal lag to be computed in a similar way to that used by Prinz and Rapinett (2008).

The mean lag errors for each of the prime motion durations are shown in Figure 8. The lag errors are all negative, meaning that participants tended to judge early offset test motions as being correct continuations. This implies that the action simulation is running slightly slower than the action it is being generated to predict. This is a result we have already encountered in Section The Lag Effect: Towards A Higher Temporal Resolution where the Prinz and Rapinett (2008) and Sparenberg et al. (2012) studies are detailed. What is remarkable in Parkinson et al. (2012) experiment is that the temporal accuracy of the action simulation was not affected by the amount of human motion provided before the occluder: even when presented with as little as 20 or 50 ms of PLA motion (2 or 5 frames), the generated action simulation was just as temporally accurate as it was when participants saw 1 s of motion before the occluder.

Figure 8.

Bar graphs of lag error in action simulation motion judgments when different durations of motion are shown prior to occlusion. Figure adapted from Parkinson et al. (2012, p. 426). Copyright © Springer Science + Business Media. Adapted with permission.

Of course, during the course of an experiment the actions will become familiar, so generating a simulation from a brief glimpse of a PLA may not be a finding that will generalize to other situations, but it is still an interesting finding. This relates to Section Benefits of Real-Time Simulation, in which we described how inserting a very brief amount of point light motion within the occluder can bias subsequent judgments of reappearing motion (Parkinson et al., 2011). We suggested two mechanisms for this: 1) that the inserted motion biases the currently generated action simulation, or 2) that the inserted motion is used as the basis for a re-simulation and the generation of an entirely new action simulation based on the new motion percept. At first the re-simulation notion seems less appealing: is 67 ms of human motion enough to generate a whole new predictive model?

However, the results of the experiment by Parkinson et al. (2012) point to just this and it had been shown previously that very brief exposures to biological motion can provide enough information for adequate processing (Thirkettle et al., 2009). The results of Parkinson et al. (2012) suggest that the action simulation is remarkable in that it can generate real-time motion predictions from very short exposure to familiar human movements, and this perhaps accounts for the results when brief conflicting motion information is inserted in the occluder: the action simulation is re-started using the new motion section. The notion of re-simulation is also appealing in light of the hypothesis brought forward by Prinz and Rapinett (2008), namely that action simulation involves the generation of an internal forward model that combines current motion information, motor knowledge, and information about the implied end-point of the motion.

An interim summary

Action simulation is a process that internally models human movements in real-time. The process of action simulation can be demonstrated and investigated using the occluder paradigm (Graf et al., 2007; Prinz and Rapinett, 2008), in which a human actor disappears from view and then reappears at a position/motion–continuation which can be either correct—as if they had continued moving behind the occluder—or from too late or too early in the sequence—as if the “video” of the motion skipped forward or back. When participants are tested on some orthogonal aspect of the reappearing actor, for example when asked “Has her form been rotated?”, they perform better when the test position of the actor is correct with respect to the length of the occlusion. This demonstrates that the action simulation is real-time in nature, modeling the position of the occluded actor at that temporal point (cf. Section Simulation in Real Time: The Occluder Paradigm).

Action simulation has been demonstrated to directly aid the visual perception of a visually degraded human motion, but only when that motion spatiotemporally matches the real-time state of the action simulation (Sections Benefits of Real-Time Simulation and Detection thresholds). We have detailed how visual exposure to even very short durations of human motion can provide sufficient information to generate an action simulation (Sections The Lag Effect: Towards A Higher Temporal Resolution and Motion information required for action simulation generation). We have also described the way in which the ongoing time-course of the action simulation can be manipulated by displaying very short sections of the motion during occlusion, which could again be either temporally congruent with—or earlier or later than—the real-time state of the action simulation at that point. These tended to bias judgments of which reappearing motion was a “correct continuation” in the temporal direction of the inserted motion (Section Benefits of Real-Time Simulation, “Inserted motion”). This illustrates that the action simulation can be updated in real-time.

Finally, whilst it is clear that the action simulation is real-time, in that it unfolds over time as the real action does, the simulation slightly lags the action (Section The Lag Effect: Towards A Higher Temporal Resolution, “Spatial Occlusion and the Teapot Experiment”). Research into the source of this lag error has suggested that the simulation itself is not simply a linear extrapolation of the visual motion of the action before occlusion. Instead, the process of action simulation involves an internal generation of a model of the movement that includes the velocity and acceleration profiles of a newly initiated goal-directed action. This model uses the spatiotemporal point of occlusion as the starting point and the implied goal of the action as its end point. Taken together, we see that action simulation is a process which generates a real-time model of an action that takes into account the goals of the action, probably using one's own implicit motor knowledge, and that the action simulation can be dynamically updated and provide direct perceptual benefits when a human motion is difficult to see.

Representational mechanisms

While the studies discussed above indicated that perceptual processes can strongly impact how we perceive and predict others' actions, a number of significant unexplored questions regarding the role of motor processes remain. In the following, we discuss this issue based on studies examining how an observer's own physical activity may affect his or her ability to accurately simulate and predict others' actions.

Sensorimotor processes

A wealth of experimental research has demonstrated strong mutual influences between action perception and execution (for a review see Schütz-Bosbach and Prinz, 2007). While motion detection is impaired when the motions go in the same direction as concurrently performed actions (Hamilton et al., 2004; Zwickel et al., 2007), movement execution depends on similarity-based relationships between go-signals and movements to be performed (Brass et al., 2001; Craighero et al., 2002) and exhibits greater variability when a different movement is concurrently observed (Kilner et al., 2003).

Recent experiments have studied how the representational resources involved in action simulation may be related to the resources involved in action execution and asked: does action execution affect the performance in occluded action tasks considered to reflect internal action simulation? In one of these studies, participants observed arm movements of a PLA while performing a corresponding arm movement themselves (Springer et al., 2011). The executed and observed movements were synchronized; furthermore, they were either fully congruent (i.e., involving the same anatomical body side and the same movement pattern; full overlap) or they were fully incongruent on both dimensions (no overlap), or they differed in either the anatomical body side used or in the movement pattern involved (partial overlap). For example, in a no overlap trial, the participant reached out his right arm to the right side, while the PLA lifted his left arm upwards over his head.

In each trial, the observed action was briefly occluded and then continued by the presentation of a static test pose (Graf et al., 2007). Participants indicated whether the test pose depicted a spatially coherent continuation of the previous arm movement. Two factors were manipulated: occluder time (the duration of occlusion) and pose time (the time at which the posture shown after occlusion was actually taken from the occluded movement), and each of them could take three values (100, 400 and 700 ms; as already explained in Section Simulation in Real Time: The Occluder Paradigm; Figure 1).

If real-time simulation takes place, response accuracy should be best when the occluder time (OT) and the pose time (PT) match, because then the internal representation (updated in real-time) should match the actual test pose (Graf et al., 2007). In addition, performance should yield a monotonic distance function, which emerges from the three levels of absolute time distances between the OT and PT (i.e., 0, 300 and 600 ms). If the two times match perfectly (i.e., no time distance), the test posture is presented just in time. Running a real-time simulation of the occluded action means an internal representation is run and updated, which can be used as a reference for evaluating the upcoming test pose. If real-time simulation occurs, that internal reference would, in the 0 ms distance condition, precisely match the test pose—whereas that match should be weaker in the conditions with a temporal distance of either 300 or 600 ms. This is reflected by a monotonic distance function, that is, a monotonic decrease of response accuracy with increasing temporal distance (e.g., Graf et al., 2007; Springer and Prinz, 2010). This description of the logic of the occluder paradigm by Graf et al. (2007) is a more technical recapitulation of the description already given earlier on in Section Simulation in Real Time: The Occluder Paradigm.

If internal simulation involves motor resources, the distance function should vary depending on the conditions of motor execution. This was, in fact, indicated. A monotonic distance effect (indicating real-time simulation) emerged when the observer's own movements were similar (but not identical) to the PLA's movements (i.e., partial overlap). In contrast, there was no monotonic distance effect for full overlap and no overlap (i.e., when both movements involved the same body sides and movement patterns and different body sides and movement patterns, respectively). This finding suggests that the degree of a representational overlap between performed and observed actions (e.g., Hommel et al., 2001) influenced the action simulation, as indicated by a monotonic distance effect.

However, spatial congruence may matter (Craighero et al., 2002; Kilner et al., 2009). That is, in one of the conditions of partial overlap, executed and observed movements involved the same movement pattern and occurred at the same side of the screen. This condition clearly showed a monotonic distance effect (i.e., real-time simulation). Hence, spatial congruence may have acted to increase the likelihood with which the participants engaged in internal action simulation when solving the task.

To test this alternative, an additional experiment was run in which participants were instructed that they would see the back view of the PLA, while all other parameters remained constant. This was possible because the PL stimuli being used were ambiguous with regard to front vs. back view. While under front view conditions, spatial and anatomical body side congruence falls apart, the back view manipulation implies that spatial and anatomical congruence corresponds, meaning that if the PLA and the executed action involve the same body side (e.g., left arm), they occur on the same side of the screen (left side). Hence, if spatial congruence matters, a monotonic distance function should occur in this condition.

However, the back view instructions revealed the same pattern as was found under front view instructions (Springer et al., 2011; Experiment 2). Specifically, the mirror-inverted constellation (implying spatial congruence between executed and observed movements) did not show a monotonic distance function. Therefore, the findings clearly contradicted a spatial congruence account. This study suggests that action simulation engages motor resources. The strength of the motor influences may depend on the amount of structural overlap between observed and executed actions (as defined by the anatomical side of the body and the movement pattern involved).

Further evidence of this view comes from a study by Tausche et al. (2010) examining effector-specific influences on the prediction of partly occluded full-body actions of a PLA (cf. Graf et al., 2007). While the movements observed were performed with either the arms or the legs, the participants themselves responded with a (different) movement involving either their arms or legs. The results indicated that a correspondence between the effectors observed and the effectors used induced a motor interference effect. Specifically, a monotonic distance effect, indicating real-time simulation, emerged for the incompatible trials (involving different effectors, i.e., arms and legs), whereas no such function occurred for the compatible trials (involving the same effectors, i.e., arms or legs).

Overall, these findings suggest that the accuracy with which an acting observer predicts others' actions may be influenced by anatomical mappings between performed and observed actions (Wapner and Cirillo, 1968; Sambrook, 1998; Gillmeister et al., 2008; Liepelt et al., 2010). This influence may arise at the level of effector-specific formats (Tausche et al., 2010; Springer et al., 2011; cf. Springer et al., 2013). This view accords with the notion that action observation activates the motor system in a corresponding somatotopic manner (Decety and Grèzes, 1999, 2006; Buccino et al., 2001; Grèzes and Decety, 2001).

Dynamic and static processes

As the above-described studies demonstrated, physical activity does not appear to prevent participants from solving the action occlusion task (Tausche et al., 2010; Springer et al., 2011). Hence, additional and/or alternative processes may contribute to solving this task. Can action simulation recruit additional processes that are less motor-based when motor representations are constrained by execution?

It has, in fact, been suggested that predicting actions over visual occlusions may base on (at least) two different mental operations: dynamic updating and static matching (Springer and Prinz, 2010). Dynamic updating corresponds to an internal real-time simulation that should be indicated by a monotonic distance effect (i.e., performance should be best for time distances of 0 ms and should monotonically decrease for time distances of 300 and 600 ms; as explained previously; cf. Section Simulation in Real Time: The Occluder Paradigm). In the following, we use the term real-time simulation (specifying the timing of an assumed internal simulation process) synonymously with dynamic simulation and dynamic updating.

In addition to dynamic updating, performing an action occlusion task may involve a matching process, implying that the test pose after occlusion is matched against a statically maintained representation derived from the last action pose seen or perceived prior to occlusion (Springer and Prinz, 2010). If static matching takes place, performance in the action occlusion paradigm should decrease with increasing pose times (i.e., 100, 400, or 700 ms), irrespective of the actual duration of the occlusion period, because an increase in the pose time implies a decrease in the similarity between the last visible action pose (shown before occlusion) and the test pose (shown after occlusion) by definition. Hence, while static matching in its pure form predicts a main effect of pose time (but no interaction of occluder time and pose time and, therefore, no monotonic distance function), real-time simulation, in its pure form, predicts a strong interaction (emerging as a monotonic distance function), but no main effect of the pose time factor.

A study by Springer et al. (2013) used body part priming to address this issue. The participants played a motion-controlled video game for 5 min with either their arms or legs, yielding conditions of compatible and incompatible effector priming relative to subsequently performed arm movements of a PLA. The visual actions shown were briefly occluded after some time (action duration of 1254–1782 ms), followed by a static test pose. Participants judged whether or not the test pose showed a spatially coherent continuation of the previous action (as explained previously; cf. Graf et al., 2007). While compatible effector priming (e.g., arms) revealed evidence of dynamic updating (i.e., a monotonic distance effect, but no pose time effect), incompatible effector priming (e.g., legs) indicated static matching (i.e., a pose time effect, but no monotonic distance function). That is, in the compatible effector priming condition, response accuracy was best when the duration of occlusion matched the actual test pose shown after the occlusion, indicating an internal representation of the observed action was updated in real-time, thus matching the actual test pose. In addition, response accuracy decreased monotonically with increasing time difference between the duration of occlusion and the actual test pose (i.e., monotonic distance effect), corresponding to an increase of the time difference between an internal real-time model and the actual action outcome shown in the test pose. Hence, the findings of the compatible condition supported real-time simulation (Graf et al., 2007).

On the other hand, in the incompatible effector priming condition, evidence of real-time simulation was lacking (i.e., the duration of occlusion did not interact with the actual action progress shown in the test pose; see Figure 1). In this condition, however, response accuracy decreased with an increase in the pose time factor, implying a decrease in the similarity between the last visible action pose seen prior to occlusion and the test pose seen after occlusion—irrespective of the actual duration of the occlusion period. Thus, after being primed with incompatible effectors, participants were more accurate in the action occlusion task when the test pose shown was more similar to the most recently perceived action pose seen prior to occlusion (pose time effect). This effect cannot be explained by internal updating of the last perceived action image. It supports static matching. Instead of matching the test poses against real-time updated representations, participants in this condition may have alternatively matched the test poses against statically maintained representations derived from the most recently perceived action pose, which were maintained and then used as a static reference for the match with the upcoming test pose (Springer et al., 2013 cf. Springer and Prinz, 2010).

These results suggest that recognizing and predicting others' actions engages two distinct processes: dynamic updating (simulation) and static matching. The degree to which each process is involved may depend on contextual factors, such as the compatibility of the body parts involved in one's own and others' actions. Converging evidence comes from studies with a quite different focus of interest, for example, studies using semantic priming as a means of experimental context manipulation and addressing verbal descriptions of meaningful actions, rather than the kinematics involved in those actions.

Semantic processes

The experiments we are going to consider now investigated the relationships between the processes involved in predicting occluded actions and those involved in semantic processing of verbal contents (Springer and Prinz, 2010; Springer et al., 2012; cf. Prinz et al., 2013). A great deal of previous research has indicated that motor processes are involved during the understanding of language that describes action (e.g., Pulvermüller, 2005, 2008; Andres et al., 2008; Fischer and Zwaan, 2008). For instance, while words denoting “far” and “near” printed on objects to be grasped yielded comparable effects on movement kinematics to the actual greater or shorter distances between hand position and the object (Gentilucci et al., 2000), processing verbal descriptions of actions activated compatible motor responses (e.g., Glenberg and Kaschak, 2002; Glenberg et al., 2008) and supported the conduct of reaching movements when the verb was processed prior to movement onset (Boulenger et al., 2006).

To what extent would verbal primes modulate the internal simulation of actions under conditions of temporary occlusion? In one study, the occluded action task was always preceded by a lexical decision task (Springer and Prinz, 2010; Experiment 2). Specifically, the participants judged whether a single word (onset 1250 ms) was a valid German verb (which was the case in 75% of trials, whereas pseudo-verbs appeared in the remaining 25 %). While all 102 verbs shown (all of them in the infinitive form) described achievable full-body actions, one half expressed high motor activity (like springen—“to jump”) while the other half expressed low motor activity (like stehen—“to stand”). This (relative) distinction of high vs. low motor activity resulted from an independent rating of the words by 20 volunteers.

On each trial, the lexical decision task was immediately followed by an occluded action task (as described previously) displaying a familiar PLA involving the whole body (e.g., lifting something from the floor, putting on a boot, or getting up from a chair). Instructions for the two tasks were given to make them appear to be completely unrelated to each other. However, as the results clearly showed, verbal content affected performance in this task. While lexical decisions involving high-activity verbs revealed a pronounced monotonic distance function (taken as a signature of internal real-time simulation), no such effect emerged for trials involving lexical decisions about low-activity verbs. We took these results as first evidence for the idea that the processes involved in an occluded action task may be tuned by the dynamic qualities of action verbs. To test this assumption, we ran another experiment in which the same verbs were used, but they were further differentiated according to the speed being expressed by “fast,” “moderate,” and “slow” action verbs based on an additional word rating (e.g., “to catch,” “to grasp,” “to stretch,” respectively; Springer and Prinz, 2010; Experiment 3). While words expressing fast and moderate actions produced a monotonic distance effect (indicating real-time simulation), slow action words clearly did not. That is, when the action occlusion task followed lexical decisions about verbs denoting fast and moderately fast actions, the monotonic distance function turned out to be more pronounced and steeper compared to trials in which the task was preceded by lexical decisions involving slow activity verbs.

These experiments suggest that language-based representations can affect the processes used for predicting actions observed in another individual. However, because the prime verbs always required lexical decisions, participants may have noticed that some of the verbs matched the visual actions, while others did not. Therefore, when responding to the test poses after occlusion (i.e., deciding whether or not it depicted a coherent continuation of the action), participants may have been more likely to give “yes” responses after a “match” than a “mismatch” (e.g., Forster and Davis, 1984).

To control for such strategy-based effects, we ran an additional experiment in which the prime verbs were masked and did not require any response at all (Springer et al., 2012). Specifically, ten verbs that had been rated as very fast (e.g., fangen—“to catch”) and ten verbs rated as very slow (e.g., lehnen—“to lean”) were briefly presented (onset 33 ms) embedded within a forward and a backward mask consisting of meaningless letter strings. Hence, people were not consciously aware of the verbal primes and were unlikely to engage in any deliberate response strategies (e.g., mapping the semantic content to the observed actions; see Forster, 1998; Van den Bussche et al., 2009). Still, masked priming revealed a similar result: While a pose time main effect was always present, indicating static matching was involved, a pronounced monotonic distance effect (taken to reflect dynamic updating, i.e., real-time simulation) emerged for verbs expressing dynamic actions, while it was lacking for verbs expressing static actions and meaningless letter strings (Springer et al., 2012).

While masked words are not visible, they have still been shown to access semantic processing levels (Kiefer and Spitzer, 2000; Schütz et al., 2007; Van den Bussche and Reynvoet, 2007). Also, when we used a non-semantic, purely visual priming of action dynamics (by presenting dots rotating with slow, moderate, or fast speed), a monotonic distance effect was lacking. Overall, the observations from both conscious and unconscious priming experiments seem to suggest that the semantic content implied in verbal processing has an impact on procedural operations involved in a subsequent occluded action task.

To better understand the nature of these effects the details of the putative internal action representation during occlusions and its underlying mental operations described above must be considered. Specifically, predicting occluded actions seems to imply two processes: dynamic updating and static matching. Hence, the observation that the slope of the monotonic distance function (indicating dynamic updating) is more pronounced after processing high-activity, as compared to low-activity action verbs, suggests (at least) two different functional interpretations (cf. Prinz et al., 2013). One is to consider a direct impact of verbal semantics on simulation dynamics—in the sense that the degree of activity expressed in the verbs affects the speed of simulation (faster after processing high-activity verbs as compared to low-activity verbs). The other alternative is that the distance function actually reflects a blend of performance resulting from two ways of solving the task: dynamic updating and static matching. While dynamic updating relies on real-time updating of the internal reference against which the test pose is matched, static matching relies on an internal reference that is static and may be derived from the last posture seen before occlusion (Springer and Prinz, 2010; Prinz et al., 2013).

Based on a direct differentiation of the two processes, as described previously (Springer et al., 2013), we argue that the results by Springer et al. (2012) can be best understood as a blend of outcomes of static and dynamic processes. That is, semantic verb content may modulate the relative contributions of two processes, static matching and dynamic updating. While high-activity verb contents invite stronger contributions of dynamic processing than low-activity contents, low-activity contents may promote stronger contributions of static processing.

In sum, the results from both explicit and implicit semantic priming experiments suggest that semantic verbal contents may impact on the mental operations involved when observers engage in recognizing actions that are transiently covered from sight (Springer and Prinz, 2010; Springer et al., 2012). Further studies converge with this view, although addressing semantic interference rather than priming effects (e.g., Liepelt et al., 2012; Diefenbach et al., 2013). For example, Liepelt et al. (2012) found evidence of interference between language and action, demonstrating that word perception influences hand actions and hand actions influence language production. Overall, one may conclude that internal action simulation and semantic processing can access common underlying representations, a view that corresponds to recent accounts of embodied cognition (e.g., Barsalou, 2003, 2008; Zwaan, 2004; Pulvermüller, 2005, 2008; Glenberg, 2008; Mahon and Caramazza, 2008, 2009).

A framework for action representation

This paper focuses on experimental research investigating action simulation through systematic manipulation of the factors that influence how we perceive and predict actions observed in other people. While the studies discussed here differ according to a number of methodological aspects, including postdictive and predictive types of measurements, as well as the features studied, including the time course, sub-processes, and representational grounds of action simulation, all of them involve variations of an action occlusion paradigm (Graf et al., 2007; Prinz and Rapinett, 2008). This paradigm requires observers to evaluate the course of actions that are briefly and transiently covered from sight. When visual input is lacking, observers need to strongly rely on internally guided action representations. Thus, the paradigm allows for systematic testing of the cognitive underpinnings of action simulation and its internal processes and resources.

Several new insights emerged from the findings of these action occlusion paradigms. First, action simulation enables observers to render quite precise real-time predictions of others' actions (Graf et al., 2007; Parkinson et al., 2011; Sparenberg et al., 2012). For instance, observers were highly accurate in differentiating between time-coherent and time-incoherent continuations of temporarily occluded human full-body actions (Sparenberg et al., 2012) and spatially occluded human hand actions (Prinz and Rapinett, 2008). Hence, action simulation may involve an internal predictive process that runs in real-time with observed actions. This process may act on newly created action representations rather than relying on continuous visual extrapolations of observed movement trajectories (Prinz and Rapinett, 2008).

Second, action simulation seems to be highly susceptible to subtle visual manipulations, indicating that it draws on perceptual representations of diverse aspects of human motion and kinematic features, which may enable observers to develop highly accurate predictions about actions observed even after quite short phases of visual observation (Parkinson et al., 2012).

Third, action simulation can be influenced by an observer's own physical activity. Thus, the representational resources involved in internal action simulation may be related to the resources involved in motor execution. The strength of the motor influences varied according to the degree of correspondence between observed and performed actions, for instance, regarding the effectors involved (Tausche et al., 2010; Springer et al., 2011).

Fourth, predicting actions through periods of occlusion may involve two distinct processes: dynamic updating and static matching. While dynamic updating corresponds to real-time simulation, static matching implies that recently perceived action images are maintained as an internal reference against which newly incoming action information can be matched. The relative proportion to which the two processes are used may depend on contextual factors such as a correspondence of body parts involved in performed and perceived action (Springer et al., 2013).

Fifth, internal action simulation was affected by linguistic processing of action-related words. While prime verbs describing dynamic actions corresponding to the observed actions (i.e., implying movement of the limbs) revealed evidence of dynamic updating, this was not the case for those describing static actions (implying no movement of the limbs) (Springer and Prinz, 2010). This occurs even if people are not consciously aware of these action verbs and, thus, not prone to deliberate response strategies, suggesting that action simulation may involve semantic representational resources (Springer et al., 2012).

In the next section we are going to place the experimental evidence in the wider context of major theoretical issues in the broad domain of action and event representation.

Real-time simulation and predictive coding

Several studies in which observers had to predict temporarily occluded actions have shown that prediction accuracy was best when the actions reappeared in a time-consistent manner after occlusions. In addition, prediction accuracy systematically decreased as the time gap between the duration of occlusion and the temporal advance of the action stage shown after occlusion increased (Graf et al., 2007; Springer and Prinz, 2010). These findings correspond to the notion that action simulation involves internal models that run in real-time with observed action (Verfaillie and Daems, 2002; Flanagan and Johansson, 2003; Rotman et al., 2006). Furthermore, internal real-time simulation was affected by the observers' own physical activity (Tausche et al., 2010; Springer et al., 2011).

Possible explanations for these results come from a predictive coding account of motor control (e.g., Kilner et al., 2007, 2009) and from the broader Theory of Event Coding (TEC; Prinz, 1990, 1997, 2006; Hommel et al., 2001). Efficient visuo-motor control requires estimating one's own body state prior to movement execution, which is based on internal forward models. These internal forward models allow individuals to anticipate the sensory consequences of their own movements in real-time based on motor commands (i.e., efference copies; Wolpert and Flanagan, 2001). They may also operate when observers engage in predicting actions observed in others (Grush, 2004; Blakemore and Frith, 2005; Prinz, 2006; Thornton and Knoblich, 2006; Kilner et al., 2007). Internal sensorimotor simulations may contribute to perceptual processing by generating top-down expectations and predictions of the unfolding action, allowing to precisely anticipating others' actions (see Wilson and Knoblich, 2005, for a review).

According to TEC, codes of perceived events and planned actions share a common representational domain. Perceptual codes and action codes may, thus, influence each other on the basis of this representational overlap. For instance, during different motor cognitive tasks (i.e., action observation or motor imagery), the cortical representations of a target muscle and a functionally related muscle were enhanced within a single task and across different tasks, suggesting a topographical and functional overlap of motor cortical representations (Marconi et al., 2007; cf. Dinstein et al., 2007). This overlap may provide a basis for anticipating others' actions by mapping those actions onto one's own sensorimotor experiences (Jeannerod, 2001; Gallese, 2005).

The participants in the experiments reported here (Section Representational Mechanisms) may have applied the same motor representations that were activated during execution to predicting a corresponding action observed. If so, the requirement to internally simulate an observed action may be reduced when observed and concurrently performed actions fully correspond, because under this condition execution by itself may already provide a continuously updated internal reference by which the occlusion task can be solved. Hence, this condition yielded better task performance than conditions in which observed and performed actions were not (or only partially) similar to each other (Springer et al., 2011). Given a complete lack of correspondence, execution may strongly interfere with internal simulation (Prinz, 1997; Wilson and Knoblich, 2005) such that internal simulations need to be shielded from information available from executing a movement that is entirely different from the observed one. Hence, this condition did not reveal evidence of internal simulation but showed increased errors, suggesting interference from execution to simulation (cf. Tausche et al., 2010). In fact, running real-time simulations of observed actions may be efficient for solving the task only when executed and observed movements are similar (but not when they are identical or fully incongruent on each possible dimension) (Springer et al., 2011). Here, evidence of real-time simulation was obtained, suggesting that the cost/benefit ratio for running internal sensorimotor simulations was more balanced, whether this is due to congruence in terms of the anatomical body sides used (Wilson and Knoblich, 2005) or the exact movement patterns involved in observed and performed actions (Kilner et al., 2003).

In line with this view, the temporal predictions generated by one's own motor system for efficient motor control may also be applied when predicting other people's actions (Blakemore and Frith, 2005; Kilner et al., 2007). Observers are able to quite precisely predict not only the sensory consequences of their own actions, but also those of others' actions (e.g., Sato, 2008). Furthermore, based on the observation of the communicative gestures of an agent in dyadic interaction, they are able to render quite precise predictions about when the action of the second agent will take place (Manera et al., 2013).