Abstract

Cocaine addiction is characterized by poor judgment and maladaptive decision-making. Here we review evidence implicating the orbitofrontal cortex in such behavior. This evidence suggests that cocaine-induced changes in orbitofrontal cortex disrupt the representation of states and transition functions that form the basis of flexible and adaptive ‘model-based’ behavioral control. By impairing this function, cocaine exposure leads to an overemphasis on less flexible, maladaptive ‘model-free’ control systems. We propose that such an effect accounts for the complex pattern of maladaptive behaviors associated with cocaine addiction.

Cocaine addiction is characterized by apparently compulsive drug use despite adverse consequences1; this addiction persists in part because of poor judgment and maladaptive decisions that those who are addicted make in their daily lives. The goal of decision-making is to choose the optimal course of action from potential options. To accomplish this, humans and nonhumans must constantly update and integrate information about the value of current and potential actions and future states in reference to current needs. In many psychiatric disorders, including drug addiction, this complex process is disrupted. In particular, according to the American Psychiatric Association’s Diagnostic and Statistical Manual of Mental Disorders (DSM-IV-TR) or the World Health Organization’s International Classification of Diseases (ICD-10), the criteria for substance dependence include several direct and indirect references to impaired decision-making. For example, these criteria include pursuing drug use at the cost of other important and valued life activities, an inability to control or cut down drug use despite an explicit desire to do so and using larger amounts of drugs or using them over a longer period than intended. These criteria suggest that those who are addicted lose control over drug use because they are unable to incorporate the negative health and social consequences of drug-seeking and drug-taking into their decision-making.

Consistent with this idea, over the last decade several theories have attempted to explain drug addiction as a failure of the biological decision-making system (see Box 1). Although each theory highlights different aspects of drug addiction and different alterations that may contribute to altered decision-making, a common theme running through these theories is that abnormal decision-making reflects changes in the function of, or interaction between, two decision-making systems: a flexible planning or cognitive control system, usually associated with prefrontal cortical regions, and a rigid habit system, often associated with subcortical regions including, in particular, parts of the basal ganglia and amygdala2.

Box 1. Related addiction theories.

Several important theories have previously been advanced to explain decision-making deficits in addiction. These hypotheses can be grouped into two main classes. The first class proposes that a drug-induced deficit in prefrontal cortical function results in a loss of control over behavior. Jentsch and Taylor showed that primates chronically exposed to cocaine are impaired in inhibiting responding to conditioned and unconditioned stimuli, and they proposed that these effects could contribute to addiction45. Related ideas and experimental evidence have been advanced by Everitt et al.98, Bechara99 and Volkow and colleagues46. A large body of evidence supports the notion that chronic exposure to addictive drugs, particularly psychostimulants, can cause hypofunction in prefrontal cortical areas and concomitant cognitive dysfunctions (see main text). Many have made the argument that these cognitive dysfunctions could contribute to addiction, although direct evidence of this link is not often shown. Volkow has specifically implicated the OFC in these effects46, and Damasio and Bechara have suggested that an OFC-linked deficit in predicting future outcomes could explain some aspects of addiction99. The hypothesis described herein builds on these ideas and advances them in two important ways: first, our hypothesis links drug-induced cognitive dysfunction to specific neurophysiological changes in OFC observed during performance in OFC-mediated tasks, and second, it links these changes to an understanding of the specific informational contributions made by OFC to both decision-making and learning in well controlled cognitive tasks. Thus, our hypothesis attempts to go beyond a phenomenological description of the effects of cocaine exposure on cognition (namely, a loss of inhibitory control or an inability to make good decisions) and instead suggests the underlying reasons, at the level of information processing, for these effects. By formulating the hypothesis in terms of the underlying functions that are affected, further predictions about the cognitive effects of chronic psychostimulant exposure can be made, allowing rigorous testing of the hypothesis.

The second class of related theories, which are not exclusive of prefrontal dysfunction theories, includes those that postulate a drug-induced shift from goal-directed behavior based on action-outcome associations to habitual behavior based on stimulus-response (S-R) associations. Both Robbins and Everitt100 and Jentsch and Taylor45 have suggested that such a shift could explain the loss of control over drug-seeking behavior that is seen in addiction. Some evidence supports the idea that psychostimulant exposure can result in strong S-R associations and a reduction in the efficacy of goal-directed mechanisms in controlling behavior. The hypothesis herein is closely related to these previous hypotheses, with two important distinctions. First, whereas Robbins and Everitt100 have pointed to a shift from frontal to striatal or ventral striatal to dorsal striatal control in models of addiction, here we suggest that specific drug-induced changes to OFC information processing can also contribute to the observed shift in the basis of behavior. Furthermore, on the basis of experimental evidence, we suggest that these changes in OFC could also result in impairments in model-based learning and decision-making processes. Second, we attempt here to understand this shift by using the computational distinction, first advanced by machine learning theorists, between model-based and model-free behavioral control (see Box 2 and the main text for a definition of these terms and how they relate to learning-theory terms). By drawing on these computational approaches, we argue that a more complete understanding of the many behavioral and learning changes seen in cocaine addiction can be achieved. In addition, these computational tools allow predictions about the microstructure of behavior or neural activity (that is, trial-by-trial variations) to be made, again permitting more rigorous testing of the specific hypothesis at issue.

According to parallel computational and learning theory accounts3, these two systems are distinguished by the type and complexity of their underlying associative representations. The flexible planning model-based system (see Box 2 for glossary of specialized terms shown in italics throughout) relies on an associative model of the world to make decisions. This model consists of states that represent the critical cues and outcomes available in an environment and transition functions that contain knowledge about which actions lead from state to state. To make decisions, the system can use this model to mentally simulate sequences of candidate actions and their consequences and thereby derive the expected future outcomes, and thus the subjective values, of available actions. As actions are executed, the expected immediate consequences (ensuing states and rewards) can be compared to actual consequences to continuously update the model. The advantage of this model-based system is that it is up to date and flexible, taking into account at each point in time all available information; because its value judgments are based on a model of how the world is put together, it can (i) integrate predicted outcomes across potential future states and actions and (ii) adapt to new information such as changes in the reward value of predicted outcomes, without having to experience the changed outcomes in the context of the decision at hand.

Box 2. Glossary of terms.

Pavlovian reinforcer devaluation

A procedure in which the conditioned response to a cue is tested after the unconditional stimulus (for example, food) is separately devalued by motivational (for example, inducing satiation with prefeeding) or associative (for example, pairing the food reward with LiCl-induced illness) manipulations. The normal consequences of such manipulations are a decrease in the conditioned responding to the cue previously paired with the reward.

Pavlovian-to-instrumental transfer

Refers to the ability of a Pavlovian conditioned stimulus to influence instrumental (operant) responding (for example, lever pressing). During training, the subject learns to press a lever for a reward (for example, food) and also learns in different sessions a Pavlovian association between the conditioned stimulus and the reward. Subsequently, lever-pressing is assessed in extinction tests in the presence or absence of the Pavlovian conditioned stimulus; the conditioned stimulus is presented noncontingently during testing. Altered responding in the presence of the conditioned stimulus is referred to as Pavlovian-to-instrumental transfer and is thought to reflect the general motivating effect of the Pavlovian cue.

Conditioned reinforcement

A process in which a previously neutral stimulus (a tone or light), which has acquired reinforcing effects through its previous association with a primary or unconditioned reinforcer or reward (for example, food or drug), is used to support the acquisition of a new instrumental response. In a typical experiment, a Pavlovian stimulus is paired with an appetitive unconditional stimulus (for example, food). After acquisition, the subjects are given a test session in which a new instrumental response results in the presentation of the conditioned stimulus.

Second-order conditioning

A Pavlovian conditioning procedure in which a neutral stimulus is paired with a second conditioned stimulus that has been previously paired with an unconditional stimulus and can thus support new conditioning. For example, a tone is paired with food and then a new cue (a light) is paired with the tone. The light will produce a conditioned response even if it was never paired directly with the food.

Delay discounting

The decrease in subjective reward value as a function of the increasing delay of the reward. This subjective discounting is demonstrated empirically by the willingness of subjects to accept a small reward immediately in lieu of a large reward after a delay.

Reversal learning

Any task in which subjects first learn to discriminate among different cues associated with different probabilities of reward and punishment, and then the cue-outcome (reward) associations are reversed.

Pavlovian overexpectation

A Pavlovian conditioning procedure in which two conditioned stimuli are first separately paired with the same unconditioned stimulus (for example, food) and then the cues are combined (in a compound cue) and paired with the same unconditioned stimulus. After such training, the subjects are given a test session in which cues are presented alone, without the unconditioned stimulus; subjects typically show a reduction in conditioned responding to the previously compounded cues, as early as the first test trial. This decline in responding is thought to reflect a decrease in the cues’ associative strength during the compound phase: during this stage the two cues together overpredict the unconditioned stimulus, resulting in a negative prediction error that drives learning and hence a reduction in the cues’ associative strength.

Unblocking

A Pavlovian conditioning procedure in which a neutral stimulus is first paired with an unconditional stimulus (for example, two food pellets). In a second stage, a second stimulus is added to the first (forming a compound cue). Normally this would cause ‘blocking’; that is, there would be no extra learning to the second stimulus as the first stimulus already fully predicts the availability of the unconditional stimulus. However, if the unconditional stimulus is also altered in amount (for example, one food pellet) or quality (for example, two sucrose pellets), learning is unblocked: the second stimulus now predicts the change in the unconditional stimulus and so becomes an effective conditioned stimulus. Conditioning is usually demonstrated by measuring the conditioned response to each conditioned stimulus alone in a subsequent test phase.

Prediction error

The discrepancy between an actual and predicted outcome. According to learning theories, the prediction error constitutes a teaching signal for learning correct predictions.

Model-free learning and cached values

A set of reinforcement learning methods that use prediction errors to estimate and store scalar cue or action values from experience. These stored (‘cached’) values indicate the predicted total future reward if an action or cue is pursued, and they are used to bias choices of actions in order to gain maximal rewards. This decision strategy is simple but inflexible: the values are simply scalar numbers, separated from the identity of the expected future outcomes themselves or the specific events that will ensue en route to obtaining the outcomes. In particular, this means that cached values do not immediately change if the outcomes are revalued or new knowledge about sequences of likely events is acquired. Instead, such a change requires repeated experience and learning of new cached values by means of prediction errors, the differences between the cached value and the actual experienced value. As a result of this inflexibility, it has been postulated that decision-making using cached values underlies habitual behavior.

Model-based learning

A set of reinforcement learning methods in which an internal model of the environment is learned and used to evaluate available actions or cues on the basis of their potential outcomes. In these methods, values are not learned incrementally through prediction errors and stored for future use, but rather are computed ‘on the fly’ when needed, by mental simulation of sequences of events and outcomes using the internal world model. All that is required is a world model that includes predictions about the immediate consequences of each action or state in a sequence. This approach to action selection is computationally expensive owing to the need to mentally simulate and ‘test’ alternative courses of action, but it allows one to flexibly adapt behavior in a changing environment, and specifically, to adapt immediately to changes in the current value of the outcome. As goal-directed behavior is defined as a behavior that changes flexibly according to new knowledge about outcomes and event sequences, it has been suggested that this type of behavior must rely on model-based learning and decision mechanisms.

Habitual behavior

Instrumental behavior that persists despite alterations in the value or utility of its outcomes. Classic examples include a person driving to his old address even after he has moved, or a rat continuing to press a lever that has previously led to food that is no longer desirable. In practice, conditions such as extensive training or certain brain manipulations can cause behavior to become habitual. Owing to its inflexible nature (by definition), habitual behavior has been suggested to result from decision-making based on cached values. Note that habitual behavior describes behavior at the phenomenological level, in contrast to model-free learning, which describes the computational processes underlying learning and action selection that may explain habitual behavior (see above). (While habitual and goal-directed behaviors are most commonly used now to refer to instrumental, not Pavlovian, responses, the computational distinction between inflexible, cached value–based and flexible, world model–based behavior is more far-reaching and can readily encompass Pavlovian responses.)

Goal-directed behavior

Instrumental responding that is sensitive both to the contingencies between responses and outcomes and to the current desirability of these outcomes. As such, the hallmark of goal-directed behavior is its immediate flexibility in the face of changes in outcome availability or current value. As goal-directed responding is expected to adapt to new information immediately even if this information is acquired in a different context, it has been suggested that such behavior must rely on model-based representations and on flexible, online, mental simulation–based evaluations of actions. The term goal-directed behavior describes behavior at the phenomenological level, in contrast to model-based learning, which describes the computational processes that may explain goal-directed behavior.

By contrast, the more rigid model-free habit system relies on stored or cached values associated with each mental representation or ‘state’ of the environment (see Box 2 for more detailed definition). These values accumulate over time through repeated experience of the consequences of cues and actions. This model-free system is quicker to make decisions, as it does not require planning or mental simulation. However, its decisions are more stimulus bound and do not reflect knowledge about changes in future consequences or outcomes until these have been repeatedly experienced in the specific decision-making context. Although originally viewed as operating independently, new evidence suggests that these two systems interact in determining behavior and also in facilitating learning, through their impact on prediction error signaling mechanisms4.
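The contrast between the two systems can be made concrete with a toy simulation. The sketch below is illustrative only and is not taken from any of the studies reviewed here: the cue and outcome names, the learning rate `ALPHA` and the helper functions `train_model_free` and `model_based_value` are all hypothetical. It assumes a single cue that reliably leads to one food outcome, and it shows that devaluing the outcome away from the cue changes the model-based value at once, whereas the cached value changes only after the devalued outcome is re-experienced following the cue.

```python
ALPHA = 0.2  # learning rate; an arbitrary value assumed for illustration


def train_model_free(reward, n_trials, value=0.0, alpha=ALPHA):
    """Cache a scalar cue value through repeated prediction-error updates."""
    for _ in range(n_trials):
        prediction_error = reward - value  # actual minus predicted outcome
        value += alpha * prediction_error
    return value


def model_based_value(cue, transitions, outcome_values):
    """Compute a cue's value on the fly from the world model.

    transitions[cue] maps each possible outcome to its probability;
    outcome_values holds the current desirability of each outcome.
    """
    return sum(p * outcome_values[o] for o, p in transitions[cue].items())


# Hypothetical world model: the light is always followed by food worth 1.0.
transitions = {"light": {"food": 1.0}}
outcome_values = {"food": 1.0}

# Training phase: both systems come to value the cue highly.
cached = train_model_free(reward=1.0, n_trials=50)
mb_before = model_based_value("light", transitions, outcome_values)

# Devaluation happens in the absence of the cue (e.g. satiety or illness).
outcome_values["food"] = 0.0

# Model-based value reflects the change immediately; cached value does not.
mb_after = model_based_value("light", transitions, outcome_values)
mf_after = cached

# The cached value shifts only after re-experiencing the devalued outcome.
relearned = train_model_free(reward=0.0, n_trials=1, value=cached)

print(f"model-based: {mb_before:.2f} -> {mb_after:.2f}")
print(f"model-free:  {cached:.2f} -> {mf_after:.2f} (then {relearned:.2f} after one trial)")
```

In this sketch the model-based evaluator stores no value at all for the cue; it recomputes one from the transition table and the current outcome values each time, which is why it is flexible but, in a larger model, more costly to run.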

Here, we build on these ideas to suggest that in cocaine addiction the mechanisms used to access model-based representations to generate representations of the specific consequences of an act often fail. The effect of this failure is evident in the inability of cocaine-addicted humans and cocaine-experienced rats and monkeys to make adaptive decisions in the present, and in their corresponding difficulty in learning from unexpected or changing outcomes to improve decision-making in the future. We propose that this failure results, in part, from long-lasting cocaine-induced neuroadaptations in the orbitofrontal cortex (OFC) and other interconnected brain regions that prevent these areas from using model-based representations to derive accurate predictions about expected outcomes. To support our specific proposal, we primarily discuss human and nonhuman studies on the effect of cocaine exposure on OFC neurons and OFC-controlled behavior, but when appropriate we also include other psychostimulants (see Supplementary Note for consideration of the effects of other drugs of abuse on OFC function).

The OFC is critical for using model-based information

According to theoretical accounts, decisions are based on predictions of the value or utility of outcomes expected to result from a potential action. Besides guiding decision-making directly, information about expected outcomes can also be compared to actual outcomes to facilitate learning in a changing or uncertain environment. In this section, we review data from our own and others’ studies that consistently demonstrate the involvement of the OFC in both of these functions, particularly when expected value must be derived from a model-based representation of the structure of a particular task or environment.

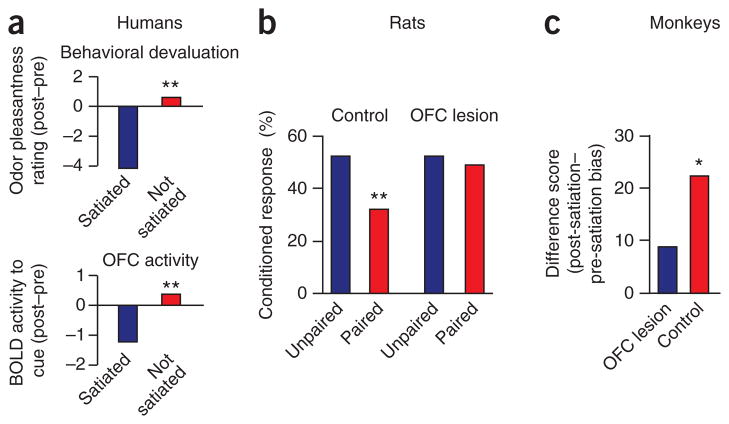

Nonhuman and human studies demonstrate that neurons in the OFC represent primary reinforcers and distinguish between rewarding and punishing outcomes5–7. These neurons encode details concerning the sensory properties of rewards, such as visual, olfactory and gustatory aspects8, and the size or timing of past or future rewards9–11, as well as the magnitude of more abstract rewards and penalties such as money12,13. Notably, OFC neurons are also activated in the anticipation of the receipt of such outcomes in the near future14–17; this neural activity seems to reflect the distinctive value of specific outcomes that are expected. For example, anticipatory neural activity in OFC corresponds to the preference for the particular reward relative to others available, reflecting the animal’s motivational state15, and activity in OFC changes when the value of an outcome is reduced by satiety18. Similar results have been obtained in human functional magnetic resonance imaging studies, where blood oxygen level–dependent (BOLD) activity in OFC has been shown to be correlated with changes in the value of expected outcomes19,20 (Fig. 1a). Such correlates of flexible, motivation-dependent evaluations are difficult to explain as reflecting cached value and instead are thought to require access to model-based representations of specific outcomes of actions3.

Figure 1.

The role of orbitofrontal cortex in changing conditioned responding as a result of reinforcer devaluation. (a) Changes in BOLD signal in human orbitofrontal cortex after reinforcer devaluation. Subjects were scanned during presentation of odors of different foods. Subsequently, one food was devalued by overfeeding and then subjects were rescanned. Subjective appetitive ratings of the odor (top) and BOLD response to the odor-predicting cue in orbitofrontal cortex (bottom) decline for satiated but not nonsatiated foods. (b) Changes in Pavlovian conditioned responding in sham and OFC-lesioned rats after reinforcer devaluation. Rats were trained to associate a light cue with food. Subsequently, the food was devalued by pairing it with LiCl-induced illness and response to the cue was assessed in a final probe session. Rats with OFC lesions fail to show any effect of devaluation on conditioned responding (percentage of time in food cup), despite normal conditioning and devaluation of the food reward. (c) Changes in discriminative responding in sham and orbitofrontal-lesioned monkeys after reinforcer devaluation. Monkeys were trained to associate different objects with different food rewards. Subsequently, one food was devalued by overfeeding and then discrimination performance was assessed in a probe test. The figure illustrates a difference score comparing post- and pre-satiation bias; OFC-lesioned monkeys fail to bias their choices away from objects associated with the satiated food. *P < 0.05; **P < 0.01. Adapted from refs. 20–22.

Evidence that these neural firing patterns do guide behavior comes from experiments in which decisions are especially dependent on momentary information regarding outcome predictions, such as Pavlovian reinforcer devaluation. In this procedure, a neutral cue is paired with the delivery of an appetitive food outcome (reward), resulting in the formation of associations between the cue and the sensory and motivational representation of the outcome in hungry rats. Subsequently, in a probe test after the food outcome is devalued (by pairing the reward with lithium chloride, which induces nausea and aversion to the food, or by giving free access to the food to induce satiation), the conditioned response to the reward-predicting cue is decreased. This decrease is observed on the first trial of the probe test and occurs even though the devalued food is never experienced in conjunction with the cue; thus, the decrease cannot be based on new learning about the value of the cue through experience. Rather, the new value must be computed by integrating two previously learned pieces of information; namely, that the outcome follows the cue and that this outcome has a new value. Animals with OFC lesions fail to show any effect of devaluation on cue-evoked conditioned responses in this procedure, despite normal conditioning and devaluation of the food outcome21,22. That is, OFC-lesioned animals will continue responding in anticipation of a devalued outcome, despite behavior indicating that the outcome is no longer desirable (Fig. 1b,c). Notably, OFC is particularly critical in the final test phase, in which previously acquired information about the association between the cue and the food and between the food and illness or satiety must be integrated to guide the response23. These data provide convincing evidence that OFC is fundamental for using information about expected outcomes to guide behavior when such information must be derived from the causal structure of the environment.

Other examples that support the role of the OFC in guiding behavior on the basis of specific information about expected outcomes (and not merely the cached value of these outcomes) come from Pavlovian-to-instrumental transfer24, conditioned reinforcement25,26, second-order conditioning27, delay discounting28,29 and even procedures that require counterfactual reasoning30. Each of these tasks requires subjects to access a model-based representation of the task to calculate the current value of a particular outcome that can be expected.

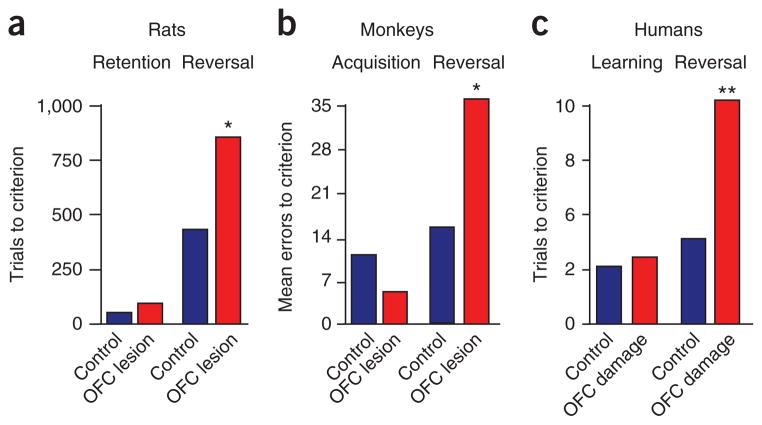

In addition to guiding behavior on the basis of information about expected appetitive or aversive outcomes, the OFC also influences learning in response to changes in these outcomes. Historically this has been most apparent in reversal learning tasks. In these tasks, subjects learn to associate different cues (typically two) with different probabilities of reward and punishment; after learning, the cue-outcome associations are reversed such that each cue now predicts the other outcome. Many studies in rats, mice, primates and humans have implicated the OFC in reversal learning (see ref. 31 for review) (Fig. 2).

Figure 2.

The role of orbitofrontal cortex in reversal learning. (a) Rats were trained to sample odors at a central port and then respond at a nearby fluid well. In each odor problem, one odor predicted sucrose and a second quinine. Rats had to learn to respond for sucrose and to inhibit responding to avoid quinine, and then the odor-outcome associations were reversed. Shown is the number of trials required by controls and OFC-lesioned rats to meet a 90% performance criterion. OFC-lesioned rats show intact retention of a previously learned discrimination, but impaired reversal learning. (b) Group mean errors to criterion for initial learning and nine serial reversals in object reversal learning in monkeys with bilateral OFC lesions. OFC-lesioned monkeys are specifically impaired in reversal learning. (c) Initial stimulus-reinforcer association learning and reversal learning performance in subjects with damage to the ventromedial prefrontal region and in controls. Initial learning and reversal performance is expressed as trials before the learning criterion is met. Subjects with lesions make more than twice as many errors as healthy controls in the reversal phase of the task. *P < 0.05; **P < 0.01. Adapted from refs. 22,95,96.

However, reversal impairments are difficult to interpret. The tasks are typically complex and potentially confound impaired learning (that is, the acquisition of the new information or extinction of the old) and performance (that is, the use of the new information). We have recently used a Pavlovian overexpectation task32 to provide a more direct test of whether outcome expectancies signaled by OFC contribute directly to learning. In the first stage of this experiment, several cues are separately paired with reward (typically food). In the second stage, two of these cues are presented together as a compound cue, followed by the same reward. This is the ‘overexpectation’ stage in which the animal presumably expects not just one but two rewards to follow the compound cue, and must adjust its predictions in light of the delivery of only one reward. In a subsequent probe test in which each cue is presented alone (in extinction), subjects show reduced conditioned responding to the previously compounded cues. Bilateral inactivation of OFC in rats during the compound training phase prevents this normal reduction in conditioned response, suggesting that OFC is essential for learning from the mismatch of expected and obtained outcomes33. Notably, we are not suggesting that this is an additional function of OFC; rather, the same information that OFC provides to guide behavior after devaluation may be used by downstream areas to generate prediction errors to facilitate learning in overexpectation. Consistent with this idea, summation (the increased response in the compound phase, demonstrating that the rat combines the predictive value of the two cues) is also absent if the OFC is inactivated33. Indeed, the value judgment required for summation is conceptually similar to that underlying devaluation effects in that it requires the animal to integrate existing representations to derive a novel value prediction. In both cases, this new value has not been experienced directly as a consequence of the cue, and thus it cannot be reflected in cached or stored cue values.
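The logic of overexpectation can be sketched with a simple error-driven learning rule. The simulation below is a hedged illustration, not the analysis used in the cited experiments: it assumes a Rescorla-Wagner-style update (a model the text does not name), an arbitrary learning rate, two hypothetical cues `A` and `B`, and a `summation` flag that we introduce as a crude stand-in for whether the predictions of the two cues are combined during the compound phase. With summation, the compound overpredicts the single reward, the prediction error is negative and both cues lose strength. Without summation, each cue is compared with the reward on its own, no error arises and no learning occurs.

```python
def trial(strengths, cues, reward, alpha=0.1, summation=True):
    """Run one conditioning trial and return the prediction error per cue.

    With summation=True the prediction is the sum over all cues present
    (Rescorla-Wagner style). With summation=False each cue is evaluated
    against the reward using only its own stored strength.
    """
    total = sum(strengths[c] for c in cues)
    errors = {}
    for c in cues:
        prediction = total if summation else strengths[c]
        errors[c] = reward - prediction
    for c in cues:
        strengths[c] += alpha * errors[c]
    return errors


def simulate(summation, n_trials=100):
    """Stage 1: cues trained separately. Stage 2: cues trained in compound."""
    strengths = {"A": 0.0, "B": 0.0}
    for _ in range(n_trials):
        trial(strengths, ["A"], reward=1.0)
        trial(strengths, ["B"], reward=1.0)
    after_stage1 = dict(strengths)

    # Compound phase: both cues together, followed by the same single reward.
    first_errors = trial(strengths, ["A", "B"], reward=1.0, summation=summation)
    for _ in range(n_trials - 1):
        trial(strengths, ["A", "B"], reward=1.0, summation=summation)
    return after_stage1, dict(strengths), first_errors


stage1, intact, intact_err = simulate(summation=True)
_, no_sum, no_sum_err = simulate(summation=False)

print(f"after stage 1:      A = {stage1['A']:.2f}")
print(f"with summation:     A = {intact['A']:.2f} (first error {intact_err['A']:.2f})")
print(f"without summation:  A = {no_sum['A']:.2f} (first error {no_sum_err['A']:.2f})")
```

The `summation=False` branch is only one possible way to express the idea that stored cue values alone cannot generate the overexpectation error; it is not meant as a model of what OFC inactivation does at the neural level.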

By contrast, OFC inactivation in these same rats has no effect on extinction learning when reward is simply omitted34. Such extinction does not require summation of expectancies across cues, or other ‘online’ value calculations, and thus can be accomplished by relying only on cached values of the individual cues. Data such as these suggest that OFC will be involved in learning when it is necessary to resort to model-based representations to recognize prediction errors. This idea is consistent with our recent results that OFC is necessary for unblocking of learning, but only when unblocking is driven by a change in outcome identity (for example, banana pellets to grape), not when it is driven by a change in value (for example, two banana pellets to three banana pellets)35.

The idea that OFC is critical to learning owing to a role in signaling model-based information relevant to the derivation of the values of expected outcomes may also account for recent evidence from probabilistic reversal learning tasks, suggesting that OFC-dependent deficits reflect an inability to accurately assign credit for errors36–38. In two of these studies36,37, a detailed analysis of the choice pattern showed that although monkeys with lateral OFC lesions still recognize positive and negative errors in reward prediction and adjust behavior according to these, the monkeys are unable to constrain the spread of effect of the errors to the chosen cues alone, but rather overgeneralize to the most commonly chosen cues. This result shows that OFC lesions leave intact some prediction error signaling but that the residual capacity is not well constrained by information about what actions were recently taken or, in our terminology, is not applied only to the relevant action-outcome contingency. Information about the contingencies between specific actions and outcomes is inherently part of a model-based system.

The data described above support the notion that OFC functions to guide behavior that is based on the value of an expected reward or punishment, and in addition contributes to learning when expected outcomes change. In each case, the role of OFC seems to be most critical when judgments about expected outcomes and their value must be derived from model-based information. Below we discuss the implications of this idea for understanding the significance of structural and functional changes to the OFC that are associated with cocaine addiction.

Cocaine is associated with OFC dysfunction

Results from many neuropharmacological studies in animal models indicate that neuronal activity in the mesocorticolimbic dopamine system (which comprises cell bodies in the ventral tegmental area that project to several brain areas, including the medial and orbital prefrontal cortex, nucleus accumbens and amygdala) underlies cocaine-seeking behaviors39. On the basis of these studies, an influential hypothesis is that cocaine addiction is due to cocaine-induced neuroadaptations in these circuits40–43. These neuroadaptations have been hypothesized to cause hypersensitivity to cocaine-associated cues44, impulsive decision-making45,46, abnormal habit-like behaviors47,48 and persistent relapse vulnerability49. Over the last decade, investigators have demonstrated causal roles of specific cocaine-induced neuroadaptations in cocaine reward50, escalation of cocaine intake51 and relapse to cocaine seeking52. In contrast, evidence linking specific cocaine-induced brain neuroadaptations with an inability to use information about consequences or long-term outcomes to control cocaine use, a core feature of addiction, has been slower to develop. Here, we argue that this core feature of addiction is due, in part, to cocaine-induced neuroadaptations in OFC at the molecular, structural, functional and circuit organizational levels.

At the structural level, several studies have reported a decrease in gray matter concentration in the OFC of cocaine53–55 and methamphetamine56 users. Gray matter volume reduction in OFC of cocaine users has been correlated with greater duration of cocaine dependence and greater compulsivity of drug use, as assessed with the Obsessive Compulsive Drug Use Scale (OCDUS)55. The OCDUS measures general craving and motivational drive to use drugs, suggesting that drug-induced adaptations in the OFC may also underlie the increase of the motivational effects of drugs and drug-related cues over time. In addition, structural abnormalities in frontal white matter have been found, including decreases in frontal white matter integrity among psychostimulant users57–59.

This drug-associated reorganization has also been identified using functional neuroimaging. Studies have shown a general decrease in glucose metabolism, which is considered a marker of neuronal function, throughout the brain of psychostimulant users. This reduction is particularly apparent in the frontal cortex during acute cocaine use60,61. During early periods of drug abstinence, circuits including the OFC are hypermetabolic. This hypermetabolism is correlated with the intensity of spontaneous craving62. In contrast, during protracted withdrawal, the OFC is hypoactive in cocaine63,64 and methamphetamine65,66 users. Moreover, in cocaine and methamphetamine users, the degree of this hypometabolism has been shown to correlate with decreased dopamine D2 receptors in striatum67.

The results from these human studies indicate that OFC structure and activity are altered in cocaine and methamphetamine users, but these data cannot distinguish whether changes in OFC function are induced by psychostimulant use (or even exposure) or represent a pre-existing condition. This issue has been addressed in studies using animal models. For example, a focal structural analysis of the dendritic morphology in OFC and medial prefrontal cortex (mPFC) in rats revealed a profound effect of psychostimulants on the spine density in these areas after prolonged withdrawal from noncontingent or contingent drug exposure68. Interestingly, chronic amphetamine or cocaine exposure increases dendritic length and spine density in mPFC (and nucleus accumbens) but decreases these measures in OFC69. Chronic exposure of rats to cocaine has also been shown to result in accumulation of the transcription factor ΔFosB in both mPFC and orbitofrontal regions after 18 to 24 h of withdrawal from the drug70.

Overall, these data suggest that the OFC is one important substrate for rapidly emerging and enduring alterations resulting from psychostimulant exposure. However, these results do not address the underlying relevance of the psychostimulant-induced changes to behaviors that are relevant to addiction. Given the large number of degrees of freedom available in any study of the effects of drugs on molecular or other markers across an entire neural circuit, it is critical that a drug-induced change be linked to behavior. According to our present understanding of OFC function, such alterations in OFC could cause long-lasting impairments to the ability of OFC to support outcome-guided decision-making, as well as learning that results from comparing expected outcomes with actual outcomes. In the next section, we review behavioral and neurophysiological data that directly support this hypothesis.

Cocaine alters OFC-dependent behavior and underlying neurophysiology

A growing number of studies demonstrate that reversal learning is impaired after psychostimulant exposure (Table 1). Jentsch, Taylor and colleagues71 were the first to demonstrate this effect. Monkeys were given experimenter-administered cocaine for 14 days (2 or 4 mg per kilogram body weight per day, intraperitoneally) and then tested in an object discrimination and reversal task, 9 and 30 days after withdrawal from cocaine. These monkeys acquired the discriminations normally but made many more errors than controls in learning the reversal (Fig. 3a). The same results were obtained in monkeys allowed to self-administer cocaine 4 days per week for 9 months and tested in a reversal task after a 72-hour withdrawal period on a weekly basis72.

Table 1.

Effect of different experimental procedures on OFC-dependent deficits in drug-treated animals

| Drug | Species | Experimental procedure | Training dose; treatment schedule | Withdrawal period | Behavioral impairment | Refs. |
|---|---|---|---|---|---|---|
| Cocaine | Monkey | EA | 2 or 4 mg kg−1 per day i.p.; 14 days, 1 injection per day | 9 and 30 days | Object discrimination reversal task | 71 |
| Cocaine | Monkey | SA | 0.1 to 0.5 mg kg−1 per infusion; 9 months, 6 infusions per day | 3 days | Reversal task; delayed match-to-sample task | 72 |
| Cocaine | Rat | EA | 30 mg kg−1 per day i.p.; 14 days, 1 injection per day | 3–6 weeks | Odor discrimination reversal task; reinforcer devaluation task; delay discounting task | 73,97,86,87,89 |
| Cocaine | Rat | SA | 0.75 mg kg−1 per infusion; 14 days, 60 infusions per day | 4 weeks | Odor discrimination reversal task; Pavlovian overexpectation task | 74, F.L. et al.a |
| Cocaine | Mouse | EA | 30 mg kg−1 per day i.p.; 14 days, 1 injection per day | 2 weeks | Instrumental reversal task; delayed matching-to-position task | 75 |
| Methamphetamine | Rat | EA | 2 mg kg−1 s.c.; 1 day, 4 injections | 3–5 days | Reversal discrimination task; attentional set shifting task | 76 |

EA, experimenter-administered; SA, self-administered; i.p., intraperitoneal injection; s.c., subcutaneous injection.

a F. Lucantonio et al., Soc. Neurosci. Abst. 707.707/MMM709, 2010.

Figure 3.

Effect of cocaine on reversal learning. (a) Incorrect responses in monkeys exposed to noncontingent cocaine (4 mg kg−1, once daily for 14 d) or saline in a reversal task. Compared with controls, cocaine-treated monkeys show similar acquisition but impaired discrimination-reversal learning. (b) Rats were trained to self-administer cocaine (0.75 mg kg−1 per infusion, 4 hours per day for 14 days) and then tested on the same odor discrimination reversal task used in Figure 2a, after approximately 3 months of withdrawal from the drug. Cocaine self-administration has no effect on retention but impairs reversal learning. (c) Consecutive incorrect responses immediately after the change in reward contingencies in chronic cocaine users. Cocaine users show response perseveration to the previously rewarded stimulus. *P < 0.05; **P < 0.01. Adapted from refs. 71,78,96.

This basic finding has since been replicated in rats, mice and humans. We have found similar deficits in rats tested on an odor discrimination reversal task after withdrawal from either noncontingent or self-administered cocaine73,74 (Fig. 3b). Other studies have found deficits after cocaine exposure in mice trained on an instrumental reversal learning task and after methamphetamine exposure in rats trained on a visual discrimination task75,76. These findings have been extended to human cocaine or polydrug users tested on a probabilistic reversal learning task77,78 (Fig. 3c). Additionally, subjects addicted to methamphetamine79,80 show impaired performance on the Iowa Gambling Task, in which choosing cards correctly requires reversing previously learned associations between card decks and rewards; this impairment is consistent with a reversal learning deficit. Thus, whereas exposure to psychostimulants does not impair basic learning abilities, it does seem to cause a specific deficit in the ability to adjust behavior in response to changes in established associations. This pattern of results closely mirrors that seen with OFC lesions or inactivation. Notably, in animal models, these deficits typically persist for weeks and even months after the last drug exposure and are therefore well positioned to play a critical role in drug relapse and other long-term behavioral issues that define drug addiction.

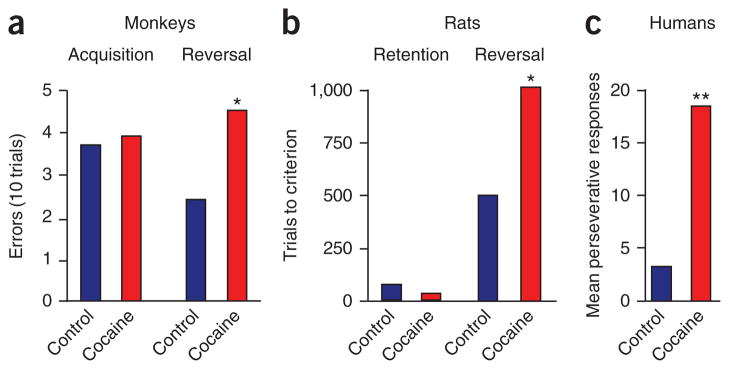

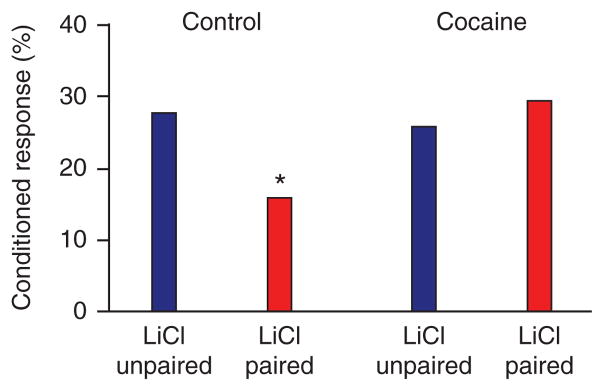

Besides reversal learning, a striking parallel exists in several other procedures between the behavioral effects of OFC lesions or inactivation and the long-lasting effects of chronic psychostimulant exposure. For example, cocaine-experienced rats show a deficit identical to that seen in OFC-lesioned rats in the Pavlovian reinforcer devaluation task described earlier (Fig. 4). These rats show normal conditioning and taste aversion learning, and they also extinguish conditioned responding normally in the absence of the food reward; however, they fail to change their conditioned responding in response to devaluation of the food. Similarly, amphetamine-sensitized rats show no devaluation effect in an instrumental reinforcer devaluation task81. Although instrumental devaluation deficits may be linked to striatal or medial prefrontal dysfunction, dorsal striatal regions implicated in this effect receive strong input from orbitofrontal areas82, and normal performance in this setting has recently been shown to be affected by OFC lesions (C.M. Gremel & R.M. Costa, Soc. Neurosci. Abst. 682.684/GG658, 2009). These results suggest that cocaine exposure may induce changes in OFC or related areas that disrupt the normal ability of these circuits to represent and use information about expected outcomes, particularly when deriving the appropriate value requires access to a model of how the task works.

Figure 4.

Effect of cocaine on changes in conditioned responding as a result of reinforcer devaluation in rats. Rats were trained in the same task described in Figure 1a. Shown is the mean percentage of time spent in the food cup during presentation of the food-predicting cue after devaluation, in the extinction probe test. Red bars, devalued groups; blue bars, non-devalued groups. During the devaluation test, cocaine-exposed rats fail to change conditioned responding as a result of previous devaluation of the food reward. *P < 0.05. Adapted from ref. 97.

The effect of cocaine exposure also extends to the Pavlovian overexpectation task described earlier. Rats previously trained to self-administer cocaine and tested in the overexpectation task more than a month later failed to show summation during compound training and also failed to show the spontaneous reduction in responding to the compounded cue in the later probe test (F. Lucantonio et al., Soc. Neurosci. Abst. 707.707/MMM709, 2010). Thus, cocaine-experienced rats were unable to integrate reward expectations during compound training to drive learning. In contrast, the same rats were able to extinguish responding when the actual reward was omitted. As noted earlier, extinction learning in response to reward omission contrasts with extinction driven by overexpectation in that the former does not require the integration of reward expectancies that is necessary for the latter. This subtle deficit in one but not another form of extinction learning provides potential insight into the puzzling inability of addicted humans to effectively extinguish drug-taking in complex, real-world environments, which are far more likely to involve the integration—and estimation—of predictions from a variety of sources.
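The dissociation between extinction by overexpectation and extinction by reward omission can be illustrated with a toy simulation in the style of the Rescorla-Wagner rule. This is a minimal sketch under stated assumptions, not the model used in the studies above: the function `train`, the learning rate `alpha`, the trial counts and the `summed` switch are all hypothetical. With `summed=True` the prediction error compares the reward with the combined prediction of all cues present, so presenting two fully trained cues together produces a negative error. With `summed=False` each cue is compared with the reward on its own, which is one simple way to picture a learner that cannot integrate expectations.

```python
def train(weights, cues, reward, trials, alpha=0.1, summed=True):
    """Update associative strengths for the cues present on each trial.

    summed=True: the error compares the reward with the sum of all
    cue predictions (expectations are integrated).
    summed=False: each cue is compared with the reward on its own
    (expectations are not integrated).
    """
    w = dict(weights)
    for _ in range(trials):
        total = sum(w[c] for c in cues)  # combined prediction before updating
        for c in cues:
            prediction = total if summed else w[c]
            w[c] += alpha * (reward - prediction)
    return w


start = {"A": 0.0, "B": 0.0}
# Each cue is first trained separately with one unit of reward.
trained = train(train(start, ["A"], 1.0, 200), ["B"], 1.0, 200)

# Compound training: A and B together, still one unit of reward.
integrated = train(trained, ["A", "B"], 1.0, 200, summed=True)
unintegrated = train(trained, ["A", "B"], 1.0, 200, summed=False)

# Ordinary extinction: A alone, reward omitted, no integration needed.
omission = train(trained, ["A"], 0.0, 200, summed=False)

print("after compound training, integrated:  ", integrated)
print("after compound training, unintegrated:", unintegrated)
print("after reward omission, unintegrated:  ", omission)
```

In this sketch the integrating learner loses about half of each cue's strength during compound training, the non-integrating learner loses none, and both kinds of learner extinguish when the reward is omitted, because omission creates an error even without summing predictions.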

Further evidence that psychostimulant exposure impairs the ability to guide behavior on the basis of specific information about expected outcomes comes from studies using other behavioral procedures sensitive to OFC dysfunction. For example, chronic exposure to psychostimulants has been reported to increase impulsive choice in delay discounting tasks, both in human cocaine users83–85 and in rats exposed to cocaine86,87.

Of course, none of these effects necessarily require changes in OFC. Effects of systemic drugs, even if they resemble those of brain lesions, may be due to alterations in other parts of the circuit that simply mimic the lesion’s effects. However, exposure to psychostimulants has been shown to affect activity of single units recorded in OFC in awake, behaving rats. For example, rats sensitized to amphetamine show enhanced activity in OFC neurons recorded during instrumental behavior, particularly in categories of neurons that seem to be task responsive88. These results suggest a general overactivation of OFC, which could potentially distort and obscure the representation of associative information crucial to OFC-mediated behaviors such as reward devaluation and reversal learning.

In addition, we have shown that signaling of outcome expectancies by OFC neurons is directly affected by cocaine exposure, even when recording is conducted several months after withdrawal from the drug. These expectancies are evident in the selective firing activity of OFC neurons during sampling of cues that predict the delivery of sucrose reward or quinine punishment5,14. During learning, distinct populations of neurons in OFC respond selectively to one or the other outcome. At the point at which rats show behavioral evidence of having learned the contingencies, a subset of these outcome-selective neurons begins to fire selectively for the odor that predicts their preferred outcome14. This pattern of neural activity seems to reflect a representation of the expected outcome at the time of cue sampling, which could be used to guide decision-making and to recognize violations of expectations when contingencies change. OFC neurons recorded in rats exposed to the same regimen of cocaine that we have found to disrupt OFC-dependent reversal learning, reinforcer devaluation and overexpectation fail to develop these neural correlates at the time of odor sampling89.

Conclusions

As outlined in DSM-IV, maladaptive decision-making is a hallmark of addiction to cocaine and other drugs of abuse. Here we have reviewed recent findings to support the proposal that such maladaptive decision-making in people addicted to cocaine may be due, at least in part, to cocaine-induced changes in OFC function. Behavioral impairments in cocaine-addicted humans and cocaine-experienced animals are reminiscent of behavioral deficits caused by damage to OFC, and there is good evidence that cocaine exposure alters structural and neurophysiological markers of OFC function, including critical correlates that we believe underlie OFC-dependent behaviors.

Evidence suggests that OFC is critical for humans and animals to navigate model-based task representations to derive estimates regarding the values of expected outcomes for the purposes of guiding behavior and facilitating learning. Loss of this function causes the behavior of some humans and laboratory animals who have been exposed to psychostimulants to become more dependent on model-free cached values to make decisions and drive learning. Whereas this cached-value or model-free decision-making system is quicker and more efficient, its decisions are inflexible and stimulus-bound, and therefore more prone to errors, because they do not reflect changes in consequences or outcomes downstream until these changes have been experienced repeatedly and used to modify the cached values.
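The contrast between cached and derived values can be sketched with a toy version of reinforcer devaluation. This is an illustration under stated assumptions rather than a model taken from the studies reviewed here: the cue name `light`, the outcome `food`, and the functions `model_free_value` and `model_based_value` are hypothetical. The model-free estimate is a stored number attached to the cue, whereas the model-based estimate is recomputed from a transition function (which outcome follows the cue) and the current value of that outcome.

```python
def model_free_value(cached_values, cue):
    """Return the value stored for the cue during past experience."""
    return cached_values[cue]


def model_based_value(transitions, outcome_values, cue):
    """Derive the cue's value from predicted outcomes and their current worth."""
    return sum(prob * outcome_values[outcome]
               for outcome, prob in transitions[cue].items())


# State after conditioning: the light reliably predicts food worth 1.0.
cached_values = {"light": 1.0}           # model-free cache
transitions = {"light": {"food": 1.0}}   # model: light -> food
outcome_values = {"food": 1.0}           # current value of the outcome

before = (model_free_value(cached_values, "light"),
          model_based_value(transitions, outcome_values, "light"))

# Devaluation: the food is paired with illness away from the cue, so only
# the outcome's value changes. The cue itself is never re-experienced.
outcome_values["food"] = 0.0

after = (model_free_value(cached_values, "light"),
         model_based_value(transitions, outcome_values, "light"))

print("before devaluation (model-free, model-based):", before)
print("after devaluation  (model-free, model-based):", after)
```

After devaluation the derived value falls at once, while the cached value stays at its old level until new experience with the cue revises it. A learner that relies on the cache alone would keep responding to the cue, which matches the devaluation deficit described for OFC-lesioned and cocaine-experienced rats.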

A change in the balance between the model-based and model-free systems would affect both behavior and learning in complex ways. Learning and behavior could appear normal or perhaps even enhanced in simple situations in which model-free information is sufficient, such as the extinction or acquisition of simple Pavlovian associations. For example, cocaine-exposed rats have been reported to show enhanced conditioned responding90, higher sensitivity to cues predicting large rewards86 and enhanced Pavlovian-to-instrumental transfer effects91. However, in complex situations where signals regarding expected values must be derived from model-based representations of the states and transition functions that define a task, learning and behavior would be impaired. The examples above illustrate this dissociation. Cocaine-experienced rats condition and extinguish normally based on reward delivery or omission; however, they are unable to learn to modify responding as a result of overexpectation. Real-world situations in which addicted humans are unable to learn to modify drug-seeking behaviors will typically fall into the latter category.

Interestingly, drug users are still able to pursue seemingly novel and elaborate schemes to obtain drugs. This is consistent with a view that the OFC is not the sole region contributing to the generation and use of these models. However, behavior that is complex or apparently novel could still rely largely on cached, model-free values. Imagine, for example, a seemingly complex ‘plan’ that consists of a series of simpler behaviors, each of which has previously acquired a cached value. What distinguishes model-based behavioral control from model-free is not complexity per se but rather the flexibility with which it is pursued, and assessing this flexibility requires a formal test. That is, drug users could be engaging in complex behaviors that are based on an inflexible, cached network of values. The anecdotal evidence cannot resolve whether this is actually the case.

Finally, it is worth noting that decision-making impairments are important both in the early stages of cocaine addiction in mediating and facilitating initial maladaptive responses to cocaine exposure and in supporting drug-taking when drugs are taken intermittently. In addition, such impairments may also persist and interfere with the success of therapies designed to promote long-term abstinence. Most of the drug-induced changes to OFC function reported here were detected several weeks after drug exposure, whereas Kantak and colleagues92 did not observe any impairment in OFC-dependent behavior in cocaine-experienced rats when testing was conducted without any withdrawal period. These data suggest that changes in OFC function develop over time or with repeated bouts of intermittent use, as typically occurs in humans. Consistent with this idea, several studies have demonstrated the involvement of the OFC in cocaine seeking or relapse after re-exposure to cocaine-related cues in both humans and laboratory animals even after long periods of abstinence93,94. Thus OFC dysfunction may be most strongly linked to the long-term behaviors that define cocaine addiction and not to the immediate effects of the drug.

Acknowledgments

This review is dedicated to the family of Jacob Peter Waletzky. The work of writing it was supported by funding from the intramural and extramural programs of the US National Institute on Drug Abuse, including time while G.S. and T.A.S. were employed at the University of Maryland, Baltimore. The opinions expressed in this article are the authors’ own and do not reflect the view of the US National Institutes of Health, the Department of Health and Human Services, or the United States government.

Footnotes

Note: Supplementary information is available on the Nature Neuroscience website.

COMPETING FINANCIAL INTERESTS

The authors declare no competing financial interests.

Reprints and permissions information is available online at http://www.nature.com/reprints/index.html.

References

- 1. Mendelson JH, Mello NK. Management of cocaine abuse and dependence. N Engl J Med. 1996;334:965–972. doi: 10.1056/NEJM199604113341507.

- 2. Cardinal RN, Parkinson JA, Hall G, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- 3. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 4. Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-based influences on humans’ choices and striatal prediction errors. Neuron. 2011;69:1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- 5. Thorpe SJ, Rolls ET, Maddison S. The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp Brain Res. 1983;49:93–115. doi: 10.1007/BF00235545.

- 6. O’Doherty J, Rolls ET, Francis S, Bowtell R, McGlone F. Representation of pleasant and aversive taste in the human brain. J Neurophysiol. 2001;85:1315–1321. doi: 10.1152/jn.2001.85.3.1315.

- 7. Liu X, et al. Functional dissociation in frontal and striatal areas for processing of positive and negative reward information. J Neurosci. 2007;27:4587–4597. doi: 10.1523/JNEUROSCI.5227-06.2007.

- 8. Rolls ET, Baylis LL. Gustatory, olfactory, and visual convergence within the primate orbitofrontal cortex. J Neurosci. 1994;14:5437–5452. doi: 10.1523/JNEUROSCI.14-09-05437.1994.

- 9. Sul JH, Kim H, Huh N, Lee D, Jung MW. Distinct roles of rodent orbitofrontal and medial prefrontal cortex in decision making. Neuron. 2010;66:449–460. doi: 10.1016/j.neuron.2010.03.033.

- 10. Wallis JD, Miller EK. Neuronal activity in primate dorsolateral and orbital prefrontal cortex during performance of a reward preference task. Eur J Neurosci. 2003;18:2069–2081. doi: 10.1046/j.1460-9568.2003.02922.x.

- 11. Roesch MR, Olson CR. Neuronal activity in primate orbitofrontal cortex reflects the value of time. J Neurophysiol. 2005;94:2457–2471. doi: 10.1152/jn.00373.2005.

- 12. O’Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci. 2001;4:95–102. doi: 10.1038/82959.

- 13. Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

- 14. Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- 15. Tremblay L, Schultz W. Relative reward preference in primate orbitofrontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- 16. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- 17. Hikosaka K, Watanabe M. Long- and short-range reward expectancy in the primate orbitofrontal cortex. Eur J Neurosci. 2004;19:1046–1054. doi: 10.1111/j.0953-816x.2004.03120.x.

- 18. Critchley HD, Rolls ET. Hunger and satiety modify the responses of olfactory and visual neurons in the primate orbitofrontal cortex. J Neurophysiol. 1996;75:1673–1686. doi: 10.1152/jn.1996.75.4.1673.

- 19. O’Doherty J, et al. Sensory-specific satiety-related olfactory activation of the human orbitofrontal cortex. Neuroreport. 2000;11:399–403. doi: 10.1097/00001756-200002070-00035.

- 20. Gottfried JA, O’Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- 21. Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci. 1999;19:6610–6614. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- 22. Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- 23. Pickens CL, et al. Different roles for orbitofrontal cortex and basolateral amygdala in a reinforcer devaluation task. J Neurosci. 2003;23:11078–11084. doi: 10.1523/JNEUROSCI.23-35-11078.2003.

- 24. Ostlund SB, Balleine BW. Orbitofrontal cortex mediates outcome encoding in Pavlovian but not instrumental conditioning. J Neurosci. 2007;27:4819–4825. doi: 10.1523/JNEUROSCI.5443-06.2007.

- 25. Burke KA, Franz TM, Miller DN, Schoenbaum G. Conditioned reinforcement can be mediated by either outcome-specific or general affective representations. Front Integr Neurosci. 2007;1:2. doi: 10.3389/neuro.07.002.2007.

- 26. Pears A, Parkinson JA, Hopewell L, Everitt BJ, Roberts AC. Lesions of the orbitofrontal but not medial prefrontal cortex disrupt conditioned reinforcement in primates. J Neurosci. 2003;23:11189–11201. doi: 10.1523/JNEUROSCI.23-35-11189.2003.

- 27. Cousens GA, Otto T. Neural substrates of olfactory discrimination learning with auditory secondary reinforcement. I. Contributions of the basolateral amygdaloid complex and orbitofrontal cortex. Integr Physiol Behav Sci. 2003;38:272–294. doi: 10.1007/BF02688858.

- 28. Mobini S, et al. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology (Berl). 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- 29. Winstanley CA, Theobald DEH, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.

- 30. Camille N, et al. The involvement of the orbitofrontal cortex in the experience of regret. Science. 2004;304:1167–1170. doi: 10.1126/science.1094550.

- 31. Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nat Rev Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753.

- 32. Kremer EF. Rescorla-Wagner model – losses in associative strength in compound conditioned stimuli. J Exp Psychol Anim Behav Process. 1978;4:22–36. doi: 10.1037//0097-7403.4.1.22.

- 33. Takahashi YK, et al. The orbitofrontal cortex and ventral tegmental area are necessary for learning from unexpected outcomes. Neuron. 2009;62:269–280. doi: 10.1016/j.neuron.2009.03.005.

- 34. Burke KA, Takahashi YK, Correll J, Brown PL, Schoenbaum G. Orbitofrontal inactivation impairs reversal of Pavlovian learning by interfering with ‘disinhibition’ of responding for previously unrewarded cues. Eur J Neurosci. 2009;30:1941–1946. doi: 10.1111/j.1460-9568.2009.06992.x.

- 35. McDannald MA, Lucantonio F, Burke KA, Niv Y, Schoenbaum G. Ventral striatum and orbitofrontal cortex are both required for model-based, but not model-free, reinforcement learning. J Neurosci. 2011;31:2700–2705. doi: 10.1523/JNEUROSCI.5499-10.2011.

- 36. Walton ME, Behrens TEJ, Buckley MJ, Rudebeck PH, Rushworth MFS. Separable learning systems in the macaque brain and the role of the orbitofrontal cortex in contingent learning. Neuron. 2010;65:927–939. doi: 10.1016/j.neuron.2010.02.027.

- 37. Noonan MP, et al. Separate value comparison and learning mechanisms in macaque medial and lateral orbitofrontal cortex. Proc Natl Acad Sci USA. 2010;107:20547–20552. doi: 10.1073/pnas.1012246107.

- 38. Tsuchida A, Doll BB, Fellows LK. Beyond reversal: a critical role for human orbitofrontal cortex in flexible learning from probabilistic feedback. J Neurosci. 2010;30:16868–16875. doi: 10.1523/JNEUROSCI.1958-10.2010.

- 39. Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–494. doi: 10.1038/nrn1406.

- 40. Nestler EJ. Common molecular and cellular substrates of addiction and memory. Neurobiol Learn Mem. 2002;78:637–647. doi: 10.1006/nlme.2002.4084.

- 41. Wolf ME, Sun X, Mangiavacchi S, Chao SZ. Psychomotor stimulants and neuronal plasticity. Neuropharmacology. 2004;47(suppl 1):61–79. doi: 10.1016/j.neuropharm.2004.07.006.

- 42. Kalivas PW, O’Brien C. Drug addiction as a pathology of staged neuroplasticity. Neuropsychopharmacology. 2008;33:166–180. doi: 10.1038/sj.npp.1301564.

- 43. Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci. 2005;8:1481–1489. doi: 10.1038/nn1579.

- 44. Everitt BJ, Wolf ME. Psychomotor stimulant addiction: a neural systems perspective. J Neurosci. 2002;22:3312–3320. doi: 10.1523/JNEUROSCI.22-09-03312.2002.

- 45. Jentsch JD, Taylor JR. Impulsivity resulting from frontostriatal dysfunction in drug abuse: implications for the control of behavior by reward-related stimuli. Psychopharmacology (Berl). 1999;146:373–390. doi: 10.1007/pl00005483.

- 46. Volkow ND, Fowler JS. Addiction, a disease of compulsion and drive: involvement of orbitofrontal cortex. Cereb Cortex. 2000;10:318–325. doi: 10.1093/cercor/10.3.318.

- 47. Vanderschuren LJ, Everitt BJ. Drug seeking becomes compulsive after prolonged cocaine self-administration. Science. 2004;305:1017–1019. doi: 10.1126/science.1098975.

- 48. Deroche-Gamonet V, Belin D, Piazza PV. Evidence for addiction-like behavior in the rat. Science. 2004;305:1014–1017. doi: 10.1126/science.1099020.

- 49. Shaham Y, Hope BT. The role of neuroadaptations in relapse to drug seeking. Nat Neurosci. 2005;8:1437–1439. doi: 10.1038/nn1105-1437.

- 50. Nestler EJ. Molecular basis of long-term plasticity underlying addiction. Nat Rev Neurosci. 2001;2:119–128. doi: 10.1038/35053570.

- 51. Im HI, Hollander JA, Bali P, Kenny PJ. MeCP2 controls BDNF expression and cocaine intake through homeostatic interactions with microRNA-212. Nat Neurosci. 2010;13:1120–1127. doi: 10.1038/nn.2615.

- 52. Lu L, et al. Central amygdala ERK signaling pathway is critical to incubation of cocaine craving. Nat Neurosci. 2005;8:212–219. doi: 10.1038/nn1383.

- 53. Franklin TR, et al. Decreased gray matter concentration in the insular, orbitofrontal, cingulate, and temporal cortices of cocaine patients. Biol Psychiatry. 2002;51:134–142. doi: 10.1016/s0006-3223(01)01269-0.

- 54. O’Neill J, Cardenas VA, Meyerhoff DJ. Separate and interactive effects of cocaine and alcohol dependence on brain structures and metabolites: quantitative MRI and proton MR spectroscopic imaging. Addict Biol. 2001;6:347–361. doi: 10.1080/13556210020077073.

- 55. Ersche KD, et al. Abnormal structure of frontostriatal brain systems is associated with aspects of impulsivity and compulsivity in cocaine dependence. Brain. 2011;134:2013–2024. doi: 10.1093/brain/awr138.

- 56. Thompson PM, et al. Structural abnormalities in the brains of human subjects who use methamphetamine. J Neurosci. 2004;24:6028–6036. doi: 10.1523/JNEUROSCI.0713-04.2004.

- 57. Volkow ND, Valentine A, Kulkarni M. Radiological and neurological changes in the drug abuse patient: a study with MRI. J Neuroradiol. 1988;15:288–293.

- 58. O’Neill J, Cardenas VA, Meyerhoff DJ. Effects of abstinence on the brain: quantitative magnetic resonance imaging and magnetic resonance spectroscopic imaging in chronic alcohol abuse. Alcohol Clin Exp Res. 2001;25:1673–1682.

- 59. Lyoo IK, et al. White matter hyperintensities in subjects with cocaine and opiate dependence and healthy comparison subjects. Psychiatry Res. 2004;131:135–145. doi: 10.1016/j.pscychresns.2004.04.001.

- 60. London ED, et al. Cocaine-induced reduction of glucose utilization in human brain. A study using positron emission tomography and [fluorine 18]-fluorodeoxyglucose. Arch Gen Psychiatry. 1990;47:567–574. doi: 10.1001/archpsyc.1990.01810180067010.

- 61. Volkow ND, Fowler JS, Wolf AP, Gillespi H. Metabolic studies of drugs of abuse. NIDA Res Monogr. 1990;105:47–53.

- 62. Volkow ND, et al. Changes in brain glucose metabolism in cocaine dependence and withdrawal. Am J Psychiatry. 1991;148:621–626. doi: 10.1176/ajp.148.5.621.

- 63. Volkow ND, et al. Decreased dopamine D2 receptor availability is associated with reduced frontal metabolism in cocaine abusers. Synapse. 1993;14:169–177. doi: 10.1002/syn.890140210.

- 64. Adinoff B, et al. Limbic responsiveness to procaine in cocaine-addicted subjects. Am J Psychiatry. 2001;158:390–398. doi: 10.1176/appi.ajp.158.3.390.

- 65. Volkow ND, et al. Higher cortical and lower subcortical metabolism in detoxified methamphetamine abusers. Am J Psychiatry. 2001;158:383–389. doi: 10.1176/appi.ajp.158.3.383.

- 66. Kim SJ, et al. Frontal glucose hypometabolism in abstinent methamphetamine users. Neuropsychopharmacology. 2005;30:1383–1391. doi: 10.1038/sj.npp.1300699.

- 67.Volkow ND, et al. Low level of brain dopamine D2 receptors in methamphetamine abusers: association with metabolism in the orbitofrontal cortex. Am J Psychiatry. 2001;158:2015–2021. doi: 10.1176/appi.ajp.158.12.2015. [DOI] [PubMed] [Google Scholar]

- 68.Kolb B, Pellis S, Robinson TE. Plasticity and functions of the orbital frontal cortex. Brain Cogn. 2004;55:104–115. doi: 10.1016/S0278-2626(03)00278-1. [DOI] [PubMed] [Google Scholar]

- 69.Crombag HS, Gorny G, Li Y, Kolb B, Robinson TE. Opposite effects of amphetamine self-administration experience on dendritic spines in the medial and orbital prefrontal cortex. Cereb Cortex. 2005;15:341–348. doi: 10.1093/cercor/bhh136. [DOI] [PubMed] [Google Scholar]

- 70.Winstanley CA, et al. DeltaFosB induction in orbitofrontal cortex mediates tolerance to cocaine-induced cognitive dysfunction. J Neurosci. 2007;27:10497–10507. doi: 10.1523/JNEUROSCI.2566-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Jentsch JD, Olausson P, De La Garza R, Taylor JR. Impairments of reversal learning and response perseveration after repeated, intermittent cocaine administrations to monkeys. Neuropsychopharmacology. 2002;26:183–190. doi: 10.1016/S0893-133X(01)00355-4. [DOI] [PubMed] [Google Scholar]

- 72.Porter JN, et al. Chronic cocaine self-administration in rhesus monkeys: impact on associative learning, cognitive control, and working memory. J Neurosci. 2011;31:4926–4934. doi: 10.1523/JNEUROSCI.5426-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Schoenbaum G, Saddoris MP, Ramus SJ, Shaham Y, Setlow B. Cocaine-experienced rats exhibit learning deficits in a task sensitive to orbitofrontal cortex lesions. Eur J Neurosci. 2004;19:1997–2002. doi: 10.1111/j.1460-9568.2004.03274.x. [DOI] [PubMed] [Google Scholar]

- 74.Calu DJ, et al. Withdrawal from cocaine self-administration produces long-lasting deficits in orbitofrontal-dependent reversal learning in rats. Learn Mem. 2007;14:325–328. doi: 10.1101/lm.534807. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Krueger DD, et al. Prior chronic cocaine exposure in mice induces persistent alterations in cognitive function. Behav Pharmacol. 2009;20:695–704. doi: 10.1097/FBP.0b013e328333a2bb. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Izquierdo A, et al. Reversal-specific learning impairments after a binge regimen of methamphetamine in rats: possible involvement of striatal dopamine. Neuropsychopharmacology. 2010;35:505–514. doi: 10.1038/npp.2009.155. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Fillmore MT, Rush CR. Polydrug abusers display impaired discrimination-reversal learning in a model of behavioural control. J Psychopharmacol. 2006;20:24–32. doi: 10.1177/0269881105057000. [DOI] [PubMed] [Google Scholar]

- 78.Ersche KD, Roiser JP, Robbins TW, Sahakian BJ. Chronic cocaine but not chronic amphetamine use is associated with perseverative responding in humans. Psychopharmacology (Berl). 2008;197:421–431. doi: 10.1007/s00213-007-1051-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Bechara A, et al. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6. [DOI] [PubMed] [Google Scholar]

- 80.Grant S, Contoreggi C, London ED. Drug abusers show impaired performance in a laboratory test of decision making. Neuropsychologia. 2000;38:1180–1187. doi: 10.1016/s0028-3932(99)00158-x. [DOI] [PubMed] [Google Scholar]

- 81.Nelson A, Killcross S. Amphetamine exposure enhances habit formation. J Neurosci. 2006;26:3805–3812. doi: 10.1523/JNEUROSCI.4305-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Voorn P, Vanderschuren LJMJ, Groenewegen HJ, Robbins TW, Pennartz CMA. Putting a spin on the dorsal-ventral divide of the striatum. Trends Neurosci. 2004;27:468–474. doi: 10.1016/j.tins.2004.06.006. [DOI] [PubMed] [Google Scholar]

- 83.Coffey SF, Gudleski GD, Saladin ME, Brady KT. Impulsivity and rapid discounting of delayed hypothetical rewards in cocaine-dependent individuals. Exp Clin Psychopharmacol. 2003;11:18–25. doi: 10.1037//1064-1297.11.1.18. [DOI] [PubMed] [Google Scholar]

- 84.Kirby KN, Petry NM. Heroin and cocaine abusers have higher discount rates for delayed rewards than alcoholics or non-drug-using controls. Addiction. 2004;99:461–471. doi: 10.1111/j.1360-0443.2003.00669.x. [DOI] [PubMed] [Google Scholar]

- 85.Heil SH, Johnson MW, Higgins ST, Bickel WK. Delay discounting in currently using and currently abstinent cocaine-dependent outpatients and non-drug-using matched controls. Addict Behav. 2006;31:1290–1294. doi: 10.1016/j.addbeh.2005.09.005. [DOI] [PubMed] [Google Scholar]

- 86.Roesch MR, Takahashi Y, Gugsa N, Bissonette GB, Schoenbaum G. Previous cocaine exposure makes rats hypersensitive to both delay and reward magnitude. J Neurosci. 2007;27:245–250. doi: 10.1523/JNEUROSCI.4080-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Simon NW, Mendez IA, Setlow B. Cocaine exposure causes long-term increases in impulsive choice. Behav Neurosci. 2007;121:543–549. doi: 10.1037/0735-7044.121.3.543. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Homayoun H, Moghaddam B. Progression of cellular adaptations in medial prefrontal and orbitofrontal cortex in response to repeated amphetamine. J Neurosci. 2006;26:8025–8039. doi: 10.1523/JNEUROSCI.0842-06.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Stalnaker TA, Roesch MR, Franz TM, Burke KA, Schoenbaum G. Abnormal associative encoding in orbitofrontal neurons in cocaine-experienced rats during decision-making. Eur J Neurosci. 2006;24:2643–2653. doi: 10.1111/j.1460-9568.2006.05128.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Taylor JR, Jentsch JD. Repeated intermittent administration of psychomotor stimulant drugs alters the acquisition of Pavlovian approach behavior in rats: differential effects of cocaine, D-amphetamine and 3,4-methylenedioxymethamphetamine (“ecstasy”). Biol Psychiatry. 2001;50:137–143. doi: 10.1016/s0006-3223(01)01106-4. [DOI] [PubMed] [Google Scholar]

- 91.Wyvell CL, Berridge KC. Incentive sensitization by previous amphetamine exposure: increased cue-triggered “wanting” for sucrose reward. J Neurosci. 2001;21:7831–7840. doi: 10.1523/JNEUROSCI.21-19-07831.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Kantak KM, et al. Influence of cocaine self-administration on learning related to prefrontal cortex or hippocampus functioning in rats. Psychopharmacology (Berl). 2005;181:227–236. doi: 10.1007/s00213-005-2243-1. [DOI] [PubMed] [Google Scholar]

- 93.Fuchs RA, Evans KA, Parker MP, See RE. Differential involvement of orbitofrontal cortex subregions in conditioned cue-induced and cocaine-primed reinstatement of cocaine seeking in rats. J Neurosci. 2004;24:6600–6610. doi: 10.1523/JNEUROSCI.1924-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Lasseter HC, Ramirez DR, Xie X, Fuchs RA. Involvement of the lateral orbitofrontal cortex in drug context-induced reinstatement of cocaine-seeking behavior in rats. Eur J Neurosci. 2009;30:1370–1381. doi: 10.1111/j.1460-9568.2009.06906.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Fellows LK, Farah MJ. Ventromedial frontal cortex mediates affective shifting in humans: evidence from a reversal learning paradigm. Brain. 2003;126:1830–1837. doi: 10.1093/brain/awg180. [DOI] [PubMed] [Google Scholar]

- 96.Schoenbaum G, Shaham Y. The role of orbitofrontal cortex in drug addiction: a review of preclinical studies. Biol Psychiatry. 2008;63:256–262. doi: 10.1016/j.biopsych.2007.06.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Schoenbaum G, Setlow B. Cocaine makes actions insensitive to outcomes but not extinction: implications for altered orbitofrontal-amygdalar function. Cereb Cortex. 2005;15:1162–1169. doi: 10.1093/cercor/bhh216. [DOI] [PubMed] [Google Scholar]

- 98.Everitt BJ, et al. Neural mechanisms underlying the vulnerability to develop compulsive drug-seeking habits and addiction. Phil Trans R Soc Lond B. 2008;363:3125–3135. doi: 10.1098/rstb.2008.0089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 99.Bechara A. Decision making, impulse control and loss of willpower to resist drugs: a neurocognitive perspective. Nat Neurosci. 2005;8:1458–1463. doi: 10.1038/nn1584. [DOI] [PubMed] [Google Scholar]

- 100.Robbins TW, Everitt BJ. Drug addiction: bad habits add up. Nature. 1999;398:567–570. doi: 10.1038/19208. [DOI] [PubMed] [Google Scholar]
