Editor’s note:

Few issues are as important to the future of humanity as acquiring literacy. Brain-scanning technology and cognitive tests on a variety of subjects by one of the world’s foremost cognitive neuroscientists have led to a better understanding of how a region of the brain responds to visual stimuli. The results could profoundly affect learning and help individuals with reading disabilities.

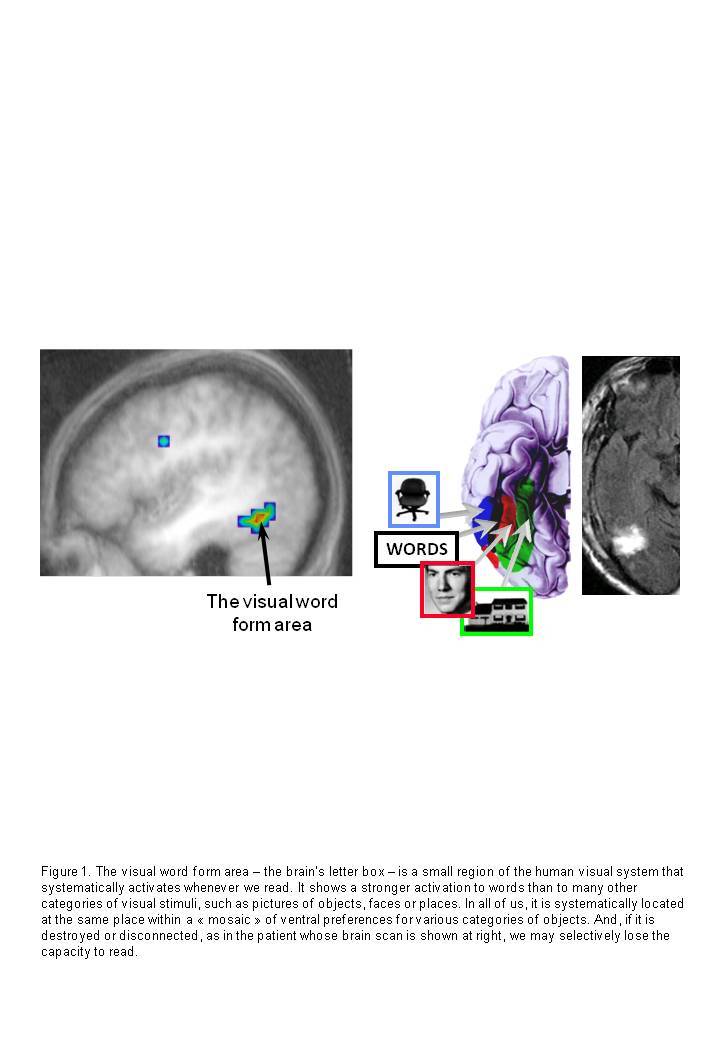

Although I find the diversity of the world’s writing systems bewildering, there is also a striking regularity that remains hidden. Whenever we read—whether our language is Japanese, Hebrew, English, or Italian—each of us relies on very similar brain networks.1 In particular, a small region of the visual cortex becomes active with remarkable reproducibility in the brains of all readers (see figure 1). A brief localizer scan, during which images of brain activity are collected as a person responds to written words, faces, objects, and other visual stimuli, serves to identify this region. Written words never fail to activate a small region at the base of the left hemisphere, always at the same place, give or take a few millimeters.2

Figure 1.

The visual word form area, the brain’s letterbox, is a small region of the human visual system that systematically activates whenever we read. It shows a stronger activation to words than to many other categories of visual stimuli, such as pictures of objects, faces, or places. In all of us, it is systematically located at the same place within a “mosaic” of ventral preferences for various categories of objects. And, if it is destroyed or disconnected, as in the patient whose brain scan is shown at right, we may selectively lose the capacity to read.

Experts call this region the visual word form area, but in a recent book for the general public,3 I dubbed it the brain’s letterbox, because it concentrates much of our visual knowledge of letters and their configurations. Indeed, this site is amazingly specialized. The letterbox responds to written words more than it does to most other categories of visual stimuli, including pictures of faces, objects, houses, and even Arabic numerals.4 Its efficiency is so great that it even responds to words that we fail to recognize consciously: words made subliminal by flashing them for a fraction of a second. Yet it performs highly sophisticated operations that are indispensable to fluent reading. For instance, the letterbox is the first visual area that recognizes that “READ” and “read” depict the same word by representing strings of letters invariantly for changes in case, which is no small feat if you consider that uppercase and lowercase letters such as “A” and “a” bear very little similarity. Furthermore, if it is impaired or disconnected via brain surgery or a cerebral infarct (a type of stroke), the patient may develop a syndrome called pure alexia. He or she will be unable to recognize even a single word, while the recognition of faces, objects, and Arabic numerals is largely spared. Many of these patients can also still speak and understand spoken language fluently, and they may even still write; only their visual capacity to process letter strings seems dramatically affected.

The brain of any educated adult contains a circuit specialized for reading. But how is this possible, given that reading is an extremely recent and highly variable cultural activity? The alphabet is only about 4,000 years old, and until recently, only a very small fraction of humanity could read. Thus, there was no time for Darwinian evolution to shape our genome and adapt our brain networks to the particularities of reading. How is it, then, that we all possess a specialized letterbox area?

Reading as Neuronal Recycling

Resolving this paradox requires thinking about the state of the brain prior to literacy. According to a theoretical proposal called the neuronal recycling hypothesis, which I introduced with colleague Laurent Cohen a few years ago, the human brain contains highly organized cortical maps that constrain subsequent learning.5 We should stop thinking of human culture as a distinctly social layer, free to vary without bounds, independent of our biological endowment. On the contrary, new cultural inventions such as writing are only possible inasmuch as they fit within our preexisting brain architecture. Each cultural object must find its neuronal niche—a set of circuits that are sufficiently close to the required function and sufficiently plastic to be partially “recycled.” The theory stipulates that cultural inventions always involve the recycling of older cerebral structures that originally were selected by evolution to address very different problems but manage, more or less successfully, to shift toward a novel cultural use.

How can this view explain why all readers possess a specialized and reproducibly located area for a recent cultural invention? The idea is that the act of reading is tightly constrained by the preexisting brain architectures for language and vision. The human brain is subject to strong anatomical and connectional constraints inherited from its evolution, and the crossing of these multiple constraints implies that reading acquisition is channeled to an essentially unique circuit.

As far as spoken language is concerned, all humans rely on a highly determined network of left superior temporal and inferior frontal regions. This system is so reproducible that it is found in any individual. Indeed, it can already be detected with functional magnetic resonance imaging (fMRI) in 2-month-old babies when they listen to short sentences in their mother tongue,6 and even at this early age, the language network is already lateralized to the left hemisphere in most subjects. Unsurprisingly, the lateralization of this spoken language system constrains the lateralization of reading. The visual word form area is systematically lateralized to the same hemisphere as spoken language: it falls in the left hemisphere in most people, but it shifts to the right ventral occipitotemporal region in those rare subjects with right-hemisphere language.7 Presumably, this is because the ventral temporal visual cortex lies very close to the temporal language areas that encode spoken words and speech sounds, thus allowing this region to serve as a visual interface for reading. This optimally short circuit may allow a good reader to convert back and forth between letters and sounds with minimal transmission time.

Many other constraints probably conspire to mark the precise location of the brain’s letterbox area. For instance, this region always falls within the part of the visual cortex that receives inputs from the high-resolution region of the retina called the fovea.8 Thanks to this projection pattern, this cortical sector can discriminate very small shapes—obviously a highly desirable feature when considering that reading involves resolving the often minuscule differences that distinguish similar letters, especially in small print.

Another important constraint is that neurons in the ventral visual pathway often respond to simple shapes, such as those formed by the intersections of contours of objects.9 Even in the macaque monkey, the inferotemporal visual cortex already contains neurons sensitive to letter-like combinations of lines such as T, L, X, and *.10 The ventral visual system seems to favor those shapes because they signal salient properties of objects that tend to be robustly invariant to a change in viewpoint. For instance, a T frequently signals occlusion of one object by another: the vertical contour disappears behind the horizontal one, indicating that the latter marks the contour of an opaque surface that lies in front of the former. Across evolution and development, the visual system of all primates acquires a whole “alphabet” of such shape primitives, presumably because they allow us to immediately encode any new shape that we encounter.

The visual word form area seems to arise from this preexisting neuronal alphabet: a subset of the shape-recognition system specializes in the shapes of letters. In my book Reading in the Brain,11 I further speculate that in all of the world’s cultures, scribes in generation after generation progressively selected their letters and written characters to closely match the set of shapes that were already present in the brains of all primates and, as a result, were easy to learn. This hypothesis is corroborated by a large-scale analysis of the world’s writing systems.12 Writing systems do vary in their “grain size”: the linguistic units that are marked in writing vary from phonemes (in our alphabet) to syllables (in Japanese Kana notation) or even entire words or morphemes (as in Chinese).

However, visually speaking, they systematically make use of the same set of shapes, precisely those that abound in natural visual scenes and tend to be internalized in the ventral visual cortex.13 All writing systems seem to rely on the set of shapes to which our primate brain is already highly attuned—living proof that culture is constrained by brain biology.

In the end, the neuronal recycling hypothesis leads me to believe that we are able to learn to read because, within our preexisting circuits, there is one that links the left ventral visual pathway to the left-hemispheric language areas. This circuit is already capable of recognizing many letter-like shapes, and it possesses enough plasticity, or adaptability, to reorient toward whichever shapes are used in our alphabet. But this region is highly constrained, explaining why we all use the same cortical circuit and the same set of basic shapes when we read.

Scanning the Illiterate Brain

In the past eight years, my research has put the predictions of the neuronal recycling hypothesis to the test. One of the predictions, which I find particularly interesting, is the notion of a cortical competition process. The model predicts that, as cortical territories dedicated to evolutionarily older functions are invaded by novel cultural objects, their prior organization should slightly shift away from the original function (though the original function is never entirely erased). As a result, reading acquisition should displace and dislodge whichever evolutionarily older function is implemented at the site of the visual word form area.

To test this idea, we needed to figure out the organization of the human brain prior to reading: the architecture of the illiterate brain. Indeed, at present, our knowledge of the human brain is highly biased. Most of it comes from neuroimaging experiments in highly educated students. My laboratory recently took a different approach by launching a major investigation into the brains of uneducated illiterate adults.14 This collaborative effort, which involved colleagues in Belgium, Portugal, and Brazil, aimed to obtain a detailed map of the visual, auditory, and language areas in people who never had the chance to learn to read. By comparing these areas with those of literate adults, we hoped to illuminate how literacy transforms the brain.

We recruited adults around the age of fifty. They held jobs and were integrated into society, but had not attended school during their youth. Ten remained utterly illiterate, unable even to recognize most letters. Twenty-one were “ex-illiterates,” meaning that although they hadn’t attended school, they had later received adult alphabetization classes and, consequently, had achieved a modest and variable level of literacy. We compared them to thirty-two literate adults, some of whom came from the very same socioeconomic groups as the illiterates did.

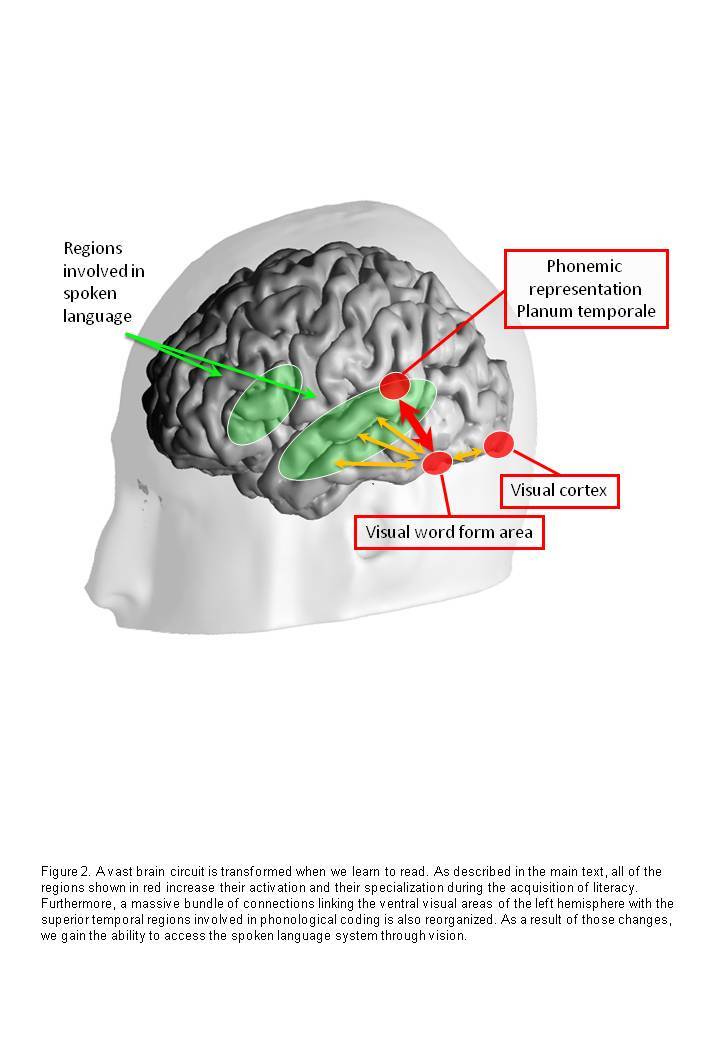

The results demonstrated a large impact of reading acquisition on the brain (see figure 2). In the visual system, the visual word form area was massively affected. At its usual site in the left lateral occipitotemporal sulcus, the activation evoked by a string of letters was directly proportional to the subjects’ reading score, to such an extent that one could predict a large proportion of the variance in the number of words they could read per minute, simply by measuring their brain activity.

Figure 2.

A vast brain circuit is transformed when we learn to read. As described in the main text, all of the regions shown in red increase their activation and specialization during the acquisition of literacy. Furthermore, a massive bundle of connections linking the ventral visual areas of the left hemisphere with the superior temporal regions involved in phonological coding is also reorganized. As a result of those changes, we gain the ability to access the spoken language system through vision.
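To make concrete what “predicting a large proportion of the variance” in reading speed from brain activity means, here is a minimal sketch that computes the share of variance explained by a straight-line fit. The function name and every number below are invented for illustration; they are assumptions of this sketch and are not data or code from the study.

```python
def r_squared(x, y):
    """Share of the variance in y explained by a least-squares line on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variance sums (the common 1/n factors cancel out).
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return (sxy * sxy) / (sxx * syy)


# Hypothetical values, NOT measurements from the study.
activation = [0.1, 0.4, 0.5, 0.9, 1.2, 1.5]    # made-up response to letter strings
words_per_minute = [5, 20, 35, 60, 70, 95]      # made-up reading scores

print(round(r_squared(activation, words_per_minute), 2))
```

A value near 1 would mean that reading speed can be read off almost directly from the activation level, while a value near 0 would mean that activation carries little information about reading speed.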

We also observed unsuspected changes within the brain areas that implement the initial stages of visual recognition. With literacy, lateral occipital areas increased their activation, not just to words, but also to all sorts of stimuli (faces, houses, checkerboards), suggesting that learning to read had refined the capacity to recognize any picture. In fact, even the primary visual area V1, which is the first point of entry of visual signals into the cortex, was enhanced. Relative to illiterates, trained readers showed stronger fMRI responses to horizontal than to vertical checkerboards. Intensive training with horizontally presented words had obviously led to a refinement of the corresponding region of the visual field, in the part of the eye that is indispensable for reading fine print. Behaviorally, the literates performed much better than the illiterates at a contour integration task that is thought to depend heavily on the earliest cortical stage of vision, the primary visual area V1 and its horizontal connections.15 In brief, learning to read seems to render even the lower-level processes of early vision more accurate and more efficient.

Most crucial for the neuronal recycling hypothesis, however, was our determination that reading did not merely have a positive effect on the brain. Exactly as predicted, we also observed a small but significant cortical competition effect, precisely at the site of the letterbox area. For the first time, our study revealed which shapes triggered a response at this site prior to learning to read. In illiterates, faces and objects caused intense activity in this region and, strikingly, the response to faces diminished with literacy. It was highest in illiterates, and quickly dropped in ex-illiterates and literates. This cortical competition effect, whereby word responses increased while face responses decreased, was found only in the left hemisphere. In the symmetrical fusiform area of the right hemisphere, face responses increased with reading. Thus, at least part of the right-hemisphere specialization for faces, which has been repeatedly observed in dozens of fMRI studies, arises from the fact that these neuroimaging studies always involved educated adults. Obviously, the acquisition of reading involves the reconversion of evolutionarily older cortical territory, and text competes with faces for a place in the cortex.16

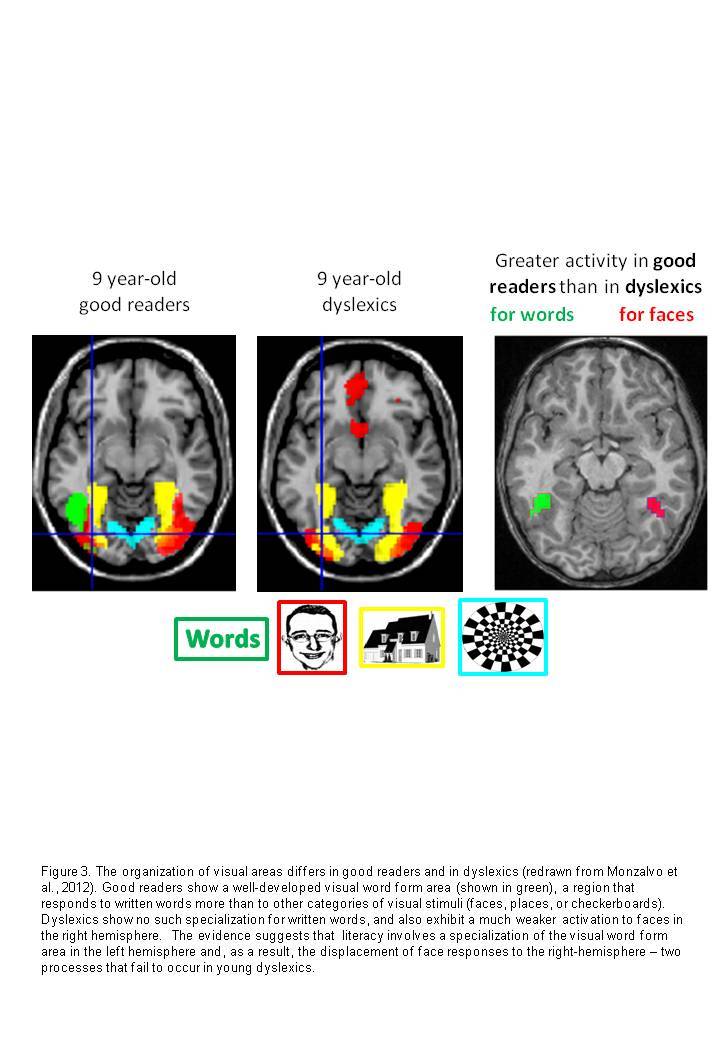

We also replicated this finding in children.17 When scanning 9-year-olds who were good versus poor readers, we found two interesting differences in the ventral visual pathway (see figure 3). The poor readers not only showed weaker responses to written words in the left-hemispheric visual word form area, but also showed weaker responses to faces in the right-hemispheric fusiform face area. Thus, the acquisition of reading seemed to induce an important reorganization of the ventral visual pathway, which displaces the cortical responses to faces away from the left hemisphere and more toward the right. This displacement is presumably because the features that are most useful for letter recognition (configurations and intersections of lines) are incompatible with those that are useful for faces, so that one pushes the other away.

Figure 3.

The organization of visual areas differs in good readers and in dyslexics (redrawn from Monzalvo et al., 2012). Good readers show a well-developed visual word form area (shown in green), a region that responds to written words more than to other categories of visual stimuli (faces, places, or checkerboards). Dyslexics show no such specialization for written words, and also exhibit a much weaker activation to faces in the right hemisphere. The evidence suggests that literacy involves a specialization of the visual word form area in the left hemisphere and, as a result, the displacement of face responses to the right hemisphere, two processes that fail to occur in young dyslexics.

There is no reason to worry, however. In our research so far, we have been unable to uncover any negative behavioral consequences of literacy on face-recognition abilities.

Although face recognition is displaced in the cortex, it seems to be just as efficient in literate people as in illiterate people. In fact, it may even be more efficient in literate people. In a test of holistic perception, where subjects were asked to compare the top halves of two faces while avoiding any interference from their bottom halves, literate people outperformed illiterate people, suggesting that the former had learned to focus their attention better and in a more flexible manner. Many of us complain about the difficulty of recognizing faces and retrieving people’s proper names in everyday life, but the true culprit is unlikely to be an excess of reading.

Unlearning Mirror Invariance

Another feature of the reading system—the need to discriminate mirror-symmetrical images—places an unusual constraint on the visual system. Our alphabet comprises pairs of letters such as “p” and “q” or “b” and “d,” which are similar except for a left-right inversion. In order to read fluently, we must learn to discriminate these letters perfectly, because they point to different phonemes. Remarkably, however, in its preliterate state, the inferotemporal cortex of all primates generalizes across such mirror images and treats them as two views of the same object.18 Indeed, adults, children, and even infants immediately recognize an object regardless of whether its left or right profile is seen. In macaque monkeys, inferotemporal neurons spontaneously generalize across mirror images. When a monkey is trained with a shape in a specific orientation, the neurons that specialize for this shape generalize to its mirror image without any further training.

My colleagues and I reasoned that mirror invariance was a perfect test of the neuronal recycling hypothesis. Here was a preexisting competence, clearly present in the brains of all primates, and yet if our hypothesis was correct, it had to selectively disappear in the course of reading acquisition. Mirror invariance had the potential to become the “panda’s thumb” of reading—a vestigial trait, inherited from our evolution in a natural world where most objects maintain their identity across a left-right inversion. Its presence prior to literacy and its disappearance during reading acquisition would imply that learning to read involves the recycling of a preexisting circuit that never evolved for reading but willy-nilly adapts to this novel task.

There is, in fact, much evidence to support this theory. Early on during reading acquisition, most children generalize across mirror images to such an extent that they are able to read and write their first words independent of orientation. Just ask any 5- or 6-year-old child to write his or her name next to a dot located near the right side of the page. Most of them will unhesitatingly solve the problem by writing from right to left.19 This mirror competence slowly disappears during reading acquisition, but it remains present in illiterate people who, contrary to literates, exhibit no cost at all in recognizing a learned object in mirrored form. Indeed, illiterate people find it extraordinarily hard not to see “b” and “d” as identical shapes. The breaking of this spontaneous mirror invariance is one of the important outcomes of literacy.

Using fMRI, we found that the visual word form area is, once again, the site of this adaptation to reading.20 When we used an fMRI priming technique in adult readers, we observed no mirror invariance to words in the brain’s letterbox area. This region easily recognized that “radio” and “RADIO” were one and the same word, but it did not label a word and its mirror image as identical (“radio” and “oidar”). It did so, however, whenever pictures were presented (even when they were visually just as simple as a single letter). Surprisingly, our fMRI study revealed that the visual word form area is the part of the visual system with the strongest mirror invariance for pictures. No wonder, then, that young readers experience special difficulties with mirror letters such as “b” and “d”: they are learning to read via the precise site of the cortex that treats these letters as identical. This difficulty is not connected to dyslexia; it is due to a universal feature of the primate brain that all children possess and must unlearn. Only if mirror errors persist beyond the ages of 9 or 10 should parents and educators worry, because the prolongation of mirror errors beyond that age suggests that the unlearning process is not proceeding normally.21

The unlearning of mirror symmetry should not be solely construed as a loss. As we gain literacy, we become slightly worse in judging that two mirror images are the same, but we also gain an enormous advantage, which is the capacity to distinguish them. Behavioral tests show that illiterate adults, even if their intelligence is high, find it extremely hard to distinguish nonsense shapes such as ↙ and ↘, since they cannot help but see the shapes as identical.22 Using recordings of the brain’s event-related potentials in our literate and illiterate participants, we recently found that the capacity to discriminate mirror-symmetrical pseudo-words such as “iduo” and “oubi” increases with literacy. Again, literacy enhances our species’ behavioral repertoire by allowing our visual system to flexibly treat shapes as identical or different, depending on context.

Literacy Enhances Speech Processing

Up to now, we have focused primarily on how learning to read changes the visual brain. However, our study of literate and illiterate brains also uncovered a massive and positive effect of literacy on the network for spoken language processing. First, and perhaps trivially, in literate people but not in illiterate people, the language network of left temporal and inferior frontal regions activates very strongly and identically to written and spoken language. Thus, the acquisition of reading gives us access, from vision, to a broad and universal language processing system, the very same language circuit that is already active in 2-month-old babies. Writing really acts as a substitute for speech, and ends up activating the same areas of the brain.

Second, and most important, this network for spoken language also changes under the influence of reading. In most areas, we found decreased activation. In order to understand the very same spoken sentence, the best readers required less activity than the illiterate people. Indeed, several brain areas associated with mental effort, such as the anterior cingulate, decreased in activity dramatically with literacy, confirming that reading facilitates the comprehension of complex sentences.

In one auditory area, however (the left planum temporale, located just behind the primary auditory area), we saw a strongly increased response to spoken sentences, words, and even meaningless pseudo-words. We think that this region may be the site of one of the most important competences that we acquire when we learn to read: the capacity to convert letters into sounds, or graphemes into phonemes. During infancy, the planum temporale region plays an essential role in the acquisition of the phonemes and the phonological rules that are particular to a person’s mother tongue.23 The additional changes that this region undergoes in literate people may reflect how literacy changes the phonological code. Many behavioral studies have demonstrated that alphabetization leads to a considerable improvement in phonological awareness. Readers of an alphabetic language gain explicit access to a representation of phonemes, the fundamental units of spoken language.24 Illiterate people cannot hear the identical “b” phoneme in “back” and “cab,” and they cannot selectively delete it to produce “ack” or “ca.” It seems very likely that the near-doubling of brain activity that we saw in the left planum temporale relates to this massive reorganization of spoken language. Literate and illiterate brains differ in the very manner in which they encode speech sounds.

A Bidirectional Sight-to-Sound Pathway

Our fMRI study of literacy revealed yet one more change in the way good readers process speech. When listening to spoken language, they alone could activate the orthographic code in a top-down manner. Indeed, we saw a massive activation at the exact site of the visual word form area whenever the good readers were asked to decide whether a spoken item (e.g., “ploot”) was or was not a word in English. During the same lexical decision task, illiterate people showed no trace of any such recruitment of their letterbox system. We therefore concluded that with literacy comes the capacity to recode speech with vision; it obviously helps to consider a sound’s possible spellings before deciding whether it is a word or not.

Prior behavioral results showed a huge influence of spelling on speech processing in this domain as well. For instance, English literate people believe that there are more phonemes in “pitch” than in “rich” (just because there are more letters), and they think that by removing the sound /n/ in the spoken word “bind,” one gets “bid” (the correct answer is “bide”).25 Obviously, their spoken-language judgments are deeply biased by spelling. But once again, the net effect is primarily positive, as it increases the number of redundant codes available to process speech. This expanded mental world may explain why literate people usually exhibit a much larger verbal memory than illiterate people do.

If our hypothesis is correct, then reading acquisition enhances a fast and bidirectional connection between letters and sounds, and I do mean a physical connection, a bundle of axons linking the visual word form area to the planum temporale. We reasoned that we might be able to see this putative connectivity change directly in our anatomical images of the human brain by using a technique called diffusion tensor imaging. This technique measures the microscopic organization of the “white matter” of the brain, the massive bundles of fibers that link brain areas into functional networks. One parameter in particular, called fractional anisotropy, provides a sensitive index of whether the fibers are well aligned and covered with an insulating sheath of myelin that speeds up neural transmission. To test this idea, we measured fractional anisotropy in our literate and illiterate subjects, in five different long-distance pathways of the left hemisphere.
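For readers who want to see what fractional anisotropy measures, the sketch below applies the standard textbook definition, which is computed from the three eigenvalues of the diffusion tensor in a voxel. The article itself does not give the formula, and the function name and eigenvalues are illustrative assumptions, not values from the study.

```python
import math


def fractional_anisotropy(l1, l2, l3):
    """Standard FA definition from the three diffusion-tensor eigenvalues.

    Returns 0 when diffusion is equal in all directions and 1 when it is
    confined to a single direction.
    """
    mean = (l1 + l2 + l3) / 3.0
    spread = (l1 - mean) ** 2 + (l2 - mean) ** 2 + (l3 - mean) ** 2
    total = l1 ** 2 + l2 ** 2 + l3 ** 2
    if total == 0:
        return 0.0  # no diffusion at all: treat as isotropic
    return math.sqrt(1.5 * spread / total)


# Hypothetical eigenvalues (arbitrary units), chosen only for illustration.
isotropic = fractional_anisotropy(1.0, 1.0, 1.0)   # equal in every direction
aligned = fractional_anisotropy(1.7, 0.3, 0.2)     # mostly along one axis

print(isotropic, round(aligned, 2))
```

Water diffuses more freely along well-aligned, myelinated fibers than across them, so a bundle of that kind yields one large eigenvalue and two small ones, and therefore a higher fractional anisotropy.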

We found that the pathway that correlated quite well with the subject’s literacy was the vertical and posterior segment of the arcuate fasciculus, which links the posterior temporal lobe (including the visual word form area) with the inferior parietal lobule and posterior superior temporal regions involved in grapheme-to-phoneme conversion.26 We also found that the anisotropy of this fiber bundle correlated with the amount of fMRI activation to written words in the visual word form area and to spoken words in the left planum temporale. This finding strengthens the hypothesis that this bundle links visual and phonological areas and participates in the grapheme-to-phoneme conversion route, whose acquisition lies at the heart of literacy.

The Next Step

Learning to read is a major event in a child’s life. Cognitive neuroscience shows why: compared to the brain of an illiterate person, the literate brain is massively changed, mostly for the better (through the enhancement of the brain’s visual and phonological areas and their interconnections) but also slightly for the worse, as the brain’s face-recognition circuits are displaced and the capacity for mirror invariance is reduced. Once children learn to read, their brains are literally different.

Now that we understand exactly which circuits are changed by reading education, we may start thinking about how to optimize this process, particularly for children who struggle in school. Indeed, the present results dovetail nicely with prior education research, which leaves little doubt that the systematic teaching of how letters map onto speech sounds may favor the rapid establishment of the brain’s visual and phonological circuits.27 Training preschoolers with just a few hours of GraphoGame, fun software that links graphemes and phonemes, is enough to enhance the representation of letters in the cortex. In one fMRI study, after less than four hours of total training that was spread over a few weeks, the visual word form area quickly began to respond to written words in 6-year-olds.28 By monitoring children’s progress by their behavior as well as by brain imaging, we now have all the necessary tools to better understand what schools do and facilitate enhanced learning strategies.

References

- 1. Nakamura, Kuo, Pegado, Cohen, Tzeng, & Dehaene, 2012

- 2. Bolger, Perfetti & Schneider, 2005

- 3. Dehaene, 2009

- 4. Josef Parvizi and his team recently discovered that Arabic numerals are recognized by another nearby region. See Shum, Hermes, Foster, Dastjerdi, Rangarajan, Winawer, Miller, & Parvizi, 2013

- 5. Dehaene, 2005; Dehaene & Cohen, 2007

- 6. Dehaene-Lambertz, Dehaene & Hertz-Pannier, 2002; Dehaene-Lambertz, Hertz-Pannier, Dubois, Meriaux, Roche, Sigman, & Dehaene, 2006

- 7. Cai, Lavidor, Brysbaert, Paulignan, & Nazir, 2008; Pinel & Dehaene, 2009; Cai, Paulignan, Brysbaert, Ibarrola, & Nazir, 2010

- 8. Hasson, Levy, Behrmann, Hendler & Malach, 2002

- 9. Biederman, 1987; Szwed, Cohen, Qiao, & Dehaene, 2009; Szwed, Dehaene, Kleinschmidt, Eger, Valabregue, Amadon, & Cohen, 2011

- 10. Tanaka, 1996; Baker, Behrmann, & Olson, 2002; Brincat & Connor, 2004

- 11. Dehaene, 2009

- 12. Changizi, Zhang, Ye, & Shimojo, 2006; see also Changizi & Shimojo, 2005

- 13. Biederman, 1987; Szwed et al., 2011

- 14. Dehaene, Pegado, Braga, Ventura, Nunes Filho, Jobert, Dehaene-Lambertz, Kolinsky, Morais, & Cohen, 2010

- 15. Szwed, Ventura, Querido, Cohen & Dehaene, 2012

- 16. For supporting evidence, see also Dundas, Plaut, & Behrmann, 2012; Li, Lee, Zhao, Yang, He, & Weng, 2013

- 17. Monzalvo, Fluss, Billard, Dehaene & Dehaene-Lambertz, 2012

- 18. For a review, see chapter 9 in Dehaene, 2009

- 19. Cornell, 1985

- 20. Dehaene, Nakamura, Jobert, Kuroki, Ogawa, & Cohen, 2009; Pegado, Nakamura, Cohen, & Dehaene, 2011

- 21. Lachmann & van Leeuwen, 2007

- 22. Dehaene, Izard, Pica, & Spelke, 2006; Kolinsky, Verhaeghe, Fernandes, Mengarda, Grimm-Cabral, & Morais, 2010

- 23. Jacquemot, Pallier, LeBihan, Dehaene & Dupoux, 2003

- 24. Morais, Cary, Alegria, & Bertelson, 1979

- 25. Ehri & Wilce, 1980; Stuart, 1990; Ziegler & Ferrand, 1998

- 26. Thiebaut de Schotten, Cohen, Amemiya, Braga & Dehaene, 2012

- 27. National Institute of Child Health and Human Development, 2000

- 28. Brem, Bach, Kucian, Guttorm, Martin, Lyytinen, Brandeis, & Richardson, 2010