Abstract

With recent advances in microelectronic fabrication technology, it has become possible to fabricate thin imagers less than half a millimeter thick. Dubbed TOMBO (an acronym for Thin Observation Module by Bound Optics), a thin camera-on-a-chip integrates micro-optics and photo-sensing elements, together with advanced processing circuitry, all on a single silicon chip. Modeled after the compound eye found in insects and many other arthropods, the TOMBO imager simultaneously captures a mosaic of low resolution images. In this paper, we describe and analyze a novel spectral-based blind algorithm that enables the restoration of a high resolution image from the captured low resolution images. The proposed blind restoration method requires no prior information about either the imaging system or the original scene. Furthermore, it removes the need for conventional de-shading and rearrangement processing techniques. Experimental results demonstrate that the proposed method can restore images at a Signal-to-Noise Energy Ratio (SNER) lower than 3 dB.

Keywords: Image Restoration, TOMBO, CMOS Imager, Back-Projection, Cross-correlation, Spectra

1. Introduction

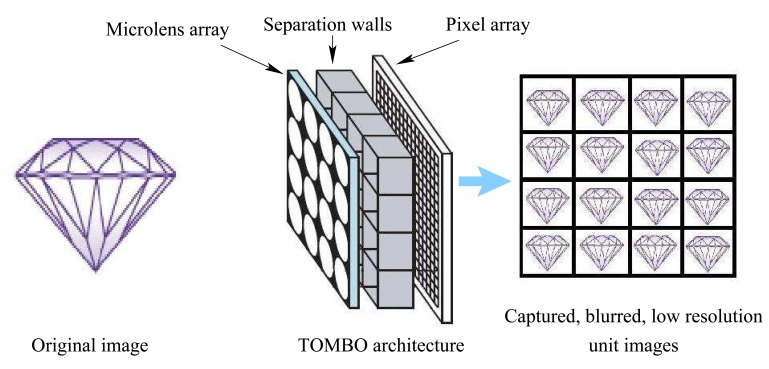

Rapid advances in semiconductor CMOS fabrication technology have enabled the realization of the concept of a camera-on-a-chip, i.e., a CMOS imager [1]. The integration of an increased number of camera functions onto a single silicon chip offers significant advantages in terms of system miniaturization and manufacturing cost [1]. As a result, CMOS imagers can now be found in a wide range of consumer electronic products, including mobile phones, PC mice, webcams and fax machines. Other applications of CMOS imagers include blind spot detection and rear vision in cars, automated industrial product quality inspection, photography, digital radiography, fluoroscopy, microscopy and even implantable microimager retinal stimulation [2]. CMOS camera manufacturers have been continuously improving the performance of their products in terms of resolution, power consumption, and read-out speed [1]. The continuous aggressive scaling of the minimum feature size in CMOS technology offers the possibility to further miniaturize CMOS imagers, while still integrating increased built-in functionalities and advanced on-chip processing for improved imaging performance [1]. However, further miniaturization of the optics is not possible, since light passing through a very small aperture diffracts and spreads. Diffraction would cause so much spreading that the image would be very blurry and essentially useless. To achieve a thin camera-on-a-chip, Tanida et al. have recently proposed an imaging system called TOMBO [3] (an acronym for Thin Observation Module by Bound Optics) emulating the visual system of insects and other arthropods [4, 5]. In contrast to traditional camera systems, TOMBO is not based on a single lens system [3]. Instead, a TOMBO imager comprises a collection of imaging units, each of which consists of a microlens associated with a subset of the pixel array (Fig. 1).
Adjacent imaging units are separated by an opaque wall to prevent crosstalk. Each individual imaging unit is thus optically isolated and images part of the scene. As a result, the TOMBO sensor captures multiple low resolution (LR) images at the same time, and the output is a compound image formed by the mosaic of low resolution unit images. Advanced digital signal processing can then be used to reconstruct a high resolution image from the available set of low resolution images [3, 10]. Tanida et al. have demonstrated that the restoration of a high resolution image is possible since each imaging unit provides a different view of the scene [7]-[12]. In [3], Tanida et al. proposed an image restoration algorithm which uses the back-projection (BP) method. This algorithm requires complete knowledge of the imaging system point spread function (PSF). To reconstruct the original image, the inverse (pseudo-inverse) of the known PSF is multiplied by the captured low resolution images. This approach has a number of limitations: (i) the PSF differs from one camera to another and cannot be easily identified from the system parameters [10]; (ii) performance is poor when the method is used in an experimental TOMBO system, due to the variation in the properties of the individual imaging units [10]; and (iii) the matrix representing the PSF can be singular, i.e., non-invertible.

Figure 1. The TOMBO architecture

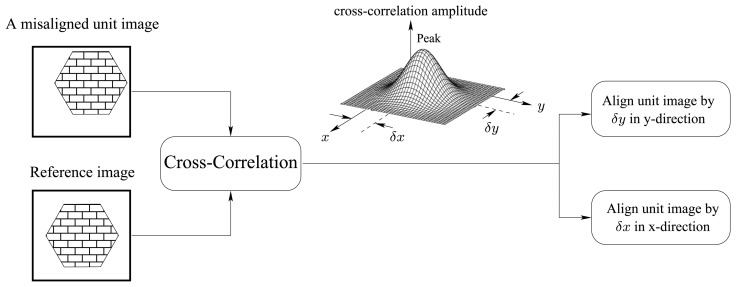

To overcome the above limitations, Tanida et al. proposed a new image reconstruction approach called the pixel rearrange method [10], which could be integrated to enable the realization of a compact, low cost thin imaging system. In their approach, a cross-correlation based technique is used to arrange and align unit image pixels. To correct for misalignment, one unit image is used as a reference. The relative shift values (δx and δy in Fig. 2) of each unit image with respect to the reference image are determined by locating the peak of the cross-correlation function between the unit image and the reference image. Interpolation techniques were used to locate the cross-correlation peak [10]. The cross-correlation based pixel rearrange method is illustrated in Fig. 2.

Figure 2. Cross correlation-based pixel rearrange method
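The shift estimation at the heart of the rearrange method can be illustrated with a short sketch. It is not the implementation of [10]: it assumes a purely circular, integer-pixel shift, omits the interpolation step used to refine the peak, and the function name `estimate_shift` is hypothetical.

```python
import numpy as np

def estimate_shift(reference, unit):
    """Return the integer (dy, dx) shift of `unit` relative to `reference`."""
    # Circular cross-correlation computed through the FFT (an assumption of this sketch).
    xcorr = np.fft.ifft2(np.fft.fft2(unit) * np.conj(np.fft.fft2(reference))).real
    # The location of the largest cross-correlation value gives the shift.
    peak = np.unravel_index(np.argmax(xcorr), xcorr.shape)
    # Map peak indices in [0, size) to signed shifts in [-size/2, size/2).
    return tuple(int((p + s // 2) % s - s // 2) for p, s in zip(peak, xcorr.shape))

rng = np.random.default_rng(0)
reference = rng.random((16, 16))                        # synthetic reference unit image
unit = np.roll(reference, shift=(2, -3), axis=(0, 1))   # synthetic shifted unit image
shift = estimate_shift(reference, unit)
```

With a single clean shift the peak is unambiguous; the sketch has no mechanism for handling several competing peaks.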

In the rearrangement process, it is assumed that the cross-correlation function is ideally symmetric around a single peak. In other words, there is a single shift between the considered unit image and the reference image, i.e., the spatial PSF has only one parameter. In reality, however, a PSF has more than one parameter, which produces several cross-correlation peaks. This limits the performance of the rearrange method when aligning unit images. Furthermore, the presence of several cross-correlation peaks introduces additional blur in the restored image, so inverse filtering is subsequently required. This operation is not only computationally costly but also unstable if even a single non-minimum phase component is present. It also requires the PSF to be known. Besides the spatial PSF, additive noise can also introduce false cross-correlation peaks, which significantly degrade the performance of the rearrange method [10].

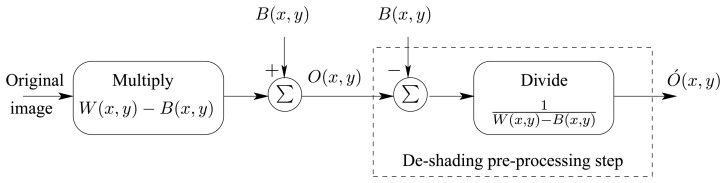

In the same paper, Tanida et al. proposed a method to minimize the problems associated with: (i) TOMBO's intrinsic PSFs, (ii) imager internal noise, and (iii) shading introduced by the separating walls (Fig. 1). To overcome these problems, Tanida et al. introduced a de-shading pre-processing step, which uses a black picture and a white one for calibration. We can analyze the de-shading process by noting that,

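$$B(x, y) = h_{int}(x, y)\,B_i(x, y) + V_B(x, y) \tag{1}$$

$$W(x, y) = h_{int}(x, y)\,W_i(x, y) + V_W(x, y) \tag{2}$$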

where $x$ and $y$ define the pixel location, $h_{int}(x, y)$ represents the intrinsic PSF of the TOMBO imager, $B_i(x, y)$ and $W_i(x, y)$ are the black and white pictures to be captured, $B(x, y)$ and $W(x, y)$ are the captured black and white images, and $V_B(x, y)$ and $V_W(x, y)$ are the additive internal noise for the black and white images, respectively.

By subtracting Eqn. (1) from Eqn. (2) and after some manipulation we have,

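$$h_{int}(x, y) = \frac{W(x, y) - B(x, y) - \left[ V_W(x, y) - V_B(x, y) \right]}{W_i(x, y) - B_i(x, y)} \tag{3}$$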

When normalized, a black pixel corresponds to a value of ‘0’ while a white pixel has a value of ‘1’, i.e., $B_i(x, y) = 0$ and $W_i(x, y) = 1$, thus

$$h_{int}(x, y) = W(x, y) - B(x, y) - \left[ V_W(x, y) - V_B(x, y) \right] \tag{4}$$

Tanida et al. assume $V_W(x, y)$ and $V_B(x, y)$ to be equal. As a result, the intrinsic PSF takes the form,

$$h_{int}(x, y) = W(x, y) - B(x, y) \tag{5}$$

The model representing the intrinsic characteristics and the de-shading pre-processing step can be seen in Fig. 3.

Figure 3. A model for the shading and de-shading pre-processing step

When an image O(x, y) is captured, the de-shading pre-processing step (Fig. 3) generates the image:

$$O_d(x, y) = C\,\frac{O(x, y) - B(x, y)}{W(x, y) - B(x, y)} \tag{6}$$

where C > 0 is an amplification factor.

From the above equations, it is clear that dividing by the intrinsic PSF can introduce nonlinearity problems and unrealistically large pixel values in the de-shaded image. Furthermore, the internal noises $V_W(x, y)$ and $V_B(x, y)$ are assumed to be equal, while in reality they are not, and they can differ from one image to another and from one imager to another.
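The sensitivity of de-shading to a noise mismatch can be seen in a small numerical sketch. It assumes that shading acts as a pixel-wise multiplication, that the intrinsic PSF is estimated as W − B, and that the de-shaded image is formed as C·(O − B)/(W − B); the shading profile, scene values and noise levels below are hypothetical.

```python
import numpy as np

def deshade(captured, black, white, gain=1.0):
    """Flat-field style de-shading: gain * (O - B) / (W - B), element-wise."""
    return gain * (captured - black) / (white - black)

h_int = np.array([1.0, 0.5, 0.02])   # hypothetical shading profile, weak at the periphery
scene = np.full(3, 0.6)              # hypothetical normalised scene values
black = 0.0 * h_int + 0.01           # calibration captures with equal noise (V_B = V_W = 0.01)
white = 1.0 * h_int + 0.01

# New capture with the same noise level as the calibration images.
matched = deshade(h_int * scene + 0.01, black, white)
# New capture whose noise level differs from the calibration images.
mismatched = deshade(h_int * scene + 0.03, black, white)
error = np.abs(mismatched - scene)
```

When the noise matches the calibration, the scene is recovered exactly. A small mismatch is divided by the local shading value, so the error grows where the shading is strongest, pushing the peripheral pixel above the normalised white level.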

In this paper, a spectral-based blind image restoration algorithm is proposed for TOMBO thin imagers [13]. The mathematical analysis for the algorithm is conducted in the frequency domain. Unlike conventional TOMBO image restoration methods [3] and [7]-[12], our method:

does not require prior information about the imaging system (e.g. intrinsic PSF and internal noise)

does not require prior information about the original scene and the PSFs (mapping the original scene into the pixel array)

is robust in the presence of additive noise whether external, internal, correlated or uncorrelated and at low signal to noise ratios (SNR)

does not require a de-shading pre-processing step

does not require pixel rearrangement since this is automatically done in the frequency domain through the restoration process

is able to restore original images that are blurred without the use of inverse filtering

requires less than 30 iterations at SNRs ≤ 7 dB to restore the original image

The paper is organized as follows. Section 2 gives a model of the TOMBO system. Section 3 describes the proposed spectral-based image restoration approach. Section 4 presents the blind image restoration algorithm. The experimental results are discussed in Section 5. Finally, a conclusion is given in Section 6.

2. System Model

If the original image can be regarded as either a deterministic or stochastic signal and if the cause of image degradation can be modeled, then the original image can be reconstructed. However, in real situations, it is difficult to accurately model image degradation since it has many origins: atmospheric turbulence, an out-of-focus camera, relative motion between the camera and the scene, electronic imaging components, and the number of pixels used to capture the image, to name a few. In other words, an image can be degraded or blurred by a linear or nonlinear process, and distorted by an additive or multiplicative noise process [16].

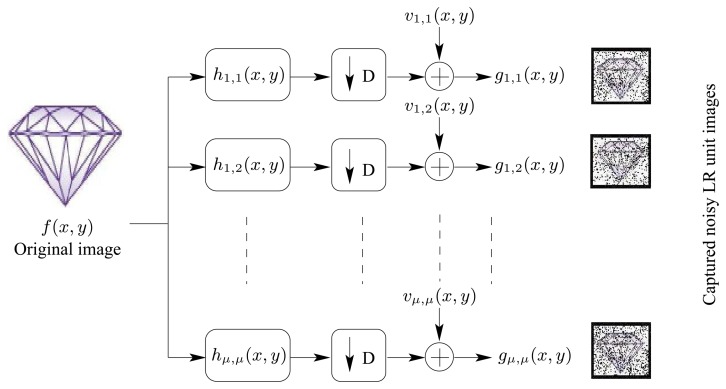

Consider the TOMBO system shown in Fig. 1 with (μ × μ) imaging units. Each captured unit image can be modeled by,

$$g_{i,j}(x, y) = \left[ h_{i,j}(x, y) \ast\ast\, f(x, y) + v_{i,j}(x, y) \right]_{\downarrow D} \tag{7}$$

where,

$g_{i,j}(x, y)$ represents the captured $(i, j)$ blurred, low resolution (LR) and noisy unit image with dimension (M × N) pixels

$h_{i,j}(x, y)$ is a two-dimensional (2-d) PSF with dimension (ℓ × ℓ), representing the channel blur for the $(i, j)$ imaging unit

“* *” represents the 2-d convolution operator

$f(x, y)$ is the original high resolution image

$v_{i,j}(x, y)$ is the additive 2-d zero mean white Gaussian noise introduced when capturing the $(i, j)$ unit image

↓ D denotes down-sampling by a factor D, modeling the LR operation applied to the captured images

The overall system model is illustrated in Fig. 4.

Figure 4. A mathematical model for the TOMBO system
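The capture model described above (blur, additive noise, then down-sampling) can be sketched as follows. This is a minimal illustration rather than the authors' simulation code: the helper names, the FFT-based "same-size" convolution and the plain decimation used for ↓ D are assumptions of the sketch.

```python
import numpy as np

def conv2_same(f, h):
    """Linear 2-d convolution of f with h, cropped to the size of f."""
    rows = f.shape[0] + h.shape[0] - 1
    cols = f.shape[1] + h.shape[1] - 1
    # Zero-padded FFTs turn circular convolution into linear convolution.
    spectrum = np.fft.rfft2(f, s=(rows, cols)) * np.fft.rfft2(h, s=(rows, cols))
    full = np.fft.irfft2(spectrum, s=(rows, cols))
    r0, c0 = (h.shape[0] - 1) // 2, (h.shape[1] - 1) // 2
    return full[r0:r0 + f.shape[0], c0:c0 + f.shape[1]]

def capture_unit_image(f, h, D, noise_std, rng):
    """Blur f with the unit PSF h, add white Gaussian noise, keep every D-th pixel."""
    blurred = conv2_same(f, h)
    noisy = blurred + rng.normal(0.0, noise_std, size=f.shape)
    return noisy[::D, ::D]

rng = np.random.default_rng(0)
f = rng.random((16, 16))                 # stand-in for the original image
delta = np.zeros((3, 3))
delta[1, 1] = 1.0                        # identity PSF, used here only as a sanity check
g = capture_unit_image(f, delta, D=4, noise_std=0.0, rng=rng)
```

With an identity PSF and no noise, the unit image reduces to the decimated original, which makes the role of each term in the model easy to check.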

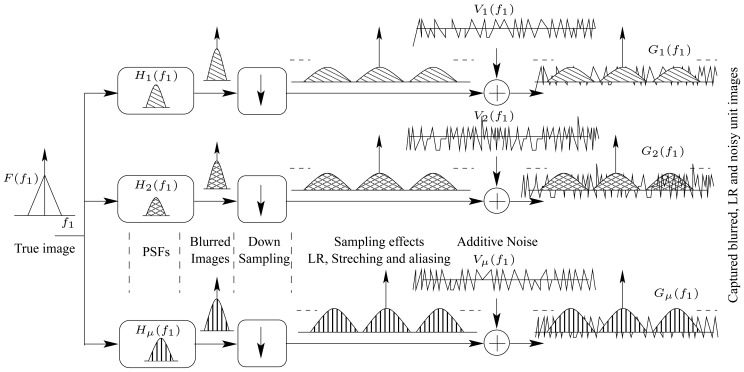

3. Spectral-Based Image Restoration Approach

Our objective is to restore or estimate the original image $f(x, y)$, given only the blurred, LR and noisy images $g_{i,j}(x, y)$, $i, j = 1, 2, \ldots, \mu$, captured by the TOMBO imager. This objective should be achieved with minimal information about the original image, the PSFs and the noise contribution. To tackle the problem, we propose a spectral-based image restoration technique. We consider the 2-d z-transform of the model given by Eqn. (7), i.e.,

| (8) |

where $z_1 = e^{-j2\pi f_1}$ and $z_2 = e^{-j2\pi f_2}$.

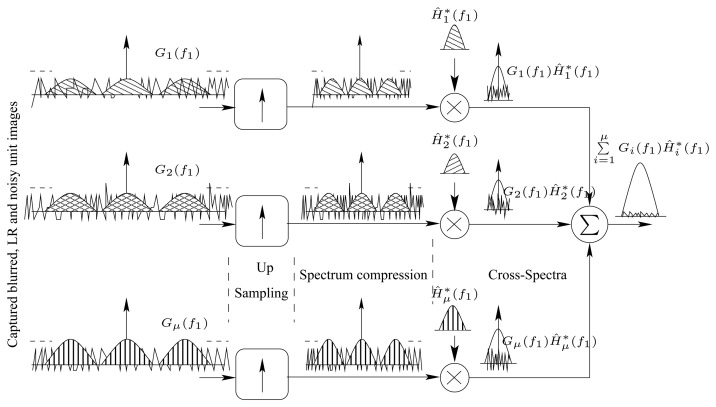

Fig. 5 illustrates the spectra of all the signals involved. For clarity, the spectra are shown for one-dimensional signals. The figure also shows how both the down-sampling operator “↓” and the noise degrade the original image.

Figure 5. A spectral representation for the captured images in the TOMBO system.

Eqn. (8) can be partitioned into,

| (9) |

and can be rewritten as,

| (10) |

where,

(a) represents the image of interest and the noise term, i.e., the useful in-band terms

(b) represents the aliased out-of-band image terms

(c) represents the aliased out-of-band noise term

By multiplying both sides of Eqn. (10) with the complex conjugate of , i.e., and applying the ensemble average, E {} and after some manipulation we have,

| (11) |

which can be rewritten as,

| (12) |

where the cross-spectrum is $C_{XY^*}(z_1, z_2) = E\{X(z_1, z_2)\,Y^*(z_1, z_2)\}$ [13].

Since the aliased terms (b) and (c) are not located in the same frequency band as the in-band terms (a), the second and the last cross-spectral terms in Eqn. (12) vanish, leading to,

| (13) |

Similarly and by following the same previous procedure,

| (14) |

where the residual error terms in Eqns. (13) and (14) represent the in-band cross-spectral terms between the original image, the PSFs and the independent additive noise in Eqn. (11). These errors can be assumed to be zero mean, 2-d independent and identically distributed (i.i.d.) signals under some regularity conditions. In addition, because these error terms are cross-spectra between signals and noise, they are smaller in amplitude than the spectra of the signals themselves [14].

Eqns. (13) and (14) suggest that it is possible to estimate the original image and the PSFs with minimal prior information/constraints on the PSFs/original image. For instance, if the PSF associated with each (i, j) unit image, is known or can be estimated, then the original image can be restored using,

| (15) |

However, there are three main problems when using image and PSF estimation methods in real situations. The first is the unknown size of the PSF. The second is the PSF itself, and the third and most critical one is the residual error term, which can significantly affect the restoration process [15]-[22].

Since TOMBO imaging systems provide multiple observations of the scene, it is possible to significantly reduce the impact of the error terms using averaged cross-spectral techniques [13]. To demonstrate this, consider a TOMBO system with (μ × μ) unit images. Since i and j each span 1, 2, …, μ, the averaged spectral and cross-spectral techniques can be applied as in Eqns. (13) and (14), but using spectral estimates instead of the true spectra. Therefore, the original image spectrum, and similarly the PSF spectra, can be estimated iteratively using the equation,

| (16) |

where ĈXY*(z1, z2) = X(z1, z2)Y*(z1, z2) is an estimate of the cross-spectra between X(z1, z2) and Y(z1, z2). is here not considered since it is only a scaling factor.

For a large number (μ × μ) of unit images, the last summation error term of Eqn. (16) is simply the sample mean of an i.i.d. signal which has zero mean [13][14]. In this situation, we have,

| (17) |

Fig. 6 is a spectral diagram illustrating how signals' cross-spectra are estimated so that the original image can be restored while minimizing the effect of additive noise and aliasing terms resulting from down-sampling. A similar diagram can be drawn for the PSF function.

Figure 6. A spectral diagram showing how cross-spectra are estimated in the case of a 1-d signal
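The benefit of averaging cross-spectra over many unit images can be illustrated numerically. The sketch below is a simplified stand-in, not the blind algorithm of this paper: it assumes the PSFs are known, ignores down-sampling, uses circular convolution, and adopts a regularised ratio of summed cross-spectra as the estimator. That estimator form, the function names and all numerical values are assumptions made for illustration.

```python
import numpy as np

def averaged_cross_spectral_estimate(G, H, alpha=0.01):
    """Estimate the image spectrum from stacks of unit-image spectra G and PSF spectra H."""
    numerator = np.sum(G * np.conj(H), axis=0)        # summed cross-spectra of G and H
    denominator = np.sum(np.abs(H) ** 2, axis=0) + alpha
    return numerator / denominator

def restoration_rmse(num_units, rng, size=32, noise_std=0.5, alpha=0.01):
    """RMSE of the restored image when `num_units` noisy observations are combined."""
    f = rng.random((size, size))
    F = np.fft.fft2(f)
    h0 = np.full((3, 3), 0.05)
    h0[1, 1] = 0.6                                    # hypothetical mild reference blur
    H = np.stack([np.fft.fft2(h0 + rng.uniform(-0.01, 0.01, (3, 3)), s=(size, size))
                  for _ in range(num_units)])
    V = np.stack([np.fft.fft2(rng.normal(0.0, noise_std, (size, size)))
                  for _ in range(num_units)])
    G = H * F + V                                     # blurred image plus noise, per unit
    f_hat = np.fft.ifft2(averaged_cross_spectral_estimate(G, H, alpha)).real
    return float(np.sqrt(np.mean((f_hat - f) ** 2)))

rmse_single = restoration_rmse(1, np.random.default_rng(0))
rmse_many = restoration_rmse(36, np.random.default_rng(0))
```

Because the signal-noise cross terms are zero mean, summing over 36 observations leaves a much smaller residual than a single observation does, which is the effect the averaged estimate relies on.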

4. Blind Image Restoration Algorithm

Blind image restoration aims to reconstruct an original image from degraded, blurred and noisy observation(s) without any knowledge of either the original image or the degradation process, which includes the noise and the blur PSF [15]-[22].

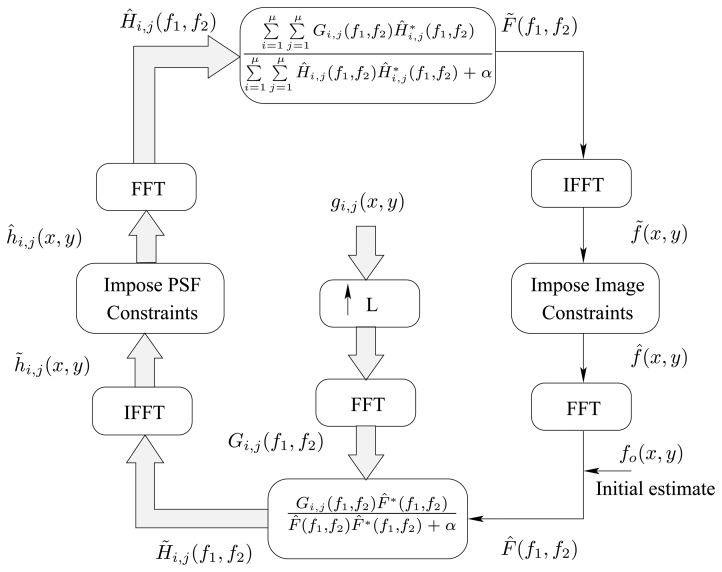

Using the analysis carried out in the previous section, we have developed a blind image restoration algorithm capable of restoring the original image using only the captured, noisy and LR unit images. The proposed algorithm is given in Fig. 7.

Figure 7. Blind Image Restoration Algorithm

During the restoration process, the algorithm imposes only two constraints on the restored image and the PSFs: positivity and the support region. The two constraints can be described mathematically as follows,

- For the image

| (18) |

- For the PSFs

| (19) |

where L ≥ D is the up-sampling factor needed to restore the high resolution image, (LM × LN) is the size of the restored image, which can be greater than or equal to the size of the original image, and (ℓ × ℓ) is the size of the estimated PSF.
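A positivity and support projection of the kind described above can be sketched as follows. The exact form of the constraints is not reproduced here; the sketch assumes the common choice of clipping negative values to zero and zeroing everything outside a rectangular support anchored at the array origin. The function name and the example values are hypothetical.

```python
import numpy as np

def apply_constraints(estimate, support_shape):
    """Enforce positivity and a rectangular support region on an estimate."""
    rows, cols = support_shape
    constrained = np.zeros_like(estimate)             # everything outside the support is zero
    constrained[:rows, :cols] = np.clip(estimate[:rows, :cols], 0.0, None)
    return constrained

# Hypothetical intermediate PSF estimate on a 4 x 4 grid with a 3 x 3 support.
psf_estimate = np.array([[0.20, -0.10, 0.30, 0.05],
                         [0.10, 0.40, -0.20, 0.05],
                         [0.00, 0.10, 0.20, 0.05],
                         [0.05, 0.05, 0.05, 0.05]])
constrained_psf = apply_constraints(psf_estimate, (3, 3))
```

The same function could be applied to the image estimate with support (LM × LN) and to each PSF estimate with support (ℓ × ℓ).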

For implementation purposes, and to avoid division by zero in Eqn. (17), a small value α can be added to the denominator (Fig. 7). The up-sampling is done by zero-padding in the spatial domain between the image samples. The Fast Fourier Transform (FFT) is then applied and, in the Fourier domain, a single spectrum is extracted from the repeated spectra using a low-pass filter, with the rest of the spectrum set to zero. Finally, the inverse FFT (IFFT) is used to return to the image domain. Note that the zero-padding must leave the zero-frequency components unchanged and must be applied to both positive and negative frequencies. The whole process is symbolized by (↑ L) in Fig. 7. Other interpolation techniques could also be used.
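The (↑ L) operation can be sketched as zero insertion followed by an ideal low-pass filter in the Fourier domain. This is an illustrative sketch only: the function name is hypothetical, and the pass band is assumed to be the M × N block of lowest frequencies, i.e., one copy of the repeated spectrum.

```python
import numpy as np

def upsample_fft(image, L):
    """Up-sample by an integer factor L using zero insertion and an ideal low-pass filter."""
    M, N = image.shape
    padded = np.zeros((L * M, L * N))
    padded[::L, ::L] = image                      # zeros inserted between the image samples
    spectrum = np.fft.fft2(padded)                # contains L x L copies of the image spectrum
    # Integer frequency indices of the up-sampled grid (positive and negative frequencies).
    ky = np.rint(np.fft.fftfreq(L * M) * (L * M)).astype(int)
    kx = np.rint(np.fft.fftfreq(L * N) * (L * N)).astype(int)
    keep_y = (ky >= -(M // 2)) & (ky < M - M // 2)
    keep_x = (kx >= -(N // 2)) & (kx < N - N // 2)
    mask = np.outer(keep_y, keep_x)               # keeps the baseband copy, zeroes the rest
    # The factor L**2 compensates for the energy lost by zero insertion.
    return np.fft.ifft2(spectrum * mask * L ** 2).real

rng = np.random.default_rng(0)
low_res = rng.random((8, 8))
high_res = upsample_fft(low_res, 4)
```

The interpolated image passes through the original samples, so `high_res[::4, ::4]` reproduces `low_res`, while the pixels in between are band-limited interpolations.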

5. Experimental Results and Discussion

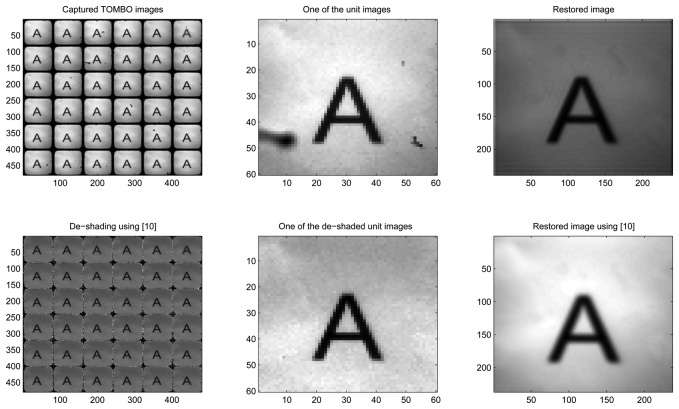

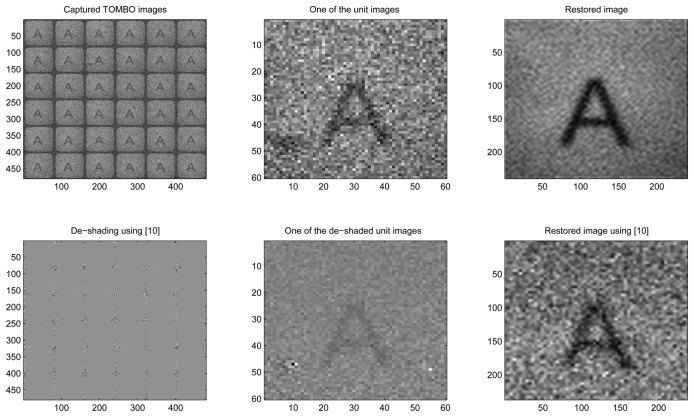

In this section, we apply the proposed method to restore high resolution images from LR noisy images captured by an experimental TOMBO imager. Captured images of a letter ‘A’ picture (Fig. 8) were provided by [3]. The letter ‘A’ picture was located 285 mm from the TOMBO imager, which comprises (10 × 10) imaging units. Each unit has (60 × 60) pixels and each pixel is 6.25 μm × 6.25 μm. The microlens array has the following characteristics: a 1.3 mm focal length, a 0.5 mm aperture diameter, and a 0.5 mm pitch.

Figure 8. Experimental, no added noise, 6 × 6 lenses

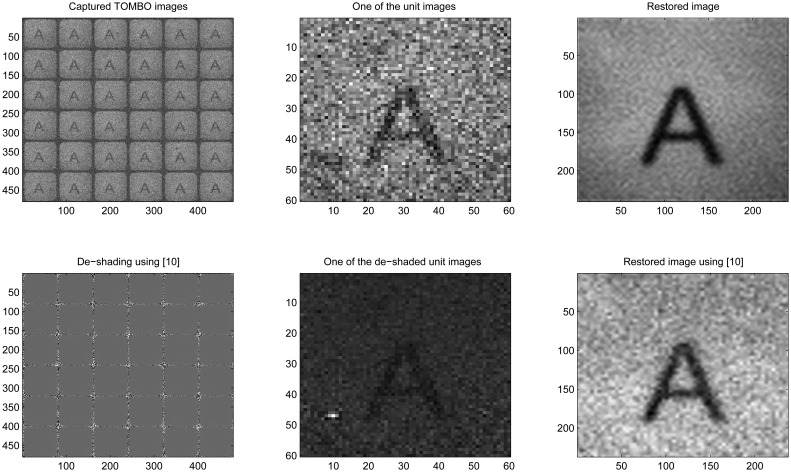

To assess the robustness of the proposed method in the presence of noise, zero-mean correlated and uncorrelated Gaussian noise is manually added to the captured images. We consider the restoration of the original image for the following four cases: (i) no added noise, (ii) additive external noise, (iii) additive internal and external noise, and (iv) additive external correlated noise. Information about test input data and output images can be found in Table 1.

Table 1. Test input data

| Figure | μ × μ used | M × N | SNR | ℓ | ↑ L | LM × LN | α | # of Iterations |
|---|---|---|---|---|---|---|---|---|
| Fig. 8, No added noise | 6 × 6 | 60 × 60 | - | 3 | 4 | 240 × 240 | 0.01 | 30 |
| Fig. 9, External noise | 6 × 6 | 60 × 60 | 7 dB | 3 | 4 | 240 × 240 | 0.01 | 30 |
| Fig. 10, Internal and external noise | 6 × 6 | 60 × 60 | 40 dB (In) and 7 dB (Ex) | 3 | 4 | 240 × 240 | 0.01 | 30 |
| Fig. 11, External correlated noise | 6 × 6 | 60 × 60 | 2.8 dB | 3 | 4 | 240 × 240 | 0.01 | 30 |

The external noise is directly added to the captured images, while the internal noise is increased by altering the black, B(x, y), and white W(x, y) images (Eqns. (1) and (2)).
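Adding external noise at a prescribed level can be sketched as below. The sketch assumes that the noise level is defined as the ratio of total signal energy to total noise energy, expressed in dB, and it covers only uncorrelated Gaussian noise; the function names and the test image are hypothetical.

```python
import numpy as np

def add_noise_at_snr(image, target_db, rng):
    """Add zero-mean white Gaussian noise scaled to a target signal-to-noise energy ratio."""
    noise = rng.normal(0.0, 1.0, size=image.shape)
    signal_energy = np.sum(image ** 2)
    target_noise_energy = signal_energy / 10 ** (target_db / 10)
    # Rescale the noise realisation so that its energy matches the target exactly.
    noise *= np.sqrt(target_noise_energy / np.sum(noise ** 2))
    return image + noise, noise

def measured_snr_db(image, noise):
    """Signal-to-noise energy ratio in dB."""
    return 10 * np.log10(np.sum(image ** 2) / np.sum(noise ** 2))

rng = np.random.default_rng(0)
unit_image = rng.random((60, 60))            # stand-in for a captured 60 x 60 unit image
noisy_image, noise = add_noise_at_snr(unit_image, 7.0, rng)
achieved_db = measured_snr_db(unit_image, noise)
```

Rescaling the realisation rather than its standard deviation makes the achieved ratio match the requested value for every random draw.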

In Fig. 8 (no added noise), our blind restoration method is seen to perform slightly better than the pixel rearrangement method.

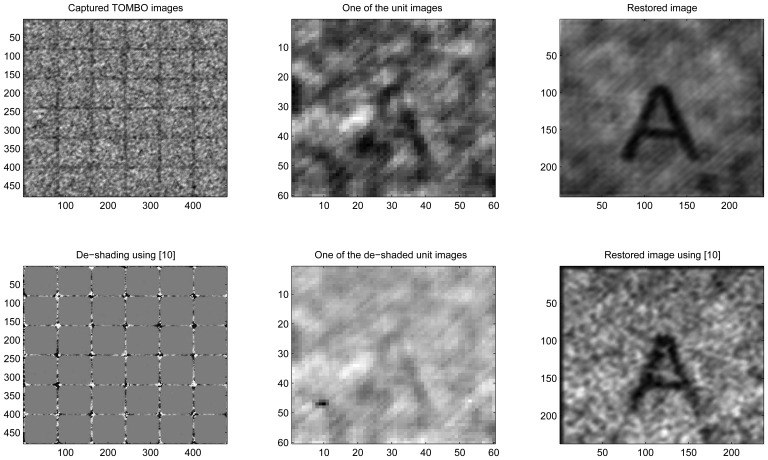

In Fig. 9, under external noisy conditions, one can see that our method performs better than the pixel rearrangement method.

Figure 9. Experimental, External noise, 6 × 6 unit images

In Fig. 10, under internal and external noisy conditions, one can see that the de-shading pre-processing step further degrades the captured images. As a result, the pixel rearrangement method was unable to restore the original image, while our proposed method is able to. We have considered the effect of the internal noise because the conditions under which the black and white calibration images have been captured can change. In other words, when a new image is captured, the system will encounter noise levels and nonlinearities that are different from the ones encountered when calibrating the system using the black and white images.

Figure 10. Experimental, Internal and external noise, 6 × 6 unit images

In Fig. 11, under external correlated noise, it is clear that the pixel rearrangement method is unable to restore the original image accurately, while our method can. The reason behind the lower performance of the rearrangement method is that correlated noise creates false (spurious) peaks that significantly affect its alignment performance (Fig. 2). In contrast, in our method, spectral information is not affected by misalignments (shifts δx and δy in Fig. 2).

Figure 11. Experimental, External correlated noise, 6 × 6 unit images

In summary, the results of Figs. 8, 9, 10 and 11 show that our spectral-based blind image restoration method can reconstruct the original image in noisy conditions. This is achieved whether the source of noise is internal or external, uncorrelated or correlated, and at a low Signal-to-Noise Energy Ratio (SNER), as seen in Table 1.

Note also, the impact of the de-shading pre-processing step in Figs. 8, 9, 10 and 11, with the values of pixels increasing especially at the periphery of each unit image.

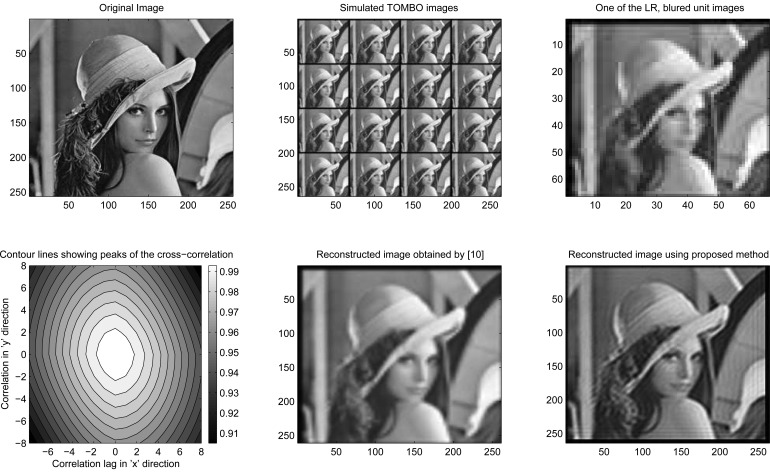

To compare the performance of our approach with the pixel rearrangement method when the blur is significant and the down-sampling factor is high, a “Lena” image [23], $f(x, y)$, of (256 × 256) pixels is used to represent the original image. To accurately model real-world conditions, the original image is first convolved with (μ × μ) different PSFs representing the blur. A rotationally symmetric truncated Gaussian PSF (blur) combined with a motion-like PSF, $h_o(x, y)$, with standard deviation σ, is used as a reference. From the reference PSF, all PSFs are generated such that $h_{i,j}(x, y) = h_o(x, y) + r_{i,j}(x, y)$, where $r_{i,j}(x, y)$ is a random variable uniformly distributed between [−δ, δ]. To model a captured TOMBO unit image $g_{i,j}(x, y)$, the resultant blurred image plus the noise is then down-sampled (↓ D) in a way that mimics the function of the imager. Mathematically we have,

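$$g_{i,j}(x, y) = \left[ \left( h_o(x, y) + r_{i,j}(x, y) \right) \ast\ast\, f(x, y) + v_{i,j}(x, y) \right]_{\downarrow D} \tag{20}$$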

Simulation parameters are given in Table 2.

Table 2. Simulation input data

| Figure | ℓ, σ | ↓D | SNER | μ × μ unit images | M × N | ↑L | LM×LN | α | # of Iterations |
|---|---|---|---|---|---|---|---|---|---|
| Fig. 12 | 7, 4 | 4 | - | 4 × 4 | 64 × 64 | 4 | 256×256 | 0.01 | 30 |
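The family of perturbed PSFs used in the simulation can be sketched with the ℓ = 7, σ = 4 and 4 × 4 values of Table 2. The sketch covers only the truncated Gaussian part of the reference PSF: the motion-like component is omitted, the unit-sum normalisation is an assumption, and the value of δ is hypothetical since it is not listed in the table.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Rotationally symmetric Gaussian truncated to a size x size window (unit sum assumed)."""
    coords = np.arange(size) - (size - 1) / 2.0
    x, y = np.meshgrid(coords, coords)
    psf = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    return psf / psf.sum()

def psf_family(h0, mu, delta, rng):
    """Generate mu x mu PSFs h_ij = h0 + r_ij with r_ij uniform in [-delta, delta]."""
    perturbation = rng.uniform(-delta, delta, size=(mu, mu) + h0.shape)
    return h0 + perturbation

rng = np.random.default_rng(0)
h0 = gaussian_psf(size=7, sigma=4.0)                 # reference PSF (Gaussian part only)
psfs = psf_family(h0, mu=4, delta=0.001, rng=rng)    # delta is a hypothetical value
```

Each `psfs[i, j]` could then be convolved with the original image, after which noise is added and the result is down-sampled by D to form the corresponding unit image.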

From Fig. 12, it is clear that with realistic PSFs, our restoration method outperforms the pixel rearrangement method. This is because the pixel rearrangement method is unable to align the captured unit images for the simulation parameters in Table 2. To illustrate this, the cross-correlation function between a reference image and a unit image is represented by the contour lines in Fig. 12. Note that there is a single peak at (x = 0, y = 0). However, there should be at least 7 peaks due to the motion-like PSFs, since each has (7 × 7) coefficients. Because the pixel rearrangement method recognizes only a single peak, its output image is blurred and requires inverse filtering.

Figure 12. Simulation, no added noise, 4 × 4 unit images

6. Conclusions

A spectral-based blind image restoration method for thin TOMBO imagers has been described and analyzed. The proposed method requires neither conventional de-shading nor pixel rearrangement, as these operations are performed automatically through the blind restoration process. As a result, errors associated with misalignment, nonlinearities, singularities and high pixel values are overcome. Experimental results demonstrate that the proposed method can reconstruct the original image whether the source of additive noise is internal or external, uncorrelated or correlated. In contrast to the conventional pixel rearrangement method, it is able to restore the original image at an SNER lower than 3 dB. The proposed method can also be extended to color images.

Acknowledgments

This work is supported by the Australian Research Council's Discovery Project DP0664909. The authors would like to thank Professor Tanida for kindly providing the authors with images of their experimental TOMBO imager.

References

- 1. El Gamal A., Eltoukhy H. CMOS Image Sensors. IEEE Circ. Dev. Mag. 2005;21:6–20.

- 2. Ng D.C., Tokuda T., Shiosaka S., Tano Y., Ohta J. Implantable Microimagers. Sensors. 2008;8:3183–3204. doi: 10.3390/s8053183.

- 3. Tanida J., Kumagai T., Yamada K., Miyatake S., Ishida K., Morimoto T., Kondou N., Miyazaki D., Ichioka Y. Thin Observation Module by Bound Optics (TOMBO): Concept and experimental verification. Appl. Opt. 2001;40:1806–1813. doi: 10.1364/ao.40.001806.

- 4. Völkel R., Eisner M., Weible K. J. Miniaturized imaging systems. Microelectron Eng. 2003;67-68:461–472.

- 5. Land M., Nilsson D.-E. Animal Eyes. Oxford University Press; New York, NY, USA: 2002.

- 6. Hamanaka K., Koshi H. An artificial compound eye using a microlens array and its application to scale invariant processing. Optics. 1996;3:264–268.

- 7. Yamada K., Tanida J., Yu W., Miyatake S., Ishida K., Miyazaki D. Fabrication of diffractive microlens array for opto-electronic hybrid information system. Proc. of Diffrac. Opt. 1999;Vol. 22:5253.

- 8. Tanida J., Kumagai T., Yamada K., Miyatake S., Ishida K., Morimoto T., Kondou N., Miyazaki D., Ichioka Y. Proc. SPIE Optics in Computing. 2000. Thin observation module by bound optics-TOMBO: an optoelectronic image capturing system; p. 10301036.

- 9. Tanida J., Yamada K. TOMBO: thin observation module by bound optics. Proc. the 15th Annual Meeting of the IEEE in Lasers and Electro-Optics; 2002. pp. 233–234.

- 10. Kitamura Y., Shogenji R., Yamada K., Miyatake S., Miyamoto M., Morimoto T., Masaki Y., Kondou N., Miyazaki D., Tanida J., Ichioka Y. Reconstruction of a high-resolution image on a compound-eye image-capturing system. App. Optics. 2004;43:1719–1727. doi: 10.1364/ao.43.001719.

- 11. Nitta K., Shogenji R., Miyatake S., Tanida J. Image reconstruction for thin observation module by bound optics by using the iterative backprojection method. App. Optics. 2006;45:2893–2900. doi: 10.1364/ao.45.002893.

- 12. Yamada K., Ishida K., Shougenji R., Tanida J. Development of three dimensional endoscope by Thin Observation by Bound Optics (TOMBO). Proc. World Automation Congress; 2006. pp. 1–4.

- 13. Kay S. M. Modern Spectral Estimation: Theory and Application. Englewood Cliffs, N.J., USA: Prentice Hall; 1988.

- 14. Brillinger D. R. Time Series: Data Analysis and Theory. Holden-Day, Inc.; San Francisco, CA, USA: 1981.

- 15. Banham M. R., Katsaggelos A. K. Digital image restoration. IEEE Signal Process. Mag. 1997;14:24–41.

- 16. Tekalp A. M., Pavlovic G. Image restoration with multiplicative noise: incorporating the sensor nonlinearity. IEEE Trans. Signal Process. 1991;39:2132–2136.

- 17. Kundur D., Hatzinakos D. Blind Image Deconvolution. IEEE Signal Process. Mag. 1996;13:43–64.

- 18. Richardson W. H. Bayesian-based Iterative Method of Image Restoration. J. Opt. Soc. Am. 1972;62:55–59.

- 19. Lucy L. B. An iterative technique for the rectification of observed distribution. Astronom. J. 1974;79:745–759.

- 20. Ayers G. R., Dainty J. C. Iterative Blind Deconvolution Method and Its Applications. Optics Lett. 1988;13:547–549. doi: 10.1364/ol.13.000547.

- 21. McCallum B. C. Blind Deconvolution by Simulated Annealing. J. Optics Commun. 1990;75:101–105.

- 22. Chen L., Yap K., He Y. Efficient Recursive Multichannel Blind Image Restoration. EURASIP J. Adv. Signal Proces. 2007:1–10.

- 23. Munson D. C. A Note On Lena. IEEE Trans. Image Proces. 1996;5:1–2.