Abstract

Designing and implementing assessment tasks in large-scale undergraduate science courses is a labor-intensive process subject to increasing scrutiny from students and quality assurance authorities alike. Recent pedagogical research has provided conceptual frameworks for teaching introductory undergraduate microbiology but has yet to define best-practice assessment guidelines. This study assessed the applicability of Biggs’ theory of constructive alignment in designing consistent learning objectives, activities, and assessment items aligned with the American Society for Microbiology’s concept-based microbiology curriculum in MICR2000, an introductory microbiology course offered at the University of Queensland, Australia. By improving the internal consistency of assessment criteria and increasing the number of assessment items explicitly aligned to the course learning objectives, the teaching team was able to provide adequate feedback efficiently on numerous assessment tasks throughout the semester, which contributed to improved student performance and learning gains. Compared with students in the 2010 offering of MICR2000, students in the constructively aligned 2011 offering obtained higher marks in both coursework assignments and examinations as the semester progressed. Students also valued the additional feedback: student ratings of feedback provision increased in 2011, and assessment and feedback were identified as a key strength of MICR2000. By designing MICR2000 around constructive alignment and iterative assessment tasks that followed a common set of learning outcomes, the teaching team was able to deliver detailed and timely feedback in a large introductory microbiology course. This study provides a case study of how constructive alignment can be integrated into modern teaching practices for large-scale courses.

INTRODUCTION

The appropriate design and implementation of assessment tasks that effectively support student learning is one of the biggest challenges facing educators across all disciplines. The Scholarship of Teaching and Learning (SoTL) has provided much guidance on this topic, emphasizing that assessment is the main lens through which students view the outcomes and value of a course (3) and that it must be coupled with timely, detailed, and personalized feedback to truly facilitate learning gains (6).

Despite good intentions, however, the integration of these optimal assessment practices remains limited. Time and resource constraints placed on instructors coordinating large undergraduate science courses often reduce the frequency of both formative and summative assessment pieces while severely diminishing instructors’ capacity to provide feedback. Moreover, there is often misalignment between the intended learning outcomes of a course and the way in which students are taught and assessed. Scientific data acquired through active experimentation are often taught as dogmatic facts devoid of any inquiry or hypothesis testing (5). Large-scale lectures are used to deliver as much information as possible while minimizing student-instructor interaction (10), and multiple-choice exams that promote short-term rote memorization are inappropriately used to test in-depth understanding over a broad range of topics while failing to provide sufficient feedback to students (18). The Boyer Commission Report in 1998 identified these pervasive problems as allowing science students to graduate without “a coherent body of knowledge” and without knowing “how to think logically, write clearly, or speak coherently” (17).

Biggs’ theory of constructive alignment has the potential to remedy such flaws in course design; it describes an educational model in which learning objectives, learning activities, and assessment items are consistently aligned (3). It has been used as the theoretical basis for developing inter-professional education for medical teams (18) and early-career professional development courses for doctors (20), where coherence and transparency have been crucial for validating these courses against stringent accreditation requirements across multiple medical professions. In science, where consensus conceptual frameworks for teaching and learning are still lacking despite recommendations to develop them (16), uptake of constructive alignment has been sporadic and anecdotal, appearing in isolated reports of new assessment methodology (7). Recently, however, the American Society for Microbiology (ASM) published a consensus concept-driven framework for the undergraduate microbiology curriculum (11), representing a concerted effort to establish deep student understanding of fundamental concepts in introductory microbiology courses. For existing microbiology courses to be constructively aligned, the integration of these learning objectives needs to be reinforced by appropriate learning activities and assessment items, so as to “maximize the likelihood that students will engage in the activities designed to achieve the intended outcomes” (3).

This study examined the importance of appropriate assessment implementation in large-scale undergraduate microbiology courses by applying Biggs’ theory of constructive alignment to the 2011 offering of MICR2000, an introductory microbiology course offered at the University of Queensland (UQ), Australia. Approximately 400 students enroll in the course each year, and designing and administering progressive assessment items for hundreds of students had been a shortcoming of MICR2000. This was reflected in the 2010 student survey responses, in which “I received helpful feedback on how I was going in the course” was the only statement about the course to score below 4 on a 1–5 Likert scale, a standardized survey metric used in Australian tertiary institutions.

To address these issues, the 2011 offering of MICR2000 constructively aligned the course’s learning objectives, activities, and outcomes with a progressive assessment scheme comprising coursework assignments and examinations. Internal alignment was achieved within each of these iterative series of assessment tasks, as the tasks in each series followed a common set of criteria, standards, and learning objectives. Feedback sessions for each assessment task were conducted throughout the semester, and student performance in these tasks, as well as responses to ethics-approved surveys, was monitored. Using these data, the project team attempted to answer the following research question: Will increasing the number of constructively aligned assessment items provide additional feedback to a large number of students efficiently and, consequently, improve student confidence and performance?

METHODS

Participant selection

This study focused on student perceptions and performance in MICR2000, an introductory microbiology and immunology course offered at UQ. The student cohorts enrolled in the 2010 and 2011 offerings of MICR2000 were invited to participate, with the 2010 results serving as the baseline before the 2011 interventions in assessment and feedback processes. In total, 265 students in 2010 and 264 students in 2011 provided informed consent to participate; participants ranged in age from 17 to 50 years, with approximately equivalent male-to-female ratios.

Informed consent

Informed consent was obtained from all participating students for completing de-identified surveys on their perceived learning gains throughout the course and for the analysis and publication of their performance in course assessments. This study was cleared in accordance with the ethical review processes of UQ (“Evaluating MICR2000” – Project Number: 201000226) and with the guidelines of the National Statement on Ethical Conduct in Human Research 2007 in Australia, as determined by the Australian Health Ethics Committee (AHEC), an Australian government advisory committee for national and international health ethics policy.

Quantitative analysis of student perceptions and performance

The Student Evaluation of Course and Teaching (SECaT) survey instrument is a standardized questionnaire administered centrally across all UQ courses at the end of each teaching semester to evaluate course structure and teaching quality through a consistent framework. Like the previously validated Student Assessment of Learning Gains (SALG) surveys (15), the SECaT utilizes a 5-point scale to analyze participant responses, and, to maintain consistency, the Attitudes and Skills After Practicals (ASAP) survey was also designed using this format. De-identified student responses were quantified using either a 5-point learning gains scale (1 = No Gain; 2 = Little Gain; 3 = Moderate Gain; 4 = Good Gain; 5 = Great Gain) or a 5-point Likert scale (1 = Strongly Disagree; 2 = Disagree; 3 = Neutral; 4 = Agree; 5 = Strongly Agree). ASAP focused on student perceptions toward learning gains made in attitudes and scientific skills after completing the practical component of MICR2000, and SECaT assessed student understanding, course structure, assessment implementation, as well as key strengths of the course. Student performance in progressive assessment items was also collated across 2010 and 2011 offerings of MICR2000. Statistical comparisons were conducted using the Mann-Whitney U-test, with p < 0.05 denoting statistical significance.
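Because the survey responses are ordinal 1–5 ratings with many ties, a rank-based test such as the Mann-Whitney U test suits them. As a minimal, illustrative sketch (the study names only the test, not the software used), the Python below computes U and a two-sided p-value using the tie-corrected normal approximation with a continuity correction. The function names are hypothetical and the example ratings are invented, not study data; in practice a statistics package such as SciPy's `scipy.stats.mannwhitneyu` would normally be used.

```python
import math
from collections import Counter
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Assign 1-based ranks, giving tied values the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> tuple[float, float]:
    """Two-sided Mann-Whitney U test (tie-corrected normal approximation).

    Returns (U for sample x, approximate two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1

    n = n1 + n2
    # Likert data contain many ties, which shrink the variance of U.
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance <= 0:  # every response identical: no evidence of a difference
        return u1, 1.0
    # Subtracting 0.5 applies the continuity correction.
    z = (max(u1, u2) - n1 * n2 / 2 - 0.5) / math.sqrt(variance)
    p = math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))
    return u1, min(1.0, p)


if __name__ == "__main__":
    # Hypothetical ratings for one survey item, not data from this study.
    ratings_2010 = [3, 3, 4, 2, 3, 4, 3, 2]
    ratings_2011 = [4, 5, 4, 4, 3, 5, 4, 5]
    u, p = mann_whitney_u(ratings_2010, ratings_2011)
    print(f"U = {u}, p = {p:.4f}")
```

Taking the larger of the two U values and doubling the upper-tail probability mirrors the usual two-sided convention; with small samples an exact test would be preferable to this approximation.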

RESULTS

Course structure

MICR2000 is an introductory undergraduate microbiology course offered as part of the microbiology major at the University of Queensland. The course provides a holistic introduction to microbiology for students who have already completed BIOL1020, a UQ course covering “Genes, Cells, and Evolution.” Both courses are compulsory for students pursuing microbiology, molecular biology, or biotechnology majors. The course begins with introductory bacteriology covering microbial cell structure, function, and growth, before discussing the evolution of microorganisms and how it contributes to metabolic diversity in environmental ecology and to drug resistance in clinical pathogenesis. Modules on virology and immunology follow, introducing students to virus life cycles, viral pathogens, and vaccine technologies, together with the components of the immune system and the distinctions between innate and adaptive immune responses. To facilitate retention of these concepts, bacteriology and immunology laboratory practical sessions that directly align with the lecture content run over eight weeks of the semester. The breadth and depth of topics covered in MICR2000 (summarized in Table 1) and the course learning objectives (Table 2) are consistent with ASM’s concept-driven curriculum guidelines for undergraduate microbiology, which focus on promoting holistic student understanding of the field (11). All of the key concepts in the ASM curriculum guidelines (evolution, cell structure and function, metabolic pathways, information flow and genetics, microbial systems, and the impact of microorganisms) are covered in MICR2000.

TABLE 1.

Overview of lecturing schedule and learning activities in MICR2000.

| Week | Lecture Topic and Learning Activities |
|---|---|
| Module 1 – Introduction to Microbiology | |
| Week 1 | Cell structure and function |
| Week 2 | Microbial growth |
| Module 2 – Environmental Microbiology | |
| Week 3 | Microbial diversity and metabolism |
| Week 4 | Microbial ecology, evolution, and systematics; bacteriology practicals commence |
| Module 3 – Eukaryotic Microbes | |
| Week 5 | Fungal growth, biotechnology, and pathogens |
| Module 4 – Bacteriology | |
| Week 7 | Bacterial gene transfer, resistance, and pathogenesis |
| Module 5 – Virology | |
| Week 8 | Viral definition, structure, and replication |
| Week 9 | Viral pathogenesis, research, and biotechnology |
| Week 10 | Midsemester break |
| Module 6 – Immunology and Host-Pathogen Interactions | |
| Week 11 | Principles and components of the immune system; immunology practicals commence |
| Week 12 | Innate and acquired immunity in response to pathogens |

TABLE 2.

Alignment of MICR2000 learning objectives to assessment tasks in 2010 and 2011 offerings of the course.

| MICR2000 Learning Objectives | Alignment with Assessment (2010) | Alignment with Assessment (2011) |
|---|---|---|
| 1. Explain the structure and function of the components of a variety of microbial cells | Project Report 1; Midsemester Exam; Final Exam | Project Report 1; Project Report 2; Midsemester Exam 1; Midsemester Exam 2; Final Exam |
| 2. Categorize prokaryotic, eukaryotic, and viral microorganisms based on their growth, nutrition, metabolism, and physiological diversity | Project Report 1; Project Report 2; Midsemester Exam; Final Exam | Project Report 1; Project Report 2; Midsemester Exam 1; Midsemester Exam 2; Final Exam |
| 3. Apply the principles of molecular phylogeny to explain the diversity and evolutionary relationships of microorganisms (archaea, bacteria, fungi, protozoa, algae, and viruses) across a variety of ecosystems | Midsemester Exam; Final Exam | Midsemester Exam 1; Final Exam |
| 4. Identify microorganisms that are important in health and disease in mammals through their transmission cycles, modes of replication, and mechanisms of pathogenesis | Project Report 1; Project Report 2; Midsemester Exam; Final Exam | Project Report 1; Project Report 2; Midsemester Exam 1; Midsemester Exam 2; Final Exam |
| 5. Differentiate between the different aspects of the immune system (innate, humoral, cellular) and explain how each component would respond in both healthy and diseased states | Project Report 2; Final Exam | Project Report 2; Final Exam |
| 6. Proficiently utilize technical laboratory skills to study bacteria, viruses, and the immune response while maintaining high safety standards | Project Report 1; Project Report 2; Laboratory Note-Keeping | Project Report 1; Project Report 2; Laboratory Note-Keeping |
| 7. Clearly communicate experimental results through the accurate recording and evaluation of laboratory observations | Project Report 1; Project Report 2; Laboratory Note-Keeping | Project Report 1; Project Report 2; Laboratory Note-Keeping |

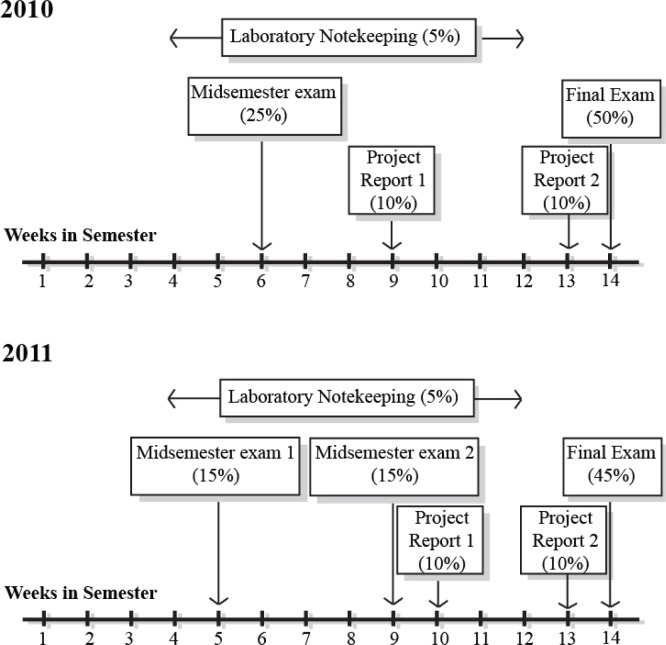

Given the wide range of topics students are expected to understand by the end of the course, assessment is crucial to promoting student learning in these discipline areas. In 2010, the assessment items in MICR2000 comprised a midsemester exam, laboratory note-keeping after each practical session, two project reports based on the bacteriology and immunology practicals, respectively, and a final written exam, each designed to align with at least one of the course’s seven learning objectives (Table 2). However, although students received their marks for each of these assessment pieces in a timely fashion, “I received helpful feedback on how I was going in the course” was the only statement that scored poorly (below 4 on the 1–5 Likert scale) in the end-of-semester SECaT survey in 2010. To address this perceived lack of feedback on assessment items, the MICR2000 teaching team adopted a two-tiered approach. First, the learning objectives of each assessment piece were made internally consistent so that students could apply the feedback they received to later assessment items. Second, the number of midsemester exams was increased to two in 2011, giving students additional feedback on their progress before the final exam. To ensure that the assessment strategy and schedule were the main variables altered between the 2010 and 2011 offerings of MICR2000, the lecture and practical class schedules were kept the same in both years. The assessment schedules for the 2010 and 2011 offerings of MICR2000 are shown in Figure 1.
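Table 2 can be read as a small matrix linking learning objectives (LOs) to assessment items. The minimal Python sketch below transcribes that table into dictionaries (our own transcription; the helper names are hypothetical) and tallies, for each year, how many assessment items address each objective and how many objectives each item addresses. Under this transcription, the 2011 design has 25 objective-item links versus 21 in 2010; the added links come from the second midsemester exam (objectives 1, 2, and 4) and from Project Report 2 also addressing objective 1.

```python
from collections import Counter

PR1, PR2 = "Project Report 1", "Project Report 2"
NOTES, FINAL = "Laboratory Note-Keeping", "Final Exam"

# Assessment items aligned to each learning objective, transcribed from Table 2.
ALIGNMENT = {
    2010: {
        1: [PR1, "Midsemester Exam", FINAL],
        2: [PR1, PR2, "Midsemester Exam", FINAL],
        3: ["Midsemester Exam", FINAL],
        4: [PR1, PR2, "Midsemester Exam", FINAL],
        5: [PR2, FINAL],
        6: [PR1, PR2, NOTES],
        7: [PR1, PR2, NOTES],
    },
    2011: {
        1: [PR1, PR2, "Midsemester Exam 1", "Midsemester Exam 2", FINAL],
        2: [PR1, PR2, "Midsemester Exam 1", "Midsemester Exam 2", FINAL],
        3: ["Midsemester Exam 1", FINAL],
        4: [PR1, PR2, "Midsemester Exam 1", "Midsemester Exam 2", FINAL],
        5: [PR2, FINAL],
        6: [PR1, PR2, NOTES],
        7: [PR1, PR2, NOTES],
    },
}


def items_per_objective(year: int) -> dict[int, int]:
    """Number of assessment items that address each learning objective."""
    return {lo: len(items) for lo, items in ALIGNMENT[year].items()}


def objectives_per_item(year: int) -> Counter:
    """Number of learning objectives that each assessment item addresses."""
    return Counter(item for items in ALIGNMENT[year].values() for item in items)


def total_links(year: int) -> int:
    """Total number of objective-item alignments in a given year."""
    return sum(items_per_objective(year).values())


if __name__ == "__main__":
    for year in sorted(ALIGNMENT):
        print(year, total_links(year), dict(objectives_per_item(year)))
```

Counting links is only a crude proxy for alignment quality, but it makes the structural change between the two offerings easy to inspect.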

FIGURE 1.

Comparison of progressive course assessment schedules across the 14-week semester in the 2010 and 2011 offerings of MICR2000.

Student demographics in the 2010 and 2011 MICR2000 cohorts

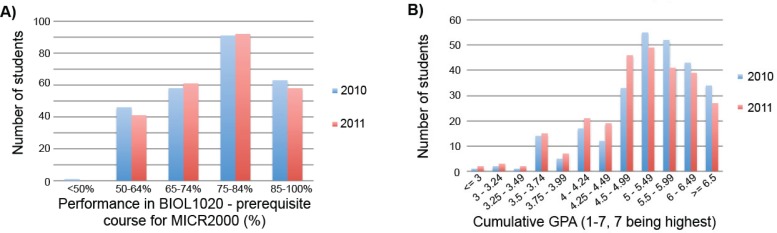

Bachelor programs in Biomedical Science, Biotechnology, Science, and Medicine were the most strongly represented among students enrolled in both the 2010 and 2011 offerings of MICR2000 (Table 3), which may minimize differences in background knowledge between the two years that could confound the results of this study. While it is difficult to ensure that two separate student cohorts are comparable in capability and competency, student performance in the prerequisite course provides a quantifiable measure of their grasp of foundational concepts before enrolling in MICR2000. No statistically significant difference was observed between the 2010 and 2011 MICR2000 cohorts in their prior performance in BIOL1020 – “Genes, Cells, and Evolution,” the only prerequisite course for MICR2000 at UQ (p = 0.9868) (Fig. 2(A)). Another measure of student academic capability is cumulative Grade Point Average (GPA) across at least one full year of tertiary study, which the Queensland Tertiary Admissions Centre (QTAC) uses to rank students for enrollment in tertiary programs in Queensland, Australia (13). No statistically significant difference was detected between the cumulative GPAs of 2010 and 2011 MICR2000 students (p = 0.855) (Fig. 2(B)). Together, these data suggest that the two cohorts had similar academic experience and competence.

TABLE 3.

Comparison of program enrollments across student cohorts in 2010 and 2011 offerings of MICR2000.

| 2010 Program | 2010 Proportion of Students | 2011 Program | 2011 Proportion of Students |
|---|---|---|---|
| B Biomedical Science | 10.5% | B Biomedical Science | 16% |
| B Biotechnology | 8.2% | B Biotechnology | 12% |
| B Business Management/B Science | 0.2% | B Business Management/B Science | 0.3% |
| B Commerce/B Science | 0.7% | B Commerce/B Science | 0.6% |
| B Economics/B Science | 0.2% | B Economics/B Science | 0.3% |
| B Engineering | 0.7% | B Engineering | 0.6% |
| B Engineering/B Science | 1.1% | B Engineering/B Science | 0.9% |
| B Environmental Science | 0.2% | B Environmental Science | 0.3% |
| B Health Sciences | 0.2% | B Environmental Management | 0.3% |
| B Information Technology | 0.2% | B Health Science/B Medicine, Surgery | 0.6% |
| B Medicine, Surgery/B Science | 35.5% | B Information Technology/B Science | 0.3% |
| B Science | 38% | B Medicine, Surgery/B Science | 24.1% |
| B Science/B Journalism | 0.2% | B Science | 41% |
| B Science/B Arts | 0.9% | B Science/B Arts | 1.2% |
| B Science/B Education | 0.5% | B Science/B Laws | 0.9% |
| B Science/B Laws | 1.1% | Study Abroad | 0.3% |
| Study Abroad | 0.9% | | |

FIGURE 2.

Comparison of prior academic performance of students in the 2010 (n = 265) and 2011 (n = 264) offerings of MICR2000. (A) Breakdown of student performance in BIOL1020 before enrolling in the 2010 and 2011 offerings of MICR2000. BIOL1020 – “Genes, Cells, and Evolution” is offered at UQ as the only prerequisite course for MICR2000. (B) Cumulative Grade Point Average (GPA) of students enrolled in the 2010 and 2011 offerings of MICR2000. The cumulative GPA for each student was calculated as their mean grade (1–7, with 7 the highest) across UQ courses over a minimum of one full year of tertiary study. GPA bands are those used by the Queensland Tertiary Admissions Centre to rank students for selection into tertiary programs (13).
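The caption describes each student's cumulative GPA as the mean of their course grades on the 1–7 scale. A minimal Python sketch of that calculation follows; the function name is hypothetical, and it deliberately computes an unweighted mean because the caption mentions no weighting by course units.

```python
def cumulative_gpa(grades: list[int]) -> float:
    """Unweighted mean of course grades on the 1-7 scale (7 = highest)."""
    if not grades:
        raise ValueError("at least one course grade is required")
    for grade in grades:
        if not 1 <= grade <= 7:
            raise ValueError(f"grade {grade} is outside the 1-7 scale")
    return sum(grades) / len(grades)


# Hypothetical transcript, not a student from this study.
example_grades = [6, 7, 5, 6, 6, 7, 4, 5]
print(round(cumulative_gpa(example_grades), 2))  # 5.75
```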

Internal alignment across assessment items facilitates student learning gains

The practical laboratory classes in MICR2000 spanned eight weeks, comprising five weeks of bacteriology practicals followed by three weeks of immunology practicals. A wide array of individual projects within these laboratory sessions developed fundamental microbiology laboratory skills, including microscopy, pure and selective culture to isolate microorganisms from a number of human and environmental sources, diagnostic identification of microbes, and quantitative measurement of bacterial and viral concentration and activity (Table 4). These skills align directly with the skills and competencies outlined in the ASM curriculum guidelines (11) and were reinforced through progressive course assessment, including weekly laboratory note-keeping and two extended project reports in which students summarized their experimental findings from the respective laboratory classes. In both 2010 and 2011, students were notified of the project report topics two weeks before the respective due dates, so the time available to complete each assignment was equivalent across years.

TABLE 4.

Overview of MICR2000 practical laboratory classes.

| Module | Project Title | Project Synopsis |
|---|---|---|
| Weeks 4–8: Bacteriology | 1. How can we visualize microorganisms? | Appropriate use of the light microscope; differentiating between types of microscopy. |
| | 2. Aseptic techniques and Gram staining | Safe handling and aseptic culture of microorganisms; Gram-staining techniques. |
| | 3. Are organisms transmitted by skin contact and oral routes? | Attempt to culture microorganisms from skin and respiratory tract before and after washing hands or wearing a face mask. |
| | 4. How do we test for antibiotic sensitivity? | Conduct antibiotic disc diffusion assays on multiple bacterial strains. |
| | 5. What is the incidence of nasal carriage of coagulase-positive Staphylococcus? | Attempt to culture and identify S. aureus from nasal swabs. |
| | 6. Isolation of a marine Vibrio species from mangrove mud | Attempt to culture and identify Vibrio spp. from mangrove mud using colony characteristics and biochemical testing. |
| | 7. Respiratory flora – Streptococci | Identify and categorize Streptococcus spp. using culturing and immunological assays. |
| | 8. Isolation of Pseudomonas aeruginosa from garden soil | Use of enrichment culture technique to isolate and identify Pseudomonas spp. |
| | 9. Coliforms and Escherichia coli from polluted water | Biochemical testing to identify coliforms from contaminated water samples. |
| | 10. Microbial motility | Observe and differentiate between types of bacterial motility. |
| Weeks 10–12: Immunology | 11. Titration of a lytic bacteriophage T2 | Quantification of lytic T2 phage concentration using titration techniques. |
| | 12. Virus haemagglutination assay | Quantification of influenza virus concentration using haemagglutination assays. |
| | 13. Identification of viral pathogens using monoclonal antibodies in enzyme-linked immunosorbent assay (ELISA) | Identify viral isolates using ELISA. |
| | 14. Antibacterial action of lysozyme | Assess lysozyme activity across egg white, saliva, and tears. |
| | 15. Assay for production of nitric oxide by macrophages | Measure immune activity of macrophages through nitric oxide production in response to interferon, LPS, and bacterial DNA. |

Scheduling and content remained constant across the 2010 and 2011 offerings of the course. The bacteriology module consisted of projects 1 to 10, spanning weeks 4 to 8 of the semester, and was assessed via Project Report 1. The immunology practical module consisted of projects 11 to 15, spanning weeks 10 to 12 of the semester, and was correspondingly assessed via Project Report 2.

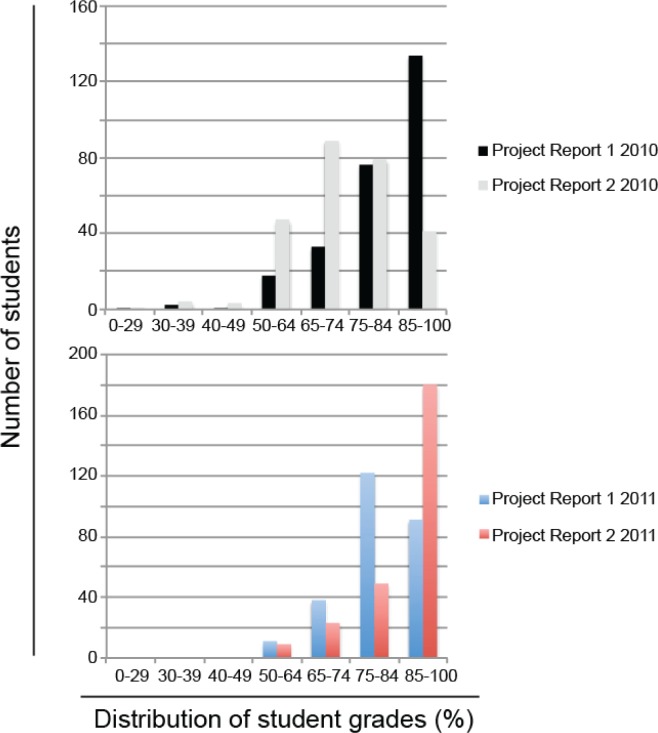

The project reports followed the structure of traditional scientific writing, with introduction, methods, results, and discussion sections (Table 5). Most of the marks were weighted toward the results and discussion sections, emphasizing the importance of data analysis and interpretation in scientific communication, consistent with learning objective 7 of the course (Table 2). Given that students were required to complete laboratory note-keeping weekly and to submit two project reports on which they received feedback, one would expect their performance to improve progressively across these reports. In 2010, however, students performed significantly worse (p < 0.05) on Project Report 2 than on Project Report 1 (Fig. 3). This deterioration in 2010 was attributed to a lack of internal consistency between the learning objectives of the two project reports. Although the marking criteria were the same for both reports (Table 5), the reports were based on notably different types of experiments and, correspondingly, on different types of experimental data for students to analyze and dissect. The first report involved qualitative descriptions and summaries of bacterial colony morphology on agar plates (project 8), whereas the second involved measuring, plotting, and interpreting quantitative ELISA absorbance data for viral identification (project 13) (Table 6). Although individualized feedback was provided on student performance against each marking criterion, together with a summary of common mistakes made across the whole cohort in the first report, students could not readily apply this feedback to the second report because what they were expected to achieve was not consistent across the two assignments.

TABLE 5.

Project report marking rubric.

| Criteria | Fail | Pass | High Pass |
|---|---|---|---|
| Formatting and Style (1 mark) | Grammar and spelling errors throughout AND Inconsistent visual layout lacking clarity (0 marks) | Minor grammar and spelling errors OR Inconsistent communication and consistent visual layout (0.5 mark) | Accurate grammar and spelling AND Clear communication and consistent visual layout (1 mark) |
| Introduction and Methods (1 mark) | Incomplete description of background information, aims, and hypotheses for project AND Description of methods incomplete and/or inaccurate (0 marks) | Incomplete description of background information, aims, and hypotheses for project OR Description of methods incomplete and/or inaccurate (0.5 mark) | Effective summary of background information of project and specific project aims and hypotheses AND Accurate and complete description of methods (1 mark) |
| Results (3 marks) | Incomplete explanation of results in text AND Inaccurate presentation of figures/tables AND Incomplete figure legends (0–1 marks) | Incomplete explanation of results in text OR Inaccurate presentation of figures/tables OR Incomplete figure legends (1.5–2.5 marks) | Clear explanation of results in text AND Clear presentation of figures/tables AND Detailed and complete figure legends (3 marks) |
| Discussion (5 marks) | Incomplete or inaccurate summary of results and conclusions AND Incomplete or inaccurate discussion of role of controls in experimentation AND Incomplete or inaccurate discussion of problems encountered (if any) and possible explanations (0–2 marks) | Incomplete or inaccurate summary of results and conclusions OR Incomplete or inaccurate discussion of role of controls in experimentation OR Incomplete or inaccurate discussion of problems encountered (if any) and possible explanations (2.5–4.5 marks) | Clear and concise summary of results and conclusions AND Clear discussion of role of controls in experimentation AND Clear discussion of problems encountered (if any) and possible explanations (5 marks) |
| TOTAL MARK OUT OF 10 | | | |

The same marking rubric was applied in the moderated marking of Project Reports 1 and 2 in both 2010 and 2011 offerings of MICR2000. Students were provided feedback on their performance in each criterion for each Project Report.
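The rubric in Table 5 amounts to a lookup from criterion and performance band to a mark range. The Python sketch below encodes those ranges (transcribed from Table 5) and checks a set of per-criterion marks before summing them to the total out of 10. The half-mark granularity is our inference from the 0.5-mark steps in the table, and the names are hypothetical; this is an illustration, not the teaching team's marking tool.

```python
# (low, high) mark range for each criterion and band, transcribed from Table 5.
RUBRIC = {
    "Formatting and Style": {"Fail": (0, 0), "Pass": (0.5, 0.5), "High Pass": (1, 1)},
    "Introduction and Methods": {"Fail": (0, 0), "Pass": (0.5, 0.5), "High Pass": (1, 1)},
    "Results": {"Fail": (0, 1), "Pass": (1.5, 2.5), "High Pass": (3, 3)},
    "Discussion": {"Fail": (0, 2), "Pass": (2.5, 4.5), "High Pass": (5, 5)},
}

# Highest achievable total: the top of every criterion's High Pass range.
MAX_TOTAL = sum(max(high for _, high in bands.values()) for bands in RUBRIC.values())


def band_for(criterion: str, mark: float) -> str:
    """Return the performance band that a mark falls into for one criterion."""
    if not float(mark * 2).is_integer():
        # Assumption: marks are awarded in whole or half-mark steps.
        raise ValueError(f"{mark} is not a whole or half mark")
    for band, (low, high) in RUBRIC[criterion].items():
        if low <= mark <= high:
            return band
    raise ValueError(f"{mark} is outside every band for {criterion}")


def total_mark(marks: dict[str, float]) -> float:
    """Validate one mark per criterion against the rubric, then sum them."""
    if set(marks) != set(RUBRIC):
        raise ValueError("exactly one mark per rubric criterion is required")
    for criterion, mark in marks.items():
        band_for(criterion, mark)  # raises ValueError for an invalid mark
    return sum(marks.values())


# Hypothetical report marks, not data from this study.
report = {"Formatting and Style": 1, "Introduction and Methods": 0.5,
          "Results": 2.5, "Discussion": 3.5}
print(total_mark(report), "out of", MAX_TOTAL)
```

Encoding the bands once and reusing them for both reports is one way to make the "same rubric" guarantee mechanically checkable.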

FIGURE 3.

Distribution of student performance in Project Reports across 2010 (n = 265) and 2011 (n = 264) offerings of MICR2000.

TABLE 6.

Alignment of assessment learning outcomes between practical project reports across 2010 and 2011 offerings of MICR2000.

| Assignment | Topic | Experimental Data to Be Analyzed and Presented | Alignment in Learning Outcomes Across Project Reports |
|---|---|---|---|
| 2010 Project Report 1 | 8. Isolation of Pseudomonas aeruginosa from garden soil | Qualitative description of colony morphology on agar plates; results of Gram staining and biochemical testing | No |
| 2010 Project Report 2 | 13. Viral identification through ELISA | Quantitative measurement of ELISA absorbance readings; statistical measures of mean and standard deviation across experimental replicates | |
| 2011 Project Report 1 | 4. Bacterial antibiotic sensitivity testing | Quantitative measurement of zones of inhibition for different antibiotics against different bacterial strains; statistical measures of mean and standard deviation across experimental replicates | Yes |
| 2011 Project Report 2 | 14. Antibacterial action of lysozyme | Quantitative measurement of zones of inhibition for different lysozyme-containing solutions against different bacterial strains; statistical measures of mean and standard deviation across experimental replicates | |
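The alignment column in Table 6 rests on whether consecutive reports ask students to analyze the same kind of data. One way to make that comparison explicit, sketched in Python below, is to tag each report with its data-analysis demands and compute the overlap (Jaccard similarity) between each year's two reports. The tags are our own hypothetical shorthand paraphrasing Table 6, and the Jaccard measure is an illustrative choice, not a method used in the study.

```python
# Data-analysis demands of each year's two project reports, paraphrased from Table 6.
# The tag wording is hypothetical shorthand, not the study's terminology.
REPORT_DEMANDS = {
    2010: (
        {"qualitative colony morphology", "Gram stain and biochemical results"},
        {"quantitative absorbance readings", "mean and SD across replicates"},
    ),
    2011: (
        {"quantitative zones of inhibition", "mean and SD across replicates"},
        {"quantitative zones of inhibition", "mean and SD across replicates"},
    ),
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


for year, (report_1, report_2) in sorted(REPORT_DEMANDS.items()):
    print(year, jaccard(report_1, report_2))
```

A score of 0 means feedback on the first report targets skills the second report does not exercise, whereas a score of 1 means every data-analysis demand carries over.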

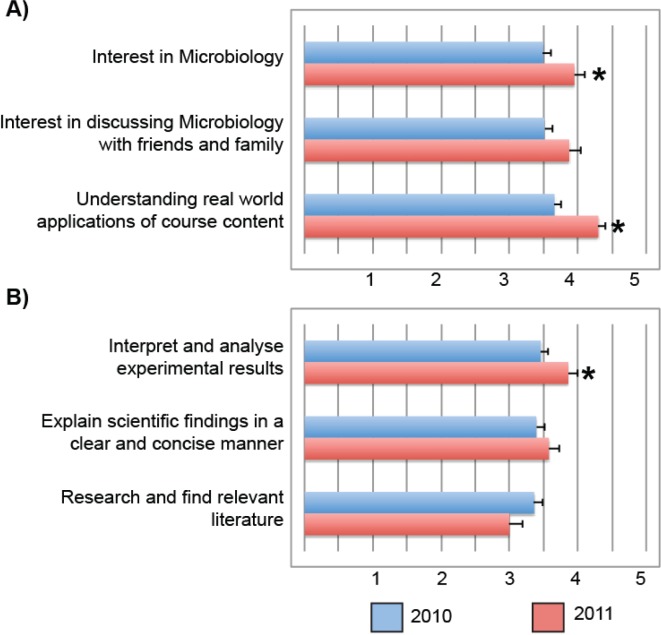

To address this issue in 2011, internal consistency in the types of experimental data to be analyzed was established between the two project reports. Project Report 1 involved determining bacterial antibiotic sensitivity and identifying resistant strains (project 4), and Project Report 2 involved determining the concentration of lysozyme across a number of naturally occurring substances and bodily fluids (project 14). Although these projects covered disparate topics in microbiology, both required students to measure, analyze, and graphically present quantitative data (Table 6). Given the time and resource constraints of marking assignments in high-enrollment courses with over 400 students, the teaching team could not provide more individualized feedback on Project Report 1 in 2011 than in 2010. However, because the expected learning objectives were internally consistent across Project Reports 1 and 2 in 2011, students could apply the feedback they received more effectively to the second report. This interpretation is supported by the significant improvement in student marks in Project Report 2 compared with Project Report 1 in 2011 (p < 0.05) (Fig. 3). In addition to improved performance across assessment items, 2011 students reported significantly greater learning gains than 2010 students on the ASAP survey instrument in their interest in microbiology, their understanding of the relevance of course content to real-world applications, and their ability to interpret and analyze experimental data (Fig. 4). These results suggest that internally aligning learning objectives across assessment items improves the efficiency with which feedback is delivered to students and improves student learning gains in large undergraduate courses.

FIGURE 4.

Student responses to the Attitudes and Skills After Practicals (ASAP) survey instrument. Students were invited to respond voluntarily to surveys about their perceived learning gains in (A) attitudes toward microbiology and (B) scientific skills, separately in 2010 (n = 90) and 2011 (n = 43). Student ratings of learning gains were quantified as follows: 1 = No Gain; 2 = Little Gain; 3 = Moderate Gain; 4 = Good Gain; 5 = Great Gain. Bars represent mean ± standard error of the mean (SEM). *Denotes a statistically significant difference between student responses in the 2010 and 2011 offerings of MICR2000, as determined by the Mann-Whitney U test (p < 0.05).
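Figure 4 summarizes each survey item as mean ± SEM. For reference, a minimal Python sketch of that summary for a list of 1–5 ratings follows. It uses the sample standard deviation (n − 1 denominator), the usual convention but an assumption here because the caption does not specify it; the function name and example ratings are hypothetical.

```python
import math
from statistics import mean, stdev


def mean_and_sem(ratings: list[int]) -> tuple[float, float]:
    """Mean and standard error of the mean (sample SD / sqrt(n)) for 1-5 ratings."""
    if len(ratings) < 2:
        raise ValueError("at least two ratings are needed to estimate the SEM")
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    return mean(ratings), stdev(ratings) / math.sqrt(len(ratings))


# Hypothetical ratings for one survey item, not data from this study.
m, sem = mean_and_sem([3, 4, 4, 5, 3, 4, 2, 4])
print(f"{m:.2f} ± {sem:.2f}")
```

Because the SEM shrinks with the square root of n, the smaller 2011 sample (n = 43) yields wider error bars than the 2010 sample (n = 90) for the same spread of ratings.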

Using multiple midsemester exams as promoters of feedback

In addition to the project reports, the midsemester exam was the most significant piece of assessment in the semester before the final exam. In the 2010 offering of MICR2000, the midsemester exam was worth 25%. It comprised 15 multiple-choice questions and 10 short-answer questions, each worth 1% (Table 7). Multiple-choice questions (MCQs) allowed a substantial portion of the exam to be marked automatically by computer. This is important for marking over 400 exam papers efficiently and returning timely feedback to students. Sample MCQs and short-answer questions used in the 2010 and 2011 offerings of the course are shown in Table 8. However, it is difficult to provide in-depth feedback on student performance in MCQs. These questions tend to have a narrow focus and frequently reward memorization of specific facts rather than a broad understanding of a discipline area (2). Moreover, releasing the answers to these questions prevents the teaching team from reusing them across student cohorts. Short-answer questions, on the other hand, are better suited to assessing in-depth understanding of a topic (2). They can also provide rich feedback to students on their performance against a model written response. Accordingly, the feedback students received from the 2010 midsemester exam consisted of their marks for the MCQ and short-answer sections. Students also had the option of viewing their exam paper alongside a model-answer guide for the short-answer questions.

TABLE 7.

Comparison of midsemester examinations between 2010 and 2011 offerings of MICR2000.

| | 2010 | 2011 |
|---|---|---|
| Total weighting | One 25% exam in Week 6 | Two 15% exams in Weeks 5 and 9 |
| Multiple-choice questions (MCQs) | 15 MCQs worth 1% each (15%) | 10 MCQs worth 0.5% each (5%) in each exam |
| Short-answer questions (SAQs) | 10 SAQs worth 1% each (10%) | 5 SAQs worth 2% each (10%) in each exam |
| Examinable material | 16 lectures and practical content | 10 lectures and practical content for each midsemester exam |
| Feedback obtained | Individual performance in MCQ and SAQ sections, as well as model answer guides for SAQs | Individual performance in MCQ and SAQ sections, as well as model answer guides for SAQs across both midsemester exams |

Both years utilized a common pool of multiple-choice and short-answer questions, but differed in frequency, weighting, distribution of marks across question styles, and amount of assessable content covered in each exam.
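The totals in Table 7 follow directly from the per-question weightings. The short calculation below makes that arithmetic explicit, using only figures taken from the table.

```latex
\begin{aligned}
\text{2010 (one exam):}\quad & 15 \times 1\% + 10 \times 1\% = 15\% + 10\% = 25\% \\
\text{2011 (each exam):}\quad & 10 \times 0.5\% + 5 \times 2\% = 5\% + 10\% = 15\% \\
\text{2011 (both exams):}\quad & 2 \times 15\% = 30\% \\
\text{SAQ share of exam marks:}\quad & \tfrac{10}{25} = 40\%\ (2010), \qquad \tfrac{10}{15} \approx 67\%\ (2011)
\end{aligned}
```

Each 2011 exam carried less weight than the single 2010 exam. However, the two 2011 exams together accounted for 30% of the total weighting rather than 25%. Short-answer questions also carried a larger share of the marks in each exam.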

TABLE 8.

Example multiple-choice questions (MCQ) and short-answer questions (SAQs) for midsemester and final exams in 2010 and 2011 offerings of MICR2000.

| Module | Question |
|---|---|
| 1. Introduction to Microbiology | MCQ: The structure that confers rigidity on the cell and protects it from osmotic lysis is known as the: A. Cell wall B. Cytoplasmic membrane C. Ribosome D. Periplasmic membrane E. Capsule |
| | SAQ: Describe the steps required to conduct a Gram stain and describe the appearance of the two types of bacteria identified by this procedure. |
| 2. Environmental Microbiology | MCQ: Which of the following statements about phototrophic microorganisms is not true? A. Phototrophs are the foundation of the carbon cycle B. Anoxygenic phototrophs generate ATP via cyclic phosphorylation C. All phototrophs produce chlorophyll or bacteriochlorophyll to be photosynthetic D. Carotenoids’ primary role is to absorb light energy E. Oxygenic phototrophs use water (H₂O) as an electron donor |
| | SAQ: Explain why nitrate (NO₃⁻) is the preferred electron acceptor in anaerobic respiration. |
| 3. Eukaryotic Microbes | MCQ: Fungal cells existing in the classic yeast form: A. Remain attached to one another at a constricted septation site B. Are long and highly polarized C. Have no obvious constrictions between connected cells D. Separate readily from each other E. Grow in a branching pattern |
| | SAQ: What are the five key features in a shuttle vector for use in Saccharomyces cerevisiae? |
| 4. Clinical Bacteriology | MCQ: The BCG vaccine is used in some countries to immunize against: A. Peptic ulcer disease caused by Helicobacter pylori B. Tuberculosis caused by Mycobacterium tuberculosis C. Diphtheria caused by Corynebacterium diphtheriae D. Pneumonia caused by Streptococcus pneumoniae E. Gastric cancer caused by Helicobacter pylori |
| | SAQ: A patient presents with a gastric ulcer, and you suspect a Helicobacter pylori infection is responsible. What diagnostic tests can you run to verify this, and how accurate are these tests? |
| 5. Virology | MCQ: What effects can prophage have on their host cell? A. The host cell dies when the prophage replicates. B. The prophage causes the host cell to undergo apoptosis. C. Prophage genes may induce the expression of toxins that increase the virulence of the host cell. D. Prophage genes inhibit replication of the host cell. E. Prophage genes may cause the cell to undergo meiosis. |
| | SAQ: Describe the primary components of innate and adaptive immunity in response to a viral infection. How do these components confer protection against viral infections? |
| 6. Immunology and Host-Pathogen Interactions | MCQ: Upon activation by a PAMP, dendritic cells in peripheral tissues: A. Migrate to the blood stream and then enter the thymus B. Undergo apoptosis to prevent excessive inflammatory damage C. Go into the lymphatic system, then into the blood stream via the thoracic duct D. Migrate in the lymph to the draining lymph node E. Upregulate expression of T cell receptors for display of peptide antigen |
| | SAQ: Describe the primary components of innate and adaptive immunity in response to a viral infection. How do they protect against infections? |

These feedback mechanisms for the 2010 midsemester exam were the only indicators of student progress before the final exam. They showed how well students were understanding and retaining course content under closed-book examination conditions, and the MICR2000 teaching team wanted to improve on this in the 2011 offering of the course. Two midsemester exams were introduced in 2011. These gave students additional opportunities to gauge the level of understanding required to perform well in the course's examinations. Each midsemester exam was worth 15%, with heavier weighting on short-answer questions (10%) than on MCQs (5%) (Table 7). Interestingly, the 2011 students performed significantly worse in Midsemester Exam 1 than the 2010 students did in their midsemester exam (p < 0.05) (Fig. 5(A)). This occurred despite the lower weighting (15% in 2011 versus 25% in 2010) and the reduced examinable material per midsemester exam (12 lectures and practicals in 2011, 15 lectures and practicals in 2010). Midsemester Exam 1 in 2011 took place in week 5 of the semester, whereas the 2010 midsemester exam took place in week 6. An extra week of preparation may therefore have improved the performance of the 2010 cohort. However, the smaller amount of examinable material in 2011 should also have reduced the preparation time required for the first 2011 midsemester exam. Moreover, no significant differences were observed between the 2010 and 2011 students in cumulative GPA or in performance in the prerequisite course for MICR2000 (Fig. 2). It is therefore difficult to attribute the disparity in midsemester examination performance solely to a cohort effect.

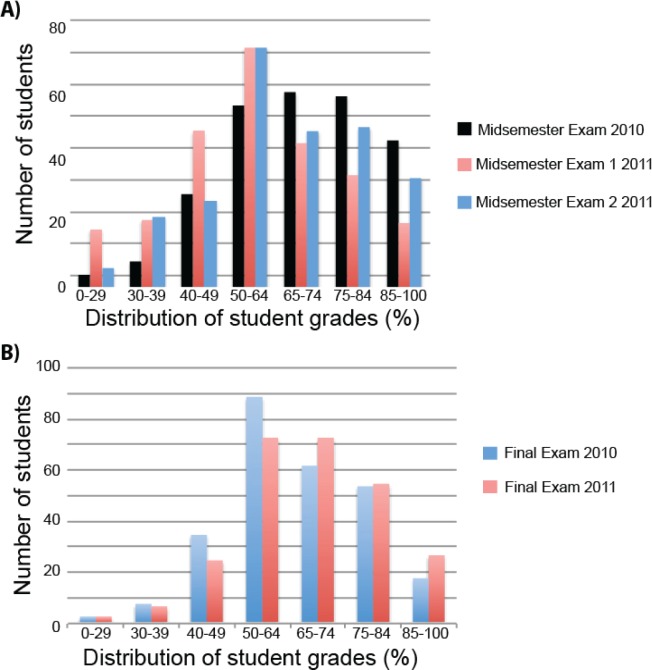

FIGURE 5.

Student performance in (A) midsemester and (B) final exams across 2010 (n = 265) and 2011 (n = 264) offerings of MICR2000.

Following Midsemester Exam 1 in 2011, students received the same feedback as the 2010 students on their performance in both multiple-choice and short-answer questions. In contrast to 2010, however, students could act on this feedback in another, similar piece of assessment within a short period of time, which strongly promotes assessment-driven learning gains (6). The 2011 Midsemester Exam 2 closely resembled Midsemester Exam 1 in its spread of multiple-choice and short-answer questions assessing different modules within the course. Students were given the chance to apply the feedback they had received on exam time management and on structuring written responses to short-answer questions. Their performance then improved significantly from 2011 Midsemester Exam 1 to Midsemester Exam 2 (p < 0.05) (Fig. 5(A)).

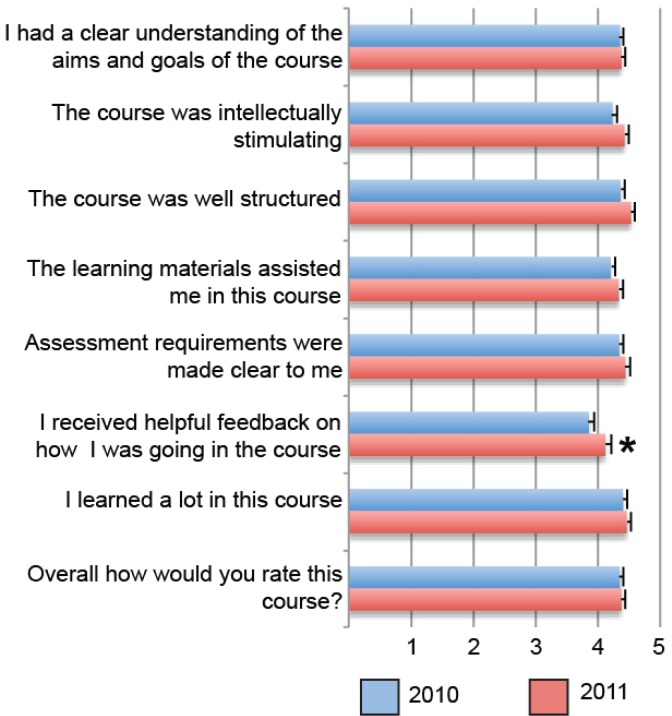

The end-of-semester final exam assessed all modules covered in the course. It consisted of 30 multiple-choice questions, 10 short-answer questions worth two marks each, and five in-depth short-answer questions worth four marks each. The same final exam format was used in 2010 and 2011, yet student performance in the final exam was significantly higher in 2011 than in 2010 (p < 0.05) (Fig. 5(B)). The additional midsemester exam in 2011 gave students an extra assessment item to help them prepare for the final examination. SECaT survey data from the two years of the study further support the notion that the additional feedback from two midsemester exams bolstered student confidence and performance in the final exam. Mean student responses to the statement “I received helpful feedback on how I was going in the course” in the standardized SECaT survey rose from 3.8 in 2010 to 4.1 in 2011 (p < 0.05) (Fig. 6). Moreover, a qualitative thematic analysis examined responses to the optional open-ended question “What were the best aspects of this course?” In 2011, 87% of respondents who answered this question identified well-structured assessment and feedback as the strongest component of MICR2000.

FIGURE 6.

Student Evaluation of Course and Teaching (SECaT) scores across the 2010 and 2011 offerings of MICR2000. Students were invited to respond voluntarily to surveys evaluating teaching in MICR2000 in 2010 (n = 108) and 2011 (n = 87). The surveys used the standardized university-wide SECaT survey instrument. Student responses were recorded on a 5-point Likert scale and coded as follows: 1 = Strongly Disagree; 2 = Disagree; 3 = Neutral; 4 = Agree; 5 = Strongly Agree. Bars represent mean ± standard error of the mean (SEM). *Denotes a statistically significant difference between student responses for the 2010 and 2011 offerings of MICR2000, as determined by the Mann-Whitney U test (p < 0.05).
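The Figure 6 caption describes how Likert responses were coded and then summarized as mean ± SEM. A minimal Python sketch of that summary follows. It assumes the SEM is computed from the sample standard deviation (with an n - 1 denominator) divided by √n, since the caption does not state which estimator was used. The function name and the example responses are hypothetical.

```python
import math
import statistics
from typing import Sequence

# Likert coding taken from the Figure 6 caption.
LIKERT_CODES = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def mean_and_sem(labels: Sequence[str]) -> tuple[float, float]:
    """Return (mean, standard error of the mean) for Likert-coded responses.

    Assumes the SEM is the sample standard deviation (n - 1 denominator)
    divided by sqrt(n); the caption does not state which estimator was used.
    """
    scores = [LIKERT_CODES[label] for label in labels]
    if len(scores) < 2:
        raise ValueError("at least two responses are needed to estimate the SEM")
    mean = statistics.fmean(scores)
    sem = statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, sem


if __name__ == "__main__":
    # Hypothetical responses to a single SECaT item; not data from this study.
    responses = ["Agree", "Agree", "Strongly Agree", "Neutral", "Agree", "Disagree"]
    mean, sem = mean_and_sem(responses)
    print(f"mean = {mean:.2f} ± {sem:.2f} (SEM), n = {len(responses)}")
```

The bars in Figures 4 and 6 summarize responses with this kind of mean ± SEM. The significance markers, by contrast, come from a rank-based test on the same coded responses.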

DISCUSSION

The observations made in this study across two offerings of MICR2000 point to two changes that help bolster student confidence and academic performance in an introductory microbiology course. The first is improving the internal consistency of assessment. The second is increasing the number of assessment tasks constructively aligned to the course's learning activities and objectives. Providing students with multiple attempts at multimodal assessment tasks has previously been shown to improve student performance and knowledge retention (4, 9). It also inherently increases the number of opportunities for students and instructors to obtain formative and summative feedback (21). Alongside their improved performance in progressive course assessment, MICR2000 students also reported improved perceptions of their skills and interest in microbiology. This indicates that students responded positively to these assessment practices when the course design was constructively aligned. A similar application of constructive alignment in biochemistry provides further support: there, aligning case-based learning activities to problem-solving learning outcomes led to improved student satisfaction and academic performance (7).

Moreover, the progressive assessment items were internally consistent in their criteria, standards, and alignment with course learning outcomes. Students were therefore able to readily apply lessons from previous feedback to the next learning activity, which is a crucial component of assessment practices that effectively support student learning (6). Consistent criteria and requirements across multiple assessment items also eased the marking burden. Instructors could define marking standards applicable to several assessment items at once, which also made feedback more transparent to students. In this way, the MICR2000 teaching team was able to administer, mark, and provide feedback on an additional assessment item in 2011 without dramatic increases in workload or resource demands.

Constructive alignment offers clear benefits in the design of science courses. However, the initial workload required to reconceptualize each course within a cohesive program has deterred many instructors from applying it systematically. Consensus concept frameworks have now been developed for physics (8), chemistry (12), biochemistry (14), and, more recently, microbiology (11). Science instructors can therefore more readily define their course and program learning outcomes based on their discipline's concept-driven curriculum. They can also justify this design in line with the ongoing push for evidence-based teaching (1, 19).

Our design of MICR2000 serves as a proof-of-concept demonstration of this approach. We defined learning outcomes consistent with the concepts and skills outlined in the ASM concept-driven curriculum guidelines (11). We then kept this core curriculum constant across two years so that changes in student perception and performance could be examined in response to different assessment practices. Only then were we able to apply and evaluate student-centered course design (21) and constructively align the course's systems for assessment and feedback with its learning objectives. This study provides some insight into how best-practice assessment guidelines can be implemented and aligned with existing microbiology concept frameworks. Such alignment can help validate and enhance the delivery of large undergraduate science courses.

Acknowledgments

The authors would like to acknowledge the rest of the MICR2000 teaching team, Dr. Gene Tyson, Dr. James Fraser, and Dr. Kate Stacey, and the MICR2000 practical tutors. The entire teaching team was instrumental in the delivery of the practical laboratory classes, prompt marking of assessment items, and overall organization of the course. This study was supported by the School of Chemistry and Molecular Biosciences, the University of Queensland, and a Teaching and Learning Grant from the University of Queensland Faculty of Science. The present manuscript, “How much is too much assessment? Insight into assessment-driven student learning gains in large-scale undergraduate microbiology courses,” has been read and approved by all co-authors. This manuscript is the original work of the project team and is not under review at any other publication. The authors declare that there are no conflicts of interest. Sources of support: University of Queensland Faculty of Science Teaching and Learning Grant 2010 and internal funding provided by the School of Chemistry and Molecular Biosciences.

REFERENCES

1. American Association for the Advancement of Science. Vision and change in undergraduate biology education: a call to action. American Association for the Advancement of Science; Washington, DC: 2010. http://visionandchange.org/files/2010/03/VC_report.pdf.

2. Atherton JS. Teaching and learning; forms of assessment. 2011. http://www.learningandteaching.info/teaching/assess_form.htm.

3. Biggs J. Aligning teaching and assessing to course objectives. University of Aveiro, Oliveira de Azeméis, Portugal. 2003. http://www.josemnazevedo.uac.pt/proreitoria/docs/biggs.pdf.

4. Cook A. Assessing the use of flexible assessment. Assess Eval Higher Educ. 2001;26:539–549. doi: 10.1080/02602930120093878.

5. Duncan DB, Lubman A, Hoskins SG. Introductory biology textbooks under-represent scientific process. J Microbiol Biol Educ. 2011;12:143–151. doi: 10.1128/jmbe.v12i2.307.

6. Gibbs G, Simpson C. Conditions under which assessment supports students’ learning. Learn Teach Higher Educ. 2004–2005;1:3–31.

7. Hartfield PJ. Reinforcing constructivist teaching in advanced level Biochemistry through the introduction of case-based learning activities. J Learn Des. 2010;3:20–31.

8. Hestenes D, Wells M, Swackhamer G. Force concept inventory. Phys Teach. 1992;30:141–158. doi: 10.1119/1.2343497.

9. Karpicke JD, Roediger HL 3rd. The critical importance of retrieval for learning. Science. 2008;319:966–968. doi: 10.1126/science.1152408.

10. Knight JK, Wood WB. Teaching more by lecturing less. Cell Biol Educ. 2005;4:298–310. doi: 10.1187/05-06-0082.

11. Merkel S. The development of curricular guidelines for introductory microbiology that focus on understanding. J Microbiol Biol Educ. 2012;13:32–38. doi: 10.1128/jmbe.v13i1.363.

12. Mulford DR, Robinson WR. An inventory for alternate conceptions among first-semester general chemistry students. J Chem Educ. 2002;79:739–744. doi: 10.1021/ed079p739.

13. Queensland Tertiary Admissions Centre. Upgrading via tertiary study. Queensland Tertiary Admissions Centre. 2012. http://www.qtac.edu.au/InfoSheets/UpgradingViaTertiary.html.

14. Rowland SL, Smith CA, Gillam EM, Wright T. The concept lens diagram: a new mechanism for presenting biochemistry content in terms of “big ideas”. Biochem Mol Biol Educ. 2011;39:267–279. doi: 10.1002/bmb.20517.

15. SALG. Student assessment of their learning gains. 2008. http://www.salgsite.org/.

16. Smith AC, Marbach-Ad G. Learning outcomes with linked assessments—an essential part of our regular teaching practice. J Microbiol Biol Educ. 2010;11:123–129. doi: 10.1128/jmbe.v11i2.217.

17. The Boyer Commission on Educating Undergraduates in the Research University. Reinventing undergraduate education: a blueprint for America’s research universities. State University of New York, Boyer Commission on Educating Undergraduates in the Research University; Stony Brook, NY: 1998. http://www.niu.edu/engagedlearning/research/pdfs/Boyer_Report.pdf.

18. Thistlethwaite J. Interprofessional education: a review of context, learning and the research agenda. Med Educ. 2012;46:58–70. doi: 10.1111/j.1365-2923.2011.04143.x.

19. U.S. Department of Education. A test of leadership—charting the future of U.S. higher education. Commission on the Future of Higher Education, U.S. Department of Education; Washington, DC: 2006. http://www2.ed.gov/about/bdscomm/list/hiedfuture/reports/final-report.pdf.

20. von Below B, Hellquist G, Rodjer S, Gunnarsson R, Bjorkelund C, Wahlqvist M. Medical students’ and facilitators’ experiences of an Early Professional Contact course: active and motivated students, strained facilitators. BMC Med Educ. 2008;8:56. doi: 10.1186/1472-6920-8-56.

21. Wood WB. Innovations in teaching undergraduate biology and why we need them. Annu Rev Cell Dev Biol. 2009;25:93–112. doi: 10.1146/annurev.cellbio.24.110707.175306.