Abstract

PURPOSE

The patient-centered medical home is often discussed as though there exist either traditional practices or medical homes, with marked differences between them. We analyzed data from an evaluation of certified medical homes in Minnesota to study this topic.

METHODS

We obtained publicly reported composite measures of quality of care for diabetes and vascular disease for all clinics in Minnesota from 2008 to 2010. For the first 120 adult-serving clinics certified as health care homes (HCHs), we measured the extent of practice systems, and their change over the same period, with the Physician Practice Connections Research Survey (PPC-RS), a self-report tool similar to the National Committee for Quality Assurance standards for patient-centered medical homes. Performance measures were compared between these clinics and 518 non-HCH clinics in the state.

RESULTS

Among the 102 clinics for which we had precertification and postcertification scores for both the PPC-RS and either diabetes or vascular disease measures, the mean increase in systems score over 3 years was an absolute 29.1% (SD = 16.7%) from a baseline score of 38.8% (SD = 16.5%, P ≤.001). The mean proportion of patients per clinic who met all components of the optimal diabetes measure improved by an absolute 2.1% (SD = 5.5%, P ≤.001), and the mean proportion meeting all components of the optimal cardiovascular disease measure improved by 4.4% (SD = 7.5%, P ≤.001), but all measures varied widely among clinics. Mean performance rates of HCH clinics were higher than those of non-HCH clinics, but there was extensive overlap, and neither group changed much over this time period.

CONCLUSIONS

The extensive variation among HCH clinics, their overlap with non-HCH clinics, and the small change in performance over time suggest that medical homes are not similar, that change in outcomes is slow, and that there is a continuum of transformation.

Keywords: patient-centered medical home, primary care, change, organizational, practice-based research, certification

INTRODUCTION

There has been controversy about the definition, measurement, and recognition of primary care clinics as patient-centered medical homes (PCMHs).1,2 It is not surprising that such a recent development, especially one with widely divergent high expectations from different perspectives, would be so ambiguous and full of unanswered questions about what a PCMH is, how it might be measured, what its effects are, and how a traditional primary care practice best becomes one.1–3 Although the PCMH recognition program of the National Committee for Quality Assurance (NCQA) was the first and is still the largest program for identifying PCMHs, several other national programs and local demonstration projects have come up with their own operational definitions.4

Advocates of medical homes often describe them as uniquely and markedly different from traditional practices, in both process and outcomes.5 As a result, medical homes are often compared with non–medical homes. The research literature, on the other hand, is more cautious about this difference. For example, the evaluators of the National Demonstration Project (NDP), one of the first to provide scientific information about medical home practices, emphasized that the transformation process was a long and slow one.6,7 They stressed that “this transformation requires tremendous effort and motivation, and benefits from external support.”

In 2011, we published a study of one large medical group’s change in quantitative performance measures over time, including the point at which it obtained NCQA recognition for all of its primary care clinics as Level 3 PCMHs, the highest designation possible.8 These clinics collectively demonstrated a 1% to 3% increase per year in patient satisfaction and a 2% to 7% increase per year in performance on measures of quality of care for diabetes, coronary artery disease, preventive services, and generic medication use. We concluded that the rate of improvement in outcomes was slow and not always faster than that of other groups that had not applied for PCMH recognition. In this study, we evaluated the extent to which there is a continuum of organizational change and patient outcomes among practices designated as medical homes, with substantial variation and room to further improve, even after achieving PCMH designation. As part of our grant to study the transformation of primary care clinics in Minnesota into what here are called health care homes (HCHs), we collected data about their change in both practice systems and performance measures over time spanning their certification, as well as performance measures for comparison clinics that did not become certified. In 2008, Minnesota legislated that the state Department of Health establish and operate a process to test and certify primary care practices as HCHs to be eligible for special payments from both state medical programs and health plans. At the time of this analysis, 132 clinics had achieved certification in 2010–2011, of which 120 served adults or all ages and 12 were limited to children. All of these clinics agreed to participate in this study.

METHODS

Data for this study came from TransforMN, an Agency for Healthcare Research and Quality–funded study of HCH transformation in Minnesota. The standards for certification, as established by the Minnesota Department of Health and the Minnesota Department of Human Services with extensive community input, were as follows:

Continuous access and communication between HCH and the patient and family;

Electronic searchable registry to identify care gaps and manage services;

Care coordination for patient- and family-centered care;

Care plans that involve patients with chronic or complex conditions and their family; and

Continuous improvement in experience, health outcomes, and cost-effectiveness.

Application for HCH certification is voluntary but limited to clinics providing primary care. The application requires documentation of meeting the above standards, which is then audited by an on-site visit that includes chart audits and interviews with both staff and randomly selected patients about their experience with the clinic. The results are submitted to a Community Certification Advisory Committee, which makes a recommendation to the Commissioner of Health. Demonstration of improvement on standardized performance measures as well as attainment of progressively more stringent structural and functional standards are part of annual recertification.

The certification process began in January 2010, with 86 clinics completing certification in the first year. By the time this study completed enrollment in October 2011, a total of 132 of the 728 primary care clinics in the state were certified, and all agreed to participate in this study. These clinics were distributed throughout the state, with two-thirds being part of 3 large medical groups (typical of Minnesota primary care practice); 12 were pediatrics practices, whereas the rest served adults or all age-groups. Nearly all practices applying became certified.

The physician leaders of each organization with certified clinics were contacted by an investigator (L.I.S.), and all agreed to have their clinics participate in this study. To engender trust and honest responses to study surveys, they were assured that all their responses would be kept confidential.

Data Collection

Clinic descriptive data were obtained from a questionnaire completed by administrative leaders at each clinic or the central office in large groups.

Practice systems were measured by a questionnaire similar to that used by NCQA to recognize clinics as medical homes. We call this instrument the Physician Practice Connections Research Survey (PPC-RS) because it is a slightly modified version of the one originally created by NCQA in 2008; it has been tested for reliability.9 That test demonstrated that obtaining the answers from a clinic's designated physician leader was as reliable as summarizing information from various other clinic respondent groups. Physician leaders tended to underreport systems rather than over-report them, although this test was conducted before initiatives that paid clinics extra for these capabilities.

We expanded the response options for the PPC-RS so that the questions asked both about presence of a system and whether it worked well. Examples of these questions are shown in Table 1. For this study, we made a further modification so that the respondent needed to answer each question twice—once for the current state of the clinic and a second time for 3 years prior, which for most would be at least 2 years before being certified. If a current leader was not present 3 years ago, he/she identified another physician to complete the entire questionnaire. Each question received various points depending on whether the system was said to be missing (0), to be present but needs improvement (0.5), or to be present and works well (1.0). Each of the 105 questions was given equal weight, so the total score represented the proportion of points awarded out of a potential total of 105. In addition to a total score, there are subscores corresponding to 5 of the 6 domains of the chronic care model.10

Table 1.

Sample Questions From the Physician Practice Connections Research Survey, for Various Chronic Care Model Domains

| Domain | Sample Question |
|---|---|
| Decision Support | Does your clinic have a system to provide alerts about clinically important abnormal test results to the doctors at the time they are received? |
| Clinical Information System | Does your clinic maintain a registry (a list of patients with a particular condition along with associated clinical data for each patient) for diabetes? |
| Self-Management Support | Does your clinic have a systematic approach to identify and remind patients with chronic illnesses who are due for a follow-up visit? |
| Delivery System Redesign | Does your clinic have nonphysician staff who are specially trained and designated to educate patients in managing their illness? |
| Health Care Organization | Does your clinic conduct or participate in formal quality improvement activities? |

Note: The answers for each question were No (scored as 0), Yes, but needs improvement (0.5), or present and works well (1.0).
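The scoring rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's scoring program: the answer labels (`missing`, `needs_improvement`, `works_well`) and the function names are hypothetical, and because the assignment of the 105 questions to chronic care model domains is not listed here, the domain mapping is left to the caller.

```python
from typing import Dict, List

# Points per answer, as described for the PPC-RS. The string labels are
# hypothetical stand-ins for the survey's three answer options.
POINTS = {"missing": 0.0, "needs_improvement": 0.5, "works_well": 1.0}


def ppc_rs_score(responses: List[str]) -> float:
    """Score a set of equally weighted questions as a percentage (0-100).

    The maximum is 1 point per question (105 points for the full survey),
    so the score is the share of possible points that were awarded.
    """
    if not responses:
        raise ValueError("at least one response is required")
    awarded = sum(POINTS[answer] for answer in responses)
    return 100.0 * awarded / len(responses)


def domain_scores(by_domain: Dict[str, List[str]]) -> Dict[str, float]:
    """Score each chronic care model domain separately (subscores)."""
    return {domain: ppc_rs_score(answers) for domain, answers in by_domain.items()}


def change_score(current: List[str], three_years_ago: List[str]) -> float:
    """Absolute change, in percentage points, between the two reporting periods."""
    return ppc_rs_score(current) - ppc_rs_score(three_years_ago)


if __name__ == "__main__":
    # Invented example answers for a 105-question survey.
    now = ["works_well"] * 40 + ["needs_improvement"] * 30 + ["missing"] * 35
    before = ["works_well"] * 20 + ["needs_improvement"] * 20 + ["missing"] * 65
    print(round(ppc_rs_score(now), 1))           # 52.4
    print(round(change_score(now, before), 1))   # 23.8
```

Because every question carries the same weight, a subscore is simply the same calculation restricted to that domain's questions.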

We sent an e-mail to each leader requesting completion of the questionnaire, with a link to the PPC-RS survey on the Web. Nonrespondents were sent another e-mail reminder weekly thereafter. Beginning with the second weekly reminder, the project principal investigator (L.I.S.) called the leader and followed up until either the nonrespondent completed the questionnaire or it became clear that completion was unlikely.

We obtained performance rates from Minnesota Community Measurement for 2008 to 2010 for all clinics with such data in the state, both certified and not.11 This organization has been reporting standardized rates publicly since 2004 on its Web site (http://www.mnhealthscores.org) and at the clinic level since 2008. We selected composite all-or-none measures for diabetes and vascular care. To be counted as a success for the optimal diabetes measure, each patient with diabetes needed to have a hemoglobin A1c (HbA1c) level of 7% or lower, a blood pressure of 130/80 mm Hg or lower, and a low-density lipoprotein cholesterol level of 100 mg/dL or lower, and also had to have documentation that they did not smoke and took aspirin. No partial credit was given. The optimal vascular composite measure was similar except that no HbA1c level was needed. Such all-or-none measures have been recommended by Nolan and Berwick12 as providing better spurs to comprehensive, patient-centered improvement.
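The all-or-none logic can be sketched as follows. This is a simplified illustration, not the Minnesota Community Measurement specification: the `Patient` fields and function names are hypothetical, the thresholds follow the wording above ("or lower"), and the official measure may define inequalities, exclusions, and aspirin eligibility differently.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Patient:
    # Hypothetical fields; the official measure defines the actual data elements.
    hba1c: Optional[float]       # percent; may be None for the vascular measure
    systolic: float              # mm Hg
    diastolic: float             # mm Hg
    ldl: float                   # mg/dL
    nonsmoker_documented: bool
    takes_aspirin: bool


def meets_optimal(p: Patient, require_hba1c: bool) -> bool:
    """All-or-none: the patient counts only if every component is met."""
    checks = [
        p.systolic <= 130 and p.diastolic <= 80,  # blood pressure 130/80 mm Hg or lower
        p.ldl <= 100,                              # LDL cholesterol 100 mg/dL or lower
        p.nonsmoker_documented,
        p.takes_aspirin,
    ]
    if require_hba1c:  # diabetes measure only; the vascular measure omits HbA1c
        checks.append(p.hba1c is not None and p.hba1c <= 7.0)
    return all(checks)


def composite_rate(patients: Iterable[Patient], require_hba1c: bool = True) -> float:
    """Percentage of a clinic's patients meeting every component (no partial credit)."""
    patients = list(patients)
    if not patients:
        raise ValueError("clinic has no eligible patients")
    met = sum(meets_optimal(p, require_hba1c) for p in patients)
    return 100.0 * met / len(patients)
```

A patient who misses any single component, for example an LDL level of 110 mg/dL with everything else in range, contributes nothing to the clinic's rate. This is what makes all-or-none composites stricter than averaging the individual components.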

Analysis

We calculated measures of central tendency and dispersion for clinic characteristics, for precertification and postcertification practice systems scores, overall PPC and by subscale, and for the diabetes and vascular composite measures from 2008 through 2010 dates of service. Paired t tests assessed the extent to which systems scores (postcertification vs precertification) and performance rates (2010 vs the earlier of 2008 or 2009) improved over time. We used Pearson correlation coefficients to estimate the strength of the linear association between changes in practice systems and performance measures. General linear models separately predicted systems scores (precertification, post-certification, change) and performance measures (2008, 2009, 2010, change) from clinic location (Twin Cities metropolitan area or not) and size (350–549, 550–999, ≥1,000 patient visits per week vs <350 patient visits per week). The omnibus test of significance for each predictor identified significant differences in systems scores or performance rates by clinic characteristics, which were interpreted using estimated regression coefficients. We estimated 2 series of general linear models predicting change in performance for diabetes or cardiovascular measures. In each series, 3 models estimated how well change in performance was predicted by (1) change in systems scores and precertification systems scores, (2) systems change, precertification systems, and their interaction, and (3) systems change, precertification systems, change by precertification interaction, and clinic size and location.
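The two simplest analyses above, the paired t test and the Pearson correlation, can be sketched with the Python standard library. This is an illustrative sketch rather than the study's analysis code. It returns the t statistic and degrees of freedom but not a P value, which requires a t distribution from a statistics package, and it does not reproduce the general linear models. As a rough check under an assumed n of 102 clinics, the reported mean PPC-RS change of 29.1 points (SD 16.7) corresponds to t ≈ 17.6, consistent with P ≤.001.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(post: Sequence[float], pre: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-clinic (post - pre) differences."""
    if len(post) != len(pre) or len(post) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(post, pre)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def t_from_summary(mean_change: float, sd_change: float, n: int) -> float:
    """The same paired t statistic, computed from the mean and SD of the differences."""
    return mean_change / (sd_change / math.sqrt(n))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation, e.g. change in PPC-RS score vs change in a composite rate."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 values")
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Assumed n = 102 clinics; mean change and SD taken from Table 3.
    print(round(t_from_summary(29.1, 16.7, 102), 1))  # 17.6
```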

We used 2 general linear mixed models that incorporated 2008–2010 diabetes (or vascular) composite data from all reporting clinics in the state to predict performance rates from the HCH status of each clinic (HCH vs non-HCH), performance measure year (2010 and 2009 vs 2008), and the interaction of these factors. A planned comparison tested whether HCH clinics performed better than non-HCH clinics on diabetes or vascular care in 2010, and the interaction term assessed whether the rate of performance increase differed between the 2 types of clinics.

The study was reviewed, approved, and monitored by a local institutional review board.

RESULTS

Of the 120 adult certified HCH clinics, 111 (92.5%) completed a PPC-RS questionnaire, but only 109 (90.8%) completed both current and precertification reports of the presence and function of various systems for use in this analysis. Of those 109 clinics, 101 also had results for at least 2 of the 3 years for optimal diabetes measures, permitting calculation of a change score for this measure, and 98 also had such results for the optimal vascular measure. Thus, the 102 clinics included in this analysis are those that had current plus precertification scores on the PPC-RS as well as 2010 and earlier rates for either diabetes or vascular care.

The characteristics of the HCH clinics in the study are shown in Table 2. Minnesota has very few independent clinics and few solo physicians, so 75% of certified HCHs were part of medical groups with more than 20 clinics and nearly all were owned by a health system, usually including a hospital. Although 77% of the clinics had 1 to 10 primary care physicians, nearly all had nurse practitioners or physician assistants, and all had electronic health records.

Table 2.

Characteristics of the Adult Certified Health Care Homes (N = 102)

| Characteristic | No. (%) |
|---|---|
| Location | |
| Metropolitan | 65 (63.7) |
| Nonmetropolitan | 37 (36.3) |
| Ownership | |
| Health system | 97 (95.1) |
| Health plan | 4 (3.9) |
| Physicians | 1 (1.0) |
| Medical services | |
| Primary care only | 40 (39.2) |
| Primary care and some specialty | 13 (12.8) |
| Multispecialty | 49 (48.0) |
| Primary care physicians, No. | |
| 1–3 | 13 (12.8) |
| 4–7 | 43 (42.2) |
| 8–10 | 22 (21.6) |
| ≥11 | 22 (21.6) |
| Nurse practitioners and physician assistants, No. | |
| 0 | 8 (7.8) |
| 1–3 | 58 (56.9) |
| ≥4 | 21 (20.6) |
| Clinics in medical group, No. | |
| 1 | 6 (5.9) |
| 2–4 | 6 (5.9) |
| 5–10 | 9 (8.8) |
| 11–20 | 2 (1.7) |
| ≥21 | 77 (75.5) |
| Patient visits/week | |
| <350 | 21 (20.6) |
| 350–549 | 27 (26.5) |
| 550–999 | 27 (26.5) |
| ≥1,000 | 27 (26.5) |
| Medical records | |
| Fully electronic | 94 (92.2) |
| Paper and electronic | 6 (5.9) |
| Paper only | 0 (0) |
| Patient insurance | Mean (SD) |
| Commercial | 63.3 (22.9) |
| Medicare | 17.3 (10.4) |
| Medicaid | 14.3 (15.0) |
| Uninsured | 3.5 (6.3) |

The PPC-RS systems scores and the optimal diabetes and vascular rates with standard deviations and ranges are listed in Table 3. The mean systems score for these clinics increased from 38.8% as of 3 years ago to 67.9% currently (P ≤.001), with equally large variations among the clinics at each time point. Overall rates on both performance measures did not change much over time in an absolute sense, but there was considerable variation among the clinics in each year and in change over time. Within the 3 large medical groups having 22 to 34 certified HCH clinics, there was as much variation in clinic scores on the PPC systems measure as for the smaller groups as a whole (SD = 13.9, 14.2, and 13.0 vs 12.9). For the diabetes measure, there was less variation within the large systems than among the others (SD = 4.0, 6.8, and 3.7 vs 9.3), but on the vascular measure, 1 large group had an SD similar to that of the smaller systems, whereas the other 2 had a lower value.

Table 3.

Practice Systems Scores and Quality Measure Rates for Diabetes and Vascular Disease

| Score or Measure | 3 Years Ago, Mean (SD) | 3 Years Ago, Range | Now, Mean (SD) | Now, Range | Change, Mean (SD) | Change, Range |
|---|---|---|---|---|---|---|
| PPC-RS score, pointsᵃ | 38.8 (16.5) | 10.0 to 81.0 | 68.0 (14.1) | 28.5 to 97.1 | 29.1 (16.7) | −1.0 to 62.9 |
| Health care organization | 61.3 (32.2) | 0.0 to 100.0 | 82.3 (22.6) | 0.0 to 100.0 | 21.1 (26.1) | 0.0 to 100.0 |
| Delivery system redesign | 24.0 (17.0) | 0.0 to 78.3 | 58.0 (20.3) | 13.0 to 100.0 | 34.0 (23.6) | −4.3 to 89.1 |
| Clinical information systems | 40.1 (20.9) | 4.8 to 100.0 | 73.6 (18.9) | 23.8 to 100.0 | 33.4 (20.7) | 0.0 to 73.8 |
| Decision support | 54.7 (23.1) | 6.7 to 100.0 | 80.1 (14.8) | 26.7 to 100.0 | 25.4 (19.7) | 0.0 to 76.7 |
| Self-management support | 39.1 (16.4) | 0.0 to 83.8 | 63.2 (16.2) | 0.0 to 91.9 | 24.1 (15.2) | −4.0 to 59.5 |
| Optimal diabetes rate, % | 22.0 (8.5) | 3.8 to 52.0 | 24.4 (7.9) | 5.9 to 41.4 | 2.1 (5.5) | −12.0 to 21.0 |
| Optimal vascular rate, % | 37.5 (9.8) | 10.0 to 57.9 | 41.6 (11.2) | 10.6 to 63.6 | 4.4 (7.5) | −15.7 to 27.1 |

PPC-RS = Physician Practice Connections Research Survey.

ᵃ Possible range of scores: 0 to 100.

Notes: Numbers of clinics for each score/measure ranged from 98 to 102, as some had missing data. Optimal diabetes rate = percentage of patients with diabetes meeting all thresholds for control of hemoglobin A1c, blood pressure, and lipids as well as aspirin use and tobacco abstinence. Optimal vascular rate = percentage of patients with heart disease meeting all thresholds for control of blood pressure and lipids as well as aspirin use and tobacco abstinence.

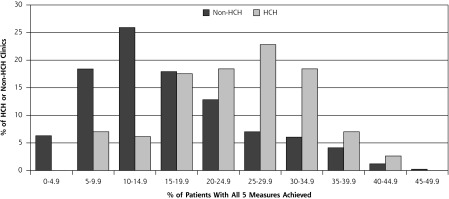

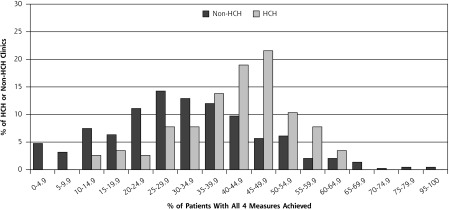

We also compared these HCH clinics with the 518 uncertified clinics in the state on performance rates. For diabetes, the certified clinics had a mean rate of 24.6% ± 8.3% in 2010, compared with 16.6% ± 9.3% for the 413 uncertified clinics with available performance data. For vascular care, the corresponding rates were 41.6% ± 11.5% vs 31.4% ± 16.0%. Both differences were significant at P ≤.001; however, there was substantial overlap between HCH and non-HCH clinics, as illustrated in Figures 1 and 2. Over time, improvement in both performance rates was actually greater among non-HCH clinics than among HCH clinics (P ≤.01).

Figure 1.

Rates of achievement of optimal diabetes measure among clinics in 2010 by Health Care Home status.

HCH = health care home.

Figure 2.

Rates of achievement of optimal vascular measure among clinics in 2010 by Health Care Home status.

HCH = health care home.

Although not large, the Pearson correlation coefficients for the association between change in PPC score and change in performance score among HCH clinics were highly significant, with a correlation of r = .26 (P = .008) for diabetes care and r = .30 (P = .003) for vascular care. For the diabetes composite, this correlation appeared to depend on the increase in low-density lipoprotein cholesterol and HbA1c control, whereas for vascular care, it depended solely on low-density lipoprotein cholesterol control (data not shown).

There were no systematic differences in PPC scores (precertification, postcertification, change) or care measures (2008, 2009, 2010, change) as a function of clinic location (urban, nonurban) or size (<350, 350–549, 550–999, ≥1,000 patient visits per week), with 2 exceptions. Midsized clinics (350–549 and 550–999 patient visits per week) had larger increases in their PPC scores (mean = 34.3, P = .01 and mean = 32.6, P = .02, respectively) than small clinics (<350, mean = 22.5) or large clinics (≥1,000, mean = 25.6, P = .41), and optimal diabetes care tended to increase more in nonurban clinics (mean = 3.9) than in urban ones (mean = 1.2, P = .02).

Finally, the multiple regression analysis showed that for every 10-point increase in a clinic's PPC score, there was a 0.9-point increase in its diabetes composite measure (P = .03) and a 2.4-point increase in its vascular composite measure (P <.001). When predicting the diabetes composite, this relationship was even stronger among clinics that had relatively low or moderate PPC scores 3 years earlier but was weak among those with the highest scores 3 years earlier. Controlling for urban location and size did not affect the relationships between change in PPC scores and diabetes or vascular composites.

DISCUSSION

We found large increases in practice systems among our sample of HCH clinics, but also very large variation among them in both current scores on systems and performance measures, as well as in change over time. HCH clinics on average had higher overall performance rates on diabetes and vascular disease measures than non-HCH clinics, but there was extensive overlap. Average change in performance over the past 3 years was small and greater for non-HCH clinics than for their HCH counterparts. This finding suggests that the difference in performance may be due to clinics with higher performance being more likely to apply for certification. It is possible, however, that HCH performance rates may have increased in 2011 and 2012, as outcomes likely lag behind changes in systems. We do know that the small changes in performance are not due to ceiling effects, as the publicly reported data currently available show many clinics achieving performance levels far above these mean rates.

These findings raise the question of whether the certification process drives change or certification provides a way for primary care clinics to distinguish themselves with a strong market signal (or both).13 In either case, we think that these large variations in systems and performance among clinics certified as HCHs and the overlap with non-HCH clinics strongly suggest that there is not an abrupt change in performance among clinics that become certified as medical homes. Although improvements in practice systems and culture are indeed likely to lead to desired improvements in performance measures over time, that relationship is unlikely to be either quick or homogeneous among medical homes. This pattern has been well illustrated and recognized in the NDP evaluation, where quality improvements were small over 26 months and no greater among clinics receiving extensive assistance than among those working on this goal on their own.14 Moreover, the 36 clinics in that NDP randomized controlled trial were self-selected volunteers that were all eager to make the transition to medical homes. The one thing that might speed the transition would be a large change in payment from fee for service to substantial financial incentives for the conversion and improved performance, something not true for either the NDP clinics or those HCHs in Minnesota.15–17

Besides suggesting caution in expectations about the speed of improved outcomes, these data suggest that it might be helpful to rank medical home clinics along a continuum of transformation and improved outcomes. Clearly, such a ranking should include diverse measures, combining structural and process measures with outcome measures.18,19 Ideally those outcome measures should include technical quality, patient experience, and health care costs, although we still lack the ability to obtain such standardized measures nationally. Where possible, however, demonstration projects should develop and test such ranking systems.

Limitations of these results and conclusions include imperfection in our measurement of practice systems and our inability to validate retrospective responses about the 3 years prior, although the resulting change over time (a 75% relative increase in the mean systems score) is very similar to that in another study with repeated PPC-RS measures, in which we found an increase of 64% at 1 year after implementation of systems for managing depression (unpublished). Also, many of these clinics were certified during or just after the 2010 performance measurement year, so their rates may improve more than those of uncertified clinics over the next few years. Moreover, we did not account in the analysis for differences in time since certification, which may have affected system changes. We also have no PPC-RS scores or clinic characteristics for non-HCH clinics and no complete data on patient experience or satisfaction. The 15% of HCH clinics lacking complete data for this analysis may differ from those with such data. Finally, our findings may have been influenced by contextual factors, which are outlined in the Supplemental Appendix (available online at http://annfammed.org/content/11/Suppl_1/S108/suppl/DC1).
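The 75% figure is a relative change. It divides the mean absolute gain in the PPC-RS systems score by the mean baseline score, both taken from Table 3:

$$
\text{relative increase} = \frac{\text{mean change}}{\text{mean baseline score}} = \frac{29.1}{38.8} = 0.75
$$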

Nevertheless, these findings demonstrate considerable variation in both practice systems and outcome measures among clinics that have been certified through a fairly rigorous process as medical homes. Although there is some correlation between those measures, outcomes change slowly. Now it is key to better understand what is needed to implement those systems as well as the other changes needed to transform traditional primary care practices into idealized medical/health care homes.

Acknowledgments

We are very grateful for the cooperation of the Minnesota Department of Health (Marie Maes-Voreis and Cherrylee Sherry), the Minnesota Department of Human Services (Jeff Schiff), Minnesota Community Measurement (Anne McGeary Snowden), and NCQA (Manasi Tirodkar). We are also very grateful for all the leaders of the clinics and medical groups that have cooperated so well with completing our surveys. Finally, our project coordinating team has benefitted greatly from the input of 2 patient representatives, Ceci Shapland and Makeda Norris.

Footnotes

Conflicts of interest: authors report none.

Funding support: This research was funded by grant 1R18HS019161 from the Agency for Healthcare Research and Quality.

Disclaimer: The opinions expressed in this document are those of the authors and do not reflect the official position of AHRQ or the US Department of Health and Human Services.

References

- 1. Solberg LI, Van Royen P. The medical home: is it a blind men and elephant tale? Fam Pract. 2009;26(6):425–427.

- 2. Solberg LI. How can we remodel practices into medical homes without a blueprint or a bank account? J Ambul Care Manage. 2011;34(1):3–9.

- 3. Vest JR, Bolin JN, Miller TR, Gamm LD, Siegrist TE, Martinez LE. Medical homes: “where you stand on definitions depends on where you sit.” Med Care Res Rev. 2010;67(4):393–411.

- 4. Scholle SH, Saunders RC, Tirodkar MA, Torda P, Pawlson LG. Patient-centered medical homes in the U.S. J Ambul Care Manage. 2011;34(1):20–32.

- 5. Nielsen M, Langner B, Zema C, Hacker T, Grundy P. Benefits of Implementing the Primary Care Patient Centered Medical Home: A Review of Cost & Quality Results, 2012. Patient-Centered Primary Care Collaborative. 2012. http://www.pcpcc.net/guide/benefits-implementing-primary-care-medical-home. Accessed May 1, 2013.

- 6. Crabtree BF, Nutting PA, Miller WL, Stange KC, Stewart EE, Jaén CR. Summary of the National Demonstration Project and recommendations for the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S80–S90.

- 7. Nutting PA, Miller WL, Crabtree BF, Jaén CR, Stewart EE, Stange KC. Initial lessons from the first national demonstration project on practice transformation to a patient-centered medical home. Ann Fam Med. 2009;7(3):254–260.

- 8. Solberg LI, Asche SE, Fontaine P, Flottemesch TJ, Anderson LH. Trends in quality during medical home transformation. Ann Fam Med. 2011;9(6):515–521.

- 9. Scholle SH, Pawlson LG, Solberg LI, et al. Measuring practice systems for chronic illness care: accuracy of self-reports from clinical personnel. Jt Comm J Qual Patient Saf. 2008;34(7):407–416.

- 10. Bodenheimer T, Wagner EH, Grumbach K. Improving primary care for patients with chronic illness: the chronic care model, Part 2. JAMA. 2002;288(15):1909–1914.

- 11. Amundson GM, O’Connor PJ, Solberg LI, et al. Diabetes care quality: insurance, health plan, and physician group contributions. Am J Manag Care. 2009;15(9):585–592.

- 12. Nolan T, Berwick DM. All-or-none measurement raises the bar on performance. JAMA. 2006;295(10):1168–1170.

- 13. Connelly BL, Certo ST, Ireland RD, Reutzel C. Signaling theory: a review and assessment. J Manage. 2011;37(1):39–65.

- 14. Jaén CR, Ferrer RL, Miller WL, et al. Patient outcomes at 26 months in the patient-centered medical home National Demonstration Project. Ann Fam Med. 2010;8(Suppl 1):S57–S67.

- 15. Landon BE, Gill JM, Antonelli RC, Rich EC. Prospects for rebuilding primary care using the patient-centered medical home. Health Aff (Millwood). 2010;29(5):827–834.

- 16. Merrell K, Berenson RA. Structuring payment for medical homes. Health Aff (Millwood). 2010;29(5):852–858.

- 17. Nutting PA, Crabtree BF, Miller WL, Stange KC, Stewart E, Jaén C. Transforming physician practices to patient-centered medical homes: lessons from the National Demonstration Project. Health Aff (Millwood). 2011;30(3):439–445.

- 18. Stange KC, Nutting PA, Miller WL, et al. Defining and measuring the patient-centered medical home. J Gen Intern Med. 2010;25(6):601–612.

- 19. Holmboe ES, Arnold GK, Weng W, Lipner R. Current yardsticks may be inadequate for measuring quality improvements from the medical home. Health Aff (Millwood). 2010;29(5):859–866.