Abstract

The effect of expertise training with faces was studied in adults with ASD who showed initial impairment in face recognition. Participants were randomly assigned to a computerized training program involving either faces or houses. Pre- and post-testing included standardized and experimental measures of behavior and event-related brain potentials (ERPs), as well as interviews after training. After training, all participants met behavioral criteria for expertise with the specific stimuli on which they received training. Scores on standardized measures improved after training for both groups, but only the face training group showed an increased face inversion effect behaviorally and electrophysiological changes to faces in the P100 component. These findings suggest that individuals with ASD can gain expertise in face processing through training.

Keywords: ASD, Face processing, Training, Intervention, ERPs, N170

Introduction

Brain and behavioral studies suggest specialized processing advantages for human faces among typically developing adults. However, individuals with autism spectrum disorders (ASD) fail to exhibit specialized processing or do so less consistently and robustly than individuals without ASD. Recognition of faces by typically developing adults is disrupted by presentation in an inverted orientation (e.g., Boutet and Faubert 2006; Yin 1969). Faces are better recognized holistically than by isolated features (Boutet and Faubert 2006; Pellicano and Rhodes 2003; Tanaka and Farah 1993; Tanaka and Sengco 1997), and distances between features are encoded as evidenced by sensitivity to manipulations of this second-order information (Freire and Lee 2001; McKone and Boyer 2006; Young et al. 1987). In contrast, both children and adults with ASD have a less robust inversion effect (Faja et al. 2009; Hobson et al. 1988; Langdell 1978; Rose et al. 2007; Teunisse and de Gelder 2003, but see Lahaie et al. 2006), and show impaired holistic processing (Joseph and Tanaka 2003; Wallace et al. 2008). Children are relatively more reliant on isolated facial features (Hobson et al. 1988) and adolescents and adults appear to have enhanced feature processing (Lahaie et al. 2006). Disrupted configural processing among children and adults with ASD has been detected via experimental manipulations that found: (1) biased high spatial frequency processing (Deruelle et al. 2004), (2) less impairment when faces are split (Teunisse and de Gelder 2003), and (3) less awareness of manipulations of relations between features (Faja et al. 2009; Wallace et al. 2008). However, a configural processing impairment has not been consistently detected among adults with high-functioning ASD (e.g., Nishimura et al. 2008). There is also evidence of differing patterns of attention to faces by adults (Klin et al. 2002; Pelphrey et al. 2002) and young children with ASD (Jones et al. 2008; Webb et al. 2010), which may contribute to decreased specialization for faces.

Electrophysiological studies with typical adults have provided further evidence of neural specialization for face processing. Event-related potentials (ERPs) measured from occipital-temporal scalp regions show a peak at approximately 130–200 ms after the presentation of a visual stimulus (i.e., the N170 component), which has more negative amplitude and shorter latency to faces than other types of stimuli (e.g., Bentin et al. 1996). For inverted versus upright faces, the N170 amplitude is more negative but peaks with a slower latency (e.g., Bentin et al. 1996; Rossion et al. 1999; Itier and Taylor 2002). In contrast to the pattern seen in controls, adolescents and adults with ASD had a slower latency for faces than objects and similar latency for inverted versus upright faces (McPartland et al. 2004). Similarly, compared to typical individuals, adults with Asperger syndrome had slower N170 latency and reduced amplitude to faces, slower N170 latencies to eyes and mouths, but did not differ in response to objects (O’Connor et al. 2007). When a crosshair preceded the stimulus, adults with ASD showed normative P1 and N170 amplitude and latency differentiation of faces versus objects but failed to show differing responses for upright versus inverted faces (Webb et al. 2009). The authors suggested more normative first order face processing may have been facilitated by external cues.

While typical adults are assumed to have specialized processing for faces, possibly due to their social importance during development, Gauthier and Tarr (1997) and Gauthier et al. (1998) have argued that this perceptual expertise is not unique to faces (but see McKone et al. 2007). To test this hypothesis, they designed training aimed at developing expertise for a novel set of computer rendered cartoon items. After training, experts exhibited emerging holistic and configural processing behaviors (Gauthier et al. 1998) and N170 activation patterns that resembled responses to faces, such as an increased difference in latency between upright and inverted cartoons (Rossion et al. 2002). After similar training with cars or birds, the N170 amplitude increased for all objects within the trained category (e.g., cars), while N250 amplitude was enhanced only for stimuli within the category trained at the subordinate level (e.g., SUVs) (Scott et al. 2006, 2008), leading the authors to conclude that the N170 may be related to experience while the N250 may be related to identifying and remembering individuals.

Two groups have reported on the use of training for individuals with ASD to facilitate more typical recognition of faces (Faja et al. 2008; Tanaka et al. 2010) while others have employed computerized training programs to facilitate emotion recognition by individuals with ASD (Bölte et al. 2002, 2006; Golan and Baron-Cohen 2006; Golan et al. 2010; Silver and Oakes 2001). In adolescents and adults, 6–8 h of training across 3 weeks produced improvements in static face recognition, including improved sensitivity to manipulations of distances between features (Faja et al. 2008). In children, playing training games an average of 20 h across 19 weeks improved recognition of the eyes in the context of the whole face and mouths in isolation (Tanaka et al. 2010). Changes in behavior and neural activation were found after emotion training (Bölte et al. 2006).

Specific training for face recognition provides the opportunity to modify attention patterns over time, to investigate the development and plasticity of the face processing system in ASD, and to intervene in a way that targets one of the specific social domains affected for many individuals with ASD. The approach developed by our group targets face expertise, which is reflected by specialized processing advantages and efficiency of classification of individuals. We built on Gauthier’s tasks, which emphasized learning to classify stimuli at multiple semantic levels and thereby acquiring knowledge about the perceptual stimulus class being learned. Our group added tasks aimed at focusing visual attention on: (a) core features of the face by cropping stimuli, (b) configural information contained at lower spatial frequencies by filtering images, and (c) three-dimensional aspects of the faces by presenting multiple photos of each individual taken from a variety of angles. Training also provided explicit rule-based guidelines for viewing faces because individuals with ASD may be more reliant on rule-based strategies (Klinger and Dawson 2001; Rutherford and McIntosh 2007). This approach to training has been shown to be an effective way to change behavior (Faja et al. 2008). In the previous study, five high functioning adolescents and adults with ASD were trained and outcome was compared with five individuals who did not receive training. The original study did not assess brain function, so the effect of training on brain activity is unknown.

The first goal of the current investigation was to replicate the original training study, further demonstrating that individuals with ASD can become expert with static faces and generalize the processing advantages they develop to a new set of faces. The current investigation provides an opportunity to address limitations of the original study by (a) comparing face training to object training in order to investigate whether social contact with a trainer, rule-based instruction, or exposure to computer activities alone contributes to better performance; and (b) including only individuals who showed impairments on standardized measures of face processing. Adults were recruited for the current study in order to assess the effects of training on brain and behavior with less influence of ongoing maturational processes and because expertise training studies have used typically developing adults. Based on the previous training study, we anticipated that training would result in expertise with trained stimuli and that the group receiving face training, but not the group receiving house training, would improve in the processing of novel faces. The second goal was to explore whether training produced changes in brain activity. Given that expertise training has been shown to alter configural processing, we hypothesized that training would result in greater effects on the ERP of upright versus inverted stimuli at the P1 and N170 components. Both trained and untrained exemplars were presented, which allowed for exploration of familiarity related effects on the N250.

Method

Participants

Thirty-six adults with high functioning ASD were initially recruited via advertising in the community, the University of Washington autism research database of previous study participants, and the UW Autism Center Clinic to participate in the UW’s NIH-funded STAART Electrophysiological and fMRI Studies of Social Cognition study, during which brain function and behavior in response to faces were well characterized (Faja et al. 2009; Kleinhans et al. 2008, 2009; Sterling et al. 2008; Webb et al. 2009, 2010). Diagnosis was confirmed using the Autism Diagnostic Interview-Revised (ADI-R; Lord et al. 1994), Autism Diagnostic Observation Schedule (ADOS; Lord et al. 2000), and the Diagnostic and Statistical Manual of Mental Disorders, fourth edition (American Psychiatric Association 1994). All participants had full-scale IQs of at least 85, based on an abbreviated version of the Wechsler Adult Intelligence Scale-Third Edition (WAIS-III; Wechsler 1997a), which included the Vocabulary, Comprehension, Object Assembly, and Block Design subtests.

Upon completion of the STAART study, a subset of eligible participants were invited to participate in the training study based on (a) proximity to the university in order to facilitate multiple training visits, and (b) impaired face recognition measured during STAART by the immediate and delayed recall of faces subtests of the Wechsler Memory Scale-Third Edition (WMS-III; Wechsler 1997b) and the long form of the Benton Test of Facial Recognition (Benton et al. 1983). Of the 18 individuals who did not participate in the training study, 5 lived too far away, 3 were lost to follow up, 4 declined, and 6 did not have an impairment. The final training sample included 13 individuals with an absolute impairment, WMS-III subtest scaled scores of 7 or below (scaled score M = 10) or Benton scores of 40 or below, and 5 with a relative impairment, WMS-III scores that were more than one standard deviation below their overall IQ or WAIS Perceptual Reasoning score. Individuals with an absolute or relative impairment in face processing were stratified and were randomly assigned to either face training (n = 9, 6 with absolute impairment) or to house training (n = 9, 7 with absolute impairment). Groups did not differ in age, cognitive ability, or other pre-training characteristics (see Table 1). The current study was conducted with the approval of the Institutional Review Board of the Human Subjects Division at the University of Washington.
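The inclusion rule described above can be sketched in code. This is a minimal illustration only: the `Scores` container and the `classify_impairment` function are hypothetical names, and placing the WMS-III scaled score and the IQ score on a common z-score scale is an assumption about how "more than one standard deviation below" was evaluated.

```python
from dataclasses import dataclass

WMS_MEAN, WMS_SD = 10.0, 3.0   # Wechsler scaled-score metric
IQ_MEAN, IQ_SD = 100.0, 15.0   # Wechsler IQ metric


@dataclass
class Scores:
    wms_faces: float  # WMS-III faces subtest scaled score
    benton: float     # Benton long-form raw score
    iq: float         # comparison IQ (overall or perceptual index)


def classify_impairment(s: Scores) -> str:
    """Return 'absolute', 'relative', or 'none' for one participant."""
    # Absolute impairment: WMS-III scaled score <= 7 or Benton score <= 40.
    if s.wms_faces <= 7 or s.benton <= 40:
        return "absolute"
    # Relative impairment (assumed z-score comparison): face memory more
    # than one standard deviation below the participant's own IQ.
    wms_z = (s.wms_faces - WMS_MEAN) / WMS_SD
    iq_z = (s.iq - IQ_MEAN) / IQ_SD
    if iq_z - wms_z > 1.0:
        return "relative"
    return "none"


print(classify_impairment(Scores(wms_faces=6, benton=44, iq=110)))
print(classify_impairment(Scores(wms_faces=9, benton=43, iq=125)))
print(classify_impairment(Scores(wms_faces=10, benton=45, iq=105)))
```

Checking the absolute criterion first mirrors the stratification described in the text, in which a participant is counted in only one impairment category.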

Table 1.

Participant characteristics prior to training with Mean (Standard Deviation) reported

| Measure | Face training | House training | t (p) |
|---|---|---|---|
| Age in years | 22.4 (4.4) | 21.5 (5.6) | 0.40 (.70) |
| Wechsler (WAIS) full scale IQ | 116.3 (16.3) | 118.2 (17.4) | −0.24 (.82) |
| WAIS verbal IQ | 116.9 (14.8) | 114.9 (19.8) | 0.24 (.81) |
| WAIS performance IQ | 111.9 (17.6) | 117.3 (17.5) | −0.66 (.52) |
| ADOS social | 8.0 (2.5) | 7.3 (2.2) | 0.58 (.57) |
| ADOS communication | 4.1 (1.6) | 3.1 (1.3) | 1.3 (.23) |
| ADOS total | 12.1 (4.1) | 10.4 (2.6) | 0.98 (.35) |
| ADI-R reciprocal social interaction | 17.4 (7.0) | 17.5 (5.0) | −0.02 (.99) |
| ADI-R communication (Verbal) | 12.8 (4.2) | 14.0 (4.4) | −0.58 (.57) |
| ADI-R repetitive behavior | 5.6 (3.0) | 5.5 (2.4) | 0.04 (.97) |
| Benton test of facial recognition | 42.0 (3.6) | 43.9 (2.6) | −1.2 (.25) |
| Wechsler memory scales: faces immediate | 6.8 (2.8) | 6.8 (1.5) | 0.0 (1.0) |
| Wechsler memory scales: faces delayed | 7.2 (2.3) | 7.7 (1.7) | −0.47 (.65) |
| House memory scale: immediate | 7.3 (3.0) | 8.1 (1.6) | −0.69 (.51) |
| House memory scale: delayed | 7.0 (3.2) | 6.9 (1.2) | 0.10 (.93) |

Wechsler IQ scores have M = 100, SD = 15 and Wechsler Memory scaled scores have M = 10, SD = 3. Raw scores are reported for the Benton, ADOS, and ADI. Benton scores ≥ 41 are considered “normal.” ASD diagnostic criteria are ADOS (Module 4) Total ≥ 7, ADI-R Reciprocal Social Interaction ≥ 7, ADI-R Communication ≥ 6, and ADI-R Repetitive Behavior ≥ 2
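As a reading aid, the group comparisons in Table 1 can be approximately reproduced from the reported means and standard deviations. The sketch below assumes a pooled-variance independent-samples t test with n = 9 per group (the text does not name the exact test), and `pooled_t_and_d` is a hypothetical helper. Because the inputs are rounded table values, results may differ slightly from the published statistics.

```python
import math


def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (pooled variance) and Cohen's d from summary stats."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = math.sqrt(pooled_var)
    se = pooled_sd * math.sqrt(1 / n1 + 1 / n2)  # standard error of the difference
    t = (m1 - m2) / se
    d = (m1 - m2) / pooled_sd
    return t, d, df


# WAIS full scale IQ row: face training 116.3 (16.3) vs. house training 118.2 (17.4)
t, d, df = pooled_t_and_d(116.3, 16.3, 9, 118.2, 17.4, 9)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

For the full scale IQ row, the recomputed t rounds to the tabled value of −0.24.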

Face and House Training Protocol

Participants received training until criteria for expertise (described below) were met or 8 sessions were completed; training duration ranged from 5 to 8 sessions. Training sessions were conducted in person, not necessarily on consecutive days, and scheduled at the convenience of the participant with a maximum of two sessions on any given day. The duration between the first and last training session (in days) did not differ by group, Mface = 22.6, SD = 11.8 and Mhouse = 23.4, SD = 14.7, t(16) = −0.14, p = .89, Cohen’s d = −.06.

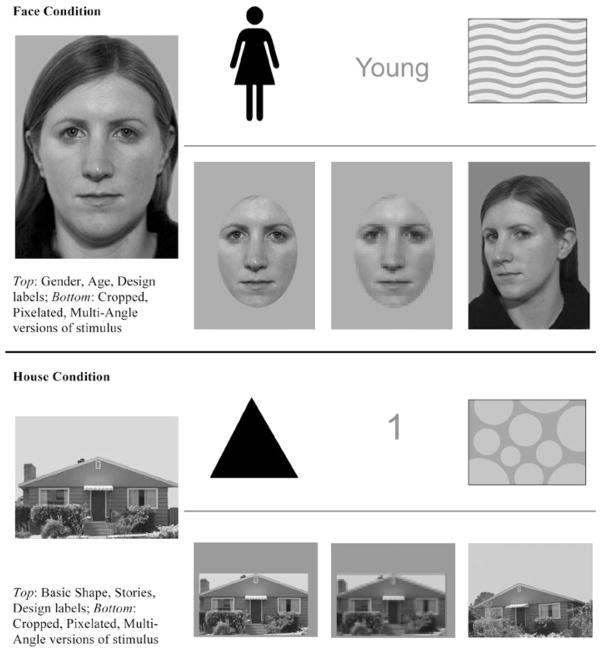

The training protocol has already been described in detail (Faja et al. 2008). Sessions 1–4 included several minutes of explicit rule-based instruction emphasizing configural processing, core features, and shifting attention within stimuli. For the house group, core features such as windows and doors were substituted for eyes and mouth. Next, 18 interrelated training tasks were presented in E-Prime (Psychology Software Tools, Inc.). Stimuli were 24 novel grayscale digital photos of faces or houses (see Fig. 1). Six stimuli were introduced in each of the first four sessions and then reviewed. Both groups trained on three semantic levels. For each face, participants matched the corresponding gender (male/female), age (young/middle/old), and a unique individual design. For each house, participants matched the basic shape (square/triangular), number of stories (1/1.5/2), and unique individual design. Designs were used instead of names because of possible impairments in verbal skills and for comparable difficulty of individual matching labels across face and house training. During matching blocks, participants viewed an individual stimulus and, after a brief pause, selected the appropriate label from two choices. Additional matching conditions were added to emphasize the most important regions of the face and increase focus on configural information over individual features. These were replicated in house training. For the cropped condition, stimuli were presented without outer features, while high-spatial frequency details were obscured by using the Photoshop mosaic filter in the pixelated condition. The multi-angle condition, in which stimuli were photographed from multiple angles, was added to the current protocol. Participants also viewed individual designs and selected the correct matches from pairs of faces or houses in backward individual matching. Sessions 5–8 involved matching the full set of stimuli with non-altered, cropped, pixelated, and multi-angle targets at all three levels of classification. Additional individual matching practice was provided as needed and as time permitted with the target and four designs. Correct responses were always rewarded with a brief image display of the participant’s choosing, while the stimulus and correct label followed incorrect responses.

Fig. 1.

Examples of face and house training stimuli and labels

Verification Task

In order to assess whether participants met criteria for expertise, the verification task was administered at the conclusion of Sessions 1 through 4 and twice per session during Sessions 5 through 8. A label for one of the three levels of categorization (gender/shape, age/number of stories, unique individual design) was presented in mixed order, followed by a 1 s delay, and then a stimulus that either matched or did not match the label. Each stimulus appeared 6 times: once as a target and once as a foil for each of the three levels of categorization. Feedback was given. Criteria for expertise followed those previously reported (Faja et al. 2008; Gauthier et al. 1998; Tanaka and Taylor 1991) and were: (a) no significant difference in reaction time between the basic level (gender/basic shape) and individual level, (b) accuracy above 85%, and (c) achieving criteria (a) and (b) during two consecutive verification tasks.
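The three expertise criteria amount to a simple decision rule over a participant's sequence of verification tasks. The following is a minimal sketch under stated assumptions: `VerificationResult`, `task_passes`, and `reached_expertise` are hypothetical names, the reaction time comparison is represented only by its p-value (the specific test is not given in the text), and the significance threshold of .05 is assumed.

```python
from typing import NamedTuple, Sequence


class VerificationResult(NamedTuple):
    rt_p_value: float  # p-value for the basic- vs. individual-level RT difference
    accuracy: float    # proportion correct on the verification task


def task_passes(r: VerificationResult, alpha: float = 0.05,
                min_accuracy: float = 0.85) -> bool:
    """Criteria (a) and (b): no significant RT difference and accuracy above 85%."""
    return r.rt_p_value >= alpha and r.accuracy > min_accuracy


def reached_expertise(results: Sequence[VerificationResult]) -> bool:
    """Criterion (c): criteria (a) and (b) met on two consecutive tasks."""
    flags = [task_passes(r) for r in results]
    return any(first and second for first, second in zip(flags, flags[1:]))


history = [
    VerificationResult(rt_p_value=0.01, accuracy=0.80),
    VerificationResult(rt_p_value=0.20, accuracy=0.88),
    VerificationResult(rt_p_value=0.03, accuracy=0.92),
    VerificationResult(rt_p_value=0.40, accuracy=0.95),
    VerificationResult(rt_p_value=0.35, accuracy=0.97),
]
print(reached_expertise(history))  # True: the last two tasks both pass
```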

Behavioral Measures of Face Processing Ability

Both groups were tested before and after training using all behavioral measures except the naturalistic measure of face recognition and self-report about training, which were administered after training. Pre-training assessment was conducted during the larger STAART study. Time elapsed between these visits (Mface = 2.2 years, SD = 0.8 and Mhouse = 1.6 years, SD = 0.6) did not significantly differ by group, t(16) = 1.6, p = .13, Cohen’s d = .75, nor did the time elapsed between the final training session and the post testing session (Mface = 12.6 days, SD = 5.7 and Mhouse = 15.3 days, SD = 12.5, t(16) = −0.61, p = .55, Cohen’s d = −.28).

Standardized Measures

Face recognition and memory were measured via the Benton Test of Facial Recognition (Benton et al. 1983) and the WMS-III immediate and delayed facial memory tasks (Wechsler 1997b). To directly compare object to face memory, our group developed a House Memory Scale that assesses immediate and delayed recognition of houses. It parallels the WMS face subtests in administration, the number of items, presentation time, and memory delay.

Experimental Measures of the Inversion Effect, Holistic Processing and Detection of Second-order Manipulations

Before and after training, two experimental measures of face processing were collected on a computer to capture accuracy and reaction time. Stimuli for each task were unique and differed from the training stimuli, though they were also grayscale photos with neutral facial expressions (for examples see Faja et al. 2008). For each task, two versions were created with different manipulations to the stimuli and presentation was counterbalanced across participants. Throughout the study, care was taken not to reveal the experimental design to participants. Baseline data were collected as part of a larger sample (Faja et al. 2009). Analyses for these tasks were repeated-measures ANOVA with Greenhouse-Geisser corrected degrees of freedom for the specific variables described next.

To measure the inversion effect and holistic processing, eighty unique trials were presented, each beginning with a 16.5 by 15.3 cm photo of a whole face shown for 3,500 ms. After a 1,000 ms delay with a blank screen, the target and a foil were presented together until a selection was made or 8,000 ms elapsed. Participants decided which of the two images exactly matched the whole face. Target-foil pairs were matched on test type and feature; the foil differed from the target by a single feature. Within the task, variables included orientation (upright/inverted), test type (whole face/isolated feature), and feature (eyes/mouth), resulting in 8 combinations. Orientation was constant throughout a trial. For the isolated feature (part) condition, facial features were presented at the same size and location as the feature on the target face.
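The factorial structure of this task can be enumerated directly. The sketch below is illustrative: the condition labels are shorthand, and dividing the 80 trials equally across the 8 cells is an assumption, since the text reports only the total number of trials.

```python
from itertools import product

ORIENTATIONS = ("upright", "inverted")
TEST_TYPES = ("whole", "part")
FEATURES = ("eyes", "mouth")

# Timing values taken from the task description (milliseconds).
STUDY_MS, DELAY_MS, RESPONSE_LIMIT_MS = 3500, 1000, 8000

conditions = list(product(ORIENTATIONS, TEST_TYPES, FEATURES))
TOTAL_TRIALS = 80
# Assumption: trials are balanced across cells; the text gives only the total.
trials_per_condition = TOTAL_TRIALS // len(conditions)

for orientation, test_type, feature in conditions:
    print(orientation, test_type, feature, trials_per_condition)
```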

The measure of detection of second-order manipulations consisted of 96 trials, each beginning with a 3,500 ms display of a 17.9 by 21.8 cm target image (stimuli from Macarthur Face Stimulus Set; Tottenham et al. 2009), followed by 1,000 ms of a blank screen, and then a test face for up to 8,000 ms. Participants decided whether or not the test face exactly matched the target. The within group variable was the magnitude of change, and distance between features varied to produce the three conditions: 48 trials with no change, 24 trials with small changes (6 pixels), and 24 trials with large changes (12 pixels). For images that were changed, the eyes and mouths were both shifted (eyes: up, down, closer or further apart and mouths: up, down) and the pixels surrounding them were blended using Photoshop.

Naturalistic Measure of Face Recognition (Post Testing Only)

During the self-report of training interview, an unfamiliar woman briefly entered the testing room, posed a surprised and confused expression and said loudly, “Oops. Excuse me. I thought this room was available.” She recorded whether the participant oriented and made eye contact, but did not deliberately seek his/her attention beyond the scripted intrusion. The examiner then commented, “She works here, but that doesn’t usually happen.” Approximately 25–30 min later, participants were shown six cropped neutral grayscale face photos and asked to identify the interrupter.

EEG Recording, Processing and Analysis

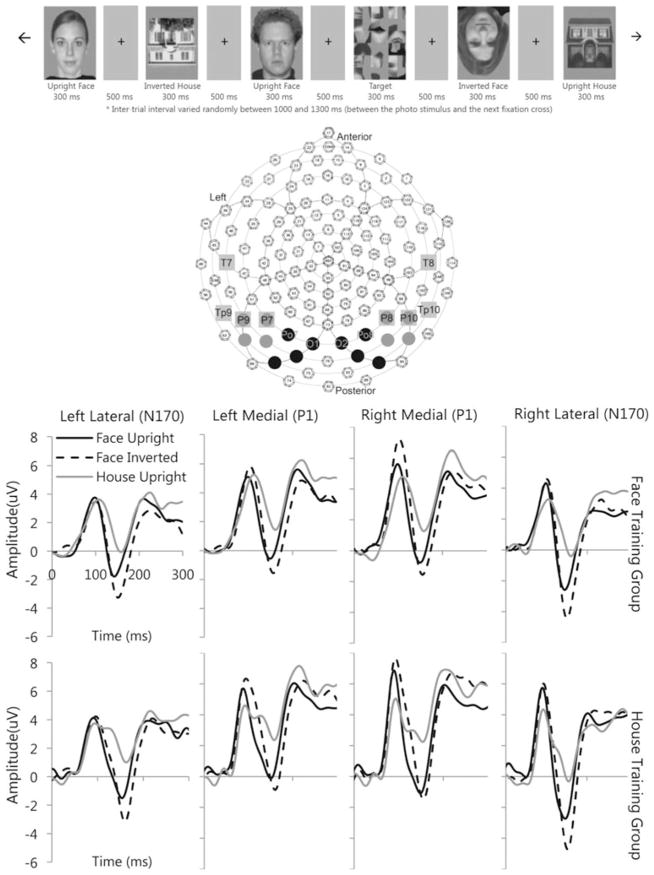

Baseline and post-training ERP data were recorded using the same procedures with a 128 channel EGI Geodesic Sensor Net (EGI, Eugene OR). Baseline data were collected as part of a larger sample of participants (Webb et al. 2009). Participants viewed stimuli of upright faces (50), inverted faces (50), upright houses (50), inverted houses (50), and targets (scrambled faces; 32), as shown in Fig. 3. Face stimuli were standardized so the eyes were at the center of the screen, while house stimuli were symmetrical to more closely parallel the visual properties of faces. All were grayscale photos and half were the stimuli used for training. For each trial, a visual fixation cross was displayed in the middle of the screen for 500 ms, followed by stimulus presentation for 300 ms, and an inter-trial interval varying randomly between 1,000 and 1,300 ms.
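The trial structure above implies 232 trials per recording, each lasting between 1.8 and 2.1 s. A minimal sketch of such a schedule follows; the fully randomized trial order, the uniform distribution of inter-trial intervals, and the `build_schedule` helper are assumptions for illustration, not details reported for the original E-Prime or recording setup.

```python
import random

TRIAL_COUNTS = {
    "face_upright": 50,
    "face_inverted": 50,
    "house_upright": 50,
    "house_inverted": 50,
    "target_scrambled": 32,
}
FIXATION_MS, STIMULUS_MS = 500, 300
ITI_RANGE_MS = (1000, 1300)


def build_schedule(seed: int = 0):
    """Return a list of (condition, inter-trial interval in ms) pairs."""
    rng = random.Random(seed)
    trials = [cond for cond, n in TRIAL_COUNTS.items() for _ in range(n)]
    rng.shuffle(trials)  # assumption: fully randomized presentation order
    return [(cond, rng.uniform(*ITI_RANGE_MS)) for cond in trials]


schedule = build_schedule()
total_s = sum(FIXATION_MS + STIMULUS_MS + iti for _, iti in schedule) / 1000
print(len(schedule), "trials, about", round(total_s / 60, 1), "min of stimulation")
```

Under these assumptions, stimulation time falls between roughly 7 and 8 minutes, excluding breaks and net application.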

Fig. 3.

Top Examples of stimuli from ERP task. Middle Geodesic Sensor Net with 128 channels. P1 medial posterior electrodes in black circles; N170 lateral posterior electrodes in dark grey circles; N250 posterior-temporal electrodes in light grey squares. Bottom Baseline ERP responses to upright (black line) and inverted faces (dotted lines) and upright houses (grey line) in the Face Training (upper row) and House Training (lower row) groups by region. P1 was analyzed over left and right medial electrode groups; N170 was analyzed across left and right lateral electrode groups

Post processing data analysis included: low pass filtering at 30 Hz, artifact detection (including blink removal), averaging trials by condition, re-referencing to an average reference, and baseline correction (100 ms pre-stimulus onset). Electrode regions chosen for analyses are shown in Fig. 3. The time windows for ERP components were chosen based on visual inspection of the grand-average and data for individual participants. Baseline analyses used repeated measures ANOVA testing the effect of Group (face/house training), stimulus category (faces/houses), orientation (upright/inverted), and hemisphere (right/left). For the P1 (60–130 ms; medial posterior left 66, 70, 71, 72 and medial posterior right 85, 90, 84, 77) and N170 (120–180 ms; lateral posterior left 58, 59, 64, 65 and lateral posterior right 97, 92, 96, 91), both peak latency and peak amplitude were analyzed over averaged lead groups. Peaks were visually verified as occurring within that window by the authors (JB, EJ, KM, and SW). For the N250, average amplitude was analyzed at two windows (200–250 and 250–300 ms) using lateral posterior-temporal leads based on the 10–20 system (Itier and Taylor 2004; Webb et al. 2010; Schweinberger et al. 2002, 2004).
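Peak measures of this kind can be illustrated with a short sketch. The code below is not the authors' analysis pipeline: `peak_in_window` and `mean_amplitude` are hypothetical helpers, the waveform is synthetic, and the 4 ms sampling step is an assumption. It shows only how a positive peak (P1), a negative peak (N170), and a mean amplitude (N250) would be read from an averaged waveform using the windows given above.

```python
import math


def peak_in_window(times_ms, amplitudes_uv, window, polarity):
    """Return (latency_ms, amplitude_uv) of the extreme value inside window."""
    lo, hi = window
    candidates = [(a, t) for t, a in zip(times_ms, amplitudes_uv) if lo <= t <= hi]
    if not candidates:
        raise ValueError("no samples fall inside the window")
    pick = max if polarity == "positive" else min
    amplitude, latency = pick(candidates)  # tuples compare on amplitude first
    return latency, amplitude


def mean_amplitude(times_ms, amplitudes_uv, window):
    """Average amplitude across all samples inside window."""
    lo, hi = window
    values = [a for t, a in zip(times_ms, amplitudes_uv) if lo <= t <= hi]
    return sum(values) / len(values)


# Synthetic averaged waveform: positive deflection near 100 ms, negative near 160 ms.
times = list(range(-100, 400, 4))
wave = [4 * math.exp(-((t - 100) / 15) ** 2) - 6 * math.exp(-((t - 160) / 15) ** 2)
        for t in times]

p1 = peak_in_window(times, wave, (60, 130), "positive")
n170 = peak_in_window(times, wave, (120, 180), "negative")
n250_early = mean_amplitude(times, wave, (200, 250))
print(p1, n170, round(n250_early, 3))
```

In practice the waveform passed in would be the average across the electrodes in each lead group, and automatically selected peaks would still be checked visually, as described above.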

To test effects of training, within group patterns of change were examined using repeated measures ANOVA of difference scores (post-treatment minus pre-treatment). Specifically, to identify whether expertise training altered the degree of the inversion effect, in separate analyses we directly compared: (a) face upright versus face inverted responses for the face training group, (b) house upright versus house inverted for the house training group, and (c) face upright versus face inverted responses for the house training group to examine whether expertise training resulted in generalization of the processes supporting the inversion effect. Lastly, for the N250, familiarity (trained/untrained stimuli) was also tested as a within subject effect based on Scott et al. (2008). Between groups effects were also measured for the face upright versus face inverted conditions. The Greenhouse-Geisser correction was employed and Fisher’s Least Significant Differences was used for follow up tests when appropriate.

Qualitative Self-report of Training (Post Testing Only)

Participants were questioned after training about: (a) whether they thought the training helped, (b) whether training changed the way they look at faces, and (c) whether they noticed changes in their skills in social situations.

Results

Verification of Expertise

Both training groups were able to reach previously defined criteria for expertise during the verification task (Tanaka and Taylor 1991; Gauthier and Tarr 1997) with no significant differences in the proportion of participants in each group who met criteria. Matching at the subordinate level became as automatic as the basic level for all participants in both the face and house groups (i.e., no difference in reaction time for individual vs. basic level categorization during at least one verification task). Eight of nine participants in each group met this criterion for their final two consecutive verification tasks, which is a more conservative standard of expertise. All individuals had accuracy above 85% across the final two verification tasks. Groups did not differ in accuracy, Mface = 95.3%, SD = 5.2% and Mhouse = 92.5%, SD = 3.6%, t(16) = 1.3, p = .21, Cohen’s d = .63, or the number of sessions required to reach these expertise criteria, Mface = 6.3, SD = 1.5 and Mhouse = 6.0, SD = 1.3, t(16) = 0.42, p = .68, Cohen’s d = .21.

Behavioral Measures of Face Processing Ability

Standardized Measures Before and After Training

Change scores were calculated for each participant (i.e., post-training score minus pre-training score). Groups did not significantly differ in the magnitude of change in their performance (ts < 1.37, ps > .19). Instead, one-sample t tests revealed that both groups significantly improved after training on the WMS Faces immediate, t(17) = 3.45, p = .003, Cohen’s d = .81, WMS Faces delayed, t(17) = 3.82, p = .001, Cohen’s d = .90, and House Memory immediate, t(17) = 2.29, p = .04, Cohen’s d = .54. No changes were detected on the House Memory delayed or Benton Test of Facial Recognition (ts < 1.55, ps > .14).
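For a one-sample t test on change scores, Cohen's d and t are related by d = t / √n. The short check below applies this identity to the two WMS Faces results with n = 18 (df = 17); `cohens_d_one_sample` is a hypothetical helper, and the assumption is that the reported effect sizes were computed this way.

```python
import math


def cohens_d_one_sample(t: float, n: int) -> float:
    """Cohen's d implied by a one-sample t statistic with n observations."""
    return t / math.sqrt(n)


N = 18  # df = 17 in the reported tests
for label, t in [("WMS Faces immediate", 3.45), ("WMS Faces delayed", 3.82)]:
    print(f"{label}: d = {cohens_d_one_sample(t, N):.2f}")
```

Both values round to the reported effect sizes of .81 and .90.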

Inversion Effect and Holistic Processing Before and After Training

Prior to training, there were no group differences in accuracy overall or for any conditions (orientation, whole/part, feature) measured (Fs < 2.75, ps > .12). For both groups, baseline accuracy was greater for upright than for inverted faces (F(1, 16) = 11.69, p = .004), but reaction time was similar across orientations. Accuracy was greater and reaction time was slower for whole faces than for features (Fs(1, 16) > 6.87, ps < .018). Groups differed in their baseline reaction time pattern (group × test type interaction: F(1, 16) = 6.26, p = .024). Reaction times were similar across conditions for the face group (Mwh = 2.517 s, SD = 0.776 vs. Mpt = 2.493 s, SD = 0.728), while the house group initially processed parts of faces more quickly than whole faces (Mwh = 2.503 s, SD = 0.574 vs. Mpt = 2.143 s, SD = 0.387). Baseline reaction time and accuracy did not differ for eyes versus mouths.

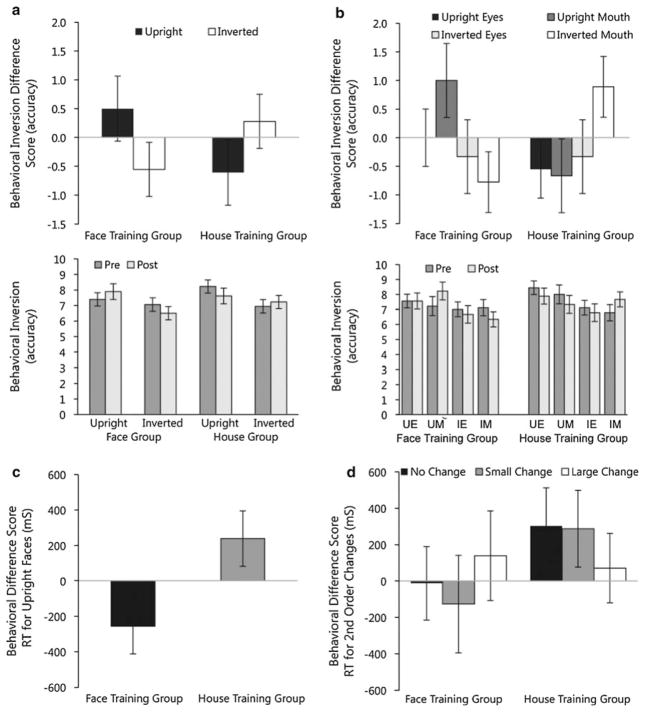

After training, repeated measures ANOVA with difference scores (change in accuracy) on trials with whole faces revealed a larger change in the inversion effect for the face group than the house group (group × orientation interaction: F(1, 16) = 5.13, p = .038, as shown in Fig. 2a). The magnitude of the inversion effect for trials with whole faces was influenced by feature type (group × orientation × feature interaction: F(1, 16) = 5.66, p = .030, as shown in Fig. 2b). For the face group, accuracy was maintained for upright eyes, improved on upright mouths, and decreased for both inverted conditions. For the house group, accuracy decreased for both upright conditions and inverted eyes, and improved with inverted mouths. When processing of upright whole faces and face features was compared, no significant changes in accuracy were detected for the whole versus part conditions (Fs < .52, ps > .48), suggesting that holistic processing accuracy was not significantly impacted by face or house training.

Fig. 2.

Main behavioral responses to training. Top Accuracy changes for each training group to faces as a result of training (post-training minus baseline) a for the whole face inversion effect and b by feature within whole faces. Middle Comparison of accuracy scores at baseline and after training corresponding to change scores in the top row. Bottom Reaction time changes from baseline to post-training for each training group c for upright faces and d by the degree of second order configural manipulation of faces. Standard error bars are shown

When changes in reaction time were examined, groups differed for upright faces (main effect of group: F(1, 16) = 5.00, p = .040, as shown in Fig. 2c); the face training group demonstrated significant improvements in reaction time (Mpre = 2.483 s, SD = 0.751, Mpost = 2.227 s, SD = 1.005) compared with the house training group (Mpre = 2.218 s, SD = 0.524, Mpost = 2.457 s, SD = 0.367). There were no other training-related group effects on reaction time related to the availability of the whole upright face versus an isolated feature or related to inversion of whole faces (Fs < 3.54, ps > .08).

Detection of Second-order Manipulations Before and After Training

At baseline, the accuracy of detecting second-order manipulations depended on their size (main effect of magnitude: F(2, 30) = 7.67, p = .005), but reaction times were similar regardless of magnitude. There were no group differences or group by magnitude interactions for accuracy or reaction time (Fs < 1.17, ps > .30). There were also no group differences in signal detection (d′) of matches versus non-matches at baseline, t(15) = −0.17, p = .87, Cohen’s d = −0.08.
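The d′ index combines responses on match and non-match trials into a single sensitivity score, d′ = z(hit rate) − z(false alarm rate). The sketch below is a generic illustration, not the authors' scoring script: treating a "same" response on an unchanged trial as a hit, and the log-linear correction for extreme rates, are both assumptions, and `d_prime` is a hypothetical helper.

```python
from statistics import NormalDist


def d_prime(hits: int, misses: int, false_alarms: int, correct_rejections: int) -> float:
    """Sensitivity index d' = z(hit rate) - z(false alarm rate)."""
    # Assumption: log-linear correction (add 0.5 per cell) so that rates of
    # exactly 0 or 1 do not produce infinite z-scores.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Hypothetical participant: 48 unchanged trials and 48 changed trials.
print(round(d_prime(hits=40, misses=8, false_alarms=10, correct_rejections=38), 2))
# Chance-level responding yields a d' of zero.
print(d_prime(hits=24, misses=24, false_alarms=24, correct_rejections=24))
```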

Training did not improve accuracy of detecting second order manipulations (Fs < 0.55, ps > .53). However, the pattern of changes in reaction time differed by group (group × magnitude interaction: F(2, 30) = 6.54, p = .005, as shown in Fig. 2d). There were no differences in d′ at post testing, and groups did not differ in the change in d′ signal detection of faces between time points (ts < 0.26, ps > .8, Cohen’s ds < 0.13).

Naturalistic Face Memory Task

At the conclusion of training, 4 individuals in the face training group correctly identified the face of the woman who interrupted the testing session, 3 chose the distracter who was rated most similar to the target face, and 2 chose an unrelated distracter. In the house training group, 1 individual correctly identified the interrupter, and 7 chose an unrelated distracter. (Data were unavailable for 1 individual in the house training group.) Using strict criteria of only scoring the target as correct, this proportion did not differ, χ2 (1, N = 17) = 2.08, p = .15, Φ = .350. Using more liberal criteria (target + closest distracter vs. unrelated distracters), better naturalistic face memory was detected in the face training group, χ2 (1, N = 17) = 7.24, p = .007, Φ = .653. All but one participant per group oriented to the interrupter. Thus, group differences in recognition did not appear to be due to a basic failure to orient.
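The two chi-square tests can be recomputed from the counts given above. The sketch below uses the standard Pearson formula for a 2 × 2 table without a continuity correction, which is an assumption about the original analysis, although it reproduces the reported values; `chi_square_2x2` is a hypothetical helper.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int):
    """Pearson chi-square (no continuity correction) and phi for [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    phi = math.sqrt(chi2 / n)
    return chi2, phi


# Rows: face training, house training. Columns: correct, incorrect.
strict = chi_square_2x2(4, 5, 1, 7)   # only the target scored as correct
liberal = chi_square_2x2(7, 2, 1, 7)  # target or closest distracter scored as correct

print(f"strict:  chi2 = {strict[0]:.2f}, phi = {strict[1]:.3f}")
print(f"liberal: chi2 = {liberal[0]:.2f}, phi = {liberal[1]:.3f}")
```

With 17 participants, several expected cell counts fall below 5, so an exact test would be a reasonable sensitivity check on these results.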

Event Related Potentials Before and After Training

Of the 18 individuals in the study who were assessed using the ERP paradigm, two were excluded from ERP analyses: one fell asleep (House; post-training ERP) and one did not have artifact-free data (Face; pre-training). Of the remaining 16 individuals, three in the house training group had poorly defined (left) early components (2 pre-training; 1 post-training) and were removed from some of the P1 and N170 analyses; one in the face group did not have data available for analyzing trained versus untrained images (N250 analysis) due to computer error.

P1 Component

In the subset of individuals participating in training who had adequate ERP data, at baseline, the P1 amplitude at medial posterior leads was significantly greater for faces than houses (main effect of stimulus: F(1,12) = 16.58, p = .002). Inverted faces elicited greater amplitude than upright faces, with no difference between upright and inverted houses (stimulus × orientation interaction: F(1,12) = 10.4, p = .007). Groups did not differ in P1 amplitude (group: F(1,12) = .28, p = .61). For P1 latency, responses were significantly faster for faces than houses (main effect of stimulus: F(1,12) = 7.03, p = .02; Fig. 3). However, there were group differences for the latency of the P1 (stimulus × group interaction: F(1,12) = 6.9, p = .02). The face training group (main effect of stimulus: F(1,7) = 14.5, p = .007) but not the house training group (no main effect of stimulus: F(1,5) = .00, p = .99) showed a faster response to faces than houses before training. Both groups initially processed upright stimuli faster than inverted (main effect of orientation: F(1,12) = 7.01, p = .02), and groups did not differ in their P1 amplitude or latency responses to upright versus inverted stimuli (Fs < 1.3, ps > .28).

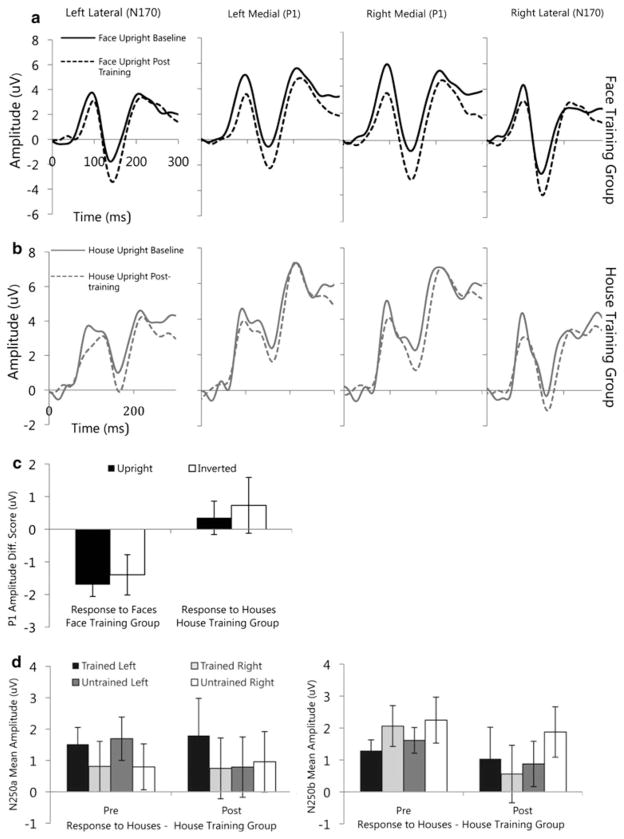

After training, groups were examined separately to test the pattern of performance within each group for the trained stimulus category. Face training reduced the P1 responses to faces (overall effect: F(1,7) = 10.7, p = .014), with the amplitude becoming more negative from pre- to post-training (Fig. 4). For the house training group, there were no significant changes in the P1 amplitude response to houses or faces (main effects of stimulus: Fs(1,6) < .67, ps > .44). Groups were also directly compared, and the reduction in P1 amplitude in response to faces significantly differed by group (group effect: F(1,13) = 4.8, p = .047). There were no training-related changes in latency of the P1 for either group when examined separately or when groups were directly compared (for faces).

Fig. 4.

Top: Effects of training on ERP responses to (a) upright faces (black lines) in the Face Training group and (b) upright houses (grey lines) in the House Training group by region. P1 was analyzed over left and right medial electrode groups; N170 was analyzed across left and right lateral electrode groups. Bottom: Main ERP responses to training. (c) P1 amplitude changes in response to training (post-training minus baseline) to faces in the Face Training group and houses in the House Training group. (d) Laterality of baseline and post-training N250 responses to trained and untrained houses in the House Training group

N170 Component

At baseline, participants in the training study showed a more negative and faster lateral posterior N170 to faces than houses (main effect of stimulus: Fs(1,12) > 37.1, ps < .001), and a more negative but slower response to inverted than upright stimuli (main effect of orientation: Fs(1,12) > 23.9, ps < .001; Fig. 3). Further, inverted faces were associated with ERPs that were more negative in amplitude but slower in latency than upright faces, with no differences in the processing of upright versus inverted houses (stimulus × orientation interactions for amplitude and latency: Fs(1,12) > 4.5, ps < .055). The N170 was also more negative in right than left lateral posterior leads (main effect of hemisphere: F(1,12) = 11.6, p = .005), although this differed by group, with the house training group showing this pattern but the face training group showing bilateral processing (hemisphere × group interaction: F(1,12) = 5.5, p = .04). There were no other group differences at baseline. In sum, despite selection of participants with a specific behavioral face memory impairment, this group did not exhibit an atypical electrophysiological response pre-training, at least as assessed by the N170 (see Webb et al. 2009).

Effects of training were first examined within the face training group. There were no significant effects of training on overall N170 amplitude, though there was a trend in the lateral posterior region for the response to faces to become more negative after training (overall: F(1,7) = 5.0, p = .06). There were no training-related changes in response to orientation (for main effect and interactions, all Fs < 1.7, ps > .23). There were no changes in latency (for all main effects and interactions: Fs < 1.4, ps > .28). For the house training group, the N170 lateral posterior amplitude and latency did not change in response to training, nor were there changes in response to houses based on orientation (for main effect and interactions: Fs(1,5) < 1.2, ps > .32), suggesting that house training does not produce an inversion effect for house stimuli. Amplitude and latency in response to faces did not change for the house group (for all main effects and interactions: Fs < 2.8, ps > .13), indicating that house training did not impact the participants’ responses to faces. Direct comparison of groups was consistent with these findings; there were no significant group effects for N170 amplitude or latency to faces.

N250 Average Amplitude

At baseline, the amplitude of the N250a and N250b at posterior-temporal leads was more negative at the temporal sites than posterior sites (T7/8 < TP9/10 < P9/10 < P7/8) (main effect of lead: Fs(3,13) > 27.1, ps < .001). The right hemisphere N250a (but not N250b) response to upright stimuli was more negative than the response to inverted stimuli (hemisphere × orientation interaction: F(1,13) = 4.6, p = .05). For the N250b but not the N250a, the response to faces was more negative than the response to houses (main effect of stimulus: F(1,13) = 7.4, p = .02) and the response to to-be-trained stimuli was more negative than untrained (main effect of stimuli: F(1,13) = 5.5, p = .04). Groups did not differ in N250 amplitude (main effect and interactions: Fs(1,13) < 4.1, ps > .06).

After face training, neither the N250a nor N250b changed, including the N250 response to trained versus untrained faces (all main effects and interactions: Fs < 2.4, ps > .15). For the house training group, there were several effects of training at the N250. First, the amplitude response to trained versus untrained houses differed based on hemisphere for both the N250a and N250b (trained × hemisphere interaction: Fs(1,7) > 5.4, ps ≤ .05). Before training, the overall N250a to houses was more negative in the right than left hemisphere, while after training the N250a amplitude remained more negative in the right than left for trained houses but was of similar amplitude in the right and left for untrained houses (Fig. 4d). In contrast, at the N250b, pre-training responses were equivalent in the right and left hemisphere, while the post-training N250b amplitude remained equivalent in the right and left hemisphere for trained images but became more negative in the left than right for the untrained houses (Fig. 4d). For both the N250a and N250b there was an interaction involving training, orientation, and lead (Fs > 4.6, ps < .04); however, none of the change scores was greater than zero (ts(7) = −2.6 to 1.8), suggesting that the interaction was driven more by topographical differences than by training-related effects. There were no changes in the N250a/b to faces in the house training group (main effect and interactions: Fs(1,6) < 1.9, ps > .17). Direct comparisons of the groups were also conducted for N250a/b amplitude. For face stimuli, there were no significant group interactions. However, for house stimuli the interaction involving training and orientation was reflected at the group level (Fs > 8.1, ps < .01).

Self-report of Training

There were no group differences in the proportion of participants who reported an impact of training on the way they viewed faces or in their social interactions. Of the participants who responded to these items, 64% reported no change in social functioning and 81% reported no change in the way they viewed faces. Some noted that they had not yet had the opportunity to put their skills to use because they had not met any new people since completing training.

Discussion

Both groups were able to reach criteria for expertise as defined in the literature, suggesting that individuals with ASD are able to become experts with social stimuli (i.e., faces) as well as non-social objects (i.e., houses) through focused experience. Face and house training were comparable in difficulty for adults with ASD. Thus, the main finding of Faja et al. (2008) that individuals with ASD become expert with subordinate level classification was replicated.

Next we assessed the ability of individuals in the face group to generalize these perceptual abilities to untrained faces. Expertise training in general was associated with better performance on standardized measures, with both face and house recognition improving. We speculate that improvements on standardized measures may be related to increased motivation and attention as a result of developing a relationship with the research group during training as well as practice effects with the tasks. While standardized tasks may be influenced by a number of factors related to the training, experimental measures that probe for the neurotypical biases in face processing suggest that face training specifically alters basic face processing ability in individuals with ASD. Specifically, current face training increased the behavioral inversion effect with unfamiliar faces. After training, the face group had relatively greater improvement in accuracy for whole faces in the upright condition, more difficulty with inverted faces, and increased efficiency responding to upright faces than the house group. The inversion effect is thought to result from a disruption of configural processing (e.g., Diamond and Carey 1986; Farah et al. 1995; Mondloch et al. 2002; Young et al. 1987), so these changes may represent increased awareness and encoding of configural information. Group differences in holistic processing of upright faces (i.e., measured by a processing advantage for recognizing features in the context of a face relative to isolated features) were not found as a result of training, perhaps due to the initial advantage for whole faces at baseline. There were also no significant training differences between the eye or mouth region when measured experimentally. The face training group had increased efficiency recognizing manipulations of second order relations within faces relative to the house group, particularly for small manipulations, which is consistent with Faja et al. 
(2008). In the current study, accuracy for detecting changes in second order relations did not change, suggesting increased efficiency was not at the cost of accuracy for the face group. Finally, although nearly two-thirds of participants did not notice a change in social function and over 80% believed their ability to recognize faces did not differ after training, the group receiving face training was significantly more likely to select either the photo of an unfamiliar person who interrupted the session or err by selecting the most similar distracter than the house group for whom errors were more evenly distributed. Thus, with the behavioral battery, it appears there are some specific improvements in specialized face processing ability related to the type of training received.

This was the first investigation to our knowledge that measured changes in brain response via ERP as a result of training individuals with ASD. Despite more typical baseline ERP results than expected for individuals with ASD, which were consistent with the larger STAART sample (Webb et al. 2009), specific changes in ERP amplitude suggest that changes in processing emerged after training. P1 responses to faces were reduced in individuals in the face training group, but not the house training group, which may be due to modulation of early attention to faces. Hypothetically, individuals who were trained on faces employed less neural effort to attend to faces presented briefly in an implicit task (e.g. Taylor et al. 2004). For the N170 component, this subgroup of participants with behavioral face memory impairments had relatively typical N170 responses to both faces versus houses and upright versus inverted faces. Thus, it is perhaps not surprising that there were no significant changes in response to faces as a result of face training. Unlike previous training paradigms (Scott et al. 2006, 2008), house training did not appear to alter the N170 to houses, nor was there an increased house inversion effect at the N170 for amplitude or latency. This may be due to the timing of post testing (discussed below), the meaningfulness of houses or the semantic descriptors used for house training, which were post-hoc classifications (general shape, number of stories). Houses do not seem to lend themselves to the semantic categorization, perceptual standardization, and social enthusiasm that one finds for cars or animals. Post-training responses at the N250a/b suggest familiarity with the specific training images did change electrophysiological response. Unlike previous studies with typical adults, which found the N250 amplitude can be modulated by familiarity, repetition, and overt recognition (e.g., Itier and Taylor 2004; Jemel et al. 2010; Schweinberger et al. 
2002, 2004; Tanaka et al. 2006) and increased for non-face stimuli trained at the subordinate level (Scott et al. 2006, 2008), the face training group did not show more negative N250 amplitude to the trained images. For the house training group, lateralization of the N250 for houses shifted after training suggesting the potential for changes in the neural circuitry contributing to this response. These changes in N250 for houses trained at the subordinate level measured 1–2 weeks after training are consistent with Scott et al. (2006, 2008), who reported that electrophysiological changes related to non-face expertise training were maintained 1 week later for the N250 component, but not the N170 component and that the changes detected immediately following both basic and subordinate level training were reflected in the N170 and N250, while only subordinate level training produced changes in the N250.

As an important caveat, the baseline N250 responses from both groups suggest that unintentional biases within our stimuli set resulted in differences in the trained versus untrained stimuli. Trained faces included young, middle and older individuals (to facilitate categorization during the training) while the untrained images were mainly young adults. Wiese et al. (2008) found that young neurotypical participants show more negative N250 amplitude to old faces; likewise responses to the trained images in the current study were of more negative amplitude. Second, neurotypical adults may show a less negative N250-like response to ‘other-race’ faces (e.g. Balas and Nelson 2010; Ito et al. 2004; Ito and Urland 2003; though see Stahl et al. 2008) and trained images included a larger proportion of Caucasian faces than untrained images (96% vs. 60%). Last, individuating experience with non-face categories may increase N250 negativity in neurotypical adults (Scott et al. 2006, 2008); the trained house stimuli contained houses from this region whilst the untrained stimuli contained a more geographically diverse set of images. Thus, the baseline results suggest that participants may have had relatively typical electrophysiological correlates for age, race, and architectural familiarity prior to training.

There are important limitations to the current study. First, although all eligible participants who were screened as part of the larger STAART study were invited to participate, the number of interested individuals was relatively small. Self-selection may have resulted in a group with more preserved skills (as suggested by the baseline ERP findings) or greater motivation. Second, it is possible that performance improvements may represent a general effect of increased motivation, practice, or regression toward the mean when retested. Participants had 6–9 STAART visits prior to training, and may have benefitted from familiarity with our research space and staff. Third, the larger group of individuals with ASD (Webb et al. 2009, 2010), as well as the specific subgroup who participated in this project, demonstrated relatively fewer electrophysiological disruptions than in previous reports of individuals with ASD (McPartland et al. 2004; O’Connor et al. 2007). Minor external manipulations to attention (use of a cross hair) during the ERP may have allowed individuals with ASD to recruit typical basic face processing circuits. If training facilitates a more typical pattern of attention, use of an external cue would mask these changes. Fourth, participants discontinued training immediately when they met criteria for expertise (as in Gauthier and Tarr 1997), which we believed would increase motivation during training. However, this introduced variability in the duration of exposure to training stimuli. Because training related changes in an early electrophysiological component (the N170) have been suggested to relate to exposure to a stimulus class rather than expertise at the subordinate level (Scott et al. 2006, 2008), the effect of this variability will be important to pursue in future studies. 
Last, because this study primarily focused on measuring the specific effects of face expertise training as a way to improve specialized face processing rather than an examination of the capacity of individuals with ASD to develop expertise in general, some tasks focused solely on face processing limiting examination of object expertise training effects. This also guided our decision to provide house training in a manner that very closely paralleled face training so that general procedures were closely controlled (e.g., contact with the trainer, use of explicit verbal instructions with a similar script rather than providing information about architectural style, identical matching tasks).

Despite these limitations, the results of the current study, along with Faja et al. (2008), suggest perceptual expertise training is a promising way to intervene and improve processing of trained stimuli. Importantly, specific effects of training with faces, both in brain and behavior, extend beyond general expertise training with objects and generalize to novel faces. Face training increased behavioral sensitivity to face inversion and the efficiency of responses to upright faces, and it is more likely to translate to accurate representation of faces encountered in real-world situations. Participants had insight about poor face processing efficiency, so it is of interest that face training uniquely produced changes to the P1 component, which is thought to index early visual attention to a stimulus, as well as reaction time to manipulations of second-order information and upright faces in general. In contrast, a clear pattern of expertise did not emerge for the house group, particularly for house versus face memory or for electrophysiological responding.

It should be noted that in a sample of adults with high functioning ASDs who were specifically selected for having impairments on standardized measures of face recognition and memory, the baseline performance for experimental behavioral measures and ERP responding resembled the pattern of typically developing adults in many ways. We speculate that for the face group, an existing framework for face processing had already developed prior to specialized training, albeit less consistently specialized for faces and more poorly developed than controls. The specific effects of face training led to improvements that produced a more consistent and typical pattern of brain and behavioral responses to faces.

The current investigation has several clinical implications. First, while standardized measures allow for comparison of performance with a common task, they generally do not require a specific type of perceptual processing. As a consequence, it is likely that individuals with ASD may have exhibited behavioral impairments and improvements for a variety of reasons. Second, the two expertise training conditions provide some information about the essential features of training. Use of face stimuli within this context produced changes specific to faces beyond effects of rule-based instruction and time with an examiner, while individuals receiving house training exhibited the opposite pattern of responding for experimental measures of face processing (e.g., reduced inversion effect and longer reaction times for face tasks). One other factor that appears to be important for individuals with ASD is adequate time for consolidation of perceptual information. An additional pilot participant combined all face training sessions into a 2-day period due to his travel distance. He did not meet expertise criteria in 8 h of training.

Future investigations will be critical to determine whether expertise training is effective with children for whom there may be greater plasticity and whether earlier development of expertise is more likely to improve the ability of individuals with ASD to use facial information in their social context. Participants commented that it was difficult to know whether training worked without meeting any new people, so follow up investigations to determine whether changes in brain response and behavior are lasting over time will also be important. While the current study suggests that the face group may generalize some effects of training to real faces, it may be most efficacious to place this type of training within a larger therapeutic setting such as social skills groups or job training. Another direction for future work will be to assess whether more specialized brain and behavior for faces translates to dynamic stimuli. At baseline, 60% of the participants described advantages to viewing faces under experimental conditions (i.e., static pictures) compared with real-world face-to-face interactions, such as being able to stare and not being simultaneously required to recognize emotions or listen to speech. One participant articulated a common theme saying, “Printed faces don’t move, so they’re easier to look at. It’s harder with a moving face, because there is less time and also they could see if I was staring.” Another noted that shyness contributes to this difference saying, “I’m shy, so I don’t look at faces in real life. It’s easier to look at pictures.” Thus, initial training in which faces are presented statically may be critical for individuals with ASD to focus their attention to key aspects of the face and to apply rule-based strategies for perception. But it will be important to introduce more complex and naturalistic stimuli, both during training and assessment, in order to better understand the initial challenges and progress made during training.

Acknowledgments

This project was supported by the NIMH STAART (Dawson/Aylward U54 MH066399), the NICHD Autism Center of Excellence (Webb P50 HD055782), NINDS Postdoctoral Award (Faja T32NS007413), Autism Speaks Postdoctoral Fellowship (Jones), the UW Mary Gates Fellowship (Kamara), Dr. Jessica Greenson and the STAART Diagnostic Core, and the UW Psychophysiology and Behavioral Systems Lab. The project is the sole responsibility of the authors and does not necessarily reflect the views of the funding agencies. Some stimuli were from the MacBrain Face Stimulus Set (John D. & Catherine T. MacArthur Foundation Research Network on Early Experience & Brain Development), Dr. Nancy Kanwisher (houses), and Dr. Martin Eimer (houses). We especially thank the participants and their families. These data were presented at the International Meeting for Autism Research, London, UK, in May 2008.

Contributor Information

Susan Faja, Email: susfaja@u.washington.edu, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA. Department of Psychology, University of Washington, Seattle, WA, USA. The Children’s Hospital of Philadelphia, Philadelphia, PA, USA.

Sara Jane Webb, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA. Department of Psychology, University of Washington, Seattle, WA, USA.

Emily Jones, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA.

Kristen Merkle, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA. Institute of Imaging Science, Vanderbilt University, Nashville, TN, USA.

Dana Kamara, Department of Psychology, University of Washington, Seattle, WA, USA.

Joshua Bavaro, Department of Psychology, University of Washington, Seattle, WA, USA.

Elizabeth Aylward, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA. Department of Radiology, University of Washington, Seattle, WA, USA.

Geraldine Dawson, Department of Psychiatry & Behavioral Sciences, University of Washington, CHDD Box 357920, Seattle, WA 98195, USA. Department of Psychology, University of Washington, Seattle, WA, USA. Autism Speaks, New York, NY, USA. Department of Psychiatry, University of North Carolina at Chapel Hill, Chapel Hill, NC, USA.

References

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4. Washington, DC: Author; 1994. [Google Scholar]

- Balas B, Nelson CA. The role of face shape and pigmentation in other-race face perception: An electrophysiological study. Neuropsychologia. 2010;48:498–506. doi: 10.1016/j.neuropsychologia.2009.10.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–565. doi: 10.1162/jocn.1996.8.6.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Benton AL, Sivan AB, de Hamsher KS, Varney NR, Spreen O. Facial recognition: Stimulus and multiple choice pictures. New York, NY: Oxford University Press; 1983. [Google Scholar]

- Bölte S, Feineis-Matthews S, Leber S, Dierks T, Hubl D, Poustka F. The development and evaluation of a computer-based program to test and to teach the recognition of facial affect. International Journal of Circumpolar Health. 2002;61:61–68. doi: 10.3402/ijch.v61i0.17503. [DOI] [PubMed] [Google Scholar]

- Bölte S, Hubl D, Feineis-Matthews S, Prvulovic D, Dierks T, Poustka F. Facial affect recognition training in autism: Can we animate the fusiform gyrus? Behavioral Neuroscience. 2006;120:211–216. doi: 10.1037/0735-7044.120.1.211. [DOI] [PubMed] [Google Scholar]

- Boutet I, Faubert J. Recognition of faces and complex objects in younger and older adults. Memory and Cognition. 2006;34:854–864. doi: 10.3758/bf03193432. [DOI] [PubMed] [Google Scholar]

- Deruelle C, Rondan C, Gepner B, Tardif C. Spatial frequency and face processing in children with autism and Asperger syndrome. Journal of Autism and Developmental Disorders. 2004;34:199–210. doi: 10.1023/b:jadd.0000022610.09668.4c. [DOI] [PubMed] [Google Scholar]

- Diamond R, Carey S. Why faces are and are not special: An effect of expertise. Journal of Experimental Psychology: General. 1986;115:107–117. doi: 10.1037//0096-3445.115.2.107. [DOI] [PubMed] [Google Scholar]

- Faja S, Aylward E, Bernier R, Dawson G. Becoming a face expert: A computerized face-training program. Developmental Neuropsychology. 2008;33:1–24. doi: 10.1080/87565640701729573. [DOI] [PubMed] [Google Scholar]

- Faja S, Webb SJ, Merkle K, Aylward E, Dawson G. Brief report: Face configuration accuracy and processing speed among adults with high-functioning autism spectrum disorders. Journal of Autism and Developmental Disorders. 2009;39:532–538. doi: 10.1007/s10803-008-0635-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farah MJ, Tanaka JW, Drain HM. What causes the face inversion effect? Journal of Experimental Psychology; Human Perception and Performance. 1995;21:628–634. doi: 10.1037//0096-1523.21.3.628. [DOI] [PubMed] [Google Scholar]

- Freire A, Lee K. Face recognition in 4- to 7-year olds: Processing of configural, featural, and paraphernalia information. Journal of Experimental Child Psychology. 2001;80:347–371. doi: 10.1006/jecp.2001.2639. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Tarr MJ. Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Research. 1997;37:1673–1682. doi: 10.1016/s0042-6989(96)00286-6. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Williams P, Tarr MJ, Tanaka J. Training Greeble experts: A framework for studying expert object recognition processes. Vision Research. 1998;38:2401–2428. doi: 10.1016/s0042-6989(97)00442-2. [DOI] [PubMed] [Google Scholar]

- Golan O, Baron-Cohen S. Systemizing empathy: teaching adults with Asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Development and Psychopathology. 2006;18:591–617. doi: 10.1017/S0954579406060305. [DOI] [PubMed] [Google Scholar]

- Golan O, Baron-Cohen S, Ashwin E, Granader Y, McClintock S, Day K, et al. Enhancing emotion recognition in children with autism spectrum conditions: An intervention using animated vehicles with real emotional faces. Journal of Autism and Developmental Disorders. 2010;40:269–279. doi: 10.1007/s10803-009-0862-9. [DOI] [PubMed] [Google Scholar]

- Hobson RP, Ouston J, Lee A. What’s in a face? The case of autism. The British Journal of Psychology. 1988;79:441–453. doi: 10.1111/j.2044-8295.1988.tb02745.x. [DOI] [PubMed] [Google Scholar]

- Itier RJ, Taylor MJ. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: A repetition study using ERPs. Neuroimage. 2002;15:353–372. doi: 10.1006/nimg.2001.0982. [DOI] [PubMed] [Google Scholar]

- Itier RH, Taylor MJ. Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. Neuroimage. 2004;21:1518–1532. doi: 10.1016/j.neuroimage.2003.12.016. [DOI] [PubMed] [Google Scholar]

- Ito TA, Urland GR. Race and gender on the brain: Electrocortical measures of attention to the race and gender of multiply categorizable individuals. Journal of Personality and Social Psychology. 2003;85:616–626. doi: 10.1037/0022-3514.85.4.616. [DOI] [PubMed] [Google Scholar]

- Ito TA, Thompson E, Cacioppo JT. Tracking the timecourse of social perception: The effects of racial cues on event-related brain potentials. Personality and Social Psychology Bulletin. 2004;30:1267–1280. doi: 10.1177/0146167204264335. [DOI] [PubMed] [Google Scholar]

- Jemel B, Schuller AM, Goffaux V. Characterizing the spatio-temporal dynamics of the neural events occurring prior to and up to overt recognition of famous faces. Journal of Cognitive Neuroscience. 2010;22:2289–2305. doi: 10.1162/jocn.2009.21320. [DOI] [PubMed] [Google Scholar]

- Jones W, Carr K, Klin A. Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2-year-old toddlers with autism spectrum disorder. Archives of General Psychiatry. 2008;65:946–954. doi: 10.1001/archpsyc.65.8.946. [DOI] [PubMed] [Google Scholar]

- Joseph RM, Tanaka J. Holistic and part-based face recognition in children with autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 2003;44:529–542. doi: 10.1111/1469-7610.00142.

- Kleinhans NM, Richards T, Sterling L, Stegbauer KC, Mahurin R, Johnson LC, et al. Abnormal functional connectivity in autism spectrum disorders during face processing. Brain. 2008;131:1000–1012. doi: 10.1093/brain/awm334.

- Kleinhans NM, Johnson LC, Richards T, Mahurin R, Greenson J, Dawson G, et al. Reduced neural habituation in the amygdala and social impairments in autism spectrum disorders. American Journal of Psychiatry. 2009;166:467–475. doi: 10.1176/appi.ajp.2008.07101681.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Klinger LG, Dawson G. Prototype formation in autism. Development and Psychopathology. 2001;13:111–124. doi: 10.1017/s0954579401001080.

- Lahaie A, Mottron L, Arguin M, Berthiaume C, Jemel B, Saumier D. Face perception in high-functioning autistic adults: Evidence for superior processing of face parts, not for a configural face-processing deficit. Neuropsychology. 2006;20:30–41. doi: 10.1037/0894-4105.20.1.30.

- Langdell T. Recognition of faces: An approach to the study of autism. Journal of Child Psychology and Psychiatry and Allied Disciplines. 1978;19:255–268. doi: 10.1111/j.1469-7610.1978.tb00468.x.

- Lord C, Rutter M, LeCouteur A. Autism Diagnostic Interview-Revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders. 1994;24:659–685. doi: 10.1007/BF02172145.

- Lord C, Risi S, Lambrecht L, Cook EH, Jr, Leventhal BL, DiLavore PC, et al. The Autism Diagnostic Observation Schedule–Generic: A standard measure of social and communication deficits associated with the spectrum of autism. Journal of Autism and Developmental Disorders. 2000;30:205–223.

- McKone E, Boyer BL. Sensitivity of 4-year-olds to featural and second-order relational changes in face distinctiveness. Journal of Experimental Child Psychology. 2006;94:134–162. doi: 10.1016/j.jecp.2006.01.001.

- McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? Trends in Cognitive Sciences. 2007;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- McPartland J, Dawson G, Webb S, Panagiotides H, Carver L. Event-related brain potentials reveal anomalies in temporal processing of faces in autism spectrum disorder. Journal of Child Psychology and Psychiatry. 2004;45:1235–1245. doi: 10.1111/j.1469-7610.2004.00318.x.

- Mondloch CJ, Le Grand R, Maurer D. Configural face processing develops more slowly than featural face processing. Perception. 2002;31:553–566. doi: 10.1068/p3339.

- Nishimura M, Rutherford MD, Maurer D. Converging evidence of configural processing of faces in high-functioning adults with autism spectrum disorders. Visual Cognition. 2008;16:859–891.

- O’Connor K, Hamm JP, Kirk IJ. Neurophysiological responses to face, facial regions and objects in adults with Asperger’s syndrome: An ERP investigation. International Journal of Psychophysiology. 2007;63:283–293. doi: 10.1016/j.ijpsycho.2006.12.001.

- Pellicano E, Rhodes G. Holistic processing of faces in preschool children and adults. Psychological Science. 2003;14:618–622. doi: 10.1046/j.0956-7976.2003.psci_1474.x.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Rose FE, Lincoln AJ, Lai Z, Ene M, Searcy YM, Bellugi U. Orientation and affective expression effects on face recognition in Williams syndrome and autism. Journal of Autism and Developmental Disorders. 2007;37:513–522. doi: 10.1007/s10803-006-0200-4.

- Rossion B, Delvenne JF, Debatisse D, Goffaux V, Bruyer R, Crommelinck M, et al. Spatio-temporal localization of the face inversion effect: an event-related potentials study. Biological Psychology. 1999;50:173–189. doi: 10.1016/s0301-0511(99)00013-7.

- Rossion B, Gauthier I, Goffaux V, Tarr MJ, Crommelinck M. Expertise training with novel objects leads to left-lateralized facelike electrophysiological responses. Psychological Science. 2002;13:250–257. doi: 10.1111/1467-9280.00446.

- Rutherford MD, McIntosh DN. Rules versus prototype matching: strategies of perception of emotional facial expressions in the autism spectrum. Journal of Autism and Developmental Disorders. 2007;37:187–196. doi: 10.1007/s10803-006-0151-9.

- Schweinberger SR, Pickering EC, Jentzsch I, Burton AM, Kaufmann JM. Event-related brain potential evidence for a response of inferior temporal cortex to familiar face repetitions. Brain Research Cognitive Brain Research. 2002;14:398–409. doi: 10.1016/s0926-6410(02)00142-8.

- Schweinberger SR, Huddy V, Burton AM. N250r: A face-selective brain response to stimulus repetitions. NeuroReport. 2004;15:1501–1505. doi: 10.1097/01.wnr.0000131675.00319.42.

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. A reevaluation of the electrophysiological correlates of expert object processing. Journal of Cognitive Neuroscience. 2006;18:1453–1465. doi: 10.1162/jocn.2006.18.9.1453.

- Scott LS, Tanaka JW, Sheinberg DL, Curran T. The role of category learning in the acquisition and retention of perceptual expertise: a behavioral and neurophysiological study. Brain Research. 2008;1210:204–215. doi: 10.1016/j.brainres.2008.02.054.

- Silver M, Oakes P. Evaluation of a new computer intervention to teach people with autism or Asperger syndrome to recognize and predict emotions in others. Autism. 2001;5:299–316. doi: 10.1177/1362361301005003007.

- Stahl J, Wiese H, Schweinberger SR. Expertise and own-race bias in face processing: An event-related potential study. NeuroReport. 2008;19:583–587. doi: 10.1097/WNR.0b013e3282f97b4d.

- Sterling L, Dawson G, Webb SJ, Murias M, Munson J, Panagiotides H, et al. The role of face familiarity in eye tracking of faces by individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders. 2008;38:1666–1675. doi: 10.1007/s10803-008-0550-1.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Quarterly Journal of Experimental Psychology. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Sengco JA. Features and their configuration in face recognition. Memory & Cognition. 1997;25:583–592. doi: 10.3758/bf03211301.

- Tanaka JW, Taylor M. Object categories and expertise: Is the basic level in the eye of the beholder? Cognitive Psychology. 1991;23:457–482.

- Tanaka JW, Curran T, Porterfield AL, Collins D. Activation of preexisting and acquired face representations: the N250 event-related potential as an index of face familiarity. Journal of Cognitive Neuroscience. 2006;18:1488–1497. doi: 10.1162/jocn.2006.18.9.1488.

- Tanaka JW, Wolf JM, Klaiman C, Koenig K, Cockburn J, Herlihy L, et al. Using computerized games to teach face recognition skills to children with autism spectrum disorder: The Let’s Face It! program. Journal of Child Psychology and Psychiatry. 2010;51:944–952. doi: 10.1111/j.1469-7610.2010.02258.x.

- Taylor MJ, Batty M, Itier RJ. The faces of development: A review of early face processing over childhood. Journal of Cognitive Neuroscience. 2004;16:1426–1442. doi: 10.1162/0898929042304732.

- Teunisse JP, de Gelder B. Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain and Cognition. 2003;52:285–294. doi: 10.1016/s0278-2626(03)00042-3.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168:242–249. doi: 10.1016/j.psychres.2008.05.006.

- Wallace S, Coleman M, Bailey A. Face and object processing in autism spectrum disorders. Autism Research. 2008;1:43–51. doi: 10.1002/aur.7.

- Webb SJ, Jones EJH, Merkle K, Murias M, Greenson J, Richards T, et al. Response to familiar faces, newly familiar faces, and novel faces as assessed by ERPs is intact in adults with autism spectrum disorders. International Journal of Psychophysiology. 2010a;77:106–117. doi: 10.1016/j.ijpsycho.2010.04.011.

- Webb SJ, Jones EJH, Merkle K, Nankung J, Toth K, Greenson J, et al. Toddlers with elevated autism symptoms show slowed habituation to faces. Child Neuropsychology. 2010b;16:255–278. doi: 10.1080/09297041003601454.

- Webb SJ, Merkle K, Murias M, Richards T, Aylward E, Dawson G. ERP responses differentiate inverted but not upright face processing in adults with ASD. Social Cognitive and Affective Neuroscience. 2009. doi: 10.1093/scan/nsp002.

- Wechsler D. Wechsler adult intelligence scale. 3rd ed. San Antonio, TX: The Psychological Corporation; 1997a.

- Wechsler D. Wechsler memory scale. 3rd ed. San Antonio, TX: The Psychological Corporation; 1997b.

- Wiese H, Schweinberger SR, Hansen K. The age of the beholder: ERP evidence of an own-age bias in face memory. Neuropsychologia. 2008;46:2973–2985. doi: 10.1016/j.neuropsychologia.2008.06.007.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–145.

- Young AW, Hellawell D, Hay DC. Configural information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.