Clinical trial workload has been successfully measured and used to guide staffing by the Wichita Community Clinical Oncology Program. Further research is needed regarding its applicability to other research programs.

Abstract

Purpose:

The ability to quantify clinical trial–associated workload can have a significant impact on the efficiency and success of a research organization. However, methods to effectively estimate the number of research staff needed for clinical trial recruitment, maintenance, compliance, and follow-up are lacking. To address this need, the Wichita Community Clinical Oncology Program (WCCOP) developed and implemented an acuity-based workload assessment tool to facilitate assessment and balancing of workload among its research nursing staff.

Methods:

An acuity-based measurement tool was developed, assigning acuity scores for individual clinical trials using six trial-related determinants. Using trial acuity scores and numbers of patients per trial, acuity scores for individual research nursing staff were then calculated and compared on a monthly basis.

Results:

During the 11 years that data were collected, acuity scores increased by 65% to 181%. However, during this same period, WCCOP was able to decrease individual research nurse staff full-time equivalent (FTE) acuity scores and number of patients per FTE. These trends reflect the use of the acuity-based measurement tool to determine actual workload and use of the acuity data to direct hiring decisions.

Conclusion:

Clinical trial workload has been successfully measured and used to guide staffing by one community clinical oncology program. Further research is needed regarding its applicability to other research programs.

Introduction

Coordination of an efficient, successful clinical research program can be challenging in light of increased regulatory burden, fewer available trials, increasingly restrictive inclusion and exclusion criteria, and fewer dollars to manage the work. Compounding these challenges is the lack of resources for quantifying clinical trial–associated workload to help guide staffing and budgetary planning. Research investigators and managers are regularly faced with questions about research staffing needs to support clinical trial recruitment, maintenance, compliance, and follow-up. The National Cancer Institute (NCI) has historically based funding allocations for cooperative group accrual on a 1992 Cancer Clinical Investigations Review Committee algorithm of 1.0 full-time equivalents (FTEs) per 40 credits or registrations [1]. This staffing and credit assignment formula does not account for differences in individual trial complexity and associated work effort.

Cancer clinical trials continue to increase in complexity. An analysis of 10,038 protocols conducted from 1999 to 2005 found a 6.5% annual increase in the total number of procedures required per protocol, such as laboratory tests, imaging, examinations, and office visits, along with an 8.7% annual increase in the frequency of procedures [2]. In this same time period, the median number of total procedures per protocol increased from 89.8 to 150.5, and the median number of case report form pages increased from 55 to 180 [2]. The cancer clinical trials of today also require involvement of multiple disciplines and intensive recruitment planning. In addition, the requirements for biospecimen submission are expanding, and the likelihood of toxicity with subsequent adverse event reporting requirements has increased.

Development of a tool for measuring clinical trial workload using objective metrics has the potential to increase the productivity and efficiency of clinical research programs. Such a tool would help quantify actual work needs and provide guidance to investigators and managers in budgeting and allocating personnel. The ability to more effectively balance work among research staff also has the potential to positively affect job satisfaction, resulting in reduced staff turnover and improved quality of clinical trial data. With these concerns in mind, one community-based research program decided to investigate best methods for measuring and predicting clinical trial–associated workload.

The Wichita Community Clinical Oncology Program (WCCOP), an NCI grant–funded statewide program located in Wichita, Kansas, was initially established in 1983 with the goal of engaging community physicians in NCI clinical trial programs, thereby facilitating the incorporation of research results into practice. Since its inception, WCCOP has successfully enrolled more than 14,000 patients and participants onto cancer treatment, cancer control, and prevention-related trials, averaging approximately 850 registrations per year between the years 2000 and 2010.

Within WCCOP, the research nurse plays a major role in the clinical trial process. The research nurses are responsible for identifying eligible trial participants, assisting the physician investigator with the consent process, assuring that eligibility requirements and prestudy testing measures are met, registering the patient to the trial, drafting treatment- and study-related orders (with physician approval and signoff), requesting trial-related follow-up tests and appointments, providing study calendars and follow-up information to the patient and family, evaluating the patient before the physician at each visit, documenting toxicities experienced (grade and attribution assignment), and determining appropriate dosing adjustment per protocol.

The non-nurse clinical research associate (CRA) also fulfills a vital role within the WCCOP clinical trial process. CRAs are assigned to work with specific research nurses, forming a team. CRAs are responsible for non-nursing activities such as electronically logging and tracking patient registrations, submitting data to the appropriate research organization, answering data-related queries, requesting submission of pathology and radiology materials, ordering study-specific laboratory kits, maintaining patient research files, and completing follow-up on patients. Other WCCOP research staff include a quality assurance specialist, regulatory specialists, and secretarial support staff.

From 1993 to 1998, WCCOP management became increasingly aware of an unequal distribution of workload and lack of accountability for research-related activities among its research nurses. Data were collected about numbers of patients per research nurse. However, neither trial-related workload nor complexity was taken into account. For example, management of a patient enrolled onto an acute leukemia clinical trial required a higher degree of work effort than the management of a patient enrolled onto an adjuvant treatment trial. The number of treatment- and disease-related toxicities was higher, laboratory testing was more frequent and complex, and the number and complexity of case report forms were greater in the acute leukemia trial. This often required daily capture of data, compared with monthly in the typical adjuvant treatment trial. WCCOP management determined that a process to measure clinical trial–associated workload was needed and began the process of developing a measurement tool.

Validated tools for the measurement of clinical trial workload did not exist. However, a literature review revealed publications that discussed productivity and workload measurements within inpatient and outpatient ambulatory care nursing units [3–6]. Patient classification systems, which use a classification instrument or tool, categorize patients into quantifiable care groups based on nursing care effort. These classifications are then used to determine nursing workload and staffing assignments. WCCOP management opted to evaluate a similar process of assigning acuity levels or numeric weights to cancer clinical trials reflecting complexity and intensity of care needed.

Methods

Following the concept of the factor classification tool [6], WCCOP management evaluated and categorized all clinical trials (open and closed to accrual) and the patients enrolled onto these trials.

Patient Classifications

All patients were classified into one of two categories according to their current trial status: on study or off study. The on-study category was divided into two subgroups: on active treatment, defined as currently receiving active treatment as delineated in the trial, or off treatment, where participants are no longer receiving protocol treatment but are still being observed per protocol-defined criteria. The second category—off study—included participants who were no longer receiving required protocol treatment and no longer being observed per protocol-defined criteria (ie, patients whose disease had progressed). Because newly enrolled patients were initiating protocol therapy, they were automatically classified as on active treatment and therefore on study. The combinations of on- and off-study categories reflected the total number of patients being monitored and therefore total overall workload.

Protocol Classifications

Protocols were classified within the WCCOP computer system as either treatment- or cancer control–focused trials. Individual trials were then ranked by the WCCOP manager based on six workload-related determinants: one, complexity of treatment; two, trial-specific laboratory and testing requirements; three, treatment toxicity potential; four, complexity and number of data forms required; five, degree of coordination required (involvement of ancillary departments, outside offices/sites, and/or disciplines); and six, number of trial random assignments or steps. Trials were then assigned a score according to their estimated workload using a range of 1 to 4:

1. Observational/registry trial

2. Oral agents with minimal toxicity, tests/procedures considered standard of care, data forms requiring basic information easily captured from medical records, requires minimal coordination with outside and/or ancillary staff, nonrandomized or single random assignment (included laboratory studies, the majority of cancer control symptom management trials, and hormone therapy trials)

3. Chemotherapy and/or radiation therapy regimen, increased toxicity potential when compared with a trial rated as 2, involves non–standard of care research tests/procedures, data forms more complex and higher in number, requires coordination with one to two other disciplines/ancillary departments, single time point, randomized phase II or III (included the majority of randomized phase II and III treatment trials)

4. Complex, multiple drug regimens, high degree of toxicity potential, involves multiple non–standard of care research tests/procedures, data forms more complex, daily to weekly data collection required and data items higher in number, requires coordination with ≥ two disciplines/ancillary departments, multiple random assignments and/or steps (included primarily bone marrow transplantation, leukemia, lymphoblastic lymphoma, myeloma trials)

Calculating Acuity Scores

On a monthly basis, lists of patients by research nurse were generated by trial type (treatment and cancer control) and by patient category (on active treatment, off treatment, or off study). Individual research nurse workload scores were calculated by multiplying the number of patients per trial by the acuity score assigned to the trial. For example, if a trial were assigned a score of 3, and the nurse had two patients in this study, the acuity score would be 6. Scores for all protocols, by number of patients, were then added together for the total acuity score of an individual nurse. As a means of accounting for part-time staff, the total acuity score was divided by the number of days the research nurse worked per week (Appendix Table A1, online only).
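The calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the WCCOP system itself: the `TrialLoad` class and `nurse_acuity` function are hypothetical names introduced here, and the input values are the ones shown in Appendix Table A1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialLoad:
    """One trial in a research nurse's monthly patient list (hypothetical structure)."""
    study: str     # protocol identifier
    acuity: int    # trial acuity score assigned by the manager (1 to 4)
    patients: int  # number of this nurse's patients on the trial


def nurse_acuity(loads, days_per_week):
    """Sum (acuity score x number of patients) over all trials,
    then divide by the number of days worked per week."""
    if days_per_week <= 0:
        raise ValueError("days_per_week must be positive")
    total = sum(load.acuity * load.patients for load in loads)
    return total / days_per_week


# Values taken from Appendix Table A1 (on active treatment, one research nurse).
loads = [
    TrialLoad("E1496", 3, 2),
    TrialLoad("E2997", 3, 4),
    TrialLoad("E3999", 4, 2),
    TrialLoad("N9831", 3, 5),
]

score = nurse_acuity(loads, days_per_week=5)
print(round(score, 1))  # 8.2
```

Dividing by days worked per week puts part-time and full-time nurses on the same scale, so a nurse working 3 days with the same patient list would receive a proportionally higher score.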

Research nurses received their individual scores as well as the group average so that they could see where they ranked in comparison with their peers. Individual scores were kept confidential and not shared among staff members. Acuity scores were calculated and reported on a monthly basis over a period of 11 years (1999 to 2010) according to the NCI CCOP grant year (June 1 to May 31).

Results

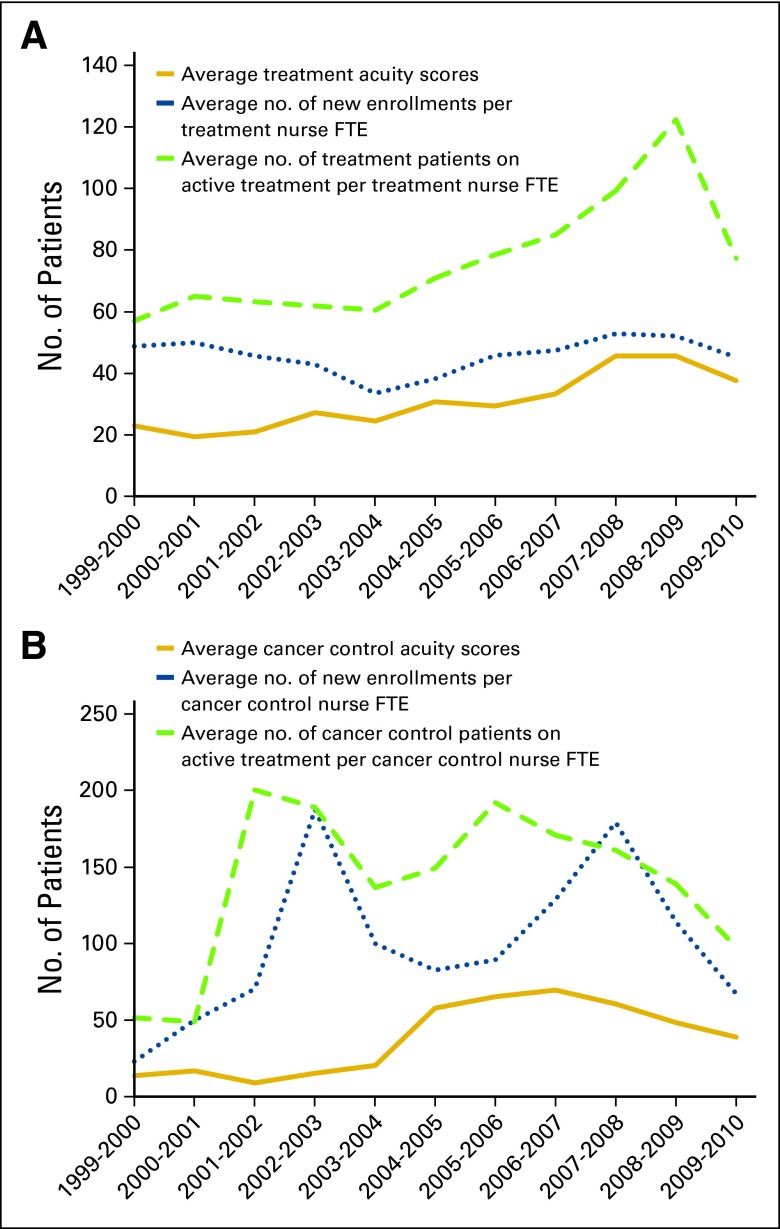

Review of the acuity score data across the 11-year timespan confirmed that clinical trial complexity has increased. Average annual acuity scores increased for both treatment- and cancer control–focused trials. Acuity scores for treatment trials increased from an annual average of 22.8 in fiscal year (FY) 1999 to 37.6 in FY 2009 (65% increase), and cancer control acuity scores increased from 13.8 to 38.8 (181% increase) for the same time periods (Table 1). The 11-year average acuity score for treatment trials was 30.6, with annual scores ranging from 19.3 in FY 2000 to 45.6 in FY 2008. Annual acuity scores for cancer control trials varied more widely, from a low of 8.97 in FY 2001 to a high of 69.8 in FY 2006, with an 11-year average annual score of 37.8. Off-treatment acuity scores also increased from 14.2 in FY 1999 to 39.3 in FY 2009 (178% increase), with an 11-year average of 15.9.
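As a quick check on the arithmetic, the percentage increases for treatment and cancer control trials can be reproduced from the FY 1999 and FY 2009 annual averages. The helper name `percent_increase` is introduced here purely for illustration.

```python
def percent_increase(start, end):
    """Relative change from start to end, expressed as a percentage."""
    return (end - start) / start * 100


# FY 1999 and FY 2009 annual average acuity scores reported in the text.
treatment = percent_increase(22.8, 37.6)
cancer_control = percent_increase(13.8, 38.8)

print(round(treatment), round(cancer_control))  # 65 181
```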

Table 1.

Annual Average Acuity Scores by On- and Off-Study Trial* Status Categories

| Trial Status | FY 1999 | FY 2009 | Low Score | Low Year | High Score | High Year | 11-Year Average |
|---|---|---|---|---|---|---|---|
| Treatment | 22.8 | 37.6 | 19.3 | 2000 | 45.6 | 2008 | 30.6 |
| Cancer control | 13.8 | 38.8 | 8.97 | 2001 | 69.8 | 2006 | 37.8 |
| Off study | 14.2 | 39.3 | 10.4 | 2001 | 39.3 | 2009 | 15.9 |

Abbreviation: FY, fiscal year.

*Includes treatment- and cancer control–focused trials.

The number of new patient enrollments and the number of patients on and off study increased over the 11-year timespan (Table 2). However, as a result of monitoring workload and adjusting staffing needs based on acuity scores, the numbers of patients per research nurse FTE for new enrollments and patients on study decreased. The number of patients categorized as off study only slightly increased.

Table 2.

Annual Average Numbers of Patients by Patient Classification

| No. of Patients | FY 1999 | FY 2009 | Low | Low Year | High | High Year | 11-Year Average |
|---|---|---|---|---|---|---|---|
| Newly enrolled | | | | | | | |
| Total | 430 | 653 | 430 | 1999 | 1,424 | 2007 | 872 |
| Per research nurse FTE | 72 | 54 | 51 | 2001 | 95 | 2007 | 69 |
| On study | | | | | | | |
| Total | 652 | 1,031 | 652 | 1999 | 1,798 | 2007 | 1,342 |
| Per research nurse FTE | 109 | 86 | 83 | 2003 | 125 | 2008 | 103 |
| Off study | | | | | | | |
| Total | 672 | 1,978 | 672 | 1999 | 1,978 | 2009 | 1,187 |
| Per research nurse FTE | 112 | 165 | 72 | 2003 | 165 | 2009 | 97 |

Abbreviations: FTE, full-time equivalent; FY, fiscal year.

Discussion

The collection of measurable clinical trial workload metrics had multiple benefits over the 11-year period. The per research nurse FTE reductions in workload, including decreased numbers of new enrollments and on-study patients, along with stable numbers of off-study patients, were a direct consequence of consistently monitoring workload distribution and adjusting staffing accordingly. Monthly monitoring of individual as well as group average acuity scores provided management with the ability to balance workload among staff. If the scores of one research nurse were consistently below the group average, he or she was encouraged to assist another research nurse whose scores were above average by taking on more new enrollments until their acuity scores became more aligned. The WCCOP protocol acuity tool (WPAT) provided objective data to institution administration that validated subjective feedback from staff about the need for additional staff. The 11 years of data collection and evaluation resulted in a determination that a research nurse group average acuity score between 35.0 and 40.0 generally validated the need to consider an increase in staff. Monitoring acuity scores and quality metrics also allowed management the opportunity to mentor and educate staff who had difficulty managing workloads similar to those of their peers, resulting in staff either taking a higher acuity patient load or considering other employment.
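The monthly review described above might be sketched as follows. This is only an illustration under stated assumptions: the nurse labels and scores are invented, the function name `review_month` is hypothetical, and treating the lower bound of the reported 35.0 to 40.0 range as the trigger is a simplification. The source also refers to scores that were consistently above or below average, whereas this sketch looks at a single month.

```python
from statistics import mean

# Assumption: use the lower bound of the reported 35.0-40.0 range as the trigger.
STAFFING_REVIEW_THRESHOLD = 35.0


def review_month(scores):
    """Compare each nurse's acuity score with the group average for one month.

    scores: dict mapping a nurse label to that nurse's total acuity score.
    """
    group_average = mean(scores.values())
    return {
        "group_average": group_average,
        # Candidates to take on more new enrollments.
        "below_average": sorted(n for n, s in scores.items() if s < group_average),
        # Candidates to receive assistance.
        "above_average": sorted(n for n, s in scores.items() if s > group_average),
        "consider_additional_staff": group_average >= STAFFING_REVIEW_THRESHOLD,
    }


# Invented example scores, for illustration only.
scores = {"nurse_a": 30.0, "nurse_b": 36.0, "nurse_c": 42.0}
report = review_month(scores)
print(report)
```

In practice the decision rested on management judgment informed by these numbers, so a flag like `consider_additional_staff` is best read as a prompt for review rather than an automatic rule.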

New enrollment accrual rates fluctuated over the 11-year timeframe based primarily on trial availability (Fig 1). This fluctuation was more prominent in cancer control than in treatment trials. Before the use of the WPAT, WCCOP management hired additional staff based on subjective assessments that workload had increased related to increased accrual. However, because the acuity scores were not clearly correlated with new patient enrollment, acuity was found to be a better gauge for justification of increased staffing.

Figure 1.

Number of patients in relation to acuity scores by (A) treatment- and (B) cancer control–focused trials. FTE, full-time equivalent. (*) Acuity scores are based on the sum of monthly patient numbers multiplied by the protocol-specific acuity score.

Since the WPAT was implemented in 1999, the literature addressing clinical trial–associated workload has increased. However, a validated tool has yet to be developed and implemented. Although others have begun the process of developing workload measurement tools [7–11], the WPAT is the only tool to our knowledge supported by 11 years of workload assessment data. In addition, many of the other tools employ complex scoring formulas or ratings that include measurements of time associated with individual trial-related tasks and/or rankings similar to the WPAT but with more detailed ranking options [8–12]. The WPAT was developed with an emphasis on simplicity, reproducibility, and long-term usability.

The WPAT has only been used by one research program, and therefore, it and the findings reported may not be generalizable to other community or academic settings. In addition, the tool requires the capability to capture patient and trial information electronically. Furthermore, the accuracy of the data depends on individual research nurses updating the trial status of their patients in the computer tracking system in a timely and accurate manner. For instance, if a research nurse does not update a patient's status from on study to off study, the acuity scores and numbers of patients will be overstated.

The WPAT was developed with the assumption that the greatest amount of work associated with clinical trial management occurs at the patient level. The acuity score is therefore a reflection of the individual research nurse workload and not the overall research team. It is clear, however, that a successful research program requires the involvement of a wider variety of research staff to accomplish all clinical trial–related activities. Future tools should therefore account for additional clinical trial–related activities, such as regulatory compliance, study startup, screening, and recruitment efforts.

Although they were helpful in monitoring workload and adjusting staffing, WPAT data have not yet been used by the WCCOP in determining whether to open or activate a new clinical trial. This decision has been generally based on whether the patient population, treatment, and study design of a trial fit with the current priorities and needs of the WCCOP investigators. Future research efforts should also examine the use of workload assessment as a prospective consideration when evaluating new clinical trials for possible activation.

Acuity scores were assigned by the WCCOP manager, which most likely contributed to a higher degree of consistency. Before the tool can be validated or used by other research programs, the acuity scoring levels as well as protocol determinants need to be better defined to facilitate consistent interpretation and application across research sites and among raters.

In conclusion, the WPAT has been successfully used to measure clinical trial–associated workload and inform staffing decisions at a CCOP. Research nurse workload and staffing decisions have historically been based on subjective observations provided by these staff and managers. The WPAT provides objective data that can be used to further explore and validate these subjective data.

Further testing will be needed to evaluate its applicability and utility within other research programs. Standardizing the measurement of clinical trial–associated workload across multiple research sites could produce much-needed benchmarking data to provide guidance about appropriate clinical research program staffing. Data-driven decisions about staffing should facilitate appropriate allocation of individual research staff workloads. This could have a positive impact on job satisfaction, resulting in the retention of more seasoned research staff with the skills to improve accrual and ensure protocol compliance and collection of quality data.

Acknowledgment

The Wichita Community Clinical Oncology Program is supported by Grant No. U10 CA035431 from the National Cancer Institute. Presented in part at the National Cancer Institute Community Clinical Oncology Program Meeting, Bethesda, MD, September 24-25, 2012; American Society of Clinical Oncology Community Research Forum, Alexandria, VA, September 14, 2012; National Surgical Adjuvant Breast and Bowel Project Cancer Risk Assessment Workshop Breakout Session, Toronto, Ontario, Canada, June 23, 1999; and the Pre-Congress Session of the Oncology Nursing Society, Denver, CO, April 30, 2003.

Appendix

Table A1.

Acuity Calculation Example: On Active Treatment Acuity for Individual Research Nurse

| Study | Acuity Score | | No. of Patients | | Total |
|---|---|---|---|---|---|
| E1496 | 3 | × | 2 | = | 6 |
| E2997 | 3 | × | 4 | = | 12 |
| E3999 | 4 | × | 2 | = | 8 |
| N9831 | 3 | × | 5 | = | 15 |
| Total | | | | | 41 |
| Divide by No. of days per week worked | | | | | 5 |
| Total on active treatment acuity | | | | | 8.2 |

Authors' Disclosures of Potential Conflicts of Interest

Although all authors completed the disclosure declaration, the following author(s) and/or an author's immediate family member(s) indicated a financial or other interest that is relevant to the subject matter under consideration in this article. Certain relationships marked with a “U” are those for which no compensation was received; those relationships marked with a “C” were compensated. For a detailed description of the disclosure categories, or for more information about ASCO's conflict of interest policy, please refer to the Author Disclosure Declaration and the Disclosures of Potential Conflicts of Interest section in Information for Contributors.

Employment or Leadership Position: Keisha Humphries, Via Christi Hospital/Wichita Community Clinical Oncology Program (C)

Consultant or Advisory Role: None

Stock Ownership: None

Honoraria: None

Research Funding: None

Expert Testimony: None

Other Remuneration: None

Author Contributions

Conception and design: Marjorie J. Good

Administrative support: Marjorie J. Good, Andrea Medders

Collection and assembly of data: Marjorie J. Good, Keisha Humphries, Andrea Medders

Data analysis and interpretation: Marjorie J. Good, Andrea Medders

Manuscript writing: All authors

Final approval of manuscript: All authors

References

- 1. Gwede CK, Johnson D, Trotti A. Measuring the workload of clinical research coordinators: Part 1—Tools to study workload issues. Appl Clin Trials. 2000;9:40–44. http://www.appliedclinicaltrialsonline.com/appliedclinicaltrials/issue/issueDetail.jsp?id=22662.
- 2. Getz KA, Wenger J, Campo RA, et al. Assessing the impact of protocol design changes on clinical trial performance. Am J Ther. 2008;15:450–457. doi: 10.1097/MJT.0b013e31816b9027.
- 3. Giovannetti P. Understanding patient classification systems. J Nurs Adm. 1979;9:4–9.
- 4. Campbell SB, Hallgren L, Kamitomo V, et al. Chemotherapy administration: A beginning survey of chemotherapy as a workload index. Cancer Nurs. 1984;7:213–220.
- 5. Van Slyck A. A systems approach to the management of nursing services: Part II—Patient classification system. Nurs Manage. 1991;22:23–25. doi: 10.1097/00006247-199104000-00009.
- 6. Medvec BR. Productivity and workload measurement in ambulatory oncology. Semin Oncol Nurs. 1994;10:288–295. doi: 10.1016/s0749-2081(05)80076-2.
- 7. Roche K, Paul N, Smuck B, et al. Factors affecting workload of cancer clinical trials: Results of a multicenter study of the National Cancer Institute of Canada Clinical Trials Group. J Clin Oncol. 2002;20:545–556. doi: 10.1200/JCO.2002.20.2.545.
- 8. Fowler DR, Thomas CJ. Protocol acuity scoring as a rational approach to clinical research management. Research Practitioner. 2003;4:64–71. http://www.accessmylibrary.com/article-1G1-100110332/protocol-acuity-scoring-rational.html.
- 9. Berridge J, Coffey M. Workload measurement. Appl Clin Trials. 2008;6:17–21. http://www.appliedclinicaltrialsonline.com/appliedclinicaltrials/article/articleDetail.jsp?id=522055.
- 10. Smuck B, Bettello P, Berghout K, et al. Ontario protocol assessment level: Clinical trial complexity rating tool for workload planning in oncology clinical trials. J Oncol Pract. 2011;7:80–84. doi: 10.1200/JOP.2010.000051.
- 11. James P, Bebee P, Beekman L, et al. Creating an effort tracking tool to improve therapeutic cancer clinical trials workload management and budgeting. J Natl Compr Canc Netw. 2011;9:1228–1233. doi: 10.6004/jnccn.2011.0103.
- 12. National Cancer Institute. NCI Trial Complexity Elements and Scoring Model. http://ctep.cancer.gov/protocolDevelopment/docs/trial_complexity_elements_scoring.doc.