Abstract

We present an efficient and accurate algorithm for principal component analysis (PCA) of a large set of two-dimensional images and, for each image, the set of its uniform rotations in the plane and its reflection. The algorithm starts by expanding each image, originally given on a Cartesian grid, in the Fourier–Bessel basis for the disk. Because the images are essentially band limited in the Fourier domain, we use a sampling criterion to truncate the Fourier–Bessel expansion such that the maximum amount of information is preserved without the effect of aliasing. The constructed covariance matrix is invariant to rotation and reflection and has a special block diagonal structure. PCA is efficiently done for each block separately. This Fourier–Bessel-based PCA detects more meaningful eigenimages and has improved denoising capability compared to traditional PCA for a finite number of noisy images.

1. INTRODUCTION

Principal component analysis (PCA) is a classical method for dimensionality reduction, compression, and denoising. The principal components are the eigenvectors of the sample covariance matrix. In image analysis, the principal components are often referred to as “eigenimages,” and they form a basis adapted to the image set. In some applications it is advantageous to include all planar rotations of the input images in the PCA. For example, in single particle reconstruction (SPR) using cryoelectron microscopy (cryo-EM) [1], the 3D structure of a molecule needs to be determined from many noisy 2D projection images taken at unknown viewing directions. PCA, known in this field as multivariate statistical analysis, is often a first step in SPR [2]. Including the rotated images in the PCA is desirable because such images are just as likely to be obtained in the experiment, by in-plane rotation of either the specimen or the detector. When all rotated images are included, the eigenimages have a special separation-of-variables form in polar coordinates, in terms of radial functions and angular Fourier modes [3–5]. The eigenimages can easily be steered (rotated) by a simple phase shift, hence the name “steerable PCA.” Computing the steerable PCA efficiently and accurately is, however, challenging.

The first challenge is to mitigate the increased computational cost associated with replicating each image multiple times. Efficient algorithms for steerable PCA were introduced in [6,7], with computational complexity comparable to that of traditional PCA on the original images without their rotations. Current efficient algorithms for steerable PCA first map the images from a Cartesian grid to a polar grid, e.g., by interpolation. Because the transformation from Cartesian to polar coordinates is not unitary, the eigenimages of the images mapped to a polar grid are not equivalent to the original eigenimages transformed from Cartesian to polar coordinates.

The second challenge is associated with noise. The nonunitary transformation from Cartesian to polar coordinates changes the noise statistics: interpolation transforms additive white noise into correlated (i.e., colored) noise. As a consequence, spurious eigenimages and eigenvalues corresponding to colored noise may arise in the eigenanalysis. The naïve algorithm for steerable PCA that replicates each image multiple times also mistreats the noise. While the noise realizations of different original images are independent, the noise realizations of the duplicated (rotated) copies of a single image are dependent. This in turn can lead to noise-induced spurious eigenimages and to artifacts in the leading eigenimages.

We present a new efficient and accurate algorithm for computing the steerable PCA that meets these challenges. This is achieved by incorporating into the steerable PCA framework a sampling criterion similar to the criterion proposed by Klug and Crowther [8] in the different context of reconstructing a 2D image from its 1D projections. We represent the images in a truncated Fourier–Bessel basis in which the number of radial components decreases with the angular frequency. Our sampling criterion implies that the covariance matrix of the images has a block diagonal structure in which the block size decreases as a function of the angular frequency. The block diagonal structure of the covariance matrix was observed and utilized in previous works on steerable PCA. However, while in all existing methods for steerable PCA the block size is constant, here the block size shrinks with the angular frequency. The incorporation of the sampling criterion into the steerable PCA framework is the main contribution of this paper.

2. SAMPLING CRITERION

We assume that the images correspond to spatially limited objects. By appropriately scaling the pixel size, we can assume that the images vanish outside a disk of radius 1. The eigenfunctions of the Laplacian in the unit disk with vanishing Dirichlet boundary condition are the Fourier–Bessel functions. Hence, they form an orthogonal basis for the space of square-integrable functions over the unit disk, and it is natural to expand the images in that basis. The Fourier–Bessel functions are given by

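$$\psi_{kq}(r,\theta) = \begin{cases} N_{kq}\, J_k(R_{kq} r)\, e^{ik\theta}, & r \le 1,\\ 0, & r > 1, \end{cases} \tag{1}$$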

where $N_{kq}$ is a normalization factor, $J_k$ is the Bessel function of integer order k, and $R_{kq}$ is the qth root of the equation

$$J_k(R_{kq}) = 0. \tag{2}$$

The functions ψkq are normalized to unity, that is

$$\int_0^{2\pi}\!\!\int_0^1 \lvert\psi_{kq}(r,\theta)\rvert^2\, r\,dr\,d\theta = 1. \tag{3}$$

The normalization factors are given by

$$N_{kq} = \frac{1}{\sqrt{\pi}\,\lvert J_{k+1}(R_{kq})\rvert}. \tag{4}$$
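As a quick numerical sanity check of Eqs. (2)–(4), the Python sketch below (NumPy and SciPy assumed; helper names are illustrative only) computes $R_{kq}$ with `scipy.special.jn_zeros`, forms $N_{kq}$, and evaluates the inner product of two Fourier–Bessel functions with the same k by radial quadrature. The angular integral contributes $2\pi$ when the angular frequencies agree, so only the radial part is integrated numerically.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import jn_zeros, jv


def fb_root_and_norm(k, q):
    """R_kq (q-th positive root of J_k, Eq. (2)) and N_kq (Eq. (4)), for k >= 0, q >= 1."""
    R = jn_zeros(k, q)[-1]
    N = 1.0 / (np.sqrt(np.pi) * abs(jv(k + 1, R)))
    return R, N


def fb_inner(k, q1, q2):
    """Inner product of psi_{k,q1} and psi_{k,q2} over the unit disk.
    The angular integral equals 2*pi; the radial integral is done by quadrature."""
    R1, N1 = fb_root_and_norm(k, q1)
    R2, N2 = fb_root_and_norm(k, q2)
    radial, _ = quad(lambda r: jv(k, R1 * r) * jv(k, R2 * r) * r, 0.0, 1.0, limit=200)
    return 2.0 * np.pi * N1 * N2 * radial


for k, q in [(0, 1), (3, 2), (7, 5)]:
    print(f"k={k}, q={q}: <psi, psi> = {fb_inner(k, q, q):.10f}")   # should be close to 1
print(f"k=2, q=1 vs q=3: {fb_inner(2, 1, 3):.2e}")                  # should be close to 0
```

The cross term checks the orthogonality stated at the start of this section, while the diagonal terms check the normalization of Eq. (3).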

We use here the following convention for the 2D Fourier transform of a function f in polar coordinates

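$$\mathcal{F}(f)(k_0,\varphi_0) = \int_0^{2\pi}\!\!\int_0^{\infty} f(r,\theta)\, e^{-2\pi i k_0 r\cos(\theta-\varphi_0)}\, r\,dr\,d\theta. \tag{5}$$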

The 2D Fourier transform of the Fourier–Bessel functions, denoted $\mathcal{F}(\psi_{kq})$, is given in polar coordinates as

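$$\mathcal{F}(\psi_{kq})(k_0,\varphi_0) = 2\sqrt{\pi}\,(-1)^q\,(-i)^k\,R_{kq}\,\frac{J_k(2\pi k_0)}{(2\pi k_0)^2 - R_{kq}^2}\, e^{ik\varphi_0}. \tag{6}$$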

This result is typically derived using the Jacobi–Anger identity

$$e^{iz\cos\theta} = \sum_{k=-\infty}^{\infty} i^k J_k(z)\, e^{ik\theta}. \tag{7}$$
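The closed form in Eq. (6) can be checked against a direct evaluation of Eq. (5). In the Python sketch below (NumPy/SciPy assumed, k ≥ 0 only, function names hypothetical), the angular integral is replaced by its Jacobi–Anger value, $2\pi(-i)^k e^{ik\varphi_0} J_k(2\pi k_0 r)$, and the remaining radial integral is computed with `scipy.integrate.quad`.

```python
import numpy as np
from scipy.integrate import quad
from scipy.special import jn_zeros, jv


def fb_params(k, q):
    """R_kq and N_kq for k >= 0 (Eqs. (2) and (4))."""
    R = jn_zeros(k, q)[-1]
    return R, 1.0 / (np.sqrt(np.pi) * abs(jv(k + 1, R)))


def ft_numeric(k, q, k0, phi0):
    """Eq. (5) applied to psi_kq: Jacobi-Anger (Eq. (7)) handles the angular integral,
    quadrature handles the radial integral over [0, 1]."""
    R, N = fb_params(k, q)
    radial, _ = quad(lambda r: jv(k, R * r) * jv(k, 2 * np.pi * k0 * r) * r,
                     0.0, 1.0, limit=200)
    return 2 * np.pi * N * (-1j) ** k * np.exp(1j * k * phi0) * radial


def ft_closed(k, q, k0, phi0):
    """Closed form of Eq. (6)."""
    R, _ = fb_params(k, q)
    rho = 2 * np.pi * k0
    return (2 * np.sqrt(np.pi) * (-1) ** q * (-1j) ** k * R * jv(k, rho)
            / (rho ** 2 - R ** 2) * np.exp(1j * k * phi0))


cases = [(0, 1, 0.3, 0.0), (2, 3, 1.7, 0.4), (5, 2, 2.2, 1.1)]
for c in cases:
    print(c, ft_numeric(*c), ft_closed(*c))
```

Evaluating `ft_closed` at $k_0 = R_{kq'}/(2\pi)$ with $q' \ne q$ gives (numerically) zero, which is the ring structure discussed next.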

Notice that the Fourier transform $\mathcal{F}(\psi_{kq})(k_0,\varphi_0)$ vanishes on concentric rings of radii $k_0 = R_{kq'}/(2\pi)$ with $q' \neq q$. The maximum of $\lvert\mathcal{F}(\psi_{kq})(k_0,\varphi_0)\rvert$ is attained near the ring $k_0 = R_{kq}/(2\pi)$, as can be verified from the asymptotic behavior of the Bessel functions [cf. Eq. (9)]. For images sampled on a square Cartesian grid of 2L × 2L pixels with grid spacing 1/L (corresponding to the square [−1, 1] × [−1, 1]), the sampling rate is L and the corresponding Nyquist frequency (the band limit) is L/2. By the Nyquist criterion, the Fourier–Bessel expansion should include only components for which

$$\frac{R_{kq}}{2\pi} \le \frac{L}{2}, \quad\text{i.e.,}\quad R_{kq} \le \pi L, \tag{8}$$

because other components represent features beyond the resolution and their inclusion would result in aliasing. The asymptotic behavior of the Bessel functions

$$J_k(r) \sim \sqrt{\frac{2}{\pi r}}\,\cos\!\left(r - \frac{k\pi}{2} - \frac{\pi}{4}\right), \tag{9}$$

for $R_{kq} r \gg \lvert k^2 - 1/4\rvert$, suggests that the roots are asymptotically

$$R_{kq} \approx \pi\left(q + \frac{k}{2} - \frac{1}{4}\right), \tag{10}$$

which is indeed the first term of the asymptotic expansion for the roots (see [9]). Equations (8) and (10) lead to the sampling criterion

$$\lvert k\rvert + 2q \le 2L + \frac{1}{2}. \tag{11}$$
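To see how good the leading term in Eq. (10) is, the Python sketch below (NumPy/SciPy assumed) compares it with the exact roots returned by `scipy.special.jn_zeros`. In this comparison the error is small for k = 0 but grows when k is large compared with q, which is one reason to compute the roots numerically.

```python
import numpy as np
from scipy.special import jn_zeros


def mcmahon_leading(k, q):
    """Leading term of the asymptotic expansion of R_kq, Eq. (10)."""
    return np.pi * (q + k / 2 - 0.25)


def leading_term_error(k, qmax):
    """Eq. (10) minus the exact roots of J_k, for q = 1, ..., qmax."""
    q = np.arange(1, qmax + 1)
    return mcmahon_leading(k, q) - jn_zeros(k, qmax)


print(np.round(leading_term_error(0, 5), 3))    # k = 0
print(np.round(leading_term_error(10, 5), 3))   # k large relative to q
```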

In practice, we do not rely on the asymptotic formula, Eq. (10). Instead, we find the roots of the Bessel functions numerically and check directly which components satisfy the criterion $R_{kq} \le \pi L$. The number of components satisfying $\lvert k\rvert + 2q \le 2L + 1/2$ is approximately $2L^2$, which is smaller than $\pi L^2$ (the number of pixels inside the unit disk) by a factor of $2/\pi$. We remark that the cutoff criterion, Eq. (11), is similar but not identical to the criterion in [8]. Specifically, the criterion in [8] has a different cutoff value for $k + 2q$. The difference stems from the fact that [8] studies a different problem, namely the 2D reconstruction of an image from its 1D line projections.
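A minimal Python sketch of the numerical check described above, assuming NumPy/SciPy and the band limit $R_{kq} \le \pi L$: it computes the block sizes $p_k$ and compares the resulting number of components (counting both $k$ and $-k$) with the count implied by Eq. (11) and with $\pi L^2$. For integer L the Eq. (11) count is exactly $2L^2 - L$; the numerical count need not match it, since Eq. (10) is inaccurate when |k| is large relative to q.

```python
import numpy as np
from scipy.special import jn_zeros


def block_sizes(L):
    """p_k for k = 0, 1, ...: number of roots R_kq <= pi*L (Eq. (8)).
    Since R_kq >= R_0q > pi*(q - 1/4), at most L roots of any J_k lie below pi*L,
    so requesting L + 2 roots per order is enough."""
    p, k = [], 0
    while True:
        count = int(np.sum(jn_zeros(k, L + 2) <= np.pi * L))
        if count == 0:
            return np.array(p)
        p.append(count)
        k += 1


def numerical_count(L):
    """Number of components with R_kq <= pi*L, counting both k and -k."""
    p = block_sizes(L)
    return int(p[0] + 2 * p[1:].sum())


def asymptotic_count(L):
    """Number of integer pairs (k, q), q >= 1, with |k| + 2q <= 2L + 1/2 (Eq. (11))."""
    return sum(1 for k in range(-2 * L, 2 * L + 1)
               for q in range(1, L + 1) if abs(k) + 2 * q <= 2 * L + 0.5)


L = 16
print("p_k:", block_sizes(L))
print("Eq. (11) count:", asymptotic_count(L), " 2L^2 =", 2 * L ** 2)
print("R_kq <= pi*L count:", numerical_count(L), " pi*L^2 =", round(np.pi * L ** 2))
```

The block sizes are non-increasing in k because the roots of $J_{k+1}$ lie above the corresponding roots of $J_k$.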

3. FOURIER–BESSEL EXPANSION OF IMAGES SAMPLED ON A CARTESIAN GRID

Suppose I1, …, In are n images sampled on a Cartesian grid. We denote by Ĩi the continuous approximation of the ith image in terms of a truncated Fourier–Bessel expansion including only components satisfying the sampling criterion, namely

$$\tilde I_i(r,\theta) = \sum_{k}\sum_{q=1}^{p_k} a^i_{k,q}\, \psi_{kq}(r,\theta), \tag{12}$$

where for each k, $p_k$ denotes the number of components satisfying $R_{kq} \le \pi L$ (so that $p_k = 0$ for all sufficiently large |k|). We choose the expansion coefficients $a^i_{k,q}$ that minimize the squared distance between $I_i$ and $\tilde I_i$, with the latter restricted to the grid points; that is, the coefficients are the least squares solution to the overdetermined linear system obtained from Eq. (12) by evaluating the images and the Fourier–Bessel functions at the grid points. More specifically, let Ψ be the matrix whose entries are evaluations of the Fourier–Bessel functions at the grid points, with rows indexed by the grid points and columns indexed by angular and radial frequencies. Then the coefficient vector $a_i$ of the ith image is the solution of $\min_{a_i}\lVert \Psi a_i - I_i \rVert_2$, given by

$$a_i = (\Psi^\dagger\Psi)^{-1}\Psi^\dagger I_i. \tag{13}$$
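The construction of Ψ and the least squares solve of Eq. (13) can be sketched in Python as follows (NumPy/SciPy assumed; function names hypothetical). Two conventions are assumptions not fixed by the text. First, grid points are taken at the pixel centres $x_j = (j - L + 1/2)/L$. Second, only points with $r \le 1$ are kept, since the basis functions vanish outside the disk. Columns are ordered by k, with $+k$ and $-k$ interleaved, and are labelled by $(k, q)$.

```python
import numpy as np
from scipy.special import jn_zeros, jv


def fb_basis_matrix(L):
    """Columns: psi_kq (Eq. (1)) with R_kq <= pi*L, for k = 0, +-1, +-2, ...
    Rows: centres of the 2L x 2L pixels on [-1, 1]^2 that lie in the unit disk."""
    x = (np.arange(2 * L) - L + 0.5) / L
    X, Y = np.meshgrid(x, x)                 # X varies along columns, Y along rows
    mask = np.hypot(X, Y) <= 1.0
    r, theta = np.hypot(X, Y)[mask], np.arctan2(Y, X)[mask]
    cols, labels, k = [], [], 0
    while True:
        roots = jn_zeros(k, L + 2)
        roots = roots[roots <= np.pi * L]
        if roots.size == 0:
            break
        for q, R in enumerate(roots, start=1):
            radial = jv(k, R * r) / (np.sqrt(np.pi) * abs(jv(k + 1, R)))
            cols.append(radial * np.exp(1j * k * theta))
            labels.append((k, q))
            if k > 0:                        # psi_{-k,q} uses J_{-k} = (-1)^k J_k
                cols.append((-1) ** k * radial * np.exp(-1j * k * theta))
                labels.append((-k, q))
        k += 1
    return np.stack(cols, axis=1), mask, labels


def fb_coefficients(Psi, mask, images):
    """Least squares coefficients, Eq. (13); images has shape (n, 2L, 2L).
    Returns an array of shape (number of components, n), one column a_i per image."""
    b = images[:, mask].T.astype(complex)    # pixels inside the disk, one column per image
    a, *_ = np.linalg.lstsq(Psi, b, rcond=None)
    return a


L = 8
Psi, mask, labels = fb_basis_matrix(L)
rng = np.random.default_rng(0)
images = rng.standard_normal((3, 2 * L, 2 * L))
a = fb_coefficients(Psi, mask, images)
print(Psi.shape, a.shape)
```

`numpy.linalg.lstsq` is used here instead of forming $(\Psi^\dagger\Psi)^{-1}$ explicitly; for a full-rank Ψ both give the solution of Eq. (13).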

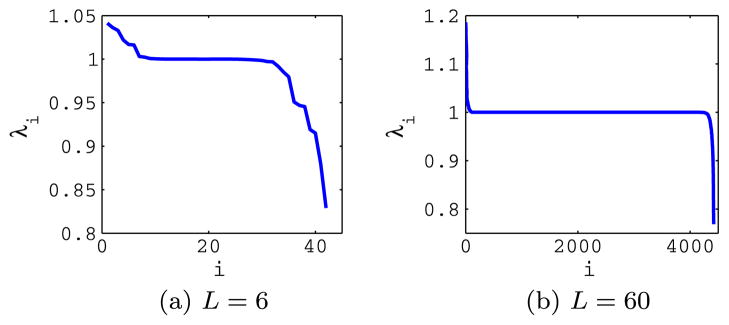

The orthogonality of the Fourier–Bessel functions on the disk does not necessarily imply that their discretized counterparts are orthogonal. That is, the matrix $\Psi^\dagger\Psi$ may differ from the identity matrix. The columns of Ψ are guaranteed to approach orthogonality only as the grid is indefinitely refined (i.e., as L → ∞). In practice, we have observed numerically that the columns of Ψ are approximately orthogonal already for moderate values of L. In particular, white noise remains approximately white after the transformation, since the eigenvalues of $(\Psi^\dagger\Psi)^{-1}$ are close to 1 (see Fig. 1). Indeed, for white noise images, the mean and covariance satisfy

$\mathbb{E}[I_i] = 0$ and $\mathbb{E}[I_i I_i^\dagger] = \sigma^2\mathbf{I}$, where $\sigma^2$ is the noise variance and $\mathbf{I}$ is the identity matrix. Therefore, Eq. (13) and the linearity of expectation yield $\mathbb{E}[a_i] = 0$ and

$$\mathbb{E}[a_i a_i^\dagger] = (\Psi^\dagger\Psi)^{-1}\Psi^\dagger\,\mathbb{E}[I_i I_i^\dagger]\,\Psi(\Psi^\dagger\Psi)^{-1} = \sigma^2(\Psi^\dagger\Psi)^{-1}.$$

Fig. 1.

Eigenvalues of $(\Psi^\dagger\Psi)^{-1}$, where Ψ is the truncated Fourier–Bessel transform for L = 6 and L = 60 pixels, respectively. This is also the spectrum of the covariance of transformed white noise images (see text). The eigenvalues are close to 1, indicating that the discretized Fourier–Bessel transform is approximately unitary and that white noise remains approximately white. For L = 60, only 18 eigenvalues are above 1.05 and 34 are below 0.95. For L = 6, 6 eigenvalues are below 0.95.
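The noise argument above can be checked numerically. The sketch below (NumPy/SciPy assumed) reuses a compact version of the basis construction on an assumed pixel-centre grid. It also scales Ψ by the pixel side 1/L, an assumed normalization under which discrete inner products approximate Eq. (3); without it, $\Psi^\dagger\Psi \approx L^2\mathbf{I}$. It then computes the spectrum of $(\Psi^\dagger\Psi)^{-1}$, as plotted in Fig. 1, and confirms through the pseudoinverse that the coefficient covariance for $\sigma^2 = 1$ equals $(\Psi^\dagger\Psi)^{-1}$. The exact spread of eigenvalues will depend on the grid convention.

```python
import numpy as np
from scipy.special import jn_zeros, jv


def fb_basis_matrix(L):
    """Truncated Fourier-Bessel basis (R_kq <= pi*L) at the centres of the 2L x 2L pixels
    on [-1, 1]^2 inside the unit disk (pixel-centre grid assumed), k = 0, +-1, ..."""
    x = (np.arange(2 * L) - L + 0.5) / L
    X, Y = np.meshgrid(x, x)
    inside = np.hypot(X, Y) <= 1.0
    r, theta = np.hypot(X, Y)[inside], np.arctan2(Y, X)[inside]
    cols, k = [], 0
    while True:
        roots = jn_zeros(k, L + 2)
        roots = roots[roots <= np.pi * L]
        if roots.size == 0:
            return np.stack(cols, axis=1)
        for R in roots:
            radial = jv(k, R * r) / (np.sqrt(np.pi) * abs(jv(k + 1, R)))
            cols.append(radial * np.exp(1j * k * theta))
            if k > 0:
                cols.append((-1) ** k * radial * np.exp(-1j * k * theta))
        k += 1


L = 8
Psi = fb_basis_matrix(L) / L                  # pixel-size scaling (assumption)
G = Psi.conj().T @ Psi
noise_spectrum = np.sort(1.0 / np.linalg.eigvalsh(G))   # eigenvalues of (Psi^H Psi)^{-1}

# Covariance of least squares coefficients of unit-variance white noise:
P = np.linalg.pinv(Psi)                       # (Psi^H Psi)^{-1} Psi^H for full column rank
coef_cov = P @ P.conj().T

print("median eigenvalue:", np.median(noise_spectrum))
print("fraction within [0.95, 1.05]:", np.mean(np.abs(noise_spectrum - 1) <= 0.05))
```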

In the previous work [7] on fast computation of steerable principal components for a large data set, images are re-sampled on a polar grid by the polar Fourier transform. The polar Fourier transform is nonunitary and therefore causes artifacts in the eigenimages. Suppose the linear transformation is described by the matrix A. The sample covariance matrix CA of the transformed images is related to the sample covariance matrix C of the original images by

$$C_A = A\,C\,A^\dagger. \tag{14}$$

Unless A is unitary, there is no simple way to transform the eigenvectors of $ACA^\dagger$ into the eigenvectors of C. The advantage of using the Fourier–Bessel transform with a sampling criterion adapted to the band limit of the images is that such a transform is approximately unitary (Fig. 1).
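A small NumPy illustration of Eq. (14) and the remark above. For a unitary Q, each vector $Qv$ is an eigenvector of $QCQ^\dagger$ whenever v is an eigenvector of C. For a generic nonunitary A, the vectors $Av$ are not eigenvectors of $ACA^\dagger$. The random matrices are stand-ins, not data from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 6
C = np.cov(rng.standard_normal((d, 200)))          # covariance of some "original" data
w, V = np.linalg.eigh(C)                           # eigenpairs of C

Q, _ = np.linalg.qr(rng.standard_normal((d, d)))   # unitary (real orthogonal) transform
A = rng.standard_normal((d, d))                    # generic nonunitary transform


def maps_eigenvectors(T):
    """True if T v is an eigenvector of T C T^H (Eq. (14)) with the same
    eigenvalue, for every eigenpair (w, v) of C."""
    C_T = T @ C @ T.conj().T
    TV = T @ V
    return np.allclose(C_T @ TV, TV * w)


print("unitary Q:", maps_eigenvectors(Q))          # True
print("nonunitary A:", maps_eigenvectors(A))       # False for a generic A
```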

4. SAMPLE COVARIANCE MATRIX

It is easy to “steer” the continuous approximation $\tilde I_i$ of $I_i$ [Eq. (12)]. If $\tilde I_i^{\alpha}$ denotes the rotation of the ith image by an angle α, then

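$$\tilde I_i^{\alpha}(r,\theta) = \tilde I_i(r,\theta-\alpha) = \sum_{k}\sum_{q=1}^{p_k} a^i_{k,q}\, e^{-ik\alpha}\, \psi_{kq}(r,\theta). \tag{15}$$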

Because $J_{-k}(x) = (-1)^k J_k(x)$, for real-valued images $a^i_{-k,q} = (-1)^k\,\overline{a^i_{k,q}}$. Also, under reflection θ changes to −θ, and the reflected image, denoted $\tilde I_i^{\mathrm{ref}}$, is given by

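$$\tilde I_i^{\mathrm{ref}}(r,\theta) = \tilde I_i(r,-\theta) = \sum_{k}\sum_{q=1}^{p_k} (-1)^k\, a^i_{k,q}\, \psi_{-k,q}(r,\theta) \tag{16}$$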

$$\qquad\qquad = \sum_{k}\sum_{q=1}^{p_k} \overline{a^i_{k,q}}\, \psi_{kq}(r,\theta). \tag{17}$$
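Equations (15)–(17) can be checked pointwise. The Python sketch below (NumPy/SciPy assumed; the truncation `KMAX`, `QMAX` is hypothetical) draws random coefficients that obey the real-image symmetry $a_{-k,q} = (-1)^k\overline{a_{k,q}}$. It then compares the phase-shift and conjugation rules with direct evaluation of the image at rotated and reflected points.

```python
import numpy as np
from scipy.special import jn_zeros, jv

KMAX, QMAX = 3, 4   # small, hypothetical truncation used only for this check


def psi(k, q, r, theta):
    """psi_kq of Eq. (1) for any integer k (points with r <= 1)."""
    R = jn_zeros(abs(k), q)[-1]                      # R_{-k,q} = R_{kq}
    N = 1.0 / (np.sqrt(np.pi) * abs(jv(abs(k) + 1, R)))
    sign = (-1) ** k if k < 0 else 1                 # J_{-k} = (-1)^k J_k
    return N * sign * jv(abs(k), R * r) * np.exp(1j * k * theta)


def real_image_coeffs(rng):
    """Random coefficients of a real image: a_{0,q} real, a_{-k,q} = (-1)^k conj(a_{k,q})."""
    a = {}
    for q in range(1, QMAX + 1):
        a[(0, q)] = complex(rng.standard_normal())
        for k in range(1, KMAX + 1):
            a[(k, q)] = complex(rng.standard_normal(), rng.standard_normal())
            a[(-k, q)] = (-1) ** k * np.conj(a[(k, q)])
    return a


def evaluate(a, r, theta):
    return sum(c * psi(k, q, r, theta) for (k, q), c in a.items())


def rotate(a, alpha):
    """Eq. (15): rotation by alpha multiplies a_{k,q} by exp(-i k alpha)."""
    return {(k, q): c * np.exp(-1j * k * alpha) for (k, q), c in a.items()}


def reflect(a):
    """Eq. (17): the reflected image (theta -> -theta) has coefficients conj(a_{k,q})."""
    return {(k, q): np.conj(c) for (k, q), c in a.items()}


rng = np.random.default_rng(1)
a = real_image_coeffs(rng)
r, theta, alpha = rng.uniform(0, 1, 50), rng.uniform(0, 2 * np.pi, 50), 0.7
print(np.max(np.abs(evaluate(a, r, theta).imag)))            # about 0: the image is real
print(np.allclose(evaluate(rotate(a, alpha), r, theta), evaluate(a, r, theta - alpha)))
print(np.allclose(evaluate(reflect(a), r, theta), evaluate(a, r, -theta)))
```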

The sample mean, denoted Ĩmean, is the continuous image obtained by averaging the continuous images and all their possible rotations and reflections:

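$$\tilde I_{\mathrm{mean}}(r,\theta) = \frac{1}{4\pi n}\sum_{i=1}^{n}\int_0^{2\pi}\left[\tilde I_i^{\alpha}(r,\theta) + \big(\tilde I_i^{\mathrm{ref}}\big)^{\alpha}(r,\theta)\right] d\alpha, \tag{18}$$

where $\big(\tilde I_i^{\mathrm{ref}}\big)^{\alpha}$ denotes the reflected image rotated by α.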

Substituting Eqs. (15) and (17) into (18) we obtain

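$$\tilde I_{\mathrm{mean}}(r,\theta) = \sum_{q=1}^{p_0}\left(\frac{1}{n}\sum_{i=1}^{n} a^i_{0,q}\right)\psi_{0q}(r). \tag{19}$$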

As expected, the sample mean image is radially symmetric, because ψ0q is only a function of r but not of θ.

The sample covariance matrix built from the continuous images and all possible rotations and reflections is formally

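$$C = \frac{1}{4\pi n}\sum_{i=1}^{n}\int_0^{2\pi}\Big[\big(\tilde I_i^{\alpha}-\tilde I_{\mathrm{mean}}\big)\big(\tilde I_i^{\alpha}-\tilde I_{\mathrm{mean}}\big)^{*} + \big((\tilde I_i^{\mathrm{ref}})^{\alpha}-\tilde I_{\mathrm{mean}}\big)\big((\tilde I_i^{\mathrm{ref}})^{\alpha}-\tilde I_{\mathrm{mean}}\big)^{*}\Big]\,d\alpha. \tag{20}$$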

When written in terms of the Fourier–Bessel basis, the covariance matrix C can be computed directly from the expansion coefficients $a^i_{k,q}$. Subtracting the mean image is equivalent to subtracting the mean coefficients from the coefficients with k = 0, while keeping the other coefficients unchanged. That is, we update

$$a^i_{0,q} \leftarrow a^i_{0,q} - \frac{1}{n}\sum_{j=1}^{n} a^j_{0,q}. \tag{21}$$

In the Fourier–Bessel basis the covariance matrix C is given by

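$$C_{(k,q),(k',q')} = \frac{1}{4\pi n}\sum_{i=1}^{n}\int_0^{2\pi}\left[a^i_{k,q}\,\overline{a^i_{k',q'}} + \overline{a^i_{k,q}}\,a^i_{k',q'}\right] e^{-i(k-k')\alpha}\, d\alpha \tag{22}$$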

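$$\qquad\qquad = \delta_{k,k'}\,\frac{1}{n}\sum_{i=1}^{n}\operatorname{Re}\left\{a^i_{k,q}\,\overline{a^i_{k,q'}}\right\}. \tag{23}$$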
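The block formula in Eqs. (21)–(23) can be compared with a brute-force version of Eq. (20) that explicitly rotates and reflects the coefficient vectors. In this NumPy/SciPy sketch only k ≥ 0 is kept, which suffices as noted below. The block sizes and random coefficients are hypothetical. The continuous average over α is replaced by a uniform grid of angles, which is exact here because the number of angles exceeds the largest difference |k − k′|.

```python
import numpy as np
from scipy.linalg import block_diag


def fb_block_covariance(a_blocks):
    """a_blocks[k]: array (p_k, n) of coefficients a^i_{k,q}, k = 0, 1, ..., with a_{0,q} real.
    Returns the blocks C^(k): subtract the k = 0 mean (Eq. (21)), then (1/n) Re(A A^H) (Eq. (23))."""
    blocks = []
    for k, A in enumerate(a_blocks):
        if k == 0:
            A = A - A.mean(axis=1, keepdims=True)
        blocks.append((A @ A.conj().T).real / A.shape[1])
    return blocks


def brute_force_covariance(a_blocks, n_angles=16):
    """Eq. (20) with the alpha integral replaced by n_angles uniform rotations of every
    image and of its reflection (coefficients rotated via Eq. (15), reflected via Eq. (17))."""
    ks = np.concatenate([np.full(A.shape[0], k) for k, A in enumerate(a_blocks)])
    a = np.concatenate(a_blocks, axis=0)
    samples = []
    for alpha in 2 * np.pi * np.arange(n_angles) / n_angles:
        phase = np.exp(-1j * ks * alpha)[:, None]
        samples += [phase * a, phase * np.conj(a)]   # rotated image, rotated reflection
    S = np.concatenate(samples, axis=1)
    S = S - S.mean(axis=1, keepdims=True)
    return (S @ S.conj().T) / S.shape[1]


rng = np.random.default_rng(2)
n, p = 30, [4, 3, 2]                                  # hypothetical block sizes p_0, p_1, p_2
a_blocks = [rng.standard_normal((p[0], n)).astype(complex)] + [
    rng.standard_normal((pk, n)) + 1j * rng.standard_normal((pk, n)) for pk in p[1:]]
C_blocks = block_diag(*fb_block_covariance(a_blocks))
C_brute = brute_force_covariance(a_blocks)
print(np.max(np.abs(C_brute - C_blocks)))             # zero up to rounding
```

The off-diagonal blocks of `C_brute` vanish, which is the block diagonal structure described next.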

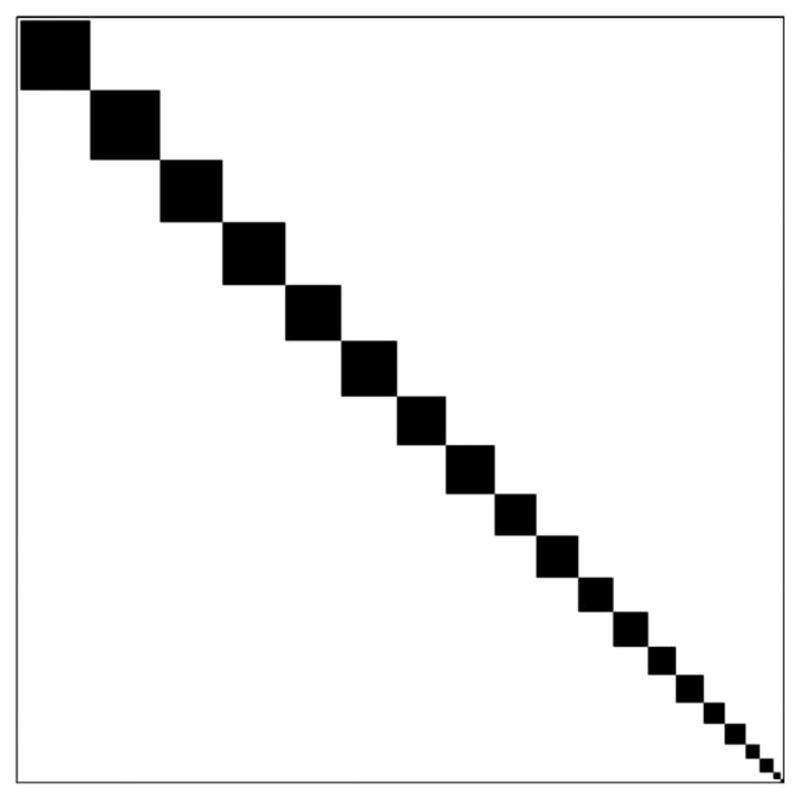

The nonzero entries of C correspond to k = k′, which gives C its block diagonal structure (see Fig. 2). Also, it suffices to consider k ≥ 0, because $C_{(k,q),(k,q')} = C_{(-k,q),(-k,q')}$. Thus, the covariance matrix can be written as the direct sum

Fig. 2.

Illustration of the block diagonal structure of the rotational invariant covariance matrix. The block size shrinks as the angular frequency k increases.

$$C = \bigoplus_{k} C^{(k)}, \tag{24}$$

where $C^{(k)}$ is itself a sample covariance matrix of size $p_k \times p_k$. The block size $p_k$ decreases as the angular frequency k increases (see Fig. 2). We remark that the block structure of the covariance matrix in steerable PCA is well known; however, in previous works the block size is constant (i.e., independent of the angular frequency k). Our main observation is that the block size must decrease as the angular frequency increases in order to avoid aliasing. Moreover, if the images are corrupted by independent additive white (uncorrelated) noise, then each block $C^{(k)}$ is also affected by independent additive white noise, because the Fourier–Bessel transform is unitary (up to grid discretization).

5. ALGORITHM AND COMPUTATIONAL COMPLEXITY

We refer to the resulting algorithm as Fourier–Bessel steerable PCA (FBsPCA). The steps of FBsPCA are summarized in Algorithm 1. The computational complexity of FBsPCA (excluding precomputation) is $O(nL^4 + L^5)$, whereas the computational complexity of traditional PCA (applied to the original images without their rotated copies) is $O(nL^4 + L^6)$. The individual steps of FBsPCA have the following computational costs. Precomputing the Fourier–Bessel functions on the discrete Cartesian grid costs $O(L^4)$, because the number of basis functions satisfying the sampling criterion is $O(L^2)$ and the number of grid points is also $O(L^2)$. The cost of computing the pseudoinverse of $\Psi^\dagger\Psi$ is dominated by the singular value decomposition of Ψ, which takes $O(L^6)$. Computing this pseudoinverse is a precomputation that does not depend on the images; alternatively, since the eigenvalues of $\Psi^\dagger\Psi$ are almost equal to one, it can be approximated by the identity matrix. Computing the expansion coefficients $a^i_{k,q}$ takes $O(nL^4)$. Constructing the block diagonal covariance matrix takes $O(nL^3)$. Because of its block diagonal structure, the eigendecomposition of the covariance matrix takes $O(L^4)$. Constructing the steerable basis takes $O(L^5)$. Therefore, the total computational complexity of FBsPCA without the precomputation is $O(nL^4 + L^5)$. For traditional PCA, computing the covariance matrix takes $O(nL^4)$ and its eigendecomposition takes $O(L^6)$. Overall, the computational cost of FBsPCA is lower than that of the traditional rotational variant PCA.
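The operation counts above can be made concrete by plugging in the actual block sizes $p_k$. The Python sketch below (NumPy/SciPy assumed; names hypothetical) computes rough proxies for each step with constants dropped. They illustrate the scaling only and are not timings.

```python
import numpy as np
from scipy.special import jn_zeros


def block_sizes(L):
    """p_k, k = 0, 1, ..., from the criterion R_kq <= pi*L (at most L roots qualify per k)."""
    p, k = [], 0
    while True:
        count = int(np.sum(jn_zeros(k, L + 2) <= np.pi * L))
        if count == 0:
            return np.array(p)
        p.append(count)
        k += 1


def cost_proxies(L, n):
    """Operation-count proxies (constants dropped) for the steps discussed in the text."""
    p = block_sizes(L)
    n_basis = p[0] + 2 * p[1:].sum()                    # O(L^2) basis functions
    n_pix = (2 * L) ** 2                                 # O(L^2) grid points
    return {
        "coefficients": n * n_basis * n_pix,             # O(n L^4)
        "covariance blocks": n * int(np.sum(p ** 2)),    # O(n L^3), k >= 0 only
        "block eigendecomposition": int(np.sum(p ** 3)), # O(L^4)
        "dense eigendecomposition": n_pix ** 3,          # traditional PCA, O(L^6)
    }


for name, value in cost_proxies(L=16, n=10_000).items():
    print(f"{name:>26s}: {value:.3e}")
```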

Algorithm 1.

Fourier–Bessel Steerable PCA (FBsPCA)

Require:

n pre-whitened images I1, …, In sampled on a Cartesian grid of size 2L × 2L.
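Following the steps described in Section 5, a minimal end-to-end sketch of Algorithm 1 in Python (NumPy/SciPy assumed) might look as follows. The pixel-centre grid, the use of `numpy.linalg.pinv` for the precomputed pseudoinverse, and all function names are assumptions of this sketch, not the authors' MATLAB implementation.

```python
import numpy as np
from scipy.special import jn_zeros, jv


def fb_basis_matrix(L):
    """Step 1 (precomputation): truncated Fourier-Bessel basis, R_kq <= pi*L, at the pixel
    centres inside the unit disk. Also returns the angular frequency of every column;
    within each k the columns are ordered by q."""
    x = (np.arange(2 * L) - L + 0.5) / L
    X, Y = np.meshgrid(x, x)
    mask = np.hypot(X, Y) <= 1.0
    r, theta = np.hypot(X, Y)[mask], np.arctan2(Y, X)[mask]
    cols, ks, k = [], [], 0
    while True:
        roots = jn_zeros(k, L + 2)
        roots = roots[roots <= np.pi * L]
        if roots.size == 0:
            return np.stack(cols, axis=1), mask, np.array(ks)
        for R in roots:
            radial = jv(k, R * r) / (np.sqrt(np.pi) * abs(jv(k + 1, R)))
            cols.append(radial * np.exp(1j * k * theta))
            ks.append(k)
            if k > 0:
                cols.append((-1) ** k * radial * np.exp(-1j * k * theta))
                ks.append(-k)
        k += 1


def fbspca(images, L):
    """FBsPCA sketch for real images of shape (n, 2L, 2L). Returns, for every k >= 0,
    the eigenvalues (descending) and eigenvectors of C^(k), plus the basis used."""
    Psi, mask, ks = fb_basis_matrix(L)
    P = np.linalg.pinv(Psi)                          # precomputed (Psi^H Psi)^{-1} Psi^H
    a = P @ images[:, mask].T                        # step 2: Eq. (13), shape (components, n)
    spectra = {}
    for k in range(ks.max() + 1):
        A = a[ks == k]                               # p_k x n block
        if k == 0:
            A = A - A.mean(axis=1, keepdims=True)    # step 3: Eq. (21)
        Ck = (A @ A.conj().T).real / A.shape[1]      # step 4: Eq. (23)
        w, V = np.linalg.eigh(Ck)                    # step 5: one small eigenproblem per k
        spectra[k] = (w[::-1], V[:, ::-1])
    return spectra, Psi, mask, ks


def eigenimage(Psi, mask, ks, k, v):
    """Step 6: steerable eigenimage sum_q v_q psi_kq on the grid (zero outside the disk)."""
    img = np.zeros(mask.shape, dtype=complex)
    img[mask] = Psi[:, ks == k] @ v
    return img


L = 6
rng = np.random.default_rng(3)
images = rng.standard_normal((200, 2 * L, 2 * L))    # stand-in for pre-whitened images
spectra, Psi, mask, ks = fbspca(images, L)
print({k: np.round(w[:3], 3) for k, (w, V) in spectra.items()})
e = eigenimage(Psi, mask, ks, 1, spectra[1][1][:, 0])
```

Rotating an eigenimage from block k by α only multiplies it by $e^{-ik\alpha}$, which is what makes the basis steerable.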


6. RESULTS

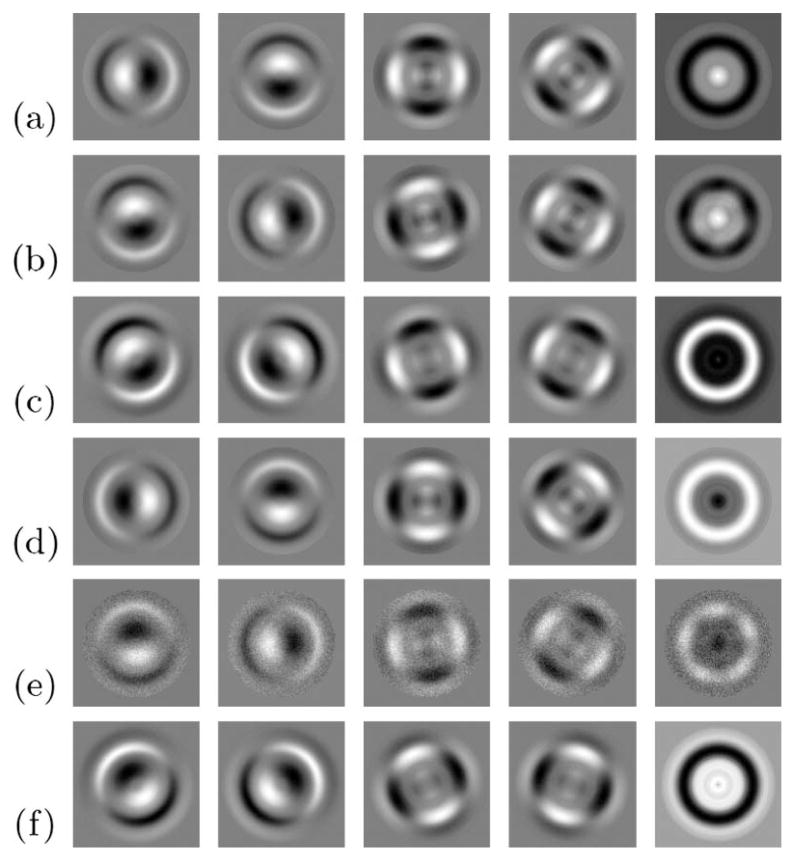

In the first experiment, we simulated $n = 10^4$ clean projection images of the E. coli 70S ribosome. The images are of size 129 × 129 pixels, but the molecule is confined to a disk of radius L = 55 pixels. We corrupted the clean images with additive white Gaussian noise at different signal-to-noise ratios (SNRs) (Fig. 3). The running time of traditional PCA was 695 s, compared to 85 s (including precomputation) for FBsPCA, both implemented in MATLAB on a machine with two Intel(R) Xeon(R) X5570 CPUs, each with 4 cores, running at 2.93 GHz. The top 5 eigenimages for the noisy images agree with the eigenimages from the clean projection images [Figs. 4(a) and 4(d)]. The eigenimages generated by FBsPCA are much cleaner than those from traditional PCA [Figs. 4(d) and 4(e)]. We also see that the eigenimages generated by steerable PCA with the polar transform (PTsPCA) are consistent with the eigenimages from traditional PCA [see Figs. 4(c) and 4(f)].

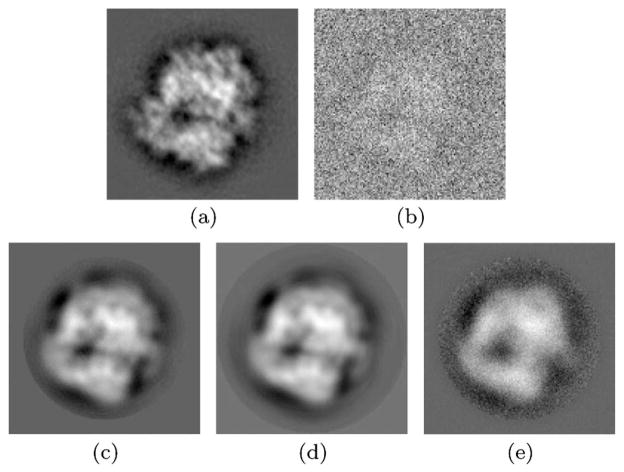

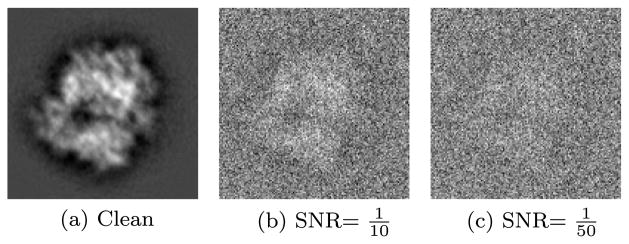

Fig. 3.

Simulated 70S ribosome projection images with different SNR.

Fig. 4.

Eigenimages for $10^4$ simulated 70S ribosome projection images. Clean images: (a) FBsPCA, (b) traditional PCA, and (c) PTsPCA. Noisy images with SNR = 1/50: (d) FBsPCA, (e) traditional PCA, and (f) PTsPCA. Image size is 129 × 129 pixels and L = 55 pixels.

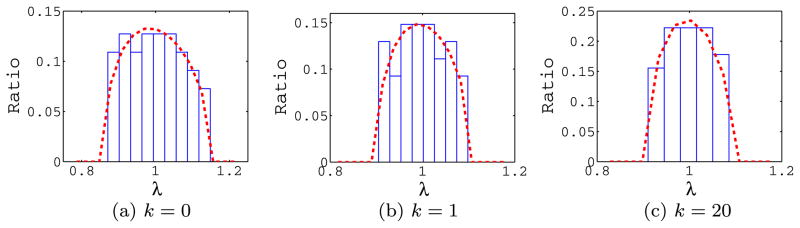

In another experiment, we simulated images consisting entirely of white Gaussian noise with mean 0 and variance 1 and computed their Fourier–Bessel expansion coefficients. The distribution of the eigenvalues of the rotational invariant covariance matrix for different angular frequencies k is well predicted by the Marčenko–Pastur distribution (see Fig. 5). This property allows us to use the method described in [10] to estimate the noise variance and the number of principal components to choose.
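The Marčenko–Pastur behavior can be illustrated without building Ψ by assuming the transform is exactly unitary, so that white pixel noise yields white expansion coefficients: real for k = 0 and complex for k > 0. Under that assumption, the NumPy sketch below forms $C^{(k)}$ from Eq. (23) for pure noise. It compares the extreme eigenvalues with the Marčenko–Pastur support edges $\sigma^2(1 \pm \sqrt{\gamma})^2$, where γ = p/n for k = 0 and γ = p/(2n) for k > 0. The mean subtraction of Eq. (21) is skipped because the noise has zero mean, and the sizes are hypothetical.

```python
import numpy as np


def mp_support(gamma, sigma2=1.0):
    """Edges of the Marcenko-Pastur support for aspect ratio gamma <= 1."""
    return sigma2 * (1 - np.sqrt(gamma)) ** 2, sigma2 * (1 + np.sqrt(gamma)) ** 2


def noise_block(p, n, k, rng, sigma2=1.0):
    """C^(k) of Eq. (23) for pure-noise coefficients (unitary transform assumed):
    real N(0, sigma2) entries for k = 0, complex entries with E|a|^2 = sigma2 for k > 0."""
    if k == 0:
        A = np.sqrt(sigma2) * rng.standard_normal((p, n))
    else:
        A = np.sqrt(sigma2 / 2) * (rng.standard_normal((p, n))
                                   + 1j * rng.standard_normal((p, n)))
    return (A @ A.conj().T).real / n


rng = np.random.default_rng(0)
p, n = 40, 4000                                   # hypothetical block size and image count
results = {}
for k in (0, 1):
    gamma = p / n if k == 0 else p / (2 * n)      # Re{.} doubles the effective sample size
    eig = np.linalg.eigvalsh(noise_block(p, n, k, rng))
    results[k] = (eig, *mp_support(gamma))
    print(f"k={k}: eigenvalues in [{eig.min():.3f}, {eig.max():.3f}], "
          f"MP support [{results[k][1]:.3f}, {results[k][2]:.3f}]")
```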

Fig. 5.

Histogram of eigenvalues of $C^{(k)}$ (with k = 0, 1, 20) for images consisting of white Gaussian noise with mean 0 and variance 1. The dashed lines correspond to the Marčenko–Pastur distribution. $n = 10^4$, L = 55 pixels, and $\sigma^2 = 1$.

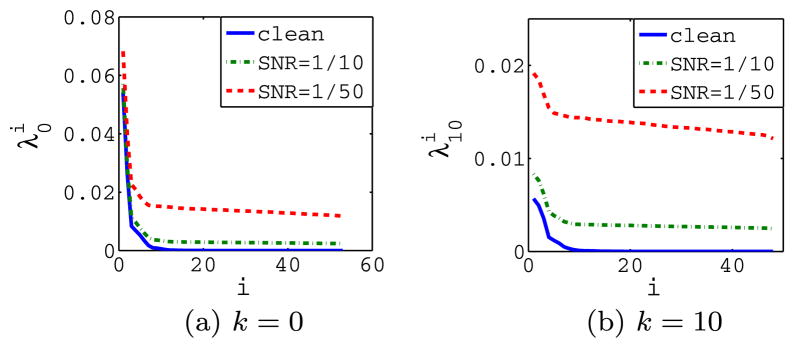

We compared the eigenvalues of the ribosome projection images from the previous experiment for different SNRs (Fig. 6). As the noise variance $\sigma^2$ increases, the number of signal components that we are able to discriminate decreases. We use the method in [10] to automatically estimate the noise variance and the number of components above the noise level. With the estimated noise variance $\hat\sigma^2$, components with eigenvalues $\lambda > \hat\sigma^2(1+\sqrt{\gamma_k})^2$, where $\gamma_0 = p_0/n$ and $\gamma_k = p_k/(2n)$ for k > 0, are chosen (the case k = 0 is special because the expansion coefficients $a_{0,q}$ are purely real, since $a^i_{0,q} = \overline{a^i_{0,q}}$).
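The selection rule above can be sketched as follows. The noise variance estimate $\hat\sigma^2$ is taken as given (estimating it with the method of [10] is not shown), and the eigenvalues in the example are hypothetical.

```python
import numpy as np


def mp_threshold(sigma2_hat, p_k, n, k):
    """Upper Marcenko-Pastur edge sigma2_hat * (1 + sqrt(gamma_k))^2 with
    gamma_0 = p_0 / n and gamma_k = p_k / (2n) for k > 0."""
    gamma = p_k / n if k == 0 else p_k / (2 * n)
    return sigma2_hat * (1 + np.sqrt(gamma)) ** 2


def select_components(eigvals, sigma2_hat, n, k):
    """Indices of the eigenvalues of C^(k) above the noise edge; p_k = len(eigvals)."""
    eigvals = np.asarray(eigvals)
    return np.flatnonzero(eigvals > mp_threshold(sigma2_hat, len(eigvals), n, k))


eigs = [5.0, 1.35, 1.1, 0.9]                        # hypothetical eigenvalues of a 4 x 4 block
print(select_components(eigs, 1.0, 100, k=0))       # threshold (1 + sqrt(0.04))^2 = 1.44
print(select_components(eigs, 1.0, 100, k=1))       # threshold (1 + sqrt(0.02))^2, about 1.303
```

The same eigenvalue passes or fails depending on k, because the effective sample size is n for the real k = 0 block and 2n for the complex blocks.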

Fig. 6.

Eigenvalues for $C^{(0)}$ and $C^{(10)}$ for simulated projection images with different SNRs.

We compared the denoising performance of FBsPCA, PTsPCA, and traditional PCA. The asymptotically optimal Wiener filters [11] designed with the eigenvalues and eigenimages from FBsPCA (PTsPCA, PCA, resp.) are applied to the noisy images. The number of signal components determined with PCA is 62, whereas the total number of eigenimages for FBsPCA is 430 for $10^4$ noisy images with SNR = 1/20 and L = 55 pixels. For PTsPCA, due to the nonunitary nature of the interpolation from Cartesian to polar coordinates, we do not have a simple rule for choosing the number of eigenimages. Instead, we applied denoising multiple times with different numbers of eigenimages and report here the denoising result with the smallest mean squared error (MSE). The optimal number of eigenimages for PTsPCA was 348. Of course, in practice the clean image is not available and such a procedure cannot be used. Still, the optimal denoising result of PTsPCA is inferior to that of FBsPCA, for which the number of components is chosen automatically. Even if we allow PTsPCA to use more components than FBsPCA, the denoising result does not improve. Figure 7 shows that FBsPCA gives the best denoised image. We computed the MSE, peak SNR (PSNR), and mean structural similarity (MSSIM) index [12] to quantify the denoising performance of FBsPCA compared with traditional rotational variant PCA and PTsPCA (see Table 1).
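The exact form of the asymptotically optimal Wiener filter of [11] is not reproduced here. As a hedged illustration only, the sketch below applies a simpler Wiener-type shrinkage to the coefficients of one block under the standard spiked covariance model. Each sample eigenvalue λ above the Marčenko–Pastur edge is mapped to a signal eigenvalue ℓ by inverting λ = (ℓ + σ²)(1 + γσ²/ℓ). The projection on the corresponding eigenvector is then scaled by ℓ/(ℓ + σ²). This may differ in detail from the filter used for Fig. 7 and Table 1. For k = 0, the mean removed in Eq. (21) would be added back after shrinkage.

```python
import numpy as np


def signal_eigenvalue(lam, sigma2, gamma):
    """Invert lam = (ell + sigma2) * (1 + gamma * sigma2 / ell) for the signal eigenvalue ell;
    returns 0 at or below the edge sigma2 * (1 + sqrt(gamma))^2."""
    lam = np.asarray(lam, dtype=float)
    b = lam - sigma2 * (1 + gamma)
    root = np.sqrt(np.maximum(b ** 2 - 4 * gamma * sigma2 ** 2, 0.0))
    above = lam > sigma2 * (1 + np.sqrt(gamma)) ** 2
    return np.where(above, (b + root) / 2, 0.0)


def wiener_shrink(A, V, lam, sigma2, gamma):
    """Denoise block-k coefficients A (p_k x n): project on the eigenvectors V of C^(k),
    scale each projection by ell / (ell + sigma2), and map back."""
    ell = signal_eigenvalue(lam, sigma2, gamma)
    gain = ell / (ell + sigma2)
    return V @ (gain[:, None] * (V.conj().T @ A))


sigma2, gamma = 1.0, 0.04
lam = (3.0 + sigma2) * (1 + gamma * sigma2 / 3.0)   # sample eigenvalue of a spike with ell = 3
print(signal_eigenvalue([lam, 1.0], sigma2, gamma)) # 1.0 lies below the edge 1.44
A = np.ones((2, 3))
print(wiener_shrink(A, np.eye(2), np.array([lam, 1.0]), sigma2, gamma))
```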

Fig. 7.

Denoising by FBsPCA, PTsPCA, and PCA. $n = 10^4$ and L = 55 pixels. (a) Clean image. (b) Noisy image (SNR = 1/20). (c) FBsPCA denoised. (d) PTsPCA denoised. (e) PCA denoised.

Table 1.

Denoising Effect: FBsPCA, PTsPCA, and PCA

| Method | MSE ($\times 10^{-5}$) | PSNR (dB) | 1 − MSSIM ($\times 10^{-6}$) |
|---|---|---|---|
| FBsPCA | 6.6 | 20.2 | 4.4 |
| PTsPCA | 7.7 | 19.5 | 5.9 |
| PCA | 10.1 | 18.3 | 5.9 |

7. SUMMARY AND DISCUSSION

In this paper we adapted a sampling criterion that was originally proposed in [8] into the framework of steerable PCA. The Fourier–Bessel transform with the modified cutoff criterion Rkq ≤ πL is approximately unitary and keeps the statistics of white noise approximately unchanged. This sampling criterion obtains maximum information from a set of images and their rotated and reflected copies while preventing aliasing. Instead of constructing the invariant covariance matrix from the original images and their rotated and reflected copies, we compute the covariance matrix from the truncated Fourier–Bessel expansion coefficients. The covariance matrix has a special block diagonal structure that allows us to perform PCA on different angular frequencies separately. The block diagonal structure was also observed and utilized in previous algorithms for steerable PCA. However, we show here that the block size must shrink as the angular frequency increases in order to avoid aliasing and spurious eigenimages.

While steerable PCA has found applications in computer vision and pattern recognition, this work has been mostly motivated by its application to cryo-EM. Besides compression and denoising of the experimental images, it is worth mentioning that under the assumption that the viewing directions of the images are uniformly distributed over the sphere, Kam has previously demonstrated [13] that the covariance matrix of the images is related to the expansion coefficients of the molecule in spherical harmonics. Our FBsPCA can therefore be applied in conjunction with Kam’s approach.

When applying PCA to cryo-EM images, one has to take into account the fact that cryo-EM images are not perfectly centered. It is well known that the unknown translational shifts can be estimated experimentally using the double exposure technique. Specifically, the images that are analyzed by PCA are obtained from an initial low-dose exposure, while the centers of the images are determined from a second exposure with higher dose. Furthermore, image acquisition is typically followed by an iterative global translational alignment procedure in which images are translationally aligned with their sample mean, which was noted earlier to be radially symmetric. It has been observed that such translational alignment measures produce images whose centers are off by only a few pixels from their true centers. Such small translational misalignments mainly impact the high frequencies of the eigenimages. The general problem of performing PCA for a set of images and their rotations and translations, or other noncompact groups of transformations, is beyond the scope of this paper.

Another important consideration when applying PCA to cryo-EM images is the contrast transfer function (CTF) of the microscope. The images are not simply projections of the molecule, but are rather convolved with a CTF. The Fourier transform of the CTF is real valued and oscillates between positive and negative values. The frequencies at which the CTF vanishes are known as “zero crossings.” The images do not carry any information about the molecule at zero crossing frequencies. The CTF depends on the defocus value, and changing the defocus value changes the location of the zero crossings. Therefore, projection images from several different defocus values are acquired. When performing PCA for the images, one must take into account the fact that they belong to several defocus groups characterized by different CTFs. One possible way of circumventing this issue is to apply CTF correction to the images prior to PCA. A popular CTF correction procedure is phase flipping, which has the advantage of not altering the noise statistics. Alternatively, one can estimate the sample covariance matrix corresponding to each defocus group separately, and then combine these estimators using a least squares procedure in order to estimate the covariance matrix of projection images that are unaffected by CTF. The optimal procedure for performing PCA for images that belong to different defocus groups is a topic for future research.

Finally, we remark that the Fourier–Bessel basis can be replaced in our framework with other suitable bases. For example, the 2D prolate spheroidal wave functions (PSWFs) on a disk [14] enjoy many properties that make them attractive for steerable PCA. In particular, among all band limited functions of a given band limit, they are the most spatially concentrated in the disk. They also have a separation-of-variables form, which makes them convenient for steerable PCA. However, accurate numerical evaluation of the PSWFs requires more sophistication than that of the Bessel functions, which is why we have not applied PSWFs here.

Acknowledgments

The project described was partially supported by Award Number R01GM090200 from the National Institute of General Medical Sciences, Award Number DMS-0914892 from the NSF, Award Numbers FA9550-09-1-0551 and FA9550-12-1-0317 from AFOSR, the Alfred P. Sloan Foundation, and Award Number LTR DTD 06-05-2012 from the Simons Foundation.

References

1. Frank J. Three-Dimensional Electron Microscopy of Macromolecular Assemblies: Visualization of Biological Molecules in Their Native State. Oxford; 2006.
2. van Heel M, Frank J. Use of multivariate statistics in analysing the images of biological macromolecules. Ultramicroscopy. 1981;6:187–194. doi: 10.1016/0304-3991(81)90059-0.
3. Hilai R, Rubinstein J. Recognition of rotated images by invariant Karhunen–Loéve expansion. J Opt Soc Am A. 1994;11:1610–1618.
4. Perona P. Deformable kernels for early vision. IEEE Trans Pattern Anal Mach Intell. 1995;17:488–499.
5. Uenohara M, Kanade T. Optimal approximation of uniformly rotated images: relationship between Karhunen–Loéve expansion and discrete cosine transform. IEEE Trans Image Process. 1998;7:116–119. doi: 10.1109/83.650856.
6. Jogan M, Zagar E, Leonardis A. Karhunen–Loéve expansion of a set of rotated templates. IEEE Trans Image Process. 2003;12:817–825. doi: 10.1109/TIP.2003.813141.
7. Ponce C, Singer A. Computing steerable principal components of a large set of images and their rotations. IEEE Trans Image Process. 2011;20:3051–3062. doi: 10.1109/TIP.2011.2147323.
8. Klug A, Crowther RA. Three-dimensional image reconstruction from the viewpoint of information theory. Nature. 1972;238:435–440.
9. McMahon J. On the roots of the Bessel and certain related functions. Ann Math. 1894–1895;9:23–30.
10. Kritchman S, Nadler B. Determining the number of components in a factor model from limited noisy data. Chemom Intell Lab Syst. 2008;94:19–32.
11. Singer A, Wu HT. Two-dimensional tomography from noisy projections taken at unknown random directions. SIAM J Imaging Sci. 2013;6:136–175. doi: 10.1137/090764657.
12. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process. 2004;13:600–612. doi: 10.1109/tip.2003.819861.
13. Kam Z. The reconstruction of structure from electron micrographs of randomly oriented particles. J Theor Biol. 1980;82:15–39. doi: 10.1016/0022-5193(80)90088-0.
14. Slepian D. Prolate spheroidal wave functions, Fourier analysis, and uncertainty—IV: extensions to many dimensions, generalized prolate spheroidal wave functions. Bell Syst Tech J. 1964;43:3009–3057.