Abstract

Multisensory integration involves combining information from different senses to create a unified percept. The diverse characteristics of the sensory systems make it interesting to determine how cooperation and competition between them contribute to emotional experiences. Therefore, the aims of this study were to estimate the bias arising from matched and mismatched attributes of the auditory and visual modalities and to depict the frequency-specific patterns of brain activity (theta, alpha, beta, and gamma) related to a peaceful mood by using magnetoencephalography. The present study provides evidence of auditory domination in perceptual bias during multimodality processing of peaceful consciousness. Coherence analysis suggested that theta oscillations are a transmitter of emotion signals, with the left and right brains being active in peaceful and fearful moods, respectively. Notably, hemispheric lateralization was also apparent in the alpha and beta oscillations, which might govern simple or pure information (e.g., from a single modality) in the right brain but complex or mixed information (e.g., from multiple modalities) in the left brain.

Keywords: Dominance, Audiovisual modality, Magnetoencephalography, Coherence

Introduction

Our environment is full of diverse stimuli (e.g., sound and light) that are perceived by separate human senses and can induce affective states. A successful social life requires the multitude of information from different sensory systems to be collected, analyzed, and interpreted to form unified percepts (Tanaka et al. 2009). Recent investigations of multisensory integration suggest that responses to a stimulus in one modality (e.g., auditory, visual, or tactile) can be influenced by the occurrence of a stimulus in another modality (Logeswaran and Bhattacharya 2009; Stekelenburg and Vroomen 2009). Integrating such sensory inputs depends critically on their congruency, which may enhance or degrade the linkage of environmental events (Doehrmann et al. 2010). For example, when a subject views peaceful scenery accompanied by peaceful music, the scene is most likely to be perceived as peaceful, whereas when a subject views fearful scenery accompanied by peaceful music, it may be perceived as either fearful or peaceful. In other words, conflicting information from two modalities will be combined into an ambiguous affective percept.

Because of the divergence among different sensory systems, the weightings of the modalities may not be equal in the fusion process (Esposito 2009). Some studies have suggested that visual information is more important in the decoding of emotional meaning (e.g., Dolan et al. 2001), whereas others have suggested that auditory information is more efficient for communicating emotional state (e.g., Logeswaran and Bhattacharya 2009). These contradictions indicate that the influences of the senses depend on both the stimulus properties and the task demands of everyday life (Latinus et al. 2010; Schifferstein et al. 2010). Moreover, descriptions of the dominant modality highlight the importance of intermodality discrepancies, which may vary with the situation (Fenko et al. 2010). Since the auditory and visual sensory systems usually provide redundant information, the presence of cooperation and competition in the audiovisual modality is an interesting issue in emotional experiences.

Emotion is an essential part of human life. It is stored in our bodies, affects our lives by helping us to become aware of our needs and to make decisions, and sets boundaries that protect us from others. It has been said that emotions are designed to ensure that we pay attention so that we can respond to what is happening around us. For example, when we feel afraid, the heart beats faster and blood pressure increases. This bodily reaction to fear, called the “fight or flight” response, lets us focus on the situation and quickly choose a path to safety. Because of this significance, studies of emotion, which involve subjective experience, physiology, and behavior, have attracted great interest over the last two decades. Among all the emotions, one opposite of fear is peace. It is important to maintain a peaceful mind in any situation, even when experiencing dread or uncertainty, because this helps to keep the brain functioning properly. However, previous studies on emotion have focused more on basic emotions including happiness, anger, sadness, surprise, disgust, and fear (Dolan et al. 2001; Pourtois et al. 2005; Zhao and Chen 2009), and have seldom assessed the calm state, namely peace.

Resting-state studies have shown that high-frequency oscillatory potentials are associated with information processing, perception, learning, and cognitive tasks (Wu et al. 2010). Whether the low frequencies characteristic of the resting state can also be observed in a peaceful state is another interesting question. Therefore, the aims of this study were to estimate the bias arising from matched and mismatched attributes of the auditory and visual modalities and to depict the frequency-specific patterns of brain activity (theta, alpha, beta, and gamma) related to a peaceful mood by using magnetoencephalography (MEG). For the latter aim, we investigated (1) the role of theta oscillations during a peaceful mood, (2) the relations of the different modalities with alpha and beta oscillations, respectively, and (3) the increase of gamma oscillations in the audiovisual modality. In addition, although some behavioral studies have used dynamic visual and vocal clips of affective expressions and found them to be more representative of real life (Massaro and Egan 1996; Campanella and Belin 2007; Collignon et al. 2008), most related psychophysiological and electrophysiological studies have compared static pictures with dynamic sound (Pourtois et al. 2002; Koelewijn et al. 2010), which have incompatible dimensions. Accordingly, the present study focused on how information about inner quiet is processed in the human brain by using dynamic materials in both modalities (i.e., music and video) extracted from movies.

Methods

Subjects

Ten healthy males who were ophthalmologically and neurologically normal participated in this study. Their ages ranged from 22 to 42 years (28.0 ± 6.0 years, mean ± SD). The visual acuity of all subjects was corrected, where necessary, to the normal range. Informed consent was obtained from all participants under a protocol approved by the local Ethics Committee of Yang-Ming University.

Stimuli and stimulation procedure

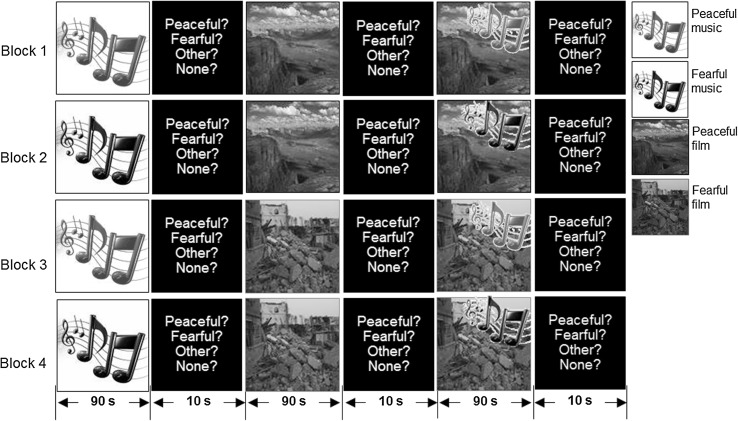

Before the recording session, a separate group of 94 healthy males rated their perceived feelings on a scale from 1 (lowest) to 10 (highest) for ten 90-s video clips (five of peaceful natural scenery and five of fearful natural disasters) and ten 90-s music clips (five peaceful and five fearful). Clips of both emotional states that received similar scores of at least 5 points were used in the subsequent MEG experiments. These stimuli formed a total of seven stimulus paradigms that consisted of three modalities (auditory, visual, and audiovisual) and two feelings (peaceful and fearful) as follows: (1) listening to peaceful (Ap) or fearful (Af) music without watching a film, (2) watching a peaceful (Vp) or fearful (Vf) film without listening to music, (3) watching a peaceful film while listening to peaceful music (ApVp) or watching a fearful film while listening to fearful music (AfVf), (4) watching a peaceful film while listening to fearful music (AfVp), and (5) watching a fearful film while listening to peaceful music (ApVf). The experimental procedure was separated into four blocks (see Fig. 1) by break intervals of about 1.5 min. During the entire experiment, the subject lay supine in a comfortable and stable position. A 1,000-Hz pure tone was presented for 10 s (250 ms on/250 ms off) before each music clip, and a checkerboard pattern was presented for 10 s (250 ms on/250 ms off) before each film clip. Thereafter, in block one, the paradigms (Ap), (Vp), and (ApVp) were applied sequentially, each followed by a 15-s response period in which the subject labeled the emotional expression (peacefulness, fear, other, or no emotion) and rated its strength (from 1 to 4 points). The participants were instructed to indicate their scores as accurately and quickly as possible after the paradigm had been presented. Similar procedures were followed in block two [i.e., (Af) + (Vp) + (AfVp)], block three [i.e., (Ap) + (Vf) + (ApVf)], and block four [i.e., (Af) + (Vf) + (AfVf)]. The visual and auditory stimuli were generated in MATLAB (The MathWorks, Natick, MA) using functions provided by the Psychophysics Toolbox (Brainard 1997; Pelli 1997) on a personal computer. The visual stimuli were projected onto a mirror by a projector, and the auditory stimuli were delivered through binaurally inserted earphones via a silicone-tube system.
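The gated pure tone that preceded each music clip can be illustrated with a short sketch. This is not the authors' MATLAB/Psychophysics Toolbox code; it is a minimal Python illustration in which the audio sampling rate (44,100 Hz), the function name `gated_tone`, and the choice to start with the "on" phase are assumptions. Only the 1,000-Hz frequency, the 10-s duration, and the 250 ms on/250 ms off cycle come from the text.

```python
import numpy as np

def gated_tone(freq_hz=1000.0, total_s=10.0, on_s=0.25, off_s=0.25, fs=44100):
    """Pure tone switched on and off by a square gate (the 'on' phase comes first)."""
    n = int(round(total_s * fs))
    t = np.arange(n) / fs
    tone = np.sin(2 * np.pi * freq_hz * t)
    # Integer sample counts avoid floating-point drift at the gate edges.
    n_on = int(round(on_s * fs))
    n_cycle = n_on + int(round(off_s * fs))
    gate = (np.arange(n) % n_cycle) < n_on
    return t, tone * gate

t, cue = gated_tone()  # 10 s of audio: 20 cycles of 250 ms tone, 250 ms silence
```

The same gate could be applied to the visibility of a checkerboard pattern to obtain the visual cue with identical timing.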

Fig. 1.

Experimental design in four blocks with the time course of serial stimulation shown along the bottom. The right-side legends indicate the stimulus types

Data acquisition

MEG signals were recorded with a whole-head 160-channel coaxial gradiometer (PQ1160C, Yokogawa Electric, Tokyo, Japan). The magnetic responses were bandpass filtered from 0.1 to 200 Hz and digitized at a sampling rate of 1,000 Hz. For off-line analysis, the nonperiodic low-frequency noise in the raw MEG data was reduced using a continuously adjusted least-squares method (Adachi et al. 2001). After artifacts with amplitudes exceeding 3,000 fT/cm had been removed from the MEG signals, the resulting data were filtered by a bandpass FIR filter from 0.5 to 50 Hz.
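The amplitude criterion can be sketched as follows. The text gives only the 3,000 fT/cm threshold; whether the authors discarded individual samples, whole segments, or channels is not stated, so the sample-wise rule below, the function name `artifact_mask`, and the synthetic data are assumptions made for illustration.

```python
import numpy as np

THRESHOLD_FT_PER_CM = 3000.0  # rejection level stated in the text

def artifact_mask(data, threshold=THRESHOLD_FT_PER_CM):
    """Mark time samples at which any channel exceeds the amplitude threshold.

    data: array of shape (n_channels, n_samples), in fT/cm.
    Returns a boolean array of length n_samples (True = artifact).
    """
    return np.any(np.abs(data) > threshold, axis=0)

# Synthetic example: 160 channels, 5 s at 1,000 Hz, with one injected artifact.
rng = np.random.default_rng(0)
demo = rng.normal(0.0, 100.0, size=(160, 5000))
demo[12, 2000:2010] = 5000.0
bad = artifact_mask(demo)
clean = demo[:, ~bad]  # assumed policy: drop the flagged samples on all channels
```

Dropping samples shortens the recording, so in practice one might instead reject the whole epoch that contains a flagged sample; the mask supports either policy.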

Anatomic image data were acquired from the subjects using a GE Healthcare Signa 1.5-T Excite scanner (General Electric, Milwaukee, WI) with an eight-channel head coil. The imaging parameters were as follows: TR = 8.548 ms, TE = 1.836 ms, TI = 400 ms, flip angle = 15°, field of view = 256 × 256 mm², matrix size = 256 × 256, and no gaps. This gave an in-plane resolution of 1 × 1 mm² and a slice thickness of 1.5 mm.
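As a quick check of the stated in-plane resolution, assuming square pixels obtained by dividing the field of view by the matrix size along each axis (the symbols $\Delta x$, $\Delta y$, and $N$ are introduced here for illustration):

$$
\Delta x = \Delta y = \frac{\text{FOV}}{N} = \frac{256\ \text{mm}}{256} = 1\ \text{mm}
$$

Together with the 1.5-mm slice thickness, this corresponds to a voxel of $1 \times 1 \times 1.5\ \text{mm}^3$.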

The subject’s head shape and position relative to the MEG sensor were measured using a three-dimensional digitizer and five markers. Three predetermined landmarks (nasion and bilateral preauricular points) on the scalp were also used to match and coregister MEG source signals on the anatomic MRI scans to ensure realistic source reconstruction according to the coordinate systems.

Data analysis

The exported MEG data were preprocessed with the FastICA algorithm (Hyvärinen et al. 1999) to remove eye movements, blink artifacts, and ECG activity. The cleaned data were then segmented into 2-s epochs beginning at the start of the retained segment (the first 10 s of the original data in each paradigm from all subjects were ignored to eliminate the possibility of attention transients associated with stimulus initiation). Two types of analysis were applied to the epoched data. The first produced an averaged spectrum for each channel by using wavelet transformation. The spectral power was calculated in 0.5-Hz steps and then grouped into the following ten brain regions: (1) left frontal; (2) right frontal; (3) left frontotemporal; (4) right frontotemporal; (5) left temporal; (6) right temporal; (7) left central; (8) right central; (9) left occipital; and (10) right occipital. For each frequency band, these values were expressed as a percentage of the total power so that different types of stimulation could be compared. Statistical differences were analyzed by a two-way repeated-measures ANOVA (with Greenhouse–Geisser adjustment) with within-subject factors [auditory stimulus type (none, peace, and fear) × visual stimulus type (none, peace, and fear)] for the various brain waves (four frequency bands) and areas (ten regions). Bonferroni-corrected post hoc tests (paired t tests) were conducted only when preceded by significant main effects (p < 0.05). The second type of analysis was designed to reveal functional connectivity between different brain areas by estimating coherence between channels. From a total of 11,520 calculations [i.e., 160 channels × (160 − 16) channels in other areas / 2 to remove repeated pairs = 11,520], grand coherences were averaged within the regions defined above. In total, 25 connections were calculated (between brain regions 1 and 2, 1 and 3, 1 and 5, 1 and 7, 1 and 9, 2 and 4, 2 and 6, 2 and 8, 2 and 10, 3 and 4, 3 and 5, 3 and 7, 3 and 9, 4 and 6, 4 and 8, 4 and 10, 5 and 6, 5 and 7, 5 and 9, 6 and 8, 6 and 10, 7 and 8, 7 and 9, 8 and 10, and 9 and 10). Coherence values (ranging between 0 and 1) for each subject were converted to binary values (0 or 1) with a threshold of the mean plus 2 SDs (corresponding approximately to the 95 % confidence level, α = 0.05) in each of the theta, alpha, beta, and gamma bands. The binary values of 1 (significant candidates) were then summed across subjects to form a connection map. Assembled values of 8 or greater in the grand map (i.e., the connection was present in at least 80 % of the subjects, 8/10) were considered indicative of significant interaction between two brain regions. These criteria for ensuring a valid linkage were similar to those used in our previous study for estimating mutual information (Yang et al. 2011).
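The connectivity criteria described above can be sketched in a few lines. The 25 listed connections are exactly the within-hemisphere region pairs plus the five homologous left-right pairs, which the sketch reproduces. Several details are assumptions rather than the authors' implementation: the mean and SD are taken over the 25 connection values of one subject in one band, the population SD is used, and the names `region_pairs`, `binarize`, and `grand_map` are hypothetical. The coherence values are synthetic.

```python
import numpy as np
from itertools import combinations

LEFT = [1, 3, 5, 7, 9]     # odd labels: left-hemisphere regions
RIGHT = [2, 4, 6, 8, 10]   # even labels: right-hemisphere regions

def region_pairs():
    """Within-hemisphere pairs plus homologous left-right pairs (25 in total)."""
    pairs = set(combinations(LEFT, 2)) | set(combinations(RIGHT, 2))
    pairs |= set(zip(LEFT, RIGHT))
    return sorted(pairs)

def binarize(coh):
    """1 where coherence exceeds mean + 2 SD of this subject's values, else 0."""
    coh = np.asarray(coh, dtype=float)
    return (coh > coh.mean() + 2.0 * coh.std()).astype(int)

def grand_map(coh_by_subject, min_subjects=8):
    """Sum binary maps over subjects; flag connections reaching min_subjects."""
    counts = np.sum([binarize(c) for c in coh_by_subject], axis=0)
    return counts, counts >= min_subjects

PAIRS = region_pairs()
N_CHANNEL_PAIRS = 160 * (160 - 16) // 2  # cross-region channel pairs, 16 sensors per region

# Synthetic coherences: 10 subjects x 25 connections, one strong link in 9 subjects.
rng = np.random.default_rng(0)
coh = rng.uniform(0.18, 0.22, size=(10, len(PAIRS)))
coh[:9, 3] = 0.9
counts, significant = grand_map(coh)
```

In this toy example only the boosted connection passes the 8-of-10 criterion; the `min_subjects` argument makes it easy to inspect weaker links (for example, a count of 7) as well.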

Results

Behavioral measures

Before the recording session, the behavioral analyses revealed that more than 80 % of the 94 participants in the rating group correctly assigned the peaceful and fearful clips to the corresponding emotional states. The scores (with a maximum of 10) for the peaceful and fearful clips were 7.1 ± 1.7 and 6.7 ± 2.5, respectively, and did not differ significantly (p > 0.05), consistent with the requirement that the selected clips have similar scores.

The responses measured in the MEG experiments revealed that all 10 subjects reported the expected feelings for the unimodal stimulus clips [i.e., feeling peaceful about Ap (2.7 ± 0.4 in strength, with a maximum of 4) or Vp (2.5 ± 0.5), and feeling fearful about Af (2.4 ± 0.5) or Vf (2.5 ± 0.7)], and gave comparable scores to the different modalities in the same state. However, four of the subjects considered ApVf to be peaceful (2.5 ± 0.7) and AfVp to be fearful (2.0 ± 0.8), whereas four others made the opposite assignments. Of the remaining two, one considered both ApVf and AfVp to be fearful (2.0 ± 0.0) and the other considered both to be peaceful (1.5 ± 0.7).
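The response pattern for the incongruent clips can be tallied by asking which stream each subject's label followed. The sketch below is only an illustration: the category names and the function `dominance` are hypothetical, and the reading that one of the remaining two subjects labeled both clips fearful while the other labeled both peaceful is an interpretation of the text.

```python
from collections import Counter

def dominance(label_ApVf, label_AfVp):
    """Classify one subject from the emotion reported for each incongruent clip."""
    if (label_ApVf, label_AfVp) == ("peaceful", "fearful"):
        return "follows music"   # label matches the auditory stream in both clips
    if (label_ApVf, label_AfVp) == ("fearful", "peaceful"):
        return "follows film"    # label matches the visual stream in both clips
    return "no consistent modality"

# Pattern reported in the text: 4 + 4 + 2 subjects.
responses = ([("peaceful", "fearful")] * 4
             + [("fearful", "peaceful")] * 4
             + [("fearful", "fearful"), ("peaceful", "peaceful")])
tally = Counter(dominance(a, v) for a, v in responses)
```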

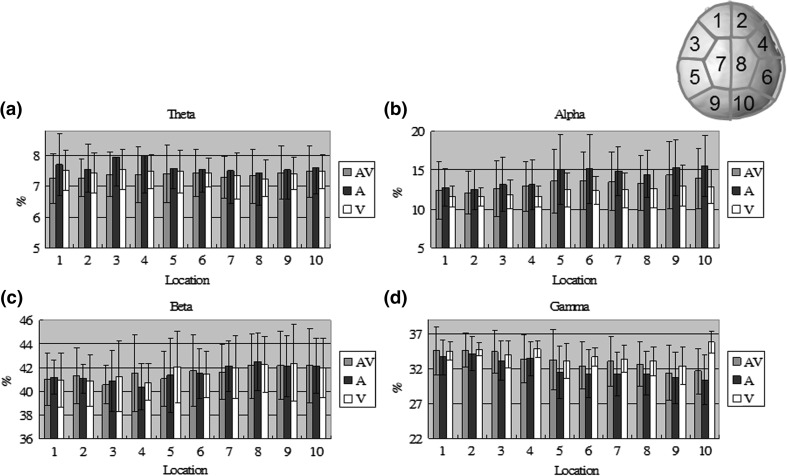

Frequency analysis

Table 1 lists the significance of the brain rhythms for the different measures. The auditory modality differed significantly (p < 0.05) in the alpha and gamma bands: relative to the no-stimulus condition, the peaceful stimulus produced a positive shift in alpha power and a negative shift in gamma power around the right frontotemporal, temporal, central, and occipital areas. There was also a significant interaction (i.e., audiovisual modality) around the right temporal, central, and occipital areas. However, no significant main effect was found for the visual modality. For a closer look at the peaceful effect, Fig. 2 shows the mean theta, alpha, beta, and gamma activity of the subjects for the Ap, Vp, and ApVp modalities in the ten regions. Each bar represents the percentage ratio between power in one frequency band and total power across all bands for one modality. Ap induced stronger theta and alpha power in the frontotemporal and temporal areas, respectively, whereas Vp induced stronger beta and gamma power in the left temporal and right occipital areas. Interestingly, ApVp induced the strongest power of the three modalities only in the beta and gamma bands, and only in a few areas.
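The percentage measure plotted in each bar can be illustrated with a short sketch for a single channel. Two points are assumptions rather than the authors' method: an FFT periodogram is substituted for the wavelet transformation used in the study (a 2-s epoch at 1,000 Hz conveniently yields 0.5-Hz bins), and the band edges in `BANDS` are conventional values that the text does not specify. The input signal is synthetic.

```python
import numpy as np

FS = 1000      # sampling rate in Hz, from the text
EPOCH_S = 2    # epoch length in seconds, from the text
# Assumed band edges in Hz; the paper does not state them.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 50)}

def epoch(signal, skip_s=10):
    """Drop the first skip_s seconds, then cut into non-overlapping 2-s epochs."""
    x = np.asarray(signal, dtype=float)[skip_s * FS:]
    n = EPOCH_S * FS
    return x[: len(x) // n * n].reshape(-1, n)

def relative_band_power(signal):
    """Percentage of the summed band power that falls in each band."""
    ep = epoch(signal)
    spec = np.mean(np.abs(np.fft.rfft(ep, axis=1)) ** 2, axis=0)  # spectrum averaged over epochs
    freqs = np.fft.rfftfreq(ep.shape[1], d=1.0 / FS)              # 0.5-Hz resolution
    power = {b: spec[(freqs >= lo) & (freqs < hi)].sum() for b, (lo, hi) in BANDS.items()}
    total = sum(power.values())
    return {b: 100.0 * p / total for b, p in power.items()}

# Synthetic 90-s signal dominated by a 10-Hz component with a weaker 40-Hz component.
t = np.arange(90 * FS) / FS
demo = np.sin(2 * np.pi * 10 * t) + 0.2 * np.sin(2 * np.pi * 40 * t)
pct = relative_band_power(demo)
```

Because each band is normalized by the total across bands, the four percentages sum to 100 for every channel, which is what allows conditions with different absolute signal strength to be compared.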

Table 1.

Significances (p values) of the brain rhythms from different modalities at four frequency bands across ten brain areas

| Frequency band | Modality | Location 1 | Location 2 | Location 3 | Location 4 | Location 5 | Location 6 | Location 7 | Location 8 | Location 9 | Location 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Theta | Auditory test (none, peace, and fear) | 0.94; F(1.76, 14.10) = 0.054 | 0.84; F(1.89, 15.08) = 0.168 | 0.57; F(1.69, 13.51) = 0.578 | 0.55; F(1.65, 13.20) = 0.605 | 0.72; F(1.49, 11.91) = 0.333 | 0.25; F(1.07, 8.56) = 1.489 | 0.69; F(1.70, 13.62) = 0.377 | 0.92; F(1.32, 10.57) = 0.076 | 0.64; F(1.39, 11.08) = 0.448 | 0.47; F(1.44, 11.55) = 0.693 |
| | Visual test (none, peace, and fear) | 0.31; F(1.98, 15.85) = 1.263 | 0.06; F(1.79, 14.28) = 3.264 | 0.25; F(1.75, 14.00) = 1.491 | 0.23; F(1.84, 14.70) = 1.589 | 0.18; F(1.99, 15.94) = 1.873 | 0.05; F(1.48, 11.87) = 3.708 | 0.31; F(1.81, 14.47) = 1.236 | 0.12; F(1.59, 12.72) = 2.374 | 0.13; F(1.40, 11.21) = 2.590 | 0.15; F(1.13, 9.07) = 2.460 |
| | A*V | 0.16; F(2.22, 17.77) = 1.963 | 0.31; F(2.36, 18.90) = 1.249 | 0.15; F(3.00, 23.61) = 1.772 | 0.2; F(2.33, 18.67) = 1.594 | 0.92; F(2.54, 20.32) = 0.216 | 0.34; F(2.88, 23.01) = 1.165 | 0.47; F(2.47, 19.77) = 0.905 | 0.42; F(2.54, 20.29) = 0.999 | 0.85; F(2.92, 23.35) = 0.328 | 0.67; F(2.28, 18.26) = 0.587 |
| Alpha | Auditory test (none, peace, and fear) | 0.11; F(1.32, 10.56) = 2.531 | 0.18; F(1.28, 10.25) = 1.858 | 0.07; F(1.27, 10.13) = 3.830 | **0.047** P > N; F(1.22, 9.75) = 4.899 | **0.011** P > N; F(1.57, 12.58) = 7.207 | **0.015** P > N; F(1.38, 11.07) = 7.293 | **0.009** P > N; F(1.48, 11.82) = 8.114 | **0.023** P > N; F(1.43, 11.47) = 5.984 | **0.008** P > N; F(1.37, 10.95) = 9.097 | **0.008** P > N; F(1.44, 11.52) = 8.771 |
| | Visual test (none, peace, and fear) | 0.99; F(1.33, 10.61) = 0.010 | 0.32; F(1.65, 12.21) = 1.207 | 0.75; F(1.89, 15.15) = 0.291 | 0.61; F(1.74, 13.92) = 0.508 | 0.82; F(1.75, 14.01) = 0.202 | 0.52; F(1.23, 9.84) = 0.527 | 0.32; F(1.81, 14.49) = 1.192 | 0.54; F(1.78, 14.22) = 0.636 | 0.21; F(1.60, 12.79) = 1.701 | 0.22; F(1.29, 10.32) = 1.626 |
| | A*V | 0.43; F(1.46, 11.71) = 0.806 | 0.32; F(2.43, 19.42) = 1.207 | 0.16; F(1.41, 11.25) = 1.737 | 0.15; F(1.91, 15.27) = 2.118 | 0.08; F(2.47, 19.79) = 2.234 | 0.07; F(1.90, 15.19) = 3.201 | 0.07; F(2.56, 20.45) = 2.822 | **0.023**; F(2.22, 17.77) = 4.526 | 0.05; F(2.08, 16.67) = 3.431 | **0.028**; F(2.85, 22.77) = 3.695 |
| Beta | Auditory test (none, peace, and fear) | 0.29; F(1.64, 13.11) = 1.325 | 0.31; F(1.22, 9.73) = 1.204 | 0.83; F(1.55, 12.37) = 0.180 | 0.39; F(1.61, 12.90) = 0.994 | 0.87; F(1.69, 13.50) = 0.130 | 0.31; F(1.24, 9.91) = 1.175 | 0.34; F(1.67, 13.39) = 1.135 | 0.05; F(1.27, 10.12) = 4.338 | 0.72; F(1.55, 12.38) = 0.323 | 0.12; F(1.83, 14.65) = 2.445 |
| | Visual test (none, peace, and fear) | 0.19; F(1.68, 13.42) = 1.797 | 0.1; F(1.49, 11.88) = 2.564 | 0.27; F(1.22, 9.72) = 1.380 | 0.08; F(1.83, 14.66) = 2.921 | 0.1; F(1.40, 11.18) = 2.587 | 0.27; F(1.43, 11.44) = 1.407 | 0.26; F(1.84, 14.70) = 1.464 | 0.21; F(1.68, 13.45) = 1.683 | 0.13; F(1.81, 14.45) = 2.321 | 0.20; F(1.86, 14.86) = 1.791 |
| | A*V | 0.1; F(2.24, 17.88) = 2.086 | 0.13; F(2.13, 17.02) = 1.904 | 0.05; F(2.34, 18.73) = 2.574 | **0.043**; F(2.62, 20.99) = 3.354 | 0.17; F(2.51, 20.05) = 1.696 | 0.08; F(2.51, 10.07) = 2.274 | **0.026**; F(3.36, 26.91) = 3.457 | **0.023**; F(2.59, 20.69) = 4.121 | 0.06; F(2.60, 20.78) = 2.843 | **0.024**; F(2.97, 23.73) = 3.790 |
| Gamma | Auditory test (none, peace, and fear) | 0.42; F(1.47, 11.75) = 0.900 | 0.52; F(1.52, 12.15) = 0.676 | 0.3; F(1.43, 11.42) = 1.291 | **0.046** N > P; F(1.54, 12.31) = 4.304 | 0.07; F(1.90, 15.23) = 3.104 | **0.004** N > P; F(1.51, 12.09) = 10.261 | **0.016** N > P; F(1.53, 12.23) = 6.621 | **0.025** N > P; F(1.92, 15.33) = 4.793 | **0.022** N > P; F(1.62, 12.96) = 5.658 | **0.002** N > P; F(1.69, 13.49) = 10.866 |
| | Visual test (none, peace, and fear) | 0.5; F(1.77, 14.19) = 0.706 | 0.16; F(1.78, 14.26) = 1.996 | 0.22; F(1.87, 14.95) = 1.628 | 0.36; F(1.58, 12.64) = 1.091 | 0.95; F(1.78, 14.23) = 0.043 | 0.87; F(1.59, 12.71) = 0.132 | 0.78; F(1.67, 13.35) = 0.247 | 0.58; F(1.87, 14.97) = 0.561 | 0.4; F(1.54, 12.34) = 0.961 | 0.65; F(1.13, 9.05) = 0.251 |
| | A*V | 0.46; F(2.45, 19.63) = 0.918 | 0.29; F(2.82, 22.54) = 1.295 | 0.16; F(2.68, 21.47) = 1.741 | 0.52; F(2.40, 19.17) = 0.823 | 0.08; F(3.07, 24.58) = 2.252 | **0.043**; F(3.02, 24.19) = 3.143 | 0.05; F(3.25, 26.02) = 2.807 | **0.002**; F(2.88, 23.01) = 7.094 | **0.011**; F(3.10, 24.82) = 4.479 | **0.004**; F(2.88, 23.06) = 6.063 |

The location numbers correspond to ten brain regions: 1, left frontal; 2, right frontal; 3, left frontotemporal; 4, right frontotemporal; 5, left temporal; 6, right temporal; 7, left central; 8, right central; 9, left occipital; and 10, right occipital

The notations of P and N indicate peaceful stimulus and no-stimulus conditions

Bold numbers indicate significant values at p < 0.05

Fig. 2.

Mean values of the (a) theta, (b) alpha, (c) beta, and (d) gamma activity from the ten subjects for the Ap, Vp, and ApVp modalities in ten different regions. Each bar represents the percentage ratio between power in one frequency band and total power across all bands for one modality. The location numbers correspond to a top view of the brain with labels in the upper-right corner

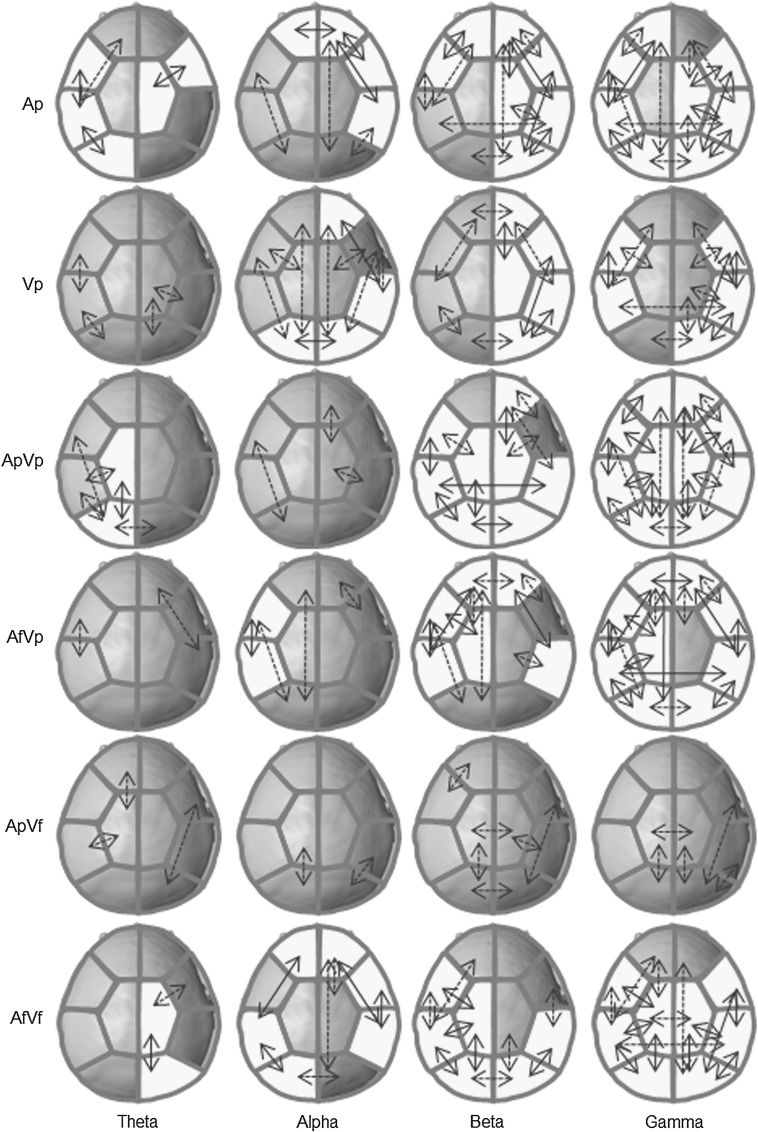

Coherence analysis

The grand functional connection maps for each coherent frequency band are illustrated in Fig. 3. In the pure auditory modality (Ap), coherent theta oscillations were found in the left hemisphere areas, whereas coherent alpha oscillations were found in the right hemisphere. Coherent oscillations of higher frequencies (>30 Hz) were found in both hemispheres. An interhemispheric connection was observed only for alpha activity in the frontal area. In the pure visual modality (Vp), coherent alpha and beta oscillations were found in the right hemisphere areas, while coherent gamma oscillations were found in both hemispheres around the frontotemporal, temporal, and right posterior areas. An interhemispheric connection was observed only for alpha activity in the occipital area. In the audiovisual modality under congruent conditions (ApVp and AfVf), coherent theta oscillations were found in the left and right hemisphere areas, respectively, while coherent beta and gamma oscillations were found in both hemispheres, with coherent beta oscillations being more frequent in the left hemisphere areas. Interhemispheric connections with higher activity were observed in the posterior areas. Notably, in the audiovisual modality under incongruent conditions (AfVp or ApVf), coherent oscillations were found either in the left hemisphere areas or not at all. Interhemispheric connections with higher activity were observed in the frontal and temporal areas in the former condition but not in the latter condition.

Fig. 3.

The grand functional connection maps between pairs of brain regions. Each yellow patch indicates a significant value averaged over 16 sensors. Solid lines represent assembled values of 8 or greater, while dotted lines represent assembled values equal to 7. (Color figure online)

Discussion

Magnetophysiological analysis

The auditory effect was significantly related to alpha activity (positively) and gamma activity (negatively), and the audiovisual interaction was also significant in these bands. In the peaceful mode, the auditory stimulus induced a higher percentage of low-frequency power in the temporal area, while the visual stimulus induced a higher percentage of high-frequency power in the temporal and occipital areas. These observations suggest that information extraction in a single modality can be completely dependent on its own pathway (Zhao and Chen 2009). However, the various types of information arriving through different pathways in a multimodal condition may exert competitive effects, especially in incongruent conditions, so that a biased sensation finally manifests after fusion (Stekelenburg and Vroomen 2009; Koelewijn et al. 2010). The event-related potential research of Fort et al. (2002) revealed that the magnitude of early interactions in recognition partly depended on the dominant sensory modality of the subjects, with those who were considered to be auditory dominant being faster to recognize auditory objects than visual objects, and visually dominant individuals recognizing visual objects more rapidly than auditory objects. In the present study, the auditory modality had a larger influence on emotion than the visual modality and more closely resembled the audiovisual modality. This bias is in line with the study of Most and Michaelis (2012), which showed that the accuracy of emotion perception among children with hearing loss was lower than that of children with normal hearing in all three conditions: auditory, visual, and auditory-visual. Baumgartner et al. (2006) also demonstrated that music can markedly enhance the emotional experience evoked by affective pictures. However, the dominance can also depend on the period and quality of the stimulus usage (Fenko et al. 2010). Our results suggest only that the auditory modality may be facilitated more easily in a peaceful state. In clinical practice, appropriate stimuli could exploit the existence of a specific dominance in an individual subject to improve his or her mental condition (Fenko et al. 2010).

Coherences

Statistical comparisons between ApVp and the other modalities (i.e., Ap, Vp, and ApVf) revealed significant differences in the theta, alpha, beta, and gamma bands around the frontotemporal and occipital areas. This prompted us to draw coherence maps in order to reveal how each frequency band is processed in each modality.

Theta coherence

Linkages in the theta connectivity patterns were more frequent in the left hemisphere for Ap, whereas they were more common in the right hemisphere (especially in the frontotemporal areas) for Vf. This observation is similar to that reported by Pourtois et al. (2005), whose analyses of emotional happiness and fear revealed supplementary convergence areas situated mainly anteriorly in the left hemisphere for happy pairings and in the right hemisphere for fear pairings. Also, Dolan et al. (2001) showed that perceptual facilitation when processing fearful faces is expressed through modulation of neuronal responses in the fusiform cortex, which is associated with the processing of face identity, and in the amygdala, which matches our findings in the frontotemporal area. The importance of low-frequency neural activity was addressed in the MEG study of Luo et al. (2010), which revealed that delta-theta phase modulation across early sensory areas plays an essential “active” role in continuously tracking naturalistic audiovisual streams, carrying dynamic multisensory information, and reflecting cross-sensory interaction in real time.

Alpha coherence

Linkages in the alpha connectivity patterns were more frequent in the left hemisphere for AfVp, whereas they were more common in the right hemisphere for the unimodal conditions. A notable result was the absence of significant alpha coherence for ApVp and ApVf, which constitute conditions of strong peacefulness. Chen et al. (2010) observed early alpha suppression in both the AV > A and AV > V contrasts, which implies that alpha activity may be associated with the ascending specific sensory information in each pathway (Yang et al. 2008).

Beta coherence

Linkages in the beta connectivity patterns arose more frequently in the left hemisphere for the bimodal conditions (except ApVf), but more frequently in the right hemisphere for the unimodal conditions. Senkowski et al. (2009) concluded that the association between oscillatory beta activity and integrative multisensory processing is directly linked to the effects of multisensory reaction-time facilitation, which means that beta activity plays an important role in early multisensory integrative processing.

Gamma coherence

Linkages in the gamma connectivity patterns arose in both hemispheres for each modality except ApVf. The involvement of activity at higher frequencies has been discussed in many reports on multimodality. For example, Mishra et al. (2007) showed that short-latency ERP activity localized to the auditory cortex and polymodal cortex of the temporal lobe, concurrent with gamma bursts in the visual cortex, was associated with perception of the double-flash illusion in early cross-modal interactions. Moreover, dynamic coupling of neural populations in the gamma frequency range might be a crucial mechanism for integrative multisensory processes (Senkowski et al. 2009).

Conclusion

The present study provides evidence of auditory domination in perceptual bias during multimodality processing of peaceful consciousness. Coherence analysis suggested that the theta oscillations are a transmitter of emotion signals, with the left and right brains being active in peaceful and fearful moods, respectively. Notably, cerebral hemispheric lateralization was also apparent in the alpha and beta oscillations as asymmetric communications that might handle simple or pure information in the right brain but complex or mixed information in the left brain.

Acknowledgments

This study was supported in part by research grants from National Science Council (NSC-99-2410-H-130-018-, NSC-100-2628-E-010-002-MY3) and Academia Sinica (AS-99-TP-AC1), Taiwan.

Contributor Information

Chia-Yen Yang, Email: cyyang@mcu.edu.tw.

Ching-Po Lin, Phone: +886-2-28267338, FAX: +886-2-28262285, Email: chingpolin@gmail.com.

References

- Adachi Y, Shimogawara M, Higuchi M, Haruta Y, Ochiai M. Reduction of non-periodic environmental magnetic noise in MEG measurement by continuously adjusted least squares method. IEEE Trans Appl Supercon. 2001;11:669–672. doi: 10.1109/77.919433.

- Baumgartner T, Esslen M, Jancke L. From emotion perception to emotion experience: emotions evoked by pictures and classical music. Int J Psychophysiol. 2006;60:34–43. doi: 10.1016/j.ijpsycho.2005.04.007.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436. doi: 10.1163/156856897X00357.

- Campanella S, Belin P. Integrating face and voice in person perception. Trends Cogn Sci. 2007;11:535–543. doi: 10.1016/j.tics.2007.10.001.

- Chen YH, Edgar JC, Holroyd T, Dammers J, Thonnessen H, Roberts TP, Mathiak K. Neuromagnetic oscillations to emotional faces and prosody. Eur J Neurosci. 2010;31:1818–1827. doi: 10.1111/j.1460-9568.2010.07203.x.

- Collignon O, Girard S, Gosselin F, Roy S, Saint-Amour D, Lassonde M, Lepore F. Audio-visual integration of emotion expression. Brain Res. 2008;1242:126–135. doi: 10.1016/j.brainres.2008.04.023.

- Doehrmann O, Weigelt S, Altmann CF, Kaiser J, Naumer MJ. Audiovisual functional magnetic resonance imaging adaptation reveals multisensory integration effects in object-related sensory cortices. J Neurosci. 2010;30:3370–3379. doi: 10.1523/JNEUROSCI.5074-09.2010.

- Dolan RJ, Morris JS, de Gelder B. Crossmodal binding of fear in voice and face. Proc Natl Acad Sci USA. 2001;98:10006–10010. doi: 10.1073/pnas.171288598.

- Esposito A. The perceptual and cognitive role of visual and auditory channels in conveying emotional information. Cognit Comput. 2009;1:268–278. doi: 10.1007/s12559-009-9017-8.

- Fenko A, Schifferstein HN, Hekkert P. Shifts in sensory dominance between various stages of user-product interactions. Appl Ergon. 2010;41:34–40. doi: 10.1016/j.apergo.2009.03.007.

- Fort A, Delpuech C, Pernier J, Giard MH. Dynamics of cortico-subcortical cross-modal operations involved in audio-visual object detection in humans. Cereb Cortex. 2002;12:1031–1039. doi: 10.1093/cercor/12.10.1031.

- Hyvärinen A, Särelä J, Vigário R. Spikes and bumps: artefacts generated by independent component analysis with insufficient sample size. In: Proceedings of international workshop on independent component analysis and signal separation (ICA’99). 1999. pp 425–429.

- Koelewijn T, Bronkhorst A, Theeuwes J. Attention and the multiple stages of multisensory integration: a review of audiovisual studies. Acta Psychol. 2010;134:372–384. doi: 10.1016/j.actpsy.2010.03.010.

- Latinus M, VanRullen R, Taylor MJ. Top-down and bottom-up modulation in processing bimodal face/voice stimuli. BMC Neurosci. 2010;11:36. doi: 10.1186/1471-2202-11-36.

- Logeswaran N, Bhattacharya J. Crossmodal transfer of emotion by music. Neurosci Lett. 2009;455:129–133. doi: 10.1016/j.neulet.2009.03.044.

- Luo H, Liu Z, Poeppel D. Auditory cortex tracks both auditory and visual stimulus dynamics using low-frequency neuronal phase modulation. PLoS Biol. 2010;8:e1000445. doi: 10.1371/journal.pbio.1000445.

- Massaro DW, Egan PB. Perceiving affect from the voice and the face. Psychon Bull Rev. 1996;3:215–221. doi: 10.3758/BF03212421.

- Mishra J, Martinez A, Sejnowski TJ, Hillyard SA. Early cross-modal interactions in auditory and visual cortex underlie a sound-induced visual illusion. J Neurosci. 2007;27:4120–4131. doi: 10.1523/JNEUROSCI.4912-06.2007.

- Most T, Michaelis H. Auditory, visual, and auditory-visual perception of emotions by young children with hearing loss vs. children with normal hearing. J Speech Lang Hear Res. 2012. doi: 10.1044/1092-4388(2011/11-0060).

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442. doi: 10.1163/156856897X00366.

- Pourtois G, Debatisse D, Despland PA, de Gelder B. Facial expressions modulate the time course of long latency auditory brain potentials. Brain Res Cogn Brain Res. 2002;14:99–105. doi: 10.1016/S0926-6410(02)00064-2.

- Pourtois G, de Gelder B, Bol A, Crommelinck M. Perception of facial expressions and voices and of their combination in the human brain. Cortex. 2005;41:49–59. doi: 10.1016/S0010-9452(08)70177-1.

- Schifferstein HNJ, Otten JJ, Thoolen F, Hekkert P. The experimental assessment of sensory dominance in a product development context. J Design Res. 2010;8:119–144. doi: 10.1504/JDR.2010.032074.

- Senkowski D, Schneider TR, Tandler F, Engel AK. Gamma-band activity reflects multisensory matching in working memory. Exp Brain Res. 2009;198:363–372. doi: 10.1007/s00221-009-1835-0.

- Stekelenburg JJ, Vroomen J. Neural correlates of audiovisual motion capture. Exp Brain Res. 2009;198:383–390. doi: 10.1007/s00221-009-1763-z.

- Tanaka E, Kida T, Inui K, Kakigi R. Change-driven cortical activation in multisensory environments: an MEG study. Neuroimage. 2009;48:464–474. doi: 10.1016/j.neuroimage.2009.06.037.

- Wu L, Eichele T, Calhoun VD. Reactivity of hemodynamic responses and functional connectivity to different states of alpha synchrony: a concurrent EEG-fMRI study. Neuroimage. 2010;52:1252–1260. doi: 10.1016/j.neuroimage.2010.05.053.

- Yang CY, Hsieh JC, Chang Y. Foveal evoked magneto-encephalography features related to parvocellular pathway. Vis Neurosci. 2008;25:179–185. doi: 10.1017/S0952523808080413.

- Yang CY, Chao YP, Lin CP. Differences in early dynamic connectivity between visual expansion and contraction stimulations revealed by an fMRI-directed MEG approach. J Neuroimaging. 2011. doi: 10.1111/j.1552-6569(2011.00623.x).

- Zhao H, Chen ACN. Both happy and sad melodies modulate tonic human heat pain. J Pain. 2009;10:953–960. doi: 10.1016/j.jpain.2009.03.006.