Abstract

Signals from different sensory modalities may converge on a single neuron. We study theoretically a setup in which one signal is transmitted via facilitating synapses (F signal) and another via depressing synapses (D signal). When both signals are present, the postsynaptic cell preferentially encodes information about slow components of the F signal and fast components of the D signal, whereas for a single signal, transmission is broadband. We also show that, in the fluctuation-driven regime, the rate of information transmission may be increased through stochastic resonance (SR). Remarkably, the role of the beneficial noise is played by another signal, which is itself represented in the spike train of the postsynaptic cell.

Keywords: short-term plasticity, information transmission, information filtering, multi-sensory integration, stochastic resonance

1. Introduction

A single neuron can receive inputs that encode more than one signal. The combined effect of several stimuli on the postsynaptic spike train can be regarded as a non-trivial signal interaction. When stimuli stem from different sensory modalities (like vision and hearing), such interaction is often referred to as multisensory integration (Shimojo and Shams, 2001; Driver and Noesselt, 2008) and has been demonstrated at many levels, ranging from behavioral experiments (Sekuler et al., 1997; Shams et al., 2000) through fMRI (Macaluso et al., 2000) and EEG studies (Giard and Peronnet, 1999) to intracellular measurements in single neurons (Meredith and Stein, 1983; Stein and Stanford, 2008).

Typically, studies of multisensory integration consider the overall increase or decrease in firing rate. The information transmission about each of the time-dependent stimuli and how it is affected by the interaction of signals has received less attention. However, temporal features may play an important role in multisensory integration. It thus seems worthwhile to study theoretically how two time-varying signals can interact in a neuron.

In general, signals may differ in their temporal structure and in the way they enter the postsynaptic dynamics, e.g., on different dendritic or somatic locations (Rowland et al., 2007). On a functional level, the synapses transmitting one signal may differ in their filter properties from the synapses transmitting the other. Synaptic filter properties are shaped by short-term synaptic plasticity (Zucker and Regehr, 2002): upon repetitive stimulation, synaptic efficacies can either increase (facilitation) or decrease (depression), depending on the nature of the synapse. This usage-dependence of synaptic transmission endows synapses with a variety of functions relevant to information processing (Abbott and Regehr, 2004).

An important aspect of neural information transmission is whether neurons preferentially transmit information about slow or fast components of a signal, i.e., whether they act as a filter of sensory information (Chacron et al., 2003; Krahe et al., 2008; Middleton et al., 2009; Sharafi et al., 2013). Previous theoretical studies have found that information transmission through homogeneous populations of dynamic synapses hardly depends on signal frequency (Lindner et al., 2009; Merkel and Lindner, 2010), although it has recently been shown (by taking synaptic stochasticity into account) that this is only true for large synaptic populations (Rosenbaum et al., 2012). Filter properties of heterogeneous populations, in which synapses differ in their dynamics depending on the kind of presynaptic cell, have not yet been studied. Examples of such a scenario include Purkinje cells [parallel/climbing fibers making facilitating/depressing synapses, (Kandel et al., 2000)] and simple cells in cat visual cortex [cortico-cortical facilitating and thalamo-cortical depressing synapses, (Banitt et al., 2007)]. In this paper, we study the neural transmission of two independent signals that enter via such distinct synaptic populations.

Specifically, we explore theoretically how the presence or absence of one signal can influence the neuron's information transmission properties with respect to the other signal. To this end, we use information-theoretic measures to quantify (a) the amount of information transferred about certain frequency components of the signal and (b) the total amount of information transferred about each of the signals. We find that the target neuron preferentially encodes information about slow components of the signal impinging on facilitating synapses and fast components of the signal impinging on depressing synapses when both signals are present. This is in contrast to the case of only one signal, in which information transfer is largely frequency independent. Further, we find that the presence of a second signal can increase the total rate of information transmission about the first signal, through the effect of stochastic resonance (SR) (Gammaitoni et al., 1998). In order to clarify the role of short-term plasticity for SR, we also compare to a setup with static synapses.

2. Materials and methods

2.1. Model

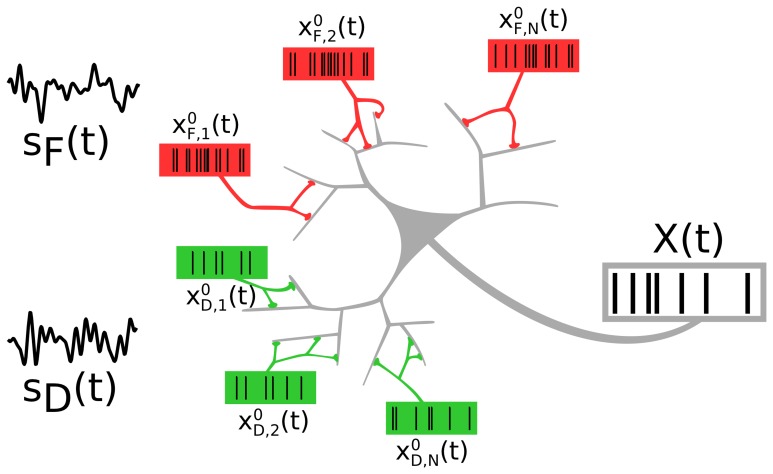

We consider a neuron that receives inputs from two distinct neural populations, each of which encodes an independent signal (see Figure 1). The two presynaptic populations differ in the synaptic connections they make onto the target cell: One signal (F signal) is encoded in spike trains impinging on facilitating, the other (D signal) in spike trains impinging on depressing synapses.

Figure 1.

A neuron receiving spike trains via two types of synapses showing short-term facilitation (red) or depression (green). Presynaptic populations are assumed to fire Poissonian spike trains, either at a background rate or an elevated rate, modulated by a signal sF(t) (“F signal,” for the facilitating synapses) and sD(t) (“D signal,” for the depressing synapses). We address the question of how the two independent signals are represented and how they interact in the output spike train.

2.1.1. Input

The spiking activity of each presynaptic neuron (N = 500 per population) is modeled as an independent Poisson process, with a common instantaneous rate RP(t) for all the neurons within one population P (where P is F or D). A population P can be in a background state, in which all neurons fire at a low constant rate RP(t) = r0 = 1 Hz. Alternatively, the population may be in a signaling state, in which a time-dependent signal sP(t) is encoded in the common instantaneous rate RP(t) = rP (1 + ϵP sP(t)), with a higher baseline rate rP = 20 Hz. For the two signals, sF(t) and sD(t), we use two independent band-limited Gaussian white noise processes, each with unit variance and cutoff frequency fc = 10 Hz; the modulation amplitude is ϵP = 0.05.
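The input described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the simulation code used for the results: the function names, the time step dt = 1 ms, the 10 s duration, and the binned (spike count per time step) representation are all hypothetical choices.

```python
import numpy as np

def band_limited_noise(n_steps, dt, f_cut, rng):
    """Zero-mean, unit-variance Gaussian noise with a flat spectrum up to f_cut (Hz)."""
    freqs = np.fft.rfftfreq(n_steps, d=dt)
    in_band = (freqs > 0) & (freqs <= f_cut)
    n_band = int(in_band.sum())
    # Independent Gaussian Fourier coefficients inside the band, zero elsewhere.
    coeffs = np.zeros(freqs.size, dtype=complex)
    coeffs[in_band] = rng.normal(size=n_band) + 1j * rng.normal(size=n_band)
    s = np.fft.irfft(coeffs, n=n_steps)
    return s / s.std()

def poisson_population(rate, dt, n_neurons, rng):
    """Spike counts per time bin for n_neurons independent Poisson neurons sharing rate(t)."""
    rate = np.clip(rate, 0.0, None)  # a firing rate cannot be negative
    return rng.poisson(rate * dt, size=(n_neurons, rate.size))

# Parameters from the text: N = 500, r_F = 20 Hz, eps_F = 0.05, f_c = 10 Hz.
rng = np.random.default_rng(0)
dt, duration = 1e-3, 10.0              # assumed time step and duration (s)
n_steps = int(round(duration / dt))
s_F = band_limited_noise(n_steps, dt, f_cut=10.0, rng=rng)
rate_F = 20.0 * (1.0 + 0.05 * s_F)     # signaling state: r_F (1 + eps_F s_F(t))
spikes_F = poisson_population(rate_F, dt, n_neurons=500, rng=rng)
```

With a rate of 20 Hz and a 1 ms bin, the expected count per bin is 0.02, so bins containing more than one spike are rare; a smaller time step reduces them further.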

2.1.2. Synapses

We use a deterministic model of synaptic short-term plasticity, close to the models proposed or used in Tsodyks and Markram (1997), Markram et al. (1998), Dittman et al. (2000), Lewis and Maler (2002, 2004), Lindner et al. (2009) and Merkel and Lindner (2010). We use purely facilitating and purely depressing synapses, which is an idealization but allows us to use theoretical expressions developed in Merkel and Lindner (2010). We have verified that using synapses that are predominantly facilitating or depressing leads to qualitatively similar results.

Facilitating synapses are governed by

| (1) |

| (2) |

while depressing synapses obey

| (3) |

Here, Fn(t−) (Dk(t−)) is the probability that a functional contact of the nth facilitating (kth depressing) synaptic connection releases a neurotransmitter-filled vesicle upon spike arrival. Under functional contacts, we subsume multiple synaptic boutons, multiple active zones per bouton, or any other physiological feature that allows a synapse to release more than one vesicle onto the target neuron. By the “-”-superscript to the time argument, we denote the evaluation of this variable immediately before it is itself influenced by the incoming spike. Between spikes, release probability relaxes to its intrinsic value F0, F = 0.05 (F0, D = 0.4) on a time scale τF = τD = 50 ms; we set Δ = 0.175.

As is commonly done in this kind of deterministic model, we approximate the effect of a presynaptic spike on the postsynaptic conductance by considering the trial average of the number of vesicles released. This means that the jump in postsynaptic conductance induced by a presynaptic spike at the nth facilitating (kth depressing) synapse is aFn(t) (aDk(t)), where a = 10 nS is the jump in conductance if all functional contacts release their vesicles. This corresponds roughly to 10 functional contacts that each presynaptic neuron makes onto the target cell.

In reality, release of synaptic vesicles is probabilistic, and once a vesicle has been released, additional variability is introduced by the stochastic nature of vesicle recovery. Synaptic depression then emerges naturally as a consequence of resource depletion. As it has recently been shown that synaptic stochasticity can influence the filtering of rate coded information (Rosenbaum et al., 2012), we have verified that the effects we describe below are also found in simulations with stochastic synapses. For these simulations, we have used a stochastic model for depression dynamics (Vere-Jones, 1966; Fuhrmann et al., 2002; Loebel et al., 2009; Rosenbaum et al., 2012), combined with the deterministic facilitation dynamics [Equation (1), (Dittman et al., 2000; Lewis and Maler, 2002, 2004; Merkel and Lindner, 2010)]: Upon spike arrival, each functional contact with a release-ready vesicle at the nth facilitating (depressing) synapse independently releases its vesicle with probability Fn(t−) (F0, D). The jump in postsynaptic conductance induced by vesicle release is aNn, R(t)/NC, where Nn, R(t) is the number of vesicles released and NC is the number of functional contacts. In depressing synapses, used vesicles get replaced after exponentially distributed waiting times with time constant τD, while in purely facilitating synapses, we model vesicle replacement as instantaneous. For NC → ∞, we recover the deterministic model Equation (3).
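The stochastic depression dynamics described above can be sketched as an event-based update. This is a minimal illustration that follows the verbal description only; the function name, the argument names, and the choice to start with all vesicles release-ready are assumptions, and the facilitation dynamics are not included.

```python
import numpy as np

def stochastic_depressing_synapse(spike_times, n_contacts, p_release, tau_rec, a, rng):
    """Conductance jump at each presynaptic spike for one depressing synapse.

    Each functional contact holds at most one vesicle. A release-ready vesicle
    is released with probability p_release (F_{0,D} in the text) and is then
    replaced after an exponentially distributed waiting time with mean tau_rec
    (tau_D in the text).
    """
    ready_at = np.zeros(n_contacts)      # assumption: all contacts ready at t = 0
    jumps = np.empty(len(spike_times))
    for i, t in enumerate(spike_times):
        ready = ready_at <= t
        released = ready & (rng.random(n_contacts) < p_release)
        n_released = int(released.sum())
        # Schedule the refill of every contact that has just released.
        ready_at[released] = t + rng.exponential(tau_rec, size=n_released)
        jumps[i] = a * n_released / n_contacts   # a * N_R / N_C
    return jumps

# Values from the text: F_{0,D} = 0.4, tau_D = 50 ms, a = 10 nS, about 10 contacts.
rng = np.random.default_rng(1)
spike_times = np.sort(rng.uniform(0.0, 10.0, size=200))  # about 20 Hz for 10 s
jumps = stochastic_depressing_synapse(spike_times, n_contacts=10, p_release=0.4,
                                      tau_rec=0.05, a=10.0, rng=rng)
```

For widely spaced spikes every contact has recovered before the next spike, so the mean jump approaches a times the release probability; at high rates the mean jump drops because fewer contacts are release-ready.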

2.1.3. Target cell

The dynamics of the total postsynaptic conductance is given by

| (4) |

where the total input

| (5) |

is the sum over all incoming spike trains weighted by facilitating and depressing synaptic dynamics,

| (6) |

The target cell is a leaky integrate-and-fire neuron

| (7) |

where C = 300 pF is the membrane capacitance, gL = 15 nS the leak conductance, EL = −60 mV the leak reversal potential, and Ee = 0 mV the excitatory reversal potential. We approximate local inhibition by a constant current Ii, allowing us to control whether the neuron is in a supra-threshold or a sub-threshold regime. When V reaches a threshold Vth = −50 mV, the neuron emits a spike and the voltage is reset to Vr = −62.5 mV. We define the output spike train as X(t) = Σi δ(t − ti*), where ti* is the time of the ith threshold crossing.
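As an illustration of the target cell, the sketch below integrates a conductance-driven leaky integrate-and-fire neuron with the Euler method. It assumes the standard form C dV/dt = −gL (V − EL) − g(t) (V − Ee) + Ii, which is consistent with the parameters listed above but is not taken from Equation (7) itself; the function name, the time step, and the units (pF, nS, mV, pA, ms) are likewise assumptions.

```python
import numpy as np

def simulate_lif(g_exc, dt, i_inh, c_m=300.0, g_leak=15.0, e_leak=-60.0,
                 e_exc=0.0, v_th=-50.0, v_reset=-62.5):
    """Euler integration of an assumed conductance-based LIF neuron.

    Units: g_exc, g_leak in nS; voltages in mV; i_inh in pA; c_m in pF; dt in ms.
    Returns the spike (threshold-crossing) times in ms.
    """
    v = e_leak
    spike_times = []
    for step, g in enumerate(g_exc):
        # Assumed membrane equation: C dV/dt = -gL (V - EL) - g (V - Ee) + Ii
        dv_dt = (-g_leak * (v - e_leak) - g * (v - e_exc) + i_inh) / c_m
        v += dt * dv_dt
        if v >= v_th:
            spike_times.append((step + 1) * dt)
            v = v_reset                   # reset after each threshold crossing
    return np.array(spike_times)

# Constant test conductances, 1 s at dt = 0.1 ms, with Ii = -2.25 nA = -2250 pA.
dt = 0.1
weak = simulate_lif(np.full(10000, 40.0), dt, i_inh=-2250.0)
strong = simulate_lif(np.full(10000, 100.0), dt, i_inh=-2250.0)
```

Under this assumed equation, a constant conductance g drives the neuron across threshold only if g (Ee − Vth) exceeds gL (Vth − EL) − Ii. For Ii = −2.25 nA this gives g > 48 nS, which is why the 40 nS trace stays silent while the 100 nS trace fires.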

2.2. Measure of information transmission

In order to assess how the signals sF(t) and sD(t) are encoded in the output spike train X(t), we utilize spectral measures. In simulations, we use a finite-time-window version of the Fourier transform, which for a time series x(t) is given by

| (8) |

while in analytical calculations, it is advantageous to use the infinite-time-window transform

| (9) |

Denoting trial averaging by brackets and complex conjugation by an asterisk, we approximate the cross- and power spectra of two time series x(t) and y(t) (where x = y for power spectra) as

| (10) |

for simulations (according to the strict definition, we would have to take the limit T → ∞), and use

| (11) |

for analytical calculations. A frequency-resolved measure of how well a signal s(t) can be linearly reconstructed from the spike train X(t) (or vice versa) is then given by the coherence function

$$C_{sX}(f) = \frac{|S_{sX}(f)|^2}{S_{ss}(f)\, S_{XX}(f)} \qquad (12)$$

which also yields a lower bound on the mutual information rate via the relation (Borst and Theunissen, 1999)

| (13) |

We can determine the coherence by long simulations of the system and—in the case where the integrate-and-fire model acts as a mostly linear filter—also estimate it analytically. To this end, we consider Xin(t), the total input to the target neuron after it has been weighted by the synaptic dynamics [see Equation (5)]. As detailed in the Appendix, we can express CsFXin(f) and CsDXin(f), the coherences for the two population setup, in terms of single synapse coherences and spectra, all of which have previously been derived in Merkel and Lindner (2010) [see also Rosenbaum et al. (2012) for depressing synapses]. We find

| (14) |

CsDXin(f) can be obtained from Equation (14) by simply swapping all F and D subscripts.
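The spectral measures of this section can be estimated from simulated time series roughly as follows. This is a hedged sketch rather than the analysis code behind the figures: it assumes the usual definition of the coherence, |S_sX|² / (S_ss S_XX), and the standard lower bound −∫ log2[1 − C(f)] df taken over the signal band (Borst and Theunissen, 1999). Function names, the segment-averaging scheme, and the test signals are hypothetical.

```python
import numpy as np

def coherence(s, x, dt, n_segments):
    """Estimate the coherence by averaging spectra over non-overlapping segments."""
    seg_len = len(s) // n_segments
    s_seg = np.reshape(s[:seg_len * n_segments], (n_segments, seg_len))
    x_seg = np.reshape(x[:seg_len * n_segments], (n_segments, seg_len))
    # Finite-time-window Fourier transforms, one per segment.
    s_ft = np.fft.rfft(s_seg, axis=1) * dt
    x_ft = np.fft.rfft(x_seg, axis=1) * dt
    t_win = seg_len * dt
    s_sx = np.mean(s_ft * np.conj(x_ft), axis=0) / t_win   # cross-spectrum
    s_ss = np.mean(np.abs(s_ft) ** 2, axis=0) / t_win      # signal power spectrum
    s_xx = np.mean(np.abs(x_ft) ** 2, axis=0) / t_win      # output power spectrum
    denom = s_ss * s_xx
    coh = np.zeros_like(denom)
    # Leave the coherence at zero where either spectrum has no power.
    np.divide(np.abs(s_sx) ** 2, denom, out=coh, where=denom > 0)
    return np.fft.rfftfreq(seg_len, d=dt), coh

def info_lower_bound(freqs, coh, f_cut):
    """Lower bound on the mutual information rate (bits per unit time)."""
    band = (freqs > 0) & (freqs <= f_cut)
    df = freqs[1] - freqs[0]
    return -np.sum(np.log2(1.0 - coh[band])) * df

# Toy check: x = s + independent noise of equal variance has coherence 1/2.
rng = np.random.default_rng(3)
s = rng.normal(size=65536)
x = s + rng.normal(size=65536)
freqs, coh = coherence(s, x, dt=1e-3, n_segments=64)
rate_bound = info_lower_bound(freqs, coh, f_cut=10.0)
```

The estimate is biased upward by roughly 1/n_segments when the true coherence is small, so many segments (or many trials) are needed before weak coherences can be trusted.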

3. Results

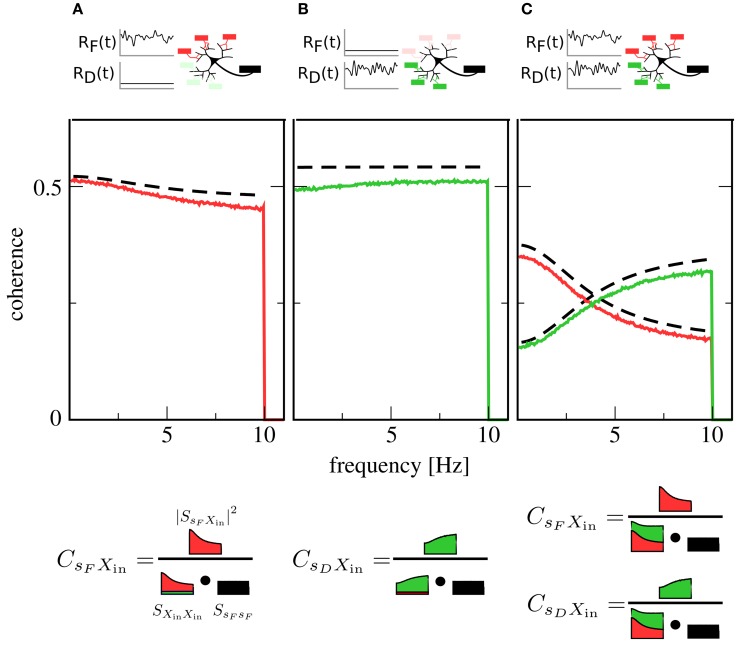

3.1. Spectral separation of information

We first examine the case of only one signal. Here, one presynaptic population is active, while the other is firing at a low background rate. This could, for instance, correspond to the presentation of a stimulus to only one sensory modality. Figures 2A,B show plots of the coherence between signal and output spike train in this situation. The coherence can be seen to be mostly flat, i.e., information transfer about the signals shows only a mild frequency dependence [note that for higher cutoff frequencies, the integrate-and-fire neuron itself would induce low-pass filtering (Vilela and Lindner, 2009)].

Figure 2.

Coherence between signals and the output spike train X(t). Red lines: coherence between F signal and X(t). Green lines: coherence between D signal and X(t). Black dashed lines: theory for CsFXin(f) and CsDXin(f) [see Equation (14)]. In (A), only the F population is encoding a signal, while the D population is firing at a constant background rate. In (B), the situation is reversed: here, the D population is in the signaling state while the F population generates background spikes at low rate. In (C), both populations are active and encoding a signal. While in (A,B) coherences are rather flat, indicating broadband transmission, the activity of both populations in (C) leads to a new effect: coherence with the F signal is more strongly suppressed at high frequencies, while coherence with the D signal is more strongly suppressed at low frequencies, leading to a spectral separation of information. A graphical representation of Equation (12) (bottom row) illustrates the role of the input power spectra in shaping this functional dependence. Parameters: Ii = −2.25 nA, ϵF = ϵD = 0.05.

Simultaneous presence of both signals (and, consequently, activity of both populations) changes this situation qualitatively. As can be seen in Figure 2C, both signals are encoded in the output spike train, but their respective coherence now shows a marked dependence on frequency. While the overall coherence is suppressed for both signals, suppression is stronger at high frequencies than at low frequencies for the F signal and vice versa for the D signal. In other words, the neuron now preferentially encodes slow components of the F signal and fast components of the D signal.

To understand the mechanism behind this effect, first consider the situation where only the F population is transmitting a signal: spike trains filtered through facilitating synapses have more power at low than at high frequencies (Lindner et al., 2009; Merkel and Lindner, 2010). These spike trains dominate the shape of the total input power spectrum SXinXin(f), as the D population is firing at a low, unmodulated rate. However, the squared cross-spectrum |SsFXin(f)|² has a similar shape, so that most of the frequency dependence cancels out in the calculation of the coherence (cf. Figure 2A, bottom row). This leads to the observed broadband behavior. Such a cancelation, which was first described in Lindner et al. (2009), also explains the flat coherence in Figure 2B. In contrast, when both the F and the D population are transmitting a signal, the cross-spectra are unchanged but the power spectra add up, so that the total power spectrum is rather flat (Figure 2C, bottom row). Consequently, the frequency dependence of the cross-spectrum is still apparent in the coherence, leading to the new effect of spectral separation.

Theoretical curves for CsFXin(f) and CsDXin(f) are also shown in Figure 2; while systematically overestimating simulation results, they are in reasonable agreement with them. This is remarkable, as we have only taken synaptic but not neuronal dynamics into account in the derivation of Equation (14). Closer inspection reveals that the theory works as long as the output firing rate is much higher than the cutoff frequency of the signals.

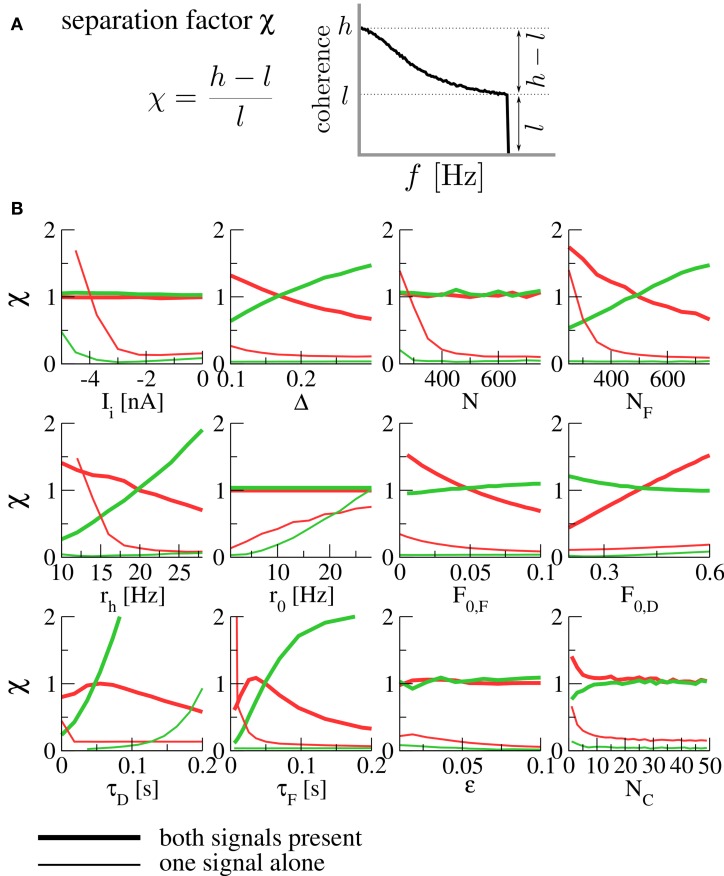

In order to quantify the robustness of the spectral separation effect under variation of parameters, we introduce the separation factor

| (15) |

where

| (16) |

are the maximal and minimal values of the coherence function over the signal's frequency band. This is a measure of the high- or low-pass character of the coherence; small values indicate broadband filtering, while large values indicate pronounced high- or low-pass behavior (see Figure 3A). The effect of spectral separation corresponds to a coherence that is rather broadband (low separation factor χ) for each signal alone, but either high- or low-pass when both are present (high separation factor).

Figure 3.

(A) The separation factor as a measure for the high- or low-pass nature of the coherence. (B) Separation factors under variation of various parameters. Thick lines denote separation factors obtained when both populations are transmitting a signal, while thin lines mark the case of only one signal (with the other population firing at the background rate r0). Separation factors for the coherence between the F signal and the output spike train are plotted in red, those for the D signal in green. For all panels, the fixed parameters are those of Figure 2. N is the size of each of the presynaptic populations (meaning that both population sizes were varied at the same time), NF is the size of the F population (with the D population fixed at ND = 500), rh is the firing rate of a population transmitting a signal (meaning that both rF and rD were varied at the same time). For the simulation of varying number of functional contacts NC, the stochastic model of synaptic dynamics was used. When separation factors are low for each signal alone but high in the presence of both signals, we observe the spectral separation effect described above.

In Figure 3B, we plot separation factors under variation of several parameters. In general, they are considerably higher when both signals are present than for either of the signals alone, over wide ranges of the parameter under variation, confirming the robustness of the effect. As a result of this analysis, we expect the spectral separation effect to be relevant for presynaptic populations of about 400 cells and more, and for synapses with rather short timescales of facilitation and depression [on the order of 100 ms, compatible with the values reported in Lewis and Maler (2002)]. To assess the influence of using a deterministic model, we have run simulations of the stochastic model (which converges to the deterministic model in the limit of infinitely many functional contacts NC). It can be seen in Figure 3B (bottom right) that the same spectral separation effect is observed in the stochastic model already for a relatively modest number of functional contacts (≈ 10).

3.2. Stochastic resonance

Until now, we have been concerned with the neuron's information filter properties in a two-signal setup. Another vital issue is whether the second signal increases or decreases the amount of information that is transmitted in total about the first. It is known (Gammaitoni et al., 1998) that additional noise can enhance information transmission in non-linear systems by virtue of stochastic resonance. Basically, additional noise may help the system to cross a threshold; in a neuronal setting, this corresponds to raising the firing rate. Here we inspect in a physiological setting whether a second signal can play a similarly beneficial role as the noise in SR.

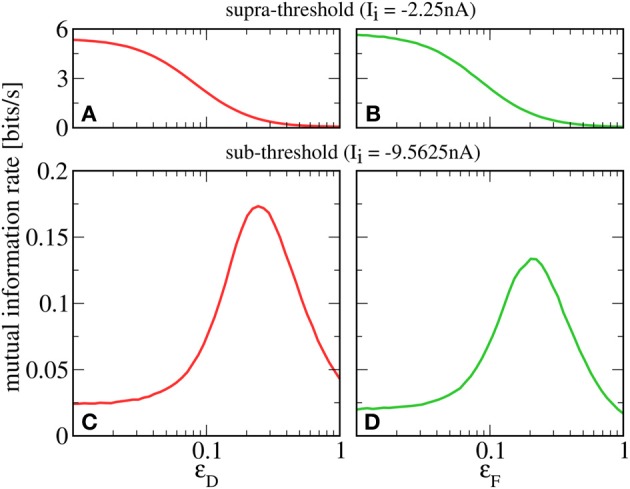

A prerequisite for SR is that the neuron is in the sub-threshold regime (reached in our model by lowering Ii), in which most cortical neurons seem to operate (Shadlen and Newsome, 1998). For this regime, we plot in Figures 4C,D a lower bound for the mutual information rate between the F or D signal and the output spike train [Equation (13)] as a function of the second signal's amplitude ϵD or ϵF (with rF = rD = 20 Hz). It can be seen that, up to some optimal value, a stronger D signal indeed helps the transmission of the F signal and vice versa, a clear-cut case of SR. This is in contrast to the supra-threshold regime considered in the previous section, where adding a second signal always impedes the transmission of the first (see Figures 4A,B).

Figure 4.

Mutual information rate (lower bound) between the F signal (A,C, red) or D signal (B,D, green), and the output spike train when the modulation amplitude of the respective other signal (ϵD or ϵF) is varied. In the supra-threshold regime (Ii = −2.25 nA; A,B), a second signal always impedes the transmission of the first. In contrast, in the sub-threshold regime (Ii = −9.5625 nA; C,D), increasing the modulation amplitude of the D signal can be beneficial to the transmission of the F signal and vice versa. For both regimes, rF = rD = 20 Hz; ϵF = 0.05 in A,C; ϵD = 0.05 in B,D.

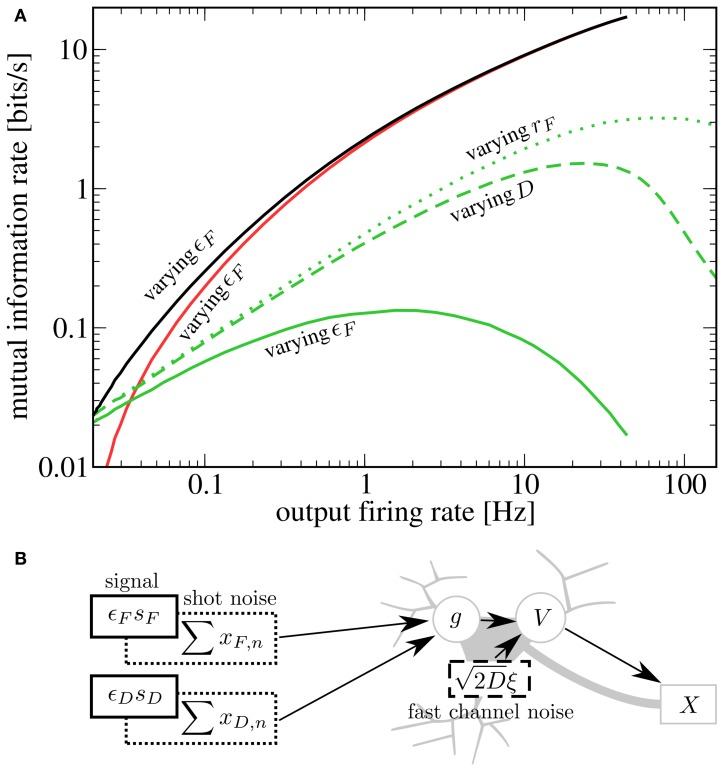

It is instructive to compare our results to other scenarios of SR. Two prominent sources of neuronal noise lie in the stochasticity of spike arrival times (synaptic noise) and the stochastic opening and closing of ion channels (channel noise). Both synaptic noise (Rudolph and Destexhe, 2001; Torres et al., 2011) and channel noise (Schmid et al., 2001) can give rise to SR. Synaptic noise is already present in our model and can be controlled by the baseline rate of presynaptic populations (a higher rate leads to a higher mean input as well as larger fluctuations around this mean). As a caricature of fast channel noise, we add Gaussian white noise to the r.h.s. of Equation (7); it is this kind of noise that was used in most previous studies of SR. In the following, we consider information transmission about the D signal when the amplitude of the F signal is varied; qualitative results and conclusions drawn from them are the same in the inverse case.

To compare the effects of the different noise sources on signal transmission, we start from rF = 20 Hz, white-noise intensity D = 0 nA²s, and ϵF = 0, and increase either the noise strength, the baseline rate, or the modulation amplitude. We see in Figure 5A that for SR via a second signal (solid green line), the information rate about the D signal at a given output firing rate is lower than for SR via channel noise (dashed green line). This can be understood by considering that the second signal, which plays the role of the noise, has power in exactly the same frequency range as the one that we want to transmit. It is plausible that with weaker but more broadband noise (e.g., white noise), one can achieve the same output firing rates (with help from the high frequency components) with a lower contamination of the relevant frequency range. This reasoning is consistent with the experimental finding that white noise is more “effective” for SR than low-frequency noise (Nozaki et al., 1999). Both the second signal and white noise yield lower peak mutual information rates than synaptic noise (green dotted line). This is due to the increase in mean input that goes along with an increase in presynaptic firing rate (raising the output firing rate without introducing noise). Although in our scenario the information rate about the D signal is smaller than in the other two cases, there is a clear advantage of enhancing the transmission of one signal by adding another signal: both add meaningful information to the output spike train. Indeed, the total rate of information transmission for two signals (black line) exceeds that in all the other cases. At least in the case of channel noise, we can be certain that considering the total rate of information would not be meaningful, because of the molecular (not signal-related) origin of these fluctuations.

Figure 5.

(A) Comparison of different kinds of stochastic resonance. Starting from Ii = −9.5625 nA, rF = rD = 20 Hz, ϵD = 0.05, ϵF = 0, D = 0 nA²s, we increase either ϵF (up to 1), rF (up to 60 Hz) or D (up to 0.14 nA²s). For each case, we plot the lower bound on the mutual information rate about the D signal as a function of the output firing rate (green lines; solid, dashed, and dotted for varying ϵF, D, and rF, respectively). Additionally, we plot the information rate about the F signal (red line) and the total information rate for both signals (black line) for the case of varying ϵF. A second signal is less effective at enhancing information transmission of the first signal than either channel noise or an increase in presynaptic firing. However, as the helpful signal is transmitted as well, the total rate of information transmission is highest in this case. (B) Schematic depiction of the sources of noise in the system. In addition to the fluctuating signals, noise is introduced by the stochastic firing of the presynaptic populations and the stochasticity of ion channels.

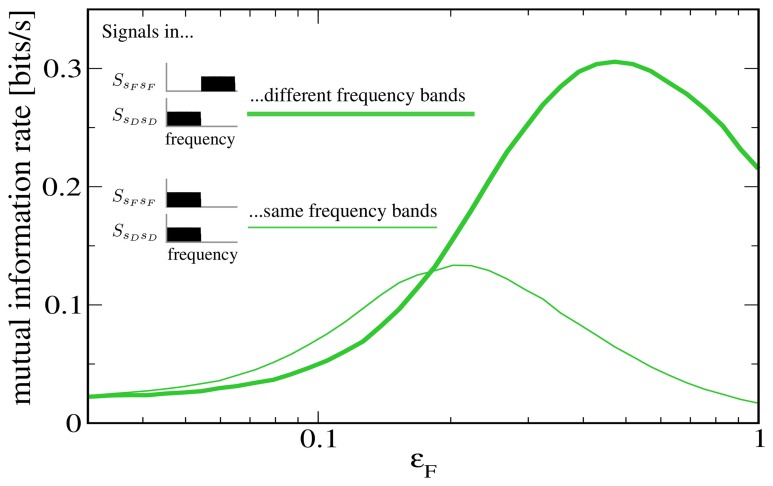

To further illustrate that the reason for the low rate of information transmission in the case of signal-mediated SR in Figure 5 is mainly the overlap of both signals in frequency space, we plot in Figure 6 a comparison to a “detuned” setup, i.e., one in which the F signal has been shifted to frequencies between 10 Hz and 20 Hz. It can be seen that this shifted version of the F signal is indeed considerably more effective at increasing information transmission about the D signal.

Figure 6.

Mutual information rate (lower bound) between the D signal and the output spike train when the modulation amplitude ϵF is varied, both for the case considered above (both signals in the same frequency range) and for the case where the F signal has been shifted to values between 10 and 20 Hz. The shifted signal is clearly more effective at enhancing information transmission about the D signal. Other parameters as in Figures 4, 5.

3.3. Comparison to a setup with static synapses

The filtering effect described in section 3.1 is clearly a consequence of heterogeneous synaptic short-term plasticity; it would not occur in a setup with static synapses. In contrast, one can expect the effect of signal-mediated SR (section 3.2) to occur independently of synaptic dynamics, as the primary beneficial effect of the second signal is an increase in postsynaptic firing rate, something that can be achieved with static synapses as well. In the following, we explicitly compare our setup to one with static synapses.

How should one choose the amplitude of static synapses to allow for a meaningful comparison? An obvious approach would be to use the intrinsic release probabilities F0, F and F0, D as the respective weights, which would correspond to taking the dynamic synapses and switching off short-term plasticity (for example by taking the limit of infinitely fast recovery). However, the most prominent difference would then lie in the mean amplitude of the postsynaptic spike trains. In other words, shutting off facilitation (depression) would lead to a dramatic reduction (increase) in the rate of vesicle release. Instead of assessing the influence of synaptic dynamics on information transmission, we would essentially be comparing strong to weak synapses, with the obvious outcome. Here we choose the amplitudes of static synapses to equal the mean of those in the dynamic case (see Figure 7A). Note that more sophisticated schemes exist to tune parameters of the conductance dynamics such that not only the mean but also the variance of the conductance are the same for static and dynamic synapses (Lindner et al., 2009).

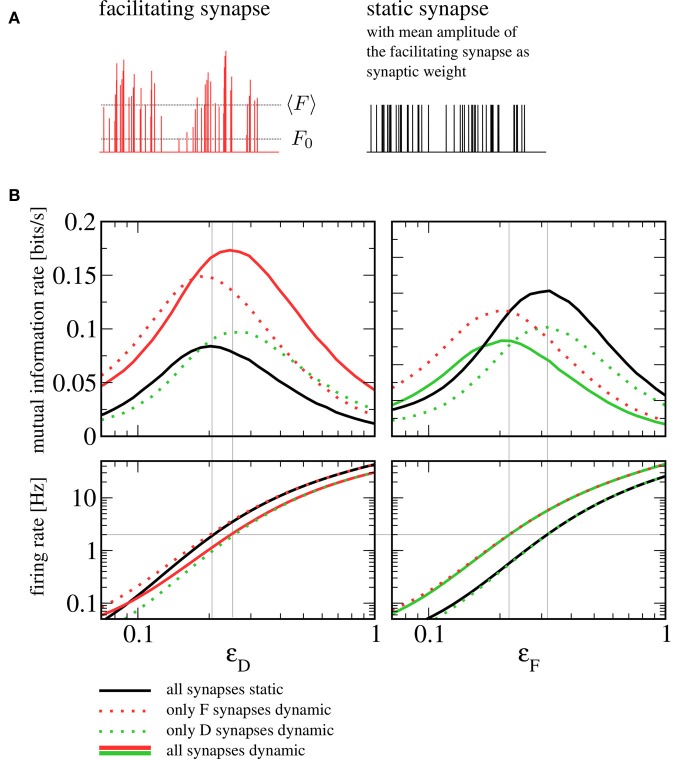

Figure 7.

Comparison to a setup with static synapses. (A) Schematic depiction of a spike train's amplitudes after passing through a facilitating synapse (with the mean amplitude 〈F〉 and the intrinsic release probability F0 indicated by dotted lines) and the same spike train with static amplitudes chosen to equal the mean amplitude of the dynamic synapse. (B) Mutual information rates (lower bound) over F signal (left) and D signal (right) when the amplitude of the respective other signal is varied (sub-threshold regime, Ii = −9.5625 nA). The solid red and green lines denote information rates for the setup with heterogeneous short-term plasticity (as shown in Figure 4), the dotted and the solid black lines correspond to setups in which one or both synaptic populations have been replaced by static synapses. It can be seen that replacing dynamic by static synapses does not qualitatively change information transmission rates. In the bottom row, we plot the firing rate of the postsynaptic cell. If the signal that plays the role of the beneficial noise is strong, the firing rate can be seen to depend only on the type of synapses (static or dynamic) through which this signal enters. The gray horizontal line indicates a firing rate of 2 Hz. Gray vertical lines indicate values of ϵD and ϵF at which this firing rate is attained; they can be seen to be in good agreement with the position of the peaks in the mutual information rate.

In Figure 7B, we show a comparison to a setup where some or all dynamic synapses have been replaced by static ones. Generally, the mutual information rate shows the same qualitative behavior for all variants. In particular, the SR peaks persist when one or both synaptic populations are replaced by static synapses. For our standard sets of parameters, the peak for all-static synapses (black lines) is shifted somewhat to the left for the F signal and somewhat to the right for the D signal; using static synapses can be seen to yield lower mutual information rates for the F signal and higher mutual information rates for the D signal. As we detail below, the shift can be understood from the change of character (dynamic to static) of the synapses through which the beneficial “noise” signal enters, in the following referred to as “noise synapses”. The increase or reduction in information transmission rate, on the other hand, can be traced back to the change of the signal synapses.

Let us first discuss the shift in the noise signal amplitudes ϵD and ϵF (the abscissae in Figure 7B, left and right, respectively) that maximize the information transmission in the sub-threshold case (top panels in Figure 7B). To understand these shifts, it is instructive to look at the postsynaptic firing rates (Figure 7B, bottom row). The beneficial effect of noise in SR is based on a rapid increase in firing rate that this noise brings about. It can be seen that for the particular parameters we have chosen, information transfer is maximized when the postsynaptic firing rate is about 2 Hz. The shift in the maximum can thus be understood by addressing the much simpler question of what sets the output firing rate in the different combinations of static and dynamic synapses. In the range where the maximum is attained, the rate is mainly determined by the nature of the noise synapses. Hence, we find that the maxima of the mutual information rate are attained at the same noise signal amplitude if noise synapses are dynamic (solid red and dotted green in the left panel, solid green and dotted red in the right panel) or if they are static (dotted red and solid black in the left panel; dotted green and solid black in the right panel). For the same mean amplitude of the postsynaptic input, the facilitating (depressing) synapse introduces more (less) power in the relevant low-frequency range than the static synapse does. In order to achieve the same firing rate of 2 Hz with a static synapse, we thus have to increase (decrease) the noise signal amplitude compared to the situation with a facilitating (depressing) synapse.

Finally, we would like to address the question of why, with the chosen parameters, facilitating (depressing) synapses seem to transfer more (less) information about the stimulus than static ones do. First of all, closer inspection of the theoretical formula [Equation (14)] indicates that this does not have to be the case for all parameter sets. We can, however, make it plausible that a larger (smaller) information rate is to be expected for facilitating (depressing) synapses compared to static synapses for a large number of synapses and a moderate-to-large amplitude of the noise signal. If the system contains a large amount of noise that is independent of the channel that carries the signal, the difference between dynamic and static signal synapses arises from the differences between the cross-spectra of input signal and output spike train. Facilitating synapses have a higher cross-correlation with the signal because the amplitudes of the spikes change in parallel with the rate modulation of input spikes, and this higher cross-correlation leads to a higher coherence. Depressing synapses, on the contrary, change the amplitude of input spikes in the opposite sense to the rate modulation; they therefore have a smaller cross-correlation between rate modulation and output spikes and hence a lower coherence.

4. Discussion

How a single time-varying signal is encoded in the neural spike train has been the subject of numerous studies [see e.g., Borst and Theunissen (1999) and references therein]. In this paper, we have demonstrated two distinct effects that arise in a setup with two signals. Firstly, adding a second signal can switch the neuron's information filter properties from broadband to frequency selective, if the two signals impinge on synapses that display opposite kinds of synaptic short-term plasticity. Secondly, the second signal can increase the respective rate of information transmission for both signals, when the neuron is in the fluctuation-driven regime. Although we have demonstrated these effects in the same model setup, it should be noted that they are distinct in nature and that the latter does not depend on synaptic plasticity.

We have explicitly compared our setup to one in which one or both synaptic populations have been replaced by static synapses and found that the influence of one signal on the total rate of information transmission about the other signal does not depend on synaptic plasticity in a qualitative way. In particular, the SR effect is also observed with static synapses. It is noteworthy that facilitating synapses yield higher mutual information rates than static synapses with the same mean amplitude, while the opposite is true when comparing depressing and static synapses. This is non-trivial, as the coherence for a single static synapse is always higher than for a single facilitating or depressing synapse (Merkel and Lindner, 2010).

While the situation that a neuron receives more than one signal arises naturally in the context of multisensory integration, it is most likely more general. One of the signals might, for instance, represent an actual sensory stimulus that is passed through a feed-forward structure (bottom–up), while the other may be an internally generated top–down signal from higher cortical areas. In our setup, such an internal signal could control the transmission of the stimulus, either by inducing a filtering of information, or by enhancing transmission through SR. Note that in this general case, the requirement of having independent signals might need to be relaxed.

The spectral separation effect can be considered a non-trivial interaction between the two signals. In the presence of both signals, the neuron acts as an information filter—its coherence function deviates from a flat form, indicating that some frequency components get transmitted more reliably than others. In general, such a frequency dependence of the coherence can result from non-linearity in the system or from temporal structure in the inputs (colored noise). In our setup, temporal correlations in the inputs are induced by facilitation or depression, and, as we have shown, they are already enough to understand the separation effect: When entering through a homogeneous synaptic population, noise and signal are filtered in a similar way, so that the signal-to-noise ratio stays almost constant and information transmission is largely frequency independent (Lindner et al., 2009; Merkel and Lindner, 2010). With respect to the transmission of this signal, a second signal acts as additional noise, and if it is filtered differently, the frequency dependence no longer cancels. With synaptic populations of opposite kinds, this effect is especially pronounced, as the filtered second signal adds more power at those frequencies that are already suppressed by the first filter.
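The cancellation argument above can be illustrated with a deliberately crude toy calculation. It is only a sketch under stated assumptions and not the coherence formula of this paper: the Lorentzian-shaped power gains `gain_f` and `gain_d`, the parameters `f0`, `a`, `b`, and the choice to filter signal and noise of one population by exactly the same gain are all hypothetical.

```python
import numpy as np


def toy_power_gains(f, f0=1.0, a=0.5, b=0.5):
    """Hypothetical power gains of the F and D populations (not the paper's spectra)."""
    lorentz = 1.0 / (1.0 + (f / f0) ** 2)
    gain_f = 1.0 + a * lorentz  # facilitation: extra power at low frequencies
    gain_d = 1.0 - b * lorentz  # depression: reduced power at low frequencies
    return gain_f, gain_d


def toy_coherences(f, signal_power=1.0, noise_power=4.0):
    """Coherence for one population alone and for both populations together."""
    gain_f, gain_d = toy_power_gains(f)
    # One signal, one population: signal and noise share the same gain, which cancels.
    c_single = gain_f * signal_power / (gain_f * (signal_power + noise_power))
    # Two populations: the other pathway adds differently filtered power to the denominator.
    total = (gain_f + gain_d) * (signal_power + noise_power)
    c_f = gain_f * signal_power / total
    c_d = gain_d * signal_power / total
    return c_single, c_f, c_d


f = np.linspace(0.0, 10.0, 101)
c_single, c_f, c_d = toy_coherences(f)
```

In this toy setting, `c_single` is flat, `c_f` falls with frequency and `c_d` rises with frequency, which mirrors the qualitative picture of broadband transmission for one signal and spectral separation for two.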

The spectral separation effect is most certainly not the only way frequency-selective information transmission could be implemented. As demonstrated by Rosenbaum et al. (2012), depressing synapses alone can already constitute a high-pass information filter, due to the stochasticity in vesicle uptake and release. We have run simulations with stochastic synapses and found that, in the setting we consider, stochasticity-induced filtering seems to be negligible, even when the number of functional contacts is small. However, in a situation with smaller pre-synaptic populations or long time scales of vesicle recovery τd, it should become relevant.

As shown, we have verified that the information spectral separation effect persists when parameters are varied within reasonable bounds. Furthermore, because the effect is a result of correlations induced by synaptic dynamics rather than of non-linearity in the system (such as the spike-generating mechanism) or a network mechanism (Middleton et al., 2009; Sharafi et al., 2013), similar results can be expected with neuron models that are more realistic than the integrate-and-fire model we used. It should be noted that our approach contains implicit assumptions (most importantly stationarity, coding of information in the instantaneous firing rate, and Poissonian input statistics) that are probably not always justified. Relaxing these assumptions is an interesting task for future studies.

The functional role of the information filtering effect at the level of signal processing networks is still unclear and merits further investigation. At the single cell level, our findings have general implications for the study of filtering properties of synapses: It may make more sense to consider filtering on the neuronal level (taking potentially inhomogeneous synaptic populations into account) than to discuss the filtering properties of a certain kind of synapse in isolation.

The beneficial effect that one signal can have on the transmission of the other is a manifestation of SR, which, loosely speaking, refers to the enhancement of signal transmission by a non-vanishing amount of noise. In our case, the place of this noise is taken by the second signal, which does not enter the neuron directly, but as a modulation of presynaptic firing rates. We have compared this variant of SR to others previously discussed in the literature (Rudolph and Destexhe, 2001; Schmid et al., 2001; Torres et al., 2011) and found a second signal to be less effective in enhancing information transmission (about the first signal) than traditionally considered noise sources. However, an obvious benefit of this scenario is that both signals contain information that is transmitted by the neuron.
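The generic SR mechanism can be illustrated with a minimal sketch that is far simpler than the model studied here. The assumptions are ours: a static threshold unit instead of an integrate-and-fire neuron, Gaussian white noise standing in for the second signal, a sinusoidal sub-threshold signal, and the correlation coefficient between signal and output as a crude proxy for information transmission. The function name and all parameter values are hypothetical.

```python
import numpy as np


def threshold_sr_correlation(noise_std, n_samples=20000, seed=0):
    """Correlation between a sub-threshold signal and the output of a threshold unit."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_samples)
    signal = 0.5 * np.sin(2.0 * np.pi * t / 200.0)  # peak 0.5, below the threshold of 1
    noise = noise_std * rng.standard_normal(n_samples)
    output = (signal + noise > 1.0).astype(float)  # 1 whenever the threshold is crossed
    if output.std() == 0.0:
        return 0.0  # no threshold crossings at all: nothing is transmitted
    return float(np.corrcoef(signal, output)[0, 1])


# Weak, intermediate and strong noise
correlations = [threshold_sr_correlation(s) for s in (0.05, 0.5, 5.0)]
```

With weak noise the threshold is never reached, with strong noise the output is dominated by the noise, and an intermediate noise level yields the largest correlation in this sketch.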

It has been noted before that “the input signal for one computation may well be considered noise for a different computation” (McDonnell and Ward, 2011) and that variability may be “signal, even though it would look like noise” (Masquelier, 2013), but the present work is to our knowledge the first to explore such a scenario explicitly. It is an attractive idea that neural systems might exploit SR not by adding noise that serves no other purpose, but rather through the interplay of signals that are being processed anyway.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Matthias Merkel for helpful discussions and the referees for constructive comments that have led to improvements of this manuscript. This work was supported by Bundesministerium für Bildung und Forschung grant 01GQ1001A and the research training group GRK1589/1.

Appendix

Derivation of the coherence formula for the two-signal setup

Our aim is to express the coherence in the two-signal setup,

| (A1) |

with s = sF, sD, in terms of the single-synapse cross- and power-spectra SsFxF(f), SxFxF(f), SsDxD(f) and SxDxD(f), where SsFxF(f) is the cross spectrum between the F signal and a spike train that has been weighted by a single facilitating synapse, SxFxF(f) is the power spectrum for such a spike train, and SsDxD(f) and SxDxD(f) are the corresponding quantities for a depressing synapse. They have been derived in Merkel and Lindner (2010) and read

| (A2) |

| (A3) |

| (A4) |

| (A5) |

where

| (A6) |

| (A7) |

| (A8) |

| (A9) |

| (A10) |

In the following, we derive the coherence between the F signal and the total weighted input Xin(t). The derivation for the D signal is completely analogous and can be obtained by swapping all F and D subscripts. We begin with the cross spectrum between sF(t) and Xin(t). Using Equations (11) and (5), the independence between the F signal and the spike trains entering through the D synapses, the stationarity of signals and spike trains, and the uniformity of F synapses, we obtain

| (A11) |

| (A12) |

| (A13) |

| (A14) |

| (A15) |

where 〈.〉s denotes averaging over the appropriate stimulus ensemble(s), 〈.〉ξ averaging over the ensemble(s) of (inhomogeneous Poisson) spike trains and 〈.〉ξ, s averaging over all ensembles. Thus, we have

| (A16) |

In the derivation of Equations (A2–A5), it has been assumed that signals are weak, so that linear response theory,

| (A17) |

can be applied. Here, χF(f) is the susceptibility of facilitating synapses. It is implicitly given by Equation (A2), which can be written as

| (A18) |

Note that we have assumed the two signals to have identical statistics and unit variance, so that SsFsF(f) = SsDsD(f) = 1/(2fc).
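The value 1/(2fc) follows from the normalization of the spectrum. As a short worked step, assuming that each signal has a flat two-sided power spectrum of height S_0 on [-fc, fc] and vanishing power outside this band (band-limited white noise with cutoff frequency fc), the unit-variance condition reads:

```latex
\begin{equation*}
  \langle s^2 \rangle
  = \int_{-f_c}^{f_c} S_{ss}(f)\,\mathrm{d}f
  = 2 f_c\, S_0
  = 1
  \quad\Longrightarrow\quad
  S_0 = \frac{1}{2 f_c}.
\end{equation*}
```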

Exploiting the independence between spike trains entering via F and D synapses, we obtain for the power spectrum

| (A19) |

| (A20) |

| (A21) |

and, because

| (A22) |

| (A23) |

(and likewise for the depressing synapses), the power spectrum can be written as

| (A24) |

Inserting Equations (A15) and (A24) into the definition of the coherence [Equation (A1)] and using Equation (A18), we finally obtain

| (A25) |

where CsFxF(f) is the single synapse coherence.
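An analytical coherence of this kind can be compared with a numerical estimate. The following sketch assumes the standard definition of the coherence (squared magnitude of the cross spectrum divided by the product of the two power spectra) and estimates the spectra by averaging over non-overlapping segments. The function `estimate_coherence` and the white-noise test signals are hypothetical illustrations and do not reproduce the simulations of this paper.

```python
import numpy as np


def estimate_coherence(x, y, nperseg=256):
    """Segment-averaged coherence estimate |S_xy|^2 / (S_xx * S_yy)."""
    n_seg = len(x) // nperseg
    xs = np.reshape(x[: n_seg * nperseg], (n_seg, nperseg))
    ys = np.reshape(y[: n_seg * nperseg], (n_seg, nperseg))
    x_ft = np.fft.rfft(xs, axis=1)
    y_ft = np.fft.rfft(ys, axis=1)
    s_xy = np.mean(np.conj(x_ft) * y_ft, axis=0)  # cross spectrum (unnormalized)
    s_xx = np.mean(np.abs(x_ft) ** 2, axis=0)  # power spectrum of x
    s_yy = np.mean(np.abs(y_ft) ** 2, axis=0)  # power spectrum of y
    return np.abs(s_xy) ** 2 / (s_xx * s_yy)


rng = np.random.default_rng(1)
signal = rng.standard_normal(2 ** 16)
output = signal + rng.standard_normal(2 ** 16)  # unit-variance additive white noise
coherence = estimate_coherence(signal, output)
```

For this linear test case with equal signal and noise power, the expected coherence is 1/2 at all frequencies, so the estimate should scatter around that value. The common normalization factors of the spectra cancel in the ratio.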

References

- Abbott L., Regehr W. (2004). Synaptic computation. Nature 431, 796–803 10.1038/nature03010

- Banitt Y., Martin K. A. C., Segev I. (2007). A biologically realistic model of contrast invariant orientation tuning by thalamocortical synaptic depression. J. Neurosci. 27, 10230–10239 10.1523/JNEUROSCI.1640-07.2007

- Borst A., Theunissen F. (1999). Information theory and neural coding. Nat. Neurosci. 2, 947–958 10.1038/14731

- Chacron M. J., Doiron B., Maler L., Longtin A., Bastian J. (2003). Non-classical receptive field mediates switch in a sensory neuron's frequency tuning. Nature 423, 77–81 10.1038/nature01590

- Dittman J., Kreitzer A., Regehr W. (2000). Interplay between facilitation, depression, and residual calcium at three presynaptic terminals. J. Neurosci. 20, 1374–1385

- Driver J., Noesselt T. (2008). Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron 57, 11–23 10.1016/j.neuron.2007.12.013

- Fuhrmann G., Segev I., Markram H., Tsodyks M. (2002). Coding of temporal information by activity-dependent synapses. J. Neurophysiol. 87, 140–148

- Gammaitoni L., Hänggi P., Jung P., Marchesoni F. (1998). Stochastic resonance. Rev. Mod. Phys. 70:223 10.1103/RevModPhys.70.223

- Giard M., Peronnet F. (1999). Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 11, 473–490 10.1162/089892999563544

- Kandel E., Schwartz J., Jessell T. (2000). Principles of Neural Science. New York, NY: McGraw-Hill

- Krahe R., Bastian J., Chacron M. J. (2008). Temporal processing across multiple topographic maps in the electrosensory system. J. Neurophysiol. 100, 852–867 10.1152/jn.90300.2008

- Lewis J. E., Maler L. (2002). Dynamics of electrosensory feedback: short-term plasticity and inhibition in a parallel fiber pathway. J. Neurophysiol. 88, 1695–1706

- Lewis J. E., Maler L. (2004). Synaptic dynamics on different time scales in a parallel fiber feedback pathway of the weakly electric fish. J. Neurophysiol. 91, 1064–1070 10.1152/jn.00856.2003

- Lindner B., Gangloff D., Longtin A., Lewis J. (2009). Broadband coding with dynamic synapses. J. Neurosci. 29, 2076–2087 10.1523/JNEUROSCI.3702-08.2009

- Loebel A., Silberberg G., Helbig D., Markram H., Tsodyks M., Richardson M. J. (2009). Multiquantal release underlies the distribution of synaptic efficacies in the neocortex. Front. Comput. Neurosci. 3:27. 10.3389/neuro.10.027.2009

- Macaluso E., Frith C., Driver J. (2000). Modulation of human visual cortex by crossmodal spatial attention. Science 289, 1206–1208 10.1126/science.289.5482.1206

- Markram H., Pikus D., Gupta A., Tsodyks M. (1998). Potential for multiple mechanisms, phenomena and algorithms for synaptic plasticity at single synapses. Neuropharmacology 37, 489–500 10.1016/S0028-3908(98)00049-5

- Masquelier T. (2013). Neural variability, or lack thereof. Front. Comput. Neurosci. 7:7. 10.3389/fncom.2013.00007

- McDonnell M., Ward L. (2011). The benefits of noise in neural systems: bridging theory and experiment. Nat. Rev. Neurosci. 12, 415–426 10.1038/nrn3061

- Meredith M., Stein B. (1983). Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391 10.1126/science.6867718

- Merkel M., Lindner B. (2010). Synaptic filtering of rate-coded information. Phys. Rev. E 81:041921 10.1103/PhysRevE.81.041921

- Middleton J. W., Longtin A., Benda J., Maler L. (2009). Postsynaptic receptive field size and spike threshold determine encoding of high-frequency information via sensitivity to synchronous presynaptic activity. J. Neurophysiol. 101, 1160–1170 10.1152/jn.90814.2008

- Nozaki D., Mar D., Grigg P., Collins J. (1999). Effects of colored noise on stochastic resonance in sensory neurons. Phys. Rev. Lett. 82, 2402–2405 10.1103/PhysRevLett.82.2402

- Rosenbaum R., Rubin J., Doiron B. (2012). Short term synaptic depression imposes a frequency dependent filter on synaptic information transfer. PLoS Comput. Biol. 8:e1002557 10.1371/journal.pcbi.1002557

- Rowland B., Stanford T., Stein B. (2007). A model of the neural mechanisms underlying multisensory integration in the superior colliculus. Perception 36, 1431–1444 10.1068/p5842

- Rudolph M., Destexhe A. (2001). Correlation detection and resonance in neural systems with distributed noise sources. Phys. Rev. Lett. 86, 3662–3665 10.1103/PhysRevLett.86.3662

- Schmid G., Goychuk I., Hänggi P. (2001). Stochastic resonance as a collective property of ion channel assemblies. Europhys. Lett. 56, 22 10.1209/epl/i2001-00482-6

- Sekuler R., Sekuler A., Lau R. (1997). Sound alters visual motion perception. Nature 385, 308 10.1038/385308a0

- Shadlen M., Newsome W. (1998). The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J. Neurosci. 18, 3870–3896

- Shams L., Kamitani Y., Shimojo S. (2000). What you see is what you hear. Nature 408, 788 10.1038/35048669

- Sharafi N., Benda J., Lindner B. (2013). Information filtering by synchronous spikes in a neural population. J. Comput. Neurosci. 34, 285–301 10.1007/s10827-012-0421-9

- Shimojo S., Shams L. (2001). Sensory modalities are not separate modalities: plasticity and interactions. Curr. Opin. Neurobiol. 11, 505–509 10.1016/S0959-4388(00)00241-5

- Stein B., Stanford T. (2008). Multisensory integration: current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 10.1038/nrn2331

- Torres J., Marro J., Mejias J. (2011). Can intrinsic noise induce various resonant peaks? New J. Phys. 13:053014 10.1088/1367-2630/13/5/053014

- Tsodyks M. V., Markram H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. U.S.A. 94, 719–723 10.1073/pnas.94.2.719

- Vere-Jones D. (1966). Simple stochastic models for the release of quanta of transmitter from a nerve terminal. Aust. J. Stat. 8, 53–63 10.1111/j.1467-842X.1966.tb00164.x

- Vilela R. D., Lindner B. (2009). Comparative study of different integrate-and-fire neurons: spontaneous activity, dynamical response, and stimulus-induced correlation. Phys. Rev. E 80:031909 10.1103/PhysRevE.80.031909

- Zucker R., Regehr W. (2002). Short-term synaptic plasticity. Annu. Rev. Physiol. 64, 355–405 10.1146/annurev.physiol.64.092501.114547