Abstract

Background and objective

Electronic patient record (EPR) systems are widely used. This study explores the context and use of systems to provide insights into improving their use in clinical practice.

Methods

We used video to observe 163 consultations by 16 clinicians using four EPR brands. We made a visual study of the consultation room and coded interactions between clinician, patient, and computer. Few patients (6.9%, n=12) declined to participate.

Results

Patients looked at the computer twice as much (47.6 s vs 20.6 s, p<0.001) when it was within their gaze. A quarter of consultations were interrupted (27.6%, n=45); and in half the clinician left the room (12.3%, n=20). The core consultation takes about 87% of the total session time; 5% of time is spent pre-consultation, reading the record and calling the patient in; and 8% of time is spent post-consultation, largely entering notes. Consultations with more than one person and where prescribing took place were longer (R2 adj=22.5%, p<0.001). The core consultation can be divided into 61% of direct clinician–patient interaction, of which 15% is examination, 25% computer use with no patient involvement, and 14% simultaneous clinician–computer–patient interplay. The proportions of computer use are similar between consultations (mean=40.6%, SD=13.7%). There was more data coding in problem-orientated EPR systems, though clinicians often used vague codes.

Conclusions

The EPR system is used for a consistent proportion of the consultation and should be designed to facilitate multi-tasking. Clinicians who want to promote screen sharing should change their consulting room layout.

Keywords: Medical Records System; Observation; Process Assessment; Model, Theoretical; Information Storage and Retrieval; Referral and Consultation

Introduction

Internationally, electronic patient record (EPR) systems are used in the clinical consultation; however clinicians and patients sometimes find this problematic. The computer is widely used in primary care.1 However, few models of how to consult incorporate any reference to computer use.2–4 It is claimed that computer use enhances some elements of the consultation5–8 by improving the completeness of medical records,9 accuracy of prescribing, and patient safety,10 11 and supporting chronic disease management.12 Despite the increased use of computers, both patients and clinicians still have reservations about their role in the consultation,13 14 and computer use may interrupt the consultation workflow.15 16 Clinicians may get overloaded by information demands or interruptions by the computer, and this can influence clinician–patient interactions,17–19 or result in suboptimal computer use.20 Prompts presented by the computer can influence the direction of the consultation.21 22 The current evidence base about how to develop EPR systems that can be more readily integrated into the clinical consultation remains limited.23–25

The clinician–patient–computer interaction in modern day practice requires clinicians to multi-task and cope with various workflow modifiers; this is a difficult environment to research. Observational studies report the urgency and uncertainty associated with clinical workflow, and its non-linear nature.26 27 Clinicians need to be skilful and rapidly change between short duration tasks.28 The limited amount of contextual information reported in clinical workflow studies limits their usefulness.29 Studies focused on analyzing clinicians’ workflow and interactions, for example, by combining audio–video recording and field notes, have reported challenges in transcribing and coding data, and limitations in sample size.30

Interruptions combined with workspace characteristics, and human cognitive factors have been extensively studied.31 32 Interruptions substantially increase clinicians’ cognitive burden33; and the reduction of the consultation time as a result of an interruption, or clinician failing to return to the interrupted task, could undermine patient safety.34 Strategies suggested for managing interruptions include redesigning the workplace to be interruption resilient and harnessing their possible positive effects.35 Studies of multi-tasking and interruptions have highlighted their combined effect in introducing clinical errors.36 37 The introduction of pay for performance (P4P) in the UK may further contribute to dysfunctional and unanticipated consultation behaviors.38

Notwithstanding the apparent complexity of the clinical consultation, we carried out this multi-channel video study to provide insights into how to make better use of current EPR systems. We did this by observing the context of computer use, how much of the consultation involves clinician–patient–computer interaction, and how the different EPR systems influence the tasks carried out in the clinical consultation.

Methods

Development of an open-source, multi-channel video toolkit

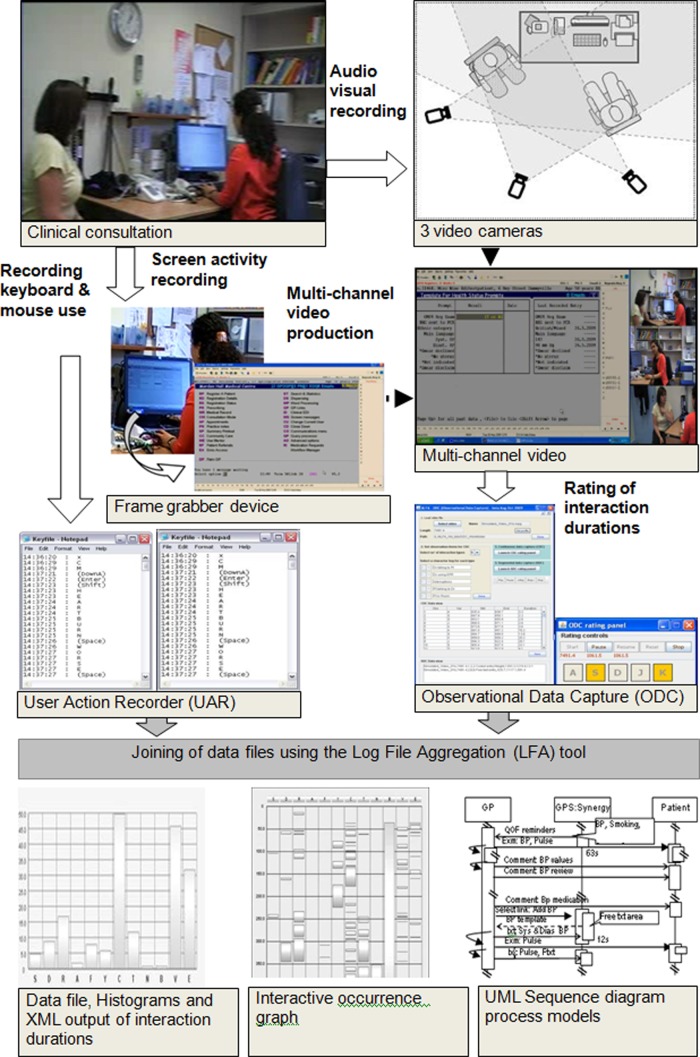

We developed a multi-channel video and data capture toolkit (ALFA; Activity Log File Aggregation) to overcome the limitations of the existing observation techniques. It is the culmination of over a decade of development of video methods to enable holistic observation of the consultation.22 39–43 While single channel video has been widely used for research and assessment of competency, it has limitations in providing the richness of data required to monitor the clinician–patient–computer interactions.39 40 Prior to the development of low cost digital video recording and production applications, multi-camera filming required professional mixing,41 making such techniques expensive and inflexible.22 Further, none of the existing computer usability tools is designed to assess human–computer interaction in the context of the clinical consultation, where the primary focus of the clinician is their patient and the information technology (IT) should ideally support that process. We therefore developed a comprehensive open-source toolkit which captures the detail of body language, direction of gaze, screen activity, and mouse and keyboard use (figure 1).42

Figure 1.

Recording and analyzing a consultation using the Activity Log File Aggregation (ALFA) open-source toolkit. This figure gives an overview of the whole consultation analysis process. (1) Clinical consultation is recorded using (2) a frame grabber, which captures what is on the clinician's computer, which is also used (3) to capture all the mouse movements and keyboard strokes. (4) The whole consultation is recorded using three video cameras and mixed into a (5) multichannel video, which is manually coded using (6) an observational data capture tool. (7) All the files are joined using the LFA tool. Analysis utilizes ALFA outputs. (8) Histograms of interactions and duration. (9) A consultation overview from the interactive occurrence graph (clicking on any part takes the user to that point in the consultation). This figure is only reproduced in colour in the online version.
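The aggregation step in (7) can be pictured as merging several time-stamped event streams into a single timeline. The Python sketch below is illustrative only: the `Event` fields, the channel names, and the sample values are assumptions made for this example and do not describe the actual ALFA file formats.

```python
import heapq
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """One time-stamped observation (hypothetical record layout)."""
    t: float        # seconds since the start of the recording
    channel: str    # e.g. "keyboard", "mouse", "video_coding"
    label: str      # what was observed


def aggregate(*streams: Iterable[Event]) -> list[Event]:
    """Merge per-channel streams, each already sorted by time, into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.t))


# Made-up sample data for two channels.
keyboard = [
    Event(12.0, "keyboard", "free-text entry"),
    Event(95.5, "keyboard", "prescribing"),
]
coding = [
    Event(3.0, "video_coding", "patient enters"),
    Event(40.2, "video_coding", "eye contact"),
]

timeline = aggregate(keyboard, coding)
for e in timeline:
    print(f"{e.t:7.1f}s  {e.channel:<13} {e.label}")
```

Merging on a shared clock is what allows events from different channels, such as a keystroke and a change in gaze, to be compared at the same point in the consultation.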

Subjects and setting

We observed 163 real-life clinical consultations conducted by 16 clinicians from 11 general practice surgeries, using the ALFA toolkit. The number of consultations recorded at each surgery ranged from 7 to 31 (mean 15, SD 7.1, median 11, IQR 8.5). All clinicians are general practitioners (family physicians); 11 (69%) of the doctors were male and 10 (63%) were under 40 years of age. The practices were located within inner city areas (73%) or within county towns in the south east of London (27%). Twelve patients (6.9%) approached by the research team declined to take part in the study; none withdrew their consent after the consultation recording. Of the patients who consented to participate, 101 were female (62%) and 62 male; 48.5% of the consultations were with patients who were aged under 40 years. More than a quarter of the consultations had an additional person accompanying the patient (28%, 45/163): mainly children or parents (75.6%, 34/45).

EPR systems

We collected data about the use of four different clinical computer systems in common use: EMIS-LV, EMIS-PCS, InPS-Vision, and iSOFT-Synergy. InPS-Vision and iSOFT-Synergy support strictly problem orientated medical records (POMR),44 whereas the EMIS brands are encounter orientated and allow text or other data to be entered and filed without entering a problem title. EMIS-LV is the most used of the EMIS versions and its interface has changed least over time. It is predominantly a keyboard driven system and most consultations are conducted without any use of the mouse. Its screen was designed for low resolution monitors, though this is now embedded within the graphical user interface (GUI). The other three systems, EMIS-PCS, InPS-Vision, and iSOFT-Synergy, are GUI based, with different approaches for presenting on-screen information.

Describing the clinical consultation

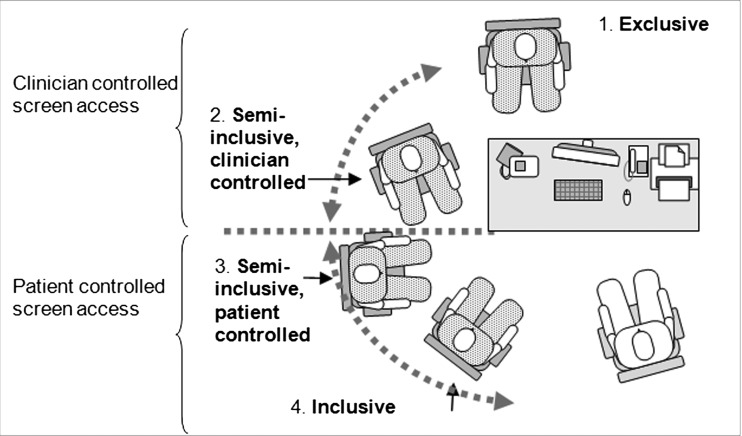

We developed a framework for describing the parts of the clinical consultation; this was subsequently incorporated into recommendations for reporting observational studies of EPR by an international informatics association working group.45 We described the consultation room as having four possible layouts: (1) inclusive, where the clinician and patient share computer screen access; (2) semi-inclusive with patient-controlled screen access, where the patient can view the computer screen without an unnatural body movement; (3) semi-inclusive clinician-controlled screen access, where the patient must move or the clinician must turn the screen to view the screen; and (4) exclusive, where the patient is excluded from viewing the computer screen (figure 2); and report their association with differences in interaction.

Figure 2.

Taxonomy for consulting room layout based on the patient’s chair position and who controls whether they can view or share the clinician’s computer screen. (1) Exclusive position: the patient sits opposite and cannot share the screen. (2) Semi-inclusive position: the clinician controls access to the computer screen. (3) Semi-inclusive position: patient controls when they look at the computer. (4) Inclusive position: the patient sits in a triadic position; clinician and patient share the screen.

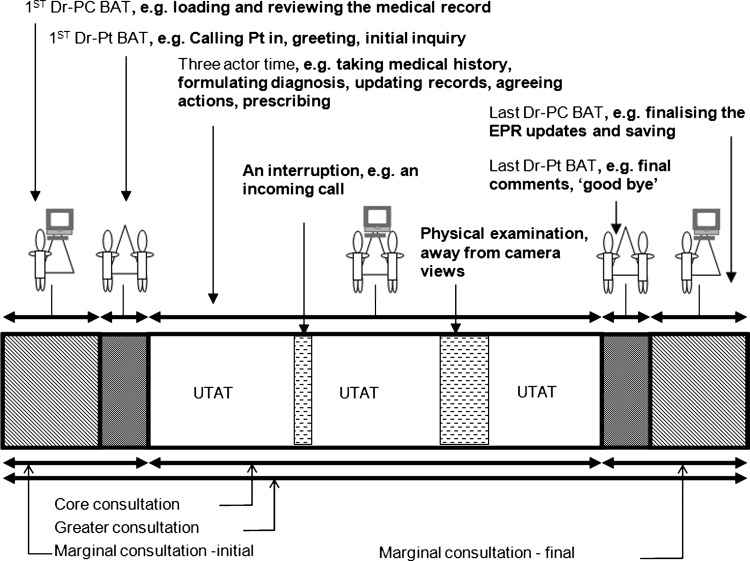

We noted the exact start and end of consultations, and measured the time spent by clinicians and patients in the consulting room, and whether patients were accompanied. We also recorded the method adopted by the clinician to call the patient in, and planned and unplanned interruptions. We defined the whole period of a consultation, including the patient coming in and out of the room, as the ‘greater consultation’. The periods before and after the patient is in the room are the initial and final marginal consultation, respectively. The central period is the ‘core consultation’ and is a largely uninterrupted three-actor time where the clinician, patient, and computer interact; the marginal consultation is generally bilateral (two) actor time (figure 3).

Figure 3.

Components of the consultation. The whole period is termed greater consultation; the periods before and after the patient is in the room are the initial and final marginal consultation, respectively. The central period is the core consultation and is largely uninterrupted three actor time; the marginal consultation is generally bilateral (two) actor time.
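The three components defined above can be derived from four timestamps per consultation. The following is a minimal Python sketch under stated assumptions: the field names and the example times are hypothetical and are not taken from the study data.

```python
from dataclasses import dataclass


@dataclass
class ConsultationTimes:
    """Timestamps in seconds from the start of recording (hypothetical field names)."""
    start: float        # clinician begins, e.g. opens the record
    patient_in: float   # patient enters the room
    patient_out: float  # patient leaves the room
    end: float          # clinician finishes, e.g. closes the record


def phase_durations(t: ConsultationTimes) -> dict[str, float]:
    """Split the greater consultation into its three phases (seconds)."""
    if not (t.start <= t.patient_in <= t.patient_out <= t.end) or t.start == t.end:
        raise ValueError("timestamps must be chronological and span a non-zero period")
    return {
        "initial_marginal": t.patient_in - t.start,
        "core": t.patient_out - t.patient_in,
        "final_marginal": t.end - t.patient_out,
    }


def phase_shares(t: ConsultationTimes) -> dict[str, float]:
    """Each phase as a percentage of the greater consultation."""
    total = t.end - t.start
    return {name: 100 * d / total for name, d in phase_durations(t).items()}


# Made-up example: a 10 min session with 30 s before and 60 s after the patient.
example = ConsultationTimes(start=0, patient_in=30, patient_out=540, end=600)
print(phase_durations(example))
print(phase_shares(example))
```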

Proportion of the consultation spent using the computer

We calculated the proportion of computer use in the consultation by detailed analysis of the video and by monitoring mouse and keyboard use.42 43 46 We rated each consultation video five times to create a complete recording of the time taken by interaction between actors and carrying out the common consultation tasks. The continuous tasks observed included: verbal interaction between the actors and eye contact; the time taken to interact with the computer including making narrative data entries; and the proportion of the consultation where the clinician and patient simultaneously view the computer, ‘screen sharing’. We made detailed observation of episodic tasks such as reviewing the patient's history, time taken to search and select from clinical coding lists47; or for acute and repeat prescribing. We captured details about other tasks including blood pressure recording, physical examination, and referral. The time clinicians spent using the computer for actions unrelated to patient care or administration we describe as the ‘overheads’. The initiating tasks associated with navigating between functional features were termed ‘transition time’; other overheads included system delays and error messages. We classified the doctor–patient–computer ‘triadic’ interactions48 into (1) continuous, (2) episodic, and (3) singleton categories; depending on whether they occur throughout the consultation (eg, making eye contact); in blocks (eg, speech); or usually only once (eg, referral).
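Once each activity has been coded as a set of time intervals, the proportion of computer use and the amount of screen sharing reduce to interval arithmetic. The Python sketch below illustrates one way to do this; the interval representation and the sample values are assumptions for the example, not the format produced by the toolkit.

```python
Interval = tuple[float, float]  # (start, end) in seconds


def merge(intervals: list[Interval]) -> list[Interval]:
    """Union of intervals: sort, then fuse any that overlap or touch."""
    merged: list[Interval] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def total(intervals: list[Interval]) -> float:
    """Total time covered, counting overlapping stretches once."""
    return sum(end - start for start, end in merge(intervals))


def overlap(a: list[Interval], b: list[Interval]) -> list[Interval]:
    """Stretches during which both activities occur at the same time."""
    out: list[Interval] = []
    for s1, e1 in merge(a):
        for s2, e2 in merge(b):
            lo, hi = max(s1, s2), min(e1, e2)
            if lo < hi:
                out.append((lo, hi))
    return out


# Made-up coding of one 600 s consultation.
clinician_on_computer = [(0, 30), (120, 200), (180, 260), (540, 600)]
patient_views_screen = [(150, 170), (250, 300)]
consultation_length = 600

computer_share = 100 * total(clinician_on_computer) / consultation_length
screen_sharing = total(overlap(clinician_on_computer, patient_views_screen))
print(f"computer use: {computer_share:.1f}% of the consultation")
print(f"screen sharing: {screen_sharing:.0f} s")
```

Merging before summing matters because repeated ratings of the same video can produce overlapping intervals for one activity, which would otherwise be counted twice.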

Rating the consultation and analysis

Over a quarter (28.2%, 46/163) of the consultations were rated by more than one person; and for a comparative study all three variables were independently rated for all the consultations.49 During the development of the ALFA toolkit we demonstrated inter-rater reliability.50 We analyzed the results using SPSS V.18, with descriptive statistics and a multiple regression analysis using the backward stepwise removal method to identify potential predictor variables or confounding factors of the proportion of computer use.51

Results

Room layout; setting the stage

A combination of room layout and the clinician's actions determined the extent to which patients viewed and interacted with their computer record. The commonest room layout (62.5%, 10/16) had the patient in the clinician-controlled semi-inclusive position, where the patient could only observe the content of their EPR by changing their seating position or the clinician turning the computer screen. Consequently the majority of our recordings were made with this room layout (65%, n=106/163). A quarter of the clinicians (25%, 4/16) had the patient in the patient-controlled semi-inclusive position. Only one general practitioner (6.3%, 1/16) had the patient sit alongside in the inclusive position.

The clinician actively shared the computer screen with the patient in 13 consultations (8.0%, 13/163); screen sharing did not take place in the clinician-controlled layouts (χ2 p<0.001). In seven consultations there was a single episode of screen-sharing; the maximum number observed was four. In clinician-controlled room layouts the patient looked at the computer less than in patient-controlled layouts; the mean proportion of the greater consultation was 5.6% (SD 7.6%) and 17.6% (SD 20.7%), respectively (p<0.001).
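The χ2 comparison of screen sharing by layout can be illustrated with a 2×2 table. The Python sketch below is not the authors' calculation: the contingency table is not given in the text, so the split used here (no sharing in the 106 clinician-controlled recordings, all 13 sharing consultations among the remaining 57) is an assumption reconstructed from the counts reported above. With an expected cell count below five, an exact test might be preferred in practice.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-squared (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and its p value for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("each row and column needs at least one observation")
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # For 1 degree of freedom the upper tail probability is erfc(sqrt(stat / 2)).
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Rows: clinician-controlled layout, all other layouts.
# Columns: screen shared, screen not shared.
# Assumed split (see lead-in): 0 of 106 versus 13 of 57.
stat, p = chi_square_2x2(0, 106, 13, 44)
print(f"chi-squared = {stat:.2f}, p = {p:.2g}")
```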

Interaction in the consultation

The mean duration of the greater consultation was 11.8 min (SD 5.2 min, range 2.4–31.3 min); the marginal consultation duration was 38 s (SD 45 s) before and 1:01 min (SD 1:20 min) after the period the patient was in the room. There were no significant differences in consultation length between brands of EPR. In 94.5% (154/163) of the consultations, clinicians viewed the EPR before inviting the patient in. The commonest (39.9%, 65/163) method of inviting patients in was an EPR linked digital display screen located in the waiting room; the median time from activation to the patient entering the room was 30.3 s (IQR 19.2 s). Clinicians personally invited patients in 48 consultations (29.4%); this was the slowest approach (median 45 s, IQR 23 s). Announcing the patient's name using a public address system was the least common, but marginally quickest (median 28 s, IQR 22 s). Most general practitioners consistently used the same method of calling patients in, though three varied between methods; most often this was because an interruption took the doctor away from their consulting room between patients and they collected the patient on the way back through the waiting room.

Common consultation tasks

In total we identified 40 ‘common consultation tasks’: six continuous, 25 episodic, and nine singleton tasks of the consultation (Box 1).

Box 1. Common consultation tasks.

Continuous type consultation tasks (can occur throughout the consultation, unpredictable)

Clinician's computer use

Clinician looking at the computer screen

Clinician talking to the patient

Patient talking to the clinician

Clinician looking at the patient

Clinician and patient both looking at the screen

Episodic consultation tasks (may occur intermittently, relatively predictable)

Reviewing the past encounters (summary view)

Reviewing of examination findings

Reviewing of test results

Reviewing letters attached to the patient’s EPR

Reviewing current medications

Reviewing current problems (list view)

Reviewing alerts

Reviewing patient details

Coded data entry

Free text entry

Reviewing past medications

Prescribing – acute

Prescribing – new repeat

Prescribing – old repeat

Referencing – using electronic or printed resources

System delays or errors

Incomplete/purposeless computer use

Responding to or reviewing prompts

Transitioning between EPR system’s functional areas

Transitioning between EPR system and external applications

Other paperwork

Other interactions

Screen sharing

Reading or writing aloud

Third party interruptions

Singleton type consultation tasks (usually occur only once, relatively predictable)

Blood pressure recording

Use of data entry forms/templates

Issuing and printing prescriptions

Writing referral or other letters

Generating test requests—electronic or paper based

Referral—electronic or paper based

Physical examination

Patient in the consultation room

Clinician not in the consultation room

Multi-tasking and interruptions

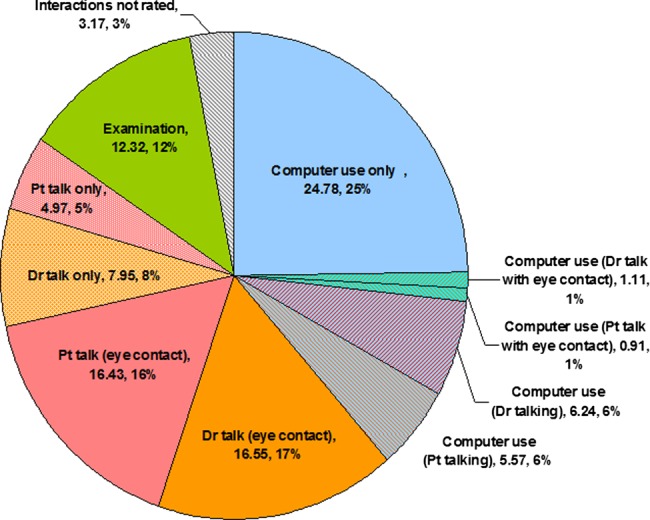

Clinicians multi-tasked in the consultation: there were things to do before, during, and after the core consultation, and interruptions seemed to be the norm; speech, eye contact, and computer use all took place separately and together at different times (figure 4). Across the greater consultation, clinicians and patients spoke or made eye contact 45% of the time. Clinicians spoke more than patients, talking for 27% compared with 17%. They made eye contact for 39% of the consultation. The computer was viewed or used for 40.6% of the consultation; this mainly comprised the clinician using the computer without interacting with their patients (64%), while the remaining 36% was simultaneous with or interleaved with clinician–patient interaction. We noted that 25% of clinicians’ and 35% of patients’ verbal interactions, and 5% of the total eye contact occurred while the clinician was interacting with the EPR system. Physical examinations occurred in 126 consultations (77.3%) with a mean duration of approximately 2 min (median 1:17 min, IQR 1:33 min).

Figure 4.

Clinician (Dr)–patient (Pt)–computer interactions in greater consultation: detailed breakdown of computer use, clinician–patient interaction with or without eye contact, during and outside computer use. This figure is only reproduced in colour in the online version.

Interruptions were observed in 14.1% of the consultations (23/163); the majority of them were caused by incoming phone calls (13/23). The mean duration of interruptions was 34 s (median 34 s, IQR 22 s). Interruptions mainly occurred in the core consultation (75%); however, they appeared to be longer when occurring in the marginal consultation (mean duration 45 s, median 20 s, IQR 41 s). Less data were coded and computer use was shorter in interrupted consultations.

Proportion of the consultation spent using the computer

There was considerable heterogeneity of computer use between clinicians, whether the patient was accompanied, consultations where prescriptions were issued, and brand of EPR system. Notwithstanding this, the proportion of computer use in greater consultation approximated to a normal distribution: mean was 40.6% (SD 14.7%), representing 4:34 min (SD 2:24 min) of an average length consultation. The proportion of computer use in the greater consultation after excluding the episodes of examination and interruption was 47.6% (SD 14.3%); it was similar across the four EPR brands (median 48.6%, IQR 21.3%). Computer use was distributed as follows: 8% in the initial-marginal, 74% in the core, and 18% in the final-marginal consultations.

The presence of an accompanying person appeared to break this rule. Consultations with an additional person were longer (12:22 vs 11:37 min); and were associated with less computer use in the consultation (35.1% vs 42.8%, 2:54 vs 3:42 min; p=0.003). There did not appear to be any compensatory catch-up in the final-marginal consultation.

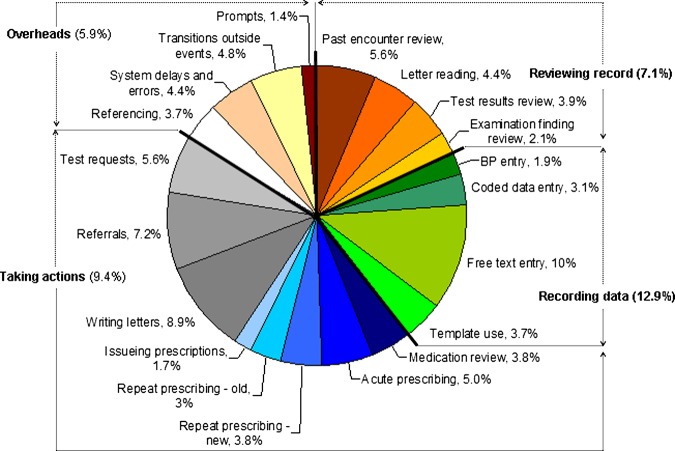

Using the computer; reviewing, recording, and taking action

Clinicians’ computer use was divided between reviewing information in the medical record, making new record entries, and taking actions (figure 5). Reviewing the medical record represented a third of the computer use (36.0%), of which just over half (58.0%) was non-specific and the remainder (42.0%) was spent reviewing past encounters. Recording data occupied a further third (31%) of computer use, of which just over a quarter (27.8%) was coded data entry or use of data entry forms, and nearly three quarters (72.2%) was free-text data entry. The coded quarter of recording can be divided into coding individual items (18.7%), using data entry forms (5.7%), and BP recording (3.4%). Taking action, such as prescribing, and the overheads of computer use occupied the remaining 33.0%.
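The "of which" percentages for reviewing and recording are shares of their parent category, so converting them to shares of total computer use takes one multiplication. The short Python sketch below works through that arithmetic with the figures reported in the text; the function and variable names are illustrative, and the reading of the sub-percentages as fractions of the parent category is an assumption based on the wording.

```python
def share_of_total(parent_pct: float, child_pct: float) -> float:
    """Convert 'child is child_pct% of parent, parent is parent_pct% of total'
    into the child's percentage of the total."""
    return parent_pct * child_pct / 100


# Percentages as reported in the text.
REVIEWING = 36.0   # share of computer use spent reviewing the record
RECORDING = 31.0   # share of computer use spent recording data

past_encounters = share_of_total(REVIEWING, 42.0)
coded_entry = share_of_total(RECORDING, 27.8)
free_text = share_of_total(RECORDING, 72.2)

print(f"reviewing past encounters: {past_encounters:.1f}% of computer use")
print(f"coded data entry:          {coded_entry:.1f}% of computer use")
print(f"free-text entry:           {free_text:.1f}% of computer use")
```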

Figure 5.

Distribution of mean computer use durations associated with common consultation tasks, shown as proportions of the greater consultation duration, based on the number of actual consultations they were observed in; for example, when a clinician was observed entering free text data they did so spending a mean duration of 10.0% of the consultation duration. Note: the percentage values shown are based on the actual number of consultations in which the particular task was observed, and since not all tasks took place in every consultation, the sum of proportions is less than 100%; approximate proportionate values are used to draw the pie chart. This figure is only reproduced in colour in the online version.

Reviewing the record

Reviewing the medical history required multiple clinician–record interactions; clinicians reviewed the past encounters three to five times in each consultation. There was variation between the four brands of EPR systems (p<0.001). Vision users browsed the record nearly five times (mean 4.7, SD 2.7) using multiple icons to quickly view different aspects of the record. LV and Synergy users interacted around three times with the record (LV: mean 3.1, SD 2.0; Synergy: mean 2.9, SD 2.1). LV users tended to page-up through the journal of previous encounters; whereas after viewing the summary page, Synergy users had to select a specific problem. PCS users had the lowest number of interactions (mean 2.5, SD 1.9) and spent the smallest proportion of the computer use time reviewing the past encounter lists (mean 11.2%, SD 0.3%); this appeared to be because much of the information required is presented on the default screen.

Recording data; coded data entry and blood pressure recording

Differences in the interface designs, specifically the degree of problem-orientation between the brands of EPR, affected whether it was obligatory to code and the time taken to code. The EMIS-LV and EMIS-PCS EPR systems had the fewest codes recorded (1.5 codes, SD 1.5 per consultation) while in Vision and Synergy 2.9 codes were recorded (p=0.001). However, the codes used in the problem-orientated systems often added little to patient care; for example, we saw repeated use of: ‘Had a chat to patient’ (Read code 8CB) in 21.0% of consultations with one system. Consultations with PCS had the shortest mean duration for entering coded data (mean 5.6 s, SD 3.4 s). Both LV and Vision took significantly longer to code (LV: mean 9.0 s, SD 6.1 s; Vision: mean 8.8 s, SD 3.9 s; t tests comparing LV and Vision with PCS, p<0.001). Part of the reason for the faster coded data entry among the PCS users (mean 1.8 s, SD 0.8 s) was the ‘auto suggestion’ feature where the computer suggested a coded term during free-text entry. In the other three systems it took nearly 3 s (LV 2.8 s, Vision 2.8 s, Synergy 3.0 s) to navigate to the coding screen prior to commencing the coding process.

Blood pressure recording varied greatly between brands (p=0.032). Synergy was the fastest with a mean duration of 9.7 s (SD 3.4 s); an icon visible on all screens opens a simple data entry form. Vision and LV were the next fastest, with similar mean durations for data recording (mean 10.6 s for both; LV: SD 2.7 s, Vision: SD 2.4 s). However, they were very different processes: LV required the data entry page or form to be opened using the keyboard, whereas Vision users either used an icon or had menu led access. The auto-suggestion feature offered in PCS recognizes the clinician's attempt to record BP values and automatically presents the blood pressure recording interface; however, the delay between the text recognition (usually initiated by entering ‘bp’) and interface presentation lengthened the actual coding time (mean 14 s, SD 3.7 s).

Taking actions; including prescribing

Prescribing functions varied greatly, most notably in the numbers of prompts given when adding a new medication and the impact of system defaults that auto-load when completing the prescription details. The keyboard based navigation and sequential movement between vertically arranged input fields enabled LV users to generate a new prescription in the shortest time (mean 21.6 s, SD 6.2 s). Clinicians using LV also had the least variation in duration (IQR 18 s, min 7 s, max 1:06 min). There were two outliers in LV where repeated searches for drug names were done. The time LV users spent activating the prescribing interface was significantly shorter than with the other three systems (p=0.001). PCS users had the second longest durations for acute prescribing (mean 25.4 s, SD 11.8 s); clinicians deciding to review the pricing details were responsible for most of the longer duration data entries.

Synergy users took the longest to create new acute prescriptions (mean 26.8 s, SD 9.3 s); they needed to cancel three to five pop-ups, which they usually did without detailed review.

Regression analysis

The regression analysis generated a poorly fitting model explaining around a quarter of the variation in computer use (adjusted R2 22.5%, p<0.001). Nevertheless there were four significant predictors of the proportion of computer use. We found that the following factors were associated with a lower proportion of the consultation spent using the computer: (1) female clinicians (β −6.235, p<0.001); (2) extra persons accompanying the patient (β −8.73, p<0.001); and (3) the EMIS-PCS brand of EPR system (β −7.25, p<0.01). The Vision EPR brand (β 6.38, p=0.018) was associated with a larger proportion of the consultation spent using the computer. Two other predictor variables were not excluded from the model: prescribing (β 4.58, p=0.052) and male patient gender (β 3.77, p=0.088); however, their effects were borderline or not statistically significant.
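To show how the reported coefficients combine, the Python sketch below sums the coefficients of whichever significant predictors apply to a consultation. It rests on two assumptions that the text does not state: that each β is an unstandardized coefficient expressed in percentage points of the consultation, and that the predictors are coded as present or absent. Because the intercept is not reported, the result is a shift relative to the model's reference consultation rather than an absolute prediction, and with an adjusted R2 of 22.5% any such figure is only indicative.

```python
# Coefficients as reported for the four significant predictors.
# Assumption: unstandardized, in percentage points of the consultation
# spent using the computer. Key names are illustrative.
COEFFICIENTS = {
    "female_clinician": -6.235,
    "accompanied_patient": -8.73,
    "emis_pcs": -7.25,
    "vision": 6.38,
}


def adjusted_shift(**present: bool) -> float:
    """Sum the coefficients of the factors that are present.

    Returns a shift relative to the reference consultation, not an
    absolute prediction, because the intercept is not reported.
    """
    unknown = set(present) - set(COEFFICIENTS)
    if unknown:
        raise KeyError(f"unknown factors: {sorted(unknown)}")
    return sum(COEFFICIENTS[name] for name, on in present.items() if on)


shift = adjusted_shift(female_clinician=True, accompanied_patient=True)
print(f"expected shift: {shift:+.1f} percentage points")
```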

Discussion

Principal findings

When the physical layout of the consulting room allowed the patient to view the computer screen they generally made use of the opportunity. Calling people in by phone connected to a waiting room speaker was fastest, followed by a visual display; personally collecting people was slowest.

Clinicians used their computer for a similar proportion of the consultation; this was not influenced by the total consultation duration or the type of EPR system. Clinician–patient interactions formed a greater proportion of the consultation than clinician–computer interactions. Clinicians appeared to use their EPR system in a consistent way even when this was not necessarily the fastest or most efficient way to do so.

EPR systems’ design features influenced the time taken to achieve common consultation tasks. A GUI with a display or with icons linking to summary data appears to enable clinicians to review data quickly. However, the keyboard appeared to be faster for frequently performed tasks such as prescribing. Enforcing actions in the consultation did not appear to result in improved quality; for example, enforced problem orientation or prescribing pop-ups did not appear to improve the quality of coding or the safety of prescribing.

Interruptions and consultations with more than one person were regular features of primary care consultations and may influence the amount of time available for maintaining the medical record.

Implications of the findings

Clinicians who wish to enable their patients to interact with the EPR should adjust their room layout to enable this to happen. However, this appears to be associated with a greater proportion of the consultation being spent interacting with the computer. Practitioners may wish to adopt a faster method of calling in patients, or may feel that there are benefits from the extra time spent calling in patients personally.

The finding that a constant proportion of the consultation is spent using the computer has enormous implications for policy and practice, especially if replicated in other studies. If clinicians are required to carry out actions on the computer which are not important or do not influence outcomes for patients, then this may displace more important activities. Designers of EPR systems should create systems that incorporate GUI and keyboard functionality where each is most appropriate. Continuing medical education might usefully include video observation or training for established clinicians to optimize their use of their EPR system.

Multi-tasking and interruptions are frequent, and systems could be better designed to allow clinicians to move from one patient's records to another. Making clinicians aware that they are likely to record less in more complex interactions may help improve the quality of records made during these encounters.

Current consultation models should be developed and adapted to incorporate room layout, calling in method and computer use. These models should stress the importance of making the very best use of the relatively fixed proportion of the consultation spent using the computer.

Comparison with the literature

Multi-channel video observation of the consultation is relatively new, though it has been adopted by others. Previous research has regularly focused on interactions, sometimes adopting ethnographic approaches.52 The lack of attention given to the usability aspects of EPR systems and the need for understanding the nature of the computer use in the context of the doctor–patient communication have been discussed previously.53–55 A video based study comparing four EPR systems distinguished ‘passive’ and ‘active’ involvement of the computer, with the latter requiring the doctor and patient to adapt their communicative style.56 Previous attempts to look into multitasking in the consultation have combined video analysis and conversation analysis methods.57 A cognitive based observational approach to analyzing data entry by clinicians in an outpatient setting used a complex set-up: a portable usability laboratory with a video converter, microphone for conversation, and keyboard sound recording.58 Prompts and reminders, which were observed in this study as demanding clinicians’ attention, in fact have a significant role associated with clinical guidelines.59 Clinicians tend to consider providing information about medication or presenting reminders as routine tasks associated with EPR systems.60 The SEGUE framework (Set the stage, Elicit and Give information, Understand, Respond and End) also considers the consultation setting and information exchange as important characteristics.61 A study using a similar multi-channel video approach, comparing consultations with two general practice EPR brands and three secondary care settings, suggests the need for a more practical framework aligned with the phenomenological nature of IT use.62

Limitations

A formal workflow model of medical care is difficult to build because medical work is an unpredictable combination of routine and exceptions. Traditional analysis, with its emphasis on the technology, often misses crucial features of the complex work environments in which the technology is implemented63; the consultation has also been described as chaotic.64 The variation in real consultations limits the scope for quantitative analysis. This variation may have contributed to the poor fit of the regression model, and its findings should be interpreted with caution. As the model explained only around a quarter of the variation in consultation length (adjusted R2=22.5%), it is likely that other factors, including potential confounders, were not included in the model.
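As a rough illustration of what "around a quarter" means here, the sketch below applies the standard adjusted R2 formula and computes the share of variation left unexplained at the reported adjusted R2 of 22.5%. It is illustrative only: the function names are hypothetical, and the values passed to `adjusted_r2` are made-up inputs, not the specification of the study's regression model.

```python
def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Standard adjusted R^2 for a linear model.

    r2: unadjusted coefficient of determination
    n:  number of observations
    p:  number of predictors (excluding the intercept)
    """
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def unexplained_share(r2_adj: float) -> float:
    """Share of variation the model leaves unexplained."""
    return 1.0 - r2_adj


# Adjusted R^2 reported for the consultation-length model: 22.5%
print(f"Unexplained share: {unexplained_share(0.225):.1%}")

# Hypothetical inputs (NOT from the study) showing how the adjustment
# penalises extra predictors relative to the sample size.
print(f"Example adjusted R^2: {adjusted_r2(0.5, n=11, p=2):.3f}")
```

Read this way, roughly three quarters of the variation in consultation length is not accounted for by the modelled factors, which is why the regression findings are treated with caution.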

Call for further research

How the clinician–patient–computer relationship evolves during the consultation remains a ‘black box’ for many researchers, with a lack of understanding as to which elements of IT use change or modify health behaviors. More evidence is needed to shed light on this important area, and video studies provide important insights. Creating a standard set of reference consultations with simulated patients could provide more specific information about EPR system functions and inform strategies for improvement. Some of the findings from this study could now be tested using standardized simulated patients so that better comparisons might be made of prescribing and other consultation tasks.

Conclusions

Direct observation of the clinical consultation, using the ALFA toolkit, is acceptable to patients; it captures the context of the consultation and the precise timing and duration of key tasks. However, the interactions observed are complex: the computer, while not the primary focus of the clinician, is often a distracting informant. Clinicians wishing their patients to interact more with the computer should change their room layouts. Consultation models need to let the computer in, and future development of EPR systems should be based on direct observation of the consultation. In this study clinicians used the computer for a relatively fixed proportion of the consultation; users and designers need to ensure that the time spent interacting with IT is as efficient and purposeful as possible.

Footnotes

Funding: None.

Competing interests: None.

Ethics approval: The study was approved by the National Research Ethics Service (NRES) for the recording of live consultations (reference number: 06/Q1702/139). Patients and clinicians all gave written consent before and after their consultations.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. de Lusignan S, Chan T. The development of primary care information technology in the United Kingdom. J Ambul Care Manag 2008;31:201

- 2. Kurtz S, Silverman J, Benson J, et al. Marrying content and process in clinical method teaching: enhancing the Calgary-Cambridge guides. Acad Med 2003;78:802–9

- 3. de Lusignan S, Wells S, Russell C. A model for patient-centred nurse consulting in primary care. Br J Nurs 2003;12:85–90

- 4. Pearce C, Dwan K, Arnold M, et al. Doctor, patient and computer—a framework for the new consultation. Int J Med Inform 2009;78:32–8

- 5. Ash JS, Bates DW. Factors and forces affecting EHR system adoption report of a 2004 ACMI discussion. J Am Med Inform Assoc 2005;12:8–12

- 6. Schade CP, Sullivan FM, de Lusignan S, et al. e-Prescribing, efficiency, quality: lessons from the computerization of UK family practice. J Am Med Inform Assoc 2006;13:470–5

- 7. Pearce C. The adoption of computers in Australian General Practice. Healthcare Information Management and Systems Society Asia-Pacific conference. Hong Kong: Healthcare Information Management and Systems Society, 2006

- 8. Sullivan F, Wyatt JC. How computers can help to share understanding with patients. BMJ 2005;331:892–4

- 9. Ash JS, Bates DW. Factors and forces affecting EHR system adoption report of a 2004 ACMI discussion. J Am Med Inform Assoc 2005;12:8–12

- 10. Morris CJ, Savelyich BSP, Avery AJ, et al. Patient safety features of clinical computer systems: questionnaire survey of GP views. Qual Saf Health Care 2005;14:164–8

- 11. Fernando B, Savelyich BSP, Avery AJ, et al. Prescribing safety features of general practice computer systems: evaluation using simulated test cases. BMJ 2004;328:1171–2

- 12. Petri A, de Lusignan S, Williams J, et al. Management of cardiovascular risk factors in people with diabetes in primary care: cross-sectional study. Public Health 2006;120:654–63

- 13. Silverman J, Kinnersley P. Doctors’ non-verbal behaviour in consultations: look at the patient before you look at the computer. Br J Gen Pract 2010;60:76–8

- 14. Hoerbst A, Kohl CD, Knaup P, et al. Attitudes and behaviors related to the introduction of electronic health records among Austrian and German citizens. Int J Med Inform 2010;79:81–9

- 15. Campbell EM, Sittig DF, Ash JS, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006;13:547–56

- 16. Ash JS, Sittig DF, Dykstra RH, et al. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007;76(Suppl 1):21–7

- 17. Pearce CM, Trumble S. Computers can't listen—algorithmic logic meets patient centredness. Aust Fam Physician 2006;35:439–42

- 18. Western M, Dwan K, Makkai T, et al. Measuring IT use in General Practice. Brisbane: University of Queensland, 2001

- 19. Rhodes P, Small N, Rowley E, et al. Electronic medical records in diabetes consultations: participants’ gaze as an interactional resource. Qual Health Res 2008;18:1247–63

- 20. Booth N, Robinson P, Kohannejad J. Identification of high-quality consultation practice in primary care: the effects of computer use on doctor-patient rapport. Inform Prim Care 2004;12:75–83

- 21. Pearce C, Trumble S, Arnold M, et al. Computers in the new consultation: within the first minute. Fam Pract 2008;25:202–8

- 22. Theodom A, de Lusignan S, Wilson E, et al. Using three-channel video to evaluate the impact of the use of the computer on the patient-centredness of the general practice consultation. Inform Prim Care 2003;11:149–56

- 23. Avery AJ, Savelyich BS, Sheikh A, et al. Improving general practice computer systems for patient safety: qualitative study of key stakeholders. Qual Saf Health Care 2007;16:28–33

- 24. Magrabi F, Westbrook JI, Coiera EW. What factors are associated with the integration of evidence retrieval technology into routine general practice settings? Int J Med Inform 2007;76:701–9

- 25. Shachak A, Reis S. The impact of electronic medical records on patient-doctor communication during consultation: a narrative literature review. Int J Med Inform 2009;15:641–9

- 26. Gorman P, Lavelle M, Ash J. Order creation and communication in healthcare. Methods Inf Med 2003;42:376–84

- 27. Spencer R, Coiera E, Logan P. Variation in communication loads on clinical staff in the emergency department. Ann Emerg Med 2004;44:268–73

- 28. Westbrook JI, Duffield C, Li L, et al. How much time do nurses have for patients? A longitudinal study of hospital nurses’ patterns of task time distribution and interactions with other health professionals. BMC Health Serv Res 2011;11:319

- 29. Holden R, Scanlon M, Patel R, et al. A human factors framework and study of the effect of nursing workload on patient safety and employee quality of working life. BMJ Qual Saf 2011;20:15–24

- 30. Westbrook J, Ampt A. Design, application and testing of the Work Observation Method by Activity Timing (WOMBAT) to measure clinicians’ patterns of work and communication. Int J Med Inform 2009;78S:S25–33

- 31. Coiera E. The science of interruption. BMJ Qual Saf 2012;21:357–60

- 32. Ross S, Ryan C, Duncan EM, et al. Perceived causes of prescribing errors by junior doctors in hospital inpatients: a study from the PROTECT programme. BMJ Qual Saf 2012;0:1–6

- 33. Chisholm CD, Dornfeld AM, Nelson DR, et al. Work interrupted: a comparison of workplace interruptions in emergency departments and primary care offices. Ann Emerg Med 2001;38:146–51

- 34. Westbrook JI, Coiera E, Dunsmuir WT, et al. The impact of interruptions on clinical task completion. Qual Saf Health Care 2010;19:284–9

- 35. Grundgeiger T, Sanderson P. Interruptions in healthcare: theoretical views. Int J Med Inform 2009;78:293–307

- 36. Westbrook JI, Woods A, Rob M, et al. Association of interruptions with increased risk and severity of medication administration errors. Arch Intern Med 2010;170:683–90

- 37. Hitchen L. Frequent interruptions linked to drug errors. BMJ 2008;336:1155

- 38. Gravelle H, Sutton M, Ma A. Doctor Behaviour under a Pay for Performance Contract: Treating, Cheating and Case Finding? Econ J 2010;120:129–56

- 39. de Lusignan S, Wells SE, Russell C, et al. Development of an assessment tool to measure the influence of clinical software on the delivery of high quality consultations. A study comparing two computerized medical record systems in a nurse run heart clinic in a general practice setting. Med Inform Internet Med 2002;27:267–80

- 40. Leong A, Koczan P, de Lusignan S, et al. A framework for comparing video methods used to assess the clinical consultation: a qualitative study. Med Inform Internet Med 2006;31:255–65

- 41. Sheeler I, Koczan P, Wallage W, et al. Low-cost three-channel video for assessment of the clinical consultation. Inform Prim Care 2007;15:25–31

- 42. de Lusignan S, Kumarapeli P, Chan T, et al. The ALFA (Activity Log Files Aggregation) toolkit: a method for precise observation of the consultation. J Med Internet Res 2008;10:e27

- 43. Pflug B, Kumarapeli P, van Vlymen J, et al. Measuring the impact of the computer on the consultation: an open source application to combine multiple observational outputs. Inform Health Soc Care 2010;35:10–24

- 44. Weed LL. Medical records that guide and teach. New Engl J Med 1968;278:593–9 and 278:652–7

- 45. de Lusignan S, Pearce C, Kumarapeli P, et al. Reporting Observational Studies of the Use of Information Technology in the Clinical Consultation. A Position Statement from the IMIA Primary Health Care Informatics Working Group (IMIA PCI WG). Yearb Med Inform 2011;6:39–47

- 46. Refsum C, Kumarapeli P, Gunaratne A, et al. Measuring the impact of different brands of computer systems on the clinical consultation: a pilot study. Inform Prim Care 2008;16:119–27

- 47. Tai TW, Anandarajah S, Dhoul N, et al. Variation in clinical coding lists in UK general practice: a barrier to consistent data entry? Inform Prim Care 2007;15:143–50

- 48. Scott D, Purves I. Triadic relationship between doctor, computer and patient. Interacting with Computers 1996;8:347–63

- 49. Pearce C, Kumarapeli P, de Lusignan S. Getting seamless care right from the beginning—integrating computers into the human interaction. Stud Health Technol Inform 2010;155:196–202

- 50. Moulene MV, de Lusignan S, Freeman G, et al. Assessing the impact of recording quality target data on the GP consultation using multi-channel video. Stud Health Technol Inform 2007;129(Pt 2):1132–6

- 51. Field A. Discovering statistics using SPSS. London: Sage, 2009

- 52. Ventres W, Kooienga S, Vuckovic N. Physicians, patients, and the electronic health record: an ethnographic analysis. Ann Fam Med 2006;4:124–31

- 53. Krause P, de Lusignan S. Procuring Interoperability at the expense of usability: a case study of UK National Programme for IT assurance process. Stud Health Technol Inform 2010;155:143–9

- 54. Alsos OA, Das A, Svanaes D. Mobile health IT: the effect of user interface and form factor on doctor-patient communication. Int J Med Inform 2012;81:12–28

- 55. Fiks AG, Alessandrini EA, Forrest CB, et al. Electronic medical record use in pediatric primary care. J Am Med Inform Assoc 2011;18:38–44

- 56. Pearce C, Arnold M, Phillips CB, et al. The many faces of the computer: An analysis of clinical software in the primary care consultation. Int J Med Inform 2012;81:475–84

- 57. Gibson M, Jenkings KN, Wilson R, et al. Multi-tasking in practice: coordinated activities in the computer supported doctor-patient consultation. Int J Med Inform 2005;74:425–36

- 58. Cimino JJ, Patel VL, Kushniruk AW. Studying the human-computer-terminology interface. J Am Med Inform Assoc 2001;8:163–73

- 59. Foy R, Hawthorne G, Gibb I, et al. A cluster randomised controlled trial of educational prompts in diabetes care: study protocol. Implementation Sci 2007;2:22

- 60. Yu D, Seger D, Lasser K, et al. Impact of implementing alerts about medication black-box warnings in electronic health records. Pharmacoepidemiol Drug Saf 2010;20:192–202

- 61. Makoul G. The SEGUE Framework for teaching and assessing communication skills. Patient Educ Couns 2001;45:23–34

- 62. Thomson F, Milne H, Hayward J, et al. Understanding the impact of information technology on interactions between patients and healthcare professionals: the INTERACT-IT study. Health Serv J 2012;(Suppl):P46 special supplement

- 63. Reddy M, Pratt W, Dourish P, et al. Sociotechnical requirements analysis for clinical systems. Methods Inf Med 2003;42:437–44

- 64. Beasley JW, Wetterneck TB, Temte J, et al. Information chaos in primary care: implications for physician performance and patient safety. J Am Board Fam Med 2011;24:745–51