Abstract

Biological differences between signed and spoken languages may be most evident in the expression of spatial information. PET was used to investigate the neural substrates supporting the production of spatial language in American Sign Language as expressed by classifier constructions, in which handshape indicates object type and the location/motion of the hand iconically depicts the location/motion of a referent object. Deaf native signers performed a picture description task in which they overtly named objects or produced classifier constructions that varied in location, motion, or object type. In contrast to the expression of location and motion, the production of both lexical signs and object type classifier morphemes engaged left inferior frontal cortex and left inferior temporal cortex, supporting the hypothesis that unlike the location and motion components of a classifier construction, classifier handshapes are categorical morphemes that are retrieved via left hemisphere language regions. In addition, lexical signs engaged the anterior temporal lobes to a greater extent than classifier constructions, which we suggest reflects increased semantic processing required to name individual objects compared with simply indicating the type of object. Both location and motion classifier constructions engaged bilateral superior parietal cortex, with some evidence that the expression of static locations differentially engaged the left intraparietal sulcus. We argue that bilateral parietal activation reflects the biological underpinnings of sign language. To express spatial information, signers must transform visual–spatial representations into a body-centered reference frame and reach toward target locations within signing space.

INTRODUCTION

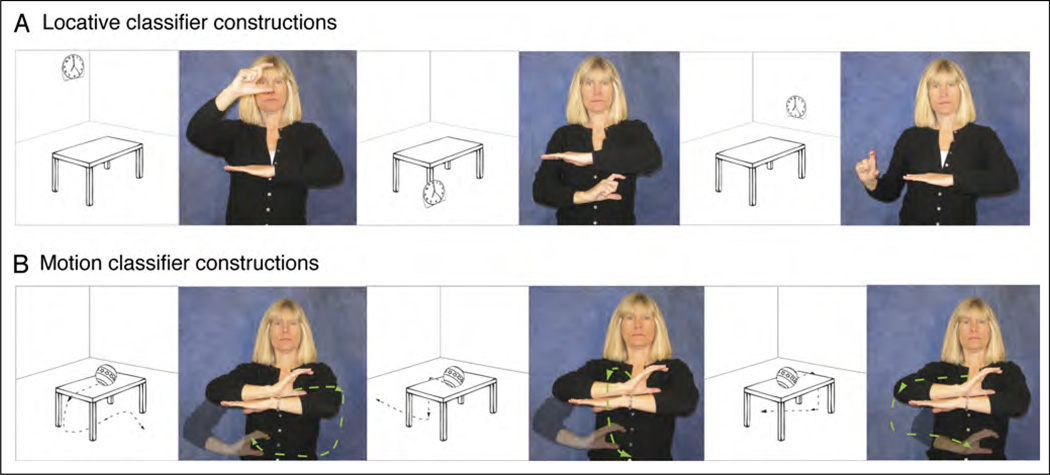

Signed languages differ dramatically from spoken languages with respect to how spatial information is encoded linguistically. Spoken languages tend to categorize and schematize spatial information using a combination of closed-class grammatical elements (e.g., prepositions or locative affixes) and lexical forms (e.g., lexical verbs expressing motion or position). In contrast, signed languages tend to encode spatial information via complex predicates, often referred to as classifier constructions (Emmorey, 2003). In these constructions, a handshape morpheme represents an object of a specific type (e.g., long and thin; vehicle), and the location and movement of the hands in signing space iconically depict spatial relations and object motion. For example, in Figure 1A, a signer uses American Sign Language (ASL) to describe three pictures that show three distinct locations of a clock in relation to a table; in Figure 1B, she describes three distinct movement trajectories for a ball rolling off the table. In these examples, the location and movement of her hand are analogue representations of the location and movement of the figure objects within each scene—location and movement information is not encoded by lexical verbs or functional morphemes. To our knowledge, there are no sign languages that use prepositions or affixes as the primary linguistic mechanism for structuring spatial information. This difference between spoken and signed languages appears to arise from differences in the biology of linguistic expression. For spoken languages, neither sound nor movements of the tongue can easily be used to create motivated representations of space and motion, whereas for signed languages, the movement and position of the hands are observable and can be readily used to create nonarbitrary, iconic linguistic representations of spatial information.

Figure 1.

Illustration of ASL classifier constructions expressing (A) the location of a clock with respect to the table, as shown in the adjacent pictures and (B) the movement paths of a ball rolling off the table, as shown in the adjacent pictures.

In addition, prepositions and lexical affixes are categorical, denoting a particular spatial configuration; for example, in English, “above” is a morpheme that specifies a relationship in which the figure object is aligned with a vertical projection of the upright axis of a reference object. However, in ASL classifier constructions, the spatial location of a figure object can be expressed in a gradient fashion, rather than in terms of morphemic categories (Emmorey & Herzig, 2003; but see Supalla, 2003, for an alternative view). The use of signing space to represent physical space allows ASL signers to distinguish among several spatial relationships that would all be described as “above” by English speakers (e.g., clocks at different heights that are all above a table). Similarly, the gradient use of space allows signers to naturally express variations in the path of motion in situations where English speakers might use the same verb phrase; for example, all of the motion paths shown in Figure 1B could be described in English as “A ball rolls off the table.” In contrast to the location of the hands within a classifier construction, Emmorey and Herzig (2003) and Schwartz (1979) have shown that ASL signers treat hand configurations as categorical morphemes, rather than as gradient, analogue representations. Furthermore, unlike locations in signing space, lexical representations for classifier handshapes are argued to contain phonological specifications, semantic classificatory information, and language-specific selectional restrictions (e.g., Schembri, Jones, & Burnham, 2005; Liddell, 2003).

In the current work, we investigated the possible neural consequences of the spatial language system that is unique to signed languages. Specifically, we used PET to identify what neural regions are engaged when signers overtly produce classifier constructions that express location, motion, or object type. Previous neuroimaging and lesion studies suggest that the production of classifier constructions engages the right hemisphere to a greater extent than production of lexical signs or sentences that do not involve classifier forms. Emmorey et al. (2002) found bilateral parietal activation when deaf native signers produced locative classifier constructions compared with naming objects with lexical signs; in contrast, for English speakers, Damasio et al. (2001) reported predominantly left hemisphere engagement for the production of prepositions in response to the same stimuli. Emmorey et al. (2005) partially replicated these results with hearing ASL–English bilinguals who exhibited greater right parietal activation when producing ASL classifier constructions compared with English prepositions. Supporting the neuroimaging findings, Hickok, Pickell, Klima, and Bellugi (2009) found that right hemisphere damaged (RHD) signers made a significant number of errors producing classifier constructions while making very few lexical errors in a narrative production task. In contrast, left hemisphere damaged (LHD) signers made significantly more lexical errors and did not differ from the RHD patients in the rate of classifier errors. For British Sign Language (BSL), Atkinson, Marshall, Woll, and Thacker (2005) also found that RHD signers made significantly more errors comprehending BSL classifier constructions expressing spatial relations (object location and orientation) than age-matched control signers, but RHD signers did not differ from controls on tests of lexical sign and sentence comprehension. Hickok et al. (2009, p. 386) speculate “this increased right hemisphere involvement for classifiers, as opposed to lexical signs, may reflect the analogue nature of the spatial encoding of classifier signs.”

What is not clear from previous research is whether the production of the different components of classifier constructions—classifier handshapes, locations in space, and movement paths—might each engage distinct neural regions with different patterns of hemispheric lateralization. For example, if classifier handshapes are categorical morphemes, their production might involve left hemisphere dominant lexical retrieval processes. However, production data from RHD and LHD signers have not clearly yielded the error patterns that would be predicted by this hypothesis, namely more classifier handshape errors for LHD signers and more location or movement errors for RHD signers (Hickok et al., 2009). Nonetheless, the PET results of Emmorey et al. (2002) indicated that left inferior temporal (IT) cortex was differentially engaged during the production of locative classifier constructions in contrast to lexical prepositions. Emmorey et al. (2002) hypothesized that greater activation was observed in left IT because, unlike lexical prepositions, classifier handshapes encode information about object type and left IT is hypothesized to be involved in mediating between object recognition and lexical retrieval processes (e.g., Tranel, Grabowski, Lyon, & Damasio, 2005).

On the other hand, Emmorey et al. (2005) reported a lack of activation in the left inferior frontal gyrus (IFG) when bilingual signers produced locative classifier constructions, in contrast to the production of ASL lexical signs (nouns) and in contrast to the production of English prepositions. Emmorey et al. (2005) suggested that locative classifier constructions might not engage left IFG to the same extent as lexical signs or English prepositions because these constructions do not “name” entities, actions, or spatial relationships. In addition, classifier handshape morphemes constitute a small closed set of morphemes that can refer to many distinct objects; for example, the location of a brush, pen, and ruler would all be expressed using a 1 handshape that specifies a long thin object. In contrast, lexical signs are unique for each object—a brush, pen, and ruler would all be named with different signs. Thus, the lexical retrieval demands differ for lexical signs compared with classifier handshape morphemes.

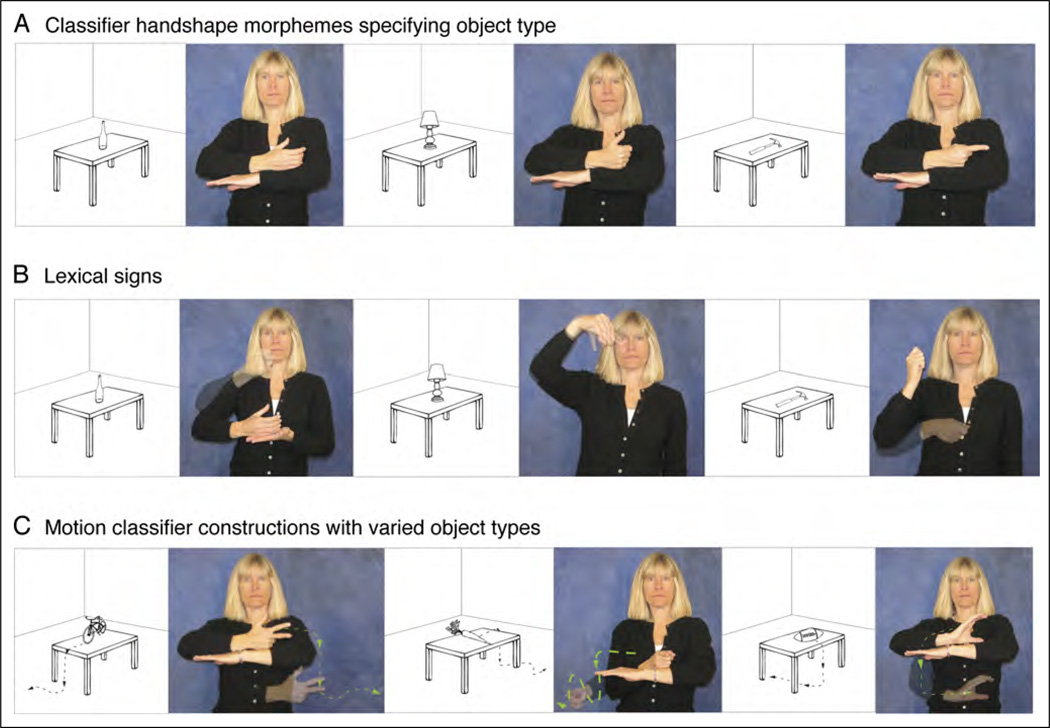

In the current experiment, we endeavored to tease apart what neural regions are involved when native deaf signers produce different components of classifier constructions. Deaf signers were presented with line drawings and produced (a) classifier constructions that varied only in the location of an object (Figure 1A), (b) classifier constructions that varied only in the motion of an object (Figure 1B), (c) classifier constructions that varied only in the object type (Figure 2A), (d) lexical signs for the same objects (Figure 2B), and (e) classifier constructions that varied in both object type and object motion (Figure 2C).

Figure 2.

Illustration of (A) classifier constructions expressing the location of different object types, (B) lexical signs for the same objects, and (C) motion classifier constructions expressing the movement paths of different object types, as shown in the adjacent pictures.

We hypothesized that the depiction of location and motion information in classifier constructions recruits parietal cortex bilaterally, and thus, we predicted that the production of locative and motion classifier constructions (Figure 1) would activate bilateral parietal cortices to a greater extent than the production of object type classifier constructions (Figure 2A) or lexical signs (Figure 2B). Furthermore, the contrast between location classifier constructions (Figure 1A) and motion classifier constructions (Figure 1B) was designed to investigate whether the production of spatial location versus movement differentially engaged parietal cortices. Expression of movement path is spatially more complex, which might result in increased parietal involvement and might also recruit motion-sensitive brain regions (MT+). Comprehension of motion semantics encoded by ASL classifier constructions has been shown to activate MT+ to a greater extent than sentences with classifier constructions expressing static location information (McCullough, Saygin, Korpics, & Emmorey, 2012).

We hypothesized that the production of lexical signs would recruit left fronto-temporal cortices that support lexical retrieval and phonological encoding, whereas we hypothesized that the expression of location and motion within classifier constructions involves a gradient, analogue spatial mapping between the hands and referent object, rather than lexical retrieval of location and movement morphemes (contra Supalla, 1978, 1986). If these hypotheses are correct, then the contrast between the production of lexical signs (Figure 2B) and classifier constructions expressing either location or movement (Figure 1A, B) should result in greater activation in the left fronto-temporal cortices for lexical signs. Furthermore, we predicted greater bilateral parietal activation for the production of location and motion classifier constructions than for lexical signs. We hypothesized that the analogue, spatial mapping between the location/movement of the hands in signing space and the location/movement of objects would recruit parietal cortices that support visuospatial processing and body-centered hand movements.

If the production of classifier handshapes encoding object type requires the retrieval of categorical morphemes, then the contrast between classifier constructions encoding object type (Figure 2A) and location classifier constructions (Figure 1A) should result in greater activation within the left inferior frontal cortex for object type classifier constructions, reflecting lexical search and retrieval demands for classifier handshape morphemes. On the other hand, given the results of Emmorey et al. (2005), it is possible that retrieval of classifier morphemes does not strongly engage lexical retrieval processes associated with left IFG but rather depends primarily on object processing for lexical–semantic binding within IT cortex. Classifier hand configurations represent object properties (e.g., the shape, size, and type of object), and unlike classifier morphemes in spoken languages, there is an iconic mapping between the form of the classifier morpheme and the form of the referent object.

Finally, the contrast between motion classifier constructions that require retrieval of distinct object type morphemes (Figure 2C) and motion classifier constructions with a single nonvarying object (Figure 1B) was predicted to result in greater left fronto-temporal activation for the former because several distinct classifier handshapes must be retrieved and produced. Furthermore, the contrast between the production of lexical signs for different objects and classifier expressions describing the movement of different objects was predicted to result in greater activation within left hemisphere language areas (specifically, left IFG) for lexical signs, but greater activation in bilateral parietal cortices for the motion classifier constructions.

In summary, these different conditions allowed us to tease apart and identify what neural regions support the expression of location, the expression of movement path, and the expression of object type within classifier constructions in ASL. In addition, we could identify how the production of classifier constructions differed from the production of lexical signs.

More generally, teasing apart the neural correlates of categorical morphemes (i.e., classifier handshapes, lexical signs) and schematic, analogue representations of spatial information (i.e., locations/movements in signing space) has implications for our understanding of the interface between language and mental representations of space. For spoken languages (primarily English), the preponderance of evidence indicates a specific role for the left supramarginal gyrus (SMG) in the comprehension and production of categorical locative morphemes, that is, spatial verbs and prepositions (Noordzij, Neggers, Ramsey, & Postma, 2008; Tranel & Kemmerer, 2004; see Kemmerer, 2006, for review). Recently, Amorapanth et al. (2012) found that the right SMG may play a critical role in extracting schematic spatial representations relevant to the use of locative prepositions (see also Damasio et al., 2001). Location and movements within a classifier construction are schematic in the sense used by Amorapanth et al. (2012), that is, representations in which “perceptual detail has been abstracted away from a complex scene or event while preserving critical aspects of its analog qualities (p. 226).” If the link between linguistic and visual–spatial representations is parallel for signed and spoken languages, then the conjunction of categorical and schematic representations in classifier constructions might result in bilateral activation in inferior parietal cortex. Alternatively, the linguistic–spatial interface might differ for sign languages because classifier morphemes categorize object type—not type of spatial relation—and the location and motion schemas map iconically to the body, rather than to categorical spatial morphemes.

METHODS

Participants

Eleven right-handed deaf signers aged 19–33 years (mean age = 24 years) participated in the study (five women). All had deaf parents and acquired ASL as their first language from birth. All participants were congenitally deaf, and all but one participant had severe or profound hearing loss (one participant had a moderate hearing loss). All deaf participants used ASL as their primary and preferred language. All participants had 12 or more years of formal education, and all gave informed consent in accordance with federal and institutional guidelines.

Materials and Task

The stimuli were created using Adobe Illustrator CS (Adobe Systems, Inc.). Each stimulus consisted of a line drawing of an object in a room, and each object was located with respect to a table (see Figures 1 and 2). The object drawings were from the Center for Research on Language International Picture Naming Project (Székely et al., 2003; Bates et al., 2000). The stimuli and tasks were grouped into the following five conditions:

(a) Location classifier condition: Twenty-five pictures of a clock in 25 different locations with respect to a table (see Figure 1A for three examples); 25 pictures of a square mirror in different locations with respect to the table. The clock and mirror pictures were presented in separate runs. For each run, participants were asked to indicate where the clock or the mirror was located with respect to the table. The table was represented by a flat B handshape (nondominant hand); the clock and the mirror were represented by a curved L handshape and a flat B handshape, respectively.

(b) Motion classifier condition: Twenty-five pictures of a ball rolling off a table with 25 different movement trajectories, each depicted by a dotted line and arrow (see Figure 1B for three examples); 25 pictures of a toy car rolling off a table with 25 different movement trajectories, each depicted by a dotted line and arrow. The ball and car pictures were presented in separate runs. For each run, participants were asked to indicate the path of the ball or the car. The ball was represented by a curved 5 handshape, and the car was represented by the ASL vehicle classifier (the middle finger, index finger, and thumb are extended in a 3 handshape).

(c) Object type classifier condition: Fifty pictures of different objects (presented in two runs of 25) with each object located on top of a table (see Figure 2A for three examples). Participants were asked to produce the appropriate classifier handshape for each object on top of the table, which was represented by a B handshape on the nondominant hand. The following classifier handshapes were elicited: airplane classifier (ILY handshape; one picture), flat object (B handshape; one picture), vehicle classifier (3 handshape; three pictures), flat round object (curved L handshape; four pictures), upright standing object (A-bar handshape; four pictures), cylindrical object (C handshape; ten pictures), long thin object (1 handshape; 12 pictures), spherical object (curved 5 handshape; 15 pictures).

(d) Lexical signs: The same 50 line drawings from condition (c) in two runs of 25, with different random orders of presentation. Participants were asked to name each object with the appropriate ASL sign (see Figure 2B for three examples). Frequency norms are not available for ASL, but the Emmorey lab at SDSU maintains a database of familiarity ratings for ASL signs based on a scale of 1 (very infrequent) to 7 (very frequent), with each sign rated by at least four deaf signers. The mean ASL sign familiarity rating for the ASL signs was 3.8 (SD = 1.2). The mean log-transformed frequency of the English translations of the ASL signs was 2.8 (SD = 0.7) from SUBTLEXus (expsy.ugent.be/subtlexus/).

(e) Motion classifier constructions with varied object types: Fifty pictures of different objects (presented in two runs of 25) with each object depicted falling off the table (see Figure 2C for three examples). Participants were asked to produce the appropriate classifier handshape for each object and to indicate its path of motion with respect to the table, which again was represented by a B handshape on the nondominant hand. The following classifier handshapes were elicited: airplane classifier (ILY handshape; one picture), flat object (B handshape; one picture), vehicle classifier (3 handshape; three pictures), flat round object (curved L handshape; four pictures), cylindrical object (C handshape; six pictures), spherical object (curved 5 handshape; 15 pictures), long thin object (1 handshape; 20 pictures).

Procedure

Image Acquisition

All participants underwent MR scanning in a 3.0T TIM Trio Siemens scanner to obtain a 3-D T1-weighted structural scan with isotropic 1-mm resolution using the following protocol: magnetization prepared rapid gradient echo, repetition time = 2530 msec, echo time = 3.09 msec, inversion time = 800 msec, field of view = 25.6 cm, matrix = 256 × 256 × 208. The MR scans were used to confirm the absence of structural abnormalities, aid in anatomical interpretation of results, and facilitate registration of PET data to a Talairach-compatible atlas.

PET data were acquired with a Siemens ECAT EXACT HR+ PET system using the following protocol: 3-D, 63 image planes, 15 cm axial field of view, 4.6 mm transaxial, and 3.5 mm axial FWHM resolution. Participants performed the experimental tasks during the intravenous bolus injection of 15 mCi of [15O]water. Arterial blood sampling was not performed.

Images of regional CBF (rCBF) were computed using the [15O]water autoradiographic method (Hichwa, Ponto, & Watkins, 1995; Herscovitch, Markham, & Raichle, 1983) as follows. Dynamic scans were initiated with each injection and continued for 100 sec, during which twenty 5-sec frames were acquired. To determine the time course of bolus transit from the cerebral arteries, time activity curves were generated for the whole brain based on scanner-derived log files. The eight frames representing the first 40 sec immediately after transit of the bolus from the arterial pool were summed to make an integrated 40-sec count image. These summed images were reconstructed into 2 mm pixels in a 128 × 128 matrix.
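The frame summation described above can be illustrated with a minimal Python sketch. It is not the acquisition software used in the study. The function names `time_activity_curve` and `integrated_count_image` are hypothetical, each frame is assumed to be a flat list of pixel counts, and the index of the first frame after bolus transit is assumed to be chosen by the analyst from the whole-brain curve, because the text does not specify a selection rule.

```python
def time_activity_curve(frames):
    """Return total counts per frame (one value per 5-sec frame)."""
    return [sum(frame) for frame in frames]


def integrated_count_image(frames, first_frame, n_frames=8):
    """Sum n_frames consecutive frames pixel by pixel.

    frames      -- list of frames; each frame is a flat list of pixel counts
    first_frame -- index of the first frame after bolus transit
                   (assumed to be picked from the time-activity curve)
    n_frames    -- eight 5-sec frames give a 40-sec integrated image
    """
    selected = frames[first_frame:first_frame + n_frames]
    if len(selected) < n_frames:
        raise ValueError("not enough frames after the chosen start frame")
    return [sum(pixel_values) for pixel_values in zip(*selected)]


# Toy data: twenty 5-sec frames with two pixels each.
toy_frames = [[i, 2 * i] for i in range(20)]
curve = time_activity_curve(toy_frames)
summed = integrated_count_image(toy_frames, first_frame=3)
```

In this sketch the whole-brain curve is only computed, not interpreted; the choice of `first_frame` stays with the user.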

Spatial Normalization

PET data were spatially normalized to a Talairach-compatible atlas through a series of coregistration steps (see Emmorey, McCullough, Mehta, Ponto, & Grabowski, 2011; Damasio, Tranel, Grabowski, Adolphs, & Damasio, 2004; Grabowski et al., 1995, for details). Before registration, the MR data were manually traced to remove extracerebral voxels. Talairach space was constructed directly for each participant via user identification of the anterior and posterior commissures and the midsagittal plane on the 3-D MRI data set in Brainvox. An automated planar search routine defined the bounding box, and piecewise linear transformation was used (Frank, Damasio, & Grabowski, 1997), as defined in the Talairach atlas (Talairach & Tournoux, 1988). After Talairach transformation, the MR data sets were warped (AIR fifth order nonlinear algorithm) to an atlas space constructed by averaging 50 normal Talairach-transformed brains, rewarping each brain to the average, and finally averaging them again, analogous to the procedure described in Woods, Dapretto, Sicotte, Toga, and Mazziotta (1999).

For each participant, PET data from each injection were coregistered to each other using Automated Image Registration (AIR 5.25, Roger Woods, UCLA). The coregistered PET data were averaged to produce a mean PET image. Additionally, the participants’ MR images were segmented using a validated tissue segmentation algorithm (Grabowski, Frank, Szumski, Brown, & Damasio, 2000), and the gray matter partition images were smoothed with a 10-mm kernel. These smoothed gray matter images served as the target for registering participants’ mean PET data to their MR images, with the registration step performed using FMRIB Software Library’s linear registration tool (Jenkinson, Bannister, Brady, & Smith, 2002; Jenkinson & Smith, 2001). The deformation fields computed for the MR images were then applied to the PET data to bring them into register with the Talairach-compatible atlas.

After spatial normalization, the PET data were smoothed with a 16-mm FWHM Gaussian kernel using complex multiplication in the frequency domain. The final calculated voxel resolution was 17.9 × 17.9 × 18.9 mm. PET data from each injection were normalized to a global mean of 1000 counts per voxel.
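The smoothing and global normalization steps can be sketched in one dimension as follows. This is an illustrative simplification, not the pipeline used in the study: it filters a single profile rather than a 3-D volume, uses a naive discrete Fourier transform, and all function names are hypothetical. It relies on two standard facts: a Gaussian with standard deviation σ has FWHM = 2·sqrt(2·ln 2)·σ, and its Fourier transform is exp(−2π²σ²f²).

```python
import cmath
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian FWHM to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (fine for short profiles)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x[t] * cmath.exp(sign * 2j * math.pi * k * t / n)
                for t in range(n))
        out.append(s / n if inverse else s)
    return out


def smooth_profile(values, fwhm_mm, pixel_mm):
    """Gaussian smoothing by multiplication in the frequency domain."""
    sigma = fwhm_to_sigma(fwhm_mm) / pixel_mm  # sigma in pixels
    n = len(values)
    filtered = []
    for k, coeff in enumerate(dft(values)):
        freq = min(k, n - k) / n  # cycles per pixel
        gain = math.exp(-2.0 * (math.pi * sigma * freq) ** 2)
        filtered.append(coeff * gain)
    return [v.real for v in dft(filtered, inverse=True)]


def normalize_global_mean(values, target=1000.0):
    """Rescale so that the mean count per voxel equals target."""
    mean = sum(values) / len(values)
    return [v * target / mean for v in values]


# Toy profile: a single hot pixel on a 2-mm grid, smoothed at 16 mm FWHM.
profile = [0.0] * 32
profile[16] = 100.0
smoothed = smooth_profile(profile, fwhm_mm=16.0, pixel_mm=2.0)
scaled = normalize_global_mean(smoothed)
```

Because the filter gain at zero frequency is 1, the smoothing redistributes counts without changing their total, so the order of smoothing and global scaling does not matter in this toy case.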

Regression Analyses

PET data were analyzed with a pixelwise general linear model (Friston et al., 1995). Regression analyses were performed using tal_regress, a customized software module based on Gentleman’s least squares routines (Miller, 1991), and cross-validated against SAS (Grabowski et al., 1996). The regression model included covariables for the task conditions and subject effects. We contrasted the following conditions: (a) locative and object type classifier constructions (Figure 1A vs. Figure 2A), (b) locative and motion classifier constructions (Figure 1A vs. Figure 1B), (c) lexical signs and locative/motion classifier constructions combined (Figure 2B vs. Figure 1A and B), (d) lexical signs and object type classifier constructions (Figure 2B vs. Figure 2A), and (e) lexical signs and motion classifier constructions with varied object types (Figure 2B vs. Figure 2C). Contrasts were tested with t tests (familywise error rate p < .05), using random field theory to correct for multiple spatial comparisons across the whole brain (Worsley, 1994; Worsley, Evans, Marrett, & Neelin, 1992).
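For a single pixel, a regression of this general form can be sketched as below. This is not tal_regress and does not reproduce its design matrix; it is a minimal ordinary least squares illustration that assumes one indicator column per condition plus indicator columns for all subjects except a reference subject. The names `solve` and `contrast_t` are hypothetical, and the random field correction is not included.

```python
import math


def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def contrast_t(y, condition, subject, cond_a, cond_b):
    """t statistic and residual df for cond_a minus cond_b at one pixel."""
    conds = sorted(set(condition))
    subjs = sorted(set(subject))[1:]  # first subject is the reference level
    X = [[1.0 if c == k else 0.0 for k in conds] +
         [1.0 if s == k else 0.0 for k in subjs]
         for c, s in zip(condition, subject)]
    p = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(p)]
    beta = solve(xtx, xty)
    resid = [yi - sum(b * x for b, x in zip(beta, r)) for r, yi in zip(X, y)]
    dof = len(y) - p
    sigma2 = sum(e * e for e in resid) / dof
    c = [0.0] * p
    c[conds.index(cond_a)] = 1.0
    c[conds.index(cond_b)] = -1.0
    w = solve(xtx, c)  # (X'X)^-1 c
    variance = sigma2 * sum(ci * wi for ci, wi in zip(c, w))
    estimate = sum(ci * bi for ci, bi in zip(c, beta))
    return estimate / math.sqrt(variance), dof


# Toy data: three subjects, two conditions, two scans per cell.
subjects = [1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 3, 3]
conditions = ["loc", "loc", "obj", "obj"] * 3
counts = [1006, 1004, 1001, 999, 1016, 1014, 1011, 1009,
          1026, 1024, 1021, 1019]
t_value, dof = contrast_t(counts, conditions, subjects, "loc", "obj")
```

A whole-brain analysis would repeat this fit at every pixel and compare each t value with the corrected critical threshold.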

RESULTS

Participants performed the production tasks with few errors. Nonresponses and fingerspelled responses (for the lexical sign condition) were scored as incorrect. Mean accuracies for each condition were as follows: (a) location classifier condition = 100%, (b) motion classifier condition = 98%, (c) object classifier condition = 100%, (d) lexical signs = 98%, (e) motion classifier with varied objects condition = 98%.

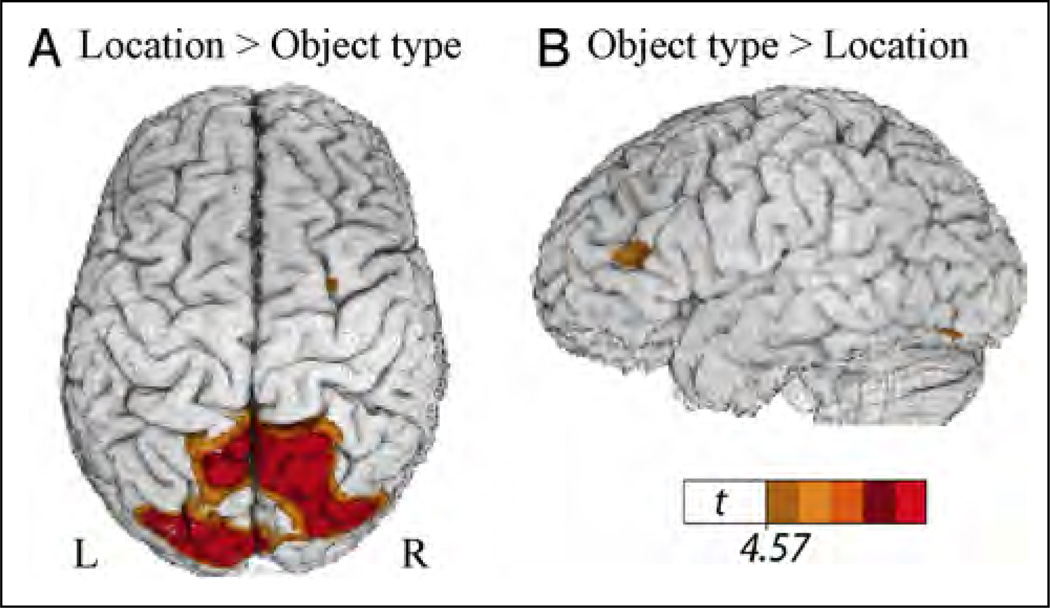

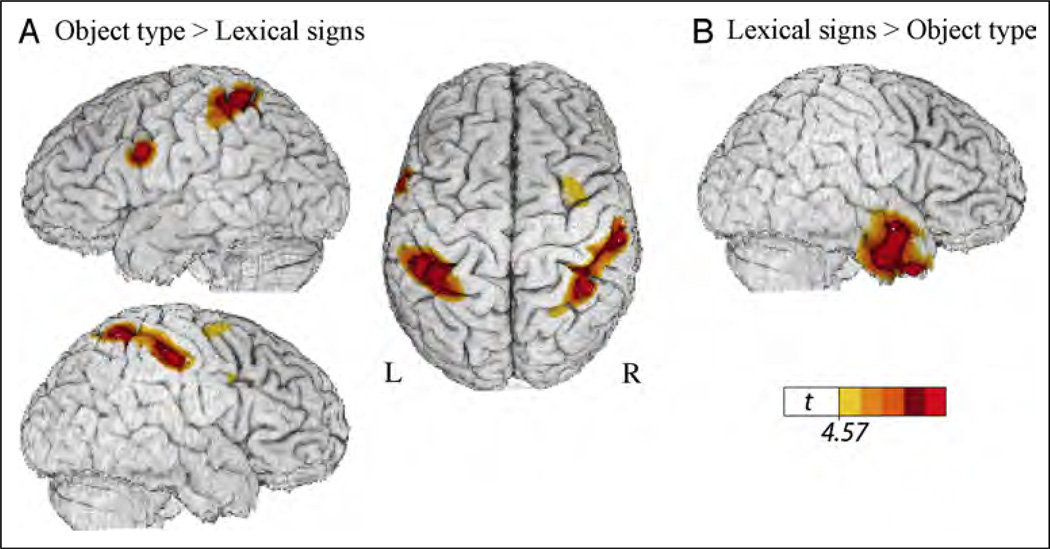

Location–Object Type

Table 1 presents the local maxima of areas of increased activity when signers produced locative classifier constructions that varied only in the spatial location of the figure object (Figure 1A) compared with locative classifier constructions that varied only in object type (Figure 2A). This contrast directly compares the expression of spatial location with the retrieval of classifier handshape morphemes within a locative classifier construction. As can be seen in Figure 3, the expression of spatial location resulted in greater activation in superior parietal cortex and occipital cortex bilaterally, whereas the production of classifier handshape morphemes resulted in greater activation in the left inferior frontal cortex and in a small region in the left posterior IT cortex. These results support our hypothesis that the production of gradient spatial locations within classifier constructions engages the right hemisphere (in particular, right parietal cortex), whereas the production of classifier handshape morphemes engages left hemisphere language regions.

Table 1.

Local Maxima of Areas with Increased Activation for Producing Location Classifier Constructions in Comparison to Classifier Constructions Expressing Object Type

| Region | Brodmann’s Area | Side | x | y | z | t |
|---|---|---|---|---|---|---|
| Location > Object Type | | | | | | |
| Superior frontal sulcus | BA 6 | R | +27 | −1 | +58 | 4.69 |
| SPL | BA 7 | R | +14 | −65 | +58 | 8.15 |
| Precuneus | BA 7 | L | −5 | −61 | +51 | 6.99 |
| Cuneus | BA 7 | R | +21 | −73 | +35 | 7.21 |
| | BA 19 | R | +30 | −82 | +34 | 7.56 |
| | BA 18/BA 19 | L | −16 | −85 | +23 | 9.00 |
| Lingual gyrus | BA 18 | R | +9 | −74 | +2 | 7.88 |
| Object Type > Location | | | | | | |
| IFG | BA 45 | L | −42 | +27 | +12 | −4.74 |
| | BA 47 | L | −25 | +30 | −9 | −4.89 |
| Middle frontal gyrus | BA 46/45 | L | −48 | +38 | +16 | −4.90 |
| Lateral orbital gyrus | BA 11 | R | +30 | +44 | −10 | −5.74 |
| Frontal pole | BA 10 | R | +18 | +64 | −3 | −4.91 |
| IT cortex | BA 19 | L | −46 | −76 | −14 | −4.67 |
| Anterior cingulate | BA 24 | L | −9 | +25 | +14 | −4.71 |
| Anterior insula | | R | +26 | +13 | −7 | −4.80 |

Results are from a whole-brain analysis (critical t(94) = 4.57, p < .05, corrected).

Figure 3.

Illustration of the comparison between classifier constructions expressing location (see Figure 1A) and object type (see Figure 2A), which revealed (A) greater activity in bilateral superior parietal cortex for classifier constructions expressing location and (B) greater activity in left inferior frontal cortex and within a small region in left IT cortex for classifier constructions expressing object type. The color overlay indicates t statistic values where there is a significant increase in activity associated with the contrast (p < .05, corrected).

Location–Motion

The contrast between locative classifier constructions (Figure 1A) and motion classifier constructions (Figure 1B) did not result in significant differences in activation at a corrected threshold, suggesting that the production of distinct spatial locations and the production of distinct movement paths are supported by very similar neural substrates. However, at a lower threshold (t > 3.18; p < .001, uncorrected), this contrast revealed greater activation for location expressions in the left intraparietal sulcus (the cluster includes the inferior parietal lobule), whereas motion expressions engaged a much more medial region in superior parietal cortex (see Table 2). The contrast also revealed greater activation for motion expressions in the left posterior middle temporal gyrus, near MT+ and extending into occipital cortex.

Table 2.

Local Maxima of Areas with Increased Activation for Producing Location Classifier Constructions in Comparison to Motion Classifier Constructions, at an Uncorrected Threshold (p < .001)

| Region | Brodmann’s Area | Side | x | y | z | t |
|---|---|---|---|---|---|---|
| Location > Motion | | | | | | |
| Middle temporal gyrus | BA 21 | L | −42 | −3 | −29 | −3.46 |
| IT gyrus | BA 20 | L | −53 | −20 | −22 | −3.63 |
| | BA 20 | L | −62 | −22 | −22 | −3.63 |
| Intraparietal sulcus | BA 7 | L | −42 | −62 | +50 | −4.23 |
| Parahippocampal gyrus | BA 35 | R | +29 | −13 | −23 | −3.42 |
| Uncus | | R | +30 | −11 | −33 | −3.37 |
| Cuneus | BA 18 | L | −7 | −97 | +21 | −3.43 |
| Motion > Location | | | | | | |
| Postcentral gyrus | BA 1 | L | −35 | −28 | +65 | 3.46 |
| Middle temporal gyrus | BA 19 | L | −49 | −79 | +17 | 3.46 |
| SPL | BA 7 | L | −13 | −67 | +57 | 3.23 |
| Middle occipital gyrus | BA 19 | R | +30 | −94 | +15 | 3.46 |
| Lingual gyrus | BA 18 | L | −18 | −76 | −4 | 4.50 |
| Cerebellum | | R | +2 | −59 | −20 | 4.46 |

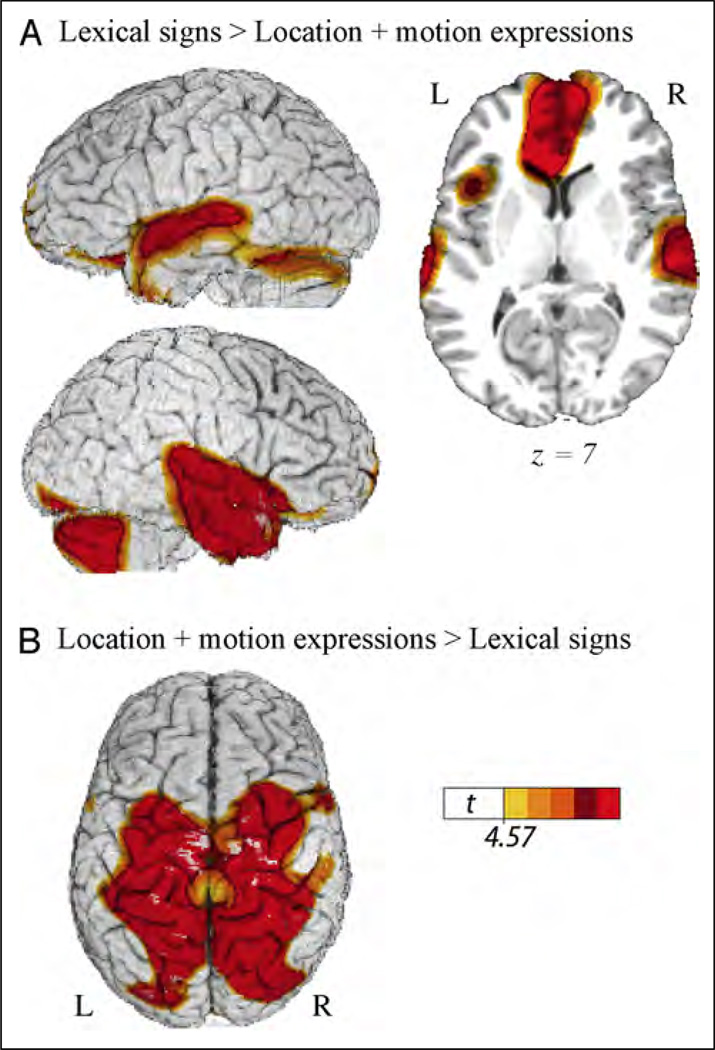

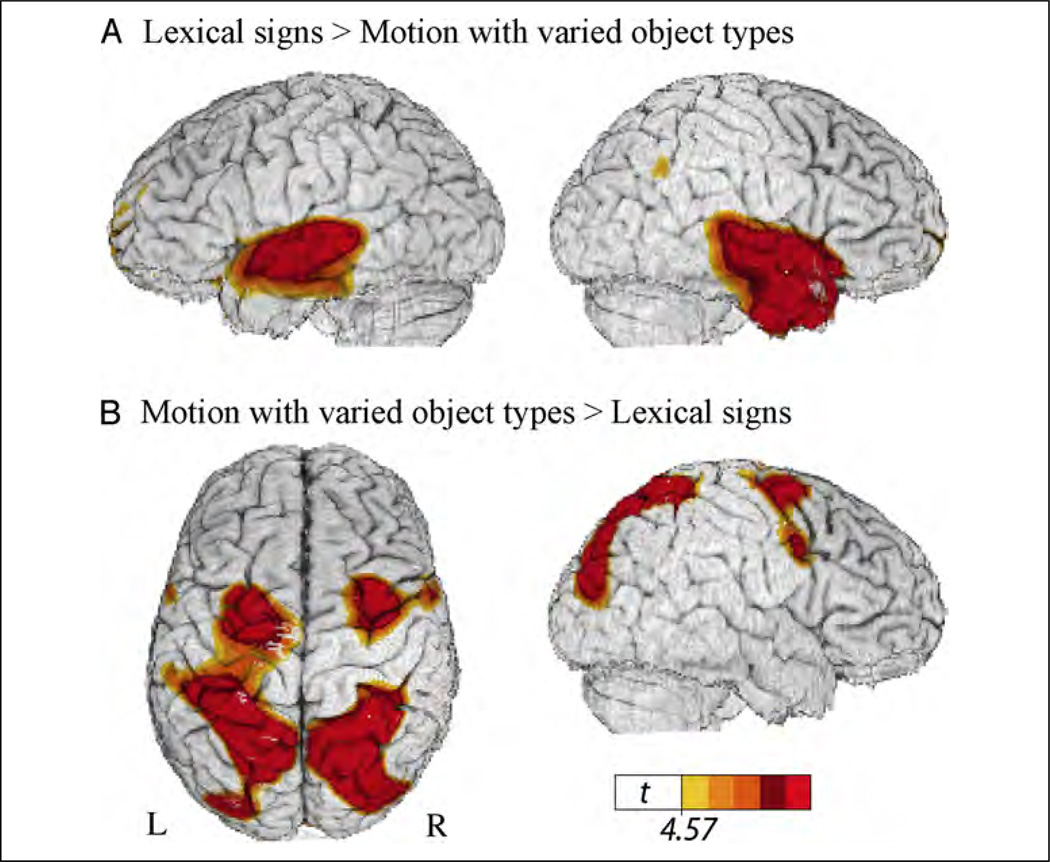

Lexical Signs–Location and Motion Classifier Constructions

Table 3 presents the local maxima of areas of increased activity when deaf signers produced lexical signs (Figure 2B) compared with producing location and motion classifier constructions (Figure 1A and B). As predicted, this contrast revealed greater activation for lexical signs in the left fronto-temporal cortices (Figure 4A) and greater activation in parietal cortices for classifier constructions expressing gradient spatial locations and movement paths (Figure 4B). In addition, the production of lexical signs generated increased activation in the anterior temporal lobes and in the cerebellum. Production of location and motion expressions also engaged sensorimotor cortices to a greater extent than lexical signs.

Table 3.

Local Maxima of Areas with Increased Activation for Producing Lexical Signs in Comparison to Producing Location and Motion Classifier Constructions (Combined)

| Region | Brodmann’s Area | Side | x | y | z | t |
|---|---|---|---|---|---|---|
| Lexical Signs > Location and Motion Classifier Constructions | | | | | | |
| Superior frontal gyrus/sulcus | BA 9/10 | L | −30 | +55 | +24 | −4.74 |
| | | L | −21 | +65 | +15 | −4.70 |
| IFG | BA 47 | L | −17 | +12 | −18 | −7.56 |
| Frontal operculum | BA 44 | L | −40 | +13 | +7 | −5.70 |
| Superior temporal gyrus | BA 42/BA 22 | L | −66 | −26 | +6 | −6.47 |
| | | L | −53 | −2 | −5 | −6.94 |
| | | R | +50 | +2 | −13 | −9.57 |
| Anterior temporal pole | BA 38 | L | −27 | +1 | −25 | −7.79 |
| | | R | +37 | +7 | −35 | −7.11 |
| Parahippocampal gyrus | BA 35 | L | −34 | −28 | −11 | −9.59 |
| | | R | +23 | −22 | −12 | −5.91 |
| Ventromedial frontal cortex | BA 11 | | 0 | +45 | −3 | −9.84 |
| Inferior occipital gyrus | BA 18 | R | +38 | −84 | −14 | −6.47 |
| | | R | +29 | −95 | −7 | −5.33 |
| IT/fusiform | BA 37 | L | −45 | −74 | −16 | −5.74 |
| | | R | +41 | −76 | −15 | −5.66 |
| Cerebellum | | L | −39 | −81 | −17 | −5.25 |
| | | L | −50 | −65 | −19 | −5.69 |
| | | L | −30 | −85 | −25 | −5.50 |
| | | R | +43 | −66 | −38 | −6.92 |
| | | R | +34 | −82 | −21 | −6.35 |
| | | R | +51 | −63 | −25 | −6.79 |
| Location and Motion Classifier Constructions > Lexical Signs | | | | | | |
| Precentral gyrus | BA 6 | L | −58 | +6 | +30 | 5.36 |
| | | R | +56 | +8 | +32 | 6.91 |
| Superior frontal gyrus | BA 6 | L | −26 | −5 | +62 | 8.59 |
| Middle frontal gyrus | BA 6 | R | +30 | −1 | +56 | 10.26 |
| SPL | BA 40/7 | L | −29 | −46 | +53 | 12.08 |
| | | R | +29 | −45 | +50 | 12.78 |
| | BA 7 | L | −14 | −67 | +58 | 13.09 |
| | | R | +18 | −63 | +56 | 15.18 |
| Middle occipital gyrus | BA 19 | L | −24 | −79 | +22 | 10.04 |
| Lingual gyrus | BA 18 | R | +8 | −76 | +2 | 11.23 |

Results are from a whole-brain analysis (critical t(94) = 4.57, p < .05, corrected).

Figure 4.

Illustration of the comparison between lexical signs for objects (see Figure 2B) and location and motion classifier constructions (combined; see Figure 1A and B), which revealed (A) greater activity in the ATLs and in left inferior frontal cortex for lexical signs and (B) greater activity in bilateral superior parietal cortex for location and motion classifier constructions. The color overlay indicates t statistic values where there is a significant increase in activity associated with the contrast (p < .05, corrected).

Object Type–Lexical Signs

Table 4 presents the local maxima of areas of increased activity when signers produced classifier handshape morphemes expressing object type (Figure 2A) compared with lexical signs for the same objects (Figure 2B). This contrast did not reveal differential activation within left IFG, suggesting that the production of both lexical signs and classifier handshapes engages left IFG. The contrast revealed greater activation for lexical signs in right anterior temporal cortex compared with the expression of object type (Figure 5B). In contrast to lexical signs, the expression of object type (within a locative classifier construction) recruited parietal cortex bilaterally and a region in the left precentral sulcus (Figure 5A).

Table 4.

Local Maxima of Areas with Increased Activation for Producing Object Type Classifier Morphemes in Comparison to Producing Lexical Signs for the Same Objects

| Region | Brodmann’s Area | Side | x | y | z | t |
|---|---|---|---|---|---|---|
| Object Type > Lexical Signs | | | | | | |
| Middle frontal gyrus | BA 6 | R | +31 | +1 | +56 | 4.90 |
| Inferior frontal sulcus | BA 9 | R | +46 | +5 | +33 | 4.83 |
| Precentral sulcus | BA 6 | L | −57 | +8 | +29 | 6.36 |
| Inferior parietal sulcus | BA 40 | L | −34 | −44 | +47 | 7.73 |
| | | R | +45 | −28 | +45 | 6.46 |
| SPL | BA 7 | R | +39 | −49 | +58 | 6.30 |
| Lexical Signs > Object Type | | | | | | |
| Ventromedial pFC | BA 11 | R | +1 | +43 | 0 | −6.93 |
| Angular gyrus | BA 39 | L | −59 | −60 | +27 | −4.67 |
| Anterior middle temporal gyrus | BA 21 | R | +55 | +1 | −16 | −6.07 |
| Anterior IT gyrus | BA 20 | R | +51 | −5 | −26 | −6.10 |
| Parahippocampal gyrus | | L | −28 | −2 | −19 | −5.11 |

Results are from a whole-brain analysis (critical t(94) = 4.57, p < .05, corrected).

Figure 5.

Illustration of the comparison between object type classifier constructions and lexical signs (see Figure 2A and B), which revealed (A) greater activity in bilateral superior parietal cortex and in the left precentral sulcus for location classifier constructions expressing object type and (B) greater activity in the right ATL for lexical signs. The color overlay indicates t statistic values where there is a significant increase in activity associated with the contrast (p < .05, corrected).

Motion Classifier Constructions (Same Object)–Motion Classifier Constructions with Varied Object Types

The contrast between classifier constructions expressing movement paths of the same object falling off a table (Figure 1B) and classifier constructions expressing the movement paths of various objects (Figure 2C) did not result in significant differences in activation, possibly because for both conditions the focus of the expression was on the movement path, rather than on the object type. At a lower threshold (t > 3.18; p < .001, uncorrected), this contrast revealed greater activation for motion classifier constructions with varied object types in the left frontal cortex (BA 46; −49, +38, +15, t = 3.38), left fusiform gyrus (BA 37; −48, −61, −13, t = 3.30), and left parahippocampal gyrus (−34, −26, −24, t = 4.24). No regions were more active for motion classifier constructions in which the object type did not vary.

Lexical Signs–Motion with Varied Object Types

Table 5 presents the local maxima of areas of increased activity when signers produced lexical signs naming objects (Figure 2B) compared with motion classifier constructions that described the paths of different objects (Figure 2C). As can be seen in Figure 6, the production of lexical signs resulted in greater neural activity in anterior temporal lobes bilaterally, whereas the production of motion classifier constructions resulted in increased activity in superior parietal and occipital cortices bilaterally.

Table 5.

Local Maxima of Areas with Increased Activation for Producing Lexical Signs in Comparison to Producing Motion Classifier Constructions with Varied Object Types

| Region | Brodmann’s Area | Side | x | y | z | t |
|---|---|---|---|---|---|---|
| Lexical Signs > Motion with Varied Object Types | | | | | | |
| Superior frontal gyrus | BA 9/10 | L | −28 | +55 | +26 | −5.05 |
| Ventromedial pFC | BA 11 | R | +3 | +42 | +1 | −10.24 |
| STS | BA 22 | L | −65 | −28 | +4 | −7.13 |
| | BA 21 | L | −53 | −7 | −8 | −7.22 |
| Hippocampus | | L | −34 | −29 | −8 | −5.59 |
| Anterior temporal pole | BA 38 | R | +41 | +9 | −35 | −6.77 |
| | BA 38 | R | +48 | +3 | −20 | −8.49 |
| Inferior parietal lobule | BA 40 | R | +59 | −54 | +34 | −5.10 |
| Precuneus/cingulate | BA 7 | | 0 | −45 | +34 | −4.63 |
| Medial orbital gyrus | BA 25 | L | −10 | +10 | −11 | −7.00 |
| Motion with Varied Object Types > Lexical Signs | | | | | | |
| Precentral gyrus | BA 6 | L | −58 | +6 | +30 | 5.56 |
| | | R | +57 | +7 | +34 | 6.18 |
| Superior frontal gyrus | BA 6 | L | −25 | −2 | +62 | 7.54 |
| Middle frontal gyrus | BA 6 | R | +30 | 0 | +55 | 7.92 |
| SPL | BA 7 | L | −16 | −66 | +56 | 11.63 |
| | | R | +20 | −63 | +54 | 12.22 |
| SPL/intraparietal sulcus | BA 7 | L | −30 | −46 | +50 | 9.40 |
| | | R | +30 | −76 | +26 | 8.10 |
| Intraparietal sulcus | BA 39 | L | −26 | −80 | +21 | 9.95 |
| Lingual gyrus | BA 18 | R | +5 | −78 | −2 | 9.54 |

Results are from a whole-brain analysis (critical t(94) = 4.57, p < .05, corrected).

Figure 6.

Illustration of the comparison between lexical signs and motion classifier constructions expressing the movement paths of different object types (see Figure 2B and C), which revealed (A) greater activity in ATLs for lexical signs and (B) greater activity in bilateral superior parietal cortex for motion classifier constructions. The color overlay indicates t statistic values where there is a significant increase in activity associated with the contrast (p < .05, corrected).

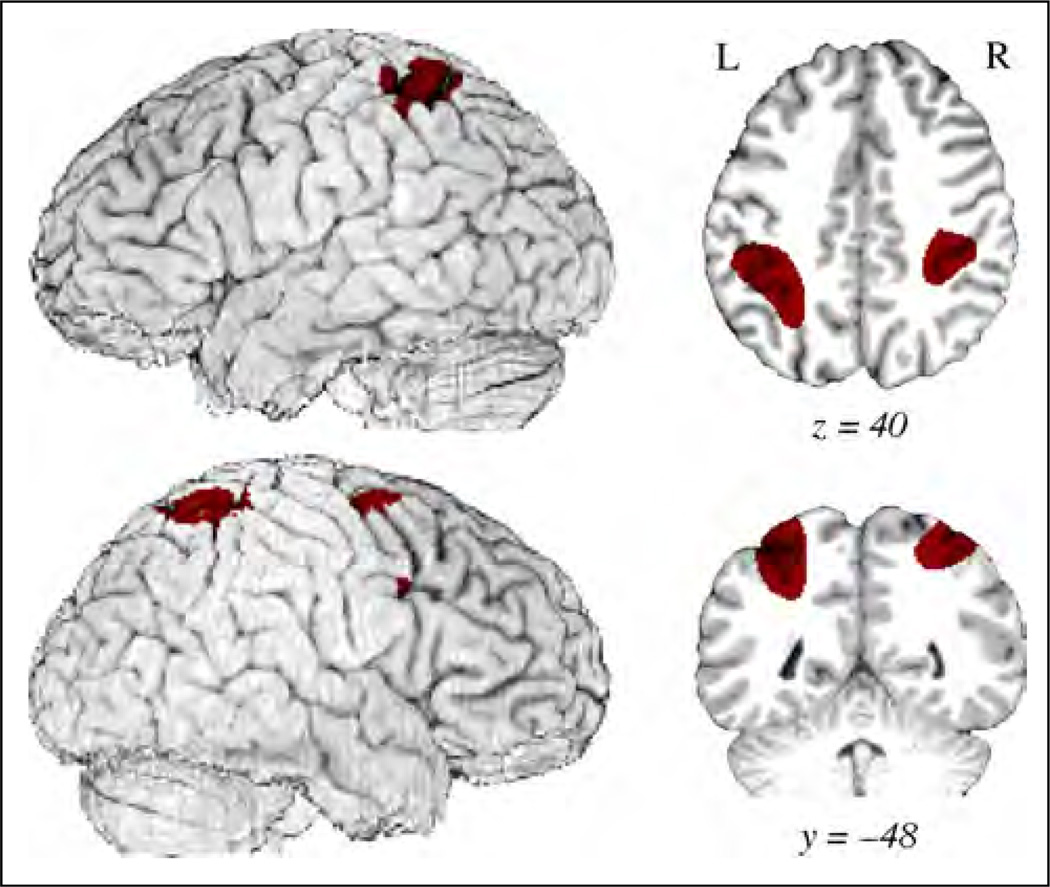

Conjunction Analysis

To test for common activation across all classifier conditions (Figures 1A, B and 2A, C) relative to lexical signs (Figure 2B), a conjunction analysis was performed using the minimum statistic to test the conjunction null hypothesis (Nichols, Brett, Andersson, Wager, & Poline, 2005). This type of conjunction analysis is by nature conservative as it requires identified voxels to be independently significant for each contrast. The results are shown in Table 6 and Figure 7. Common activation for locative and motion classifier constructions was observed in right premotor cortex (BA 6, BA 9) and in bilateral superior parietal cortex along the intraparietal sulcus.
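The logic of a minimum-statistic conjunction can be illustrated with a short sketch: for each voxel, take the smallest t value across the contrasts and keep the voxel only if that minimum exceeds the critical value. The t values below are hypothetical toy numbers, not data from this study. The function name is illustrative, and the use of the whole-brain critical t of 4.57 as the conjunction threshold is an assumption made for the example.

```python
def conjunction_min_stat(t_maps, critical_t):
    """Minimum-statistic conjunction across contrasts.

    t_maps: list of equally long lists, one per contrast, holding the
            t value of each voxel for that contrast.
    Returns (min_t, mask): the voxelwise minimum t and a boolean list that
    is True only where every contrast exceeds critical_t.
    """
    if not t_maps:
        raise ValueError("need at least one contrast map")
    n_voxels = len(t_maps[0])
    if any(len(m) != n_voxels for m in t_maps):
        raise ValueError("all maps must cover the same voxels")
    # zip(*t_maps) groups the t values of each voxel across contrasts
    min_t = [min(values) for values in zip(*t_maps)]
    mask = [t > critical_t for t in min_t]
    return min_t, mask

# Hypothetical t values for four voxels in four contrasts
# (each classifier condition > lexical signs); illustrative only.
toy_maps = [
    [5.2, 6.1, 3.0, 7.4],
    [4.9, 2.2, 5.5, 6.8],
    [6.3, 5.0, 4.8, 5.1],
    [4.6, 5.9, 5.0, 9.0],
]
CRITICAL_T = 4.57  # assumption: same corrected threshold as the table notes

min_t, mask = conjunction_min_stat(toy_maps, CRITICAL_T)
print(min_t)  # [4.6, 2.2, 3.0, 5.1]
print(mask)   # [True, False, False, True]
```

Voxels 2 and 3 in the toy data are strongly active in most contrasts but fail in one, so they are excluded. This is why the approach is conservative.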

Table 6.

Center of Mass Maxima for the Conjunction Results Common to All Classifier Constructions Contrasted with Lexical Signs (p < .05, Corrected)

| Region | Brodmann’s Area | Side | x | y | z |
|---|---|---|---|---|---|
| Middle frontal gyrus | BA 6 | R | +31 | 0 | +55 |
| IFG | BA 9 | R | +48 | +5 | +42 |
| Intraparietal sulcus/SPL | BA 40/BA 7 | L | −33 | −43 | +47 |
| | | R | +33 | −41 | +49 |

Figure 7.

Illustration of the conjunction results for all classifier constructions compared with lexical signs. The color overlay denotes regions of significantly greater activation common to all classifier constructions as compared with lexical signs (p < .05, corrected). Regions include bilateral superior parietal cortex/intraparietal sulcus and right premotor cortex (inferior and middle frontal gyri).

DISCUSSION

The visual–manual modality of signed languages affords the bodily use of peripersonal space and hand movements to depict the location and motion of objects, typically expressed via classifier constructions in which the handshape represents the type of object. We investigated whether expression of different components within a classifier construction engaged distinct neural regions and how the production of classifier constructions differs from the production of lexical signs for objects. As can be seen in Figure 3, producing classifier constructions that depict the location of an object engaged the posterior superior parietal lobule (SPL) bilaterally, whereas the production of classifier handshape morphemes that express object type differentially engaged left IFG. Similarly, the contrast between lexical signs and classifier constructions depicting the location or movement of an object revealed greater activation within left IFG for lexical signs (Figure 4A) and greater activation in bilateral SPL for classifier constructions (Figure 4B), replicating the results of Emmorey et al. (2002) and Emmorey et al. (2005). These results also extend our previous findings by showing that the same parietal regions are engaged when signers express spatial locations in response to a set of graded figure-ground locations, as well as to the set of more “categorical” location representations used in Emmorey et al. (2002, 2005) in which each stimulus represented a specific location expressed by an English preposition (e.g., in, on, above, under, beside, etc.).

The SPL is known to be involved in the on-line control and programming of reach movements to target locations in space (e.g., Striemer, Chouinard, & Goodale, 2011; Glover, 2004) and in the control of visual spatial attention, particularly right SPL (e.g., Molenberghs, Mesulam, Peeters, & Vandenberghe, 2007; Corbetta, Shulman, Miezin, & Petersen, 1995). To accurately express the location of a figure object, such as the clock in Figure 1A, signers must attend to the spatial location of the object in the scene, transform this visual representation into a body-centered reference frame, and reach toward the target location with respect to the nondominant hand, which depicts the (non-changing) location of the ground object (the table). Spatial attention, visuomotor transformation, and on-line control of reach movements are all functions that have been attributed to superior parietal cortex (particularly, medial and posterior regions of SPL). We suggest that the gradient expression of spatial locations in signing space engages these parietal functions because of the biology of linguistic expression: the hand, unlike the tongue, can move to specific locations in front of the body to iconically depict spatial locations. Furthermore, right SPL involvement in these functions may explain why signers with RHD exhibit deficits in the production of classifier constructions (e.g., Hickok et al., 2009; Atkinson et al., 2005; Emmorey, Corina, & Bellugi, 1995; Poizner, Klima, & Bellugi, 1987).

When signers produced different classifier handshape morphemes, we observed greater activation in left IFG, as evidenced by the contrast between object type and locative classifier constructions (Figure 3B) and the contrast between motion classifier constructions with varied object types and those with a single object (although this latter result was only significant at a lower threshold). This finding supports the hypothesis that the production of classifier handshape morphemes involves lexical retrieval/selection and phonological encoding, processes associated with the left IFG (e.g., Indefrey & Levelt, 2004; Thompson-Schill, D’Esposito, Aguirre, & Farah, 1997). The finding further supports the hypothesis that classifier handshapes, unlike locations or movements, are categorical morphemes that must be accessed via left hemisphere language regions. In addition, these contrasts revealed greater activation in left IT cortex for the production of object type morphemes, although the difference was relatively weak, with a small spatial extent (Figure 3B) or significant only at a lower threshold. To select the correct classifier handshape morpheme, participants must recognize each object in the scene, and visual object recognition is a function primarily associated with IT cortex (see DiCarlo, Zoccolan, & Rust, 2012, for a review). The finding that activation was left lateralized supports the hypothesis that left IT mediates between object recognition and lexical retrieval (Tranel et al., 2005; Damasio et al., 2004). Finally, this pattern of results suggests that the production of classifier handshape morphemes is not solely dependent upon visual object processing within lateral IT cortex, despite the iconic representation of object form.

As expected, lexical signs engaged left IFG to a greater extent than classifier constructions expressing the location/motion of a single object (Figure 4A). Although lexical signs are produced with movements of the hand to different locations in space or on the body, such locations are retrieved as part of a stored phonological representation, and gradient changes in production are not (generally) associated with gradient changes in meaning. Importantly, the contrast between lexical signs and classifier constructions expressing the movement of different objects (requiring retrieval of different classifier handshape morphemes) did not reveal greater activation in left IFG for lexical signs (Figure 6A; Table 5). This null result is expected if both lexical signs and classifier morphemes have stored lexical representations that must be retrieved and selected for picture naming and scene description tasks.

Interestingly, we observed greater activation in the anterior temporal lobes (ATLs) for lexical signs compared with location and motion classifiers (Figures 4A and 6A). Similarly, the contrast between lexical signs and object type classifier constructions revealed greater activation in right ATL for lexical signs (Figure 5B). We note that PET imaging is not subject to the susceptibility artifacts that can lead to a loss in signal from the ATLs for fMRI studies (i.e., spatial distortions and signal drop-off near the sinuses; Visser, Jefferies, & Lambon Ralph, 2009; Devlin et al., 2000). Our findings are particularly intriguing because several studies report greater ATL activation for specific-level concepts (e.g., identifying an animal as a robin) than for basic level concepts (e.g., identifying an animal as a bird; Rogers et al., 2006; Tyler et al., 2004). Lexical signs encode object information at a much more specific level than object type classifier morphemes. Such morphemes encode the shape of an object (e.g., long and thin; cylindrical) or the semantic category of an object (a vehicle; an airplane), whereas lexical signs identify the particular object at the basic level (e.g., a carrot, a bottle, a bicycle).

Some researchers have suggested that there is a topographic gradient along the IT cortex such that unique concepts (e.g., familiar persons/buildings) are represented in the anterior temporal pole and more general concepts are represented along posterior IT (e.g., Grabowski et al., 2001; Martin & Chao, 2001). Our results are partially consistent with this view: naming objects with more specific lexical signs engaged anterior temporal cortex, relative to the production of classifier constructions that express only the general type of object. However, under the specificity gradient hypothesis, we might expect greater activation in posterior IT for object type classifiers than for lexical signs, which was not observed. Our results are also consistent with the semantic “hub” hypothesis, which posits that the ATLs function to link together conceptual information from different modalities (vision, audition, somatosensory, motor) to create amodal, domain general representations of concepts (Lambon Ralph & Patterson, 2008; Patterson, Nestor, & Rogers, 2007; Rogers et al., 2004). Specifically, Patterson et al. (2007) proposed that the ATLs encode similarity relations among various different concepts such that semantically related items (e.g., different types of vehicles) are coded with similar patterns across ATL neurons. Thus, naming a specific instance of a category (e.g., a bicycle) requires the ATLs to instantiate a specific representation to distinguish the target concept from semantically similar concepts (e.g., a motorcycle, a car). In contrast, to “name” the same object with a classifier handshape morpheme does not require a specific representation of the object, and the ATLs need only instantiate a representation that is sufficiently “vehicle-like.” Patterson et al. (2007) predict a stronger metabolic response in the ATLs for naming tasks that require differentiation among overlapping semantic representations.

As seen in Figure 5B, the direct contrast between lexical signs and object type classifier morphemes yielded greater activation for lexical signs only in right ATL. The explanation for this laterality effect is not completely clear. The preponderance of evidence from patient and neuroimaging studies indicates bilateral ATL involvement in semantic representation, with a bias toward the left hemisphere for tasks that require linguistic processing and toward the right hemisphere for tasks that involve emotion processing or face recognition (see Wong & Gallate, 2012, for a review). It is possible that retrieval of object type morphemes activated only left ATL whereas retrieval of lexical signs activated ATL bilaterally. Object type classifiers represent a closed class set of bound morphemes (i.e., they must occur within a verbal predicate), and they often function like pronouns (Sandler & Lillo-Martin, 2006). Therefore, the semantic representations of object type morphemes may be more strongly lateralized to the left hemisphere than open class, content signs.

As shown in Figure 5A, when contrasted with lexical signs, object type classifier constructions activated superior parietal cortex bilaterally and a region in the left precentral sulcus. It is not clear why we observed differential activation in the left precentral sulcus for the object type classifier constructions. The articulation of these constructions differs from lexical signs with respect to coordination of the two hands because the right and left hands represent the location of the figure and ground objects, respectively, whereas for two-handed lexical signs (58% of the signs), the location and configuration of the two hands are not phonologically and semantically independent (e.g., the left hand often mirrors the right and can sometimes be dropped; Padden & Perlmutter, 1987; Battison, 1978). It is possible that this difference in bimanual coordination recruits left precentral cortex to a greater extent for classifier constructions. We suggest that the difference in parietal activation is related to the spatial semantics of the object type classifier constructions—for this condition, signers indicated that an object of a particular type was located on a table (specifically, in the middle of the table). For these constructions, the orientation of the object is indicated by the orientation of the classifier handshape. For example, in Figure 2A, the signer indicates that the bottle is upright and that the hammer is oriented lengthwise with respect to the table. For lexical signs, handshape orientation is specified phonologically and is unaffected by the orientation of the to-be-named object. We hypothesize that the need to specify object orientation with respect to the tabletop engaged bilateral parietal cortex for object type classifier constructions.

The direct contrast between location and motion classifier constructions revealed differences in the left parietal activation, although only at an uncorrected threshold (Table 2). The expression of spatial locations differentially activated the intraparietal sulcus (extending into IPL), whereas the expression of movement paths differentially activated medial SPL. This latter result is consistent with the findings of Wu, Morganti, and Chatterjee (2008), who reported greater activation in the left medial SPL for selective attention to movement path (as opposed to manner of motion). Greater activation within medial SPL may reflect enhanced attention to the spatial configuration of the trajectories that indicated movement path in the picture (Vandenberghe, Molenberghs, & Gillebert, 2012; Vandenberghe, Gitelman, Parrish, & Mesulam, 2001). For location expressions, McCullough et al. (2012) recently reported greater activation in left inferior parietal cortex (near the IPS) when ASL signers comprehended sentences containing “static” location classifier constructions (e.g., “The deer slept along the hillside,” in which a legged-classifier handshape is placed at a location in space representing the hillside) compared with matched ASL sentences with motion classifier constructions (e.g., “The deer walked along the hillside,” in which a legged-classifier handshape is moved along the location of the hillside in signing space). MacSweeney et al. (2002) reported similar left parietal activation when users of BSL comprehended “topographic” sentences (sentences that expressed spatial relationships using classifier constructions and signing space) compared with “nontopographic” sentences. Crucially, hearing English speakers showed no differences in parietal activation when comprehending English versions of the two sentence types (Saygin, McCullough, Alac, & Emmorey, 2010; MacSweeney et al., 2002). Left parietal cortex (adjacent to and within the left IPS) may be uniquely engaged for comprehending and expressing spatial relationships for sign languages because the precise configuration of the hands in space, rather than a preposition or other closed-class morpheme, must be mapped to the perceptual (and conceptual) representation of the spatial relationship between figure and ground objects.

Finally, the pattern of superior parietal activation (following the intraparietal sulcus) that was common to all classifier constructions (Figure 7) contrasts with what has been observed for spatial language in spoken languages because we did not find that the SMG was strongly activated for classifier constructions—although SPL activation did extend into SMG. This result is consistent with the findings of Emmorey et al. (2005), who reported that the production of English prepositions engaged the left SMG to a greater extent than locative classifier constructions for hearing ASL–English bilinguals. Left superior rather than inferior parietal cortex may be more engaged for spatial expressions in sign language because of the on-line control of reaching movements and the visuomotor transformation required to translate visual representations into the body-centered reference frame required to express location and motion within a classifier construction. For spoken language, the right SMG is hypothesized to be involved in the abstraction of categorical locative relations from a spatial scene (Amorapanth et al., 2012; Damasio et al., 2001). For signed languages, right superior rather than inferior parietal cortex may be engaged because schematic locative representations must map to an analogue, rather than a categorical linguistic representation of spatial information.

However, the interpretation of our results is somewhat limited by the lack of data from spoken languages that express positional or locative information using classificatory verbs or verbal classifiers, such as Native American or Amazonian languages. Although recent research has shown that noun classifiers in Chinese engage the left IFG (Chou, Lee, Hung, & Chen, 2012), these morphemes obligatorily accompany numbers or demonstratives (this, that) in a noun phrase and do not appear in predicates that express spatial information. Verbal classifiers are relatively rare in the world’s spoken languages (Grinevald, 2003), and the parallel between verbal classifiers and classifier constructions in sign languages has been questioned (Hoza, 2012; Schembri, 2003). Nonetheless, it is unknown whether right parietal cortex might be recruited for spoken languages with positional or locative morphemes that indicate the orientation or shape of a figure object in a spatial relation. For example, in the Mayan language Tzeltal, the dispositional adjectives waxal- and xik’il- are used with the general preposition ta to indicate that a figure object is vertically erect or leaning vertically (Brown, 2004).

In summary, the results indicate that production of both lexical signs for objects and object type classifier morphemes engages left inferior frontal cortex and left IT cortex. Lexical signs activated the ATLs to a greater extent than location and motion classifier constructions, which we hypothesize reflects the increased semantic processing required to name individual objects. Both location and motion classifier constructions activated bilateral superior parietal cortex, with some evidence that the expression of spatial locations differentially activated left intraparietal sulcus. We conclude that the bilateral engagement of superior parietal cortex reflects the biological underpinnings of spatial language in signed language and supports the hypothesis that, unlike classifier handshape morphemes, the location and movements within these constructions are not categorical morphemes that are selected and retrieved via left hemisphere language regions.

Acknowledgments

This research was supported by NIH grants R01 DC006708 and R01 DC010997 awarded to Karen Emmorey and San Diego State University. We would like to thank Joel Bruss, Jocelyn Cole, Franco Korpics, Erica Parker, Jill Weisberg, and the University of Iowa PET Center nurses and staff for their assistance with the study. We also thank all of the Deaf participants who made this research possible.

REFERENCES

- Amorapanth P, Kranjec A, Bromberger B, Lehet M, Widick P, Woods AJ, et al. Language, perception, and the schematic representation of spatial relations. Brain and Language. 2012;120:226–236. doi: 10.1016/j.bandl.2011.09.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Atkinson J, Marshall J, Woll B, Thacker A. Testing comprehension abilities in users of British Sign Language after CVA. Brain and Language. 2005;94:233–248. doi: 10.1016/j.bandl.2004.12.008. [DOI] [PubMed] [Google Scholar]

- Bates E, Andonova E, D’Amico S, Jacobsen T, Kohnert K, Lu C-C, et al. Introducing the CRL International Picture-Naming Project (CRL-IPNP) Center for Research in Language Newsletter. 2000;12:1–4. [Google Scholar]

- Battison R. Lexical borrowing in American Sign Language. Silver Spring, MD: Linstok Press; 1978. [Google Scholar]

- Brown P. The INs and ONs of Tzeltal locative expressions. Linguistics. 2004;32:743–790. [Google Scholar]

- Chou T-I, Lee S-H, Hung S-M, Chen H-C. The role of inferior frontal gyrus in processing Chinese classifiers. Neuropsychologia. 2012;50:1408–1415. doi: 10.1016/j.neuropsychologia.2012.02.025. [DOI] [PubMed] [Google Scholar]

- Corbetta M, Shulman G, Miezin F, Petersen S. Superior parietal cortex activation during spatial attention shifts and visual feature conjunction. Science. 1995;270:802–805. doi: 10.1126/science.270.5237.802. [DOI] [PubMed] [Google Scholar]

- Damasio H, Grabowski T, Tranel D, Ponto L, Hichwa R, Damasio AR. Neural correlates of naming actions and of naming spatial relations. Neuroimage. 2001;13:1053–1064. doi: 10.1006/nimg.2001.0775. [DOI] [PubMed] [Google Scholar]

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001. [DOI] [PubMed] [Google Scholar]

- Devlin JT, Russell RP, Davis MH, Price CJ, Wilson J, Moss HE, et al. Susceptibility-induced loss of signal: Comparing PET and fMRI on a semantic task. Neuroimage. 2000;11:589–600. doi: 10.1006/nimg.2000.0595. [DOI] [PubMed] [Google Scholar]

- DiCarlo JJ, Zoccolan D, Rust NC. How does the brain solve visual object recognitin? Neuron. 2012;73:415–434. doi: 10.1016/j.neuron.2012.01.010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Erlbaum; 2003. [Google Scholar]

- Emmorey K, Corina D, Bellugi U. Differential processing of topographic and referential functions of space. In: Emmorey K, Reilly J, editors. Language, gesture, and space. Hillsdale, NJ: Erlbaum; 1995. pp. 43–62. [Google Scholar]

- Emmorey K, Damasio H, McCullough S, Grabowski T, Ponto L, Hichwa R, et al. Neural systems underlying spatial language in American Sign Language. Neuroimage. 2002;17:812–824. [PubMed] [Google Scholar]

- Emmorey K, Grabowski TJ, McCullough S, Ponto L, Hichwa R, Damasio H. The neural correlates of spatial language in English and American Sign Language: A PET study with hearing bilinguals. Neuroimage. 2005;24:832–840. doi: 10.1016/j.neuroimage.2004.10.008. [DOI] [PubMed] [Google Scholar]

- Emmorey K, Herzig M. Categorical versus gradient properties of classifier constructions in ASL. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah: NJ: Erlbaum; 2003. pp. 222–246. [Google Scholar]

- Emmorey K, McCullough S, Mehta S, Ponto LB, Grabowski T. Sign language and pantomime production differentially engage frontal and parietal cortices. Language and Cognitive Processes. 2011;26:878–901. doi: 10.1080/01690965.2010.492643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Frank RJ, Damasio H, Grabowski TJ. Brainvox: An interactive, multimodal visualization and analysis system for neuroanatomical imaging. Neuroimage. 1997;5:13–30. doi: 10.1006/nimg.1996.0250. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Holmes AP, Worsley KJ, Poline J-B, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: A general linear approach. Human Brain Mapping. 1995;2:189–210. [Google Scholar]

- Glover S. Separate visual representations in the planning and control of action. Behavioral and Brain Sciences. 2004;27:3–78. doi: 10.1017/s0140525x04000020. [DOI] [PubMed] [Google Scholar]

- Grabowski TJ, Damasio H, Frank R, Hichwa RD, Ponto LL, Watkins GL. A new technique for PET slice orientation and MRI-PET coregistration. Human Brain Mapping. 1995;2:123–133. [Google Scholar]

- Grabowski TJ, Damasio H, Tranel D, Ponto LL, Hichwa RD, Damasio AR. A role for the left temporal pole in the retrieval of words for unique entities. Human Brain Mapping. 2001;13:199–212. doi: 10.1002/hbm.1033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Grabowski TJ, Frank R, Brown CK, Damasio H, Boles Ponto LL, Watkins GL, et al. Reliability of PET activation across statistical methods, subject groups, and sample sizes. Human Brain Mapping. 1996;4:23–46. doi: 10.1002/(SICI)1097-0193(1996)4:1<23::AID-HBM2>3.0.CO;2-R. [DOI] [PubMed] [Google Scholar]

- Grabowski TJ, Frank RJ, Szumski NR, Brown CK, Damasio H. Validation of partial tissue segmentation of single-channel magnetic resonance images. Neuroimage. 2000;12:640–656. doi: 10.1006/nimg.2000.0649. [DOI] [PubMed] [Google Scholar]

- Grinevald C. Classifier systems in the context of a typology of nominal classification. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Erlbaum; 2003. pp. 91–109. [Google Scholar]

- Herscovitch P, Markham J, Raichle ME. Brain blood flow measured with intravenous H₂¹⁵O. I. Theory and error analysis. Journal of Nuclear Medicine. 1983;24:782–789. [PubMed] [Google Scholar]

- Hichwa RD, Ponto LL, Watkins GL. Clinical blood flow measurement with [15O]water and positron emission tomography (PET). In: Emran AM, editor. Chemists’ views of imaging centers, symposium proceedings of the International Symposium on Chemists’ Views of Imaging Centers. New York: Plenum; 1995. pp. 401–417. [Google Scholar]

- Hickok G, Pickell H, Klima E, Bellugi U. Neural dissociation in the production of lexical versus classifier signs in ASL: Distinct patterns of hemispheric asymmetry. Neuropsychologia. 2009;47:382–387. doi: 10.1016/j.neuropsychologia.2008.09.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hoza J. The introduction of new terminology and the death of classifiers? Journal of Deaf Studies and Deaf Education. 2012;17:386. [Google Scholar]

- Indefrey P, Levelt WJM. The spatial and temporal signatures of word production components. Cognition. 2004;92:101–144. doi: 10.1016/j.cognition.2002.06.001. [DOI] [PubMed] [Google Scholar]

- Jenkinson M, Bannister PR, Brady JM, Smith SM. Improved optimisation for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8. [DOI] [PubMed] [Google Scholar]

- Jenkinson M, Smith SM. A global optimization method for robust affine registration of brain images. Medical Image Analysis. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6. [DOI] [PubMed] [Google Scholar]

- Kemmerer D. The semantics of space: Integrating linguistic typology and cognitive neuroscience. Neuropsychologia. 2006;44:1607–1621. doi: 10.1016/j.neuropsychologia.2006.01.025. [DOI] [PubMed] [Google Scholar]

- Lambon Ralph MA, Patterson K. Generalization and differentiation in semantic memory: Insights from semantic dementia. Annals of the New York Academy of Sciences. 2008;1124:61–76. doi: 10.1196/annals.1440.006. [DOI] [PubMed] [Google Scholar]

- Liddell SK. Grammar, gesture, and meaning in American Sign Language. Cambridge, UK: Cambridge University Press; 2003. [Google Scholar]

- MacSweeney M, Woll B, Campbell R, Calvert GA, McGuire P, David A, et al. Neural correlates of British Sign Language comprehension: Spatial processing demands of topographic language. Journal of Cognitive Neuroscience. 2002;14:1064–1075. doi: 10.1162/089892902320474517. [DOI] [PubMed] [Google Scholar]

- Martin A, Chao LL. Semantic memory and the brain: Structure and processes. Current Opinion in Neurobiology. 2001;11:194–201. doi: 10.1016/s0959-4388(00)00196-3. [DOI] [PubMed] [Google Scholar]

- McCullough S, Saygin AP, Korpics K, Emmorey K. Motion-sensitive cortex and motion semantics in American Sign Language. Neuroimage. 2012;63:111–118. doi: 10.1016/j.neuroimage.2012.06.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller AJ. Least squares routines to supplement those of Gentleman [AS 274]. Applied Statistics. 1991;41:458–478. [Google Scholar]

- Molenberghs P, Mesulam M, Peeters R, Vandenberghe R. Re-mapping attentional priorities: Differential contribution of superior parietal lobule and intraparietal sulcus. Cerebral Cortex. 2007;17:2703–2712. doi: 10.1093/cercor/bhl179. [DOI] [PubMed] [Google Scholar]

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25:653–660. doi: 10.1016/j.neuroimage.2004.12.005. [DOI] [PubMed] [Google Scholar]

- Noordzij ML, Neggers SFW, Ramsey NF, Postma A. Neural correlates of locative prepositions. Neuropsychologia. 2008;46:1576–1580. doi: 10.1016/j.neuropsychologia.2007.12.022. [DOI] [PubMed] [Google Scholar]

- Padden C, Perlmutter D. American Sign Language and the architecture of phonological theory. Natural Language & Linguistic Theory. 1987;5:335–375. [Google Scholar]

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–987. doi: 10.1038/nrn2277. [DOI] [PubMed] [Google Scholar]

- Poizner H, Klima ES, Bellugi U. What the hands reveal about the brain. Cambridge, MA: MIT Press; 1987. [Google Scholar]

- Rogers TT, Hocking J, Noppeney U, Mechelli A, Gorno-Tempini ML, Patterson K, et al. Anterior temporal cortex and semantic memory: Reconciling findings from neuropsychology and functional imaging. Cognitive, Affective, and Behavioral Neuroscience. 2006;6:201–213. doi: 10.3758/cabn.6.3.201. [DOI] [PubMed] [Google Scholar]

- Rogers TT, Lambon Ralph MA, Garrard P, Bozeat S, McClelland JL, Hodges JR, et al. Structure and deterioration of semantic memory: A neuropsychological and computational investigation. Psychological Review. 2004;111:205–235. doi: 10.1037/0033-295X.111.1.205. [DOI] [PubMed] [Google Scholar]

- Sandler W, Lillo-Martin D. Sign language and linguistic universals. Cambridge, UK: Cambridge University Press; 2006. [Google Scholar]

- Saygin A, McCullough S, Alac M, Emmorey K. Modulation of BOLD response in motion sensitive lateral temporal cortex by real and fictive motion sentences. Journal of Cognitive Neuroscience. 2010;22:2480–2490. doi: 10.1162/jocn.2009.21388. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schembri A. Rethinking “classifiers” in signed languages. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Erlbaum; 2003. pp. 3–34. [Google Scholar]

- Schembri A, Jones C, Burnham D. Comparing action gestures and classifier verbs of motion: Evidence from Australian Sign Language, Taiwan Sign Language, and nonsigners’ gestures without speech. Journal of Deaf Studies and Deaf Education. 2005;10:272–290. doi: 10.1093/deafed/eni029. [DOI] [PubMed] [Google Scholar]

- Schwartz C. Discrete vs. continuous encoding in American Sign Language and nonlinguistic gestures. Unpublished manuscript; University of California San Diego: 1979. [Google Scholar]

- Striemer CL, Chouinard PA, Goodale MA. Programs for action in superior parietal cortex: A triple-pulse TMS investigation. Neuropsychologia. 2011;49:2391–2399. doi: 10.1016/j.neuropsychologia.2011.04.015. [DOI] [PubMed] [Google Scholar]

- Supalla T. Morphology of verbs of motion and location in American Sign Language. In: Caccamise F, editor. American Sign Language in a bilingual, bicultural context: Proceedings of the National Symposium on Sign Language Research and Teaching. Silver Spring, MD: National Association of the Deaf; 1978. pp. 27–45. [Google Scholar]

- Supalla T. The classifier system in American Sign Language. In: Craig C, editor. Noun classes and categorization. Philadelphia, PA: John Benjamins; 1986. pp. 181–214. [Google Scholar]

- Supalla T. Revisiting visual analogy in ASL classifier predicates. In: Emmorey K, editor. Perspectives on classifier constructions in signed languages. Mahwah, NJ: Erlbaum; 2003. pp. 249–257. [Google Scholar]

- Székely A, D’Amico S, Devescovi A, Federmeier K, Herron D, Iyer G, et al. Timed picture naming: Extended norms and validation against previous studies. Behavior Research Methods, Instruments & Computers. 2003;35:621–633. doi: 10.3758/bf03195542. [DOI] [PubMed] [Google Scholar]

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988. [Google Scholar]

- Thompson-Schill SL, D’Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: A reevaluation. Proceedings of the National Academy of Sciences, U.S.A. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tranel D, Grabowski TJ, Lyon J, Damasio H. Naming the same entities from visual or from auditory stimulation engages similar regions of left inferotemporal cortex. Journal of Cognitive Neuroscience. 2005;17:1293–1305. doi: 10.1162/0898929055002508. [DOI] [PubMed] [Google Scholar]

- Tranel D, Kemmerer D. Neuroanatomical correlates of locative prepositions. Cognitive Neuropsychology. 2004;21:719–749. doi: 10.1080/02643290342000627. [DOI] [PubMed] [Google Scholar]

- Tyler LK, Stamatakis EA, Bright P, Acres K, Abdallah S, Rodd JM, et al. Processing objects at different levels of specificity. Journal of Cognitive Neuroscience. 2004;16:351–362. doi: 10.1162/089892904322926692. [DOI] [PubMed] [Google Scholar]

- Vandenberghe R, Gitelman D, Parrish T, Mesulam M. Functional specificity of superior parietal mediation of spatial shifting. Neuroimage. 2001;14:661–673. doi: 10.1006/nimg.2001.0860. [DOI] [PubMed] [Google Scholar]

- Vandenberghe R, Molenberghs P, Gillebert CR. Spatial attention deficits in humans: The critical role of superior compared to inferior parietal lesions. Neuropsychologia. 2012;50:1092–1103. doi: 10.1016/j.neuropsychologia.2011.12.016. [DOI] [PubMed] [Google Scholar]

- Visser M, Jefferies E, Lambon Ralph MA. Semantic processing in the anterior temporal lobes: A meta-analysis of the functional neuroimaging literature. Journal of Cognitive Neuroscience. 2009;22:1083–1094. doi: 10.1162/jocn.2009.21309. [DOI] [PubMed] [Google Scholar]

- Wong C, Gallate J. The function of the anterior temporal lobe: A review of the evidence. Brain Research. 2012;1449:94–116. doi: 10.1016/j.brainres.2012.02.017. [DOI] [PubMed] [Google Scholar]

- Woods RP, Dapretto M, Sicotte NL, Toga AW, Mazziotta JC. Creation and use of a Talairach-compatible atlas for accurate, automated, nonlinear intersubject registration, and analysis of functional imaging data. Human Brain Mapping. 1999;8:73–79. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<73::AID-HBM1>3.0.CO;2-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Worsley KJ. Local maxima and the expected Euler characteristic of excursion sets of chi-squared, F and t fields. Advances in Applied Probability. 1994;26:13–42. [Google Scholar]

- Worsley KJ, Evans AC, Marrett S, Neelin P. A three-dimensional statistical analysis for CBF activation studies in human brain. Journal of Cerebral Blood Flow & Metabolism. 1992;12:900–918. doi: 10.1038/jcbfm.1992.127. [DOI] [PubMed] [Google Scholar]

- Wu DH, Morganti A, Chatterjee A. Neural substrates of processing path and manner information of a moving event. Neuropsychologia. 2008;46:704–713. doi: 10.1016/j.neuropsychologia.2007.09.016. [DOI] [PMC free article] [PubMed] [Google Scholar]