Abstract

Gain control is essential for the proper function of any sensory system. However, the precise mechanisms for achieving effective gain control in the brain are unknown. Based on our understanding of the existence and strength of connections in the insect olfactory system, we analyze the conditions that lead to controlled gain in a randomly connected network of excitatory and inhibitory neurons. We consider two scenarios for the variation of input into the system. In the first case, the intensity of the sensory input controls the input currents to a fixed proportion of neurons of the excitatory and inhibitory populations. In the second case, increasing intensity of the sensory stimulus both recruits an increasing number of neurons that receive input and changes the input current that they receive. Using a mean field approximation for the network activity, we derive relationships between the parameters of the network that ensure that the overall level of activity of the excitatory population remains unchanged for increasing intensity of the external stimulation. We find, first, that the main parameters that regulate network gain are the probabilities of connections from the inhibitory population to the excitatory population and of the connections within the inhibitory population. Second, we show that strict gain control is not achievable in a random network in the second case, when the input recruits an increasing number of neurons. Finally, we confirm that the gain control conditions derived from the mean field approximation are valid in simulations of firing rate models and Hodgkin-Huxley conductance-based models.

Author Summary

Neural networks in the brain can classify objects as being the same thing regardless of the stimulus intensity, which is referred to as gain control. This intensity invariance occurs during pattern recognition in any sensory modality. We evaluate whether it is possible to design stable neural circuits made of excitatory and inhibitory neurons that are capable of controlling the internal representation of a stimulus using network properties alone. Gain control is important because if the activity gets out of control neurons can die or be damaged by hyper-excitation. It is known that one can control the internal representation by the saturating responses of neurons. However, we show that there also is a precise relationship of network parameters that can account for gain control regardless of the external stimulus without such saturation. The most important network parameters are the connections from the inhibitory population to the rest of the network. This is consistent with experimental findings. We also show that the connections from the excitatory to the inhibitory population do not play an important role in gain control, suggesting that they can be freed for encoding purposes without leaving the operating range of the network when levels of stimulation increase.

Introduction

In sensory perception the salient properties of signals need to be separated from their overall amplitude and, therefore, at some level in the neural processing cascade the response of neurons should become insensitive to the overall amplitude of stimulation. This is the role of gain control, which is ubiquitous for sensory processing in the brain [1]. It allows us to recognize a melody independent of how loud the music plays, identify objects in a wide range of light conditions or recognize an odorant irrespective of its concentration or our distance from the source [2].

In the olfactory system it is particularly important to distinguish odorant composition from intensity. A foraging moth or bee can visit many flowers in a day. During their foraging trips the intensity of stimulation fluctuates over a wide range of concentrations while they approach or leave their target flowers. Nevertheless, they are able to discern a preferred odor and reach their goal, consistent with perceiving the odor at different concentrations as a single perceptual object [3], [4]. This is a typical example where adjusting the organism's sensitivity by setting the appropriate sensory gain for environmental cues is critical for matching the animal's behavioral responses to its ecological needs.

Odor encoding is a spatially distributed process. Olfactory receptor neurons (ORNs) in the antennae detect odors and relay neural activity to the antennal lobe (AL). In insects, each ORN typically expresses two odorant receptor genes. One is ubiquitously expressed in all ORNs. The other is unique to subpopulations of ORNs [5], [6]. The unique receptor determines the range and intensities of odors that the ORN detects. ORNs expressing the same receptor protein send axons onto a single glomerulus in the AL [7], the first signal processing stage of the olfactory pathway. Thus, each odorant receptor gene defines a processing channel which carries information about some particular feature of the odorant stimulus. The stereotypic organization of the AL is relatively simple. Each glomerulus is innervated by about three to five uniglomerular projection neurons (PNs) which propagate the olfactory information downstream to higher brain centers. Glomeruli are anatomically connected by a network of local interneurons (LNs). The AL has both excitatory (eLN) and inhibitory (iLN) local neurons [8]–[10] and inhibitory local circuits play an important role in shaping the response of the output [11], [12]. LNs exclusively branch within the AL and therefore provide a substrate for interactions between olfactory channels.

At the first level of olfactory perception, insects are sensitive to concentration. The stronger the odor the stronger the excitation that the PNs receive and, in addition, the more glomeruli are recruited [13]–[16]. However, by recording the activity of more than 100 PNs it has been found in locusts [17] that the mean firing rate of the excitatory PN population in the AL remains nearly constant across a large range of odor concentrations. Subsequent intracellular studies of the excitatory neurons in Drosophila have confirmed these results [18] by identifying a nonlinear transformation that saturates the PN response to the ORN activation, effectively creating a situation where the level of activity of PNs is insensitive to changes in intensity. Independent evidence in bees indicates that the lateral antenno-cerebral tract (lACT), one of the olfactory pathways in bees, similarly shows very low sensitivity to concentration [19] and is therefore also likely to be subject to gain control. It has also been established using simultaneous optical and electrophysiological recordings in several glomeruli of the Drosophila antennal lobe that the PNs reach their maximum firing rate in response to various odorants at intermediate concentrations [20]. It is this regime prior to full saturation of neural responses at very high concentrations that we are addressing in this paper.

Theoretically one can explain the need for gain control in the AL [21], [22] because the next processing layer of the olfactory system, the mushroom bodies, display sparse activity [23], [24] which is a critical feature of models of associative memory [25]–[27]. But sparse coding is also very sensitive to fluctuations in input strength [21], [28]–[30] implying that the level of activity in the AL has to be carefully controlled.

A number of studies have illustrated the importance of lateral inhibitory networks for sharpening the tuning curves of PNs in response to odors [8], [11], [13], [31]–[33] and demonstrated their role in the formation of odor-specific spatio-temporal activity patterns in the AL [34]–[40]. In particular, the authors of [40] analyze how lateral inhibition normalizes the response of a PN to its presynaptic ORNs and how this type of gain control affects PN population codes for odors in Drosophila. In addition, it has been shown in a model of the bee olfactory system [41] that explicitly added gain control allows improved coding of odors and odor mixtures. In this work we analyze the structural and functional network requirements that lead to gain control that keeps the excitatory neurons within a defined narrow range of activity regardless of the stimulus intensity.

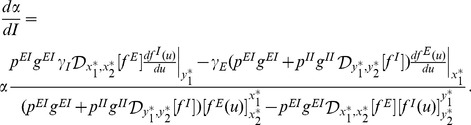

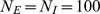

We are investigating these conditions in the framework of a model network of excitatory and inhibitory neurons inspired by the structure of the insect AL (note similar studies in the olfactory bulb [2], [42]). Since this work is of broad relevance to brain microcircuits we will refer to the PNs as the excitatory population and the inhibitory LNs as the inhibitory population in the remainder of the paper. Excitatory LNs are included only indirectly as lateral excitatory connections between neurons of the excitatory population. Excitation from ORNs will be referred to as “sensory input”. The network architecture is illustrated in Fig. 1. As we will show below, one can use a mean field approximation to derive general gain control conditions on the connectivity of the network. We then demonstrate the validity of the mean field solution in simulations of an appropriate firing rate model and a more realistic Hodgkin-Huxley type conductance based network model.

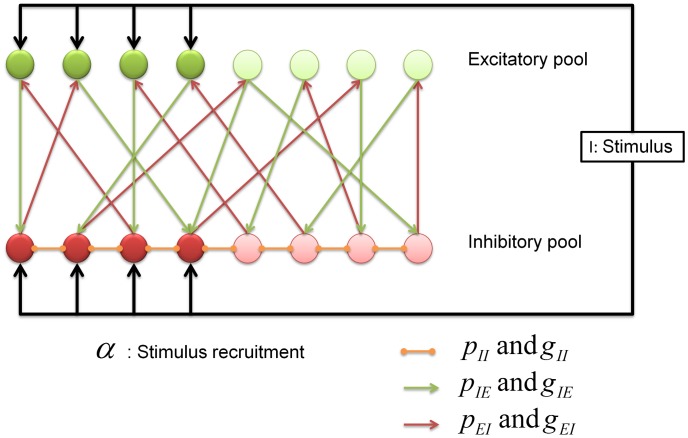

Figure 1. Schematic representation of the network architecture.

There are two populations of neurons, excitatory (green) and inhibitory (red). The inhibitory network controls the activity of the model. The input arrives into a particular subpopulation of all neurons. The fraction of neurons that receive input is denoted by  and the intensity of the external stimulation is denoted by  . The main network parameters are the probability of connections between the neurons,  and their strength  . Our theoretical results indicate that the connections from the inhibitory population to the excitatory population are most important for gain control purposes.

We consider two main cases to understand gain control in the theoretical mean field model. First, we consider the situation where increasing the external stimulus increases the level of depolarization of the neurons in the network but otherwise keeps the input, in particular the number of neurons which receive input, unchanged. In the second case we consider a scenario where an increasing number of neurons is recruited (analogous to the recruitment of more glomeruli) by the stimulus when the odor concentration increases.

Results

Mean field description

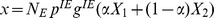

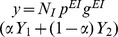

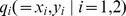

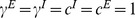

Starting from the firing rate models described in the model section, equations (29) and (30), the first step toward global conditions for robust gain control is to use mean field equations, which are exact in the limit of large network size. These are built by defining new macroscopic variables representing the groups of neurons depicted in Fig. 1 as

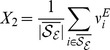

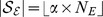

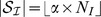

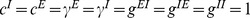

| (1) |

| (2) |

| (3) |

| (4) |

The quantities  and  respectively represent the averaged firing rates of the excitatory and inhibitory populations, which receive sensory input for a given stimulus. We do not consider excitatory LNs explicitly but allow for excitatory connections between the neurons of the excitatory population to emulate their effects on the activity of the network. The sets  and  denote the indices of the excitatory and inhibitory populations.

The size of these sets can be expressed in terms of the ‘sparseness parameter’  as  and  , where  and  are the total number of excitatory and inhibitory neurons, respectively.

For the sake of simplicity we will assume that the external input is not fluctuating and is identical for all neurons in these sets.  and  are the average activities of the excitatory and inhibitory neurons that do not receive direct input from the receptors but only laterally from the network (see Fig. 1). Their indices are denoted by  and  .

The lowest-order mean field approximation is based on the following assumption

| (5) |

where  denotes a population average and the contribution of the higher order moments of the excitatory and the inhibitory synaptic currents have been considered in the vector field functions  . These functions are smoother than the gain functions  and are derived from averaging over fluctuations in the synaptic input current  [43], see model section. Under the assumption of statistical independence between the connectivity and the activity of the network (33) and by virtue of the mean field approximation (5), we can reduce the initial microscopic field equations (29,30) to a set of four ordinary differential equations representing the average firing rate time evolution of the excitatory and inhibitory populations, separately for those that receive external input from the receptors and those that only receive input from the network.
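The smoothing effect of averaging over input fluctuations can be illustrated with a minimal sketch. It assumes a threshold-linear gain function and Gaussian fluctuations of the synaptic current, neither of which is stated in the text above; the function names `threshold_linear` and `smoothed_gain` are hypothetical. Under these assumptions the averaged response has a closed form, and it rounds off the kink at threshold.

```python
import math


def threshold_linear(current, slope=1.0, threshold=0.0):
    """Assumed single-neuron gain: zero below threshold, linear above it."""
    return slope * max(current - threshold, 0.0)


def smoothed_gain(mean_current, sigma, slope=1.0, threshold=0.0):
    """Expected threshold-linear response when the input current is Gaussian
    with the given mean and standard deviation sigma (closed-form average)."""
    if sigma == 0.0:
        return threshold_linear(mean_current, slope, threshold)
    z = (mean_current - threshold) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))           # Gaussian CDF at z
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)    # Gaussian PDF at z
    return slope * sigma * (z * cdf + pdf)


# Compare the sharp gain and its fluctuation-averaged version around threshold.
for mean_current in (-1.0, 0.0, 1.0, 3.0):
    sharp = threshold_linear(mean_current)
    smooth = smoothed_gain(mean_current, sigma=0.5)
    print(f"mean current {mean_current:+.1f}: sharp {sharp:.3f}, averaged {smooth:.3f}")
```

Far from threshold the two functions agree; near threshold the averaged function is strictly positive and differentiable, which is the property the mean field reduction relies on.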

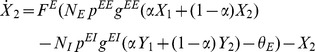

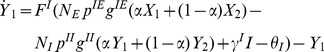

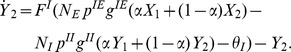

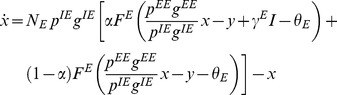

|

|

|

|

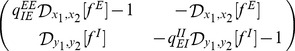

where the dot denotes the time derivative. Let us define the variables  and  which represent the average synaptic current from the PN and LN populations respectively. The parameter  denotes the efficiency of the connection between neurons of different populations and  the connection probability. With these notations we can compress the four previous equations into

| (6) |

| (7) |

We first determine when the fixed points of this system of ordinary differential equations are stable and then derive conditions that lead to gain control of the activity level of the excitatory population. Specifically, we define a gain control system as a neural network that keeps the average activity of the excitatory neurons constant over large variations in odor concentration.
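A four-population mean field system of this kind can be sketched numerically as follows. This is a minimal illustration under stated assumptions, not the paper's equations: the gain is taken to be threshold-linear, and all names (`phi` for stimulus intensity, `s` for the fraction of neurons receiving input, `w_AB` and `p_AB` for the strength and probability of connections from population B to population A, `theta_E`, `theta_I`) and all numerical values are hypothetical.

```python
def gain(current, threshold, slope=1.0):
    """Assumed threshold-linear gain: zero below threshold, linear above it."""
    return slope * max(current - threshold, 0.0)


# Illustrative parameter values (assumptions, not taken from the paper).
PARAMS = {
    "N_E": 800, "N_I": 200,          # population sizes
    "w_EE": 0.0, "p_EE": 0.1,        # E -> E (lateral excitation switched off)
    "w_EI": 0.05, "p_EI": 0.7,       # I -> E
    "w_IE": 0.01, "p_IE": 0.2,       # E -> I
    "w_II": 0.05, "p_II": 0.2,       # I -> I
    "theta_E": 0.0, "theta_I": 1.0,  # firing thresholds
}


def simulate_mean_field(phi, s, par=PARAMS, n_steps=6000, dt=0.01, tau=1.0):
    """Euler-integrate four population rates and return (x_s, x_n, y_s, y_n):
    excitatory (x) and inhibitory (y) groups with (_s) and without (_n) input."""
    x_s = x_n = y_s = y_n = 0.0
    for _ in range(n_steps):
        # Average synaptic currents generated by the two populations.
        cur_e = par["N_E"] * (s * x_s + (1.0 - s) * x_n)
        cur_i = par["N_I"] * (s * y_s + (1.0 - s) * y_n)
        drive_e = par["w_EE"] * par["p_EE"] * cur_e - par["w_EI"] * par["p_EI"] * cur_i
        drive_i = par["w_IE"] * par["p_IE"] * cur_e - par["w_II"] * par["p_II"] * cur_i
        # Only the "_s" groups receive the external input phi.
        x_s += dt / tau * (gain(drive_e + phi, par["theta_E"]) - x_s)
        x_n += dt / tau * (gain(drive_e, par["theta_E"]) - x_n)
        y_s += dt / tau * (gain(drive_i + phi, par["theta_I"]) - y_s)
        y_n += dt / tau * (gain(drive_i, par["theta_I"]) - y_n)
    return x_s, x_n, y_s, y_n


for phi in (2.0, 4.0):
    x_s, x_n, y_s, y_n = simulate_mean_field(phi, s=0.2)
    print(f"phi={phi}: x_s={x_s:.4f} x_n={x_n:.4f} y_s={y_s:.4f} y_n={y_n:.4f}")
```

With these assumed values the I-to-E connection probability is chosen so that, in this sketch, added inhibition offsets added input: the excitatory rate of the input group stays the same for both intensities while the inhibitory rate grows, and the groups without direct input remain silent.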

Stability analysis

Let us first analyze the stability of the stationary state of the mean field equations. The parameter values should be set in regions where stable fixed points are feasible. This analysis also identifies the main source of instability, which in this case is the set of excitatory connections within the excitatory population. For simplicity we define the functions

| (8) |

| (9) |

The mean field equations (6, 7) are then replaced by

| (10) |

| (11) |

where the ratio  is a measure of the effective synaptic inhibition in the network, and

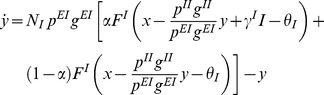

is a measure of the effective synaptic inhibition in the network, and  is a ratio of the effective synaptic excitation in the network. Using linear stability analysis it is easy to determine the stability conditions for the steady states of equations (10) and (11). The Jacobian of the system can be expressed as

is a ratio of the effective synaptic excitation in the network. Using linear stability analysis it is easy to determine the stability conditions for the steady states of equations (10) and (11). The Jacobian of the system can be expressed as

| (12) |

where  is the composite derivative operator

| (13) |

and the sub-indices  correspond to the evaluation points  ,  ,  , and  . We are primarily interested in the sign of the eigenvalues of the Jacobian

| (14) |

In the absence of lateral excitation,  or  , both eigenvalues are always negative because both  and  are monotonically increasing functions, which implies that their derivatives are non-negative. So any fixed point of the system is stable, independent of the spread of the stimulus  . If the input is stationary, the network therefore may evolve to a solution in which the population average firing rates are constant.

However, when there is some level of lateral excitation the stability conditions change for high values of the product  . For example, for  and linear gain functions with slope  , the boundary condition of stability is  , so

Beyond this level of lateral excitation between the neurons of the excitatory population, the dynamical system becomes unstable and non-functional for stimulus encoding purposes.
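The loss of stability with growing lateral excitation can be checked on a reduced two-variable example. The sketch below assumes linear gains and considers only the excitatory and inhibitory groups that receive input; `e_ab` stands for a hypothetical effective coupling from population b to population a (strength times probability times number of active presynaptic neurons). None of these names or values come from the paper.

```python
import cmath


def jacobian_eigenvalues(e_ee, e_ei, e_ie, e_ii, a_e=1.0, a_i=1.0, tau=1.0):
    """Eigenvalues of the 2x2 Jacobian of the assumed linear-gain rate system
    tau*dx/dt = -x + a_e*(e_ee*x - e_ei*y + input),
    tau*dy/dt = -y + a_i*(e_ie*x - e_ii*y + input)."""
    j11 = (-1.0 + a_e * e_ee) / tau
    j12 = -a_e * e_ei / tau
    j21 = a_i * e_ie / tau
    j22 = (-1.0 - a_i * e_ii) / tau
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def is_stable(e_ee, e_ei, e_ie, e_ii, a_e=1.0, a_i=1.0):
    """A fixed point is stable when both eigenvalues have negative real part."""
    eigs = jacobian_eigenvalues(e_ee, e_ei, e_ie, e_ii, a_e, a_i)
    return max(ev.real for ev in eigs) < 0.0


def critical_lateral_excitation(e_ei, e_ie, e_ii, a_e=1.0, a_i=1.0):
    """Smallest e_ee at which either the determinant or the trace condition fails."""
    from_det = (1.0 + a_e * a_i * e_ei * e_ie / (1.0 + a_i * e_ii)) / a_e
    from_trace = (2.0 + a_i * e_ii) / a_e
    return min(from_det, from_trace)


crit = critical_lateral_excitation(e_ei=1.4, e_ie=0.32, e_ii=0.4)
for e_ee in (0.0, crit - 0.05, crit + 0.05):
    print(f"e_ee={e_ee:.3f} stable={is_stable(e_ee, 1.4, 0.32, 0.4)}")
```

In this sketch the fixed point is always stable when `e_ee` is zero, because the trace is then negative and the determinant positive for any non-negative couplings.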

Gain control conditions

The first step in the analysis of equations (10) and (11) is to calculate the equilibrium firing rates of the excitatory and inhibitory populations. The equilibrium equations are found by setting the time derivatives to zero, leading to

| (15) |

| (16) |

Equations (15) and (16) are nonlinear implicit equations. We need to determine conditions such that

| (17) |

that is, there are no macroscopic changes in the excitatory population activity as a function of the stimulus intensity. We are going to consider two cases. First, we consider the case where increasing intensity of the external stimulus  increases the level of depolarization of the neurons, while keeping the fraction  of neurons that are receiving input constant. In the second part we will consider the case where increasing odor concentration increases both the fraction  of recruited neurons and the input current  received by them [44].

Condition for independence on the stimulus intensity

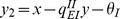

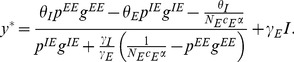

Equations (15) and (16) are implicit equations and we need to determine conditions such that  . If we differentiate equations (15) and (16) we obtain

| (18) |

| (19) |

where the derivative operators  are as defined in (13) and must be evaluated at the fixed point  . If we solve for  from equations (18) and (19) and then take into account the constraint (17), we obtain the gain control condition

| (20) |

This equation is one of the main results of our analysis. It describes a specific relationship between network parameters that must hold in order to maintain constant average PN activity over a large range of input intensities  . This relationship depends on the gain functions of the neurons through the slopes of the vector fields  and  of the excitatory and inhibitory populations at the equilibrium firing rates.

Analysis of the gain control conditions

The general gain control condition (eq. 20) may appear complex, but it can be simplified significantly in practice. Whenever a sufficiently strong stimulus is present, and if, as we assume, lateral excitation is dominated by lateral inhibition, the group of excitatory and inhibitory neurons that do not receive direct sensory input become silent due to inhibition by the increasingly responding inhibitory neurons [32], [45]. Note, however, that this does not preclude odor responses from broadening within the population of PNs that do receive input, or through PNs that are additionally recruited to receive input. The gain functions of both excitatory and inhibitory neurons below threshold are constant and hence the evaluation of equation (20) at  and  is  if they are silenced.

Moreover, the excitatory and inhibitory neurons that do receive sensory inputs are most of the time in the linear regime and hence the vector fields  and  are approximately linear above their threshold [46]

| (21) |

| (22) |

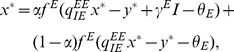

where the mean input current to the excitatory population is  for  and zero otherwise, and  and  are gain parameters.

Thus, using equations (8,9,21,22),  , which corresponds to the group of excitatory neurons that are shut down during stimulation,  , for the same reason for the LNs, and inserting them into equation (20) leads to the simple gain control expression

| (23) |

Furthermore, in the simulations that follow, we use  , which simplifies the gain control condition to

| (24) |

indicating that the primary dependence of the gain control is on  . The simulation of the full set of ordinary differential equations for the firing rate and Hodgkin-Huxley models confirms this dependence as we will show in the following sections.
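The logic of this simplification can be retraced in a reduced worked example. The derivation below is a sketch under assumptions, not a reconstruction of equations (20) to (24): it takes the groups without direct input to be silent, uses linear gains with slopes $a_E$ and $a_I$ above thresholds $\theta_E$ and $\theta_I$, gives both populations the same input $\phi$, and introduces the hypothetical effective couplings $E_{AB} = w_{AB}\,p_{AB}\,s\,N_B$ from population $B$ to population $A$, with $s$ the fraction of neurons receiving input.

```latex
% Fixed point of the active excitatory (x) and inhibitory (y) groups (assumed form):
\begin{align}
x &= a_E \left( E_{EE}\, x - E_{EI}\, y + \phi - \theta_E \right), \\
y &= a_I \left( E_{IE}\, x - E_{II}\, y + \phi - \theta_I \right).
\end{align}
% Differentiating with respect to the stimulus intensity \phi:
\begin{align}
\frac{dx}{d\phi} &= a_E \left( E_{EE} \frac{dx}{d\phi} - E_{EI} \frac{dy}{d\phi} + 1 \right), \\
\frac{dy}{d\phi} &= a_I \left( E_{IE} \frac{dx}{d\phi} - E_{II} \frac{dy}{d\phi} + 1 \right).
\end{align}
% Imposing dx/d\phi = 0 in both lines:
\begin{align}
E_{EI} \frac{dy}{d\phi} &= 1, &
\frac{dy}{d\phi} &= \frac{a_I}{1 + a_I E_{II}}, \\
E_{EI} &= E_{II} + \frac{1}{a_I}
& \Longleftrightarrow \quad
w_{EI}\, p_{EI} &= w_{II}\, p_{II} + \frac{1}{a_I\, s\, N_I}.
\end{align}
```

In this reduced setting the condition involves only the connections made by the inhibitory population; the excitatory-to-inhibitory coupling $E_{IE}$ drops out once $dx/d\phi = 0$ is imposed, and the inhibitory rate then grows linearly with the input, with slope $1/E_{EI}$.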

This expression also indicates that in order to maintain gain control, the effective synaptic inhibition should be scaled with the input to the network. This finding is consistent with the idea that a larger number of activated glomeruli may induce more lateral inhibition to set the appropriate sensory gain [20]. It is also remarkable that there is no explicit dependence on the connections from the excitatory to the inhibitory population, which is consistent with other findings [47]–[49] where the key plasticity is found in the connections originating from the LNs.

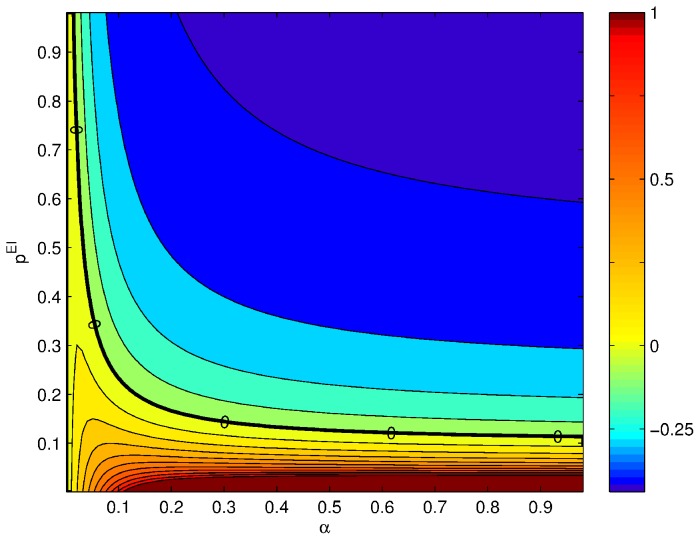

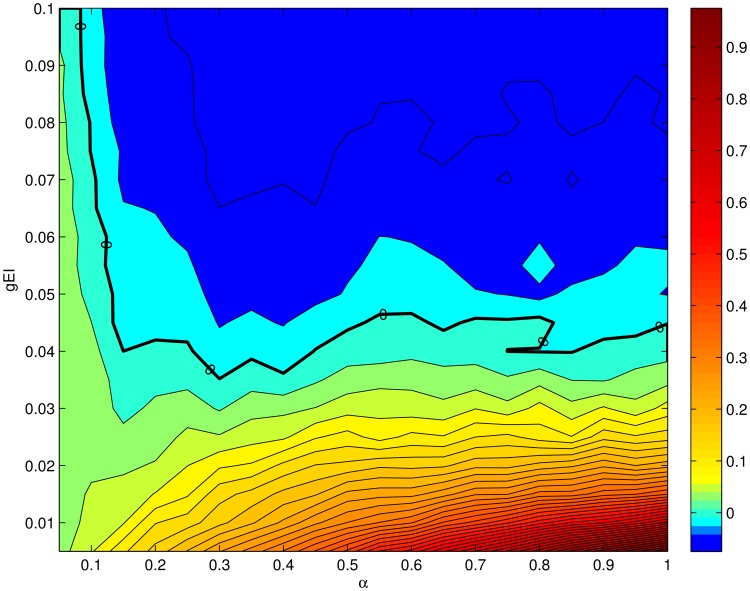

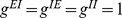

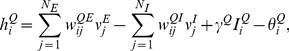

In Fig. 2 we show the derivative of the average rate of the excitatory population with respect to intensity,  , as a function of the probability of connections from the inhibitory to the excitatory population and as a function of  . The solid black line indicates the exact gain control condition given by equation (24). This plot will be compared with the solutions obtained in the following sections using the complete firing rate and Hodgkin-Huxley network models.
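A plot of this type can be approximated with a short calculation. The sketch below assumes linear gains, silent non-input groups and equal input to both populations; the function names, the parameter names (`p_ei` for the inhibitory-to-excitatory connection probability, `s` for the fraction of neurons receiving input) and all default values are illustrative assumptions rather than the values used for Fig. 2.

```python
def rate_slope(p_ei, s, n_e=800, n_i=200, w_ee=0.0, p_ee=0.0, w_ei=0.05,
               w_ie=0.01, p_ie=0.2, w_ii=0.05, p_ii=0.2, a_e=1.0, a_i=1.0):
    """d(excitatory rate)/d(stimulus intensity) at the fixed point of the
    assumed linear-gain model, obtained by solving the 2x2 linear system."""
    e_ee = w_ee * p_ee * s * n_e
    e_ei = w_ei * p_ei * s * n_i
    e_ie = w_ie * p_ie * s * n_e
    e_ii = w_ii * p_ii * s * n_i
    det = (1.0 - a_e * e_ee) * (1.0 + a_i * e_ii) + a_e * a_i * e_ei * e_ie
    return a_e * (1.0 + a_i * (e_ii - e_ei)) / det


def gain_control_p_ei(s, n_i=200, w_ei=0.05, w_ii=0.05, p_ii=0.2, a_i=1.0):
    """Connection probability at which rate_slope vanishes in this sketch.
    A value above 1 means no feasible probability exists for that s."""
    return (w_ii * p_ii + 1.0 / (a_i * s * n_i)) / w_ei


# Tabulate the slope over a small grid, one row per input fraction s.
for s in (0.1, 0.2, 0.4):
    row = " ".join(f"{rate_slope(p, s):+.3f}" for p in (0.3, 0.5, 0.7, 0.9))
    print(f"s={s}: slopes {row} | zero-slope p_ei = {gain_control_p_ei(s):.3f}")
```

The sign change of the slope along each row marks the zero-slope contour, which is the analogue of the solid line described above.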

Figure 2. Gain control conditions obtained from the mean field approximation for  ,  ,  ,  and  .

The contour plot shows the absolute value of the slope of the rate in the excitatory population as a function of the input current  from ORNs. The axes of the plot are ranges of parameters. Strict gain control corresponds to zero slope, eq. (23), indicated by the thicker black line.

Recent work in Drosophila [40] has demonstrated that lateral inhibition scales linearly with the total sensory input. If we calculate the fixed points of the excitatory and inhibitory populations for large  using the condition for gain control in equation (23), it can be shown that the activity of the inhibitory population scales linearly with  as follows

| (25) |

Note that if the system operates in the gain control condition, the main dependence on the external input is regulated by the synaptic gain of the input to the excitatory neurons. Equation (25) implies that the activity of the inhibitory network grows linearly with the external input to compensate for the external stimulus.

Condition for gain control with respect to the sparseness parameter

In the previous section we obtained the gain control condition for the activity of the excitatory population if the input intensity  varies but the spread or sparseness parameter  is fixed. As mentioned earlier, in general odor intensity also determines the number of recruited glomeruli and hence the equivalent of  in the insect brain should depend on the stimulus as well [44]. Returning to equations (15) and (16) and differentiating them with respect to  ,  ,  and  we obtain

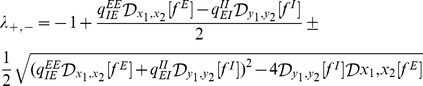

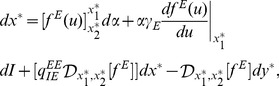

| (26) |

| (27) |

When we solve these equations for  and determine the condition such that  , we find

| (28) |

This means that there is no solution to the gain control problem for arbitrary relationships between  and  . Gain control could be achieved if each change in  were accompanied by the correct change in  to fulfill (28). However, this would require that this complex equation be implemented in the connectivity between sensory input and the network and/or within the network, or through appropriate dynamic changes in the connectivity strengths  and  depending on  , which appears unlikely.

The consequences are significant because there are no plausible gain control conditions if the stimulus is encoded with an increasing number of recruited neurons. As Fig. 2 shows, the explicit  dependence means that strict gain control would require a mechanism that modulates the probability or strength of the connections. The modulation of the inhibitory connection in real time as a function of the stimulus requires additional circuits that are not part of the mathematical description used here, although they might be possible by modifying the architecture of the network [50], [51]. If the gain control requirements can be relaxed to signify an approximately zero gain of activity with increasing input, relaxed gain control conditions can be found for a large number of inhibitory neurons and high  . In this case the effect of changes in  becomes negligible (see equation (24)).
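The weakening of the dependence on the input fraction for large inhibitory populations can be made concrete with a small calculation. The sketch assumes a zero-slope condition of the form `w_ei * p_ei = w_ii * p_ii + 1 / (a_i * s * n_i)`, which follows from a linear-gain reduction and is an assumption here, not a quotation of equation (24); all names and values are hypothetical.

```python
def required_p_ei(s, n_i, w_ei=0.05, w_ii=0.05, p_ii=0.2, a_i=1.0):
    """I->E connection probability giving zero slope in the assumed linear model.
    Values above 1 are not realizable as probabilities."""
    return (w_ii * p_ii + 1.0 / (a_i * s * n_i)) / w_ei


def spread_over_s(n_i, s_values=(0.1, 0.2, 0.3, 0.4, 0.5)):
    """Range of required_p_ei as the input fraction s sweeps over s_values."""
    values = [required_p_ei(s, n_i) for s in s_values]
    return max(values) - min(values)


for n_i in (200, 2000, 20000):
    lo, hi = required_p_ei(0.5, n_i), required_p_ei(0.1, n_i)
    print(f"N_I={n_i}: required p_ei from {lo:.3f} (s=0.5) to {hi:.3f} (s=0.1), "
          f"spread {spread_over_s(n_i):.3f}")
```

For a small inhibitory population the required probability changes strongly with `s` and can even exceed 1, so no single random network satisfies it across recruitment levels; for a large population the requirement becomes nearly independent of `s`, in line with the relaxed condition described above.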

Rate model simulation

In this section we assess the validity of our approach of deriving gain control conditions from mean field approximations by numerically solving the full rate model expressed by the coupled ordinary differential equations (29) and (30) explained in the model section. The equations model the firing rate of neurons to a first approximation [52]–[55] and though they are simpler than conductance-based models, they still allow unveiling fundamental principles underlying the cooperative function of neural systems. Population rate models provide an accurate description of the network behavior when the neurons fire asynchronously [56].

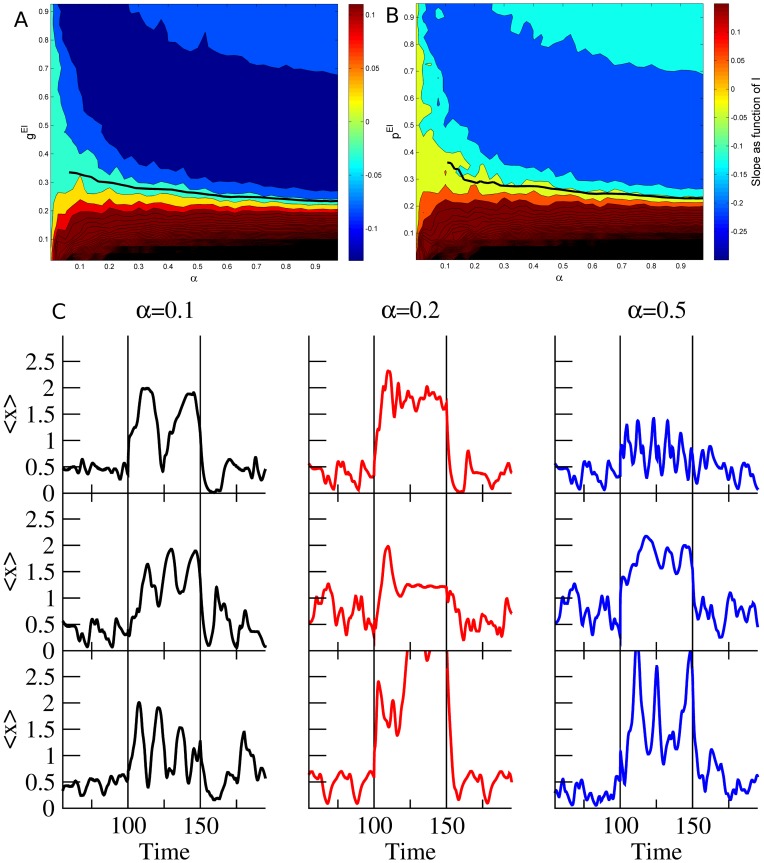

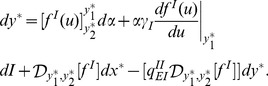

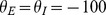

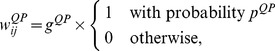

Fig. 3 summarizes how the steady state firing rate depends on the stimulus intensity  . A grid of  from  to  with steps of  was run  times for each range of concentrations  . The solid thick line represents the gain control conditions, which are fairly flat for sufficiently high values of  and hence match the asymptotic theoretical behavior derived in equation (24). However, for low values of  , the firing rates decrease significantly as a function of  and the numerical estimation does not have sufficient precision. There are remarkable similarities between the rate model simulation and the theoretical gain control conditions solved in equation (20) and shown in Fig. 2. In Fig. 2B we can see that the same qualitative dependence exists when the strength  of the connections is varied rather than their probability  . In Fig. 2C we can see a few examples of the mean activity of the excitatory population for several levels of  for the gain control conditions. Despite enforcing gain control, the dynamics of the network retain a large repertoire of dynamical behaviors that can be used for information processing purposes.

Figure 3. (A) Contour plot of slopes of response curves in numerical experiments using rate model neurons.

We plot the derivative of change in the PN activity with respect to the external stimulus  as a function of  , which is the fraction of neurons that receive input, and  , which is the probability of having a connection from an inhibitory neuron to an excitatory one. The parameter values used in this simulation of the rate model neurons were  ,  ,  , while the probabilities of connections are  ,  and  . The gain constants  are set to 1 with thresholds  . Strict gain control corresponds to zero slope and is represented by the thick black solid line. The line is not complete because we do not have enough resolution to reliably track the gain control boundary. (B) Exploration of the dependence of the gain control boundaries as a function of the strength of the coupling from the excitatory to the inhibitory population. The parameters were the same as for the simulations shown in A except  . (C) Examples of the mean activity of the excitatory population for different realizations (rows) of the network using the same parameter values as (A) near the gain control conditions. Despite the restraining gain control conditions the dynamics of the rate models are capable of displaying a rich variety of dynamical behaviors. Each column represents different levels of recruitment ( ) by the input.

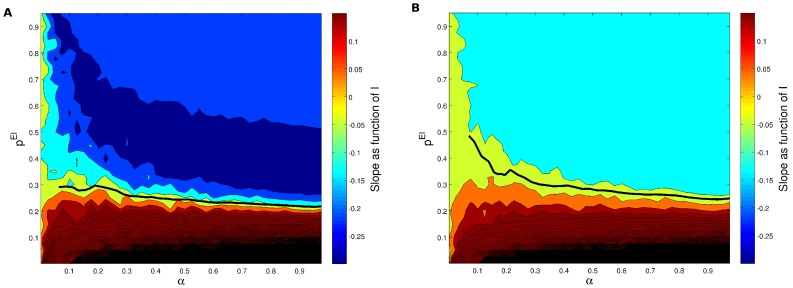

Overall the functional dependence of the average excitatory firing rate on the odor concentration is highly non-linear: weaker inputs from the antenna are amplified greatly, while stronger inputs are amplified less. The inhibition acts as a negative feedback loop keeping the output of the system within a given range. When we run simulations for different values of the connection probability from the excitatory to the inhibitory population,  , (see Fig. 4) we do not find such a high variability in the gain control conditions, which is indicated by the solid black line in Figures 4 and 3. This is again consistent with the expression (24) which lacks an explicit dependence on  and  .

Figure 4. (A) Contour plot of slopes of response curves in numerical experiments using rate model neurons but here testing whether the gain control conditions are truly independent of  as predicted by the mean field model.

We plot the derivative of change in the PN activity with respect to the external stimulus  as a function of  , which is the fraction of neurons that receive input, and  , which is the probability of having a connection from an inhibitory neuron to an excitatory one. The parameter values used in this simulation of the rate model neurons were  ,  ,  , while the probabilities of connections are  ,  and  . The gain constants  are again set to  and  . Strict gain control corresponds to zero slope and is represented by the thick black solid line. The line is not complete because we do not have enough resolution to reliably track the gain control boundary. (B) The same as in (A) but using  to corroborate that the gain control condition (the solid line) does not depend on the connections from the excitatory population, as predicted by the theory.

HH model simulation

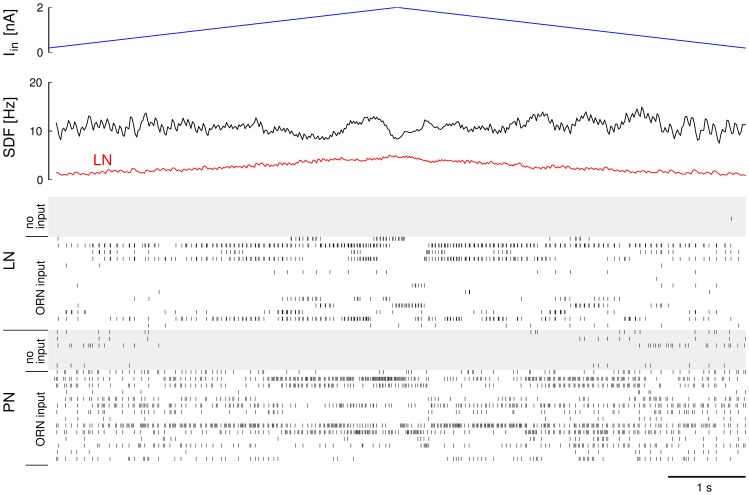

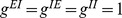

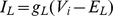

To further test our results in an even more realistic simulation we tested the gain control condition in a network model of Hodgkin-Huxley type model neurons. We simulated a network of  model neurons and after an initial period of  ms simulated time, we excited a fraction  of the PN and LN populations with an input current that was ramped up linearly from  nA to  nA during  ms simulated time and then ramped down again to  nA in another  ms (Fig. 5). The input current of all excitatory and inhibitory neurons that received input was updated every integration time step. We counted the spikes in the excitatory and inhibitory population in  ms windows and added the numbers of the windows with corresponding input current from the up- and down-ramp. We then used Matlab to fit a linear regression to the spike count in the excitatory population as a function of the input current  . This analysis was repeated for different pairs of values for  and  . Fig. 6 summarizes the results. The slope  of the number of spikes  in the excitatory population in  ms simulated time as a function of the input current  in nA (normalized to within the interval  ) is displayed as colors. Successful gain control corresponds to zero slope, or green in the plot. It is achieved on what roughly looks like a hyperbolic function  (see strong contour line). This is in good correspondence with the dependency we found in the other descriptions (see Figures 2 and 3 and equation (23)).
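The pooling and regression steps described above can be sketched in a few lines. This is only an illustration, not the authors' Matlab analysis: the function names, the window counts, and the current values are hypothetical, and the sketch assumes that the down-ramp mirrors the up-ramp, so that the k-th up-ramp window shares its input current with the k-th window counted from the end of the down-ramp.

```python
def pooled_counts(up_counts, down_counts):
    """Add spike counts of up- and down-ramp windows with the same input current.

    Assumes the down-ramp is the mirror image of the up-ramp, so the first
    up-ramp window pairs with the last down-ramp window, and so on.
    """
    return [u + d for u, d in zip(up_counts, reversed(down_counts))]


def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sxx


# Hypothetical example: four windows per ramp, currents in nA.
currents = [0.0, 0.1, 0.2, 0.3]
up = [50, 52, 49, 51]      # spike counts during the up-ramp
down = [50, 50, 51, 49]    # spike counts during the down-ramp (highest current first)
slope = ols_slope(currents, pooled_counts(up, down))
print("slope of pooled spike count vs. input current:", slope)
```

A slope close to zero for a given parameter pair would indicate that the excitatory spike count is approximately independent of the input current, which is the criterion plotted in Fig. 6.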

Figure 5. Illustration of the conductance based numerical experiment for an example of successful gain control at  and  .

The input current to the fraction  of all PNs and LNs is ramped up from  nA to  nA and back down to  nA (top). In response to this input the firing patterns of PNs and LNs change. While the average rate of LNs increases and decreases in proportion to the input (see spike density function (SDF) in the second panel), the average activity of PNs remains constant. Nevertheless, there is a clear and distinctive response to the input in the form of slow patterning of the PN activity (see spike raster).

Figure 6. Gain with increasing  in the excitatory population as a function of  and  for the conductance based model.

The color map shows the slope of the spike count in the excitatory population as a function of the input current  from ORNs, normalized to a maximum of  . The axes of the colormap are the ranges of parameters  (x-axis) and  (y-axis). Strict gain control corresponds to zero slope (green, thicker contour line).

Note that even though the overall activity levels are constant because of the gain control condition, Figures 3C and 5 illustrate that nevertheless the network does produce a variety of spatio-temporal responses of the excitatory population. Gain control is therefore not limiting the capacity to map external information into intrinsic neural representations. It is known that networks of excitatory-inhibitory neurons, like the one used here, can achieve a large repertoire of reproducible spatio-temporal sequences to encode information [57].

Discussion

The function of gain control is necessary if not crucial for any system that aims to separate the quality of stimuli from their intensity. If this separation is achieved there must be a stage in the signal processing system where the response is no longer dependent on the intensity of the signal. This has been observed in biological systems and is believed to be important for the correct function of neural systems [1]. Mechanisms of gain control have been demonstrated at the level of single neurons using, e.g., synaptic adaptation [51]. It was found that adapting synapses allowed signal decoding over a wide dynamical range even though it did not induce signal invariance per se. Another commonly suggested mechanism of gain control is feedback gain control [29] which has been found to effectively stabilize general activity levels independent of stimulus intensity and so support efficient coding of odor identity independent of concentration [41].

Besides being important for separating intensity and identity information, models of the insect brain have demonstrated that gain control is also an important constraint for improving recognition performance [21], [58], [59]. For example in the insect olfactory system, the excitatory neurons of the AL project into a large screen of mushroom body neurons where there is a large variability of activity as a function of small perturbations in the AL [28], [30], [58]. It is therefore imperative to closely control activity levels in the AL in order to have usable sparse coding in the mushroom bodies.

Here, we demonstrated gain control at the level of a subnetwork. Gain control is achieved through a balance between excitation and inhibition, while both excitatory and inhibitory neurons in the system receive excitatory input from primary sensory neurons. Although the neurons in the network are using constant synaptic connections, i.e., are lacking synaptic adaptation, we were able to identify successful gain control conditions. The mechanism that underlies these conditions emerges from the dynamic balance of inhibition and excitation.

Our main finding, obtained by mean field analysis and confirmed by more detailed simulations, is that gain control conditions exist over a defined range of connectivity strengths from excitatory to inhibitory neurons if stimuli of different intensity affect the intensity of stimulation and not the number of neurons that are activated.

The success of gain control is largely determined by the probability and strength of inhibitory (LN) to excitatory (PN) connections. The strength of the connections from excitatory to inhibitory neurons, which is important for odor coding in insects [13], [60], does not play a role in the proper function of this gain control mechanism. We have also investigated the role of lateral excitation that has been found in Drosophila in the form of excitatory LNs [10], [61] and found that it also did not play a role in the effectiveness of gain control. These results are consistent with previous work that explored unsupervised learning in the AL network and found that LN to PN plasticity is most effective in generating olfactory habituation in the fruit fly [48], [49] and honeybee [47]. Moreover, these ideas seem to resonate with the observation that lateral inhibition on PNs narrows the glomerular response profile [32], [45], similar to the ideas proposed in the olfactory bulb of mammals [42].

In the insect olfactory system, when an odorant stimulus increases in strength, both the intensity of the ORN response and the number of different types of ORNs that respond increase [33]. In our model a change of the intensity of the ORN responses would be equivalent to an increase in  . We have demonstrated that we can derive a general gain control condition for changes in  that depends only on the connections from the inhibitory population to the rest of the network regardless of the number of neurons. This gain control condition derived from the mean field approximation is valid for random networks of excitatory and inhibitory neurons using rate models and realistic conductance based models, demonstrating the generality of the result. Furthermore, in agreement with experimental evidence [40], we found that in order to achieve gain control the activity of the inhibitory population and hence the strength of lateral inhibition needs to scale linearly with the intensity of the input.

However, if  , the fraction of activated glomeruli, is the main variable that encodes stimulus intensity, we could not identify consistent or stationary gain control conditions, in particular for low values of  . If increasing stimuli recruit a larger number of glomeruli and hence neurons, the network parameters (the probability and strength of the connections) have to change dynamically in order to regulate the activity levels of the excitatory population. Short term depression of synapses and spike rate adaptation in neurons could be invoked as possible mechanisms [50]. It is in particular unclear whether such mechanisms would be fast enough for efficient gain control and whether they would compromise the sensitivity of the network to subsequent low-intensity inputs.

A different solution to the problem of input dependent  would be the relaxation of the gain control condition. We have in this paper concentrated on strict gain control by postulating  to be exactly 0 leading to exact conditions on connection probabilities and connection strengths. This is unlikely to be precisely realized in biological networks. The most plausible scenario is that the neural networks in the brain have large parameter spaces in which information processing is not impaired. Within this scenario, one would expect gain control to be approximate rather than strict. It remains to be seen how much our gain control conditions could be relaxed. Perhaps, a reasonable approach to this question may consist of determining a lower and upper bound of  instead of equality to 0. In this case a large number of inhibitory neurons, for example, could shift the gain control conditions more aggressively to the left in Figures 2 and 3, effectively achieving very good regulation of excitatory activation for many values of  . Furthermore, as the gain control curves are approximately horizontal for  , constant values of the connectivity probabilities and strengths could lead to approximate gain control in this regime even if  is input dependent.

The fact that the gain control conditions derived from the mean field approximation were verified by simulations of a rate model [56] and a more realistic Hodgkin-Huxley conductance based model is an important confirmation that using mean field approximations to understand the structural organization of brain centers is useful. Our formulation of the mean field theory has been proven to be general enough to capture the main function of the system. We would like to interpret this finding to indicate that the main properties of the system we have described do not critically depend on the details of its construction. In this sense, there is a large space of neural circuits with properties similar to the ones observed here.

The confirmation of the gain control conditions in a firing rate model is also important in the context of presynaptic inhibition, which has been identified in several forms in the AL [11]. Firing rate models accommodate presynaptic inhibition alongside postsynaptic forms of inhibition because all synaptic inputs are integrated in a passive manner. Being confirmed in a firing rate model, our gain control conditions should be valid for any form of synaptic inhibition in any combination.

In conclusion, we used analysis and simulations of a network of excitatory and inhibitory neurons inspired by the AL network to identify a relationship between network parameters that allows strict gain control. The more general question is how such a relationship can be induced and maintained in a biological system. Certainly, strict gain control would necessitate the probability of connections between the population of neurons and the strength of these connections to have a very precise value. This is, as we have already alluded to above, impractical in real world conditions. However, our simulations suggest that there is a range of values around the strict gain control condition line where approximate gain control is achieved. Therefore, a biological mechanism that would control the probability and strength of excitatory to inhibitory connections [62], [63] within a certain range would suffice to achieve the desired approximate intensity invariance.

Models

The firing rate network model

To analytically address the issue of gain control in a random excitatory-inhibitory network, we simulate the network by firing rate models [64]. Rate models [54], [55] are simpler than conductance-based models, but they reveal some of the fundamental principles that underlie the cooperative function of neural systems by providing an accurate description of the network behavior when the neurons fire asynchronously [56].

The network model considered here consists of an excitatory PN population and an inhibitory LN population with  and  neurons respectively. We use the sub- or superscript  for variables referring to the excitatory population (PNs) and  for those referring to the inhibitory population (LNs). The firing rates of all neurons evolve in time according to the following set of ordinary differential equations:

| (29) |

| (30) |

where  are the time constants,  denotes the total afferent current into the  th neuron in pool  and  is the corresponding gain function. The individual gain functions represent the steady state firing rates of the neurons as a function of their total input. Note that we do not consider excitatory LNs at this level of description.
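To make the structure of such a rate model concrete, the following minimal sketch integrates a tiny excitatory-inhibitory network with forward Euler. Equations (29) and (30) are not reproduced in this text, so the sketch assumes the generic form tau * dr/dt = -r + F(I), a threshold-linear gain function, and a total current made of excitatory input minus inhibitory input plus external drive minus threshold. All names, weights, and parameter values are hypothetical and chosen only for illustration.

```python
def gain(current, slope=1.0):
    """Threshold-linear gain (an assumption): zero at or below zero input."""
    return slope * current if current > 0.0 else 0.0


def simulate(w_ee, w_ei, w_ie, w_ii, ext_e, ext_i,
             theta=0.0, tau=10.0, dt=0.1, steps=2000):
    """Forward-Euler integration of tau * dr/dt = -r + F(I) for both pools.

    w_xy[i][j] is the non-negative weight from neuron j of pool y onto
    neuron i of pool x; inhibitory weights enter the current with a minus sign.
    """
    n_e, n_i = len(ext_e), len(ext_i)
    r_e, r_i = [0.0] * n_e, [0.0] * n_i
    for _ in range(steps):
        cur_e = [sum(w_ee[i][j] * r_e[j] for j in range(n_e))
                 - sum(w_ei[i][j] * r_i[j] for j in range(n_i))
                 + ext_e[i] - theta for i in range(n_e)]
        cur_i = [sum(w_ie[i][j] * r_e[j] for j in range(n_e))
                 - sum(w_ii[i][j] * r_i[j] for j in range(n_i))
                 + ext_i[i] - theta for i in range(n_i)]
        r_e = [r + dt / tau * (-r + gain(c)) for r, c in zip(r_e, cur_e)]
        r_i = [r + dt / tau * (-r + gain(c)) for r, c in zip(r_i, cur_i)]
    return r_e, r_i


# Hypothetical toy network: two excitatory neurons and one inhibitory neuron.
w_ee = [[0.0, 0.2], [0.2, 0.0]]
w_ei = [[0.8], [0.8]]
w_ie = [[0.5, 0.5]]
w_ii = [[0.0]]
for intensity in (0.5, 1.0, 2.0):
    r_e, r_i = simulate(w_ee, w_ei, w_ie, w_ii,
                        ext_e=[intensity, intensity], ext_i=[intensity])
    print(intensity, sum(r_e) / len(r_e), r_i[0])
```

Comparing the mean excitatory rate across input intensities is the kind of measurement from which a response slope can be estimated; in this toy network the weights are not tuned to any gain control condition.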

To be as general as possible we only make two assumptions about the model neurons. First, that they have a threshold, i.e.,

| (31) |

and second, that their gain functions are positive, continuous and monotonically increasing functions (i.e.,  and  ). These conditions are quite general and represent fairly well the firing response of neurons [65]. Furthermore, specific gain functions can be determined semi-analytically for specific noise models or numerically for more realistic models [56], [66], [67].

The neurons are connected through a network of synaptic connections  . The contributions of all synapses are assumed to be added linearly in the main compartment of the neuron via

| (32) |

where  is the threshold of the  th neuron in pool  and the term  represents its external input from the presynaptic ORNs. Both PNs and LNs receive afferent input directly from the ORNs.

In our model we do not assume any anatomically or functionally structured connectivity between the glomeruli, i.e., the connectivity matrices  are random matrices with entries drawn from the following Bernoulli process

| (33) |

where  represents the synaptic conductance or efficiency of the connection from a neuron in the pool  to a neuron in the pool  . Thus, on average, a given neuron receives  synapses of strength  from the excitatory population and  connections of strength  from the inhibitory population.
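The Bernoulli connectivity described above can be illustrated with a short sketch: each entry of a connectivity matrix is set to a fixed strength with a given probability and to zero otherwise, so the expected number of incoming connections is the probability times the size of the presynaptic pool. The function name and all numerical values below are hypothetical and are not the parameters used in the paper.

```python
import random


def bernoulli_connectivity(n_post, n_pre, p, g, rng):
    """Return an n_post x n_pre matrix with entries g (probability p) or 0."""
    return [[g if rng.random() < p else 0.0 for _ in range(n_pre)]
            for _ in range(n_post)]


rng = random.Random(0)                     # fixed seed for reproducibility
n_post, n_pre, p, g = 200, 100, 0.3, 0.5   # hypothetical values
w = bernoulli_connectivity(n_post, n_pre, p, g, rng)

# Average number of incoming connections per postsynaptic neuron,
# to be compared with the expected value p * n_pre.
mean_in_degree = sum(sum(1 for x in row if x > 0.0) for row in w) / n_post
print(mean_in_degree, p * n_pre)
```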

The Hodgkin-Huxley network model

The simulated network of conductance based Hodgkin-Huxley neurons consists of  PNs and  LNs which were randomly connected as described for the rate model above. The probabilities for connections were  following observations in the honeybee [45],  and  , and  .

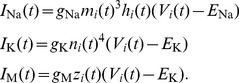

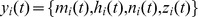

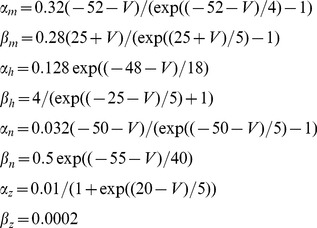

HH neuron model

The neurons in the simulated network model were described by a Hodgkin-Huxley type model based on the model of Traub and Miles [68]. Additionally, a spike rate adaptation (M-type) current was added leading to the following set of equations:

| (34) |

where  is a constant bias current regulating the intrinsic excitability of neurons. The leak current is  and the ionic currents  ,  , and  are described by

| (35) |

The synaptic current  to each neuron is the linear sum of all synapses onto the neuron, each synaptic current given by (38). Each activation and inactivation variable  satisfied first-order kinetics

| (36) |

with non-linear functions  and  given by

| (37) |

The remaining parameter values were  nF,

nF,

,

,  mV,

mV,

,

,  mV,

mV,

,

,  mV,

mV,

.

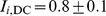

.  nA for PNs and

nA for PNs and  nA for LNs, where

nA for LNs, where  denotes the addition of a random individual bias sampled from a uniformly distributed random variable in

denotes the addition of a random individual bias sampled from a uniformly distributed random variable in  for each neuron.

for each neuron.

Synapse model

Synapses were described by a first order kinetic model [69]. In brief, the synaptic current is given by

| (38) |

where  is the synaptic conductance and  the reversal potential. Here,  mV for excitatory synapses and  mV for inhibitory synapses.  describes the activation of the synapse and is governed by

| (39) |

where  is the duration of synaptic release after a pre-synaptic spike,  is the time of the last pre-synaptic spike, detected as a crossing from below of  mV, and  and  are rates of synaptic release and decay (re-uptake). Here, we used  ms for excitatory synapses and  ms for inhibitory synapses. The activation and inactivation rates were given by  kHz,  kHz,  kHz and  kHz. The maximal synaptic conductances were chosen as  ,  , and  , where  denotes a random variation by a Gaussian random variable of mean  and standard deviation  . The model was integrated with a 6/5 order variable time step Runge-Kutta algorithm with maximal time step of  ms, using custom-made C++ code.
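The spike detection rule mentioned above, a crossing of a voltage threshold from below, can be sketched as follows. This is an illustrative Python fragment, not the authors' C++ code; the function name, the sample trace, and the threshold value are hypothetical, since the threshold used in the paper is not reproduced in this text.

```python
def spike_times(times, voltages, threshold):
    """Return the sample times at which the voltage crosses `threshold` from below.

    A crossing is registered at sample k when the previous sample is strictly
    below the threshold and sample k is at or above it.
    """
    return [times[k] for k in range(1, len(voltages))
            if voltages[k - 1] < threshold <= voltages[k]]


# Hypothetical membrane potential trace (mV) sampled at times given in ms.
times = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
voltages = [-60.0, -30.0, 10.0, -20.0, -65.0, -10.0, 5.0]
detected = spike_times(times, voltages, threshold=-20.0)  # threshold is an assumption
print(detected)
```

Registering only upward crossings means that each spike is counted once, regardless of how many samples the voltage stays above the threshold.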

Funding Statement

RH and BHS acknowledge partial support of NIDCD-R01DC011422-01. ES and RL acknowledge support from MINECO TIN2012-30883 and IPT-2011-0727-020000. TN acknowledges partial support by EPSRC (grant number EP/J019690/1). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Salinas E, Thier P (2000) Gain modulation: A major computational principle of the central nervous system. Neuron 27: 15–21. [DOI] [PubMed] [Google Scholar]

- 2. Cleland TA, Chen SYT, Hozer KW, Ukatu HN, Wong KJ, et al. (2011) Sequential mechanisms underlying concentration invariance in biological olfaction. Front Neuroeng 4: 21. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Smith BH, Wright G, Daly K (2006) Learning-based recognition and discrimination of oral odors. In: Dudareva N, editor, Biology of Floral Scent. Hoboken: CRC Press. pp. 263–295. Available: http://public.eblib.com/EBLPublic/PublicView.do?ptiID=262223.

- 4. Wright GA, Carlton M, Smith BH (2009) A honeybee's ability to learn, recognize, and discriminate odors depends upon odor sampling time and concentration. Behav Neurosci 123: 36–43. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Bargmann CI (2006) Comparative chemosensation from receptors to ecology. Nature 444: 295–301. [DOI] [PubMed] [Google Scholar]

- 6. Touhara K, Vosshall LB (2009) Sensing odorants and pheromones with chemosensory receptors. Annu Rev Physiol 71: 307–332. [DOI] [PubMed] [Google Scholar]

- 7. Couto A, Alenius M, Dickson BJ (2005) Molecular, anatomical, and functional organization of the drosophila olfactory system. Current Biology 15: 1535–1547. [DOI] [PubMed] [Google Scholar]

- 8. Wilson RI, Laurent G (2005) Role of GABAergic inhibition in shaping odor-evoked spatiotemporal patterns in the drosophila antennal lobe. J Neurosci 25: 9069–79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Shang Y, Claridge-Chang A, Sjulson L, Pypaert M, Miesenbck G (2007) Excitatory local circuits and their implications for olfactory processing in the y antennal lobe. Cell 128: 601–612. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Olsen SR, Bhandawat V, Wilson RI (2007) Excitatory interactions between olfactory processing channels in the drosophila antennal lobe. Neuron 54: 89–103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Olsen SR, Wilson RI (2008) Lateral presynaptic inhibition mediates gain control in an olfactory circuit. Nature 452: 956–960. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Strowbridge BW (2008) A volume control for the sense of smell. Nature Neuroscience 11: 531–533. [DOI] [PubMed] [Google Scholar]

- 13. Sachse S, Galizia CG (2002) Role of inhibition for temporal and spatial odor representation in olfactory output neurons: A calcium imaging study. J Neurophysiol 87: 1106–1117. [DOI] [PubMed] [Google Scholar]

- 14. Anderson P, Hansson BS, Löfqvist J (1995) Plant-odour-specific receptor neurones on the antennae of female and male spodoptera littoralis. Physiological Entomology 20: 189–198. [Google Scholar]

- 15. Hallem EA, Ho MG, Carlson JR (2004) The molecular basis of odor coding in the drosophila antenna. Cell 117: 965–979. [DOI] [PubMed] [Google Scholar]

- 16. Hallem EA, Carlson JR (2006) Coding of odors by a receptor repertoire. Cell 125: 143–160. [DOI] [PubMed] [Google Scholar]

- 17. Stopfer M, Jayaraman V, Laurent G (2003) Intensity versus identity coding in an olfactory system. Neuron 39: 991–1004. [DOI] [PubMed] [Google Scholar]

- 18. Bhandawat V, Olsen SR, Gouwens NW, Schlief ML, Wilson RI (2007) Sensory processing in the drosophila antennal lobe increases the reliability and separability of ensemble odor representations. Nature neuroscience 10: 1474–1482. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Yamagata N, Schmuker M, Szyszka P, Mizunami M, Menzel R (2009) Differential odor processing in two olfactory pathways in the honeybee. Frontiers in Systems Neuroscience 3: 16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20. Root CM, Semmelhack JL, Wong AM, Flores J, Wang JW (2007) Propagation of olfactory information in drosophila. Proceedings of the National Academy of Sciences of the United States of America 104: 11826–11831. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Huerta R, Nowotny T, Garcia-Sanchez M, Abarbanel HDI, Rabinovich MI (2004) Learning classification in the olfactory system of insects. Neural Comput 16: 1601–1640. [DOI] [PubMed] [Google Scholar]

- 22. Nowotny T, Huerta R, Abarbanel HDI, Rabinovich MI (2005) Self-organization in the olfactory system: Rapid odor recognition in insects. Biol Cyber 93: 436–446. [DOI] [PubMed] [Google Scholar]

- 23. Perez-Orive J, Mazor O, Turner GC, Cassenaer S, Wilson RI, et al. (2002) Oscillations and sparsening of odor representations in the mushroom body. Science 297: 359–365. [DOI] [PubMed] [Google Scholar]

- 24. Szyszka P, Ditzen M, Galkin A, Galizia CG, Menzel R (2005) Sparsening and temporal sharpening of olfactory representations in the honeybee mushroom bodies. J Neurophysiol 94: 3303–3313. [DOI] [PubMed] [Google Scholar]

- 25. Vicente CJP, Amit DJ (1989) Optimised network for sparsely coded patterns. Journal of Physics A: Mathematical and General 22: 559–569. [Google Scholar]

- 26. Amari Si (1989) Characteristics of sparsely encoded associative memory. Neural Networks 2: 451–457. [Google Scholar]

- 27. Dominguez D, Koroutchev K, Serrano E, Rodriguez FB (2007) Information and topology in attractor neural networks. Neural Computation 19: 956–973. [DOI] [PubMed] [Google Scholar]

- 28.Nowotny T (2009) “Sloppy engineering” and the olfactory system of insects. In: Marco S, Gutierrez A, editors, Biologically Inspired Signal Processing for Chemical Sensing, volume 188 of Studies in Computational Intelligence. Springer. pp.3–32.

- 29. Papadopoulou M, Cassenaer S, Nowotny T, Laurent G (2011) Normalization for sparse encoding of odors by a wide-field interneuron. Science 332: 721–725. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30. García-Sanchez M, Huerta R (2003) Design parameters of the fan-out phase of sensory systems. Journal of Computational Neuroscience 15: 5–17. [DOI] [PubMed] [Google Scholar]

- 31. Yokoi M, Mori K, Nakanishi S (1995) Refinement of odor molecule tuning by dendrodendritic synaptic inhibition in the olfactory bulb. Proceedings of the National Academy of Sciences 92: 3371–3375. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Wilson RI, Turner GC, Laurent G (2004) Transformation of olfactory representations in the drosophila antennal lobe. Science 303: 366–370. [DOI] [PubMed] [Google Scholar]

- 33. Silbering AF, Galizia CG (2007) Processing of odor mixtures in the drosophila antennal lobe reveals both global inhibition and glomerulus-specific interactions. J Neurosci 27: 11966–11977. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34. Rabinovich M, Volkovskii A, Lecanda P, Huerta R, Abarbanel HDI, et al. (2001) Dynamical encoding by networks of competing neuron groups: Winnerless competition. Phys Rev Lett 87: 068102. [DOI] [PubMed] [Google Scholar]

- 35. Turner GC, Bazhenov M, Laurent G (2008) Olfactory representations by drosophila mushroom body neurons. Journal of Neurophysiology 99: 734–746. [DOI] [PubMed] [Google Scholar]

- 36. Bazhenov M, Stopfer M, Rabinovich M, Huerta R, Abarbanel HD, et al. (2001) Model of transient oscillatory synchronization in the locust antennal lobe. Neuron 30: 553–567. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Av-Ron E, Vibert JF (1996) A model for temporal and intensity coding in insect olfaction by a network of inhibitory neurons. Biosystems 39: 241–250. [DOI] [PubMed] [Google Scholar]

- 38. Belmabrouk H, Nowotny T, Rospars JP, Martinez D (2011) Interaction of cellular and network mechanisms for efficient pheromone coding in moths. Proc Natl Acad Sci U S A 108: 19790–19795. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39. Silbering AF, Okada R, Ito K, Galizia CG (2008) Olfactory information processing in the drosophila antennal lobe: anything goes? J Neurosci 28: 13075–13087. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Olsen SR, Bhandawat V, Wilson RI (2010) Divisive normalization in olfactory population codes. Neuron 66: 287–299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Schmuker M, Yamagata N, Nawrot MP, Menzel R (2011) Parallel representation of stimulus identity and intensity in a dual pathway model inspired by the olfactory system of the honeybee. Frontiers in Neuroengineering 4: 17. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Cleland TA, Linster C (2012) On-center/inhibitory-surround decorrelation via intraglomerular inhibition in the olfactory bulb glomerular layer. Front Integr Neurosci 6: 5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43. Vreeswijk Cv, Sompolinsky H (1998) Chaotic balanced state in a model of cortical circuits. Neural Computation 10: 1321–1371. [DOI] [PubMed] [Google Scholar]

- 44. Ito I, Bazhenov M, Ong RCy, Raman B, Stopfer M (2009) Frequency transitions in odor-evoked neural oscillations. Neuron 64: 692–706. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45. Girardin CC, Kreissl S, Galizia CG (2013) Inhibitory connections in the honeybee antennal lobe are spatially patchy. Journal of neurophysiology 109: 332–343. [DOI] [PubMed] [Google Scholar]

- 46. Krofczik S, Menzel R, Nawrot MP (2008) Rapid odor processing in the honeybee antennal lobe network. Front Comput Neurosci 2: 9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47. Locatelli F, Fernandez P, Villareal F, Muezzinoglu K, Huerta R, et al. (2013) Nonassociative plasticity alters competitive interactions among mixture components in early olfactory processing. European Journal of Neuroscience 37: 63–79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Das S, Sadanandappa MK, Dervan A, Larkin A, Lee JA, et al. (2011) Plasticity of local gabaergic interneurons drives olfactory habituation. Proc Natl Acad Sci U S A 108: E646–E654. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Sudhakaran IP, Holohan EE, Osman S, Rodrigues V, Vijayraghavan K, et al. (2012) Plasticity of recurrent inhibition in the drosophila antennal lobe. J Neurosci 32: 7225–7231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50. Breer H, Shepherd GM (1993) Implications of the no/cgmp system for olfaction. Trends Neurosci 16: 5–9. [DOI] [PubMed] [Google Scholar]

- 51. Abbott LF, Varela JA, Sen K, Nelson SB (1997) Synaptic depression and cortical gain control. Science 275: 221–224. [DOI] [PubMed] [Google Scholar]

- 52. Grossberg S (1967) Nonlinear difference-differential equations in prediction and learning theory. Proc Natl Acad Sci U S A 58: 1329–1334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53. Wilson HR, Cowan JD (1973) A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Biological Cybernetics 13: 55–80. [DOI] [PubMed] [Google Scholar]

- 54. Shun-Ichi Amarimber (1972) Characteristics of random nets of analog neuron-like elements. IEEE Transactions on Systems, Man and Cybernetics SMC-2: 643–657. [Google Scholar]

- 55. Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proceedings of the National Academy of Sciences 79: 2554–2558. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56. Shriki O, Hansel D, Sompolinsky H (2003) Rate models for conductance-based cortical neuronal networks. Neural Computation 15: 1809–1841. [DOI] [PubMed] [Google Scholar]

- 57. Huerta R, Rabinovich M (2004) Reproducible sequence generation in random neural ensembles. Physical review letters 93: 238104. [DOI] [PubMed] [Google Scholar]

- 58.Huerta R (2013) Learning pattern recognition and decision making in the insect brain. In: American Institute of Physics Conference Series. volume 1510. pp.101–119.

- 59. Huerta R, Nowotny T (2009) Fast and robust learning by reinforcement signals: explorations in the insect brain. Neural computation 21: 2123–2151. [DOI] [PubMed] [Google Scholar]

- 60. Ng M, Roorda RD, Lima SQ, Zemelman BV, Morcillo P, et al. (2002) Transmission of olfactory information between three populations of neurons in the antennal lobe of the y. Neuron 36: 463–474. [DOI] [PubMed] [Google Scholar]

- 61. Huang J, Zhang W, Qiao W, Hu A, Wang Z (2010) Functional connectivity and selective odor responses of excitatory local interneurons in drosophila antennal lobe. Neuron 67: 1021–1033. [DOI] [PubMed] [Google Scholar]

- 62. Vogels TP, Sprekeler H, Zenke F, Clopath C, Gerstner W (2011) Inhibitory plasticity balances excitation and inhibition in sensory pathways and memory networks. Science 334: 1569–1573. [DOI] [PubMed] [Google Scholar]

- 63. Haider B, Duque A, Hasenstaub AR, McCormick DA (2006) Neocortical network activity in vivo is generated through a dynamic balance of excitation and inhibition. The Journal of Neuroscience 26: 4535–4545. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Ermentout GB, Terman DH (2010) Mathematical Foundations of Neuroscience. Springer.

- 65. McCormick DA, Connors BW, Lighthall JW, Prince DA (1985) Comparative electrophysiology of pyramidal and sparsely spiny stellate neurons of the neocortex. Journal of Neurophysiology 54: 782–806. [DOI] [PubMed] [Google Scholar]

- 66.Tuckwell HC (1988) Introduction to Theoretical Neurobiology: Linear cable theory and dendritic structure. Cambridge University Press.

- 67. Buckley C, Nowotny T (2011) Multiscale model of an inhibitory network shows optimal properties near bifurcation. Physical Review Letters 106: 238109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Traub RD, Miles R (1991) Neural Networks of the Hippocampus. Cambridge University Press. URL http://dx.doi.org/10.1017/CBO9780511895401.

- 69. Destexhe A, Mainen ZF, Sejnowski TJ (1994) Synthesis of models for excitable membranes, synaptic transmission and neuromodulation using a common kinetic formalism. Journal of Computational Neuroscience 1: 195–230. [DOI] [PubMed] [Google Scholar]