Abstract

We describe a clinical research visit scheduling system that can potentially coordinate clinical research visits with patient care visits and increase efficiency at clinical sites where clinical and research activities occur simultaneously. Participatory Design methods were applied to support requirements engineering and to create this software called Integrated Model for Patient Care and Clinical Trials (IMPACT). Using a multi-user constraint satisfaction and resource optimization algorithm, IMPACT automatically synthesizes temporal availability of various research resources and recommends the optimal dates and times for pending research visits. We conducted scenario-based evaluations with 10 clinical research coordinators (CRCs) from diverse clinical research settings to assess the usefulness, feasibility, and user acceptance of IMPACT. We obtained qualitative feedback using semi-structured interviews with the CRCs. Most CRCs acknowledged the usefulness of IMPACT features. Support for collaboration within research teams and interoperability with electronic health records and clinical trial management systems were highly requested features. Overall, IMPACT received satisfactory user acceptance and proves to be potentially useful for a variety of clinical research settings. Our future work includes comparing the effectiveness of IMPACT with that of existing scheduling solutions on the market and conducting field tests to formally assess user adoption.

Keywords: workflow, software, personnel staffing and scheduling, health resources, clinical research informatics, learning health systems

1. Introduction

Randomized controlled trials provide quality medical evidence to guide medical decision-making. However, their high cost severely threatens the clinical research enterprise,[1] showing a need for cheaper clinical trials and comparative effectiveness research (CER) in patient care settings.[2, 3] Electronic health records (EHRs) potentially provide a new digital infrastructure for less expensive large-scale and pragmatic clinical trials.[4] The use of EHR data for CER has also been recognized as a new paradigm for clinical research,[5] though the potential of EHRs has yet to be fully realized.[6] A major challenge is to integrate clinical research and patient care workflows. The low adoption of clinical trial management systems (CTMSs), the general lack of interoperability between CTMSs and EHRs, and poorly coordinated patient care and clinical research workflows often result in unnecessary or redundant visits or tests for patients and a considerable administrative burden for practice administrators.[7, 8]

Consequently, tools are needed to facilitate interoperability between EHRs and CTMSs. Some encouraging developments have occurred, including the creation of core research data elements that can be exchanged readily between EHRs and CTMSs, [9] the establishment of the EHR Clinical Research Functional Profile, a specification of functional requirements for using EHR data for regulated clinical research, [10] and the development of Retrieve Form for Data Capture by the Clinical Data Interchange Standards Consortium (CDISC) for automatically populating a research form using EHR data with minimal workflow disruption.[11] In 2011, the Partnership to Advance Clinical Electronic Research (PACeR) consortium was formed to enable secondary use of clinical data for research.[12] The American Medical Informatics Association (AMIA) also published a taxonomy of secondary use of EHR data to support clinical research.[13]

Although such standards help to reduce redundant data entry by clinicians who also conduct research, researchers have found that facilitating electronic data exchange is necessary but not sufficient. Coordination of clinical research and patient care workflows is also needed. As part of the NIH Roadmap for Reengineering Clinical Research project,[14] we conducted formal ethnographic studies and identified inefficiencies in the clinical research workflow and the needs of clinical research coordinators (CRCs) for better informatics support.[8, 15, 16] Similarly, in a randomized trial using electronic data, Mosis found that requiring clinicians to conduct patient recruitment during routine clinical encounters discourages participation in clinical trials.[17] This is because patient recruitment, especially the process of obtaining informed consent, is a time-consuming disruption of the normal clinical workflow. In the United States, fewer than 2% of physicians pursue a research career, and this rate continues to decline.[18] Many physicians consider research tedious and expensive.[19] Clinical research sites are extremely under-supported.[20] At these sites, competition for resources (e.g., exam rooms) contributes to awkward tension between staff involved in patient care and those involved in clinical research.[21]

The Institute of Medicine (IOM) proposed a “learning healthcare system” model to accelerate the generation of new evidence.[22] This model supports a “culture of shared responsibility for evidence generation and information exchange, in which stakeholders (researchers, providers, and patients) embrace the concept of a healthcare system that learns and works together.”[22] This model is conceived as dynamic and collaborative but is largely untested. Although this model is conceptually ideal to support patient-centered outcomes research, rarely is there alignment between clinical care and clinical research needs in clinical practices that conduct research.

A key to achieving cost-effective clinical research is to make research an extension of patient care by facilitating collaboration between researchers and patients.[23] It would be ideal for both patients and staff if a research visit could occur on the same day as a scheduled clinical visit. Synchronizing research and clinical visits can also ameliorate two barriers to clinical trial recruitment: determining eligibility and obtaining informed consent. Both routinely require face-to-face meetings between researchers and patients. Such meetings can be inconvenient and sometimes intimidating for patients. One solution is to electronically prescreen patients to determine who is potentially eligible and then arrange for CRCs to meet with these patients in conjunction with their next clinical visit, provided their primary care providers (PCPs) have been notified in advance.[24]

Coordinating research and clinical visits is not a trivial task. Sometimes there is a designated scheduler for research visits but more often CRCs perform this task themselves.[16] Every research visit must occur within a protocol-specified visit window, such as “visit 1 must occur one month, plus or minus 15 days (i.e., the visit ‘window’), after the randomization visit.” The services to be performed during a particular research visit determine the choice of dates for that visit. For some visits, a protocol might require only questionnaires to be completed whereas, for other visits, time-consuming exams, diagnostic tests and blood work may be required. CRCs often seek a date when all resources are available and there is sufficient time to perform the required research services.

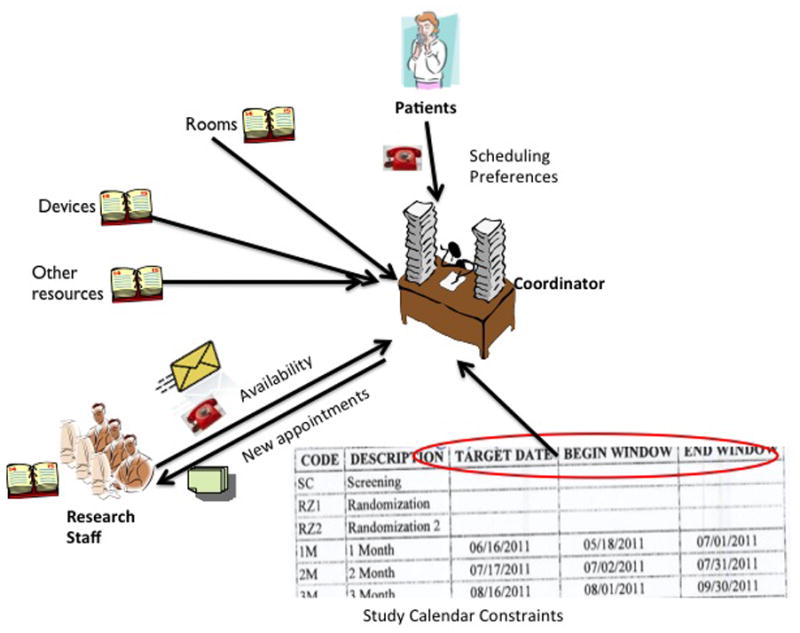

Scheduling a joint appointment can be difficult, even with several weeks of advance notice. The problem can be further complicated if the patient reschedules a visit or fails to appear for one. Rescheduling may require repeating all the necessary steps to find a date that satisfies all the requirements of patients, research staff, equipment, and protocols. Moreover, research visits that do not occur within visit windows may require advance approval by the sponsor, which, in turn, requires extra paperwork. In addition, clinical staff and CRCs routinely work independently, even when both teams work in the same practice. Figure 1 shows how scheduling a research visit requires CRCs to manually collect and synthesize information about the target date, time window, activities that need to be performed, and the availability of both the research resources and the patient.

Figure 1.

The current workflow for scheduling a research visit. The scheduler or research coordinator needs to manually synthesize temporal constraints of multiple research resources and staff, as well as patients.

We believe that utilizing the patient care information in EHRs to streamline research workflow is essential to realize the learning health system envisioned by the IOM.[3] To this end, we developed a system called Integrated Model for PAtient Care and Clinical Trials (IMPACT) for coordinating research visits with patient care visits using resource optimization. IMPACT is designed to be loosely coupled with an EHR’s clinical practice management functions to import information (e.g., patient identifier, date of birth, and clinical appointments) needed for the clinical research workflow and to recommend the optimal dates and times for clinical research visits so that the two types of visits can co-occur to minimize travel costs for patients.

2. Methods

We used Participatory Design methods[25] to elicit user requirements for IMPACT and translate them into a functioning software prototype. Participatory Design engages all stakeholders of a project in an iterative design process. Our Participatory Design team includes seven members: a clinical trial investigator, a CRC, an informatician, two computer scientists, a web application administrator, and a clinical research site manager familiar with the needs of CRCs. This team has been meeting twice weekly for 14 months to iteratively define business rules and review and refine the designs for the IMPACT prototype. In addition to the seven regular members, a few interested volunteering CRCs frequently attended the meetings to provide feedback for various prototypes.

We used a scenario-based evaluation method [26] to assess the feasibility and usefulness of one of our early IMPACT prototypes. Each scenario is an instantiation of one or more work task types and specifies the goals and steps of the tasks to be performed by members of a clinical research team who play different roles.[27] Of note, the graphical user interface (GUI) evolved significantly throughout the Participatory Design process, but the software functionalities remained the same. The purpose of this evaluation was to assess the usefulness and feasibility of using IMPACT to streamline clinical research scheduling. Following the principles of cross-sectional studies, which involve descriptive studies of a representative user group, we used person-to-person communication methods, including phone calls and emails, to recruit CRCs working in a variety of research settings and specialties with different degrees of research experience and familiarity with health information technology.

The evaluators were asked to use the prototype to follow a set of predefined scenarios (Appendix I), which lasted about an hour. Each evaluator was asked to perform the scheduling tasks defined in the scenarios using the “think-aloud” protocol[28] to provide feedback and to take part in a semi-structured interview (Appendix II) afterwards. The sessions were audiotaped and transcribed. Thematic analysis was performed on both the observational notes and interview transcripts to summarize the major themes regarding user needs for efficiently scheduling research visits. The Columbia University Medical Center Institutional Review Board approved this study (IRB-AAAF2962).

3. System Design

The design of the IMPACT system includes: (1) the domain model, (2) the system architecture and functionality modules, (3) the knowledge base of process rules, (4) the multi-user constraint satisfaction and resource optimization algorithm for synchronizing patient care and clinical research visits, (5) the requirements for privacy protection, (6) the system interoperability requirements, and (7) the graphical user interface.

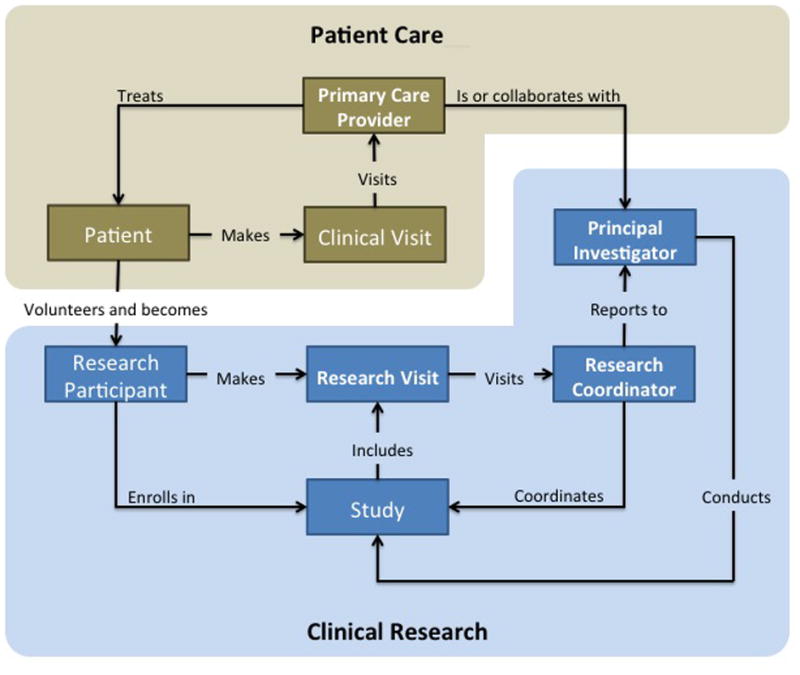

The Domain Model

The domain model defines eight core entities: patient, PCP, CRC, clinical investigator, clinical trial, clinical visit, research visit, and research resource (e.g., research protocol constraints, room, and equipment). Figure 2 depicts their semantic relationships. Once a patient agrees to participate in research, the patient assumes two roles: patient and study participant. PCPs may refer patients to investigators, but sometimes PCPs are investigators themselves. This model helps align clinical and research activities and facilitate information sharing between research and clinical teams.

Figure 2.

The semantic relationships of entities in the domain model.

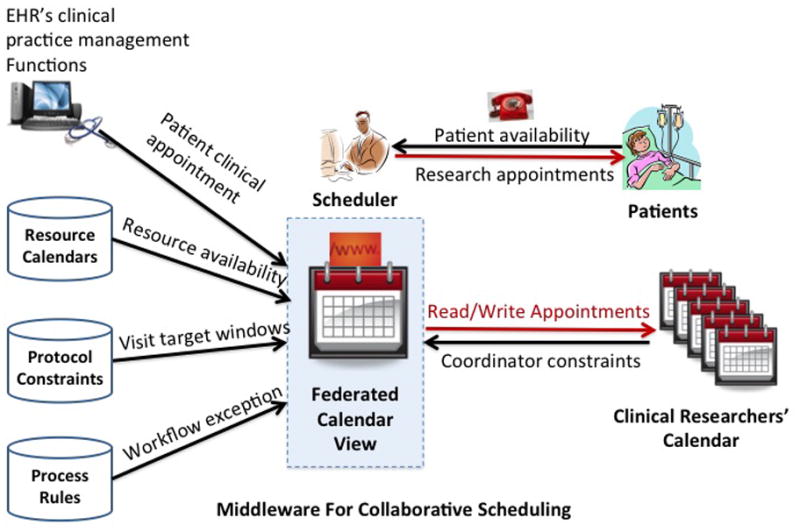

The Architecture and Functionality Modules

As shown in Figure 3, a middleware architecture was chosen to provide secure and portable decision support and to be interoperable with many commercial EHRs without interfering with their patient care functionality.

Figure 3.

The IMPACT architecture

IMPACT can (1) collect information about CRC schedules, research protocols’ visit calendars, and patient preferences; (2) recommend the optimal visit dates and times within protocol windows that satisfy all the requirements and avoid scheduling conflicts; (3) export new appointments to external calendars used by CRCs; (4) generate personalized reminders; and (5) facilitate collaboration among CRCs working on the same study by achieving group awareness. The design complies with institutional regulatory and patient privacy protection policies. Table 1 lists the primary functional modules of IMPACT.

Table 1.

The IMPACT Features with Graphical User Interfaces.

| User Interface | Description |
|---|---|
| User authentication | CRCs and clinical investigators have role-based access to IMPACT with individual user names and passwords. |
| Study configuration | A CRC enters the protocol schedule for research visits and defines study-specific patient statuses (e.g., prescreened, potentially eligible, ineligible, declined, withdrawn by the patient, and withdrawn by the PI). |
| Create or import patient list | A CRC imports a list of patients who are potentially eligible for a study from an EHR or enters a new patient, assigns a study ID, and assigns a default research status of “not prescreened” to all potentially eligible patients or research volunteers. |
| CRC calendar synchronization | IMPACT imports CRC availability from, or exports a CRC’s research schedule to, the CRC’s personal calendar. |
| Specifying patient preferences | A CRC enters into IMPACT the preferences of a patient for research visits (e.g., Monday afternoons). |
| Appointments visualization | A CRC can view or search the visits and constraints of one patient or all patients in one study of varying statuses in a day, week, or month view. |
| Insert/edit/delete a research visit | Following the IMPACT-recommended optimal visit dates and times based on the multi-user constraint satisfaction algorithm, a user can insert, edit, or cancel a research visit and its details (e.g., notes and tasks for visits). |
| Import clinical appointments for patients | IMPACT receives data feeds from EHRs’ clinical practice management system about patient and physicians’ clinical appointment schedules. |
| Collaboration and awareness | IMPACT provides standard groupware features, such as activity awareness and collaborative information sharing. |
| Reminders and notifications | A CRC receives reminders at preferred times in preferred ways before a participant is scheduled to visit. Reminders can be sent to CRCs about patients with unfinished tasks. |
| Workflow flexibility support | A CRC can configure study-specific workflow details to accommodate workflow variations for different types of clinical trials. |
| System auditability | All user activities are logged with details for timestamps for menu item selections, button clicks, and user identity. |

Table 2 lists the basic system-level IMPACT modules. These noninteractive modules run in the background. As a multi-user collaborative system, IMPACT employs standard concurrency control in its database access to account for multiple users, e.g., to prevent two CRCs from simultaneously editing the schedules of a particular research participant. In addition, IMPACT leverages experienced clinical researchers’ empirical knowledge of the clinical research workflow. For each visit, IMPACT selects dates that satisfy all constraints, ranks them chronologically, and then recommends the earliest date within the next research visit window. For example, if a CRC is available Monday, Wednesday, and Friday mornings, a patient prefers Fridays and has a clinical appointment on Friday, 4/20/2012, and the protocol window is sometime between 4/20/2012 and 4/27/2012, then the optimal time for a research visit is on the morning of 4/20/2012, the earliest time that satisfies all three constraints.
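The worked example in the preceding paragraph can be reproduced with a few lines of Python. This is only a minimal sketch, not the IMPACT implementation: the function name `recommend_visit_date` and its parameters are hypothetical, availability is modeled at the granularity of whole days (the "morning" constraint is ignored), and treating the clinical appointment as a hard requirement is an assumption made for illustration.

```python
from datetime import date, timedelta

MON, WED, FRI = 0, 2, 4  # values returned by date.weekday()


def recommend_visit_date(window_start, window_end, crc_weekdays,
                         patient_weekdays, clinical_appointments):
    """Return the earliest date in the protocol window that meets every constraint."""
    candidates = []
    day = window_start
    while day <= window_end:
        crc_ok = day.weekday() in crc_weekdays
        patient_ok = day.weekday() in patient_weekdays
        # Assumption: the research visit must share a day with a clinical visit.
        clinical_ok = day in clinical_appointments
        if crc_ok and patient_ok and clinical_ok:
            candidates.append(day)
        day += timedelta(days=1)
    # Days were visited in chronological order, so the first hit is the earliest.
    return candidates[0] if candidates else None


best = recommend_visit_date(
    window_start=date(2012, 4, 20),
    window_end=date(2012, 4, 27),
    crc_weekdays={MON, WED, FRI},
    patient_weekdays={FRI},
    clinical_appointments={date(2012, 4, 20)},
)
print(best)  # 2012-04-20
```

Returning `None` when no date qualifies leaves it to the caller to decide whether to relax a preference or to request a visit outside the protocol window.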

Table 2.

The Backend Modules of IMPACT.

| Module | Description |
|---|---|
| Read/write CRCs’ personal calendars in real time | Uses the iCalendar format (RFC 5545)[29] to exchange scheduling information with CRCs’ personal calendars, such as Outlook and Thunderbird. |
| Multi-user schedule optimization | Selects the optimal visit date and time by matching the protocol visit schedule with the availability of coordinators, patients, and physical resources. |

Scheduling Optimization Algorithm

IMPACT includes two algorithms to support multi-user constraint satisfaction and to optimize research resource allocation. Each day is divided into 96 discrete 15-minute intervals, e.g., 8:00am–8:15am. Each research visit may require a room and some equipment, and must satisfy the constraints of CRCs and patients. Each resource has its own availability calendar (dynamic) and utility attributes (static), e.g., some rooms may be equipped with ECG machines while others are not; also, some CRCs may be certified to perform cognitive evaluations while others are not. To search for the next optimal date and time slot for a research visit, IMPACT aligns the calendars of all users and resources and then uses this federated calendar to find time slots when all the research staff and resources required for the visit are available. The following principles are also used for schedule optimization: (1) first-come-first-served precedence when multiple CRCs compete for the same time slot; (2) maximal job satisfaction among CRCs; and (3) maximal diversity and flexibility. For example, if Monday and Wednesday are both acceptable, IMPACT picks Monday, which offers more flexibility if the visit must be rescheduled. If rooms A and B are both available but A has more functionalities or equipment, IMPACT picks room B, because room A is more likely than room B to be needed for a different participant’s visit or for the same participant’s next research visit.
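The slot-based search and the room preference described above can be illustrated as follows. This is a sketch under stated assumptions rather than the authors' algorithm: each calendar is modeled as a set of free 15-minute slot indices for a single day, and the helper names (`slot_index`, `free_slots`, `first_common_start`, `pick_room`) are hypothetical.

```python
SLOTS_PER_DAY = 96  # 24 hours x four 15-minute intervals


def slot_index(hour, minute):
    """Map a clock time to its 15-minute slot, e.g. 8:00 -> 32."""
    return hour * 4 + minute // 15


def free_slots(start, end):
    """Free slots from start (inclusive) to end (exclusive); both are (hour, minute)."""
    return set(range(slot_index(*start), slot_index(*end)))


def first_common_start(calendars, slots_needed):
    """Earliest slot where every calendar is free for slots_needed consecutive slots."""
    common = set.intersection(*calendars)  # the "federated" calendar
    for s in range(SLOTS_PER_DAY - slots_needed + 1):
        if all(s + k in common for k in range(slots_needed)):
            return s
    return None


def pick_room(rooms, required_equipment):
    """Among rooms that have the required equipment, prefer the least equipped one."""
    suitable = [(name, eq) for name, eq in rooms.items() if required_equipment <= eq]
    if not suitable:
        return None
    # Ties on equipment count are broken by room name to keep the choice deterministic.
    return min(suitable, key=lambda item: (len(item[1]), item[0]))[0]


crc = free_slots((8, 0), (12, 0))
patient = free_slots((9, 0), (17, 0))
room = free_slots((8, 30), (11, 0))

start = first_common_start([crc, patient, room], slots_needed=4)  # a one-hour visit
print(divmod(start * 15, 60))  # (9, 0), i.e. 9:00am

rooms = {"A": {"ECG", "scale"}, "B": {"scale"}}
print(pick_room(rooms, {"scale"}))  # B, which keeps the better-equipped room A free
```

Representing availability as sets keeps the intersection step trivial; a production system would also need to span multiple days and respect the first-come-first-served rule when two requests target the same slot.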

A Knowledge Base of Process and Policy Rules

Our design includes a knowledge base, contributed by the Participatory Design team, of configurable process rules that define user access to Protected Health Information (PHI) by clinical research staff. This knowledge base also allows workflow exceptions to account for special circumstances and specifies protocol-specific temporal constraints for clinical research visits (e.g., a two-hour randomization visit occurs <1 month after the screening visit). Separation of such rules from programming logic has two advantages: (1) it accommodates workflow variability in different research settings and (2) it allows for flexible modeling of policies without changing the software functionality modules. Frame-based knowledge representation[30] was selected for its strong expressiveness and ease of implementation as a relational database.

An example process rule is for sending reminders and notifications to CRCs regarding visit scheduling. Figure 4 shows the complex status transitions of a research visit. The initial status of a protocol-specified research visit is “to be scheduled”, which changes to “scheduled” after scheduling. This status can later change to “canceled” or “to occur soon” as the date for the visit gets close. Both situations require staff to be reminded in advance of the visit to either get prepared for the visit or to release the previously booked research resources (e.g., rooms or equipment). A “canceled” visit needs to be automatically flagged as “to be scheduled” to redraw the attention of the research staff and can later be “rescheduled”. IMPACT automates all of these visit status transitions.

Figure 4.

Status Transition for a Research Visit that is Automated by IMPACT.
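The status transitions described in the text can be expressed as a small state machine. The sketch below encodes only the transitions stated in the prose; because Figure 4 is not reproduced here, the handling of "rescheduled" (treated like "scheduled") and of "to occur soon" (treated as terminal) is an assumption, and the class and table names are hypothetical.

```python
# Allowed transitions; entries marked "assumption" are not spelled out in the text.
TRANSITIONS = {
    "to be scheduled": {"scheduled", "rescheduled"},
    "scheduled": {"canceled", "to occur soon"},
    "rescheduled": {"canceled", "to occur soon"},  # assumption: same as "scheduled"
    "canceled": {"to be scheduled"},
    "to occur soon": set(),                        # assumption: terminal in this sketch
}


class ResearchVisit(object):
    def __init__(self):
        self.status = "to be scheduled"
        self.history = [self.status]

    def move_to(self, new_status):
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError("illegal transition: %s -> %s" % (self.status, new_status))
        self.status = new_status
        self.history.append(new_status)
        if new_status == "canceled":
            # A canceled visit is automatically flagged as "to be scheduled" again.
            self.move_to("to be scheduled")


visit = ResearchVisit()
visit.move_to("scheduled")
visit.move_to("canceled")
visit.move_to("rescheduled")
print(visit.history)
```

Keeping the transition table as data rather than code mirrors the stated goal of separating process rules from programming logic.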

In our context, a reminder is an email sent to a CRC, his or her colleagues, or the patient, with necessary preparations for the upcoming visit. In contrast, a notification consists of an email sent to collaborative team members and a message displayed on the screen to achieve group awareness[31] and to update appointment-related activities. When scheduling a visit, it is important to explain the details of each visit to the patient regarding the location, time, contact person, the length of the visit, tasks to be completed at the visit, and special instructions the patient must follow before or after the visit. After the visit is scheduled, a follow-up phone call or letter to the patient is required to reinforce the study requirements and to further remind the patient of important details regarding the visit. CRCs also need to understand the patient’s personal schedule and to help identify research visit times that are convenient for the patient without violating protocol windows. Before each visit, research personnel should be reminded of all tasks that may need to be completed. If applicable, physician orders need to be completed and authorized for related lab tests; research lab kits and data collection forms should be prepared and available to the appropriate research personnel; forms for research activity billing purposes must be made available to related research staff; and any items required to respond to adverse events or patient withdrawal during visits should be in place if such events occur.

Our focus groups with clinical investigators and CRCs confirmed that CRCs need task-specific reminders or notifications, preferably sent automatically via email.[7] Our frame-based representation for reminders and notifications includes four attributes: timing, receiver, purpose, and message (Table 3). Of note, the same type of reminder can be sent multiple times. For example, a CRC can be reminded to call or notify a patient about an appointment immediately after the appointment is made and again one day prior to the visit. The CRCs in the focus groups requested notifications about appointments, edits, rescheduling, and cancellations by their colleagues, along with reminders to release reserved resources (e.g., rooms and equipment), because CRCs may forget to free the originally allocated time slot when an appointment is rescheduled or canceled. We have included these features in the IMPACT design to automate the generation of these reminders. These CRCs also requested that notifications be labeled with their urgency.

Table 3.

Types of e-Reminders (#) or e-Notifications (*) Requested by CRCs

| Timing | Receiver(s) | Purpose | Example Message |
|---|---|---|---|
| Before scheduling a visit (*) | Scheduler/ Research staff | To remind the research staff which patients need appointments | “patient Po called this morning to volunteer for the SPRINT trial, please prescreen and schedule him” |
| After scheduling a visit (*) | Research staff | To notify research personnel involved with the visit | “Linda added an appointment with you for patient Bob” |
| After a visit is canceled or modified (*) | Research staff | To notify research personnel involved with the visit about the changes in the schedule and to free research resources | “patient Hugo canceled her appointment with you tomorrow.” |
| Before a visit (#) | Patient | To remind patient of important tasks or cautions before a visit | “hi- your blood sugar test is tomorrow, please don’t eat breakfast before you come.” |
| Before a visit (#) | Research staff | To make ready the required research resources | “Please contact Dr. Bigger to get the ECG machine for room 103” |
| After a visit (#) | Scheduler/ Research staff | To avoid missing the protocol window for the next visit | “Please schedule the next visit within this time interval…” |
| After a visit (#) | Patient | To remind patient of important protocol-related alerts | “Please don’t exercise heavily within the next 3 days” |
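One way to picture the four-attribute frame behind Table 3 is as a small record type with fillable message slots. This is an illustrative sketch only: the class `MessageFrame`, the extra `kind` slot distinguishing reminders (#) from notifications (*), the placeholder names, and the `render` helper are all hypothetical and are not taken from the IMPACT knowledge base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MessageFrame:
    timing: str
    receiver: str
    purpose: str
    message: str            # template with named placeholders
    kind: str = "reminder"  # hypothetical extra slot: "reminder" (#) or "notification" (*)


FRAMES = [
    MessageFrame(
        timing="after scheduling a visit",
        receiver="research staff",
        purpose="To notify research personnel involved with the visit",
        message="{scheduler} added an appointment with you for patient {patient}",
        kind="notification",
    ),
    MessageFrame(
        timing="before a visit",
        receiver="research staff",
        purpose="To make ready the required research resources",
        message="Please contact {owner} to get the {equipment} for room {room}",
    ),
]


def render(frames, timing, **slots):
    """Fill the templates of every frame whose timing matches the triggering event."""
    return [(f.receiver, f.message.format(**slots)) for f in frames if f.timing == timing]


print(render(FRAMES, "after scheduling a visit", scheduler="Linda", patient="Bob"))
```

Because each frame is plain data, rows like these could be stored in a relational table and edited per study without touching the code that sends the messages.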

Requirements for Privacy and Security

The implementation of IMPACT faces three significant socio-technical barriers:[32] (1) patients are managed using the clinical practice management functions in EHRs, while patients participating in research are managed by protocols; (2) PCPs are covered entities,[33] permitted to access patient health information in EHRs as a health care provider, a health care plan, or a health care clearinghouse, whereas this information is often off-limits to clinical researchers; and (3) often there is a lack of communication between clinical and research teams because they have different job priorities and possibly different employers. Two significant design considerations of IMPACT are patient privacy protection and institutional regulatory compliance. We consulted bioethics experts and identified many controversial issues[32, 34] regarding the accessibility of patient information by researchers in EHRs. Different institutions or research settings (e.g., academic medical center vs. community-based research practice sites) may have different policies and procedures in place to address privacy concerns. Since there is no consistent practice in this area, we defined the following design principles for storing and displaying patient information in IMPACT. First, IMPACT uses a secure client-server architecture in which research participants’ PHI collected from EHRs is stored on an encrypted middleware server. IMPACT uses the Hypertext Transfer Protocol Secure (HTTPS) to exchange information between the server and the web browser. Second, IMPACT interoperates with only institutionally approved calendaring systems, such as Microsoft Outlook Exchange, which restricts PHI use within the institutional firewall. Third, an IMPACT web session times out after 5 minutes of idle time and requires an ID and password to re-enter the system.
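Since the prototype is built on Django, the idle timeout and HTTPS-only session handling described above could plausibly be configured with Django's standard session settings. The fragment below is a hypothetical `settings.py` excerpt, not the project's actual configuration; the setting names are Django's own, while the chosen values simply restate the 5-minute rule.

```python
# Hypothetical settings.py fragment (not taken from the IMPACT code base).

# Session lifetime in seconds: 5 minutes.
SESSION_COOKIE_AGE = 5 * 60

# Save the session on every request so the expiry is refreshed each time;
# the 5-minute limit then applies to idle time rather than total session length.
SESSION_SAVE_EVERY_REQUEST = True

# Only send the session cookie over HTTPS connections.
SESSION_COOKIE_SECURE = True

# Drop the session when the browser is closed.
SESSION_EXPIRE_AT_BROWSER_CLOSE = True
```

With these values an expired session is rejected and a view protected by a login requirement sends the user back to the login page, which matches the stated requirement to re-enter an ID and password.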

Requirements for System Interoperability

When introducing a new scheduling tool to busy clinical researchers, it is important to maximize information reuse and minimize redundant data entry. We identified the common systems in use by CRCs and their potential needs for exchanging information with IMPACT (Table 4). In addition, to improve the interoperability between IMPACT and a variety of scheduling software used by CRCs, we adopted the RFC standard 5545[29] format, called iCalendar, for our calendar. iCalendar is a computer file format created by the Internet Engineering Task Force that allows Internet users to send meeting requests and tasks to other Internet users via email, or to share files with an extension of .ics.[35] This format is supported by Outlook, Thunderbird, Apple’s iCal, and many other commercial scheduling software systems and is independent of the information transport protocol so that individual events can be sent via email or the entire calendar file can be shared or edited.

Table 4.

Interoperable systems with IMPACT

| System Type | When | Direction | Information Exchanged |
|---|---|---|---|
| Electronic Health Records (EHR) | Before or during visit scheduling | Import | Patient primary care provider information; patient health information for eligibility determination and safety monitoring (e.g., adverse drug reactions) |
| Clinical Practice Management Systems for EHR | During visit scheduling | Import | Appointment schedules with primary care providers |
| Clinical Trial Management System (CTMS) | During visit scheduling | Import | Protocol-specified visit windows |
| Clinical Trial Management System (CTMS) | After a visit | Export | Completed reimbursable research visits |
| Personal Calendars (e.g., Outlook) | During visit scheduling | Import; export | Availability of a CRC or a Principal Investigator |
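To make the iCalendar exchange concrete, the sketch below serializes a single research visit as an RFC 5545 `VEVENT` using only the standard library. It is an assumption-laden illustration, not IMPACT's exporter: the function `to_ics`, the `PRODID` and `UID` values, and the 9:00–10:00 time are hypothetical, the summary deliberately carries no patient identifiers, and text escaping and long-line folding required by the standard for arbitrary content are omitted.

```python
from datetime import datetime


def to_ics(uid, start, end, summary, stamp):
    """Serialize one event as a minimal iCalendar (RFC 5545) object."""
    fmt = "%Y%m%dT%H%M%S"
    lines = [
        "BEGIN:VCALENDAR",
        "VERSION:2.0",
        "PRODID:-//example//impact-sketch//EN",   # hypothetical product identifier
        "BEGIN:VEVENT",
        "UID:" + uid,
        "DTSTAMP:" + stamp.strftime(fmt) + "Z",   # DTSTAMP is expressed in UTC
        "DTSTART:" + start.strftime(fmt),          # no "Z": floating local time
        "DTEND:" + end.strftime(fmt),
        "SUMMARY:" + summary,
        "END:VEVENT",
        "END:VCALENDAR",
    ]
    # RFC 5545 content lines are terminated by CRLF.
    return "\r\n".join(lines) + "\r\n"


ics = to_ics(
    uid="visit-0001@impact.example",
    start=datetime(2012, 4, 20, 9, 0),
    end=datetime(2012, 4, 20, 10, 0),
    summary="Research visit",
    stamp=datetime(2012, 4, 1, 12, 0),
)
print(ics)
```

The resulting text can be saved with an `.ics` extension or attached to an email, which is what makes the format independent of the transport used.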

Graphical User Interface

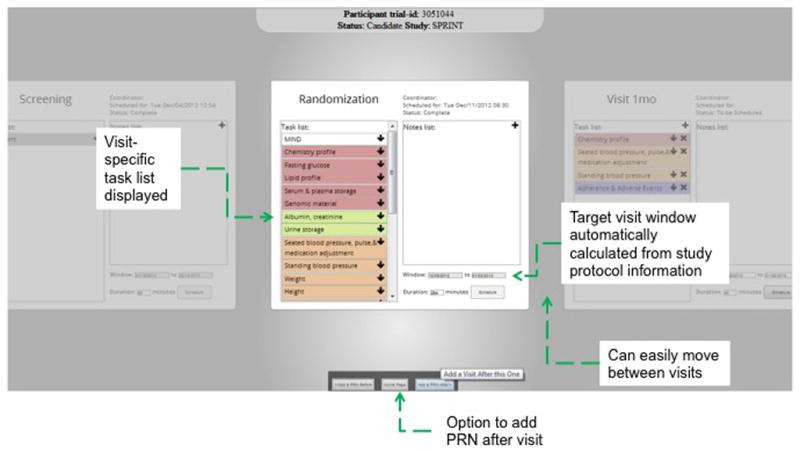

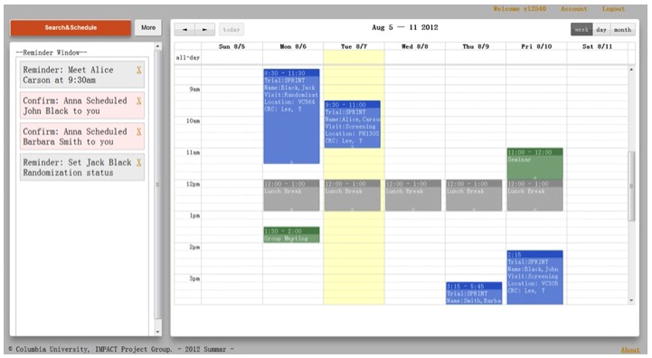

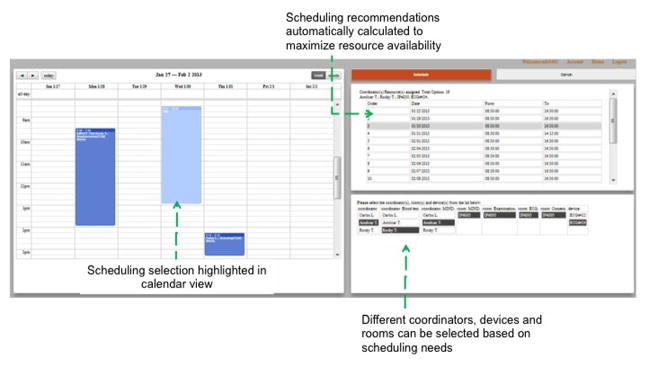

Since our participatory design methods generated many prototypes, our design has evolved over time. Figures 5–7 show the graphical user interface of the final IMPACT prototype for creating a research visit, selecting an optimal visit date and time recommended by IMPACT, and displaying all the appointments in a monthly view, respectively. The prototype was implemented using Python version 2.7 and its web framework Django.

Figure 5.

The IMPACT prototype screenshots for adding a research visit (e.g., a randomization visit). All protocol specified visits are ordered as flashcards so that a user can scroll left or right to see previous or future visits. The current visit is brighter than others. This screen displays the protocol window and its tasks and notes for the visit. The tasks and their affiliated resources can be edited. A user can click the button “Schedule” to trigger the multi-user constraint satisfaction algorithm to receive automatically recommended optimal dates and times.

Figure 7.

The IMPACT prototype screenshots for displaying the monthly calendar view after adding a research visit. The system added a visit on 8/10, 14:30–17:30 on the weekly view of the calendar. Different events have different colors. The Yellow lane indicates the current date. On the left, there is an awareness window providing background notifications about the group activities to CRCs.

4. Evaluation Results

Ten CRCs with varying levels of experience working in a variety of clinical research settings in an academic medical center were invited to participate in the scenario-based evaluation of the IMPACT prototype. Table 5 shows the evaluator characteristics.

Table 5.

Characteristics of the ten evaluators of IMPACT

| Evaluator | Specialty Area | Frequent Patient Type | Common Research Type | Domain Experience (Years) | Gender (F/M) |
|---|---|---|---|---|---|
| 1 | Cardiology | In-patient; Out-patient | Trials | >30 | M |
| 2 | Diabetes | In-patient; Out-patient | Observational Studies & Trials | 26 | F |
| 3 | Neurology- Critical Care | In-patient | Observational Studies & Trials | >20 | F |
| 4 | Nursing | In-patient | Observational | 12 | F |
| 5 | Emergency Care | In-patient; Out-patient | Observational Studies & Trials | 11 | F |
| 6 | Endocrine | Out-patient | Observational Studies & Trials | 11 | M |
| 7 | Cardiology | Out-patient | Observational Studies & Trials | 5 | F |
| 8 | Metabolism & Memory | In-patient; Out-patient | Trials | 5 | M |
| 9 | Pediatrics | In-patient; Out-patient | Observational Studies & Trials | 5 | M |
| 10 | Clinical Heart Failure | In-patient; Out-patient | Trials | >4 | M |

The qualitative data analysis confirmed seven common user needs: (1) access to the availability of multiple stakeholders and resources; (2) interoperability with other systems; (3) team work support; (4) email reminders for pending visits or tasks; (5) support of workflow exceptions; (6) regulatory compliance; and (7) patient privacy protection. Table 6 lists these themes and sample quotations from the participants.

Table 6.

The themes identified from the evaluation and example quotes

| Themes | Sample CRCs’ Quotes |
|---|---|
| Access to the availability of multiple stakeholders and resources | |
| Interoperability with other systems | |
| Collaboration and group awareness support | |
| Support of workflow exceptions | |
| Reminders for pending visits or tasks | |
| Regulatory compliance | |
| Patient privacy protection | |

The evaluators confirmed that the current processes for scheduling research visits involve multiple, time-consuming phone calls when trying to reach a patient to schedule the next research visit. The evaluators were pleased that IMPACT collects and synthesizes the information required for scheduling a visit. They were enthusiastic that IMPACT recommends optimal visit dates and times convenient for both CRCs and patients. Several evaluators stressed that IMPACT should communicate with CTMSs to avoid re-entering visit information for reimbursement purposes. Evaluators indicated the need to share calendars and responsibility for visit scheduling when several CRCs at a site work on the same study, so that they can easily see each other’s availability and activity updates. Providing such information to team members is a classic feature called “group awareness” in computer-supported collaborative work.[31]
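To make the idea of synthesizing availability concrete, the following is a minimal sketch of how free time slots for a CRC and a patient could be intersected to find slots long enough for a visit. It is an illustration only and is not IMPACT's actual algorithm: the function name `common_slots`, the interval representation, and the example times are all hypothetical assumptions.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# A free interval is a (start, end) pair; this representation is an assumption.
Interval = Tuple[datetime, datetime]


def common_slots(a: List[Interval], b: List[Interval],
                 min_length: timedelta) -> List[Interval]:
    """Intersect two sorted lists of non-overlapping free intervals and keep
    only the overlaps that are long enough to hold a visit."""
    result = []
    i = j = 0
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        # A negative or too-short overlap is skipped.
        if end - start >= min_length:
            result.append((start, end))
        # Advance whichever interval finishes first.
        if a[i][1] <= b[j][1]:
            i += 1
        else:
            j += 1
    return result


# Hypothetical availability on a single day.
crc_free = [(datetime(2012, 8, 10, 9, 0), datetime(2012, 8, 10, 12, 0)),
            (datetime(2012, 8, 10, 14, 0), datetime(2012, 8, 10, 18, 0))]
patient_free = [(datetime(2012, 8, 10, 11, 0), datetime(2012, 8, 10, 12, 30)),
                (datetime(2012, 8, 10, 14, 30), datetime(2012, 8, 10, 17, 30))]

slots = common_slots(crc_free, patient_free, timedelta(minutes=90))
for start, end in slots:
    print(start.strftime("%m/%d %H:%M"), "to", end.strftime("%H:%M"))
```

In this sketch the one-hour overlap in the morning is discarded because it is shorter than the assumed 90-minute visit, leaving only the afternoon slot. A real system would also need to account for rooms, equipment, and additional team members.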

Evaluators expressed the desire to receive support for workflow exceptions. For example, a CRC should be able to schedule a visit not dictated by the research protocol. These are called PRN (Latin pro re nata, “as needed”) visits that only occur when medically necessary. Moreover, evaluators stated that they sometimes need to schedule research visits outside normal working hours, such as early mornings and weekends, and that this need should be supported by a robust scheduling system.

When visits take longer than the allotted time, research participants may be unable to complete all tasks required by the protocol for a given research visit and thus another visit must be scheduled in order to complete them. CRCs also reported that as more incomplete visits occur, it becomes harder to keep track of them. Other requested features included a printing function and weekly view as the default calendar display setting.

All clinical research systems must have privacy and regulatory compliance features. Because evaluators expected to view protected health information (e.g., medical record numbers) on the IMPACT interface, they expressed concern about the security of the IMPACT communication protocol and confirmed the importance of using HTTPS. The interviews also yielded many ideas for improving the design of IMPACT, such as selecting the right granularity for the temporal knowledge representation of user constraints (i.e., questions 4, 5, and 6 in Section I of Appendix II). Table 7 excludes the answers to these questions and summarizes only the answers that relate specifically to the user-perceived usefulness and usability of IMPACT.

Table 7.

Collective responses to selected questions about IMPACT

| Questions for Evaluators | Collective Answers |
|---|---|
| (1) Do you currently use a tool that suggests a date or time for a research visit? | No (100%) |
| (2) What tools or resources do you use when scheduling a research visit? | |
| (3) How long does it take to schedule a research visit currently? | > 10 minutes including multiple phone calls (90%); “…it depends on how soon the patient answers your call or how many calls you need to make, etc.” (10%) |
| (10) Would it be faster to schedule a research visit with the assistance of IMPACT? | |
| (12) Will you use this tool when it is available? | |
| (13) What systems would you like the IMPACT tool to exchange information with (please specify what information to be exchanged with each system)? | |
| (15) In your team, who else might benefit from using this tool? | |
| (17) What features are missing from the current design? | Sharable and searchable notes for a visit or for a patient |

Most evaluators confirmed the usefulness of the IMPACT design. However, one evaluator believed that IMPACT would not decrease the time needed to schedule a research visit and commented,

“It depends on the patients…I do not believe this will shorten the time because we still need to do a lot of talking.”

Another evaluator noted that data incompleteness can be a complicating issue during scheduling by stating that:

“Good luck (with IMPACT)! The biggest problem is that no calendar captures complete information even when you can automatically collect information from all calendars. People just do not put all the information in their calendars. Only when you talk to them you may find out that some slots are already taken but the information is not reflected in the electronic or paper calendar.”

Therefore, incomplete information in individual calendars can be a barrier to the success of IMPACT. However, we are optimistic that a design such as IMPACT will motivate users to consolidate their appointments in a single source, such as a personal calendar, which would provide IMPACT with a complete set of scheduling information.

5. Discussion

Our scenario-based evaluation was effective in focusing evaluation efforts and in identifying the range of technical, human, organizational, and other factors that affect system success. Our evaluation results confirmed that there is a great need for IMPACT among CRCs. Although generic calendar systems such as Google, Outlook, and Zimbra support e-reminders and e-notifications, to the best of our knowledge they do not provide the protocol-specific scheduling functionalities that CRCs need for research visit scheduling. Based on our discussions with CRCs who have used Study Manager (Allscripts Corp, Chicago IL), OpenEMR, Velos, Inc., and other CTMSs for scheduling, none of these systems automatically synthesizes fragmented information about clinical appointments, protocol constraints, CRCs’ personal calendars, and patient preferences to facilitate research visit scheduling optimization.

We are unaware of any other system like IMPACT that simplifies the process of scheduling research visits for CRCs. Moreover, we are unaware of any system having any of the following functionalities: (1) programmatically reading from and writing to CRCs’ calendars; (2) automatically recommending the next optimal research visit window for a patient to CRCs; and (3) enabling easy scheduling of a research visit around an existing clinical appointment for a patient without manual information synthesis by CRCs. IMPACT automates information synthesis and multi-source temporal constraint satisfaction for CRCs through the use of multiple computational methods for computer-supported collaborative work and schedule optimization. IMPACT also allows patients to combine research visits and clinical visits in one trip. Without IMPACT, CRCs have to print a list of patients’ appointments, align them with the visit windows specified by research protocols, and manually calculate the dates for meeting patients when they come to see their doctors. We consider the automation of this manual alignment to be the greatest advantage of IMPACT over existing methods.
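The manual alignment described above, matching a patient's clinical appointments against a protocol visit window, can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the IMPACT implementation: the `VisitWindow` type, the `candidate_dates` function, the "target day plus or minus a tolerance" window model, and the example dates are all hypothetical.

```python
from datetime import date, timedelta
from typing import List, NamedTuple


class VisitWindow(NamedTuple):
    """Assumed window model: a target day offset from baseline, plus or minus a tolerance."""
    target_offset_days: int
    tolerance_days: int


def candidate_dates(baseline: date, window: VisitWindow,
                    clinical_appointments: List[date]) -> List[date]:
    """Return the clinical appointment dates that fall inside the visit window,
    ordered by distance from the protocol target date (ties: earlier date first)."""
    target = baseline + timedelta(days=window.target_offset_days)
    earliest = target - timedelta(days=window.tolerance_days)
    latest = target + timedelta(days=window.tolerance_days)
    in_window = [d for d in clinical_appointments if earliest <= d <= latest]
    return sorted(in_window, key=lambda d: (abs((d - target).days), d))


# Hypothetical example: a week-12 visit (day 84) with a 7-day tolerance.
baseline = date(2012, 5, 14)
appointments = [date(2012, 7, 20), date(2012, 8, 10),
                date(2012, 8, 3), date(2012, 9, 1)]
ranked = candidate_dates(baseline, VisitWindow(84, 7), appointments)
print(ranked)
```

Here the target date is 2012-08-06, so only the 8/3 and 8/10 appointments fall inside the window, and 8/3 ranks first because it is closer to the target. Ranking by closeness to the target is one possible notion of "optimal"; other criteria, such as CRC workload, could be substituted.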

We also realize that system interoperability with EHRs is essential for IMPACT to work in a real-world clinical setting. The increasing adoption of EHRs and smartphones is an important catalyst for making clinical appointments and CRC availability accessible in electronic format, which makes IMPACT feasible and efficient. Another necessary condition for the deployment of IMPACT is that the leadership of the clinical facilities or hospitals that own EHR data strongly supports clinical research and is willing to grant clinical researchers access to clinical information. Without these permissions, IMPACT will not be able to interoperate with CTMSs and EHRs. In addition, a role-based user access permission management mechanism is needed to ensure secure information sharing. Progress tracking for all participants and visits and timely reminders for due tasks are also needed. These will be part of our future work.

One limitation of the current IMPACT prototype is that it does not interact with patients directly yet. CRCs must enter patient preferences and availability. Allowing patients themselves to enter this information or importing it from personal health records would reduce the workload of CRCs but also may introduce new complexities that warrant investigation before commitment. These include patient-controlled protected health information access by non-covered entities (e.g., CRCs), information incompleteness detection and handling, and automated mechanisms for confirming new appointments with patients. Following the spiral model for software engineering [36], we believe it is safer to add considerations for patients and facilitate their interactions with CRCs in the system only after we successfully meet the needs of CRCs.

In this study, our evaluation has several limitations. First, it was a formative evaluation conducted in a laboratory setting for verifying software requirements, identifying software deficiencies, and assessing feature usefulness based on the feedback of participatory design users. This study had a small sample size: only 10 CRCs participated in our evaluation, though they represented considerable diversity in research setting, experience, and specialty. Also, the evaluation was limited to a set of predefined scenarios so that the evaluation session was brief and the results may not help us identify problems that may occur for long-time users. Therefore, readers should be cautiously optimistic about the potential of IMPACT. More formal evaluations are necessary to evaluate the usefulness, usability, and user acceptance of IMPACT and its benefit to clinical research staff in real work settings using validated instruments.

The two most widely used information system theories for evaluating technology and innovation adoption and acceptance are the Technology Acceptance Model (TAM) [37, 38] and the Unified Theory of Acceptance and Use of Technology (UTAUT) [39]; the latter is an extension of the former. On this basis, there is a large body of literature on adoption and acceptance behavior for health information technologies and innovations [40–44]. The key predictive constructs in TAM are user-perceived usefulness and user-perceived ease of use. Numerous validated evaluation instruments are based on these models. However, this study used our self-developed technology adoption and acceptance questionnaire (Appendix II) for two primary reasons. First, our focus was on software validation and verification in order to guide our continuous Participatory Design process. Since there were few equivalent technologies for coordinating workflows in clinical research and clinical care, we believe our informal and “internal” evaluation was necessary to assess whether we had correctly developed our novel concept into a useful software prototype before we could assess its adoption and impact on clinical research with a larger group of users in real-world settings. Therefore, we chose to ask specific questions pertinent to the design of IMPACT rather than the generic questions available in existing validated instruments. Second, our instrument was informed by the TAM theoretical model but altered the wording of questions to fit the study context. We adapted TAM and broke down the “user perceived usefulness” and “user perceived ease of use” measurements into fine-grained, concrete design questions that can be answered in the context of IMPACT, specifically questions 1, 2, 3, 10, 12, 13, 15, and 17 in Appendix II.

6. Conclusions

This paper contributes a unique design, IMPACT, to relieve busy clinical research staff from time-consuming tasks during research visit scheduling. The Participatory Design process revealed unmet needs of clinical research coordinators, and IMPACT provides unique design features to meet these needs. On this basis, IMPACT leverages the increasing availability of EHR infrastructure for clinical research. Our scenario-based evaluation confirmed IMPACT’s potential usefulness in a variety of clinical research settings. IMPACT represents a new model for clinical research decision support that interoperates with EHRs and hence can potentially help achieve learning health systems. We plan to make this software available as an open source tool.

One of our ongoing longitudinal evaluation studies is using mixed methods, combining time-motion analysis, software log analysis, qualitative studies via observations and interviews, and quantitative studies via surveys, to rigorously assess the user perceived usefulness, ease of use, and behavioral control, as guided by the TAM model, for IMPACT at the following three conceptual levels: (1) human-computer interaction design, (2) impact on the team work efficiency, and (3) the socio-technical factors that may affect clinical research coordinators’ perception of the usefulness and usability of IMPACT. We will report these results in the near future.

Figure 6.

The IMPACT prototype screenshots for recommending optimal visit dates and times. After a user clicks the button “Schedule” on the previous screen, this screen will be displayed showing all automatically recommended dates. The grey row indicates a highlighted datetime under consideration. A user can select a datetime from the recommended list, and available CRCs, equipment, and rooms in the lower panel, and click the “Book” button to pick this datetime.

Acknowledgments

Funding: This research was supported by grants R01LM009886 and R01LM010815 from the National Library of Medicine, R01HS019853 from the Agency for Healthcare Research and Quality, and UL1 TR000040 from the National Center for Advancing Translational Sciences.

We thank the following clinical research coordinators for contributing their valuable time to participate in our scenario-based user evaluation: Mary Beth Marks, Noeleen Ostapkovich, Paul Gonzalez, Stephen Helmke, Michelle Mota, Sunmoo Yoon, Juan Cordero, Rafi Cabral, Yohaimi Cosme, and Patricia Kringas. We thank Dr. Victor Grann and Ms. Maxine Ashby-Thompson for joining our evaluation team and for providing feedback to the design of IMPACT.

Footnotes

Competing Interests: None.

References

- 1. Sung NS, Crowley WF, Jr, Genel M, et al. Central Challenges Facing the National Clinical Research Enterprise. JAMA. 2003;289(10):1278–87. doi: 10.1001/jama.289.10.1278.

- 2. Kush DRD. Transforming Medical Research with Electronic Health Records. iHealth Connections. 2011;1(1):16–20.

- 3. Friedman CP, Wong AK, Blumenthal D. Achieving a Nationwide Learning Health System. Science Translational Medicine. 2010;2(57):57cm29. doi: 10.1126/scitranslmed.3001456.

- 4. Blumenthal D. Stimulating the Adoption of Health Information Technology. N Engl J Med. 2009:1477–9. doi: 10.1056/NEJMp0901592.

- 5. Mitka M. US Government Kicks Off Program for Comparative Effectiveness Research. JAMA. 2010;304(20):2230–2231. doi: 10.1001/jama.2010.1663.

- 6. DesRoches CM, Campbell EG, Rao SR, et al. Electronic Health Records in Ambulatory Care -- A National Survey of Physicians. N Engl J Med. 2008;359(1):50–60. doi: 10.1056/NEJMsa0802005.

- 7. Stead WW, Lin HS, editors. Computational Technology for Effective Health Care: Immediate Steps and Strategic Directions. National Research Council; 2009.

- 8. Khan SA, Kukafka R, Bigger JT, Johnson SB. Re-engineering Opportunities in Clinical Research using Workflow Analysis in Community Practice Settings. AMIA Annu Symp Proc. 2008:363–7.

- 9. HITSP. Use of Electronic Health Records in Clinical Research: Core Research Data Element Exchange (Draft Detailed Use Case, March 06, 2009) 2009 Sep 26;2009 Available from: http://publicaa.ansi.org/sites/apdl/EHRClinicalResearch/Forms/AllItems.aspx.

- 10. EHR/CR: EHR for Clinical Research. 2011 Oct 2; Available from: http://www.eclinicalforum.org/ehrcrproject/Home/tabid/344/language/en-US/Default.aspx.

- 11. Retrieve Form for Data Capture. 2011 Oct 4; Available from: http://wiki.ihe.net/index.php?title=Retrieve_Form_for_Data_Capture.

- 12. PACeR (Partnership to advance clinical electronic research) 2011 Sep 30; Available from: http://pacerhealth.org/

- 13. A Taxonomy of Secondary Uses and Re-uses of Healthcare Data. American Medical Informatics Association.

- 14. Alving B. The NIH Roadmap for Reengineering Clinical Research. 2006 Apr 16;2012 Available from: http://www.ncrr.nih.gov/research_infrastructure/workshop/CR-Roadmap_RCMI2006.pdf.

- 15. Khan SA, Payne PRO, Johnson SB, Bigger JT, Kukafka R. Modeling clinical trials workflow in community practice settings. AMIA Annu Symp Proc. 2006:419–23.

- 16. Khan S, Kukafka R, Payne P, Bigger J, Johnson S. A day in the life of a clinical research coordinator: observations from community practice settings. Stud Health Technol Inform. 2007;129(Pt 1):247–51.

- 17. Mosis G, Dieleman JP, Stricker BC, van der Lei J, Sturkenboom MCJM. A randomized database study in general practice yielded quality data but patient recruitment in routine consultation was not practical. J Clin Epidemiol. 2006;59(5):497–502. doi: 10.1016/j.jclinepi.2005.11.007.

- 18. Institute of Medicine, Working Group Participants draw from the Clinical Effectiveness Research Innovation Collaborative of the IOM Roundtable on Value & Science-Driven Health Care. The Common Rule and Continuous Improvement in Health Care: A Learning Health System Perspective. 2011 Oct.

- 19. Institute of Medicine. Beyond the HIPAA privacy rule: enhancing privacy, improving health through research. Washington DC: 2009.

- 20. Califf RM. Clinical Research Sites—The Underappreciated Component of the Clinical Research System. JAMA: The Journal of the American Medical Association. 2009;302(18):2025–2027. doi: 10.1001/jama.2009.1655.

- 21. Bakken S, Lantigua RA, Busacca LV, Bigger JT. Barriers, Enablers, and Incentives for Research Participation: A Report from the Ambulatory Care Research Network (ACRN) J Am Board Fam Med. 2009;22(4):436–445. doi: 10.3122/jabfm.2009.04.090017.

- 22. Olsen L, Aisner D, McGinnis J. Roundtable on evidence-based medicine, institute of medicine: learning healthcare system: workshop summary; Washington DC. 2007.

- 23. Conway PH, Clancy C. Transformation of Health Care at the Front Line. JAMA. 2009;301(7):763–765. doi: 10.1001/jama.2009.103.

- 24. Weng C, Batres C, Borda T, et al. A Real-Time Patient Identification Alert Improves Patient Recruitment Efficiency. AMIA Annual Symp Proc. 2011:1489–1493.

- 25. Ehn P. Scandinavian design: On participation and skill. In: Namioka DSaA., editor. Participatory Design: Principles and Practices. Lawrence Erlbaum Associates; Hillsdale, New Jersey: 1993. pp. 41–77.

- 26. Haynes SR, Purao S, Skattebo AL. Situating evaluation in scenarios of use. Proceedings of the 2004 ACM conference on Computer supported cooperative work; Chicago, Illinois, USA: ACM; 2004. pp. 92–101.

- 27. Damianos L, Drury J, Fanderclai T, et al. CHI ‘00 extended abstracts on Human factors in computing systems. ACM; The Hague, The Netherlands: 2000. Scenario-based evaluation of loosely-integrated collaborative systems; pp. 127–128.

- 28. Lewis CH. Technical report IBM RC-9265. 1982. Using the “Thinking Aloud” Method In Cognitive Interface Design.

- 29. iCalendar. 2012 Jul 7; Available from: http://tools.ietf.org/html/rfc5545.

- 30. Steels L. MIT Artificial Intelligence Laboratory Working Papers, WP-170. 1978. Frame-based Knowledge Representation.

- 31. Paul D, Victoria B. Awareness and coordination in shared workspaces. Proceedings of the 1992 ACM conference on Computer-supported cooperative work; Toronto, Ontario, Canada: ACM; 1992.

- 32. Weng C, Appelbaum P, Hripcsak G, et al. Using EHRs to Integrate Research with Patient Care: Promises and Challenges. Journal of American Medical Informatics Association. 2012;19(5):684–687. doi: 10.1136/amiajnl-2012-000878.

- 33. HHS. Covered Entities. 2012 Jun 27; Available from: http://www.hhs.gov/ocr/privacy/hipaa/understanding/coveredentities/index.html.

- 34. Kahn MG, Kaplan D, Sokol RJ, DiLaura RP. Configuration Challenges: Implementing Translational Research Policies in Electronic Medical Records. Academic Medicine. 2007;82(7):661–669. doi: 10.1097/ACM.0b013e318065be8d.

- 35. Wikipedia definition for iCalendar. 2012 Jul 7; Available from: http://en.wikipedia.org/wiki/ICalendar.

- 36. Boehm B. A Spiral Model of Software Development and Enhancement. ACM SIGSOFT Software Engineering Notes, “ACM”. 1986;11(4):14–24.

- 37. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13(3):319–340.

- 38. Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: A comparison of two theoretical models. Management Science. 1989;35:982–1003.

- 39. Venkatesh V, Morris MG, Davis GB, Davis FD. User Acceptance of Information Technology: Toward a Unified View. MIS Quarterly. 2003;27(3):425–478.

- 40. Zheng K, PR, Johnson MP, et al. Evaluation of healthcare IT applications: the user acceptance perspective. In: KJ, editor. Studies in Computational Intelligence. Vol. 65. Heidelberg, Germany: Springer Berlin; 2007. pp. 49–78.

- 41. Zheng K, Fear K, Chaffee BW, et al. Development and validation of a survey instrument for assessing prescribers’ perception of computerized drug–drug interaction alerts. Journal of the American Medical Informatics Association. 2011;18(Suppl 1):i51–i61. doi: 10.1136/amiajnl-2010-000053.

- 42. Koppel R, Kreda D. Healthcare IT usability and suitability for clinical needs: challenges of design, workflow, and contractual relations. Stud Health Technol Inform. 2010;(157):7–14.

- 43. Harrison MI, Koppel R, Bar-Lev S. Unintended Consequences of Information Technologies in Health Care—An Interactive Sociotechnical Analysis. Journal of the American Medical Informatics Association. 2007;14(5):542–549. doi: 10.1197/jamia.M2384.

- 44. Sittig D, Krall M, Dykstra R, Russell A, Chin H. A survey of factors affecting clinician acceptance of clinical decision support. BMC Medical Informatics and Decision Making. 2006;6(1):6. doi: 10.1186/1472-6947-6-6.