Abstract

Very little is known about the neural underpinnings of language learning across the lifespan and how these might be modified by maturational and experiential factors. Building on behavioral research highlighting the importance of early word segmentation (i.e. the detection of word boundaries in continuous speech) for subsequent language learning, here we characterize developmental changes in brain activity as this process occurs online, using data collected in a mixed cross-sectional and longitudinal design. One hundred and fifty-six participants, ranging from age 5 to adulthood, underwent functional magnetic resonance imaging (fMRI) while listening to three novel streams of continuous speech, which contained either strong statistical regularities, strong statistical regularities and speech cues, or weak statistical regularities providing minimal cues to word boundaries. All age groups displayed significant signal increases over time in temporal cortices for the streams with high statistical regularities; however, we observed a significant right-to-left shift in the laterality of these learning-related increases with age. Interestingly, only the 5- to 10-year-old children displayed significant signal increases for the stream with low statistical regularities, suggesting an age-related decrease in sensitivity to more subtle statistical cues. Further, in a sample of 78 10-year-olds, we examined the impact of proficiency in a second language and level of pubertal development on learning-related signal increases, showing that the brain regions involved in language learning are influenced by both experiential and maturational factors.

Introduction

Language is fundamental to human life. Not surprisingly, considerable research effort has been devoted to investigating how language is acquired and, more recently, how the brain accomplishes this feat. Further, there has been much debate about how the neural systems for language might be modified by biological maturation and experience (see Elman, Bates, Johnson, Karmiloff-Smith, Parisi & Plunkett, 1997, for an extended discussion). Cleverly designed behavioral studies with infants have revealed that language acquisition relies heavily on the computational power of the brain, as patterns are extracted from a number of probabilistic cues available in the input (see Kuhl, 2004, for a review). This statistical learning has been shown to underlie many different aspects of language learning from the level of phonemes to grammar (e.g. Kuhl, Stevens, Hayashi, Deguchi, Kiritani & Iverson, 2006; Maye, Werker & Gerken, 2002; Opitz & Friederici, 2003, 2004; Saffran, Aslin & Newport, 1996a; Smith & Yu, 2007; Wonnacott, Newport & Tanenhaus, 2008). While there is evidence that the language learning process is accompanied by changes in brain function and structure in adults (e.g. Callan, Tajima, Callan, Kubo, Masaki & Akahane-Yamada, 2003; Callan, Callan & Masaki, 2005; Friederici, Steinhauer & Pfeifer, 2002; Golestani & Zatorre, 2004; Hashimoto & Sakai, 2004; McNealy, Mazziotta & Dapretto, 2006; Newman-Norlund, Frey, Petitto & Grafton, 2006; Opitz & Friederici, 2003, 2004; Thiel, Shanks, Henson & Dolan, 2003), neuroimaging studies have only just begun to examine developmental changes in the neural basis of language learning (McNealy, Mazziotta & Dapretto, 2010).

One of the key initial components of language learning is the identification of word boundaries in continuous speech, as there are actually no breaks or pauses between words that reliably indicate where one word ends and the next begins (e.g. Cole & Jakimik, 1980). This process of word segmentation must take place before any further linguistic analysis can be performed (e.g. Jusczyk, 2002; Saffran & Wilson, 2003). To parse a continuous stream of speech, 8-month-old infants have been shown to compute information about the distributional frequency with which certain syllables occur in relation to others and calculate the odds (transitional probabilities) that one syllable will follow another (e.g. Aslin, Saffran & Newport, 1998; Saffran et al., 1996a). In addition to these statistical regularities, speech cues such as stress patterns (i.e. longer duration, increased amplitude, and higher pitch on certain syllables) can also serve as markers for word boundaries (Johnson & Jusczyk, 2001; Thiessen & Saffran, 2003; Johnson & Seidl, 2009). The use of stress cues for word segmentation is influenced by how reliably such cues predict word onset, with a more protracted course of development for their use in languages, such as Dutch and French, in which stress patterns are not as reliable a cue for word onset as they are in English (e.g. Nazzi, Iakimova, Bertoncini, Fredonie & Alcantara, 2006; Kooijman, Hagoort & Cutler, 2009).

Importantly, the ability to discriminate words from fluent speech in infancy has been linked to higher vocabulary scores at 2 years of age and better overall language skills in preschool (Newman, Ratner, Jusczyk, Jusczyk & Dow, 2006). Furthermore, evidence of a direct connection between speech parsing and learning new word meanings comes from a recent study showing that 17-month-old babies’ learning of object labels is significantly facilitated by prior experience with segmenting a continuous speech stream that contained the novel object names as words (Graf Estes, Evans, Alibali & Saffran, 2007).

Neuroimaging studies of word segmentation

Given that word segmentation is a crucial component of the language learning process, we previously adapted a behavioral word segmentation paradigm used in infant studies to explore how the adult brain processes statistical and prosodic cues in order to identify word boundaries within continuous speech (McNealy et al., 2006). Prior behavioral and ERP studies in adults suggest that the process of word segmentation is occurring during initial language learning at all ages (e.g. Saffran, Newport & Aslin, 1996b; Sanders, Newport & Neville, 2002). In order to understand how the word segmentation process changes over time and what implications that might have for language learning at different ages, we began to characterize developmental changes in the neural basis of speech parsing by comparing the adult data to those collected in a sample of 10-year-old children (McNealy et al., 2010), who were still under the age when significant decrements in the ability to fully master the phonology and syntax of a new language are typically observed (e.g. see Johnson & Newport, 1989; Elman et al., 1997; Flege, Yeni-Komshian & Liu, 1999; Piske, MacKay & Flege, 2001; Weber-Fox & Neville, 2001). While undergoing fMRI, participants listened to three distinct streams of concatenated syllables, containing either strong statistical cues to word boundaries, strong statistical and speech cues, or weak statistical regularities that did not readily provide cues to word boundaries (see Figure 1). Both children and adults displayed significant signal increases over time (i.e. as a function of exposure) in temporal and inferior parietal regions when they listened to the artificial language streams containing high statistical regularities, a finding which suggests that they were computing the statistical relationships between syllables online while attempting to segment these streams (McNealy et al., 2006, 2010). 
While these signal increases were bilaterally distributed in children, they were left-lateralized in adults. Only the 10-year-old children, however, also displayed signal increases over time in temporal regions when they listened to the stream where there was low frequency of co-occurrence between syllables and, hence, minimal cues to word boundaries, indicating that children might be more sensitive to smaller statistical regularities than adults (McNealy et al., 2010).

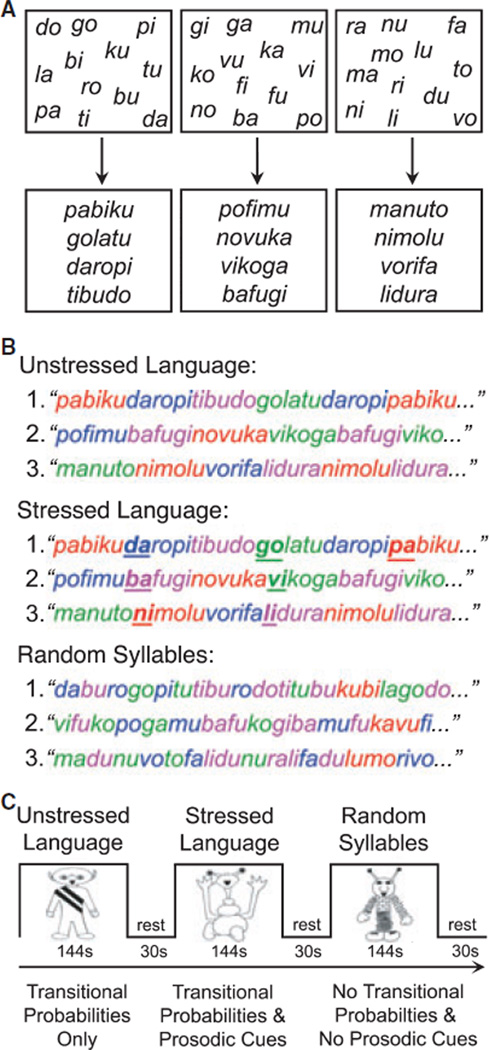

Figure 1.

In the Speech Stream Exposure Task, three different sets of 12 syllables were used to create three sets of four words (A). The Unstressed and Stressed Languages were formed by concatenating these words to form two artificial languages, whereas the Random Syllables stream was formed by pseudorandomly concatenating individual syllables (B). Participants listened to three counterbalanced speech streams (C) containing statistical regularities (Unstressed Language), statistical regularities and prosodic cues (Stressed Language), and no cues to guide word segmentation (Random Syllables). This figure was previously printed in McNealy et al. (2006) and McNealy et al. (2010).

Importantly, while both 10-year-old children and adults performed at chance on a behavioral post-scan test of identification of ‘words’ from the speech streams, there was considerable intersubject variability such that we observed a positive correlation in both groups between accuracy scores and neural activity in left superior temporal gyrus associated with listening to the speech streams with strong statistical regularities. This link between performance and brain activity suggests that the observed signal changes during exposure to the speech streams with high statistical regularities and speech cues indeed reflect statistical learning and implicit word segmentation, a conclusion corroborated by the results of a second fMRI scan that immediately followed the exposure to the speech streams. In that scan, participants listened to trisyllabic combinations that occurred with different frequencies in the streams of speech they just heard. In both adults and children, reliably greater activity in left inferior frontal gyrus was observed when they listened to ‘words’ that had occurred 45 times in the speech streams compared to ‘nonwords’ that had occurred only once in the speech streams (McNealy et al., 2006, 2010). These findings support the notion that implicit speech parsing had taken place for both groups, although this differential activity was more pronounced for the adults.

Our previous imaging studies of word segmentation built upon existing behavioral research, which critically revealed the importance of statistical computations for sequence learning and language acquisition. They allowed us to identify some developmental changes in the neural mechanisms underlying speech parsing, even though the behavioral performance of both children and adults was at chance, a finding consistent with prior behavioral studies in which prolonged exposure or explicit training were not provided (Saffran et al., 1996b; Sanders et al., 2002). Our previously observed developmental differences in the laterality of signal increases as a function of exposure to the speech streams with high statistical regularities, as well as our finding that 10-year-old children, but not adults, exhibited signal increases while listening to the speech stream with low statistical regularities, provide initial evidence that the neural correlates of early language learning undergo significant changes from late childhood to adulthood.

Study aims and hypotheses

In the present study we utilized the same fMRI speech stream exposure paradigm to address two new questions. The first goal was to further examine how the brain processes statistical and prosodic cues across a wider age span. In order to characterize developmental changes in the neural architecture underlying speech parsing, we gathered data from a younger sample of 6-year-old children and a larger sample of 78 10-year-old children, 39 of whom were followed longitudinally and studied again at age 13. We expected that children at all ages would display signal increases over time in temporal and inferior parietal regions as a function of exposure to the speech streams containing high statistical regularities and prosodic cues, as was observed in our prior samples of 10-year-olds and adults (McNealy et al., 2006, 2010). However, given that the signal increases in 10-year-old children were bilaterally distributed and those in adults were lateralized to the left hemisphere, we reasoned that we might observe further age-related differences in the laterality of signal increases over time in our younger sample of 6-year-old children, with greater involvement of right hemisphere temporal regions. We further hypothesized that we would observe developmental decreases with age in the degree of sensitivity to small statistical regularities because our previously studied 10-year-old children, but not adults, displayed signal increases during exposure to the speech stream where statistical cues to word boundaries were minimal (i.e. transitional probabilities between syllables averaged 0.1 in this speech stream as opposed to 0.76 in the streams with high statistical regularities). 
Accordingly, we expected that 6-year-olds, like the 10-year-olds, would exhibit increases during this condition; however, we also reasoned that we may not see such signal increases in 13-year-olds if indeed some of the changes in the ability to master a new language after puberty might be related to changes in how the brain computes statistical relationships between adjoining syllables.

The second goal of this study was to begin to disentangle the observed effects of age on neural activity during initial, implicit language learning from the effects of experiential and maturational factors. Specifically, we investigated whether neural activity during speech parsing is related to children’s level of proficiency in a second language and to their level of pubertal development in a large sample of 10-year-old children (N = 78). While research on the neural correlates of language in the bilingual brain has examined how proficiency level and age of acquisition impact the overlap between the representation of first and second languages (for a review, see Perani & Abutalebi, 2005), to our knowledge, no study has yet investigated how one’s experience with a second language might impact the neural architecture subserving online language learning. Thus, in our large sample of 10-year-old children who had a wide range of exposure to foreign languages, we examined the relationship between second language proficiency and learning-related signal increases during exposure to the artificial languages containing strong statistical regularities and speech cues to word boundaries. We reasoned that prior experience with learning another language might facilitate the learning process during exposure to the speech streams, as reflected by greater signal increases associated with higher proficiency levels and lower age of acquisition of a second language.

Finally, in light of evidence of a decline in the ability to learn a new language with native-like proficiency following puberty (e.g. Johnson & Newport, 1989; Elman et al., 1997; Flege et al., 1999; Piske et al., 2001; Weber-Fox & Neville, 2001), we examined how individual differences in pubertal maturation within our larger sample of 10-year-old children might impact neural activity during implicit word segmentation. We hypothesized that we might observe a positive relationship between level of pubertal development and brain activity in regions that are increasingly recruited during speech parsing as a function of age, and, conversely, a negative relationship between pubertal development and brain activity in regions that are recruited to a greater extent in younger children.

Materials and methods

Participants

Ninety-four typically developing children were recruited from the greater Los Angeles area via posted flyers, summer camps, and mass mailings. Sixteen children between the ages of 5 and 7 (‘6-year-olds’) participated in this study (six female; mean age, 6.87 years, range, 5.27–7.83 years). Data from four children were excluded due to average head motion greater than two millimeters, calculated as the average displacement across all voxels in all functional images relative to their mean position in millimeters (see Woods, 2003, for further details). Seventy-eight children between the ages of 9 and 10 (‘10-year-olds’) participated in this study (40 female; mean age, 10.07 years, range, 9.49–10.65 years), 39 of whom were followed longitudinally and studied again between 12 and 13 years of age (‘13-year-olds’; 19 female; mean age at timepoint 2, 12.98 years, range, 12.38–13.7 years; mean age at timepoint 1, 10.07 years, range, 9.49–10.57 years). Data from a sub-sample of 54 10-year-olds were previously reported in McNealy et al. (2010).

Written informed child assent and parental consent were obtained from all children and their parents, respectively, according to the guidelines set forth by the UCLA Institutional Review Board. Children’s parents filled out several questionnaires that assessed their child’s handedness, health history, and language background. All children were right-handed and had no history of significant medical, psychiatric, or neurological disorders on the basis of parental reports on a detailed medical questionnaire, the Child Behavior Checklist (Achenbach & Edelbrock, 1983), and a brief neurological exam (Quick Neurological Screening Test II; Mutti, Sterling, Martin & Spalding, 1998). All children had a full-scale IQ above 80, as assessed by the Wechsler Intelligence Scale for Children (WISC-III; Wechsler, 1991) in 10-year-old children and by the Wechsler Abbreviated Scale of Intelligence (WASI; Wechsler, 1999) in 6- and 13-year-old children.

Data from these samples of children were compared with those obtained from a sample of 27 neurotypical adults as previously reported (McNealy et al., 2006). By report, these adults (13 female; mean age, 26.63 years; range, 20–44 years) were right-handed, native English speakers with no history of neurological or psychiatric disorders.

fMRI task: speech stream exposure

This study used the exact same speech stream exposure task as described in McNealy and colleagues (2006) and McNealy and colleagues (2010). Children listened to three streams of nonsense speech, which were supposedly spoken by aliens from three different planets and which comprised the three experimental conditions (Unstressed Language stream, Stressed Language stream and Random Syllables stream) used in the single fMRI run. Children were not explicitly instructed to perform a task except to listen, in light of a study showing that implicit learning can be attenuated by explicit memory processes during sequence learning (Fletcher, Zafiris, Frith, Honey, Corlett, Zilles & Fink, 2004). As shown in Figure 1, the three streams were created by repeatedly concatenating 12 syllables. It is important to note that a different set of 12 syllables was used for each speech stream. In each of the two artificial language conditions, the 12 syllables were used to make four trisyllabic words, following the exact same procedure used in previous infant and adult behavioral studies (see Saffran et al., 1996a, 1996b; Aslin et al., 1998; Johnson & Jusczyk, 2001). Each syllable was recorded separately using SoundEdit, ensuring that the average syllable duration (0.267 sec), amplitude (75.8 dB), and pitch (221 Hz) were (1) not significantly different across the experimental conditions and (2) matched those previously used in the behavioral literature. For each artificial language, the four words were randomly repeated three times to form a block of 12 words, subject to the constraint that no word repeated twice in a row. Five such different blocks were created, and then this five-block sequence was itself concatenated three times to form a continuous speech stream lasting 2 min and 24 sec, during which each word occurred 45 times. 
For example, the four words ‘pabiku’, ‘tibudo’, ‘golatu’, and ‘daropi’ were combined to form a continuous stream of nonsense speech containing no breaks or pauses (e.g. pabikutibudogolatudaropitibudo…). Within the speech stream, transitional probabilities for syllables within a word and across word boundaries were 1 and 0.33, respectively. Thus, as the words were repeated, transitional probabilities could be computed and used to segment the speech stream. In the Unstressed Language condition (U), the speech stream contained only transitional probabilities as cues to word boundaries. In the Stressed Language condition (S), the speech stream contained transitional probabilities, as well as speech, or prosodic, cues introduced by adding stress to the initial syllable of each word, one third of the time it occurred. Stress was added by slightly increasing the duration (0.273 sec), amplitude (76.6 dB), and pitch (234 Hz) of these stressed syllables. At the same time, these small increases were offset by minor reductions in these parameters for the remaining syllables within the Stressed Language condition, which ensured that the mean duration, amplitude, and pitch would not be reliably different across the three experimental conditions. The initial syllable was stressed because 90% of words in conversational English have stress on their initial syllable (Cutler & Carter, 1987).
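The construction described above can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the procedure used to generate the actual stimuli: the function names, the rejection-sampling approach to avoiding immediate word repeats, and the arbitrary random seed are all assumptions, and the sketch does not explicitly check that the five blocks differ from one another. It builds one stream from the four example words and estimates transitional probabilities from syllable pair counts.

```python
import random
from collections import Counter

WORDS = ["pabiku", "tibudo", "golatu", "daropi"]  # example words from the text
SYLLABLE_SEC = 0.267  # average syllable duration reported in the text


def syllabify(word):
    """Split a six-letter CVCVCV word into three two-letter syllables."""
    return [word[i:i + 2] for i in range(0, len(word), 2)]


def make_block(words, rng, prev=None, reps=3):
    """Shuffle each word `reps` times; reject orders with immediate repeats.

    Rejection sampling is an assumption made for this sketch."""
    while True:
        block = list(words) * reps
        rng.shuffle(block)
        chain = ([prev] if prev is not None else []) + block
        if all(a != b for a, b in zip(chain, chain[1:])):
            return block


def make_stream(words, rng, n_blocks=5, n_passes=3):
    """Concatenate five blocks, then repeat that sequence three times."""
    while True:
        seq = []
        for _ in range(n_blocks):
            seq += make_block(words, rng, prev=seq[-1] if seq else None)
        if seq[-1] != seq[0]:  # no repeat where the sequence loops onto itself
            break
    word_order = seq * n_passes
    syllables = [s for w in word_order for s in syllabify(w)]
    return word_order, syllables


def transitional_probabilities(syllables):
    """TP(a -> b) = count(a followed by b) / count(a followed by anything)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {(a, b): n / first_counts[a] for (a, b), n in pair_counts.items()}


rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
word_order, syllables = make_stream(WORDS, rng)
tps = transitional_probabilities(syllables)

print(Counter(word_order))             # occurrences of each word
print(len(syllables) * SYLLABLE_SEC)   # approximate stream duration in seconds
print(tps[("pa", "bi")])               # a within-word transition
print({k: round(v, 2) for k, v in tps.items() if k[0] == "ku"})  # across a word boundary
```

Within-word transitions are deterministic because each syllable belongs to only one word, whereas the transitions out of a word-final syllable are split among the three other words, so they sum to 1 and average about one third in any given random ordering.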

A Random Syllables condition (R) was also created so as to facilitate a comparison of activity associated with processing input with high statistical and prosodic cues to word boundaries, as in the two artificial language conditions (U+S), and activity related to listening to a series of concatenated syllables that cannot be readily parsed into trisyllabic words. In this condition, the 12 syllables were not arranged into four words as in the two artificial language conditions; rather, these syllables were arranged pseudorandomly such that no three-syllable string was repeated more than twice in the stream (the frequency with which two-syllable strings occurred was also minimized). Therefore, in this condition, the statistical likelihood of any one syllable following another was very low (with an average transitional probability between syllables in the stream of 0.1; range 0.02–0.22). While transitional probabilities between syllables in this stream may still be computed, minimal statistical cues and no prosodic cues were afforded to the listener to aid speech parsing.

As depicted in Figure 1, each child listened to three 144-second speech streams (R, U, and S) interspersed between 30 seconds of resting baseline. A different set of syllables was used in each of the three speech streams presented to a given child, such that the statistical regularities present in one stream would not influence the computation of statistical regularities in another. Short samples of these speech streams are available in Supporting Information. The order of presentation of the three experimental conditions was counterbalanced across children according to a Latin Square design.
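As an illustration of the counterbalancing, the following sketch generates a cyclic 3 × 3 Latin square over the three conditions and assigns presentation orders by participant index. The specific square and the modulo-based assignment are assumptions made for this example; the text states only that a Latin Square design was used.

```python
CONDITIONS = ["R", "U", "S"]  # Random Syllables, Unstressed, Stressed


def latin_square(items):
    """Cyclic Latin square: each item appears once per row and once per column."""
    n = len(items)
    return [[items[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for(child_index, square):
    """Hypothetical assignment rule: cycle through the rows of the square."""
    return square[child_index % len(square)]


square = latin_square(CONDITIONS)
for child in range(6):
    print(child, order_for(child, square))
```

With this rule every condition occupies each serial position equally often whenever the number of children in a group is a multiple of three.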

It is important to note that: (1) the same number of syllables was repeated the same number of times across all three conditions, although it was only in the two artificial language conditions that strong cues were available to guide word segmentation; (2) across children in each age group, each set of 12 syllables was used with the same frequency in each condition, thus ensuring that any difference between conditions would not be due to different degrees of familiarity with a given set of syllables; (3) to guard against the possibility that the computation of the transitional probabilities between the syllables chosen to form the words in the two artificial languages might be influenced by prior experience with the transitional probabilities between these syllables in English, three different versions of each language were created by rotating the position of the syllables within the words of each language (e.g. ‘pabiku’ in one version became ‘kubipa’ and ‘bipaku’ in the others); (4) the three different versions of each language were assigned as a between-subjects manipulation such that one-third of the participants listened to each version; (5) to ensure that there would not be transfer of learning between conditions due to the order in which the resulting 180 words were combined to create the speech streams (four words each occurring 45 times), different word orders were created for each set of syllables; and (6) while the length of the activation blocks used in this task was unconventional for an imaging study and could have resulted in decreased power to detect reliable differences between conditions, we opted to adhere to the paradigm used in previous behavioral studies in light of our previous word segmentation fMRI study in adults demonstrating that sufficient power was nevertheless achieved (McNealy et al., 2006).

Importantly, the children who were scanned longitudinally at age 13 received a different version of the languages they heard when they were scanned at age 10, such that, for example, if a child heard the speech stream where ‘pabiku’ was a word at age 10, at age 13, s/he heard an alternative version of the speech stream where ‘kubipa’ was a word. Thus, we ensured that any differences in brain activity across time points were not attributable to learning and consolidation of the speech streams between visits.

Finally, it is worth mentioning that this design does not allow for the distinction to be made as to whether the participants are calculating transitional probabilities or frequency of co-occurrence between adjacent syllables; however, the results of a previous behavioral study designed precisely to discern which type of statistical computations learners perform on this input have indicated that learners do indeed track transitional probabilities (Aslin et al., 1998). Further, this study did not directly examine whether participants extracted wordlike units from the speech streams. However, the findings of Graf Estes and colleagues (2007) have demonstrated that the ultimate outcome of tracking statistical regularities in the input is successful word segmentation. Hence, while we refer throughout this manuscript to implicit word segmentation, we acknowledge that the listeners’ degree of learning, particularly after the short period of exposure to the two and a half minute speech streams, may not have proceeded as far as to extract word-like units.

Behavioral tasks

Word discrimination test

A post-test was given outside the scanner to investigate whether children were able to explicitly discriminate between words and partwords (i.e. trisyllabic sets of syllables spanning word boundaries) from the speech streams, and behavioral measures (response times and accuracy scores) were collected. Children listened to the four ‘words’ used to create the artificial language streams (e.g. ‘golatu’, ‘daropi’) as well as to four ‘partwords’, that is, trisyllabic combinations formed by grouping syllables from adjacent words within the speech streams (e.g. the partword ‘tudaro’ consisted of the last syllable of the word ‘golatu’ and the first two of the adjacent word ‘daropi’ within the stream ‘…golatudaropi…’). They then responded yes or no as to whether they thought each trisyllabic combination could be a word in the artificial languages they had previously heard. (Responses from one child were not recorded due to a computer malfunction.)

As in the previous behavioral studies, the transitional probabilities between the first and second syllables in the words were appreciably higher than those between the first two syllables in the partwords (1 as opposed to 0.33), due to the words and partwords having occurred within the speech stream 45 and 15 times, respectively. The words and partwords from the Stressed Language condition were presented in their unstressed version, such that there were no differences in duration, amplitude, or pitch between any of the syllables used in this task.
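The 45-versus-15 contrast between words and partwords can be checked on a toy stream. The sketch below assumes an idealized word order in which every ordered pair of different words follows one another equally often (the 12-word cycle `CYCLE` is hypothetical and is not the order used in the study); it then counts how often a word and a boundary-spanning partword occur as trisyllabic strings.

```python
WORDS = {"A": "pabiku", "B": "tibudo", "C": "golatu", "D": "daropi"}

# Hypothetical balanced cycle: each word occurs three times, no word repeats
# immediately, and each ordered pair of different words occurs once per cycle
# (the pair D -> A occurs only where one cycle is followed by the next).
CYCLE = "ABACADBCBDCD"


def syllabify(item):
    """Split a six-letter CVCVCV string into three two-letter syllables."""
    return [item[i:i + 2] for i in range(0, len(item), 2)]


def count_trisyllable(syllables, item):
    """Count occurrences of a trisyllabic string anywhere in the stream."""
    target = syllabify(item)
    return sum(syllables[i:i + 3] == target for i in range(len(syllables) - 2))


word_order = [WORDS[k] for k in CYCLE * 15]  # 180 words, 45 per word
syllables = [s for w in word_order for s in syllabify(w)]

n_word = count_trisyllable(syllables, "golatu")      # a word
n_partword = count_trisyllable(syllables, "tudaro")  # spans golatu | daropi
print(n_word, n_partword)  # 45 15
print(n_partword / n_word)  # transitional probability from 'tu' to 'da'
```

In a randomly ordered stream the partword counts fluctuate around 15 rather than equalling it exactly, which is why the balanced cycle is used here.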

Second language proficiency assessment

Parents of the 10-year-old children filled out an extensive language background questionnaire, providing detailed information about the child’s experiences with English and any other languages. All children were native English speakers, except for eight 10-year-olds who, nevertheless, learned English before the age of 5 and for whom English was the language in which they were most proficient. Parents rated their children’s proficiency on four dimensions (comprehension, speech, reading and writing) on a 7-point scale, where a rating of 1 represented almost no proficiency and a rating of 7 represented native-like proficiency. An external validation for these parental report data, such as through formal evaluation, was not obtained. For each child, a second language proficiency score was determined by averaging parental ratings of comprehension and spoken language ability (these two ratings were strongly positively correlated, r(51) = .851, p < .001). Based on the overall second language proficiency score, the 10-year-old children were divided into groups of no/low proficiency (scores of 0 or 1; N = 30) and some/high proficiency (scores of 2 and above; N = 30). Fifty-three of the 78 10-year-old children had proficiency scores of 1 or higher; a language other than English was reported as being routinely used in the home in 74% of these children and in a school setting in 58% of these children. Further, 89% of those 53 children were reported as having exposure to a foreign language either in their own home or in the homes of relatives or friends. The average proficiency score was 2.82 (SD = 1.74) on a 7-point scale and the average age of acquisition of the second language was 1.74 years (SD = 2.40). There was not a significant correlation between second language proficiency level and age of second language acquisition. 
Because both the measures of second language proficiency and age of second language acquisition were significantly positively skewed, the log transforms of these measures were entered into the regression analyses as described below.

Measure of pubertal development

The 78 10-year-old children filled out the Pubertal Development Scale (Petersen, Crockett, Richards & Boxer, 1988), which is a reliable and validated questionnaire assessing changes in body size and shape on a 4-point scale (1 = no development to 4 = development completed). Results from this measure correspond well with those from the Sexual Maturation Scale developed by Tanner (Brooks-Gunn, Warren, Rosso & Gargiulo, 1987; Carskadon & Acebo, 1993). Children were asked to rate their pubic hair growth, skin changes and growth spurt. Additionally, boys rated facial hair and voice deepening and girls rated breast development and menarcheal status. The average pubertal development score was 1.59 (SD = 0.44) on a 4-point scale (boys: M = 1.60, SD = 0.42; girls: M = 1.58, SD = 0.47).

fMRI data acquisition

Functional images were collected using a Siemens Allegra 3 Tesla head-only MRI scanner. A 2D spin-echo scout (TR = 4000 ms, TE = 40 ms, matrix size 256 by 256, 4-mm thick, 1-mm gap) was acquired in the sagittal plane to allow prescription of the slices to be obtained in the remaining scans. For each child, a high-resolution structural T2-weighted echo-planar imaging volume (spin-echo, TR = 5000 ms, TE = 33 ms, matrix size = 128 by 128, FOV = 20 cm, 36 slices, 1.56-mm in-plane resolution, 3-mm thick) was acquired coplanar with the functional scans to allow for spatial registration of each child’s data into a standard coordinate system. During the Speech Stream Exposure Task, one functional scan lasting 8 minutes and 48 seconds was acquired covering the whole cerebral volume (174 images, echo-planar imaging gradient-echo, TR = 3000 ms, TE = 25 ms, flip angle = 90°, matrix size = 64 by 64, FOV = 20 cm, 36 slices, 3.125-mm in-plane resolution, 3-mm thick, 1-mm gap).
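The reported in-plane resolutions follow from dividing the field of view by the matrix size, assuming a square 20 cm (200 mm) field of view in both in-plane directions:

$$
\frac{200\ \text{mm}}{128} = 1.5625\ \text{mm} \approx 1.56\ \text{mm}, \qquad \frac{200\ \text{mm}}{64} = 3.125\ \text{mm}.
$$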

Children listened to the auditory stimuli through a set of magnet-compatible stereo headphones (Resonance Technology, Inc.). Stimuli were presented using MacStim 3.2 software (Darby, WhiteAnt Occasional Publishing and CogState Ltd, 2000).

fMRI data analysis

Using Automated Image Registration (AIR; Woods, Grafton, Holmes, Cherry & Mazziotta, 1998a; Woods, Grafton, Watson, Sicotte & Mazziotta, 1998b) functional images for each child were (1) realigned to each other to correct for head motion during scanning and co-registered to their respective high-resolution structural images using a six-parameter rigid body transformation model and a least-squares cost function with intensity scaling; (2) spatially normalized into a Talairach-compatible MR atlas (Woods, Dapretto, Sicotte, Toga & Mazziotta, 1999) using polynomial non-linear warping; and (3) smoothed with a 6-mm FWHM isotropic Gaussian kernel to increase the signal-to-noise ratio. Statistical analyses were implemented in SPM99 (http://www.fil.ion.ucl.ac.uk/spm, Wellcome Department of Cognitive Neurology, London, UK). For each child, contrasts of interest were estimated according to the general linear model using a canonical hemodynamic response function. The exponential decay function in SPM99 (which closely approximates a linear function) was also used to model changes that occurred within each activation block over the course of exposure to the speech stream, and global mean scaling (dividing every voxel’s timeseries by the global mean timeseries) was applied to correct for signal drift across the duration of the scan session during estimation of the first-level model for each subject. Contrast images from these fixed effects analyses were then entered into second-level analyses using random effects models to allow for inferences to be made at the population level (Friston, Holmes, Price, Buchel & Worsley, 1999). Reported activity survived correction for multiple comparisons at the cluster level (p < .05, corrected) and at least t > 3.07 for magnitude (p < .001, uncorrected). For display purposes only, activation maps in the figures are thresholded at p < .05 for both magnitude and spatial extent, corrected for multiple comparisons at the cluster level.

Within each age group, separate one-sample t-tests were implemented for each condition (Unstressed Language, Stressed Language, and Random Syllables vs. resting baseline) to identify blood-oxygenation level dependent (BOLD) signal increases associated with listening to each speech stream. Because statistical regularities are computed online during the course of listening to the speech streams, we also examined whether children in each age group, like adults, would display signal increases over time in language-relevant cortices as a function of exposure to the speech streams. A region-of-interest (ROI) analysis was then conducted in bilateral temporal cortices where such signal increases were observed in order to examine any laterality differences in the extent of learning-related cortical activity. This functionally defined ROI included all voxels showing reliable learning-related signal increases in any age group in either the left or right hemisphere (LH and RH), as well as their respective counterparts in the opposite hemisphere. As previously done for our adult sample, a laterality index was then computed for each child based on the number of voxels showing increased activity over time within these symmetrical ROIs in the LH and RH [(number of voxels activated in the LH − number of voxels activated in the RH)/(number of voxels activated in the LH + number of voxels activated in the RH)]. We further computed a laterality index for each child based on the magnitude of activation from extracted parameter estimates in all voxels within the same symmetrical ROIs in the LH and RH. We have not re-reported within-group results from the 10-year-old children because there were no significant differences in the regions engaged by the speech streams between the sample of 54 10-year-olds for whom we previously reported data and the samples of 39 and 78 10-year-olds used in this paper for the between-group (and longitudinal) and regression analyses, respectively.
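As a minimal sketch of the extent-based laterality index defined above, the following computes (LH − RH)/(LH + RH) from voxel counts. The function name and the example counts are hypothetical and are not data from this study; returning 0.0 when no voxels are active in either hemisphere is also an assumption of this sketch.

```python
def laterality_index(lh_voxels, rh_voxels):
    """Return (LH - RH) / (LH + RH) for counts of active voxels.

    Positive values indicate left-lateralized activity, negative values
    right-lateralized activity. Returns 0.0 when no voxels are active in
    either hemisphere (an assumption made for this sketch).
    """
    total = lh_voxels + rh_voxels
    if total == 0:
        return 0.0
    return (lh_voxels - rh_voxels) / total


# Hypothetical counts chosen for illustration only.
li = laterality_index(lh_voxels=42, rh_voxels=58)
print(round(li, 2))  # negative value: more active voxels in the RH
```

The index ranges from −1 (all active voxels in the RH) to +1 (all active voxels in the LH).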

An analysis of variance (ANOVA) with three levels was implemented to compare neural activity observed in the three independent samples of 6-year-olds, 10-year-olds, and adults. A second ANOVA was implemented to compare neural activity observed in the 13-year-olds with that observed in the 6-year-olds and adults. (Please note that an omnibus ANOVA was not performed because the data for the 10- and 13-year-olds were collected in the same children longitudinally.) Finally, paired t-tests were performed to compare changes in neural activity in the sample of 39 10-year-old children who were studied longitudinally at the age of 10 and 13. In order to identify developmental differences in neural activity related to statistical learning that occurred over the course of exposure to the speech streams, all the above between-group statistical analyses (ANOVAs and paired t-tests) were performed using the contrasts modeling signal increases. More specifically, separate analyses were performed using the contrasts of signal increases during exposure to the artificial languages (U↑ + S↑) and the random syllables stream (R↑); for these analyses, we collapsed across the unstressed and stressed language conditions for the sake of brevity, as the same pattern of results was obtained when the analyses were performed for each condition separately. These comparisons were masked by the joint activation maps of significant signal increases for all conditions (vs. resting baseline) in all groups. The above between-group comparisons were implemented using the subset of 39 10-year-olds for whom longitudinal data were available. Importantly, no between-group differences were found in the mean amount of head motion for any comparison between age groups, calculated as the average displacement across all voxels in all functional images relative to their mean position in millimeters (Woods, 2003).

To examine how individual differences in language experience and level of pubertal maturation might be related to variability in learning-related signal increases observed during exposure to the artificial languages, separate regression analyses were also conducted in the larger sample of 10-year-olds (N = 78) to assess the relationships between (1) one’s level of proficiency in a second language and (2) one’s level of pubertal development and increases in neural activity that occurred as a function of listening to the two artificial languages during the Speech Stream Exposure Task. In order to demonstrate that outliers were not present and did not drive these correlations, the SPM toolbox MarsBaR (http://marsbar.sourceforge.net) was used to extract parameter estimates for each participant from regions where significant correlations were observed, and these parameter estimates were plotted against the behavioral variables of interest. No outliers were detected using Grubbs’ test for the parameter estimates or the behavioral variables. Fifty-three children who had some level of second language proficiency were included in the regression analyses designed to examine the influence of having learned a foreign language. In addition, a separate between-group ANCOVA was implemented (with verbal IQ entered as a covariate) to compare learning-related signal increases between 30 10-year-olds who had no second language proficiency (proficiency scores of 0) and 30 10-year-olds who had attained some level of proficiency in a second language (proficiency scores of 2 or higher). These groups did not differ in age, gender, level of pubertal development, IQ (verbal and performance), or accuracy at discriminating word boundaries on the behavioral post-scan test, nor in the amount of head motion during the fMRI scan or counterbalancing order. We also examined whether there might be a relationship between age and increases in neural activity that occurred as a function of listening to the two artificial languages during the Speech Stream Exposure Task in our samples of 6- and 13-year-olds.
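The outlier screen mentioned above can be sketched as follows, assuming the standard two-sided form of Grubbs' test. The function names and example values are hypothetical, and the critical t value must be supplied by the reader (the upper α/(2N) quantile of the t distribution with N − 2 degrees of freedom); the software actually used for this step is not stated in the text.

```python
import math
import statistics


def grubbs_statistic(values):
    """Largest absolute deviation from the mean, in sample-SD units."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (N - 1 denominator)
    return max(abs(v - mean) for v in values) / sd


def grubbs_critical(n, t_crit):
    """Critical value of G for sample size n.

    t_crit is the upper alpha/(2n) quantile of the t distribution with
    n - 2 degrees of freedom; it is passed in rather than computed here.
    """
    return ((n - 1) / math.sqrt(n)) * math.sqrt(t_crit**2 / (n - 2 + t_crit**2))


# Hypothetical parameter estimates with one extreme value.
estimates = [1.0, 2.0, 3.0, 4.0, 100.0]
g = grubbs_statistic(estimates)
print(g)
```

A value is flagged as an outlier when `g` exceeds `grubbs_critical(n, t_crit)` for the chosen significance level.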

Results

The results of the behavioral post-scan test are presented first, followed by the fMRI results. The imaging results are presented to characterize the neural activity during exposure to the streams of speech in 6- and 13-year-olds. These findings are first presented within each group separately (with brief notes as to the extent to which the observed results bear similarities or differences to those seen in other age groups), and then findings of direct comparisons between age groups are presented that formally test whether there are significant developmental differences in the neural correlates of implicit word segmentation between 6-, 10-, and 13-year-olds and adults. Finally, the results of regression analyses examining the relationship between brain activity during language learning and two factors, second language proficiency and pubertal maturation, are presented.

Behavioral results

Accuracy and response times on the behavioral word recognition test conducted after the fMRI scan are reported for each age group in Table 1. In line with the behavioral findings of prior word segmentation studies (e.g. McNealy et al., 2006, 2010; Saffran et al., 1996b; Sanders et al., 2002), children of all ages were unable to explicitly recognize which trisyllabic combinations might have been words in the artificial languages, as demonstrated by accuracy scores not different from chance in any condition (nor were there any differences in accuracy between words and partwords). With regard to response times, there were no significant differences between conditions in response times to either words or partwords; however, responses to words were overall significantly faster than responses to partwords for the 6- and 10-year-old children [6-year-olds, F(1, 11) = 5.73, p < .038; 10-year-olds, F(1, 76) = 32.29, p < .001]. When comparing the behavioral responses of the groups of children and adults, there were no significant group differences in accuracy [F(3, 149) = 0.09, ns], but there were significant group differences in reaction time [F(3, 149) = 14.37, p < .001]. Post-hoc comparisons using Fisher’s LSD test revealed that 6-year-olds were overall significantly slower than 10-year-olds, 13-year-olds and adults when responding to both words and partwords (p < .015, .020, .001, respectively). While 10-year-olds and 13-year-olds did not differ in reaction time, both groups were also overall significantly slower than adults when responding to words and partwords (ps < .001).

Table 1.

Behavioral performance on the word discrimination task

| Group / Condition | Accuracy (% correct): Words | Accuracy (% correct): Partwords | Accuracy (% correct): Nonwords | Response time (s): Words | Response time (s): Partwords | Response time (s): Nonwords |
|---|---|---|---|---|---|---|
| 6-year-olds | | | | | | |
| Unstressed Language | 58.3 (31.8) | 50.0 (25.0) | | 1.894 (0.587) | 2.008 (0.729) | |
| Stressed Language | 45.1 (30.0) | 52.1 (30.0) | | 2.168 (0.602) | 2.438 (0.812) | |
| Random Syllables | | | 44.1 (24.9) | | | 2.130 (0.514) |
| 10-year-olds | | | | | | |
| Unstressed Language | 53.6 (26.6) | 42.3 (25.5) | | 1.818 (0.453) | 1.964 (0.469) | |
| Stressed Language | 51.4 (25.7) | 47.1 (27.2) | | 1.842 (0.529) | 2.045 (0.488) | |
| Random Syllables | | | 50.3 (26.0) | | | 1.876 (0.469) |
| 13-year-olds | | | | | | |
| Unstressed Language | 52.4 (28.8) | 38.5 (26.4) | | 1.830 (0.442) | 1.956 (0.475) | |
| Stressed Language | 51.1 (23.0) | 44.2 (26.4) | | 1.873 (0.526) | 1.929 (0.471) | |
| Random Syllables | | | 51.0 (24.8) | | | 1.884 (0.378) |
| Adults | | | | | | |
| Unstressed Language | 58.3 (21.2) | 39.8 (22.2) | | 1.531 (0.241) | 1.574 (0.286) | |
| Stressed Language | 47.2 (25.3) | 46.3 (25.7) | | 1.526 (0.295) | 1.539 (0.257) | |
| Random Syllables | | | 50.5 (26.6) | | | 1.540 (0.298) |

Note: Values presented as mean (SD). It should be noted that for the Random Syllables condition, the Words and Partwords effectively constitute ‘Nonwords’ as they only occurred once during exposure to the Random Syllables stream. See Behavioral Results for a description of significant results.

fMRI speech stream exposure task in 6- and 13-year-olds

For both groups of 6- and 13-year-old children, listening to each of the three speech streams (vs. resting baseline) engaged bilateral temporal, parietal, and frontal cortices (Table 2), as might be expected given that prior studies involving the presentation of speech or speech-like stimuli have consistently shown recruitment of similar language-processing regions in both adults (see Hickok & Poeppel, 2007, for a review) and children (see Friederici, 2006, for a review). Further, these findings are consistent with our previously observed results in adults and a sample of 10-year-old children (McNealy et al., 2006, 2010). Significant activation for all conditions was also seen in the basal ganglia, subcortical structures involved in sequence learning (e.g. Poldrack, Sabb, Foerde, Tom, Asarnow, Bookheimer & Knowlton, 2005).

Table 2.

Peaks of activation during exposure to the speech streams

| All activation versus rest |

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 6-year-olds |

13-year-olds |

|||||||||

| Anatomical regions | BA | x | y | z | t | x | y | z | t | |

| Superior frontal gyrus | 10 | R | 10 | 60 | −2 | 4.41 | ||||

| 9 | L | −18 | 44 | 34 | 3.75 | |||||

| 8 | L | −16 | 30 | 48 | 5.51 | |||||

| 6 | L | −26 | 2 | 58 | 3.94 | |||||

| Middle frontal gyrus | 11 | L | −22 | 30 | −12 | 4.24 | ||||

| 11 | R | 24 | 32 | −12 | 3.88 | |||||

| 9 | L | −28 | 12 | 38 | 3.31 | |||||

| 8 | L | −24 | 14 | 46 | 3.55 | |||||

| 6 | L | −24 | −2 | 46 | 4.86 | |||||

| 6 | R | 22 | −2 | 56 | 4.00 | |||||

| Dorsal frontal gyrus | 11 | L | −14 | 48 | −12 | 3.99 | ||||

| 11 | R | 16 | 30 | −12 | 3.59 | |||||

| Inferior frontal gyrus | 44 | L | −50 | 10 | 22 | 4.01 | ||||

| 44 | R | 42 | 6 | 16 | 5.99 | |||||

| 47 | R | 32 | 24 | −14 | 4.54 | |||||

| Superior temporal gyrus | 22 | L | −64 | −14 | 4 | 10.57 | −56 | −10 | 2 | 10.6 |

| 22 | R | 50 | −12 | 2 | 13.48 | 52 | −18 | 6 | 15.3 | |

| 42 | L | −48 | −28 | 10 | 5.55 | −60 | −16 | 8 | 13.5 | |

| 42 | R | 44 | −24 | 8 | 12.98 | 32 | −38 | 12 | 4.06 | |

| Transverse temporal gyrus | 41 | L | −44 | −28 | 12 | 6.02 | ||||

| 41 | R | 36 | −26 | 10 | 6.34 | |||||

| Middle temporal gyrus | 21 | L | −42 | −12 | −14 | 7.88 | −64 | −26 | −10 | 3.81 |

| Inferior parietal lobule | 40 | L | −42 | −36 | 38 | 6.03 | −34 | −38 | 38 | 4.46 |

| 40 | R | 36 | −30 | 24 | 5.70 | 38 | −32 | 42 | 3.81 | |

| Supplementary motor area | 6 | L | −18 | −12 | 52 | 5.63 | ||||

| 6 | R | 16 | −16 | 52 | 4.73 | 22 | −16 | 62 | 3.79 | |

| Paracentral lobule | 5 | L | −28 | −38 | 62 | 6.28 | ||||

| 5 | R | 18 | −14 | 46 | 4.72 | |||||

| Precentral gyrus | 6 | L | −30 | −6 | 32 | 6.34 | −52 | −8 | 42 | 3.94 |

| 6 | R | 50 | −8 | 34 | 4.05 | |||||

| 4 | L | −26 | −20 | 54 | 5.98 | −24 | −28 | 48 | 3.54 | |

| 4 | R | 44 | −14 | 26 | 5.30 | 30 | −20 | 58 | 3.33 | |

| Postcentral gyrus | 3/1/2 | L | −64 | −12 | 20 | 5.08 | ||||

| 3/1/2 | R | 40 | −16 | 32 | 7.37 | 56 | −24 | 36 | 4.13 | |

| Anterior cingulate gyrus | 24 | L | −12 | 24 | −4 | 4.29 | ||||

| 24 | R | 12 | −18 | 42 | 5.25 | 8 | −2 | 40 | 4.31 | |

| 32 | R | 14 | 30 | −6 | 4.48 | |||||

| Posterior cingulate gyrus | 31 | R | 20 | −22 | 38 | 3.82 | ||||

| Insula | L | −38 | −4 | 4 | 5.62 | |||||

| R | 28 | −32 | 20 | 5.31 | ||||||

| Caudate nucleus | L | −12 | 20 | 2 | 3.63 | |||||

| Caudate nucleus | R | 6 | 20 | 4 | 3.91 | |||||

| Putamen | L | −32 | −12 | 6 | 4.60 | |||||

| R | 14 | 0 | 10 | 6.16 | ||||||

| Globus pallidus | R | 18 | −8 | 2 | 5.44 | |||||

| Thalamus | R | 12 | −22 | 4 | 5.18 | |||||

| Hippocampus | R | 30 | −36 | −4 | 6.25 | |||||

| Cerebellum | R | 24 | −48 | −34 | 6.57 | |||||

Note: Activity thresholded at t > 3.21 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region. In 6-year-olds, regions in the left hemisphere are part of an 8956 voxel cluster and regions in the right hemisphere are part of a 9921 voxel cluster. In 13-year-olds, regions in the left hemisphere are part of an 8201 voxel cluster, right frontal regions and the caudate nucleus are part of a 2677 voxel cluster, and all other right hemisphere regions are part of a 5096 voxel cluster.

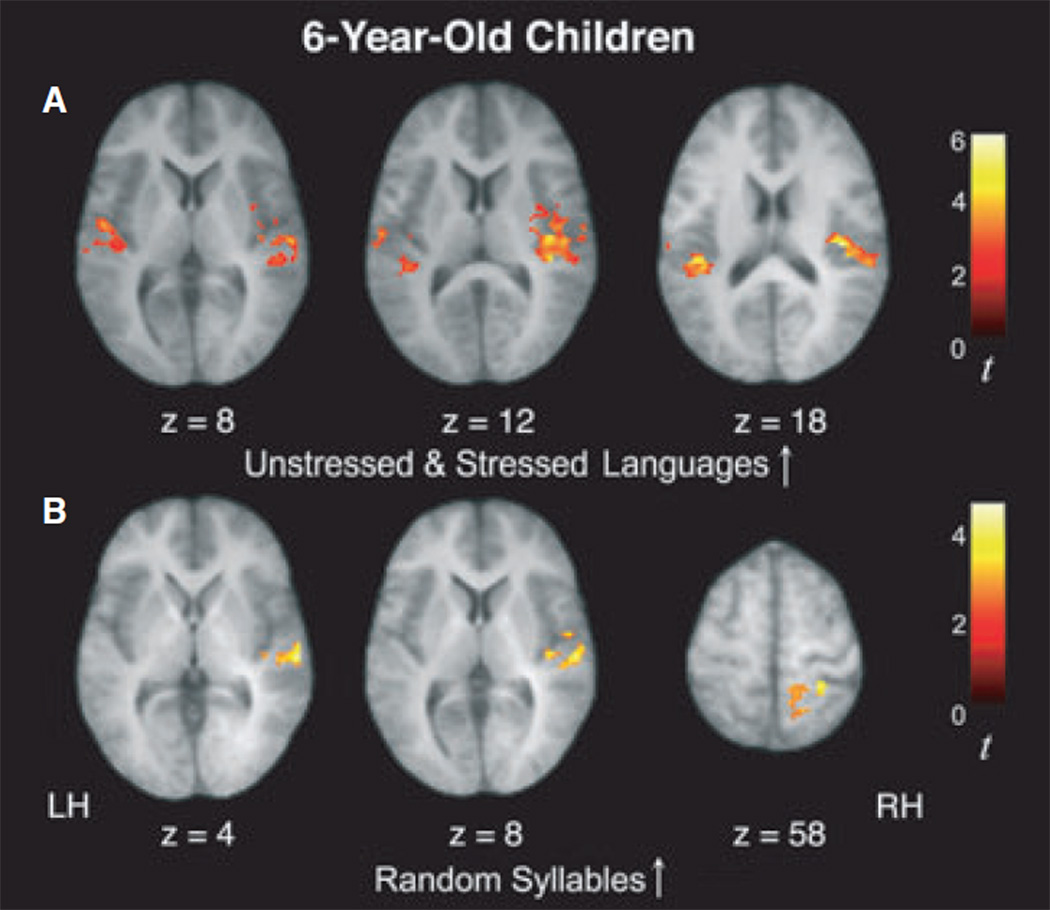

Our primary interest in this study was to investigate the neural correlates of learning that occurs as statistical regularities are computed online as a function of exposure to the speech streams. Thus, we examined the statistical contrast modeling increases in activity over time for the Unstressed and Stressed artificial languages with high statistical regularities (U↑ + S↑). In 6-year-olds, this contrast revealed significant signal increases in bilateral superior temporal gyrus (STG) and right transverse temporal gyrus (TTG), as well as the right insula, right precentral gyrus, and left paracentral lobule, a pattern that is similar to what was observed in 10-year-olds and adults, although it appears to extend more broadly outside of perisylvian regions (Figure 2 and Table 3; see also Figure S1). However, unlike our prior findings in adults and 10-year-old children (who showed left-lateralized and bilaterally distributed signal increases, respectively), a region of interest (ROI) analysis showed that this increasing activity during exposure to the Unstressed and Stressed Language conditions was right-lateralized (laterality index based on spatial extent = −0.16; laterality index based on magnitude = −0.13), indicating a right-to-left developmental progression of this learning-related response in temporal regions from childhood to adulthood (see below for further analyses which directly tested this developmental effect).

Figure 2.

In 6-year-old children, activity within temporal cortices was found to increase over the course of listening to the speech streams for both artificial language conditions (U↑+S↑; A) as well as for the Random Syllables condition (R↑; B).

Table 3.

Peaks of activation for regions where activity increased as a function of exposure to the speech streams in 6-year-olds

| Increases in artificial languages (U↑ + S↑) |

Increases in random syllables (R↑) |

Increases in artificial languages > random syllables (U↑ + S↑ > R↑) |

|||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Anatomical regions | BA | x | y | z | t | k | x | y | z | t | k | x | y | z | t | k | |

| Superior temporal gyrus | 22 | L | −58 | −8 | 4 | 4.10 | 297 | −60 | −20 | 0 | 4.21 | 503 | |||||

| 22 | R | 54 | −32 | 20 | 3.54* | 685 | 60 | −22 | 4 | 3.68* | 268 | 50 | −28 | 2 | 5.40 | 2065 | |

| 42 | L | −46 | −30 | 18 | 5.19 | 247 | |||||||||||

| Transverse temporal gyrus | 41 | R | 38 | −30 | 14 | 4.85 | 685 | 40 | −30 | 14 | 5.59 | 2065 | |||||

| Inferior parietal lobule | 40 | R | 60 | −32 | 24 | 4.00 | 268 | ||||||||||

| Paracentral lobule | 5 | L | −24 | −16 | 44 | 3.91 | 466 | ||||||||||

| Precentral gyrus | 6 | R | 38 | −8 | 14 | 4.46 | 685 | ||||||||||

| Insula | R | 36 | −20 | 16 | 5.66 | 685 | 36 | −22 | 20 | 6.13 | 2065 | ||||||

Note: Activity thresholded at t > 3.82 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). * denotes a t score with p < .002. BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region; k refers to cluster size (note that different regions with the same cluster size are part of the same cluster).

Six-year-old children also displayed significant signal increases in right STG and inferior parietal lobule (IPL) for the Random Syllables condition (R↑; Figure 2 and Table 3; see also Figure S1), a finding that is similar to what was found in 10-year-olds, although this activity was only observed in the right hemisphere (as opposed to the bilateral signal increases observed in 10-year-old children).

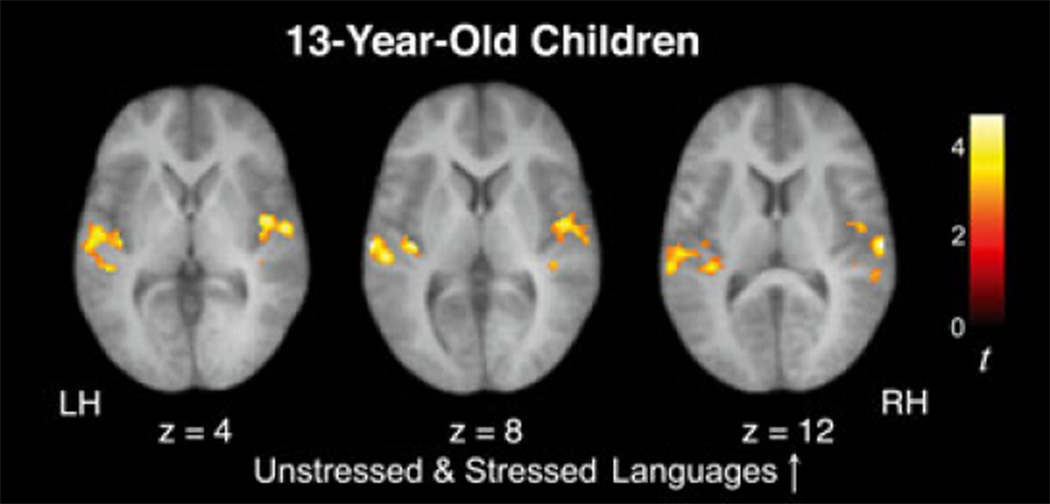

Our longitudinal cohort of 13-year-old children also exhibited significant signal increases over time in bilateral STG and left middle temporal gyrus (MTG) while listening to the two artificial languages (U↑ + S↑), a pattern resembling our findings in all other age groups (adults, 6- and 10-year-olds; Figure 3 and Table 4; see also Figure S2). An ROI analysis revealed that this increasing activity in 13-year-olds did not significantly differ between the left and right hemispheres (laterality index based on spatial extent = −0.03; laterality index based on magnitude = −0.03), as was also the case in 10-year-old children. Importantly, unlike the 6- and 10-year-olds but like the adults, the 13-year-olds did not display any significant signal increases for the Random Syllables condition (R↑).

Figure 3.

In 13-year-old children, activity within temporal cortices was found to increase over the course of listening to the speech streams for both artificial language conditions (U↑+S↑), but not for the stream of Random Syllables, similar to what was previously observed in adults.

Table 4.

Peaks of activation for regions where activity increased as a function of exposure to the speech streams in 13-year-olds

| Anatomical regions | BA | Hemisphere | x (U↑ + S↑) | y (U↑ + S↑) | z (U↑ + S↑) | t (U↑ + S↑) | k (U↑ + S↑) | x (U↑ + S↑ > R↑) | y (U↑ + S↑ > R↑) | z (U↑ + S↑ > R↑) | t (U↑ + S↑ > R↑) | k (U↑ + S↑ > R↑) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Superior temporal gyrus | 22 | L | −42 | −34 | 16 | 3.43 | 652 | | | | | |
| | 22 | R | 54 | −10 | 4 | 3.56 | 633 | | | | | |
| | 42 | L | −66 | −20 | 8 | 3.80 | 652 | −66 | −22 | 8 | 3.77 | 575 |
| | 42 | R | 60 | −20 | 12 | 3.89 | 633 | 48 | −30 | 16 | 4.12 | 565 |
| Middle temporal gyrus | 21 | L | −50 | −18 | −2 | 3.82 | 652 | −48 | −34 | 2 | 3.80 | 575 |

Note: Activity thresholded at t > 3.21 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region; k refers to cluster size (note that different regions with the same cluster size are part of the same cluster).

To characterize the differential strength of the signal increases over time between conditions, we first directly compared signal increases occurring during exposure to the two artificial languages to the signal increases occurring during exposure to the Random Syllables condition ((U↑ + S↑) − R↑). In both 6- and 13-year-olds, this statistical contrast revealed significantly greater signal increases for the artificial languages in several regions, including bilateral STG (Tables 3 and 4). It may be worth noting that this pattern held for each age group when comparing signal increases during each artificial language condition individually to the Random Syllables condition (U↑ − R↑ and S↑ − R↑). We then examined the contribution of the presence of prosodic cues in addition to statistical regularities. Direct comparisons between the two artificial languages in 6-year-olds revealed stronger signal increases in the left STG (−40, −18, 6, t = 4.61) for the Stressed Language versus Unstressed Language (S↑ − U↑). In 13-year-olds, this comparison revealed stronger signal increases for the Stressed Language in the left MTG (−60, −24, −4, t = 3.29).

A statistical contrast modeling decreases in activity over time revealed that there were no significant signal decreases over time during any condition in 6-year-old children, consistent with our finding in 10-year-old children. There was a significant signal decrease during exposure to the Unstressed and Stressed Language streams (U↓ + S↓) in 13-year-olds in the left precentral gyrus (−22, −24, 54, t = 4.43). There were no significant decreases in activity over time during exposure to the Random Syllables stream for the 13-year-olds.

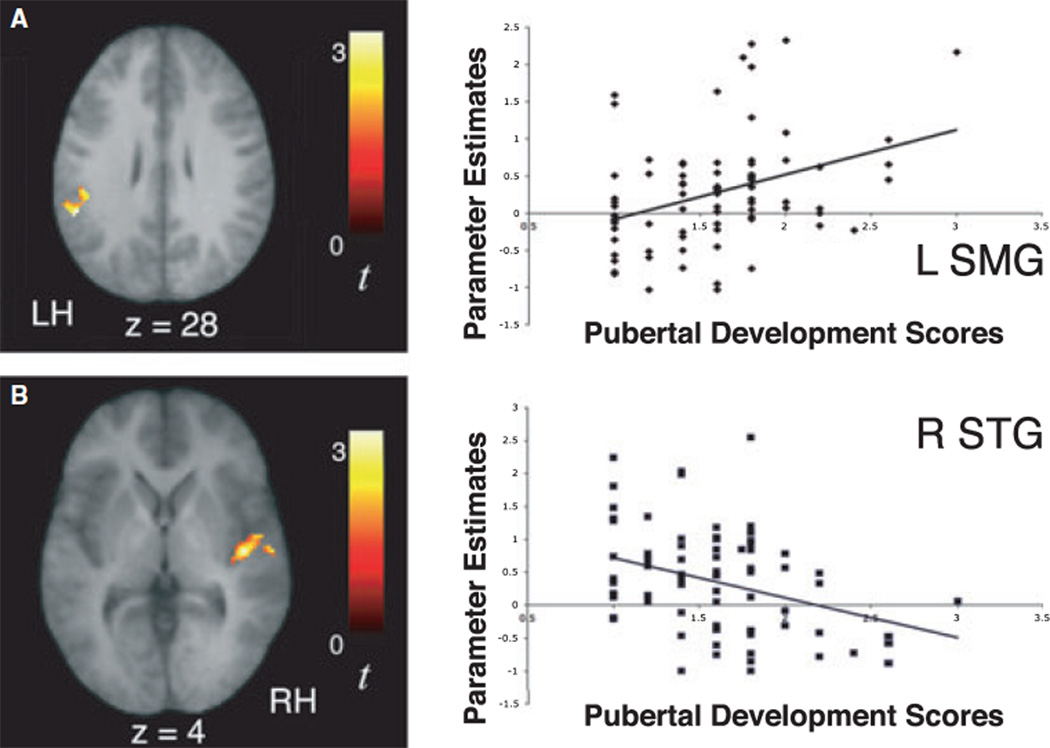

Between-group comparisons of children ages 6, 10, and 13 and adults

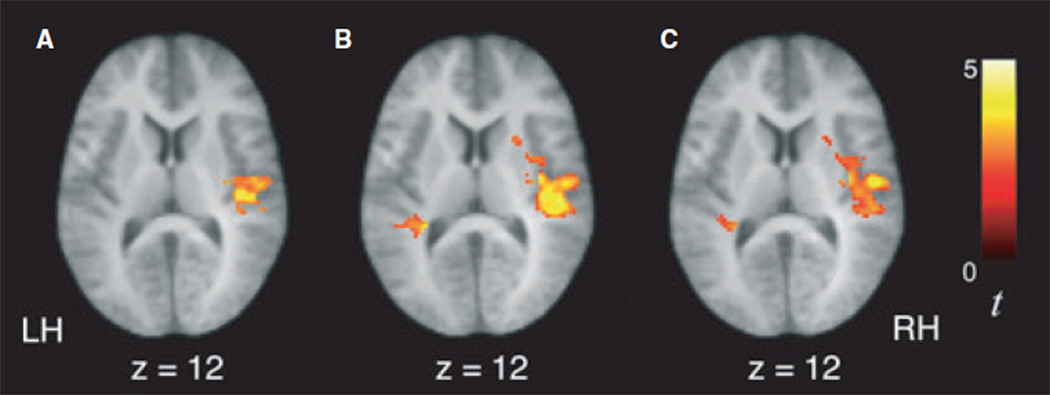

To further characterize developmental differences in learning-related activity, we performed between-group ANOVAs for the contrasts examining signal increases as a function of exposure to the speech streams (i.e. as the statistical regularities were being computed). For the statistical contrast modeling increases in activity over time during exposure to the artificial languages (U↑ + S↑), we observed significantly greater signal increases in 6-year-olds compared to the 10-year-olds, 13-year-olds and adults in several regions, including right temporal cortex, bilateral IPL and precuneus (Figure 4; Table 5). Similarly, the 6-year-olds also displayed greater signal increases than the other age groups during exposure to the random syllables stream (R↑) in right STG as well as dorsal parietal regions (Table 6). Adults showed greater signal increases in activity over time than the 10-year-olds and 13-year-olds during exposure to the artificial languages (U↑ + S↑) in left STG (−62, −6, 2, t = 3.63 and −50, −4, 4, t = 3.42, respectively). For this same contrast, adults also showed greater signal increases than 13-year-olds in left precentral gyrus (−24, −24, 54, t = 3.26). While adults also showed greater signal increases than 6-year-olds in left STG (−60, 4, 6, t = 3.21, k = 51 voxels), this comparison did not survive correction for multiple comparisons at the cluster level.

Figure 4.

Six-year-old children displayed significantly greater signal increases over the course of listening to the artificial language speech streams (U↑ + S↑) in right superior temporal gyrus than 10-year-old children (A), 13-year-old children (B), and adults (C).

Table 5.

Peaks of activation where differences in signal increases over time as a function of exposure to the unstressed and stressed language streams were observed between age groups

| Increases in artificial languages (U↑ + S↑) |

|||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6-year-olds > 10-year-olds |

6-year-olds > 13-year-olds |

6-year-olds > Adults |

|||||||||||||||

| Anatomical regions | BA | x | y | z | t | k | x | y | z | t | k | x | y | z | t | k | |

| Middle frontal gyrus | 8 | L | −30 | 18 | 44 | 3.42 | 70 | ||||||||||

| Superior temporal gyrus | 22 | L | −52 | −32 | 18 | 3.07 | 2120 | ||||||||||

| 22 | R | 38 | −22 | 16 | 4.23 | 1442 | 42 | −32 | 16 | 4.73 | 2588 | ||||||

| 42 | R | 48 | −26 | 12 | 4.06 | 2588 | |||||||||||

| Transverse temporal gyrus | 41 | R | 40 | −22 | 14 | 4.16 | 2588 | 40 | −22 | 14 | 3.09 | 2324 | |||||

| Inferior parietal lobule | 40 | L | −44 | −32 | 50 | 4.10 | 908 | −26 | −46 | 54 | 5.00 | 2120 | −40 | −44 | 46 | 4.08 | 1837 |

| 40 | R | 28 | −44 | 44 | 3.09 | 205 | 30 | −28 | 42 | 4.21 | 2588 | 32 | −28 | 42 | 5.10 | 2324 | |

| Precuneus | 7 | L | −26 | −46 | 52 | 4.40 | 908 | −26 | −46 | 52 | 4.03 | 1837 | |||||

| 7 | R | 8 | −42 | 58 | 3.32 | 205 | 16 | −50 | 56 | 3.85 | 2588 | ||||||

| Supplementary motor area | 6 | L | −16 | −16 | 56 | 3.72 | 2120 | −16 | −6 | 54 | 3.50 | 1837 | |||||

| 6 | R | 12 | −16 | 58 | 3.78 | 2588 | 10 | −18 | 60 | 3.96 | 2324 | ||||||

| Paracentral lobule | 5 | L | −18 | −30 | 50 | 4.31 | 2120 | −18 | −30 | 48 | 3.64 | 1837 | |||||

| 5 | R | 12 | −22 | 48 | 4.00 | 2588 | |||||||||||

| Precentral gyrus | 6 | R | 50 | −10 | 34 | 3.71 | 2588 | 50 | −14 | 12 | 3.81 | 2324 | |||||

| 4 | L | −20 | −24 | 50 | 4.90 | 2120 | |||||||||||

| 4 | R | 58 | −12 | 26 | 3.94 | 2588 | 52 | −12 | 32 | 3.39 | 2324 | ||||||

| Postcentral gyrus | 3/1/2 | L | −38 | −14 | 36 | 4.42 | 2120 | −34 | −30 | 48 | 3.46 | 1837 | |||||

| 3/1/2 | R | 54 | −12 | 28 | 4.02 | 1442 | 24 | −18 | 46 | 3.77 | 2588 | ||||||

| Anterior cingulate gyrus | 24 | L | −20 | −8 | 36 | 3.87 | 2120 | −18 | −22 | 34 | 3.54 | 1837 | |||||

| 24 | R | 18 | −4 | 38 | 3.35 | 2588 | 18 | −6 | 38 | 4.29 | 2324 | ||||||

| Posterior cingulate gyrus | 31 | L | −20 | −18 | 42 | 4.00 | 908 | −22 | −16 | 42 | 4.01 | 2120 | −20 | −20 | 38 | 3.86 | 1837 |

| 31 | R | 22 | −38 | 30 | 3.98 | 2588 | 18 | −30 | 42 | 3.41 | 2324 | ||||||

| Insula | L | −32 | −22 | 20 | 3.24 | 2120 | −30 | −12 | 18 | 3.63 | 1837 | ||||||

| R | 36 | −12 | 12 | 3.77 | 2588 | 36 | −24 | 18 | 3.34 | 2324 | |||||||

Note: Activity thresholded at t > 3.07 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region; k refers to cluster size (note that different regions with the same cluster size are part of the same cluster).

Table 6.

Peaks of activation where differences in signal increases over time as a function of exposure to the random syllables stream were observed between age groups

| Increases in random syllables (R↑) |

|||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 6-year-olds > 10-year-olds |

6-year-olds > 13-year-olds |

6-year-olds > Adults |

|||||||||||||||

| Anatomical regions | BA | x | y | z | t | k | x | y | z | t | k | x | y | z | t | k | |

| Superior temporal gyrus | 22 | R | 58 | −20 | 6 | 3.37 | 343 | 58 | −22 | 4 | 3.54 | 212 | |||||

| Inferior parietal lobule | 40 | L | −40 | −46 | 40 | 3.49 | 138 | 40 | −36 | 36 | 3.11 | 64 | −40 | −46 | 40 | 4.25 | 258 |

| Superior parietal lobule | 7 | L | −34 | −46 | 60 | 3.81 | 170 | −24 | −48 | 64 | 4.12 | 117 | −22 | −50 | 64 | 3.95 | 186 |

| 7 | R | 24 | −44 | 58 | 3.09 | 147 | |||||||||||

| Precuneus | 7 | L | −14 | −42 | 60 | 3.93 | 170 | −12 | −42 | 60 | 3.08 | 117 | |||||

| 7 | R | 6 | −44 | 66 | 4.01 | 229 | 6 | −44 | 66 | 3.95 | 186 | ||||||

| Paracentral lobule | 5 | L | −32 | −42 | 62 | 4.48 | 186 | ||||||||||

Note: Activity thresholded at t > 3.07 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region; k refers to cluster size (note that different regions with the same cluster size are part of the same cluster).

Consistent with the right-lateralized signal increases observed in 6-year-olds and the left-lateralized signal increases observed in adults as a function of exposure to the artificial languages (U↑ + S↑), an ANOVA examining the differences between the 6-year-olds, 10-year-olds, and adults revealed a significant difference in laterality between age groups [F(2, 73) = 5.33, p < .007 for analyses based on spatial extent; F(2, 73) = 12.85, p < .001 for analyses based on magnitude]. Post-hoc analyses further revealed that these laterality differences were significant between the 6-year-olds and adults (p < .008 for spatial extent; p < .001 for magnitude) as well as between the 10-year-olds and adults (p < .006 for spatial extent, p < .001 for magnitude).

Longitudinal comparison between 10- and 13-year-old children

In order to assess changes in the neural substrate of speech parsing occurring as children transition into puberty, we directly compared activity observed at ages 10 and 13 within a group of 39 children who were studied longitudinally. A paired t-test for the statistical contrast modeling increases in activity over time during exposure to the artificial languages (U↑ + S↑) revealed that children displayed greater signal increases in right STG (42, −36, 14, t = 3.71) and left IPL (−46, −34, 26, t = 3.19) at age 10 than they did at age 13. These findings suggest that the extent to which temporal and parietal language regions are recruited during statistical learning changes significantly in early adolescence. As may be expected given that we found no signal increases during the random syllables condition for the 13-year-old children, greater signal increases in left STG were observed at age 10 than at age 13 while listening to the random stream of syllables (−58, −18, −2, t = 3.68). This finding indicates the occurrence of marked changes between ages 10 and 13 in how the brain processes weak statistical relationships between syllables during implicit word segmentation.

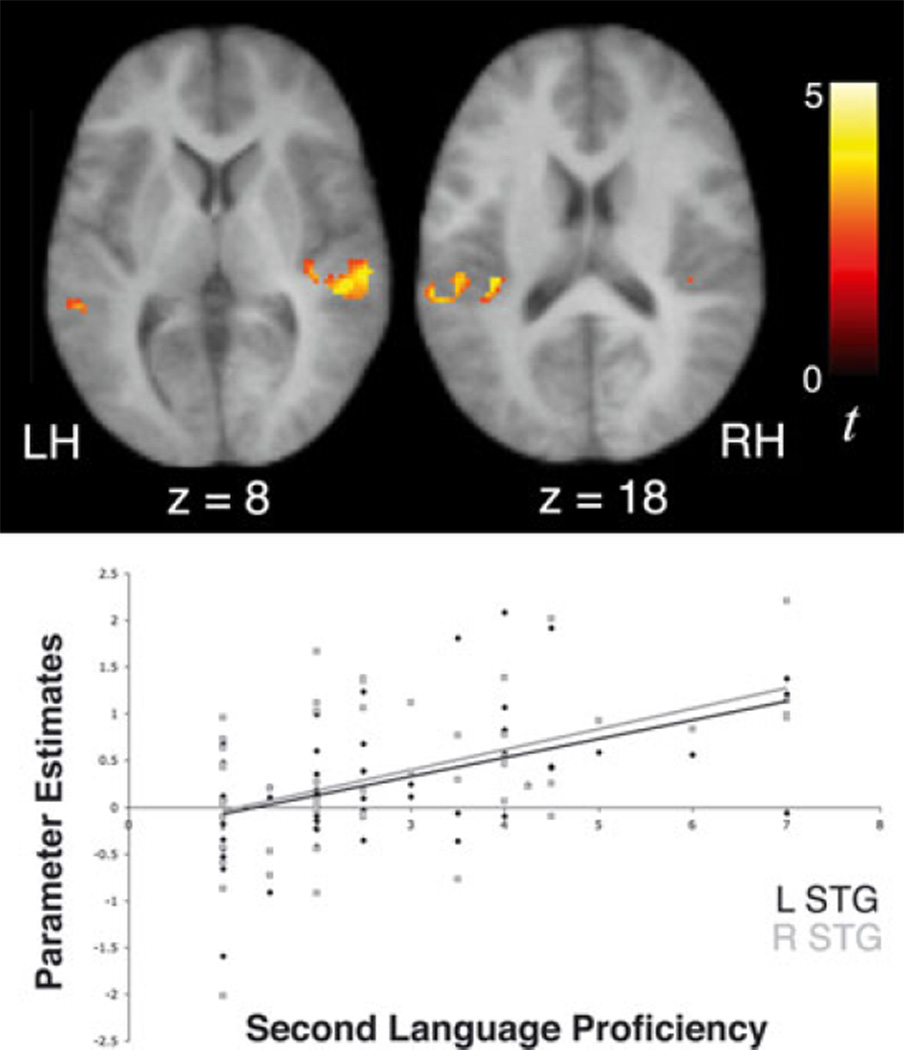

Brain activity associated with language learning and proficiency level in a second language

Regression analyses were performed to examine whether 10-year-old children’s signal increases over time while tracking statistical regularities are related to their levels of proficiency in a foreign language. In a sample of 53 children who had second language proficiency scores ranging between 1 and 7, second language proficiency was positively correlated with signal increases over time in bilateral STG and left TTG (Table 7; Figure 5) while listening to the Unstressed and Stressed Languages, after controlling for both age of acquisition of second language and verbal IQ. Interestingly, earlier age of second language acquisition was also associated with greater signal increases in right STG (42, −10, 10, t = 3.47; Figure 6) during exposure to the artificial languages. We also observed a negative correlation between second language proficiency and signal increases over time in left STG while listening to the random syllables condition (−50, −26, 2, t = 3.99), suggesting that higher proficiency in a second language might allow a differential recruitment of the left STG (to a greater or lesser degree) depending on the regularity of the statistical cues (high versus low). While these findings indicate that the extent to which brain regions are recruited during language learning is in part influenced by one’s prior experience with acquiring novel languages, we also performed a between-group random effects analysis after dividing children into higher and lower second language proficiency groups to further corroborate the results of this regression analysis. These groups were matched across a number of variables, as noted in the methods section.
An ANCOVA for the contrast indexing increasing activity during exposure to the Unstressed and Stressed Languages revealed that children with higher proficiency exhibited significantly greater signal increases over time than children with lower or no proficiency in right STG (60, −22, 2, t = 3.41), consistent with the results from the regression analyses, as well as in left STG (−58, −28, 2, t = 3.39) and insula (−40, −38, 20, t = 3.59; Figure 7).
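To make concrete what "controlling for" covariates such as verbal IQ and age of acquisition means, the following sketch computes a partial correlation with the standard recursive formula. It is an illustration only: the function names `pearson` and `partial_corr` are hypothetical, the numbers are synthetic, and the reported analyses were voxelwise regressions rather than this region-level calculation.

```python
import math


def pearson(x, y):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, covariates):
    """Correlation of x and y after removing the linear effect of covariates.

    Uses the recursion r_xy.z = (r_xy - r_xz * r_yz) /
    sqrt((1 - r_xz^2) * (1 - r_yz^2)), applied one covariate at a time.
    """
    if not covariates:
        return pearson(x, y)
    *rest, z = covariates
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Synthetic example: x and y are related only through a shared covariate z.
z = [-1, -1, 1, 1]
x = [0, -2, 2, 0]   # z plus noise unrelated to y
y = [0, -2, 0, 2]   # z plus noise unrelated to x

raw = pearson(x, y)                  # 0.5: inflated by the shared covariate
adjusted = partial_corr(x, y, [z])   # approximately 0 once z is removed
print(raw, adjusted)
```

In this toy case the raw correlation disappears once the shared covariate is removed. A correlation that survives such adjustment, as reported for second language proficiency, cannot be explained by the covariates that were entered.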

Table 7.

Peaks of activation where 10-year-old children displayed significant correlations between signal increases over time as a function of exposure to the unstressed and stressed languages and second language proficiency

Increases in artificial languages (U↑ + S↑): second language proficiency

| Anatomical regions | BA | Hemisphere | x | y | z | t | k |
|---|---|---|---|---|---|---|---|
| Superior temporal gyrus | 22 | L | −60 | −36 | 14 | 3.44 | 303 |
| | 22 | R | 46 | −32 | 4 | 5.04 | 247 |
| | 42 | L | −52 | −28 | 16 | 3.37 | 303 |
| | 42 | R | 58 | −28 | 8 | 3.72 | 247 |
| Transverse temporal gyrus | 41 | L | −40 | −32 | 18 | 3.68 | 303 |

Note: Activity thresholded at t > 3.17 (p < .001), corrected for multiple comparisons at the cluster level (p < .05). BA refers to putative Brodmann Area; L and R refer to left and right hemispheres; x, y, and z refer to the Talairach coordinates corresponding to the left-right, anterior-posterior, and inferior-superior axes, respectively; t refers to the highest t score within a region; k refers to cluster size (note that different regions with the same cluster size are part of the same cluster).

Figure 5.

Significant signal increases over time as a function of exposure to the artificial language streams (U↑ + S↑) in bilateral superior temporal gyri were positively correlated with 10-year-old children’s level of proficiency in a second language, independent of verbal IQ and age of acquisition of the second language (left STG r = 0.506, p < .001; right STG r = 0.484, p < .001).

Figure 6.

Significant signal increases over time as a function of exposure to the artificial language streams (U↑ + S↑) in right superior temporal gyrus were negatively correlated with 10-year-old children’s age of acquisition of a second language, after controlling for verbal IQ and level of second language proficiency (r = −0.343, p < .01).

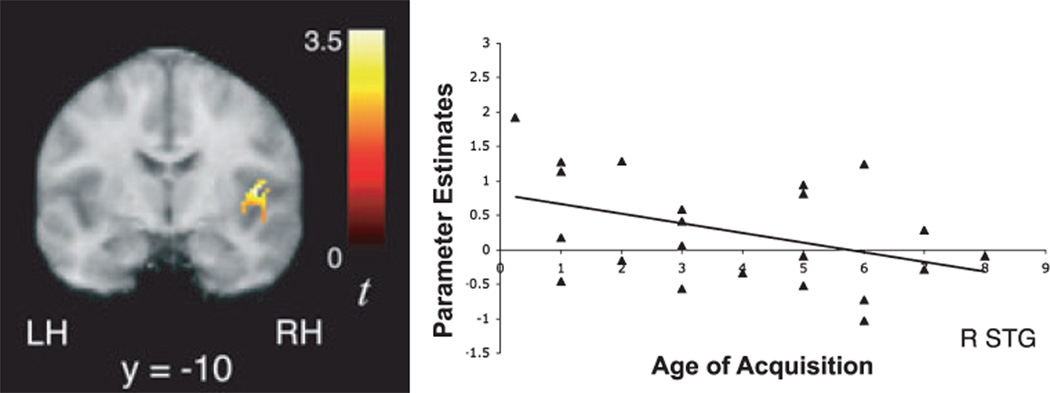

Figure 7.

Ten-year-old children with higher proficiency in a second language exhibited significantly greater signal increases over time as a function of exposure to the artificial language streams (U↑ + S↑) than those with lower or no proficiency in bilateral superior temporal gyri.

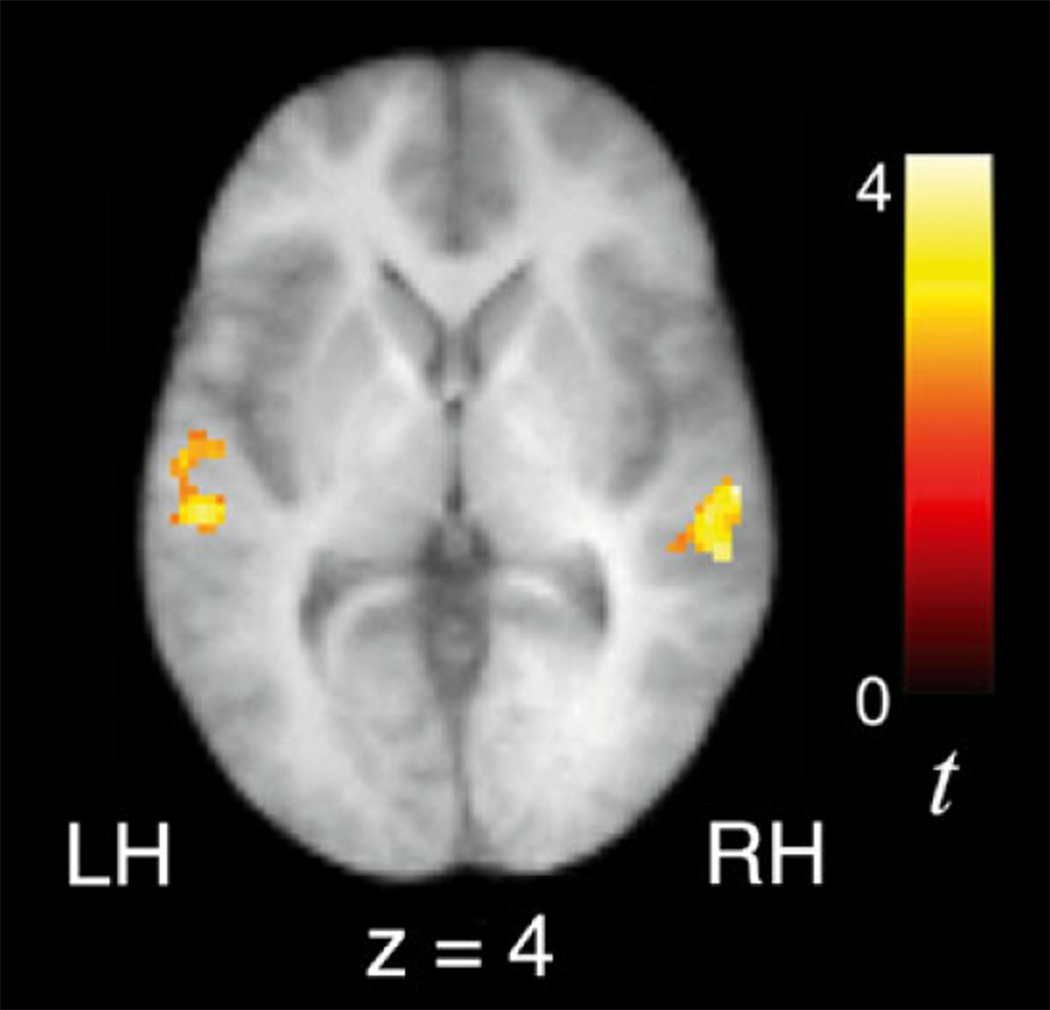

Brain activity associated with language learning and pubertal development

A separate regression analysis was performed in our larger sample of 78 10-year-olds to assess whether the observed signal increases in activity during exposure to the artificial languages might also be related to their level of pubertal development, as assessed with the Pubertal Development Scale (Petersen et al., 1988). When each child’s pubertal development score was entered as a regressor for the contrast of signal increases over time for the Stressed and Unstressed Languages, a significant positive correlation was observed in left supramarginal gyrus (−54, −44, 28, t = 3.13) and a significant negative correlation was observed in the right STG (42, −16, 4, t = 3.25; Figure 8). These results suggest that pubertal maturation may contribute significantly to the observed pattern of increasing left-lateralization of learning-related signal increases over time between different age groups, though it is possible that this relationship is mediated by an unmeasured third variable. Finally, regression analyses performed in 6-year-olds and 13-year-olds to assess whether observed signal increases in activity during exposure to the artificial languages might be related to age revealed a positive correlation between age and signal increases over time in right insula (42, −20, 14, t = 4.39) in our sample of 6-year-old children.

Figure 8.

Significant signal increases over time as a function of exposure to the artificial language streams (U↑ + S↑) in left supramarginal gyrus were positively correlated with 10-year-old children’s level of pubertal development (r = 0.353, p < .002; A). In contrast, signal increases over time in right superior temporal gyrus were negatively correlated with 10-year-old children’s level of pubertal development (r = −0.343, p < .002; B). The scatter plots illustrate that the positive and negative correlations between pubertal development scores and activity within the left supramarginal gyrus and right superior temporal gyrus, respectively, are not driven by outliers.

Discussion

The goal of this study was to investigate developmental changes in the brain regions involved in language learning and how these may be influenced by experiential and maturational factors. Infant behavioral studies have revealed that the brain utilizes statistical regularities and prosodic speech cues available in the input to parse continuous speech streams during language learning (e.g. Aslin et al., 1998; Johnson & Jusczyk, 2001; Saffran et al., 1996a; Thiessen & Saffran, 2003). Several studies have also confirmed the critical role that word segmentation plays in the language acquisition process by directly relating early word segmentation skills to later language development (Newman et al., 2006; Graf Estes et al., 2007). By adapting the word segmentation paradigms from the behavioral literature for use with fMRI, we previously identified a neural signature of implicit word segmentation in adults (McNealy et al., 2006) and 10-year-old children (McNealy et al., 2010). While we found that activity in similar neural regions underlies speech parsing in both groups, we observed significant age-related changes in the laterality of learning-related neural activity and the level of sensitivity to low statistical regularities in the input. Here, to gain a better understanding of the developmental changes in the brain regions involved in language learning, we investigated (i) whether our previously observed developmental differences might be even more pronounced in children as young as 5 years of age, (ii) whether observable longitudinal changes are present in neural activity in a group of children studied at ages 10 and 13 as they enter puberty, and (iii) whether neural activity associated with implicit word segmentation is affected by one’s level of proficiency in a second language and one’s level of pubertal maturation.

Developmental right-to-left shift in laterality during implicit word segmentation

In order to identify learning-related activity that might specifically reflect the computation of statistical regularities and the implicit detection of word boundaries, we investigated changes in activity over time as a function of exposure to each speech stream. Consistent with our previous observations in 10-year-old children and adults, BOLD signal increases over time (i.e. within each exposure block) were seen in bilateral temporal cortices when both 6- and 13-year-old children listened to the stressed and unstressed language streams (Figures 2 and 3; Tables 3 and 4). As we previously proposed (McNealy et al., 2006, 2010), these signal increases over time along the superior temporal gyri may reflect the ongoing computation of frequencies of syllable co-occurrence and transitional probabilities between neighboring syllables, an interpretation that is consistent with other studies that have shown the STG to be involved in processing the predictability of sequences over time (Blakemore, Rees & Frith, 1998; Bischoff-Grethe, Proper, Mao, Daniels & Berns, 2000; Ullen, Bengtsson, Ehrsson & Forssberg, 2005; Ullen, 2007). Further, our results are in line with previous studies in which the learning of a linguistic task led to learning-related signal increases in activation in primary and secondary sensory and motor areas, as well as in regions involved in the storage of task-related cortical representations (e.g. Golestani & Zatorre, 2004; Newman-Norlund et al., 2006; see Kelly & Garavan, 2005, for a review). However, we also observed significant developmental changes in the laterality of these signal increases in temporal cortices over time. We had previously seen left-lateralized signal increases in adults (McNealy et al., 2006), and bilateral increases in 10-year-old children (McNealy et al., 2010). 
Here, while the 13-year-old children also displayed bilateral signal increases, we observed right-lateralized increases in 6-year-old children, indicating a progression from greater right to left hemisphere involvement of temporal cortices with age to subserve the computation of statistical regularities in a novel speech stream.
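The transitional probabilities referred to above can be made concrete with a short sketch. The trisyllabic "words" and their ordering below are illustrative placeholders, not the stimuli used in this study, and the function name `transitional_probabilities` is hypothetical. The transitional probability from syllable X to syllable Y is taken as the frequency of the pair XY divided by the frequency of X.

```python
from collections import Counter


def transitional_probabilities(syllables):
    """Map each adjacent pair (X, Y) to P(Y | X) = freq(XY) / freq(X)."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    # Count X only where it has a successor, so the probabilities sum to 1.
    first_counts = Counter(syllables[:-1])
    return {(a, b): c / first_counts[a] for (a, b), c in pair_counts.items()}


# Three made-up trisyllabic words, concatenated without pauses.
words = {
    "A": ["pa", "bi", "ku"],
    "B": ["ti", "bu", "do"],
    "C": ["go", "la", "tu"],
}
order = "ABCACBABCBAC"  # hypothetical word order with no immediate repeats
stream = [syllable for w in order for syllable in words[w]]

tps = transitional_probabilities(stream)
print(tps[("pa", "bi")])  # within a word: the next syllable is fully predictable
print(tps[("ku", "ti")])  # across a word boundary: the next syllable is uncertain
```

In a stream built this way, within-word transitions are perfectly predictable whereas transitions spanning a word boundary are not, so dips in transitional probability mark candidate word boundaries. A stream of randomly ordered syllables would lack this contrast, which is what makes its statistical cues weak.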

Direct comparisons between age groups revealed that, when listening to the stressed and unstressed artificial languages, 6-year-old children displayed greater signal increases than 10-year-olds, 13-year-olds and adults in right temporal and bilateral inferior parietal cortices (Figure 4; Table 5). Interestingly, when we examined age-related changes within the 10-year-old children who were followed longitudinally, we also found greater signal increases in right superior temporal gyrus, as well as left inferior parietal cortex, at age 10 than at age 13. In contrast, adults showed greater learning-related signal increases than children during exposure to both artificial languages in left superior temporal gyrus. Taken together, these findings corroborate our prior observation of significant changes in the laterality of learning-related activity from right to left with increasing age.

While a left hemisphere bias for some aspects of language processing has been observed early in life (e.g. Balsamo, Xu, Grandin, Petrella, Braniecki, Elliott & Gaillard, 2002; Dehaene-Lambertz, Dehaene & Hertz-Pannier, 2002; Pena, Maki, Kovacic, Dehaene-Lambertz, Koizumi, Bouquet & Mehler, 2003; Minagawa-Kawai, Mori, Naoi & Kojima, 2007; Ahmad, Balsamo, Sachs, Xu & Gaillard, 2003; see Holland, Vannest, Mecoli, Jacola, Tillema, Karunanayaka, Schmithorst, Yuan, Plante & Byars, 2007, for a review), the degree of language lateralization seems to increase with age and appears to be both task- and area-dependent, such that the functional representation for a particular linguistic skill may shift toward the left hemisphere as that skill is mastered and refined (e.g. Holland, Plante, Weber Byars, Strawsburg, Schmithorst & Ball, 2001; Turkeltaub, Gareau, Flowers, Zeffiro & Eden, 2003; Brown, Lugar, Coalson, Miezin, Petersen & Schlaggar, 2005; Szaflarski, Holland, Schmithorst & Byars, 2006; Holland et al., 2007). For example, increased lateralization to the left hemisphere has been observed in event-related potential (ERP) studies conducted in young children (Mills, Coffey-Corina & Neville, 1993, 1997). In these studies, larger amplitude ERPs in response to known versus unknown words were initially distributed bilaterally, but this effect shifted to the left hemisphere alone as a function of age and increased vocabulary size. While the left hemisphere is considered to play a more prominent role in language processing, it should be noted that, even in adults, the recruitment of right hemisphere homologues of canonical language areas in the left hemisphere has been observed with higher degree of difficulty or complexity of the linguistic task (e.g. Carpenter, Just, Keller, Eddy & Thulborn, 1999; Hasegawa, Carpenter & Just, 2002; Just, Carpenter, Keller, Eddy & Thulborn, 1996; Lee & Dapretto, 2006). 
In addition, the right hemisphere has also been found to be important for the processing of some linguistic functions such as discourse, metaphoric language, affective prosody and sentence-level intonation in studies in both children (Dapretto, Lee & Caplan, 2005; Plante, Holland & Schmithorst, 2006) and adults (Caplan & Dapretto, 2001; Ethofer, Anders, Wiethoff, Erb, Herbert, Saur, Grodd & Wildgruber, 2006; Lee & Dapretto, 2006; Plante, Creusere & Sabin, 2002; Tong, Gandour, Talavage, Wong, Dzemidzic, Xu, Li & Lowe, 2005).