SUMMARY

Recent anatomical, physiological and neuroimaging findings indicate multisensory convergence at early, putatively unisensory stages of cortical processing. The objective of this study was to confirm somatosensory-auditory interaction in AI, and to define both its physiological mechanisms and its consequences for auditory information processing. Laminar current source density and multiunit activity sampled during multielectrode penetrations of primary auditory area AI in awake macaques revealed clear somatoauditory interactions, with a novel mechanism: somatosensory inputs appear to reset the phase of ongoing neuronal oscillations, so that accompanying auditory inputs arrive during an ideal, high excitability phase, and produce amplified neuronal responses. In contrast, responses to auditory inputs arriving during the opposing low-excitability phase tend to be suppressed. Our findings underscore the instrumental role of neuronal oscillations in cortical operations. The timing and laminar profile of the multisensory interactions in AI indicate that nonspecific thalamic systems may play a key role in the effect.

INTRODUCTION

The sensation given by rubbing one’s fingers over a rough surface is both amplified and changed in quality by hearing the associated sound. This is referred to as the “Parchment-Skin Illusion” (Jousmaki and Hari, 1998), and the underlying somatosensory-auditory interaction in the brain also affects auditory sensation. In the so-called “Hearing Hands Effect”, lightly touching a vibrating probe dramatically changes the perception of an audible vibration (Schurmann et al., 2004). Findings like these, and the neurophysiological investigations that they have inspired, have opened a fascinating view into the workings of sensory processing at early cortical stages, and have contributed to a significant change in the way that we think about the merging of sensory information in cortical processing (reviewed by Ghazanfar and Schroeder, 2006). The most provocative recent discovery concerning multisensory interaction is that it can occur very early in cortical processing, in putatively unisensory cortical regions (reviewed by Schroeder and Foxe, 2005). To explore the neuronal mechanisms and functional significance of low-level multisensory interaction, we focus on the auditory cortex, the system in which these effects are best known.

Non-auditory modulation of neuronal activity in areas of the supratemporal plane in and near primary auditory cortex is suggested by hemodynamic studies in both humans (Calvert et al., 1997; Foxe et al., 2002; Atteveldt et al., 2004; Pekkola et al., 2005) and monkeys (Kayser et al., 2005). Anatomical studies in monkeys show that auditory cortices including AI are directly connected to visual cortex (Falchier et al., 2002) and somatosensory cortex (Cappe and Barone, 2005). All of the auditory cortices examined to date by electrophysiological studies in monkeys display some type of multisensory responsiveness, involving vision (Brosch et al., 2005; Ghazanfar et al., 2005; Schroeder and Foxe, 2002), eye position (Werner-Reiss et al., 2003; Fu et al., 2004) and/or somatosensation (Schroeder et al., 2001; Fu et al., 2003; Brosch et al., 2005). Most remarkably, there is evidence that even at the primary cortical level in AI, neuronal activity can be modulated by nonauditory influences (Werner-Reiss et al., 2003; Fu et al., 2004; Brosch et al., 2005; Ghazanfar et al., 2005).

The goal of this study was to confirm somatosensory-auditory interaction in AI, and to define both its physiological mechanisms and its consequences for auditory information processing. We analyzed laminar current source density (CSD) and multiunit activity (MUA) sampled during multielectrode penetrations of primary cortical area AI in awake macaque monkeys. This approach provides two distinct advantages for our studies (Schroeder et al., 1998; Lipton et al., 2006). First, because CSD analysis indexes the transmembrane currents comprising the first order synaptic response, it provides a sensitive measure of synaptic activity whether or not it leads to changes in local neuronal firing patterns (as measured by MUA). Second, because the recordings sample all layers simultaneously, we can define and quantify laminar activation profiles, thus generating evidence regarding the relative contributions of lemniscal and extralemniscal thalamic inputs, as well as those of cortical inputs (Schroeder et al., 2003).
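To illustrate why CSD isolates local transmembrane currents, the sketch below applies the widely used three-point second-spatial-derivative estimate to field potentials from equally spaced laminar contacts. It is a minimal illustration, not necessarily the estimator used in this study: the function name, the contact spacing, the sign convention (sinks negative) and the omission of tissue conductivity are all assumptions.

```python
def csd_second_derivative(potentials, spacing_mm=0.1):
    """Estimate CSD at the interior contacts of a linear electrode array.

    Uses CSD[i] = -(phi[i-1] - 2*phi[i] + phi[i+1]) / h**2, so a local
    negativity in the field potential yields a negative value (a sink
    under this assumed sign convention). Conductivity is omitted, so
    the result is in arbitrary units.
    """
    h2 = spacing_mm ** 2
    return [
        -(potentials[i - 1] - 2.0 * potentials[i] + potentials[i + 1]) / h2
        for i in range(1, len(potentials) - 1)
    ]


# Hypothetical potentials (arbitrary units) with a trough at the middle contact.
example_profile = [0.0, 0.0, -1.0, 0.0, 0.0]
example_csd = csd_second_derivative(example_profile, spacing_mm=1.0)
```

A potential that changes linearly with depth, as produced by a distant generator, yields zero CSD under this estimate, whereas a local trough yields a sink flanked by sources.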

Since both the somatosensory event related response and the effect of somatosensory stimuli on auditory processing in AI appeared to be modulatory, we tested the specific hypothesis that somatosensory input affects auditory processing by modulating the phase of ongoing local neuronal oscillations. This hypothesis is based on two key observations. First, processing is EEG phase dependent; that is, the momentary high- or low-excitability state of a neuronal ensemble in AI is controlled by the phase of its ongoing oscillatory activity, and momentary excitability state has a determinative effect on the processing of transient stimuli (Kruglikov and Schiff, 2003; Lakatos et al., 2005). Second, transient stimuli, both auditory and nonauditory, can reset the phase of the ongoing oscillations (Lakatos et al., 2005). Thus, we reasoned that a somatosensory-induced reset of local oscillatory activity to an optimal excitability phase would enhance the ensemble response to temporally correlated auditory input. Our findings support this hypothesis and underscore the instrumental role of neuronal oscillations in cortical operations.

RESULTS

Laminar profile of auditory versus somatosensory responses in AI

Auditory and somatosensory event related responses were recorded in 38 electrode penetrations distributed evenly along the tonotopic axis of AI in six monkeys (15, 10, 4, 4, 3, and 2 penetrations). No statistically significant difference between monkeys was observed for any of the response parameters (one-way MANOVAs, p values > 0.05 for the main effect, i.e., monkey) described below. The characteristic frequency of the different AI sites ranged from 0.3 kHz to 32 kHz.

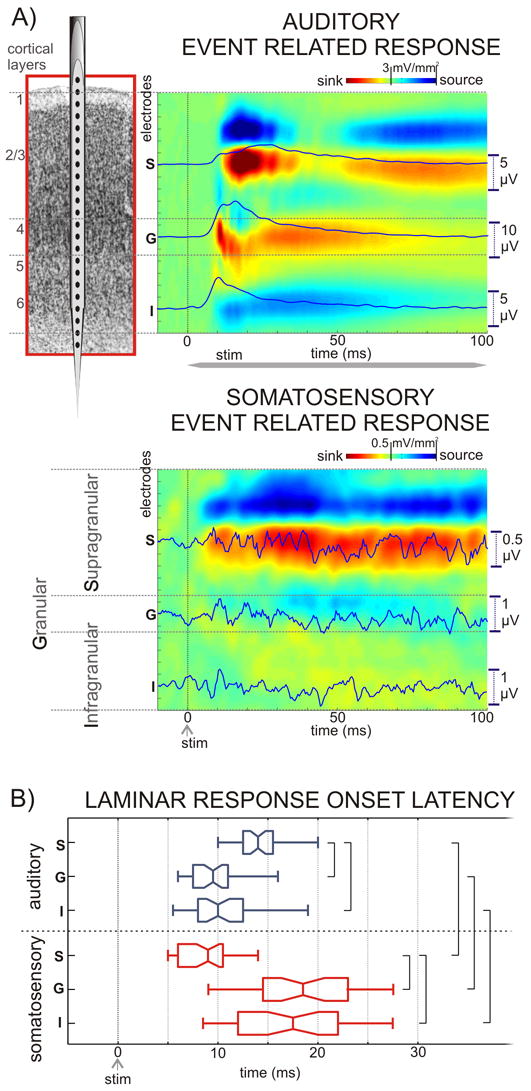

Pure tone stimulation at one representative site’s characteristic frequency produces activation of all cortical layers (Fig. 1A., upper color-map) with initial postsynaptic response (current sink with a concomitant increase in action potentials) in Lamina 4, followed by later responses in the extragranular laminae. To quantify this and other observations, for each CSD profile (recording site), we selected the supragranular (S), granular (G), and infragranular (I) channel with the largest activation for quantitative analysis (Fig. 1B.). Across the entire data set (n=38), activation of the S layers (mean: 14.6 ms, standard deviation (SD): 2.8) occurred significantly later (Games-Howell test, p<0.01) than that of the G layers (mean: 9.5 ms, SD: 2.3). The I layer response appeared to lag the G layer response slightly, but this effect was not statistically significant. The overall pattern is regarded as a ‘feedforward’ type activation profile (Schroeder et al., 1998; Fu et al., 2004; Lipton et al., 2006).

Figure 1. Laminar profiles of auditory and somatosensory event related responses in area AI of the auditory cortex.

A) Field potentials (used to calculate the CSD) and MUA were recorded concomitantly with a linear-array multi-contact electrode positioned to sample from all cortical layers. Laminar boundaries were determined based on functional criteria (see Experimental Procedures). Color-maps show the laminar profiles of a representative characteristic frequency tone and a somatosensory stimulus related averaged CSD (98 and 95 sweeps respectively), recorded in the same location. Current sinks (net inward transmembrane current) are red and current sources (net outward transmembrane current) are blue. Based on their largest amplitude in the auditory CSD one electrode was selected in each layer (S, G, and I) for quantitative analysis. Overlaid traces show MUA in the selected channels. B) Box-plots show pooled onset latencies of the characteristic frequency tone (blue) and somatosensory stimulus (red) related CSD in the selected channels for all experiments. The boxes have lines at the lower quartile, median, and upper quartile values while the notches in boxes graphically show the 95% confidence interval about the median of each distribution. Brackets indicate the significant post hoc comparisons calculated using Games-Howell tests (p<0.01).

In contrast to the auditory event related response, the somatosensory event related response (Fig. 1 middle) is much less intense. In fact, despite the consistent indication of an organized stimulus-related CSD response, it has no consistent phasic MUA correlate. Thus, the somatosensory input by itself does not appear “effective”, in that it does not drive activation over the action potential threshold in most local neurons. In other words, rather than conveying specific information, the somatosensory input appears to be “modulatory” in character. Compounding this observation, the somatosensory CSD response does not fit the simple feedforward (granular followed by extragranular excitation) pattern. The CSD amplitude distribution appears heavily biased toward the S layers, to the extent that the G and I layer responses are barely apparent (Fig. 1A, lower). Quantification of laminar onset profile was hampered by the very low amplitude of the somatosensory response in the lower layers in some of the experiments. Specifically, in 8 of the 38 AI sites the onset latencies for the G and I layers could not be determined, despite the presence of a clear event related supragranular response. However, quantification of latencies across the other 30 sites showed that, unlike in the auditory event related response, the supragranular onset latency was significantly (Games-Howell tests, p<0.01) earlier than in the lower layers (supragranular mean: 8.9 ms, SD: 2.7; granular mean: 18.7 ms, SD: 5.7; infragranular mean: 17.8 ms, SD: 6.3). To examine the interaction effect of stimulus and layer on the response latency, a 2 × 3 (Stimulus × Layer) ANOVA was employed, using a 0.01 criterion of statistical significance. There was a significant interaction between stimulus and layer, F(2, 206) = 69.651, p < 0.001, with somatosensory response onset latency being earlier in the supragranular, and later in the granular and infragranular layers than the co-located auditory response.

Auditory-somatosensory interactions

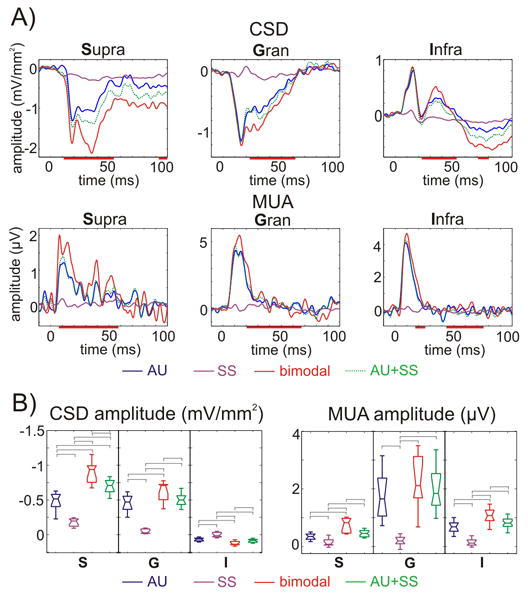

To test for the interaction between auditory and somatosensory stimulation, we presented 40 dB clicks and median nerve pulses (see Experimental Procedures) separately, and then compared the responses to those resulting from presenting somatosensory and auditory stimuli simultaneously. These comparisons are illustrated using the S, G, and I responses from an individual experiment in Figure 2A. Compared to the unisensory responses or the arithmetic sum of these, simultaneous stimulation led to larger activations, reflected in both CSD and MUA, meaning that the bimodal response was super-additive. In the case presented in Figure 2A, multisensory enhancement was greatest in the supragranular layers, which was true for the pooled data (Fig. 2B) as well. This interaction effect in the event related CSD of the supragranular layers started as early as the auditory response onset, and reached its peak between 30–40 ms post-stimulus. The interaction in the granular and infragranular layers was smaller in amplitude, and started about 10 ms later (Fig. 2A). To quantify the enhancement of the bimodal response compared to the unimodal, CSD and MUA response amplitudes were averaged over the 15–60 ms time-window, and then single trial bimodal response amplitudes were compared to the arithmetic sum of average unimodal response amplitudes using one-sample t-tests. In the pooled data (Figure 2B), both CSD and MUA amplitudes showed a significant super-additive enhancement in all layers (Games-Howell tests, p<0.01), with the exception of the granular MUA. To test whether there are any differences across different characteristic frequency (CF) regions of A1 in the onset latency and amplitude of auditory, somatosensory responses and bimodal facilitation, we grouped the data according to CF in three categories: low (0.3–1.5 kHz, n = 14), middle (2–8 kHz, n = 10) and high (11–32 kHz, n = 14) frequency regions. 
Besides the significant differences in the onset latency of responses to auditory stimuli (CF tones and click) described in detail elsewhere (Lakatos et al., 2005b), none of the variables showed CF dependent significant differences (ANOVA, p>0.05).
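The super-additivity comparison described above (single trial bimodal amplitudes tested against the arithmetic sum of the averaged unimodal amplitudes) can be sketched as a one-sample t statistic. This is a minimal illustration under stated assumptions: the function name and the amplitude values are hypothetical, and the p value would still have to be read from a t distribution with the returned degrees of freedom.

```python
import math
import statistics


def superadditivity_t(bimodal_trials, auditory_trials, somato_trials):
    """One-sample t statistic for super-additivity.

    The reference value is the sum of the mean unimodal amplitudes;
    the sample is the set of single-trial bimodal amplitudes (all
    averaged over the same post-stimulus window).
    Returns (t statistic, degrees of freedom).
    """
    reference = statistics.fmean(auditory_trials) + statistics.fmean(somato_trials)
    n = len(bimodal_trials)
    standard_error = statistics.stdev(bimodal_trials) / math.sqrt(n)
    t_stat = (statistics.fmean(bimodal_trials) - reference) / standard_error
    return t_stat, n - 1


# Hypothetical single-trial amplitudes (arbitrary units), not data from the study.
t_stat, dof = superadditivity_t(
    bimodal_trials=[5.0, 6.0, 7.0],
    auditory_trials=[2.0, 2.0],
    somato_trials=[1.0, 1.0],
)
```

A positive t statistic that exceeds the critical value at the chosen criterion (p<0.01 in the text) indicates that the bimodal response is larger than the sum of the unimodal responses.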

Figure 2. Auditory, somatosensory and bimodal event related responses.

A) CSD (upper) and MUA (lower) responses to auditory, somatosensory and bimodal stimuli on the selected supragranular (S), granular (G), and infragranular (I) channels (from the same site as Fig. 1). Green dotted line shows the arithmetic sum of the unimodal responses. Red lines on the time-axis denote time intervals where the averaged bimodal responses were significantly (independent-samples t-tests, p<0.01) greater than the sum of the averaged unimodal responses in the pooled data (n=38). B) Box-plots show pooled (n=38) CSD and MUA amplitudes on the selected channels (S, G, and I) averaged for the 15–60 ms time interval for the same conditions as panel A. Brackets indicate the significant post hoc comparisons calculated using Games-Howell tests (p<0.01).

Principle of inverse effectiveness

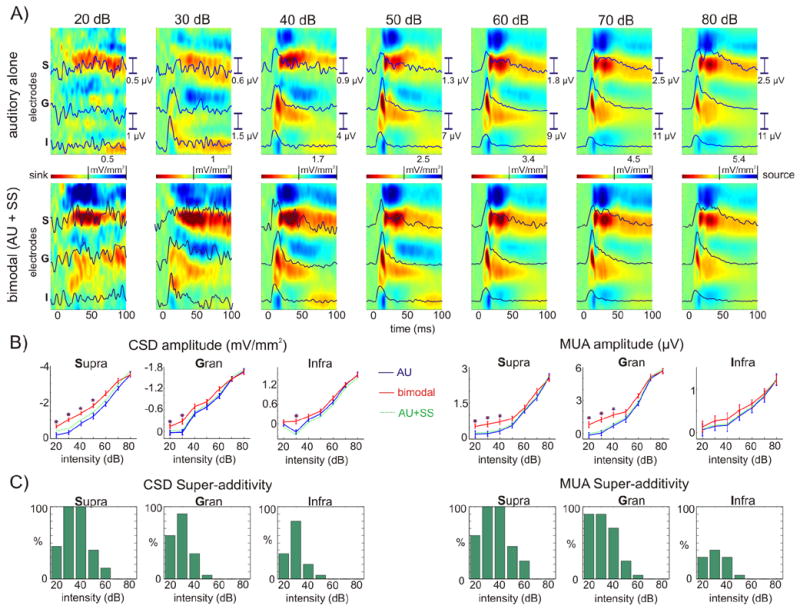

One of the best-agreed-upon observations about multisensory interactions is that they are strongest with stimuli which, when presented alone, are minimally effective in eliciting a neural response (Stein and Meredith, 1993). To test whether the principle of inverse effectiveness applies to the multisensory enhancement described above, we presented different intensity auditory clicks (20–80 dB), both in isolation and paired with somatosensory stimuli. Figure 3 illustrates the comparison of unisensory auditory responses with multisensory responses, as a function of auditory stimulus effectiveness (intensity), holding the somatosensory stimulus constant. Predictably, with unisensory auditory stimulation, response amplitude increased with increasing stimulus intensity (Fig. 3A, upper). At the lowest intensity there was only minimal stimulus related activation in the supragranular layers, and virtually no activity in the granular or infragranular layers; the contrast between the laminar profiles of threshold and suprathreshold auditory responses suggests that these may be promoted by different input mechanisms (see discussion, “Anatomical substrates for multisensory interaction in AI”). The coincident presentation of a somatosensory stimulus at the lowest intensity resulted in definitive Layer 4 CSD and MUA responses (Fig. 3A, lower). Analysis of single trial (CSD and MUA) response amplitudes in the 15–60 ms time interval (Fig. 3B) revealed that bimodal response amplitudes were significantly larger than the sum of the unimodal averaged responses (one-sample t-tests, p<0.01), i.e. multisensory enhancement was significantly super-additive under specific conditions, and the effect pattern generally adhered to the inverse effectiveness principle. At 30 dB the multisensory response is significantly super-additive in all of the layers with the exception of the infragranular MUA, where the enhancement did not reach significance. 
The most robust multisensory enhancement was in the supragranular layers. In this location, super-additivity was significant for intensities of 50 dB and below; at higher intensities the effect appeared simply additive. We observed the dependence of super-additivity on the intensity of the auditory stimulus in all of our experiments. Figure 3C shows the percentage of experiments at each intensity for each laminar grouping, where the multisensory interaction was significantly super-additive. These results are in line with previous multisensory studies which tested the principle of inverse effectiveness on monkey LFPs in A1 (Ghazanfar et al., 2005) and for human ERPs (Callan et al., 2001) in the auditory-visual domain.

Figure 3. Super-additivity and inverse effectiveness.

A) Color-maps show the laminar profiles of auditory (upper) and bimodal (lower) CSD responses at different auditory stimulus intensities. Overlaid traces show MUA in the selected supragranular (S), granular (G), and infragranular (I) channels. B) Line-plots show single trial CSD and MUA amplitudes on the selected channels (S, G, and I) averaged for the 15–60 ms time interval. Error-bars represent standard error; stars denote where the single trial bimodal response amplitudes were significantly larger than the arithmetic sum of the unimodal responses (one-sample t-tests, p<0.01). C) Bar graphs show the percentage of experiments (out of a total of 20) at each auditory intensity, where single trial bimodal response amplitudes (CSD and MUA) were significantly larger than the arithmetic sum of the unimodal responses in each layer.

Temporal principle of multisensory interaction

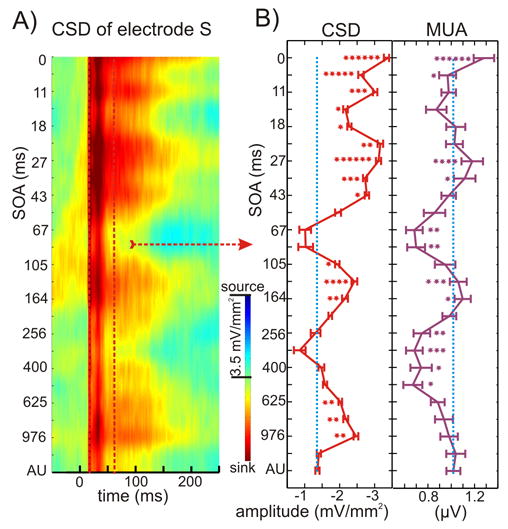

This principle refers to the fact that interaction is most likely for stimuli that overlap in time (Stein and Meredith, 1993). To evaluate adherence to this principle, we performed 6 experiments in three animals with paired stimuli, in which the somatosensory-auditory stimulus onset asynchrony (SOA) was varied between 0 (simultaneous stimuli) and 1220 ms (see the 4th paradigm in Experimental Procedures). Figure 4 shows the results for the supragranular site (where multisensory effects were largest) in one representative experiment. Color-map on the left shows the CSD of the selected supragranular channel as a function of SOA. Quantitative analysis of the single trial CSD and MUA responses over the 15–60 ms time interval (Fig. 4B) shows that – as expected – simultaneous presentation (0 ms SOA) of somatosensory and auditory stimuli results in the largest activation, which was significantly greater than the activation related to the auditory stimulus presented alone in all of the experiments (independent-samples t-tests, p<0.01; number of stars in the figure indicate how many experiments have significant differences in activation at a given SOA).

Figure 4. Effect of somatosensory-auditory SOA on the supragranular bimodal response.

A) Color-map shows the event related CSD of the supragranular channel (S, see Fig. 1) in area AI for different somatosensory-auditory SOAs. Increasing SOAs are mapped to the y-axis from top to bottom, with 0 on top corresponding to simultaneous auditory-somatosensory stimulation. AU in the bottom represents the auditory alone condition. Red dotted lines denote the 20–60 ms time interval for which we averaged the CSD and MUA in single trials for quantitative analysis. B) Traces show mean CSD and MUA amplitude values (x-axis) for the 20–60 ms auditory post-stimulus time interval (error-bars show standard errors) with different somatosensory-auditory SOAs (y-axis). Blue dotted line denotes the mean amplitude of the auditory alone response. At a given SOA, independent-samples t-tests were used for all six experiments (bimodal response amplitude in each experiment was compared to the response amplitude of the auditory alone condition). The number of stars at a given SOA indicates how many experiments have significant differences (independent-samples t-tests, p<0.01) in bimodal activation.

While this finding generally adheres to the temporal principle, there is an interesting structure to the effects. In addition to zero SOA, there were three additional SOA ranges, centered around 27 ms, 140 ms and 781 ms, that consistently yielded significant multisensory enhancement. Intriguingly, these “effective” SOA values correspond to the periods of well-known gamma, theta, and delta band EEG oscillations that comprise the essential structure of spontaneous activity in AI (Lakatos et al., 2005a). Also intriguing is the fact that at intermediate SOA ranges (centered around 14 ms, 67 ms and 320 ms) the paired stimulus response was smaller than the response to the unimodal auditory stimulus by itself. These observations suggest that the mechanism by which somatosensory inputs modulate auditory responses may involve alteration in the phase of ongoing oscillations in the local neuronal ensemble. This issue will be dealt with further in a subsequent section.
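The arithmetic behind this correspondence can be made explicit. The sketch below converts each enhancing SOA to the frequency whose period it equals, and then asks at what phase the matching suppressive SOA falls. It assumes, purely for illustration, that the oscillation is reset at the somatosensory stimulus and that each enhancing SOA equals exactly one period; the function names are hypothetical.

```python
def soa_to_frequency_hz(soa_ms):
    """Frequency (Hz) of an oscillation whose period equals the SOA."""
    return 1000.0 / soa_ms


def phase_at_soa_deg(soa_ms, period_ms):
    """Phase (degrees) reached after soa_ms by an oscillation of the given
    period, taking the phase at the moment of reset as 0 degrees."""
    return (360.0 * soa_ms / period_ms) % 360.0


enhancing_soas_ms = [27.0, 140.0, 781.0]    # SOAs with enhancement (from the text)
suppressing_soas_ms = [14.0, 67.0, 320.0]   # SOAs with suppression (from the text)

frequencies_hz = [soa_to_frequency_hz(soa) for soa in enhancing_soas_ms]
suppressive_phases_deg = [
    phase_at_soa_deg(soa, period)
    for soa, period in zip(suppressing_soas_ms, enhancing_soas_ms)
]

for f, ph in zip(frequencies_hz, suppressive_phases_deg):
    print(f"{f:6.2f} Hz oscillation, suppressive SOA at {ph:5.1f} degrees")
```

Under these assumptions the enhancing SOAs map to roughly 37 Hz, 7 Hz and 1.3 Hz, and the suppressive SOAs fall between about 147 and 187 degrees, that is, closer to counter phase than to the phase at which enhancement occurs.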

Spatial principle of multisensory interaction

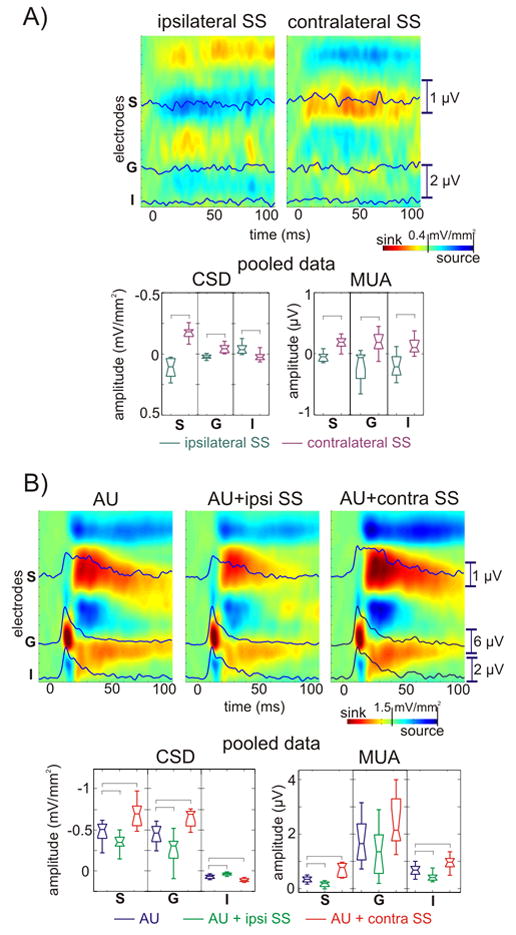

For technical reasons, we were unable to conduct a well-controlled systematic evaluation of the degree to which multisensory interactions in AI depend on the spatial alignment of auditory and somatosensory stimuli. However, we did compare the effects of ipsilateral versus contralateral somatosensory stimulation in 20 of the experiments. As in the case of bilateral somatosensory stimulation shown above (Fig. 1), the laminar positions of sources and sinks in the contralateral somatosensory CSD response (Fig. 5A, right) are similar to those observed in the co-located auditory response (Fig. 5B, left). The ipsilateral somatosensory response profile (Fig. 5A, left) presents a remarkable contrast to both of these response profiles; the laminar pattern of sources and sinks following ipsilateral somatosensory stimulation is essentially opposite to that seen with either auditory or contralateral somatosensory stimulation. The ipsilateral-contralateral difference was observed in all 20 experiments (Fig. 5A, lower) and was statistically significant for the 15–60 ms time window (independent-samples t-tests, p<0.01). Pairing ipsilateral and contralateral somatosensory stimulation with auditory stimulation revealed that the modulatory effects of each on auditory stimulus processing were also opposite in sign. While contralateral stimulation enhanced the auditory response, ipsilateral stimulation caused suppression. Like the multisensory enhancement, this effect (Fig. 5B, lower) was largest in the supragranular layers, but it was significant for all layers in the pooled data (Games-Howell tests, p<0.01) with the exception of the granular MUA. There was no significant difference between the enhancement caused by bilateral and contralateral somatosensory stimuli.

Figure 5. Ipsi- and contralateral somatosensory event related responses in area AI and their effect on auditory stimulus processing.

A) Color-maps show ipsi- and contralateral somatosensory event related CSD profiles. Overlaid traces show MUA in the selected channels for each cortical layer. Box-plots show pooled averaged CSD and MUA response amplitudes to ipsi- and contralateral somatosensory stimuli on the selected channels for the 15–60 ms time interval. Brackets indicate significant differences between ipsilateral and contralateral conditions calculated using independent-samples t-tests (p<0.01). B) Color-maps with overlaid traces show CSD and MUA of unimodal auditory, and bimodal auditory + ipsilateral and auditory + contralateral somatosensory responses. Box-plots show pooled averaged CSD and MUA response amplitudes to unimodal auditory, auditory + ipsilateral and auditory + contralateral somatosensory stimuli on the selected channels for the 15–60 ms time interval. Brackets indicate the significant post hoc comparisons calculated using Games-Howell tests (p<0.01). There was no significant difference between the response amplitudes to auditory + contralateral and auditory + bilateral somatosensory stimuli (for auditory + bilateral somatosensory response amplitudes in the same paradigm see Fig. 2B.)

Oscillatory mechanisms of multisensory interaction in AI

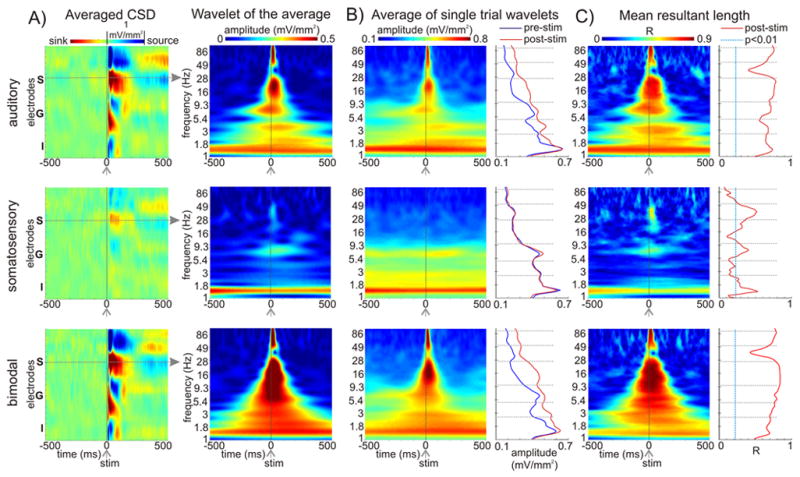

We analyzed the oscillatory components of unimodal and bimodal responses using Morlet wavelet decomposition (see Experimental Procedures). Color-maps on the left in Figure 6A show averaged CSD profiles in response to auditory, somatosensory, and bimodal stimulation. To the right of these, time-frequency plots show the wavelet decomposition of the averaged CSD response in the indicated supragranular site. This analysis defines the amplitudes of ‘phase locked’ oscillations, which survive averaging of the single trial responses. It is clear that oscillations in the bimodal condition have the largest amplitude across the spectrum, with the possible exception of the low delta (~1.3 Hz) band. The spectral content of the unisensory auditory response is very similar to that of the bimodal response, but lower in amplitude. In contrast, the somatosensory ‘phase locked’ oscillations appear confined to three relatively distinct frequency bands, low-delta (~ 1.3 Hz), theta (~ 7 Hz) and gamma (~ 35 Hz) bands. Also, the amplitude of the oscillations is much lower than in either the auditory or the bimodal cases.

Figure 6. Oscillatory properties of auditory, somatosensory and bimodal responses.

A) Color-maps to the left show the laminar profiles of auditory, somatosensory and bimodal event related averaged CSD responses for the −500 to 500 ms timeframe. Time-frequency plots to the right show oscillatory amplitudes of the supragranular (S) averaged responses for the same timeframe (x-axis) with frequency on the logarithmic y-axis. B) Time-frequency plots show the average oscillatory amplitude of the wavelet transformed single trials. The traces to the right show the pre- (blue, −500 –−250 ms) and post-stimulus (red, 0 – 250 ms) amplitudes (x-axis) at different frequencies (y axis). Grey dotted lines indicate the frequency intervals used for quantitative analysis (see Fig. 7). Frequency bands were chosen based on results from previous studies. C) Time-frequency plots show the mean resultant length (R) of the single trial phases at different times/frequencies. This value will be 1 if at a given time point the oscillatory phase is the same in each trial, and will be 0 if the oscillatory phase is random (see Experimental Procedures). Traces to the right show the mean resultant length at 15 ms post-stimulus. Blue dotted line depicts the threshold for significant deviation from a uniform (random) phase distribution (Rayleigh’s uniformity tests, p = 0.01).

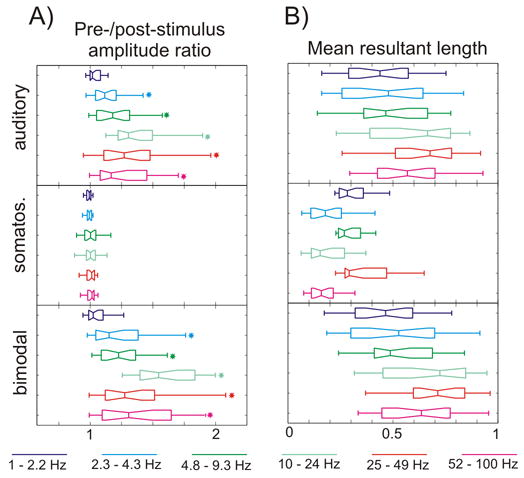

Phase-locked oscillations can be produced by a stimulus-evoked neuronal response, by stimulus-induced phase resetting of ongoing oscillations, or by a combination of the two. According to earlier analyses (Makeig et al., 2004; Shah et al., 2004), evoked responses are accompanied by a pre- to post-stimulus power increase in the single-trial responses, while pure phase-resetting results in a pre- to post-stimulus inter-trial phase synchrony increase, without an accompanying power increase. To define stimulus-related power increases, for each condition, we computed the wavelet amplitudes of the single trial responses and averaged them, which is shown in Figure 6B. It is clear that the auditory and bimodal events cause a large amplitude increase across the spectrum, with the exception of the low-delta oscillations. The comparison of the time averaged pre- (−500 to −250 ms) and post-stimulus (0–250 ms) oscillations to the right of the frequency maps reveals that the largest amplitude increase occurs in the high-delta (2.3–4 Hz), beta (10–24 Hz), and high-gamma (52–100 Hz) frequency bands (for quantitative analyses using one-sample t-tests, see Fig. 7A). It is also clear that the bimodal stimulus related oscillations are larger in these bands (similar to the results of previous human studies: Sakowitz et al., 2001, 2005; Senkowski et al., 2005, 2006; Kaiser et al., 2005). In contrast, there is no significant somatosensory event related power increase in any of the frequency bands; the post-stimulus spectrum matches the pre-stimulus spectrum almost perfectly.
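The distinction drawn above between an evoked response and pure phase resetting can be illustrated with a toy simulation. In the sketch below, every trial contains a unit-amplitude oscillation whose phase is random before the stimulus and fixed after it. All parameters (8 Hz, 1 kHz sampling, 250-sample windows, 200 trials, reset to phase π) are assumptions chosen for illustration, and amplitude is estimated by a single-frequency Fourier projection rather than the Morlet wavelet used in the study.

```python
import cmath
import math
import random

FS_HZ = 1000.0    # sampling rate (assumed)
FREQ_HZ = 8.0     # theta-range oscillation (assumed)
N_SAMPLES = 250   # 250 ms window, exactly two cycles at 8 Hz
N_TRIALS = 200


def oscillation(phase):
    """Unit-amplitude cosine with the given starting phase."""
    return [math.cos(2.0 * math.pi * FREQ_HZ * k / FS_HZ + phase)
            for k in range(N_SAMPLES)]


def amplitude(signal):
    """Amplitude at FREQ_HZ via projection onto a complex exponential."""
    acc = sum(x * cmath.exp(-2j * math.pi * FREQ_HZ * k / FS_HZ)
              for k, x in enumerate(signal))
    return abs(2.0 * acc / len(signal))


def average(trials):
    """Sample-by-sample average across trials."""
    return [sum(column) / len(trials) for column in zip(*trials)]


rng = random.Random(0)
pre_trials = [oscillation(rng.uniform(-math.pi, math.pi)) for _ in range(N_TRIALS)]
post_trials = [oscillation(math.pi) for _ in range(N_TRIALS)]  # phase reset, same amplitude

# Mean single-trial amplitude is unchanged by the reset...
single_trial_ratio = (sum(amplitude(t) for t in post_trials)
                      / sum(amplitude(t) for t in pre_trials))
# ...but the averaged waveform only shows the oscillation after the reset.
averaged_pre = amplitude(average(pre_trials))
averaged_post = amplitude(average(post_trials))
```

In this toy case the post-/pre-stimulus ratio of single trial amplitudes stays at 1, while the amplitude of the averaged waveform rises from near zero to 1. This is the signature attributed to pure phase resetting: more inter-trial phase synchrony without a single trial power increase.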

Figure 7. Event related single trial oscillatory amplitudes and phase concentration.

A) Pooled (n=38) post-/pre-stimulus single trial oscillatory amplitude ratio (0 to 250 ms / −500 to −250 ms) for different frequency intervals (different colors) of the auditory, somatosensory and bimodal supragranular responses. Stars denote where the amplitude ratio is significantly different from 1 (one-sample t-tests, p<0.01). B) Pooled mean resultant length values at 15 ms post-stimulus. Note that in the case of somatosensory events, significant phase concentration only occurs in the low-delta (1–2.2 Hz), theta (4.8–9.3 Hz) and gamma (25–49 Hz) bands.

One way to show event related phase synchrony is to compute the mean resultant length of the different frequency oscillatory phases, which indicates how well a circular distribution is described as unimodal. This value will be 1 if at a given time point the oscillatory phase is the same in each trial, and will be 0 if the oscillatory phase is random. The results from the analysis of an individual recording are plotted in Figure 6C and quantitative analysis is shown in Figure 7B. While auditory and bimodal events result in a non-random phase distribution all across the spectrum, with phase concentration being larger in the case of bimodal events, somatosensory events cause discrete stimulus related phase concentration of the low-delta, theta, and gamma oscillations, which are the oscillations present in the pre-stimulus spectrum. The variable that appears to determine the degree of phase resetting is stimulus effectiveness. Figures 6 and 7B show that auditory stimuli cause larger phase resetting than somatosensory stimuli, and that bimodal stimuli have the largest effect on the phase of ongoing oscillations (higher mean resultant length values at dominant ambient oscillatory frequencies). It is unlikely that phase resetting or amplitude effects are merely a result of cross-modal summation in the bimodal condition, because the vast majority of bimodal enhancement effects occur in frequency bands where somatosensory stimulation by itself produces no detectable stimulus related power increase (see Figures 6 and 7).
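The mean resultant length described above can be computed by treating each single trial phase as a unit vector and taking the length of the vector average. The sketch below is a minimal illustration. The function names are hypothetical, and the p value uses the first-order large-sample approximation to Rayleigh's test, p ≈ exp(−nR²), which is an assumption and may differ from the exact procedure used in the study.

```python
import cmath
import math


def mean_resultant_length(phases):
    """Length R of the average of unit vectors exp(i*phase), 0 <= R <= 1."""
    return abs(sum(cmath.exp(1j * p) for p in phases) / len(phases))


def rayleigh_p_approx(phases):
    """Large-sample approximation to the Rayleigh test p value: exp(-n * R**2)."""
    n = len(phases)
    z = n * mean_resultant_length(phases) ** 2
    return math.exp(-z)


# Hypothetical phase samples (radians), not data from the study.
identical_phases = [0.5] * 10                                # same phase on every trial
evenly_spread_phases = [2.0 * math.pi * k / 8 for k in range(8)]  # maximally dispersed

r_identical = mean_resultant_length(identical_phases)
r_spread = mean_resultant_length(evenly_spread_phases)
```

Identical phases give R = 1 and evenly dispersed phases give R = 0, matching the two limiting cases stated in the text.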

To summarize, auditory and bimodal events cause amplitude increase and phase concentration over the entire spectrum, with both variables being larger in the case of bimodal events, which explains the large oscillatory amplitude difference in the averaged oscillations (wavelet of the average, see Fig. 6A). The somatosensory averaged waveform, in contrast, mainly results from an event related phase concentration (phase-resetting) of the pre-stimulus, or spontaneous, oscillations, which show no significant stimulus related amplitude increase.

Next we tested whether the somatosensory event resets the spontaneous oscillations in a manner that could explain the multisensory effects in area AI. A previous study shows that pre-stimulus oscillatory phase influences the amplitude of the auditory response in AI: there are ‘ideal’ and ‘worst’ phases, during which stimulus responsiveness is enhanced and suppressed respectively (Lakatos et al., 2005a). Comparison of contralateral and ipsilateral somatosensory stimulation effects in this study also shows that the somatosensory response can influence the amplitude of the auditory response either by enhancement or suppression.

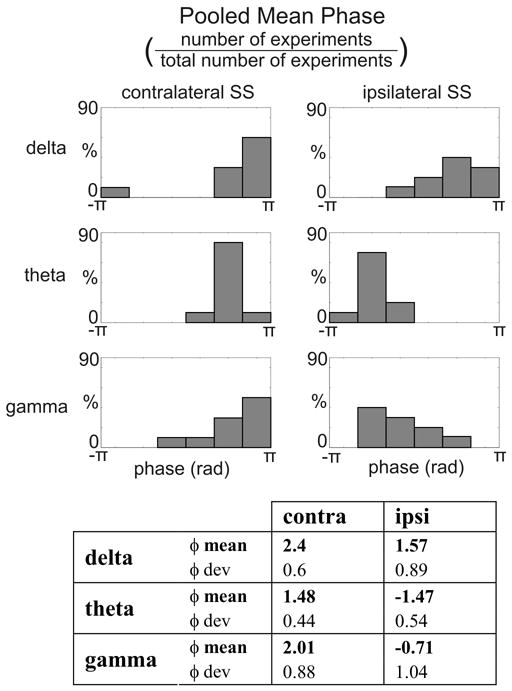

To compare the effects of phase resetting in the case of contra- vs. ipsilateral conditions, for each frequency band with significant phase concentration (the low-delta, theta, and gamma bands, see above) we determined the dominant frequency at 15 ms post-stimulus (the average auditory response onset in the supragranular layers). These frequencies were 1.7 Hz (SD: 0.31) in the delta, 7 Hz (SD: 1.3) in the theta, and 36.8 Hz (SD: 5.5) in the gamma band for contralateral stimuli, and they were not significantly different from those of ipsilateral stimuli (paired-samples t-tests, p>0.05). The distribution of mean phases was non-uniform in both cases for all of the dominant frequency oscillations (Rayleigh’s uniformity tests, p<0.01). In the case of contralateral stimulation the mean phases grouped before and around the negative peak of each of the oscillations (+/−π in Fig. 8), which according to our earlier studies, corresponds to the ideal excitatory phase of spontaneous oscillations. This explains how phase-resetting of these oscillations by somatosensory stimuli can result in the amplification of the subsequent auditory response.

Figure 8. Contra- and ipsilateral somatosensory event related phase at the dominant frequencies.

A) Pooled mean delta, theta and gamma oscillatory phase associated with contra- and ipsilateral somatosensory stimulation on the selected supragranular electrode. Mean phase values are derived from single trial wavelet phases at 15 ms post-stimulus (average auditory onset latency in the supragranular layers) in each experiment. Bar graphs show the percentage of experiments (out of a total of 20) where the mean phase fell into a given phase bin (6 bins from −π to π). Table shows the pooled mean phase values of the dominant oscillations and angular deviance of the means at 15 ms post-stimulus.

In the case of ipsilateral stimuli, while the delta phase distribution roughly matched that of the contralateral stimuli, the event related theta and gamma oscillations were in counter phase. The phase of these oscillations corresponded to the worst phase of spontaneous oscillations, thereby explaining how ipsilateral stimuli cause an attenuation of the auditory response if the stimuli are presented concurrently. Statistical analysis (nonparametric test for the equality of circular means: Fisher, 1993; Rizzuto et al., 2006) also showed that the theta and gamma frequency event related oscillatory phases were significantly different (p<0.01) from the contralateral oscillatory phases.

DISCUSSION

This study examined somatosensory influences on auditory stimulus processing in primary auditory cortex (area AI). Somatosensory stimulation produced an early event related response concentrated in the supragranular layers in all of our AI recordings. This response consisted of a field potential/CSD modulation with no action potential correlate (Fig. 1), and is the predicted form for a “modulatory”, as opposed to a driving input. Co-presentation of the somatosensory and auditory stimuli resulted in a super-additive multisensory interaction at moderate auditory stimulus intensities. This interaction was largest when stimuli were presented simultaneously. In this case the somatosensory input to the supragranular layers precedes the auditory input, and is thus able to modulate the auditory response in that location. Because the somatosensory response begins in the supragranular layers and spreads to lower layers somewhat later (see Figure 2A and Figure 3A, lowest intensity), the supragranular layer response is amplified from the onset, while enhancement of the granular and infragranular layer responses begins later. Multisensory enhancement also occurred at specific somatosensory-auditory SOAs, each of which corresponds to the period of a spontaneous delta, theta, or gamma oscillation (Lakatos et al., 2005a). Analysis of the event related oscillations revealed that the somatosensory events reset these ambient oscillations, and the phase of these reset oscillations determines the effect on the subsequent auditory response.

Multisensory interaction in primary auditory cortex

One of the more intriguing aspects of our results is that the effects occur in AI, a primary cortical structure widely viewed as exclusively auditory in function. While this observation challenges several fundamental assumptions about sensory processing, it does not mean that neuronal activity in auditory cortex is related to either somatosensory or visual perceptual experiences, or even to the computation of a higher order, multisensory cognitive representation (see e.g., Stone, 2001). On the contrary, we think it is likely that appropriately timed somatosensory and visual inputs to auditory cortex help us to hear better. The best known example of this effect at a perceptual level is the demonstration over 50 years ago by Sumby and Pollack (1954) that viewing a speaker’s lip movements amplifies the subjective loudness of spoken words. The less famous audio-tactile perceptual interaction effects described earlier [i.e., the “Parchment-Skin Illusion” and the “Hearing Hands Effect” (Jousmaki and Hari, 1998; Schurmann et al., 2004)] appear more directly related to the specific sensory interactions described here. In each of these cases, the somatosensory stimulation produces perceptual amplification of auditory input. Our findings suggest that the key to these effects is that the temporal patterns (rhythms) of the somatosensory and auditory inputs match in phase as well as frequency. Thus, the visual or somatosensory input can help to drive the ambient oscillations in auditory cortex into the ideal phase for the auditory input, with the result of an enhanced auditory cortical response.

Anatomical substrates for multisensory interaction in AI

Previous work (Schroeder et al., 2001) demonstrated classical feedforward type somatosensory responses in auditory area CM with characteristic large increases in the MUA, and amplitudes comparable to those of the co-located auditory responses. This is in sharp contrast with the somatosensory response described here, and thus it is likely that somatosensory inputs to areas AI and CM are mediated by different anatomical mechanisms. Anatomical studies in monkeys outline three main routes which non-auditory inputs may use to access auditory cortex: 1) feed-forward projections from “nonspecific” thalamic afferents (Hackett et al., 1998b; Jones, 1998), 2) direct lateral projections from low level non-auditory cortices (Falchier et al., 2002; Rockland and Ojima, 2003; Cappe and Barone, 2005), and 3) feedback projections from higher order multisensory regions of neocortex (Hackett et al., 1998a). Two aspects of the effects we observe favor the first alternative.

First, when somatosensory stimulation is applied in the absence of sound, it produces a response that is initiated and largely focused in the supragranular layers (Fig. 1A lower). This contrasts markedly with the expected profile for the typical ascending inputs (Felleman and Van Essen, 1991); that is, a response that is initiated in Lamina 4, and then spreads to extragranular layers which we observe for ascending auditory input to AI (Fig. 1A upper). A supragranular bias of somatosensory influence is exactly the prediction based on the anatomy of the nonspecific thalamic system. There is direct projection to these layers from the magnocellular nucleus of the medial geniculate (Molinari et al., 1995), which along with the auditory input also receives afferent input from the somatosensory system (Poggio and Mountcastle, 1960; Blum et al., 1979). Superficial AI layers also receive direct projections from the multisensory thalamic suprageniculate and posterior nuclei (Morel and Kaas, 1992; De La Mothe et al., 2006). Although lateral projections and feedback cortical projections both target superficial laminae, they also make significant terminations in the inferior laminae (Felleman and Van Essen, 1991), which predicts either a bilaminar or a multilaminar onset profile, neither of which was observed here.

Second, the timing of effects is suggestive of direct feedforward input; somatosensory-related activation of the supragranular layers of AI occurs on average at about 9 ms, while auditory activation of the same location occurs at about 15 ms. Although timing is not definitive, the extremely short onset latency of the somatosensory-induced effects in AI strongly favours the feedforward mechanism. Both lateral and feedback projections would require transmission through somatosensory cortex, and our ongoing studies suggest that under precisely the same experimental conditions, activation of somatosensory areas that are likely sources of cortical projections occurs at about the same time as that of supragranular AI (i.e., ~7–8 ms versus 9 ms).

Implications for multisensory research

Our findings describe an effect that uses a novel mechanism, but is nonetheless a classic example of multisensory interaction. Somatosensory-auditory interaction in AI clearly follows well-established principles of multisensory interaction (Stein and Meredith, 1993), including the principle of inverse effectiveness (Fig. 3; see also Ghazanfar et al., 2005), the temporal principle (Fig. 4) and possibly the spatial principle (Fig. 5). Thus it is possible that similar low level multisensory interactions provide substrates for several behavioural and electrophysiological findings in humans (Murray et al., 2005; Sanabria et al., 2005), including some, such as the ventriloquist effect (Recanzone, 1998), that involve visual-auditory, rather than somatosensory-auditory interactions. Moreover, because CSD analysis is based on field potential recordings, our data can be used to help elucidate the neural bases for ERP effects noted under similar experimental conditions in humans. First, using a 3/5 rule for extrapolating from monkey to human sensory response latencies (Schroeder et al., 1995), the largest super-additive effect of the somatosensory-auditory interaction, between 30–40 ms in the present study, extrapolates approximately to the latency of a similar somatosensory-auditory interaction reported by Foxe et al. (2000) in humans. Second, we confirm the localization of effects in this time range to classical auditory cortex (Murray et al., 2005). Finally, our data suggest a functional differentiation of the effects of ipsilateral and contralateral somatosensory stimulation into net suppression versus facilitation, which is not apparent in the scalp ERP distribution (see Murray et al., 2005).
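The latency extrapolation mentioned above can be written out as a short worked sketch. It assumes that the 3/5 rule means monkey latencies are roughly three fifths of the corresponding human latencies, so that a human latency is estimated by dividing the monkey latency by 3/5; the function name is hypothetical.

```python
def monkey_to_human_latency_ms(monkey_ms, ratio=3 / 5):
    """Estimate a human latency from a monkey latency.

    Assumes monkey latency ~= ratio * human latency (the 3/5 rule as read here).
    """
    return monkey_ms / ratio

# The 30-40 ms window of the largest super-additive effect in the present study
human_window_ms = (monkey_to_human_latency_ms(30.0), monkey_to_human_latency_ms(40.0))
```

Under this reading, the 30–40 ms monkey window maps to roughly 50–67 ms in humans.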

Neuronal oscillations, phase resetting and cortical interaction

This study tested the hypothesis that somatosensory inputs enhance auditory processing in AI by resetting the phase of ongoing neuronal oscillations so that accompanying auditory inputs arrive during a high excitability phase and are amplified. The evidence for this hypothesis is multifaceted and compelling. First, evaluation of SOA effects revealed that somato-auditory enhancement effects do not fall off monotonically (or exponentially) from their maximum at an SOA of zero, but rather, the function exhibits non-linearities or “scallops” at SOA values that coincide with the periods of classic EEG oscillatory frequencies. This effect is predicted by the earlier findings that auditory processing is “EEG phase dependent” (Fries et al., 2001; Kruglikov and Schiff, 2003; Lakatos et al., 2005a) and that oscillatory phase is reset by stimulus input, both auditory (Lakatos et al., 2005a, present results) and nonauditory (present results). Interestingly, the intensity threshold for auditory-induced phase-resetting in AI may be lower than that for the feedforward auditory evoked response in AI (see Fig. 3A, upper row). We emphasize here that phase-resetting by auditory stimuli can also influence subsequent auditory processing in the time-range of the reset oscillatory wavelength (Galambos et al., 1981; Lakatos et al., 2005a), although this effect was deliberately avoided in the present study. Second, the functional characteristics of the somatosensory response in AI all suggest that it consists primarily of phase-resetting of ongoing neuronal oscillations. That is, our analysis shows pre- to post-stimulus phase concentration with very little increase in power (Figs. 6 & 7), which is a signature of oscillatory phase resetting (Makeig et al., 2004; Shah et al., 2004). Finally, the strong phase dependence of sensory responses in auditory cortex (Lakatos et al., 2005a) predicts suppression as well as enhancement. That is, just as it appears possible to systematically enhance stimulus responses by resetting local neuronal oscillations to the “ideal” phase, it should also be possible to suppress stimulus responses by resetting to the “worst” phase. This prediction is met by the effects of ipsilateral somatosensory stimulation (Figs. 5 & 8).

These results support the idea that spontaneous neuronal oscillations, far from being mere noise, may actually represent an instrument that can be used in sensory processing. Because processing is phase dependent (above) and because the somatosensory input resets the ongoing oscillation to its ideal (high excitability) phase, responses to auditory inputs tightly associated with the somatosensory stimulus are amplified at the expense of stimuli with a random relationship to the somatosensory stimulus. By the same token, auditory stimuli that are offset from the reset by differing fixed amounts, particularly by SOAs corresponding to ½ delta and theta cycles, fall into a low excitability oscillatory phase and are suppressed (Fig. 4; see also the results of Ghazanfar et al., 2005). The finding that spontaneous oscillations in AI are reliably reset to their worst (lowest excitability) phase by an ipsilateral somatosensory stimulus suggests that the structured correlation between auditory and nonauditory stimuli may also be used to promote active suppression of auditory responses in some circumstances (e.g., suppression of the auditory response to one’s own vocalizations). The possibility that nonspecific thalamic projections may mediate somatosensory modulation of AI activity through phase resetting fits with the proposition that this system may be uniquely important in promoting cortical synchrony (Jones, 2001). In any case, it merits emphasis that while trial-by-trial effects manifest as relative suppression versus enhancement at high stimulus intensities, these effects should be “all or none” for stimuli that are weakly effective, either because their intensities are near threshold, or because they occur in a noisy natural environment.
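The relationship between oscillation frequency and the SOAs expected to enhance or suppress the auditory response can be sketched numerically. This is a deliberately simplified model, not the analysis used in the study: it assumes the reset places the oscillation at its ideal phase at an SOA of zero and that the oscillation then continues at a fixed frequency, so that full cycles return to the ideal phase and half cycles fall on the opposite phase. The function name is hypothetical; the example frequencies are the dominant contralateral frequencies reported in the Results.

```python
def cycle_soas_ms(freq_hz, n_cycles=2):
    """SOAs (ms) at which a fixed-frequency oscillation, reset to its ideal phase
    at SOA = 0, is back at the ideal phase (full cycles) or at the opposite
    phase (half cycles). A simplified illustration only."""
    period_ms = 1000.0 / freq_hz
    return {
        "period_ms": period_ms,
        "ideal_phase_soas": [k * period_ms for k in range(1, n_cycles + 1)],
        "opposite_phase_soas": [(k - 0.5) * period_ms for k in range(1, n_cycles + 1)],
    }

# Dominant contralateral frequencies reported in the Results (Hz)
predictions = {band: cycle_soas_ms(f)
               for band, f in {"delta": 1.7, "theta": 7.0, "gamma": 36.8}.items()}
```

For these frequencies the periods are about 588 ms (delta), 143 ms (theta), and 27 ms (gamma), so the corresponding half-cycle SOAs are about 294 ms, 71 ms, and 14 ms.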

Conclusions

Our data show that multisensory interactions occur at the earliest stage of auditory cortical processing. Non-auditory inputs modulate the phase of ambient oscillatory activity in the supragranular layers, so that accompanying auditory inputs arrive during an ideal, high excitability phase, and are thus amplified. This type of low-level multisensory interaction dramatically illustrates how important the neural system’s “context” is in processing new sensory “content” (Arieli et al., 1996; Fiser et al., 2004; Lakatos et al., 2005). Critically, somatosensory modulation of AI appears more related to hearing than to the computation of a unified higher-order perceptual representation. We speculate that a similar oscillatory phase resetting, albeit by visual input, may be the basis for visual enhancement of speech sound processing. Amplification by oscillatory phase resetting merits consideration as an underlying mechanism in other perceptual effects, including those of selective attention.

EXPERIMENTAL PROCEDURES

Electrophysiological data were recorded in 38 penetrations of area AI of the auditory cortex in 6 male macaques (Macaca mulatta), who were prepared for chronic awake intracortical recording. Each monkey also served in additional, unrelated neurophysiological experiments, and at the terminal stage, in anatomical tract-tracing studies. All procedures were approved in advance by the Animal Care and Use Committee of the Nathan Kline Institute. Prior to surgery, each animal was adapted to a custom fitted primate chair and to the recording chamber.

Surgery

Surgery was performed under anaesthesia (1–2% Isoflurane), using standard aseptic surgical methods (Schroeder et al., 2001). The tissue overlying the calvarium was resected and appropriate portions of the cranium were removed. The neocortex and overlying dura were left intact. To allow electrode access to the brain, and to promote an orderly pattern of sampling across the surface of the auditory cortices, recording chambers with insert guide grids were placed over auditory cortex. The chambers were angled so that the electrode track would be perpendicular to the plane of auditory cortex, as determined by pre-implant MRI. They were placed within small, appropriately shaped craniotomies, to rest against the intact dura. The chambers, along with a titanium head post and socketed Plexiglas bars (permitting painless head restraint), were secured to the skull with titanium orthopedic screws and embedded in dental acrylic. Post-surgical care included administration of fluids and antibiotics (Cefazolin, 250 mg/kg, BID). Analgesics (e.g., Buprenorphine, 0.01 mg/kg, BID; Children’s Tylenol, 80 mg/kg, TID; occasionally Banamine, 1.0 mg/kg, IM, BID) were used initially, and later if there was any indication of pain. Monkeys were allowed 2 weeks of recovery prior to data collection.

Electrophysiological recording

Laminar profiles of field potentials (EEG) and concomitant population action potentials (multiunit activity or MUA) analyzed in the present study were obtained using a linear array multi-contact electrode (24 contacts, 100 μm intercontact spacing) positioned to sample from all the layers simultaneously (Fig. 1A). Signals were impedance matched with a pre-amplifier (10x gain, bandpass dc-10 kHz) situated on the electrode, and after further amplification (500x) the signal was split into the field potential (0.1–500 Hz) and MUA (300–5000 Hz) range by analogue filtering. Field potentials were sampled at 2 kHz/16 bit precision, MUA was sampled at 20 kHz/12 bit precision. Additional zero phase shift digital filtering (300–5000 Hz) and rectification was applied to the MUA data, and finally it was integrated down to 1 kHz (sampled at 2 kHz) to extract the continuous estimate of cell firing. One-dimensional CSD profiles (e.g. Fig. 1.) were calculated from the spatially smoothed (Hamming window) local field potential profiles using a three-point formula to estimate the second spatial derivative of voltage (Nicholson and Freeman, 1975). CSD profiles provide an index of the location, direction, and density of transmembrane current flow, the first-order neuronal response to synaptic input (Schroeder et al., 1998).
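The three-point CSD estimate described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name, the three-point Hamming-type smoothing weights (0.23, 0.54, 0.23), the edge handling, the unit conductivity, and the sign convention (sinks negative) are all assumptions.

```python
import numpy as np

def csd_three_point(lfp, spacing_mm=0.1, smooth=True):
    """Estimate one-dimensional CSD from a laminar field potential profile.

    lfp: array of shape (n_contacts, n_samples), contacts ordered by depth.
    Returns an array of shape (n_contacts - 2, n_samples) for the interior contacts.
    """
    lfp = np.asarray(lfp, dtype=float)
    if smooth:
        # Spatial smoothing across neighbouring contacts (weights are an assumption);
        # the outermost contacts are padded by repeating their own values.
        w = np.array([0.23, 0.54, 0.23])
        padded = np.pad(lfp, ((1, 1), (0, 0)), mode="edge")
        lfp = w[0] * padded[:-2] + w[1] * padded[1:-1] + w[2] * padded[2:]
    # Negative second spatial difference of voltage; conductivity is taken as 1,
    # so the result is in arbitrary units.
    second_diff = lfp[:-2] - 2.0 * lfp[1:-1] + lfp[2:]
    return -second_diff / spacing_mm ** 2

# Synthetic check: 24 contacts at 100 um spacing with a quadratic depth profile
depth_mm = np.arange(24) * 0.1
quadratic = np.tile((depth_mm ** 2)[:, None], (1, 5))
csd_quadratic = csd_three_point(quadratic, smooth=False)
csd_smoothed = csd_three_point(quadratic, smooth=True)
```

With 24 contacts the estimate covers 22 interior contacts; a voltage profile that is linear in depth gives zero CSD, and a quadratic profile gives a constant value.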

Stimulation methods and paradigms

Prior to data collection, subjects were conditioned to sit quietly and accept painless head restraint. During recording, subjects were monitored continuously using infrared video and were kept in an alert state by interacting with them. However, they were not required to attend or respond to the auditory or somatosensory stimuli; on the contrary, they were purposely habituated to the stimuli by frequent exposure to periods of noncontingent stimulation. In each of the 38 experiments, the stereotypic laminar CSD profile evoked by a binaural Gaussian noise burst was used to position the multielectrode array to straddle the auditory cortex from the pial surface to the white matter (Schroeder et al., 2001). Once the position was refined, it was left stable for the duration of the recording session. Characteristic frequency (CF) and tuning profile for each recording site were assessed using a suprathreshold method (Steinschneider et al., 1995; Schroeder et al., 2001; Fu et al., 2004; Lakatos et al., 2005b) entailing presentation of a pseudorandom train of 14 different frequency pure tones (0.3–32 kHz) and a broadband noise burst at 60 dB SPL (duration: 100 ms; rise/fall time: 4 ms). Stimulus onset asynchrony (SOA) was 624 ms and 100 trials were obtained for each stimulus.

The key experimental stimuli for examining auditory-somatosensory interactions in AI were brief (500 μs) auditory clicks and mild electrical stimulation of the median nerve at the wrist. All auditory stimuli were produced using Tucker Davis Technology’s System III coupled with ES-1 speakers. For median nerve stimulation, electrical stimuli consisted of 200 μs constant-current square-wave pulses applied with bipolar electrodes to the skin of the wrist over the median nerve. Intensity was adjusted to 66% of a standard motor threshold value; i.e., the intensity that produced a barely discernible twitch in the abductor pollicis brevis muscle distal to the stimulation site (Peterson et al., 1995; Schroeder et al., 1995). Prior to beginning the study, monkeys were thoroughly habituated to median nerve stimulation. The auditory and somatosensory stimuli were used in four different stimulus paradigms. In paradigms 1 and 4 we used longer stimulus onset asynchronies (SOA), to permit wavelet analysis and to prevent entrainment to auditory stimuli, respectively. The paradigms were: (1) auditory stimuli (40 dB) and somatosensory stimuli presented alone and in combination (SOA=1524 ms); (2) binaural auditory stimuli (40 dB) presented alone, and bi-, ipsi- and contralateral somatosensory stimuli presented alone or in combination with auditory stimuli (SOA=624 ms); (3) auditory stimuli presented at 7 different intensities (20–80 dB) either alone or paired with constant intensity bilateral somatosensory stimuli (SOA=624 ms); and (4) somatosensory-auditory stimulus pairs with different SOAs ranging from 0 to 1220 ms logarithmically. In paradigm 4, the SOA between auditory stimuli in the stimulus train was constant (3100 ms), and we also presented auditory stimuli without any paired somatosensory stimulus in these stimulus trains (AU in Fig. 5). The stimuli in all of the paradigms were presented randomly, and block length was varied to have 100 presentations of each stimulus type (including the combinations).

Data analysis

In the present study we analyzed data recorded during 38 penetrations of area AI of the auditory cortex. Data were analyzed offline using Matlab (Mathworks, Natick, MA).

Confirmation of recording sites in AI

Recording sites were functionally defined as belonging to AI or belt auditory cortices based on examination of the frequency tuning sharpness, relative sensitivity to pure tones versus broad-band noise of equivalent intensity, and the tonotopic progression across adjacent sites (Steinschneider et al., 1995; Schroeder et al., 2001; Fu et al., 2004; Lakatos et al., 2005b). Since at terminal stage all subjects also participate in anatomical tract tracing studies, we routinely assess the distribution of electrode penetrations in and near AI. Electrode tracks were reconstructed through post-mortem histology, following transcardial perfusion and whole brain sectioning (Schroeder et al., 2001; Fu et al., 2003; Lakatos et al., 2005b). To date recording site distributions in five of the six subjects have been confirmed histologically.

Analysis of effects by laminar location

Using the CF tone related laminar CSD profile, the functional identification of the supragranular, granular and infragranular cortical layers in area AI is straightforward based on our earlier studies (Schroeder et al., 2001; Fu et al., 2003, 2004; Lakatos et al., 2005a). For quantitative analysis of event related CSD response latencies and CSD/MUA amplitudes, one representative electrode contact with the largest CF tone related CSD was selected in each layer (Fig. 1). Onset latency in each cortical layer was defined as the earliest significant (>2 standard deviation units) deviation of the single channel averaged waveforms from their baseline (−30–0 ms) that was maintained for at least 5 ms. Pooled onset latency and response amplitude values (Fig. 1B) were evaluated statistically by ANOVAs. For significant effects detected with ANOVAs, post hoc Games-Howell tests were used (Figs. 1B, 2B, and 5B lower), since this test does not assume equal variances and takes unequal group sizes into account.
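The onset latency criterion defined above can be sketched as a small function. This is an illustrative reading of the criterion, not the original analysis code: the function name is hypothetical, the deviation is taken as absolute (either polarity), and the search starts at stimulus onset (0 ms).

```python
import numpy as np

def onset_latency_ms(waveform, t_ms, baseline=(-30.0, 0.0), n_sd=2.0, min_dur_ms=5.0):
    """Earliest post-stimulus time at which the averaged waveform deviates from the
    baseline mean by more than n_sd baseline standard deviations and stays beyond
    that threshold for at least min_dur_ms. Returns None if no such time exists."""
    waveform = np.asarray(waveform, dtype=float)
    t_ms = np.asarray(t_ms, dtype=float)
    base = waveform[(t_ms >= baseline[0]) & (t_ms < baseline[1])]
    mu, sd = base.mean(), base.std(ddof=1)
    beyond = np.abs(waveform - mu) > n_sd * sd
    dt = t_ms[1] - t_ms[0]                       # assumes uniform sampling
    n_min = int(np.ceil(min_dur_ms / dt))        # samples needed to span min_dur_ms
    for i in np.flatnonzero(t_ms >= 0):
        if i + n_min <= waveform.size and beyond[i:i + n_min].all():
            return float(t_ms[i])
    return None

# Synthetic example: small baseline fluctuation plus a sustained step at 9 ms
t = np.arange(-30.0, 100.0, 0.5)                 # 2 kHz sampling
background = 0.1 * np.sin(t)
response = background + np.where(t >= 9.0, 5.0, 0.0)
latency = onset_latency_ms(response, t)
no_response = onset_latency_ms(background, t)
```

In the synthetic example the detected onset is the time of the step, and a waveform without a sustained deviation yields no onset.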

To determine super-additive multisensory effects, single trial bimodal response amplitudes were compared to the arithmetic sum of average unimodal response amplitudes (Fig. 3B) using one-sample t-tests at different levels of intensity.
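The super-additivity comparison described above can be sketched as a one-sample t statistic, in which single-trial bimodal amplitudes are tested against a fixed value, the sum of the mean unimodal amplitudes. The function name and the example amplitudes are hypothetical, and only the test statistic and degrees of freedom are computed here.

```python
import numpy as np

def superadditivity_t(bimodal_trials, auditory_trials, somatosensory_trials):
    """One-sample t statistic comparing single-trial bimodal amplitudes with the
    arithmetic sum of the mean unimodal amplitudes (the additive model).

    Returns (t_statistic, degrees_of_freedom, additive_model_value)."""
    bimodal = np.asarray(bimodal_trials, dtype=float)
    additive = float(np.mean(auditory_trials) + np.mean(somatosensory_trials))
    n = bimodal.size
    standard_error = bimodal.std(ddof=1) / np.sqrt(n)
    t_stat = (bimodal.mean() - additive) / standard_error
    return float(t_stat), n - 1, additive

# Hypothetical amplitudes in arbitrary units
t_stat, dof, additive = superadditivity_t([5, 6, 7, 8, 9], [2, 2], [1, 1])
```

A positive t statistic indicates that the bimodal response exceeds the additive prediction. The corresponding p-value would come from the t distribution with the returned degrees of freedom, for example via `scipy.stats.ttest_1samp` applied to the bimodal amplitudes with the additive value as the population mean.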

Analysis of the event related CSD oscillations

Continuous recordings were epoched off-line from −2000 to 2000 ms to avoid edge effects of the wavelet transformation. Instantaneous power and phase were extracted by wavelet decomposition (Morlet wavelet) on 84 scales from 1 to 101.2 Hz. To determine stimulus related oscillatory amplitude changes, we calculated the post- (0–250 ms)/pre-stimulus (−500–−250 ms) amplitude ratio. For quantitative analysis, amplitude ratio was averaged in six frequency bands, which were chosen based on results from previous studies and by visually inspecting the spectrograms (Figs. 6 & 7). A ratio of 1 means that there is no event related amplitude change. Significant deviation from 1 was determined using one-sample t-tests (mean amplitude ratio of each frequency band compared to 1; see Fig. 7A).
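The wavelet decomposition and the post-/pre-stimulus amplitude ratio can be sketched as below. This is a minimal illustration under stated assumptions, not the original Matlab analysis: the function names are hypothetical, the wavelet width (6 cycles), the truncation at ±4 standard deviations, and the normalization are assumptions, and the text does not specify how the 84 scales were spaced (logarithmic spacing, e.g. `np.geomspace(1, 101.2, 84)`, would be one possibility).

```python
import numpy as np

def morlet_transform(signal, fs, freqs, n_cycles=6.0):
    """Complex Morlet wavelet coefficients, shape (n_freqs, n_samples).
    np.abs gives instantaneous amplitude and np.angle instantaneous phase."""
    signal = np.asarray(signal, dtype=float)
    out = np.empty((len(freqs), signal.size), dtype=complex)
    for i, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)            # SD of the Gaussian envelope (s)
        half = int(round(4 * sigma_t * fs))             # truncate at +/- 4 SD
        tw = np.arange(-half, half + 1) / fs
        wavelet = np.exp(2j * np.pi * f * tw) * np.exp(-tw ** 2 / (2 * sigma_t ** 2))
        wavelet /= np.abs(wavelet).sum()
        full = np.convolve(signal, wavelet, mode="full")
        out[i] = full[half:half + signal.size]          # align output with input samples
    return out

def amplitude_ratio(coefs, t_ms, post=(0.0, 250.0), pre=(-500.0, -250.0)):
    """Mean post-stimulus amplitude divided by mean pre-stimulus amplitude, per frequency."""
    amp = np.abs(coefs)
    post_mask = (t_ms >= post[0]) & (t_ms < post[1])
    pre_mask = (t_ms >= pre[0]) & (t_ms < pre[1])
    return amp[:, post_mask].mean(axis=1) / amp[:, pre_mask].mean(axis=1)

# Synthetic epoch (-2000 to 2000 ms): a 10 Hz oscillation that doubles at t = 0
fs = 500
t_ms = np.arange(-2000, 2000, 1000 / fs)
steady = np.cos(2 * np.pi * 10 * t_ms / 1000)
doubled = steady * np.where(t_ms >= 0, 2.0, 1.0)
ratio_steady = amplitude_ratio(morlet_transform(steady, fs, [10.0]), t_ms)[0]
ratio_doubled = amplitude_ratio(morlet_transform(doubled, fs, [10.0]), t_ms)[0]
```

For the steady oscillation the ratio is close to 1, and for the oscillation that doubles at stimulus onset it approaches 2, somewhat reduced by the temporal smoothing of the wavelet near the transition.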

To characterize phase distribution across trials, the mean angle and the length of the mean vector (mean resultant length, R) were calculated at each frequency and time point from the wavelet transformed data (Fig. 6C). To calculate R, each observation (across trials at a given frequency and time) is treated as a unit vector. The resultant vector of the observations is calculated and the length of this vector is divided by the sample size. The mean resultant length ranges from 0 to 1; higher values indicate that the observations (phase at a given time-point across trials) are clustered more closely around the mean than lower values. Single trial event related phase values were analyzed by circular statistical methods. Significant deviation from uniform (random) phase distribution was tested with Rayleigh’s uniformity test. Pooled phase distributions (Fig. 8) were compared by a nonparametric test for the equality of circular means (Fisher, 1993; Rizzuto et al., 2006). The alpha value was set at 0.05 for all statistical tests.
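The mean resultant length and the Rayleigh test can be sketched as follows. The function names are hypothetical, and the p-value uses a common closed-form approximation to the Rayleigh test, which is an assumption about the exact variant rather than a statement of what was used in the study.

```python
import numpy as np

def mean_resultant(phases):
    """Mean angle (radians) and mean resultant length R of a set of phases.
    Each phase is treated as a unit vector; R is the length of the vector sum
    divided by the number of observations."""
    z = np.exp(1j * np.asarray(phases, dtype=float)).mean()
    return float(np.angle(z)), float(np.abs(z))

def rayleigh_test(phases):
    """Rayleigh test of uniformity. Returns (Z, p), where Z = n * R**2 and p is a
    closed-form approximation of the probability under a uniform distribution."""
    n = np.size(phases)
    _, r = mean_resultant(phases)
    z_stat = n * r ** 2
    p = np.exp(np.sqrt(1 + 4 * n + 4 * (n ** 2 - (n * r) ** 2)) - (1 + 2 * n))
    return float(z_stat), float(min(p, 1.0))

# Two extreme synthetic cases with 20 observations each
clustered = np.full(20, 0.5)                                # identical phases
spread = np.linspace(-np.pi, np.pi, 20, endpoint=False)     # evenly spread phases
angle_c, r_c = mean_resultant(clustered)
angle_s, r_s = mean_resultant(spread)
z_c, p_c = rayleigh_test(clustered)
z_s, p_s = rayleigh_test(spread)
```

Identical phases give R = 1 and a very small p-value, whereas phases spread evenly around the circle give R near 0 and a p-value near 1.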

Acknowledgments

We thank Dr. George Karmos for helpful comments on an earlier version of the manuscript. We also thank Tammy McGinnis for her invaluable assistance in collecting the data. Support for this work was provided by NIMH grant MH61989.


References

- Arieli A, Sterkin A, Grinvald A, Aertsen A. Dynamics of ongoing activity: explanation of the large variability in evoked cortical responses. Science. 1996;273:1868–1871. doi: 10.1126/science.273.5283.1868. [DOI] [PubMed] [Google Scholar]

- Atteveldt N, Formisano E, Goebel R, Blomert L. Integration of letters and speech sounds in the human brain. Neuron. 2004;43:271–282. doi: 10.1016/j.neuron.2004.06.025. [DOI] [PubMed] [Google Scholar]

- Blum PS, Abraham LD, Gilman S. Vestibular, auditory, and somatic input to the posterior thalamus of the cat. Exp Brain Res. 1979;34:1–9. doi: 10.1007/BF00238337. [DOI] [PubMed] [Google Scholar]

- Brosch M, Selezneva E, Scheich H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J Neurosci. 2005;25:6797–6806. doi: 10.1523/JNEUROSCI.1571-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Callan DE, Callan AM, Kroos C, Vatikiotis-Bateson E. Multimodal contribution to speech perception revealed by independent component analysis: a single-sweep EEG case study. Brain Res Cogn Brain Res. 2001;10:349–353. doi: 10.1016/s0926-6410(00)00054-9. [DOI] [PubMed] [Google Scholar]

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SC, McGuire PK, Woodruff PW, Iversen SD, David AS. Activation of auditory cortex during silent lipreading. Science. 1997;276:593–596. doi: 10.1126/science.276.5312.593. [DOI] [PubMed] [Google Scholar]

- Cappe C, Barone P. Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur J Neurosci. 2005;22:2886–2902. doi: 10.1111/j.1460-9568.2005.04462.x. [DOI] [PubMed] [Google Scholar]

- De La Mothe LA, Blumell S, Kajikawa Y, Hackett TA. Thalamic connections of the auditory cortex in marmoset monkeys: Core and medial belt regions. J Comp Neurol. 2006;496:72–96. doi: 10.1002/cne.20924. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Felleman DJ, Van E. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Fiser J, Chiu C, Weliky M. Small modulation of ongoing cortical dynamics by sensory input during natural vision. Nature. 2004;431:573–578. doi: 10.1038/nature02907. [DOI] [PubMed] [Google Scholar]

- Fisher NI. Statistical analysis of circular data. New York, NY: Cambridge University Press; 1993. [Google Scholar]

- Foxe JJ, Wylie GR, Martinez A, Schroeder CE, Javitt DC, Guilfoyle D, Ritter W, Murray MM. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88:540–543. doi: 10.1152/jn.2002.88.1.540. [DOI] [PubMed] [Google Scholar]

- Fries P, Neuenschwander S, Engel AK, Goebel R, Singer W. Rapid feature selective neuronal synchronization through correlated latency shifting. Nat Neurosci. 2001;4:194–200. doi: 10.1038/84032. [DOI] [PubMed] [Google Scholar]

- Fu KM, Johnston TA, Shah AS, Arnold L, Smiley J, Hackett TA, Garraghty PE, Schroeder CE. Auditory cortical neurons respond to somatosensory stimulation. J Neurosci. 2003;23:7510–7515. doi: 10.1523/JNEUROSCI.23-20-07510.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fu KM, Shah AS, O’Connell MN, McGinnis T, Eckholdt H, Lakatos P, Smiley J, Schroeder CE. Timing and laminar profile of eye-position effects on auditory responses in primate auditory cortex. J Neurophysiol. 2004;92:3522–3531. doi: 10.1152/jn.01228.2003. [DOI] [PubMed] [Google Scholar]

- Galambos R, Makeig S, Talmachoff PJ. A 40-Hz auditory potential recorded from the human scalp. Proc Natl Acad Sci U S A. 1981;78:2643–2647. doi: 10.1073/pnas.78.4.2643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghazanfar AA, Maier JX, Hoffman KL, Logothetis NK. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J Neurosci. 2005;25:5004–5012. doi: 10.1523/JNEUROSCI.0799-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008. [DOI] [PubMed] [Google Scholar]

- Hackett TA, Stepniewska I, Kaas JH. Subdivisions of auditory cortex and ipsilateral cortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998a;394:475–495. doi: 10.1002/(sici)1096-9861(19980518)394:4<475::aid-cne6>3.0.co;2-z. [DOI] [PubMed] [Google Scholar]

- Hackett TA, Stepniewska I, Kaas JH. Thalamocortical connections of the parabelt auditory cortex in macaque monkeys. J Comp Neurol. 1998b;400:271–286. doi: 10.1002/(sici)1096-9861(19981019)400:2<271::aid-cne8>3.0.co;2-6. [DOI] [PubMed] [Google Scholar]

- Jones EG. Viewpoint: the core and matrix of thalamic organization. Neuroscience. 1998;85:331–345. doi: 10.1016/s0306-4522(97)00581-2. [DOI] [PubMed] [Google Scholar]

- Jones EG. The thalamic matrix and thalamocortical synchrony. Trends Neurosci. 2001;24:595–601. doi: 10.1016/s0166-2236(00)01922-6. [DOI] [PubMed] [Google Scholar]

- Jousmaki V, Hari R. Parchment-skin illusion: sound-biased touch. Curr Biol. 1998;8:R190. doi: 10.1016/s0960-9822(98)70120-4. [DOI] [PubMed] [Google Scholar]

- Kaiser J, Hertrich I, Ackermann H, Mathiak K, Lutzenberger W. Hearing lips: gamma-band activity during audiovisual speech perception. Cereb Cortex. 2005;15:646–653. doi: 10.1093/cercor/bhh166. [DOI] [PubMed] [Google Scholar]

- Kayser C, Petkov CI, Augath M, Logothetis NK. Integration of touch and sound in auditory cortex. Neuron. 2005;48:373–384. doi: 10.1016/j.neuron.2005.09.018. [DOI] [PubMed] [Google Scholar]

- Kruglikov SY, Schiff SJ. Interplay of electroencephalogram phase and auditory-evoked neural activity. J Neurosci. 2003;23:10122–10127. doi: 10.1523/JNEUROSCI.23-31-10122.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lakatos P, Shah AS, Knuth KH, Ulbert I, Karmos G, Schroeder CE. An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J Neurophysiol. 2005a;94:1904–1911. doi: 10.1152/jn.00263.2005.

- Lakatos P, Pincze Z, Fu KM, Javitt DC, Karmos G, Schroeder CE. Timing of pure tone and noise-evoked responses in macaque auditory cortex. Neuroreport. 2005b;16:933–937. doi: 10.1097/00001756-200506210-00011.

- Lipton ML, Fu KM, Branch CA, Schroeder CE. Ipsilateral hand input to area 3b revealed by converging hemodynamic and electrophysiological analyses in macaque monkeys. J Neurosci. 2006;26:180–185. doi: 10.1523/JNEUROSCI.1073-05.2006.

- Makeig S, Debener S, Onton J, Delorme A. Mining event-related brain dynamics. Trends Cogn Sci. 2004;8:204–210. doi: 10.1016/j.tics.2004.03.008.

- Molinari M, Dell’Anna ME, Rausell E, Leggio MG, Hashikawa T, Jones EG. Auditory thalamocortical pathways defined in monkeys by calcium-binding protein immunoreactivity. J Comp Neurol. 1995;362:171–194. doi: 10.1002/cne.903620203.

- Morel A, Kaas JH. Subdivisions and connections of auditory cortex in owl monkeys. J Comp Neurol. 1992;318:27–63. doi: 10.1002/cne.903180104.

- Murray MM, Molholm S, Michel CM, Heslenfeld DJ, Ritter W, Javitt DC, Schroeder CE, Foxe JJ. Grabbing your ear: rapid auditory-somatosensory multisensory interactions in low-level sensory cortices are not constrained by stimulus alignment. Cereb Cortex. 2005;15:963–974. doi: 10.1093/cercor/bhh197.

- Nicholson C, Freeman JA. Theory of current source-density analysis and determination of conductivity tensor for anuran cerebellum. J Neurophysiol. 1975;38:356–368. doi: 10.1152/jn.1975.38.2.356.

- Peterson NN, Schroeder CE, Arezzo JC. Neural generators of early cortical somatosensory evoked potentials in the awake monkey. Electroencephalogr Clin Neurophysiol. 1995;96:248–260. doi: 10.1016/0168-5597(95)00006-e.

- Poggio GF, Mountcastle VB. A study of the functional contributions of the lemniscal and spinothalamic systems to somatic sensibility. Central nervous mechanisms in pain. Bull Johns Hopkins Hosp. 1960;106:266–316.

- Recanzone GH. Rapidly induced auditory plasticity: the ventriloquism aftereffect. Proc Natl Acad Sci U S A. 1998;95:869–875. doi: 10.1073/pnas.95.3.869.

- Rizzuto DS, Madsen JR, Bromfield EB, Schulze-Bonhage A, Kahana MJ. Human neocortical oscillations exhibit theta phase differences between encoding and retrieval. Neuroimage. 2006;31:1352–1358. doi: 10.1016/j.neuroimage.2006.01.009.

- Rockland KS, Ojima H. Multisensory convergence in calcarine visual areas in macaque monkey. Int J Psychophysiol. 2003;50:19–26. doi: 10.1016/s0167-8760(03)00121-1.

- Sakowitz OW, Quiroga RQ, Schurmann M, Basar E. Bisensory stimulation increases gamma-responses over multiple cortical regions. Brain Res Cogn Brain Res. 2001;11:267–279. doi: 10.1016/s0926-6410(00)00081-1.

- Sakowitz OW, Quiroga RQ, Schurmann M, Basar E. Spatio-temporal frequency characteristics of intersensory components in audiovisually evoked potentials. Brain Res Cogn Brain Res. 2005;23:316–326. doi: 10.1016/j.cogbrainres.2004.10.012.

- Sanabria D, Soto-Faraco S, Spence C. Spatiotemporal interactions between audition and touch depend on hand posture. Exp Brain Res. 2005;165:505–514. doi: 10.1007/s00221-005-2327-5.

- Schroeder CE, Steinschneider M, Javitt DC, Tenke CE, Givre SJ, Mehta AD, Simpson GV, Arezzo JC, Vaughan HG Jr. Localization of ERP generators and identification of underlying neural processes. Electroencephalogr Clin Neurophysiol Suppl. 1995;44:55–75.

- Schroeder CE, Mehta AD, Givre SJ. A spatiotemporal profile of visual system activation revealed by current source density analysis in the awake macaque. Cereb Cortex. 1998;8:575–592. doi: 10.1093/cercor/8.7.575.

- Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J Neurophysiol. 2001;85:1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- Schroeder CE, Foxe JJ. The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res Cogn Brain Res. 2002;14:187–198. doi: 10.1016/s0926-6410(02)00073-3.

- Schroeder CE, Smiley J, Fu KG, McGinnis T, O’Connell MN, Hackett TA. Anatomical mechanisms and functional implications of multisensory convergence in early cortical processing. Int J Psychophysiol. 2003;50:5–17. doi: 10.1016/s0167-8760(03)00120-x.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schurmann M, Caetano G, Jousmaki V, Hari R. Hands help hearing: facilitatory audiotactile interaction at low sound-intensity levels. J Acoust Soc Am. 2004;115:830–832. doi: 10.1121/1.1639909.

- Senkowski D, Molholm S, Gomez-Ramirez M, Foxe JJ. Oscillatory beta activity predicts response speed during a multisensory audiovisual reaction time task: a high-density electrical mapping study. Cereb Cortex. 2005;11:1556–1565. doi: 10.1093/cercor/bhj091.

- Senkowski D, Talsma D, Grigutsch M, Herrmann CS, Woldorff MG. Good times for multisensory integration: effects of the precision of temporal synchrony as revealed by gamma-band oscillations. Neuropsychologia. 2006. doi: 10.1016/j.neuropsychologia.2006.01.013. [Epub]

- Shah AS, Bressler SL, Knuth KH, Ding M, Mehta AD, Ulbert I, Schroeder CE. Neural dynamics and the fundamental mechanisms of event-related brain potentials. Cereb Cortex. 2004;14:476–483. doi: 10.1093/cercor/bhh009.

- Stein BE, Meredith MA. The Merging of the Senses. Cambridge, MA: MIT Press; 1993.

- Steinschneider M, Reser D, Schroeder CE, Arezzo JC. Tonotopic organization of responses reflecting stop consonant place of articulation in primary auditory cortex (A1) of the monkey. Brain Res. 1995;674:147–152. doi: 10.1016/0006-8993(95)00008-e.

- Stone JV, Hunkin NM, Porrill J, Wood R, Keeler V, Beanland M, Port M, Porter NR. When is now? Perception of simultaneity. Proc R Soc Lond B Biol Sci. 2001;268:31–38. doi: 10.1098/rspb.2000.1326.

- Sumby W, Pollack I. Visual contribution to speech intelligibility in noise. J Acoust Soc Am. 1954;26:212–215.

- Werner-Reiss U, Kelly KA, Trause AS, Underhill AM, Groh JM. Eye position affects activity in primary auditory cortex of primates. Curr Biol. 2003;13:554–562. doi: 10.1016/s0960-9822(03)00168-4.