ABSTRACT

The Cancer Support Community (CSC) provides psychosocial support to people facing cancer in community settings. The purpose of this study was to evaluate the compatibility, effectiveness, and fidelity of the Situation–Choices–Objectives–People–Evaluation–Decisions (SCOPED) question-listing intervention at three CSC sites. Between August 2008 and August 2011, the Program Director at each CSC site implemented question-listing, while measuring patient distress, anxiety, and self-efficacy before and after each intervention. We analyzed the quantitative results using unadjusted statistical tests and reviewed qualitative comments by patients and the case notes of Program Directors to assess compatibility and fidelity. Program Directors implemented question-listing with 77 blood cancer patients. Patients reported decreased distress (p = 0.009) and anxiety (p = 0.005) and increased self-efficacy (p < 0.001). Patients and Program Directors endorsed the intervention as compatible with CSC’s mission and approach and feasible to implement with high fidelity. CSC effectively translated SCOPED question-listing into practice in the context of its community-based psychosocial support services at three sites.

KEYWORDS: Visit preparation, Coaching, Shared decision making, Patient participation, Professional–patient relations, Questioning, SCOPED

INTRODUCTION

People newly diagnosed with cancer want better strategies for addressing their information needs before, during, and after consultations with doctors [1, 2]. Question-listing responds to a specific problem: people meeting with cancer specialists face barriers to addressing their information needs [3].

In response, researchers have created prompt sheets and coaching processes to help patients develop a written list of questions prior to their medical visits. Roter initiated this line of research into question-asking and found in a randomized controlled trial that patients coached to make a personalized list of questions before a primary care consultation asked more direct questions during the visit [2]. Others found similar results, even when prompt sheets were used without coaching [4–7]. Overall, a meta-analysis of question-listing interventions featuring 8,244 patients in 33 randomized controlled trials found an increase in question-asking [8].

However, health care delivery systems and patient support organizations with a national reach have not broadly adopted, implemented, evaluated, or reported on question-listing interventions in community cancer settings. After extensive work in academic settings [5–7, 9, 10], Dimoska et al. published an account of a limited-term implementation in a community clinic in Australia [11]. Belkora and colleagues have reported on long-term implementations in academic and community settings with a regional reach around Northern California [12–17]. The US Agency for Healthcare Research and Quality now provides an online prompt sheet of question topics [18] but has not reported on its use, and the AskMe3 initiative [19] is not focused on cancer [20].

The present study arose because the Cancer Support Community (CSC) thought Belkora’s approach to question-listing [14, 16, 17] could be translated into practice in their network of affiliates around the USA. This neutral, non-directive, one-on-one facilitated approach, known as Situation–Choices–Objectives–People–Evaluation–Decisions (SCOPED), has been associated with improvements in self-efficacy, question-asking, and anxiety [13, 15, 21]. To address knowledge gaps regarding the translation of question-listing into practice by a community-based organization with national reach, the CSC partnered with the University of California, San Francisco (UCSF) and the Education Network to Advance Cancer Clinical Trials. We evaluated SCOPED question-listing as part of an overall study designed to promote consideration of clinical trials among people with blood cancer.

The CSC’s interest in question-listing arose from its mission to “Ensure that all people impacted by cancer are empowered by knowledge, strengthened by action, and sustained by community.” However, prior to this study, CSC’s programs and services were largely group-based, and a one-on-one service such as question-listing represented a new direction. Therefore, CSC leadership sought to study whether the one-on-one SCOPED question-listing process was compatible with the mission of CSC, effective in improving psychosocial outcomes, and feasible to deliver with high fidelity.

We focused on these key attributes of compatibility, effectiveness, and feasibility because these were the core concerns of the community partner in this community-engaged research project, and they correspond to theoretical constructs in the emerging science of dissemination and implementation. Specifically, they correspond to several dimensions within the RE-AIM framework widely used in translating research-based interventions into practice [22]. The RE-AIM framework proposes that organizations judge the success of an implemented intervention according to its Reach (number served), Effectiveness (impact), Adoption (by organizations and individuals), Implementation (e.g., fidelity and adaptations), and Maintenance (sustainability of an intervention) [23].

The CSC’s first concern, compatibility, has been defined as “the degree to which an innovation is perceived as consistent with the existing values, past experiences, and needs of potential adopters [24].” It therefore falls under the Adoption dimension of the RE-AIM framework. This definition coincides with the CSC’s use of compatibility as referring to congruence with its core commitment to the Patient Active Concept, a model of support that underwrites all of the CSC’s programs and services. The Patient Active Concept posits that patients who actively participate in their care will have improved quality of life and may enhance the possibility of their recovery [25]. Therefore, question-listing needed to be compatible with the Patient Active Concept. CSC leaders assessed descriptions and demonstrations of question-listing as compatible because the intervention was neutral, non-directive, and patient-centered in stimulating patients to think of personalized questions. However, they felt that other CSC personnel should judge compatibility under field-use conditions.

The CSC’s second concern, effectiveness, refers to impact under field conditions, in contrast with efficacy, which refers to the impact of an intervention under controlled circumstances such as academic studies. CSC made it clear that question-listing must be effective in helping patients be active participants in their care as a condition of any further dissemination.

Finally, CSC’s concern about feasibility corresponds to Implementation in the RE-AIM framework. It refers to whether the organization implements an intervention with high fidelity to the original design, or whether major adaptations are needed. In this case, CSC employs licensed mental health professionals to staff their online as well as location-based programs and services. CSC was interested in whether licensed mental health professionals could deliver the question-listing intervention one-on-one with high fidelity or whether major adaptations would be needed.

We limited our study to compatibility, effectiveness, and feasibility because CSC leadership considered these prerequisites for exploring the other RE-AIM dimensions relevant to translating evidence-based research into practice. CSC planned to examine Reach and Maintenance only after establishing these.

METHODS

Study questions

CSC and its partners embarked on a study to address the following questions:

Compatibility: Is SCOPED question-listing compatible with the Patient Active Concept?

Effectiveness: Do patients report increased self-efficacy and decreased anxiety and distress after receiving the question-listing intervention?

Fidelity and Adaptation: How do therapists trained in question-listing adhere to or adapt the intervention protocol?

Design

We used a quasi-experimental design featuring quantitative pre-/post-intervention comparisons along with review, discussion, and interpretation of qualitative survey comments, case notes, and meeting notes.

Setting and sample

The Cancer Support Community is a nonprofit organization with online and physical sites where staff and volunteers deliver psychosocial support to people affected by cancer. The study included three CSC sites delivering one-on-one question-listing services. UCSF provided training and technical assistance for the SCOPED question-listing process.

We addressed study question 1 (Compatibility) with the Executive Directors and Program Directors (authors BC, KC, and MS) from three local affiliates: Walnut Creek, CA; Philadelphia, PA; and Cincinnati, OH. This was a purposive sample of thought and practice leaders known to be among the most experienced and familiar with the Patient Active Concept. CSC selected these sites because they serve states with higher incidence and mortality rates for blood cancers, especially among African-Americans.

We addressed study question 2 (Effectiveness) by training the Program Directors in delivery of the SCOPED intervention (see below) and recruiting patients at the three participating CSC affiliates. Because of the study’s funding mechanism, patients were eligible for the study only if they were making decisions about their blood cancer treatment. For patients who heard about the intervention and were not eligible for the study (e.g., they were not diagnosed with blood cancer), the CSC affiliates offered the intervention off-study. During a pre-evaluation period extending from August 2008 through March 2009, the Program Directors gained experience with the intervention and collected only demographic and clinical characteristics from study participants. During the evaluation period of April 2009 to August 2011, Program Directors collected pre-/post-measures of effectiveness as described below. Our study received ethics approval from Ethical and Independent Review Services, an institutional review board, and followed regulations for obtaining consent and protecting participant privacy. We addressed study question 3 (Fidelity and Adaptation) with the Program Directors from the participating affiliates.

Intervention

The SCOPED question-listing intervention begins with a neutral, non-directive interviewing process. This process involves Scribing, Laddering, Categorizing and Checking, and Triaging [26].

Scribing refers to writing down whatever questions are on the patient’s mind. Laddering involves prompting patients to elaborate on their initial question list, using a model called the Ladder of Inference [27].

Categorizing and Checking refers to use of a prompt sheet of topics to check that patients are covering a broad range of questions. The topics are grouped under the headings Situation, Choices, Objectives, People, Evaluation, Decisions [28]. These headings give the overall intervention its name, as their first letters spell SCOPED.

The last step in the question-listing process, Triaging, refers to prioritizing the question list. The point of this step is that patients should prepare to spend the most time discussing the most important issues with their doctor.

Using these steps, a trained facilitator elicits patient questions without providing information or advice and produces a written question list organized according to the SCOPED topics listed above. The facilitator prints out the SCOPED question list and gives copies to the patient to distribute to accompaniers and health care professionals. Examples of a SCOPED question list are available in the literature [14] and online [29].
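The Scribing, Categorizing and Checking, and Triaging steps above amount to filing questions under six headings, spotting headings that are still empty, and ordering questions by importance. As a purely hypothetical illustration (not the SCOPED manual’s format or any CSC software), the Python sketch below models a question list that way. The class and method names and the integer priority scale (1 = most important) are our assumptions; for brevity, the sketch assigns a heading as each question is recorded, whereas the protocol scribes first and categorizes afterward, and Laddering, a conversational step, is not modeled.

```python
from dataclasses import dataclass, field
from typing import List

# The six SCOPED headings, in prompt-sheet order.
SCOPED_TOPICS = ("Situation", "Choices", "Objectives", "People", "Evaluation", "Decisions")


@dataclass
class Question:
    text: str           # the patient's own wording, as scribed
    topic: str          # SCOPED heading assigned while Categorizing
    priority: int = 3   # assigned while Triaging; 1 = most important (hypothetical scale)

    def __post_init__(self):
        if self.topic not in SCOPED_TOPICS:
            raise ValueError(f"unknown SCOPED topic: {self.topic!r}")


@dataclass
class QuestionList:
    questions: List[Question] = field(default_factory=list)

    def scribe(self, text: str, topic: str, priority: int = 3) -> None:
        """Record a question in the patient's words, filed under a SCOPED heading."""
        self.questions.append(Question(text, topic, priority))

    def uncovered_topics(self) -> List[str]:
        """Headings with no questions yet, where a facilitator might prompt (Checking)."""
        covered = {q.topic for q in self.questions}
        return [t for t in SCOPED_TOPICS if t not in covered]

    def top(self, n: int) -> List[str]:
        """The n highest-priority questions overall; ties keep the order they were scribed."""
        return [q.text for q in sorted(self.questions, key=lambda q: q.priority)[:n]]

    def render(self) -> str:
        """Printable list grouped under SCOPED headings, most important first within each."""
        lines = []
        for topic in SCOPED_TOPICS:
            items = sorted((q for q in self.questions if q.topic == topic),
                           key=lambda q: q.priority)
            if items:
                lines.append(topic)
                lines.extend(f"  [{q.priority}] {q.text}" for q in items)
        return "\n".join(lines)


# Hypothetical example session
ql = QuestionList()
ql.scribe("What type of lymphoma do I have?", "Situation", priority=1)
ql.scribe("Is watch and wait an option for me?", "Choices", priority=1)
ql.scribe("How would treatment affect my work?", "Objectives", priority=2)
print(ql.uncovered_topics())  # ['People', 'Evaluation', 'Decisions']
print(ql.render())
```

Keeping the headings in a fixed tuple means the printed list always follows the prompt-sheet order, while sorting within each heading reflects the Triaging step.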

Measures

To address study question 2 (Effectiveness), we trained the three Program Directors in question-listing, and they recruited patients at the three affiliates to receive the intervention and respond to surveys. We relied on the Ottawa Decision Support Framework for our selection of outcomes, measures, and instruments [30]. This framework positions the intervention as addressing baseline patient decision needs and influencing proximal psychological outcomes. Researchers have used this framework to guide numerous studies in decision research, including studies conceptually similar to this one, of nurses engaging in visit preparation with patients [31–33]. Consistent with this framework and based on prior experiences with patients accessing its services, our study team selected psychological outcomes that we knew could interfere with patient–physician communication, information processing, and decision making. Conversely, ameliorating these conditions from baseline to post-intervention could improve patient capacity to engage in these key tasks.

Therefore, during the evaluation period of April 2009 to August 2011, we measured distress, anxiety, and question self-efficacy before and after the intervention. We measured distress using the Distress Thermometer [34]. We measured anxiety and question self-efficacy using custom categorical items ranging from minimum 0 to maximum 10. The question stem for our anxiety measure was, “On a scale of 0–10, with 0 being lowest and 10 being highest, please indicate how anxious you are right now (check one box only please).” The question stem for our self-efficacy measure was, “On a scale of 0–10, with 0 being lowest and 10 being highest, please indicate your confidence level with respect to the following statement (check one box only please): I know what questions to ask my doctor.” We used these custom items because we had previously found them to be sensitive to the intervention in community settings [13], while minimizing participant burden. We repeated these measures (distress, anxiety, and question self-efficacy) after the intervention.

We collected other information from evaluation participants at baseline for the purpose of investigating associations between such predictor variables and our main outcome variables. Predictor variables included demographic and clinical variables (age, gender, education, race, income, disability, time since diagnosis), telephone versus in-person delivery of the intervention, whether participants had reviewed education materials before the question-listing intervention, mental and physical function summary scores from the SF-12 [35], and initial levels of distress, anxiety, and question self-efficacy.

We also invited patients to write comments in their surveys. Our data collection procedures consisted of the Program Directors (authors BC, KC, MS) administering written questionnaires before and after the intervention.

Data analysis

To address study question 1 (Compatibility), we monitored reactions to the SCOPED intervention by Executive and Program Directors from the three participating CSC affiliates. We captured their reactions in case notes, meeting notes, and emails; reviewed and discussed these documents to interpret meaning and significance; and asked for each Executive and Program Director’s overall endorsement or rejection of SCOPED compatibility at the end of the study period.

For study question 2 (Effectiveness), we tested the null hypothesis of no pre-/post-change in each quantitative measure against the alternative hypothesis of a nonzero change, using paired t tests at a two-sided 5 % significance level. Because this was a pilot study generating preliminary data, we did not adjust the significance levels for multiple tests. We also explored bivariate and multivariable associations among variables using linear regression. Candidate variables for multiple regression analysis were those with p < 0.15 in bivariate analysis. We then used backward stepwise regression, removing variables that were not statistically significant (p ≥ 0.05) from the multivariable model one at a time. For open-ended patient comments, the study team read all survey responses and selected key quotes that represented the range of responses.
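The model-building procedure described above (bivariate screening at p < 0.15, then backward elimination until every remaining predictor has p < 0.05) can be sketched with ordinary least squares. This is a minimal, standard-library illustration under stated assumptions, not the software the study team used: predictors are numeric columns (categorical variables such as income would first need dummy coding), the least significant predictor is dropped at each step before refitting, and all function names and the toy data are hypothetical.

```python
import math


def t_two_sided_p(t, df, steps=2000):
    """Two-sided Student's t p value via Simpson's rule on [0, |t|] (steps must be even)."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)

    def pdf(x):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    b = abs(t)
    h = b / steps
    s = pdf(0.0) + pdf(b) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return min(1.0, max(0.0, 1.0 - 2.0 * s * h / 3))


def invert(m):
    """Gauss-Jordan inverse with partial pivoting (raises ZeroDivisionError if singular)."""
    n = len(m)
    a = [list(row) + [float(i == j) for j in range(n)] for i, row in enumerate(m)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        lead = a[col][col]
        a[col] = [v / lead for v in a[col]]
        for r in range(n):
            if r != col:
                f = a[r][col]
                a[r] = [v - f * w for v, w in zip(a[r], a[col])]
    return [row[n:] for row in a]


def ols_pvalues(y, columns):
    """OLS of y on an intercept plus {name: values} columns; returns {name: p value}."""
    names = list(columns)
    X = [[1.0] + [columns[name][i] for name in names] for i in range(len(y))]
    k = len(X[0])
    xtx = [[sum(r[a] * r[b] for r in X) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yi for r, yi in zip(X, y)) for a in range(k)]
    inv = invert(xtx)
    beta = [sum(inv[a][b] * xty[b] for b in range(k)) for a in range(k)]
    resid = [yi - sum(bj * xj for bj, xj in zip(beta, r)) for r, yi in zip(X, y)]
    df = len(y) - k
    sigma2 = sum(e * e for e in resid) / df
    return {name: t_two_sided_p(beta[j] / math.sqrt(sigma2 * inv[j][j]), df)
            for j, name in enumerate(names, start=1)}


def select_predictors(y, candidates, screen_p=0.15, keep_p=0.05):
    """Bivariate screening (p < screen_p), then backward elimination until all p < keep_p."""
    kept = {n: v for n, v in candidates.items() if ols_pvalues(y, {n: v})[n] < screen_p}
    while kept:
        pvals = ols_pvalues(y, kept)
        worst = max(pvals, key=pvals.get)
        if pvals[worst] < keep_p:
            break
        del kept[worst]  # drop the least significant predictor, then refit
    return sorted(kept)


# Hypothetical toy data: y depends on x1 only; x3 is x1 plus an irrelevant component.
h1 = [1, 1, 1, 1, -1, -1, -1, -1]
h2 = [1, 1, -1, -1, 1, 1, -1, -1]
h3 = [1, -1, 1, -1, 1, -1, 1, -1]
y = [1 + 2 * a + 0.5 * c for a, c in zip(h1, h3)]
candidates = {"x1": h1,
              "x2": [a * c for a, c in zip(h1, h3)],
              "x3": [a + 0.5 * b for a, b in zip(h1, h2)]}
print(select_predictors(y, candidates))  # ['x1']
```

In the toy data, x3 passes bivariate screening only because it is correlated with x1, and it is eliminated once x1 is in the model; x2 is unrelated to the outcome and fails screening.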

To address study question 3 (Fidelity and Adaptations), we conducted monthly meetings to review the experience of the three Program Directors delivering the SCOPED question-listing intervention. During those meetings, we reviewed the question lists the Program Directors produced with patients, as well as each Program Director’s written reflections on each case. We entered the meeting and case notes into the study record and reviewed them with the Program Directors before discussing, together, the level of fidelity and any key adaptations.

RESULTS

Study question 1—Compatibility

The Executive and Program Directors endorsed the proposed question-listing intervention as consistent with the CSC’s Patient Active Concept. National leaders of the CSC echoed this assessment. One of the Program Directors articulated the general consensus among CSC leaders and staff: “I find that this method of supporting our members in their efforts to make well-informed decisions dovetails well with our other efforts to empower our members to consciously engage in their health care decisions. This non-directive approach allows patients to determine what information they personally want or need, and provides them with a tool to critically consider their options.”

Study question 2—Effectiveness

We offered the intervention to 142 patients who responded to outreach and recruitment activities (such as brochures, newsletter items, and web descriptions). We also delivered the intervention off-study to 55 patients who did not have a blood cancer diagnosis. The median age of off-study patients was 62, four (7 %) self-identified with a racial/ethnic minority group, and 13 (24 %) were male.

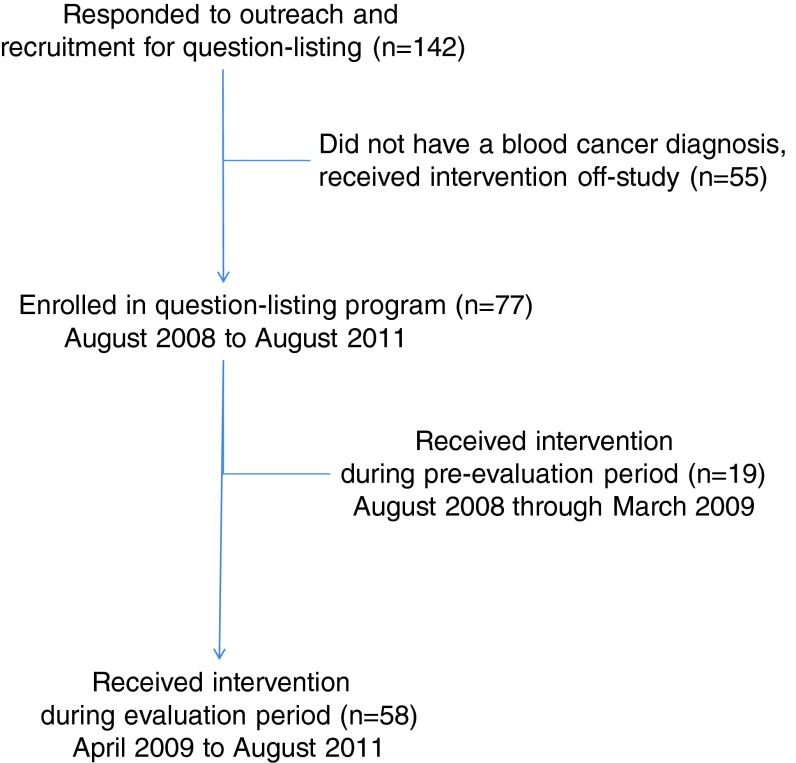

We delivered the intervention on-study to 77 patients with blood cancer. Of these, 19 participated before the evaluation period and provided only baseline data, while 58 filled out pre-/post-surveys. See study flow diagram in Fig. 1.

Fig. 1. Study flow diagram

Table 1 shows demographic and baseline clinical variables for each cohort. There were no statistically significant differences between the pre-evaluation and evaluation participants on baseline variables, except for gender: 10 of 19 pre-evaluation patients (53 %) were female, compared with 46 of 58 evaluation patients (79 %) (p = 0.02).

Table 1.

Demographic and clinical characteristics for pre-evaluation and evaluation cohorts

| Characteristic | Category | Pre-evaluation (n = 19; August 2008–March 2009) nᵃ | %ᵇ | Evaluation cohort (n = 58; April 2009–August 2011) nᶜ | %ᵈ | p valueᵉ | Full sample (n = 77; August 2008–August 2011) nᶠ | %ᵍ |
|---|---|---|---|---|---|---|---|---|
| Age | <50 years | 1 | 5 | 8 | 14 | 0.43 | 9 | 12 |
| | 50–60 years | 9 | 47 | 17 | 29 | | 26 | 34 |
| | 60–70 years | 6 | 32 | 25 | 43 | | 31 | 40 |
| | ≥70 years | 3 | 16 | 8 | 14 | | 11 | 14 |
| Sex | Female | 10 | 53 | 46 | 79 | 0.02 | 56 | 73 |
| Race and/or ethnicity | Non-Hispanic White | 15 | 79 | 48 | 83 | 0.79 | 63 | 82 |
| | Black/African American | 2 | 11 | 5 | 9 | | 7 | 9 |
| | Hispanic or Latino | 0 | 0 | 2 | 3 | | 2 | 3 |
| | Asian or Pacific Islander | 1 | 5 | 1 | 2 | | 2 | 3 |
| | Multiple | 0 | 0 | 1 | 2 | | 1 | 1 |
| | Other | 1 | 5 | 1 | 2 | | 2 | 3 |
| Education | Some high school or less | 0 | 0 | 1 | 2 | 0.96 | 1 | 1 |
| | High school or GED | 1 | 5 | 3 | 5 | | 4 | 5 |
| | Some college | 3 | 16 | 11 | 19 | | 14 | 18 |
| | Bachelor’s degree | 8 | 42 | 23 | 40 | | 31 | 40 |
| | Graduate or professional degree | 7 | 37 | 20 | 34 | | 27 | 35 |
| Retirement status | Retired | 8 | 42 | 23 | 40 | 0.85 | 31 | 40 |
| Total annual income ($) | Less than 40K | 6 | 33 | 10 | 18 | 0.13 | 16 | 21 |
| | 40K–100K | 4 | 21 | 27 | 48 | | 31 | 41 |
| | 100K or more | 7 | 37 | 11 | 20 | | 18 | 24 |
| | Don’t know or do not wish to disclose | 2 | 11 | 8 | 14 | | 10 | 13 |
| Hematological cancer diagnosis | Multiple myeloma | 3 | 16 | 23 | 40 | 0.23 | 26 | 34 |
| | Leukemia | 9 | 47 | 16 | 28 | | 25 | 32 |
| | Non-Hodgkin’s lymphoma | 6 | 32 | 17 | 29 | | 23 | 30 |
| | Hodgkin’s lymphoma | 1 | 5 | 2 | 3 | | 3 | 4 |
| Time since hematological cancer diagnosis | Less than 2 months | 5 | 26 | 13 | 23 | 0.88 | 18 | 24 |
| | 2–5 months | 4 | 21 | 10 | 18 | | 14 | 19 |
| | 5 months–1 year | 4 | 21 | 13 | 23 | | 17 | 23 |
| | 1–2 years | 2 | 11 | 5 | 9 | | 7 | 9 |
| | 2–5 years | 1 | 5 | 9 | 16 | | 10 | 13 |
| | 5 years or greater | 3 | 16 | 6 | 11 | | 9 | 12 |
| Stage of treatment for hematological cancer | Diagnosed but not yet treated | 8 | 53 | 18 | 32 | 0.58 | 26 | 37 |
| | Considering other treatment options | 5 | 33 | 12 | 21 | | 17 | 24 |
| | Watch and wait | 1 | 7 | 8 | 14 | | 9 | 13 |
| | Disease not responsive to treatment | 1 | 7 | 6 | 11 | | 7 | 10 |
| | Successfully treated but cancer has returned | 0 | 0 | 1 | 2 | | 1 | 1 |
| | On treatment; stable or results not known | 0 | 0 | 10 | 18 | | 10 | 14 |
| | Successfully treated and cancer in remission | 0 | 0 | 0 | 0 | | 1 | 1 |
| | Other | 0 | 0 | 1 | 2 | | 1 | 1 |
| Physical component summary score (mean ± SD) | | 44.8 ± 12.3 | | 43.1 ± 11.6 | | 0.59ʰ | 43.5 ± 11.7 | |
| Mental component summary score (mean ± SD) | | 43.8 ± 7.1 | | 44.5 ± 11.0 | | 0.80 | 44.4 ± 10.1 | |
| Baseline level of distress (mean ± SD) | | 5.5 ± 2.8 | | 5.1 ± 2.7 | | 0.63 | 5.2 ± 2.7 | |

ᵃ Numbers may not total 19 due to missing data

ᵇ Numbers may not total 100 % due to rounding

ᶜ Numbers may not total 58 due to missing data

ᵈ Numbers may not total 100 % due to rounding

ᵉ From chi-square test with (r − 1)(c − 1) degrees of freedom, where r = number of rows and c = number of columns (2 in all cases above)

ᶠ Numbers may not total 77 due to missing data

ᵍ Numbers may not total 100 % due to rounding

ʰ From t test
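Footnote e describes a chi-square test of independence with (r − 1)(c − 1) degrees of freedom. The standard-library Python sketch below computes Pearson’s statistic and its upper-tail p value; the function names are ours, and it assumes no Yates continuity correction, which the table does not specify. Applied to the sex-by-cohort counts in Table 1 (10 of 19 pre-evaluation and 46 of 58 evaluation patients female), it gives a p value consistent with the reported 0.02.

```python
import math


def chi2_sf(x, df):
    """Upper-tail chi-square probability, via the series for the regularized lower gamma."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > total * 1e-15:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a) + math.log(total))
    return max(0.0, 1.0 - lower)


def pearson_chi2(table):
    """Pearson chi-square test for an r x c table of counts (no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


# Sex by cohort from Table 1: rows = pre-evaluation, evaluation; columns = female, male
stat, df, p = pearson_chi2([[10, 9], [46, 12]])
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.3f}")  # chi2 = 5.14, df = 1, p = 0.023
```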

Evaluation participants reported a significant decrease in distress (p = 0.009) and anxiety (p = 0.005) and increase in the patient’s question self-efficacy (p < 0.001). See Table 2. In the multiple linear regression, lower income (p = 0.040) and higher baseline distress (p = 0.013) were associated with a greater decrease in distress. Higher education (p = 0.013) and higher baseline anxiety (p < 0.001) were associated with a greater decrease in anxiety. Higher physical functioning (p = 0.005) and lower baseline question self-efficacy (p < 0.001) were associated with a greater increase in question self-efficacy. See Table 3.

Table 2.

Pre- and post-question listing values in distress, anxiety, and question self-efficacy for patients in the evaluation cohort (April 2009 to August 2011)

| Measure | Pre-question listing n | Pre-question listing mean ± SE | Post-question listing n | Post-question listing mean ± SE | Paired t test n | Mean change (post − pre) ± SE | p value |
|---|---|---|---|---|---|---|---|
| Distress | 53 | 5.1 ± 0.37 | 54 | 4.5 ± 0.35 | 50 | −0.70 ± 0.26 | 0.009 |
| Anxiety | 57 | 4.6 ± 0.37 | 57 | 3.5 ± 0.34 | 56 | −1.0 ± 0.35 | 0.005 |
| Question self-efficacy | 57 | 4.7 ± 0.29 | 58 | 8.2 ± 0.23 | 57 | 3.5 ± 0.31 | <0.001 |

SE, standard error of the mean
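Because Table 2 reports each outcome’s mean change and its standard error, the paired t statistic can be approximately re-derived as t = mean change / SE with n − 1 degrees of freedom. The standard-library Python sketch below does this, alongside a helper that computes the same statistic from raw matched scores; the function names are hypothetical, and since the published means and SEs are rounded, the recomputed p values approximate rather than exactly reproduce the reported ones.

```python
import math
from statistics import mean, stdev


def t_two_sided_p(t, df, steps=2000):
    """Two-sided Student's t p value via Simpson's rule on [0, |t|] (steps must be even)."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)

    def pdf(x):
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    b = abs(t)
    h = b / steps
    s = pdf(0.0) + pdf(b) + sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return min(1.0, max(0.0, 1.0 - 2.0 * s * h / 3))


def paired_t(pre, post):
    """Paired t statistic and degrees of freedom from matched pre/post scores."""
    diffs = [b - a for a, b in zip(pre, post)]  # post minus pre, per patient
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)            # standard error of the mean change
    return mean(diffs) / se, n - 1


# (pairs, mean change, SE of mean change) as reported in Table 2
TABLE2 = {
    "Distress": (50, -0.70, 0.26),
    "Anxiety": (56, -1.0, 0.35),
    "Question self-efficacy": (57, 3.5, 0.31),
}

for outcome, (n, change, se) in TABLE2.items():
    t, df = change / se, n - 1
    print(f"{outcome}: t({df}) = {t:.2f}, two-sided p = {t_two_sided_p(t, df):.4f}")
```

With individual-level data, `paired_t` would replace the summary-statistic shortcut; the two agree because the SE of the mean change is the standard deviation of the differences divided by the square root of n.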

Table 3.

Multiple regression analysis of change in distress, anxiety and question self-efficacy among participants in the question-listing evaluation cohort

| Predictor | β | SE β | t | p |
|---|---|---|---|---|
| Change in distressᵃ | | | | |
| Lower income (<$40K) | −1.30 | 0.61 | −2.13 | 0.040 |
| Baseline distress | −0.26 | 0.10 | −2.60 | 0.013 |
| Change in anxietyᵇ | | | | |
| Higher education (at least a college degree) | −1.71 | 0.67 | −2.56 | 0.013 |
| Baseline anxiety | −0.50 | 0.11 | −4.63 | <0.001 |
| Change in question self-efficacyᶜ | | | | |
| Physical functioning | 0.05 | 0.02 | 2.90 | 0.005 |
| Baseline question self-efficacy | −0.72 | 0.09 | −7.76 | <0.001 |

ᵃ F(2, 40) = 5.31, p = 0.009, n = 43, adjusted R² = 0.17

ᵇ F(2, 53) = 14.56, p < 0.001, n = 56, adjusted R² = 0.33

ᶜ F(2, 53) = 34.33, p < 0.001, n = 56, adjusted R² = 0.55
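The footnotes to Table 3 can be cross-checked with standard least-squares algebra: an overall F statistic with k predictors and n − k − 1 residual degrees of freedom implies R² = Fk / (Fk + n − k − 1), from which adjusted R² follows. The Python sketch below (function name ours; this check is not part of the reported analysis) recovers adjusted R² values of 0.17, 0.33, and 0.55 from the footnoted F statistics, matching the reported values.

```python
def r2_from_f(f, k, n, df_resid):
    """Return (R^2, adjusted R^2) implied by an overall F test with k predictors and n cases."""
    if df_resid != n - k - 1:
        raise ValueError("residual degrees of freedom should equal n - k - 1")
    r2 = f * k / (f * k + df_resid)          # invert F = (R^2 / k) / ((1 - R^2) / df_resid)
    adj = 1 - (1 - r2) * (n - 1) / df_resid  # usual adjusted R^2
    return r2, adj


# (F, k, n, residual df) from the Table 3 footnotes
MODELS = {
    "Change in distress": (5.31, 2, 43, 40),
    "Change in anxiety": (14.56, 2, 56, 53),
    "Change in question self-efficacy": (34.33, 2, 56, 53),
}

for name, (f, k, n, df_resid) in MODELS.items():
    r2, adj = r2_from_f(f, k, n, df_resid)
    print(f"{name}: R^2 = {r2:.3f}, adjusted R^2 = {adj:.2f}")
```

The degrees-of-freedom check also confirms that each footnote’s n and F(2, df) are mutually consistent with two retained predictors.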

For ten study participants who were interested but faced barriers to visiting a CSC site in person, the CSC Program Directors delivered question listing by telephone. The pre-/post-change in distress, anxiety, or question self-efficacy was not significantly different by the mode of delivering the intervention (phone vs. in person).

Qualitative survey comments echoed the positive effects found in the quantitative survey ratings. One patient wrote before the intervention, “Always anxious when I have an appointment to concentrate on my cancer.” After, the patient wrote:

I came in riddled with anxiety. I leave feeling positive and focused. [Facilitator] has helped me direct my inquiries thoroughly and in an ordered fashion. What could be better than a ‘10’ [satisfaction]! This was one of the most worthwhile appointments I have ever spent my time upon.

Another participant who reported being in pain prior to the intervention wrote afterward:

There’s stress relief (which translates to pain relief) in assistance sorting out issues and questions.

One participant reported that she had acquired the skill to self-administer the question-listing intervention:

This process is wonderful. My husband and I had a very productive meeting with the doctors. We are clear on our next steps. My husband and I are using this technique before all of my appointments. We are clear about our goals and feel more in charge of treatment, etc. Thank you very much.

One participant expressed the view, echoed by a few others, that the intervention, while helpful, should be targeted as early as possible in the patient journey:

This help would have been even more meaningful to me when I was first diagnosed with cancer and was very stressed about the future—‘watch and wait’ or start treatment and questions where to have treatment.

Study question 3—Fidelity and adaptations

Overall, the study team judged Program Director adherence to the SCOPED question-listing protocol to be very high. In keeping with the neutral, non-directive nature of the question-listing intervention, Program Directors did not tell patients what questions to ask or answer patient questions, although they were encouraged to refer patients to relevant resources at the end of the session. Program Directors recognized the value of deferring referrals until the end, when they could direct patients to relevant materials and services.

One recurring issue for the Program Directors, however, was their discomfort with the intervention’s requirement to avoid engaging in discussions about patient emotions. According to the SCOPED manual, facilitators should deal with emotions by listening quietly and then offering to resume the question-listing task, or reschedule it. The facilitator can also refer the patient to resources for emotional support. The Program Directors felt that this process was too constraining for them. They were confident, as licensed mental health professionals, that they could use their skills to switch roles in the middle of the question-listing process; administer a brief therapeutic intervention; and then resume the question-listing. We resolved these dilemmas by modifying the question-listing intervention manual to acknowledge the comfort and skill of Program Directors in layering a brief therapeutic intervention into the question-listing intervention. This was a clear case where using licensed mental health professionals led to an adaptation that would not necessarily be feasible with other types of facilitators.

Program Directors reported one issue that was rare but salient to them. This issue arose when patients approached the question-listing intervention with multiple intentions. As indicated in the standard program manual, the question-listing intervention was designed for patients seeking support with asking questions about treatment decisions. Therefore, the manual and training process emphasize screening, qualifying, and contracting with patients who have a confirmed diagnosis and an upcoming appointment at which they intend to discuss treatment options with their doctors. Program Directors reported that all enrolled patients met these conditions. One patient, however, seemed focused on developing a question list primarily to test whether her doctor was a good fit. This patient ended up leaving the physician’s practice and informed the physician that the question-listing process was instrumental in shaping that decision. This situation had the potential to damage the Cancer Support Community’s relationship with this physician, who could have felt set up to fail. In debriefing this incident, the Program Directors agreed that question-listing can and should assist patients in addressing their need to find qualified medical professionals to guide them in their care. However, the Program Directors felt that this should be a by-product of asking treatment-related questions (broadly defined), and not the primary focus of the question-listing session. As a result, we now clarify with patients that the session should focus on its primary purpose: raising questions about treatment options.

Program Directors wanted to offer free one-on-one question listing to all comers, but they were concerned this might quickly consume scarce organizational resources if patients required multiple sessions each. As it turned out, the process required just one session for 73 of the study participants.

DISCUSSION

Interpretation and analysis, including connections to the literature

Study question 1 (Compatibility)

Executive and Program Directors at the CSC found the SCOPED question-listing process to be compatible with the CSC’s Patient Active Concept. We believe this is because they have some common underpinnings. In creating this question-listing intervention [36], the developer was inspired by decision science, including the work of Irving Janis [37]. Harold Benjamin, the founder of the Cancer Support Community, also cites Irving Janis in a book that explains the Patient Active Concept [25]. Benjamin quotes Janis as follows: “No longer are patients seen as passive recipients of health care who are expected to do willingly whatever the doctor says. Rather, they are increasingly regarded as active decision makers, making crucial choices that can markedly affect the kind of treatment they receive and the outcome.” Benjamin writes that one of the implications of this decision-making role relates to asking questions: “Before the visit prepare a written list of the questions you want to ask your doctor, to ensure that all of your questions are asked.” In this light, the SCOPED question-listing process is a programmatic manifestation of advice offered by the founder of the Cancer Support Community and developer of the Patient Active Concept.

Study question 2 (Effectiveness)

The SCOPED question-listing intervention was effective in the context of patients making blood cancer treatment decisions, as delivered by licensed therapists. This is consistent with prior studies showing that a range of personnel, including premedical interns and lay navigators, can deliver SCOPED question-listing to diverse patients facing diverse medical conditions in diverse settings. Specifically, Belkora and colleagues have trained premedical interns at UCSF to engage patients in SCOPED question-listing prior to consultations there [14] and trained former nurses and lay navigators in non-profit organizations to do the same [16]. Evaluations indicate a high degree of patient satisfaction, as well as increases in self-efficacy and question-asking, across a variety of settings and with diverse personnel delivering the intervention [13, 15, 21]. In this study, we relied exclusively on licensed therapists to deliver the intervention. The positive findings in this setting, combined with past experiences elsewhere, suggest that it may be possible to use other CSC personnel to deliver SCOPED question-listing. These could include mental health trainees volunteering at the CSC to gain experience counting toward their professional certification.

Study question 3 (Fidelity and adaptations)

The question-listing intervention required only minor adaptations, for example in how facilitators responded to strong expressions of emotion. This is broadly consistent with findings about similar theory- and evidence-based interventions, which can often be replicated in different contexts and through different delivery mechanisms. For example, successful adaptations of Motivational Interviewing have been discussed previously in the literature [38, 39]. In the case of our SCOPED question-listing intervention, we were able to relax the usual requirement of productively bypassing emotions because the facilitators were licensed mental health professionals, well qualified to engage briefly and supportively with emotions before returning to the question-listing task. However, we kept the requirement to wait until the end of each question-listing session before providing referrals to resources, because this helped the facilitators maintain their neutral, non-directive stance.

Limitations

For study questions 1 and 3, in keeping with the pragmatic, community-based context of this study and its limited resources, we did not conduct credibility or validity checks of our qualitative analyses. In particular, our reliance on case notes and meeting notes to assess the fidelity of the interventions may have introduced a sampling bias, because we did not directly observe the interventions as delivered. CSC leaders felt that direct observation or recording of client interactions would interfere with the community-based identity of the organization and could create, in patients’ minds, a barrier to accessing its programs. Our findings may also be distorted by motivational bias, as Program Directors and other study team members may have had a professional stake in confirming the value of question-listing. In addition, assessing compatibility based on the experiences of three sites could reflect sampling bias, as these sites may not have been representative of the overall CSC network.

For study question 2, limitations include possible self-selection bias, reflected in a preponderance of English-speaking, Caucasian, female, highly educated, and middle-class participants. Furthermore, the effect of the question-listing program on question self-efficacy, distress, and anxiety may have been overestimated because of social desirability. In particular, the improvement in question self-efficacy may have been inflated if participants felt compelled to report greater confidence in asking their doctor questions on the post-test in order to fit program expectations. Similarly, cognitive dissonance may have inflated the apparent effect: participants who expected that they should have changed may have reported improvement even when none occurred. Finally, because this was a pre-/post-comparison without a control group, we cannot rule out that patients who reported improved outcomes would have done so without the intervention.

CONCLUSIONS

We conclude that SCOPED question-listing was feasible to deliver with high fidelity at the CSC and showed strong indications of effectiveness, as evidenced by patient survey comments and self-ratings of distress, question self-efficacy, and anxiety. The Cancer Support Community leadership noted the compatibility of question-listing with the organizational mission, as well as the effectiveness of the intervention for people with blood cancer in this study and for people with other cancers outside the study. On this basis, the Cancer Support Community added a new set of activities to the organization’s strategic plan. The goal is to train staff at each of the 50 affiliates in SCOPED question-listing by the end of 2014, and to launch a national call center that delivers the intervention via a toll-free telephone line. This strategic initiative, known as Open to Options™, will implement the theory- and evidence-based SCOPED intervention, making it available to more patients in more diverse settings than before. We are using the RE-AIM framework to monitor our progress with respect to adoption and maintenance [23].

This study addressed dissemination and implementation of evidence-based psychological interventions [40]. In addition to being adopted for broader dissemination by the Cancer Support Community, the SCOPED intervention could be relevant to many other community-based organizations that provide psychosocial support to people with cancer, including the American Cancer Society (which runs a telephone helpline), Livestrong, and CancerCare.

Acknowledgments

We wish to thank the patients who participated in this study. This work was supported by a cooperative agreement from the Centers for Disease Control and Prevention under the Geraldine Ferraro Blood Cancer Education Program Number 5U58DP001111. None of the authors has any financial relationship with the funder. The authors control all primary data, which can be made available to the journal or to other researchers through a data sharing agreement.

Footnotes

Implications

Practice: Patient support programs should consider coaching patients to list questions using a neutral, non-directive intervention such as SCOPED, as this process is associated with positive psychosocial outcomes.

Policy: Question-listing interventions such as SCOPED are responsive to the Institute of Medicine’s call for routine integration of evidence-based psychosocial support in cancer care.

Research: Future studies should address barriers and facilitators relevant to expanding the reach and maintenance or sustainability of question-listing interventions that are adopted and implemented by community-based patient support organizations.

Contributor Information

Jeff Belkora, Email: jeff.belkora@ucsf.edu.

Mitch Golant, Email: mitch@cancersupportcommunity.org.

References

1. Rutten LJ, Arora NK, Bakos AD, Aziz N, Rowland J. Information needs and sources of information among cancer patients: a systematic review of research (1980–2003). Patient Educ Couns. 2005;57(3):250–261. doi: 10.1016/j.pec.2004.06.006.

2. Roter DL. Patient participation in the patient–provider interaction: the effects of patient question asking on the quality of interaction, satisfaction and compliance. Health Educ Monogr. 1977;5(4):281–315. doi: 10.1177/109019817700500402.

3. Frosch DL, May SG, Rendle KA, Tietbohl C, Elwyn G. Authoritarian physicians and patients’ fear of being labeled ‘difficult’ among key obstacles to shared decision making. Health Aff (Millwood). 2012;31(5):1030–1038. doi: 10.1377/hlthaff.2011.0576.

4. Thompson SC, Nanni C, Schwankovsky L. Patient-oriented interventions to improve communication in a medical office visit. Health Psychol. 1990;9(4):390–404. doi: 10.1037/0278-6133.9.4.390.

5. Butow PN, Dunn SM, Tattersall MH, Jones QJ. Patient participation in the cancer consultation: evaluation of a question prompt sheet. Ann Oncol. 1994;5(3):199–204. doi: 10.1093/oxfordjournals.annonc.a058793.

6. Brown R, Butow PN, Boyer MJ, Tattersall MH. Promoting patient participation in the cancer consultation: evaluation of a prompt sheet and coaching in question-asking. Br J Cancer. 1999;80(1–2):242–248. doi: 10.1038/sj.bjc.6690346.

7. Brown RF, Butow PN, Dunn SM, Tattersall MH. Promoting patient participation and shortening cancer consultations: a randomised trial. Br J Cancer. 2001;85(9):1273–1279. doi: 10.1054/bjoc.2001.2073.

8. Kinnersley P, Edwards A, Hood K, et al. Interventions before consultations for helping patients address their information needs. Cochrane Database Syst Rev. 2007;(3):CD004565.

9. Clayton JM, Butow PN, Tattersall MH, et al. Randomized controlled trial of a prompt list to help advanced cancer patients and their caregivers to ask questions about prognosis and end-of-life care. J Clin Oncol. 2007;25(6):715–723. doi: 10.1200/JCO.2006.06.7827.

10. Butow P, Devine R, Boyer M, Pendlebury S, Jackson M, Tattersall MH. Cancer consultation preparation package: changing patients but not physicians is not enough. J Clin Oncol. 2004;22(21):4401–4419. doi: 10.1200/JCO.2004.66.155.

11. Dimoska A, Butow PN, Lynch J, et al. Implementing patient question-prompt lists into routine cancer care. Patient Educ Couns. 2012;86(2):252–258. doi: 10.1016/j.pec.2011.04.020.

12. Belkora JK, Teng A, Volz S, Loth MK, Esserman LJ. Expanding the reach of decision and communication aids in a breast care center: a quality improvement study. Patient Educ Couns. 2011;83(2):234–239. doi: 10.1016/j.pec.2010.07.003.

13. Belkora JK, O’Donnell S, Golant M, Hacking B. Involving and informing adults making cancer treatment decisions. International Congress of Behavioral Medicine. Washington, DC. 2010.

14. Belkora JK, Loth MK, Volz S, Rugo HS. Implementing decision and communication aids to facilitate patient-centered care in breast cancer: a case study. Patient Educ Couns. 2009;77(3):360–368. doi: 10.1016/j.pec.2009.09.012.

15. Belkora JK, Loth MK, Chen DF, Chen JY, Volz S, Esserman LJ. Monitoring the implementation of Consultation Planning, Recording, and Summarizing in a breast care center. Patient Educ Couns. 2008;73(3):536–543. doi: 10.1016/j.pec.2008.07.037.

16. Belkora J, Edlow B, Aviv C, Sepucha K, Esserman L. Training community resource center and clinic personnel to prompt patients in listing questions for doctors: follow-up interviews about barriers and facilitators to the implementation of consultation planning. Implement Sci. 2008;3:6. doi: 10.1186/1748-5908-3-6.

17. Belkora J, Katapodi M, Moore D, Franklin L, Hopper K, Esserman L. Evaluation of a visit preparation intervention implemented in two rural, underserved counties of Northern California. Patient Educ Couns. 2006;64(1–3):350–359. doi: 10.1016/j.pec.2006.03.017.

18. Agency for Healthcare Research and Quality. Questions are the answer. 2008. http://www.ahrq.gov/questionsaretheanswer/questionBuilder.aspx. Accessed September 19, 2012.

19. Mika VS, Wood PR, Weiss BD, Trevino L. Ask Me 3: improving communication in a Hispanic pediatric outpatient practice. Am J Health Behav. 2007;31(Suppl 1):S115–S121. doi: 10.5993/AJHB.31.s1.15.

20. Miller MJ, Abrams MA, McClintock B, et al. Promoting health communication between the community-dwelling well-elderly and pharmacists: the Ask Me 3 program. J Am Pharm Assoc. 2008;48(6):784–792. doi: 10.1331/JAPhA.2008.07073.

21. Hacking B, Wallace L, Scott S, Kosmala-Anderson J, Belkora J, McNeill A. Testing the feasibility, acceptability and effectiveness of a ‘decision navigation’ intervention for early stage prostate cancer patients in Scotland: a randomised controlled trial. Psychooncology. 2012. doi: 10.1002/pon.3093.

22. Glasgow RE, Bull SS, Gillette C, Klesges LM, Dzewaltowski DA. Behavior change intervention research in healthcare settings: a review of recent reports with emphasis on external validity. Am J Prev Med. 2002;23(1):62–69. doi: 10.1016/S0749-3797(02)00437-3.

23. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327. doi: 10.2105/AJPH.89.9.1322.

24. Rogers EM. Diffusion of Innovations. 5th ed. New York: Free Press; 2003.

25. Benjamin HH. The Wellness Community Guide to Fighting for Recovery from Cancer. New York: Putnam; 1995.

26. Belkora J. SLCT. 2011. www.slctprocess.org. Accessed July 27, 2011.

27. Argyris C. Action science and intervention. J Appl Behav Sci. 1983;19(2):115–135. doi: 10.1177/002188638301900204.

28. Belkora J. SCOPED. 2011. www.scoped.org. Accessed July 27, 2011.

29. Belkora J. Example SCOPED question list. http://www.decisionservices.ucsf.edu/example-question-list/. Accessed July 27, 2011.

30. O’Connor A, Tugwell P, Wells G, et al. A decision aid for women considering hormone therapy after menopause: decision support framework and evaluation. Patient Educ Couns. 1998;33:267–279. doi: 10.1016/S0738-3991(98)00026-3.

31. Stacey D, O’Connor AM, Graham ID, Pomey MP. Randomized controlled trial of the effectiveness of an intervention to implement evidence-based patient decision support in a nursing call centre. J Telemed Telecare. 2006;12(8):410–415. doi: 10.1258/135763306779378663.

32. Stacey D, Graham ID, O’Connor AM, Pomey MP. Barriers and facilitators influencing call center nurses’ decision support for callers facing values-sensitive decisions: a mixed methods study. Worldviews Evid-Based Nurs. 2005;2(4):184–195. doi: 10.1111/j.1741-6787.2005.00035.x.

33. Stacey D, Pomey MP, O’Connor AM, Graham ID. Adoption and sustainability of decision support for patients facing health decisions: an implementation case study in nursing. Implement Sci. 2006;1:17. doi: 10.1186/1748-5908-1-17.

34. Roth AJ, Kornblith AB, Batel-Copel L, Peabody E, Scher HI, Holland JC. Rapid screening for psychologic distress in men with prostate carcinoma: a pilot study. Cancer. 1998;82(10):1904–1908. doi: 10.1002/(SICI)1097-0142(19980515)82:10<1904::AID-CNCR13>3.0.CO;2-X.

35. Ware JJ, Snow K, Kosinski M, Gandek B. SF-36 Health Survey Manual and Interpretation Guide. Boston: New England Medical Center; 1993.

36. Belkora JK. Mindful Collaboration: Prospect Mapping As An Action Research Approach to Planning for Medical Consultations [Ph.D. Dissertation]. Stanford: Department of Engineering-Economic Systems & Operations Research, Stanford University; 1997.

37. Janis IL. The patient as decision maker. In: Gentry WD, editor. Handbook of Behavioral Medicine. New York: Guilford; 1984. p. xvi.

38. Burke BL, Arkowitz H, Menchola M. The efficacy of motivational interviewing: a meta-analysis of controlled clinical trials. J Consult Clin Psychol. 2003;71(5):843–861. doi: 10.1037/0022-006X.71.5.843.

39. Lane C, Huws-Thomas M, Hood K, Rollnick S, Edwards K, Robling M. Measuring adaptations of motivational interviewing: the development and validation of the behavior change counseling index (BECCI). Patient Educ Couns. 2005;56(2):166–173. doi: 10.1016/j.pec.2004.01.003.

40. McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: a review of current efforts. Am Psychol. 2010;65(2):73–84. doi: 10.1037/a0018121.