Abstract

Context

Failure to notify patients of test results is common even when electronic health records (EHRs) are used to report results to practitioners. We sought to understand the broad range of social and technical factors that affect test result management in an integrated EHR-based health system.

Methods

Between June and November 2010, we conducted a cross-sectional, web-based survey of all primary care practitioners (PCPs) within the Department of Veterans Affairs nationwide. Survey development was guided by a socio-technical model describing multiple inter-related dimensions of EHR use.

Findings

Of 5001 PCPs invited, 2590 (51.8%) responded. More than half (55.5%) believed that the EHRs did not have convenient features for notifying patients of test results. Just over a third (37.9%) reported having the staff support needed for notifying patients of test results. Many relied on the patient's next visit to notify them of normal (46.1%) and abnormal (20.1%) results. Only 45.7% reported receiving adequate training on using the EHR notification system, and 35.1% reported having an assigned contact for technical assistance with the EHR; most received help from colleagues (60.4%). A majority (85.6%) stayed after hours or came in on weekends to address notifications; fewer than a third (30.1%) reported receiving protected time. PCPs strongly endorsed several new features to improve test result management, including better tracking and visualization of result notifications.

Conclusions

Despite an advanced EHR, both social and technical challenges exist in ensuring notification of test results to practitioners and patients. Current EHR technology requires significant improvement in order to avoid similar challenges elsewhere.

Keywords: missed test results, medical errors, diagnostic errors, lack of follow-up, health information technology, electronic health records

Background

Failure to follow up on abnormal test results (‘missed test results’) is a global patient safety concern.1–5 Electronic health records (EHRs) are increasingly used to notify practitioners of abnormal test results.6–8 EHR-based test result notification systems can reduce, if not eliminate, many of the communication problems inherent with paper-based records.9–12 However, ensuring that test results receive appropriate follow-up remains challenging even with electronic transmission.5 13 14 Previous studies of practitioner responses to abnormal test result notifications through EHRs found that 7–8% of abnormal test alerts had no evidence of timely follow-up, even when practitioners had electronically acknowledged result receipt.10 11 14

Although the proposed Stage 2 meaningful use regulations15 to be implemented in 2014 in the USA include structured laboratory result reporting functionality, little is known about how to optimize EHR-based test result management. Currently, several major EHR vendors employ a reporting functionality whereby providers receive notification of results in their inboxes (similar to email). This functionality is being increasingly adopted across EHRs and is likely to have a great impact on future test result management practices.

In complex healthcare settings that use EHRs, test result management encompasses many contextual factors such as clinical workflow, user behaviors, and organizational policies and procedures.16 17 Electronically transmitted test results may be missed or overlooked for a variety of reasons related to EHR design and use.18 19 For instance, while most EHRs deliver abnormal results to practitioners through an inbox, the inbox often also holds less important data (‘noise’) that could dilute important or urgent information (‘signals’).19 Other factors contributing to missed test results include policies and procedures related to the use of EHRs, such as unclear responsibility for follow-up of abnormal results when multiple providers are involved in patient care.10 Thus, contextual factors20 that affect test results management derive from both technical and social dimensions of the EHR-enabled health care system.

To better understand contextual factors that affect practitioners’ test results management practices within the setting of a comprehensive EHR, we designed and administered a nationwide survey to primary care practitioners (PCPs) practicing in all facilities of the Department of Veterans Affairs (VA). Our objective was to use survey data on PCPs’ experiences, practices, and preferences to create a body of knowledge upon which to base future improvements in EHR-based test result reporting systems.

Methods

Between June 10, 2010 and November 5, 2010, we conducted a cross-sectional, web-based survey of PCPs in VA settings nationwide. The local institutional review board approved the study. Within the VA system, most patients are assigned to staff PCPs, who serve as a coordinating hub for most care and thus depend heavily on the clinical information sent to their EHR inboxes. A smaller number of patients are assigned to trainees or subspecialists who see patients on a part-time basis, usually one half-day per week. Thus, these practitioners have a smaller panel (the total number of patients a PCP is responsible for) due to their other competing responsibilities. Our previous experience suggested that staff PCPs’ experiences, practices, and preferences related to test result management might be different from those of trainees and subspecialists who serve as PCPs.16 18 Thus, we used a large administrative VA database, the Veterans Health Administration Support Service Center Clinical Care: Primary Care Management Module, to identify all PCPs with a minimum primary care patient panel size of 250 (N=5001). This strategy allowed us to exclude trainees and subspecialists because they are generally expected to have small panel sizes.
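The panel-size inclusion rule described above can be sketched as a simple filter. This is a minimal illustration only: the `Practitioner` record, its field names, the `eligible_pcps` helper, and the example roster are hypothetical and do not reflect the structure of the VA administrative database.

```python
from dataclasses import dataclass

MIN_PANEL_SIZE = 250  # minimum primary care panel size stated in the Methods


@dataclass(frozen=True)
class Practitioner:
    practitioner_id: str  # hypothetical identifier
    panel_size: int       # number of primary care patients assigned


def eligible_pcps(practitioners, min_panel=MIN_PANEL_SIZE):
    """Keep practitioners whose panel meets the minimum size."""
    return [p for p in practitioners if p.panel_size >= min_panel]


# Made-up roster: trainees and subspecialists are expected to have small panels.
roster = [
    Practitioner("staff-01", 1180),
    Practitioner("trainee-02", 90),
    Practitioner("subspecialist-03", 140),
    Practitioner("staff-04", 250),
]
invited = eligible_pcps(roster)
print([p.practitioner_id for p in invited])
```

Under this rule the threshold acts as a proxy for job role: no role field is needed, but a staff PCP with an unusually small panel would also be excluded.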

Survey development

Survey development was guided by literature review1 6 21–34 and by a conceptual model describing multiple inter-related dimensions of EHR use (see ‘Survey content areas’ section below; also see table 1 which lists model dimensions).35 A psychometrician (CS) guided the survey development process, which included item writing and refinement, soliciting input from subject matter experts in EHR use, and iterative content review. After refining all survey items, we pilot tested the survey with 10 PCPs for readability, clarity, and ease of completion in a web-based format. With the exception of demographic items and 10 open-ended items, items were rated on a 5-point Likert-type scale with response options ranging from ‘strongly disagree’ to ‘strongly agree.’ Survey completion time was approximately 20–25 min.

Table 1.

Eight interactive socio-technical health information technology (HIT) dimensions addressed for missed test results

| Dimension | Definition |
|---|---|
| Hardware and software | Equipment and software required to run the healthcare applications |
| Clinical content | Data, information, and knowledge entered, displayed, or transmitted in EHRs |
| Human computer interface | Aspects of the EHR system that users interact with (eg, see, touch, or hear) |
| People | Humans involved in the design, development, implementation, and use of HIT |
| Workflow and communication | Work processes needed to ensure that each patient receives the care they need at the time they need it |
| Organizational policies and procedures | Internal culture, structures, policies, and procedures that affect all aspects of HIT management and healthcare |
| External rules, regulations, and pressures | External forces that facilitate or place constraints on the design, development, implementation, use, and evaluation of HIT in the clinical setting |
| System measurement and monitoring | Measurement of system availability, use, effectiveness, and unintended consequences of system use |

EHR, electronic health record.

Survey content areas

Hardware and software, content, and user interface

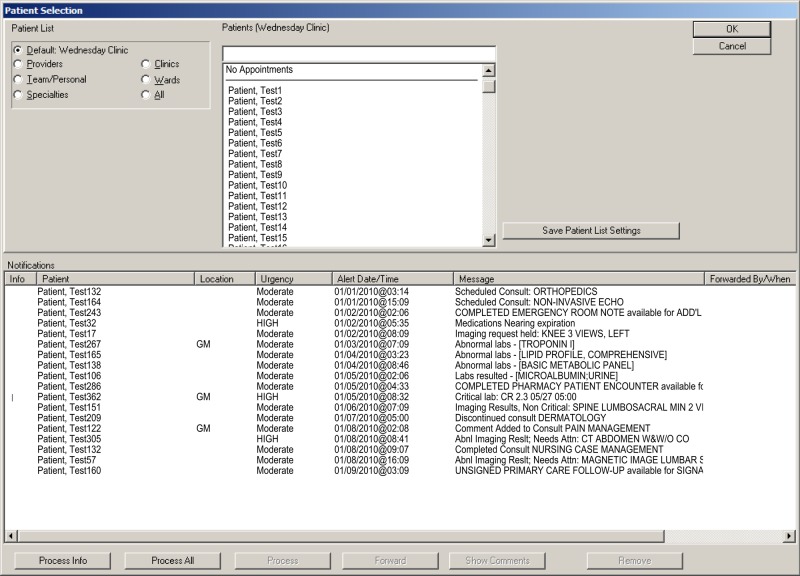

The VA uses the Computerized Patient Record System (CPRS) as its EHR in all its facilities. CPRS uses the ‘View Alert’ notification system to communicate test results (as well as other important clinical information) to practitioners through an inbox. The View Alert system also displays notifications as alerts with various priorities. Practitioners see their patients’ alerts each time they log in to the system or switch between patient records (figure 1). Alerts remain within the inbox until read by the practitioner, but they may be removed automatically if unopened after a certain time period (eg, 14 or 30 days). Although this functionality is used across the VA, individual facilities have discretion over which types of alerts practitioners must receive (eg, they can allow flexibility for practitioners to turn off certain notifications, such as normal test results). Our survey items assessed PCPs’ perceptions of the View Alert notification system, including their views on the content and quantity of alerts received, perceived ease of use, and use of the EHR's features for alert processing and follow-up.

Figure 1.

Alert notification window in the VA Computerized Patient Record System (CPRS).
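The inbox behavior described above (alerts stay until read, but unopened alerts may be removed after a set period) can be sketched as follows. This is a simplified model under stated assumptions, not CPRS code: the `Alert` fields, the `inbox_contents` function, and the example data are hypothetical, and real retention rules are configured by each facility.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    patient: str
    kind: str             # e.g. "abnormal lab", "normal lab" (illustrative labels)
    high_priority: bool
    created: datetime
    read: bool = False


def inbox_contents(alerts, now, retention_days=14):
    """Return alerts still shown: unread and no older than the retention window."""
    cutoff = now - timedelta(days=retention_days)
    return [a for a in alerts if not a.read and a.created >= cutoff]


now = datetime(2010, 6, 30)
alerts = [
    Alert("patient A", "abnormal lab", True, datetime(2010, 6, 28)),
    # Unread, but older than 14 days: dropped without anyone having seen it.
    Alert("patient B", "normal lab", False, datetime(2010, 6, 1)),
    # Already read by the practitioner: no longer shown.
    Alert("patient C", "abnormal lab", True, datetime(2010, 6, 29), read=True),
]
remaining = inbox_contents(alerts, now)
print([a.patient for a in remaining])
```

In this sketch the alert for patient B disappears under a 14-day window but would still be visible under a 30-day window, which shows how the retention setting alone can determine whether an unopened result stays in view.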

People

Our survey assessed several characteristics of the respondents including age, gender, race, job classification (ie, academic physician, non-academic physician, nurse practitioner, physician assistant), years employed at the VA, native language (English or other), perceived adequacy of training related to the EHR notification system, and prior use of an EHR other than CPRS.

Workflow and communication

Workflow items assessed perceptions of alert burden, processes related to notifying patients of their test results, and practices used to support alert management.

Organizational features

These items assessed institutional cultural norms and expectancies regarding management of alerts, technical support for alert notifications, organizational support to facilitate patient notification, and the amount of protected time (ie, specifically designated and compensated time) clinicians were given by their organizations to manage alerts. Protected time was measured in hours per week; all other response choices and coding were the same as for other item categories on the 5-point Likert-type scale.

External rules and regulations

We assessed whether practitioners were aware of and adhered to a national VA policy36 released in 2009 requiring notification of patients within 14 days for both normal and abnormal test results.

System measurement and monitoring

We assessed perceptions of practices related to measuring and monitoring alert follow-up at the system level.

New features and functions to improve EHR-based notification

We inquired whether PCPs would endorse several potential strategies to improve EHR-based notification. Strategies were selected based on thematic areas of improvement identified in previous work8 10 11 16 18 37 and addressed both technical and social dimensions.

Survey administration

We solicited support from the section chiefs of primary care at 142 VA facilities nationwide by asking them to email all PCPs at their respective sites to orient them to the project. We subsequently invited all participants by sending a personalized email from the principal investigator (HS) that described the study and provided a link to the web-based survey instrument. To increase response rates, invitation emails and subsequent reminders were followed by telephone attempts to reach non-respondents. In keeping with VA policies, we did not use monetary or other incentives for participation.

Data analysis

Data were downloaded from the internet survey administration service and analyzed using SPSS statistical software. We generated descriptive statistics to summarize respondent characteristics and to aggregate responses to the other survey items. Likert-type item responses were collapsed into three categories: ‘agree or strongly agree,’ ‘disagree or strongly disagree,’ and ‘neither agree nor disagree.’
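The collapsing of 5-point responses into three categories can be illustrated with a short sketch. It is written in Python rather than SPSS and is not the authors' procedure; the numeric coding (1 = strongly disagree to 5 = strongly agree), the `summarize` helper, and the sample responses are assumptions made for illustration.

```python
from collections import Counter

# Assumed coding of the 5-point scale: 1 = strongly disagree ... 5 = strongly agree.
COLLAPSED = {
    1: "disagree or strongly disagree",
    2: "disagree or strongly disagree",
    3: "neither agree nor disagree",
    4: "agree or strongly agree",
    5: "agree or strongly agree",
}


def summarize(responses):
    """Tabulate (n, %) per collapsed category; unanswered items (None) count as missing."""
    counts = Counter(COLLAPSED.get(r, "missing") for r in responses)
    total = len(responses)
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.items()}


responses = [5, 4, 4, 2, 1, 3, None, 4, 2, 5]  # made-up answers to one item
summary = summarize(responses)
for category, (n, pct) in summary.items():
    print(f"{category}: {n} ({pct})")
```

Percentages here use all respondents as the denominator, including those with a missing answer, which matches the layout of the results tables, where each row's four columns sum to the full respondent count.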

Results

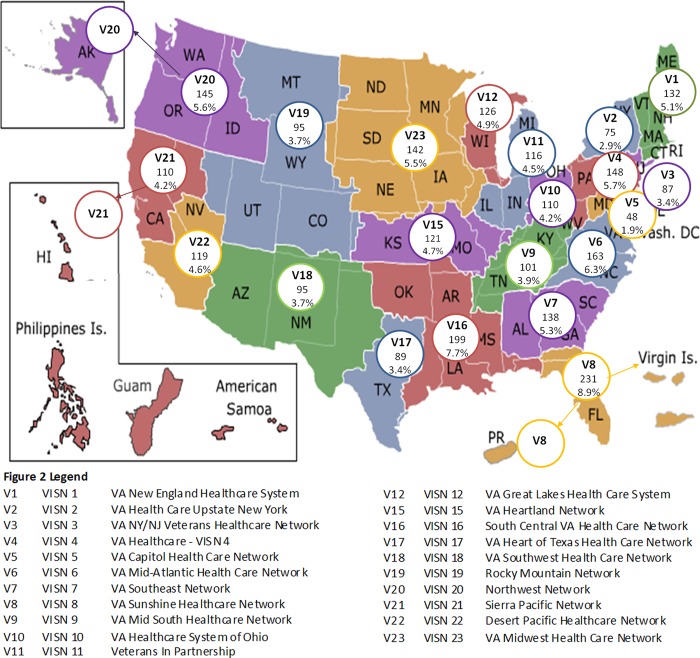

Of 5001 PCPs invited, 2590 (51.8%) responded. Figure 2 shows the geographic distribution of the respondents by Veterans Integrated Service Network (regional divisions of VA). Table 2 shows the characteristics of the respondents. Characteristics of non-respondents were not available for comparison with the respondent group. The vast majority of respondents had considerable experience with the VA EHR, having worked within the VA for 2 or more years. Less than half (45.7%) reported having received sufficient training on the View Alert system, and only a minority (13.7%) reported any refresher training (data not shown in the table). Nevertheless, the majority believed they had the knowledge (74.4%) and proficiency (81.8%) necessary to use the View Alert system (data not shown in the table). Nearly half (46.6%) also had prior experience using a non-VA EHR. Of these, only 19% of providers thought that the non-VA EHRs they used were better than VA's CPRS; 55% indicated that the non-VA EHR they used was overall inferior to CPRS, and 26% perceived it was about the same. No particular non-VA EHR was consistently identified as better or worse than CPRS.

Figure 2.

Distribution of respondents within 21 Veterans Affairs Networks across the USA (Veterans Integrated Service Networks or VISNs).

Table 2.

Characteristics of survey respondents (n=2590)

| Characteristic | n (%) |
|---|---|
| Age | |
| 20–39 | 338 (13.1) |
| 40–49 | 685 (26.4) |
| 50–59 | 961 (37.1) |
| 60 and over | 402 (15.5) |
| Missing | 204 (7.9) |
| Gender | |
| Male | 1080 (41.7) |
| Female | 1343 (51.9) |
| Missing | 167 (6.4) |
| Race | |
| White | 1630 (62.9) |
| Black | 118 (4.6) |
| Asian | 431 (16.6) |
| Other | 188 (7.3) |
| Missing | 223 (8.6) |
| Job classification | |
| Physician, academic | 438 (16.9) |
| Physician, non-academic | 1228 (47.4) |
| Nurse practitioner | 561 (21.7) |
| Physician assistant | 204 (7.9) |
| Missing | 159 (6.1) |
| Years at VA | |
| <2 | 437 (16.9) |
| 2–10 | 1219 (47.1) |
| 11–20 | 589 (22.7) |
| >20 | 201 (7.8) |
| Missing | 144 (5.6) |
| Native language | |
| English | 1911 (73.8) |
| Other | 498 (19.2) |
| Missing | 181 (7.0) |

VA, Department of Veterans Affairs.
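As a worked check of the arithmetic behind the reported figures, the sketch below recomputes the response rate and the age distribution in Table 2 from the published counts. The `pct` helper is an illustrative name; the counts are taken directly from the text and table.

```python
INVITED = 5001
RESPONDED = 2590


def pct(n, total):
    """Percentage rounded to one decimal place, as reported in the tables."""
    return round(100 * n / total, 1)


response_rate = pct(RESPONDED, INVITED)

# Age rows of Table 2, including the 'Missing' category.
age_counts = {
    "20-39": 338,
    "40-49": 685,
    "50-59": 961,
    "60 and over": 402,
    "Missing": 204,
}
age_pcts = {group: pct(n, RESPONDED) for group, n in age_counts.items()}

print(response_rate)                            # response rate in percent
print(sum(age_counts.values()) == RESPONDED)    # do the rows account for everyone?
print(age_pcts)
```

The same check applies to any characteristic in the table: the category counts, including the missing row, should sum to the 2590 respondents.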

Table 3 shows the distribution of responses to selected items related to use and perceptions of the EHR technology (hardware/software, clinical content, and human–computer interface). Although most PCPs found the EHR alert notification system easy to use, more than half believed that the EHR software did not have convenient features for notifying patients of test results. Fewer than two-thirds of practitioners used a basic sorting feature for alerts, and just over a third used an enhanced functionality to process alerts. Both of these features are intended to promote efficiency when managing alerts. In addition, a substantial proportion of PCPs (43.0%; data not shown) had not altered their alert notification filters to customize the amount and types of alerts they received, and thus received only the notifications that the facility had determined to be important and set as defaults.

Table 3.

Technology-related items associated with EHR-based test result management

| Item | Agree or strongly agree, n (%) | Disagree or strongly disagree, n (%) | Neither agree nor disagree, n (%) | Missing, n (%) |
|---|---|---|---|---|
| Hardware and software | | | | |
| CPRS has convenient features for notifying patients of test results | 628 (24.2) | 1437 (55.5) | 421 (16.3) | 104 (4.0) |
| Has a convenient way of generating letters in CPRS for patient notification | 1091 (42.1) | 1042 (40.2) | 347 (13.4) | 110 (4.2) |
| Has an automated voice messaging system that can notify patients of test results | 38 (1.5) | 2337 (90.2) | 113 (4.4) | 102 (3.9) |
| Uses My HealtheVet* to notify patients of test results | 45 (1.7) | 2308 (89.1) | 134 (5.2) | 103 (4.0) |
| Clinical content | | | | |
| Acknowledges (ie, clicks on) all high priority alert notifications | 2365 (91.3) | 80 (3.1) | 112 (4.3) | 33 (1.3) |
| Follows up on all high priority alert notifications received | 2372 (91.6) | 77 (3.0) | 97 (3.7) | 44 (1.7) |
| Acknowledges all alert notifications received regardless of priority | 2169 (83.7) | 228 (8.8) | 146 (5.6) | 47 (1.8) |
| Human–computer interface | | | | |
| Finds the alert notification system in CPRS easy to use† | 1842 (71.1) | 326 (12.6) | 393 (15.2) | 29 (1.1) |
| Follows up on all alert notifications received | 1968 (76.0) | 311 (12.0) | 258 (10.0) | 53 (2.0) |
| Alert notification system in CPRS makes it possible for providers to miss test results | 1440 (55.6) | 555 (21.4) | 506 (19.5) | 89 (3.4) |
| Sorts alert notifications when necessary according to urgency, patient name, location, or alert date/time | 1625 (62.7) | 562 (21.7) | 381 (14.7) | 22 (0.8) |
| Uses the Process All function of the system when necessary‡ | 981 (37.9) | 1219 (47.1) | 367 (14.2) | 23 (0.9) |

Italicized items are discussed in the text.

*My HealtheVet is a free, online personal health record for VA patients.

†CPRS (Computerized Patient Record System) is the Department of Veterans Affairs electronic health record software.

‡The Process All feature allows clinicians to efficiently process alerts one after another without returning to the View Alert window.

EHR, electronic health record; VA, Department of Veterans Affairs.

Table 4 shows the distribution of responses for survey items related to workflow and communication. A majority of practitioners stayed after hours or came in on weekends to address alerts; only about a third had remote access to the EHR to manage alerts after hours. More than half of PCPs electronically assigned a surrogate or covering practitioner to handle their alerts when out of the office, a process we defined as ‘electronic hand-off.’ Just over a third reported having the help needed for notifying patients of test results. Patient notification varied between normal and abnormal results. Almost half of PCPs reported that they did not immediately notify patients of normal test results and relied on the patient's next visit to notify them, whereas about one-fifth relied on the next visit to notify patients of abnormal results.

Table 4.

Workflow and communication items associated with EHR-based test result management

| Item | Agree or strongly agree, n (%) | Disagree or strongly disagree, n (%) | Neither agree nor disagree, n (%) | Missing, n (%) |
|---|---|---|---|---|
| Workflow and communication | | | | |
| Alert burden | | | | |
| The number of alerts exceeds what they can effectively manage | 1803 (69.6) | 321 (12.4) | 432 (16.7) | 34 (1.3) |
| Receives too many alerts to easily focus on most important ones | 2078 (80.2) | 215 (8.3) | 244 (9.4) | 53 (2.0) |
| In the past year, missed test results that led to delayed patient care | 772 (29.8) | 1212 (46.8) | 537 (20.7) | 69 (2.7) |
| Uses remote access after hours or on weekends to manage alerts | 893 (34.5) | 1327 (51.2) | 320 (12.4) | 50 (1.9) |
| Has remote access to CPRS | 953 (36.8) | 1599 (61.7) | – | 38 (1.5) |
| Stays after hours or comes in on weekends to manage alerts | 2218 (85.6) | 219 (8.5) | 105 (4.1) | 48 (1.9) |
| Wishes the system provided them with more alerts | 151 (5.8) | 2190 (84.6) | 208 (8.0) | 41 (1.6) |
| Receives too many alert notifications per day | 2251 (86.9) | 164 (6.3) | 166 (6.4) | 9 (0.3) |
| Uses additional paper-based methods to help follow test results | 1451 (56.0) | 1103 (42.6) | – | – |
| Gets too many FYI only alert notifications that require a signature even though no action on their part is required | 2071 (80.0) | 250 (9.7) | 258 (10.0) | 11 (0.4) |
| Often receives alert notifications where they are unsure as to why they were sent to them | 1601 (61.8) | 539 (20.8) | 432 (16.7) | 18 (0.7) |
| Patient notification | | | | |
| Consistently notifies patients of abnormal test results | 2146 (82.9) | 151 (5.8) | 185 (7.1) | 108 (4.2) |
| Has the help needed for notifying patients of test results | 981 (37.9) | 1180 (45.6) | 332 (12.8) | 97 (3.7) |
| Consistently notifies patients of normal test results | 1167 (45.1) | 902 (34.8) | 415 (16.0) | 106 (4.1) |
| Relies on a patient's next visit to notify them of their abnormal test results | 521 (20.1) | 1556 (60.1) | 407 (15.7) | 106 (4.1) |
| Relies on a patient's next visit to notify them of their normal test results | 1193 (46.1) | 858 (33.1) | 431 (16.6) | 108 (4.2) |
| Use of surrogates | | | | |
| Alert notifications related to surrogates create new safety concerns | 1339 (51.7) | 482 (18.6) | 730 (28.2) | 39 (1.5) |
| Has support staff to assist with management of test result alert notifications | 873 (33.7) | 1304 (50.3) | 311 (12.0) | 102 (3.9) |
| Assigns a surrogate to take care of alert notifications when out of the office | 1525 (58.9) | 788 (30.4) | 239 (9.2) | 38 (1.5) |

Italicized items are discussed in the text.

CPRS, Computerized Patient Record System; EHR, electronic health record; FYI, for your information.

Table 5 summarizes responses to items that assessed people and organizational features affecting alert management. Only about a third of respondents reported having an assigned technical contact they could access for help with alerts; most reported receiving help with alert management from colleagues. About a third of respondents reported receiving protected time for alert management; of these, most (69.8%) reported at least 4 hours per week.

Table 5.

Organizational and people items associated with EHR-based test result management

| Item | Agree or strongly agree, n (%) | Disagree or strongly disagree, n (%) | Neither agree nor disagree, n (%) | Missing, n (%) |
|---|---|---|---|---|
| Organizational policies and procedures | | | | |
| Technical support | | | | |
| Clinic has an assigned technical contact that can be accessed for help with alert notifications | 908 (35.1) | 947 (36.6) | 651 (25.1) | 84 (3.2) |
| IT help person provides useful resources | 621 (24.0) | 882 (34.1) | 988 (38.1) | 99 (3.8) |
| With questions about or problems with alert notifications, gets the help needed from IT | 887 (34.2) | 809 (31.2) | 807 (31.2) | 87 (3.4) |
| With questions about or problems with alert notifications, gets the help needed from colleagues | 1564 (60.4) | 348 (13.4) | 587 (22.7) | 91 (3.5) |
| Protected time | | | | |
| Has protected clinical time slots to manage alert notifications* | 779 (30.1) | 1773 (68.5) | – | 38 (1.5) |
| People | | | | |
| Supportive norms | | | | |
| Colleagues believe the alert notifications in CPRS help them get their job done effectively | 640 (24.7) | 849 (32.8) | 1041 (40.2) | 60 (2.3) |
| Supervisor believes alert notifications in CPRS are an essential component of effective primary care | 1403 (54.2) | 143 (5.5) | 981 (37.9) | 63 (2.4) |
| Senior management has emphasized the importance of the use of alert notifications in high quality care | 1359 (52.5) | 189 (7.3) | 981 (37.9) | 61 (2.4) |
| Performance expectancy | | | | |
| Using alert notifications in CPRS enhances providers’ ability to provide safe patient care | 2098 (81.0) | 167 (6.4) | 275 (10.6) | 50 (1.9) |
| Using alert notifications in CPRS enhances providers’ effectiveness on the job | 1803 (69.6) | 311 (12.0) | 423 (16.3) | 53 (2.0) |
| Using alert notifications in CPRS increases providers’ productivity | 952 (36.8) | 858 (33.1) | 723 (27.9) | 57 (2.2) |
| Using alert notifications in CPRS allows providers to meet performance standards | 1312 (50.7) | 542 (20.9) | 679 (26.2) | 57 (2.2) |

Italicized items are discussed in the text.

*Of the 779 providers who reported receiving protected time, 224 (28.8%) reported <4 h/week, 544 (69.8%) reported ≥4 h/week, and 11 (1.4%) did not report the number of hours of protected time.

CPRS, Computerized Patient Record System; EHR, electronic health record.

About half of respondents (54.2%) were aware of the 2009 Veterans Health Administration (VHA) policy (ie, external rules and regulations) on notifying patients of test results. However, among those aware of the policy, only 20% reported that they changed their result notification practices accordingly (data not shown in tables). Almost a third (31.9%) reported that their supervisors monitored how they managed notifications, but fewer (23.9%) received feedback on their follow-up practices (ie, system measurement and monitoring).

Table 6 summarizes PCPs’ assessments of potential new features and functions to improve EHR-based notification. PCPs strongly endorsed four functionalities to reduce loss of alert information in the EHR (preventing automated deletion of alerts, being able to retrieve deleted alerts, having a back button, and being able to access alerts in the inbox for at least 30 days). To improve alert management options, most agreed with the need for a feature to remind them at a later date of the necessity to take a follow-up action. In addition, most PCPs endorsed the need for a separate messaging system within the EHR to allow providers to communicate, rather than the traditional method of including these human-generated messages along with the EHR-generated notification system that was used for result notification. About two-thirds of respondents endorsed one or more new visualization techniques, including a separate window for high-priority alerts or a method to filter or color-code alerts based on type. Most PCPs supported strategies to improve safety related to hand-offs, including being able to assign responsibility of test result follow-up and to display who is responsible for follow-up. About half were in favor of receiving feedback about their alert management performance.

Table 6.

New features and functions to improve EHR-based notification

| Item | Agree or strongly agree, n (%) | Disagree or strongly disagree, n (%) | Neither agree nor disagree, n (%) | Missing, n (%) |
|---|---|---|---|---|
| Hardware and software | | | | |
| Improving communication and alert management options | | | | |
| I would like to be able to set reminders for myself for future actions | 2160 (83.4) | 64 (2.5) | 213 (8.2) | 153 (5.9) |
| I would like to have a messaging system within CPRS that would allow providers to communicate with one another - this would be outside the View Alert system | 1826 (70.5) | 174 (6.7) | 444 (17.1) | 146 (5.6) |
| Human–computer interface | | | | |
| Improving alert visualization | | | | |
| I would like to receive high priority test result notifications in one window, and all other alert notifications in another window | 1611 (62.2) | 474 (18.3) | 370 (14.3) | 135 (5.2) |
| I would like an option to display only certain alert notifications at a time (ie, filter to display only surrogate, inpatient, or high priority alerts) | 1722 (66.5) | 321 (12.4) | 398 (15.4) | 149 (5.8) |
| I would like to have my alert notifications color-coded according to type (eg, surrogate, inpatient, or high priority alerts) | 1720 (66.4) | 316 (12.2) | 414 (16.0) | 140 (5.4) |
| Better processing and tracking of alerts | | | | |
| I would like to be able to retrieve my deleted alert notifications | 2036 (78.6) | 193 (7.5) | 228 (8.8) | 133 (5.1) |
| High priority alert notifications should not disappear until I actively delete them after taking follow-up action | 1913 (73.9) | 266 (10.3) | 273 (10.5) | 138 (5.3) |
| There should be a mechanism in the alert notification system in CPRS to display the name of the person responsible for following up on the test result alert | 1596 (61.6) | 292 (11.3) | 557 (21.5) | 145 (5.6) |
| I would like to have a ‘back button’ in CPRS to retrieve the prior window | 2173 (83.9) | 67 (2.6) | 212 (8.2) | 138 (5.3) |
| All unacknowledged alert notifications should stay in the alert notification window for at least 30 days | 1689 (65.2) | 318 (12.3) | 445 (17.2) | 138 (5.3) |
| Workflow and communication | | | | |
| Improving the surrogate process | | | | |
| As a surrogate, I should only receive high priority alert notifications for patients assigned to the provider who is out of the office | 1659 (64.1) | 432 (16.7) | 360 (13.9) | 139 (5.4) |
| Currently it is not possible for a provider to directly assign, without IT assistance, more than one surrogate in CPRS. This capability should be introduced | 1555 (60.0) | 264 (10.2) | 618 (23.9) | 153 (5.9) |
| System measurement and monitoring | | | | |
| Improving feedback | | | | |
| I would like to receive feedback about my performance related to follow-up of high priority alert notifications | 1264 (48.8) | 479 (18.5) | 708 (27.3) | 139 (5.4) |

CPRS, Computerized Patient Record System; EHR, electronic health record.

Interpretation

Test result reporting has substantial patient safety implications and is now being considered in the meaningful use criteria for EHRs.38 However, our data suggest that despite the use of an advanced EHR system, both social and technical challenges exist in ensuring the reliability of test result notification to practitioners and patients within one of the world's largest healthcare systems. Other healthcare systems, most of which have a shorter history of EHR use, are likely to face similar challenges as they begin adopting EHRs. Several technical as well as social (personal, workflow, and organizational) factors need to be addressed in order for EHR-based test reporting functionality to be successful. PCPs in our sample endorsed several new features and functions to reduce loss of information in the EHR and to improve visualization of alerts that communicate test results.

We found that most providers do not routinely notify patients of normal test results, and a substantial proportion use the next scheduled visit to notify patients of both normal and abnormal results. A 1996 study conducted outside the VA reached similar conclusions, suggesting that this pattern has changed little in the last two decades.30 Recent initiatives within the VA are developing additional guidance to address test result management, which might change some of the patterns we found.39 As healthcare quality improvement efforts increasingly emphasize patient engagement, alternative methods are being explored for patients to receive test results. For instance, results are increasingly accessible to patients through online portals,40 although adoption has been slower than expected.41 42 Furthermore, the Department of Health and Human Services recently proposed a rule43 allowing patients to access test results directly from the laboratory upon patient request (ie, bypassing the provider). However, providers’ interpretation of test results within the context of the patient's other clinical conditions remains essential.44 Because most PCPs receive hundreds of test results a week,31 one area for potential improvement is PCPs’ resources to facilitate patient notification. Just over a third of the PCPs reported having administrative support for patient notification, presenting an opportunity to leverage the current shift towards team-based models of care (eg, medical home teams45). For example, with appropriate task delegation and clarity of roles, other members of the team could be responsible for tracking results and notifying patients.45 Future EHR development should focus on innovative methods to facilitate patient notification of test results.

Our findings underscore the additional time burden induced by EHR-based alert systems, with the majority of respondents staying after hours, coming in on weekends, or using remote access from home to deal with notifications. Unfortunately, there is little or no reimbursement for non-face-to-face time related to documentation, follow-up, and patient notification of test results. Although the interventions proposed in the survey focused mostly on technological features and workflow, several organizational and policy interventions are also needed to improve the outcomes of EHR-based test results reporting.

Based on our data, we recommend several strategies for improving test results reporting through EHRs. First, being able to easily retrieve and readily access critical test result data is an absolute requirement for safe and efficient care, and the ability to do so (eg, to retrieve previously viewed alerts) was endorsed by a large majority of respondents. For instance, providers might accidentally delete a result notification or need to return to an alert they processed some time ago, and these workflow requirements should inform EHR design. Second, to effectively address alert-related information overload,19 providers need better methods to display, sort, and visualize test result information46 according to patient, date, type, priority, and origin of alerts. Usability testing is essential to evaluate EHR support features for planning and prioritizing among high volumes of alerts. Third, most providers agreed with the need for strategies to improve electronic hand-offs in the EHR, including assignment of responsibility. There is surprisingly little knowledge to guide effective policy setting or practice management in this area. While health information technology can prevent communication breakdowns,47–50 vulnerabilities related to teamwork and care coordination51 52 have received less attention, and novel methods to reduce ambiguities related to these hand-offs are needed. Lastly, EHRs need to be able to support the ‘prospective’ memory53 of providers (memory of an intent to perform a future task) by facilitating alert tracking and self-reminders for future tasks. Some of these features should be deemed universal requirements in EHRs.
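To make the first, second, and last of these recommendations concrete, the following is a minimal illustrative sketch in Python of an alert inbox that keeps processed alerts retrievable, sorts pending alerts by a chosen attribute, and supports self-reminders. It does not describe the VA's EHR or any existing product; the `Alert` and `AlertInbox` names, their fields, and the priority scale are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Alert:
    """One test result notification (all fields are hypothetical)."""
    patient: str
    received: date
    kind: str                         # type of result, e.g. "lab" or "imaging"
    priority: int                     # assumed scale: 1 = most urgent
    origin: str                       # ordering clinic or service
    processed: bool = False
    remind_on: Optional[date] = None  # self-reminder for a future task


class AlertInbox:
    def __init__(self):
        self._alerts = []

    def add(self, alert):
        self._alerts.append(alert)

    def process(self, alert, remind_on=None):
        # Mark the alert as handled but keep it, so it can be retrieved later.
        alert.processed = True
        alert.remind_on = remind_on

    def pending(self, sort_by="priority"):
        # Unprocessed alerts, ordered by any attribute of Alert.
        unprocessed = (a for a in self._alerts if not a.processed)
        return sorted(unprocessed, key=lambda a: getattr(a, sort_by))

    def previously_viewed(self, patient):
        # Processed alerts remain accessible by patient.
        return [a for a in self._alerts if a.processed and a.patient == patient]

    def reminders_due(self, today):
        # Alerts whose self-reminder date has arrived.
        return [a for a in self._alerts
                if a.remind_on is not None and a.remind_on <= today]


inbox = AlertInbox()
lab = Alert("Patient A", date(2010, 6, 2), "lab", 2, "primary care")
scan = Alert("Patient B", date(2010, 6, 1), "imaging", 1, "radiology")
inbox.add(lab)
inbox.add(scan)
inbox.process(lab, remind_on=date(2010, 6, 10))
```

In this sketch, processing an alert never deletes it, which reflects the respondents' endorsement of being able to return to previously viewed alerts. A real system would also need persistence, access control, and an explicit record of who is responsible for each alert.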

Most of our results are generalizable outside the VA even though there are differences in EHR features, usage, organizational, workflow, and policy-related factors. This is because the VA has been a leader in the successful integration and use of comprehensive EHRs, and many of our findings are valuable for other healthcare institutions that are moving to more integrated, technology-enabled care environments as required to meet the new patient management challenges prescribed by accountable care organizations.54 Although the alert notification system was specific to the VA's EHR, most major EHRs use similar methods to notify providers of important clinical findings, and others will follow in compliance with meaningful use requirements. Our study had a relatively low response rate. However, this is comparable to or higher than the response rates of many other physician surveys,55–58 which traditionally are not very high.59 60 In fact, the large number of completed surveys represents one of the largest total responses regarding EHR characteristics ever reported.61 62 Studies that report higher response rates for physician surveys often utilize monetary incentives for participants (which may lead to a different bias in the results), a practice not permitted for data collection within the VA. Lastly, we did not have access to any demographic or practice-based characteristics of non-respondent physicians for comparison.

Overall, our survey data suggest that current capabilities for test result management are limited even within a well-established, mature EHR. A comprehensive socio-technical approach is needed to optimize EHR-based test result management. Key components of this socio-technical approach will include: (1) design, development, and testing of EHR features and functions to support physicians’ test result management workflows and inclusion of these new features in all EHRs; (2) new policies and procedures at the local institutional and national level regarding appropriate methods and timeliness of patient notification; and (3) commitments from organizational leadership and payers to acknowledge and support the additional, non-face-to-face work required to provide care for patients in an EHR-enabled healthcare system.

Acknowledgments

We thank Annie Bradford, PhD for assistance with medical editing.

Footnotes

Funding: The research reported here was supported by the Department of Veterans Affairs National Center for Patient Safety and in part by the Houston VA HSR&D Center of Excellence (HFP90-020), the National Library of Medicine (R01 LM006942), and the Office of the National Coordinator for Health Information Technology (ONC #10510592).

Competing interests: None.

The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or other funding agencies. The corresponding author had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Ethics approval: Baylor College of Medicine Institutional Review Board approved this study.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1.Wahls TL, Cram PM. The frequency of missed test results and associated treatment delays in a highly computerized health system. BMC Fam Pract 2007;8:32.

- 2.Schiff GD, Hasan O, Kim S, et al. Diagnostic error in medicine: analysis of 583 physician-reported errors. Arch Intern Med 2009;169:1881–7

- 3.Lorincz CY, Drazen E, Sokol PE, et al. Research in Ambulatory Patient Safety 2000–2010: a 10-year review. Am Med Assoc 2011. Available at: www.ama-assn.org/go/patientsafety. (accessed 3 Dec 2012)

- 4.Wynia MK, Classen DC. Improving ambulatory patient safety. JAMA 2011;306:2504–5

- 5.Callen JL, Westbrook JI, Georgiou A, et al. Failure to follow-up test results for ambulatory patients: a systematic review. J Gen Intern Med 2011

- 6.Murff HJ, Gandhi TK, Karson AK, et al. Primary care physician attitudes concerning follow-up of abnormal test results and ambulatory decision support systems. Int J Med Inform 2003;71:137–49

- 7.Poon EG, Wang SJ, Gandhi TK, et al. Design and implementation of a comprehensive outpatient Results Manager. J Biomed Inform 2003;36:80–91

- 8.Singh H, Vij MS. Eight recommendations for policies for communicating abnormal test results. Jt Comm J Qual Patient Saf 2010;36:226–32

- 9.Tenner CT, Shapiro NM, Wikler A. Improving health care provider notification in an academic setting: a cascading system of alerts. Arch Intern Med 2010;170:392.

- 10.Singh H, Thomas EJ, Mani S, et al. Timely follow-up of abnormal diagnostic imaging test results in an outpatient setting: are electronic medical records achieving their potential? Arch Intern Med 2009;169:1578–86

- 11.Singh H, Thomas EJ, Sittig DF, et al. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med 2010;123:238–44

- 12.Singh H, Arora H, Vij M, et al. Communication outcomes of critical imaging results in a computerized notification system. J Am Med Inform Assoc 2007;14:459–66

- 13.Casalino LP, Dunham D, Chin MH, et al. Frequency of Failure to Inform Patients of Clinically Significant Outpatient Test Results. Arch Intern Med 2009;169:1123–9

- 14.Elder NC, McEwen TR, Flach J, et al. The management of test results in primary care: does an electronic medical record make a difference? Fam Med 2010;42:327–33

- 15.Health Information Technology: Standards, Implementation Specifications, and Certification Criteria for Electronic Health Record Technology, 2014 Edition; Revisions to the Permanent Certification Program for Health Information Technology Office of the National Coordinator for Health Information Technology (ONC), Department of Health and Human Services. Federal Register 2012. (cited 2012 July 25); 77:13845 http://www.gpo.gov/fdsys/pkg/FR-2012-03-07/pdf/2012-4430.pdf (accessed 3 Dec 2012)

- 16.Hysong SJ, Sawhney MK, Wilson L, et al. Understanding the management of electronic test result notifications in the outpatient setting. BMC Med Inform Decis Mak 2011;11:22.

- 17.Final Report: Summary review: Evaluation of Veterans Health Administration procedures for communicating abnormal test results. Report number 01-01965-24. 11-25-2002.

- 18.Hysong SJ, Sawhney MK, Wilson L, et al. Provider management strategies of abnormal test result alerts: a cognitive task analysis. J Am Med Inform Assoc 2010;17:71–7

- 19.Singh H, Spitzmueller C, Petersen NJ, et al. Information overload and missed test results in electronic health record-based settings. Arch Intern Med. In press

- 20.Taylor SL, Dy S, Foy R, et al. What context features might be important determinants of the effectiveness of patient safety practice interventions? BMJ Qual Saf 2011;20:611–17

- 21.Yano EM, Simon B, Canelo I, et al. 1999 VHA survey of primary care practices. 00-MC12. VA HSR&D Center of Excellence for the Study of Healthcare Provider Behavior, 2000.

- 22.Forward momentum: Hospital use of information technology. http://www.aha.org/content/00-10/FINALNonEmbITSurvey105.pdf. 2005 (accessed 3 Dec 2012)

- 23.Lewis JR. IBM computer usability satisfaction questionnaires: psychometric evaluation and instructions for use. Int J Hum Comput Interact 1995;7:57–78

- 24.Clason DL, Dormody TJ. Analyzing data measured by individual Likert-type items. J Agricultural Educ 2000;35:31–6

- 25.Lyons SS, Tripp-Reimer T, Sorofman BA, et al. VA QUERI informatics paper: information technology for clinical guideline implementation: perceptions of multidisciplinary stakeholders. J Am Med Inform Assoc 2005;12:64–71

- 26.Campbell EG, Regan S, Gruen RL, et al. Professionalism in medicine: results of a national survey of physicians. Ann Intern Med 2007;147:795–802

- 27.Jha AK, Ferris TG, Donelan K, et al. How common are electronic health records in the United States? A summary of the evidence. Health Aff (Millwood) 2006;25:w496–507

- 28.Cutler DM, Feldman NE, Horwitz JR. U.S. adoption of computerized physician order entry systems. Health Aff (Millwood) 2005;24:1654–63

- 29.Ash JS, Gorman PN, Seshadri V, et al. Computerized physician order entry in U.S. hospitals: results of a 2002 survey. J Am Med Inform Assoc 2004;11:95–9

- 30.Boohaker E, Ward RE, Uman JE, et al. Patient notification and follow-up of abnormal test results. A physician survey. Arch Intern Med 1996;156:327–31

- 31.Poon E, Gandhi T, Sequist T, et al. "I wish I had seen this test result earlier!": dissatisfaction with test result management systems in primary care. Arch Intern Med 2004;164:2223–8

- 32.Hickner J, Graham DG, Elder NC, et al. Testing process errors and their harms and consequences reported from family medicine practices: a study of the American Academy of Family Physicians National Research Network. Qual Saf Health Care 2008;17:194–200

- 33.Elder NC, Dovey SM. Classification of medical errors and preventable adverse events in primary care: a synthesis of the literature. J Fam Pract 2002;51:927–32

- 34.Elder NC, Pallerla H, Regan S. What do family physicians consider an error? A comparison of definitions and physician perception. BMC Fam Pract 2006;7:73.

- 35.Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010;19(Suppl 3):i68–74

- 36.2009. VHA Directive 2009–019: Ordering and Reporting Test Results. Veterans Health Administration. http://www1.va.gov/vhapublications/ViewPublication.asp?pub_ID=1864 (accessed 3 Dec 2012)

- 37.Singh H, Wilson L, Reis B, et al. Ten strategies to improve management of abnormal test result alerts in the electronic health record. J Patient Saf 2010;6:121–23.

- 38.Blumenthal D, Tavenner M. The "Meaningful Use" Regulation for Electronic Health Records. N Engl J Med 2010;363:501–4

- 39.Sittig DF, Singh H. Improving test result follow-up through electronic health records requires more than just an alert. J Gen Intern Med 2012;27:1235–37.

- 40.Fraser HS, Allen C, Bailey C, et al. Information systems for patient follow-up and chronic management of HIV and tuberculosis: a life-saving technology in resource-poor areas. J Med Internet Res 2007;9:e29.

- 41.Archer N, Fevrier-Thomas U, Lokker C, et al. Personal health records: a scoping review. J Am Med Inform Assoc 2011;18:515–22

- 42.Sittig DF. Personal health records on the internet: a snapshot of the pioneers at the end of the 20th Century. Int J Med Inform 2002;65:1–6

- 43.CLIA Program and HIPAA Privacy Rule Patients’ Access to Test Reports (42 CFR 493, 45 CFR 164). Fed Regist 2011;76

- 44.Davis Giardina T, Singh H. Should patients get direct access to their laboratory test results? An answer with many questions. JAMA 2011;306:2502–3

- 45.Singh H, Graber M. Reducing diagnostic error through medical home-based primary care reform. JAMA 2010;304:463–4

- 46.Woods DD, Patterson E, Roth EM. Can we ever escape from data overload? A cognitive systems diagnosis. Cogn Technol Work 2002;4:22–36

- 47.Brantley SD, Brantley RD. Reporting significant unexpected findings: the emergence of information technology solutions. J Am Coll Radiol 2005;2:304–7

- 48.Kuperman GJ, Teich JM, Tanasijevic MJ, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc 1999;6:512–22

- 49.Petersen LA, Orav EJ, Teich JM, et al. Using a computerized sign-out program to improve continuity of inpatient care and prevent adverse events. Jt Comm J Qual Improv 1998;24:77–87

- 50.Singh H, Naik A, Rao R, et al. Reducing diagnostic errors through effective communication: harnessing the power of information technology. J Gen Int Med 2008;23:489–94

- 51.Woolf SH, Kuzel AJ, Dovey SM, Jr, et al. A string of mistakes: the importance of cascade analysis in describing, counting, and preventing medical errors. Ann Fam Med 2004;2:317–26

- 52.Gandhi TK, Sittig DF, Franklin M, et al. Communication breakdown in the outpatient referral process. J Gen Intern Med 2000;15:626–31

- 53.Dismukes K. Remembrance of things future: prospective memory in laboratory, workplace, and everyday settings. Hum Factors Ergon Soc 2010;6:79–122

- 54.Fisher ES, McClellan MB, Safran DG. Building the path to accountable care. N Engl J Med 2011;365:2445–7

- 55.Kellerman SE, Herold J. Physician response to surveys. A review of the literature. Am J Prev Med 2001;20:61–7

- 56.Singh H, Thomas EJ, Wilson L, et al. Errors of diagnosis in pediatric practice: a multisite survey. Pediatrics 2010;126:70–9

- 57.Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol 1997;50:1129–36

- 58.Cummings SM, Savitz LA, Konrad TR. Reported response rates to mailed physician questionnaires. Health Serv Res 2001;35:1347–55

- 59.Halpern SD, Kohn R, Dornbrand-Lo A, et al. Lottery-based versus fixed incentives to increase clinicians’ response to surveys. Health Serv Res 2011;46:1663–74

- 60.Nicholls K, Chapman K, Shaw T, et al. Enhancing response rates in physician surveys: the limited utility of electronic options. Health Serv Res 2011;46:1675–82

- 61.Poon EG, Wright A, Simon SR, et al. Relationship between use of electronic health record features and health care quality: results of a statewide survey. Med Care 2010;48:203–9

- 62.Simon SR, Soran CS, Kaushal R, et al. Physicians’ use of key functions in electronic health records from 2005 to 2007: a statewide survey. J Am Med Inform Assoc 2009;16:465–70