Abstract

The recruitment and retention of participants and the blinding of participants, health care providers, and data collectors present challenges for clinical trial investigators. This article reviews challenges and alternative strategies associated with these three important clinical trial activities. Common recruiting pitfalls, including low sample size, unfriendly study designs, suboptimal testing locations, and untimely recruitment are discussed together with strategies for overcoming these barriers. The use of active controls, technology-supported visit reminders, and up-front scheduling is recommended to prevent attrition and maximize retention of participants. Blinding is conceptualized as the process of concealing research design elements from key players in the research process. Strategies for blinding participants, health care providers, and data collectors are suggested.

MeSH TERMS: behavioral research, clinical trials as topic, double-blind method, patient dropouts, patient selection

As noted by its originators, evidence-based practice (EBP) is typified by “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” (Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996, p. 71). The concept of EBP has attracted widespread support among stakeholders interested in ensuring that interventions are safe, credible, and appropriate.

This growing commitment to ensuring that practice aligns with sound research findings poses challenges to occupational therapy practitioners. For example, “standard of care” occupational therapy practice has sometimes consisted of clinicians’ impressions of what works with their caseloads, information gained during a clinician’s academic or continuing education training, and the way a disorder has traditionally been addressed at the particular institution. Indeed, in the authors’ shared interest area of neurorehabilitation, conceptualizations about the etiology and treatment of certain disorders and personal investment in certain intervention strategies often significantly influence the selection of certain treatments. Although informative, the sole use of training, clinical judgment, and content expertise is insufficient to evaluate treatment efficacy or to act as the single basis for treatment guidelines. EBP can also be challenging for clinicians who do not have the training, comfort, time, or institutional support to search and integrate evidence into practice. To this end, Green, Gorenflo, and Wyszewianski (2002) reported considerable variability in the value and credibility that clinicians place on experiential versus empirical evidence and in their willingness to integrate new, empirically supported strategies into practice. Such reluctance can delay translation of promising therapies to clinical use.

Regardless of individual clinicians’ comfort with or use of EBP, one outcome of its increasing emphasis has been greater value placed on trials of intervention effectiveness. For example, the American Occupational Therapy Association (AOTA) Centennial Vision asserts that occupational therapy will emerge as an “evidence-based profession” (AOTA, 2007, p. 1). Likewise, AOTA and other allied health organizations (e.g., American Physical Therapy Association [APTA], American Congress of Rehabilitation Medicine) have deployed tools to ease the process of searching for evidence (e.g., APTA’s Hooked on Evidence Web site; focused “white papers,” podcasts, and position statements). For those of us involved in research, clinical trials constitute a tangible method of improving practice and increasing the validity of the occupational therapy profession by producing evidence that guides clinical decision making. Yet, an emphasis on clinical trials also creates unique challenges for professions that largely use behavioral interventions. For example, unlike a pharmacological intervention, a behavioral therapy is not easily controlled, and participants frequently know which intervention they are receiving. Moreover, clients receiving occupational therapy services are often undergoing myriad therapies. Collectively, these and other challenges make recruitment to occupational therapy intervention trials, the isolation of the active therapeutic ingredients, and blinding of participants difficult. This article discusses three of the most vexing challenges associated with occupational therapy behavioral trials: participant recruiting, participant retention, and blinding. Alternative strategies that have been used to overcome these challenges are also discussed.

Recruiting in Clinical Trials

Enrollment of the targeted number of participants is essential to conducting a successful clinical trial, primarily because adequate enrollment provides a basis for testing the study hypothesis. Enrollment of too few participants can result in an underpowered study, which increases the risk of Type II errors (i.e., failing to detect a treatment effect that actually exists). Additionally, a successful recruitment strategy provides an adequate pool of qualified participants in case a participant decides, or is asked, to withdraw from study participation.

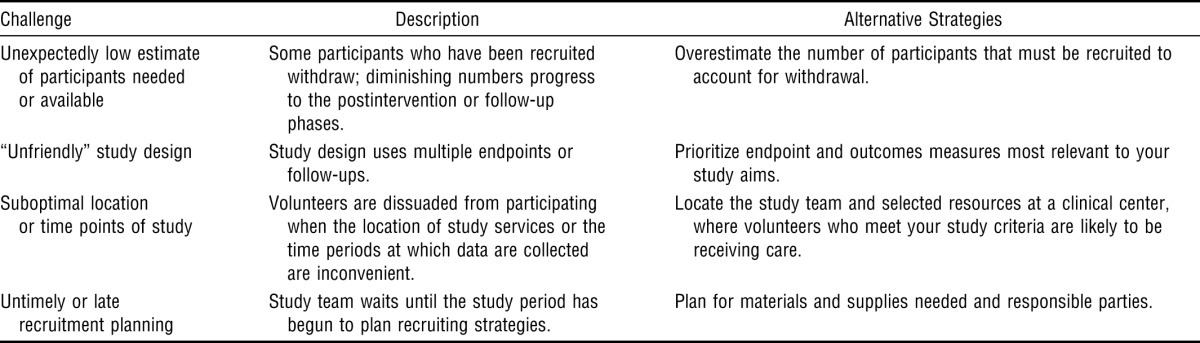

Yet, despite its fundamental importance, successful participant recruitment is a frequent challenge. Although a goal of this article is to share effective recruitment strategies, a brief review of some of the pitfalls encountered during participant recruitment can also be instructive. These pitfalls and some of their alternative strategies are summarized in Table 1.

Table 1.

Recruitment Challenges and Alternative Strategies

| Challenge | Description | Alternative Strategies |
| --- | --- | --- |
| Unexpectedly low estimate of participants needed or available | Some participants who have been recruited withdraw; diminishing numbers progress to the postintervention or follow-up phases. | Overestimate the number of participants that must be recruited to account for withdrawal. |
| “Unfriendly” study design | Study design uses multiple endpoints or follow-ups. | Prioritize endpoint and outcomes measures most relevant to your study aims. |
| Suboptimal location or time points of study | Volunteers are dissuaded from participating when the location of study services or the time periods at which data are collected are inconvenient. | Locate the study team and selected resources at a clinical center, where volunteers who meet your study criteria are likely to be receiving care. |
| Untimely or late recruitment planning | Study team waits until the study period has begun to plan recruiting strategies. | Plan for materials and supplies needed and responsible parties. |

The number of participants needed to successfully answer the research question is usually established a priori. However, researchers may overlook the fact that although a high fraction of recruited participants successfully reach the enrollment phase, the numbers diminish by the end of pretesting, the end of the intervention phase, and follow-up visits. The principal investigator (PI) should expect that of the participants recruited, some will be screen failures (i.e., will not meet eligibility criteria), some will withdraw on their own, and others will be withdrawn by investigators because of noncompliance or adverse events. Investigators should specify a larger number of initial participants when writing their protocols. In our laboratory, we usually anticipate a 10% rate of study withdrawal and an additional 10% loss during follow-up.
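The allowance described above can be turned into simple arithmetic. The following is a minimal sketch rather than the authors' actual procedure: it assumes that the 10% withdrawal and the additional 10% follow-up loss apply one after the other, and the function name `participants_to_enroll` and the target of 40 completers are hypothetical.

```python
import math
from fractions import Fraction


def participants_to_enroll(target_completers, withdrawal_rate=0.10,
                           follow_up_loss_rate=0.10):
    """Return how many participants to enroll so that, after expected
    withdrawal and follow-up loss, about `target_completers` remain.

    Assumption (not from the article): the two losses are sequential,
    so the retained fraction is (1 - withdrawal) * (1 - follow-up loss).
    """
    for rate in (withdrawal_rate, follow_up_loss_rate):
        if not 0 <= rate < 1:
            raise ValueError("rates must be in the range [0, 1)")
    # Exact fractions avoid floating-point surprises when rounding up.
    retained = ((1 - Fraction(str(withdrawal_rate)))
                * (1 - Fraction(str(follow_up_loss_rate))))
    return math.ceil(Fraction(target_completers) / retained)


# Hypothetical example: the power analysis calls for 40 completers.
print(participants_to_enroll(40))  # prints 50
```

Screen failures are not modeled here; a team that expects many ineligible volunteers would need to contact still more candidates than this figure suggests.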

Any study must be rigorously designed to accomplish aims and evaluate hypotheses. When conceptualizing studies, investigators must weigh the requirements of study participation against the toll that participation will exact on participants (e.g., time, mental or physical fatigue). For example, although designs using multiple endpoints and testing sessions may provide a more precise characterization of the intervention response, participants are unlikely to enter studies that involve procedures they find difficult to understand or that require multiple follow-ups. Concurrently, investigators also should consider the necessity of study criteria in designing the study.

On the one hand, inclusion and exclusion criteria are often advantageous because such criteria ensure a well-defined sample of participants and can be created in such a way as to exclude participants with sequelae that may undermine study participation. For instance, in our stroke trials, we often restrict participation to people within a relatively narrow age range; those who are much older or younger may respond differently to an intervention, thereby affecting the study’s internal validity. By keeping participants’ ages within a certain well-defined range, we reduce participant heterogeneity and the likelihood that this extraneous variable will affect outcomes.

On the other hand, delimiting participant characteristics can have disadvantages, including reducing the generalizability of study findings to the general population. For example, if we narrowed the age range of eligible participants to include only younger people, our findings would be less likely to generalize to people who have had strokes, who tend to be older. Investigative teams must weigh the benefits of more versus less restrictive study criteria when initiating a trial. In our laboratory, we usually use more rigid and specific study criteria in our pilot work, when we are trying to affirm safety and efficacy and optimize study endpoints. We may then loosen some criteria as we progress to later and larger trials so that we can identify the participants most likely to respond to the intervention.

In designing their studies, investigators should consider pragmatic issues such as the following: Where will participants be encountered? Is the setting a place that they normally frequent at this point in the trajectory of the disease process? Is there a cost (e.g., parking) for the participant to travel to this place? Ideally, the investigator should anticipate where participants will prefer to be seen and minimize the perceived cost and effort associated with study participation to optimize successful recruitment. For example, the first author (Stephen Page) located one of his research laboratories at a rehabilitation hospital approximately 10 miles from his academic medical center. Although this distance created some occasional inconveniences, it positioned his team closer to potential participants. Consideration should also be given to the time point at which clients will likely receive services. For instance, if a trial requires inpatients, it will likely be suboptimal for the research team to be located in an outpatient facility. Expenses associated with all of the above arrangements could be placed in the advertising budget of supporting grants.

Too often, researchers wait until after the study period has begun to develop and implement a recruitment plan, which creates difficulties for a number of reasons. First, some aspects of a recruitment plan may require resources that take time to accumulate. For instance, in our laboratory, we often engage hospitals and clinics across the community as recruitment sites. Thus, we prefer to speak with administrators and clinicians well before the study starts. This strategy allows us to gain their buy-in and gives them ample time to recruit candidates. Second, some aspects of the study may require time or financial resources (e.g., advertisements, supplies) or may require additional institutional review board (IRB) review. For these reasons, one of the authors (Page) scripts the recruiting strategy several months in advance of the trial’s start date, including a timeline that details each recruitment activity, the supplies needed for it, and the party responsible for it. By being detailed and preemptive, researchers can be ready to recruit and expend their limited resources effectively when the study begins.

Although they do not fit neatly into one of the above categories, we wish to highlight two other points relating to recruiting. First, we have found that conducting pilot trials is useful in confirming the effectiveness of one’s recruitment strategies (Loscalzo, 2009). Such trials allow the investigative team to experience and troubleshoot some of the above-described challenges on a smaller scale. For this reason, funding agencies are increasingly encouraging researchers to use pilot trials to perfect recruitment strategies, confirm intervention safety and efficacy, and optimize study endpoints.

Second, the equitable recruitment of minority populations, women, and children has become an important issue to funders. Ethnicity, gender, and ages of participants should thus be elucidated in the recruiting plan and logged by the study team. During the Advancing Clinical Trials and Outcomes Research (ACTOR) conference, one occupational therapy leader noted that of the more than 1,000 participants her team had recruited into clinical trials, more than 800 were from a single minority population. Researchers should use this as a cautionary reminder that a disproportionate number of participants from any single population is likely to undermine a study’s generalizability.

To our knowledge, we are the first to use the terms passive recruiting and active recruiting to describe the manner in which particular recruitment strategies engage potential participants. The term passive recruiting strategies loosely refers to strategies in which the research team makes an initial effort to gain participants’ attention (e.g., a centrally placed study advertisement), but the onus is largely on the participant to take action. Considered on a continuum with passive strategies, active recruiting strategies are typified by the investigative team taking a more active role in the recruiting process (e.g., providing community-based in-services). In our laboratory, we have found the use of a mix of passive and active strategies to be optimal, with more active strategies emphasized whenever possible. Examples of these strategies are depicted in Figure 1.

Figure 1.

Continuum of selected passive and active recruiting strategies.

*Requires a Health Insurance Portability and Accountability Act waiver or other special permissions.

Retention in Clinical Trials

Identifying participants and enrolling them in the trial do not conclude the investigative team’s responsibilities. Clinical trial teams must also be concerned with ensuring that participants adhere to all aspects of the study protocol (discussed elsewhere in this issue; see Persch & Page, 2013) and remain in the study (i.e., retention). Retention is important because participants who are enrolled but do not complete a trial (study attrition) can undermine the internal and external validity of the findings. Specifically, attrition can cause study results to be biased when participants are not lost randomly but have certain characteristics that sustain better or worse outcomes (Britton, Murray, Bulstrode, McPherson, & Denham, 1995). Although some may argue that a priori randomization and intention-to-treat analysis methods overcome this issue, they cannot account for nonrandom treatment termination. In addition to biasing the trial’s outcomes, study attrition usually necessitates that more participants be enrolled to attain adequate power for the trial results to be valid, which may increase the trial’s cost or duration or delay important results. Although attrition is likely to occur in most clinical trials, bias can be expected when the attrition rate exceeds 20% (Marcellus, 2004).
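The 20% figure lends itself to a routine check during a trial. The sketch below is illustrative only: the function names and the enrollment counts are hypothetical, and attrition is assumed to mean the share of enrolled participants who did not complete the trial. Computing the rate per group matters because, as noted above, bias arises when participants are not lost randomly.

```python
BIAS_THRESHOLD = 0.20  # attrition level above which bias can be expected (Marcellus, 2004)


def attrition_rate(enrolled, completed):
    """Fraction of enrolled participants who did not complete the trial."""
    if enrolled <= 0 or not 0 <= completed <= enrolled:
        raise ValueError("need enrolled > 0 and 0 <= completed <= enrolled")
    return (enrolled - completed) / enrolled


def exceeds_bias_threshold(enrolled, completed, threshold=BIAS_THRESHOLD):
    """True when attrition is strictly above the threshold."""
    return attrition_rate(enrolled, completed) > threshold


# Hypothetical counts per group: (enrolled, completed).
groups = {"experimental": (25, 22), "active control": (25, 18)}
for name, (enrolled, completed) in groups.items():
    rate = attrition_rate(enrolled, completed)
    flag = "review" if exceeds_bias_threshold(enrolled, completed) else "ok"
    print(f"{name}: {rate:.0%} attrition ({flag})")
```

In this made-up example the overall rate is exactly 20%, yet the losses are concentrated in one group, which is the pattern most likely to distort the comparison.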

Several factors can deleteriously affect participant retention. In the sections that follow, we highlight some study facets that may affect retention and suggest alternative strategies.

Study Design

A large number of follow-up tests or a study design in which participants are relegated to a control group that receives no perceived benefit may increase the likelihood of attrition. For example, some participants have withdrawn from our studies following the intervention phase because they are no longer provided with therapies after this point, although study visits continue to occur. It has been suggested that compensation in the form of monetary payments, gifts, or free health or child care could be provided to participants who attend selected study visits (e.g., Cooley et al., 2003). It is difficult, however, to ascertain how to gauge compensation so that it is sufficiently high to encourage participation without being so high that it is coercive. Moreover, the impact of monetary compensation on preventing attrition is not well established (Corrigan & Salzer, 2003; Orrell-Valente, Pinderhughes, Valente, & Laird, 1999). As an alternative to participant remuneration, we have used an active control condition in many of our neurorehabilitation trials. This alternative provides participants with the potential to derive perceived benefit from study participation, even if they are not in the experimental condition, and allows investigators to compare the efficacy of the experimental intervention against a typical care strategy.

Visit Reminders

Recorded messages or telephone calls have become a common method for physician offices to remind clients of an impending appointment. The study team can likewise use these methods to remind participants of an impending study visit and to troubleshoot barriers to attendance. With the increasing use of cellular telephones, the ability to send text message reminders to participants about study visits is also promising. Whether sent by telephone, text message, or e-mail, the content of reminders should be approved by the IRB and can be made consistent from participant to participant to ensure that no bias is introduced.

Up-Front Scheduling

Participants may not attend a scheduled study session because they forget about the session or do not have a tangible reminder of the visit. In addition to telephone or e-mail visit reminders, we provide the participant and care partner with a written document detailing the dates and times of every testing and therapy visit. The names, locations, and contact information of the people whom participants will encounter during each visit are also included. We have found that providing this information at one of the first meetings after consent induces the participants to “burn it” into their schedules and provides a concrete reminder of their study appointments.

Demographic and Other Factors

A variety of demographic factors appear to be predictive of attrition, including older age, male gender, lower education, functional impairment, poorer cognitive performance, lower verbal intelligence, and greater comorbidities or worse physical health (Driscoll, Killian, Johnson, Silverstein, & Deeb, 2009). Transparency of the informed consent document; a strong relationship among the study coordinator, care providers, and participants; and consistency in protocols for maintaining contact with participants contribute to decreased attrition (Bedlack & Cudkowicz, 2009). In our laboratory, we have one person who acts as participants’ primary contact for the study; however, we also cross-train all of our personnel to be knowledgeable about all of our ongoing trials so that everyone can ably respond to a participant’s needs.

Blinding in Clinical Trials

Blinding is necessary for control of bias in clinical trials. We define blinding as the process of concealing research design elements such as group assignment, treatment agent, and research hypotheses from participants, health care providers, or data collectors (Penson & Wei, 2006; Portney & Watkins, 2000). Blinding allows the researcher to minimize threats to internal validity and construct validity, thereby strengthening external validity and improving the generalizability of results (Portney & Watkins, 2000).

The importance of blinding falls along a relative continuum that the investigator must consider when designing experimental research. When treatment and control group interventions are indistinguishable, such as in pharmaceutical trials, blinding of personnel is relatively less important and easier to achieve. When treatment and control group interventions are dissimilar, however, such as in behavioral trials, blinding becomes relatively more important and harder to achieve. The variety of practice settings and intervention strategies rehabilitation professionals use necessitates a mastery of blinding techniques across this continuum.

Whom Are We Blinding?

For the purposes of this article, we adopt the language used in the Consolidated Standards of Reporting Trials (CONSORT) statement (Schulz, Altman, & Moher, 2010):

Participants should be blinded to group assignment to control for the psychological effects associated with knowing group assignment. Participant knowledge of group assignment may bias the study in terms of altered attitudes, compliance, cooperation, and attendance (Pocock, 1983).

Health care providers are the people administering the intervention to participants or are professionals otherwise involved in the care of participants during the trial. Blinding of health care providers is especially important when knowledge of group assignment may affect normal care treatment decisions, cause a provider to monitor changes more closely, or result in increased excitement or enthusiasm (Pocock, 1983).

Data collectors may administer outcome assessments, score assessments, analyze the data, and manage databases. Blinding of the data collector to group assignment is necessary to ensure objectivity and avoid the risk that assessors will record more favorable responses when treatment status is known or may assume that improved performance is evidence of treatment status (Pocock, 1983). Blinding of data collectors is also important in behavioral trials because of the influence of clinical judgment on outcome assessments.

Strategies for achieving successful blinding with these groups are described in the sections that follow.

Types of Blinding

The terms unblinded, single-blinded, double-blinded, and triple-blinded have been used to describe a variety of design methodologies (Friedberg, Lipsitz, & Natarajan, 2010; Iber, Riley, & Murray, 1987; Meinert & Tonascia, 1986; Penson & Wei, 2006; Portney & Watkins, 2000). Unblinded studies are those in which all parties are aware of group assignment. They are relatively simple to carry out and allow health care providers to make informed treatment decisions. Unblinded trials are limited by an increased likelihood of bias, participant dissatisfaction with nontreatment status, dropouts, and preconceived notions about treatment (Friedman, Furberg, & DeMets, 1998).

The term single-blind is often used in two distinct ways. First, single-blind may be used to describe a trial in which only the investigator is aware of group assignment (Friedman et al., 1998). Such usage is common in pharmaceutical trials, in which it is relatively easy to blind the participant. In the health and rehabilitation sciences, however, the term single-blind refers to trials in which the data collector is blind to group assignment. The advantages and disadvantages of the single-blinded study are similar to those of an unblinded study.

Double-blind is the most commonly used term to describe trials in which neither the participant nor the investigator is aware of group assignment. Having evolved within the realm of pharmaceutical trials, this level of blinding can be difficult to achieve in behavioral research because practitioners are not easily deceived by bogus interventions. Double-blinded trials reduce the risk of bias because the actions of the investigator theoretically affect both the treatment and the control group equally. The term triple-blind is sometimes used interchangeably with double-blind. Accordingly, a degree of ambiguity exists in the usage of these terms. To address this inconsistency, the CONSORT statement suggests that authors “explicitly report the blinding status” of the individuals or groups involved in the trial (Moher et al., 2010, p. 12).

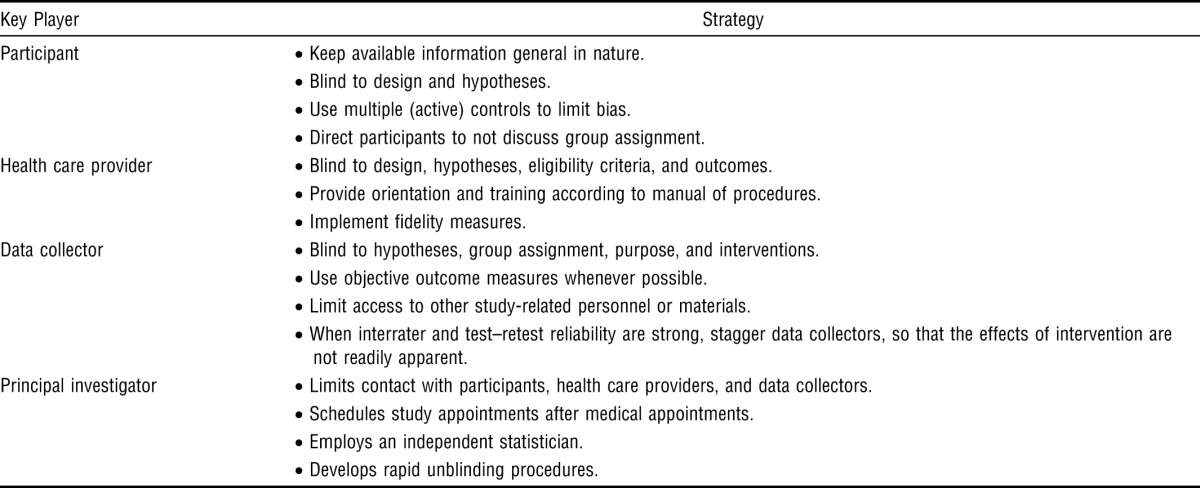

Strategies for Blinding

Many methods are available for successfully blinding participants, health care providers, and data collectors in pharmaceutical trials, in which use of a treatment allocation scheme, established masking guidelines, a data scheme, coding of drugs, and placebos and development of rapid unblinding protocols all make the blinding process relatively easy (Friedman et al., 1998; Meinert & Tonascia, 1986; Penson & Wei, 2006; Pocock, 1983). The application of these strategies to behavioral research is often impossible, impractical, or infeasible, thus making blinding more difficult. Yet, investigators have developed a number of novel approaches for blinding key groups throughout the research process. Table 2 presents strategies for blinding these key groups.

Table 2.

Strategies for Blinding Key Players in Behavioral Trials

| Key Player | Strategy |
| --- | --- |
| Participant | • Keep available information general in nature. |
| | • Blind to design and hypotheses. |
| | • Use multiple (active) controls to limit bias. |
| | • Direct participants to not discuss group assignment. |
| Health care provider | • Blind to design, hypotheses, eligibility criteria, and outcomes. |
| | • Provide orientation and training according to manual of procedures. |
| | • Implement fidelity measures. |
| Data collector | • Blind to hypotheses, group assignment, purpose, and interventions. |
| | • Use objective outcome measures whenever possible. |
| | • Limit access to other study-related personnel or materials. |
| | • When interrater and test–retest reliability are strong, stagger data collectors, so that the effects of intervention are not readily apparent. |
| Principal investigator | • Limits contact with participants, health care providers, and data collectors. |
| | • Schedules study appointments after medical appointments. |
| | • Employs an independent statistician. |
| | • Develops rapid unblinding procedures. |

Blinding Participants.

One strategy for blinding participants is to keep publicly available documents general in nature—for example, by keeping hypotheses out of recruitment literature and consent documents. Blinding is also strengthened when participants are unaware of the research design and when active control participants are used. Participants should be directed to not discuss group assignment with health care providers and data collectors (Lowe, Wilson, Sackley, & Barker, 2011). For example, in our laboratory, we fully describe the nature of the interventions that participants may receive; however, we refrain from using language in our consents or advertisements that may suggest to participants which group is the experimental group or which condition is expected to respond better to the intervention.

Blinding Health Care Providers.

Blinding health care providers presents a unique challenge within the helping professions. Therapists are not easily fooled by sham interventions. They know which interventions are legitimate and which are not. To limit bias, health care providers may be blinded to hypotheses, eligibility criteria, and outcome measures. Therapeutic interventions should be manualized so that they are provided in a consistent manner (Johnson & Remien, 2003). Fidelity measures may be developed and deployed to ensure that interventions are consistent. Training and supervision of health care providers helps control for variability in the delivery of interventions (Johnson & Remien, 2003). For example, in our laboratory, we provide regular in-services to our treating therapists that include a review of the pertinent literature, case studies and videos of the interventions and common treatment responses, and other training strategies to familiarize therapists with the protocol. Additionally, we standardize the therapy regimen in our manual of procedures and conduct regular checks to ensure that therapies are being administered consistently.

Blinding Data Collectors.

To limit bias, the data collector and health care provider groups should be composed of different sets of practitioners. The use of objective outcome measures with established reliability and validity also helps minimize threats of bias (Penson & Wei, 2006). Data collectors should be blinded to hypotheses, group assignment, purpose, and the interventions received by the participants they assess. Qualitative strategies, such as use of a diary, help document any irregularities during data collection. Additionally, data collectors should have no access to study data, including databases, previously completed assessments, notes, or questionnaires (Lowe et al., 2011). Data collectors should be kept away from health care providers whenever possible. If it is not possible to keep these groups away from each other, then study-related business should not be discussed in common areas and incoming telephone calls should be screened so that data collectors do not hear discussion regarding intervention (Lowe et al., 2011).

Blinding Other Personnel.

Other study-related personnel sometimes require blinding. Whenever possible, the PI should limit interactions with participants, health care providers, and data collectors. We acknowledge that the PI must balance the need to limit bias with the logistical considerations of managing the study. We suggest that PIs engage in a process of epoche to document their interactions. Blinding of physicians involved in ordinary care may be required under certain circumstances. When it is not possible to blind the physicians, it is helpful to schedule study-related appointments after medical visits so that participant reports of study-related activities will not affect standard medical care. Blinding of data analysts is arguably easiest to achieve. Blinding of analysts allows for handling of data and statistical issues in an objective manner (Polit, 2011). The best ways to achieve blinding of analysts are to employ an independent statistician, recruit a collaborator who is blinded to group assignment, or withhold the blinding codes from the analysis group until analysis is completed. When it is not possible to employ a statistician, it is best for the PI to enlist a confederate who is responsible for developing the coding scheme, recording and entering data, and withholding the coding key from the PI until the analysis is complete (Polit, 2011).
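One way to picture the coding scheme and withheld key is sketched below. This is an assumption-laden illustration, not a procedure taken from Polit (2011) or from the authors' laboratory: the function `mask_group_labels`, the participant identifiers, and the group names are all hypothetical. The confederate would run this step, pass only the coded data set to the analyst, and keep the key until the analysis is finished.

```python
import random


def mask_group_labels(assignments, seed=None):
    """Replace real group names with neutral codes.

    assignments: dict mapping participant ID -> real group name.
    Returns (coded, key): `coded` maps participant ID -> neutral code
    and is safe to share with the analyst; `key` maps neutral code ->
    real group name and is withheld until analysis is complete.
    """
    rng = random.Random(seed)
    groups = sorted(set(assignments.values()))
    if len(groups) > 26:
        raise ValueError("this sketch supports at most 26 groups")
    codes = [f"Group {chr(ord('A') + i)}" for i in range(len(groups))]
    # Shuffle so the code letter carries no hint about the real group.
    rng.shuffle(codes)
    group_to_code = dict(zip(groups, codes))
    coded = {pid: group_to_code[group] for pid, group in assignments.items()}
    key = {code: group for group, code in group_to_code.items()}
    return coded, key


# Hypothetical assignments held by the confederate, not the analyst.
assignments = {
    "P01": "experimental",
    "P02": "active control",
    "P03": "experimental",
    "P04": "active control",
}
coded_for_analyst, withheld_key = mask_group_labels(assignments)
print(coded_for_analyst)  # contains only "Group A" / "Group B" labels
```

Once the analysis is complete, the confederate releases `withheld_key` so that results reported for each neutral code can be matched to the real conditions.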

Conclusion

As EBP continues to be emphasized, occupational therapists must become adept in the deployment and consumption of information from clinical trials. This level of proficiency is important given that evidence is increasingly a prerequisite for reimbursement of services. As a first step, this article presents potential barriers and alternative strategies associated with three of the most vexing aspects of conducting clinical trials: recruitment and retention of participants and blinding. Investigators should keep the following points in mind:

- Development and implementation of the recruiting plan start before the study begins.
- Successful recruiting plans incorporate both active and passive recruiting strategies.
- Participant retention can be maximized through the use of designs with active controls, technology-supported visit reminders, and up-front scheduling.
- Participants, health care providers, and data collectors should be blinded when possible.
- Concealing design elements from key players helps minimize threats of bias.

Use of these strategies will help increase the success of clinical trials research in the health and rehabilitation sciences.

Contributor Information

Stephen J. Page, Stephen J. Page, PhD, MS, MOT, OTR/L, FAHA, is Associate Professor and Director, Neuromotor Recovery and Rehabilitation Laboratory (the “Rehablab”®), Division of Occupational Therapy, Ohio State University Medical Center, 453 West Tenth Avenue, Suite 416, Columbus, OH 43210; Stephen.Page@osumc.edu

Andrew C. Persch, MS, OTR/L, is Graduate Assistant, Division of Occupational Therapy, School of Health and Rehabilitation Sciences, and Graduate Student, Doctor of Philosophy in Health and Rehabilitation Sciences, Ohio State University Medical Center, Columbus

References

- American Occupational Therapy Association. AOTA’s Centennial Vision and executive summary. American Journal of Occupational Therapy. 2007;61:613–614. doi: 10.5014/ajot.61.6.613.

- Bedlack R. S., Cudkowicz M. E. Clinical trials in progressive neurological diseases: Recruitment, enrollment, retention and compliance. Frontiers of Neurology and Neuroscience. 2009;25:144–151. doi: 10.1159/000209493.

- Britton A., Murray D., Bulstrode C., McPherson K., Denham R. Loss to follow-up: Does it matter? Lancet. 1995;345:1511–1512. doi: 10.1016/S0140-6736(95)91071-9.

- Cooley M. E., Sarna L., Brown J. K., Williams R. D., Chernecky C., Padilla G., Danao L. L. Challenges of recruitment and retention in multisite clinical research. Cancer Nursing. 2003;26:376–384; quiz 385–386. doi: 10.1097/00002820-200310000-00006.

- Corrigan P. W., Salzer M. S. The conflict between random assignment and treatment preference: Implications for internal validity. Evaluation and Program Planning. 2003;26:109–121. doi: 10.1016/S0149-7189(03)00014-4.

- Driscoll K. A., Killian M., Johnson S. B., Silverstein J. H., Deeb L. C. Predictors of study completion and withdrawal in a randomized clinical trial of a pediatric diabetes adherence intervention. Contemporary Clinical Trials. 2009;30:212–220. doi: 10.1016/j.cct.2009.01.008.

- Friedberg J. P., Lipsitz S. R., Natarajan S. Challenges and recommendations for blinding in behavioral interventions illustrated using a case study of a behavioral intervention to lower blood pressure. Patient Education and Counseling. 2010;78:5–11. doi: 10.1016/j.pec.2009.04.009.

- Friedman L. M., Furberg C., DeMets D. L. Fundamentals of clinical trials. New York: Springer; 1998.

- Green L. A., Gorenflo D. W., Wyszewianski L.; Michigan Consortium for Family Practice Research. Validating an instrument for selecting interventions to change physician practice patterns: A Michigan Consortium for Family Practice Research study. Journal of Family Practice. 2002;51:938–942.

- Iber F. L., Riley W. A., Murray P. J. Conducting clinical trials. New York: Plenum Medical; 1987.

- Johnson M. O., Remien R. H. Adherence to research protocols in a clinical context: Challenges and recommendations from behavioral intervention trials. American Journal of Psychotherapy. 2003;57:348–360. doi: 10.1176/appi.psychotherapy.2003.57.3.348.

- Loscalzo J. Pilot trials in clinical research: Of what value are they? Circulation. 2009;119:1694–1696. doi: 10.1161/CIRCULATIONAHA.109.861625.

- Lowe C. J., Wilson M. S., Sackley C. M., Barker K. L. Blind outcome assessment: The development and use of procedures to maintain and describe blinding in a pragmatic physiotherapy rehabilitation trial. Clinical Rehabilitation. 2011;25:264–274. doi: 10.1177/0269215510380824.

- Marcellus L. Are we missing anything? Pursuing research on attrition. Canadian Journal of Nursing Research. 2004;36:82–98.

- Meinert C. L., Tonascia S. Clinical trials: Design, conduct, and analysis. New York: Oxford University Press; 1986.

- Moher D., Hopewell S., Schulz K. F., Montori V., Gøtzsche P. C., Devereux P. J., Altman D. G. CONSORT 2010 explanation and elaboration: Updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869. doi: 10.1136/bmj.c869.

- Orrell-Valente J. K., Pinderhughes E. E., Valente E., Jr, Laird R. D. If it’s offered, will they come? Influences on parents’ participation in a community-based conduct problems prevention program. American Journal of Community Psychology. 1999;27:753–783. doi: 10.1023/A:1022258525075.

- Penson D. F., Wei J. Clinical research methods for surgeons. Totowa, NJ: Humana Press; 2006.

- Persch A. C., Page S. J. Protocol development, treatment fidelity, adherence to treatment, and quality control. American Journal of Occupational Therapy. 2013;67:146–153. doi: 10.5014/ajot.2013.006213.

- Pocock S. J. Clinical trials: A practical approach. Chichester, England: Wiley; 1983.

- Polit D. F. Blinding during the analysis of research data. International Journal of Nursing Studies. 2011;48:636–641. doi: 10.1016/j.ijnurstu.2011.02.010.

- Portney L. G., Watkins M. P. Foundations of clinical research: Applications to practice. Upper Saddle River, NJ: Prentice Hall; 2000.

- Sackett D. L., Rosenberg W. M. C., Gray J. A. M., Haynes R. B., Richardson W. S. Evidence based medicine: What it is and what it isn’t. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.

- Schulz K. F., Altman D. G., Moher D.; CONSORT Group. CONSORT 2010 statement: Updated guidelines for reporting parallel group randomised trials. BMC Medicine. 2010;8:18–26. doi: 10.1186/1741-7015-8-18.