Abstract

Over the past decade, driven by advances in educational theory and pressures for efficiency in the clinical environment, there has been a shift in surgical education and training towards enhanced simulation training. Microsurgery is a technical skill with a steep competency learning curve on which the clinical outcome greatly depends. This paper investigates the evidence for educational and training interventions of traditional microsurgical skills courses in order to establish the best evidence practice in education and training and curriculum design. A systematic review of EMBASE, MEDLINE, and PubMed databases was performed to identify randomized controlled trials looking at educational and training interventions that objectively improved microsurgical skill acquisition, and these were critically appraised using the BestBETs group methodology. The database searches yielded 1,148, 1,460, and 2,277 citations respectively. These were then limited to randomized controlled trials, and abstract review reduced the number to 5 relevant randomized controlled trials. The best evidence supported a laboratory based low fidelity model microsurgical skills curriculum. There was strong evidence that technical skills acquired on low fidelity models transfer to improved performance on higher fidelity human cadaver models and that self directed practice leads to improved technical performance. Although there is a significant paucity of literature to support current microsurgical education and training practices, simulated training on low fidelity models in microsurgery is an effective intervention that leads to acquisition of transferable skills and improved technical performance. Further research to identify educational interventions associated with accelerated skill acquisition is required.

Keywords: Microsurgery, Clinical competence, Education, Curriculum

INTRODUCTION

Many surgical training programs around the world face the competing pressures of a reduction in clinical training hours (in the UK, as a consequence of the European Working Time Directive) and an overall reduction in program length in years. These can be mitigated in part by didactic education delivered electronically and at a distance to Generation Y trainees, and by simulated training outside the clinical environment [1]. The latter has the further advantage that early learning curve skill acquisition presents no risk to patients. The management of clinical risk is a further global priority that requires surgical training programs to provide objective measures of skill, of skill acquisition, and of skill maintenance or loss over time; in the UK, as elsewhere, revalidation of clinicians has begun in earnest. Generating such objective measures is likely to be more practical and reliable in a simulation environment. Goals and specific measurable objectives are key components of any curriculum [2], and the design of a standard microsurgery education and training curriculum requires an understanding of the existing evidence for education and training interventions in microsurgery. This paper investigates that evidence in traditional microsurgical skills courses, which constitute simulation courses, in order to establish best evidence practice in microsurgery simulation education, training and curriculum design.

METHODS

This review considered all journal articles and abstracts, especially randomised controlled clinical trials (RCTs), with participants including medical students, physicians in pre-surgical speciality training, surgeons in surgical training, and Consultants/Attending Surgeons. Education and training interventions in microsurgery were included. The primary outcomes considered were: skill acquisition assessed by validated global rating scores, skill retention and durability, and the difference between low fidelity and high fidelity models. Secondary outcomes included: transferability of skill to a more realistic setting, and patency of anastomosis. Detailed search strategies were developed for each database. These were based on the search strategy developed for OVID, but revised appropriately for each other database. The databases searched on the dates indicated were: EMBASE (OVID) (from 1974 to 2012 week 16), MEDLINE (OVID) (from 1946 to April week 2 2012), and PubMed. No hand-search was undertaken of any specific journals; however, the reference lists of potential clinical trials and the review authors' personal database of trial reports were examined to identify any additional studies or those not otherwise identified. We contacted experts in the field to request information on unpublished and ongoing trials. Although there was no language restriction on included studies, we did not find any relevant non-English papers.

Assessment of search results

Review authors independently assessed the abstracts of studies retrieved by the searches. Full copies of all relevant and potentially relevant studies (those that appeared to meet the inclusion criteria, or for which there was insufficient data in the title and abstract to make a clear decision) were obtained. Two authors assessed the full text papers independently and any disagreements on the eligibility of potentially included studies were resolved through discussion. After assessment by the review authors, any duplicate publications or remaining studies that did not match the inclusion criteria were excluded from further review (and the reasons for their exclusion were noted).

Assessment of methodological quality

The selected studies were graded independently, with every trial reporting education and training in microsurgery, especially the RCTs, assessed according to the criterion grading system described in the Cochrane Handbook for Systematic Reviews of Interventions 4.2.6 (updated September 2006) [3]. For the RCTs, the review authors compared the grading and discussed and resolved any inconsistencies in the interpretation of inclusion criteria and their significance to the selected studies. The following parameters of methodological quality were assessed and used to evaluate the risk of bias within the included studies.

Randomisation

This criterion was graded as adequate (A), unclear (B), or inadequate (C). Adequate (A) included any one of the following methods of randomisation: computer generated or table of random numbers, drawing of lots, coin-toss, shuffling cards or throw of a die. The review authors judged as inadequate (C) methods of randomisation utilising any of the following: case record number, date of birth, or alternate numbers.

Concealment of allocation

The review authors graded this criterion as adequate (A), unclear (B), or inadequate (C). Adequate (A) methods of allocation concealment included either central randomisation or sequentially numbered, sealed, opaque envelopes. The criterion was considered inadequate (C) if there was an open allocation sequence and the participants and trialists could foresee the upcoming assignment.

Blinding of participants and outcomes assessment

■ Blinding of the participants (yes/no/unclear)

■ Blinding of outcome assessment, e.g., scoring videos (yes/no/unclear)

Handling of withdrawals and losses

The review authors graded this criterion as yes (A), unclear (B) and no (C) according to whether there was a clear description given of the difference between the two groups of losses to follow-up (attrition bias). After assessment, the included studies were to be grouped accordingly.

1) Low risk of bias (plausible bias unlikely to seriously alter the results): if all criteria were met.

2) Moderate risk of bias (plausible bias that raised some doubt about the results): if all criteria were at least partly met.

3) High risk of bias (plausible bias that seriously weakened confidence in the results): if one or more criteria were not met as described in Cochrane Handbook for Systematic Reviews of Interventions 4.2.6 Section 6.7.
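The three-level grouping above can be sketched as a small decision rule. This is a minimal illustration only, not the review authors' tooling: the names `Grade` and `overall_risk_of_bias` are hypothetical, the yes/no/unclear blinding items are assumed to map onto the same A/B/C scale, and an "unclear" (B) grade is assumed to count as "partly met".

```python
from enum import Enum


class Grade(Enum):
    """Per-criterion grade, mirroring the A/B/C scheme described above."""
    MET = "A"        # adequate / yes
    UNCLEAR = "B"    # unclear; treated here as "partly met" (assumption)
    NOT_MET = "C"    # inadequate / no


def overall_risk_of_bias(grades):
    """Map a dict of criterion name -> Grade to 'low', 'moderate' or 'high'."""
    values = list(grades.values())
    if not values:
        raise ValueError("at least one graded criterion is required")
    if any(g is Grade.NOT_MET for g in values):
        return "high"      # one or more criteria not met
    if all(g is Grade.MET for g in values):
        return "low"       # all criteria met
    return "moderate"      # all criteria at least partly met


# Hypothetical grading of a single trial (illustrative values only).
example = {
    "randomisation": Grade.UNCLEAR,
    "allocation_concealment": Grade.UNCLEAR,
    "blinding": Grade.MET,
    "withdrawals_and_losses": Grade.MET,
}
print(overall_risk_of_bias(example))
```

Applied strictly, this rule places any trial with an unclear criterion in the moderate group; a reviewer's overall judgement may reasonably differ from such a mechanical reading.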

In view of the limited number of RCTs reported, pooling of results and meta-analysis of extracted data were not feasible, and therefore only data relevant to the primary and secondary outcomes and a descriptive summary of results are presented. A sensitivity analysis was conducted to assess the robustness of the review results by repeating the analysis with the following adjustments: exclusion of studies that were not randomized controlled trials and of published abstracts from conferences. The search results were identical, indicating that the search criteria were robust. Subgroup analyses were not possible.

RESULTS

The search strategy retrieved 1,148 citations by interrogating EMBASE with the MeSH words 'microsurgery' and 'education', 'microsurgery' and 'training', and 'microsurgery' and 'curriculum'. These were limited respectively to 9 RCTs and 7 abstracts, 12 RCTs and 4 abstracts, and 0 RCTs and 0 abstracts. The strategy retrieved 1,460 citations by interrogating MEDLINE using Multi-Field Search-"All Fields", meshing 'microsurgery' and 'education' and 'microsurgery' and 'training', with a total of 21 RCTs and 10 abstracts. The strategy retrieved 2,277 citations by interrogating PubMed in a similar way to EMBASE, with a total of 36 RCTs and 14 abstracts. Five RCTs met our inclusion criteria and were included in this review [4-8].

Grober et al. [6]-The Educational Impact of Bench Model Fidelity on the Acquisition of Technical Skill: The Use of Clinically Relevant Outcome Measures.

This double-blind, randomised controlled trial evaluated whether the acquisition of skill and clinically relevant outcomes are impacted by bench model fidelity. Fifty junior surgical residents were voluntarily recruited for the trial. Trainees with prior experience of >5 microsurgical cases as the primary surgeon (i.e., performing greater than 80% of the procedure) were excluded. The trainees participated in a one-day microsurgical training course. Initially all trainees received an instructional video demonstrating basic microsurgical skills followed by a baseline assessment pre-test drill. Trainees were randomized to one of three groups: those receiving training on high fidelity models (n=21), those receiving training on low fidelity models (n=19), and those receiving didactic training (n=10). High fidelity (HF) model training was performed by anastomosing the anaesthetised rat vas deferens. Low fidelity (LF) model training was performed by anastomosing silicone tubing, and the didactic group was identified as the control group.

Video recordings of assessments were scored by blinded experts using validated global rating scores. The final product was assessed in a blinded fashion for patency, suture precision and overall quality. The anastomosed vas deferens remained in the living rat and was re-evaluated 30 days post anastomosis and tested for: patency, the presence of any sperm granuloma (suggesting leak), and the presence of sperm on microscopy from the abdominal end of the anastomosis to assess functional patency.

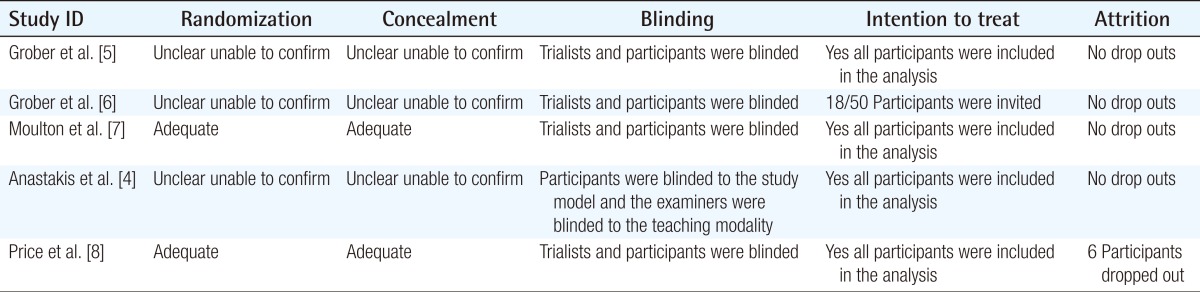

The method of randomization was not described, nor was the method of concealment from the expert investigators; randomisation was therefore graded as (B) unclear. Several questions remained unanswered: How were the candidates randomized? Was a power calculation performed? How were the candidates entering the trial blinded? Participants were blinded to the intervention, as were the experts assessing the video recordings. Skill assessment, one of the principal outcomes in this study, was conducted. There were no withdrawals or losses: all 50 participants enrolled in this clinical trial were accounted for, and this criterion was therefore graded as (A) yes. As most of the criteria were met, this study was assessed as at low risk of bias (plausible bias unlikely to seriously alter the results) (Table 1).

Table 1.

Quality of included studies

The primary outcomes included: global rating & checklist score, suture precision placement & quality, and anastomosis completion time. Blinded assessors assessed the outcomes using a validated global rating scoresheet. Differences between the pre- and post-test scores were significantly greater in those who received the hands-on model training compared to those who received didactic training alone (P=0.004). The pre- and post-test scores were not significantly different between the HF and LF groups. The post-test scores did not differ significantly among the three groups. Overall suture quality and precision did not differ among the three groups. Anastomosis completion times were significantly faster in those who received hands-on training.

The secondary outcomes included: anastomotic patency, granuloma at the anastomotic site, presence of sperm on microscopy, and trainee preference. Seventy-two percent of the rats survived and were re-explored. By group, 50% of the 'didactic' rats, 79% of the LF rats, and 76% of the HF rats survived. There was significantly higher delayed anastomotic patency among bench-model trainees (P=0.039). There was no significant difference in granuloma formation or the rates of sperm presence on microscopy between the 3 groups. Ninety percent of trainees preferred the HF model. There were no adverse effects reported.

Grober et al. [5]-Laboratory Based Training in urological microsurgery with bench model simulators: a randomized controlled trial evaluating the durability of technical skill.

This double-blind, randomised controlled study evaluated the durability and retention of skill acquisition with clinically relevant outcomes. Fifty junior surgical residents were voluntarily recruited, and randomized to either a high fidelity model, low fidelity model or didactic teaching, as in the previous study. Four months after focused teaching, 18 trainees were invited back (13 bench model trainees, and 5 didactic trainees), and re-assessed on a high fidelity, live animal model.

Blinded experts using validated global rating scores assessed video recordings. During the post-test assessment, all participants were blindly assessed on a live rat model of vas deferens anastomosis. This was then assessed for anastomotic patency.

The method of randomization was not described, nor was the method of concealment from the expert investigators; randomisation was therefore graded as (B) unclear. Several questions remained unanswered: How were the candidates re-selected for retention testing (the paper states that they "voluntarily returned", but this is unclear)? Was a power calculation performed? How were the candidates entering the initial trial blinded? How did the interim clinical opportunities impact on outcomes? Only the assessors were blinded for this study. Skill assessment, one of the principal outcomes in this study, was conducted. There were no withdrawals or losses: all 18 participants enrolled in this clinical trial were accounted for, and this criterion was therefore graded as (A) yes. This study was rated as moderate risk of bias (plausible bias that raised some doubt about the results) because of possible confounding factors: most participants were drawn from the bench model training group (13/18), and only 5/18 from the didactic training group (Table 1).

The primary outcomes included: global rating & checklist score, microsurgical drill: looking at dexterity, visuo-spatial awareness and skill, and a retention test. Blinded assessors assessed the outcomes using a validated global rating score-sheet. Patency testing was carried out on the live model using methylene blue dye injection.

Eight of the 18 subjects had been exposed to microsurgical clinical opportunities in the interim. There was no significant difference in the frequency of microsurgery practice between the two groups; however, there was a significant positive correlation between the number of interim practice opportunities and retention test/global rating scores (P=0.02). Global rating scores remained significantly higher in those who had initially received hands-on bench model training compared with those who received didactic training (P=0.02), which indicates that simulation training supersedes theoretical training. Anastomotic patency rates were also significantly higher in those who received bench model training.

Moulton et al. [7]-Teaching Surgical Skills: What kind of practice makes perfect?

This single-center, single-blinded, randomised controlled trial evaluated the effect of mass training vs. distributed training on microsurgical skill acquisition and the transferability of the acquired skills to life-like models. Thirty-eight postgraduate year one (PGY1), PGY2 and PGY3 surgical residents volunteered for the trial. These trainees participated in either a one-day (4-session) mass training microsurgical course or a 4-week distributed (one session/week) microsurgical training course.

Trainees were stratified according to their post-graduate year, and randomized to one of two experimental groups: mass training (n=19), and distributed training (n=19). The mass training group received 4 training sessions in one day, and the distributed group received the same 4 training sessions over 4 weeks. In the first session participants watched a video on the principles of microsurgery, and practiced suturing on a Penrose drain. In the second session, they practiced microvascular anastomosis on a 2 mm poly-vinyl chloride artery simulation model. In the third and fourth sessions participants practiced microvascular anastomoses using the arteries of a turkey thigh. Each group received an equal overall training time. The microsurgical drill test was used in the pre-test during the first training session. The post-test was also a microsurgical drill, carried out at the end of the fourth training session. The retention and transferability tests were carried out one month after the training was completed, and required the trainees to carry out a microsurgical drill test and an in-vivo infra-renal anastomosis on a live rat model respectively.

Blinded experts using previously validated global rating scores, checklists and end product evaluation methods assessed the pre-test, post-test and retention test (performed one month after the training). This was performed by two blinded experts assessing the video recordings independently. Computer based evaluations were also carried out for time to completion and motion analysis assessments. Clinically relevant outcome measures included the patency of the infra-renal anastomosis, narrowing of the anastomosis, bleeding and completion of the anastomosis.

The method of randomization was clearly described and considered adequate (A). Several questions remained unanswered: Was a power calculation performed? How did they account for sub-speciality experience? Participants were blinded to the intervention, as were the experts assessing the video recordings. Skill assessment, one of the principal outcomes in this study, was conducted. There were no withdrawals or losses: all 38 participants enrolled in this clinical trial were accounted for, and this criterion was therefore graded as (A) yes. As most of the criteria were met, this study was assessed as at low risk of bias (plausible bias unlikely to seriously alter the results) (Table 1).

The primary outcomes included: global rating & checklist score of pre-test, post-test and retention test, computer based evaluation of time to completion and motion efficiency, transferability to an in-vivo rat model of infra-renal anastomosis. Blinded independent experts assessed video recordings of the pre-test, post-test and retention test. There was no significant difference between the two groups at pre-testing, nor at immediate post-testing. Time to completion and the number of hand movements were not significantly different between the mass and distributed groups either, both at pre-test and post-test. Both groups showed significant improvement between the pre-test and post-test when using the global rating score, time to completion and motion analysis, but only the distributed group showed significant improvement when utilizing the validated checklist and end-product evaluation. The retention drill revealed the distributed group performed better in the computer based assessments but not expert-based outcomes. Nonetheless, the distributed group out-performed the mass training group in all expert-based outcome measures during the transfer, the clinically relevant outcome, but not the computer-based evaluations. Inter-rater reliability was also assessed using Cronbach's alpha and varied between 0.67 and 0.89 on all expert based outcome measures. There were no adverse effects to report.
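For readers unfamiliar with the statistic, Cronbach's alpha for k raters can be computed from the variance of each rater's scores and the variance of the summed scores. The sketch below is illustrative only: the function name `cronbach_alpha` and the scores are hypothetical, and it does not reproduce the analysis or data of the trial itself.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of raters' score lists.

    `ratings` holds one list per rater; every list gives that rater's
    scores for the same trainees in the same order.
    """
    k = len(ratings)
    if k < 2:
        raise ValueError("at least two raters are required")
    n = len(ratings[0])
    if n < 2 or any(len(r) != n for r in ratings):
        raise ValueError("each rater must score the same two or more trainees")
    # Sum of the sample variances of each rater's scores.
    sum_of_rater_variances = sum(variance(r) for r in ratings)
    # Sample variance of each trainee's total score across raters.
    totals = [sum(scores) for scores in zip(*ratings)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum_of_rater_variances / total_variance)


# Hypothetical global rating scores from two blinded raters for five trainees.
rater_1 = [1, 2, 3, 4, 5]
rater_2 = [2, 1, 4, 3, 5]
print(round(cronbach_alpha([rater_1, rater_2]), 2))
```

Values closer to 1 indicate that the raters rank trainees more consistently.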

Anastakis et al. [4]-Assessment of technical skills transfer from the bench training model to the human model.

This double-blind, randomised controlled trial evaluated whether technical skills learned on bench models are transferable to a human cadaver model. Twenty-three surgical PGY1 residents were recruited for this study and randomized. These trainees participated in a 3-day microsurgical training course. They were assigned to one of three groups: text only, bench model training, or cadaver model training, with 2 of the 6 procedures taught by each of the three modalities, so that each resident served as their own control. Each course was a four-hour session, apart from the didactic training. One week following the intervention, the delegates were invited to carry out the procedure on a human cadaver model in an operating room environment to assess transferability. Two examiners, neither of whom was a previous instructor, were asked to independently evaluate the candidates, and were blinded to the instructional modality used. The candidates were assessed using validated checklists & global rating scores.

The method of randomization was not described, nor was the method of concealment from the expert investigators; randomisation was therefore graded as (B) unclear. Several questions remained unanswered: What was the inter-rater reliability? Was a power calculation performed? How were the candidates entering the trial blinded? How was experience accounted for when randomising the subjects? Participants were blinded to the study model and intervention, and the experts were blinded to the teaching delivery modality as they were assessing them carrying out the live cadaveric procedures. There were no withdrawals or losses: all 23 participants enrolled in this clinical trial were accounted for, and this criterion was therefore graded as (A) yes.

The study was graded as moderate to high risk of bias. Initially, the analysis did not show any significant differences between the groups for either checklist scores or global rating scores. The team subsequently re-analysed the data to control for the wide variation in subject skill and procedure difficulty, and it was only after repeated-measures analysis of variance that a significant effect of training modality on transferability of skill was found (Table 1).

The primary outcomes included: global rating score, checklist score, transferability. Blinded expert examiners evaluated the delegates' performance in the pre- and post-test microsurgical drill, as well as the transferability study where the candidates had to carry out the procedures on live cadaveric models. After controlling for variance and skill difficulty, the cadaveric and bench model forms of training had a marginally significant impact, only 7% to 10% increase, on the residents' ability to perform each procedure on the human cadaver, when compared to the manual reading group. There were no adverse effects to report.

Price et al. [8]-A randomized evaluation of simulation training on performance of vascular anastomosis on a high fidelity in-vivo model: The role of deliberate practice.

This single-center, single-blinded, randomised controlled trial evaluated the effect of independent and deliberate simulator practice, during non-clinical time, on the performance of an end-to-end anastomosis in an in-vivo model. Thirty-nine PGY1 & PGY2 surgical trainees were stratified and randomized to an expert guided tutorial on a procedural trainer, or to an expert guided tutorial on a procedural trainer combined with self directed practice on the same procedural trainer. The distributions of PGY1 & PGY2 trainees and of subspecialties were similar between the two groups. Trainees were randomized to one of two groups: those receiving training by an expert guided tutorial on a procedural trainer (n=18) and those receiving training by an expert guided tutorial on a procedural trainer followed by 10 sessions of independent self directed training (n=21). The pre-test was taken at the end of the expert-guided tutorial.

Blinded experts used validated Objective Structured Assessment of Technical Skill (OSATS) scores. Initially, the candidates were given an expert guided tutorial and trained on performing end-to-end anastomoses. The 4th anastomosis carried out was considered the pre-test sample and scored. It is unclear if this was done blind. Two weeks after the initial tutorial, the candidates were invited to perform an in-vivo carotid anastomosis on a porcine model, which was blindly assessed by 2 independent expert cardiac surgeons.

The method of randomization was clearly described and considered adequate (A). One question remained unanswered: was a power calculation performed? Participants were blinded to the intervention, as were the experts assessing the video recordings. Skill assessment, one of the principal outcomes in this study, was conducted. Three candidates from each study group were lost to follow-up.

As most of the criteria were met, this study was assessed as at low risk of bias (plausible bias unlikely to seriously alter the results) (Table 1).

The primary outcome was the OSATS score. Blinded independent experts assessed the anastomoses in the in-vivo porcine carotid artery model using OSATS scores. The animal models were used to create a high fidelity simulation model. The group that undertook independent self-directed training after the expert tutorial achieved significantly higher OSATS scores. This was also the case when the PGY1 subgroup and PGY2 subgroup were considered independently. When the OSATS domains were considered independently, a statistically significant improvement was seen with the independent practice group in 4 out of the 7 domains. The subgroup analysis supports the robustness of the overall findings.

The secondary outcomes included: time to completion, and end-product evaluation. End product scores were significantly higher, and time to completion was significantly shortened, in the trainees randomized to self-directed practice. Inter-rater reliability was also assessed, and was high between the expert observers (intra-class correlation coefficient=0.8). There were no adverse effects to report.

DISCUSSION

It remains difficult in surgical practice and training to follow an evidence-based path, not least because much of the evidence is weak, a result primarily of the challenge of generating it. The simulated training environment, however, has advantages over the clinical environment [9]; the articles reviewed here are an expression of that, and the review process shows the relevance of the BestBETs approach under such circumstances, albeit with only a few randomized controlled trials in the subcategory of microsurgery. Nonetheless, reviews on simulation training in the field of surgery have been published from which parallels to the field of microsurgery can be drawn. As Reznick [10] alluded to, the teaching and assessment of skill acquisition is the least standardized component of surgical education, although ironically one of the most important, which is why high quality, evidence based systematic reviews are essential.

A systematic review by Sutherland et al. [11] looked at 30 randomised controlled trials, all of methods of delivering surgical education, such as computer simulation, video simulation, and physical models, against standard current teaching. They concluded that computer and video simulation did not significantly enhance training but that model and cadaveric training showed promise. The confounding factors and disparate interventions made it difficult to generate more robust conclusions; nonetheless, parallels applicable to microsurgery can be drawn, from which further studies can be carried out.

It would appear from the best available evidence that simulated microsurgery training on low fidelity models can be as effective as on high fidelity models. This has some particular relevance in the current austere climate in many parts of the world, because high fidelity models (i.e., in vivo models, almost always the rat) are expensive and increasingly raise regulatory issues [12]. In the UK and elsewhere, the mainstay of microsurgical simulated training has historically been exposure to an in vivo rat microsurgery course, but generally this occurs at far too early a stage in training, when the bridge with clinical hands-on exposure to relevant cases cannot be made, and without repetition. Neither would current regulations make any animal simulation appropriate for the purposes of establishing skill maintenance or loss within more senior surgeons' revalidation. A question that does arise is how far a trainee can progress along a reasonable learning curve before a higher fidelity simulation model is indeed required. That requirement is likely to be in later training years, and parallel to increasing clinical exposure. There are further questions around the potential for increased fidelity of ex vivo microsurgery simulation models with advances in materials science and the whole simulated training world, which have been quite dramatic in recent years [13].

It appears also that practice is key to microsurgery skill maintenance, at least at a trainee level where a laboratory based surgical skills curriculum can significantly improve skill retention. This will come as no great surprise, but it remains to be established in a similar way that this applies to more senior surgeons. If, as is likely, the same applies for established surgeons, some of whom may be presented with microsurgery cases (or, in context, microvascular anastomosis cases) infrequently and sporadically, then skill maintenance might most cost-effectively and ethically be provided by exposure to regular ex vivo simulated updates. What remains unclear is: How often? How intensive? How objectively monitored? And, of course, with what consequence if skill has been lost over time? There are likely to be real financial efficiencies in maintaining such skills in established surgeons, compared with training and employing less experienced surgeons to take their places, although there must be a threshold, yet to be established. Unfortunately, as stated by Champion and Gallagher, "bad science in the field of medical simulation has become all too common" [14], and therefore more rigorous study designs are required, that is, better designed randomised controlled trials that are adequately powered and without confounding factors.

Again it is interesting, no great surprise, yet important to have established with some evidential power that microsurgical skills acquired on low fidelity simulated training models do appear to translate or transfer to an improved performance in higher fidelity models. However, the link has yet to be made clearly within the microsurgery simulated training world of skill transfer from the ex vivo to in vivo environments, and this is an overwhelming priority that will focus any developments in the area. In 2008, Sturm et al. [15] carried out a systematic review assessing whether skills acquired in simulation training were transferable to the operating room in the field of general surgery. Fundamentally, there has been a long-standing assumption that skills acquired from simulation settings are transferable to the operating room, to reduce patient-based training time, improve operating theatre efficacy and ultimately improve patient safety, hence the development of simulation training. This review concluded that on the whole simulation training does transfer to the operative setting and is a safe and effective means for adjunct surgical education, particularly in novice trainees, as it helps eliminate part of the steep learning curve [16-18] and improves visuo-spatial awareness [15]. The difficulty, though, is that transference cannot solely be attributed to simulator models, as other factors must also play a role; in other words, acquisition of technical skill is only one aspect of surgical training [15]. Again, parallels can be drawn and used in microsurgery simulation based research. These developments will rely in the first instance on establishing and validating practical, objective measures of skill and its acquisition [19-21].

CONCLUSIONS

Evidence for educational and training interventions in the field of microsurgical skills acquisition is still limited, and the conclusions possibly naive. Nonetheless, evidence extracted from this and other systematic reviews in allied specialties shows that, in principle, a competency-based microsurgery training curriculum is possible, given the high level evidence of effective educational interventions reviewed. We can cautiously conclude that simulated microsurgery training on low fidelity models can be as effective as on high fidelity models, that skills acquired on low fidelity simulated training models do appear to transfer to improved performance on higher fidelity models, that practice is key to microsurgery skill maintenance, and that a laboratory based surgical skills curriculum can significantly improve skill retention. Future ideas and strategies to standardise and advance microsurgery training, especially in the era of simulation, should be validated and proven effective in order to achieve enhanced, evidence based, patient-safe training. Ultimately, once the construct and content of a standardized microsurgical training program can be identified and validated, the real question will be: will simulation training improve patient outcomes?

Footnotes

This article was presented at the Inaugural Meeting of the International Microsurgery Simulation Society on Jun 30, 2012 in London, UK.

No potential conflict of interest relevant to this article was reported.

References

- 1. Tsuda S, Scott D, Doyle J, et al. Surgical skills training and simulation. Curr Probl Surg. 2009;46:271–370. doi: 10.1067/j.cpsurg.2008.12.003.

- 2. Kern DE, Thomas PA, Hughes . Curriculum development for medical education: a six-step approach. 2nd ed. Baltimore: The Johns Hopkins University Press; 2009.

- 3. Higgins JP, Green S. Cochrane Handbook for Systematic Reviews of Interventions 4.2.6 [Internet] Clayton: Australia; 2006. [cited 2013 Apr 16]. Available from: http://www.mv.helsinki.fi/home/hemila/karlowski/handbook_4_2_6_Karlowski.pdf.

- 4. Anastakis DJ, Regehr G, Reznick RK, et al. Assessment of technical skills transfer from the bench training model to the human model. Am J Surg. 1999;177:167–170. doi: 10.1016/s0002-9610(98)00327-4.

- 5. Grober ED, Hamstra SJ, Wanzel KR, et al. Laboratory based training in urological microsurgery with bench model simulators: a randomized controlled trial evaluating the durability of technical skill. J Urol. 2004;172:378–381. doi: 10.1097/01.ju.0000123824.74075.9c.

- 6. Grober ED, Hamstra SJ, Wanzel KR, et al. The educational impact of bench model fidelity on the acquisition of technical skill: the use of clinically relevant outcome measures. Ann Surg. 2004;240:374–381. doi: 10.1097/01.sla.0000133346.07434.30.

- 7. Moulton CA, Dubrowski A, Macrae H, et al. Teaching surgical skills: what kind of practice makes perfect?: a randomized, controlled trial. Ann Surg. 2006;244:400–409. doi: 10.1097/01.sla.0000234808.85789.6a.

- 8. Price J, Naik V, Boodhwani M, et al. A randomized evaluation of simulation training on performance of vascular anastomosis on a high-fidelity in vivo model: the role of deliberate practice. J Thorac Cardiovasc Surg. 2011;142:496–503. doi: 10.1016/j.jtcvs.2011.05.015.

- 9. Grunwald T, Krummel T, Sherman R. Advanced technologies in plastic surgery: how new innovations can improve our training and practice. Plast Reconstr Surg. 2004;114:1556–1567. doi: 10.1097/01.prs.0000138242.60324.1d.

- 10. Reznick RK. Teaching and testing technical skills. Am J Surg. 1993;165:358–361. doi: 10.1016/s0002-9610(05)80843-8.

- 11. Sutherland LM, Middleton PF, Anthony A, et al. Surgical simulation: a systematic review. Ann Surg. 2006;243:291–300. doi: 10.1097/01.sla.0000200839.93965.26.

- 12. Reznick RK, MacRae H. Teaching surgical skills--changes in the wind. N Engl J Med. 2006;355:2664–2669. doi: 10.1056/NEJMra054785.

- 13. Ilie VG, Ilie VI, Dobreanu C, et al. Training of microsurgical skills on nonliving models. Microsurgery. 2008;28:571–577. doi: 10.1002/micr.20541.

- 14. Champion HR, Gallagher AG. Surgical simulation - a 'good idea whose time has come'. Br J Surg. 2003;90:767–768. doi: 10.1002/bjs.4187.

- 15. Sturm LP, Windsor JA, Cosman PH, et al. A systematic review of skills transfer after surgical simulation training. Ann Surg. 2008;248:166–179. doi: 10.1097/SLA.0b013e318176bf24.

- 16. Sedlack RE, Kolars JC. Computer simulator training enhances the competency of gastroenterology fellows at colonoscopy: results of a pilot study. Am J Gastroenterol. 2004;99:33–37. doi: 10.1111/j.1572-0241.2004.04007.x.

- 17. Ahlberg G, Hultcrantz R, Jaramillo E, et al. Virtual reality colonoscopy simulation: a compulsory practice for the future colonoscopist? Endoscopy. 2005;37:1198–1204. doi: 10.1055/s-2005-921049.

- 18. Cohen J, Cohen SA, Vora KC, et al. Multicenter, randomized, controlled trial of virtual-reality simulator training in acquisition of competency in colonoscopy. Gastrointest Endosc. 2006;64:361–368. doi: 10.1016/j.gie.2005.11.062.

- 19. Grober ED, Hamstra SJ, Wanzel KR, et al. Validation of novel and objective measures of microsurgical skill: Hand-motion analysis and stereoscopic visual acuity. Microsurgery. 2003;23:317–322. doi: 10.1002/micr.10152.

- 20. Kalu PU, Atkins J, Baker D, et al. How do we assess microsurgical skill? Microsurgery. 2005;25:25–29. doi: 10.1002/micr.20078.

- 21. Temple CL, Ross DC. A new, validated instrument to evaluate competency in microsurgery: the University of Western Ontario Microsurgical Skills Acquisition/Assessment instrument. Plast Reconstr Surg. 2011;127:215–222. doi: 10.1097/PRS.0b013e3181f95adb.