Abstract

A Bayesian method with a spatial constraint is proposed for vessel segmentation in retinal images. The proposed model assumes that the posterior probability of each pixel depends on the posterior probabilities of its neighboring pixels. An energy function is defined for the proposed model, and blood vessels are identified by minimizing this energy with a modified level set approach. The method is evaluated on real retinal images from the DRIVE and STARE databases. Its performance is analyzed and compared to that of other published methods in terms of accuracy, sensitivity, and specificity. The proposed approach is shown to be effective on both databases: the average accuracy, sensitivity, and specificity are 0.9529, 0.7513, and 0.9792 on the DRIVE database and 0.9476, 0.7147, and 0.9735 on the STARE database. The average accuracy is higher than that of the other vessel segmentation methods considered.

1. Introduction

Retinal vessel segmentation plays an important role in medical image processing. It supports the detection of eye diseases and other medical diagnoses. A large number of methods for retinal vessel segmentation have been proposed, and a survey of them is presented in [1]. According to the image processing methodologies and algorithms they use, these approaches can be categorized into pattern recognition techniques, matched filtering, vessel tracking, mathematical morphology, multiscale approaches, and model-based approaches.

Algorithms based on pattern recognition detect or classify retinal blood vessel features and the background. This group of algorithms can be divided into two categories: supervised and unsupervised approaches. In supervised methods, prior labeling information is used to decide whether a pixel belongs to a vessel or not. In [2], a ridge-based vessel segmentation methodology has been proposed. In [3], a method which combines the radial projection and the support vector machine classifier has been introduced for vessel segmentation. A supervised method based on a neural network has been presented in [4]. The Gaussian matched filter and the k-nearest neighbor algorithm have been used for vessel segmentation in [5]. In [6], a 2D Gabor wavelet has been applied for vessel segmentation. Unsupervised methods perform the vessel segmentation without any prior labeling knowledge. In [7], a spatially weighted fuzzy C-means clustering method has been used for vessel segmentation. In [8], a vessel detection system based on maximum likelihood estimation has been developed.

The matched filtering approaches [9–11] are also popular methods to detect and measure blood vessels. In [9], a method which combines local and region-based properties of retinal blood vessels has been described. In [10], a modified second-order Gaussian filter has been used for retinal vessel detection. In [11], the zero-mean Gaussian filter and the first-order derivative of the Gaussian have been applied to detect vessels.

In the vessel tracking framework [12–14], several seed pixels are chosen on the boundaries and the centerlines of vessels, and vessels are then traced from these seed pixels. In [13], the Bayesian method with the maximum a posteriori (MAP) probability criterion has been used to identify the vessel's boundary points. In [12, 14], a probabilistic tracking method has been used to detect the vessel edge points by using local grey level statistics and the continuity properties of vessels.

The mathematical morphology-based methods extract image components which are useful in the representation and description of region shapes such as features, boundaries, skeletons, and convex hulls. In [15], a unique combination of vessel centerline detection and morphological bit plane slicing has been introduced to extract the blood vessel tree from the retinal images. The fast discrete curvelet transform and multistructure mathematical morphology have been employed for vessel detection [16]. In [17], the combination of morphological filters and cross-curvature evaluation has been used to segment vessel-like patterns. A difference of offset Gaussian filter [18] has been utilized for retinal vasculature extraction. A general framework of locally adaptive thresholding for retinal vessel segmentation has been introduced in [19].

Multiscale approaches separate out information related to blood vessels of varying width at different scales. A scale space segmentation algorithm has been proposed in [20] and used to measure and quantify geometrical and topological properties of the retinal vascular tree. Two extensions of this scale space algorithm have been demonstrated in [21, 22]. A multiscale line tracking method for vasculature segmentation has been presented in [23].

Model-based approaches are popular techniques for image segmentation and have been used for retinal vessel segmentation. Active contour models, which are based on curve evolution, are commonly used for image segmentation because they perform well in practice. Recently, they have been used to detect boundaries of vessels in retinal images [24–28]. The classical snake in combination with blood vessel topological properties has been used to extract the vasculature from retinal images [29]. A methodology based on nonlinear projections has been proposed for vessel segmentation [30]. The level set method handles topological changes well [31] and has been successfully used for vessel segmentation of retinal images [32, 33]. Graph-based approaches are also popular for image segmentation and have been applied to vessel boundary detection [34].

The tracking methods [12–14] mentioned above are based on the Bayesian model. However, the main disadvantage of these approaches is that the pixels are assumed to be independent. Spatial dependence is very important to guarantee connectedness of the vessel structure. To take spatial dependence into account, Markov random field (MRF) models have been widely used for solving the image segmentation problem [35–37]. However, one of the drawbacks of the MRF-based methods is that their computational cost is quite high.

In this paper, we present a novel Bayesian method for vessel segmentation that takes spatial information into account. We show that maximizing the log-likelihood function is equivalent to minimizing an energy function, so the parameters of the model can be estimated via energy minimization. In order to detect the boundaries of the blood vessels, the modified level set approach is used to solve the energy minimization problem. The method was evaluated on two publicly available databases, the DRIVE database [38] and the STARE database [39]. The results of the proposed method are compared to those of other methods and show that our approach achieves a higher average accuracy than the compared techniques.

The remainder of this paper is organized as follows. In Sections 2 and 3, we describe the details of the proposed model and the modified level set algorithm. In Section 4, we show the experimental results. We conclude with a discussion in Section 5.

2. Proposed Model

2.1. Image Segmentation

Let 𝒳 = {x_i, i ∈ Ω} denote an observed image, where x_i is the observation at pixel i and Ω is the image domain. Let 𝒦 = {1, 2, …, K} denote a label set, where K is the total number of classes. Let 𝒴 = {y_i ∈ 𝒦, i ∈ Ω} be an image of labels. The aim of labeling is to assign a label y_i ∈ 𝒦 to each pixel i ∈ Ω, based on x_i. The goal of segmentation is to separate the image domain Ω into disjoint regions Ω_1, …, Ω_K such that the image is smooth inside each region Ω_k. Notice that, given a labeling 𝒴, the set Ω_k = {i ∈ Ω | y_i = k} for k ∈ 𝒦 is one of these regions. Conversely, given the segmentation Ω_k for k ∈ 𝒦, the image {y_i | y_i = k if i ∈ Ω_k, i ∈ Ω} is a labeling. There is therefore a one-to-one relationship between labelings and segmentations, and the image segmentation problem can be considered as a labeling problem.
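The correspondence between a labeling and a segmentation can be illustrated with a small sketch. The function names `labels_to_regions` and `regions_to_labels` and the toy label image are hypothetical and serve only as an illustration; they are not part of the proposed method.

```python
import numpy as np

def labels_to_regions(labels, num_classes):
    """Return one boolean mask per class k = 1..K (the regions Omega_k)."""
    return [labels == k for k in range(1, num_classes + 1)]

def regions_to_labels(regions):
    """Rebuild the label image from disjoint region masks."""
    labels = np.zeros(regions[0].shape, dtype=int)
    for k, mask in enumerate(regions, start=1):
        labels[mask] = k
    return labels

# Toy 2 x 3 label image with K = 2 classes (illustrative values only).
labels = np.array([[1, 1, 2],
                   [1, 2, 2]])
regions = labels_to_regions(labels, num_classes=2)
recovered = regions_to_labels(regions)
```

The masks are disjoint and cover the whole domain, and mapping them back recovers the original labeling, which is the one-to-one relationship used in the text.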

2.2. Bayesian Model with Spatial Constraint

In the Bayesian framework, inference is often carried out by maximizing the posterior distribution

| $P(\mathcal{Y} \mid \mathcal{X}) \propto P(\mathcal{X} \mid \mathcal{Y})\, P(\mathcal{Y})$ | (1) |

where P(𝒳 | 𝒴) is the likelihood function and P(𝒴) is the prior distribution.

When the pixels are considered independent of each other, the likelihood function can be written as

| $P(\mathcal{X} \mid \mathcal{Y}) = \prod_{i \in \Omega} P(x_i \mid y_i)$ | (2) |

However, this form ignores the spatial relationships between neighboring pixels. To improve the accuracy of the segmentation, these spatial dependencies should be taken into account.

In this paper, we use a modeling strategy for the spatial dependencies between the conditional probabilities. The conditional probability P(x_i | y_i) is defined as a mixture distribution over the conditional probabilities of the neighboring pixels j ∈ 𝒩_i, that is,

| (3) |

where λ_ij are fixed positive weights that satisfy ∑_j λ_ij = 1 for each i. The mixing weight λ_ij depends on the geometric closeness between pixels i and j. Thus, the above likelihood function can be expressed as

| (4) |

Let P(x_i | θ_k) be the parametric form of P(x_i | y_i = k). It is usually assumed to be a Gaussian distribution

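| $P(x_i \mid \theta_k) = \dfrac{1}{\sqrt{2\pi}\,\sigma_k} \exp\!\left(-\dfrac{(x_i - \mu_k)^2}{2\sigma_k^2}\right)$, with $\theta_k = (\mu_k, \sigma_k)$ | (5) |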
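As an illustration of the Gaussian class-conditional density, the following minimal sketch evaluates it for two classes. The function name `gaussian_likelihood` and the numerical values (class means 60 and 120, standard deviation 15, pixel intensity 70) are hypothetical and chosen only for the example.

```python
import numpy as np

def gaussian_likelihood(x, mu, sigma):
    """Gaussian density of intensity x for a class with mean mu and std sigma."""
    x = np.asarray(x, dtype=float)
    return np.exp(-(x - mu) ** 2 / (2.0 * sigma ** 2)) / (np.sqrt(2.0 * np.pi) * sigma)

# Hypothetical class parameters, for illustration only.
class_means = [60.0, 120.0]
sigma = 15.0
pixel = 70.0
likelihoods = [gaussian_likelihood(pixel, mu, sigma) for mu in class_means]
```

With a shared standard deviation, the class whose mean is closer to the observed intensity has the larger likelihood.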

The log-likelihood function of Bayesian model (4) is given by

| (6) |

where the mixing weights λ_ij are the fixed positive weights introduced above. They are determined by the geometric closeness between pixels i and j [40].

The geometric closeness h_ij is a Gaussian function of the magnitude of the relative position vector of pixel j from pixel i, ||u_i − u_j||. It decreases as the distance ||u_i − u_j|| increases:

| (7) |

where σ_g is a parameter that defines the desired structural locality between neighboring pixels, and u_i and u_j are the locations of pixels i and j, respectively. We use a 3 × 3 neighborhood window and suggest σ_g² = 10.

We define the mixing weight λ_ij as follows:

| $\lambda_{ij} = \dfrac{h_{ij}}{\sum_{j' \in \mathcal{N}_i} h_{ij'}}$ | (8) |
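A minimal sketch of how such weights could be computed for the 3 × 3 window is given below. It rests on two assumptions that are not confirmed by the text: the closeness takes the form h_ij = exp(−||u_i − u_j||² / (2σ_g²)), and the centre pixel is included in the neighborhood. The function name `mixing_weights` is hypothetical.

```python
import numpy as np

def mixing_weights(window=3, sigma_g_sq=10.0):
    """Normalised weights over a square window centred on pixel i."""
    r = window // 2
    dy, dx = np.mgrid[-r:r + 1, -r:r + 1]
    dist_sq = dx ** 2 + dy ** 2                # ||u_i - u_j||^2 for each offset
    h = np.exp(-dist_sq / (2.0 * sigma_g_sq))  # assumed Gaussian form of h_ij
    return h / h.sum()                         # weights sum to 1 for each i

weights = mixing_weights()
```

Under this assumed form and σ_g² = 10, the nine weights differ only slightly: a corner weight is about 90% of the centre weight, since exp(−2/20) ≈ 0.905.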

Note that the λ_ij are fixed constants, so any term that depends only on λ_ij can be dropped. Image segmentation can be performed by maximizing the log-likelihood function ℒ(μ, σ) in (6) with respect to the parameters μ and σ:

| (9) |

2.3. Energy Minimization

Since the logarithm is a monotonically increasing function, it is more convenient to consider the negative log-likelihood function as an energy function:

| (10) |

Thus, the image segmentation problem can be solved by minimizing the energy ℰ(μ, σ) in (10) with respect to the parameters μ and σ.
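To see where the squared-difference form of such an energy comes from, consider a single Gaussian class-conditional term with mean μ_k and standard deviation σ_k. The following worked step covers one pixel and one class only; it is not a reproduction of (10).

```latex
-\log P(x_i \mid \theta_k)
  = \frac{(x_i - \mu_k)^2}{2\sigma_k^2} + \log \sigma_k + \tfrac{1}{2}\log(2\pi)
```

When all classes share the same σ, the last two terms do not depend on μ_k, so minimizing with respect to the means only involves the squared differences (x_i − μ_k)².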

We assume that the variance in the proposed energy (10) takes a common value σ for all classes. Thus, the functional for the proposed energy (10) can be written as

| (11) |

We introduce the kernel function ρ(x_i, x_j) as a nonnegative window function

| (12) |

With the window function, the energy function (11) can be rewritten as

| (13) |

By exchanging the order of summation, we have

| (14) |

For convenience, we can rewrite the above energy functional ℱ(μ) in the following form:

| (15) |

where e_k(x_j) is the function defined by

| (16) |

We minimize the above proposed energy functional ℱ(μ) using the modified level set approach.

3. Algorithm

In this section, the energy (10) is converted to a level set formulation by representing the disjoint regions Ω_1, …, Ω_K with a number of level set functions, together with a regularization term on these level set functions.

We consider the two-phase level set formulation, in which the image domain Ω is segmented into two disjoint regions Ω_1 and Ω_2. In level set methods, a level set function is a function that takes positive and negative signs. We use a level set function ϕ to represent the partition of the domain Ω into the two disjoint regions Ω_1 and Ω_2 as

| (17) |

The regions Ω_1 and Ω_2 can be represented by their membership functions, which are defined through the Heaviside function H(ϕ) as

| (18) |

Thus, the energy (15) can be expressed as the following level set formulation:

| (19) |

where e_k(x_j) is defined in (16). The level set function ϕ and the parameters μ are the variables of the energy ℱ (19).

Let ℒ(ϕ) and ℛ(ϕ) denote the regularization terms of level set function ϕ. The energy term ℒ(ϕ) is defined by [41]

| (20) |

which computes the arc length of the zero level contour of ϕ and serves to smooth the contour [41]. The energy term ℛ(ϕ) is defined by [42]

| (21) |

where function ℛ is an energy density function, which is called a distance regularization term [42].

Therefore, combining these two energy terms with the energy ℱ (19), the total energy functional becomes

| (22) |

By minimizing the above energy, we obtain the result of image segmentation given by the level set function ϕ and the estimation of the parameters μ. The details of the algorithm for minimizing the energy (22) are given in the next section.

3.1. Modified Level Set Algorithm

The energy minimization is achieved by an iterative process: in each iteration, we minimize the energy ℱ(ϕ, μ) (22) with respect to each of its variables ϕ and μ, given the other one updated in the previous iteration. We give the solution to the energy minimization with respect to each variable as follows.

Keeping μ fixed and minimizing ℱ(ϕ, μ) (22) with respect to ϕ, we use the gradient descent method to solve the gradient flow equation as

| (23) |

where ∂ℱ/∂ϕ is the Gâteaux derivative [41] of the energy ℱ.

We compute the Gâteaux derivative ∂ℱ/∂ϕ and the corresponding gradient flow equation is

| (24) |

During the evolution of the level set function according to (24), the variables μ are updated by minimizing the energy ℱ(ϕ, μ) (22) with respect to μ.

Keeping ϕ fixed and minimizing ℱ(ϕ, μ) (22) with respect to μ, the variable μ can be expressed as

| (25) |

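Finally, the principal steps of the algorithm are as follows: initialize the level set function ϕ; update the parameters μ according to (25); evolve ϕ according to (24); and repeat the last two steps until convergence.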
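The alternating scheme described in this section can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the data term is assumed to be a locally weighted squared residual, the mean update is the one that follows from that assumed form, and the flow combines a length term with a distance regularization term in the style of [41, 42]. The names `length_weight` and `reg_weight` and their default values are hypothetical and are not meant to correspond to α and β; the uniform weights, the synthetic image, and the initial ϕ are illustrative only.

```python
import numpy as np

def smooth3x3(a, w):
    """Weighted sum over each pixel's 3x3 neighbourhood (edges replicated)."""
    rows, cols = a.shape
    p = np.pad(a, 1, mode="edge")
    out = np.zeros((rows, cols), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += w[dy, dx] * p[dy:dy + rows, dx:dx + cols]
    return out

def curvature(phi):
    """div(grad(phi) / |grad(phi)|), computed with central differences."""
    gy, gx = np.gradient(phi)
    norm = np.sqrt(gx ** 2 + gy ** 2) + 1e-10
    return np.gradient(gx / norm, axis=1) + np.gradient(gy / norm, axis=0)

def laplacian(phi):
    """Five-point Laplacian with replicated edges."""
    p = np.pad(phi, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * phi

def update_means(image, phi, w, eps=1.0):
    """Update the two class means with phi fixed (assumed closed form)."""
    h = 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(phi / eps))
    m1, m2 = smooth3x3(h, w), smooth3x3(1.0 - h, w)
    return np.sum(m1 * image) / np.sum(m1), np.sum(m2 * image) / np.sum(m2)

def evolve_step(image, phi, mu, w, dt=0.1,
                length_weight=0.001 * 255 ** 2, reg_weight=1.0, eps=1.0):
    """One explicit gradient-descent step on phi with the means fixed."""
    delta = (eps / np.pi) / (eps ** 2 + phi ** 2)
    e1 = smooth3x3((image - mu[0]) ** 2, w)  # assumed data term, class 1
    e2 = smooth3x3((image - mu[1]) ** 2, w)  # assumed data term, class 2
    k = curvature(phi)
    force = (-delta * (e1 - e2) + length_weight * delta * k
             + reg_weight * (laplacian(phi) - k))
    return phi + dt * force

def segment(image, phi, w, n_iter=20):
    """Alternate the mean update and the level set update."""
    for _ in range(n_iter):
        mu = update_means(image, phi, w)
        phi = evolve_step(image, phi, mu, w)
    return phi, mu

# Synthetic example: a bright vertical bar on a darker background.
w = np.full((3, 3), 1.0 / 9.0)      # uniform weights, for illustration
image = np.full((32, 32), 51.0)
image[:, 14:18] = 204.0
phi0 = -2.0 * np.ones((32, 32))
phi0[8:24, 8:24] = 2.0              # initial region: a centred square
mu0 = update_means(image, phi0, w)
phi1 = evolve_step(image, phi0, mu0, w)
phi, mu = segment(image, phi0, w)
```

In this sketch, the first step already raises ϕ at a pixel inside the bar and lowers it at a background pixel inside the initial square, because the bar intensity is closer to the first class mean than to the second.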

3.2. Numerical Approximation

In the numerical implementation, in order to compute the unknown function ϕ, we consider a slightly regularized version of the Heaviside function H, denoted here by H_ε, which is computed by [41]

| $H_\varepsilon(z) = \dfrac{1}{2}\left(1 + \dfrac{2}{\pi}\arctan\dfrac{z}{\varepsilon}\right)$ | (26) |

Accordingly, the Dirac delta function δ, which is the derivative of the Heaviside function, is replaced by the derivative of the approximate Heaviside function H_ε. The regularized Dirac delta function δ_ε is given by

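| $\delta_\varepsilon(z) = H_\varepsilon'(z) = \dfrac{1}{\pi}\,\dfrac{\varepsilon}{\varepsilon^2 + z^2}$ | (27) |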
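The regularized functions can be written in a few lines. The sketch below uses the arctangent form of [41]; the value ε = 1 is an assumption made for the example, since the text does not state which ε is used, and the function names are hypothetical.

```python
import numpy as np

def heaviside_eps(phi, eps=1.0):
    """Smooth approximation of the Heaviside function (arctangent form)."""
    return 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(phi / eps))

def dirac_eps(phi, eps=1.0):
    """Derivative of heaviside_eps with respect to phi."""
    return (eps / np.pi) / (eps ** 2 + phi ** 2)

phi = np.linspace(-5.0, 5.0, 11)
membership_1 = heaviside_eps(phi)        # soft membership of one region
membership_2 = 1.0 - heaviside_eps(phi)  # soft membership of the other
```

Because `dirac_eps` is nonzero everywhere, every pixel contributes to the update of ϕ, not only those near the zero level contour.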

Since the energy is nonconvex, the solution may be a local minimum. With the regularized Heaviside function H_ε and the regularized Dirac delta function δ_ε, the algorithm tends to compute a global minimizer. Thus, the algorithm is less sensitive to the position of the initial curve.

4. Experiments

In this section, we evaluate the proposed method on retinal images and compare it with other methods. The retinal images are obtained from two publicly available databases, the DRIVE database [38] and the STARE database [39]. In the experiments, we choose the parameters as follows: α = 1.0 and the time step Δt = 0.1. After experiments on a small number of example images, we found that β = 0.001 × 255² gives very good performance. The same parameter values are used in all the following experiments.

The images of the DRIVE database have 565 × 584 pixels and 8 bits per color channel. The database includes binary images with the results of manual segmentation, which have been used as ground truth to evaluate the performance of the vessel segmentation methods. The retinal images of the STARE database are digitized to 700 × 605 pixels, with 8 bits per RGB channel. The STARE database contains 20 images for blood vessel segmentation: 10 normal images and 10 abnormal images. Binary images with manual segmentations are also available for each image of this database.

The developed method is evaluated on the DRIVE and STARE databases, and the experimental results are compared to those obtained using other vessel segmentation methods. To facilitate the comparison with other retinal vessel segmentation methods, the segmentation accuracy has been selected as a performance measure. The segmentation accuracy is defined as the ratio of the number of correctly classified pixels to the total number of pixels in the field of view (FOV). It takes values in the range [0, 1], with values closer to 1 indicating a better result. Other important measures are sensitivity and specificity. In this paper, the sensitivity is the fraction of vessel pixels that are correctly classified as vessel pixels, and the specificity is the fraction of nonvessel pixels that are correctly classified as nonvessel pixels. The ground truth for evaluating the performance measures is the manual segmentation provided together with each database image.
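The three measures can be computed from a binary segmentation, a binary ground truth, and a FOV mask as in the following sketch. It uses the standard definitions, sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP), where vessel pixels are the positive class. The function name `vessel_metrics` and the toy arrays are hypothetical.

```python
import numpy as np

def vessel_metrics(pred, truth, fov):
    """Accuracy, sensitivity and specificity restricted to the FOV mask."""
    fov = np.asarray(fov, dtype=bool)
    p = np.asarray(pred, dtype=bool)[fov]
    t = np.asarray(truth, dtype=bool)[fov]
    tp = np.sum(p & t)     # vessel pixels labelled as vessel
    tn = np.sum(~p & ~t)   # nonvessel pixels labelled as nonvessel
    fp = np.sum(p & ~t)    # nonvessel pixels labelled as vessel
    fn = np.sum(~p & t)    # vessel pixels labelled as nonvessel
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Toy example: one pixel lies outside the field of view.
truth = np.array([[1, 1, 0, 0],
                  [0, 0, 0, 0]])
pred = np.array([[1, 0, 0, 0],
                 [1, 0, 0, 0]])
fov = np.ones((2, 4), dtype=bool)
fov[1, 3] = False
metrics = vessel_metrics(pred, truth, fov)
```

The sketch does not guard against a ground truth without vessel pixels or without nonvessel pixels inside the FOV, in which case the corresponding ratio is undefined.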

Most pixels are easy to classify with the previous methods; however, some pixels, such as those on the boundary of a vessel, those in small vessels, and those in vessels near pathology, are difficult to classify. The proposed method provides a novel way to account for spatial dependence between image pixels. Thus, it can make the segmented results less sensitive to noise and guarantee connectedness of the vessel structure.

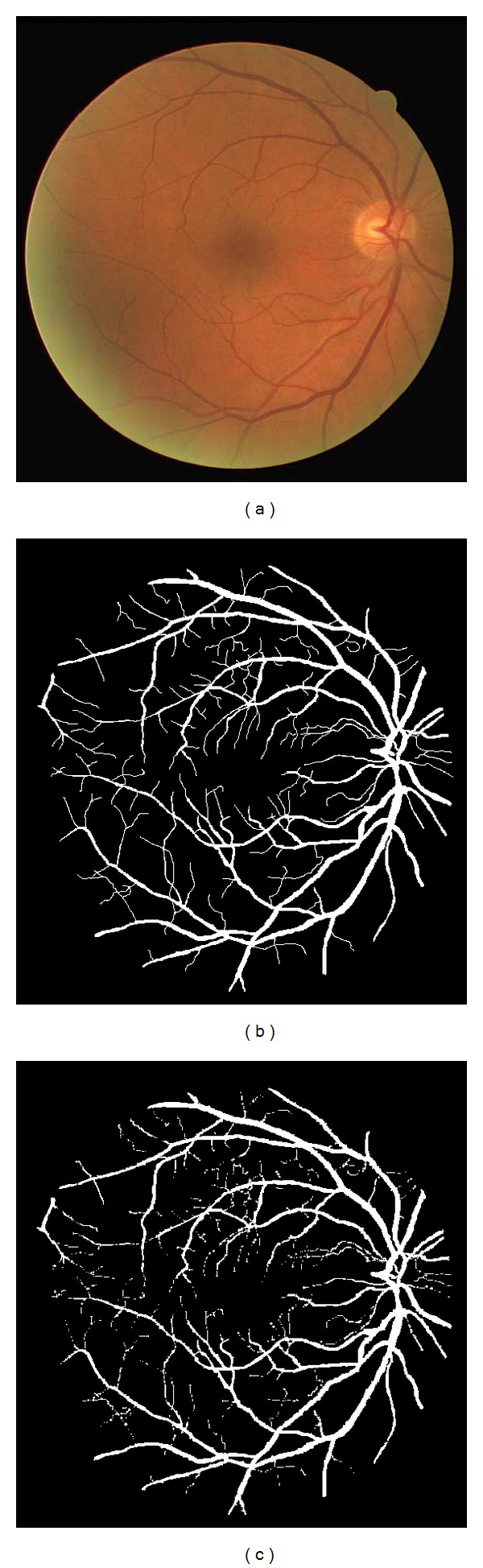

In the first experiment, the proposed method is applied to retinal vessel images of the DRIVE database. Figure 1(a) shows the original retinal vessel images. The ground truth of the original retinal images is presented in Figure 1(b). The segmentation results obtained by using the proposed method are illustrated in Figure 1(c). It can be observed that the proposed method obtains good results.

Figure 1.

Experiment on two retinal images of DRIVE database. (a) Original retinal images. (b) Ground truth. (c) Segmentation results obtained by the proposed method.

In order to test the accuracy and determine the efficiency of the proposed method, we run an experiment on another retinal vessel image of the DRIVE database and compare the result with those obtained by other methods. Figure 2(a) shows a retinal image of the DRIVE database. The image in Figure 2(b) is the ground truth. Figures 2(c)–2(f) present the segmentation results obtained by Niemeijer et al.'s method [5], Staal et al.'s method [2], Mendonça and Campilho's (green intensity) method [18], and the proposed method, respectively. On visual inspection, the proposed method produces a very good segmentation result.

Figure 2.

Experiment on retinal image of DRIVE database. (a) Original retinal image. (b) Ground truth. (c)–(f) Segmentation result obtained by Niemeijer et al.'s method [5], Staal et al.'s method [2], Mendonça and Campilho (green intensity) method [18], and the proposed method, respectively.

To facilitate the comparison of our results to those presented by other authors in their original papers, the results have been calculated for the images of the DRIVE database. The results of the proposed method are shown in Table 1, together with those of other vessel segmentation methods as reported in their published papers. The performance measures of the proposed method in Table 1 are the average values over all the images of the DRIVE database. The proposed method achieves the highest average accuracy and the highest sensitivity among the listed methods, while its specificity (0.9792) is slightly below those of Marín et al. [4] (0.9801) and Miri and Mahloojifar [16] (0.9795).

Table 1.

Performance of vessel segmentation methods (DRIVE images).

| Method | Average accuracy | Sensitivity | Specificity |
|---|---|---|---|
| Staal et al. [2] | 0.9442 | 0.7194 | 0.9773 |
| You et al. [3] | 0.9434 | 0.7410 | 0.9751 |
| Marín et al. [4] | 0.9452 | 0.7067 | 0.9801 |
| Niemeijer et al. [5] | 0.9417 | 0.6898 | 0.9696 |
| Zhang et al. [11] | 0.9382 | 0.7120 | 0.9724 |
| Yin et al. [12] | 0.9267 | 0.6252 | 0.9710 |
| Fraz et al. [15] | 0.9430 | 0.7152 | 0.9769 |
| Miri and Mahloojifar [16] | 0.9458 | 0.7352 | 0.9795 |
| Mendonça and Campilho [18] | 0.9452 | 0.7344 | 0.9764 |
| Martinez-Perez et al. [21] | 0.9344 | 0.7246 | 0.9655 |
| Martinez-Perez et al. [22] | 0.9220 | 0.6602 | 0.9612 |
| Vlachos and Dermatas [23] | 0.9291 | 0.7472 | 0.9550 |
| Espona et al. [29] | 0.9352 | 0.7436 | 0.9615 |
| Proposed Method | 0.9529 | 0.7513 | 0.9792 |

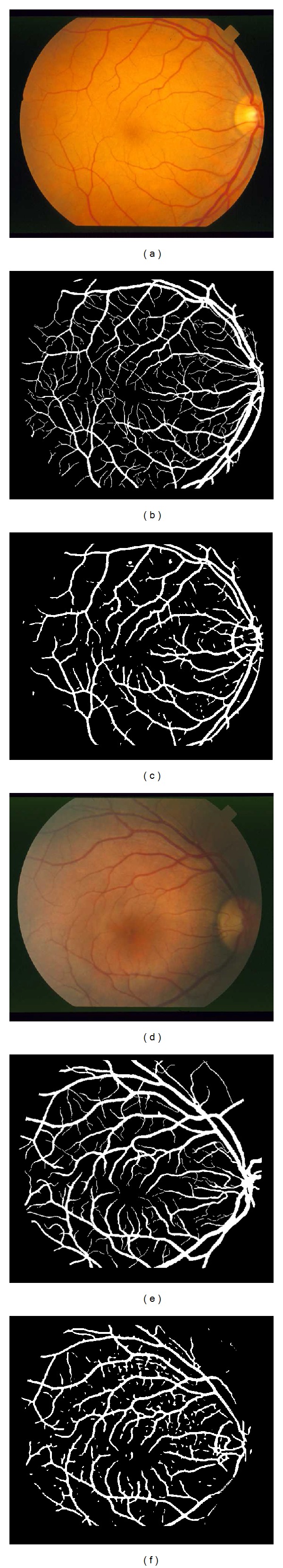

To further test the accuracy and determine the efficiency of the proposed method, it has also been tested on the images of the STARE database. Figures 3(a) and 3(d) show two retinal images of the STARE database. Figures 3(b) and 3(e) present the corresponding ground truth. The results obtained by using the proposed method are shown in Figures 3(c) and 3(f), respectively. On visual inspection, the proposed method obtains very good results.

Figure 3.

Experiment on retinal images of STARE database. (a), (d) Original retinal images. (b), (e) Ground truth. (c), (f) Segmentation results obtained by the proposed method.

To compare our results to those reported in other published papers, we give the performance measures in Table 2. The average accuracy, sensitivity, and specificity are used to measure the performance. The proposed method attains the highest average accuracy among the listed methods, although several of them report a higher sensitivity, and Staal et al. [2] and Zhang et al. [30] report a higher specificity.

Table 2.

Performance of vessel segmentation methods (STARE images).

| Method | Average accuracy | Sensitivity | Specificity |
|---|---|---|---|
| Staal et al. [2] | 0.9442 | 0.7194 | 0.9773 |
| Soares et al. [6] | 0.9454 | 0.7212 | 0.9730 |
| Hoover et al. [9] | 0.9267 | 0.6751 | 0.9567 |
| Yin et al. [12] | 0.9413 | 0.7249 | 0.9666 |
| Fraz et al. [15] | 0.9442 | 0.7311 | 0.9680 |
| Mendonça and Campilho [18] | 0.9440 | 0.6996 | 0.9730 |
| Martinez-Perez et al. [21] | 0.9410 | 0.7506 | 0.9569 |
| Martinez-Perez et al. [22] | 0.9240 | 0.7790 | 0.9409 |
| Zhang et al. [30] | 0.9087 | 0.7373 | 0.9736 |
| Proposed Method | 0.9476 | 0.7147 | 0.9735 |

The execution time of the proposed method depends on many parameters. For the images of the STARE database, one iteration of the proposed method takes less than 10 seconds, and convergence may be achieved in fewer than 10 iterations.

5. Conclusion

The accurate extraction of retinal blood vessels can help in the diagnosis of cardiovascular and ophthalmologic diseases. Even though many techniques and algorithms have been developed, there is still room for improvement in blood vessel segmentation methodologies. In this paper, we have presented a novel Bayesian model for vessel segmentation. To overcome the drawback of models that treat pixels as independent, the proposed model exploits spatial information. To obtain the boundaries of the blood vessels, the modified level set approach is employed to minimize the proposed energy function. The proposed method has been tested on real retinal image databases, and the experimental results have been compared with those of other methods. Since the developed method takes spatial information into account, it performs well in the detection of narrow and low-contrast vessels and helps guarantee connectedness of the vessel structure. The comparison shows that the proposed method achieves a higher average accuracy than the other methods on both databases. Most of the techniques in the literature, as well as the proposed method, are evaluated on a limited range of databases (DRIVE and STARE). In future work, we will run experiments on larger databases and compare the proposed method with more techniques.

References

- 1. Fraz MM, Remagnino P, Hoppe A, et al. Blood vessel segmentation methodologies in retinal images – a survey. Computer Methods and Programs in Biomedicine. 2012;108(1):407–433. doi: 10.1016/j.cmpb.2012.03.009.
- 2. Staal J, Abràmoff MD, Niemeijer M, Viergever MA, van Ginneken B. Ridge-based vessel segmentation in color images of the retina. IEEE Transactions on Medical Imaging. 2004;23(4):501–509. doi: 10.1109/TMI.2004.825627.
- 3. You X, Peng Q, Yuan Y, Cheung YM, Lei J. Segmentation of retinal blood vessels using the radial projection and semi-supervised approach. Pattern Recognition. 2011;44(10-11):2314–2324.
- 4. Marín D, Aquino A, Gegúndez-Arias ME, Bravo JM. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Transactions on Medical Imaging. 2011;30(1):146–158. doi: 10.1109/TMI.2010.2064333.
- 5. Niemeijer M, Staal J, van Ginneken B, Loog M, Abràmoff MD. Comparative study of retinal vessel segmentation methods on a new publicly available database. In: Fitzpatrick JM, Sonka M, editors. Medical Imaging 2004: Image Processing; February 2004; San Diego, Calif, USA. pp. 648–656.
- 6. Soares JVB, Leandro JJG, Cesar RM Jr., Jelinek HF, Cree MJ. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Transactions on Medical Imaging. 2006;25(9):1214–1222. doi: 10.1109/tmi.2006.879967.
- 7. Kande GB, Subbaiah PV, Savithri TS. Unsupervised fuzzy based vessel segmentation in pathological digital fundus images. Journal of Medical Systems. 2010;34(5):849–858. doi: 10.1007/s10916-009-9299-0.
- 8. Ng J, Clay ST, Barman SA, et al. Maximum likelihood estimation of vessel parameters from scale space analysis. Image and Vision Computing. 2010;28(1):55–63.
- 9. Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Transactions on Medical Imaging. 2000;19(3):203–210. doi: 10.1109/42.845178.
- 10. Gang L, Chutatape O, Krishnan SM. Detection and measurement of retinal vessels in fundus images using amplitude modified second-order Gaussian filter. IEEE Transactions on Biomedical Engineering. 2002;49(2):168–172. doi: 10.1109/10.979356.
- 11. Zhang B, Zhang L, Zhang L, Karray F. Retinal vessel extraction by matched filter with first-order derivative of Gaussian. Computers in Biology and Medicine. 2010;40(4):438–445. doi: 10.1016/j.compbiomed.2010.02.008.
- 12. Yin Y, Adel M, Bourennane S. Retinal vessel segmentation using a probabilistic tracking method. Pattern Recognition. 2012;45(4):1235–1244.
- 13. Adel M, Moussaoui A, Rasigni M, Bourennane S, Hamami L. Statistical-based tracking technique for linear structures detection: application to vessel segmentation in medical images. IEEE Signal Processing Letters. 2010;17(6):555–558.
- 14. Yin Y, Adel M, Bourennane S. An automatic tracking method for retinal vascular tree extraction. Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP ’12); March 2012; Kyoto, Japan. pp. 709–712.
- 15. Fraz MM, Barman SA, Remagnino P, et al. An approach to localize the retinal blood vessels using bit planes and centerline detection. Computer Methods and Programs in Biomedicine. 2012;108(2):600–616. doi: 10.1016/j.cmpb.2011.08.009.
- 16. Miri MS, Mahloojifar A. Retinal image analysis using curvelet transform and multistructure elements morphology by reconstruction. IEEE Transactions on Biomedical Engineering. 2011;58(5):1183–1192. doi: 10.1109/TBME.2010.2097599.
- 17. Zana F, Klein JC. Segmentation of vessel-like patterns using mathematical morphology and curvature evaluation. IEEE Transactions on Image Processing. 2001;10(7):1010–1019. doi: 10.1109/83.931095.
- 18. Mendonça AM, Campilho A. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Transactions on Medical Imaging. 2006;25(9):1200–1213. doi: 10.1109/tmi.2006.879955.
- 19. Jiang X, Mojon D. Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2003;25(1):131–137.
- 20. Martinez-Perez ME, Hughes AD, Stanton AV, Thom SA, Bharath AA, Parker KH. Retinal blood vessel segmentation by means of scale-space analysis and region growing. Proceedings of the 2nd International Conference on Medical Image Computing and Computer-Assisted Intervention. London, UK: Springer; 1999. pp. 90–97.
- 21. Martinez-Perez ME, Hughes AD, Thom SA, Bharath AA, Parker KH. Segmentation of blood vessels from red-free and fluorescein retinal images. Medical Image Analysis. 2007;11(1):47–61. doi: 10.1016/j.media.2006.11.004.
- 22. Martinez-Perez ME, Hughes AD, Thom SA, Parker KH. Improvement of a retinal blood vessel segmentation method using the insight segmentation and registration toolkit (ITK). Proceedings of the 29th Annual International Conference of IEEE-EMBS, Engineering in Medicine and Biology Society (EMBC ’07); August 2007; Lyon, France. pp. 892–895.
- 23. Vlachos M, Dermatas E. Multi-scale retinal vessel segmentation using line tracking. Computerized Medical Imaging and Graphics. 2010;34(3):213–227. doi: 10.1016/j.compmedimag.2009.09.006.
- 24. Al-Diri B, Hunter A, Steel D. An active contour model for segmenting and measuring retinal vessels. IEEE Transactions on Medical Imaging. 2009;28(9):1488–1497. doi: 10.1109/TMI.2009.2017941.
- 25. Sun K, Chen Z, Jiang S. Local morphology fitting active contour for automatic vascular segmentation. IEEE Transactions on Biomedical Engineering. 2012;59(2):464–473. doi: 10.1109/TBME.2011.2174362.
- 26. Shang Y, Deklerck R, Nyssen E, et al. Vascular active contour for vessel tree segmentation. IEEE Transactions on Biomedical Engineering. 2011;58(4):1023–1032. doi: 10.1109/TBME.2010.2097596.
- 27. Yazdanpanah A, Hamarneh G, Smith BR, Sarunic MV. Segmentation of intra-retinal layers from optical coherence tomography images using an active contour approach. IEEE Transactions on Medical Imaging. 2011;30(2):484–496. doi: 10.1109/TMI.2010.2087390.
- 28. Espona MOL, Carreira MJ, Penedo MG. A snake for retinal vessel segmentation. Pattern Recognition and Image Analysis (Lecture Notes in Computer Science). 2007;4478:178–185.
- 29. Espona L, Carreira MJ, Penedo MG, Ortega M. Retinal vessel tree segmentation using a deformable contour model. Proceedings of the 19th International Conference on Pattern Recognition (ICPR ’08); December 2008; pp. 1–4.
- 30. Zhang Y, Hsu W, Lee ML. Detection of retinal blood vessels based on nonlinear projections. Journal of Signal Processing Systems. 2009;55(1–3):103–112.
- 31. Malladi R, Sethian JA, Vemuri BC. Shape modeling with front propagation: a level set approach. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1995;17(2):158–175.
- 32. Sum KW, Cheung PYS. Vessel extraction under non-uniform illumination: a level set approach. IEEE Transactions on Biomedical Engineering. 2008;55(1):358–360. doi: 10.1109/TBME.2007.896587.
- 33. Yu H, Barriga ES, Agurto C, et al. Fast localization and segmentation of optic disk in retinal images using directional matched filtering and level sets. IEEE Transactions on Information Technology in Biomedicine. 2012;16(4):644–657. doi: 10.1109/TITB.2012.2198668.
- 34. Xu X, Niemeijer M, Song Q, et al. Vessel boundary delineation on fundus images using graph-based approach. IEEE Transactions on Medical Imaging. 2011;30(6):1184–1191. doi: 10.1109/TMI.2010.2103566.
- 35. Zhang Y, Brady M, Smith S. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Transactions on Medical Imaging. 2001;20(1):45–57. doi: 10.1109/42.906424.
- 36. Forbes F, Peyrard N. Hidden Markov random field model selection criteria based on mean field-like approximations. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2003;25(9):1089–1101.
- 37. Chatzis SP, Varvarigou TA. A fuzzy clustering approach toward hidden Markov random field models for enhanced spatially constrained image segmentation. IEEE Transactions on Fuzzy Systems. 2008;16(5):1351–1361.
- 38. Niemeijer M, van Ginneken B. Digital retinal image for vessel extraction (DRIVE) database. 2002, http://www.isi.uu.nl/Research/Databases/DRIVE/
- 39. Hoover A. Structured analysis of the retina (STARE) database. http://www.parl.clemson.edu/~ahoover/stare/
- 40. Wong WCK, Chung ACS. Bayesian image segmentation using local iso-intensity structural orientation. IEEE Transactions on Image Processing. 2005;14(10):1512–1523. doi: 10.1109/tip.2005.852199.
- 41. Chan TF, Vese LA. Active contours without edges. IEEE Transactions on Image Processing. 2001;10(2):266–277. doi: 10.1109/83.902291.
- 42. Li C, Xu C, Gui C, Fox MD. Distance regularized level set evolution and its application to image segmentation. IEEE Transactions on Image Processing. 2010;19(12):3243–3254. doi: 10.1109/TIP.2010.2069690.