Background

Forced alignment is a speech recognition technique in which the onsets and offsets of speech sounds are identified given a known sequence of phonemes. Although manual acoustic-phonetic segmentation is considered more accurate, it can be very time-consuming, especially when transcribing a large-scale speech corpus. Forced alignment has therefore become a common tool for performing this task automatically. The technique would be extremely useful in the study of communication disorders; however, its accuracy in the presence of production errors (e.g., literal paraphasias in aphasia) has not been investigated. Moreover, it is unknown whether, and to what extent, manual adjustment of the phonetic segmentation is needed. This study was conducted to address these questions. The findings inform a larger project, currently being undertaken by the authors, which aims to build a language database of 180 individuals with aphasia and 180 typical speakers of Cantonese and, as one of its data analyses, to conduct an acoustic analysis of prosody at the discourse level.
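To make the idea of aligning audio to a known phoneme sequence concrete, the following is a minimal sketch of the dynamic-programming (Viterbi) search at the core of HMM-based forced alignment. It assumes that per-frame log-likelihoods for each phone in the known sequence are already available; the toy scores in the example are invented for illustration. A real aligner such as HTK uses multi-state HMMs with trained acoustic models and transition probabilities, all of which this sketch omits, and the function name `force_align` is hypothetical.

```python
import math
from typing import List, Tuple

def force_align(log_likes: List[List[float]], phones: List[str]) -> List[Tuple[str, int, int]]:
    """Viterbi alignment of T frames to a known sequence of K phones.

    log_likes[t][k] is the log-likelihood of frame t under the k-th phone
    of the known sequence (toy input; a real aligner derives it from trained HMMs).
    Every phone must cover at least one frame, and phones keep their order.
    Returns (phone, start_frame, end_frame) with end_frame exclusive.
    """
    T, K = len(log_likes), len(phones)
    if K == 0 or T < K:
        raise ValueError("need at least one frame per phone")
    NEG = -math.inf
    # score[t][k]: best total log-likelihood with frame t assigned to phone k
    score = [[NEG] * K for _ in range(T)]
    back = [[0] * K for _ in range(T)]  # phone index chosen at frame t - 1
    score[0][0] = log_likes[0][0]  # the alignment must start in the first phone
    for t in range(1, T):
        for k in range(K):
            stay = score[t - 1][k]  # remain in the same phone
            advance = score[t - 1][k - 1] if k > 0 else NEG  # move on to the next phone
            back[t][k] = k - 1 if advance > stay else k
            score[t][k] = max(stay, advance) + log_likes[t][k]
    # Backtrace from the last frame, which must belong to the last phone.
    path = [0] * T
    k = K - 1
    for t in range(T - 1, -1, -1):
        path[t] = k
        k = back[t][k]
    # Collapse the frame-level path into (phone, start, end) segments.
    segments = []
    start = 0
    for t in range(1, T + 1):
        if t == T or path[t] != path[t - 1]:
            segments.append((phones[path[start]], start, t))
            start = t
    return segments

# Toy example: 5 frames aligned to the known sequence "s" + "i" (invented scores).
print(force_align([[-1, -5], [-1, -5], [-5, -1], [-5, -1], [-5, -1]], ["s", "i"]))
# [('s', 0, 2), ('i', 2, 5)]
```

Frame indices become times once multiplied by the analysis frame shift (10 ms is a common choice, but it is a configuration setting, not something stated here).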

Method

Two sets of speech samples collected from native speakers of Cantonese in Hong Kong were used. The first set consisted of read speech produced by two typical speakers with tertiary education: a 33-year-old female and a 30-year-old male. The stimuli were 30 short paragraphs taken from the editorials of a local newspaper (Apple Daily) published between 31 October and 14 December 2009. The second set consisted of spontaneous speech elicited with a single-picture description task (Cat Rescue picture) and a story-telling task (Tortoise and Hare). It was collected from a 47-year-old female with transcortical motor aphasia, a 49-year-old male with anomic aphasia, and two typical speakers matched for age, gender and education level: a 44-year-old female and a 47-year-old male. The speakers in this set had secondary education or below.

Forced alignment was conducted with the Hidden Markov Model Toolkit (HTK) to automatically segment and label the initial consonant and the rime of each syllable. The resulting segmentation was saved and converted to TextGrid format for subsequent acoustic analysis in Praat (Boersma & Weenink, 1992–2009). For manual adjustment, the accuracy of the segmentation was checked by visual inspection of the waveform and the wide-band spectrogram, and by careful listening. Any consonant-rime or syllable boundary that deviated by more than 10 ms was adjusted. Syllable duration and the intensity and fundamental frequency (F0) of each rime were then measured for both versions of the segmentation (forced alignment alone, and forced alignment followed by manual adjustment).
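The conversion step and the 10 ms rule are not described in further detail. As a minimal sketch, assuming the aligner writes a standard HTK label file (one `start end label` line per segment, with times in HTK's 100-ns units), the hypothetical helpers below parse such a file, render it as a single-tier Praat TextGrid in Praat's long text format, and list the boundaries whose automatic position differs from an annotator's judged position by more than 10 ms. The function names are illustrative only, and multi-tier output (e.g. separate syllable and initial/rime tiers) is left out.

```python
from typing import List, Tuple

HTK_UNITS_PER_SEC = 10_000_000  # HTK label times are in 100-ns units
TOLERANCE = 100_000  # 10 ms expressed in 100-ns units

Interval = Tuple[int, int, str]  # (start, end, label) in 100-ns units

def parse_htk_label(text: str) -> List[Interval]:
    """Parse 'start end label [score]' lines of a single HTK label file."""
    intervals = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 3:
            continue  # skip blank lines and MLF lines such as '#!MLF!#' or '.'
        intervals.append((int(parts[0]), int(parts[1]), parts[2]))
    return intervals

def to_textgrid(intervals: List[Interval], tier_name: str = "segments") -> str:
    """Render non-overlapping intervals as a one-tier Praat TextGrid (long text format)."""
    if not intervals:
        raise ValueError("no intervals to write")

    def sec(t: int) -> str:
        return repr(t / HTK_UNITS_PER_SEC)

    filled: List[Interval] = []
    cursor = 0
    for start, end, label in sorted(intervals):
        if start > cursor:
            filled.append((cursor, start, ""))  # Praat interval tiers must be gap-free
        filled.append((start, end, label))
        cursor = end
    xmax = sec(cursor)
    lines = [
        'File type = "ooTextFile"',
        'Object class = "TextGrid"',
        "",
        "xmin = 0",
        f"xmax = {xmax}",
        "tiers? <exists>",
        "size = 1",
        "item []:",
        "    item [1]:",
        '        class = "IntervalTier"',
        f'        name = "{tier_name}"',
        "        xmin = 0",
        f"        xmax = {xmax}",
        f"        intervals: size = {len(filled)}",
    ]
    for i, (start, end, label) in enumerate(filled, 1):
        text = label.replace('"', '""')  # Praat escapes a quote by doubling it
        lines += [
            f"        intervals [{i}]:",
            f"            xmin = {sec(start)}",
            f"            xmax = {sec(end)}",
            f'            text = "{text}"',
        ]
    return "\n".join(lines) + "\n"

def boundaries_to_adjust(auto: List[int], judged: List[int], tol: int = TOLERANCE) -> List[int]:
    """Indices of boundaries whose automatic time deviates from the judged time by more than tol."""
    if len(auto) != len(judged):
        raise ValueError("boundary lists must correspond one-to-one")
    return [i for i, (a, j) in enumerate(zip(auto, judged)) if abs(a - j) > tol]
```

Keeping times as integer 100-ns units until the TextGrid is written avoids floating-point rounding exactly at the 10 ms cut-off, so a boundary that is off by exactly 10 ms is reliably left unflagged.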

Results

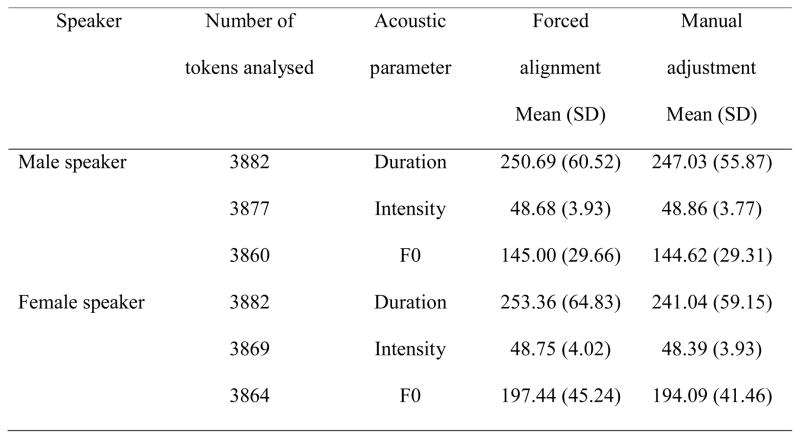

Paired-samples t-tests showed significant differences between the two versions of segmentation in all three acoustic parameters, for each speech task and each speaker (with the significance level adjusted to 0.008). The mean difference appeared to be larger for duration than for intensity and fundamental frequency (see Figure 1).

Figure 1. Mean and standard deviation (SD) of syllable duration (ms), intensity (dB) and fundamental frequency (F0; Hz) for read speech, illustrating the difference between the phonetic segmentation produced by forced alignment alone and that produced by forced alignment followed by manual adjustment.

Discussion and conclusion

It has been suggested that the accuracy of forced alignment could be improved by first training the speech recognition system on the speech data to be aligned. Nevertheless, because forced alignment alone yielded acoustic measurements that differed significantly from those obtained after manual adjustment, the present findings support visual inspection and manual adjustment after forced alignment.

References

- Boersma P, Weenink D. Praat (Version 5.1.02). Amsterdam; 1992–2009.

- Tseng C, Pin S, Lee Y, Wang H, Chen Y. Fluent speech prosody: Framework and modeling. Speech Communication. 2005;46:284–309.