Abstract

The ventral intraparietal area (VIP) of the macaque brain is a multimodal cortical region with directionally selective responses to visual and vestibular stimuli. To explore how these signals contribute to self-motion perception, neural activity in VIP was monitored while macaques performed a fine heading discrimination task based on vestibular, visual, or multisensory cues. For neurons with congruent visual and vestibular heading tuning, neuronal discrimination thresholds improved during multisensory stimulation, suggesting that VIP (like the dorsal medial superior temporal area; MSTd) may contribute to the improved perceptual discrimination seen during cue combination. Unlike MSTd, however, few VIP neurons showed opposite visual/vestibular tuning over the range of headings relevant to behavior, and those few cells showed reduced sensitivity under cue combination. Our data suggest that the heading tuning of some VIP neurons may be locally remodeled to increase the proportion of cells with congruent tuning over the behaviorally relevant stimulus range. VIP neurons also showed much stronger trial-by-trial correlations with perceptual decisions (choice probabilities; CPs) than MSTd neurons. While this may suggest that VIP neurons are more strongly linked to heading perception, we also find that correlated noise is much stronger among pairs of VIP neurons, with noise correlations averaging 0.14 in VIP as compared with 0.04 in MSTd. Thus, the large CPs in VIP could be a consequence of strong interneuronal correlations. Together, our findings suggest that VIP neurons show specializations that may make them well equipped to play a role in multisensory integration for heading perception.

Introduction

In monkeys, the dorsal medial superior temporal area (MSTd) and the ventral intraparietal area (VIP) are candidate neural substrates for heading perception. Both areas show selectivity for optic flow patterns that simulate self-motion, as well as directional tuning for inertial motion in darkness (MSTd; Duffy and Wurtz, 1995; Lappe et al., 1996; Duffy, 1998; Page and Duffy, 2003; Gu et al., 2006) (VIP; Bremmer et al., 2002; Schlack et al., 2002; Zhang et al., 2004; Zhang and Britten, 2010; Chen et al., 2011a). Although some differences in response properties between VIP and MSTd have been noted (Maciokas and Britten, 2010; Chen et al., 2011c; Yang et al., 2011), a better understanding of the respective roles of these areas in visually guided navigation remains an important goal.

Previous studies have suggested a role for MSTd in multisensory heading perception (for reviews, see Angelaki et al., 2009; 2011; Fetsch et al., 2010). When heading is specified by visual and vestibular cues simultaneously, behavioral performance is largely predicted by optimal (Bayesian) cue integration models (Gu et al., 2008; Fetsch et al., 2009; 2012). Correspondingly, multisensory MSTd neurons with congruent visual and vestibular heading preferences appear to account for both enhanced sensitivity during cue combination and for changes in cue weighting with stimulus reliability, but cells with opposite visual and vestibular heading preferences cannot account for these observations (Gu et al., 2008; Fetsch et al., 2012). Responses of congruent MSTd cells are also more strongly correlated with perceptual decisions during cue combination than are opposite cells, suggesting that the animal selectively monitors congruent cells during cue integration (Gu et al., 2008). Finally, electrical microstimulation and reversible chemical inactivation of MSTd were shown to affect heading judgments (Britten and van Wezel, 1998; Gu et al., 2012). However, the observed deficits were modest even after large bilateral muscimol injections, suggesting that other multisensory areas also contribute to heading discrimination (Gu et al., 2012).

VIP is also a strong candidate to play a major role in multisensory heading perception. Previous studies have shown that VIP contributes to heading judgments based solely on optic flow (Zhang and Britten, 2010, 2011). However, neural activity in VIP has not been examined during a multisensory visual/vestibular heading task. Given that VIP receives considerable input from MSTd (Boussaoud et al., 1990; Baizer et al., 1991) and also reflects other sensory and motor influences (Duhamel et al., 1998; Cooke et al., 2003; Avillac et al., 2005), we hypothesized that VIP may be more specialized for multisensory heading perception than MSTd. For example, VIP might contain a greater percentage of congruent neurons that are useful for cue integration, or VIP responses might show stronger correlations with perceptual decisions. We recorded from VIP neurons during a multimodal heading discrimination task based on visual and vestibular cues. Consistent with our hypothesis, we find substantial differences between VIP and MSTd, including less weight given to visual inputs by VIP neurons, a greater proportion of congruent cells, stronger choice probabilities, and correspondingly stronger correlated noise between neurons in VIP.

Materials and Methods

Subjects and surgery.

Five male rhesus monkeys (Macaca mulatta) weighing 6–10 kg were chronically implanted with a circular molded, lightweight plastic ring for head restraint; a recording grid; and scleral coils for monitoring eye movements. After recovery, all animals were trained using standard operant conditioning to fixate visual targets for fluid reward. All animal surgeries and experimental procedures, which were performed at Washington University, were approved by the Institutional Animal Care and Use Committee at Washington University and were in accordance with National Institutes of Health guidelines.

Motion platform and visual/vestibular heading stimuli.

Translation of the monkey in the horizontal plane was accomplished using a six-degree-of-freedom motion platform (Moog 6DOF2000E). The trajectory of inertial motion was controlled in real time at 60 Hz over an Ethernet interface. A three-chip DLP projector (Christie Digital Mirage 2000) was mounted on top of the platform and rear-projected images onto a 60 cm × 60 cm tangent screen attached to the front of the field coil frame. The display was viewed from a distance of 30 cm, thus subtending 90° × 90° of visual angle. The sides of the coil frame were covered with a black enclosure. Visual stimuli were generated by an OpenGL accelerator board (nVidia Quadro FX 3000G). The display had a pixel resolution of 1280 × 1024 and 32-bit color depth, and was updated at the same rate as the movement trajectory (60 Hz).

Visual stimuli were presented stereoscopically as red/green anaglyphs and were viewed through Kodak Wratten filters (red #29, green #61). The stimulus, a 3D star field (Fig. 1A), contained a variety of depth cues, including horizontal disparity, motion parallax, and size information. The motion coherence of the visual display, i.e., the percentage of stars that moved according to the specified heading, was manipulated by re-randomizing the 3D locations of the remaining stars on each display update. This manipulation degrades optic flow as a heading cue and was used to reduce psychophysical sensitivity in the visual condition so that it approximately matched vestibular sensitivity. Behavioral tasks and data acquisition were controlled by a commercially available software package (TEMPO; Reflective Computing).
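The coherence manipulation can be illustrated with a minimal sketch. The function below advances a star field by one display update in two steps. First, every star is shifted opposite to the observer's displacement. Second, a freshly drawn random subset of (1 − coherence) × N stars is re-placed at random 3D locations.

Several details are assumptions made for illustration and do not come from the stimulus software:

- the observer-centred coordinates;
- the box-shaped volume;
- the forward axis;
- the per-frame step;
- the helper name `update_star_field`.

A real display would also replace stars that leave the viewing volume.

```python
import numpy as np


def update_star_field(stars, step, coherence, volume, rng):
    """Advance a 3D star field by one display update at a given motion coherence.

    stars     : (N, 3) star positions in observer-centred coordinates (hypothetical units: cm)
    step      : (3,) observer displacement this frame; coherent stars move by -step
    coherence : fraction of stars (0-1) that move consistently with the simulated heading
    volume    : (3,) size of a box centred on the observer, used for random re-placement
    """
    n = len(stars)
    moved = stars - step                                    # every star first follows the heading
    n_noise = int(round((1.0 - coherence) * n))             # e.g. 80% noise stars at 20% coherence
    noise_idx = rng.choice(n, size=n_noise, replace=False)  # a new random subset on each update
    moved[noise_idx] = rng.uniform(-0.5, 0.5, size=(n_noise, 3)) * volume
    return moved


rng = np.random.default_rng(0)
volume = np.array([100.0, 100.0, 100.0])             # hypothetical box size (cm)
stars = rng.uniform(-0.5, 0.5, size=(1000, 3)) * volume
step = np.array([0.0, 0.0, 0.75])                    # ~45 cm/s peak speed / 60 Hz; z = forward (assumed)
frame = update_star_field(stars, step, coherence=0.20, volume=volume, rng=rng)

# Stars that moved coherently; re-placed stars almost surely land somewhere else
n_coherent = int(np.sum(np.all(np.isclose(frame, stars - step), axis=1)))
print(n_coherent)  # 200
```

At 20% coherence (the value used for monkey U), 200 of the 1000 stars carry the heading signal on any given frame. The other 800 act as dynamic noise.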

Heading discrimination task.

Two monkeys (animals U and C) were trained, using standard operant conditioning techniques, to report their direction of translation during a two-alternative, forced-choice heading discrimination task (see Fig. 1A,B). In each trial, the monkey experienced forward motion with a small leftward or rightward component (Fig. 1A). During stimulation, the animal was required to maintain fixation on a head-fixed visual target located at the center of the display screen. Trials were aborted if conjugate eye position deviated from a 2° × 2° electronic window around the fixation point. At the end of the 2 s trial, the fixation spot disappeared, two choice targets appeared (5° to the left and right of the fixation point) and the monkey made a saccade to one of the targets within 1 s of their appearance to report his perceived motion as leftward or rightward relative to straight ahead (Fig. 1B). The saccade endpoint had to remain within 3° of the target for at least 150 ms to be considered a valid choice. Correct responses were rewarded with a drop of water or juice.

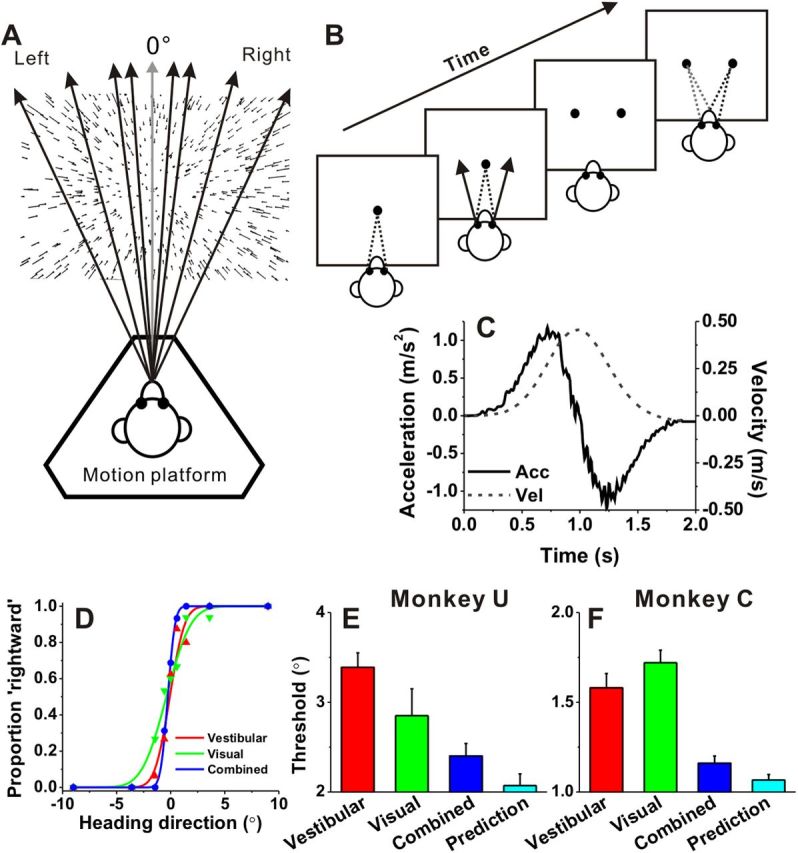

Figure 1.

Heading task and behavioral performance. A, Monkeys were seated on a motion platform and were translated forward along different heading directions in the horizontal plane to provide vestibular stimulation. A projector mounted on the platform displayed images of a 3D star field, and thus provided visual motion (optic flow) stimulation. B, After fixating a visual target, the monkey experienced forward motion with a small leftward or rightward (arrow) component, and subsequently reported his perceived heading (“left” vs “right”) by making a saccadic eye movement to one of two targets. C, The inertial motion stimulus followed a Gaussian velocity profile over the stimulus duration of 2 s (dashed line). The corresponding acceleration profile was biphasic (solid lines) with a peak acceleration of 1 m/s² (shown as the output of a linear accelerometer attached to the motion platform). D, Example psychometric functions from one session. The proportion of “rightward” decisions is plotted as a function of heading direction. Red, green, and blue symbols represent data from the vestibular, visual, and combined conditions, respectively. Smooth curves are best-fitting cumulative Gaussian functions. E, F, Average psychophysical thresholds from two monkeys (monkey U, n = 31; monkey C, n = 64) for each of the three stimulus conditions, and predicted thresholds computed from optimal cue integration theory (cyan). Error bars indicate SEM.

Across trials, heading was varied in fine steps around straight ahead. The range of headings was chosen based on extensive psychophysical testing using a staircase paradigm. For neural recordings, we used the method of constant stimuli, in which a fixed set of nine logarithmically spaced heading angles (±9°, ±3.5°, ±1.3°, ±0.5°, and 0°) was tested for each monkey, including the ambiguous straight-ahead direction (0°). These values were chosen to obtain near-maximal psychophysical performance while allowing neural sensitivity to be estimated reliably for most neurons. For the ambiguous heading condition (0°, straight-ahead motion), rewards were delivered randomly on half of the trials. Coherence was set to 20% for monkey U and 29% for monkey C to approximately match visual and vestibular heading thresholds.

The experiment consisted of three randomly interleaved stimulus conditions: (1) a “vestibular” condition, in which the monkey was translated by the motion platform while fixating a head-fixed target on a blank screen (no optic flow; we have previously shown that performance in this condition depends heavily on vestibular signals, Gu et al., 2007); (2) a “visual” condition, in which the motion platform remained stationary while optic flow simulated the same range of headings; and (3) a “combined” condition, in which congruent inertial motion and optic flow were provided. Simultaneous visual and vestibular stimuli were precisely calibrated and tightly synchronized (Gu et al., 2006). Each of the 27 unique stimulus conditions (9 headings × 3 cue conditions) was typically repeated ∼30 times, for a total of ∼800 discrimination trials per recording session. Thus, it was necessary to maintain isolation of each neuron for well over an hour to obtain a useful dataset.
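The trial structure described above can be sketched in Python as follows. This sketch makes two assumptions:

- the whole session is one fully shuffled list;
- the helper names (`build_trial_list`, `is_rewarded`) are hypothetical.

The text specifies only that the three conditions were randomly interleaved, with reward delivered at random on half of the 0° trials.

```python
import random

# Heading angles (deg) for the method of constant stimuli; negative = leftward
HEADINGS_DEG = [-9.0, -3.5, -1.3, -0.5, 0.0, 0.5, 1.3, 3.5, 9.0]
CONDITIONS = ["vestibular", "visual", "combined"]


def build_trial_list(n_reps=30, seed=None):
    """Return every (condition, heading) pair n_reps times, randomly interleaved."""
    trials = [(c, h) for c in CONDITIONS for h in HEADINGS_DEG for _ in range(n_reps)]
    random.Random(seed).shuffle(trials)
    return trials


def is_rewarded(heading_deg, choice, rng):
    """Reward a correct left/right report; the ambiguous 0 deg heading is rewarded at random."""
    if heading_deg == 0.0:
        return rng.random() < 0.5
    return (choice == "right") == (heading_deg > 0)


trials = build_trial_list(seed=1)
print(len(trials))  # 810 (27 unique conditions x 30 repetitions)
```

The 810 trials in this sketch correspond to the "∼800 discrimination trials" per session quoted above.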

The motion trajectory was 2 s in duration and followed a Gaussian velocity profile (Fig. 1C, dashed curve) with a corresponding biphasic linear acceleration profile (Fig. 1C, solid curve). The total displacement along the motion trajectory was 30 cm, with a peak acceleration of ±0.1 g (±0.98 m/s²) and a peak velocity of 45 cm/s. These accelerations far exceed vestibular detection thresholds (MacNeilage et al., 2010a,b).
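These numbers can be checked for consistency, assuming an ideal, untruncated Gaussian velocity profile v(t) = V exp[−(t − t0)²/(2s²)]:

- Integrating v(t) gives the displacement D = V s √(2π), so the profile width s follows from D and V.
- The acceleration peaks at |t − t0| = s, with magnitude (V/s) e^(−1/2).

The helper name below is hypothetical.

```python
import math


def gaussian_motion_profile(displacement_m, peak_velocity_ms):
    """Width s (s) and peak acceleration (m/s^2) of an ideal Gaussian velocity profile.

    v(t) = V * exp(-(t - t0)**2 / (2 * s**2)) integrates to V * s * sqrt(2*pi) = D,
    and a(t) = -v(t) * (t - t0) / s**2 reaches its extreme at |t - t0| = s.
    """
    s = displacement_m / (peak_velocity_ms * math.sqrt(2 * math.pi))
    peak_accel = peak_velocity_ms / s * math.exp(-0.5)
    return s, peak_accel


s, a_peak = gaussian_motion_profile(0.30, 0.45)   # 30 cm displacement, 45 cm/s peak velocity
print(f"s = {s:.3f} s, peak acceleration = {a_peak:.2f} m/s^2")
# s = 0.266 s, peak acceleration = 1.03 m/s^2
```

The implied peak acceleration (about 1.03 m/s²) agrees with the stated ±0.98 m/s² to within rounding and the truncation of the profile to 2 s. Assume the velocity peak is centred in the 2 s window. With s ≈ 0.27 s, the window then extends roughly 3.8 profile widths on each side of the peak.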

Electrophysiological recordings.

We used standard methods for extracellular single unit recording, as described previously (Gu et al., 2006). Briefly, a tungsten microelectrode (Frederick Haer; tip diameter 3 μm, impedance 1–2 MΩ at 1 kHz) was advanced into the cortex through a transdural guide tube, using a micromanipulator (Frederick Haer) mounted on top of the head restraint ring. Single neurons were isolated using a conventional amplifier, a bandpass eight-pole filter (400–5000 Hz), and a dual voltage-time window discriminator (Bak Electronics). The times of occurrence of action potentials and all behavioral events were recorded with 1 ms resolution by the data acquisition computer. Eye movement traces were lowpass filtered and sampled at 250 Hz. Raw neural signals were also digitized at 25 kHz and stored to disk for off-line spike sorting and additional analyses.

Area VIP was localized using a combination of magnetic resonance imaging scans, stereotaxic coordinates, white/gray matter transitions, and physiological response properties, as described previously (Chen et al., 2011a). Most recordings concentrated on the medial tip of the intraparietal sulcus, where robust visual and vestibular activity has been reported (Chen et al., 2011a). Once the action potential of a single neuron was isolated, we measured heading tuning either in the horizontal plane (eight directions relative to straight ahead: 0, ±45, ±90, ±135, and 180°) or in three dimensions (26 directions spaced 45° apart in azimuth and elevation) during both vestibular and visual stimulation (Chen et al., 2011a). During this “classification” protocol, monkeys were simply required to fixate a head-centered target, and five repetitions were collected for each stimulus. A total of 103 and 154 neurons from three hemispheres (monkeys U and C) were tested with this classification protocol in the horizontal plane and in 3D, respectively. Among these, 113 neurons showed significant tuning (p < 0.05, ANOVA) in the horizontal plane for either the vestibular or the visual stimulus, and these cells were further tested during the heading discrimination task. Our inclusion criteria resulted in 95 VIP cells that were tested with a sufficient number of stimulus repetitions (>10) during the heading discrimination task. Among these 95 neurons that comprise the main dataset, 15 cells were tuned for heading only in the vestibular condition, 24 cells were tuned only in the visual condition, and 56 cells had significant tuning under both single-cue conditions.
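The 26 directions of the 3D protocol can be enumerated explicitly. They consist of eight azimuths spaced 45° apart at each of three elevations (−45°, 0°, and +45°), plus straight up and straight down. The sketch below assumes a hypothetical coordinate convention: azimuth 0° is straight ahead and elevation +90° is up.

The elevation-0 subset reproduces the eight horizontal-plane headings. Here 225°, 270°, and 315° are equivalent to −135°, −90°, and −45°.

```python
import math


def heading_directions_3d():
    """(azimuth, elevation) pairs in degrees and the matching unit vectors.

    Eight azimuths at each of three elevations, plus the two poles: 8 * 3 + 2 = 26.
    Convention (hypothetical): azimuth 0 = straight ahead, elevation +90 = up.
    """
    pairs = [(az, el) for el in (-45, 0, 45) for az in range(0, 360, 45)]
    pairs += [(0, -90), (0, 90)]          # straight down / up (azimuth is arbitrary at the poles)
    vectors = []
    for az, el in pairs:
        a, e = math.radians(az), math.radians(el)
        vectors.append((math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e)))
    return pairs, vectors


pairs, vectors = heading_directions_3d()
horizontal = [az for az, el in pairs if el == 0]
print(len(pairs), horizontal)  # 26 [0, 45, 90, 135, 180, 225, 270, 315]
```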

In addition to the data collected in these two trained animals, data from an additional three “naive” animals (Chen et al., 2011a) were re-analyzed off-line to search for pairs of well isolated single units from the same electrode. These three naive animals were trained only to fixate, but not to discriminate heading. The heading tuning of neurons in naive animals was tested with the 3D tuning protocol (Chen et al., 2011a).

Data analysis: heading discrimination task.

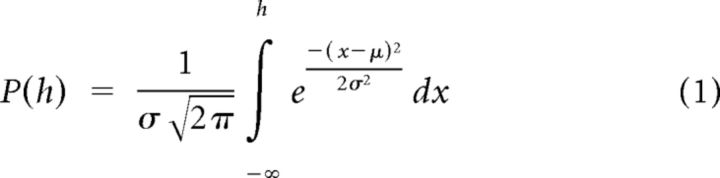

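Behavioral performance was quantified by plotting the proportion of “rightward” choices as a function of heading (the signed angular deviation of the motion direction from straight ahead). Psychometric curves were fit with a cumulative Gaussian function:

$$P(\text{rightward} \mid \theta) = \frac{1}{\sigma\sqrt{2\pi}} \int_{-\infty}^{\theta} \exp\left[-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right] dx$$

where θ is heading and μ is the mean of the fitted Gaussian (the point of subjective equality).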

Psychophysical threshold was defined as the SD of the Gaussian fit, σ, which corresponds to 84% correct performance. Predicted thresholds for the combined condition, assuming optimal (maximum likelihood) cue integration, were computed as follows (Ernst and Banks, 2002):

$$\sigma_{\text{combined}}^{2} = \frac{\sigma_{\text{vestibular}}^{2}\,\sigma_{\text{visual}}^{2}}{\sigma_{\text{vestibular}}^{2} + \sigma_{\text{visual}}^{2}}$$

where $\sigma_{\text{vestibular}}$ and $\sigma_{\text{visual}}$ represent psychophysical thresholds for the vestibular and visual conditions, respectively.
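The psychometric fit and the optimal-integration prediction can be sketched in Python with SciPy. Two simplifications apply:

- The cumulative Gaussian is fit to choice proportions by least squares. A binomial maximum-likelihood fit weighted by trial counts would be the more principled choice for real data.
- `fit_psychometric` and `predicted_combined_threshold` are hypothetical helper names.

The values 1.1° and 1.8° are the single-cue thresholds from the example session in Figure 1D.

```python
import numpy as np
from scipy.optimize import curve_fit
from scipy.stats import norm


def cum_gauss(theta, mu, sigma):
    """P('rightward' | heading theta) for a cumulative Gaussian with mean mu and SD sigma."""
    return norm.cdf(theta, loc=mu, scale=sigma)


def fit_psychometric(headings, p_right):
    """Least-squares fit of a cumulative Gaussian; returns (mu, sigma), where sigma = threshold."""
    (mu, sigma), _ = curve_fit(cum_gauss, headings, p_right, p0=[0.0, 2.0],
                               bounds=([-np.inf, 1e-6], [np.inf, np.inf]))
    return mu, sigma


def predicted_combined_threshold(sigma_vest, sigma_vis):
    """Optimal (maximum-likelihood) prediction for the combined-condition threshold."""
    return np.sqrt(sigma_vest**2 * sigma_vis**2 / (sigma_vest**2 + sigma_vis**2))


headings = np.array([-9.0, -3.5, -1.3, -0.5, 0.0, 0.5, 1.3, 3.5, 9.0])
p_right = cum_gauss(headings, 0.0, 1.8)        # noiseless synthetic proportions
mu_hat, sigma_hat = fit_psychometric(headings, p_right)
print(round(float(sigma_hat), 2))                                # 1.8
print(round(float(predicted_combined_threshold(1.1, 1.8)), 2))   # 0.94
```

For the Figure 1D session, the predicted combined threshold is therefore about 0.94°.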

For the analyses of neural responses, we used mean firing rates calculated during the middle 1 s interval of each stimulus presentation (different analysis windows were also used, as described below). To characterize neuronal sensitivity, we used receiver operating characteristic (ROC) analysis to compute the ability of an ideal observer to discriminate between two oppositely directed headings (for example, −9° and +9°) based on the firing rate of the recorded neuron and a presumed “anti-neuron” with opposite tuning. ROC values were plotted as a function of heading direction, resulting in neurometric functions that were also fit with a cumulative Gaussian function. Neuronal thresholds were defined as the SD of these functions. Because neuronal and psychophysical thresholds were recorded simultaneously during each experimental session, the two could be compared quantitatively.
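The ROC step can be sketched as follows. The sketch assumes that the anti-neuron's response to a heading +h equals the recorded neuron's response to −h, i.e., mirror-symmetric tuning.

The ROC area is the probability that a response drawn from one distribution exceeds a response drawn from the other, with ties counted as one half. This equals the normalized Mann-Whitney U statistic.

The data are simulated and the function names are hypothetical. The resulting points would then be fit with the same cumulative Gaussian used for the psychometric functions to obtain the neuronal threshold.

```python
import numpy as np


def roc_area(null_resp, pref_resp):
    """Area under the ROC curve = P(pref > null) + 0.5 * P(pref == null)."""
    a = np.asarray(null_resp, dtype=float)
    b = np.asarray(pref_resp, dtype=float)
    greater = (b[:, None] > a[None, :]).mean()
    ties = (b[:, None] == a[None, :]).mean()
    return greater + 0.5 * ties


def neurometric_points(responses, prefers_right=True):
    """responses: dict mapping heading (deg) -> array of single-trial firing rates.

    Responses to +h are compared with responses to -h, which stand in for the
    'anti-neuron' (assumed mirror-symmetric tuning). Returns heading -> predicted
    proportion of 'rightward' choices.
    """
    points = {}
    for h in sorted(k for k in responses if k > 0):
        auc = roc_area(responses[-h], responses[h])    # P(rate at +h > rate at -h)
        if not prefers_right:
            auc = 1.0 - auc
        points[h], points[-h] = auc, 1.0 - auc
    if 0 in responses:
        points[0.0] = 0.5                               # ambiguous heading, by symmetry
    return dict(sorted(points.items()))


rng = np.random.default_rng(0)
headings = [-9.0, -3.5, -1.3, -0.5, 0.0, 0.5, 1.3, 3.5, 9.0]
# Hypothetical rightward-preferring neuron: mean rate rises by 1 spike/s per degree
responses = {h: rng.normal(30.0 + h, 4.0, size=30) for h in headings}
curve = neurometric_points(responses)
```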

To quantify the relationship between VIP responses and the monkey's perceptual decisions, we also computed choice probabilities (CPs) using ROC analysis. For each heading, neuronal responses were sorted into two groups, based on the choice that the animal made at the end of each trial (“preferred” vs “null” choices). For each heading with at least three data points for each choice, responses were normalized (z-scored) by subtracting the mean response and dividing by the SD across stimulus repetitions. Z-scored responses were then pooled across headings into a single pair of distributions for preferred and null choices. ROC analysis on this pair of distributions yielded the grand CP. The statistical significance of CPs (whether they were significantly different from the chance level of 0.5) was determined using a permutation test (1000 permutations). For each stimulus condition (visual, vestibular, combined), CPs were computed based on a neuron's preferred sign of heading (leftward or rightward) for that particular stimulus condition. Hence, CPs are defined relative to the heading preference for each stimulus modality.
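The grand CP procedure can be sketched as below. It follows four steps: z-score within each heading, pool across headings, run ROC analysis, then apply a permutation test.

The following are assumptions:

- the helper names;
- the two-sided form of the permutation test;
- the simulated neuron.

In particular, choices in the toy data are drawn independently of heading, which real choices are not.

```python
import numpy as np


def roc_area(null_vals, pref_vals):
    """P(pref > null) + 0.5 * P(pref == null)."""
    a = np.asarray(null_vals, dtype=float)
    b = np.asarray(pref_vals, dtype=float)
    return (b[:, None] > a[None, :]).mean() + 0.5 * (b[:, None] == a[None, :]).mean()


def grand_choice_probability(rates, headings, chose_pref, min_trials=3, n_perm=1000, seed=0):
    """Grand CP from z-scored responses pooled across headings, plus a permutation p value.

    rates      : firing rate on each trial
    headings   : heading on each trial
    chose_pref : True where the animal chose the neuron's preferred direction
    """
    rates = np.asarray(rates, dtype=float)
    headings = np.asarray(headings)
    chose_pref = np.asarray(chose_pref, dtype=bool)
    z_pref, z_null = [], []
    for h in np.unique(headings):
        r, c = rates[headings == h], chose_pref[headings == h]
        if c.sum() < min_trials or (~c).sum() < min_trials or r.std(ddof=1) == 0:
            continue                                   # need >= 3 trials for each choice
        z = (r - r.mean()) / r.std(ddof=1)             # z-score within this heading
        z_pref.extend(z[c])
        z_null.extend(z[~c])
    z_pref, z_null = np.array(z_pref), np.array(z_null)
    cp = roc_area(z_null, z_pref)

    # Permutation test: shuffle the choice labels over the pooled z-scores
    rng = np.random.default_rng(seed)
    pooled, n_pref = np.concatenate([z_pref, z_null]), len(z_pref)
    null_cps = np.empty(n_perm)
    for i in range(n_perm):
        shuffled = rng.permutation(pooled)
        null_cps[i] = roc_area(shuffled[n_pref:], shuffled[:n_pref])
    p_value = np.mean(np.abs(null_cps - 0.5) >= abs(cp - 0.5))   # two-sided
    return cp, p_value


rng = np.random.default_rng(1)
headings = np.repeat([-9.0, -3.5, -1.3, -0.5, 0.0, 0.5, 1.3, 3.5, 9.0], 30)
chose_pref = rng.random(headings.size) < 0.5
# Hypothetical neuron firing ~2 spikes/s more before preferred choices
rates = 30.0 + headings + 2.0 * chose_pref + rng.normal(0.0, 4.0, headings.size)
cp, p = grand_choice_probability(rates, headings, chose_pref)
```

Z-scoring within each heading removes the stimulus-driven differences in mean rate before pooling. The grand CP therefore reflects only choice-related variability.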

To quantify the congruency between visual and vestibular heading tuning functions measured during the discrimination task, we calculated a congruency index (CI; Gu et al., 2008; Fetsch et al., 2012) over the narrow range of headings (±9°) that was tested. A Pearson correlation coefficient was first computed for each single-cue condition. This quantified the strength of the linear trend between firing rate and heading for vestibular ($R_{\text{vestibular}}$) and visual ($R_{\text{visual}}$) stimuli. CI was then defined as the product of these correlation coefficients:

$$\mathrm{CI} = R_{\text{vestibular}} \times R_{\text{visual}}$$

CI ranges from −1 to 1, with values near 1 indicating that visual and vestibular tuning functions have a consistent slope and values near −1 indicating opposite slopes. CI reflects both the congruency of tuning and the steepness of the slopes of the tuning curves around straight ahead. In the current study, we denoted neurons with CI > 0.4 as “congruent cells,” neurons with CI < −0.4 as “opposite cells,” and neurons with a CI between ±0.4 as “intermediate.” Note that CI reflects a “local” measure of the congruency of visual and vestibular tuning. In addition, we also computed a “global” measure of congruency based on each cell's complete heading tuning curve in the horizontal plane. This global measure of congruency is defined as the absolute difference between direction preferences (|Δ preferred direction|) measured in the visual and vestibular conditions (Gu et al., 2006; Chen et al., 2011a).
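The local CI, the ±0.4 classification cut-offs, and the global |Δ preferred direction| measure can be sketched as follows.

Whether the correlations were computed on single-trial or mean firing rates is not stated here, so the function accepts either. The linear toy tuning curves are hypothetical. Wrapping the direction difference onto 0–180° is an assumption about how |Δ preferred direction| is reported.

```python
import numpy as np


def congruency_index(headings, rate_vest, rate_vis):
    """CI = R_vestibular * R_visual (Pearson r between firing rate and heading per cue)."""
    r_vest = np.corrcoef(headings, rate_vest)[0, 1]
    r_vis = np.corrcoef(headings, rate_vis)[0, 1]
    return r_vest * r_vis


def classify_congruency(ci, cutoff=0.4):
    """Label a cell using the +/-0.4 cut-offs adopted in this study."""
    if ci > cutoff:
        return "congruent"
    if ci < -cutoff:
        return "opposite"
    return "intermediate"


def delta_preferred_direction(pref_vest_deg, pref_vis_deg):
    """Global congruency |delta preferred direction|, wrapped to 0-180 deg."""
    d = (pref_vest_deg - pref_vis_deg) % 360.0
    return min(d, 360.0 - d)


headings = np.array([-9.0, -3.5, -1.3, -0.5, 0.0, 0.5, 1.3, 3.5, 9.0])
# Hypothetical leftward-preferring vestibular tuning: rate falls as heading moves rightward
vest = 40.0 - 1.5 * headings
vis_congruent = 25.0 - 1.0 * headings
vis_opposite = 25.0 + 1.0 * headings
print(classify_congruency(congruency_index(headings, vest, vis_congruent)))  # congruent
print(classify_congruency(congruency_index(headings, vest, vis_opposite)))   # opposite
print(delta_preferred_direction(-90, 90))                                    # 180.0
```

The last line corresponds to the opposite cell of Figure 2D, which preferred −90° for vestibular and 90° for visual stimuli.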

We observed that some global heading tuning curves have a single peak whereas others exhibit two peaks. Thus, we classified each tuning curve as “unimodal” versus “bimodal” (the latter group also potentially includes multimodal cells) as follows. First, we interpolated the tuning data to 5° resolution in the horizontal plane, and then we adapted a multimodality test based on the kernel density estimate method (Silverman, 1981; Fisher and Marron, 2001; Anzai et al., 2007). This test generates two p values, with the first one (p_uni) for the test of unimodality and the second one (p_bi) for the test of bimodality. When p_uni > 0.05, the tuning curve is classified as unimodal. If p_uni < 0.05 and p_bi > 0.05, unimodality is rejected and the distribution is classified as bimodal. If p_bi < 0.05 also, this could indicate the existence of more than two modes in the tuning curve (which we lumped into the bimodal category, as multimodal tuning was rarely observed). Accordingly, heading tuning was classified as either unimodal or bimodal for each peak time (Chen et al., 2010).
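The interpolation step and the decision rule on the two p values can be sketched as below. The kernel-density multimodality test that produces p_uni and p_bi is not implemented here; its p values are taken as inputs.

Linear circular interpolation via `numpy.interp` with `period=360` is an assumption, because the interpolation method is not specified. The tuning values are hypothetical.

```python
import numpy as np


def interpolate_tuning(directions_deg, rates, step_deg=5.0):
    """Circularly interpolate a horizontal-plane tuning curve onto a step_deg grid."""
    grid = np.arange(0.0, 360.0, step_deg)
    xp = np.asarray(directions_deg, dtype=float) % 360.0
    return grid, np.interp(grid, xp, rates, period=360.0)


def classify_modality(p_uni, p_bi, alpha=0.05):
    """Decision rule applied to the two p values of the multimodality test."""
    if p_uni > alpha:
        return "unimodal"
    # Unimodality rejected: two modes (p_bi > alpha) or more (p_bi < alpha);
    # both cases are lumped into the 'bimodal' class.
    return "bimodal"


directions = [0, 45, 90, 135, 180, -135, -90, -45]     # eight horizontal-plane headings
rates = [12.0, 20.0, 35.0, 22.0, 10.0, 9.0, 8.0, 9.5]  # hypothetical mean firing rates
grid, fine = interpolate_tuning(directions, rates)
print(len(grid))   # 72
```

Because multimodal cells are lumped with bimodal ones, the rule effectively reduces to a single comparison of p_uni with 0.05. p_bi only distinguishes two modes from more than two.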

Noise correlation analysis.

To analyze trial-by-trial correlations between firing rates of simultaneously recorded neurons, we used spike sorting software (Spike2; Cambridge Electronic Design) to extract data from pairs of well-isolated cells recorded from a single electrode. Such cell pairs could be extracted from 32 recordings in animal U (trained) and 32 recordings in animal C (trained), as well as from 75 recordings in the three additional naive animals that were trained only to fixate during visual/vestibular stimulation. From these datasets, correlated noise was quantified for 109 pairs of neurons that were tested with visual/vestibular heading stimuli in 3D. For another 30 pairs of neurons, noise correlations were measured during experiments in which heading tuning was characterized only in the horizontal plane. Only pairs of neurons that showed clearly separate clusters in the first three principal components of the spike waveform were included in this analysis.

Noise correlation ($r_{\text{noise}}$) was computed as the Pearson correlation coefficient (ranging between −1 and 1) of the trial-by-trial responses from two simultaneously recorded neurons (Zohary et al., 1994; Bair et al., 2001; Gu et al., 2011). The response in each trial was taken as the number of spikes during the middle 1 s of the stimulus duration. For each heading, responses were z-scored to remove the effect of heading on the mean responses of the neurons, such that the measured noise correlation reflected only trial-to-trial variability. To avoid artificial correlations caused by outlier responses, we removed data points whose z-scores lay more than 3 SD from the mean response (|z| > 3; Zohary et al., 1994). We then pooled data across headings to compute $r_{\text{noise}}$; the corresponding p value was used to assess the significance of the noise correlation for each pair of neurons. To remove slow fluctuations in responsiveness that could result from changes in the animal's internal state over time (e.g., level of arousal), we renormalized the z-scored responses in blocks of 20 trials, as done previously by Zohary et al. (1994). The time course of noise correlations was computed from spike counts in a 400 ms window that was slid across the data in 25 ms steps, for a total of 81 time points.
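The noise-correlation computation for one pair of cells can be sketched as follows. The sketch rests on several assumptions:

- Trials are supplied in presentation order, which the 20-trial block renormalization requires.
- Outlier removal is applied before renormalization, which is one plausible reading of the procedure.
- `scipy.stats.pearsonr` supplies both r and its p value.
- The sliding-window helper and the window centers are hypothetical. As one example, window centers stepping from 0 to 2000 ms in 25 ms increments give the 81 time points mentioned above.

```python
import numpy as np
from scipy.stats import pearsonr


def zscore_within_heading(counts, headings):
    """Z-score spike counts separately for each heading to remove stimulus-driven effects."""
    z = np.zeros(len(counts))
    for h in np.unique(headings):
        m = headings == h
        sd = counts[m].std(ddof=1)
        if sd > 0:
            z[m] = (counts[m] - counts[m].mean()) / sd
    return z


def renormalize_blocks(z, block=20):
    """Re-z-score consecutive blocks of trials to remove slow drifts in responsiveness."""
    out = z.copy()
    for start in range(0, len(z), block):
        seg = z[start:start + block]
        if len(seg) > 1 and seg.std(ddof=1) > 0:
            out[start:start + block] = (seg - seg.mean()) / seg.std(ddof=1)
    return out


def noise_correlation(counts1, counts2, headings, z_max=3.0, block=20):
    """Return (r_noise, p) for two simultaneously recorded cells; trials in temporal order."""
    counts1 = np.asarray(counts1, dtype=float)
    counts2 = np.asarray(counts2, dtype=float)
    headings = np.asarray(headings)
    z1 = zscore_within_heading(counts1, headings)
    z2 = zscore_within_heading(counts2, headings)
    keep = (np.abs(z1) <= z_max) & (np.abs(z2) <= z_max)   # drop outlier trials (either cell)
    z1 = renormalize_blocks(z1[keep], block)
    z2 = renormalize_blocks(z2[keep], block)
    r, p = pearsonr(z1, z2)
    return r, p


def sliding_counts(spike_times_ms, centers_ms, width_ms=400.0):
    """Spike counts in windows of width_ms centered on each entry of centers_ms."""
    t = np.asarray(spike_times_ms, dtype=float)
    half = width_ms / 2.0
    return np.array([np.sum((t >= c - half) & (t < c + half)) for c in centers_ms])


centers = np.arange(0.0, 2000.0 + 1.0, 25.0)   # hypothetical: centers spanning the 2 s stimulus
print(len(centers))                            # 81

rng = np.random.default_rng(0)
order = rng.permutation(np.repeat(np.arange(9), 30))   # headings in presentation order
shared = rng.normal(0.0, 2.0, order.size)              # hypothetical common input to both cells
cell1 = 20.0 + order + shared + rng.normal(0.0, 2.0, order.size)
cell2 = 15.0 - order + shared + rng.normal(0.0, 2.0, order.size)
r_noise, p_noise = noise_correlation(cell1, cell2, order)
```

In this toy pair the two cells have opposite heading tuning yet share a common input. Because z-scoring within heading removes the tuning, r_noise is positive even though the signal correlation is negative.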

Signal correlation ($r_{\text{signal}}$) was computed as the Pearson correlation coefficient (ranging between −1 and 1) between heading tuning curves from two simultaneously recorded neurons. Tuning curves for each stimulus condition were constructed by computing the mean response (averaging firing rate during the middle 1 s of the stimulus duration) across trials for each heading direction.
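Signal correlation compares tuning curves rather than single trials. In the minimal sketch below, the mean response per heading is computed for each cell and the two curves are correlated. The helper names and the cosine-tuned toy cells are hypothetical.

```python
import numpy as np


def tuning_curve(rates, headings):
    """Mean response for each heading (headings sorted in ascending order)."""
    rates, headings = np.asarray(rates, dtype=float), np.asarray(headings)
    hs = np.unique(headings)
    return hs, np.array([rates[headings == h].mean() for h in hs])


def signal_correlation(rates1, rates2, headings):
    """Pearson correlation between the two cells' mean tuning curves."""
    _, tc1 = tuning_curve(rates1, headings)
    _, tc2 = tuning_curve(rates2, headings)
    return np.corrcoef(tc1, tc2)[0, 1]


headings = np.repeat([0, 45, 90, 135, 180, 225, 270, 315], 5)   # 8 directions x 5 repetitions
rad = np.radians(headings)
cell_a = 20.0 + 10.0 * np.cos(rad)     # hypothetical cell preferring 0 deg
cell_b = 15.0 - 5.0 * np.cos(rad)      # hypothetical cell preferring 180 deg
print(round(signal_correlation(cell_a, cell_b, headings), 3))   # -1.0
```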

Comparisons with area MSTd.

One of the goals of the present study is to compare responses of VIP neurons with those from MSTd. Thus, we have re-analyzed and replotted heading discrimination data from Gu et al. (2007, 2008) and noise correlation data from Gu et al. (2011). The heading discrimination dataset from MSTd consisted of 182 cells, of which 155 had significant vestibular heading tuning, 177 had significant visual heading tuning, and 150 had significant tuning for both cues. To make a fair comparison with the VIP noise correlation data, all of which were recorded with a single electrode, we included only data from 67 MSTd cell pairs that were also recorded with a single electrode (Gu et al., 2011).

Results

We measured the visual and vestibular heading tuning of 257 VIP neurons. Subsequently, for 113 neurons that showed significant heading tuning in the horizontal plane for visual or vestibular stimuli, we measured neuronal responses while the animals performed a fine heading discrimination task based on visual and/or vestibular cues. Sufficient data were collected from 95 VIP neurons during the discrimination task (see Materials and Methods), and these neurons constitute the main sample for this study. We first describe the effects of cue combination on behavioral performance before considering neuronal sensitivity and the relationship between neural activity and perceptual decisions.

Psychophysical performance

Two monkeys (monkey U and monkey C) were trained to perform a fine heading discrimination task (Fig. 1). During each trial, the animal experienced real (vestibular condition) or simulated (visual condition) translational motion in the horizontal plane (Fig. 1A), and the monkey reported its perceived direction of motion (rightward vs leftward relative to straight forward) by making an eye movement toward one of two choice targets (Fig. 1B). Heading direction was defined by inertial motion alone (vestibular condition), optic flow alone (visual condition), or both vestibular and visual cues (combined condition). Each movement trajectory followed a Gaussian velocity profile, with a corresponding biphasic acceleration profile (peak acceleration: 1 m/s²; Fig. 1C).

Behavioral performance was quantified by constructing a psychometric function for each stimulus condition (Fig. 1D), plotting the percentage of “rightward” choices as a function of heading direction. Data were fit with cumulative Gaussian functions (smooth lines in Fig. 1D), and psychophysical threshold was defined as the SD of the cumulative Gaussian fit (corresponding to 84% correct performance). For the example session in Figure 1D, thresholds were 1.1° for the vestibular condition (red) and 1.8° for the visual condition (green), respectively. Note that the reliability of optic flow was approximately equated with the reliability of vestibular signals by reducing the coherence of the visual motion stimulus (see Materials and Methods). This balancing of cue reliabilities is important, as it maximizes the opportunity to observe improvement in performance when cues are combined (Ernst and Banks, 2002; Gu et al., 2008). For the example session, the monkey's heading threshold was substantially reduced in the combined condition (0.5°), as evidenced by the steeper slope of the psychometric function (Fig. 1D, blue).

Summary behavioral data for the two animals trained on the heading task are illustrated in Figure 1, E and F. For monkey U, psychophysical thresholds averaged 3.4 ± 0.16° and 2.9 ± 0.31° (mean ± SE, 31 sessions) in the vestibular and visual conditions, respectively (Fig. 1E, red and green). The average threshold in the combined condition (2.4 ± 0.14°; Fig. 1E, blue) was less than the vestibular threshold (p < 0.001, Wilcoxon matched pairs test), but not significantly different from the visual threshold (p = 0.41). The combined threshold was close to, but slightly greater than, the optimal prediction (2.07 ± 0.13°; p = 0.02; Fig. 1E, cyan). Note that these summary data for monkey U are based on 31 recording sessions. If we include data from an additional 38 training sessions (after performance had largely reached plateau), the combined threshold (2.6 ± 0.10°) becomes significantly less than both the visual (3.3 ± 0.17°, p < 0.001) and vestibular (3.6 ± 0.13°, p < 0.001) thresholds.

For monkey C, the heading threshold in the combined condition (1.2 ± 0.04°) was significantly less than both the vestibular (1.6 ± 0.08°) and visual (1.7 ± 0.07°) thresholds (p < 0.001, Wilcoxon matched pairs test; 64 sessions) and very close to the optimal prediction (1.1 ± 0.03°; p = 0.04; Fig. 1F). This reduction in psychophysical threshold during combined stimulation demonstrates that the animals integrate visual and vestibular cues to improve heading discrimination. Having established similar behavioral cue-integration effects as in our previous study in MSTd (Gu et al., 2008), we now proceed with the characterization of neural responses in area VIP.

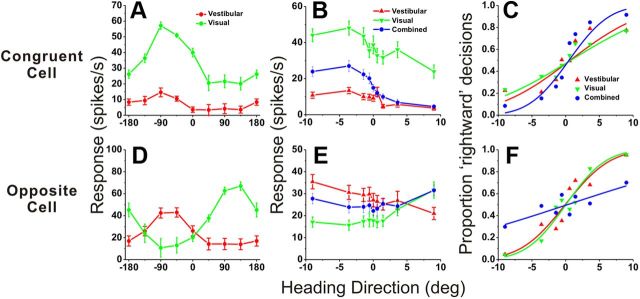

Neuronal sensitivity during heading discrimination

Figure 2 shows example responses from one “congruent” cell (Fig. 2A–C) and one “opposite” cell (Fig. 2D–F) from area VIP. To classify VIP cells as unisensory or multisensory, we measured heading tuning curves in the horizontal plane under both single-cue conditions while the animal maintained visual fixation. Figure 2, A and D, show global tuning curves for the example multisensory neurons. Both cells were significantly tuned under both single-cue conditions (p < 0.05, ANOVA). The neuron of Figure 2A preferred leftward headings (−90°) for both stimulus modalities, and was classified as a congruent cell. The neuron of Figure 2D preferred leftward headings (−90°) for vestibular stimuli (red) and rightward headings (90°) for visual stimuli (green); thus, it was classified as an opposite cell. Note that the heading variable plotted on the abscissa refers to the direction of real or simulated body motion, such that congruent cells should have visual and vestibular tuning curves with aligned peaks. During the heading discrimination task (Fig. 2B,E), only a narrow range of headings was tested and the resulting heading tuning curves were approximately monotonic. Using signal detection theory (see Materials and Methods; Gu et al., 2008), the neural firing rates in Figure 2, B and E, were converted into corresponding neurometric functions, as shown in Figure 2, C and F. The neuronal threshold for each stimulus condition was computed as the SD of the respective best-fitting cumulative Gaussian function.

Figure 2.

Examples of neuronal tuning and neurometric functions for one congruent cell (A–C) and one opposite cell (D–F). A, D, Heading tuning curves measured in the horizontal plane (red, vestibular; green, visual). The 0° heading denotes straight forward translation, whereas positive/negative numbers indicate rightward/leftward directions, respectively. B, E, Responses of the same neurons during the heading discrimination task, tested with a narrow range of heading angles placed symmetrically around straight ahead (0°). C, F, Neurometric functions computed by ROC analysis. Smooth curves show best-fitting cumulative Gaussian functions. Neuronal thresholds were as follows: congruent cell (A–C): 8.6, 10.6, and 4.7°; opposite cell, 5.2, 4.6, and 19.7°, for vestibular, visual, and combined data, respectively.

If single VIP neurons combine visual and vestibular cues near optimally, then neuronal sensitivity should be greater during combined visual/vestibular stimulation than when either single cue is presented alone. Indeed, for the example congruent cell (Fig. 2C), the neuronal threshold was smaller in the combined condition (4.7°) than in the visual (8.6°) and vestibular (10.6°) conditions. Thus, this congruent neuron could discriminate smaller variations in heading when both cues were presented together. In contrast, the heading tuning curve of the opposite cell during combined stimulation was flatter than tuning curves for the visual and vestibular conditions (Fig. 2E, blue vs green and red). As a result, the combined neurometric function for this neuron was substantially shallower than those for the single-cue conditions (Fig. 2F). Neuronal threshold increased from 5.2° (vestibular) and 4.6° (visual) to 19.7° during combined stimulation (Fig. 2F). Thus, this opposite cell carried less precise information about heading during cue combination.
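As a worked check on these example cells, the optimal-integration prediction can be computed from their single-cue thresholds (Figure 2 legend). The formula is symmetric in the two single-cue thresholds. The prediction therefore does not depend on which value is vestibular and which is visual. The helper name is hypothetical.

```python
import math


def predicted_threshold(sigma_1, sigma_2):
    """Optimal combined-cue threshold; symmetric in the two single-cue thresholds."""
    return math.sqrt(sigma_1**2 * sigma_2**2 / (sigma_1**2 + sigma_2**2))


# Single-cue thresholds and measured combined threshold (deg) for the Figure 2 example cells
examples = {"congruent": (8.6, 10.6, 4.7), "opposite": (5.2, 4.6, 19.7)}
for name, (s1, s2, s_comb) in examples.items():
    pred = predicted_threshold(s1, s2)
    print(f"{name}: predicted {pred:.2f} deg, combined/predicted = {s_comb / pred:.2f}")
# congruent: predicted 6.68 deg, combined/predicted = 0.70
# opposite: predicted 3.45 deg, combined/predicted = 5.72
```

The two cells fall on opposite sides of the prediction:

- The congruent cell's combined threshold falls below the prediction (ratio below 1).
- The opposite cell's combined threshold is more than five times the prediction.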

We obtained sufficient data from 95 VIP cells that showed significant global tuning during the vestibular or visual conditions (or both, see Materials and Methods). The first question to address at the population level is whether neuronal thresholds, like the monkey's behavioral thresholds, are significantly lower for the combined condition than the single-cue conditions, as would be expected from cue integration theory. Given the examples in Figure 2 and previous findings from area MSTd (Gu et al., 2008), we expected the results to depend on congruency of visual and vestibular heading tuning. Thus, we computed a quantitative index of congruency between visual and vestibular tuning functions obtained during the heading discrimination task (CI, see Materials and Methods). The CI is near +1 when visual and vestibular tuning functions have a consistent slope (Fig. 2B), near −1 when they have opposite slopes (Fig. 2E), and near zero when either tuning function is flat (or even-symmetric) over the range of headings tested.

The ratio of combined to predicted thresholds is plotted against CI for all VIP cells with significant tuning under both the vestibular and visual conditions (Fig. 3A). There is a significant negative correlation (r = −0.34, p = 0.01, Spearman rank correlation) such that neurons with large positive CIs have thresholds close to the optimal prediction (ratios near unity). In contrast, neurons with large negative CIs generally have combined/predicted threshold ratios well in excess of unity. For the purposes of this study, we defined neurons with CI > 0.4 as congruent cells (Fig. 3, cyan symbols) and neurons with CI < −0.4 as opposite cells (Fig. 3, magenta symbols). Among 56 VIP neurons with significant visual and vestibular heading tuning, 36 (64%) were classified as congruent cells, only 5 (9%) were classified as opposite cells, and the remaining 15 cells (27%) were classified as intermediate. The average combined/predicted threshold ratio for congruent cells was 1.10 ± 0.29 SE (geometric mean ± SE; Fig. 3B). Overall, combined thresholds for congruent cells were significantly lower than both visual and vestibular thresholds (p = 0.02 and p = 0.01, respectively, Wilcoxon matched pairs test) and were not significantly different from the optimal predictions (p = 0.20, Wilcoxon matched pairs test). Results were consistent for the two animals (Fig. 3A, circles versus triangles).
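The optimal prediction used for the ratios in Figure 3 presumably follows the standard maximum-likelihood cue-combination rule. With single-cue thresholds σ_vest and σ_vis, that rule predicts a combined threshold of sqrt(σ_vest²·σ_vis² / (σ_vest² + σ_vis²)), which reduces to σ/√2 when the two cues are equally reliable. The sketch below assumes that rule, applies the ±0.4 CI criteria stated above, and averages ratios geometrically. The function names are illustrative.

```python
import numpy as np


def predicted_threshold(thr_vest, thr_vis):
    """Combined threshold predicted by optimal (maximum-likelihood) cue integration."""
    return np.sqrt(thr_vest**2 * thr_vis**2 / (thr_vest**2 + thr_vis**2))


def classify_by_ci(ci, criterion=0.4):
    """Congruent / opposite / intermediate, using the +/-0.4 CI criteria in the text."""
    if ci > criterion:
        return "congruent"
    if ci < -criterion:
        return "opposite"
    return "intermediate"


def geometric_mean(values):
    """Geometric mean, suited to multiplicative quantities such as threshold ratios."""
    return float(np.exp(np.mean(np.log(values))))


# Thresholds (deg) of the two example cells in Figure 2: (vestibular, visual), combined.
congruent_ratio = 4.7 / predicted_threshold(10.6, 8.6)
opposite_ratio = 19.7 / predicted_threshold(5.2, 4.6)
print(round(congruent_ratio, 2), round(opposite_ratio, 2))
```

With the Figure 2 numbers, the combined/predicted ratio is roughly 0.70 for the congruent cell and roughly 5.7 for the opposite cell, in line with the population pattern in Figure 3. The geometric mean treats a ratio of 2 and a ratio of 0.5 as cancelling, which an arithmetic mean would not.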

Figure 3.

Improvement in neuronal sensitivity under cue combination depends on congruency of visual and vestibular tuning. A, Scatter plot of the ratio of the threshold measured in the combined condition relative to the prediction for optimal cue integration (Gu et al., 2008) versus the local CI (see Materials and Methods). Cyan symbols are used for congruent neurons with CI > 0.4 (n = 36); magenta symbols are used for opposite neurons with CI < −0.4 (n = 5); black symbols mark intermediate neurons for which −0.4 < CI < 0.4 (n = 15). Dashed horizontal line: threshold in the combined condition is equal to the prediction. Solid line shows type II linear regression. Triangles and circles denote data from monkeys C and U, respectively. B, Marginal distributions comparing this ratio for opposite (magenta), intermediate (black), and congruent (cyan) VIP neurons. Arrowheads illustrate geometric mean values.

In contrast, for the few opposite cells encountered in VIP, the average ratio of combined to predicted neuronal thresholds was 4.25 ± 1.21 SE (geometric mean ± SE; Fig. 3B). On average, combined thresholds for opposite cells were greater than single-cue thresholds, indicating that these neurons became less sensitive during cue combination. However, these differences did not reach statistical significance, likely because of the small number of opposite cells (p = 0.32, combined vs vestibular; p = 0.06, combined vs visual, Wilcoxon matched pairs test, N = 5).

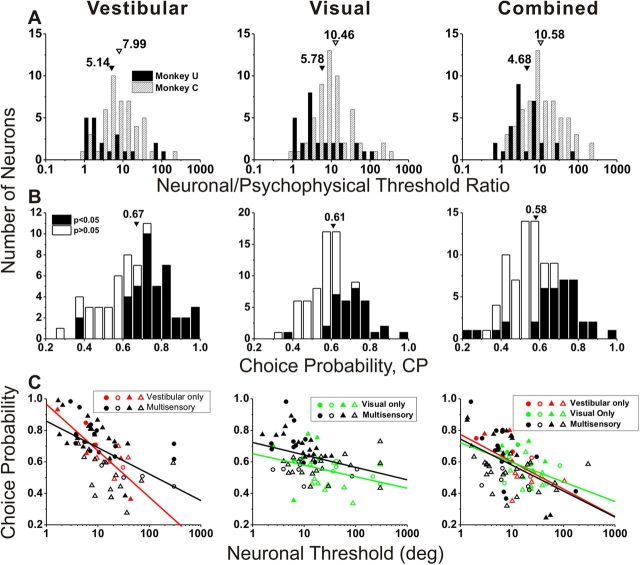

Because we recorded the spiking activity of VIP neurons during performance of the heading discrimination task, neuronal and behavioral thresholds are directly comparable. As illustrated in Figure 4A, the ratio of neuronal to psychophysical thresholds (N/P ratio) was generally greater than unity, averaging 5.1/8.0 (vestibular), 5.8/10.5 (visual), and 4.7/10.6 (combined) for monkeys U/C, respectively. The average N/P ratios for monkey U were significantly smaller than those for monkey C in all three stimulus conditions (vestibular: p = 0.04; visual: p = 0.02; combined: p = 0.003, Wilcoxon rank sum test). This difference appears to be driven mainly by the larger psychophysical thresholds of monkey U relative to monkey C (p < 0.001 for all stimulus conditions, Wilcoxon rank sum test), because average neuronal thresholds did not differ significantly between the two animals (vestibular: p = 0.98; visual: p = 0.06; combined: p = 0.32, Wilcoxon rank sum test). Overall, the majority of VIP neurons were less sensitive than the monkey, and only the most sensitive neurons rivaled behavioral performance. These findings are broadly comparable to those measured during heading discrimination in area MSTd (Gu et al., 2008). Note that, in both studies, the stimulus range in the heading discrimination task was not tailored to the tuning of individual neurons, so many neurons had large thresholds mainly because their tuning curves were flat over the range of headings tested during discrimination.

Figure 4.

Summary of neuronal threshold and choice probability (middle 1 s). A, Distributions of neuronal/psychophysical threshold (N/P) ratio for monkey U (filled bars) and monkey C (hatched bars). Arrowheads illustrate geometric mean N/P ratios. B, Distributions of CP, with filled bars denoting cells with CPs significantly different from chance (CP = 0.5). Arrowheads illustrate mean values. C, CP plotted against neuronal threshold (circles, monkey U; triangles, monkey C), shown separately for vestibular-only (red, n = 15), visual-only (green, n = 24), and multisensory (black, n = 56) cells. Filled symbols denote neurons for which the CP was significantly different from 0.5. Solid lines are linear regressions. Different columns show vestibular (left, n = 71), visual (middle, n = 80), and combined (right, n = 95) responses.

Choice probabilities

If VIP neurons contribute to heading discrimination, one may expect to find significant correlations between neuronal activity and behavior. To test this hypothesis, we computed CPs (Britten et al., 1996) to quantify the trial-to-trial correlations between neuronal responses and perceptual choices (see Materials and Methods; Gu et al., 2007, 2008). For the example congruent cell in Figure 2A–C, the choice probabilities were 0.78, 0.74, and 0.67 for the vestibular, visual, and combined conditions, respectively (all values significantly different from the chance level of 0.5; p < 0.001, permutation test). A choice probability significantly greater than chance (0.5) indicates that perceptual decisions of the monkey can be predicted from neural responses (independent of the sensory stimulus), demonstrating a correlation between neural responses and heading judgments. The congruent cell of Figure 2, A–C, generally increased its firing rate when the monkey made a choice in favor of the preferred heading of the neuron (which is leftward in this case). Choice probabilities for the opposite cell of Figure 2, D–F, were 0.71 (vestibular, p < 0.001), 0.63 (visual, p = 0.01), and 0.61 (combined, p = 0.12, permutation test).
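A choice probability is the area under an ROC curve that compares responses on trials ending in the neuron's preferred choice with responses on trials ending in its nonpreferred choice. The sketch below computes a "grand" CP in the general manner of Britten et al. (1996). Responses are z-scored separately at each heading to remove stimulus effects, pooled across headings, and compared across choices, and significance comes from shuffling choice labels. The number of permutations, the two-sided test, and the omission of any minimum-trial criterion are assumptions made here, not details taken from Materials and Methods.

```python
import numpy as np


def roc_area(a, b):
    """Area under the ROC curve, P(a > b) + 0.5 * P(a == b)."""
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return float(np.mean(a > b) + 0.5 * np.mean(a == b))


def zscore_within_heading(rates, headings):
    """Z-score rates separately at each heading so trials can be pooled."""
    rates = np.asarray(rates, dtype=float)
    headings = np.asarray(headings)
    z = np.zeros_like(rates)
    for h in np.unique(headings):
        idx = headings == h
        sd = rates[idx].std()
        if sd > 0:
            z[idx] = (rates[idx] - rates[idx].mean()) / sd
    return z


def grand_cp(rates, headings, chose_pref, n_perm=1000, seed=0):
    """Grand CP and a two-sided permutation p value (choice labels shuffled)."""
    z = zscore_within_heading(rates, headings)
    chose_pref = np.asarray(chose_pref, dtype=bool)
    cp = roc_area(z[chose_pref], z[~chose_pref])
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        perm = rng.permutation(chose_pref)
        null[i] = roc_area(z[perm], z[~perm])
    p_value = float(np.mean(np.abs(null - 0.5) >= abs(cp - 0.5)))
    return cp, p_value


# Simulated session: choices partly driven by the neuron's own trial-to-trial noise.
rng = np.random.default_rng(1)
headings = rng.choice([-4.0, -2.0, 0.0, 2.0, 4.0], size=400)
noise = rng.normal(size=400)
rates = 20 + 2 * headings + 3 * noise
chose_pref = (noise + rng.normal(size=400)) > 0
cp, p = grand_cp(rates, headings, chose_pref, n_perm=200)
print(f"CP = {cp:.2f}, p = {p:.3f}")
```

Because the z-scoring removes heading-related differences in firing, a CP above 0.5 here reflects covariation between response fluctuations and choices rather than the stimulus itself.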

Considering all VIP cells with significant global heading tuning in the horizontal plane (Fig. 4B), the average CPs in VIP were significantly larger than chance (p < 0.001, t test), with mean values of 0.67 ± 0.02 SE for the vestibular condition, 0.61 ± 0.01 SE for the visual condition, and 0.58 ± 0.01 SE for the combined condition. Fifty-nine percent of VIP neurons with significant global vestibular tuning (42/71) showed grand CPs that were significantly > 0.5 (Fig. 4B, filled bars). Similarly, 39% (31/80) of VIP neurons with significant global visual tuning showed significant grand CPs > 0.5, and 36% (34/95) showed significant grand CPs > 0.5 in the combined condition (Fig. 4B, filled bars). For area MSTd, the corresponding percentages are 42% (65/155, vestibular), 30% (53/177, visual), and 13% (24/182, combined). For each stimulus condition, the mean CP for VIP neurons is significantly greater than the corresponding mean CP for MSTd neurons tested in the identical task (p < 0.001 for each condition, t tests).

In line with previous studies in MSTd (Gu et al., 2007, 2008), CPs were significantly correlated with neuronal thresholds (vestibular: r = −0.58; visual: r = −0.42; combined: r = −0.51, p < 0.001; Spearman rank correlations), as illustrated in Figure 4C. Neurons with the largest CPs tended to be among the most sensitive neurons in our VIP sample. Moreover, there was no significant difference in the slope of the CP-threshold relationship between multisensory and unisensory neurons in Figure 4C (ANCOVA, interaction effect, p > 0.19).

To ensure that the large CPs observed in VIP were not an artifact of unintended stimulus variations, we measured platform motion with an accelerometer and computed CP from the integrated output of the accelerometer (Gu et al., 2007). Although the accelerometer was ∼10-fold more sensitive than the average VIP neuron, CPs computed from accelerometer responses were not significantly different from 0.5 (t test, p = 0.96) and were not significantly correlated with neuronal CPs measured in the same sessions (r = −0.16, p = 0.26, Spearman rank correlation).

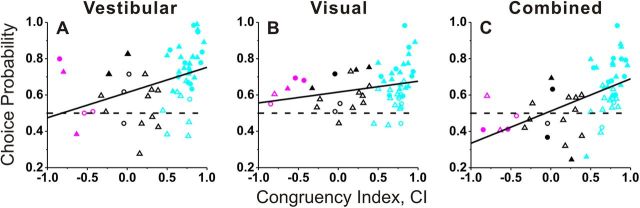

The relationship between CP and visual/vestibular congruency is illustrated in Figure 5, where congruency is quantified by computing CI from the “local” tuning curves obtained during discrimination. For each stimulus condition, the mean CP of congruent cells was significantly > 0.5 (vestibular: 0.73 ± 0.02 SE, p < 0.001; visual: 0.66 ± 0.02 SE, p < 0.001; combined: 0.65 ± 0.02 SE, p < 0.001, t tests), whereas the mean CP of opposite cells was not significantly different from 0.5 (vestibular: 0.58 ± 0.08 SE, p = 0.31; visual: 0.63 ± 0.03 SE, p = 0.06; combined: 0.46 ± 0.04 SE, p = 0.35, t tests). For the single-cue conditions, there was a modest positive correlation between CP and CI, significant for the vestibular condition and marginal for the visual condition (Fig. 5A, vestibular: r = 0.40, p = 0.002; Fig. 5B, visual: r = 0.26, p = 0.06, Spearman rank correlation), with congruent cells tending to have larger CPs than opposite cells. For the combined condition, CPs depended strongly on CI (Fig. 5C; r = 0.54, p < 0.001, Spearman rank correlation), and the average CP was significantly greater for congruent than for opposite cells (p = 0.007, Wilcoxon rank sum test). For the vestibular and visual conditions, the difference in CP between congruent and opposite cells did not reach statistical significance (p > 0.09, Wilcoxon rank sum test), although this comparison was limited by the small number of opposite cells encountered in VIP.

Figure 5.

CP as a function of congruency of tuning. CPs for vestibular (A), visual (B), and combined responses (C), are plotted as a function of the CI. Filled symbols denote neurons for which the CP was significantly different from 0.5. Cyan, magenta, and black symbols are used for congruent (n = 36), opposite (n = 5), and intermediate (n = 15) neurons, respectively. Circles and triangles show data from monkey U and monkey C. Solid lines show linear regressions.

Neural weighting of visual and vestibular inputs

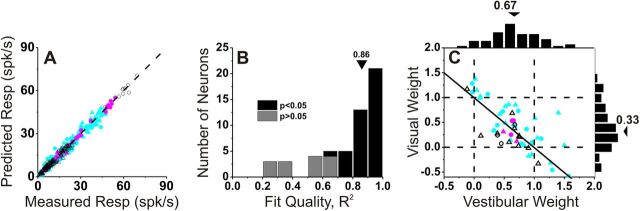

To explore further the relative contributions of vestibular and visual responses to the combined activity of multisensory VIP neurons, we fit the combined responses using a linear model with independent visual and vestibular weights (Gu et al., 2008). The weighted linear model provided good fits, and predicted responses were strongly correlated with responses measured in the combined condition (Fig. 6A, r = 0.99, p < 0.001). Across the population, the correlation coefficients between predicted and measured responses had a median value of 0.86 and were individually significant (p < 0.05) for 40/56 VIP neurons (71%; Fig. 6B, black bars). The remaining cells did not show significant correlation coefficients mainly because the combined responses were noisy or the tuning curve was flat over the range of headings tested (Fig. 6B, gray bars).
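The weighted linear model can be fit by ordinary least squares. The minimal sketch below assumes that the model has the form R_comb = w_vest·R_vest + w_vis·R_vis + C, applied to mean responses at each heading after spontaneous activity has been subtracted. It also reports the correlation between predicted and measured combined responses. The function and variable names are illustrative, and the data in the usage example are synthetic.

```python
import numpy as np
from scipy.stats import pearsonr


def fit_weighted_sum(r_vest, r_vis, r_comb):
    """Least-squares fit of r_comb ~ w_vest * r_vest + w_vis * r_vis + c (assumed form).

    Inputs are mean responses at each heading (spontaneous rate subtracted).
    Returns the two weights, the constant, and the Pearson r and p value between
    predicted and measured combined responses."""
    r_vest, r_vis, r_comb = (np.asarray(v, dtype=float) for v in (r_vest, r_vis, r_comb))
    X = np.column_stack([r_vest, r_vis, np.ones_like(r_vest)])
    coef, *_ = np.linalg.lstsq(X, r_comb, rcond=None)
    w_vest, w_vis, c = coef
    r, p = pearsonr(X @ coef, r_comb)
    return w_vest, w_vis, c, r, p


# Synthetic tuning curves at nine headings; combined response built with known weights.
r_vest = np.arange(1.0, 10.0)
r_vis = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 7.0, 9.0])
r_comb = 0.7 * r_vest + 0.3 * r_vis + 1.0
w_vest, w_vis, c, r, p = fit_weighted_sum(r_vest, r_vis, r_comb)
print(round(w_vest, 3), round(w_vis, 3), round(c, 3))
```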

Figure 6.

Combined condition responses are well approximated by linear weighted summation. A, Predicted responses from weighted linear summation are strongly correlated with measured responses under the combined condition (r = 0.99, p ≪ 0.001). Each symbol represents the response of one neuron at one heading angle (after spontaneous activity is subtracted). Cyan, magenta, and black symbols are used for congruent (n = 36), opposite (n = 5), and intermediate (n = 15) neurons, respectively. B, Distribution of correlation coefficients from the linear regression fits. Three cases with negative (but not significant) correlation coefficients are not shown. C, Visual and vestibular weights derived from the best fit of the linear weighted sum model for each neuron with a significant correlation coefficient (black bars in B). Arrowheads illustrate mean values. Solid line shows linear regression. Circles, monkey U; triangles, monkey C.

Vestibular and visual weights derived from linear model fits averaged 0.67 ± 0.05 (SEM) and 0.33 ± 0.08, respectively (Fig. 6C). There was also a robust and significant negative correlation between vestibular and visual weights in VIP (r = −0.70, p < 0.001, Spearman rank correlation), such that neurons with large visual weights tended to have small vestibular weights, and vice versa. Compared with previous results from area MSTd (Gu et al., 2008), the average vestibular weight in VIP is not significantly different from that in MSTd (MSTd mean: 0.57 ± 0.06 SE, p = 0.22, Wilcoxon rank sum test). In contrast, the average visual weight in VIP is significantly smaller than that seen for MSTd neurons (MSTd mean: 0.84 ± 0.06 SE, p < 0.001, Wilcoxon rank sum test). Thus, there is a substantial difference between areas in how multisensory neurons combine their visual and vestibular inputs: VIP cells place much less relative weight on their visual inputs than do MSTd neurons.

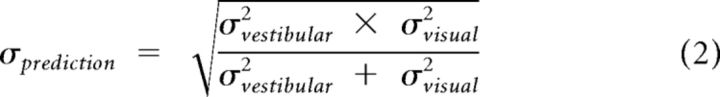

Global and local measures of congruency

As shown in Figure 5, there were only a handful of opposite cells when VIP neurons were classified based on the CI measure computed from local tuning curves (see Materials and Methods). In a previous study, in contrast, we reported that congruent and opposite neurons were encountered in approximately equal proportions using a global measure of congruency based on the difference in 3D heading preference between the visual and vestibular heading tuning curves (Chen et al., 2011a). There are two possible explanations for this difference. First, it is possible that there is a sampling bias in the group of neurons tested during heading discrimination in the present study. As illustrated in Figure 7A, which shows the distribution of the absolute difference in 3D direction preference (|Δ preferred direction|) between visual and vestibular heading tuning, there were nearly equal proportions of congruent and opposite cells based on this global congruency measure. Indeed, the distribution is significantly nonuniform (p = 0.028, uniformity test) and marginally meets the criteria to be classified as bimodal (puni = 0.06; pbi = 0.86, modality test). Thus, a sampling bias in the present study does not seem to account for the dearth of opposite cells, as assessed by the local congruency measure.
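The global measure compares preferred directions rather than local slopes. As a sketch, assume that each preferred direction is given as an (azimuth, elevation) pair in degrees. |Δ preferred direction| is then the angle between the two corresponding unit vectors, which runs from 0° (identical preferences) to 180° (opposite preferences). For horizontal-plane preferences (elevation 0°), it reduces to the wrapped difference in azimuth. The coordinate convention and function names are assumptions.

```python
import numpy as np


def unit_vector(azimuth_deg, elevation_deg):
    """3D unit vector for a direction given as azimuth/elevation in degrees (assumed convention)."""
    az, el = np.radians(azimuth_deg), np.radians(elevation_deg)
    return np.array([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])


def delta_preferred_direction(vest_pref, vis_pref):
    """Angle (deg, 0-180) between vestibular and visual preferred directions,
    each given as an (azimuth, elevation) pair."""
    u = unit_vector(*vest_pref)
    v = unit_vector(*vis_pref)
    cos_angle = np.clip(np.dot(u, v), -1.0, 1.0)  # guard against rounding outside [-1, 1]
    return float(np.degrees(np.arccos(cos_angle)))


print(delta_preferred_direction((0.0, 0.0), (180.0, 0.0)))   # opposite in the horizontal plane
print(delta_preferred_direction((350.0, 0.0), (10.0, 0.0)))  # difference wraps around 0 deg
```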

Figure 7.

Relationship between local and global measures of visual/vestibular congruency. A, Global congruency, defined as the difference between vestibular/visual preferred headings, |Δ Preferred Heading|, computed from horizontal plane tuning curves (as in Gu et al., 2006; Chen et al., 2011c). B, Scatter plot comparing the global measure (from A) and the local measure (CI; see Materials and Methods). Blue circles represent cells with unimodal heading tuning curves for both the vestibular and visual conditions. Orange triangles denote neurons with bimodal heading tuning in either the vestibular or visual condition. Data are only shown for multisensory neurons (n = 56). C, D, Global and local tuning curves for two cells (marked “1” and “2” in B) for which local and global congruency measures do not agree well. Cells 1 and 2 would be classified as opposite based on global congruency (|Δ Preferred Heading| = 124.9 and 158.4°), or congruent based on local congruency (CI = 0.74 and 0.82). Red, vestibular tuning curves; green, visual tuning curves.

A second possibility is that the global and local measures of congruency do not always provide consistent indicators of congruency of tuning across modalities. This possibility is explored in Figure 7B, which shows the relationship between global and local metrics of congruency. If these measures are consistent, then negative CI values should be associated with large differences in heading preference, |Δ preferred direction|, and thus there should be a negative correlation between local and global congruency measures with most data points residing in the upper left or lower right quadrants (Fig. 7B). Indeed, a significant negative correlation was observed (r = −0.52, p < 0.001, Spearman rank correlation), indicating that there was an overall tendency for global and local measures of congruency to agree. However, for some neurons that have values in the upper right quadrant (e.g., data points marked 1 and 2), the local congruency measure (CI) is widely discrepant from the global measure of congruency (|Δ preferred direction|). Specifically, data in this quadrant correspond to neurons that are congruent by the local measure but opposite by the global measure; hence, there are more congruent cells based on the local congruency metric. Notably, the pattern of results seen in Figure 7B for VIP differs from that found previously for MSTd neurons (Gu et al., 2008). For VIP, 34% (19/56) of neurons reside in the upper right quadrant of Figure 7B and only 5% (3/56) reside in the lower left quadrant. In contrast, for MSTd, 18 and 15% of neurons had congruency metrics that lie in the upper right and lower left quadrants, respectively. Thus, the specific tendency for some neurons to be locally congruent and globally incongruent is much more prominent in VIP.
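The quadrant bookkeeping behind these percentages can be made explicit. The sketch below places CI on the horizontal axis and |Δ preferred direction| on the vertical axis, as the text implies. It assumes quadrant boundaries at CI = 0 and |Δ preferred direction| = 90°. Those boundaries, and the function names, are assumptions for illustration; Figure 7B defines the actual quadrants.

```python
from collections import Counter


def quadrant(ci, delta_pref_deg, ci_boundary=0.0, delta_boundary=90.0):
    """Quadrant of a local (CI, x-axis) vs. global (|delta pref|, y-axis) plot;
    the boundaries are assumed values."""
    vertical = "upper" if delta_pref_deg > delta_boundary else "lower"
    horizontal = "right" if ci > ci_boundary else "left"
    return f"{vertical} {horizontal}"


def measures_agree(ci, delta_pref_deg):
    """Consistent cells are opposite by both measures (upper left) or
    congruent by both measures (lower right)."""
    return quadrant(ci, delta_pref_deg) in ("upper left", "lower right")


def quadrant_fractions(cis, deltas):
    """Fraction of neurons falling in each occupied quadrant."""
    counts = Counter(quadrant(c, d) for c, d in zip(cis, deltas))
    return {q: n / len(cis) for q, n in counts.items()}


# Cells 1 and 2 of Figure 7: locally congruent but globally opposite.
print(quadrant(0.74, 124.9), quadrant(0.82, 158.4))
```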

Figure 7, C and D, shows data from the example neurons marked 1 and 2 in Figure 7B. For the cell in Figure 7C, the global vestibular and visual heading tuning curves are unimodal (single peaked), but the heading preferences are nearly opposite. In contrast, the local heading tuning curves have similar slopes over the narrow range of headings around straight forward, such that the neuron is classified as congruent based on its local tuning. For the example neuron in Figure 7D, the vestibular tuning curve is bimodal (two peaks), but the dominant peak occurs at a heading approximately opposite to the preferred visual heading. Again, however, this neuron has local tuning curves with similar slopes. To examine whether mismatches between local and global congruency of tuning might be generally related to the presence of bimodal heading tuning curves (Fig. 7D), we classified each global heading tuning curve as unimodal or bimodal (see Materials and Methods). The color coding in Figure 7B indicates neurons for which both global tuning curves are unimodal (blue) or at least one tuning curve is bimodal (orange). Clearly, mismatches between local and global congruency measures are not restricted to neurons with bimodal tuning; in fact, cells with unimodal tuning appear to be more likely to lie in the upper right quadrant of Figure 7B.

Thus, the reason for mismatches between local and global tuning remains unclear (see Discussion). We have used the local congruency metric for all analyses here because we consider the tuning of the neurons around straight forward to be the most relevant measure of their contributions to our fine heading discrimination task.

We also considered the possibility that locally congruent but globally opposite neurons might have been “trained-in” during the extensive learning phase of this task, and that such neurons might therefore have different CPs than neurons with consistent local and global congruency metrics. We performed an ANCOVA with local and global congruency as continuous covariates, and an ordinal variable that coded whether local and global congruency metrics were consistent for each neuron. We found no significant effect of the local/global consistency variable on CPs (p > 0.10, ANCOVA).

Temporal evolution of population responses in area VIP

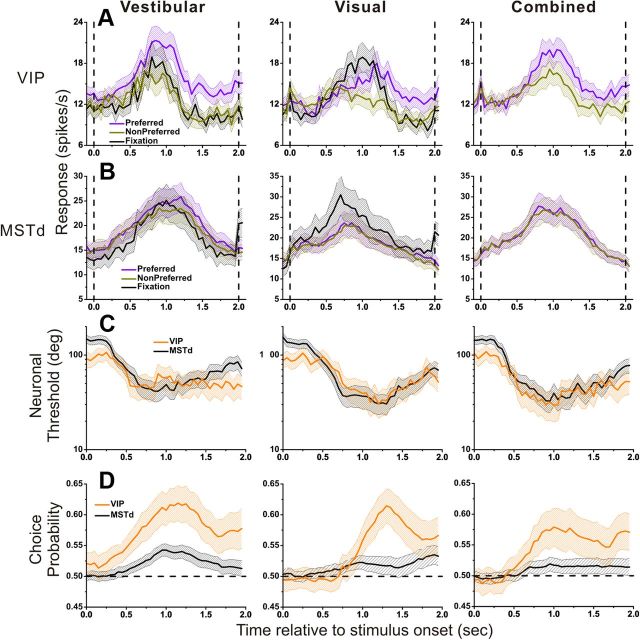

In line with previous studies (Gu et al., 2008), the analyses in Figures 2–7 were based on mean firing rates computed from the middle 1 s of the 2 s stimulus period. However, the motion stimuli were dynamic, with a Gaussian velocity and biphasic acceleration profile (Fig. 1C), and the resulting neuronal responses are temporally modulated (Chen et al., 2011a,c). When examined with 3D heading stimuli during passive fixation, vestibular responses in VIP reflected balanced contributions of velocity and acceleration, whereas visual responses were dominated by velocity (Chen et al., 2011a). Thus, it is important to investigate whether and how neuronal thresholds and choice probabilities depend on the time interval used for computing firing rates. To do this, we computed firing rates, neurometric functions, and CPs for multiple 400 ms time windows, slid across the stimulus period in 50 ms increments.
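The windowing scheme can be sketched as follows. This minimal example assumes spike times in milliseconds relative to stimulus onset and windows that must lie entirely within the analysis epoch. The function name and defaults are illustrative; the defaults reproduce the 400 ms windows and 50 ms steps described above. The rates from each window would then feed the same neurometric and CP computations used for the middle 1 s.

```python
import numpy as np


def sliding_window_rates(spike_times_ms, start_ms=0, stop_ms=2000,
                         window_ms=400, step_ms=50):
    """Firing rates (spikes/s) in overlapping windows for one trial.

    Windows of width window_ms start every step_ms and must fit entirely
    within [start_ms, stop_ms). Returns window centers (ms) and rates."""
    spikes = np.asarray(spike_times_ms, dtype=float)
    starts = np.arange(start_ms, stop_ms - window_ms + 1, step_ms)
    counts = np.array([
        np.count_nonzero((spikes >= s) & (spikes < s + window_ms)) for s in starts
    ])
    centers = starts + window_ms / 2
    return centers, counts / (window_ms / 1000.0)


# A regular 100 spikes/s train (one spike every 10 ms) over a 2 s stimulus.
centers, rates = sliding_window_rates(np.arange(0, 2000, 10))
print(len(centers), rates[:3])
```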

Figure 8A shows population responses to a straight forward heading stimulus, separated according to the perceptual reports of the animal during heading discrimination. In trials leading to preferred choices, responses (violet) were larger than those involving nonpreferred choices (dark yellow). Notably, a similar analysis of population responses in area MSTd revealed much more subtle differences in firing rate associated with choice (Fig. 8B), consistent with the weaker CPs found in MSTd (Gu et al., 2007, 2008).

Figure 8.

Time course of population responses, neuronal threshold, and CP. A, B, Average forward motion (0° heading) population responses from VIP (A) and MSTd (B). Data from discrimination trials in which the animal made choices in favor of the preferred (violet) or nonpreferred (dark yellow) direction are compared with data obtained during passive fixation (black). Vertical lines indicate the onset and offset of the stimulus. C, D, Average neuronal thresholds (C) and CP (D) for VIP (orange) and MSTd (black) cells. Left, vestibular (VIP: n = 71; MSTd: n = 164); middle, visual (VIP: n = 80; MSTd: n = 178); right, combined (VIP: n = 95; MSTd: n = 182). Dotted horizontal lines in D indicate chance level (CP = 0.5). All responses are computed from a 400 ms sliding window and a step size of 50 ms. Shaded areas around each mean illustrate 95% confidence intervals.

To further investigate differences between VIP and MSTd responses as a function of time, we also examined the temporal evolution of neuronal thresholds (Fig. 8C) and CPs (Fig. 8D). For the vestibular condition, the temporal profile of neuronal thresholds in VIP largely overlapped with that for MSTd during most of the trial epoch, but the two diverged somewhat before the end of the stimulus (t > 1.5 s). For MSTd, the average neuronal threshold rose back toward prestimulus levels, whereas the average neuronal threshold in VIP remained close to the minimum level achieved earlier. During the last 500 ms of the stimulus period, the average neuronal threshold in MSTd was significantly greater than that for VIP (p < 0.001, Wilcoxon rank sum test). For the visual and combined conditions, VIP and MSTd showed very similar temporal profiles of neuronal thresholds. Overall, average neuronal thresholds in VIP and MSTd were quite comparable.

In contrast, the strength and time course of CPs were markedly different between VIP (orange) and MSTd (Fig. 8D, black). Over the entire time period of the trial, CPs in VIP were significantly greater than those in MSTd for each stimulus condition (p < 0.001, permutation test). For VIP, the average CP became significantly larger than 0.5 at 0.38 s (95% confidence interval: 0.37–0.39 s, bootstrap analysis) for the vestibular condition, much earlier than for the visual condition (t = 0.88 s, 95% CI: 0.87–0.89 s). The result for the combined condition was intermediate (t = 0.66 s, 95% CI: 0.65–0.68 s). The corresponding times for area MSTd were 0.60 s (95% CI: 0.58–0.61 s) for vestibular, 0.74 s (95% CI: 0.72–0.76 s) for visual, and 0.85 s (95% CI: 0.83–0.87 s) for combined. Thus, choice-related activity became significant substantially earlier in VIP than in MSTd for the vestibular and combined conditions, but later in VIP for the visual condition.

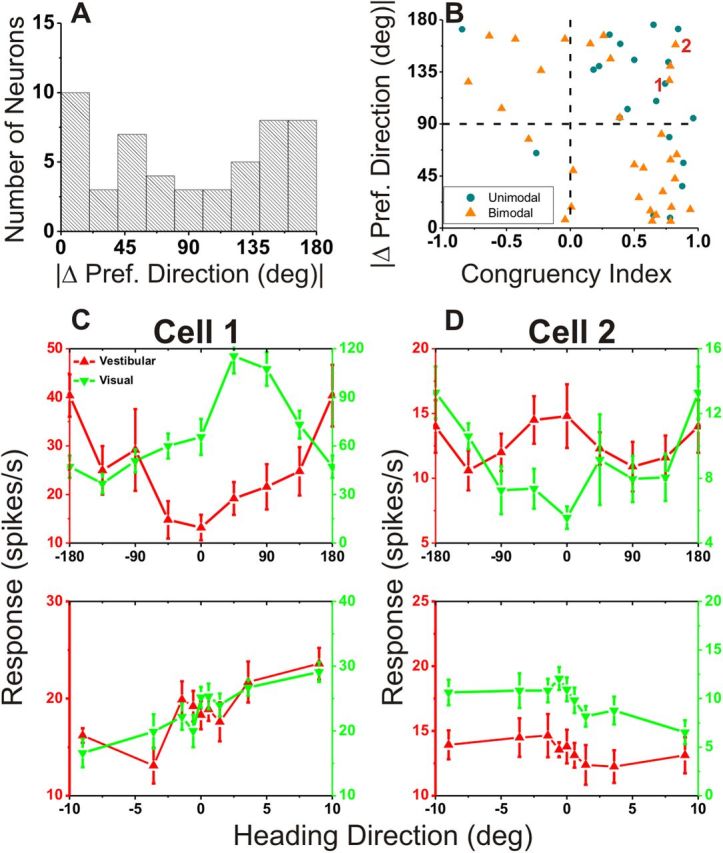

Noise correlations and their dependence on signal correlation in VIP

The remarkably strong CPs in VIP, as compared with MSTd, prompted us to further investigate differences in correlated noise between the two areas. Theoretical work indicates that CPs depend strongly on correlated noise among neurons in a population (i.e., “noise correlation”) (Shadlen et al., 1996; Cohen and Newsome, 2009; Nienborg and Cumming, 2010; Haefner et al., 2012). Without correlated noise, sizeable CPs are possible only with implausibly small neuronal pool sizes. We therefore examined whether the difference in CP between VIP and MSTd is accompanied by differences in correlation structure across areas.
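The claim about pool size can be illustrated with a deliberately simple pooling model, in the spirit of Shadlen et al. (1996). The model makes these assumptions:

- N neurons with unit-variance Gaussian noise;
- a uniform pairwise noise correlation c;
- equal readout weights;
- a choice made from the sign of the summed response at an ambiguous (0°) heading.

Under these assumptions, the correlation between one neuron and the pooled decision variable is ρ = sqrt((1 + (N − 1)c)/N). For a jointly Gaussian response and decision variable thresholded at its mean, CP = 1/2 + (2/π)·arcsin(ρ/√2). This is an illustrative toy model, not the analysis used in this study.

```python
import math


def pooled_cp(n_neurons, noise_corr):
    """CP of one neuron in a toy pool (assumptions: unit-variance Gaussian noise,
    uniform pairwise noise correlation, equal readout weights, choice = sign of
    the summed response at an ambiguous heading)."""
    # Correlation between one neuron's response and the pooled sum.
    rho = math.sqrt((1 + (n_neurons - 1) * noise_corr) / n_neurons)
    # CP for a jointly Gaussian response/decision pair with correlation rho,
    # when the choice is made by thresholding the decision variable at its mean.
    return 0.5 + (2 / math.pi) * math.asin(rho / math.sqrt(2))


for c in (0.0, 0.04, 0.14):
    print(c, [round(pooled_cp(n, c), 3) for n in (10, 100, 1000)])
```

In this toy model, with c = 0 a single neuron's CP falls to about 0.55 for a pool of 100 neurons and keeps approaching 0.5 as the pool grows. With c > 0, it instead levels off near 1/2 + (2/π)·arcsin(√(c/2)): roughly 0.59 for c = 0.04 and 0.67 for c = 0.14, the average noise correlations quoted for MSTd and VIP in the Abstract. The model ignores the dependence of noise correlation on signal correlation and unequal readout weights. It therefore illustrates the direction of the effect rather than predicting measured CPs.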

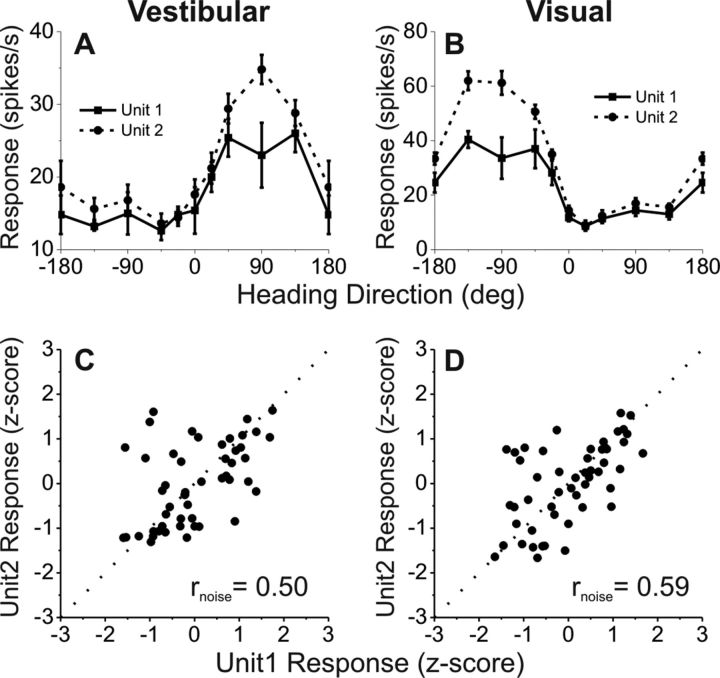

Noise correlations were measured by analyzing VIP data recorded during passive fixation trials in which visual/vestibular stimuli were presented to measure heading tuning (Chen et al., 2011a). Recordings for which two single neurons could be satisfactorily discriminated off-line were used in this analysis. Tuning curves of a simultaneously recorded pair of VIP cells are shown in Figure 9, A and B. In this case, both members of the pair are opposite cells. The similarity of heading tuning between each pair of neurons was quantified as the Pearson correlation coefficient of mean responses across all stimulus directions (signal correlation, rsignal). For this example pair of neurons, rsignal = 0.91 and 0.97 for the vestibular and visual conditions, respectively.

Figure 9.

Measuring noise correlation (rnoise) between pairs of single neurons recorded simultaneously from the same electrode. A, B, Examples of vestibular (A) and visual (B) heading tuning curves for a pair of simultaneously recorded VIP neurons (solid and dashed black curves). Responses are plotted as a function of heading direction in the horizontal plane, with 0° indicating a straight forward trajectory. Error bars indicate SEM. C, D, Normalized responses from the same two neurons were positively correlated across trials during vestibular (C) and visual (D) stimulation, with noise correlation values of rnoise = 0.50 and 0.59, respectively. Dotted lines mark the unity-slope diagonal.

Figure 9, C and D, illustrates computation of the noise correlation, rnoise. Responses of the two VIP cells are shown as scatter plots, where each datum represents the spike counts of the two neurons from a single trial. Because heading direction was varied across trials, spike counts from individual trials have been z-scored to remove the stimulus effect and to allow pooling of data across headings (see Materials and Methods). Noise correlation, rnoise, is then computed as the Pearson correlation coefficient of the normalized trial-by-trial spike counts, and reflects the degree of correlated neuronal variability across trials (see Materials and Methods). For this example pair of cells, there was a significant positive correlation, such that when one neuron fired more spikes, the other neuron did as well (vestibular condition: rnoise = 0.50, p < 0.001, Fig. 9C; visual condition: rnoise = 0.59, p < 0.001, Fig. 9D).
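The two correlation measures can be sketched as follows. The sketch assumes responses from two simultaneously recorded neurons on the same trials and a heading label for each trial. Signal correlation uses each neuron's mean response at each heading. Noise correlation z-scores each neuron's responses within heading before pooling, as described above. Any additional steps in Materials and Methods, such as excluding outlier trials, are omitted. The function names are illustrative and the data are synthetic.

```python
import numpy as np


def zscore_within_heading(counts, headings):
    """Z-score responses separately at each heading to remove the stimulus effect."""
    counts = np.asarray(counts, dtype=float)
    headings = np.asarray(headings)
    z = np.zeros_like(counts)
    for h in np.unique(headings):
        idx = headings == h
        sd = counts[idx].std()
        if sd > 0:
            z[idx] = (counts[idx] - counts[idx].mean()) / sd
    return z


def signal_correlation(counts_a, counts_b, headings):
    """Pearson r between the two neurons' mean responses across headings."""
    counts_a, counts_b = np.asarray(counts_a, float), np.asarray(counts_b, float)
    headings = np.asarray(headings)
    dirs = np.unique(headings)
    mean_a = [counts_a[headings == h].mean() for h in dirs]
    mean_b = [counts_b[headings == h].mean() for h in dirs]
    return float(np.corrcoef(mean_a, mean_b)[0, 1])


def noise_correlation(counts_a, counts_b, headings):
    """Pearson r between z-scored trial-by-trial responses pooled across headings."""
    za = zscore_within_heading(counts_a, headings)
    zb = zscore_within_heading(counts_b, headings)
    return float(np.corrcoef(za, zb)[0, 1])


# Synthetic pair: similar tuning plus a noise source shared by both cells.
rng = np.random.default_rng(1)
headings = np.repeat(np.arange(0, 360, 45), 50)  # 8 headings x 50 trials
theta = np.radians(headings)
shared = rng.normal(size=headings.size)
resp_a = 20 + 10 * np.cos(theta) + 3 * (shared + rng.normal(size=headings.size))
resp_b = 20 + 8 * np.cos(theta - np.radians(20)) + 3 * (shared + rng.normal(size=headings.size))
r_signal = signal_correlation(resp_a, resp_b, headings)
r_noise = noise_correlation(resp_a, resp_b, headings)
print(round(r_signal, 2), round(r_noise, 2))
```

In the synthetic pair, shared and private noise have equal variance, so the expected noise correlation is 0.5. Z-scoring within heading lets that shared variability be measured without contamination from the cells' common tuning.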

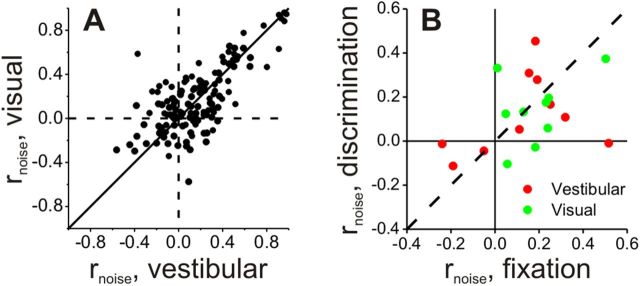

Noise correlations were measured for 64 pairs of VIP neurons from animals C and U (who were trained to perform the heading discrimination task) and 75 pairs from three additional animals who were only trained to perform passive fixation trials (Chen et al., 2011a). We first examined whether noise correlations in VIP depended on stimulus modality by comparing rnoise for all 139 pairs tested under both the visual and vestibular conditions (Fig. 10A). Noise correlations were significantly correlated across stimulus conditions (r = 0.72, p ≪ 0.001, Spearman rank correlation), and the mean values were not significantly different (vestibular: 0.16 ± 0.02 SE, visual: 0.18 ± 0.02 SE, p = 0.46, Wilcoxon matched pairs test). Ten pairs of VIP neurons were recorded during both the passive fixation and heading discrimination tasks. As found previously for area MSTd (Gu et al., 2011), noise correlations did not depend significantly on whether trained monkeys performed a passive fixation task or the heading discrimination task (p > 0.36, Wilcoxon matched pairs test) (Fig. 10B).

Figure 10.

Properties of noise correlations for the VIP population. A, Comparison of noise correlations measured during visual and vestibular stimulation (n = 139). B, Comparison of noise correlations measured during the passive fixation-only task and the active heading discrimination task (n = 10), shown separately for visual and vestibular responses.

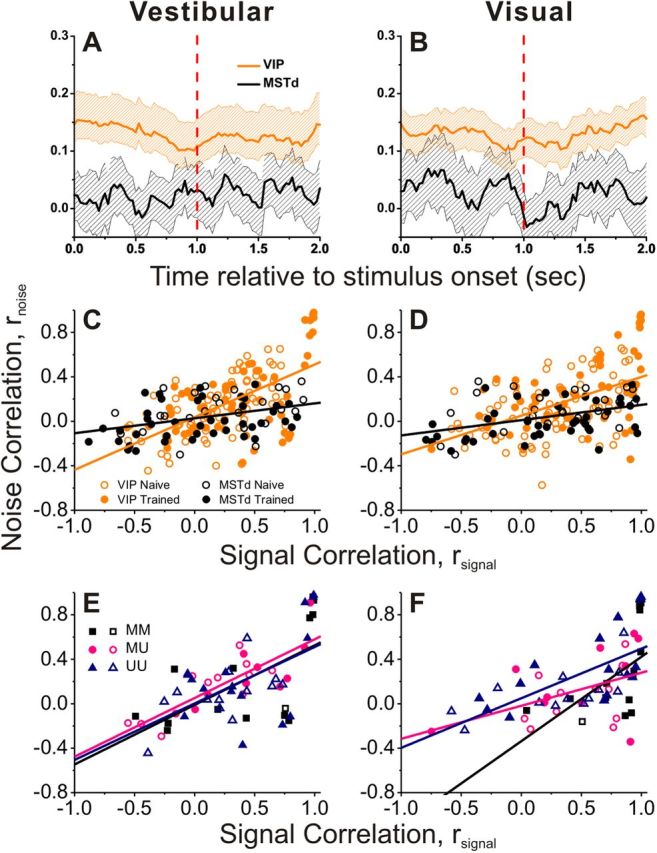

Summary data are shown in Figure 11. We first illustrate the time course of the average noise correlation for VIP (orange) and MSTd (black), shown separately for the vestibular (Fig. 11A) and visual (Fig. 11B) conditions. Noise correlations in VIP were significantly greater than those in MSTd throughout the trial epoch (p < 0.001, permutation test). Considering spike counts computed during the middle 1 s of the stimulus period, the relationship between rnoise and rsignal is shown in Figure 11, C (vestibular) and D (visual). In line with previous studies (Zohary et al., 1994; Kohn and Smith, 2005; Cohen and Newsome, 2008; Gutnisky and Dragoi, 2008; Smith and Kohn, 2008; Cohen and Maunsell, 2009; Huang and Lisberger, 2009), rnoise was positively correlated with rsignal.

Figure 11.

Comparison of the interneuronal correlations between VIP and MSTd. A, B, Average (±95% CI) time course of noise correlation (rnoise) in area VIP (orange) and MSTd (black) during vestibular (A) and visual (B) stimulation (VIP: n = 139; MSTd: n = 67). C, D, Relationship between rnoise and signal correlation (rsignal) in area VIP (orange) and MSTd (black) during vestibular (C) and visual (D) stimulation. Data are separated into those recorded from naive (open symbols; VIP: n = 75; MSTd: n = 25) and trained (filled symbols; VIP: n = 64; MSTd: n = 42) animals. Lines show linear regression fits to the data from each area (pooled across naive and trained animals). E, F, VIP pairs in which each neuron was significantly tuned to vestibular or visual stimuli, separated by pair type: MM (Multisensory + Multisensory), both cells were multisensory (n = 14); UM (Unisensory + Multisensory), one cell was multisensory and the other unisensory (n = 21); and UU (Unisensory + Unisensory), both cells were unisensory (n = 29). Lines represent regression fits (ANCOVA). Only data recorded from a single electrode are shown (MSTd data from Gu et al., 2011).

To compare this relationship across areas, we used an ANCOVA, with rsignal for each stimulus condition (visual or vestibular) as a continuous variable and brain area as a categorical factor. In VIP, there was a significant positive correlation between rnoise and rsignal for both the vestibular and visual conditions (p < 0.001, ANCOVA, data pooled across naive and trained animals), reflecting the fact that noise correlations tended to be positive for pairs of neurons with similar tuning (rsignal > 0) and near zero or negative for pairs with opposite tuning (rsignal < 0). For both the vestibular and visual conditions, there was a significant difference between VIP and MSTd in the slope of the relationship between rnoise and rsignal (Vestibular: p < 0.001; Visual: p = 0.004, ANCOVA interaction effect). Specifically, the slope of the rnoise versus rsignal relationship was 2–3 times steeper for VIP than MSTd. This substantial difference in the structure of interneuronal correlations between areas may account for the large differences in CP described above.
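The slope comparison amounts to testing an area × rsignal interaction, the homogeneity-of-slopes test in an ANCOVA. The minimal sketch below uses ordinary least squares with a two-level area factor and ignores the training-history factor considered next. It compares a reduced model (separate intercepts, common slope) with a full model that adds the interaction term, using a nested-model F test. The function names are illustrative, and the data in the usage example are synthetic.

```python
import numpy as np
from scipy.stats import f as f_dist


def _ols_rss(X, y):
    """Least-squares coefficients and residual sum of squares."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return beta, float(resid @ resid)


def slope_difference_test(r_signal, r_noise, is_vip):
    """Nested-model F test for an area x r_signal interaction.

    Reduced model: r_noise ~ 1 + area + r_signal (common slope).
    Full model:    adds area * r_signal (separate slopes).
    Returns (slope_mstd, slope_vip, F, p)."""
    x = np.asarray(r_signal, dtype=float)
    y = np.asarray(r_noise, dtype=float)
    g = np.asarray(is_vip, dtype=float)  # 0 = MSTd, 1 = VIP
    ones = np.ones_like(x)
    X_reduced = np.column_stack([ones, g, x])
    X_full = np.column_stack([ones, g, x, g * x])
    _, rss_reduced = _ols_rss(X_reduced, y)
    beta, rss_full = _ols_rss(X_full, y)
    df_num, df_den = 1, y.size - X_full.shape[1]
    F = ((rss_reduced - rss_full) / df_num) / (rss_full / df_den)
    p = float(f_dist.sf(F, df_num, df_den))
    return beta[2], beta[2] + beta[3], F, p


# Synthetic pairs: VIP slope three times the MSTd slope.
rng = np.random.default_rng(2)
r_signal = rng.uniform(-1, 1, size=200)
is_vip = np.repeat([False, True], 100)
r_noise = 0.05 + np.where(is_vip, 0.3, 0.1) * r_signal + rng.normal(0, 0.05, size=200)
slope_mstd, slope_vip, F, p = slope_difference_test(r_signal, r_noise, is_vip)
print(round(slope_mstd, 2), round(slope_vip, 2), p)
```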

The above analysis pooled together data from trained and naive animals. When the data were separated by training history, we found no significant difference in either the slope (Vestibular: p = 0.98; Visual: p = 0.57, ANCOVA interaction effect) or the intercept (Vestibular: p = 0.24; Visual: p = 0.08, ANCOVA) of the linear model for VIP. For MSTd, there was also no significant difference in slope (Vestibular: p = 0.92; Visual: p = 0.90, ANCOVA interaction effect), but there was a significant difference in the intercept (Vestibular: p = 0.02; Visual: p = 0.05, ANCOVA), with a lower average noise correlation following training, as shown previously (Gu et al., 2011).

Finally, we considered whether noise and signal correlations depend on whether members of a pair of neurons are multisensory or unisensory. Figure 11, E and F, show the relationship between rnoise and rsignal for VIP cells, color-coded according to whether both cells in the pair were multisensory (black), both unisensory (blue), or a combination of multisensory and unisensory (pink). A significant positive correlation between rnoise and rsignal was observed for all three groups (p < 0.001, ANCOVA), and there were no significant differences in either slope (Vestibular: p = 0.99; Visual: p = 0.31, ANCOVA interaction effect) or intercept (Vestibular: p = 0.75; Visual: p = 0.16, ANCOVA effect) between groups. Thus, the relationship between noise and signal correlations appears to be independent of the multisensory tuning properties of the neurons in a pair.

Discussion

We examined neural correlates of heading perception in area VIP during a multimodal heading discrimination task. Our findings can be summarized in four key points. First, many VIP neurons show increased sensitivity to small variations in heading when visual and vestibular cues are combined, suggesting that VIP (like MSTd; Gu et al., 2008; Fetsch et al., 2012) may contribute to cue integration for self-motion perception. Second, although neural sensitivity was very similar overall between VIP and MSTd, VIP neurons show much greater correlations with perceptual decisions (choice probabilities) than MSTd neurons. Third, the greater choice probabilities in VIP are associated with substantially larger interneuronal noise correlations among VIP neurons, which may account at least partially for the greater choice probabilities seen in VIP. Fourth, many more VIP neurons have locally congruent heading tuning than locally opposite tuning, which is not expected from the overall pattern of global heading tuning in the visual and vestibular conditions. This suggests that the heading tuning of VIP neurons may be locally remodeled as a specialization for heading perception.

Choice probabilities and correlated noise

Neurons in area VIP exhibit substantially greater CPs than MSTd neurons tested under identical conditions. In fact, the average CP values that we found for VIP neurons (0.67 ± 0.02 for the vestibular condition and 0.61 ± 0.01 for the visual condition) are among the largest CPs ever reported. In comparison, Newsome and colleagues reported mean values of 0.56 for the middle temporal (MT) area (Britten et al., 1996) and 0.59 for area MSTd (Celebrini and Newsome, 1994) in a coarse visual motion discrimination task. Other studies of MT neurons found mean CPs of 0.55 for fine direction discrimination (Purushothaman and Bradley, 2005), 0.52 for speed discrimination (Liu and Newsome, 2005), and 0.59 for disparity discrimination (Uka and DeAngelis, 2004). Related to heading perception, previous studies of area MSTd have found mean CPs of 0.55 for fine vestibular heading discrimination (Gu et al., 2007, 2008) and 0.51 for optic flow discrimination (Heuer and Britten, 2004). To our knowledge, only the study of Dodd et al. (2001), involving perception of bistable structure-from-motion displays, found an average CP (0.67) comparable to what we have observed in VIP.
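CP is conventionally quantified as the area under an ROC curve comparing a neuron's responses on trials sorted by the animal's choice (Britten et al., 1996), so 0.5 means no relationship with choice. The sketch below assumes that choice 1 denotes the choice associated with the neuron's preferred direction and that, for a pooled ("grand") CP, responses are z-scored within each heading before pooling so that stimulus-driven differences do not masquerade as choice effects. Both function names are hypothetical, and this is not necessarily the exact procedure used to obtain the values quoted above.

```python
import numpy as np


def choice_probability(resp_pref_choice, resp_null_choice):
    """ROC area between two response distributions (ties count as 0.5).

    Equals the probability that a response drawn from preferred-choice trials
    exceeds one drawn from null-choice trials (Mann-Whitney form of ROC area).
    """
    a = np.asarray(resp_pref_choice, dtype=float)[:, None]
    b = np.asarray(resp_null_choice, dtype=float)[None, :]
    return float(np.mean(a > b) + 0.5 * np.mean(a == b))


def grand_choice_probability(resp, choice, headings):
    """Hypothetical 'grand' CP: z-score responses within each heading, pool, then
    compare trials with choice == 1 (assumed preferred) against choice == 0."""
    resp = np.asarray(resp, dtype=float)
    choice = np.asarray(choice)
    headings = np.asarray(headings)
    z = np.zeros_like(resp)
    for h in np.unique(headings):
        idx = headings == h
        sd = resp[idx].std()
        if sd > 0:  # headings with no response variance contribute zeros
            z[idx] = (resp[idx] - resp[idx].mean()) / sd
    return choice_probability(z[choice == 1], z[choice == 0])
```

For example, `choice_probability([2, 3, 4], [1, 1, 1])` is 1.0 (complete separation), whereas identical distributions give 0.5.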

How should we interpret the substantially larger CPs seen in VIP relative to MSTd in our task (Fig. 8D)? There has been considerable debate in the literature regarding the interpretation of CPs (for reviews, see Nienborg and Cumming, 2010; Nienborg et al., 2012). On one hand, some studies have provided evidence that the pattern of CPs across a population of neurons may reveal how neurons are weighted in the decoding (or "read-out") of sensory signals that drives perceptual decisions (Uka and DeAngelis, 2004; Purushothaman and Bradley, 2005; Gu et al., 2008; Law and Gold, 2009). On the other hand, experimental and modeling studies have suggested that CPs may be determined mainly by the pattern of correlated noise among neurons (Shadlen et al., 1996; Cohen and Maunsell, 2009). A recent theoretical study provides an elegant resolution to this issue, showing mathematically that CP depends on both the decoding weights and the noise covariance across a population of neurons that contributes to a behavior of interest (Haefner et al., 2012). The relative contributions of decoding weights and noise correlations to CPs will depend on the size of the population and on the structure of the interneuronal correlations.
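The dependence of CP on both decoding weights and noise covariance can be made concrete under simplifying assumptions: Gaussian responses r with covariance C, a linear decision variable d = β·r, and a choice given by the sign of d relative to its mean (zero-signal trials). Under these assumptions the correlation between neuron k and the decision variable is ρ_k = (Cβ)_k / sqrt(C_kk · βᵀCβ), and the ROC area is CP_k = 1/2 + (2/π)·arctan(ρ_k / sqrt(2 − ρ_k²)), equivalently 1/2 + (2/π)·arcsin(ρ_k/√2). This closed form is consistent with the result of Haefner et al. (2012) but is written here as a sketch; the function name `predicted_cp` is hypothetical.

```python
import numpy as np


def predicted_cp(cov, weights):
    """CP of each neuron for a linear decoder with Gaussian noise (sketch).

    Assumes d = weights . r, choice = sign(d - mean(d)), zero-signal trials.
    cov: noise covariance matrix C; weights: decoding weights beta.
    """
    cov = np.asarray(cov, dtype=float)
    w = np.asarray(weights, dtype=float)
    cw = cov @ w
    # Correlation between each neuron's response and the decision variable.
    rho = cw / np.sqrt(np.diag(cov) * (w @ cw))
    rho = np.clip(rho, -1.0, 1.0)  # guard against rounding just outside [-1, 1]
    return 0.5 + (2.0 / np.pi) * np.arctan(rho / np.sqrt(2.0 - rho**2))
```

With two neurons, unit variances, a noise correlation of 0.5, and weights (1, 0), the second neuron receives zero decoding weight yet has a predicted CP of about 0.73, purely because its noise is shared with the neuron that is read out. This is the sense in which differences in correlated noise can produce differences in CP without any difference in read-out.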

In this light, differences in CP between areas and tasks are generally difficult to interpret in the literature because most previous studies of CPs have not also measured noise correlations. Here, we show that area VIP exhibits both substantially greater CPs than MSTd and also substantially greater noise correlations. This suggests that at least a portion of the difference in CP between VIP and MSTd can be accounted for by correlated noise. It is not possible to conclude from the present results that VIP makes greater contributions to heading perception than MSTd, and additional studies using causal manipulations may be necessary to address this question more directly.

Recently, for vestibular heading perception, we have uncovered similar relationships between CPs and noise correlations for subcortical neurons. Specifically, cells in the vestibular and cerebellar nuclei show substantially greater CPs than MSTd neurons in a vestibular heading discrimination task, and this difference can be largely accounted for by differences in correlated noise (Liu et al., 2013). That study also demonstrated that it is the slope of the relationship between rnoise and rsignal, not the overall mean value of rnoise, that mainly determines the magnitude of CPs. In this study, we also found that VIP neurons show a steeper relationship between rnoise and rsignal than MSTd neurons (Fig. 11C,D). However, because fewer VIP pairs than MSTd pairs have negative values of rsignal, this increase in slope also translates into an increase in the mean value of rnoise in VIP.
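The arithmetic behind the last point can be made explicit. If rnoise ≈ a + b·rsignal across pairs, then averaging over pairs gives mean(rnoise) ≈ a + b·mean(rsignal). A steeper slope b therefore raises the mean noise correlation only when the pairs are skewed toward positive rsignal; for a balanced distribution of rsignal the mean is unchanged. The sketch below uses hypothetical intercepts, slopes, and rsignal values chosen for illustration, not data from this study.

```python
import numpy as np


def mean_noise_correlation(intercept, slope, r_signal):
    """Mean r_noise implied by a linear model r_noise = intercept + slope * r_signal,
    averaged over the given (hypothetical) population of pairs."""
    return intercept + slope * np.mean(r_signal)


# Hypothetical populations of pairs (illustration only).
balanced = [-0.6, -0.2, 0.2, 0.6]  # as many opposite-tuned as similarly tuned pairs
skewed = [-0.2, 0.2, 0.4, 0.6]     # few pairs with negative r_signal

for slope in (0.1, 0.3):
    print(slope,
          mean_noise_correlation(0.05, slope, balanced),
          mean_noise_correlation(0.05, slope, skewed))
```

With the balanced population, both slopes give a mean rnoise of 0.05; with the skewed population, tripling the slope raises the mean from 0.075 to 0.125.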

Visual-vestibular congruency

Our findings reveal an interesting difference in the congruency of heading tuning between areas VIP and MSTd. When congruency of global heading tuning is assessed, both areas show approximately equal proportions of congruent and opposite cells (Gu et al., 2006, 2008; Chen et al., 2011a). However, when we assess the congruency of local heading tuning (measured over the narrow heading range used in the discrimination task), we find that VIP shows a much lower proportion of opposite cells than MSTd. This is because some VIP neurons have heading tuning that is globally opposite yet locally congruent (Fig. 7B–D).
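To illustrate how one cell can be classified differently by global and local criteria, the sketch below uses two assumed rules: globally, a cell is "opposite" if the vector-sum preferred headings of its vestibular and visual tuning curves (sampled around 360°) differ by more than 90°; locally, it is "congruent" if both responses change with heading in the same direction over the narrow range (the product of the two heading-response Pearson correlations is positive). These criteria, the narrow heading values, and the function names are hypothetical and may not match the exact definitions used for Figure 7.

```python
import numpy as np


def preferred_heading_deg(headings_deg, responses):
    """Vector-sum preferred heading (deg) of a tuning curve sampled around 360 deg."""
    th = np.deg2rad(np.asarray(headings_deg, dtype=float))
    r = np.asarray(responses, dtype=float)
    return float(np.rad2deg(np.angle(np.sum(r * np.exp(1j * th)))))


def global_congruency(headings_deg, resp_ves, resp_vis):
    """Assumed rule: 'opposite' if vector-sum preferences differ by > 90 deg."""
    diff = (preferred_heading_deg(headings_deg, resp_ves)
            - preferred_heading_deg(headings_deg, resp_vis))
    diff = abs((diff + 180.0) % 360.0 - 180.0)  # wrap the difference to [0, 180]
    return "opposite" if diff > 90.0 else "congruent"


def local_congruency(narrow_deg, resp_ves, resp_vis):
    """Assumed rule: 'congruent' if both responses change with heading in the same
    direction over the narrow range (product of Pearson correlations > 0)."""
    h = np.asarray(narrow_deg, dtype=float)
    r_ves = np.corrcoef(h, resp_ves)[0, 1]
    r_vis = np.corrcoef(h, resp_vis)[0, 1]
    return "congruent" if r_ves * r_vis > 0 else "opposite"
```

For idealized cosine tuning the two rules always agree, because the local slope near straight ahead is fixed by the preferred heading. A globally opposite but locally congruent cell, like those described above, therefore requires tuning curves whose shape near straight ahead departs from what the global preference alone would predict.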

One possible explanation for these findings is that the heading tuning of VIP neurons is specialized for perception of heading around straight forward. By increasing the proportion of neurons with congruent local tuning, a greater proportion of VIP neurons may be able to contribute to increased perceptual sensitivity during cue combination. A related speculation is that the heading tuning curves of VIP neurons may be locally remodeled for this purpose. However, at this time, we cannot determine whether this local remodeling of heading tuning in VIP occurs as a result of the animals being trained to perform our heading discrimination task, or whether this pattern of local and global tuning would be found in naive animals.

In addition to creating more locally congruent cells in VIP, a putative local remodeling of VIP tuning curves could also create greater neural sensitivity for discriminating heading around straight forward. Indeed, Maciokas and Britten (2010) reported that VIP neurons generally had steeper heading tuning than MSTd neurons over a narrow range around straight ahead. Their findings appear to be consistent with ours, although they measured only local visual heading tuning, so visual-vestibular congruency cannot be assessed from their data.

Roles of VIP and MSTd in multimodal heading perception

We previously suggested that area MSTd plays a major role in cue integration for self-motion perception because MSTd activity can account for the increase in perceptual sensitivity that accompanies cue integration (Gu et al., 2008), as well as the perceptual ability to dynamically weight visual and vestibular cues according to their relative reliabilities (Fetsch et al., 2012). We have recently shown that reversible inactivation of MSTd disrupts heading perception based on visual and vestibular cues, but the pattern of results suggested that MSTd alone was not responsible for the behavior (Gu et al., 2012). Rather, a simple model suggested that other areas, which weight vestibular signals more heavily than MSTd, are also involved (Gu et al., 2012).
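For reference, the benchmark against which combined-cue sensitivity is usually compared in this literature is the optimal (maximum-likelihood) cue-integration prediction: σ_comb² = σ_ves²·σ_vis² / (σ_ves² + σ_vis²), with each cue weighted in proportion to its reliability 1/σ². The sketch below assumes that discrimination thresholds are proportional to σ; the function names are hypothetical.

```python
import math


def optimal_combined_threshold(th_ves, th_vis):
    """Predicted combined-cue threshold under optimal (reliability-weighted) integration."""
    return math.sqrt(th_ves**2 * th_vis**2 / (th_ves**2 + th_vis**2))


def optimal_weights(th_ves, th_vis):
    """Reliability (1 / threshold^2) weights for the vestibular and visual cues."""
    rel_ves, rel_vis = 1.0 / th_ves**2, 1.0 / th_vis**2
    w_ves = rel_ves / (rel_ves + rel_vis)
    return w_ves, 1.0 - w_ves
```

With equal single-cue thresholds, the predicted combined threshold is lower by a factor of √2 and the cues are weighted equally; if the vestibular threshold is half the visual one, the vestibular cue receives a weight of 0.8.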

We show here that VIP neurons also increase their sensitivity during cue integration, a first step in establishing that VIP contributes to visual-vestibular integration for heading perception. Moreover, our present results, along with previous findings (Chen et al., 2011a), show that responses of VIP neurons are more strongly influenced by vestibular inputs than responses of MSTd neurons (Gu et al., 2008; Morgan et al., 2008). Indeed, even relative to the strength of their single-cue responses, VIP neurons appear to more heavily weight their vestibular inputs than their visual inputs (Fig. 6C). Thus, VIP appears to fit the profile, suggested previously (Gu et al., 2012), of an area that is well suited to contribute to heading perception along with area MSTd. Other areas, such as the visual posterior sylvian (VPS) area (Chen et al., 2011b), may also contribute to visual-vestibular integration, but our data suggest that VIP and MSTd are two of the major players. This view is consistent with recent findings that microstimulation of VIP alters heading judgments based solely on optic flow (Zhang and Britten, 2011), as does microstimulation of MSTd (Britten and van Wezel, 1998, 2002).
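One way to quantify how a neuron weights its two inputs relative to the strength of its single-cue responses, used for MSTd by Morgan et al. (2008), is to model the combined-cue tuning curve as a weighted linear sum of the single-cue tuning curves, r_comb ≈ w_ves·r_ves + w_vis·r_vis + c, and fit the weights by least squares. The sketch below assumes mean responses sampled at the same headings in all three conditions; the function name is hypothetical, and this is not necessarily how Fig. 6C was computed.

```python
import numpy as np


def fit_cue_weights(resp_ves, resp_vis, resp_comb):
    """Least-squares fit of r_comb = w_ves * r_ves + w_vis * r_vis + const.

    Inputs are mean responses at the same set of headings in each condition.
    """
    ves = np.asarray(resp_ves, dtype=float)
    vis = np.asarray(resp_vis, dtype=float)
    X = np.column_stack([ves, vis, np.ones(len(ves))])
    (w_ves, w_vis, const), *_ = np.linalg.lstsq(X, np.asarray(resp_comb, dtype=float),
                                                rcond=None)
    return float(w_ves), float(w_vis), float(const)
```

A ratio w_ves / w_vis greater than 1 would indicate that the combined response follows the vestibular tuning more closely than the visual tuning, independent of how strong each single-cue response is.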

Our finding that VIP emphasizes vestibular signals more than MSTd may be consistent with what is known about the flow of information through these areas. Whereas MSTd is likely to be a major source of visual inputs to VIP (Boussaoud et al., 1990; Baizer et al., 1991), this may not be the case for vestibular signals. We have previously reported that vestibular signals in MSTd appear to be delayed and more highly processed relative to those in VIP (Chen et al., 2011a,c), suggesting that vestibular signals might flow from VIP to MSTd. However, additional studies will be needed to flesh out the relative roles and hierarchical positions of these areas in a network that subserves perception of self-motion.

Footnotes

This work was supported by U.S. National Institutes of Health Grants EY019087 (D.E.A.) and EY016178 (G.C.D.). We thank A. Turner and E. White for monkey care and training, as well as E. Henry for her participation in early experiments.

References

- Angelaki DE, Gu Y, DeAngelis GC. Multisensory integration: psychophysics, neurophysiology, and computation. Curr Opin Neurobiol. 2009;19:452–458. doi: 10.1016/j.conb.2009.06.008.

- Angelaki DE, Gu Y, DeAngelis GC. Visual and vestibular cue integration for heading perception in extrastriate visual cortex. J Physiol. 2011;589:825–833. doi: 10.1113/jphysiol.2010.194720.

- Anzai A, Peng X, Van Essen DC. Neurons in monkey visual area V2 encode combinations of orientations. Nat Neurosci. 2007;10:1313–1321. doi: 10.1038/nn1975.

- Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Bair W, Zohary E, Newsome WT. Correlated firing in macaque visual area MT: time scales and relationship to behavior. J Neurosci. 2001;21:1676–1697. doi: 10.1523/JNEUROSCI.21-05-01676.2001.

- Baizer JS, Ungerleider LG, Desimone R. Organization of visual inputs to the inferior temporal and posterior parietal cortex in macaques. J Neurosci. 1991;11:168–190. doi: 10.1523/JNEUROSCI.11-01-00168.1991.

- Boussaoud D, Ungerleider LG, Desimone R. Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol. 1990;296:462–495. doi: 10.1002/cne.902960311.

- Bremmer F, Duhamel JR, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Britten KH, van Wezel RJ. Electrical microstimulation of cortical area MST biases heading perception in monkeys. Nat Neurosci. 1998;1:59–63. doi: 10.1038/259.

- Britten KH, van Wezel RJ. Area MST and heading perception in macaque monkeys. Cereb Cortex. 2002;12:692–701. doi: 10.1093/cercor/12.7.692.

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13:87–100. doi: 10.1017/s095252380000715x.

- Celebrini S, Newsome WT. Neuronal and psychophysical sensitivity to motion signals in extrastriate area MST of the macaque monkey. J Neurosci. 1994;14:4109–4124. doi: 10.1523/JNEUROSCI.14-07-04109.1994.

- Chen A, DeAngelis GC, Angelaki DE. Macaque parieto-insular vestibular cortex: responses to self-motion and optic flow. J Neurosci. 2010;30:3022–3042. doi: 10.1523/JNEUROSCI.4029-09.2010.

- Chen A, DeAngelis GC, Angelaki DE. Representation of vestibular and visual cues to self-motion in ventral intraparietal cortex. J Neurosci. 2011a;31:12036–12052. doi: 10.1523/JNEUROSCI.0395-11.2011.

- Chen A, DeAngelis GC, Angelaki DE. Convergence of vestibular and visual self-motion signals in an area of the posterior sylvian fissure. J Neurosci. 2011b;31:11617–11627. doi: 10.1523/JNEUROSCI.1266-11.2011.