Abstract

Robust variable selection procedures through penalized regression have been gaining increased attention in the literature. They can be used to perform variable selection and are expected to yield robust estimates. However, to the best of our knowledge, the robustness of those penalized regression procedures has not been well characterized. In this paper, we propose a class of penalized robust regression estimators based on exponential squared loss. The motivation for this new procedure is that it enables us to characterize its robustness, which has not been done for the existing procedures, while its performance is near optimal and superior to some recently developed methods. Specifically, under defined regularity conditions, our estimators are √n-consistent and possess the oracle property. Importantly, we show that our estimators can achieve the highest asymptotic breakdown point of 1/2 and that their influence functions are bounded with respect to the outliers in either the response or the covariate domain. We performed simulation studies to compare our proposed method with some recent methods, using the oracle method as the benchmark. We consider common sources of influential points. Our simulation studies reveal that our proposed method performs similarly to the oracle method in terms of the model error and the positive selection rate even in the presence of influential points. In contrast, other existing procedures have a much lower non-causal selection rate. Furthermore, we re-analyze the Boston Housing Price Dataset and the Plasma Beta-Carotene Level Dataset, which are commonly used examples for regression diagnostics of influential points. Our analysis reveals the discrepancies between our robust method and the other penalized regression methods, underscoring the importance of developing and applying robust penalized regression methods.

Keywords: Robust regression, Variable selection, Breakdown point, Influence function

1. INTRODUCTION

Selecting important explanatory variables is one of the most important problems in statistical learning. To this end, there has been much important progress in the use of penalized regression methods for variable selection. Those penalized regression methods have a unified framework for theoretical properties and enjoy great flexibility in allowing different choices of penalty such as the bridge regression (Frank and Friedman, 1993), LASSO (Tibshirani, 1996), SCAD (Fan and Li, 2001), and adaptive LASSO (Zou, 2006). It is important to note that many of those methods are closely related to the least squares method. It is well known that the least squares method is sensitive to outliers in finite samples, and consequently, outliers can present serious problems for least squares based methods in variable selection. Therefore, in the presence of outliers, it is desirable to replace the least squares criterion with a robust one.

In a seminal work, Fan and Li (2001) introduced a general framework of penalized robust regression estimators, i.e., to minimize

$$\sum_{i=1}^{n} \phi(Y_i - x_i^{T}\beta) + n\sum_{j=1}^{d} p_{\lambda_{nj}}(|\beta_j|) \tag{1.1}$$

with respect to β, where ϕ(·) is Huber's function. Since then, penalized robust regression has attracted increased attention, and different loss functions ϕ(·) and different penalty functions have been proposed and examined. For example, Wang et al. (2007) proposed the LAD-LASSO, where ϕ(t) = |t| and pλnj (|βj|) = λnj |βj|; Wu and Liu (2009) introduced penalized quantile regression, where ϕ(t) = t{τ − I(t < 0)} with 0 ≤ τ ≤ 1, and pλnj (|βj|) is either the SCAD penalty or the adaptive LASSO penalty; Kai et al. (2011) studied variable selection in the semiparametric varying-coefficient partially linear model via a penalized composite quantile loss (Zou and Yuan, 2008); Johnson and Peng (2008) evaluated a rank-based variable selection; Wang and Li (2009) examined a weighted Wilcoxon-type SCAD method for robust variable selection; Leng (2010) presented variable selection via regularized rank regression; and Bradic et al. (2011) investigated the penalized composite quasi-likelihood for ultrahigh dimensional variable selection.

Despite this progress, to the best of our knowledge, the robustness (e.g., the breakdown point and influence function) of these variable selection procedures has not been well characterized or understood. For example, we do not know the answers to the following basic questions: What is the breakdown point of a penalized robust regression estimator? Is its influence function bounded? In the regression setting, the choice of loss function determines the robustness of the resulting estimators. Thus, for the objective (1.1), the loss function ϕ(·) is critical to the robustness, and for this reason, a loss function with superior robustness performance is of great interest.

The motivation of this work is to study the robustness of variable selection procedures, which requires us to introduce a new robust variable selection procedure. As discussed above, the robustness has not been well characterized for the existing procedures. Besides the robustness, as presented below, our new procedure performs at a level that is near optimal and superior to two competing methods in practical settings. The numerical performance of our method is not entirely surprising, as the exponential loss function has been used in AdaBoost for classification problems with similar success (Friedman et al., 2000).

We begin with the following loss function

$$\phi_\gamma(t) = 1 - \exp(-t^2/\gamma),$$

which is an exponential squared loss with a tuning parameter γ. The tuning parameter γ controls the degree of robustness for the estimators. When γ is large, ϕγ(t) ≈ t²/γ, and therefore the proposed estimators are similar to the least squares estimators in the extreme case. For a small γ, observations with large absolute values of ti = Yi − xiᵀβ will result in large losses of ϕγ(ti), and therefore have a small impact on the estimation of β. Hence, a smaller γ would limit the influence of an outlier on the estimators, although it could also reduce the sensitivity of the estimators.
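The two regimes of γ described above can be checked numerically. The following is a minimal sketch, assuming the exponential squared loss has the form 1 − exp(−t²/γ) (which agrees with the large-γ approximation t²/γ stated in the text); the function name `exp_squared_loss` and the example residuals are illustrative only.

```python
import numpy as np

def exp_squared_loss(t, gamma):
    """Exponential squared loss, assumed form 1 - exp(-t^2 / gamma)."""
    t = np.asarray(t, dtype=float)
    # -expm1(-u) equals 1 - exp(-u) but is numerically accurate for tiny u.
    return -np.expm1(-t**2 / gamma)

residuals = np.array([0.1, 1.0, 10.0])

# Large gamma: the loss is close to t^2 / gamma (least-squares-like behaviour).
large_gamma = 1e6
approx_ratio = exp_squared_loss(residuals, large_gamma) / (residuals**2 / large_gamma)

# Small gamma: the loss saturates near its upper bound of 1, so a gross
# outlier (t = 10) costs hardly more than a moderate residual.
small_gamma = 1.0
saturated = exp_squared_loss(residuals, small_gamma)
```

Under this assumed form the loss never exceeds 1, which is the mechanism by which a single observation's contribution to the criterion stays bounded.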

In this paper, we discuss how to select γ so that the corresponding penalized regression estimators are robust and possess desirable finite and large sample properties. We show that our estimators satisfy selection consistency and asymptotic normality. We characterize the finite sample breakdown point and show that our estimators possess the highest asymptotic addition breakdown point for a wide range of penalties, including Lq penalty with q > 0, adaptive LASSO, and elastic net. In addition, we derive the influence functions and show that the influence functions of our estimators are bounded with respect to the outliers in either the response or the covariate domain.

The rest of this paper is organized as follows. In Section 2, we introduce the penalized robust regression estimators with the exponential squared loss, and investigate the sampling properties. In Section 3, we study the robustness properties by deriving the influence functions and finite sample breakdown point. In Section 4, numerical simulations are conducted to compare the performance of the proposed method with composite quantile loss and L1 loss using the oracle method as the benchmark. We conclude with some remarks in Section 5. The proofs are given in the Appendix.

2. PENALIZED ROBUST REGRESSION ESTIMATOR WITH EXPONENTIAL SQUARED LOSS

Assume that {(xi, Yi), i = 1, …, n} is a random sample from population (x, Y). Here, Y is a univariate response, x is a d-dimensional predictor, and (x, Y) has joint distribution F. Suppose that (xi, Yi) satisfies the linear regression model

$$Y_i = x_i^{T}\beta + \varepsilon_i, \tag{2.1}$$

where β is a d-dimensional vector of unknown parameters, and the error terms εi are i.i.d. with unknown distribution G, E(εi) = 0, and εi is independent of xi. An intercept term is included if the first elements of all xi's are 1. Let Di = (xi, Yi), and Dn = (D1, ⋯, Dn) be the observed data. Variable selection is necessary because some of the coefficients in β are zero; that is, some of the variables in xi do not contribute to Yi. Without loss of generality, let β = (β1ᵀ, β2ᵀ)ᵀ, where β1 ∈ ℝ^s and β2 ∈ ℝ^(d−s). The true regression coefficients are β0 = (β01ᵀ, β02ᵀ)ᵀ, with each element of β01 being nonzero, and β02 = 0. Let xi = (xi1ᵀ, xi2ᵀ)ᵀ, where xi1 and xi2 are the covariates corresponding to β1 and β2.

The objective function of penalized robust regression consists of a loss function and a penalty term. In this paper, we propose maximizing

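$$\ell_n(\beta) = \sum_{i=1}^{n} \exp\{-(Y_i - x_i^{T}\beta)^2/\gamma_n\} - n\sum_{j=1}^{d} p_{\lambda_{nj}}(|\beta_j|) \tag{2.2}$$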

with respect to β, which, for any fixed γn ∈ (0, +∞), is a special case of (1.1). However, because γn is a tuning parameter that is chosen adaptively according to the data, our objective function (2.2) is distinct from (1.1). This additional feature is critical for our estimators to have a high breakdown point and high efficiency.
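To make the structure of the criterion concrete, the sketch below evaluates a penalized objective of the kind described here. It assumes the objective is the sum of exp(−ri²/γn) over observations minus n times a weighted L1 penalty; this form, the function name `esl_penalized_objective`, and the synthetic data are assumptions for illustration, not a statement of the exact expression in (2.2).

```python
import numpy as np

def esl_penalized_objective(beta, X, y, gamma, lam):
    """Assumed objective: sum_i exp(-r_i^2 / gamma) - n * sum_j lam_j * |beta_j|."""
    beta = np.asarray(beta, dtype=float)
    r = y - X @ beta                      # residuals r_i = Y_i - x_i^T beta
    n = X.shape[0]
    return np.sum(np.exp(-r**2 / gamma)) - n * np.sum(lam * np.abs(beta))

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
beta0 = np.array([1.0, 0.0, -2.0])
y = X @ beta0                             # noise-free responses, for illustration

no_penalty = np.zeros(3)
clean = esl_penalized_objective(beta0, X, y, gamma=2.0, lam=no_penalty)

# Replace one response by a gross outlier: the criterion at beta0 drops by at most 1.
y_bad = y.copy()
y_bad[0] += 1e6
contaminated = esl_penalized_objective(beta0, X, y_bad, gamma=2.0, lam=no_penalty)
```

Each observation contributes a value in (0, 1] to the loss part, so a single arbitrarily bad point can change the criterion by at most one unit, in contrast to the unbounded change under squared error loss.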

Let β̂n be the resulting estimator of (2.2),

and

where r = Y − xTβ.

We assume the following regularity condition:

(C1) Σ = E(xxᵀ) is positive definite, and E‖x‖³ < ∞.

Condition (C1) ensures that the main term dominates the remainder in the Taylor expansion. It warrants further examination as to whether this condition can be weakened. With these preparations, we present the following sampling properties for our proposed estimators.

Theorem 1 Assume that condition (C1) holds, bn = op(1), and I(β0, γ0) is negative definite.

- If γn − γ0 = op(1) for some γ0 > 0, there exists a local maximizer β̂n such that ‖β̂n − β0‖ = Op(n^(−1/2) + an).

- (Oracle property) If , and with probability 1,

then we have: (a) sparsity, i.e., β̂n2 = 0 with probability 1; (b) asymptotic normality,(2.3)

where ,(2.4)

Remark 1 Note that not all penalties satisfy the conditions in Theorem 1. For example, LASSO is inconsistent, and the oracle property does not hold. Zou (2006) proposed the adaptive LASSO, and showed that it enjoys the oracle property. The adaptive LASSO penalty has the form pλnj (|βj|) = λnj |βj| with λnj = τnj/|β̃j|^k for some k > 0, where β̃ = (β̃1, ⋯, β̃d)ᵀ is a √n-consistent estimator of β0, and the τnj's are the regularization parameters. With this penalty in the penalized robust regression (2.2), we can prove that our estimators are √n-consistent and have the oracle property under the following condition (C2) in addition to the regularity condition (C1). The detailed proof is omitted here.

(C2): .

In fact, by the definition of an. Note that some data-driven methods for selecting λnj, e.g., cross-validation, may not satisfy condition (C2). Here, we utilize a BIC criterion for which (C2) holds.

3. ROBUSTNESS PROPERTIES AND IMPLEMENTATION

3.1 Finite sample breakdown point

The finite sample breakdown point measures the maximum fraction of outliers in a sample that an estimator can tolerate before returning arbitrary values. It is a global measure of robustness in terms of resistance to outliers. Several definitions of the finite sample breakdown point have been proposed in the literature [see Hampel (1971); Donoho (1982); Donoho and Huber (1983)].

Here, let us describe the addition measure defined by Donoho and Huber (1983). Recall the notations Di = (xi, Yi), and Dn = (D1, ⋯, Dn). We assume that Dn contains m bad points and n − m good points. Denote the bad points by Dm = {D1, ⋯, Dm} and the good points by Dn−m = {Dm+1, ⋯, Dn}. The fraction of bad points in Dn is m/n. Let β̂(Dn) denote a regression estimator based on sample Dn. The finite sample addition breakdown point of an estimator is defined as

$$\mathrm{BP}(\hat\beta; D_{n-m}) = \min\Big\{ \frac{m}{n} : \sup_{D_m} \big\| \hat\beta(D_n) - \hat\beta(D_{n-m}) \big\| = \infty \Big\},$$

where ‖ · ‖ is the Euclidean norm. In the regression setting, many estimators such as the S-estimator (Rousseeuw and Yohai, 1984), MM-estimator, τ-estimator (Yohai and Zamar, 1988), and REWLS-estimator (Gervini and Yohai, 2002) can achieve the highest asymptotic breakdown point of 1/2.
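The idea of an addition breakdown point can be illustrated in the simplest possible setting. The sketch below is not a regression example: it uses the sample mean and sample median of a univariate sample as stand-ins for a non-robust and a robust estimator, and the helper `contaminate` and all numerical values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
good = rng.normal(loc=0.0, scale=1.0, size=20)   # the n - m good points

def contaminate(good_points, m, value):
    """Append m bad points, all placed at `value`, to the good sample."""
    return np.concatenate([good_points, np.full(m, value)])

# A single bad point (m = 1) moves the mean further and further away
# as the bad point is pushed out: the mean breaks down immediately.
mean_shift = [abs(np.mean(contaminate(good, 1, v)) - np.mean(good))
              for v in (1e3, 1e6, 1e9)]

# The median stays within the range of the good points while the bad points
# are a minority (9 of 29), and is carried away once they make up half (20 of 40).
median_shift_few = abs(np.median(contaminate(good, 9, 1e9)) - np.median(good))
median_shift_half = abs(np.median(contaminate(good, 20, 1e9)) - np.median(good))
```

In this toy setting the mean has breakdown point 1/n, while the median tolerates contamination up to roughly one half, which is the benchmark value of 1/2 referred to in the text.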

Next, we derive the finite sample breakdown point for our proposed penalized robust estimators with the exponential squared loss. We first take an initial estimator β̃n. For a contaminated sample Dn, let

| (3.1) |

where . It is easy to see that ζ(γn) is a real number ranged in (0, 2]. Let

Note that if a set of d regressor variables is linearly independent, then anm = (d − 1)/(n − m). Denote by BP(β̂n;Dn−m, γn) the breakdown point for the penalized robust regression estimators β̂n with the tuning parameter γn.

Theorem 2 Consider any penalty function of the form pλnj (|βj|) = λnj g(|βj|), where g(·) is a strictly increasing and unbounded function defined on [0, ∞), and the weight λnj is positive for all j = 1, ⋯, d. If m/n ≤ ε < (1 − 2anm)/(2 − 2anm), anm < 0.5, and ζ(γn) < (1 − ε)(2 − 2anm) hold, then, for any initial estimator β̃n of β0, we have

| (3.2) |

Theorem 2 provides a lower bound on the breakdown point of the proposed penalized robust estimators. The breakdown point depends on the breakdown point of the initial estimate and on the tuning parameter γn. If β̃n is a robust estimator with asymptotic breakdown point 1/2, and γn is chosen such that ζ(γn) ∈ (0, 1], then BP(β̂n;Dn−m, γn) is asymptotically 1/2. This observation guides us in selecting γn to reach the highest efficiency. We should note that the breakdown point is a limiting measure of the bias robustness (He and Simpson, 1993).
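A small numerical check may help in reading the conditions of Theorem 2. The sketch below evaluates the three inequalities exactly as stated in the theorem, using anm = (d − 1)/(n − m), which the text gives for linearly independent regressors; the function name `theorem2_conditions` and the values of n, m, d, ζ, and ε are hypothetical.

```python
def theorem2_conditions(n, m, d, zeta, eps):
    """Evaluate the conditions of Theorem 2 for given n, m, d, zeta(gamma_n), eps."""
    a_nm = (d - 1) / (n - m)                      # as stated for linearly independent regressors
    eps_bound = (1 - 2 * a_nm) / (2 - 2 * a_nm)   # upper limit for the contamination level eps
    return {
        "a_nm": a_nm,
        "eps_bound": eps_bound,
        "contamination_ok": m / n <= eps < eps_bound,
        "a_nm_ok": a_nm < 0.5,
        "zeta_ok": zeta < (1 - eps) * (2 - 2 * a_nm),
    }

# Hypothetical example: 200 observations, 20 of them bad, 8 regressors.
check = theorem2_conditions(n=200, m=20, d=8, zeta=0.3, eps=0.15)

# As n grows with d and m fixed, a_nm tends to 0 and the bound on eps tends to 1/2.
large_n = theorem2_conditions(n=10**6, m=20, d=8, zeta=0.3, eps=0.15)
```

With these values anm = 7/180 ≈ 0.039 and the admissible contamination level is bounded by about 0.48, which approaches 1/2 as the sample size increases.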

There are many commonly used penalties that utilize the penalty form of Theorem 2, such as LASSO, adaptive LASSO, the Lq penalty with q > 0, logarithm penalty, elastic-net penalty, and adaptive elastic-net penalty. Hence the breakdown point result holds for these penalized robust regression estimators. However, it is an open question to extend the result of Theorem 2 to bounded penalties such as SCAD (Fan and Li, 2001) and MCP (Zhang, 2010). As a practical solution, for those bounded penalties, we may employ the one-step or k-step local linear approximation (LLA) method proposed by Zou and Li (2008). Additional discussions will be given in Section 5.

3.2 Influence function

The influence function introduced by Hampel (1968) measures the stability of estimators given an infinitesimal contamination. Denote by δz the point mass probability distribution at a fixed point z = (x0, y0)T ∈ ℝd+1. Given the distribution F of (x, Y) in ℝd+1 and proportion ε ∈ (0, 1), the mixture distribution of F and δz is Fε = (1 − ε)F + εδz. Suppose that λnj’s have the limit point λ0j’s, let

Note that is a shrinkage of the true coefficient β0 to 0, and under the conditions in Theorem 1. For the exponential-type penalized robust estimators, the influence function at a point z ∈ ℝd+1 is defined as , provided that the limit exists.

Theorem 3 For the penalized robust regression estimators with exponential squared loss, the j-th element of the influence function has the following form:

| (3.3) |

where Γj denotes the j-th row of {2A(γ0)/γ0 − B}−1, ,

and

| (3.4) |

For the case of adaptive LASSO penalty, with the regularization parameter selected by the BIC described in Section 3.3, by the condition (C2), we have λ0j = 0 for j = 1, ⋯, s, and λ0j = +∞ for j = s + 1, ⋯, d. Therefore, the corresponding influence functions of the zero coefficients are zero. For the nonzero coefficients, the influence functions have the form

| (3.5) |

3.3 Algorithm and the choice of tuning parameters

The penalty function facilitates variable selection in regression. Theorem 2 implies that there may be many penalties that can facilitate robust variable selection; however, we believe it is a lesser issue to compare the performance of different penalties for a given type of loss function. A more important issue is to compare the performance of different loss functions for a given form of penalty.

In the simulations, we focus on the adaptive LASSO penalty pλnj (|βj|) = τnj |βj|/|β̃nj|^k with k = 1, so that λnj = τnj/|β̃nj|, where β̃nj is an initial robust regression estimator. The maximization of (2.2) with an adaptive LASSO penalty involves nonlinear weighted L1 regularization. To facilitate the computation, we use a quadratic approximation to replace the loss function. Let

| (3.6) |

Suppose that β̃ is an initial estimator, then the loss function is approximated as . Next, we maximize

with respect to β, which leads to an approximated solution of (2.2).

There exist efficient computing algorithms for this L1 regularization problem. Popular algorithms include the least angle regression (Efron et al., 2004) and coordinate descent procedures such as the pathwise coordinate optimization introduced by Friedman et al. (2007), coordinate descent algorithms proposed by Wu and Lange (2008), and the block coordinate gradient descent (BCGD) algorithm proposed by Tseng and Yun (2009). In this paper, we use the BCGD algorithm for computation.
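For readers unfamiliar with coordinate descent on weighted L1 problems, the following is a minimal sketch of plain cyclic coordinate descent with soft-thresholding. It is not the BCGD algorithm of Tseng and Yun (2009), and it is applied to a generic least squares criterion rather than to the quadratic approximation of the exponential squared loss; the function names and the synthetic data are illustrative assumptions.

```python
import numpy as np

def soft_threshold(z, t):
    """Soft-thresholding operator S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def weighted_lasso_cd(X, y, lam, n_iter=200):
    """Minimize (1/(2n)) * ||y - X b||^2 + sum_j lam[j] * |b_j| by cyclic coordinate descent."""
    n, d = X.shape
    beta = np.zeros(d)
    col_norm = (X**2).sum(axis=0) / n
    resid = y.astype(float).copy()          # residual y - X @ beta, with beta = 0 initially
    for _ in range(n_iter):
        for j in range(d):
            resid += X[:, j] * beta[j]      # remove coordinate j from the current fit
            rho = X[:, j] @ resid / n
            beta[j] = soft_threshold(rho, lam[j]) / col_norm[j]
            resid -= X[:, j] * beta[j]      # put the updated coordinate back
    return beta

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = X @ np.array([2.0, 0.0, -1.0, 0.0]) + 0.1 * rng.normal(size=200)

# Heavier weights on coordinates 1 and 3 mimic adaptive-LASSO weights for null coefficients.
beta_sparse = weighted_lasso_cd(X, y, lam=np.array([0.01, 1.0, 0.01, 1.0]))
beta_unpenalized = weighted_lasso_cd(X, y, lam=np.zeros(4))
```

Coordinate-specific weights are what allow an adaptive penalty to shrink the null coefficients to exactly zero while leaving the large coefficients nearly unpenalized.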

To implement our methodology, we need to select both types of tuning parameters, λnj and γn. Since λnj and γn depend on each other, their selection could be treated as a bivariate optimization problem. In this paper, we consider a simple selection method for λnj, and a data-driven procedure for γn.

The choice of the regularization parameter λnj

In general, many methods can be used to select λnj, such as cross-validation, AIC, and BIC. To reduce intensive computation and guarantee consistent variable selection, we choose the regularization parameter by minimizing a BIC-type objective function (see Wang et al. (2007)):

This leads to λnj = τ̂nj/|β̃nj|, where τ̂nj = log(n)/n.

Note that this simple choice satisfies the conditions for oracle property of the adaptive LASSO, as described in the following corollary.

Corollary 1 If the regularization parameter is chosen as τ̂nj = log(n)/n, then the solution of (2.2) with adaptive LASSO penalty possesses the oracle property.
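The resulting adaptive weights are simple to compute. The sketch below evaluates λnj = log(n)/(n|β̃nj|) for a hypothetical initial estimate; the function name `adaptive_lasso_weights` and the numbers are illustrative, and a coefficient that is exactly zero in the initial estimate is assigned an infinite weight here by assumption.

```python
import numpy as np

def adaptive_lasso_weights(beta_init, n):
    """Weights lambda_nj = log(n) / (n * |beta_init_j|); infinite when beta_init_j is 0."""
    beta_init = np.asarray(beta_init, dtype=float)
    with np.errstate(divide="ignore"):
        return (np.log(n) / n) / np.abs(beta_init)

# Hypothetical initial robust estimate with two large, one small, and one zero coefficient.
weights = adaptive_lasso_weights([1.0, 1.5, 0.01, 0.0], n=200)
```

Coefficients that are small in the initial estimate receive large weights and are therefore penalized heavily, whereas large coefficients are penalized at a rate of order log(n)/n.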

The choice of tuning parameter γn

The tuning parameter γn controls the degree of robustness and efficiency of the proposed robust regression estimators. To select γn, we propose a data-driven procedure which yields both high robustness and high efficiency simultaneously. We first determine a set of the tuning parameters such that the proposed penalized robust estimators have asymptotic breakdown point at 1/2, and then select the tuning parameter with the maximum efficiency. We describe the whole procedure in the following steps:

- Step 1: Find the pseudo outlier set of the sample.

  Let Dn = {(x1, Y1), ⋯, (xn, Yn)}. Calculate the residuals ri(β̂n) = Yi − xiᵀβ̂n, i = 1, ⋯, n, and Sn = 1.4826 × mediani |ri(β̂n) − medianj(rj(β̂n))|. Then, take the pseudo outlier set Dm = {(xi, Yi) : |ri(β̂n)| ≥ 2.5Sn}, set m = #{1 ≤ i ≤ n : |ri(β̂n)| ≥ 2.5Sn}, and Dn−m = Dn \ Dm.

- Step 2: Update the tuning parameter γn.

  Let γn be the minimizer of det(V̂(γ)) in the set G = {γ : ζ(γ) ∈ (0, 1]}, where ζ(γ) is defined in (3.1), det(·) denotes the determinant operator, and V̂(γ) = {Î1(β̂n)}⁻¹ Σ̃2 {Î1(β̂n)}⁻¹ is the estimated asymptotic covariance matrix.

- Step 3: Update β̂n.

  With λnj = log(n)/(n|β̂nj|) fixed and γn selected in Step 2, update β̂n by maximizing (2.2).
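The pseudo outlier rule in the first step is straightforward to implement. The following is a minimal sketch that takes a vector of residuals from some current fit, computes Sn as 1.4826 times the median absolute deviation, and flags residuals with |ri| ≥ 2.5Sn; the function name `pseudo_outliers` and the example residuals are hypothetical.

```python
import numpy as np

def pseudo_outliers(residuals, cutoff=2.5):
    """Flag residuals with |r_i| >= cutoff * S_n, where S_n = 1.4826 * MAD of the residuals."""
    r = np.asarray(residuals, dtype=float)
    s_n = 1.4826 * np.median(np.abs(r - np.median(r)))
    is_bad = np.abs(r) >= cutoff * s_n
    return is_bad, s_n

# Hypothetical residuals from a current fit: five ordinary values and one gross outlier.
residuals = [-1.0, -0.5, 0.0, 0.5, 1.0, 12.0]
is_bad, s_n = pseudo_outliers(residuals)
m = int(is_bad.sum())        # number of pseudo outliers
```

The factor 1.4826 makes the median absolute deviation a consistent estimate of the standard deviation under normal errors, so the cutoff 2.5Sn corresponds to roughly 2.5 standard deviations.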

In practice, we set the MM-estimator β̃n as the initial estimate, i.e., β̂n = β̃n, and then repeat Steps 1–3 until β̂n and γn converge. Note that λnj is fixed within the iterations. One may update β̂n in Step 3 with λnj selected by cross-validation; however, this approach is computationally expensive. See the discussion in Section 5.

Remark 2 In the initial cycle of our algorithm, we set β̂n as the MM estimator and detect the outliers in step 1. This allows us to calculate ζ(γn) by (3.1). Based on Theorem 2, the proposed penalized robust estimators achieve asymptotic breakdown point 1/2 if two conditions hold: (1) the initial robust estimator possesses asymptotic breakdown point 1/2; and (2) the tuning parameter γn is chosen such that ζ(γn) ∈ (0, 1]. Therefore, β̂n achieves the asymptotic breakdown point 1/2 at all iterations.

To attain high efficiency, we choose the tuning parameter γn by minimizing the determinant of the asymptotic covariance matrix as in Step 2. Since the calculation of det(V̂(γ)) depends on the estimate β̂n, we update β̂n in Step 3, and repeat the algorithm until convergence. Based on our limited experience in simulation, the computing algorithm converges very quickly. In practice, we repeat Steps 1–3 once.

4. SIMULATION AND APPLICATION

4.1 Simulation study

In this section, we conduct simulation studies to evaluate the finite-sample performance of our estimator. We first illustrate how to select the tuning parameter γ. We choose n = 200, d = 8, and β = (1, 1.5, 2, 1, 0, 0, 0, 0)ᵀ. We generate xi = (xi1, ⋯, xid)ᵀ from a multivariate normal distribution N(0, Ω2), where the (i, j)-th element of Ω2 is ρ^|i−j| with ρ = 0.5. The error term follows a Cauchy distribution.

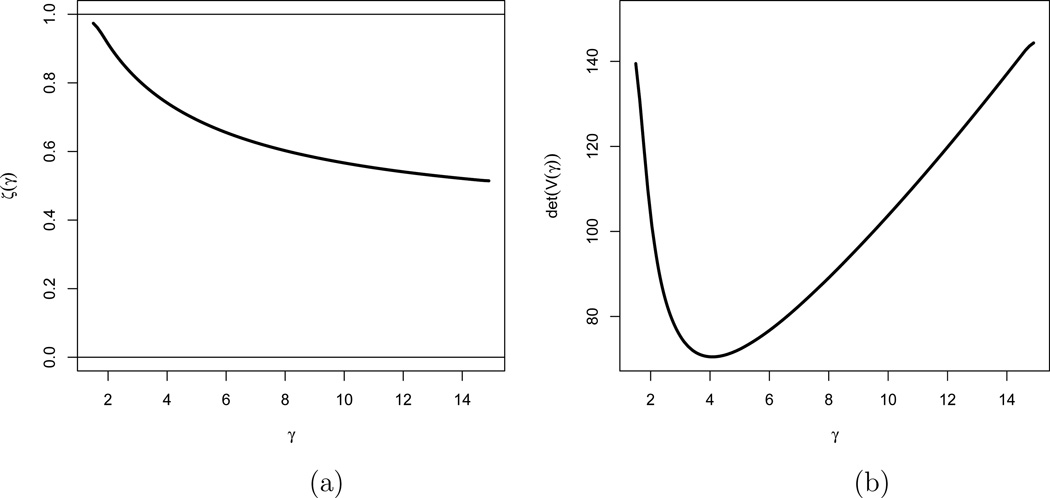

Following the procedure described in Section 3.3, we obtain the values of ζ(γ) and det(V̂(γ)), and plot them against the tuning parameter γ as depicted in Figure 1. The set G can be determined from Figure 1(a). The tuning parameter γ is selected by minimizing det(V̂(γ)) over G, as illustrated in Figure 1(b).

Figure 1.

(a) ζ(γ) against γ; (b) The determinant of matrix V̂(γ) against γ

Next, we evaluate the performance of various loss functions with different sample sizes. For each setting, we simulate 1000 data sets from model (2.1) with sample sizes of n = 100, 150, 200, 400, 600, 800. We choose d = 8 and β = (1, 1.5, 2, 1, 0, 0, 0, 0)T. We use the following three mechanisms to generate influential points:

Influential points in the predictors. Covariate xi follows a mixture of d-dimensional normal distributions 0.8N(0, Ω1) + 0.2N(μ, Ω2), where Ω1 = Id×d, μ = 3·1d, and 1d is the d-dimensional vector of ones; the error term follows a standard normal distribution;

Influential points in the response. Covariate xi follows a multivariate normal distribution N(0, Ω2), and the error term follows a mixture normal distribution 0.8N(0, 1) + 0.2N(10, 6²);

Influential points in both the predictors and response. Covariate xi follows a mixture of d-dimensional normal distributions 0.8N(0, Ω1) + 0.2N(μ, Ω2), and the error term follows a Cauchy distribution.
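As an illustration of how such data can be generated, the sketch below simulates the second mechanism (influential points in the response). It assumes that the second component of the error mixture is normal with mean 10 and standard deviation 6, and that Ω2 has (i, j)-th element 0.5^|i−j| as in the preceding paragraph; the function names and the random seed are hypothetical.

```python
import numpy as np

def ar1_cov(d, rho=0.5):
    """Matrix whose (i, j)-th element is rho^|i-j| (Omega_2 in the text)."""
    idx = np.arange(d)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def simulate_setting2(n, beta, rng, rho=0.5):
    """Second mechanism: clean covariates, errors from 0.8 N(0, 1) + 0.2 N(10, 6^2)."""
    d = len(beta)
    X = rng.multivariate_normal(np.zeros(d), ar1_cov(d, rho), size=n)
    contaminated = rng.random(n) < 0.2            # indicator of the outlying error component
    eps = np.where(contaminated,
                   rng.normal(10.0, 6.0, n),      # standard deviation 6, i.e. variance 6^2 (assumed)
                   rng.normal(0.0, 1.0, n))
    return X, X @ beta + eps, contaminated

rng = np.random.default_rng(1)
beta = np.array([1.0, 1.5, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0])
X, y, contaminated = simulate_setting2(200, beta, rng)
```

The other two mechanisms can be sketched in the same way by drawing the covariates from the stated normal mixture and, for the third, replacing the error distribution with a Cauchy distribution.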

For each mechanism mentioned above, we compare the performance of four methods: CQR-LASSO (CQR is short for the composite quantile regression introduced by Zou and Yuan (2008)); LAD-LASSO proposed by Wang et al. (2007); the oracle method based on the MM-estimator; and our method (ESL-LASSO). For CQR-LASSO, we set the quantiles τk = k/10 for k = 1, 2, ⋯, 9. The performance is measured by the positive selection rate (PSR), the non-causal selection rate (NSR), and the median and median absolute deviation (MAD) of the model error advocated by Fan and Li (2001). Here, PSR (Chen and Chen, 2008) is the proportion of causal features selected by a method among all causal features, NSR (Fan and Li, 2001) is the average proportion of zero estimates restricted only to the true zero coefficients, and the model error is defined by

$$\mathrm{ME}(\hat\beta) = (\hat\beta - \beta_0)^{T} E(xx^{T}) (\hat\beta - \beta_0).$$

The tuning parameter γ is selected for each simulated sample, and γ̄n and ζ̄(γn) are the averages of the selected values in 1000 simulations.
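The three performance measures can be computed for a single fitted coefficient vector as in the sketch below. It assumes that NSR is the proportion of true zero coefficients that are estimated as zero (so that the oracle attains 1, as in the tables) and that the model error is the quadratic form (β̂ − β0)ᵀE(xxᵀ)(β̂ − β0) of Fan and Li (2001); the function names and the example estimate are hypothetical.

```python
import numpy as np

def selection_rates(beta_hat, beta_true):
    """Return (PSR, NSR) for one fitted coefficient vector."""
    beta_hat = np.asarray(beta_hat, dtype=float)
    beta_true = np.asarray(beta_true, dtype=float)
    causal = beta_true != 0
    selected = beta_hat != 0
    psr = np.mean(selected[causal])        # share of causal features that are selected
    nsr = np.mean(~selected[~causal])      # share of true zeros estimated as zero (assumed definition)
    return float(psr), float(nsr)

def model_error(beta_hat, beta_true, sigma):
    """Quadratic-form model error (beta_hat - beta_true)^T Sigma (beta_hat - beta_true)."""
    diff = np.asarray(beta_hat, dtype=float) - np.asarray(beta_true, dtype=float)
    return float(diff @ sigma @ diff)

beta_true = np.array([1.0, 1.5, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0])
beta_hat = np.array([0.9, 1.4, 2.1, 0.0, 0.0, 0.2, 0.0, 0.0])   # hypothetical estimate

psr, nsr = selection_rates(beta_hat, beta_true)
me = model_error(beta_hat, beta_true, np.eye(8))                # identity covariance for illustration
```

In a simulation study these quantities would be computed for every replicate, with PSR and NSR averaged and the model error summarized by its median and MAD.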

From Tables 1, 2, and 3, we find that ESL-LASSO yields larger model error than LAD-LASSO and CQR-LASSO when the sample size is small, because it involves some consistent estimators in its selection procedure. As the sample size increases, the medians and MADs of the model error decrease in all three settings. In terms of the median model error, in the first setting ESL-LASSO's is never smaller than CQR-LASSO's but is smaller than LAD-LASSO's once the sample size is at least 200; in the other two settings, ESL-LASSO's is smaller than both LAD-LASSO's and CQR-LASSO's when the sample size is large enough.

Table 1.

Simulation results in the first setting

| n | Method | γ̄n | ζ̄(γn) | PSR | NSR | Model error: Median | Model error: MAD |
|---|---|---|---|---|---|---|---|
| 100 | ESL-LASSO | 3.965 | 0.260 | 0.982 | 0.999 | 0.076 | 0.040 |
| | CQR-LASSO | – | – | 1.000 | 0.877 | 0.041 | 0.021 |
| | LAD-LASSO | – | – | 1.000 | 0.581 | 0.057 | 0.030 |
| | Oracle | – | – | 1.000 | 1.000 | 0.034 | 0.018 |
| 150 | ESL-LASSO | 4.303 | 0.282 | 0.999 | 1.000 | 0.038 | 0.020 |
| | CQR-LASSO | – | – | 1.000 | 0.906 | 0.026 | 0.013 |
| | LAD-LASSO | – | – | 1.000 | 0.554 | 0.035 | 0.016 |
| | Oracle | – | – | 1.000 | 1.000 | 0.022 | 0.011 |
| 200 | ESL-LASSO | 4.450 | 0.309 | 1.000 | 1.000 | 0.027 | 0.013 |
| | CQR-LASSO | – | – | 1.000 | 0.935 | 0.019 | 0.010 |
| | LAD-LASSO | – | – | 1.000 | 0.539 | 0.028 | 0.012 |
| | Oracle | – | – | 1.000 | 1.000 | 0.017 | 0.009 |
| 400 | ESL-LASSO | 4.500 | 0.331 | 1.000 | 1.000 | 0.012 | 0.006 |
| | CQR-LASSO | – | – | 1.000 | 0.966 | 0.010 | 0.005 |
| | LAD-LASSO | – | – | 1.000 | 0.498 | 0.0142 | 0.007 |
| | Oracle | – | – | 1.000 | 1.000 | 0.009 | 0.005 |
| 600 | ESL-LASSO | 4.500 | 0.337 | 1.000 | 1.000 | 0.008 | 0.004 |
| | CQR-LASSO | – | – | 1.000 | 0.980 | 0.006 | 0.003 |
| | LAD-LASSO | – | – | 1.000 | 0.480 | 0.009 | 0.005 |
| | Oracle | – | – | 1.000 | 1.000 | 0.006 | 0.003 |
| 800 | ESL-LASSO | 4.500 | 0.338 | 1.000 | 1.000 | 0.005 | 0.003 |
| | CQR-LASSO | – | – | 1.000 | 0.988 | 0.005 | 0.002 |
| | LAD-LASSO | – | – | 1.000 | 0.498 | 0.007 | 0.003 |
| | Oracle | – | – | 1.000 | 1.000 | 0.004 | 0.002 |

Table 2.

Simulation results in the second setting

| n | Method | γ̄n | ζ̄(γn) | PSR | NSR | Model error: Median | Model error: MAD |
|---|---|---|---|---|---|---|---|
| 100 | ESL-LASSO | 4.315 | 0.454 | 0.939 | 1.000 | 0.352 | 0.231 |
| | CQR-LASSO | – | – | 1.000 | 0.781 | 0.066 | 0.033 |
| | LAD-LASSO | – | – | 1.000 | 0.738 | 0.113 | 0.061 |
| | Oracle | – | – | 1.000 | 1.000 | 0.051 | 0.026 |
| 150 | ESL-LASSO | 4.375 | 0.629 | 0.995 | 1.000 | 0.148 | 0.075 |
| | CQR-LASSO | – | – | 1.000 | 0.739 | 0.066 | 0.033 |
| | LAD-LASSO | – | – | 1.000 | 0.737 | 0.070 | 0.034 |
| | Oracle | – | – | 1.000 | 1.000 | 0.035 | 0.017 |
| 200 | ESL-LASSO | 4.449 | 0.633 | 1.000 | 1.000 | 0.080 | 0.039 |
| | CQR-LASSO | – | – | 1.000 | 0.789 | 0.046 | 0.023 |
| | LAD-LASSO | – | – | 1.000 | 0.712 | 0.050 | 0.026 |
| | Oracle | – | – | 1.000 | 1.000 | 0.025 | 0.013 |
| 400 | ESL-LASSO | 4.496 | 0.638 | 1.000 | 1.000 | 0.027 | 0.012 |
| | CQR-LASSO | – | – | 1.000 | 0.864 | 0.021 | 0.010 |
| | LAD-LASSO | – | – | 1.000 | 0.686 | 0.023 | 0.011 |
| | Oracle | – | – | 1.000 | 1.000 | 0.012 | 0.006 |
| 600 | ESL-LASSO | 4.499 | 0.642 | 1.000 | 1.000 | 0.015 | 0.007 |
| | CQR-LASSO | – | – | 1.000 | 0.897 | 0.015 | 0.007 |
| | LAD-LASSO | – | – | 1.000 | 0.650 | 0.016 | 0.008 |
| | Oracle | – | – | 1.000 | 1.000 | 0.008 | 0.004 |
| 800 | ESL-LASSO | 4.499 | 0.642 | 1.000 | 1.000 | 0.009 | 0.005 |
| | CQR-LASSO | – | – | 1.000 | 0.910 | 0.010 | 0.006 |
| | LAD-LASSO | – | – | 1.000 | 0.633 | 0.011 | 0.005 |
| | Oracle | – | – | 1.000 | 1.000 | 0.006 | 0.003 |

Table 3.

Simulation results in the third setting

| n | Method | γ̄n | ζ̄(γn) | PSR | NSR | Model error: Median | Model error: MAD |
|---|---|---|---|---|---|---|---|
| 100 | ESL-LASSO | 4.565 | 0.662 | 1.000 | 0.989 | 0.174 | 0.101 |
| | CQR-LASSO | – | – | 1.000 | 0.675 | 0.173 | 0.097 |
| | LAD-LASSO | – | – | 1.000 | 0.488 | 0.113 | 0.061 |
| | Oracle | – | – | 1.000 | 1.000 | 0.098 | 0.050 |
| 150 | ESL-LASSO | 3.646 | 0.731 | 1.000 | 1.000 | 0.081 | 0.046 |
| | CQR-LASSO | – | – | 1.000 | 0.730 | 0.103 | 0.055 |
| | LAD-LASSO | – | – | 1.000 | 0.492 | 0.067 | 0.036 |
| | Oracle | – | – | 1.000 | 1.000 | 0.066 | 0.036 |
| 200 | ESL-LASSO | 3.654 | 0.722 | 1.000 | 1.000 | 0.058 | 0.030 |
| | CQR-LASSO | – | – | 1.000 | 0.778 | 0.068 | 0.031 |
| | LAD-LASSO | – | – | 1.000 | 0.487 | 0.051 | 0.025 |
| | Oracle | – | – | 1.000 | 1.000 | 0.049 | 0.023 |
| 400 | ESL-LASSO | 3.589 | 0.850 | 1.000 | 1.000 | 0.022 | 0.012 |
| | CQR-LASSO | – | – | 1.000 | 0.856 | 0.031 | 0.016 |
| | LAD-LASSO | – | – | 1.000 | 0.459 | 0.023 | 0.011 |
| | Oracle | – | – | 1.000 | 1.000 | 0.023 | 0.011 |
| 600 | ESL-LASSO | 3.590 | 0.717 | 1.000 | 1.000 | 0.014 | 0.007 |
| | CQR-LASSO | – | – | 1.000 | 0.897 | 0.020 | 0.010 |
| | LAD-LASSO | – | – | 1.000 | 0.431 | 0.014 | 0.007 |
| | Oracle | – | – | 1.000 | 1.000 | 0.016 | 0.008 |
| 800 | ESL-LASSO | 3.525 | 0.833 | 1.000 | 1.000 | 0.010 | 0.005 |
| | CQR-LASSO | – | – | 1.000 | 0.912 | 0.015 | 0.008 |
| | LAD-LASSO | – | – | 1.000 | 0.435 | 0.011 | 0.005 |
| | Oracle | – | – | 1.000 | 1.000 | 0.012 | 0.006 |

The PSR is around 1 for all three methods in all settings. Therefore, the performance of the three methods is comparable in terms of the model error and PSR. What distinguishes ESL-LASSO from LAD-LASSO and CQR-LASSO is the NSR. Indeed, the NSR of the ESL-LASSO estimator is as close to 1 as that of the oracle estimator, while the NSRs of LAD-LASSO and CQR-LASSO range from 0.431 to 0.738 and from 0.675 to 0.988, respectively. These simulation experiments suggest that the ESL-LASSO estimator leads to consistent variable selection in common situations where outliers arise in either the response or the covariate domain.

So far, we have only considered d = 8. Next, we run a simulation study for d = 100. The true coefficients are set to be β = (1, 1.5, 2, 1, 0p)ᵀ, where 0p is a p-dimensional row vector of zeros, and p = 96. Covariate xi follows a multivariate normal distribution N(0, Ω2), and the error term follows a mixture normal distribution 0.7N(0, 1) + 0.3N(5, 3²). Since our proposed method requires that p is fixed and less than n, we take n = 1000. We replicate the simulation 500 times to evaluate the finite-sample performance of ESL-LASSO. The simulation results are summarized in Table 4. This table reveals that ESL-LASSO still performs better in variable selection and has much lower model error than LAD-LASSO and CQR-LASSO.

Table 4.

Simulation results when d = 100

| n | Method | γ̄n | ζ̄(γn) | PSR | NSR | Model error: Median | Model error: MAD |
|---|---|---|---|---|---|---|---|
| 1000 | ESL-LASSO | 4.441 | 0.736 | 1.000 | 1.000 | 0.010 | 0.005 |
| | CQR-LASSO | – | – | 1.000 | 0.866 | 0.030 | 0.013 |
| | LAD-LASSO | – | – | 1.000 | 0.661 | 0.022 | 0.008 |
| | Oracle | – | – | 1.000 | 1.000 | 0.010 | 0.005 |

4.2 Applications

Example 1

We apply the proposed methodology to analyze the Boston Housing Price Dataset, which is available at http://lib.stat.cmu.edu/datasets/boston. This dataset was presented by Harrison and Rubinfeld (1978), and is commonly used as an example for regression diagnostics (Belsley et al., 1980).

The data contain the following 14 variables: crim (per capita crime rate by town), zn (proportion of residential land zoned for lots over 25,000 sq.ft), indus (proportion of non-retail business acres per town), chas (Charles River dummy variable: equal to 1 if tract bounds river; 0 otherwise), nox (nitrogen oxides concentration: parts per 10 million), rm (average number of rooms per dwelling), age (proportion of owner-occupied units built prior to 1940), dis (weighted mean of distances to five Boston employment centres), rad (index of accessibility to radial highways), tax (full-value property-tax rate per ten thousand dollars), ptratio (pupil-teacher ratio by town), black (1000(Bk − 0.63)², where Bk is the proportion of blacks by town), lstat (lower status of the population (percent)), and medv (median value of owner-occupied homes in thousand dollars). There are 506 observations in the dataset. The response variable is medv, and the rest are the predictors. In this section, the predictors are scaled to have mean zero and unit variance.

Table 5 compares the estimates of the regression coefficients from the MM method and ordinary least-squares (OLS) besides ESL-LASSO, CQR-LASSO and LAD-LASSO. The selected variables and their coefficients are clearly different among the five methods.

Table 5.

Estimated regression coefficients from the Boston Housing Price Data

| Variable | ESL-LASSO | CQR-LASSO | LAD-LASSO | MM | OLS |
|---|---|---|---|---|---|
| crim | 0 | 0 | 0 | −0.097 | −0.101 |
| zn | 0 | 0 | 0 | 0.072 | 0.118 |
| indus | 0 | 0 | 0 | −0.005 | 0.015 |
| chas | 0 | 0 | 0 | 0.038 | 0.074 |
| nox | 0 | 0 | 0 | −0.097 | −0.224 |
| rm | 0.590 | 0.422 | 0.503 | 0.491 | 0.291 |
| age | 0 | 0 | 0 | −0.117 | 0.002 |
| dis | 0 | −0.057 | −0.013 | −0.235 | −0.338 |
| rad | 0 | 0 | 0 | 0.156 | 0.290 |
| tax | −0.105 | −0.133 | −0.058 | −0.208 | −0.226 |
| ptratio | −0.076 | −0.153 | −0.155 | −0.179 | −0.224 |
| black | 0 | 0.040 | 0.085 | 0.124 | 0.092 |
| lstat | −0.131 | −0.334 | −0.243 | −0.174 | −0.408 |

With the proposed ESL-LASSO procedure, Steps 1 and 2 of the selection procedure first choose the tuning parameter γn = 2.1, which corresponds to m = 8, the number of “bad points” among the observations. Table 5 reveals that ESL-LASSO selects four of the six variables selected by both CQR-LASSO and LAD-LASSO. The four variables are rm, tax, ptratio, and lstat. The two variables selected by CQR-LASSO and LAD-LASSO but not by ESL-LASSO are dis and black.
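The selection pattern among the three penalized methods can be tallied directly from the coefficients in Table 5. The snippet below is only a bookkeeping sketch: the coefficients are transcribed from the table, while the variable names `coefs`, `selected`, `common` and `extra` are illustrative.

```python
variables = ["crim", "zn", "indus", "chas", "nox", "rm", "age",
             "dis", "rad", "tax", "ptratio", "black", "lstat"]

# Coefficients transcribed from Table 5 (penalized methods only).
coefs = {
    "ESL-LASSO": [0, 0, 0, 0, 0, 0.590, 0, 0, 0, -0.105, -0.076, 0, -0.131],
    "CQR-LASSO": [0, 0, 0, 0, 0, 0.422, 0, -0.057, 0, -0.133, -0.153, 0.040, -0.334],
    "LAD-LASSO": [0, 0, 0, 0, 0, 0.503, 0, -0.013, 0, -0.058, -0.155, 0.085, -0.243],
}

# A variable counts as selected when its estimated coefficient is nonzero.
selected = {
    method: {name for name, beta in zip(variables, betas) if beta != 0}
    for method, betas in coefs.items()
}

# Variables chosen by all three methods.
common = selected["ESL-LASSO"] & selected["CQR-LASSO"] & selected["LAD-LASSO"]
# Variables chosen by both CQR-LASSO and LAD-LASSO but dropped by ESL-LASSO.
extra = (selected["CQR-LASSO"] & selected["LAD-LASSO"]) - selected["ESL-LASSO"]
```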

Example 2

As another illustration, we analyze the Plasma Beta-Carotene Level Dataset from a cross-sectional study. This dataset consists of 315 samples, of which 273 are female patients, and can be downloaded at http://lib.stat.cmu.edu/datasets/Plasma_Retinol.

In this example, we analyze only the 273 female patients. Of interest is the relationship between the plasma beta-carotene level (betaplasma) and the following 10 covariates: age, smoking status (smokstat), quetelet, vitamin use (vituse), number of calories consumed per day (calories), grams of fat consumed per day (fat), grams of fiber consumed per day (fiber), number of alcoholic drinks consumed per week (alcohol), cholesterol consumed (cholesterol), and dietary beta-carotene consumed (betadiet).

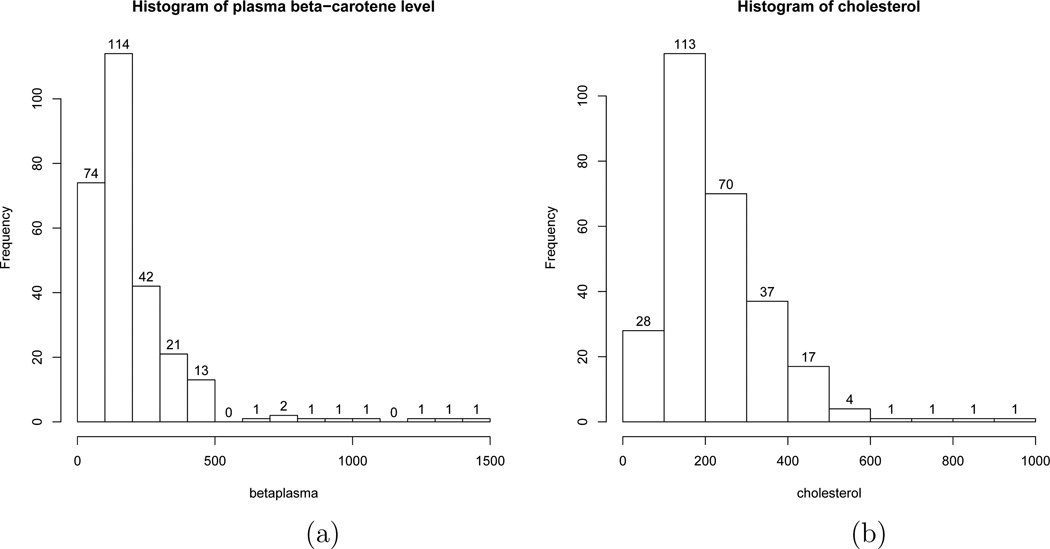

We plot histograms of betaplasma and cholesterol in Figure 2, which indicates that there are unusual points in either the response or the covariate domain. In the following, the predictors are scaled to have zero mean and unit variance. We use the first 200 samples as a training set to fit the model and the remaining 73 samples to evaluate the predictive ability of the selected model. Prediction performance is measured by the median absolute prediction error (MAPE).
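The evaluation scheme just described (fit on the first block of samples, then score the rest by MAPE) can be sketched as follows. This is a hedged illustration rather than the authors' code: the helper names `mape` and `split_first`, the toy data, and the coefficient vector `beta_hat` are all hypothetical, and the model is assumed to have no intercept.

```python
import statistics

def mape(y, X, beta):
    """Median of |y_i - x_i^T beta| over the rows of X."""
    abs_errors = [
        abs(yi - sum(xij * bj for xij, bj in zip(xi, beta)))
        for yi, xi in zip(y, X)
    ]
    return statistics.median(abs_errors)

def split_first(rows, n_train):
    """Use the first n_train rows for training and the rest for testing."""
    return rows[:n_train], rows[n_train:]

# Toy data standing in for the scaled predictors and the response.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]]
y = [0.5, -0.2, 0.1, 1.5]
beta_hat = [0.5, 0.0]  # hypothetical coefficients fitted on the training rows

X_train, X_test = split_first(X, 2)
y_train, y_test = split_first(y, 2)
test_mape = mape(y_test, X_test, beta_hat)
```

For the plasma data the same split would use `n_train = 200`, leaving 73 test samples. The median is used rather than the mean so that a few badly predicted test points do not dominate the summary.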

Figure 2.

Histogram of betaplasma (a) and cholesterol (b).

Next, we apply ESL-LASSO, CQR-LASSO and LAD-LASSO to the plasma beta-carotene level dataset. For ESL-LASSO, we first apply the proposed procedure to choose the tuning parameter γn and obtain γn = 0.190. The results are summarized in Table 6. This table reveals that ESL-LASSO selects “fiber”, which is also selected by CQR-LASSO and LAD-LASSO. In addition, CQR-LASSO selects “quetelet” and LAD-LASSO selects “betadiet”; neither of these is selected by ESL-LASSO. Although ESL-LASSO selects one fewer covariate than LAD-LASSO, it has a slightly smaller MAPE (0.559 versus 0.568). ESL-LASSO also selects one fewer covariate than CQR-LASSO, but its MAPE is roughly 10% higher (0.559 versus 0.503).

Table 6.

Estimated regression coefficients from the plasma beta-carotene level data

| Variable | ESL-LASSO | CQR-LASSO | LAD-LASSO |
|---|---|---|---|
| age | 0 | 0 | 0 |
| smokstat | 0 | 0 | 0 |
| quetelet | 0 | −0.057 | 0 |
| vituse | 0 | 0 | 0 |
| calories | 0 | 0 | 0 |
| fat | 0 | 0 | 0 |
| fiber | 0.114 | 0.077 | 0.058 |
| alcohol | 0 | 0 | 0 |
| cholesterol | 0 | 0 | 0 |
| betadiet | 0 | 0 | 0.075 |
| MAPE | 0.559 | 0.503 | 0.568 |
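The MAPE comparisons drawn from Table 6 reduce to simple relative differences. The following lines are a small arithmetic sketch; the MAPE values are transcribed from the table, and the helper name `relative_difference` is illustrative.

```python
# MAPE values transcribed from the last row of Table 6.
mape_values = {"ESL-LASSO": 0.559, "CQR-LASSO": 0.503, "LAD-LASSO": 0.568}

def relative_difference(a, b):
    """Relative difference of a with respect to b, i.e. (a - b) / b."""
    return (a - b) / b

# Positive values mean ESL-LASSO has the larger MAPE.
esl_vs_cqr = relative_difference(mape_values["ESL-LASSO"], mape_values["CQR-LASSO"])
esl_vs_lad = relative_difference(mape_values["ESL-LASSO"], mape_values["LAD-LASSO"])
```

The first quantity is positive (ESL-LASSO has the larger error than CQR-LASSO) and the second is slightly negative (ESL-LASSO has the smaller error than LAD-LASSO).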

For further comparisons, we apply a combination of the bootstrap and cross-validation to obtain the standard errors of the number of non-zeros and of the model errors for the two real datasets. For each bootstrap sample of the Boston Housing Price Dataset, we randomly split the 506 observations into training and testing sets of sizes 300 and 206, respectively. For the plasma beta-carotene level dataset, we randomly split the 273 observations into training and testing sets of sizes 200 and 73, respectively. For ESL-LASSO, CQR-LASSO and LAD-LASSO, we calculate the median of the absolute prediction error |y − xᵀβ̂| and the MAD of the prediction error y − xᵀβ̂ on the test set. The average errors and the average numbers of estimated nonzero coefficients over 200 repetitions are summarized in Table 7, with standard deviations in parentheses. ESL-LASSO tends to select the fewest nonzero coefficients.

Table 7.

Bootstrap results

| Dataset | Method | No. of non-zeros | Model error: Median | Model error: MAD |
|---|---|---|---|---|
| Boston Housing Price | ESL-LASSO | 3.710(0.830) | 0.381(0.021) | 0.180(0.069) |
| Boston Housing Price | CQR-LASSO | 7.025(1.015) | 0.286(0.016) | 0.258(0.020) |
| Boston Housing Price | LAD-LASSO | 5.020(0.839) | 0.277(0.017) | 0.113(0.075) |
| Plasma Beta-Carotene Level | ESL-LASSO | 0.305(0.462) | 0.459(0.030) | 0.180(0.054) |
| Plasma Beta-Carotene Level | CQR-LASSO | 2.915(1.026) | 0.453(0.032) | 0.299(0.050) |
| Plasma Beta-Carotene Level | LAD-LASSO | 2.570(1.020) | 0.429(0.036) | 0.176(0.161) |
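The resampling scheme behind Table 7 can be sketched as follows. This is a simplified illustration under several assumptions: the data are synthetic, the estimator `fit_slope` is a one-predictor least-squares stand-in rather than ESL-LASSO, each repetition is a random split without resampling with replacement, and the MAD is taken as the unscaled median absolute deviation about the median. All function names are hypothetical.

```python
import random
import statistics

def fit_slope(x, y):
    """Stand-in estimator: least-squares slope through the origin (not ESL-LASSO)."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)

def mad(values):
    """Unscaled median absolute deviation about the median (assumed definition)."""
    center = statistics.median(values)
    return statistics.median([abs(v - center) for v in values])

def repeated_split_errors(x, y, n_train, n_reps, seed=0):
    """Average and SD of the test-set median absolute error and MAD over random splits."""
    rng = random.Random(seed)
    indices = list(range(len(x)))
    medians, mads = [], []
    for _ in range(n_reps):
        rng.shuffle(indices)
        train, test = indices[:n_train], indices[n_train:]
        slope = fit_slope([x[i] for i in train], [y[i] for i in train])
        errors = [y[i] - slope * x[i] for i in test]
        medians.append(statistics.median([abs(e) for e in errors]))
        mads.append(mad(errors))
    return (statistics.fmean(medians), statistics.stdev(medians),
            statistics.fmean(mads), statistics.stdev(mads))

# Synthetic data with the plasma dataset's sample size (273) and split (200/73).
data_rng = random.Random(1)
x = [data_rng.gauss(0, 1) for _ in range(273)]
y = [0.5 * xi + data_rng.gauss(0, 1) for xi in x]
mean_med, sd_med, mean_mad, sd_mad = repeated_split_errors(x, y, n_train=200, n_reps=200)
```

Replacing `fit_slope` with a penalized robust estimator and also recording the number of nonzero coefficients per repetition would give summaries in the same "mean(SD)" form as the table.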

5. DISCUSSION

In this paper, we proposed a robust variable selection procedure via penalized regression with the exponential squared loss. We investigated the sampling properties and studied the robustness properties of the proposed estimators. Through theoretical and simulation results, we demonstrated the merits of our proposed method. We also illustrated that our proposed method can lead to notable differences in real data analysis. Specifically, we showed that our estimators possess the highest finite sample breakdown point and that their influence functions are bounded with respect to outliers in either the response or the covariate domain.

Theorem 2 requires that the penalty have the form pλnj(|βj|) = λnj g(|βj|), where g(·) is a strictly increasing and unbounded function on [0, ∞). We conjecture that the breakdown point result also holds for bounded penalties. Intuitively, we may use the one-step or k-step local linear approximation (LLA) proposed by Zou and Li (2008), where pλnj(|βj|) ≈ pλnj(|βj^(0)|) + p′λnj(|βj^(0)|)(|βj| − |βj^(0)|) for βj near an initial estimate βj^(0). LLA provides a reasonable approximation in the sense that it possesses the ascent property of the MM algorithm (Hunter and Lange, 2004) and yields the best convex majorization. Furthermore, the one-step LLA enjoys the oracle property. For the case where all p′λnj(|βj^(0)|) > 0, Theorem 2 is applicable to LLA. For penalties whose derivative equals zero for some j (e.g., SCAD), the LLA can be implemented by using Algorithm 2 as suggested in Section 4 of Zou and Li (2008).
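To make the role of the penalty derivative in LLA concrete, the sketch below computes the weights that a one-step LLA would place on each |βj| when the penalty is SCAD. It assumes the standard SCAD derivative of Fan and Li (2001) with a = 3.7; the function names and the initial estimates `beta_init` are hypothetical, and the weighted L1 fit that would follow is not shown.

```python
def scad_derivative(theta, lam, a=3.7):
    """Derivative of the SCAD penalty at |theta| (Fan and Li, 2001), for a > 2."""
    theta = abs(theta)
    if theta <= lam:
        return lam
    # Decreases linearly to zero on (lam, a * lam] and is zero beyond a * lam.
    return max(a * lam - theta, 0.0) / (a - 1.0)

def lla_weights(beta_init, lam, a=3.7):
    """Per-coefficient L1 weights used by a one-step LLA around beta_init."""
    return [scad_derivative(b, lam, a) for b in beta_init]

# Hypothetical initial estimates: small, moderate, and large coefficients.
beta_init = [0.05, 0.8, 3.0]
weights = lla_weights(beta_init, lam=0.5)
```

With λ = 0.5 the large coefficient lies beyond aλ = 1.85 and receives weight zero, which is exactly the situation in which the derivative vanishes for some j and the unbounded-penalty form required by Theorem 2 no longer applies directly.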

How to select both γn and the regularization parameters λnj in a data-driven way is a difficult problem, since the selection of γn depends on the choice of λnj and on an estimate of β. Although data-adaptive methods such as cross-validation can be applied, they can be computationally expensive and may not satisfy condition (C2) of Remark 1. To implement our proposed ESL-LASSO, we consider a relatively simple approach: we first choose the regularization parameters λnj via a simple BIC criterion and then propose a data-driven approach to selecting the tuning parameter γn. We demonstrate the advantages of our methodology via simulation studies and applications. According to our simulation studies, the performance of our ESL-LASSO implementation is comparable to that of the oracle procedure irrespective of the presence and the mechanisms of outliers. We measure the performance by the model error, the positive selection rate, and the non-causal selection rate. In the presence of outliers (regardless of the mechanisms), LAD-LASSO and CQR-LASSO perform poorly in terms of the non-causal selection rate.

After re-analyzing the Boston Housing Price Dataset and the Plasma Beta-Carotene Level Dataset, we find that the results and their interpretation can be quite different with different methods. Although we do not know the true model from which the real data were generated, our simulation results suggest that a plausible cause of the discrepancies among ESL-LASSO, CQR-LASSO and LAD-LASSO is the presence of outliers in the datasets. We refer to Harrison and Rubinfeld (1978) on the identification of influential points for these datasets. In short, our proposed ESL-LASSO is expected to produce a more reliable model without the need to identify the specific influential points.

Acknowledgments

Huang’s research is partially supported by funding through Project 211 Phase 3 of SHUFE, and Shanghai Leading Academic Discipline Project, B803. Wang’s research is partially supported by NSFC (11271383), RFDP (20110171110037), SRF for ROCS, SEM, and the Fundamental Research Funds for the Central Universities. Zhang’s research is partially supported by grant R01DA016750-08 from the U.S. National Institute on Drug Abuse.

APPENDIX

Proof of Theorem 1 (i). Let ξn = n−1/2 + an. We first prove that for any given ε > 0, there exists a large constant C such that

| (A.1) |

where u is a d-dimensional vector such that ‖u‖ = C. Then, we prove that there exists a local maximizer β̂n such that ‖β̂n − β0‖ = Op(ξn). Let

Since pλnj (0) = 0 for j = 1, ⋯, d and γn − γ0 = op(1), by Taylor’s expansion, we have

| (A.2) |

Note that n−1/2Dn(β0, γ0) = Op(1). Therefore, the order of the first term on the right side is equal to in the last equation of (A.2). By choosing a sufficiently large C, the second term dominates the first term uniformly in ‖u‖ = C. Meanwhile, the third term in (A.2) is bounded by

Since bn = op(1), the third term is also dominated by the second term of (A.2). Therefore, (A.1) holds by choosing a sufficiently large C. The proof of Theorem 1 (i) is completed.

Lemma 1 Assume that the penalty function satisfies (2.3). If for some γ0 > 0, bn = op(1), , then with probability 1, for any given β satisfying ‖β − β0‖ = Op(n−1/2) and any constant C,

Proof of Lemma 1. We will show that with probability 1, for any β1 satisfying β1 − β01 = Op(n−1/2), and for some small εn = Cn−1/2 and j = s + 1, ⋯, d, we have ∂ℓn (β) /∂βj < 0, for 0 < βj < εn, and ∂ℓn (β) /∂βj > 0, for −εn < βj < 0. Let

| (5.3) |

By Taylor’s expansion, we have

where β* lies between β and β0. Note that

and

Since bn = op(1) and , we obtain β − β0 = Op(n−1/2). By , we have

Since with probability 1, the sign of the derivative is completely determined by that of βj. This completes the proof of Lemma 1.

Proof of Theorem 1 (ii). Part (a) holds by Lemma 1. We have shown that there exists a root-n consistent local maximizer of ℓn{(β1, 0)}, satisfying

| (5.4) |

Since β̂n1 is a consistent estimator, we have

where Qn (β, γ) is defined in (5.3). Since , the proof of part (b) is completed by Slutsky’s lemma and the central limit theorem.

Lemma 2 Let Dn = {D1, ⋯, Dn} be any sample of size n, Dm = {D1, ⋯, Dm} be a contaminating sample of size m, and . Assume

For the weighted vector λ = (λn1, ⋯, λnd), if

there exists a C such that m/n ≤ ε implies

| (5.5) |

where , β̌n is an initial estimator of β and ϕγ(t) = 1 − exp(−t²/γ).
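The properties of ϕγ that the proof below relies on (continuity, boundedness, and evenness) are easy to check numerically. The following is a small illustrative sketch of the function ϕγ(t) = 1 − exp(−t²/γ) defined above; the function name `phi` and the evaluation points are arbitrary choices.

```python
import math

def phi(t, gamma):
    """phi_gamma(t) = 1 - exp(-t^2 / gamma), for gamma > 0."""
    return 1.0 - math.exp(-t * t / gamma)

gamma = 2.1  # the value reported for the Boston housing example
values = [phi(t, gamma) for t in (0.0, 0.5, 1.0, 3.0, 10.0, 1e6)]

# Evenness: phi(t) equals phi(-t) for every t.
is_even = all(phi(t, gamma) == phi(-t, gamma) for t in (0.5, 1.0, 3.0))
# Boundedness: phi never leaves [0, 1], however large the residual.
is_bounded = all(0.0 <= v <= 1.0 for v in values)
```

Because the loss saturates at 1, a single residual can contribute at most a fixed amount to the objective no matter how extreme it is, which is the mechanism exploited in the breakdown point argument.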

Proof of Lemma 2. By definition of anm, we have

for all β. Since ε < (1 − 2anm)/(2 − 2anm) and ζ(γn) < (1 − ε)(2 − 2anm), there exist an1 > anm and an2 > anm such that ε < (1 − 2an1)/(2 − 2an1) and ζ(γn) < (1 − ε)(2−2an2).

Take , we have

Since , by using a compactness argument (Yohai, 1987), we can find δ > 0 such that

Since , we can find η such that . Take Δ which satisfies

and take . We then have a0 < min{1 − η, ζ(γn)/2}. Then m/n ≤ ε implies

Because ϕγn(t) is a continuous, bounded, and even function, there exists a k2 ≥ 0 such that ϕγn(k2) = a0/(1 − ζ). Let C1 ≥ (k2 + maxm+1≤i≤n |Yi|)/δ. Then m/n ≤ ε implies

| (5.6) |

where .

Let . Take C = max{C1, C2}. We have

By (5.6), we only need to deal with the second term. Note that ‖β‖ ≥ C2 implies that there exists an element βj of β such that for some j. Hence,

Proof of Theorem 2. For a contaminated sample Dn, and m/n ≤ ε, according to Lemma 2, if ‖β̂n‖ ≥ C, we have

This is a contradiction to the fact that β̂n minimizes for β ∈ ℝd. Therefore, we have

Proof of Theorem 3. Note that

| (5.7) |

where .

Let . Differentiating with respect to ε in both sides of (5.7) and letting ε → 0, we obtain

| (5.8) |

where . By using (5.7) and (5.8), it can be shown that

| (5.9) |

where

with

This completes the proof of Theorem 3.

Proof of Corollary 1. Since β̃n is a root-n consistent estimator and τ̂nj = log(n)/n, we obtain

| (5.10) |

which satisfies the conditions of oracle property in Theorem 1. This completes the proof of Corollary 1.

Contributor Information

Xueqin Wang, Email: wangxq88@mail.sysu.edu.cn, Department of Statistical Science, School of Mathematics and Computational Science, Sun Yat-Sen University, Guangzhou, 510275, China; and Zhongshan School of Medicine, Sun Yat-Sen University, Guangzhou, 510080, China; and Xinhua College, Sun Yat-Sen University, Guangzhou, 510520, China.

Yunlu Jiang, Email: jiangyunlu2011@gmail.com, Department of Statistical Science, School of Mathematics and Computational Science, Sun Yat-Sen University, Guangzhou, 510275, China.

Mian Huang, Email: huang.mian@mail.shufe.edu.cn, School of Statistics and Management, Shanghai University of Finance and Economics, Shanghai, 200433, China.

Heping Zhang, Email: heping.zhang@yale.edu, Department of Biostatistics, Yale University School of Public Health, New Haven, Connecticut 06520-8034, U.S.A. and Changjiang Scholar, Department of Statistical Science, School of Mathematics and Computational Science, Sun Yat-Sen University, Guangzhou, 510275, China.

REFERENCES

- Belsley D, Kuh E, Welsch R. Regression Diagnostics: Identifying Influential Data and Sources of Collinearity. Wiley; 1980.

- Bradic J, Fan J, Wang W. Penalized composite quasi-likelihood for ultrahigh dimensional variable selection. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2011;73(3):325–349. doi: 10.1111/j.1467-9868.2010.00764.x.

- Chen J, Chen Z. Extended Bayesian information criteria for model selection with large model spaces. Biometrika. 2008;95(3):759–771.

- Donoho D. Breakdown properties of multivariate location estimators. Technical report. Boston: Harvard University; 1982.

- Donoho D, Huber P. The notion of breakdown point. A Festschrift for Erich L. Lehmann. 1983:157–184.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. The Annals of Statistics. 2004;32(2):407–499.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96(456):1348–1360.

- Frank I, Friedman J. A statistical view of some chemometrics regression tools. Technometrics. 1993;35(2):109–135.

- Friedman J, Hastie T, Höfling H, Tibshirani R. Pathwise coordinate optimization. The Annals of Applied Statistics. 2007;1(2):302–332.

- Friedman J, Hastie T, Tibshirani R. Additive logistic regression: a statistical view of boosting. The Annals of Statistics. 2000;28(2):337–407.

- Gervini D, Yohai V. A class of robust and fully efficient regression estimators. The Annals of Statistics. 2002;30(2):583–616.

- Hampel F. Contributions to the theory of robust estimation. PhD thesis. Berkeley: University of California; 1968.

- Hampel F. A general qualitative definition of robustness. The Annals of Mathematical Statistics. 1971;42(6):1887–1896.

- Harrison D, Rubinfeld D. Hedonic prices and the demand for clean air. Journal of Environmental Economics and Management. 1978;5:81–102.

- He X, Simpson D. Lower bounds for contamination bias: Globally minimax versus locally linear estimation. The Annals of Statistics. 1993;21(1):314–337.

- Hunter D, Lange K. A tutorial on MM algorithms. The American Statistician. 2004;58(1):30–37.

- Johnson B, Peng L. Rank-based variable selection. Journal of Nonparametric Statistics. 2008;20(3):241–252.

- Kai B, Li R, Zou H. New efficient estimation and variable selection methods for semiparametric varying-coefficient partially linear models. The Annals of Statistics. 2011;39(1):305–332. doi: 10.1214/10-AOS842.

- Leng C. Variable selection and coefficient estimation via regularized rank regression. Statistica Sinica. 2010;20:167–181.

- Rousseeuw P, Yohai V. Robust regression by means of S-estimators. Robust and Nonlinear Time Series. 1984;26:256–272.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Methodological). 1996;58:267–288.

- Tseng P, Yun S. A coordinate gradient descent method for nonsmooth separable minimization. Mathematical Programming. 2009;117(1):387–423.

- Wang H, Li G, Jiang G. Robust regression shrinkage and consistent variable selection through the LAD-Lasso. Journal of Business and Economic Statistics. 2007;25(3):347–355.

- Wang L, Li R. Weighted Wilcoxon-type smoothly clipped absolute deviation method. Biometrics. 2009;65(2):564–571. doi: 10.1111/j.1541-0420.2008.01099.x.

- Wu T, Lange K. Coordinate descent algorithms for lasso penalized regression. The Annals of Applied Statistics. 2008;2(1):224–244. doi: 10.1214/10-AOAS388.

- Wu Y, Liu Y. Variable selection in quantile regression. Statistica Sinica. 2009;19(2):801–817.

- Yohai V. High breakdown-point and high efficiency robust estimates for regression. The Annals of Statistics. 1987;15(2):642–656.

- Yohai V, Zamar R. High breakdown-point estimates of regression by means of the minimization of an efficient scale. Journal of the American Statistical Association. 1988;83(402):406–413.

- Zhang C. Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics. 2010;38(2):894–942.

- Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101(476):1418–1429.

- Zou H, Li R. One-step sparse estimates in nonconcave penalized likelihood models. The Annals of Statistics. 2008;36(4):1509–1533. doi: 10.1214/009053607000000802.

- Zou H, Yuan M. Composite quantile regression and the oracle model selection theory. The Annals of Statistics. 2008;36(3):1108–1126.