Abstract

There is a knowledge gap concerning how well community-based teams fare in implementing evidence-based interventions (EBIs) over many years, a gap that is important to fill because sustained high-quality EBI implementation is essential to public health impact. The current study addresses this gap by evaluating data from PROSPER, a community-university intervention partnership model, in the context of a randomized controlled trial of 28 communities. Specifically, it examines community teams’ sustainability of implementation quality on a range of measures, for both family-focused and school-based EBIs. Average adherence ratings approached 90% for family-focused and school-based EBIs, across as many as 6 implementation cohorts. Additional indicators of implementation quality similarly showed consistently positive results. Correlations of the implementation quality outcomes with a number of characteristics of community teams and intervention leaders were calculated to explore their potential relevance to sustained implementation quality. Though several relationships attained statistical significance at particular points in time, none were stable across cohorts. The role of PROSPER’s continuous, proactive technical assistance in producing the positive results is discussed.

Low-quality implementation of preventive evidence-based interventions (EBIs) frequently has been found to result in less positive outcomes (Domitrovich & Greenberg, 2000; Durlak & DuPre, 2008; Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005), and thus constitutes a major barrier to the achievement of public health impact that might otherwise result from scaling up EBIs (Glasgow, Klesges, Dzewaltowski, Bull, & Estabrooks, 2004).

Two previous studies demonstrated the benefits of community-university partnerships in achieving high levels of quality in implementing universal preventive interventions (both reported in Spoth, Guyll, Trudeau, & Goldberg-Lillehoj, 2002). In addition, an earlier report from the same project as presented herein showed that both family and school-based EBIs administered by community teams (as opposed to being administered by research staff) were implemented with high quality (Spoth, Guyll, Lillehoj, Redmond, & Greenberg, 2007). High levels of implementation quality were observed, with implementation adherence averaging over 90% for both the family and school-based EBIs. However, these earlier reports examined implementation quality over no more than two cohorts. We are aware of no published studies addressing the sustained implementation quality of EBIs implemented by community teams across a period of time longer than two years. Thus, it remains an open question how well community-based teams might fare in implementing evidence-based interventions over time, as they become more established and are no longer primarily funded by research dollars. Addressing this knowledge gap is essential to achieving public health impact through broader diffusion of well-implemented preventive EBIs targeting youth (Glasgow et al., 2004; Spoth & Greenberg, 2005).

Here we examine the long-term sustainability of implementation quality and the correlates of implementation quality across 4 additional cohorts for the family-focused EBIs and 3 additional cohorts of school-based EBIs, that is, beyond those reported by Spoth, Guyll et al. (2007). The importance of examining long-term sustainability of high-quality implementation stems from two well-established findings in implementation science. First, low implementation quality is associated with poorer EBI outcomes (Durlak & DuPre, 2008; Fixsen et al., 2005). For example, a meta-analysis by Derzon, Sale, Springer, and Brounstein (2005) showed that mean effect sizes in intervention outcome studies typically were 2-3 times higher when the interventions were implemented with high quality. Second, the quality of EBI implementation tends to drift over time (Brown, Feinberg, & Greenberg, 2010; Fixsen et al., 2005; McHugh & Barlow, 2010). That is, the longer service providers are implementing a given EBI with successive cohorts of youth, the more likely the implementation is to drift to lower quality levels, absent quality controls. Achieving community-level public health impact in successive cohorts of youth targeted by EBIs requires sustained, high-quality implementation. Thus, it is critically important to examine the ability of community-based efforts to sustain quality implementation of EBIs with successive cohorts of youth, particularly when delivery is coordinated by community-based teams.

Here we define implementation quality as the delivery of a program as designed (Dane & Schneider, 1998). Thus, implementation quality can be assessed by a variety of measures and indices. In this study we assess quality according to three broad dimensions: adherence, quality of delivery, and participant engagement or responsiveness. Adherence focuses on a program's content, procedures, and format, and indicates the degree to which a particular implementation has remained faithful to an intervention's logic model and related guidelines for implementation. The relevant literature reports observed adherence levels from approximately 40% to 90% (Elliott & Mihalic, 2004; Fagan & Mihalic, 2003; Gottfredson, 2001), suggesting that values toward the upper end of this range indicate realistically achievable levels of implementation quality. Quality of delivery is the degree to which facilitators deliver the program in a skilled manner, with expert knowledge and appropriate responsivity to participants’ questions. Finally, participant engagement is the extent to which the intervention delivery succeeds in eliciting recipients’ interest and active participation.

Recent reviews and meta-analytic studies suggest that context and setting factors likely influence implementation quality. For example, in their review, Durlak and DuPre (2008) found that at least 23 context and setting factors (such as administrative support, quality training, technical assistance, organizational climate, and shared decision making) were predictive of implementation quality. These findings bolster recommendations for EBI implementation technical assistance and support systems (Mihalic, Fagan, Irwin, Ballard, & Elliott, 2004), and highlight their importance to ongoing quality maintenance. There has, however, been very limited study of these factors. Accordingly, here we focus on the long-term maintenance of implementation quality by community teams in the PROSPER community-university partnerships, which provided a support system for both family and school-based EBIs. We specifically address whether sustained high-quality implementation is achievable through a support system that provides ongoing, proactive technical assistance.

Our work is guided by theoretical models indicating that implementation quality can be affected by factors operating at multiple levels. Generally, factors that are more proximal to the “core” of an implementation system may exert more influence (Fixsen et al., 2005; Greenberg, Domitrovich, Graczyk, & Zins, 2001). As an example, a teacher’s instructional style and a principal’s support have been shown to influence implementation of school-based interventions (Brown et al., 2010; Ransford, Greenberg, Domitrovich, Small, & Jacobson, 2009) and would be more proximal to school-based program implementation quality than would be the meeting effectiveness of the community team supporting the program.

Our previous study (Spoth, Guyll et al., 2007) revealed little evidence of relationships between the factors considered and implementation quality. For instance, there were only weak relationships between community team characteristics (e.g., team effectiveness, meeting quality, team member attitude toward prevention) and implementation quality of a community-based, family-focused intervention, probably because the teams were less directly involved (i.e., less proximal) in implementation than were the program facilitators. However, Spoth, Guyll et al. characterized their results as somewhat inconclusive because of both limited variability associated with consistently high implementation quality and relatively small numbers of communities involved. One interpretation of these findings is that, overall, the PROSPER partnership model was sufficiently robust to ensure quality implementation in the initial, fully research-funded cohorts. Here, we examine these relationships, with multiple longitudinal assessments, during a period of sustained implementation that is now primarily funded by local community fundraising and support.

We hypothesized that, as in the earlier cohorts, the supports afforded by the PROSPER partnership model would lead to continued high implementation quality across additional cohorts and for both family and school-based interventions. With respect to potential predictors of sustained implementation quality, and based on our previous findings (Spoth, Guyll et al., 2007) and the literature reviewed, we expected that factors more proximal to actual program implementation would be more likely to be associated with implementation quality. We assumed that positive team functioning and effective technical assistance would ultimately be linked to higher quality through their favorable effects on the attitudes and self-efficacy of key implementation personnel in the communities, and by increasing their participation in and ownership of the implemented EBIs over time (see Bracht & Kingsbury, 1990; Flynn, 1995).

Methods

Community Selection and Assignment

The project included 28 school districts from two states (Iowa and Pennsylvania). Eligibility criteria for school districts were: (a) school district enrollment from 1,300 to 5,200 and (b) at least 15% of the student population eligible for free or reduced-cost school lunches. The 28 school districts were located in a variety of rural towns and small cities across the two states, with community populations ranging from 6,975 to 44,510. School districts were blocked (matched) on size and geographic location and then randomly assigned to either the partnership model condition (N = 14), described below, or to a control condition (N = 14) in which the communities/school districts did not receive programming support, but were free to conduct local programming as usual. At the point of data analysis for this study, partnership-supported family-focused programming had been delivered to 6 cohorts of students and school-based programming to 5 cohorts. The first two of these cohorts have participated in a longitudinal study of prevention program outcomes for families and youth, with positive results reported elsewhere (Redmond et al., 2009; Spoth, Redmond, et al., 2007; Spoth, Redmond, et al., 2010).
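The eligibility screen and blocked assignment described above can be sketched as follows. This is a minimal illustration, not the study's procedure: the district records, the pairing rule (sort by state and enrollment, then pair neighbors), and the seed are all hypothetical, since the text states only that districts were blocked on size and geographic location before random assignment.

```python
import random

def eligible(district):
    """Apply the two eligibility criteria stated in the text."""
    return (1300 <= district["enrollment"] <= 5200
            and district["pct_free_reduced_lunch"] >= 15)

def assign_matched_pairs(districts, seed=0):
    """Pair similar districts, then randomize condition within each pair.

    Assumption: pairs are formed by sorting on (state, enrollment); the
    actual matching procedure is not detailed in the text. An unpaired
    leftover district (odd pool size) is simply not assigned here.
    """
    rng = random.Random(seed)
    pool = sorted((d for d in districts if eligible(d)),
                  key=lambda d: (d["state"], d["enrollment"]))
    assignment = {}
    for i in range(0, len(pool) - 1, 2):
        pair = [pool[i], pool[i + 1]]
        rng.shuffle(pair)  # coin flip within the matched pair
        assignment[pair[0]["name"]] = "partnership"
        assignment[pair[1]["name"]] = "control"
    return assignment

# Hypothetical districts, for illustration only.
districts = [
    {"name": "A", "state": "IA", "enrollment": 1500, "pct_free_reduced_lunch": 22},
    {"name": "B", "state": "IA", "enrollment": 1700, "pct_free_reduced_lunch": 18},
    {"name": "C", "state": "PA", "enrollment": 4000, "pct_free_reduced_lunch": 30},
    {"name": "D", "state": "PA", "enrollment": 4300, "pct_free_reduced_lunch": 16},
    {"name": "E", "state": "PA", "enrollment": 900, "pct_free_reduced_lunch": 40},  # below 1,300
]
assignment = assign_matched_pairs(districts)
```

Randomizing within matched pairs guarantees equal numbers in each condition, consistent with the 14/14 split reported above.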

PROSPER Partnership Model

The PROSPER (PROmoting School-community-university Partnerships to Enhance Resilience) Partnership Model is a delivery system for large-scale, high quality implementation of EBIs that serve youth and their families. The general purpose of this delivery system is to reduce the prevalence of youth problem behaviors, enhance positive youth development, and strengthen families. It is designed to provide ongoing, proactive technical assistance to assure that EBIs provided for youth and their parents are implemented properly, are supported in the community, and can be sustained over time.

The PROSPER partnership model entails linking the expertise of university researchers with the outreach capabilities of university Extension personnel to partner with community teams focused on coordinating intervention implementation (see Spoth & Greenberg, 2005; Spoth, Greenberg, Bierman, & Redmond, 2004). Each community team was led by a local Extension System staff member and supported by a co-leader from the local public school district. The Extension System team leader facilitated the outreach function for the university, including prevention program dissemination. Members of the team also included health and social service providers, as well as parents and youth from the school district. At the start of the study, community teams typically included from seven to nine members.

The PROSPER partnership model incorporates a four-phase developmental process to support community teams and programs, beginning with an organizational phase and ending in a long-term sustainability phase, with benchmarking of progress. Teams are introduced to a sustainability model that guides the development of sustainability plans, with two primary goals. The first goal is to develop and sustain growth in reach and quality of EBIs, with indicators of success including long-term funding and high-quality program implementation. The second goal is to sustain well-functioning teams, including: regular, effective team meetings; high team member involvement and commitment to quality programming; effective relationships among the PROSPER community team, the school, and community groups; and high-quality communication, both among team members and between team members and other community stakeholders.

Functionally, the PROSPER partnership model utilizes a three-tiered structure. The community-level team is the first tier in the PROSPER model; its key tasks are further described below. The middle tier is the Prevention Coordinator Team, which provides technical assistance to the local community teams and serves a liaison function between the community team and the State Management Team. Prevention Coordinators (PCs) work closely with community teams to maximize recruitment, encourage quality implementation, and plan for sustainability, essentially providing targeted technical assistance to teams on all aspects of program adoption, implementation, and sustainability. The final tier is the State Management Team, composed of university researchers, Cooperative Extension System (CES) program specialists and administrators. The basic tasks of this team address support of PROSPER efforts through administrative oversight, evaluation efforts, guidance for technical assistance, and attainment of support for PROSPER within the Extension System.

The project plan guided the activities performed by PROSPER teams across the first 4 years, from program selection (Phase 1) and implementation operations (Phase 2) to seeking sustainable funding (Phase 3). During this time, teams met on a monthly basis and all team members were invited to a state-wide, cross-team annual meeting. In year three, the team leaders (and, occasionally, other team members) began gathering together 4-6 times per year for “learning community” meetings. These learning communities had agendas generated by the PROSPER Team Leaders, the PCs, and prevention scientists; they were designed to address team needs and to solve current and emerging implementation problems. These meetings provided a venue for prevention science updates for the Team Leaders (e.g., concerning effective use of data, team development, sustainability planning, media and communication planning, implementation fidelity, and evidence-based prevention practices; Mincemoyer, Perkins, & Santiago, 2008).

As part of the proactive consultation and support provided to each PROSPER team, PCs assisted with meeting agendas, communication, and decision-making processes; these efforts included bi-weekly phone calls and face-to-face visits at least quarterly. During the operations phase each PROSPER team implemented both a family-focused intervention and a school-based intervention. One of the initial tasks of the community teams was to select a universal family-based program from a menu of three EBIs that were appropriate for 6th graders and their families. The following year each team selected a school-based program from a menu of three EBIs that were appropriate for 7th graders in their classrooms. The family-focused intervention was implemented in the first year of the implementation phase and each year thereafter, whereas the school-based program was first implemented during the second year of the operations phase, and each year thereafter. As the local teams selected interventions, trained facilitators in the implementation of selected programs, recruited participants, and implemented the interventions, training and technical assistance (TA) were provided to help teams understand the literature-based rationale for high implementation quality, appreciate the benefits of ongoing implementation monitoring, and learn about effective monitoring techniques.

The federally-funded research project covered costs during the first two cohorts of intervention delivery, for each program chosen. Program implementation funds gradually were withdrawn over a two-year period. For Cohort 3, local teams were required to financially support a new implementation of the family-based program, but research matching funds were provided if local funds were raised for one group. For Cohort 4, research matching funds were only provided if teams increased the percentage of families recruited by 5% over the previous year. By Cohort 5, teams were responsible for financially supporting the implementation of both programs. Financially supporting the family-based programs entailed garnering approximately $3,000/group, with the typical PROSPER team implementing between three and five groups per year. Supporting the school-based program involved fewer financial resources ($200-1,000 per year); however, there was a commitment of teachers’ time to conduct all the sessions with seventh graders. PROSPER also provided three additional types of financial supports. First, 25% of the Extension team leaders’ salary was funded for Cohorts 1-5; in Cohort 6 this declined to 20%. Second, university funds provided a small yearly budget for team meetings and related activities. Third, team members were funded to attend the annual state-wide PROSPER meeting that focused on team development, recruitment, implementation and other relevant issues. Thus, in order to continue the implementation of the EBIs, each team had to generate ongoing local funding each year. As each community continued to implement EBIs, all communities were successful in raising local funds to support financial sustainability, the results of which a separate paper will report. Additional detail regarding the PROSPER partnership model may be obtained from Spoth et al. (2004).
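To give a sense of the scale of local fundraising implied by these figures, the following sketch combines the per-group and per-year costs quoted above. It is a rough bound only: it assumes a team bearing the full cost of both programs (as described for Cohort 5 onward) and ignores matching funds, salary support, and the meeting budget.

```python
# Figures quoted in the text (approximate, in dollars).
COST_PER_FAMILY_GROUP = 3000       # per SFP 10-14 group
GROUPS_PER_YEAR = (3, 5)           # typical range of groups per team
SCHOOL_PROGRAM_COST = (200, 1000)  # per year for the school-based program

def annual_local_funding(groups, school_cost):
    """Approximate dollars a team must raise for one year of both programs."""
    return groups * COST_PER_FAMILY_GROUP + school_cost

low = annual_local_funding(GROUPS_PER_YEAR[0], SCHOOL_PROGRAM_COST[0])
high = annual_local_funding(GROUPS_PER_YEAR[1], SCHOOL_PROGRAM_COST[1])
print(f"Approximate annual local funding: ${low:,} to ${high:,}")
```

Under these assumptions a typical team would need to raise on the order of $9,000 to $16,000 per year, nearly all of it for the family-focused program.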

Family Intervention: SFP 10-14

In all communities, teams selected the Strengthening Families Program: For Parents and Youth 10-14 (SFP 10-14) for implementation. The SFP 10-14 is designed to reduce adolescent substance use and other problem behaviors by improving parent-child relationships, increasing parenting skills, and facilitating youth prosocial and peer resistance skills (DeMarsh & Kumpfer, 1986; Kumpfer, Molgaard, & Spoth, 1996; Molgaard, Kumpfer, & Fleming, 1997; Molgaard, Spoth, & Redmond, 2000). The SFP 10-14 consisted of seven weekly family sessions when children were in the 6th grade. A total of three facilitators directed each session, with one assigned to the parents and two assigned to the youth, when they met separately, and all three being present when parents and youth met together. The interested reader may obtain further detail regarding SFP 10-14 at www.extension.iastate.edu/sfp/.

School Interventions

Multiple community teams selected each of the three school-based interventions offered, each of which is described next.

Life Skills Training (LST)

Based on social learning (Bandura, 1977) and problem behavior (Jessor & Jessor, 1977) theories, the 15-session LST focuses on peer resistance training, anxiety management, improvement of social and personal skills, and provision of information regarding substance use (Botvin, 1996, 2000).

Project ALERT

The 11-session school-based Project ALERT intervention is based on the social influence model of prevention that focuses on the effects of individuals’ beliefs, as reflected in the health belief model (Becker, 1974; Rosenstock, Strecher, & Becker, 1988), and the social learning and self-efficacy theories (Bandura, 1977). In particular, Project ALERT targets adolescents’ substance use beliefs and their resistance to social pressures to use.

All Stars

Guided by social learning (Bandura, 1977) and problem behavior (Jessor & Jessor, 1977) theories, All Stars seeks to decrease the favorability of attitudes toward substance use and violence, increase accuracy of perceptions of peer norms regarding these behaviors, increase school bonding, and lead students to commit to avoidance of substance use and violence (Hansen, 1996).

Control communities

Neither the family-focused nor school-based interventions were initially offered to the control communities, so implementation assessments were not conducted in those communities. In year four of the project, control communities were offered intervention training and materials. However, only two of the 14 control communities took advantage of the supports for school-based programming (specifically, LST and Project ALERT), and only one of these communities also elected to receive support for implementing the family-focused SFP 10-14 intervention.

Procedures

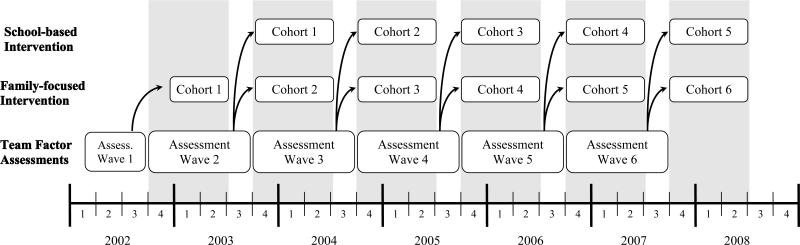

Across the 6-year period, there were 6 cohorts of families participating in the family-focused SFP 10-14 and 5 cohorts of students participating in the school-based interventions, with implementation outcomes from the first 2 cohorts of both intervention types having been previously reported (Redmond et al., 2009; Spoth, Guyll et al., 2007; Spoth et al., 2010). In all communities and for all cohorts, implementation was accomplished during the academic year from September to May. Assessment of local team functioning occurred at a variety of time intervals (e.g., biweekly, quarterly, biannually, and annually), as described below. Assessments of team functioning were used to predict implementation outcomes for a particular cohort using the following procedure. Each team assessment was used to predict implementation outcomes for only the succeeding cohort, such that assessments would temporally precede implementation outcomes, and thus occur before the start of the academic year marking the onset of implementation in a new cohort. All available assessments from the preceding year were combined (averaged) to predict implementation outcomes for each cohort. A flow chart of intervention implementations and assessments is provided in Figure 1.

Figure 1. Study timeline: Team factor assessment waves and implementation cohorts.

Study timeline showing temporal relationships between team factor assessment waves and implementation cohorts of the family-focused and school-based interventions. Shaded regions denote academic years, during which all interventions were conducted. Arrows indicate which team factor assessment wave data were used to predict individual cohort’s implementation quality outcome data in analyses. The instructor-related implementation factors were assessed during implementation of the school-based interventions, and were used to predict school-based implementation quality outcomes observed in the same cohort.
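The lagging rule for team factors (average everything collected in the preceding year, then pair it with the next cohort's outcome) can be sketched as below. The year indexing, in which cohort c is predicted from assessments in year c - 1, and the example ratings are hypothetical simplifications of the timeline in Figure 1.

```python
from statistics import mean

def lagged_predictor(assessments, cohort_year):
    """Average all team assessments from the year before `cohort_year`.

    `assessments` is a list of (year, score) tuples. Returns None when
    no assessment precedes the cohort, so no predictor is available.
    """
    prior = [score for year, score in assessments if year == cohort_year - 1]
    return mean(prior) if prior else None

# Hypothetical (year, score) ratings for one community team.
assessments = [(1, 4.0), (1, 3.0), (2, 4.5), (2, 3.5), (2, 4.0)]
predictor_for_cohort_3 = lagged_predictor(assessments, cohort_year=3)
```

Because only earlier assessments enter each predictor, the team factor always temporally precedes the implementation outcome it is correlated with.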

Measures of team characteristics were derived from reports provided by community team members and PCs. First, team process interviews lasting approximately one hour with each team member were conducted at the time of team formation, six months after team formation, and annually thereafter. The sample at pretest included 120 individuals from the 14 partnership model communities. A large majority of team members (87.5%) lived in or near the school district that organized the PROSPER community team. Second, data from PCs included observational ratings and perceptions of community team activities, with PC ratings derived from two different assessments: a biweekly report based on telephone contacts between the PCs and the PROSPER community team leaders, and a PC-completed questionnaire assessing a range of team characteristics that was completed on a quarterly basis initially, and then changed to a biannual basis four years after initial EBI implementation.

Measures

Implementation Adherence and Quality: Family-focused intervention

Implementation adherence was defined as the degree to which facilitators delivered the full, core content of the intervention (Dane & Schneider, 1998). Implementation outcomes for the SFP 10-14 family intervention were based on independent ratings by trained observers (see Spoth, Guyll et al., 2007). Observers completed adherence checklists, marking whether or not facilitators delivered specific content and activities prescribed by the intervention, with the implementation adherence score for that session being quantified as the proportion of program-specific content actually delivered. The adherence checklists were created by the program developers and adapted for this study, with specific versions and forms made not only for each particular session observed, but also for the youth, parent, and family portions of each session. Typically, a single observer attended the observed sessions; however, during implementation with the first two cohorts of students, some sessions were observed by two observers in order to assess the reliability of the observation procedure. Approximately 25% of the observed sessions in cohorts 1 and 2 were attended by two observers; the average correlation between observers’ adherence ratings exceeded .80 (Spoth, Guyll et al., 2007), supporting the reliability of the ratings.

Additional aspects of implementation quality were based on observers’ ratings of two other facets of intervention delivery. Specifically, group engagement and participation was assessed by observers completing two items regarding the amount of active family participation and engagement in the session. In addition, quality of delivery was assessed with a measure of facilitation quality, for which observers rated a number of both positive and negative features of facilitators’ behavior, such as their friendliness and ability to answer questions effectively. Both group engagement and facilitation quality were rated by the observer using a series of Likert ratings on a scale from 0 to 4. Implementation adherence and quality outcomes for the family-based SFP 10-14 were quantified for each community by first averaging their values across all sessions conducted by each facilitator, and then averaging these values across all facilitators within a community.
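The scoring just described (a per-session proportion of checklist items delivered, averaged within facilitator and then across facilitators) can be sketched as follows. The data layout and facilitator identifiers are hypothetical; only the two-stage averaging follows the text.

```python
from collections import defaultdict
from statistics import mean

def session_adherence(checklist):
    """Proportion of prescribed checklist items marked as delivered."""
    return sum(checklist) / len(checklist)

def community_score(sessions):
    """Average within each facilitator first, then across facilitators."""
    by_facilitator = defaultdict(list)
    for facilitator, checklist in sessions:
        by_facilitator[facilitator].append(session_adherence(checklist))
    return mean(mean(scores) for scores in by_facilitator.values())

# Hypothetical observations: (facilitator id, item delivered? per checklist item).
sessions = [
    ("f1", [True, True, True, True]),    # session adherence 1.00
    ("f1", [True, True, False, False]),  # session adherence 0.50
    ("f2", [True, True, True, True]),    # session adherence 1.00
]
score = community_score(sessions)
```

Averaging in two stages weights each facilitator equally regardless of how many of their sessions were observed; pooling all three sessions directly would give a different value in this example.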

Implementation Adherence and Quality: School-based interventions

Similar to the measures of implementation outcomes for the family-focused intervention, school-based intervention checklists were created by researchers who were the program developers and then adapted for this study. Implementation outcomes for the school-based interventions also were assessed by trained observers, with inter-rater reliability assessed for 23% of the observed sessions across cohorts 1 and 2, yielding an average correlation of .75 between observers’ adherence ratings (Spoth, Guyll et al., 2007). To assess implementation adherence, observers completed session-specific forms to indicate whether or not specific content had been delivered to student participants. In a separate section of the rating form, observers rated student engagement, including the appropriateness of their behavior, their attitudes toward the lesson, their interest in the content, and their willingness to discuss the material. Observers completed student engagement items on a 1 to 4 scale, with greater values indicating greater engagement. For each community, the implementation adherence and student engagement values were averaged across all sessions.

Instructor-Related Implementation Factors

Instructor affiliation: Agency personnel vs. school teachers

In the school-based interventions, the instructor could either be a member of a social services agency or a teacher at the school. In the analyses, agency personnel were coded as “1” and school teachers were coded as “2.”

Instructor lecturing

Observers of the school-based interventions reported the percentage of lesson time spent using each of four teaching techniques: lecture, discussion, demonstration, and practice. The amount of instructor lecturing is the percentage of the entire lesson spent lecturing. As with the instructor affiliation variable, the instructor lecturing variable is only relevant to the school-based interventions.

Each instructor was scored on the two instructor-related factors (affiliation and lecturing) each time she or he was observed, with multiple ratings being averaged to yield a single score for each instructor on each factor, which were then averaged across instructors to create school district scores.

Team Factors

Five characteristics of community teams were investigated. Additional descriptive detail and information on psychometrics is provided in earlier reports (Greenberg et al., 2007; Spoth, Guyll et al., 2007). Effectiveness reflected team members’ perceptions of their community team with regard to being cohesive, task oriented, and well-led, as revealed by responses to 16 items contained in the yearly team process interview. Items included “There is a strong sense of belonging in this team,” “There is strong emphasis on practical tasks in this team,” and “The team leadership has a clear vision for the team.” Team Attitude Regarding Prevention was assessed using two items in the annual team process interview (e.g., “Violence prevention programs are a good investment”). For both items team members responded according to a scale ranging from 1 “Not at all True” to 4 “Very True.” PCs also rated the Meeting Quality of community team meetings on a scale from 1 “Poor” to 5 “Excellent,” using the bi-weekly team data. The bi-weekly reports were aggregated into quarterly assessments that were then averaged into a single yearly Meeting Quality score.

Related to team functioning is the quality and amount of TA provided to community teams. Two variables associated with TA were Effectiveness of TA Collaboration and Frequency of TA Requests. As part of their regular reports on team functioning, PCs rated the team’s Effectiveness of TA Collaboration by completing 7 items, including “Cooperation with technical assistance” and “Timeliness of reports, applications, materials,” on a scale ranging from 1 “Poor” to 7 “Excellent.” The Frequency of TA Requests was assessed by summing the total number of TA requests within a quarter, as reported in PCs’ bi-weekly reports.
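The roll-up of bi-weekly PC reports into the yearly Meeting Quality score and the quarterly TA request counts might look like the sketch below. The report format is hypothetical, and averaging within each quarter is an assumption: the text says only that bi-weekly reports were "aggregated" into quarterly assessments.

```python
from statistics import mean

def yearly_meeting_quality(reports):
    """Average bi-weekly ratings within each quarter, then across quarters.

    `reports` is a list of (quarter, rating) tuples on the 1-5 scale.
    Assumption: the quarterly aggregate is a simple mean.
    """
    by_quarter = {}
    for quarter, rating in reports:
        by_quarter.setdefault(quarter, []).append(rating)
    quarterly = [mean(ratings) for ratings in by_quarter.values()]
    return mean(quarterly)

def ta_requests_by_quarter(reports):
    """Sum the TA requests logged in bi-weekly reports within each quarter."""
    totals = {}
    for quarter, n_requests in reports:
        totals[quarter] = totals.get(quarter, 0) + n_requests
    return totals

# Hypothetical bi-weekly report data for one team.
meeting_reports = [(1, 4), (1, 5), (2, 3), (3, 4), (3, 4), (4, 5)]
ta_reports = [(1, 2), (1, 0), (2, 1), (2, 3)]

meeting_quality = yearly_meeting_quality(meeting_reports)
ta_totals = ta_requests_by_quarter(ta_reports)
```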

Analytic Procedures

Descriptive analyses of the observation-based implementation adherence and quality measures were conducted and inter-rater agreement was assessed for the first two cohorts (for which double observations were employed). To address issues related to sustainability of implementation across time, repeated measures analyses of variance (RM-ANOVAs) were performed on the implementation quality outcome variables, with each community providing repeated measures across the different cohorts.
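For readers unfamiliar with the technique, the F statistic of a one-way repeated-measures ANOVA with cohort as the within-community factor can be computed as in the sketch below. This is a textbook complete-data calculation on hypothetical adherence values; it does not handle missing cohorts and does not compute a p-value, so it should not be read as the exact procedure used in the study.

```python
def rm_anova_f(data):
    """One-way repeated-measures ANOVA F statistic.

    `data` is a list of rows, one per community, each holding one value
    per cohort. Assumes complete data (no missing cells).
    Returns (F, df_cohort, df_error).
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cohort_means = [sum(row[j] for row in data) / n for j in range(k)]
    community_means = [sum(row) / k for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cohort = n * sum((m - grand) ** 2 for m in cohort_means)
    ss_community = k * sum((m - grand) ** 2 for m in community_means)
    # Residual variation after removing cohort and community effects.
    ss_error = ss_total - ss_cohort - ss_community

    df_cohort, df_error = k - 1, (k - 1) * (n - 1)
    f_stat = (ss_cohort / df_cohort) / (ss_error / df_error)
    return f_stat, df_cohort, df_error

# Hypothetical adherence percentages: 3 communities x 3 cohorts.
adherence = [
    [90, 91, 92],
    [91, 92, 94],
    [92, 94, 95],
]
f_stat, df_cohort, df_error = rm_anova_f(adherence)
```

Removing the between-community sum of squares from the error term is what distinguishes this from an ordinary one-way ANOVA: stable differences among communities do not count against the cohort effect.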

At Cohort 1, 14 communities provided observer ratings of implementation quality for both the family-focused and school-based interventions, though this level of 100% participation was not maintained at all assessments. Specifically, as few as 11 communities provided implementation observational data for the family-focused intervention (Cohort 6), and as few as 8 provided such data for the school-based interventions (Cohort 4). In some schools in the later cohorts, principals or teachers elected to discontinue allowing external observers in the classroom. Across cohorts and program types, an average of 12 communities provided observational data regarding implementation quality. A full information maximum likelihood procedure was used to address occurrences in which a community did not provide outcome data for a particular cohort. Community participation in the family-focused programs remained high throughout the years. Eleven of the 14 intervention communities implemented SFP for all six cohorts and, by the last cohort, 13 communities continued to actively implement this program. Available data on groups conducted and attendance indicated that the number of groups per community decreased from an initial high of 4.7 to 2.7 by the end of the study, though this was somewhat offset by greater attendance in the available groups, which increased from 8.3 to 11.1 across the same period. Total attendance was greatest for Cohort 1, with approximately 550 families attending (about 39 per community), and then stabilized from Cohort 3 through 6, at approximately 390 families per cohort (about 30 per participating community/school district). Community participation in the school-based program was similarly high, with 13 communities continuing implementation by the end of the study, and with 12 communities implementing for all five cohorts. Due to the nature of school-based programming, nearly all adolescents in the implementing schools attended the school-based interventions.
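The attendance figures above can be cross-checked against one another with simple arithmetic. The sketch assumes that the quoted attendance values (8.3 and 11.1) are families per group, and that the later-cohort total is spread over 13 participating communities; neither is stated explicitly in the text.

```python
def families_per_community(groups_per_community, families_per_group):
    """Families served per community in one cohort."""
    return groups_per_community * families_per_group

# Groups per community and attendance per group, as quoted in the text.
initial = families_per_community(4.7, 8.3)  # start of the study
final = families_per_community(2.7, 11.1)   # end of the study

# Total families quoted in the text, divided by the number of communities.
# Assumption: 14 communities at Cohort 1 and 13 in later cohorts.
reported_initial = 550 / 14
reported_final = 390 / 13

print(round(initial), round(reported_initial))
print(round(final), round(reported_final))
```

Both routes give roughly 39 families per community initially and roughly 30 in later cohorts, so the decline in the number of groups was only partly offset by larger groups.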

A total of five RM-ANOVAs were conducted, one for each of the three outcomes related to implementation of the SFP 10-14, and one for each of the two outcomes related to implementation of the school-based programs. For the SFP 10-14 analyses, the cohort factor had 6 levels, and for the school-based analyses the cohort factor had 5 levels. In order to explore correlates of implementation adherence and quality, bivariate correlations between the implementation ratings and the potential predictors previously described were calculated. These bivariate relationships were examined for the implementation outcomes for each cohort, with the team-related predictors having been assessed during the preceding year.
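The logic of a one-way repeated measures ANOVA with cohort as the within-community factor can be sketched as follows. This is a minimal illustration that assumes complete data (every community rated in every cohort); it does not reproduce the full information maximum likelihood handling of missing data used in the study, and the function names and the small data set are hypothetical.

```python
def rm_anova(data):
    """One-way repeated measures ANOVA for complete data.

    data: list of rows, one row per community, one value per cohort.
    Returns (F, df_cohort, df_error).
    """
    n = len(data)        # number of communities (subjects)
    k = len(data[0])     # number of cohorts (levels of the within factor)
    grand = sum(sum(row) for row in data) / (n * k)

    cohort_means = [sum(row[j] for row in data) / n for j in range(k)]
    community_means = [sum(row) / k for row in data]

    ss_cohort = n * sum((m - grand) ** 2 for m in cohort_means)
    ss_community = k * sum((m - grand) ** 2 for m in community_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Residual variation after removing cohort and community effects.
    ss_error = ss_total - ss_cohort - ss_community

    df_cohort, df_error = degrees_of_freedom(n, k)
    f_stat = (ss_cohort / df_cohort) / (ss_error / df_error)
    return f_stat, df_cohort, df_error


def degrees_of_freedom(n_communities, n_cohorts):
    """Degrees of freedom for the cohort effect and its error term."""
    return n_cohorts - 1, (n_cohorts - 1) * (n_communities - 1)


# Hypothetical ratings for 3 communities across 3 cohorts (not study data).
toy = [[1, 2, 3], [2, 3, 5], [3, 5, 6]]
f_stat, df1, df2 = rm_anova(toy)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

With 14 communities and complete data, the cohort effect would be tested on 5 and 65 degrees of freedom for the six-level SFP 10-14 analyses and on 4 and 52 for the five-level school-based analyses; the degrees of freedom in the actual analyses may differ because of the missing-data procedure.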

Results

Implementation Quality

Program adherence

Results presented in Table 1 show that adherence to the SFP 10-14 was generally high, typically exceeding 90% coverage of prescribed program content in each session (mean = 91.6%). Sites also exhibited high levels of adherence for the school-based interventions, with overall adherence levels across programs averaging approximately 87% across multiple cohorts. Adherence for individual programs and cohorts seldom fell below 80%, and never below 70%.
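The summary figures in this paragraph can be recovered from the cohort-level means in Table 1. The sketch below assumes simple unweighted averaging of the cohort means; the text does not state how the summaries were computed, so this is one reading that happens to reproduce them, not a description of the authors' procedure.

```python
# Cohort-level mean adherence (%) transcribed from Table 1.
adherence = {
    "SFP 10-14": [90.9, 90.8, 89.2, 92.6, 91.9, 94.1],
    "All Stars": [94.4, 87.3, 81.0, 81.5, 70.3],
    "LST": [88.8, 88.5, 84.1, 77.5, 96.6],
    "Project Alert": [88.2, 89.3, 90.9, 93.9, 90.0],
}
SCHOOL_BASED = ("All Stars", "LST", "Project Alert")


def mean(values):
    """Unweighted arithmetic mean."""
    return sum(values) / len(values)


# Assumption: summaries are unweighted means of the cohort means.
sfp_mean = mean(adherence["SFP 10-14"])
school_mean = mean([mean(adherence[p]) for p in SCHOOL_BASED])

all_cells = [v for values in adherence.values() for v in values]
below_80 = [v for v in all_cells if v < 80]

print(f"SFP 10-14 mean adherence: {sfp_mean:.1f}%")
print(f"School-based mean adherence: {school_mean:.1f}%")
print(f"Program-by-cohort means below 80%: {len(below_80)} of {len(all_cells)}")
print(f"Lowest program-by-cohort mean: {min(all_cells):.1f}%")
```

Weighting each cohort by its number of ratings would give slightly different values, so the unweighted reading should be treated as tentative.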

Table 1.

Observer Ratings of Implementation Quality Indicators

| Program | Implementation Indicator | Cohort | Nᵃ | Mean | Standard deviation | Range | Inter-rater reliability (r)ᵇ |
|---|---|---|---|---|---|---|---|
| SFP 10-14 | Adherence | 1 | 411 | 90.9% | 9.2% | 48%–100% | .86 (n = 131) |
| | | 2 | 350 | 90.8% | 10.9% | 24%–100% | .76 (n = 106) |
| | | 3 | 109 | 89.2% | 12.9% | 29%–100% | |
| | | 4 | 125 | 92.6% | 8.4% | 68%–100% | |
| | | 5 | 126 | 91.9% | 9.6% | 57%–100% | |
| | | 6 | 91 | 94.1% | 8.1% | 69%–100% | |
| | Group engagement/participation | 1 | 328 | 3.64 | .53 | 1.50–4.00 | .66 (n = 101) |
| | | 2 | 283 | 3.58 | .56 | 2.00–4.00 | .63 (n = 90) |
| | | 3 | 90 | 3.45 | .64 | 1.00–4.00 | |
| | | 4 | 102 | 3.71 | .47 | 2.00–4.00 | |
| | | 5 | 105 | 3.72 | .52 | 1.00–4.00 | |
| | | 6 | 75 | 3.69 | .44 | 2.00–4.00 | |
| | Facilitation quality | 1 | 894 | 3.76 | .34 | 1.89–4.00 | .71 (n = 271) |
| | | 2 | 754 | 3.71 | .40 | 1.25–4.00 | .66 (n = 229) |
| | | 3 | 224 | 3.73 | .34 | 1.40–4.00 | |
| | | 4 | 261 | 3.77 | .34 | 1.81–4.00 | |
| | | 5 | 250 | 3.84 | .29 | 1.94–4.00 | |
| | | 6 | 180 | 3.79 | .27 | 2.17–4.00 | |
| All Stars | Adherence | 1 | 67 | 94.4% | 9.3% | 47%–100% | .94 (n = 22) |
| | | 2 | 63 | 87.3% | 22.7% | 0%–100% | .67 (n = 22) |
| | | 3 | 26 | 81.0% | 20.4% | 40%–100% | |
| | | 4 | 21 | 81.5% | 14.8% | 55%–100% | |
| | | 5 | 16 | 70.3% | 25.0% | 20%–100% | |
| | Student engagement | 1 | 67 | 3.49 | .59 | 1.75–4.00 | .46 (n = 19) |
| | | 2 | 64 | 3.60 | .53 | 1.50–4.00 | .68 (n = 21) |
| | | 3 | 26 | 3.37 | .61 | 2.00–4.00 | |
| | | 4 | 21 | 3.59 | .51 | 2.00–4.00 | |
| | | 5 | 16 | 3.40 | .54 | 2.50–4.00 | |
| LST | Adherence | 1 | 41 | 88.8% | 14.6% | 33%–100% | .84 (n = 11) |
| | | 2 | 48 | 88.5% | 16.4% | 27%–100% | .94 (n = 6) |
| | | 3 | 25 | 84.1% | 21.7% | 20%–100% | |
| | | 4 | 13 | 77.5% | 22.9% | 21%–100% | |
| | | 5 | 15 | 96.6% | 5.2% | 87%–100% | |
| | Student engagement | 1 | 41 | 3.37 | .51 | 1.75–4.00 | .71 (n = 9) |
| | | 2 | 48 | 3.49 | .63 | 1.75–4.00 | .90 (n = 6) |
| | | 3 | 25 | 3.48 | .55 | 2.25–4.00 | |
| | | 4 | 13 | 3.46 | .34 | 3.00–4.00 | |
| | | 5 | 15 | 3.26 | .49 | 2.25–4.00 | |
| Project Alert | Adherence | 1 | 67 | 88.2% | 14.6% | 25%–100% | .24 (n = 25) |
| | | 2 | 50 | 89.3% | 10.2% | 64%–100% | .70 (n = 14) |
| | | 3 | 45 | 90.9% | 15.9% | 9%–100% | |
| | | 4 | 64 | 93.9% | 10.8% | 60%–100% | |
| | | 5 | 73 | 90.0% | 12.7% | 33%–100% | |
| | Student engagement | 1 | 67 | 3.39 | .50 | 1.75–4.00 | .43 (n = 25) |
| | | 2 | 50 | 3.31 | .53 | 2.00–4.00 | .23 (n = 13) |
| | | 3 | 45 | 3.50 | .44 | 2.00–4.00 | |
| | | 4 | 64 | 3.55 | .47 | 1.75–4.00 | |
| | | 5 | 72 | 3.60 | .39 | 2.25–4.00 | |
| All School-based Programsᶜ | Adherence | 1 | 175 | 90.7% | 13.1% | 25%–100% | .81 (n = 58) |
| | | 2 | 162 | 88.3% | 17.6% | 0%–100% | .69 (n = 42) |
| | | 3 | 96 | 86.5% | 19.1% | 9%–100% | |
| | | 4 | 98 | 89.0% | 15.2% | 21%–100% | |
| | | 5 | 104 | 87.9% | 16.4% | 20%–100% | |
| | Student engagement | 1 | 175 | 3.42 | .54 | 1.75–4.00 | .55 (n = 53) |
| | | 2 | 162 | 3.48 | .57 | 1.50–4.00 | .55 (n = 40) |
| | | 3 | 96 | 3.46 | .52 | 2.00–4.00 | |
| | | 4 | 98 | 3.55 | .46 | 1.75–4.00 | |
| | | 5 | 103 | 3.51 | .44 | 2.25–4.00 | |

Note. Values for Cohorts 1 and 2 were reported in the earlier paper, as noted in the introduction (Spoth, Guyll et al., 2007).

ᵃ Values of N in this column correspond to the number of ratings for each variable. The ratings used for these statistics were those made in sessions observed only by a single observer. For SFP 10-14, there was one rating of adherence per session observed. Group participation was not rated for all sessions, resulting in an average of less than one rating per observed session. In each session, facilitation quality was rated once for the parent sessions (because they had a single facilitator), twice for youth sessions (because they had two facilitators), and three times for family sessions (because they had one parent and two youth facilitators). The school-based programs yielded one rating of adherence and one rating of student engagement for each session observed.

ᵇ Inter-rater reliability was routinely assessed through double-observations during the first two cohorts of each program’s implementation. Values reflect bivariate correlations between ratings provided by two observers rating the same session. The values of n in this column correspond to the number of rating pairs on which each correlation is based. See previous footnote for information regarding the number of ratings produced per session for each of the variables from the SFP 10-14 and school-based programs.

ᶜ The school-based programs included All Stars, LST and Project Alert.

Group participation, student engagement, and facilitator qualities

Participants tended to actively participate in and be well engaged by the family-focused and school-based interventions, as evidenced by high scores on the relevant measures (Table 1). In addition, the facilitator qualities described in the measures section—which were assessed only for the family-focused program—also tended toward the upper end of the scale, indicating that facilitators characteristically exhibited positive qualities.

Sustained Implementation Quality

The results presented in Table 1 show that the indicators of implementation quality tended to be sustained across time from Cohorts 1 through 6 for the family-focused SFP 10-14, and from Cohorts 1 through 5 for the school-based programs. Further, analyses suggested only a single variable for which implementation quality significantly varied across time. Specifically, observer ratings of group participation in SFP 10-14 exhibited differences across cohorts (F = 1.98, p < .10). However, examination of the means for each cohort indicates that group participation was generally higher in later cohorts than in earlier cohorts. Thus, overall, these results show that the community teams successfully sustained high quality implementation across six years while delivering two different kinds of preventive interventions.

Correlates of Implementation Quality

Table 2 presents the bivariate correlations of the five indicators of implementation quality with the seven team and instructor variables considered for their potential relevance to implementation quality. In light of the low statistical power resulting from the small number of observations associated with the community-level analysis (N = 14) and the exploratory nature of the analysis, we applied a significance level of p ≤ .10. For the family-focused SFP 10-14 intervention, a total of nine correlations attained significance at p ≤ .10, a total consistent with the number that would be expected by chance alone across the 105 correlations examined. With respect to the school-based interventions, 10 of the 80 correlations examined were significant at p ≤ .10, a number that only slightly exceeds the number expected by chance. Although consistent patterns of strong correlations were absent, in the case of instructor affiliation, implementation adherence by school teachers was significantly greater in two cohorts, and correlations of .50 or higher were observed for three of the five cohorts.
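The comparison with chance in this paragraph rests on simple arithmetic: at a significance level of .10, about one correlation in ten would be expected to reach significance even if no true relationships existed. The sketch below works through that expectation and, as a further illustration, a binomial tail probability. Treating the tests as independent is a simplifying assumption made here (correlations computed on the same 14 communities are not independent), and the function names are illustrative.

```python
from math import comb

ALPHA = 0.10  # significance level applied in the text


def expected_by_chance(n_tests, alpha=ALPHA):
    """Expected number of significant results if every null hypothesis were true."""
    return n_tests * alpha


def prob_at_least(k, n_tests, alpha=ALPHA):
    """P(X >= k) for X ~ Binomial(n_tests, alpha); assumes independent tests."""
    return sum(
        comb(n_tests, i) * alpha**i * (1 - alpha) ** (n_tests - i)
        for i in range(k, n_tests + 1)
    )


family_expected = expected_by_chance(105)  # family-focused: 9 observed
school_expected = expected_by_chance(80)   # school-based: 10 observed

print(f"Family-focused: expected about {family_expected:.1f}, observed 9")
print(f"School-based: expected about {school_expected:.1f}, observed 10")
print(f"P(at least 10 of 80 by chance) = {prob_at_least(10, 80):.2f}")
```

The expected counts are about 10.5 and 8, which is why nine and ten significant correlations give little reason to infer systematic relationships.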

Table 2.

Potential Correlates of Implementation Quality

| | Family-Focused Intervention (SFP 10-14) | | | | School-Based Interventions (All Stars, LST, Project Alert)ᵇ | | |
|---|---|---|---|---|---|---|---|
| | | Implementation Quality Indicator | | | | Implementation Quality Indicator | |
| Potential Correlates | Cohort | Adherence | Group engagement/participation | Facilitator quality | Cohort | Adherence | Student engagement |
| Team Factors | | | | | | | |
| Team effectivenessᵃ | 1 | n/a | n/a | n/a | | n/a | n/a |
| | 2 | 0.13 | 0.07 | 0.13 | 1 | 0.03 | 0.25 |
| | 3 | 0.21 | 0.35 | 0.37 | 2 | 0.70** | −0.25 |
| | 4 | 0.11 | 0.58* | 0.66* | 3 | 0.51 | −0.25 |
| | 5 | 0.04 | −0.32 | 0.29 | 4 | 0.35 | −0.31 |
| | 6 | −0.28 | 0.10 | 0.08 | 5 | 0.25 | 0.24 |
| Meeting quality | 1 | −0.04 | 0.16 | −0.01 | | n/a | n/a |
| | 2 | −0.22 | −0.16 | −0.09 | 1 | 0.08 | 0.46 |
| | 3 | −0.23 | −0.14 | −0.06 | 2 | 0.45 | −0.03 |
| | 4 | 0.14 | 0.12 | 0.14 | 3 | −0.14 | −0.27 |
| | 5 | 0.04 | 0.05 | 0.42 | 4 | −0.19 | −0.46 |
| | 6 | −0.29 | 0.18 | 0.26 | 5 | 0.45 | −0.14 |
| Team attitude regarding prevention | 1 | −0.01 | 0.06 | −0.06 | | n/a | n/a |
| | 2 | 0.23 | 0.36 | 0.43 | 1 | −0.16 | 0.55* |
| | 3 | 0.27 | 0.41 | 0.49 | 2 | 0.51+ | 0.29 |
| | 4 | 0.23 | 0.82** | 0.82** | 3 | 0.25 | 0.49 |
| | 5 | 0.10 | 0.04 | 0.11 | 4 | 0.10 | −0.40 |
| | 6 | 0.11 | 0.25 | 0.69* | 5 | 0.51 | 0.19 |
| Effective technical assistance collaboration | 1 | −0.25 | −0.13 | −0.33 | | n/a | n/a |
| | 2 | −0.24 | −0.20 | −0.12 | 1 | 0.20 | 0.32 |
| | 3 | −0.58* | −0.17 | −0.14 | 2 | 0.15 | −0.37 |
| | 4 | 0.04 | 0.12 | 0.06 | 3 | −0.09 | −0.44 |
| | 5 | −0.73* | 0.60* | −0.28 | 4 | −0.30 | −0.42 |
| | 6 | −0.37 | 0.28 | 0.06 | 5 | −0.12 | −0.12 |
| Frequency of technical assistance requests | 1 | −0.15 | −0.25 | −0.29 | | n/a | n/a |
| | 2 | 0.10 | 0.14 | 0.11 | 1 | −0.44 | −0.15 |
| | 3 | 0.17 | −0.23 | −0.39 | 2 | −0.25 | 0.34 |
| | 4 | 0.43 | 0.31 | 0.50 | 3 | 0.59+ | 0.21 |
| | 5 | 0.04 | −0.42 | −0.18 | 4 | 0.51 | 0.31 |
| | 6 | 0.34 | 0.35 | 0.47 | 5 | 0.52 | 0.03 |
| Instructor Factorsᶜ | | | | | | | |
| Instructor affiliationᵈ | | | | | | n/a | n/a |
| | | | | | 1 | 0.31 | 0.39 |
| | | | | | 2 | 0.51+ | 0.10 |
| | | | | | 3 | 0.42 | 0.65* |
| | | | | | 4 | 0.50 | −0.09 |
| | | | | | 5 | 0.67* | 0.29 |
| Instructor use of lecturing | | | | | | n/a | n/a |
| | | | | | 1 | −0.72** | −0.24 |
| | | | | | 2 | 0.25 | −0.09 |
| | | | | | 3 | 0.19 | −0.04 |
| | | | | | 4 | 0.37 | 0.13 |
| | | | | | 5 | 0.40 | −0.27 |

ᵃ Team effectiveness was not assessed prior to the first implementation of the interventions.

ᵇ The family program was first implemented during the 2002-2003 school year to cohort 1 (6th grade), and the school-based programs were first implemented during the following year, 2003-2004, to cohort 1 (7th grade). Therefore, there are no corresponding data for the school-based programs for the year in which the family-based program was first implemented.

ᶜ The instructor factors pertain only to instructors delivering the school-based interventions and are not relevant to the family-based program.

ᵈ Instructor affiliation was coded as 1 for agency personnel and 2 for school teachers.

+p ≤ .10. *p ≤ .05. **p ≤ .01.

Discussion

Pattern of Sustained Implementation Quality

First and foremost, this study demonstrates sustained implementation quality outcomes on a range of measures, across different types of interventions. Average adherence ratings approached 90% for both the family-focused and school-based interventions, a high level of quality that was sustained across as many as 6 implementation cohorts. Consistent with the sustained adherence ratings, other indicators of implementation quality also showed consistently positive results. Specifically, measures of participation by attending families and adolescents remained quite high throughout the observed period, as did measures of facilitator quality. These findings are especially noteworthy in light of the literature indicating that EBI implementation quality tends to decrease over time, especially when conducted by community teams (Brown et al., 2010; Fixsen et al., 2005; McHugh & Barlow, 2010). The positive outcomes reported in the present investigation likely reflect the strategies to sustain high quality implementation that are an integral component of the PROSPER partnership model. In particular, the PROSPER partnership model applies the expertise of University Extension personnel and researchers to ongoing, proactive technical assistance for community teams, in order to address a range of factors influencing sustained implementation quality, as described in the Methods section.

A number of variables associated with the characteristics of community teams and intervention facilitators were considered for their potential relevance to implementation quality. Although a few relationships did attain statistical significance at particular points in time, none was stable across cohorts. Similar to previous findings (Spoth, Guyll et al., 2007), the pattern of correlations revealed little consistency and thus little evidence for clear, stable, predictive relationships, providing no basis for strong conclusions. It is likely that the small number of communities that was feasible to include in the intervention condition of the trial (N = 14) and the limited variability in the dependent variables contributed to the instability in the relationships across cohorts; with such limited statistical power, the absence of clear patterns of significant findings is not surprising. Given the rather large number of correlations examined, it also is likely that some of the significant correlations simply reflect chance variation.

One factor that reduces the likelihood of obtaining significant relationships is the aforementioned primary focus of the PROSPER partnership model on maximizing implementation fidelity through support and technical assistance provided by prevention coordinators, University Extension, and university researchers. As a consequence, the appropriate utilization of the PROSPER partnership model leads to implementation quality that is generally quite high and of limited variability. For example, a primary reason that implementation is assessed each year is to monitor its quality. If it is low or declining, the PC and local team leader devise a plan to either provide remedial training/feedback to the facilitator or discontinue work with the facilitator. Functionally, this may result in a restriction of range in the dependent variables (i.e., the indicators of implementation quality) and mitigate the effects of factors that might otherwise affect implementation outcomes. The longitudinal nature of the present study allowed for examination of correlates over multiple years, and thereby provided a unique opportunity to explore whether any particular factors might emerge as being influential over time. No such relationships emerged in a definitive way, likely because of the sustained high implementation quality and other limitations already noted. Given the success of the PROSPER partnership model in sustaining implementation quality, it may be more revealing to test for correlates of implementation quality in settings that do not achieve such uniformly high levels of quality.
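The restriction-of-range argument can be illustrated with a small, fully synthetic example. The sketch below constructs a hypothetical predictor and a hypothetical quality score that are strongly related over their full range, then keeps only the cases with high quality scores, mimicking a setting in which low-quality implementation is corrected or discontinued. None of the numbers are study data, and the threshold of 80 is arbitrary.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Synthetic data: quality tracks the predictor, plus a fixed +/-10 disturbance.
predictor = list(range(100))
quality = [x + (10 if x % 2 == 0 else -10) for x in predictor]

r_full = pearson_r(predictor, quality)

# Keep only cases whose quality score is at or above the (arbitrary) threshold.
THRESHOLD = 80
kept = [(x, y) for x, y in zip(predictor, quality) if y >= THRESHOLD]
r_restricted = pearson_r([x for x, _ in kept], [y for _, y in kept])

print(f"Full range:       r = {r_full:.2f} (n = {len(predictor)})")
print(f"Restricted range: r = {r_restricted:.2f} (n = {len(kept)})")
```

In this constructed case the correlation drops substantially once only high-quality cases remain, even though the underlying relationship is unchanged, which is the mechanism suggested above for the weak and unstable correlates.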

In sum, these findings extend earlier work by the authors and other researchers in the field by examining how indicators of implementation may change over an extended period of time, across multiple cohorts of implementation, and in the course of delivering qualitatively different intervention types. In addition, the current findings have greater ecological validity in the sense that they were obtained over a significant period of time following commencement of the research project, and thus are unlikely to be unduly influenced by community and facilitator motivation associated with projects in their early phases. Rather, these findings likely reflect typical levels of implementation quality that are attainable when implementation is supported by a well-functioning partnership involving community teams with stable sources of support, such as Extension personnel and University researchers.

In this context, it is worth noting how PROSPER is designed to promote continuous technical assistance (TA) support, even in the long term. First, TA is thoroughly integrated into the PROSPER model in multiple ways. It constitutes a basic structural component of the model, with two of the three tiers designed to support ongoing, proactive TA. Also, detailed protocols for the ongoing TA include a benchmarking process applied across all model implementation phases, thereby ensuring TA effectiveness in the long term. It is expected that community teams in advanced stages of development will require sustained TA, although at reduced levels for more highly functioning teams.

Second, PROSPER model implementation includes a design for institutionalization through the states’ land-grant university Cooperative Extension System. The original two states implementing the model began by securing dedicated TA provider positions (called Prevention Coordinators, or PCs). In addition, across each subsequent phase of model implementation, an increasing level of effort was devoted to enhancing Cooperative Extension System capacity and commitment to ongoing model implementation (e.g., through administrator and staff awareness building, staff development practices, hiring, and long-term strategic work plans), in order to sustain such implementation beyond grant funding. While it is expected that most TA support for community teams will originate from the state-level TA system, communities also could fund their own TA to some degree, depending on the resources available to the community, in conjunction with those available from the Cooperative Extension System and the state.

Study Limitations

Three study limitations are noteworthy. First, although observer ratings are considered to be critically important, there is some debate in the literature regarding whether the presence of observers causes facilitators to demonstrate higher-quality implementation. Nonetheless, the use of trained observers has been recommended for intervention conditions such as those encountered in the current study (Dane & Schneider, 1998). In addition, unobtrusive measures such as hidden recording devices were not feasible because the varied settings in which the interventions were delivered did not typically provide support for such technology. Moreover, the predictive validity of observational measures has been reported to exceed the validity of self-report measures (Lillehoj, Griffin, & Spoth, 2004).

Second, it should be noted that the number of observations (and the number of sites observed) decreased following the first two cohorts of program delivery. In the case of the family-focused program, this was due in part to variations in the number of program groups conducted, as well as to reduced resources devoted to observations. As noted earlier, in the case of the school-based program, some schools and teachers eventually elected to stop allowing external observers into their classrooms. In this context, there were no observations of factors coincidental with the increasing missing data that would be expected to contribute to poorer implementation quality. Also, as noted earlier, missing data issues were addressed by using full information maximum likelihood analytic procedures. Although it is not possible to assess how the inclusion of more complete data may have altered findings, there is no clear evidence to suggest that findings based on data from later cohorts (where there are more missing data) are meaningfully different from those based on earlier cohorts that provided complete data. Nonetheless, the lack of definitive information on factors related to missing data is a limitation, and lower implementation quality in unobserved sites cannot be conclusively ruled out.

Finally, in this context it is noteworthy that, although significant intervention effects on youth problem behavior outcomes were found for Cohorts 1 and 2, and were positive through 4.5 years past baseline (e.g., Spoth et al., 2010), outcomes for subsequent cohorts were not tracked, and therefore could not be assessed.

Implications and Future Directions

Broader diffusion of EBIs can be effective only under conditions wherein sustainability is achieved. The partnering of community teams who are proximal to intervention delivery, with more distal but enduring university entities, such as University Extension, faculty, and research personnel, might offer one means of promoting sustainability. The PROSPER delivery system utilizes such partnerships and, in the current study, successfully achieved high levels of implementation quality that were sustained across six intervention cohorts. A key feature of PROSPER that likely contributes to the positive sustained implementation quality findings is its provision of continuous, proactive technical assistance, as outlined above. Indeed, these findings demonstrate that with appropriate, ongoing proactive technical assistance, communities are capable of implementing EBIs on a larger scale, with high and sustained quality. Ultimately, this capability constitutes a critical step in favorably impacting public health through community-based interventions.

In the current work the PROSPER partnership model was utilized in all intervention communities, whereas PROSPER technical assistance and related supports were not provided to control communities. The incremental usefulness of employing the PROSPER partnership model could be examined by comparing implementation quality outcomes in communities that receive PROSPER supports for implementing interventions with outcomes in communities that deliver the interventions on the PROSPER menu and have ongoing technical assistance support from program developers, but without the full benefits of the PROSPER partnership model.

Finally, it remains essential to conduct additional ecologically valid evaluations of the sustainability of implementation quality to assure public health impact of scaled-up EBIs. The results of this study encourage further deployment of intervention delivery systems such as PROSPER as a means of conducting large-scale preventive interventions in general populations.

Acknowledgments

Work on this paper was supported by the National Institute on Drug Abuse (DA013709) and co-funding from the National Institute on Alcohol Abuse and Alcoholism.

References

- Bandura A. Social learning theory. Prentice Hall; Englewood Cliffs, NJ: 1977.

- Becker MH. The Health Belief Model and Personal Health Behavior. Health Education Monographs. 1974;2(4):324–473.

- Botvin GJ. Life Skills Training: Promoting health and personal development. Princeton Health Press; Princeton, NJ: 1996.

- Botvin GJ. Life Skills Training: Promoting health and personal development. Princeton Health Press; Princeton, NJ: 2000.

- Bracht N, Kingsbury L. Community organization principles in health promotion: A five-stage model. In: Bracht N, editor. Health Promotion at the Community Level. Sage; Newbury Park, CA: 1990.

- Brown LD, Feinberg ME, Greenberg MT. Determinants of community coalition ability to support evidence-based programs. Prevention Science. 2010;11(3):287–97. doi: 10.1007/s11121-010-0173-6.

- Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation effects out of control? Clinical Psychology Review. 1998;18(1):23–45. doi: 10.1016/s0272-7358(97)00043-3.

- DeMarsh JP, Kumpfer KL. Family-oriented interventions for the prevention of chemical dependency in children and adolescents. In: Griswold-Ezekoye S, Kumpfer KL, Bukowski WJ, editors. Childhood and chemical abuse: Prevention and intervention. Haworth Press; New York: 1986. pp. 117–151.

- Derzon JH, Sale E, Springer JF, Brounstein P. Estimating intervention effectiveness: Synthetic projection of field evaluation results. The Journal of Primary Prevention. 2005;26:321–343. doi: 10.1007/s10935-005-5391-5.

- Domitrovich CE, Greenberg MT. The study of implementation: Current findings from effective programs that prevent mental disorders in school-aged children. Journal of Educational and Psychological Consultation. 2000;11(2):193–221.

- Durlak JA, DuPre E. Implementation Matters: A Review of Research on the Influence of Implementation on Program Outcomes and the Factors Affecting Implementation. American Journal of Community Psychology. 2008;41:327–350. doi: 10.1007/s10464-008-9165-0.

- Elliott DS, Mihalic S. Issues in disseminating and replicating effective prevention programs. Prevention Science. 2004;5(1):47–53. doi: 10.1023/b:prev.0000013981.28071.52.

- Fagan AA, Mihalic S. Strategies for enhancing the adoption of school-based prevention programs: Lessons learned from the Blueprints for Violence Prevention replications of the Life Skills Training program. Journal of Community Psychology. 2003;31(3):235–253.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Researcher Network (FMHI Publication #231); Tampa, FL: 2005.

- Flynn BS. Measuring community leaders’ perceived ownership of health education programs: Initial tests of reliability and validity. Health Education Research. 1995;10:27–36. doi: 10.1093/her/10.1.27.

- Glasgow RE, Klesges LM, Dzewaltowski DA, Bull SS, Estabrooks P. The future of health behavior change research: What is needed to improve translation of research into health promotion practice? Annals of Behavioral Medicine. 2004;27:3–12. doi: 10.1207/s15324796abm2701_2.

- Gottfredson DC. Schools and delinquency. Cambridge University Press; Cambridge, UK: 2001.

- Greenberg MT, Domitrovich CE, Graczyk PA, Zins JE. The study of implementation in school-based prevention research: Theory, research and practice. U.S. Department of Health and Human Services; 2001. Report submitted to the Center for Mental Health Services (CMHS) and Substance Abuse and Mental Health Administration (SAMHSA).

- Greenberg MT, Feinberg ME, Meyer-Chilenski S, Spoth RL, Redmond C. Community and team member factors that influence the early phases of local team partnerships in prevention: The PROSPER project. Journal of Primary Prevention. 2007;28:485–504. doi: 10.1007/s10935-007-0116-6.

- Hansen WB. Pilot test results comparing the All Stars program with seventh grade D.A.R.E. program integrative and mediating variable analysis. Substance Use and Misuse. 1996;31(10):1359–77. doi: 10.3109/10826089609063981.

- Jessor R, Jessor SL. Problem behavior and psychosocial development: A longitudinal study of youth. Academic Press; New York: 1977.

- Kumpfer KL, Molgaard V, Spoth R. The Strengthening Families Program for the prevention of delinquency and drug use. In: Peters RD, McMahon RJ, editors. Preventing childhood disorders, substance abuse, and delinquency. Sage; Thousand Oaks, CA: 1996. pp. 241–267.

- Lillehoj (Goldberg) CJ, Griffin KW, Spoth R. Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education and Behavior. 2004;31(2):242–257. doi: 10.1177/1090198103260514.

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments. American Psychologist. 2010;65:73–84. doi: 10.1037/a0018121.

- Mihalic S, Fagan A, Irwin K, Ballard D, Elliott D. Blueprints for Violence Prevention. U.S. Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention; Washington, DC: Jul, 2004. OJJDP (Office of Juvenile Justice and Delinquency Prevention) Juvenile Justice Bulletin (NCJ 204274).

- Mincemoyer C, Perkins D, Santiago A. An exploration of team leader’s perspectives of the Pennsylvania and Iowa PROSPER learning communities. Forum for Family and Consumer Issues. Published on-line 02/08. See http://ncsu.edu/ffci/publications/2008/v13-n3-2008-winter/index-v13-n3-winter-2008.php.

- Molgaard VM, Spoth R, Redmond C. Competency training: The Strengthening Families Program for Parents and Youth 10–14. U.S. Department of Justice, Office of Justice Programs, Office of Juvenile Justice and Delinquency Prevention; Washington, DC: 2000. OJJDP Juvenile Justice Bulletin (NCJ 182208).

- Molgaard V, Kumpfer K, Fleming B. The Strengthening Families Program: For Parents and Youth 10–14. Iowa State University Extension; Ames, IA: 1997.

- Ransford C, Greenberg MT, Domitrovich CE, Small M, Jacobson L. The role of teachers’ psychological experiences and perceptions of curriculum supports on implementation of a social and emotional learning curriculum. School Psychology Review. 2009;38(4):510–532.

- Redmond C, Spoth RL, Shin C, Schainker L, Greenberg M, Feinberg M. Long-term protective factor outcomes of evidence-based interventions implemented by community teams through a community-university partnership. Journal of Primary Prevention. 2009;30:513–530. doi: 10.1007/s10935-009-0189-5.

- Rosenstock IM, Strecher VJ, Becker MH. Social learning theory and the Health Belief Model. Health Education Quarterly. 1988;15(2):175–183. doi: 10.1177/109019818801500203.

- Spoth R, Greenberg M, Bierman K, Redmond C. PROSPER community-university partnership model for public education systems: Capacity-building for evidence-based, competence-building prevention [Invited article for Special issue]. Prevention Science. 2004;5(1):31–39. doi: 10.1023/b:prev.0000013979.52796.8b.

- Spoth R, Guyll M, Lillehoj CJ, Redmond C, Greenberg M. PROSPER study of evidence-based intervention implementation quality by community-university partnerships. Journal of Community Psychology. 2007;35(8):981–999. doi: 10.1002/jcop.20207.

- Spoth R, Guyll M, Trudeau L, Goldberg-Lillehoj C. Two studies of proximal outcomes and implementation quality of universal preventive interventions in a community-university collaboration context. Journal of Community Psychology. 2002;30(5):499–518.

- Spoth R, Redmond C, Clair S, Shin C, Greenberg M, Feinberg M. Preventing substance misuse through community-university partnerships and evidence-based interventions: PROSPER outcomes 4½ years past baseline. 2010. Manuscript submitted for publication.

- Spoth R, Redmond C, Shin C, Greenberg M, Clair S, Feinberg M. Substance use outcomes at 18 months past baseline: The PROSPER community-university partnership trial. American Journal of Preventive Medicine. 2007;32(5):395–402. doi: 10.1016/j.amepre.2007.01.014.

- Spoth RL, Greenberg MT. Toward a comprehensive strategy for effective practitioner-scientist partnerships and larger-scale community benefits. American Journal of Community Psychology. 2005;35(3/4):107–126. doi: 10.1007/s10464-005-3388-0.