Abstract

Objectives

Survey by questionnaire is a widely used research method in dental radiology. A major concern in reviews of questionnaire studies has been non-response. The objectives of this study were to review questionnaire studies in dental radiology with regard to potential survey errors and to develop recommendations to assist future researchers.

Methods

A literature search was carried out using PubMed (www.ncbi.nlm.nih.gov/pubmed), which provides internet-based access to Medline. The English language peer-reviewed literature was searched for all published studies, with no restriction on date; the articles retrieved were dated from 1983 to 2010. The medical subject heading terms used were “questionnaire”, “dental radiology” and “dental radiography”. The reference sections of the retrieved articles were hand-searched to identify further relevant papers. Reviews, commentaries and relevant studies from the wider literature were also included.

Results

Fifty-three questionnaire studies were identified in the dental literature that concerned dental radiography and included a report of response rate. All were published between 1983 and 2010. In total, 87 articles are referred to in this review, including the 53 dental radiology studies. Other cited articles include reviews, commentaries and examples of studies outside dental radiology where they are germane to the arguments presented.

Conclusions

Non-response is only one of four broad areas of error to which questionnaire surveys are subject. This review considers coverage, sampling and measurement, as well as non-response. Recommendations are made to assist future research that uses questionnaire surveys.

Keywords: dentistry, dental radiography, questionnaires, research design

Introduction

Surveys of dentists by questionnaire on the subject of dental radiology are a widely used research method. They can provide important, cost-effective information on dentists' knowledge, attitudes and practices.1-3 There have been several reviews or commentaries on the design of such studies. Nevertheless, the focus of these articles is overwhelmingly on the quantity of response, in other words non-response error.4-7 Reviews that consider aspects of the quality of response are much less common.8 In 1989, Groves9 described four sources of error in sample surveys, of which non-response is only one: coverage error, sampling error, non-response error and measurement error. No reviews or commentaries were identified that consider all of these aspects of questionnaire design for health studies. Consequently, the objectives of this study were to review questionnaire studies in dental radiology with regard to potential survey errors and to develop recommendations to assist future researchers.

This review of questionnaire studies concerned dental radiology in particular but drew on the wider dental and medical literature where this was considered relevant.

Materials and methods

A literature search was carried out using PubMed (www.ncbi.nlm.nih.gov/pubmed), which provides internet-based access to Medline. The English language peer-reviewed literature was searched for all published studies, with no restriction on date; the articles retrieved were dated from 1983 to 2010. The medical subject heading terms used were “questionnaire”, “dental radiology” and “dental radiography”. The reference sections of the retrieved articles were hand-searched to identify further relevant papers. This hand search was repeated with each set of newly identified articles until the search was judged to be as complete as reasonably achievable. As far as possible, the review followed a systematic approach. Nevertheless, a formal systematic review, such as those conducted to investigate therapeutic interventions or diagnostic tests, was not feasible.

Inclusion criteria

True questionnaire surveys were considered to be those in which questionnaires were used to survey populations. Whilst the objective was to identify studies that concern dental radiology, studies from the wider literature were also identified by this method and included, where relevant. The search also identified reviews and commentaries on the design of questionnaire studies, and these have been included in this review, where appropriate.

Exclusion criteria

Some studies used the word “questionnaire” in the report but were not, in reality, surveys of populations. An example would be a study involving a small panel of selected observers in which the “questionnaire” was simply a way of recording data. Such studies were excluded from the review.

Data analysis

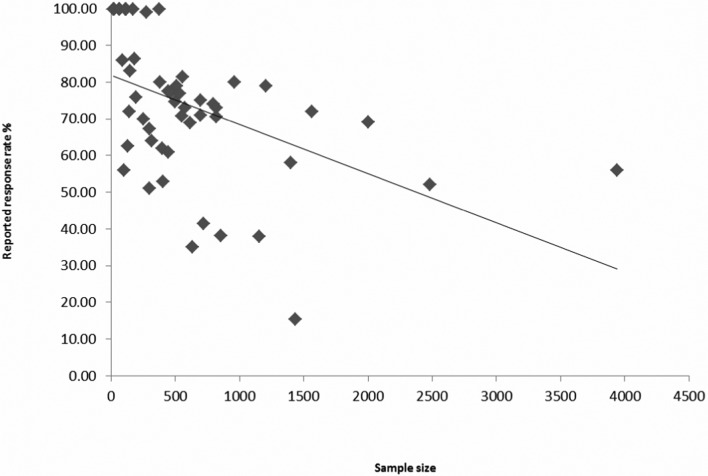

For questionnaire-based studies on dental radiology for which a response rate was reported, the mean response rate was calculated and an analysis of response rates against sample size was conducted. Data were input into PASW statistics 18.0 (formerly SPSS) (PASW; Predictive Analytics Software, SPSS Inc., Chicago, IL). Pearson's correlation coefficient was calculated to determine the relationship between response rate and sample size (r = −0.47, p = 0.01).
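The authors used PASW Statistics for this analysis. For readers who wish to run the same kind of check on their own data, the following is a minimal Python sketch of Pearson's correlation coefficient between sample size and response rate. The sample sizes and response rates are invented for illustration and are not the 53 studies reviewed here; Python 3.10 and later also provide `statistics.correlation`, which computes the same coefficient.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and the two sums of squared deviations
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical (sample size, response rate %) pairs, NOT the reviewed studies
sample_sizes = [60, 120, 250, 500, 1000, 2000]
response_rates = [92.0, 85.0, 78.0, 70.0, 61.0, 55.0]

r = pearson_r(sample_sizes, response_rates)
print(f"r = {r:.2f}")  # negative here: larger samples, lower response
```

A negative r, as reported above, indicates that response rates tend to fall as sample size rises; it says nothing on its own about why.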

Results

This review identified examples of each of Groves' four key sources of survey error, namely coverage, sampling, non-response and measurement. Each of these may, in turn, be subdivided. These are summarized in Table 1.

Table 1. The four key sources of error in questionnaire surveys.

| Source of error | Subdivision |
|---|---|
| Coverage error | Does the sample frame include all members of the survey population? |
| | Is the source information current for the sample frame? |
| | Could the sample frame include duplicates? |
| | Does the sample frame include ineligible individuals? |
| Sampling error | Random sampling |
| | Total population approach |
| | Trading sampling error for non-response error |
| | Better allocation of resources through the use of sampling |
| | An appropriate sample size can provide precision |
| | Repeated large samples can lead to “questionnaire fatigue” |
| | Non-random “convenience” sampling |
| | Sample size selection |
| Non-response error | Definitions of response rate |
| | Response rates found in the dental radiology literature |
| | Non-response and non-response bias |
| | Response enhancement strategies |
| Measurement error | Do respondents always tell the truth? |
| | The wording of questions |
| | Different survey modes |

Fifty-three questionnaire studies were identified in the dental literature that concerned dental radiography and included a report of response rate.1,2,10-60 All were published between 1983 and 2010. In total, 87 articles are referred to in this review, including the 53 dental radiology studies. Other articles include reviews, commentaries and examples of studies outside dental radiology where they are germane to the arguments presented.

Discussion

The number of surveys to which dentists are subjected is unknown. However, as early as 1993, Horner et al22 commented, “It is possible that GDPs were suffering from ‘questionnaire fatigue’ ”. In addition to surveys by academic institutions, dentists regularly receive requests to complete commercial surveys. It is therefore unsurprising that non-response is considered to be a shortcoming of many surveys.61 Many authors have drawn attention to low response rates as sources of bias in surveys of health professionals.62-64 For example, Ho et al65 commented in 2007, “The main threat to the validity of survey findings arises from low response rates”. Nevertheless, non-response is only one component of survey error, and a good response rate alone does not guarantee the validity of findings.8 Furthermore, it has been argued that non-response is a source of error only to the extent that responders and non-responders differ on the variables of interest.5

Coverage error

The sample frame is the list from which a sample is to be drawn in order to represent the survey population. The ideal situation is that every member of the survey population appears once on the sample frame with accurate contact details. This is termed “full coverage”. In this way, every member of the survey population will have the same chance as any other of being selected into the survey sample to receive a questionnaire. When this is not the case, coverage error exists. A number of questions may be raised about coverage.

Does the sample frame include all members of the survey population?

The surveying of participants at a meeting or conference is a common source of coverage error. Participants would be expected to form a special sub-population of dentists who were available, interested and enthusiastic enough about the subject to attend a conference. For example, in 2003 Sakakura et al44 published a study entitled “A survey of radiographic prescription in dental implant assessment”. The authors selected a random sample of 69 dentists attending a dental implant meeting held in São Paulo, Brazil. The sample frame from which the sample was taken was very different from the survey population. This was stated at the outset to be “Dentists in Brazil”, but the sample frame was only those dentists attending one conference on one occasion.

Is the source information current for the sample frame?

In some studies there is concern that source data could be out of date and thus compromise the sample frame. For example, Jenkins et al33 and Kogon et al24 used lists of graduates from Cardiff and Ontario dental schools, respectively. Such lists would be expected to accumulate errors soon after graduation. Typically, contact details for graduates would only be updated where the graduates themselves had informed their dental school or alumni association. Where contact details are incorrect, an individual effectively has no chance of being included in the survey.

Could the sample frame include duplicates?

Kay and Nuttall23 carried out a study of restorative decisions based on bitewing radiographs. The source for the sample frame was the Glasgow Yellow Pages telephone directory. It is not unusual for UK dental practitioners to work in more than one practice, and each practice may appear separately in such a directory. A practitioner listed more than once would therefore have a greater chance of being selected for the sample and potentially have the opportunity to make more than one response.
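The effect of duplicate listings can be quantified. The following minimal Python sketch assumes simple random sampling without replacement from the listed entries: a person listed k times among N entries, with n entries drawn, is selected with probability 1 − C(N − k, n)/C(N, n). It also shows one way to remove duplicates before sampling. The directory records and the name-based matching key are hypothetical; in practice a unique identifier such as a registration number would be a more reliable key.

```python
from math import comb


def inclusion_probability(frame_size, sample_size, listings):
    """Chance that a person listed `listings` times appears at least once in a
    simple random sample (without replacement) of `sample_size` frame entries."""
    # P(at least one listing drawn) = 1 - P(none of the listings drawn)
    return 1 - comb(frame_size - listings, sample_size) / comb(frame_size, sample_size)


def deduplicate(entries, key):
    """Keep the first entry for each person, as identified by `key` (hypothetical)."""
    seen = set()
    unique = []
    for entry in entries:
        k = key(entry)
        if k not in seen:
            seen.add(k)
            unique.append(entry)
    return unique


# Hypothetical directory in which one dentist is listed at two practices
directory = [
    {"name": "A. Dentist", "practice": "High St"},
    {"name": "A. Dentist", "practice": "Park Rd"},
    {"name": "B. Dentist", "practice": "High St"},
]
frame = deduplicate(directory, key=lambda e: e["name"].lower())

print(f"{inclusion_probability(1000, 100, 1):.3f}")  # single listing → 0.100
print(f"{inclusion_probability(1000, 100, 2):.3f}")  # two listings → 0.190
```

In this illustration a practitioner listed twice is almost twice as likely to be sampled as one listed once, which is the coverage problem described above.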

Does the sample frame include ineligible individuals?

It is possible that a sample frame may include ineligible individuals who are outside the intended survey population. For example, in 2002 Stewardson42 carried out a study to assess endodontic practice. The sample frame was taken from the UK General Dental Council register for the year 2000. Inevitably, some registrants would not be working in clinical dentistry or would be working in areas in which endodontics was not carried out, such as oral medicine or orthodontics.

Sampling error

Estimates can be made by taking a random sample, and sample size calculation can deliver estimates of appropriate precision. Random sampling requires that every member of the sample frame have an equal chance of being selected in the sample. An example of a non-random sample is the 1998 study by Yang and Kiyak.66 This study was carried out to investigate the timing of orthodontic treatment in the USA. The authors reported that there were four groups within the sample. The first was a random sample of members of the American Association of Orthodontists. A second group was a sample taken from the directory of the midwest component of the Angle Society of Orthodontists. In the third, questionnaires were distributed at an annual meeting of orthodontists. The final group consisted of orthodontists who were recommended by the collaborators on the study. The result was a sample that was not randomly selected. Therefore, the authors introduced bias into the selection of the sample and so the study suffered from sampling error.
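By contrast with a sample assembled from several lists and personal recommendations, a simple random sample gives every member of the sample frame the same chance of selection. The following minimal Python sketch uses the standard library's `random.Random.sample`; the frame of identifiers is hypothetical, and the seed is included only so that the draw can be reproduced and documented.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Draw n distinct members so that each has the same chance, n / len(frame)."""
    if n > len(frame):
        raise ValueError("sample size exceeds the size of the sample frame")
    rng = random.Random(seed)  # seeded generator makes the draw reproducible
    return rng.sample(frame, n)


# Hypothetical frame of 3940 de-duplicated practitioner identifiers
frame = [f"dentist_{i:04d}" for i in range(1, 3941)]
sample = simple_random_sample(frame, 350, seed=2010)
print(len(sample), sample[:3])
```

Selection of this kind does not remove coverage error: if the frame itself is incomplete or out of date, the random sample inherits those faults.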

Total population approach

The simplest approach used by researchers is to target the whole of the relevant population. This would seem to have the advantage of eliminating sources of sampling error. Nevertheless, this approach has been criticized on several grounds.

As Dillman et al67 pointed out, “There is nothing to be gained by surveying all 1000 members of a population in a way that produces a 35% response rate”. An example from the dental literature is Wenzel and Møystad's study,36 which evaluated Norwegian general dental practitioners' decision criteria and characteristics for choosing digital radiographic equipment. The authors reported that a questionnaire was sent to all the dental practitioners in Norway, a total of 3940 dentists. The reported response rate was 56%. Whilst one could argue that there was minimal sampling error, the authors had the problem that 44% of their sample did not respond. Dillman et al67 commented further, “Survey sponsors simply trade small amounts of sampling error for potentially large amounts of non-response error”.

A second criticism of a total population approach is that resources may be wasted and that appropriate sampling is more cost-effective. Resources for research are always limited and researchers have an obligation to use them effectively. Reduction of non-response error can be costly. For example, several authors drew attention to the effectiveness of monetary incentives, the use of prepaid return envelopes, personalisation, pre-notification and follow-up contacts.4,5,68,69 Therefore, attempts at contacting an entire population can be at the expense of measures to reduce non-response.

A further concern is whether the findings from a whole population survey are any more valid than those from a random sample of sufficient size. Both Scheaffer et al70 and Dillman et al67 presented formulae to select an appropriate sample size. The required size depends on the size of the population, the estimated variability of the results, the desired margin of error and the desired confidence level. For example, for the population of 3940 investigated by Wenzel and Møystad,36 350 completed responses would have allowed the authors to be 95% confident that findings were within ± 5%.67 Therefore, it could be argued that Wenzel and Møystad36 could have made better use of their resources by choosing a much smaller sample and spending research funds on response enhancement strategies.
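The calculation can be sketched in Python using the standard finite-population formula for estimating a proportion, N_s = N_p·p(1 − p) / [(N_p − 1)(B/C)² + p(1 − p)], where N_p is the population size, B the margin of error, C the z value for the confidence level and p the expected proportion. This is our transcription of the type of formula presented by Dillman et al and Scheaffer et al and should be checked against those sources. With p = 0.5 it reproduces the figure of about 350 for a population of 3940. The second function inflates the target to allow for non-response; the 60% expected response rate is an assumption chosen for illustration.

```python
import math


def required_sample_size(population, margin=0.05, z=1.96, p=0.5):
    """Completed responses needed to estimate a proportion within +/- margin.

    population: size of the survey population (N_p)
    margin:     desired margin of error B (0.05 = +/- 5 percentage points)
    z:          standard normal value C for the confidence level (1.96 ~ 95%)
    p:          expected proportion; 0.5 gives the most conservative answer
    """
    variance = p * (1 - p)
    return (population * variance) / ((population - 1) * (margin / z) ** 2 + variance)


def questionnaires_to_send(completed_needed, expected_response_rate):
    """Inflate the target so that the expected number of returns still meets it."""
    return math.ceil(completed_needed / expected_response_rate)


n = required_sample_size(3940)  # population size from Wenzel and Møystad
mailing = questionnaires_to_send(math.ceil(n), 0.6)  # ASSUMED 60% response
print(round(n), mailing)
```

Even after allowing for a modest response rate, the mailing is a small fraction of the 3940 dentists in the population, leaving resources for follow-up contacts and other response enhancement measures.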

A final concern about whole population surveys relates to “questionnaire fatigue” and the danger that repeated surveys of unnecessarily large samples can jeopardise future co-operation of health professionals. In an example from the medical literature, in 1998 Kaner et al71 published an investigation into the non-response of general medical practitioners to surveys entitled, “So much post, so busy with practice—so no time!” The authors commented, “Response rates by general practitioners to postal surveys have consistently fallen, compromizing the validity of this type of research”. Similarly, McAvoy and Kaner72 published a commentary in the British Medical Journal entitled, “General practice postal surveys: a questionnaire too far?” They reported the case of one practitioner who returned an uncompleted questionnaire in its prepaid envelope, enclosing an invoice for £5 to cover his “administrative costs”. Whilst the above examples are all from the medical literature, one would expect that the pressures on medical and dental practitioners would be similar.

Non-random “convenience” sampling

As an alternative to a whole population approach, some researchers have used a more opportunistic method of sampling: a convenience sample. This may be defined as one that is selected at the convenience of the researcher or in which subjects are easy to recruit for a study. An example of convenience sampling is the 2005 study by Gijbels et al,73 which was performed on Belgian dentists to evaluate the use of digital radiographic equipment. A questionnaire was included in a dental magazine that was freely distributed to Belgian dentists. The number of responses to this survey was 350, although the circulation of the magazine was not reported by the authors. However, in a 2008 document, European Union figures estimated the number of dentists in Belgium to be 8423.74 This equates to an approximate response rate for this study of 4% (350/8423). The authors asserted that 30% of Belgian dentists use digital equipment for intraoral exposures. Given this method of sample selection, that is an unsafe conclusion.

Sample size selection

Sample size calculations are rarely referred to in reports of questionnaire surveys of dentists. Of the 53 questionnaire surveys involving dental radiography published from 1983 to 2010 that were reviewed, only four stated that a sample size calculation had been carried out.20,24,75,76

Non-response error

The response of individuals to surveys is often regarded as a key indicator of survey quality. Nevertheless, attention given exclusively to response rates has been questioned. For example, in 2001 Cummings et al77 concluded “Reported response rates are often used by researchers as a quick proxy for survey quality”.

In 1997, Rugg-Gunn,78 as editor of the British Dental Journal, set out guidelines for acceptable response rates. These are still used by researchers. A rate of 80% or over was regarded as good and over 70% as acceptable. In response, Martin79 criticised this approach and commented that “Crude response rates are not a valid way of judging manuscripts”. Burke and Palenik80 added to the discussion and remarked, “To achieve the magic 70% return, researchers will be encouraged to make surveys overly simple and offer them to non-random audiences.” In the same year, Asch et al64 wrote, “Investigators, journal editors and readers should devote more attention to assessments of bias, and less to specific response rate thresholds.”

In summary, widespread concern has been expressed that response rates in themselves are unreliable indicators of survey quality. A high reported response rate may mask other deficiencies in a study. Conversely, a low response rate does not necessarily lead to bias. The following section of this literature review will discuss issues of non-response and the methods used to reduce it.

Definitions of “response rate”

At first glance, the definition of “response rate” seems as simple as dividing the number of responses by the number of surveys distributed. Nevertheless, authors have variously accounted for the following in their calculations: the number of ineligible responses, the number of ineligible individuals in the sample as a whole, the number of individuals of unknown status, the number of incomplete or partially completed surveys and the number of refusals to participate in a survey. These refinements of response rate calculations have led to inconsistency in response rate reporting. Johnson and Owens81 commented, “when a response rate is given with no definition, it can mean anything”. As early as 1977, Kviz82 addressed this problem in his article “Toward a standard definition of response rate”. Kviz gave two distinct definitions, which he termed “response rate” and “completion rate”. Further definitions and terms were introduced by Shosteck and Fairweather83 in 1979, Groves9 in 1989, Asch et al64 in 1997 and Locker7 in 2000. Authors therefore have many options in presenting their response rate. In 2003, Johnson and Owens81 audited published articles from the professional literature and commented, “We have yet to encounter any case in which a response rate has been underestimated. There are powerful incentives to presenting one's work in the most favourable light possible”.
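The following minimal Python sketch shows how the same survey can yield different headline figures depending on which cases are removed from the denominator. The three definitions and the field names are illustrative assumptions; they are not Kviz's definitions or those of any other cited author, and the counts are invented.

```python
from dataclasses import dataclass


@dataclass
class SurveyOutcome:
    """Case counts for one survey (hypothetical field names)."""
    sent: int               # questionnaires distributed
    returned: int           # all questionnaires that came back, in any state
    complete_eligible: int  # returned, fully completed, respondent eligible
    known_ineligible: int   # e.g. retired or not in clinical practice
    undeliverable: int      # wrong or out-of-date contact details


def crude_rate(s):
    """Everything returned divided by everything sent."""
    return s.returned / s.sent


def rate_excluding_ineligible(s):
    """Usable responses over all sample members not known to be ineligible."""
    return s.complete_eligible / (s.sent - s.known_ineligible)


def rate_excluding_ineligible_and_undeliverable(s):
    """As above, but also dropping undeliverable cases from the denominator."""
    return s.complete_eligible / (s.sent - s.known_ineligible - s.undeliverable)


survey = SurveyOutcome(sent=500, returned=330, complete_eligible=280,
                       known_ineligible=30, undeliverable=20)
for label, rate in [
    ("crude", crude_rate(survey)),
    ("excluding ineligible", rate_excluding_ineligible(survey)),
    ("excluding ineligible and undeliverable",
     rate_excluding_ineligible_and_undeliverable(survey)),
]:
    print(f"{label}: {rate:.1%}")
```

With these invented counts the three figures are 66.0%, 59.6% and 62.2%. Reporting the underlying counts alongside the headline figure lets readers recompute whichever definition they prefer.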

Response rates found in the dental radiology literature

For the 53 questionnaire-based surveys on dental radiology identified in this review, the mean reported response rate was 73.7%. However, if the pooled data for all the studies are analysed using the completion rate of Kviz,82 the overall response is 61.9%. The discrepancy between the mean of the reported response rates and the pooled figure arises because studies with smaller samples generally had higher response rates, and an unweighted mean gives each small study the same weight as a large one.
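The difference between the two summary figures can be illustrated with a minimal Python sketch. The (sent, responded) pairs below are invented and are not drawn from the reviewed studies: two small studies with high response and one large study with low response.

```python
def mean_of_rates(studies):
    """Unweighted mean: every study counts equally, whatever its size."""
    return sum(responded / sent for sent, responded in studies) / len(studies)


def pooled_rate(studies):
    """Pooled: total responses over total questionnaires, so large studies dominate."""
    total_sent = sum(sent for sent, _ in studies)
    total_responded = sum(responded for _, responded in studies)
    return total_responded / total_sent


# Hypothetical (sent, responded) pairs
studies = [(50, 45), (80, 68), (2000, 1000)]
print(f"mean of rates: {mean_of_rates(studies):.1%}")  # → 75.0%
print(f"pooled rate:   {pooled_rate(studies):.1%}")    # → 52.3%
```

Neither figure is wrong, but they answer different questions, so a review should state which one it reports.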

Figure 1 illustrates the significant inverse relationship between sample size and response rates. Whilst the linear regression line is far from a close fit, the graph does show a trend for decreasing response at higher sample sizes and a cluster of the highest response rates for the smaller sample sizes.

Figure 1.

Response rates plotted against sample sizes for 53 questionnaire surveys related to dental radiology identified in the review. Pearson correlation coefficient r = −0.47, p = 0.01

For surveys on dental radiology, an interesting question is whether the subject of the questionnaire influences the response rate. For example, surveys on certain subjects, such as adherence to legislation on radiation protection, might be perceived as less interesting or more intrusive than those relating to new developments and techniques, such as digital radiology. Although this was not the objective of the current review, the 53 studies identified here could be separated into several broad subject areas. Taking pooled data for each category of studies, the response rates were as follows: prescription/selection criteria, 64.17% (n = 24); technique/equipment, 54.63% (n = 9); radiation protection, 72.24% (n = 7); interpretation, 57.97% (n = 6); knowledge of radiography, 72.66% (n = 2); and other, 58.08% (n = 5). The category “other” included, for example, studies on infection control in radiography and use of auxiliaries. On the basis of these figures and this small number of studies, it cannot be said that the subject of the survey influenced the response rate in studies on dental radiology, especially in view of the many other confounding factors that influence response. Further research may be useful.

Interestingly, some authors have surveyed the surveyors. Both Johnson and Owens81 and Asch et al64 carried out investigations of response rates in the medical literature. For this, they contacted authors of relevant articles who had carried out questionnaire surveys. The response from those who carried out surveys themselves was reported as 62.9% and 56%, respectively, rather less than the mean reported response rate above.

Non-response and non-response bias

Although response rates themselves receive the most attention in the medical and dental literature, non-response and non-response bias are different. Non-response is simply the failure of some members of a sample to respond to a request to participate in a survey. Non-response bias is error that occurs as a result. However, such error will occur only if the non-responders would have replied differently from the responders. Non-response, therefore, represents only the potential for non-response bias. If non-responders would have replied in the same way as responders then, even if there was non-response, there could be no non-response bias.
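This argument can be made exact with a standard decomposition. If a proportion RR of the sample responds, the full-sample mean is RR·ȳ_r + (1 − RR)·ȳ_nr, so the respondent mean differs from it by (1 − RR)(ȳ_r − ȳ_nr). The following minimal Python sketch computes this quantity; the proportions are hypothetical and describe no real survey. In practice ȳ_nr is unknown, which is exactly why non-response is only a potential source of bias.

```python
def nonresponse_bias(response_rate, mean_responders, mean_nonresponders):
    """Bias of the respondent mean relative to the full-sample mean.

    Full-sample mean = RR * mean_r + (1 - RR) * mean_nr, hence
    mean_r - full-sample mean = (1 - RR) * (mean_r - mean_nr).
    """
    return (1 - response_rate) * (mean_responders - mean_nonresponders)


# Hypothetical proportions of dentists reporting a given practice
low_rr_no_diff = nonresponse_bias(0.30, 0.40, 0.40)     # 30% response, groups alike
high_rr_big_diff = nonresponse_bias(0.80, 0.60, 0.30)   # 80% response, groups differ
print(f"{low_rr_no_diff:.2f}")    # → 0.00
print(f"{high_rr_big_diff:.2f}")  # → 0.06
```

A 30% response with similar groups gives no bias, whereas an 80% response with very different groups still overstates the proportion by six percentage points.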

In an investigation of non-response bias in a survey of dentists' infection control, McCarthy and MacDonald63 commented, “A low response rate does not necessarily entail non-response error. Conversely, it cannot be assumed that surveys with comparatively high response rates do not have non-response bias”. Further, Montori et al84 investigated methods of assessment of non-response bias, and explained, “Similarity in a limited number of characteristics between responders and non-responders does not guarantee similarity in their responses, because characteristics available for comparison between responders and non-responders may be weakly related or totally unrelated to the outcome variables in the survey”.

It is perhaps unsurprising that authors often avoid analysis of response rate and non-response bias. For example, Tan and Burke5 reviewed 77 publications in 1997. These were all mailed questionnaire surveys to dentists. They reported that no information on non-responders was available in any of the 77 papers that they assessed.

Response enhancement strategies

There have been several attempts to identify individual strategies that enhance the response rate to postal and electronic questionnaires. In 2009 Edwards et al4 published the second update of a systematic review of methods to increase response to postal and electronic questionnaires. The authors analysed 481 trials of postal questionnaires using 110 different response enhancement strategies. They also analysed 32 trials of electronic questionnaires using 27 different response enhancement strategies. One criticism of this study is that it included trials of all types, including health-related questionnaires, non-health-related questionnaires and surveys of health professionals and of the general public. Inclusion of surveys of all kinds in this review means that it may be inappropriate to apply its findings to a particular group. Another criticism concerns the finding that the most effective response enhancement strategy for mail surveys was “monetary incentive”. This was defined by the authors as “Any incentive that could be used by participants as money”. Other researchers have demonstrated that there is a crucial difference between incentives freely given with the request to complete a questionnaire and promises to pay participants after completion of the survey. For example, Church85 carried out an investigation into the effect of incentives on mail survey response rates. He reported, “It appears that people respond more favourably to incentives that are included with the questionnaire rather than those that are offered as contingent on the completed return”. Similarly, James and Bolstein86 investigated monetary incentives. They found that incentives as small as $1 significantly increased response rates. Conversely, the promise of $50 after completion and return of the questionnaire had no effect on response. 
Therefore, in the review by Edwards et al4 the broad category of “monetary incentive” concealed at least two separate strategies: payment at the time of the request and the promise of payment on completion.

Further, it could be argued that individual measures are not independent. In other words, it may be that to concentrate attention on one strategy in isolation is to miss the effectiveness of a package of measures working together. It seems logical that an approach that pays attention to all aspects of a survey design will be the most effective in enhancing response rate. Even though an incentive such as a monetary gift may be used, it seems safe to assume that this will not be fully effective in the absence of attention to other aspects of survey design. For example, Waltemyer et al87 found, under the conditions of their particular investigation, that a mailed questionnaire on coloured paper secured a higher response rate than one on white paper. One would not assume, however, that the brilliance of Pablo Picasso's paintings during his blue period was simply because they were blue. Furthermore, other studies have found that paper colour makes no difference to response and, in any event, it would seem a common sense position to assume that the enhancement of response rate is multifactorial.4

Measurement error

Measurement error occurs when a respondent's answer is inaccurate or imprecise. This raises the question of whether respondents always tell the truth on questionnaires and whether the wording, design or mode of the questionnaire leads respondents to answer in a certain way.

Do respondents always tell the truth?

In 2001 Stewardson35 conducted a questionnaire study to investigate the endodontic practices of general dental practitioners in Birmingham, UK. The author commented that respondents may report the use of materials and techniques that they know to be recommended although, in reality, they do not use them.

The wording of questions

In 2010, Aps2 carried out a questionnaire study of Flemish general dental practitioners' knowledge of dental radiology. The questionnaire included the following question: “For intraoral radiography I usually use the parallel technique”. The respondent was then prompted to answer “yes”, “no” or “no idea”. There was no choice given between the parallel technique and the bisecting angle technique. This probably led the respondents to answer “yes”, especially if they were unsure of the difference between the two techniques. The wording did not take account of the possible low level of knowledge of the respondents with regard to dental radiography.

Different survey modes

Another possible source of measurement error arises from the mode of the survey. For example, Salti and Whaites41 conducted a survey of radiographic practice among general dental practitioners in Damascus, Syria. Only one mailing was sent. Non-responders were then contacted directly and questionnaires were completed either by telephone or by personal interview. Whilst this was undoubtedly an effective way of reducing non-response, one cannot be sure that the respondents did not answer differently according to whether they were interviewed, telephoned or completed a written questionnaire.

Concluding comments

In conclusion, response rates are often incorrectly used as the key indicator of survey quality, and many authors have investigated methods of raising response. Nevertheless, non-response is only one of four broad areas of error to which questionnaire surveys are subject: coverage, sampling, non-response and measurement. Researchers will often wish to investigate as broad a population as possible so that findings are widely applicable. The trade-off is that the larger the population, the more difficult it is to identify every member and their contact details correctly so that they can be included in the sample frame. Resources for research are always limited. Therefore, there is a balance between the size of the sample and the costs of contacting the sample members effectively. Attempts to contact large samples, or even whole populations, restrict the resources available to implement response enhancement measures. Under these circumstances, researchers trade sampling error for non-response error. Nevertheless, sample sizes are rarely justified by sample size calculations, and non-random, or convenience, sampling is common. The issue of response rates is complicated by inconsistency in calculation and the capacity for overestimation of the true response. Further, non-response represents only the potential for non-response bias. Bias will occur only if there are differences between the responders and the non-responders on the measures of interest. Equally, similarity in the demographic characteristics of responders and non-responders does not necessarily mean that they are similar in their attitudes or beliefs with regard to the matter under investigation. Whilst authors have attempted to quantify the effectiveness of response enhancement strategies, such analysis presents difficulties.
There are many features of a survey to consider, including the subject matter, the length of the questionnaire, the design and the phrasing of questions, as well as individual response enhancement measures such as monetary incentive. It seems logical that all of these will have effects on response rate, both individually and in combination. The effects of single measures are therefore difficult to interpret, and studies that compare individual response enhancement strategies are only partially helpful. The question of what is an acceptable response rate also remains a question of judgment. This situation is probably best encapsulated by Montori et al,84 who commented, “The response rate below which validity is seriously compromized is arbitrary”.

This review has considered Groves'9 four survey errors in relation to questionnaire studies in dental radiology and to surveys in the wider dental and medical literature. From this, we have devised ten key recommendations to assist future researchers. These are presented in Table 2.

Table 2. Recommendations to researchers planning questionnaire studies.

| No. | Recommendation | Details |
|---|---|---|
| 1 | Reduce coverage error | To reduce coverage error, define a population so that there is a realistic chance of approaching full coverage in the sample frame |
| 2 | Try to identify every member of the population | Attempt to identify every member of the population with accurate, current contact details so that they can be included in the sample frame. Take steps to avoid including ineligible individuals as far as possible. Avoid duplicates |
| 3 | Do not survey the whole of a large population | Do not survey the whole of a large population in the hope that it will produce more representative data. Among other disadvantages, the response rate is very likely to suffer |
| 4 | Perform a sample size calculation | Select a random sample of a size that is large enough to deliver appropriate precision whilst small enough to enable the application of response enhancement strategies. Allow for a realistic number of non-responders when calculating the sample size |
| 5 | Avoid convenience samples | Avoid convenience samples where possible; they will inevitably introduce bias |
| 6 | Consider response enhancement strategies | Consider appropriate response enhancement strategies that have been reported in the dental and wider literature, but do not rely on these in isolation |
| 7 | Report genuine response rates | Report response rates by quoting actual figures for the various categories of response and non-response. Report a headline figure that genuinely reflects the response of the eligible members of the sample frame |
| 8 | Avoid confusing questions | Pay careful attention to the wording and layout of questions in order to reduce measurement error. Conduct a pilot study to identify any confusing or misleading questions |
| 9 | Avoid data collection by different modes | Try to avoid using different modes of data collection for different individuals. Whilst an initial approach by multiple modes such as post and e-mail is sensible, the same mode of data collection should be used for the whole sample where possible |
| 10 | Analyse the non-responders | Incorporate survey design features that allow analysis of non-responders. Do not rely on demographic data such as year of qualification or gender to make assessments of non-response bias |
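Recommendations 4 and 7 rest on simple arithmetic that is easy to get wrong. The Python sketch below is illustrative only: the function names, the outcome categories and all figures are hypothetical; the sample-size formula is the standard normal-approximation formula for estimating a single proportion (other designs and analyses need other formulas); and the response-rate definitions are examples of the competing denominators found in the literature, not a reporting standard. It shows how to inflate a sample for expected non-response, and how one set of returns can yield quite different headline "response rates".

```python
import math
from typing import Dict, Optional


def questionnaires_to_send(p: float, margin: float, z: float = 1.96,
                           population: Optional[int] = None,
                           expected_response: float = 1.0) -> int:
    """Recommendation 4 (sketch): how many questionnaires to send.

    n0 = z^2 * p * (1 - p) / margin^2 is the usual normal-approximation
    sample size for estimating a proportion p to within +/- margin.
    An optional finite population correction is applied, and the result
    is then inflated by the *assumed* response rate so that the expected
    number of usable returns still meets the target.
    """
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        # Finite population correction: n = n0 / (1 + (n0 - 1) / N)
        n0 = n0 / (1 + (n0 - 1) / population)
    returns_needed = math.ceil(n0)
    return math.ceil(returns_needed / expected_response)


def response_rates(mailed: int, complete: int, refused: int,
                   ineligible: int, undeliverable: int) -> Dict[str, float]:
    """Recommendation 7 (sketch): the same fieldwork, several 'response rates'.

    Hypothetical outcome categories for a postal survey:
      complete      - usable questionnaires returned
      refused       - returned blank or with an explicit refusal
      ineligible    - returned, but the person is confirmed ineligible
                      (e.g. retired or not in practice)
      undeliverable - returned by the postal service; eligibility unknown
    """
    returned = complete + refused + ineligible
    not_known_ineligible = mailed - ineligible
    return {
        # Counts every returned envelope as a response: inflates the figure.
        "any_return/mailed": returned / mailed,
        # Usable replies over everyone mailed, including the ineligible.
        "complete/mailed": complete / mailed,
        # Usable replies over all not confirmed ineligible: conservative headline.
        "complete/(mailed-ineligible)": complete / not_known_ineligible,
        # Also drops undeliverables from the denominator: most generous.
        "complete/(mailed-ineligible-undeliverable)":
            complete / (not_known_ineligible - undeliverable),
    }


if __name__ == "__main__":
    # Hypothetical planning figures: p = 0.5 (most conservative),
    # +/- 5 percentage points, 95% confidence, 60% expected response.
    print(questionnaires_to_send(0.5, 0.05, expected_response=0.6))  # 642
    # Hypothetical fieldwork outcome for 600 mailed questionnaires.
    for label, rate in response_rates(600, 300, 30, 20, 40).items():
        print(f"{label:45s} {rate:.1%}")
```

With the made-up fieldwork figures in the example (600 mailed, 300 usable, 30 refusals, 20 confirmed ineligible, 40 undeliverable), the four definitions give roughly 58%, 50%, 52% and 56%. This spread is why recommendation 7 asks for the underlying counts to be reported alongside the headline figure.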

Acknowledgment

The authors are grateful to Miss Michaela Goodwin for her statistical advice.

References

1. Jacobs R, Vanderstappen M, Bogaerts R, Gijbels F. Attitude of the Belgian dentist population towards radiation protection. Dentomaxillofac Radiol 2004;33:334–339

2. Aps JK. Flemish general dental practitioners' knowledge of dental radiology. Dentomaxillofac Radiol 2010;39:113–118

3. Ilguy D, Ilguy M, Dincer S, Bayirli G. Survey of dental radiological practice in Turkey. Dentomaxillofac Radiol 2005;34:222–227

4. Edwards PJ, Roberts I, Clarke MJ, Diguiseppi C, Wentz R, Kwan I, et al. Methods to increase response to postal and electronic questionnaires. Cochrane Database Syst Rev 2009;3:MR000008

5. Tan RT, Burke FJT. Response rates to questionnaires mailed to dentists. A review of 77 publications. Int Dent J 1997;47:349–354

6. Parashos P, Morgan MV, Messer HH. Response rate and nonresponse bias in a questionnaire survey of dentists. Community Dent Oral Epidemiol 2005;33:9–16

7. Locker D. Response and nonresponse bias in oral health surveys. J Public Health Dent 2000;60:72–81

8. McColl E, Jacoby A, Thomas L, Soutter J, Bamford C, Steen N, et al. Design and use of questionnaires: a review of best practice applicable to surveys of health service staff and patients. Health Technol Assess 2001;5(31):1–256

9. Groves RM. Survey errors and survey costs. New York: Wiley, 1989

10. Matteson SR, Morrison WS, Stanek EJ, 3rd, Phillips C. A survey of radiographs obtained at the initial dental examination and patient selection criteria for bitewings at recall. J Am Dent Assoc 1983;107:586–590

11. Stenstrom B, Karlsson L. Collective doses to the Swedish population from panoramic radiography and lateral cephalography. Swed Dent J 1988;12:161–170

12. Mileman P, van der Weele L, van de Poel F, Purdell-Lewis D. Dutch dentists' decisions to take bitewing radiographs. Community Dent Oral Epidemiol 1988;16:368–373

13. Geist JR, Stefanac SJ, Gander DL. Infection control procedures in intraoral radiology: a survey of Michigan dental offices. Clin Prev Dent 1990;12:4–8

14. Hintze H, Wenzel A. Oral radiographic screening in Danish children. Scand J Dent Res 1990;98:47–52

15. Deliens L, De Deyn B. [Radiographic waste coming from Flemish dental offices. A survey of a representative sample of 373 Flemish dentists.] Rev Belge Med Dent 1993;48(44):44–53

16. Hintze H. Radiographic screening examination: frequency, equipment, and film in general dental practice in Denmark. Scand J Dent Res 1993;101:52–56

17. Parks ET, Farman AG. Infection control for dental radiographic procedures in US dental hygiene programmes. Dentomaxillofac Radiol 1992;21:16–20

18. Pitts NB, Fyffe HE. Scottish dentists' use of and opinions regarding bitewing radiography. Dentomaxillofac Radiol 1991;20:214–218

19. Smith NJ. Continuing education in radiation protection: assessment of a one-day course. Br Dent J 1991;170:186–188

20. Swan ES, Lewis DW. Ontario dentists: 1. Radiologic practices and opinions. J Can Dent Assoc 1993;59:62–67

21. el-Mowafy OM, Lewis DW. Restorative decision making by Ontario dentists. J Can Dent Assoc 1994;60:305–310, 313–316

22. Horner K, Shearer AC, Rushton VE, Czajka J. Use of “rapid” processing techniques by a sample of British dentists. Dentomaxillofac Radiol 1993;22:145–148

23. Kay EJ, Nuttall NM. Relationship between dentists' treatment attitudes and restorative decisions made on the basis of simulated bitewing radiographs. Community Dent Oral Epidemiol 1994;22:71–74

24. Kogon S, Bohay R, Stephens R. A survey of the radiographic practices of general dentists for edentulous patients. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 1995;80:365–368

25. Mileman PA, van der Weele LT. The role of caries recognition: treatment decisions from bitewing radiographs. Dentomaxillofac Radiol 1996;25:228–233

26. O'Connor BM. Contemporary trends in orthodontic practice: a national survey. Am J Orthod Dentofacial Orthop 1993;103:163–170

27. Svenson B, Petersson A. Questionnaire survey on the use of dental X-ray film and equipment among general practitioners in the Swedish Public Dental Health Service. Acta Odontol Scand 1995;53:230–235

28. Rushton VE, Horner K, Worthington HV. Factors influencing the frequency of bitewing radiography in general dental practice. Community Dent Oral Epidemiol 1996;24:272–276

29. Ekestubbe A, Grondahl K, Grondahl HG. The use of tomography for dental implant planning. Dentomaxillofac Radiol 1997;26:206–213

30. Svenson B, Soderfeldt B, Grondahl H. Knowledge of oral radiology among Swedish dentists. Dentomaxillofac Radiol 1997;26:219–224

31. Saunders WP, Chestnutt IG, Saunders EM. Factors influencing the diagnosis and management of teeth with pulpal and periradicular disease by general dental practitioners. Part 1. Br Dent J 1999;187:492–497

32. Rushton VE, Horner K, Worthington HV. Factors influencing the selection of panoramic radiography in general dental practice. J Dent 1999;27:565–571

33. Jenkins SM, Hayes SJ, Dummer PM. A study of endodontic treatment carried out in dental practice within the UK. Int Endod J 2001;34:16–22

34. Pemberton MN, Dewi PS, Hindle I, Thornhill MH. Investigation and medical management of trigeminal neuralgia by consultant oral and maxillofacial surgeons in the British Isles. Br J Oral Maxillofac Surg 2001;39:114–119

35. Stewardson DA. Endodontic standards in general dental practice–a survey in Birmingham, Part I. Eur J Prosthodont Restor Dent 2001;9:107–112

36. Wenzel A, Møystad A. Decision criteria and characteristics of Norwegian general dental practitioners selecting digital radiography. Dentomaxillofac Radiol 2001;30:197–202

37. Geist JR, Katz JO. Radiation dose-reduction techniques in North American dental schools. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2002;93:496–505

38. Helminen SE, Vehkalahti M, Murtomaa H. Dentists' perception of their treatment practices versus documented evidence. Int Dent J 2002;52:71–74

39. Geist JR, Katz JO. The use of radiation dose-reduction techniques in the practices of dental faculty members. J Dent Educ 2002;66:697–702

40. Mutyabule TK, Whaites EJ. Survey of radiography and radiation protection in general dental practice in Uganda. Dentomaxillofac Radiol 2002;31:164–169

41. Salti L, Whaites EJ. Survey of dental radiographic services in private dental clinics in Damascus, Syria. Dentomaxillofac Radiol 2002;31:100–105

42. Stewardson DA. Endodontics and new graduates: Part I, Practice vs training. Eur J Prosthodont Restor Dent 2002;10:131–137

43. Chandler NP, Koshy S. Radiographic practices of dentists undertaking endodontics in New Zealand. Dentomaxillofac Radiol 2002;31:317–321

44. Sakakura CE, Morais JA, Loffredo LC, Scaf G. A survey of radiographic prescription in dental implant assessment. Dentomaxillofac Radiol 2003;32:397–400

45. Berkhout WE, Sanderink GC, Van der Stelt PF. Does digital radiography increase the number of intraoral radiographs? A questionnaire study of Dutch dental practices. Dentomaxillofac Radiol 2003;32:124–127

46. Ide M, Scanlan PJ. The role of professionals complementary to dentistry in orthodontic practice as seen by United Kingdom orthodontists. Br Dent J 2003;194:677–681; discussion 673

47. Taylor GK, Macpherson LM. An investigation into the use of bitewing radiography in children in Greater Glasgow. Br Dent J 2004;196:563–568; discussion 541

48. Brian JN, Williamson GF. Digital radiography in dentistry: a survey of Indiana dentists. Dentomaxillofac Radiol 2007;36:18–23

49. Davies C, Grange S, Trevor MM. Radiation protection practices and related continuing professional education in dental radiography: a survey of practitioners in the North East of England. Radiography 2005;11:255–261

50. de Morais JA, Sakakura CE, Loffredo Lde C, Scaf G. A survey of radiographic measurement estimation in assessment of dental implant length. J Oral Implantol 2007;33:186–190

51. Guo Y, Kanjirath PP, Brooks S. Survey of imaging modalities used for implant-site assessment in Michigan. IADR 2007; New Orleans, LA

52. Hellen-Halme K, Rohlin M, Petersson A. Dental digital radiography: a survey of quality aspects. Swed Dent J 2005;29:81–87

53. Li G, van der Stelt PF, Verheij JG, Speller R, Galbiati A, Psomadellis F, et al. End-user survey for digital sensor characteristics: a pilot questionnaire study. Dentomaxillofac Radiol 2006;35:147–151

54. Stavrianou K, Pappous G, Pallikarakis N. A quality assurance program in dental radiographic units in western Greece. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2005;99:622–627

55. Sutton F, Ellituv ZN, Seed R. A survey of self-perceived educational needs of general dental practitioners in the Merseyside region. Prim Dent Care 2005;12:78–82

56. Tugnait A, Clerehugh V, Hirschmann PN. Use of the basic periodontal examination and radiographs in the assessment of periodontal diseases in general dental practice. J Dent 2004;32:17–25

57. Bjerklin K, Bondemark L. Management of ectopic maxillary canines: variations among orthodontists. Angle Orthod 2008;78:852–859

58. Lee M, Winkler J, Hartwell G, Stewart J, Caine R. Current trends in endodontic practice: emergency treatments and technological armamentarium. J Endod 2009;35:35–39

59. McCrea SJ. Pre-operative radiographs for dental implants—are selection criteria being followed? Br Dent J 2008;204:675–682; discussion 666

60. Ribeiro-Rotta RF, Pereira AC, Oliveira GH, Freire MC, Leles CR, Lindh C. An exploratory survey of diagnostic methods for bone quality assessment used by Brazilian dental implant specialists. J Oral Rehabil 2010;37:698–703

61. Rugg-Gunn AJ. Scientific validity? Br Dent J 1997;182:41

62. Barclay S, Todd C, Finlay I, Grande G, Wyatt P. Not another questionnaire! Maximizing the response rate, predicting non-response and assessing non-response bias in postal questionnaire studies of GPs. Fam Pract 2002;19:105–111

63. McCarthy GM, MacDonald JK. Nonresponse bias in a national study of dentists' infection control practices and attitudes related to HIV. Community Dent Oral Epidemiol 1997;25:319–323

64. Asch DA, Jedrziewski MK, Christakis NA. Response rates to mail surveys published in medical journals. J Clin Epidemiol 1997;50:1129–1136

65. Ho AYJ, Crawford F, Newton J, Clarkson J. The effect of advance telephone prompting in a survey of general dental practitioners in Scotland: a randomised controlled trial. Community Dent Health 2007;24:233–237

66. Yang EY, Kiyak HA. Orthodontic treatment timing: a survey of orthodontists. Am J Orthod Dentofacial Orthop 1998;113:96–103

67. Dillman DA, Smyth JD, Christian LM. Internet, mail and mixed-mode surveys. The tailored design method, 3rd edn. Hoboken, NJ: John Wiley & Sons, Inc., 2009

68. VanGeest JB, Johnson TP, Welch VL. Methodologies for improving response rates in surveys of physicians: a systematic review. Eval Health Prof 2007;30:303–321

69. Kellerman SE, Herold J. Physician response to surveys. A review of the literature. Am J Prev Med 2001;20:61–67

70. Scheaffer RL, Mendenhall W, Ott RL. Elementary survey sampling, 5th edn. Belmont, CA: Wadsworth Publishing Company, 1996

71. Kaner EF, Haighton CA, McAvoy BR. ‘So much post, so busy with practice—so, no time!’: a telephone survey of general practitioners' reasons for not participating in postal questionnaire surveys. Br J Gen Pract 1998;48:1067–1069

72. McAvoy BR, Kaner EF. General practice postal surveys: a questionnaire too far? BMJ 1996;313:732–733; discussion 733–734

73. Gijbels F, Debaveye D, Vanderstappen M, Jacobs R. Digital radiographic equipment in the Belgian dental office. Radiat Prot Dosimetry 2005;117:309–312

74. Council of European Dentists. EU Manual of Dental Practice: version 4, Belgium. Brussels, 2008

75. Tugnait A, Clerehugh V, Hirschmann PN. Radiographic equipment and techniques used in general dental practice: a survey of general dental practitioners in England and Wales. J Dent 2003;31:197–203

76. Svenson B, Soderfeldt B, Grondahl HG. Attitudes of Swedish dentists to the choice of dental X-ray film and collimator for oral radiology. Dentomaxillofac Radiol 1996;25:157–161

77. Cummings SM, Savitz LA, Konrad TR. Reported response rates to mailed physician questionnaires. Health Serv Res 2001;35:1347–1355

78. Rugg-Gunn AJ. Guidelines for questionnaire studies. Br Dent J 1997;182:68

79. Martin MV. Scientific validity. Br Dent J 1997;183:9

80. Burke FJ, Palenik CJ. Scientific validity. Br Dent J 1997;183:9

81. Johnson T, Owens L. Proceedings of the survey response rate reporting in the professional literature: 2003; Nashville, TN

82. Kviz FJ. Toward a standard definition of response rate. Public Opin Q 1977;41:265–267

83. Shosteck H, Fairweather WR. Physician response rates to mail and personal interview surveys. Public Opin Q 1979;43:206–217

84. Montori VM, Leung TW, Walter SD, Guyatt GH. Procedures that assess inconsistency in meta-analyses can assess the likelihood of response bias in multiwave surveys. J Clin Epidemiol 2005;58:856–858

85. Church AH. Estimating the effect of incentives on mail survey response rates: a meta-analysis. Public Opin Q 1993;57:62–79

86. James JM, Bolstein R. Large monetary incentives and their effect on mail survey response rates. Public Opin Q 1992;56:442–453

87. Waltemyer S, Sagas M, Cunningham GB, Jordan JS, Turner BA. The effects of personalization and colored paper on mailed questionnaire response rates in a coaching sample. Res Q Exerc Sport 2005;76:A130