Abstract

Objectives

This study proposes methods for blending design components of clinical effectiveness and implementation research. Such blending can provide benefits over pursuing these lines of research independently; for example, more rapid translational gains, more effective implementation strategies, and more useful information for decision makers. This study proposes a “hybrid effectiveness-implementation” typology, describes a rationale for the use of such designs, outlines the design decisions that must be faced, and provides several real-world examples.

Results

An effectiveness-implementation hybrid design is one that takes a dual focus a priori in assessing clinical effectiveness and implementation. We propose 3 hybrid types: (1) testing effects of a clinical intervention on relevant outcomes while observing and gathering information on implementation; (2) dual testing of clinical and implementation interventions/strategies; and (3) testing of an implementation strategy while observing and gathering information on the clinical intervention’s impact on relevant outcomes.

Conclusions

The hybrid typology proposed herein must be considered a construct still in evolution. Although traditional clinical effectiveness and implementation trials are likely to remain the most common approach to moving a clinical intervention from efficacy research through to public health impact, judicious use of the proposed hybrid designs could speed the translation of research findings into routine practice.

Keywords: diffusion of innovation, implementation science, clinical trials, pragmatic designs

Much has been written about the nature of health care science-to-service gaps both in general1–3 and with specific regard to health promotion4 and numerous medical specialties.5–9 Thus far, the literature indicates that gaps between research and practice can result from multiple factors, including educational/knowledge deficiencies and/or disagreements,10,11 time constraints for practitioners,12,13 lack of decision support tools and feedback mechanisms,13 poorly aligned incentives,14 and a host of other organizational climate and cultural factors.2,15,16

In addition to these provider-level and systems-level barriers to rapid translation, Glasgow et al4 and others17–20 argue that the time lag between research discovery and routine uptake is also inflated by the dominant developmental approach; that is, one that encourages delimited, step-wise progressions of research through clinical efficacy research, then clinical effectiveness research, and finally implementation research. In addition, it has been suggested that current conceptions of research designs fail to “maximize clinical utility for practicing clinicians and other decision makers”18; for example, through a failure to focus on external validity or implementation-related barriers and facilitators to routine use and sustainability of “effective” practices.4,21,22

Wells19 and Glasgow et al4 suggested that a blending of the efficacy and effectiveness stages of intervention development could improve the speed of knowledge creation and increase the usefulness and policy relevance of clinical research. We propose that a blending of the design components of clinical effectiveness trials and implementation trials also is feasible and desirable. Such blending can provide benefits over pursuing these lines of research independently; for example, more rapid translational gains in clinical intervention uptake, more effective implementation strategies, and more useful information for researchers and decision makers. This study describes the elements of such “effectiveness-implementation hybrid designs,” discusses the indications for such approaches, outlines the design decisions that must be faced in developing such protocols, and provides several examples of funded hybrid studies to illustrate the concepts.

DEFINING TERMINOLOGY

Terminology in this study has been informed by a glossary provided by the Department of Veterans Affairs Quality Enhancement Research Initiative (VA QUERI),22 with which the authors are affiliated. VA QUERI is funded by the VA’s Health Services Research and Development Service and, through multiple centers across the nation, promotes and supports quality improvement and implementation research.23 Key terms based on this nomenclature are defined in Table 1. In addition, we propose the following definition: an effectiveness-implementation hybrid design is one that takes a dual focus a priori in assessing clinical effectiveness and implementation. Hybrid designs will typically take one of 3 approaches: (a) testing effects of a clinical intervention on relevant outcomes while observing and gathering information on implementation; (b) dual testing of clinical and implementation interventions/strategies; or (c) testing of an implementation strategy while observing and gathering information on the clinical intervention’s impact on relevant outcomes.

TABLE 1.

Key Terms and Definitions*

| Effectiveness-implementation hybrid design | A study design that takes a dual focus in assessing clinical effectiveness and implementation. Hybrid designs can typically take 1 of 3 approaches: (a) testing effects of a clinical intervention on relevant outcomes while observing and gathering information on implementation; (b) dual testing of clinical and implementation interventions/strategies; (c) testing of an implementation strategy while observing and gathering information on the clinical intervention’s impact on relevant outcomes. Such dual foci are always stated a priori. |

| Dissemination | An effort to communicate tailored information to target audiences with the goal of engagement and information use; dissemination is an inherent part of implementation. |

| Implementation | An effort specifically designed to get best practice findings and related products into routine and sustained use through appropriate change/uptake/adoption interventions. In this study, we are not talking about implementation in the sense of ensuring fidelity during a clinical trial, that is, how a medication or behavioral theory is administered by research personnel. |

| Clinical intervention | A specific clinical/therapeutic practice (eg, use of aspirin after a myocardial infarction), or delivery system/organizational arrangement (eg, chronic care model or new administrative/practice process), or health promotion activity (eg, self-management) being tested or implemented to improve health care outcomes. |

| Implementation intervention | A method or technique designed to enhance adoption of a “clinical” intervention, as defined above. Examples include an electronic clinical reminder, audit/feedback, and interactive education. |

| Implementation strategy | A “bundle” of implementation interventions. Many implementation research trials test such bundles of implementation interventions. |

| Clinical efficacy research | Highly controlled clinical research that is most concerned with internal validity, that is, reducing threats to causal inference of the treatment/clinical intervention under study. Such threats are reduced mainly by using homogeneous samples and controlling intervention parameters. The main outcome measures are usually specific symptoms. |

| Clinical effectiveness research | Clinical research that is most concerned with external validity, that is, generalizability. Clinical Effectiveness Research is conducted with heterogeneous samples in “real-world” study locations, and attention is given to a range of clinical and other outcomes (eg, quality of life, costs). |

| Implementation research | Implementation research is focused on the adoption or uptake of clinical interventions by providers and/or systems of care. Research outcomes are usually provider and/or system behaviors, for example, levels and rates of adoption and fidelity to the clinical intervention. |

| Process evaluation | A rigorous assessment approach designed to identify potential and actual influences on the conduct and quality of implementation but in which data are not used during conduct of the study to influence the process; such data can be collected previous to the study, concurrently, or retrospectively. |

| Formative evaluation | A rigorous assessment approach, integral to the conduct of an action-oriented implementation study, designed to identify and make parallel use of potential and actual influences on the progress, quality and potential sustainability of implementation; that is, formative evaluation data are used during the study to refine, improve and evolve the implementation process and, in some cases, adapt an implementation and/or clinical intervention itself. Formative evaluation involves assessment previous to, concurrent with, and/or after actual implementation activities, and thus provides data for both immediate use to optimize a related study effort and for post hoc interpretation of findings.21 |

| Summative Evaluation | A rigorous assessment of the worth or impact of a “clinical” and/or implementation intervention/strategy, including, for example:

|

| Indirect evidence | Clinical efficacy or effectiveness data from different but associated populations to the one(s) that are the subject of a possible hybrid study. If there is no indirect evidence supporting a clinical intervention from an associated population, we do not recommend pursuing a hybrid design based on consensus guidelines or expert opinion. |

*Many definitions based on the Quality Enhancement Research Initiative Glossary.22

CHALLENGES IN LINKING CLINICAL AND IMPLEMENTATION RESEARCH DESIGNS

Clinical and implementation research, in their “ideal types,” typically do not share many design features. As depicted in Table 2, key differences exist in terms of unit of analysis (perhaps the most obvious distinction), typical unit of randomization, outcome measures, and the targets of the interventions being tested. More specifically, highly controlled clinical efficacy research is most concerned with internal validity, that is, reducing threats to causal inference of the treatment under study, and evaluating symptom/functional-focused outcomes.19 With more heterogeneous samples and study locations, and more attention given to a range of clinical and other outcomes (eg, quality of life, costs), clinical effectiveness research is more concerned with external validity or generalizability.19,24 The most recent adaptation of these principles, intended to enhance the relevance of effectiveness designs for translation, is the “practical clinical trial,”17,18 which has found its newest application in the area of policy-relevant “comparative effectiveness research.”25 In each of these clinical trial approaches, designs rely on controlling/ensuring delivery of the clinical intervention, albeit in a less restrictive setting, with little attention to implementation processes likely to be of relevance to transitioning the intervention to general practice settings.

TABLE 2.

Design Characteristics of Clinical Effectiveness and Implementation Trials (Ideal Types)

| Design Characteristic | Clinical Effectiveness Trial | Implementation Trial |

|---|---|---|

| Test | “Clinical” intervention | Implementation intervention or strategy |

| Typical unit of randomization | Patient, clinical unit | Provider, clinical unit, or system |

| Typical unit of analysis | Patient | Provider, clinical unit, or system |

| Summative outcomes | Health outcomes; process/quality measures typically considered intermediate; costs | Adoption/uptake of the “clinical” intervention; process measures/quality measures typically considered outcomes |

In contrast, implementation research is focused on the adoption or uptake of clinical interventions by providers and/or systems of care2,22,26; and research outcomes are usually provider and/or system behaviors, for example, levels and rates of adoption and fidelity to the clinical intervention. Because implementation research often assumes a linear, step-wise approach to knowledge translation, clinical intervention effectiveness is often assumed, and the assessment of patient-level symptom/functional outcomes, therefore, is often not included in the designs.

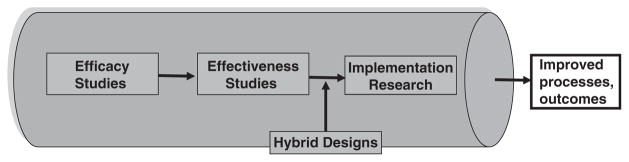

Given the differing priorities, methods, and even language of effectiveness and implementation research (Table 2), it is not surprising that few studies in the peer-reviewed literature are structured to address a priori both clinical intervention and implementation aims. Moreover, those published studies actually doing so have seldom explicitly recognized the hybrid nature of their designs or acknowledged/described the trade-offs entailed in such designs. This is understandable as there has been a dearth of explicit guidance on how such hybridization can be systematically attempted (Fig. 1).

FIGURE 1.

Research pipeline.

HYBRID DESIGNS: CONCEPTUAL DEVELOPMENT

The origins of the hybrid designs proposed herein result from our collective experience over many years in writing, reviewing, and conducting research projects across the efficacy–effectiveness-implementation spectrum. Under the umbrella of the VA QUERI and its implementation frameworks,22 we formed a work group to explore the hybrid concept. The work group, consisting of the authors of the manuscript, brought expertise in implementation research, clinical effectiveness trials, cost effectiveness research, qualitative research, formative evaluation, evidence-based practice, and clinical expertise in psychiatry. The work group discussed the following issues: design elements, challenges, and potential areas or opportunities for blending effectiveness with implementation research designs to hasten the movement of interventions from effectiveness testing through implementation to public health impact. We initially articulated a definition of hybrid designs and tested it both logically and against existing studies. We then revised our definition and typology in light of this testing. We subsequently had the opportunity to make formal presentations to audiences of health services and implementation researchers and trainees, and incorporated their feedback.

The remainder of this study builds on our refined definition of hybrid design and its 3 types as articulated below, with the text amplifying and unpacking the information presented in 2 supporting tables. Table 3 provides a summary of hybrid research aims, design considerations, and trade-offs to be considered within each hybrid type. Table 4 provides funded and published or presented examples of type 1, 2, and 3 hybrid designs, along with a comparison with a published clinical effectiveness randomized-controlled trial and a nonhybrid implementation trial. Most of the examples of the proposed hybrids discussed in the text (except for 2) and all presented in Table 4 are from the VA. As noted above, we have approached this work based on our experience in a VA implementation research program, and most of the examples we know best come from the VA setting. In theory, the hybrid designs should not be more or less effective in the VA or any other setting (as was true for our 2 non-VA examples), and the recommended conditions we propose for their use are not exclusive to, or even more ideal in, a VA or other large/single-payer system.

TABLE 3.

Hybrid Design Characteristics and Key Challenges

| Study Characteristic | Hybrid Trial Type 1 | Hybrid Trial Type 2 | Hybrid Trial Type 3 |

|---|---|---|---|

| Research aims | Primary aim: determine effectiveness of a clinical intervention; Secondary aim: better understand context for implementation | Coprimary aim*: determine effectiveness of a clinical intervention; Coprimary aim: determine feasibility and potential utility of an implementation intervention/strategy | Primary aim: determine utility of an implementation intervention/strategy; Secondary aim: assess clinical outcomes associated with implementation trial |

| Research questions (examples) | Primary question: will a clinical treatment work in this setting/these patients? Secondary question: what are potential barriers/facilitators to a treatment’s widespread implementation? | Coprimary question*: will a clinical treatment work in this setting/these patients? Coprimary question: does the implementation method show promise (either alone or in comparison with another method) in facilitating implementation of a clinical treatment? | Primary question: which method works better in facilitating implementation of a clinical treatment? Secondary question: are clinical outcomes acceptable? |

| Units of randomization | Patient, clinical unit | Clinical effectiveness: see Type 1; Implementation: see Type 3, although may be nonrandomized, for example, case study | Provider, clinical unit, facility, system |

| Comparison conditions | Placebo, treatment as usual, competing treatment | Clinical effectiveness: see Type 1; Implementation: see Type 3, although may be nonrandomized, for example, case study | Provider, clinical unit, facility, system: implementation as usual, competing implementation strategy |

| Sampling frames | Patient: limited restrictions, but some inclusion/exclusion criteria; Provider, clinical unit, facility, system: choose subsample from relevant participants | Patient: limited restrictions, but some inclusion/exclusion criteria; Providers/clinics/facilities/systems: consider “optimal” cases | Provider/clinic/facility/system: either “optimal” cases or a more heterogeneous group; Secondary: all or selected patients included in study locations |

| Evaluation methods | Primary aim: quantitative, summative; Secondary aim: mixed methods, qualitative, process-oriented, could also inform interpretation of primary aim findings | Clinical effectiveness aim: quantitative, summative; Implementation aim: mixed method; quantitative, qualitative; formative and summative | Primary aim: mixed-method, quantitative, qualitative, formative, and summative; Secondary aim: quantitative, summative |

| Measures | Primary aim: patient symptoms and functioning, possibly cost; Secondary aim: feasibility and acceptability of implementing clinical treatment, sustainability potential, barriers and facilitators to implementation | Clinical effectiveness aim: patient symptoms and functioning, possibly cost effectiveness; Implementation aim: adoption of clinical treatment and fidelity to it, as well as related factors | Primary aim: adoption of clinical treatment and fidelity to it, as well as related factors; Secondary aim: patient symptoms, functioning, services use |

| Potential design challenges | Generating “buy in” among clinical researchers for implementation aims; Ensuring appropriate expertise on study team to conduct rigorous secondary aim; These studies will likely require more research expertise and personnel, and larger budgets, than nonhybrids | Generating “buy in” among implementation researchers for clinical intervention aims; These studies will require more research expertise and personnel, as well as larger budgets, than nonhybrids; Ensuring appropriate expertise on study team to rigorously conduct both aims; “Creep” of clinical treatment away from fidelity needed for optimal effectiveness; IRB complexities with multiple types of participants | Primary data collection with patients in large, multisite implementation trials can be unfeasible, and studies might need to rely on subsamples of patients, medical record review, and/or administrative data; Patient outcomes data will not be as extensive as in traditional effectiveness trials or even other hybrid types, and might be insufficient to answer some questions; “Creep” of clinical treatment away from fidelity needed for optimal effectiveness; IRB complexities with multiple types of participants |

*In a grant application, one of these aims/research questions might take precedence, for example in a case where the test of an implementation intervention/strategy is exploratory. Yet, for the purposes of this table, we listed these dual aims/research questions as “coprimary.”

IRB indicates Institutional Review Board.

TABLE 4.

Hybrid Examples

| | Nonhybrid Effectiveness Trial: Bauer et al27–29 | Hybrid Type 1: Hagedorn et al30 | Hybrid Type 2: Brown et al31 | Hybrid Type 3: Kirchner et al32 | Nonhybrid Implementation Trial: Lukas et al33 |

|---|---|---|---|---|---|

| Study aims | |||||

| Clinical effectiveness aim/focus | Randomized clinical effectiveness trial to determine effect of collaborative CCM on clinical, functional, quality of life, and economic outcomes in bipolar disorder | Randomized clinical effectiveness trial to test effectiveness of providing incentives for negative urine screens on frequency of substance use, treatment attendance, and service utilization costs for patients attending treatment for alcohol and/or stimulant use disorders | Controlled trial to evaluate the effectiveness (relative to usual care) of a chronic illness care model on clinical outcomes among persons with schizophrenia | Evaluation of selected clinical outcomes after implementation of a model of integrated primary care and mental health | — |

| Implementation aim/focus | — | Process evaluation to inform future implementation efforts | Controlled trial of implementation strategy (eg, marketing, education, facilitation, product champion interventions) to facilitate adoption and sustain chronic illness care model | Randomized test of an implementation strategy (ie, facilitation from internal and external experts using multiple implementation interventions) to support adoption of 3 models of integrated primary care and mental health in comparison with a nationally supported dissemination/implementation strategy | Impact of the OTM implementation strategy on hand hygiene adherence rates: (a) can the organizational transformation model be implemented?; (b) is fidelity to the organizational transformation model associated with improved hand hygiene?; (c) what are the sources of variability in organizational transformation model implementation? |

| Study design | |||||

| Clinical effectiveness | Patient-level randomized-controlled trial | Patient-level randomized-controlled trial | Controlled trial in 4 Veterans Integrated Service Networks (a regional structure in VA; VISNs). In each VISN, 1 medical center assigned to clinical intervention and supporting implementation strategy, and 1 to usual care. | Facility-level randomized trial for implementation strategy with 18 matched intervention and comparison sites. Comparison of clinical outcomes (eg, depression symptoms, psychiatric hospitalization) among primary care patients across intervention and comparison sites | — |

| Implementation | — | Process evaluation with mixed methods of patients, providers and managers | Controlled trial in 4 VISNs. In each VISN, 1 medical center assigned to clinical intervention and supporting implementation strategy, and 1 to usual care. FE used to understand and continually adapt implementation of the clinical interventions and implementation strategy. | Facility-level randomized trial for implementation strategy with 18 matched intervention and comparison sites. FE used to understand and continually adapt implementation of the clinical interventions and implementation strategy (FE is also one of the implementation interventions used by the facilitators). | |

| Summative evaluation unit of analysis and measures | |||||

| Health outcomes | Weeks in manic or depressive episode, social role function, quality of life (patient level) | Rates of negative alcohol/drug screens (patient level) | Symptoms, side-effects, adherence, knowledge and attitudes, functioning, quality of life, recovery, satisfaction (patient level) | Depression symptoms and alcohol use variables from routine clinic screening instruments, pertinent service use information such as psychiatric hospitalization | — |

| Health care process/quality outcomes* | Rates of guideline-concordant antimanic treatment (patient level) | Program attendance rates (patient level) | Structure and process of care for use of clozapine, weight management, family involvement, and supported employment | Referral rates to integrated primary care and mental health models; Fidelity to the specific models; Sustainability of uptake | Hand hygiene adherence rates (medical center level) |

| Organizational Outcomes* | Total direct treatment costs from VHA economic perspective | Total direct treatment costs from VHA economic perspective | Treatment costs, clinician burnout | — | — |

| Key process (PE) or FE Measures | |||||

| | Not a focus of research; limited to CCM fidelity monitoring and rectification throughout trial | Process evaluation of: | Formative evaluation preimplementation and postimplementation assessment of: | Formative evaluation used by the study staff to tailor the facilitation strategy | Preimplementation assessment including site visits at 4- to 6-month intervals to monitor and facilitate; Interview data regarding characteristics of implementation of OTM, network level support activities; Implementation ratings completed by research site visit teams (facility level) |

*Could be either a clinical intervention-related outcome or an implementation outcome depending on the a priori focus of the study.

CCM indicates chronic care model; FE, formative evaluation; OTM, Organizational Transformation Model; PE, process evaluation; VA, Veterans Affairs; VISN, Veterans Integrated Service Network; VHA, Veterans Health Administration.

Hybrid Type 1

Testing a clinical intervention while gathering information on its delivery during the effectiveness trial and/or on its potential for implementation in a real-world situation.

Rationale

Modest refinements to effectiveness studies are possible that would retain their strength and involve no loss in the ability to achieve their primary goal, while simultaneously improving their ability to achieve a key secondary goal; that is, serve as a transition to implementation research. In most cases for Hybrid Type 1, we are advocating process evaluations (Table 1) of delivery/implementation during clinical effectiveness trials to collect valuable information for use in subsequent implementation research trials (hybrid or not). Many potential implementation research questions thus can be addressed, perhaps more comprehensively, accurately and certainly earlier than could be achieved in a sequential “intervention-then-preliminary-implementation” study strategy: What are potential barriers and facilitators to “real-world” implementation of the intervention? What problems were associated with delivering the intervention during the clinical effectiveness trial and how might they translate or not to real-world implementation? What potential modifications to the clinical intervention could be made to maximize implementation? What potential implementation strategies appear promising?

We recommend that questions of this type be posed to representatives of relevant stakeholder groups, for example, patients, providers, and administrators. Process evaluation data can also help explain/provide context for summative findings from the clinical effectiveness trial.

Recommended conditions for use: Hybrid 1 designs should be considered under the following conditions: (1) there should be strong face validity for the clinical intervention that would support applicability to the new setting, population, or delivery method in question; (2) there should be a strong base of at least indirect evidence (eg, data from different but associated populations) for the intervention that would support applicability to the new setting, population, or delivery method in question; (3) there should be minimal risk associated with the intervention, including both its direct risk and any indirect risk through replacement of a known adequate intervention. These conditions, to varying degrees, are often found in “research-practice networks” such as the National Institute on Drug Abuse clinical trials network, and Hybrid 1 designs should be particularly attractive for these partnerships.

In addition, there are conditions under which a Hybrid 1 study would seem premature or less feasible—for example, in clinical effectiveness trials with major safety issues, complex comparative effectiveness trials, and “pilot” or very early clinical effectiveness trials. In general, however, we argue that a Hybrid 1 is particularly “low risk” with the potential for high reward given that the implementation research portion is essentially an “add-on” to a routinely designed and powered clinical trial. When moving into the next Hybrid type, there are more complexities to consider and trade-offs to be weighed.

Examples

As summarized in Table 4, a recent Hybrid Type 1 study by Hagedorn et al30 included a randomized clinical effectiveness trial of contingency management with a mixed-method, multistakeholder process evaluation of the delivery of the intervention. In another example (not in Table 4), the National Institute of Mental Health-funded “Coordinated Anxiety Learning and Management (CALM) study”34 tested the clinical effectiveness of a collaborative care intervention for anxiety disorders while also conducting a multistakeholder qualitative process evaluation. Key research questions in the CALM process evaluation were: (1) what were the facilitators/barriers to delivering the CALM intervention?; (2) what were the facilitators/barriers to sustaining the CALM intervention after the study was completed?; (3) how could the CALM intervention be changed to improve adoption and sustainability?

Hybrid Type 2

Simultaneous testing of a clinical intervention and an implementation intervention/strategy.

Rationale

This hybrid type is a more direct blending of clinical effectiveness and implementation research aims in support of more rapid translation. In this case, interventions in both the clinical and implementation spheres are tested simultaneously. It is important to note that we are using the term “test” in a liberal manner, meaning that the interventions in question need not all be tested with randomized, strongly powered designs. What makes for a “test” of an intervention here is that at least 1 outcome measure is being used and that at least 1 related hypothesis, however preliminary, is being studied. The nature of randomizations/comparisons and power can vary depending on research needs and conditions. Given the reality of research funding limits, it is likely that some design/power compromises will be necessary in one or both of the intervention tests; however, in the cases of conditions favorable to this hybrid type (see below), such design compromises need not derail progress toward addressing translation gaps/needs in the literature.

This hybrid type is also motivated by the recognition that conventional effectiveness studies often yield estimates of effectiveness that are significantly different (worse) from the estimates of efficacy studies because the effectiveness study is often conducted in “worst case” conditions; that is, with little or no research team support of delivery/implementation, without clear understanding of barriers to fidelity, and without efforts to overcome those barriers. In a Hybrid Type 2 study, where an implementation intervention/strategy of some kind is being tested alongside and in support of a clinical intervention under study, it is possible to create and study a “medium case”/pragmatic set of delivery/implementation conditions versus “best” or “worst” case conditions. Therefore, while speeding translation, it is possible with Hybrid Type 2 designs to provide more valid estimates of potential clinical effectiveness.

Recommended conditions for use: Hybrid Type 2 designs should be considered under the following conditions: (1) there should be strong face validity for the clinical and implementation interventions/strategies that would support applicability to the new setting, population, or delivery/implementation methods in question; (2) there should be at least a strong base of indirect evidence (defined above) for the clinical and implementation interventions/strategies that would support applicability to the new setting, population, or delivery/implementation method in question; (3) there should be minimal risk associated with the clinical and implementation interventions/strategies, including both the direct risk of the interventions and any indirect risk through replacement of known adequate interventions; (4) there should be “implementation momentum” within the clinical system and/or the literature toward routine adoption of the clinical intervention in question. The momentum could come from a number of possible scenarios or factors: for example, strong “indirect” efficacy or effectiveness data; advocacy from patient groups, providers or lawmakers (often in the case of severe clinical consequences/risks from nonaction); and/or health system administrators seeking rapid uptake of an intervention based on the above or other factors, for example, costs. Evidence of such momentum could come from published reports, official policies, or even from discussions with key stakeholders; (5) there should be reasonable expectations that the implementation intervention/strategy being tested is supportable in the clinical and organizational context under study; (6) there should be reason to gather more data on the effectiveness of the clinical intervention (eg, it is being provided in a different format or novel setting).

In addition, we have identified other conditions that might be considered “ideal” for this hybrid type. These are: (i) strong indirect clinical evidence (see #2 above) comes from either a population or clinical setting reasonably close to the population or setting in question, thereby not necessitating a large clinical effectiveness trial. If the clinical effectiveness study can be of moderate size, additional budget and efforts can be used toward the implementation intervention/strategy and its evaluation; (ii) the clinical intervention and/or implementation intervention/strategy to be tested are not overly complex in terms of changes necessary within the clinic/organization to support them; that is, the testing of clinical and implementation interventions/strategies within the same providers/clinics/systems is not perceived to be, nor actually is, overly taxing to participating stakeholders.

Examples

As summarized in Table 4, the Hybrid Type 2 “Enhancing Quality and Utilization in Psychosis study”31 was an 8-site VA study where 4 sites were randomized to a chronic illness care model for schizophrenia (clinical/delivery system intervention) supported by an implementation strategy (facilitation, quality improvement teams, quality reports, etc.). The study gathered clinical and implementation outcome data at all sites. The investigators recognized a need to test implementation strategies to support recovery-oriented interventions for persons with schizophrenia, as many guidelines and VA directives were encouraging the use of recovery-oriented programs even in the case of less than ideal effectiveness research support. Another example (not in Table 4) is the “HIV Translating Initiatives for Depression into Effective Solutions study,”35 in which human immunodeficiency virus (HIV) patients were randomized to a clinical/delivery system intervention (collaborative care for depression) at 3 VA clinics, whereas the same clinics also participated in a nonrandomized, exploratory implementation strategy. Although it was clear to the investigators that a patient-level randomized trial of the effectiveness of depression collaborative care in HIV patients was needed (no trials had yet been conducted in the HIV setting), they also recognized that, given the strong evidence of depression collaborative care in primary care settings (similar in scope to many HIV clinics) and momentum in the system for its uptake, it was also timely to use the study to explore an implementation strategy in this setting. An additional Hybrid Type 2 design variant (also not in Table 4) comes from the “Healthy Bones” study,36 where both patient-directed and physician-directed interventions for fracture prevention were simultaneously tested in a 2×2 factorial randomized controlled trial. On the basis of the observation that management of osteoporosis and fall prevention was suboptimal and that both patient and provider behaviors needed improvement, the study investigators used this design to simultaneously test patient and provider education interventions on a range of outcomes. Although the “clinical” (patient education) and “implementation” (academic detailing) interventions tested were certainly not as complex as some of the other examples, perhaps it was this simplicity that allowed for the large factorial design.
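To make the 2×2 factorial structure concrete, the following is a minimal sketch of how units might be allocated to the four combinations of a patient-directed and a provider-directed intervention. It is an illustration only and not the allocation procedure of the Healthy Bones study: the function names, the factor labels, the use of blocks of four, and the seed are all assumptions introduced here.

```python
import random
from collections import Counter

# The four cells of a 2x2 factorial design:
# (patient-directed intervention?, provider-directed intervention?)
ARMS = [(False, False), (True, False), (False, True), (True, True)]


def assign_factorial(unit_ids, seed=0):
    """Allocate units to the four cells using permuted blocks of four.

    Hypothetical helper: within each consecutive block of four units,
    every cell is used once in random order, which keeps cells balanced.
    """
    rng = random.Random(seed)
    unit_ids = list(unit_ids)
    assignments = {}
    for start in range(0, len(unit_ids), len(ARMS)):
        block = ARMS[:]
        rng.shuffle(block)
        for unit, (patient_ed, provider_ed) in zip(
            unit_ids[start:start + len(ARMS)], block
        ):
            assignments[unit] = {
                "patient_education": patient_ed,
                "academic_detailing": provider_ed,
            }
    return assignments


def cell_counts(assignments):
    """Count how many units landed in each of the four cells."""
    return Counter(
        (a["patient_education"], a["academic_detailing"])
        for a in assignments.values()
    )


if __name__ == "__main__":
    allocation = assign_factorial(range(40), seed=1)
    for cell, n in sorted(cell_counts(allocation).items()):
        print(cell, n)
```

Because every unit receives a level of both factors, each main effect can be estimated from the full sample (half the units versus the other half), which is one reason a factorial layout can test a “clinical” and an “implementation” intervention at the same time.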

Hybrid Type 3

Testing an implementation intervention/strategy while observing/gathering information on the clinical intervention and related outcomes.

Rationale

A “pure,” nonhybrid implementation study is conducted after an adequate body of evidence has accumulated that clearly establishes the effectiveness of a clinical intervention, and thus clearly supports the appropriateness of costly efforts to try to facilitate better implementation. Sometimes, however, we can and do proceed with implementation studies without completion of the full or at times even a modest portfolio of effectiveness studies beforehand. In such cases, it is common that a prevailing health policy dictates/encourages implementation of a clinical intervention that is, to varying degrees, still in question from an effectiveness perspective. Similar to the cases discussed above with reference to “momentum for implementation,” the situations where health systems actually encourage or attempt implementation of a clinical intervention without the desired clinical effectiveness data base could include the presence of respected consensus guidelines; strong “indirect” efficacy or effectiveness data; advocacy from patient groups, providers or lawmakers (often in the case of the current state of practice and severe clinical consequences/risks from nonaction); and administrators seeking to reduce costs by implementing a cheaper clinical alternative. In these cases, it is, therefore, important to proactively include resources to collect evidence of clinical effectiveness.

In addition, Hybrid Type 3 designs are indicated if it is suspected that the clinical intervention effects might be susceptible to change during implementation in a new setting or under conditions less controlled than in effectiveness trials. Such changes in clinical intervention effectiveness could represent either a vulnerability or an enhancement under implementation conditions compared with effects seen during clinical trials.

Recommended conditions for use: The following conditions are recommended to consider a Hybrid Type 3: (1) there should be strong face validity for the clinical and implementation interventions/strategies that would support generalizability to the new setting, population, or delivery/implementation methods in question; (2) there should be a strong base of indirect evidence (defined above) for the clinical and implementation interventions/strategies that would support generalizability to the new setting, population, or delivery/implementation method in question; (3) there should be minimal risk associated with the clinical and implementation interventions/strategies, including both the direct risk of the interventions and any indirect risk through replacement of known adequate interventions; (4) there should be strong “implementation momentum” in the form of actual mandates or strong encouragement within the clinical system and/or the literature toward routine adoption of the clinical intervention in question; (5) there should be evidence that the implementation intervention/strategy being tested is feasible and supportable in the clinical and organizational context under study.

Examples

As summarized in Table 4, the Hybrid Type 3 “Blended-Facilitation” study (Kirchner et al32) is a 16-site implementation trial in VA with 8 sites receiving an implementation facilitation strategy consisting of internal and external facilitation (plus numerous implementation tools and aids) to support implementation of integrated primary care and mental health. Internal (to the program sites) and external facilitators use a variety of strategies to facilitate implementation including academic detailing, provider education, local change agent participation, stakeholder engagement at all levels of the organization, performance monitoring and feedback, formative evaluation, and marketing. The comparison sites receive a dissemination/implementation strategy being provided by the VA nationally, as integrated primary care mental health is an officially mandated “best practice.” Some components of what is being “rolled-out” nationally do not have a strong clinical effectiveness research base; for example, use of “generic” mental health care managers (the data support only care managers providing service for individual disorders like depression or specific clusters of disorders like anxiety disorders). Therefore, while the main outcomes for the study are implementation focused, the study is also collecting clinical data from the VA’s automated medical record where possible (eg, scores on routine depression screeners). In another example, the “Implementation of the Hospital to Home (H2H) Heart Failure Initiative” (Heidenreich et al37) study is testing an implementation strategy to support uptake of the H2H Initiative (a package of clinical interventions) in multiple facilities while also collecting clinical outcome data. The study randomized 122 VA facilities to intervention and comparison conditions, with intervention facilities receiving a range of implementation interventions, including web-based “kick-off” meetings (education and marketing), toolkit dissemination, and roles for local opinion leaders. Comparison programs are converting to intervention programs after 6 months. Although key outcome variables related to uptake of H2H are defined for the implementation component (eg, enrollment rates and other performance measures), the study is also collecting and comparing key patient outcome data (mortality and rehospitalization) across intervention and comparison sites.
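The facility-level design just described (sites randomized to intervention or comparison, with comparison sites converting after 6 months) can be sketched as follows. This is a hedged illustration and not the H2H study's actual procedure: the equal split between arms, the function names, the seed, and the convention that conversion takes effect once 6 months have elapsed are all assumptions made here.

```python
import random


def randomize_facilities(facility_ids, seed=0):
    """Randomly split facilities into two arms of (near) equal size.

    Assumption: a simple 1:1 allocation; the source does not state the
    allocation ratio or any stratification.
    """
    rng = random.Random(seed)
    shuffled = list(facility_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "intervention": sorted(shuffled[:half]),
        "comparison": sorted(shuffled[half:]),
    }


def condition_at(facility_id, months_elapsed, arms, crossover_month=6):
    """Return the condition a facility is in after a number of months.

    Comparison facilities convert to the intervention condition once
    `crossover_month` months have elapsed (a hypothetical convention).
    """
    if facility_id in arms["intervention"]:
        return "intervention"
    if facility_id in arms["comparison"]:
        if months_elapsed >= crossover_month:
            return "intervention"
        return "comparison"
    raise ValueError(f"unknown facility: {facility_id!r}")


if __name__ == "__main__":
    arms = randomize_facilities(range(122), seed=3)
    site = arms["comparison"][0]
    print(len(arms["intervention"]), len(arms["comparison"]))
    print(condition_at(site, 3, arms), condition_at(site, 6, arms))
```

Under such a delayed-conversion layout, between-arm comparisons of both implementation and patient outcomes are only interpretable for the window before the comparison sites convert.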

DISCUSSION

As we have explored relevant work in the VA and the implementation field in general, we have seen nonsystematic efforts at blending effectiveness and implementation trial elements. Through this hybrid framework we offer guidance to the field and hopefully provide assistance to investigators searching for identifiable design solutions. In addition, we hope to stimulate further thinking and to encourage new design combinations. The hybrid definition and typology offered here must be considered constructs still in evolution. It is important to note that the “boundaries” we have drawn around these hybrid types are not intended to be rigid, and that future work should refine and extend what has been presented here. In addition, we recognize that some of the “recommended conditions for use” of the hybrids are subjective (eg, current definitions of “strong face validity” and “indirect evidence”) and that they will need to be reasoned and argued by investigators on a case-by-case basis at least until additional work refines the definitions and conditions.

Although traditional clinical effectiveness and implementation trials are likely to remain the most common approach to moving a clinical intervention through from efficacy research to public health impact, judicious use of the proposed hybrid designs could speed the translation of research findings into routine practice. However, despite their potential benefits, we recommend that certain conditions should first be met; and, even when desirable, we recognize that hybrids might not always be feasible or affordable within traditional research budget limits. We recommend that future hybrid research seeks to document in both quantitative and qualitative ways the extent and manner in which translation has been sped. As of now, we can only say that these hybrids have the potential to speed and improve translation. Further, the relative speed of translation is not usually included in traditional cost effectiveness analyses, and it would be interesting to explore the potential benefits of hybrid designs from this perspective.

In considering use of a hybrid, it is important to acknowledge the potential “ecological” challenges associated with pursuing such designs. First, researchers from clinical and implementation research backgrounds often do not share concepts, constructs, and vocabulary; more problematic still, sometimes the vocabulary is the same but the meanings are different. This makes it difficult for researchers from different traditions to communicate efficiently and effectively, which could serve as a barrier to collaboration, and perhaps also impede comprehension during research proposal and manuscript reviews. More specifically, lack of reviewer expertise on grant review panels and among journal reviewers and editorial boards relative to emerging concepts and innovations in the field of implementation science can have an inhibitory effect on the development, implementation, and reporting of hybrid studies. Review of hybrid designs requires at least an appreciation of the complexities of balancing internal and external validity considerations in such trials, as well as the design trade-offs inherent in structuring such complex protocols and related budgetary needs. Review panels must also, of course, have sufficient technical expertise across members so that, in aggregate, both the clinical intervention and the implementation aspects of the study can be effectively evaluated.

Finally, the same appreciation and expertise required of journal and grant review bodies is required on promotion and tenure committees, although as implementation research and hybrid designs become more widely appreciated, this lack of expertise will diminish—as it has for effectiveness-oriented clinical trials.4,38–40 Hybrid studies are typically more complex to execute and thus may be relatively “high risk”; however, the successfully implemented hybrid study will likely pay dividends across both the (a priori) clinical and implementation foci, thus yielding rich data that will be of use both scientifically and in terms of public health impact.

The impetus to blend or explicitly link research traditions in the service of accelerating scientific progress and enhancing public health impact is not at all new,4,38–40 and the idea of blending clinical effectiveness and implementation research elements is also not new. As the examples above indicate, “informal” hybrid design trials are already being conducted and reported. The function of this study is both to help the field better organize its thinking and design deliberations concerning these concepts that we felt were not yet clearly articulated and to stimulate further development. The “ecological” challenges noted above will not endure. They can be overcome, like many diffusion of innovation challenges, with education and committed leadership over time.

Acknowledgments

Supported by a research grant from the Department of Veterans Affairs, Health Services Research and Development Service: MNT-05-152 (Pyne, PI) and also funded by a research grant from the National Institute on Drug Abuse: K01 DA15102 (Curran, PI).

Footnotes

The authors declare no conflict of interest.

References

- 1. Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: Institute of Medicine of the National Academies Press; 2001.

- 2. Grol R, Wensing M, Eccles M. Improving Patient Care: The Implementation of Change in Clinical Practices. Toronto: Elsevier; 2005.

- 3. Stetler CB, McQueen L, Demakis J, et al. An organizational framework and strategic implementation for system-level change to enhance research-based practice: QUERI Series. Implement Sci. 2008;3:30. doi: 10.1186/1748-5908-3-30.

- 4. Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93:1261–1267. doi: 10.2105/ajph.93.8.1261.

- 5. Shojania KG, Ranji SR, Shaw LK, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol 2: Diabetes Care). Rockville, MD: Agency for Healthcare Research and Quality; 2004.

- 6. Bravata DM, Sundaram V, Lewis R, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol 5: Asthma Care). Rockville, MD: Agency for Healthcare Research and Quality; 2007.

- 7. Walsh J, McDonald KM, Shojania KG, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol 3: Hypertension Care). Rockville, MD: Agency for Healthcare Research and Quality; 2005.

- 8. Carroll KM, Rounsaville BJ. Bridging the gap: a hybrid model to link efficacy and effectiveness research in substance abuse treatment. Psychiatr Serv. 2003;54:333–339. doi: 10.1176/appi.ps.54.3.333.

- 9. Ranji SR, Steinman MA, Sundaram V, et al. Closing the Quality Gap: A Critical Analysis of Quality Improvement Strategies (Vol 4: Antibiotic Prescribing Behavior). Rockville, MD: Agency for Healthcare Research and Quality; 2006.

- 10. Freemantle N, Eccles M, Wood J, et al. A randomized trial of evidence-based outreach (EBOR) rationale and design. Control Clin Trials. 1999;20:479–492. doi: 10.1016/s0197-2456(99)00023-9.

- 11. Cochrane LJ, Olson CA, Murray S, et al. Gaps between knowing and doing: understanding and assessing the barriers to optimal health care. J Contin Educ Health Prof. 2007;27:94–102. doi: 10.1002/chp.106.

- 12. Fruth SJ, Veld RD, Despos CA, et al. The influence of a topic-specific, research-based presentation on physical therapists’ beliefs and practices regarding evidence-based practice. Physiother Theory Pract. 2010;26:537–557. doi: 10.3109/09593980903585034.

- 13. Cabana MD, Rand CS, Powe NR, et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

- 14. Reschovsky JD, Hadley J, Landon BE. Effects of compensation methods and physician group structure on physicians’ perceived incentives to alter services to patients. Health Serv Res. 2006;41:1200–1220. doi: 10.1111/j.1475-6773.2006.00531.x.

- 15. Racine DP. Reliable effectiveness: a theory on sustaining and replicating worthwhile innovations. Adm Policy Ment Health. 2006;33:356–387. doi: 10.1007/s10488-006-0047-1.

- 16. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 17. Tunis SR, Stryer DB, Clancy CM. Increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624–1632. doi: 10.1001/jama.290.12.1624.

- 18. March JS, Silva SG, Compton S, et al. The case for practical clinical trials in psychiatry. Am J Psychiatry. 2005;162:836–846. doi: 10.1176/appi.ajp.162.5.836.

- 19. Wells KB. Treatment research at the crossroads: the scientific interface of clinical trials and effectiveness research. Am J Psychiatry. 1999;156:5–10. doi: 10.1176/ajp.156.1.5.

- 20. Proctor EK, Landsverk J, Aarons G, et al. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36:24–34. doi: 10.1007/s10488-008-0197-4.

- 21. Stetler CB, Legro MW, Wallace CM, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med. 2006;21:S1–S8. doi: 10.1111/j.1525-1497.2006.00355.x.

- 22. Stetler CB, Mittman BS, Francis J. Overview of the VA Quality Enhancement Research Initiative (QUERI) and QUERI Theme Articles: QUERI Series. Implement Sci. 2008;3:8. doi: 10.1186/1748-5908-3-8.

- 23. Demakis JG, McQueen L, Kizer KW, et al. Quality Enhancement Research Initiative (QUERI): a collaboration between research and clinical practice. Med Care. 2000;38:17–25.

- 24. Bauer MS, Williford WO, Dawson EE, et al. Principles of effectiveness trials and their implementation in VA Cooperative Study #430: Reducing the efficacy-effectiveness gap in bipolar disorder. J Affect Disord. 2001;67:61–78. doi: 10.1016/s0165-0327(01)00440-2.

- 25. Sox HC. Comparative effectiveness research: a progress report. Ann Intern Med. 2010;153:469–472. doi: 10.7326/0003-4819-153-7-201010050-00269.

- 26. Atkins D. QUERI and implementation research: emerging from adolescence into adulthood: QUERI series. Implement Sci. 2009;4:12. doi: 10.1186/1748-5908-4-12.

- 27. Bauer MS, McBride L, Williford WO, et al. for the CSP #430 Study Team. Collaborative care for bipolar disorder, Part I: intervention and implementation in a randomized effectiveness trial. Psychiatr Serv. 2006a;57:927–936. doi: 10.1176/ps.2006.57.7.927.

- 28. Bauer MS, McBride L, Williford WO, et al. for the CSP #430 Study Team. Collaborative care for bipolar disorder, Part II: impact on clinical outcome, function, and costs. Psychiatr Serv. 2006b;57:937–945. doi: 10.1176/ps.2006.57.7.937.

- 29. Bauer MS, Biswas K, Kilbourne AM. Enhancing multi-year guideline concordance for bipolar disorder through collaborative care. Am J Psychiatry. 2009;166:1244–1250. doi: 10.1176/appi.ajp.2009.09030342.

- 30. Hagedorn H, Noorbaloochi S, Rimmele C, et al. The Rewarding Early Abstinence and Treatment Participation (REAP) Study. Presented at Enhancing Implementation Science in VA; Denver, CO. 2010.

- 31. Brown AH, Cohen AN, Chinman MJ, et al. EQUIP: implementing chronic care principles and applying formative evaluation methods to improve care for schizophrenia: QUERI Series. Implement Sci. 2008;3:9. doi: 10.1186/1748-5908-3-9.

- 32. Kirchner JE, Ritchie M, Curran G, et al. Facilitating: Design, Using, and Evaluating a Facilitation Strategy. Presented at Enhancing Implementation Science meeting sponsored by Department of Veterans Affairs Quality Enhancement Research Initiative; Phoenix, AZ. 2011.

- 33. Lukas CV, Engle RL, Holmes SK, et al. Strengthening organizations to implement evidence-based clinical practices. Healthcare Manag Rev. 2010;35:235–245. doi: 10.1097/HMR.0b013e3181dde6a5.

- 34. Roy-Byrne P, Craske MG, Sullivan G, et al. Delivery of evidence-based treatment for multiple anxiety disorders in primary care. JAMA. 2010;303:1921–1928. doi: 10.1001/jama.2010.608.

- 35. Pyne JM, Fortney JC, Curran GC, et al. Effectiveness of collaborative care for depression in human immunodeficiency virus clinics. Arch Intern Med. 2011;171:23–31. doi: 10.1001/archinternmed.2010.395.

- 36. Solomon DH, Brookhart MA, Polinski J, et al. Osteoporosis action: design of the Healthy Bones project trial. Contemp Clin Trials. 2005;26:78–94. doi: 10.1016/j.cct.2004.11.012.

- 37. Heidenreich, et al. http://www.queri.research.va.gov/chf/products/h2h.

- 38. Zerhouni E. The NIH Roadmap. Science. 2003;302:63–72. doi: 10.1126/science.1091867.

- 39. Wells K, Miranda J, Bruce ML, et al. Bridging community intervention and mental health services research. Am J Psychiatry. 2004;161:955–963. doi: 10.1176/appi.ajp.161.6.955.

- 40. Brown CH, Ten Have TR, Jo B, et al. Adaptive designs for randomized trials in public health. Annu Rev Public Health. 2009;30:1–25. doi: 10.1146/annurev.publhealth.031308.100223.