Abstract

In numerous experimental contexts, gesturing has been shown to lighten a speaker’s cognitive load. However, in all of these experimental paradigms, the gestures have been directed to items in the ‘here-and-now’. This study attempts to generalize gesture’s ability to lighten cognitive load. We demonstrate here that gesturing continues to confer cognitive benefits when speakers talk about objects that are not present, and therefore cannot be directly indexed by gesture. These findings suggest that gesturing confers its benefits by more than simply tying abstract speech to the objects directly visible in the environment. Moreover, we show that the cognitive benefit conferred by gesturing is greater when novice learners produce gestures that add to the information expressed in speech than when they produce gestures that convey the same information as speech, suggesting that it is gesture’s meaningfulness that gives it the ability to affect working memory load.

Keywords: gesture, working memory

When speakers talk, they gesture, and those gestures can have a positive effect on communication. For example, listeners are more likely to deduce a speaker’s intended message when speakers gesture than when they do not gesture (Beattie & Shovelton, 1999; Goldin-Meadow & Sandhofer, 1999; Alibali, Flevares, & Goldin-Meadow, 1997; Goldin-Meadow, Wein & Chang, 1992). As another example, children are more likely to learn a task when their instructions include gesture than when they do not include gesture (Church, Ayman-Nolley & Mahootian, 2004; Ping & Goldin-Meadow, 2008; Singer & Goldin-Meadow, 2005; Valenzeno, Alibali & Klatzky, 2000). However, speakers continue to gesture even when their listeners cannot see the gestures (Bavelas, Chovil, Lawrie & Wade, 1992; Iverson & Goldin-Meadow, 1998, 2001), suggesting that gesture may have positive effects not only for listeners but also for the speakers themselves.

Previous research has found that producing gesture helps children learn. For example, encouraging children to gesture either before (Broaders, Cook, Mitchell & Goldin-Meadow, 2007) or during (Cook, Mitchell, & Goldin-Meadow, 2008; Goldin-Meadow, Cook & Mitchell, 2009) a lesson leads to improved performance after the lesson. Gesturing may improve learning because it makes speaking cognitively less taxing. Indeed, studies have shown that gesturing while speaking frees up working memory resources, relative to speaking without gesturing (Goldin-Meadow, Nusbaum, Kelly & Wagner, 2001; Wagner, Nusbaum & Goldin-Meadow, 2004); these resources can then be used for other tasks, such as learning how to solve a new problem.

In previous studies of the effect of gesture on cognitive load, participants were asked to solve a math problem and were then given a list of unrelated items to remember (memory task) while explaining how they had solved the problem (explanation task). Speakers were allowed to gesture during some of their explanations but were told to keep their hands still during others. After each explanation, participants were asked to recall the items they had been given before the explanation task. Because participants had to keep the to-be-remembered items in mind while giving their explanation, their performance on the memory task could serve as a measure of the cognitive resources they expended on the explanation task. Children did better on the memory task when they gestured during the explanation task than when they were told not to gesture (Goldin-Meadow et al., 2001), even when they did not solve the problems correctly. The same phenomenon has been observed in experts: adults, all of whom knew how to solve factoring problems, remembered more items on a memory test when they gestured while describing how they solved the factoring problems than when they were told not to gesture (Goldin-Meadow et al., 2001; Wagner et al., 2004). In each of these studies, some speakers did not gesture on every trial on which gesturing was permitted (on some trials they chose not to gesture), but the pattern of results was the same: speakers’ performance on the memory task was significantly better on trials on which they chose to gesture than on trials on which they chose not to gesture. In other words, gesturing lightened the load on working memory more than not gesturing did, both when speakers chose not to gesture and when they were told (by the experimenter) not to gesture.

Our study explores one possible mechanism underlying this effect. In previous studies, the gestures that speakers produced during the explanation task were primarily deictic gestures that directly pointed out numbers or other aspects of the math problems. For example, children pointed to two numbers in the problem and then the blank, while describing how they added the two numbers to get the total. The fact that speakers predominantly used deictic pointing gestures to indicate visible entities raises the possibility that gesturing frees up cognitive resources because of its indexical function—its ability to tie one’s spoken words to objects present in the environment. Pointing allows speakers to use the world as its own best representation, and thereby lowers the cost of maintaining this information in working memory (Ballard, Hayhoe, Pook, & Rao, 1997). Indeed, listeners understand instructions better when the words in the instructions are accompanied by a pointing hand linking the words to objects visible in the environment (“indexing” the objects) than when they are not (Glenberg & Robertson, 1999). Gesturing might then lighten cognitive load by simply linking the speaker’s words to real objects in the immediate environment.

But speakers often produce gestures that refer to objects, events, and phenomena not directly visible in the immediate physical environment. In fact, speakers are more likely to gesture when talking about entities that are not visible than when talking about entities that are visible (Wesp, Hesse, Keutmann & Wheaton, 2001; Morsella & Krauss, 2004). For example, a speaker can produce an iconic C-shaped gesture above a table to indicate the height and width of a container that is no longer on the table. Or a speaker can mime swinging a baseball bat in an iconic gesture describing how he batted in last week’s game. Even pointing gestures can refer to absent objects and events. For example, a child might point to the place where her father usually sits while describing something her father, who is not in the room, did. Gestures referring to absent objects do not link the representational information in the speaker’s words directly to objects in the physical, visible environment. If this simple linking, or indexing, function of gesture (tying the representational information in speech to real objects in the environment) is the mechanism behind gesture’s cognitive benefits, then gestures about absent objects might not confer the same cognitive benefit on speakers as gestures that refer to objects in the here-and-now.

On the other hand, because gestures that refer to non-present objects do more than simply index objects and often convey meaning through the shape, placement or movement of the hand, they have the potential to lighten the load on working memory by making the absent present. Our study investigates the possibility that even gestures that do not rely on the physical environment to convey meaning can lighten the load on working memory. If so, then gestures of any kind, whether they are about present or absent objects, should help relieve the working memory load associated with speaking.

To explore this possibility, we asked children to explain their answers to a Piagetian liquid quantity conservation task, a task known to elicit iconic as well as deictic gestures (Church & Goldin-Meadow, 1986). We chose this task not only because it elicits iconic gestures, whose meanings depend less on present objects than do the meanings of deictic gestures, but also because it can be performed with or without props (i.e., visible objects). We chose to test 7- to 8-year-old children on the task because children in this age range are typically in a state of cognitive change with respect to conservation; we can therefore explore the impact of gesturing on learners who are in a transitional state.

On half of the trials, children were told to use their hands during their explanations of the conservation problems (Told to Gesture trials). On the other half, they were told to keep their hands still during the explanations (Told Not to Gesture trials). For half of the children, the conservation objects were present during their explanations (Objects Present). For the other half, the objects were absent during their explanations (Objects Absent). We expected gesturing in the Objects Present condition to lighten cognitive load in much the same way as it has been shown to do when children explain their answers to math problems (Goldin-Meadow et al., 2001). If gesturing confers these cognitive benefits only because it links the representational information in a speaker’s words to the real objects in the visible world, then we would not expect gesturing to lighten cognitive load when speakers gesture about objects that are not present in the environment, that is, in the Objects Absent condition. If, however, gesturing confers its benefits by some other mechanism, perhaps by taking on some of the representational work that would otherwise be handled by speech, then we ought to find cognitive benefits even when speakers gesture about absent objects.

Method

Participants

Participants were 25 second- and third-grade students from Chicago area public schools (mean age 8.75 years, 15 girls). The children were racially and ethnically diverse: 32% were Caucasian, Hispanic; 28% Caucasian, non-Hispanic; 16% African-American; 24% Asian-American. All of the children were fluent in English and were in monolingual English classrooms. Children were tested individually outside of the classroom during the regular school day and received a small gift in exchange for their participation.

Procedure

Children participated in a dual-task paradigm that was designed to estimate the amount of cognitive effort used when speaking either with or without gesture. Children made equality judgments and explained the reasoning behind their judgments on 20 Piagetian liquid conservation tasks (explanation task). During their explanations, children were also asked to remember two unrelated words (memory task). Better performance on the secondary memory task suggests that fewer cognitive resources are being deployed on the explanation task.

Each child was randomly assigned to either the Objects Present or the Objects Absent condition (between-subjects manipulation). To acclimate children to the experimental procedure, each child first completed one practice trial with no instruction about what to do with his or her hands. The experiment was then split into two blocks of ten liquid conservation problems each. During one block, children were Told to Gesture while they explained their answers to the conservation problems; during the other block, they were Told Not to Gesture during their explanations (within-subjects manipulation). The order of the blocks was counterbalanced across children, as were the words used in the memory task. At the beginning of each block, Experimenter 1 explained the gesture instructions for that block to the child. In the Told to Gesture block, the experimenter said, “During this part of the study, when you talk to [Experimenter 2] about your answers, I want you to be sure to use your hands.” In the Told Not to Gesture block, the experimenter said, “During this part of the study, when you talk to [Experimenter 2] about your answers, I want you to be sure to keep your hands still.”
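The design crosses one between-subjects factor (Objects Condition) with a within-subjects factor (gesture instructions) whose block order, along with the memory words, was counterbalanced. The Python sketch below shows one way such an assignment could be generated. It is an illustration, not the procedure the study used: it assumes a balanced rotation over shuffled children (the text says only that assignment was random and orders were counterbalanced), and the names `assign_conditions`, `trial_schedule`, and the word-set labels "Set A" and "Set B" are hypothetical.

```python
import itertools
import random
from dataclasses import dataclass

BLOCK_ORDERS = (
    ("Told to Gesture", "Told Not to Gesture"),
    ("Told Not to Gesture", "Told to Gesture"),
)
# Hypothetical labels for two memory-word sets; the actual word lists are not given here.
WORD_SET_ORDERS = (("Set A", "Set B"), ("Set B", "Set A"))


@dataclass(frozen=True)
class Assignment:
    child_id: int
    objects_condition: str           # between-subjects: "Present" or "Absent"
    block_order: tuple[str, str]     # within-subjects order of the two gesture blocks
    word_set_order: tuple[str, str]  # which word set goes with which block


def assign_conditions(child_ids, seed=0):
    """Shuffle children, then rotate through every combination of
    objects condition x block order x word-set order, so each cell is
    filled as evenly as the sample size allows."""
    rng = random.Random(seed)
    ids = list(child_ids)
    rng.shuffle(ids)
    cells = itertools.cycle(
        itertools.product(("Present", "Absent"), BLOCK_ORDERS, WORD_SET_ORDERS)
    )
    return [Assignment(cid, *cell) for cid, cell in zip(ids, cells)]


def trial_schedule(a: Assignment, trials_per_block: int = 10):
    """One uninstructed practice trial, then two blocks of trials in the assigned order."""
    schedule = [("Practice", 0)]
    for block in a.block_order:
        schedule.extend((block, i) for i in range(1, trials_per_block + 1))
    return schedule


assignments = assign_conditions(range(1, 26))
print(sum(a.objects_condition == "Present" for a in assignments))  # 13
```

With 25 children the rotation cannot be perfectly even, so one Objects condition receives one extra child; simple random assignment would allow larger imbalances.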

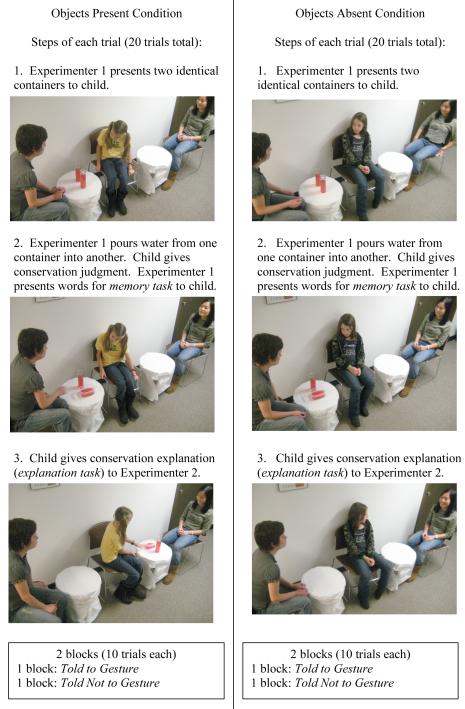

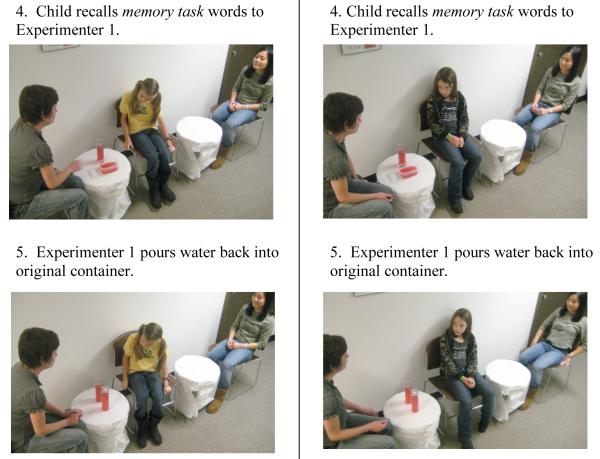

The steps in an individual trial were as follows (see Figure 1). Experimenter 1 showed the child two identical containers with the same amount of water in them. The water levels were adjusted until the child agreed that the two containers held equal amounts of water (step 1). Experimenter 1 then poured the water from one container into a differently shaped container and again asked the child whether the new container and the old container held the same amount of water. Once the child provided an equality judgment, Experimenter 1 gave the child two words to remember.¹ The child repeated the words to the experimenter, who repeated them once again to the child (step 2). In both the Objects Present and Objects Absent conditions, the child then turned around in his or her seat to face Experimenter 2, who was located behind the child (see Figure 1). For children in the Objects Present condition, the conservation objects were on the table and could therefore be gestured at; for those in the Objects Absent condition, the objects were placed on the floor, out of view, and could not be gestured at. We asked children to turn around when giving their explanations so that they would not be able to use the original locations of the objects as placeholders for their gestures. Experimenter 2 reminded the child of the gesture instructions for that trial and then asked the child to explain his or her equality judgment (step 3). After the explanation, the child turned back around to face Experimenter 1, who asked the child to recall the words from the beginning of the trial (step 4). After the child recalled as many words as he or she could, Experimenter 1 returned the water to the original glass and asked the child whether the two glasses had the same amount of water (step 5).

Figure 1.

The steps of an experimental trial for the Objects Present condition (left side) and the Objects Absent condition (right side).

Coding

Two trained coders transcribed all of the child’s speech and gesture and classified strategies expressed in each modality according to a previously developed coding system based on Piaget’s descriptions of children’s conservation responses (Piaget, 1965) and analyses of the responses children commonly give to this question (Church & Goldin-Meadow, 1986). The most common examples of the correct and incorrect strategies that the children expressed in speech and gesture are shown in Table 1. On the first pass through the tape, the child’s speech was transcribed and the strategies expressed were coded without attending to gesture. Over 90% of children’s verbal responses could be coded according to this scheme. On the second pass, the child’s gesture was transcribed and the strategies expressed were coded without attending to speech. Over 99% of children’s gestural responses could be coded according to this scheme. Gestures were also categorized as either deictic (primarily pointing gestures) or iconic. Reliability was assessed on approximately 15% of trials; coder agreement was 96% for strategies expressed in speech, and 94% for strategies expressed in gesture. An answer on the conservation task was considered correct only if the child provided a “same” judgment, along with at least one correct strategy in speech. The number of words correctly recalled on the memory task was tallied for each trial.
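The two scoring rules in this paragraph (a conservation answer counts as correct only with a "same" judgment plus at least one correct spoken strategy; recall is a per-trial count of words) and the percent-agreement reliability measure can be written out as a small Python sketch. The data layout, the lowercased strategy labels (taken from the Correct Strategies rows of Table 1), and the function names are assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Labels for the correct strategies listed in Table 1 (lowercased; hypothetical encoding).
CORRECT_STRATEGIES = {
    "compensation", "reversibility", "add-subtract", "initial equality",
    "identity", "comparison + just", "description + just", "transformation + just",
}


@dataclass
class Trial:
    judgment: str                  # "same" or "different"
    speech_strategies: list[str]   # strategies coded from speech alone (first pass)
    target_words: tuple[str, str]  # the two words given before the explanation
    recalled_words: list[str] = field(default_factory=list)


def conservation_correct(trial: Trial) -> bool:
    """Correct only with a 'same' judgment plus at least one correct spoken strategy."""
    return trial.judgment == "same" and any(
        s in CORRECT_STRATEGIES for s in trial.speech_strategies
    )


def words_recalled(trial: Trial) -> int:
    """Number of distinct target words the child recalled on this trial (0, 1, or 2)."""
    return len(set(trial.target_words) & set(trial.recalled_words))


def percent_agreement(codes_a: list[str], codes_b: list[str]) -> float:
    """Proportion of items on which two coders assigned the same code."""
    if not codes_a or len(codes_a) != len(codes_b):
        raise ValueError("need two non-empty code lists of equal length")
    return sum(a == b for a, b in zip(codes_a, codes_b)) / len(codes_a)


# Made-up example trial; the words are placeholders, not the study's materials.
trial = Trial("same", ["comparison", "reversibility"], ("word1", "word2"), ["word2"])
print(conservation_correct(trial), words_recalled(trial))  # True 1
```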

Table 1.

Examples of correct and incorrect problem-solving strategies children produced in speech and gesture

| Explanation | Speech | Gesture |
|---|---|---|
| CORRECT STRATEGIES | | |
| Compensation | “This one is taller but it’s also wider” | Show the height of one or both containers, then the width of one or both containers |
| Reversibility | “If you poured it back, it would be the same” | Mime “pouring” from the new container back to the original container |
| Add-Subtract | “You didn’t add any and didn’t take any away” | Take-away swiping gesture near the container |
| Initial Equality | “They were the same before you changed them” | N/A |
| Identity | “It’s the same water no matter what” | N/A |
| Comparison + just | “This one is just taller than that one” | N/A |
| Description + just | “The one is just wide” | N/A |
| Transformation + just | “You just poured this one in a different glass” | N/A |
| INCORRECT STRATEGIES | | |
| Description | “This one is tall” | Show the height of one of the containers |
| Comparison | “This one is taller and this one is shorter” | Show the height of both of the containers |
| Transformation | “You poured this in here” | Mime “pouring” from the original container to the new container |

Results

Does gesturing lighten cognitive load?

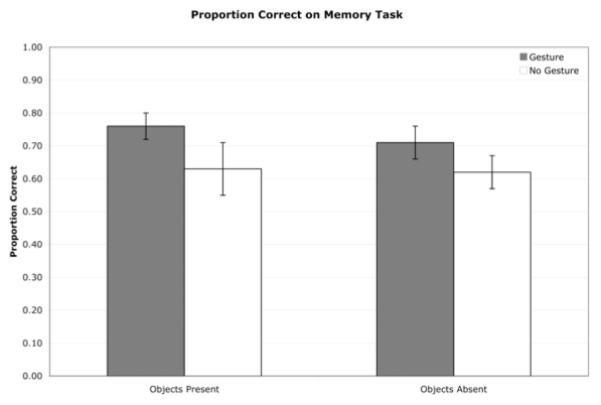

Figure 2 presents the proportion of words recalled on the memory task by children in the four experimental groups. Overall, children recalled more words when they gestured in the Told to Gesture block than when they did not gesture in the Told Not to Gesture block, not only in the Objects Present condition (Told to Gesture: M = 0.76, SE = 0.04; Told Not to Gesture: M = 0.63, SE = 0.08) but also in the Objects Absent condition (Told to Gesture: M = 0.71, SE = 0.05; Told Not to Gesture: M = 0.62, SE = 0.05). Because the outcome for each word on the memory task is binary (0 = did not correctly recall the word, 1 = did correctly recall the word), the data were analyzed with logit mixed models (Jaeger, 2008) in the R statistical package (R Development Core Team, 2009). The best model of the data, fit using the Laplace approximation, included a random effect of subject; controlled for trial (children were slightly worse at recalling the words as the experiment went on) and for the amount of time spent on the explanation task (children were slightly worse at recalling the words during longer explanations); and included Gesture Condition as a predictor (children were less likely to remember the words when Told Not to Gesture than when Told to Gesture) (see Table 2 for the fixed effects of this model; variance = .53, SD = .73 for the subject random effect). Adding Objects Condition (Present vs. Absent) and its interaction with Gesture Condition to the model did not significantly improve its fit (log-likelihood = −503.2, compared to −503.4 for the model without Objects Condition; χ²(2) = 0.45, p > 0.75), and neither term was a significant predictor (coefficient for Objects Condition [= Absent] when added to this model = 0.21, ns; coefficient for the Gesture × Objects interaction when added to this model = −0.06, ns).
All predictors were centered and were not highly correlated with one another (all correlations fell between −0.50 and +0.50). A repeated-measures ANOVA on arcsine-transformed proportions led to the same pattern of results (a significant effect of Gesture Condition, no significant effect of Objects Condition, and no significant interaction). Thus, children performed better on the memory task when they gestured than when they did not gesture, whether or not there were objects present that could be indexed by gesture.
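The model comparison above is a likelihood-ratio test: twice the difference in log-likelihood between two nested models is compared with a chi-square distribution whose degrees of freedom equal the number of added parameters (presumably 2 here, the Objects Condition term and its interaction with Gesture Condition). A minimal Python sketch, limited to df = 2 because that case has a closed-form tail probability, exp(−x/2), so no statistics library is needed:

```python
import math


def likelihood_ratio_test(loglik_reduced: float, loglik_full: float, df: int = 2):
    """Likelihood-ratio test for two nested models fit by maximum likelihood.

    The statistic 2 * (logL_full - logL_reduced) is compared with a chi-square
    distribution whose degrees of freedom equal the number of added parameters.
    Only df = 2 is supported, because its upper-tail probability is exp(-x / 2).
    """
    if df != 2:
        raise NotImplementedError("this sketch only handles df = 2")
    # Nested ML fits should never get worse; clamp tiny negative values from rounding.
    stat = max(0.0, 2.0 * (loglik_full - loglik_reduced))
    p_value = math.exp(-stat / 2.0)
    return stat, p_value


# Log-likelihoods reported in the text (rounded to one decimal place).
stat, p = likelihood_ratio_test(loglik_reduced=-503.4, loglik_full=-503.2)
print(f"chi2(2) = {stat:.2f}, p = {p:.2f}")  # chi2(2) = 0.40, p = 0.82
```

Recomputed from the rounded log-likelihoods, the statistic is 0.40 rather than the reported 0.45, presumably because the reported value was computed from unrounded log-likelihoods; both values give p > 0.75.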

Figure 2.

Proportion of words recalled correctly on the memory task categorized according to whether children gestured vs. did not gesture during the explanation task. Children recalled more words when they gestured than when they did not gesture, regardless of whether the task objects were present or absent. Error bars represent standard errors of the mean.

Table 2.

Summary of the fixed effects in the logit mixed model predicting performance on the memory task (N = 874; log-likelihood = −503.4)

| Predictor | Coefficient | SE | Wald Z | p |
|---|---|---|---|---|
| Intercept | 0.95 | 0.17 | 5.69 | < 0.001 |
| Trial (centered) | −0.04 | 0.01 | −2.87 | < 0.005 |
| Time on Explanation Task (centered) | −0.01 | 0.01 | −1.20 | > 0.20 |
| Gesture Condition = Told Not to Gesture | −0.45 | 0.16 | −2.80 | < 0.01 |
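Because the model works on the log-odds scale, the fixed effects in Table 2 can be back-transformed to probabilities with the inverse logit. This Python sketch assumes treatment coding with Told to Gesture as the baseline level, and it sets the subject random effect and the centered covariates to zero, so the results are predictions for a typical child at an average trial rather than the group means plotted in Figure 2. If Gesture Condition was instead centered, the intercept would represent the average of the two conditions.

```python
import math


def inv_logit(x: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


# Fixed effects from Table 2, on the log-odds scale.
# Assumption: treatment coding with Told to Gesture as the baseline level, and the
# subject random effect and centered covariates (trial, explanation time) set to 0.
INTERCEPT = 0.95
GESTURE_COEF = -0.45  # contrast for the other level, Told Not to Gesture

p_told_to = inv_logit(INTERCEPT)
p_told_not = inv_logit(INTERCEPT + GESTURE_COEF)

print(f"Told to Gesture:     {p_told_to:.2f}")   # 0.72
print(f"Told Not to Gesture: {p_told_not:.2f}")  # 0.62
```

The two predictions (about .72 and .62) fall close to the observed proportions for Told to Gesture and Told Not to Gesture trials, consistent with the negative coefficient marking poorer recall when children were told not to gesture.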

Were children equally likely to comply in the two gesture instruction conditions?

Children complied with our gesture instructions on the large majority of trials. When children were Told Not to Gesture during the explanation task, they complied with this instruction on 84% of trials (SE = 7%) in the Objects Present condition and on 87% of trials (SE = 4%) in the Objects Absent condition. When children were Told to Gesture, they complied on 97% of trials (SE = 2%) in the Objects Present condition and on 90% of trials (SE = 5%) in the Objects Absent condition.²

We are arguing that gesturing lightens the load on working memory. However, inhibiting a natural inclination to gesture when told not to gesture might itself impose a working memory load. If so, our findings would reflect not the beneficial effects of gesturing but the deleterious effects of being told not to gesture. Three pieces of evidence argue against this explanation for our findings.

First, two previous studies on the effect of gesture on cognitive load included a condition in which participants were not given instructions about what to do with their hands (Goldin-Meadow et al., 2001; Wagner et al., 2004). A subset of speakers chose not to gesture on some of these trials. Importantly, the results for trials on which participants chose not to gesture were identical to those for trials on which the same participants were instructed not to gesture. In other words, gesturing lightened the load on working memory more than not gesturing, whether speakers chose not to gesture or were instructed not to gesture.

Second, when we examine the likelihood of following the instructions in our own study, we find that Gesture Condition is a significant predictor but that its effect is qualified by an interaction between Gesture Condition and Objects Condition (see Table 3 for fixed effects; variance = 1.84, SD = 1.35 for the subject random effect). Children were less likely to follow instructions when Told Not to Gesture than when Told to Gesture, but only in the Objects Present condition (84% vs. 97%). In the Objects Absent condition, children were equally likely to follow instructions to gesture (90%) and not to gesture (87%) (see Table 4, top, for fixed effects in the Objects Absent condition [variance = .69, SD = .83 for the subject random effect], and Table 4, bottom, for fixed effects in the Objects Present condition [variance = 4.76, SD = 2.18 for the subject random effect]). If being told not to gesture were a cognitive burden driving our main finding, then children in both Objects Conditions should have been less likely to comply with the Told Not to Gesture instructions than with the Told to Gesture instructions, but they were not. Moreover, if being told not to gesture were driving our main finding, then the memory effect should have been weaker in the Objects Absent condition (where being told not to gesture seemed to be less of a burden) than in the Objects Present condition, but it was not. We found the same pattern of results on the memory task in the Objects Absent condition as in the Objects Present condition (i.e., there was no significant interaction between Gesture Condition and Objects Condition in the model predicting memory task performance).

Table 3.

Summary of the fixed effects in the logit mixed model predicting likelihood of following instructions to gesture and not to gesture (N = 1000; log-likelihood = −290.7)

| Predictor | Coefficient | SE | Wald Z | p |
|---|---|---|---|---|
| Intercept | 2.92 | 0.32 | 9.21 | < 0.001 |
| Gesture Condition | −1.25 | 0.27 | −4.73 | < 0.001 |
| Objects Condition | 0.99 | 0.63 | 1.56 | > 0.10 |
| Gesture Condition × Objects Condition | −1.73 | 0.52 | −3.30 | < 0.001 |

Table 4.

Summary of the fixed effects in the logit mixed models predicting likelihood of following instructions to gesture and not to gesture in the Objects Absent condition, top table (N = 480; log-likelihood = −164.5), and in the Objects Present condition, bottom table (N = 520; log-likelihood = −123.9).

| Predictor | Coefficient | SE | Wald Z | p |
|---|---|---|---|---|
| Intercept | 2.29 | 0.29 | 7.92 | < 0.001 |
| Gesture Condition = Told Not to Gesture | −0.35 | 0.30 | −1.18 | > 0.20 |

| Predictor | Coefficient | SE | Wald Z | p |
|---|---|---|---|---|
| Intercept | 3.91 | 0.70 | 5.59 | < 0.001 |
| Gesture Condition = Told Not to Gesture | −2.14 | 0.44 | −4.92 | < 0.001 |
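For the compliance models in Table 4, each coefficient can also be read as an odds ratio, and its Wald z (coefficient divided by standard error) gives an approximate two-sided p-value. This Python sketch recomputes those quantities from the rounded table entries, so its z values differ slightly from the reported Wald Z column. Reading the coefficient as the contrast for Told Not to Gesture trials follows from the compliance percentages reported in the text; the function name `wald_summary` is hypothetical.

```python
import math

Z_975 = 1.959964  # standard normal quantile for a two-sided 95% interval


def wald_summary(coef: float, se: float):
    """Return Wald z, two-sided p-value, odds ratio, and 95% Wald CI for the odds ratio."""
    z = coef / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    odds_ratio = math.exp(coef)
    ci = (math.exp(coef - Z_975 * se), math.exp(coef + Z_975 * se))
    return z, p, odds_ratio, ci


# Gesture Condition coefficients (and SEs) from the two halves of Table 4.
TABLE_4 = [("Objects Absent", -0.35, 0.30), ("Objects Present", -2.14, 0.44)]

for label, coef, se in TABLE_4:
    z, p, odds_ratio, (low, high) = wald_summary(coef, se)
    print(f"{label}: z = {z:.2f}, p = {p:.2g}, OR = {odds_ratio:.2f}, "
          f"95% CI [{low:.2f}, {high:.2f}]")
```

The 95% interval for the odds ratio excludes 1 in the Objects Present condition (roughly 0.05 to 0.28) but includes 1 in the Objects Absent condition (roughly 0.39 to 1.27), matching the pattern of significance in Table 4.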

Third, consider the children who did not comply with the instructions on some trials (13 children who gestured on some trials in the Told Not to Gesture condition, and 6 children who did not gesture on some trials in the Told to Gesture condition). What mattered was whether children gestured, not which condition they were in: children in the Told Not to Gesture condition recalled more words on trials on which they gestured than children in the Told to Gesture condition did on trials on which they did not gesture, for both present (0.53, SE = 0.12, vs. 0.46, SE = 0.13) and absent (0.80, SE = 0.09, vs. 0.48, SE = 0.22) objects. Only one child failed to comply with the instructions in both conditions (i.e., both when told not to gesture and when told to gesture), which prevented a within-subject analysis of these data.

To summarize, based on the previous literature and analyses of our own data, we argue that what is driving our working memory findings is the act of producing gesture, not the instructions to inhibit gesture.

What types of gestures did children produce?

In addition to pointing at the props used in the conservation trials, that is, producing deictic gestures when explaining their answers to the conservation questions, the children also produced iconic gestures. For example, children would make a pouring motion with their hand, or use a C-hand to indicate the width of the container. Table 5 displays the proportion of trials on which children produced iconic gestures only, deictic pointing gestures only, or both iconic and deictic gestures. Not surprisingly, children were less likely to produce pointing gestures when there were no objects to point at (i.e., in the Objects Absent condition). Nevertheless, gesturing had the same cognitive benefits for working memory in the Objects Absent condition as it had in the Objects Present condition (cf. Figure 2) despite the different distributions of iconic and deictic gestures in the two conditions. Thus, type of gesture appeared to have little impact on gesture’s ability to lighten cognitive load.

Table 5.

The mean proportion of trials in the Told to Gesture block on which the child produced Iconic Gesture(s) Only, Deictic Gesture(s) Only, Both Iconic and Deictic Gestures, or No Gestures (i.e., the child did not follow instructions). Numbers in parentheses are standard errors of the mean.

| | Iconic Only | Deictic Only | Both Iconic and Deictic | No Gesture |
|---|---|---|---|---|
| Objects Present | 0.30 (0.10) | 0.26 (0.08) | 0.41 (0.09) | 0.03 (0.02) |
| Objects Absent | 0.73 (0.08) | 0.05 (0.02) | 0.13 (0.05) | 0.10 (0.05) |

Does the meaning conveyed in gesture affect its ability to lighten cognitive load?

We have found that gesturing while speaking lightens a speaker’s cognitive load. Does the meaning conveyed in gesture, taken in relation to the meaning conveyed in speech, affect this process in any way? It could be the case, for example, that only gestures that reinforce, or directly match, the information conveyed in speech lighten cognitive load. But gestures are often used to convey substantive information that is not conveyed in speech (Goldin-Meadow, 2003). When gesture conveys information that is different from the information conveyed in the accompanying speech, the response is called a gesture-speech mismatch (Church & Goldin-Meadow, 1986). For example, one child in our study produced a comparison strategy in speech (“it’s different because it’s taller,” highlighting only one dimension), while at the same time producing a compensation strategy in gesture (using a flat palm to indicate the height of the glass, followed by a narrow C-hand mirroring the width of the glass, thus highlighting two dimensions; see Table 6 for additional examples of gesture-speech mismatches, along with gesture-speech matches, produced by the children in our study). Does gesture lighten cognitive load when it is produced in a gesture-speech mismatch?

Table 6.

Examples of gesture-speech matches and mismatches

| Relation between Speech and Gesture | Speech Strategy | Gesture Strategy |
|---|---|---|
| Matching Explanation (incorrect speech and incorrect gesture) | Transformation strategy (“the water got poured into this one [i.e., the new container]”) | Transformation strategy (“pouring” gesture from original container toward new container) |
| Matching Explanation (correct speech and correct gesture) | Reversibility strategy (“you could pour the water back into the other cup [i.e., the original container]”) | Reversibility strategy (“pouring” gesture away from new container back to original container) |
| Mismatching Explanation (incorrect speech and incorrect gesture) | Comparison strategy (“this one is taller and that one is shorter”) | Transformation strategy (“pouring” gesture from original container toward new container) |
| Mismatching Explanation (incorrect speech and correct gesture) | Description strategy (“this one has more because it’s tall”) | Reversibility strategy (“pouring” gesture away from new container back to original container) |

In a study of adults asked to explain their answers to math problems they knew how to solve, Wagner and colleagues (2004) found that gestures produced in a gesture-speech mismatch did not lighten cognitive load and, in fact, added to it (adults recalled fewer items on a memory test when they produced gesture-speech mismatches than when they produced gesture-speech matches). But the adults were experts at solving these particular problems. Previous work has shown that although experts and novices in mathematical equivalence both produce gesture-speech mismatches, the types of mismatches they produce are qualitatively different (Goldin-Meadow & Singer, 2003). Experts convey information in the gestural component of their mismatches that, although not conveyed in speech on that particular response, can almost always be found elsewhere in other spoken responses that the experts produce and thus can be considered part of their explicitly spoken repertoires. In contrast, novices convey information in the gestural component of their mismatches that is rarely found in any of their spoken productions––it is uniquely produced in gesture and thus appears to be accessible only implicitly to the manual modality. Of the 12 children who produced mismatches in our study, 8 produced mismatches of this type. For example, one child produced a reversibility explanation in the gestural component of her mismatch, but did not produce reversibility in any of her spoken responses––the information conveyed in her gestures was accessible only to gesture.

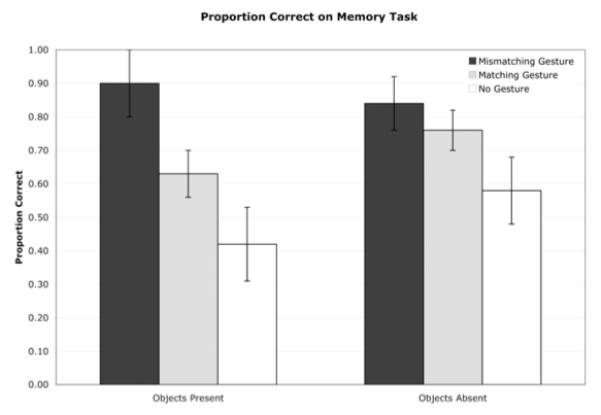

Figure 3 presents the proportion of words recalled on the memory task by the 12 children who produced gesture-speech mismatches (as well as gesture-speech matches) on the explanation task. The proportion of words recalled was greater when children produced gestures that added information to the information conveyed in speech (gesture-speech mismatches) than when they produced gestures that conveyed the same information as conveyed in speech (gesture-speech matches), which, in turn, was greater than when children produced no gestures at all (no gesture), in both the Objects Present condition (0.90, SE = 0.10, vs. 0.63, SE = 0.07, vs. 0.42, SE = 0.11) and the Objects Absent condition (0.84, SE = 0.08, vs. 0.76, SE = 0.06, vs. 0.58, SE = 0.10).

Figure 3.

Proportion of words recalled correctly on the memory task categorized according to whether children produced an explanation containing mismatching gesture, matching gesture, or no gesture. Children recalled more words in the memory task when producing mismatching gesture during the explanation task than when producing matching gesture, which in turn led to better performance than producing no gesture. This pattern holds regardless of whether the task objects were present or absent. Error bars represent standard errors of the mean.

The data were analyzed in the R statistical package using logit mixed models. The best model of the data, fit using the Laplace approximation, included a random effect of subject, controlled for the effect of trial (children were slightly worse at recalling the words as the experiment went on) and for the amount of time spent on the explanation task (children were slightly worse at recalling the words on trials with longer explanations), and included Gesture-Speech Relationship as a predictor (see Table 7 for the fixed effects of this model; variance = .59, SD = .77 for the subject random effect). Adding Objects Condition to the model as a predictor did not significantly improve the model's fit (log-likelihood = −240.0, compared to log-likelihood = −240.7 for the model without Objects Condition; χ²(2) = 1.45, p > 0.20), and Objects Condition was not a significant predictor (coefficient for Objects Condition [= Absent] when added to this model = −0.59, ns). A repeated-measures ANOVA on arcsine-transformed proportions led to the same pattern of results (a significant effect of Gesture-Speech Relationship, no significant effect of Objects Condition, and no significant interaction). Pairwise comparisons among the three gesture-speech relationship types showed that memory task performance on trials with a gesture-speech mismatch, a gesture-speech match, and no gesture all differed significantly from one another (performance was better for mismatches than for matches, and better for both than for no gesture).
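The paragraph above reports its tests but not the arithmetic behind them. As a reading aid, and not a reanalysis of the authors' R models, the Python sketch below shows how a likelihood-ratio statistic follows from the log-likelihoods of two nested models, and what an arcsine square-root transform does to a proportion. The helper names are hypothetical, and we assume that "arcsine transformation" refers to the usual asin(√p) transform for proportions. Because the quoted log-likelihoods are rounded to one decimal, the recomputed statistic (1.40) differs slightly from the reported χ²(2) = 1.45; either value gives p of roughly 0.5, consistent with the reported p > 0.20.

```python
import math

# Hypothetical helpers for illustration; not taken from the original analysis.


def likelihood_ratio_test(loglik_reduced: float, loglik_full: float, df: int) -> tuple[float, float]:
    """Compare two nested models fit by maximum likelihood.

    Returns the chi-square statistic 2 * (LL_full - LL_reduced) and its
    upper-tail p-value. The closed-form survival function used here holds
    only for even degrees of freedom, which covers the df = 2 test reported.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this sketch only handles positive, even df")
    stat = 2.0 * (loglik_full - loglik_reduced)
    half = stat / 2.0
    # For df = 2k: P(X >= x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!
    p = math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    return stat, p


def arcsine_transform(p: float) -> float:
    """Arcsine square-root transform of a proportion, asin(sqrt(p)), in radians."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a proportion in [0, 1]")
    return math.asin(math.sqrt(p))


if __name__ == "__main__":
    # Rounded log-likelihoods quoted in the text (without / with Objects Condition).
    stat, p = likelihood_ratio_test(loglik_reduced=-240.7, loglik_full=-240.0, df=2)
    print(f"chi2(2) = {stat:.2f}, p = {p:.2f}")
    # Illustration only: an ANOVA would use each child's per-cell proportions,
    # not these group means from the Objects Present condition.
    for label, prop in [("mismatch", 0.90), ("match", 0.63), ("no gesture", 0.42)]:
        print(f"{label}: {prop:.2f} -> {arcsine_transform(prop):.3f} rad")
```

The transform stretches proportions near 0 and 1, which is why it is conventionally applied before running an ANOVA on proportion data; a logit mixed model instead models the binary recall outcomes directly.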

Table 7.

Summary of the fixed effects in the logit mixed model predicting memory task performance from Gesture-Speech Relationship; Gesture-Speech Match is the comparison group (N = 412; log-likelihood = −240.7)

| Predictor | Coefficient | SE | Wald Z | p |
|---|---|---|---|---|
| Intercept | 0.91 | 0.28 | 3.27 | < 0.01 |
| Trial (centered) | −0.05 | 0.02 | −2.44 | < 0.05 |
| Gesture-Speech Relationship: Mismatch | 1.05 | 0.49 | 2.14 | < 0.05 |
| Gesture-Speech Relationship: No Gesture | −0.66 | 0.24 | −2.77 | < 0.01 |
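Table 7 reports its coefficients on the log-odds scale. The hedged Python sketch below (hypothetical helper names; rounded inputs copied from the table) recomputes each Wald z as coefficient / SE with a two-sided normal p-value, and exponentiates the coefficients into odds ratios. Because the tabled values are rounded, the recomputed z values differ slightly from the printed ones (for example, −0.66 / 0.24 = −2.75 rather than −2.77, and −0.05 / 0.02 = −2.50 rather than −2.44), but every recomputed p-value falls on the same side of the thresholds shown. Read this way, and conditional on the subject random effect, a mismatch multiplies the odds of recalling a word by about exp(1.05) ≈ 2.86 relative to a match, and producing no gesture multiplies them by about exp(−0.66) ≈ 0.52, holding the other predictors in the model constant.

```python
import math

# Coefficients and standard errors as printed (rounded) in Table 7.
# Reference level for Gesture-Speech Relationship is Match.
TABLE_7 = {
    "Intercept": (0.91, 0.28),
    "Trial (centered)": (-0.05, 0.02),
    "Gesture-Speech Relationship: Mismatch": (1.05, 0.49),
    "Gesture-Speech Relationship: No Gesture": (-0.66, 0.24),
}


def wald_test(coef: float, se: float) -> tuple[float, float]:
    """Return the Wald z statistic and its two-sided normal p-value (hypothetical helper)."""
    z = coef / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals P(|Z| >= |z|) for a standard normal Z
    return z, p


def odds_ratio(coef: float) -> float:
    """Convert a logit coefficient into a multiplicative change in the odds of recall."""
    return math.exp(coef)


if __name__ == "__main__":
    for name, (coef, se) in TABLE_7.items():
        z, p = wald_test(coef, se)
        # For the intercept, exp(coef) is the baseline odds, not a ratio.
        label = "baseline odds" if name == "Intercept" else "odds ratio"
        print(f"{name}: z = {z:.2f}, p = {p:.3f}, {label} = {odds_ratio(coef):.2f}")
```

Note that the model described in the text also controlled for explanation time, which Table 7 does not list, so the intercept's baseline odds should not be read as a directly observed recall rate.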

Our results suggest that producing gesture-speech mismatches has different effects on cognitive load for experts and for novices. For the adult experts in the Wagner et al. (2004) study, producing gesture-speech mismatches resulted in worse performance on a concurrent working memory task than producing gesture-speech matches. These experts always had in their repertoires the explicit spoken equivalent of the strategy that they expressed in the implicit gestural component of their mismatches. It is therefore possible that, when producing mismatches while explaining how they solved the math problems, they also implicitly activated the spoken version of this strategy, which would have added to their load. In contrast, for the child novices in our study, producing gesture-speech mismatches resulted in better performance on a concurrent working memory task than producing gesture-speech matches. These novices could not implicitly activate a spoken equivalent of the strategy they expressed in the gestural component of their mismatches, because this strategy was not part of their spoken repertoires. This difference might explain why the children’s mismatches were more effective in lightening cognitive load than the adults’ mismatches (although it does not explain why the children’s mismatches were more effective in lightening cognitive load than their matches).

Although we do not yet understand the mechanism underlying this phenomenon, our data make it clear that the cognitive cost of producing information in gesture that is not produced in speech differs for experts and for novices, and may depend on the nature of the knowledge expressed in gesture. Expressing information in gesture that is accessible only to gesture (i.e., implicit knowledge) appears to free up cognitive resources, whereas expressing information in gesture that is also accessible in speech (i.e., explicit knowledge) appears to consume them.

Discussion

Gesturing is known to convey information that benefits communication partners. Moreover, under certain circumstances, gesturing has been shown to provide cognitive benefits to speakers as well as to listeners. Our study was designed to explore how general the cognitive benefits of gesturing are for speakers. Specifically, we asked whether gesturing about non-visible entities reduces the cognitive load associated with speaking in the same way that gesturing about visible entities has been shown to do. Previous work has found that gesturing reduces cognitive load when speakers explain their answers to a math problem that is visible during the explanation (Goldin-Meadow et al., 2001; Wagner et al., 2004). Our study replicated this effect on a new task (conservation) and extended it to absent objects. We found that gesturing, as opposed to not gesturing, during a conservation explanation task results in better performance on a concurrent secondary memory task even when the objects described are absent and thus cannot be indexed by pointing gestures. During their explanations, the speakers often produced iconic gestures, whose meanings depend less on the presence of objects than the meanings of pointing gestures do. We were thus able to ask whether iconic gestures also lighten the speaker’s cognitive load. We found that they do, again even when the objects described are absent. Overall, our results indicate that both iconic and deictic gestures relieve the speaker’s cognitive burden whether the focus of the talk is on present or absent objects.

What do these observations tell us about the mechanism underlying the cognitive benefit that gesturing confers? Although gesturing can serve as a tool for indexing objects that are present in the environment, it cannot serve this indexing function for objects that are not present. As a result, a mechanism in which gesture reduces cognitive load by tying speakers’ words to real objects in the visible environment cannot fully account for gesture’s cognitive benefits. Gesturing must be serving some other function that confers cognitive benefits when objects are absent.

One possibility is that gesturing helps speakers situate the representational information conveyed by their words in a mental model of the real objects they originally witnessed. When listening to language, listeners automatically make eye saccades to spaces where the objects that are being described were previously located (Spivey & Geng, 2001). These eye movements are taken as evidence that people build mental models of visual scenes that they can refer back to, even when the scene is no longer visible. Producing gestures about absent objects might help speakers retrieve or use information about the previously presented objects by connecting their words to their memory of those objects. Our data make it clear that gesture can fulfill this kind of function in a way that is not tied to locations in actual space, as the children in our study produced their explanations (and their gestures) in a location that was different from the location where the event had originally taken place.

Another possibility is that gesturing helps speakers make absent objects present in a motoric sense. Hostetter and Alibali (2008) argue that gesture production is the result of motor simulations of the information under discussion. If this is the case, producing gestures about absent objects might help speakers simulate (and thus more easily use information about) the physical properties of the objects under discussion. In other words, gestures would provide speakers not only with spatial information about the visual scene they have just witnessed (as suggested in the preceding paragraph) but also with motor information that could benefit them (see also Beilock & Goldin-Meadow, 2010; Goldin-Meadow & Beilock, 2010).

A final possibility is that gesturing provides speakers with a very different representational format from speech––gesture conveys information holistically, whereas speech conveys information in a segmented fashion (McNeill, 1992). As a result, the representations that underlie gesture are likely to be more global than those underlying speech. Because of its global properties, gesture can provide an overarching framework that serves to organize ideas conveyed in speech, in effect chunking mental representations to reduce the load on working memory. Gesturing may thus bring a different kind of mental coherence to the representation of an intended message, one that increases the efficiency of representation, compared to speech without gesture (see also Alibali, Kita & Young, 2000, who argue that gesturing helps speakers package information into units suitable for speech). Under this view, gesture works synergistically with speech to lighten cognitive load.

These explanations all assume that gesture’s cognitive benefits stem, at least in part, from the fact that gesture is meaningful (as opposed to simply indexical). Our findings support this assumption: the match (or mismatch) between the information conveyed in gesture and in its accompanying speech affected the extent to which gesture lightened cognitive load. We found that mismatching gesture (gesture conveying information that differed from the information conveyed in speech) lightened load significantly more than matching gesture (gesture conveying the same information as speech). Gesture’s impact on working memory thus differed as a function of its relationship to speech. There is, in fact, evidence from studies of the neural correlates of gesture comprehension (event-related potentials) that mismatching gesture affects speech processing differently than matching gesture does (Kelly, Kravitz & Hopkins, 2004). At early stages of sensory/phonological processing (P1-N1 and P2), speech accompanied by gestures conveying different information (e.g., gesturing thin while saying “tall” to describe a tall, thin container) is processed differently from speech accompanied by gestures conveying the same information (gesturing tall while saying “tall”).³ Kelly and colleagues suggest that gesture creates a visuospatial context that influences the early processing of the speech it accompanies. Mismatching gestures appear to provide a different context from matching gestures, at least when the gestures are seen. Future work is needed to determine whether this phenomenon also holds when the gestures are produced.

One unexpected finding from our study is that mismatching gestures appear to function differently in novices and in experts. Wagner et al. (2004) found that when experts produced gesture-speech mismatches, they remembered less on a concurrent secondary memory task than when they produced matches. In contrast, when the novices in our study produced gesture-speech mismatches, they remembered more on a concurrent secondary memory task than when they produced matches.⁴ Along these lines, it is worth noting that children have been shown to profit more from instruction that contains two different strategies, one in speech and the other in gesture (i.e., mismatches), than from instruction that contains a single strategy expressed in both speech and gesture (i.e., matches; Singer & Goldin-Meadow, 2005). Importantly, this learning effect is not due to the number of strategies children are taught (children profit least when the two different strategies are both taught in speech); it seems instead to be due to the juxtaposition of the two modalities. Information conveyed across gesture and speech is a more effective teaching tool than the same information conveyed entirely in speech. Thus, producing mismatches is particularly effective in lightening the novice’s cognitive load (as we have shown), and seeing mismatches is particularly effective in teaching the novice new information, an intriguing parallel that warrants additional study.

In conclusion, our study explored the mechanism responsible for the beneficial effect that gesture confers on working memory. We found that gesturing lightens a speaker’s cognitive load when the speaker talks about objects, not only when the objects are present but also when they are absent and cannot be directly indexed by the gestures. In addition, gesturing benefits working memory even when the gestures are iconic and thus less dependent on physical context than pointing gestures. Gesturing therefore confers its benefits through more than simply indexing words to the visible world; some other mechanism(s), involving the meaningful information expressed in the gestures, must also be at work. Finally, we found that the cognitive benefit conferred by gesturing is greater when novices produce gestures that add to the information expressed in speech than when they produce gestures that convey the same information as speech, suggesting that it is gesture’s meaningfulness that gives it the ability to affect working memory load.

Acknowledgements

This research was supported by grant number R01 HD47450 from the NICHD to Goldin-Meadow and grant number SBE 0541957 from the NSF. Thanks to Mary-Anne Decatur, Hector Santana and Anna Sarfaty for help with data collection and coding. We also thank Zac Mitchell, Sam Larson and Terina Yip for additional help with data collection, Matt Carlson for help with statistical analyses, and Eric Wat for help with the photos. We thank the students, teachers and principals for their participation in our research.

Footnotes

1. Words used on the memory task were identical to those used in a previous study of the cognitive benefits of gesture conducted on children in the same age range (Goldin-Meadow et al., 2001). The words were monosyllabic, three-letter concrete nouns, taken from a list of words frequently used by children (Wepman & Hass, 1969).

2. In all of the working memory analyses, we include data only from the trials on which children followed instructions on the explanation task. Thus, for example, if a child produced a gesture on a trial on which he was told not to gesture, this trial was not included in the analyses.

3. It is important to note that, at late stages of processing (N400, known to be sensitive to incongruent semantic information, Kutas & Hillyard, 1984), gestures conveying information that is different from, but complementary to, information conveyed in speech (gesturing thin while saying “tall”) are processed no differently from gestures that convey the same information as speech (gesturing tall while saying “tall”) (Kelly et al., 2004). Neither one produces a large negativity at 400 ms; that is, neither one is recognized as a semantic anomaly. However, gestures conveying information that is truly incongruent with the information conveyed in speech (gesturing short while saying “tall”) do produce a large negativity at 400 ms (Kelly et al., 2004). Thus, the information conveyed in our so-called “mismatching” gestures seems to be seamlessly integrated with the information conveyed in speech at higher levels of semantic processing.

4. Note, however, that the participants in these studies varied on a number of dimensions: the experts were adults solving factoring problems; the novices were children solving conservation problems. Additional work is needed to determine whether the crucial difference is, indeed, between experts and novices.

References

- Alibali M, Flevares L, Goldin-Meadow S. Assessing knowledge conveyed in gesture: Do teachers have the upper hand? Journal of Educational Psychology. 1997;89:183–193.

- Alibali MW, Kita S, Young AJ. Gesture and the process of speech production: We think, therefore we gesture. Language and Cognitive Processes. 2000;15(6):593–613.

- Ballard DH, Hayhoe MM, Pook PK, Rao RPN. Deictic codes for the embodiment of cognition. Behavioral and Brain Sciences. 1997;20:723–742. doi: 10.1017/s0140525x97001611.

- Bavelas JB, Chovil N, Lawrie DA, Wade A. Interactive gestures. Discourse Processes. 1992;15:469–489.

- Beattie GW, Shovelton HK. Do iconic hand gestures really contribute anything to the semantic information conveyed by speech? An experimental investigation. Semiotica. 1999;123(1-2):1–30.

- Beilock S, Goldin-Meadow S. Gesture grounds thought in action. Under review: 2010.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture reveals implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007 doi: 10.1037/0096-3445.136.4.539. in press.

- Church RB, Ayman-Nolley S, Mahootian S. The role of gesture in bilingual education: Does gesture enhance learning? International Journal of Bilingual Education & Bilingualism. 2004;7(4):303–320.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23(1):43–71. doi: 10.1016/0010-0277(86)90053-3.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Glenberg AM, Robertson DA. Indexical understanding of instructions. Discourse Processes. 1999;28(1):1–26.

- Goldin-Meadow S, Beilock S. Action’s influence on thought: The case of gesture. Perspectives on Psychological Science. 2010 doi: 10.1177/1745691610388764. in press.

- Goldin-Meadow S, Cook SW, Mitchell ZA. Gesturing gives children new ideas about math. Psychological Science. 2009;20(3):267–272. doi: 10.1111/j.1467-9280.2009.02297.x.

- Goldin-Meadow S, Nusbaum H, Kelly SD, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12(6):516–522. doi: 10.1111/1467-9280.00395.

- Goldin-Meadow S, Sandhofer CM. Gesture conveys substantive information about a child’s thoughts to ordinary listeners. Developmental Science. 1999;2:67–74.

- Goldin-Meadow S, Singer MA. From children’s hands to adults’ ears: Gesture’s role in the learning process. Developmental Psychology. 2003;39(3):509–520. doi: 10.1037/0012-1649.39.3.509.

- Goldin-Meadow S, Wein D, Chang C. Assessing knowledge through gesture: Using children’s hands to read their minds. Cognition and Instruction. 1992;9(3):201–219.

- Hostetter AB, Alibali MW. Visible embodiment: Gestures as simulated action. Psychonomic Bulletin & Review. 2008;15(3):495–514. doi: 10.3758/pbr.15.3.495.

- Iverson JM, Goldin-Meadow S. Why people gesture as they speak. Nature. 1998;396:228. doi: 10.1038/24300.

- Iverson JM, Goldin-Meadow S. The resilience of gesture in talk: Gesture in blind speakers and listeners. Developmental Science. 2001;4:416–422.

- Jaeger TF. Categorical data analysis: Away from ANOVAs (transformation or not) and towards logit mixed models. Journal of Memory and Language. 2008;59:434–446. doi: 10.1016/j.jml.2007.11.007.

- Kelly SD, Kravitz C, Hopkins M. Neural correlates of bimodal speech and gesture comprehension. Brain and Language. 2004;89:253–260. doi: 10.1016/S0093-934X(03)00335-3.

- Kutas M, Hillyard SA. Brain potentials during reading reflect word expectancy and semantic association. Nature. 1984;307:161–163. doi: 10.1038/307161a0.

- McNeill D. Hand and mind: What gestures reveal about thought. University of Chicago Press; Chicago: 1992.

- Morsella E, Krauss RM. The role of gestures in spatial working memory and speech. American Journal of Psychology. 2004;117(3):411–424.

- Piaget J. The child’s conception of number. W. W. Norton and Company; New York: 1965.

- Ping RM, Goldin-Meadow S. Hands in the air: Using ungrounded iconic gestures to teach children conservation of quantity. Developmental Psychology. 2008;44(5):1277–1287. doi: 10.1037/0012-1649.44.5.1277.

- R Development Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing; Vienna, Austria: 2009. URL: http://www.R-project.org.

- Singer MA, Goldin-Meadow S. Children learn when their teacher’s gestures and speech differ. Psychological Science. 2005;16(2):85–89. doi: 10.1111/j.0956-7976.2005.00786.x.

- Spivey M, Geng J. Oculomotor mechanisms activated by imagery and memory: Eye movements to absent objects. Psychological Research. 2001;65:235–241. doi: 10.1007/s004260100059.

- Valenzeno L, Alibali MW, Klatzky R. Teachers’ gestures facilitate students’ learning: A lesson in symmetry. Contemporary Educational Psychology. 2003;28(2):187–204.

- Wagner S, Nusbaum H, Goldin-Meadow S. Probing the mental representation of gesture: Is handwaving spatial? Journal of Memory and Language. 2004;50:395–407.

- Wepman JM, Hass W. A spoken word count (children—ages 5, 6, and 7). Language Research Associates; Chicago: 1969.

- Wesp R, Hesse J, Keutmann D, Wheaton K. Gestures maintain spatial imagery. American Journal of Psychology. 2001;114:591–600.