Abstract

Objective

The purpose of this study is to assess the individual effect of reverberation and noise, as well as their combined effect, on speech intelligibility by cochlear implant (CI) users.

Design

Sentence stimuli corrupted by reverberation, noise, and reverberation + noise are presented to 11 CI listeners for word identification. They are tested in two reverberation conditions (T60 = 0.6 s, 0.8 s), two noise conditions (SNR = 5 dB, 10 dB), and four reverberation + noise conditions.

Study sample

Eleven CI users participated.

Results

Results indicated that reverberation degrades speech intelligibility to a greater extent than additive noise (speech-shaped noise), at least for the SNR levels tested. The combined effects were greater than those introduced by either reverberation or noise alone.

Conclusions

The effect of reverberation on speech intelligibility by CI users was found to be larger than that by noise. The results from the present study highlight the importance of testing CI users in reverberant conditions, since testing in noise-alone conditions might underestimate the difficulties they experience in their daily lives where reverberation and noise often coexist.

Keywords: Cochlear implants, ACE, channel selection, reverberation

Room reverberation, as produced by early (and direct) and late reflections of the signal, blurs temporal and spectral cues and flattens formant transitions (see review by Nabelek, 1993). The late reflections tend to fill the gaps in the temporal envelope of speech (overlap masking) and reduce the low-frequency envelope modulations important for speech intelligibility. Unlike reverberation, noise is additive and affects speech differently (see review by Assmann & Summerfield, 2004). Noise masks weak consonants to a greater degree than higher-intensity vowels, but unlike reverberation, this masking does not depend on the energy of the preceding segments (Nabelek et al, 1989). Given the largely complementary nature of the masking of speech by reverberation and noise, it is not surprising that the combined effects of reverberation and noise are more detrimental to speech intelligibility than the effects of either reverberation or noise alone (e.g. Nabelek & Mason, 1981).

While much is known about the combined effects of reverberation and noise on speech intelligibility by young children (Neuman et al, 2010; Neuman & Hochberg, 1983), hearing-impaired listeners (e.g. Duquesnoy & Plomp, 1980; Nabelek & Mason, 1981; George et al, 2010), and normal-hearing listeners (Neuman et al, 2010; George et al, 2008), little is known about such effects in CI users. Both reverberation and noise pose a great challenge for CI listeners, as they rely primarily on envelope information contained in a limited number of spectral bands. Reverberation greatly reduces the modulations present in the envelopes, making it extremely challenging for CI users to extract useful information about speech (e.g. F0 modulations, location of syllable/word boundaries). In addition, speech recognition in noise is particularly challenging in CIs owing to the limited number of channels of information received by CI users (e.g. Friesen et al, 2001). Taken together, degraded temporal envelope information combined with poor spectral resolution is expected to result in poor speech understanding by CI users in daily settings where noise and reverberation coexist. This hypothesis is tested in the present study.

The impact of spectral resolution on speech recognition was investigated by Poissant et al (2006) using sentence stimuli containing varying amounts of reverberation and masking noise. Reverberant stimuli were vocoded into 6–24 channels and presented to normal-hearing listeners for identification. A substantial drop in performance was noted in reverberant conditions (T60 = 0.152, 0.266, and 0.425 s) when speech was vocoded into six channels. In contrast, performance remained relatively high (> 75% correct) in all reverberant conditions (including T60 = 0.425 s) when speech was processed into 12 or more channels. A substantial decrement in performance was observed with 6-channel vocoded stimuli when noise was added, even at the 8-dB SNR level. The outcome of the Poissant et al study with vocoded speech suggests that poor spectral resolution is likely to be a dominant factor contributing to the poor performance of CI users in reverberant environments. Other factors found to contribute included the source-to-listener distance, with more favorable performance noted at short (< 3 m) distances (Whitmal & Poissant, 2009). The studies by Poissant and colleagues used vocoded speech and normal-hearing listeners, rather than CI listeners, to assess the effects of reverberation and masking noise on speech intelligibility. These studies provided useful insights, but the true effect of reverberation on speech recognition by CI users remained unclear. A recent study by Kokkinakis et al (2011) assessed word recognition by CI users as a function of reverberation time. Performance was found to degrade exponentially as reverberation time increased. Mean recognition scores dropped from 90% correct in anechoic conditions to 20% correct in highly reverberant conditions (T60 = 1.0 s). Although all CI users who participated in that study had 20–22 active electrodes, with the ACE strategy selecting 8–10 maxima in each stimulation cycle, their performance dropped by approximately 30% even in a mildly reverberant condition (T60 = 0.3 s). A subsequent study with CI users (Kokkinakis & Loizou, 2011) indicated that the degradation of speech intelligibility in reverberant conditions is caused primarily by self-masking effects that give rise to flattened formant transitions.

Much work has been done assessing the impact of competing talkers or steady additive noise on speech recognition by CI users (e.g. Stickney et al, 2004), but not in conditions where reverberation was also present. To our knowledge, no study has examined the combined effect of reverberation and noise on speech intelligibility by CI listeners. Such a study is important, as it informs us about the difficulties CI users experience in their daily lives, where reverberation, in addition to noise, is present in enclosed spaces. The aim of the present study is twofold: (1) to measure the combined effects of noise and reverberation on speech intelligibility by CI listeners, and (2) to determine which of the two has a more detrimental effect on speech intelligibility. Two reverberation times (T60 = 0.6 s and T60 = 0.8 s) and two SNR levels (5 and 10 dB) are used. Of the two reverberation times, one (T60 = 0.6 s) is allowable in US classrooms according to the ANSI S12.60 (2002) standard, while the other (T60 = 0.8 s) exceeds the ANSI recommended values even for larger classrooms.

Methods

Subjects

Eleven adult CI users participated in this study. All participants were native speakers of American English and post-lingually deafened. Their ages ranged from 48 to 77 years (M = 64 years), and they were paid for their participation. All eleven subjects used a Nucleus (Cochlear Ltd.) device routinely and had at least one year of experience with their device. Detailed biographical data for the subjects are given in Table 1.

Table 1.

Biographical data of the CI users tested.

| Subject | Age (years) | Gender | Years implanted (L/R) | Number of active electrodes | CI processor | Etiology of hearing loss |
|---|---|---|---|---|---|---|
| S1 | 48 | F | 8/8 | 22 | ESPrit 3G | Unknown |
| S2 | 72 | F | 9/9 | 22 | Freedom | Unknown |
| S3 | 77 | M | 6/6 | 22 | Freedom | Hereditary |
| S4 | 68 | M | 9/9 | 20 | ESPrit 3G | Noise |
| S5 | 63 | F | 8/8 | 20 | ESPrit 3G | Rubella |
| S6 | 56 | F | 4/7 | 22 | Freedom | Unknown |
| S7 | 70 | F | 6/- | 22 | Freedom | Unknown |
| S8 | 52 | F | 2/1 | 22 | Nucleus 5 | Unknown |
| S9 | 65 | F | 15/8 | 22 | Freedom | Unknown |
| S10 | 60 | F | 6/9 | 22 | Freedom | Hereditary |
| S11 | 70 | F | 3/1 | 20 | Nucleus 5 | Unknown |

Research processor

Three participants were using the Cochlear ESPrit 3G device, six were using the Nucleus Freedom device, and two were using the Nucleus 5 speech processor. All eleven were temporarily fitted with the SPEAR3¹ research processor programmed with the ACE speech coding strategy (Vandali et al, 2000).

Stimuli

The IEEE sentence corpus (IEEE, 1969), taken from the CD-ROM accompanying Loizou (2007), was used for the listening tests. Sentences in the IEEE corpus contain 7–12 words, and in total there are 72 lists (10 sentences per list) produced by a male speaker. The root-mean-square (RMS) levels of all sentences were equalized to the same value (65 dB). All sentence stimuli were originally recorded at a sampling frequency of 25 kHz and were down-sampled to 16 kHz for our study.
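As a rough illustration of the level equalization and sampling-rate conversion just described, the Python sketch below scales each sentence to a common RMS value and derives the integer up/down factors for a rational resampler. It is a sketch under stated assumptions, not the procedure actually used: the digital `target_rms` corresponding to the 65 dB presentation level is not given in the text, and the helper names are hypothetical.

```python
import math
import numpy as np

def rms(x):
    """Root-mean-square value of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    return float(np.sqrt(np.mean(x ** 2)))

def equalize_rms(signals, target_rms):
    """Scale every signal so that its RMS equals target_rms.

    target_rms is a hypothetical digital reference; how it maps to the
    65 dB presentation level depends on the playback calibration.
    """
    return [np.asarray(s, dtype=float) * (target_rms / rms(s)) for s in signals]

def resample_ratio(fs_in, fs_out):
    """Smallest integer pair (up, down) with fs_out / fs_in == up / down."""
    g = math.gcd(int(fs_in), int(fs_out))
    return int(fs_out) // g, int(fs_in) // g

# Sentences: 25 kHz -> 16 kHz; RIRs: 48 kHz -> 16 kHz
print(resample_ratio(25000, 16000))  # (16, 25)
print(resample_ratio(48000, 16000))  # (1, 3)
```

The resulting `(up, down)` pairs could be passed to a polyphase resampler such as `scipy.signal.resample_poly(x, up, down)`, which includes an anti-aliasing low-pass filter; the resampler actually used in the study is not stated.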

Simulated reverberant conditions

Room impulse responses (RIRs) recorded by Neuman et al (2010) were used to simulate the reverberant conditions. To measure these RIRs, Neuman et al used a Tannoy CPA5 loudspeaker inside a rectangular reverberant room with dimensions of 10.06 m × 6.65 m × 3.4 m (length × width × height) and a total volume of 227.5 m³. The source-to-microphone distance was 5.5 m, which is beyond the critical distance. The original RIRs were sampled at 48 kHz and down-sampled to 16 kHz for our study. The overall reverberant characteristics of the room were altered by hanging absorptive panels from hooks mounted on the walls close to the ceiling. Before modification, the room had an average reverberation time (averaged across 0.5, 1, and 2 kHz) of 0.8 s and a direct-to-reverberant ratio (DRR) of −3.00 dB. With nine panels hung, the average reverberation time was reduced to approximately 0.6 s, with a DRR of −1.83 dB.
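The room descriptors quoted above (T60, DRR, and the critical distance) can be estimated from an RIR roughly as follows. This is a hedged sketch: Schroeder backward integration with a T20 fit, a ±2.5 ms direct-sound window for the DRR, and the diffuse-field approximation r_c ≈ 0.057·√(V/T60) are common textbook choices assumed here, not necessarily the methods Neuman et al (2010) used, and all function names are hypothetical.

```python
import numpy as np

def schroeder_t60(rir, fs, hi_db=-5.0, lo_db=-25.0):
    """Estimate T60 (s) from an RIR by Schroeder backward integration.

    A straight line is fitted to the energy decay curve between hi_db and
    lo_db (a T20 fit) and extrapolated to a 60 dB decay.
    """
    energy = np.asarray(rir, dtype=float) ** 2
    edc = np.cumsum(energy[::-1])[::-1]            # backward-integrated energy
    edc_db = 10.0 * np.log10(edc / edc[0])         # 0 dB at t = 0
    idx = np.nonzero((edc_db <= hi_db) & (edc_db >= lo_db))[0]
    slope, _ = np.polyfit(idx / fs, edc_db[idx], 1)  # dB per second (negative)
    return -60.0 / slope

def drr_db(rir, fs, half_window_ms=2.5):
    """Direct-to-reverberant ratio (dB): energy within +/- half_window_ms
    of the strongest peak versus all remaining energy (assumed window)."""
    h = np.asarray(rir, dtype=float)
    peak = int(np.argmax(np.abs(h)))
    w = int(round(half_window_ms * 1e-3 * fs))
    lo, hi = max(peak - w, 0), peak + w + 1
    direct = np.sum(h[lo:hi] ** 2)
    reverberant = np.sum(h[:lo] ** 2) + np.sum(h[hi:] ** 2)
    return float(10.0 * np.log10(direct / reverberant))

def critical_distance(volume_m3, t60_s):
    """Diffuse-field estimate r_c ~= 0.057 * sqrt(V / T60) in metres,
    for an omnidirectional source (Sabine-based approximation)."""
    return float(0.057 * np.sqrt(volume_m3 / t60_s))

# Room described above before the panels were hung: V = 227.5 m^3, T60 = 0.8 s
print(round(critical_distance(227.5, 0.8), 2))  # 0.96 (m), far below 5.5 m
```

Because the reported T60 values are averages over the 0.5, 1, and 2 kHz bands, a closer match would band-pass filter the RIR around each of those frequencies before calling `schroeder_t60` and average the results.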

To generate the reverberant (R) stimuli, the RIRs obtained for each reverberation condition were convolved with the IEEE sentences (recorded in anechoic conditions) using standard linear convolution in MATLAB. Speech-shaped noise (SSN) was added to the anechoic and reverberant signals at 5 dB and 10 dB SNR to generate the noisy (N) and reverberation + noise (R + N) stimuli, respectively. Note that for the R + N stimuli, the reverberant signal served as the target signal in the SNR computation². For that reason, we refer to these conditions as reverberant SNR (RSNR) conditions.
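A minimal Python sketch of this stimulus construction, assuming NumPy: full linear convolution with the RIR gives the R stimulus, and speech-shaped noise is scaled against whichever signal serves as the target, so that for R + N the reverberant signal sets the SNR (the RSNR). How the SSN was generated is not stated in the text; the random-phase method below is one common choice and is an assumption, as are the function names.

```python
import numpy as np

def speech_shaped_noise(speech, n_samples, rng):
    """Random-phase noise with the long-term magnitude spectrum of `speech`.

    An assumed SSN recipe; the study does not describe its own.
    """
    mag = np.abs(np.fft.rfft(speech, n=n_samples))  # zero-pads speech if needed
    phase = rng.uniform(0.0, 2.0 * np.pi, size=mag.shape)
    return np.fft.irfft(mag * np.exp(1j * phase), n=n_samples)

def add_noise_at_snr(target, noise, snr_db):
    """Scale `noise` so that 10*log10(P_target / P_noise) == snr_db, then add."""
    p_target = np.mean(target ** 2)
    p_noise = np.mean(noise ** 2)
    gain = np.sqrt(p_target / (p_noise * 10.0 ** (snr_db / 10.0)))
    return target + gain * noise

def make_stimuli(clean, rir, snr_db, seed=0):
    """Return (R, N, R + N) stimuli for one sentence and one RIR.

    For R + N the reverberant signal is the SNR reference (i.e. an RSNR).
    """
    rng = np.random.default_rng(seed)
    reverberant = np.convolve(clean, rir)                    # R, full length
    noisy = add_noise_at_snr(                                # N
        clean, speech_shaped_noise(clean, len(clean), rng), snr_db)
    rev_noisy = add_noise_at_snr(                            # R + N
        reverberant, speech_shaped_noise(clean, len(reverberant), rng), snr_db)
    return reverberant, noisy, rev_noisy
```

The alternative construction discussed in the footnotes (adding noise before convolving) would instead pass `clean + noise` through `np.convolve`; the sketch follows the method described in this paragraph.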

Procedure

Prior to testing, each subject participated in a short practice session to become familiar with the listening task. The stimuli were presented unilaterally to all 11 Nucleus users through the auxiliary input jack of the SPEAR3 processor in a double-walled sound-proof booth (Acoustic Systems, Inc.). For the bilateral users, the ear with the higher sentence score in quiet was chosen for testing. During the practice session, the subjects adjusted the volume³ to a comfortable level, and this setting was kept fixed throughout the tests. Subjects were given a 15-minute break every 60 minutes during the test session to avoid listener fatigue.

Subjects were tested in a total of nine conditions: (1) two reverberation times (T60 = 0.6 and 0.8 s), (2) two SNR levels (SNR = 5 and 10 dB), (3) four combinations of reverberation time and SNR level (e.g. T60 = 0.6 s and RSNR = 5 dB), and (4) the anechoic (T60 ≈ 0.0 s) quiet condition. The unprocessed sentences in the anechoic quiet condition served as a control condition. Twenty IEEE sentences (two lists) were used per condition, and no list was repeated across conditions. To minimize order effects, the order of the test conditions was randomized across subjects. During testing, the participants were instructed to repeat as many words as they could identify. The responses of each individual were collected and scored off-line based on the number of words correctly identified; all words were scored. The percent correct score for each condition was calculated by dividing the number of words correctly identified by the total number of words in the sentence lists tested.
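The scoring rule pools words across all sentences of a condition rather than averaging per-sentence percentages; the two can differ because IEEE sentences vary in length (7–12 words). A small Python sketch with hypothetical word counts and a hypothetical function name:

```python
def percent_correct(responses):
    """Percent of words correct, pooled over all sentences in a condition.

    responses: iterable of (words_correct, words_in_sentence) pairs.
    """
    correct = total = 0
    for n_correct, n_words in responses:
        if not 0 <= n_correct <= n_words:
            raise ValueError("words correct must lie between 0 and sentence length")
        correct += n_correct
        total += n_words
    return 100.0 * correct / total

# Two hypothetical sentences: 5 of 7 words and 8 of 8 words correct
print(round(percent_correct([(5, 7), (8, 8)]), 2))  # 86.67
```

For the same hypothetical counts, averaging the per-sentence scores (71.4% and 100%) would instead give about 85.7%.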

Results and Discussion

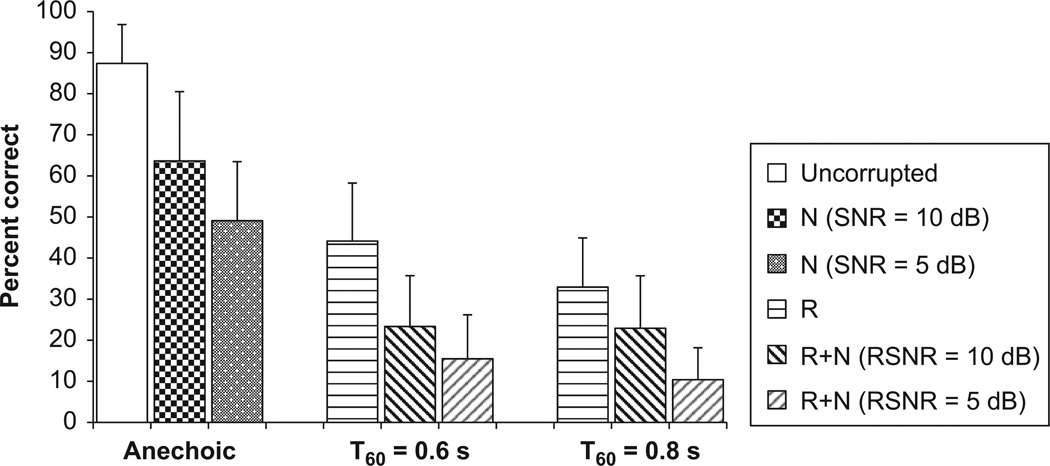

The mean intelligibility scores obtained by the CI listeners in the various conditions are displayed in Figure 1. For comparison, the average score obtained in the anechoic quiet condition (T60 ≈ 0.0 s) is also shown. A two-way repeated-measures ANOVA was run with SNR level and reverberation time as within-subject factors. Results indicated a significant effect of reverberation time (F[2,20] = 236.5, p < 0.0005), a significant effect of SNR level (F[2,20] = 93.3, p < 0.0005), and a significant interaction (F[4,40] = 7.6, p < 0.0005). The interaction arose because, in the combined R + N conditions, the SNR level affected speech recognition differently at the two reverberation times. Post-hoc tests (Scheffé) revealed no statistically significant difference (p = 0.347) between the scores obtained at 5 dB RSNR and 10 dB RSNR for T60 = 0.6 s. For T60 = 0.8 s, however, the scores obtained at 5 dB RSNR were significantly lower (p = 0.04) than those obtained at 10 dB RSNR.

Figure 1.

Average percent correct scores of CI users (n = 11) as a function of reverberation time and SNR/RSNR level. Error bars indicate standard deviations.
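To show where the degrees of freedom of this analysis come from, the sketch below computes a two-way repeated-measures ANOVA from sums of squares, testing each effect against its own effect-by-subject error term. With 11 subjects and three levels per factor (anechoic, 0.6 s, 0.8 s, and quiet, 10 dB, 5 dB, as implied by the nine test conditions), the degrees of freedom are (2, 20) for each main effect and (4, 40) for the interaction. This is a sketch, not the analysis software used in the study: it applies no sphericity correction, does not implement the Scheffé post-hoc tests, and the function name is hypothetical.

```python
import numpy as np

def rm_anova_2way(y):
    """Two-way repeated-measures ANOVA.

    y: array of shape (n_subjects, a_levels, b_levels), one score per cell.
    Returns {effect: (F, (df_effect, df_error))} for factors A, B and AxB,
    each tested against its own effect-by-subject error term.
    """
    y = np.asarray(y, dtype=float)
    n, a, b = y.shape
    gm = y.mean()
    m_s = y.mean(axis=(1, 2))   # subject means, shape (n,)
    m_a = y.mean(axis=(0, 2))   # factor-A means, shape (a,)
    m_b = y.mean(axis=(0, 1))   # factor-B means, shape (b,)
    m_ab = y.mean(axis=0)       # cell means, shape (a, b)
    m_sa = y.mean(axis=2)       # subject x A means, shape (n, a)
    m_sb = y.mean(axis=1)       # subject x B means, shape (n, b)

    ss_a = n * b * np.sum((m_a - gm) ** 2)
    ss_b = n * a * np.sum((m_b - gm) ** 2)
    ss_s = a * b * np.sum((m_s - gm) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + gm) ** 2)
    ss_as = b * np.sum((m_sa - m_s[:, None] - m_a[None, :] + gm) ** 2)
    ss_bs = a * np.sum((m_sb - m_s[:, None] - m_b[None, :] + gm) ** 2)
    ss_total = np.sum((y - gm) ** 2)
    # Remaining variation is the A x B x subject error term
    ss_abs = ss_total - (ss_a + ss_b + ss_s + ss_ab + ss_as + ss_bs)

    df_a, df_b, df_s = a - 1, b - 1, n - 1
    terms = {
        "A": (ss_a, df_a, ss_as, df_a * df_s),
        "B": (ss_b, df_b, ss_bs, df_b * df_s),
        "AxB": (ss_ab, df_a * df_b, ss_abs, df_a * df_b * df_s),
    }
    return {name: ((ss / df) / (ss_err / df_err), (df, df_err))
            for name, (ss, df, ss_err, df_err) in terms.items()}
```

p-values could then be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df_effect, df_error)`.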

Speech intelligibility decreased with increasing reverberation time and decreasing RSNR level (Figure 1). Mean scores dropped from 87.36% correct in the anechoic quiet condition to 44.16% and 32.94% correct in the conditions with T60 = 0.6 s and T60 = 0.8 s, respectively. Scores decreased further when noise was added. The largest decrease (nearly 80%) in intelligibility was observed in the R + N condition with T60 = 0.8 s and RSNR = 5 dB. Note that even in the most favorable R + N condition (RSNR = 10 dB, T60 = 0.6 s), the mean score did not exceed 50% correct. Hence the RSNR-50 (the RSNR level needed to obtain a 50% correct score) of the CI users tested in our study is higher than 10 dB for T60 = 0.6 s. According to a recent study by Neuman et al (2010), the corresponding RSNR-50 obtained by 6-year-old children with normal hearing is 6 dB in the T60 = 0.6 s condition; note that the same RIRs were used in both studies. Hence, adult CI users perform worse in reverberation + noise conditions than 6-year-old normal-hearing children. Given the known developmental influence of age on the recognition of reverberant speech (Neuman et al, 2010), children with CIs are expected to perform worse than the adult CI users reported here.
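The RSNR-50 is the level at which the psychometric function crosses 50% correct. One crude way to estimate it from scores at a few fixed levels is linear interpolation between the two levels that bracket 50%, sketched below with hypothetical data; studies often use adaptive tracking or fitted psychometric functions instead, and the function name is hypothetical. When no tested level brackets 50%, as for the CI users here at T60 = 0.6 s, the sketch returns `None`, which matches the statement that the RSNR-50 lies above the highest level tested.

```python
def level_at_criterion(levels_db, scores_pct, criterion=50.0):
    """Linearly interpolate the level at which the score reaches `criterion`.

    levels_db must be in ascending order. Returns None when no pair of
    adjacent tested levels brackets the criterion.
    """
    points = list(zip(levels_db, scores_pct))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 != y1 and (y0 - criterion) * (y1 - criterion) <= 0:
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    return None

# Hypothetical scores at 0, 5 and 10 dB
print(level_at_criterion([0.0, 5.0, 10.0], [20.0, 40.0, 60.0]))  # 7.5
# Hypothetical case where every score stays below 50%
print(level_at_criterion([5.0, 10.0], [30.0, 45.0]))  # None
```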

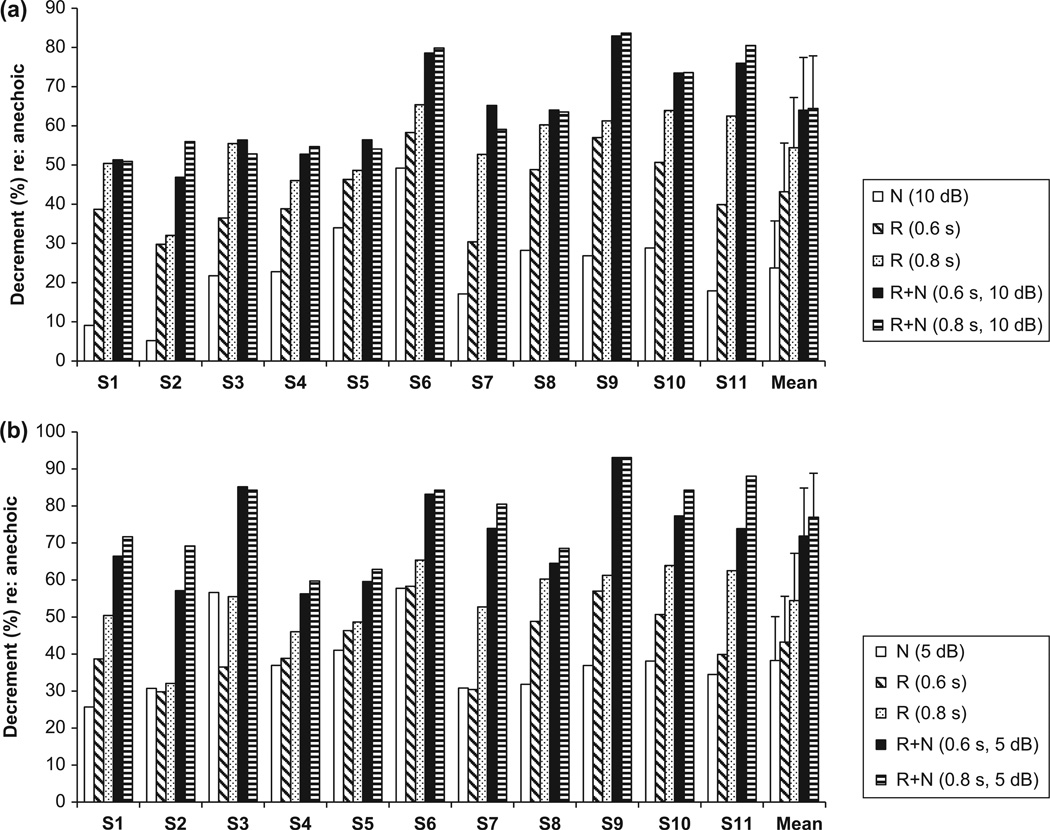

We further analysed the individual effects of noise and reverberation to assess which degraded intelligibility the most. This analysis was done by computing the decrement in performance (in percentage points) introduced by reverberation or noise relative to the corresponding performance obtained in the anechoic quiet condition. The effects of reverberation, noise, and combined reverberation + noise on word identification are shown in Figure 2 for all subjects tested. On average, performance degraded by 24 percentage points when noise at 10 dB SNR was added to the anechoic stimuli, whereas it degraded by 43 (T60 = 0.6 s) and 55 (T60 = 0.8 s) percentage points when reverberation was added (Figure 2, panel a). Hence, reverberation degraded sentence intelligibility to a larger degree than additive noise at 10 dB SNR, by a factor of roughly 1.8 to 2.3 depending on the T60 value. Similar statements can be made when noise at SNR = 5 dB is added to the anechoic and reverberant signals (Figure 2, panel b). Owing to floor effects, the negative effects of reverberation and noise are more evident in the RSNR = 10 dB condition (Figure 2, panel a) than in the RSNR = 5 dB condition.

Figure 2.

Effects of reverberation (R), noise (N), and reverberation + noise (R + N) on word identification (%) by individual CI users in (a) SNR = 10 dB and (b) SNR = 5 dB. Effects were computed as the difference between the score obtained in each condition (R, N, or R + N) and the score obtained in the anechoic condition. Error bars indicate standard deviations.
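Because the mean of the per-subject decrements equals the difference between the group means, the reverberation decrements quoted above can be reproduced from the mean scores reported earlier (87.36%, 44.16%, and 32.94%). The sketch below does this and expresses each as a multiple of the reported 24-point noise decrement at 10 dB SNR; the constant and function names are illustrative, and per-subject effects as in Figure 2 would require the individual scores, which are not tabulated here.

```python
ANECHOIC_QUIET = 87.36                 # mean % correct, anechoic quiet
REVERB_ONLY = {0.6: 44.16, 0.8: 32.94}  # mean % correct by T60 (s), no noise
NOISE_DECREMENT_10DB = 24.0            # reported mean decrement, SNR = 10 dB

def decrement(score, baseline=ANECHOIC_QUIET):
    """Drop in percentage points relative to the anechoic quiet baseline."""
    return baseline - score

for t60, score in REVERB_ONLY.items():
    d = decrement(score)
    ratio = d / NOISE_DECREMENT_10DB
    print(f"T60 = {t60} s: {d:.2f} points, {ratio:.1f} x the noise decrement")
```

Since the 24-point figure is itself a rounded mean, the printed ratios are approximate.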

The combined effects of reverberation and noise were even greater, and in the T60 = 0.6 s condition they were in fact larger than the sum of the individual effects of reverberation and noise, at least for some subjects (S1, S2, S7, S11). This outcome is consistent with that observed with normal-hearing listeners (Nabelek & Pickett, 1974), and it can be explained by the complementary fashion in which noise and reverberation degrade the speech stimuli when combined: regions in the spectrum that were not originally corrupted by reverberation are masked by noise, and vice versa. When the SNR decreased further to 5 dB, the individual effects of noise and reverberation were nearly the same (a decrement of about 40 percentage points) in the T60 = 0.6 s condition, but differed in the T60 = 0.8 s condition, where the stronger reverberation degraded speech intelligibility to a greater extent than additive noise (SNR = 5 dB).
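Whether a subject's combined effect is larger than, equal to, or smaller than the sum of the individual effects can be checked with a comparison like the one below. The tolerance used to call an outcome "additive" is a hypothetical choice (the text does not specify one), the function name is illustrative, and the example values are made up.

```python
def interaction_type(effect_r, effect_n, effect_rn, tol=2.0):
    """Compare a combined decrement with the sum of the individual ones.

    All effects are decrements in percentage points relative to the
    anechoic quiet score; tol is a hypothetical margin in points.
    """
    excess = effect_rn - (effect_r + effect_n)
    if excess > tol:
        return "super-additive"
    if excess < -tol:
        return "sub-additive"
    return "additive"

# Hypothetical subject: R alone -40, N alone -20, R + N -70 points
print(interaction_type(40.0, 20.0, 70.0))  # super-additive
```

Because a score cannot fall below 0% correct, the sum of the individual decrements can exceed what is measurable when the baseline is low, so floor effects limit this comparison, particularly in the 5 dB conditions.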

It is clear from the above analysis that reverberation produces a larger degradation in intelligibility than additive noise (Figure 2), at least at the two SNR levels tested. We cannot exclude the possibility, however, that a different outcome might have been observed had we used lower SNR levels or a different method for creating the reverberation + noise stimuli. It is known from STI theory (Houtgast & Steeneken, 1985) that the level of the masking noise can be chosen so that it produces reductions in envelope modulation equivalent to those produced by reverberation with a given T60. Helms et al (2012), for instance, created their stimuli so that the long-term spectrum and amplitude modulations of the noise were equated to those of the reverberant energy. The SNR used by Helms et al (2012) with normal-hearing listeners was low (SNR = 0 dB), and likely too challenging for our CI users. Had we decreased the SNR below 5 dB in the present study, we might have observed similar effects of noise and reverberation, but that was not the purpose of our study. Rather, the aim was to assess the effects of reverberation and noise using SNR levels (5 and 10 dB) and T60 values that reflect real-world situations commonly encountered by CI users.
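The STI argument above can be made concrete with the diffuse-field modulation transfer function of Houtgast and Steeneken (1985), m(F) = [1 + (2πF·T60/13.8)²]^(−1/2) · [1 + 10^(−SNR/10)]^(−1). The single-band sketch below averages the transmission index over the 14 standard modulation frequencies and then inverts the noise-only case to find the SNR that would reduce modulations as much as a given T60. It is a simplification assumed for illustration: it ignores the direct sound (the RIRs here have DRRs of −3.00 and −1.83 dB), octave-band weighting, and the frequency dependence of T60, and the function names are hypothetical.

```python
import math

# STI modulation frequencies (Hz), one-third-octave steps from 0.63 to 12.5
MOD_FREQS = [0.63, 0.8, 1.0, 1.25, 1.6, 2.0, 2.5, 3.15, 4.0, 5.0,
             6.3, 8.0, 10.0, 12.5]

def mtf(f_mod, t60=0.0, snr_db=math.inf):
    """Modulation transfer for exponential reverberation plus stationary
    noise (diffuse-field model without direct sound)."""
    m_rev = 1.0 / math.sqrt(1.0 + (2.0 * math.pi * f_mod * t60 / 13.8) ** 2)
    m_noise = 1.0 / (1.0 + 10.0 ** (-snr_db / 10.0))
    return m_rev * m_noise

def transmission_index(t60=0.0, snr_db=math.inf):
    """Single-band index: mean transmission index over MOD_FREQS."""
    total = 0.0
    for f in MOD_FREQS:
        m = mtf(f, t60, snr_db)
        snr_app = 15.0 if m >= 1.0 else 10.0 * math.log10(m / (1.0 - m))
        snr_app = max(-15.0, min(15.0, snr_app))  # clip apparent SNR to +/-15 dB
        total += (snr_app + 15.0) / 30.0
    return total / len(MOD_FREQS)

def noise_equivalent_snr(t60):
    """SNR (dB) of stationary noise giving the same index as reverberation.

    For noise alone the apparent SNR equals the physical SNR at every
    modulation frequency, so the index (SNR + 15) / 30 inverts directly.
    """
    return 30.0 * transmission_index(t60=t60) - 15.0

for t60 in (0.6, 0.8):
    print(f"T60 = {t60} s ~ noise at {noise_equivalent_snr(t60):.1f} dB SNR")
```

The inversion in `noise_equivalent_snr` is only meaningful inside the ±15 dB clipping range of the index.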

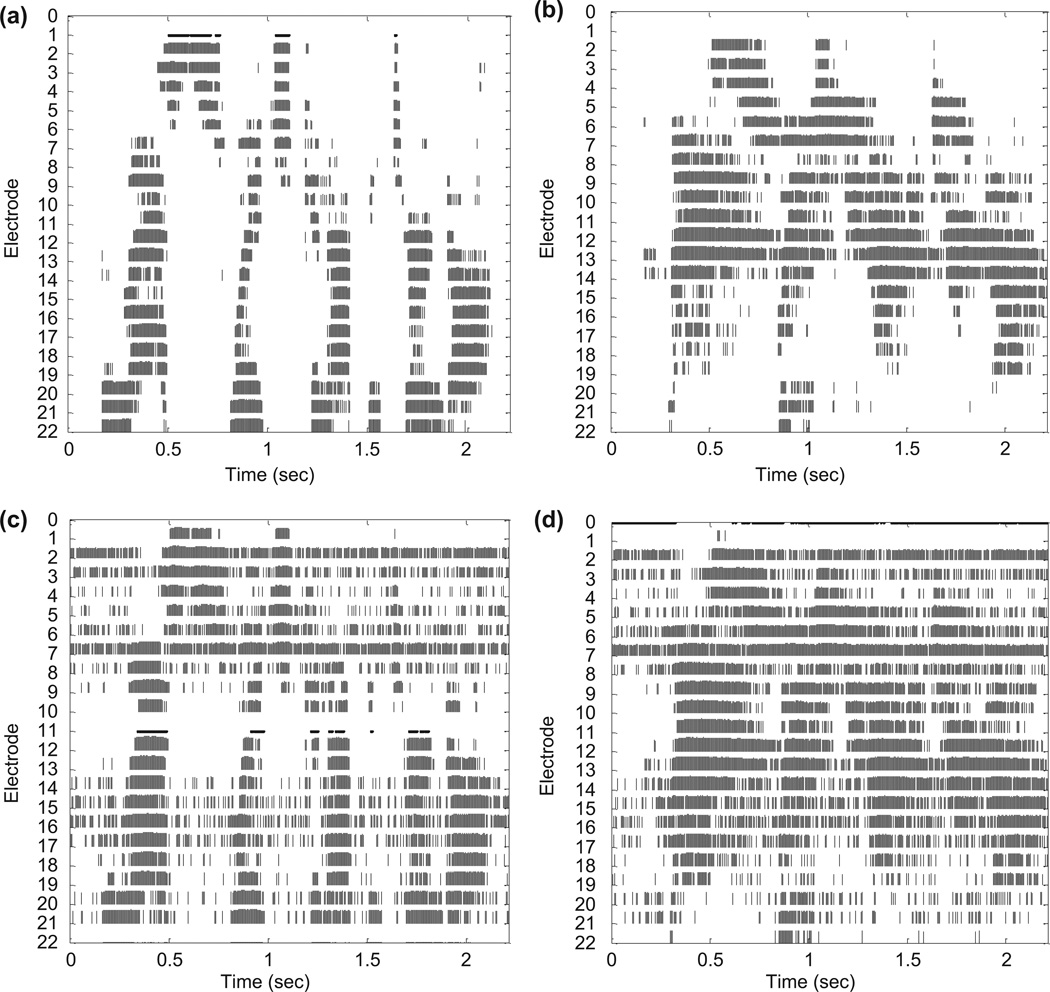

The degradation in intelligibility caused by reverberation in the present study can be explained by the way envelope information is selectively coded by the speech coding strategy. All of the CI users tested used the ACE strategy, which selects the 8–10 largest envelope amplitudes out of a total of 20–22 channels in each stimulation cycle (Vandali et al, 2000). Example electrodograms of an IEEE sentence processed with the ACE strategy are shown in Figure 3: panel a shows the sentence in quiet, panel b in reverberation (T60 = 0.8 s), panel c in noise (SNR = 10 dB), and panel d in reverberation + noise (T60 = 0.8 s and RSNR = 10 dB). The maxima selection strategy seems to work adequately in noise, at least at SNR = 10 dB, in that the vowel/consonant boundaries are maintained and the formant transitions are to some extent preserved; that is, many of the important phonetic cues are present. In reverberation, in contrast, the ACE strategy mistakenly selects the channels containing reverberant energy, since those channels have the highest energy. This is most evident during the unvoiced segments (e.g. stops) of the utterance, where overlap masking dominates (see for example the segments at t = 0.5–0.7 s in Figure 3, panel b). Overlap masking occurs when energy from preceding speech segments, carried by reflections arriving more than 50–80 ms after the direct sound, overlaps the succeeding segments. As the gaps between words are filled by these late reflections, the vowel/consonant boundaries are blurred, making lexical segmentation extremely challenging for CI listeners. In addition, self-masking effects caused by reflections arriving within 50–80 ms of the direct sound are also evident in Figure 3 (panel b). Self-masking generally flattens the F1 and F2 formant transitions and causes diphthongs and glides to be confused with monophthongs (Nabelek & Letowski, 1985; Nabelek et al, 1989). In the example shown in Figure 3 (panel b), channels corresponding to F1 are rarely selected (since channels with higher amplitudes in the mid frequencies are selected instead), while the F2 formant transitions are flattened (see activity in electrodes 11–12). These effects become more detrimental in the R + N condition, as shown in panel d of Figure 3 (T60 = 0.8 s and RSNR = 10 dB). In brief, noise and reverberation obscure the word identification cues in a complementary fashion, degrading intelligibility even further.

Figure 3.

Electrodograms of the IEEE sentence ‘The last switch cannot be turned off’ processed by the ACE strategy. (a) Unmodified (anechoic) sentence, (b) the same sentence corrupted by reverberation (T60 = 0.8 s), (c) the same sentence corrupted by noise (SNR = 10 dB), and (d) the same sentence corrupted by reverberation and noise (T60 = 0.8 s and RSNR = 10 dB). In each electrodogram, time is shown along the abscissa and the electrode number along the ordinate.

As illustrated above, since the ACE strategy selects in each cycle the channels with the highest amplitude, it mistakenly selects the channels containing reverberant energy during the unvoiced segments (e.g. stops) of the utterance, where the overlap-masking effect dominates. Hence, the channel selection criterion can negatively influence performance, particularly in reverberant environments (Kokkinakis et al, 2011).
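The channel-selection behavior described above can be illustrated with a simplified n-of-m selection applied to precomputed channel envelopes. This sketch assumes NumPy and a hypothetical function name; it omits the rest of the ACE chain (filterbank analysis, loudness growth mapping, and each user's threshold and comfort levels) and does not model the electrode-to-channel ordering used in Figure 3.

```python
import numpy as np

def select_maxima(envelopes, n_maxima):
    """n-of-m channel selection on precomputed envelopes.

    envelopes: array (n_channels, n_frames) of envelope amplitudes.
    Returns a boolean mask of the same shape that is True for the
    n_maxima largest channels in each frame (stimulation cycle).
    """
    env = np.asarray(envelopes, dtype=float)
    if not 1 <= n_maxima <= env.shape[0]:
        raise ValueError("n_maxima must be between 1 and the number of channels")
    top = np.argsort(env, axis=0)[-n_maxima:, :]  # row indices of the largest values
    mask = np.zeros(env.shape, dtype=bool)
    np.put_along_axis(mask, top, True, axis=0)
    return mask

# Hypothetical frame during a stop: channels 0 and 1 hold a reverberant tail
# from the preceding vowel, channel 3 holds the weaker consonant burst.
frame = np.array([[0.8], [0.5], [0.05], [0.3]])
print(select_maxima(frame, 2).ravel())  # tail channels 0 and 1 win; burst dropped
```

Because selection depends only on amplitude, any channel whose reverberant energy exceeds the energy of the current speech segment displaces that segment's cues, which is the failure mode attributed to ACE above.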

Conclusions

The present study assessed the individual effects of reverberation and masking noise, as well as their joint effects, on speech intelligibility by CI users. Results from this experiment indicated that reverberation degrades speech intelligibility to a greater extent than additive noise (speech-shaped noise), at least for the two SNR levels (5 and 10 dB) tested. This was attributed to the temporally smeared envelopes, overlap-masking effects, and flattened formant transitions introduced by reverberation. The combined effects of reverberation and masking noise were greater than those introduced by either reverberation or masking noise alone; for a subset of the subjects tested, the combined effects even exceeded the sum of the individual effects. Overall, the results from the present study highlight the importance of testing CI users in reverberant conditions, since testing in noise-alone conditions might underestimate the difficulties CI users experience in their daily lives, where reverberation and noise often coexist.

Acknowledgements

This work was supported by Grant R01 DC010494 awarded by the National Institute on Deafness and Other Communication Disorders (NIDCD) of the National Institutes of Health (NIH). The authors would like to thank the CI patients for their time. The authors would also like to thank Dr. Arlene C. Neuman of the NYU Langone Medical Center for providing the RIRs. Thanks also go to the two anonymous reviewers and Dr. Chris Brown for providing valuable feedback on this manuscript.

Abbreviations

- ACE: Advanced combination encoder
- CI: Cochlear implant
- DRR: Direct-to-reverberant ratio
- N: Noise
- R: Reverberation
- RIR: Room impulse response
- RSNR: Reverberant signal-to-noise ratio
- SNR: Signal-to-noise ratio
- SSN: Speech-shaped noise
- STI: Speech transmission index

Footnotes

1. The ACE strategy implemented in the SPEAR3 processor is very similar to that implemented in the Nucleus 24, Nucleus 5, and Freedom systems, and most coding parameters of the SPEAR3 ACE strategy matched those used in the commercial systems. In addition, all parameters used (e.g. stimulation rate, number of maxima, frequency allocation table) were matched to each CI user's clinical settings.

2. Although adding noise to the clean anechoic signal and then convolving the mixture with the room impulse response may reflect a more realistic scenario (e.g. Helms et al, 2012), the method used in the present study, adding noise to the reverberant speech, is common practice in the engineering literature (e.g. Habets, 2010). Nonetheless, the reverberation + noise stimuli created by the two methods differ by approximately 3 dB in SNR. For example, according to speech-transmission index (STI) values (Houtgast & Steeneken, 1985), the R + N (T60 = 0.6 s, RSNR = 5 dB) condition used in the present study is equivalent to adding noise to the anechoic signal at a higher SNR (SNR = 8 dB) and then convolving with the room impulse response (T60 = 0.6 s). This was confirmed by computing the mean STI values of 20 reverberation + noise stimuli produced by each of the two methods (the modulation transfer function in the STI quantifies the degree to which reverberation and/or noise reduce the modulations). This suggests that convolving both signal and noise with the room impulse response would create stimuli that are more difficult to recognize (by 3 dB in SNR) than the R + N stimuli used in the present study. Consequently, we would expect the reverberation effects to be larger, and the conclusion that reverberation degrades intelligibility more than additive noise to remain the same.

3. The volume control provides a means of adjusting the overall loudness. It acts on the output (envelopes) of the signal-processing stage, not on the input gain.

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

References

- American National Standards Institute. Acoustical Performance Criteria, Design Requirements and Guidelines for Schools. ANSI S12.60-2002. New York: ANSI; 2002.

- Assmann P, Summerfield Q. The perception of speech under adverse conditions. In: Greenberg S, Ainsworth W, Popper A, Fay R, editors. Speech Processing in the Auditory System. New York: Springer Verlag; 2004. pp. 231–308.

- Duquesnoy AJ, Plomp R. Effect of reverberation and noise on the intelligibility of sentences in cases of presbyacusis. J Acoust Soc Am. 1980;68:537–544. doi: 10.1121/1.384767.

- Friesen LM, Shannon RV, Baskent D, Wang X. Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. J Acoust Soc Am. 2001;110:1150–1163. doi: 10.1121/1.1381538.

- George ELJ, Festen JM, Houtgast T. The combined effects of reverberation and nonstationary noise on sentence intelligibility. J Acoust Soc Am. 2008;124:1269–1277. doi: 10.1121/1.2945153.

- George ELJ, Goverts ST, Festen JM, Houtgast T. Measuring the effects of reverberation and noise on sentence intelligibility for hearing-impaired listeners. J Speech Lang Hear Res. 2010;53:1429–1439. doi: 10.1044/1092-4388(2010/09-0197).

- Habets E. Speech dereverberation using statistical reverberation models. In: Naylor PA, Gaubitch ND, editors. Speech Dereverberation. United Kingdom: Springer; 2010. pp. 57–93.

- Helms-Tillery K, Brown CA, Bacon SP. Comparing the effects of reverberation and of noise on speech recognition in simulated electric-acoustic listening. J Acoust Soc Am. 2012 (in press). doi: 10.1121/1.3664101.

- Houtgast T, Steeneken HJM. A review of the MTF concept in room acoustics and its use for estimating speech intelligibility in auditoria. J Acoust Soc Am. 1985;77:1069–1077.

- IEEE. IEEE recommended practice for speech quality measurements. IEEE Trans Audio Electroacoust. 1969;AU-17:225–246.

- Kokkinakis K, Hazrati O, Loizou PC. A channel-selection criterion for suppressing reverberation in cochlear implants. J Acoust Soc Am. 2011;129:3221–3232. doi: 10.1121/1.3559683.

- Kokkinakis K, Loizou PC. The impact of reverberant self-masking and overlap-masking effects on speech intelligibility by cochlear implant listeners. J Acoust Soc Am. 2011;130:1099–1102. doi: 10.1121/1.3614539.

- Loizou PC. Speech Enhancement: Theory and Practice. Boca Raton: CRC; 2007.

- Nabelek AK, Pickett JM. Monaural and binaural speech perception through hearing aids under noise and reverberation with normal and hearing-impaired listeners. J Speech Hear Res. 1974;17:724–739. doi: 10.1044/jshr.1704.724.

- Nabelek AK, Mason D. Effect of noise and reverberation on binaural and monaural word identification by subjects with various audiograms. J Speech Hear Res. 1981;24:375–383. doi: 10.1044/jshr.2403.375.

- Nabelek AK, Letowski TR. Vowel confusions of hearing-impaired listeners under reverberant and non-reverberant conditions. J Speech Hear Disord. 1985;50:126–131. doi: 10.1044/jshd.5002.126.

- Nabelek AK, Letowski TR, Tucker FM. Reverberant overlap- and self-masking in consonant identification. J Acoust Soc Am. 1989;86:1259–1265. doi: 10.1121/1.398740.

- Nabelek AK. Communication in noisy and reverberant environments. In: Studebaker GA, Hochberg I, editors. Acoustical Factors Affecting Hearing Aid Performance. Needham Heights, USA: Allyn and Bacon; 1993. pp. 15–28.

- Neuman AC, Hochberg I. Children's perception of speech in reverberation. J Acoust Soc Am. 1983;73:2145–2149. doi: 10.1121/1.389538.

- Neuman AC, Wroblewski M, Hajicek J, Rubinstein A. Combined effects of noise and reverberation on speech recognition performance of normal-hearing children and adults. Ear Hear. 2010;31:336–344. doi: 10.1097/AUD.0b013e3181d3d514.

- Poissant SF, Whitmal NA, Freyman RL. Effects of reverberation and masking on speech intelligibility in cochlear implant simulations. J Acoust Soc Am. 2006;119:1606–1615. doi: 10.1121/1.2168428.

- Stickney GS, Zeng F-G, Litovsky R, Assmann P. Cochlear implant speech recognition with speech maskers. J Acoust Soc Am. 2004;116:1081–1091. doi: 10.1121/1.1772399.

- Vandali AE, Whitford LA, Plant KL, Clark GM. Speech perception as a function of electrical stimulation rate using the Nucleus 24 cochlear implant system. Ear Hear. 2000;21:608–624. doi: 10.1097/00003446-200012000-00008.

- Whitmal NA, Poissant SF. Effects of source-to-listener distance and masking on perception of cochlear implant processed speech in reverberant rooms. J Acoust Soc Am. 2009;126:2556–2569. doi: 10.1121/1.3216912.