My favorite conference presentations are those with titles along these lines: “My Worst Complications: Mistakes I’ve Made.” I am always impressed by the willingness of surgeons to share difficult experiences with large audiences. Many surgeons have healthy egos, and some are prone to posturing about their own surgical prowess and the problems they have experienced. When a prominent clinician shares his or her complications, the experience is often sobering, reassuring, and cathartic.

Perhaps a small part of me is relieved that I am not the only surgeon with complications. Perhaps part of me is relieved that I have not endured that particular complication. Most importantly, I learn from the experiences of others, and these presentations can directly affect how I practice. To those of you out there who have given such presentations, I offer my sincere gratitude.

On a smaller scale, many institutions require a Morbidity and Mortality (M&M) conference where complications from individual cases are examined and discussed. What went wrong? Was the complication preventable? What could have been done differently? Most importantly, what can we do in the future to minimize the likelihood of this complication occurring again? While discussing one’s own complication or error can be uncomfortable, the primary purpose of M&M conferences is to improve the quality of care, and most surgeons appreciate the value of such a discussion.

It is important to consider individual complications, but we all know that complications occur even when the best possible care has been rendered. Knowing the rate at which complications occur is often more important than examining any single complication. Every surgeon has complications, but how often should a surgeon have them?

Let us imagine that I have a 4% surgical site infection rate after spine surgery. How do I find out how well or how badly I am doing? Traditionally, the scientific literature has provided some reference, but those data must be interpreted cautiously because the published patient populations may not be comparable to mine. Because the reported rates range from 2% to 14%, I may conclude that my 4% is actually pretty good and that I do not need to change anything about how I practice.

But what if my infection rate is 4% and I suddenly learn that all my local colleagues have a 1% infection rate? How would I respond? I would try to find the reason for that disparity. Is this just chance, or am I doing something incorrectly or differently? Are my patients sicker? Am I performing bigger surgeries? Is the autoclave broken? If I am the outlier, I would want to take action to fix the problem. But how would I know?

One answer is a registry. A registry has a number of advantages, but it also poses several challenges. First, physicians have to buy into the process and either volunteer their data or allow them to be accessed. Second, infrastructure and resources are needed to collect and organize these data. Third, patient privacy must be protected in compliance with HIPAA. Fourth, the data need a medicolegal shield. After all, the data being submitted are sensitive complication data. Why would any surgeon allow his or her own complication data to be accessed if doing so could increase the likelihood of legal action?

In the state of Washington, the Surgical Care and Outcomes Assessment Program (SCOAP) is an example of such a registry. Initially a quality initiative for cardiac surgery and later for general surgery, SCOAP expanded to musculoskeletal surgery, specifically spine surgery. Spine SCOAP, which began in 2009, is a physician-driven registry that protects patient data, provides medicolegal protection, and is dedicated to improving the quality and safety of health care through data comparison and discourse. Participation in Spine SCOAP allows medical centers and surgeons to critically assess themselves relative to their local colleagues. Medical centers and surgeons receive anonymized quarterly reports on process-based and complication-based metrics.

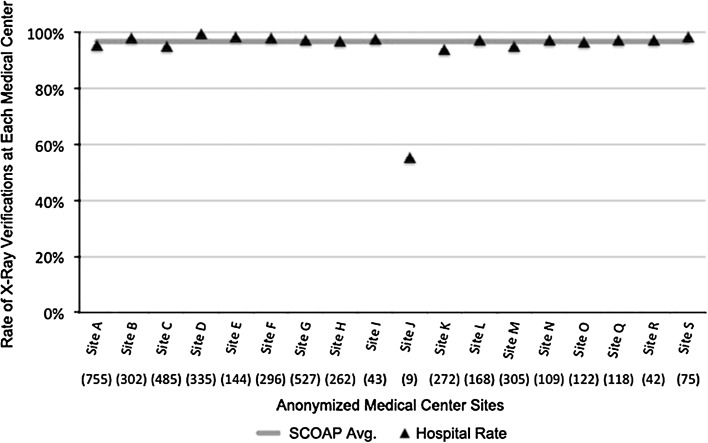

The slide in Fig. 1 is an example of a process-based metric: radiographic confirmation of the level during spine surgery. Each medical center is represented by a letter on the x-axis. Each medical center knows only which letter represents its own institution, not which letters represent the other institutions. All hospitals except Hospital J use radiographs to confirm the surgical level in nearly 100% of cases.

Fig. 1. Rate of radiographic verification of level per anonymized medical center site. Reprinted with permission from Spine SCOAP.

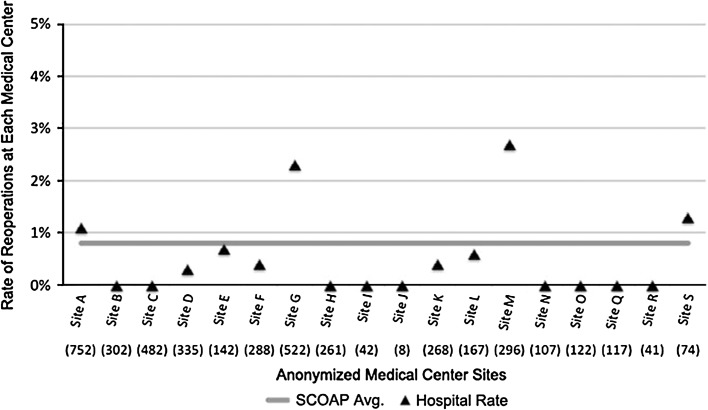

The next slide (Fig. 2) shows the reoperation rate after lumbar spine surgery for each hospital. Hospitals G and M have a two- to threefold higher reoperation rate than the other hospitals.

Fig. 2. Rate of reoperation after lumbar surgery per anonymized medical center site. Reprinted with permission from Spine SCOAP.

There could be numerous reasons why Hospitals G and M have higher reoperation rates. Because Spine SCOAP is still in the early stages of development, the data are not yet risk-adjusted. However, prior reports show that simply sharing these data with participating medical centers and physicians improves the quality of care over time. For example, data sharing in SCOAP has led to more frequent imaging for suspected appendicitis, which in turn has led to fewer negative appendectomies [1, 2]. Nobody wants to be an outlier in a registry.

Perhaps the most important aspect of SCOAP is that it is physician-driven. Physicians from participating hospitals determine which metrics are tracked. In addition to the usual metrics, SCOAP can track exploratory metrics and readily change how data are abstracted if the health care providers deem it appropriate.

While challenges to a registry remain, one cannot deny that sharing and comparing data with similar colleagues, and measuring one’s performance against a collective benchmark, are likely to improve the safety and quality of the health care rendered.

Footnotes

Note from the Editor-in-Chief: We are pleased to publish the next installment of “On Patient Safety” to the readers of Clinical Orthopaedics and Related Research®. The goal of this quarterly column is to explore a broad range of topics that pertain to patient safety. We welcome reader feedback on all of our columns and articles; please send your comments to eic@clinorthop.org.

The author certifies that he, or any members of his immediate family, has no commercial associations (eg, consultancies, stock ownership, equity interest, patent/licensing arrangements, etc) that might pose a conflict of interest in connection with the submitted article.

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research editors and board members are on file with the publication and can be viewed on request.

The opinions expressed are those of the writers and do not reflect the opinion or policy of CORR® or the Association of Bone and Joint Surgeons®.

References

- 1. Drake FT, Florence MG, Johnson MG, Jurkovich GJ, Kwon S, Schmidt Z, Thirlby RC, Flum DR; SCOAP Collaborative. Progress in the diagnosis of appendicitis: a report from Washington state’s surgical care and outcomes assessment program. Ann Surg. 2012;256:586–594. doi: 10.1097/SLA.0b013e31826a9602.

- 2. SCOAP Collaborative; Cuschieri J, Florence M, Flum DR, Jurkovich GJ, Lin P, Steele SR, Symons RG, Thirlby R. Negative appendectomy and imaging accuracy in the Washington state surgical care and outcomes assessment program. Ann Surg. 2008;248:557–563. doi: 10.1097/SLA.0b013e318187aeca.