Abstract

The calls for knowledge-based policy and policy-relevant research invoke a need to evaluate and manage environment and health assessments and models according to their societal outcomes. This review explores how well the existing approaches to assessment and model performance serve this need. The perspectives to assessment and model performance in the scientific literature can be called: (1) quality assurance/control, (2) uncertainty analysis, (3) technical assessment of models, (4) effectiveness and (5) other perspectives, according to what is primarily seen to constitute the goodness of assessments and models. The categorization is not strict and methods, tools and frameworks in different perspectives may overlap. However, altogether it seems that most approaches to assessment and model performance are relatively narrow in their scope. The focus in most approaches is on the outputs and making of assessments and models. Practical application of the outputs and the consequential outcomes are often left unaddressed. It appears that more comprehensive approaches that combine the essential characteristics of different perspectives are needed. This necessitates a better account of the mechanisms of collective knowledge creation and the relations between knowledge and practical action. Some new approaches to assessment, modeling and their evaluation and management span the chain from knowledge creation to societal outcomes, but the complexity of evaluating societal outcomes remains a challenge.

Keywords: assessment, model, evaluation, environment, health, performance, management, quality, uncertainty, effectiveness

1. Introduction

Environment and health assessments and models are to change the world [1], and not only the world of researchers, assessors, and modelers. Rather, they should have an effect on the decisions and actions that influence the environment we live in. The societal impacts of assessments and models should not be evaluated merely in terms of the scientific quality of their outputs and their so-called process effects [2] in the social context of researchers, assessors, and modelers. The performance of assessments and models also needs to be evaluated in terms of their outcomes in the broader societal context of everyday life, i.e., practical decisions and actions by policy makers, business managers, and individual citizens.

Especially at a time when knowledge-based policies and policy-relevance of research are arguably called for more than ever before, there is an increasing need to evaluate the success of environment and health assessments and models according to their societal effectiveness. In a recent thematic issue on the assessment and evaluation of environmental models and software [3], Matthews et al. [2] suggested that the success of environmental modeling and software projects should be evaluated in terms of their outcomes, i.e., changes to values, attitudes, and behavior outside the walls of the research organization, not just their outputs. However, until now, there has been limited appreciation within the environmental modeling and software community regarding the challenges of shifting the focus of evaluation from outputs to outcomes [2].

The situation in the domain of environment and health related assessments, such as integrated assessment [4], health impact assessment [5], risk assessment [6,7,8], chemical safety assessment [9], environmental impact assessment [10], and integrated environmental health impact assessment [11], appears to be similar. A recent study on the state of the art in environmental health assessment revealed that although most assessment approaches aim to influence society, this is rarely manifested in the principles and practices of evaluating assessment performance [12].

The emphasis in the scientific discourses on evaluating assessments and models has been on rather scientific and technical aspects of evaluation within the research domain, and perspectives that address the impacts of assessments and models in broader societal contexts have emerged only quite recently and are still relatively rare (cf. [13]). Such evaluations are qualitatively different [2], which indicates a need to reconsider the criteria and frameworks for evaluating assessment and model performance. Furthermore, evaluation of assessments and models is not only a matter of judging how good an assessment or a model is, but it also guides their making and the use of their outputs (cf. what you measure is what you get (WYMIWYG) in [14]).

In evaluation of societal effectiveness, both assessments and models are considered as instances of science-based support to decision making upon issues relevant to environment and health. They should thus not only help us to understand nature and health, but also help us to formulate goals and to propose and implement policies to achieve them [15]. Assessments always involve modeling of some kind, at least implicit conceptual models. Conversely, modeling is also often identified with assessment [16]. In addition, decision support systems, information support tools, integrated modeling frameworks and other software tools and information systems to assist in developing, running, and analyzing models are here perceived as integral parts of assessment and modeling (cf. [17]).

Assessments and models can be considered e.g., as diagnostic, prognostic, or summative according to the kinds of questions they address [11], ex-ante or ex-post according to their timing in relation to the activities being assessed [18], and regulatory or academic according to the contexts of their development and application [12]. They can also be developed, executed, and applied by many kinds of actors, e.g., consultants, federal agencies or academic researchers. However, assessments and models, as perceived here, should be clearly distinguished from purely curiosity-driven basic research, as well as ad hoc assessments, and assessments or models made only to justify predetermined decisions.

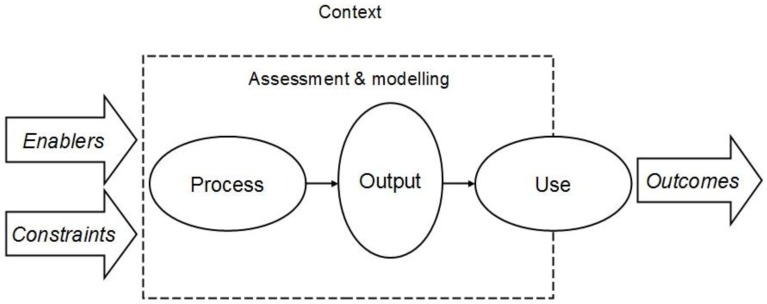

Altogether, assessments and models can be considered as fundamentally having two purposes: (i) describing reality, and (ii) serving the needs of practical decision-making. Accordingly, the structure of the interaction between assessments and models and their societal context can be described as in Figure 1.

Figure 1.

Assessment and modeling in interaction with their societal context (influenced e.g., by [2,19,20,21,22]).

The endeavors of assessment and modeling, primarily located within the domain of researchers, assessors, and modelers (inside the dashed box in Figure 1), are here broken down into:

- Process, the procedures and practices of assessment and modeling
- Output, the assessment and model results and products, and
- Use, the application of the assessment and model outputs

The surrounding context, containing policy making, business and everyday life at large, enables, and on the other hand also constrains, assessment and modeling, e.g., in the form of funding, facilities and education, but also as acceptance of or demand for assessments and models. The societal context is also the medium where the outcomes of assessments and models are realized. Use is located on the boundary between the assessment/modeling domain and the context, which indicates that the application of assessment and model outputs is the primary point of interaction between assessments or models and their societal context.

In the following, the approaches to environment and health assessment and model performance in the scientific literature are reviewed and categorized according to which aspects are primarily seen to constitute the goodness of assessments and models. The review primarily focuses on methods, tools, and frameworks that explicitly aim to identify the factors that determine the success of assessments and models and guide their evaluation. Therefore, methods, tools, and frameworks primarily aimed at supporting the execution of modeling, assessment or decision processes are not emphasized. This distinction between supporting evaluation and supporting execution is not always clear. In such cases, too, the review primarily focuses on the aspects related to identification and evaluation of the perceived factors of performance.

The identified perspectives to assessment and model performance are called: (i) quality assurance/control, (ii) uncertainty analysis, (iii) technical assessment of models, (iv) effectiveness, and (v) other perspectives. The question underlying this review is: to what extent, and in what ways, is the interaction with the societal context reflected in the approaches to environment and health assessment and model performance in contemporary scientific literature? The understanding of societal context is not limited to communities of experts dealing with assessments and models, but extends to include also policy making and practical actions influenced by or relevant to environment and health assessments and models. The purpose is thus not to discuss the details of different methods, tools and frameworks, but instead to map how the approaches and their perceived factors of performance relate to the aspects of assessments and models in interaction with their societal context, as illustrated in Figure 1. Recent contributions in the literature are emphasized, but some important or illustrative examples that were published before 2000 have been included as well. After the review, the approaches and perspectives are discussed in terms of their capability to serve the needs of outcome-oriented evaluation and management of assessments and models. In addition, a framework for developing more comprehensive outcome-oriented approaches is proposed.

2. Perspectives to Assessment and Model Performance

2.1. Quality Assurance/Control

One of the major themes in the assessment and model performance literature can be referred to as the quality assurance/control (QA/QC) perspective. The focus in this perspective is primarily on determining how the processes of assessment and modeling, and sometimes also decision making, are to be conducted in order to assure the quality of the output.

There are multiple alternative definitions for quality (see e.g., [23]). However, as regards assessment and models, the interpretation is mostly analogous with the perception in the ISO-9000 framework, i.e., as the organizational structures, responsibilities, procedures, processes, and resources to assure and improve quality [24]. In addition, the hierarchy of evidence in medical science, ranking types of evidence strictly according to the procedure by which they were obtained [25], is an example of the quality assurance/control perspective. However, as pointed out by Cartwright [26] with regard to randomized controlled trials, the procedure alone cannot guarantee delivery of useful information in practical contexts.

One common variation of this perspective is stepwise procedural guidance (Table 1). Such guidance provides relatively strict and detailed descriptions of the steps or phases of an assessment or modeling process that are to be executed in a more or less defined order. Faithful execution of the procedure is assumed to lead to good outputs. A similar, but often less rigorous, variation of the QA/QC perspective is checklist guidance, which emphasizes issues that need to be taken into account in the assessment or modeling process or in its evaluation. The checklists can be more or less detailed, and they usually do not strictly define the order or sequence of execution.

Table 1.

Examples of quality assurance/control perspective to assessment and model performance.

| Type | Description |
|---|---|
| Stepwise procedural guidance | Ten iterative steps in development and evaluation of environmental models [27] |
| | HarmoniQuA guidance for quality assurance in multidisciplinary model-based water management [28] |
| | Methodology for design and development of integrated models for policy support [29] |
| | Framework for integrated environmental health impact assessment [11] |
| | BRAFO tiered approach for benefit-risk assessment of foods [30] |
| | Generic framework for effective decision support through integrated modeling and scenario analysis [31] |
| | Formal framework for scenario development in support of environmental decision making [32] |
| Check list guidance | Seven attributes of good integrated assessment of climate change [33] |
| | List of end use independent process based considerations for integrated assessment [34] |
| | QA/QC performance measurement scheme for risk assessment in Canada [35] |
| | Check list for quality assistance in environmental modeling [36] |
| Evaluation of input quality | Pedigree analysis in model-based environmental assessment [37] |
| | Methodology for recording uncertainties about environmental data [38] |
| | Method for analyzing assumptions in model-based environmental assessments [39] |

In addition, the accounts that address evaluation of input quality can be considered as manifestations of the QA/QC perspective (Table 1). However, the primary focus in QA/QC is often on the outputs, and the input quality evaluations typically complement uncertainty analysis or technical assessments of models (see below). For example, model parameter uncertainty analysis can be considered as an example of evaluation of input quality, but in practice, it is most often considered as an aspect of either uncertainty analysis or technical assessment of models.

Characteristic of stepwise guidance is that it attempts to predetermine a procedure in order to guarantee good quality of outputs. As such, it takes a proactive approach to managing performance in anticipation of future needs. Checklist guidance and evaluation of input quality can also be applied proactively, but the examples found in the literature mostly represent a reactive approach of evaluating already completed assessments and models.

The quality assurance/control perspective relates predominantly to the domain of experts, yet most of the approaches within the perspective also intend to reflect the needs of the broader societal context, particularly policy making. The evaluation and management of performance, however, mainly considers the aspects of making assessments and models. Correspondingly, most of the approaches within this perspective simultaneously both identify factors of assessment and model performance and provide guidance for execution of assessment and modeling processes.

2.2. Uncertainty Analysis

Another major theme in the assessment and model performance literature is the uncertainty analysis perspective. The contributions within this perspective vary significantly, ranging from descriptions of single methods to overarching frameworks, but the common idea is characterization of certain properties of the assessment and model outputs. Fundamentally, the perspective builds on quantitative statistical methods based on probability calculus [40], but approaches to uncertainty that are not based on probability have also been presented [41,42]. However, the non-probabilistic approaches can be considered as mostly complementary, not competitive, to the probabilistic approaches [43]. Many manifestations of this perspective in the context of environment and health assessment and models also extend to consider qualitative properties of the outputs.

One variation of the uncertainty analysis perspective is identification of the kinds and sources of uncertainty in assessment and model outputs (Table 2). Some uncertainties are often considered as being primarily expressible in quantitative terms, and others in qualitative terms. The sources of uncertainty may include aspects of the assessment and modeling processes, and in some cases the intended or possible uses and use contexts of the outputs are also acknowledged.

Table 2.

Examples of uncertainty analysis perspective to assessment and model performance.

| Type | Description |
|---|---|
| Identification of kinds of uncertainty | Conceptual basis for uncertainty management in model-based decision support [44] |
| | Uncertainty in epidemiology and health risk and impact assessment [45] |
| | Uncertainty in integrated assessment modeling [46] |
| Guidance on dealing with uncertainties | Knowledge quality assessment for complex policy decisions [47] |
| | Operationalizing uncertainty in integrated water resource management [48] |
| | Framework for dealing with uncertainty in environmental modeling [49] |
| Methods for uncertainty analysis | Approaches for performing uncertainty analysis in large-scale energy/economic policy models [50] |
| | Modeling of risk and uncertainty underlying the cost and effectiveness of water quality measures [51] |
| | Addressing uncertainty in decision making supported by Life Cycle Assessment [52] |
| | Sensitivity analysis of model outputs with input constraints [53] |

Guidance on how to assess or deal with different kinds of uncertainties also exists (Table 2). Such frameworks usually combine qualitative and quantitative aspects of uncertainty deriving from various sources. Consequently, aspects of the assessment and modeling processes, e.g., input quality and user acceptance, are often also included in the frameworks. The primary focus still remains on the characteristics of the assessment and model.

Numerous more or less explicit methods, means and practices to analyze uncertainties of assessment and model outputs also exist (Table 2). In addition to standard statistical characterization, methods such as sensitivity analysis, importance analysis, value of information analysis, and Bayesian modeling are essential in the context of environment and health assessment and models. Such methods are predominantly quantitative.
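As a minimal illustration of what such quantitative methods involve, the sketch below propagates input uncertainty through a toy model by Monte Carlo sampling and then uses simple correlation coefficients as a crude sensitivity measure. The model, the input distributions, and all names (`toy_health_impact`, `monte_carlo`, the parameter values) are hypothetical assumptions made for this example and are not taken from any of the reviewed approaches.

```python
import math
import random
import statistics


def toy_health_impact(concentration, slope, population):
    """Hypothetical linear exposure-response model: attributable cases."""
    return concentration * slope * population


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long samples."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def monte_carlo(n=5000, seed=1):
    """Sample uncertain inputs, run the model, and summarize the output."""
    rng = random.Random(seed)
    # Assumed input distributions (illustrative values only).
    concentrations = [rng.lognormvariate(math.log(10.0), 0.3) for _ in range(n)]
    slopes = [rng.gauss(1e-5, 2e-6) for _ in range(n)]
    population = 100_000  # treated as known, i.e., not uncertain

    cases = [
        toy_health_impact(c, s, population)
        for c, s in zip(concentrations, slopes)
    ]

    # Standard statistical characterization: mean and a 95 % interval.
    ordered = sorted(cases)
    summary = {
        "mean": statistics.fmean(cases),
        "p2.5": ordered[int(0.025 * n)],
        "p97.5": ordered[int(0.975 * n)],
    }
    # Crude sensitivity measure: correlation of each input with the output.
    sensitivity = {
        "concentration": pearson(concentrations, cases),
        "slope": pearson(slopes, cases),
    }
    return summary, sensitivity


if __name__ == "__main__":
    summary, sensitivity = monte_carlo()
    print(summary)
    print(sensitivity)
```

Note that the sketch characterizes only the model output. In line with the observation above, it says nothing about how the result would be used or what outcomes it might lead to.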

In the uncertainty analysis perspective, it appears typical that the issue of uncertainty is approached from an external observer’s point of view. The evaluation of performance is thus mainly considered as a separate, reactive activity taking place in addition to the actual assessment or modeling process, not as its integral proactive part. The evaluation usually takes place within the expert domain and primarily serves the purpose of describing reality, and only indirectly serves the secondary needs of the broader societal context. The approaches to uncertainty primarily focus on the assessment or model results, and mostly do not provide direct guidance on how to conduct modeling, assessment or decision processes.

2.3. Technical Assessment of Models

This perspective, which focuses on the characteristics of models, is particularly present in the modeling literature. In addition, different kinds of software tools that are applied in developing, running, and analyzing models can be evaluated in a similar way to models.

The object of interest in the technical assessment of models is development and application of formal methods for testing and evaluating models within defined domains of application (Table 3). Generally, model evaluation and performance is considered to cover structural features of models, representativeness of model results in relation to a certain part of reality, as well as usefulness with regard to a designated task (cf. [54]). However, usefulness mainly refers to expert use of models, corresponding mostly to the so-called process effects, i.e., changes in the capacity of those engaged in the modeling and assessment endeavors, rather than outcomes (cf. [2]). Most commonly, technical assessment of models takes place in terms of validation and verification by comparing models and their results against each other or against measured data (e.g., [55,56]), although it has also been argued that models are fundamentally non-validatable [57].
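To make the idea of comparing model results against measured data concrete, the following sketch computes three goodness-of-fit statistics that are widely used for this purpose: root mean square error, mean bias, and the Nash-Sutcliffe efficiency. The choice of these particular metrics and the function names are assumptions made for illustration; the cited works may rely on other criteria.

```python
import math


def rmse(observed, simulated):
    """Root mean square error between measurements and model results."""
    n = len(observed)
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(observed, simulated)) / n)


def mean_bias(observed, simulated):
    """Average of (simulated - observed); positive means overprediction."""
    n = len(observed)
    return sum(s - o for o, s in zip(observed, simulated)) / n


def nash_sutcliffe(observed, simulated):
    """Nash-Sutcliffe efficiency.

    1 indicates a perfect fit; 0 means the model is no better than
    predicting the mean of the observations; negative values are worse.
    """
    mean_obs = sum(observed) / len(observed)
    residual = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    if variance == 0:
        raise ValueError("observations are constant; efficiency is undefined")
    return 1 - residual / variance


if __name__ == "__main__":
    # Made-up numbers purely for demonstration.
    measured = [1.0, 2.0, 3.0, 4.0]
    modeled = [1.2, 1.9, 3.3, 3.8]
    print(rmse(measured, modeled))
    print(mean_bias(measured, modeled))
    print(nash_sutcliffe(measured, modeled))
```

Statistics of this kind describe how well a model reproduces a certain part of reality. They do not by themselves address usefulness for a designated task.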

Table 3.

Examples of technical assessment of models perspective to assessment and model performance.

| Type | Description |
|---|---|
| Means for model and software evaluation | Success factors for integrated spatial decision support systems [58] |
| | Criteria for environmental model and software evaluation [59] |
| | Terminology and methodological framework for modeling and model evaluation [60] |
| | Evaluation methods of environmental modeling and software in a comprehensive conceptual framework [2] |
| | Top-down framework for watershed model evaluation and selection [61] |
| | Overview of atmospheric model evaluation tool (AMET) [62] |
| | Appropriateness framework for the Dutch Meuse decision support system [63] |
| | Empirical evaluation of decision support systems [64] |
| | Numerical and visual evaluation of hydrological and environmental models [65] |
| Evaluation of models | Evaluating an ecosystem model for wheat-maize cropping system in North China [66] |
| | Parameterization and evaluation of a Bayesian network for use in an ecological risk assessment [67] |
| | Evaluation of quantitative and qualitative models for water erosion assessment in Ethiopia [68] |
| | Evaluation of modeling techniques for forest site productivity prediction using SMAA [69] |
| Analysis of model uncertainty | Model uncertainty in the context of risk analysis [70] |
| | Scenario, model and parameter uncertainty in risk assessment [71] |
| | Framework for dealing with uncertainty due to model structure error [72] |

A variation of this perspective, more common for the discourses in assessment literature, is analysis of model uncertainty (Table 3). Here the aim typically is to characterize the properties of a model in order to be able to correctly interpret or evaluate its outputs. Model uncertainty is often considered as one aspect of a broader uncertainty analysis concept.

The technical assessment of models is predominantly reactive, as it requires an existing model or software system that can be tested and analyzed. The evaluation, however, is usually perceived as an integral part of the model development, not a separate entity, enabling application of technical assessment of models in different developmental stages within the modeling or assessment process. On the other hand, the common practice of self-evaluation of models may also lead to e.g., limited usability, credibility and acceptability due to lack of interaction with the broader societal context. However, some approaches extend to explicitly take account of the needs of e.g., policy making and engage the model users in the evaluation. Somewhat comparably to uncertainty analysis, the approaches in this perspective focus on the model as the result of a modeling process. However, the evaluations are often also intended and applied as guidance to the execution of modeling processes.

2.4. Effectiveness

Whereas the three former perspectives can be considered conventional, the emphasis on assessment and model effectiveness has become a major topic in the assessment and model performance literature only recently.

In the effectiveness perspective, the aim of assessments and models is perceived as promotion of changes in values, attitudes, and behavior outside the walls of the research community (cf. [2]) by maximizing the likelihood of an assessment process to achieve the desired results and the goals set for it [73]. In principle, here assessment and model performance is thus determined by the impacts delivered into the broader societal context. However, as it might take years to achieve set goals and it often is not immediately clear whether an observed change is a result of a specific decision or action, evaluation of outcomes is often perceived as very difficult, if not impossible [74], and possibly even leading to incorrect conclusions regarding effectiveness (cf. [75]). Consequently, the effectiveness criteria and frameworks (Table 4) often address aspects of process and output, as well as contextual enablers and constraints, rather than outcomes, as determinants of effectiveness. Some contributions also make a distinction between (immediate) impacts and (indirect) outcomes. As a result, although the aim is to address the outcomes, some approaches to effectiveness resemble the checklist guidance of quality assurance/control (see Table 1).

Table 4.

Examples of effectiveness perspective to assessment and model performance.

| Type | Description |
|---|---|
| Frameworks and criteria for effectiveness | Framework for the effectiveness of prospective human impact assessment [74] |
| | Process, impact and outcome indicators for evaluating health impact assessment [76] |
| | Criteria for appraisal of scientific inquiries with policy implications [77] |
| | Necessary conditions and facilitating factors for effectiveness in strategic environmental assessment [78] |
| | Components of policy effectiveness in participatory environmental assessment [79] |
| | Dimensions of openness for analyzing the potential for effectiveness in participatory policy support [80] |
| | Properties of good assessment for evaluating effectiveness of assessments [81] |
| Effectiveness evaluations | Several cases of evaluating effectiveness of health impact assessment in Europe [82] |
| | General effectiveness criteria for strategic environmental assessment and their adaptation for Italy [83] |
| | Environmental impact assessment evaluation model and its application in Taiwan [84] |
| | Effectiveness of the Finnish environmental impact assessment system [85] |
| | Example of outcome evaluation for environmental modeling and software [2] |
| Use of models, tools and outputs | User interaction during development of a decision support system [86] |
| | Review of factors influencing use and usefulness of information systems [87] |
| | Bottlenecks of widespread usage of planning support systems [88] |
| | Framework to assist decision makers in the use of ecosystem model predictions [89] |
| | Analysis of contribution of land-use modeling to societal problem solving [90] |
| | Use of decision and information support tools in desertification policy and management [91] |
| | Developing tools to support environmental management and policy [92] |
| | Role of computer modeling in participatory integrated assessments [93] |
| | Usage and perceived effectiveness of decision support systems in participatory planning [94] |
| | Credible uses of the distributed interactive simulation (DIS) system [95] |
| | Analysis of interaction between environmental health assessment and policy [12] |

The approaches emphasizing the use of models, tools and their outputs can also be considered as a manifestation of the effectiveness perspective (Table 4). They can generally be characterized as attempts to operationalize the interaction between assessments or models and the practical uses of their outputs. Most of the contributions are, however, relatively tool-centered, and most often little attention is given to the cognitive processes involved in the delivery and reception of information produced by assessments and models.

Correspondingly, the effectiveness perspective clearly intends to serve the needs of the broader societal context. However, due to practical challenges of measuring societal changes, the focus easily shifts towards guidance of assessment and modeling within the expert domain. Comparably to quality assurance/control, many approaches in this perspective intertwine the principles for determining performance with the guidance for executing assessment, modeling, and decision processes.

2.5. Other Perspectives

Many contributions to assessment and model performance in the relevant literature can be located quite comfortably within the four perspectives above. However, there are also some other approaches, addressing information quality, acceptance and credibility, communication, participation, and decision process facilitation (Table 5), that deserve mention.

Table 5.

Examples of other perspectives to assessment and model performance.

| Type | Description |
|---|---|
| Information quality | A conceptual framework of data quality [98] |
| | An asset valuation approach to value of information [99] |
| | Ten aspects that add value to information [100] |
| | Knowledge quality in knowledge management systems [101] |
| Acceptance and credibility | Obtaining model credibility through peer-reviewed publication process [58] |
| | Model credibility in the context of policy appraisal [102] |
| | Salience, credibility and legitimacy of assessments [103] |
| Communication | Uncertainty communication in environmental assessments [104] |
| | Check list for assessing and communicating uncertainties [105] |
| | Communication challenges posed by release of a pathogen in an urban setting [106] |
| | Clarity in knowledge communication [107] |
| Participation | Openness in participation, assessment and policy making [80] |
| | Purposes for participation in environmental impact assessment [108] |
| | OECD/NEA stakeholder involvement techniques [109] |
| | Participation guide for the Netherlands Environmental Assessment Agency [110] |
| Decision process facilitation | Rational analysis for a problematic world [111] |
| | Brief presentations of numerous decision support tools (website) [112] |
| | Decision analysis as tool to support analytical reasoning [113] |

Assessment and modeling are essentially processes of producing structured information. Therefore, the contributions regarding information quality, even outside the fields of assessment and modeling, are of relevance here. Like the uncertainty analysis perspective, information quality looks into certain properties of an information product. Similarly, the contributions addressing information quality vary considerably.

Credibility is often considered necessary for acceptance of assessment and modeling endeavors and their outputs. It can be obtained more or less formally, e.g., through peer review, extended peer review [96], or the reputation of the participants involved in assessment and modeling. Acceptance and credibility are often considered as aspects of broader frameworks along with other determinants of performance.

In addition, communication of results, e.g., in terms of communicating uncertainties and risk information, relates to assessment and model performance. However, the issues of communication are often not considered as integral parts of modeling and assessment endeavors. For example, risk assessment, risk management and risk communication are traditionally considered as separate, yet interrelated, entities, each having their own aims, practices, and practitioners (e.g., [97]).

Techniques for involving stakeholders and the public most often do not scrutinize the performance of assessments and models, but they at least implicitly determine certain factors of performance while guiding the conduct of participatory processes. Participation can relate to assessment and modeling or to decision making.

There are also several approaches to facilitating decision processes deriving from the domains of decision analysis, operations research and management science in general. Rather than being approaches to evaluating assessment and model performance, they are particularly intended for framing and structuring problems as well as guiding the decision processes in searching for solutions to them. In some approaches, explicit success criteria for decision processes are presented, and some approaches are also applicable as tools for social knowledge creation in stakeholder involvement.

The approaches mentioned as other perspectives vary in their relation to the broader societal context. Many of them, however, have a connection to issues regarding how assessments and models are perceived and interpreted outside the expert domain. Whereas information quality focuses on characteristics of an information product, the rest of the approaches in this category primarily aim to determine and guide procedures of assessment and modeling, communication, participation, or decision making in order to promote certain aspects of assessment, model or decision performance.

Although the approaches in this category relate to how assessments and models may influence the broader societal context, e.g., through societal decision making and social learning, most of them are not strongly linked to the perspectives to assessment and model performance described above. Instead, many approaches seem to be addressing a certain relevant, but not integral, entity related to assessments and models in interaction with the broader societal context.

3. Discussion

3.1. Overview of Approaches and Perspectives

It seems that none of the perspectives reviewed above, nor any individual approach alone, sufficiently serves the needs of outcome-oriented evaluation and management of assessment and model performance. In most approaches, the main emphasis is on the processes and outputs of assessment and modeling, and they correspondingly address primarily the needs arising within the expert domain. Use, outcomes and other aspects of the broader societal context are addressed to a lesser extent, although more frequently in recent literature. Although the approaches focusing on processes and outputs may be robust, they tend to miss important aspects of interaction between assessments and models and the broader societal context. On the other hand, the approaches focusing on the interaction may be vaguer and still provide only partial solutions to considering how and why assessments and models influence their societal contexts, particularly societal decision making. Altogether, the needs of the broader societal context are, although to varying degrees, recognized in most perspectives to assessment and model performance. However, explicit measurement and treatment of outcomes in the broader societal context is difficult.

Certain illuminating differences and similarities can be identified between the perspectives and approaches described above. These relate, e.g., to which parts of the chain from knowledge creation to outcomes (see Figure 1) are addressed, whether the approach considers products or processes of assessment, modeling and decision making, and whether evaluation is perceived as a separate entity or as intertwined with guidance of executing assessment, modeling or decision processes.

Uncertainty analysis and technical assessment of models are somewhat similar in the sense that they focus on the products of assessment and modeling. Uncertainty analysis is, however, more clearly a separate and often reactive process, while technical assessment of models is often linked also to the execution of modeling processes. Both perspectives are mostly confined to the expert domain of assessors and modelers.

Quality assurance/control and effectiveness perspectives are similar in the sense that they often merge evaluation and guidance of processes, although some effectiveness approaches actually aim to consider outcomes, the products of decision making. However, as this is difficult, in practice the main difference between these perspectives is that whereas quality assurance/control focuses on assessment and modeling processes with little reference to the use processes they relate to, effectiveness perspective particularly attempts to look into the use of assessments and models in decision making.

Of the other considered perspectives, information quality is in principle quite similar to some uncertainty analysis approaches, although the focus of evaluation is often other than assessment or model results. Acceptance and credibility, communication, participation, and decision process facilitation all primarily determine procedures related, but not integral, to assessments, models and their use. The linkages to the factors determining and realizing assessment and model performance are, however, often implicit or weak.

Most of the approaches that explicitly identify factors of environment and health assessment and model performance thus focus on processes and outputs of assessment and modeling. This can be considered to be in line with the fact that the issues of effectiveness and policy-relevance of assessments and models have become major topics only during the last decades (as can be seen, e.g., by searching scientific article databases). As assessors, modelers and researchers more generally have been lacking requirements and incentives for effectiveness and policy-relevance (cf. [114]), the practices, principles and methods of performance management and evaluation have correspondingly not developed to address these issues. Instead, the impacts of assessments and models have mostly been considered in terms of their process effects (cf. [2]) within the communities of assessors and modelers. However, virtually all assessment and modeling endeavors in the fields of environment and health are motivated, at least nominally, by the aim to influence societal decisions and actions. The need to evaluate and manage assessments and models according to their societal outcomes thus seems justified.

The other perspectives complement the conventional approaches to assessment and model performance by addressing different aspects of interaction of assessments and models with their broader societal context. However, mostly they do not link seamlessly to evaluation and management of assessment and model performance. Only in the relatively recently emerged effectiveness perspective is the use of assessments, models, and their results in decision processes recognized as a crucial part of assessment and model performance.

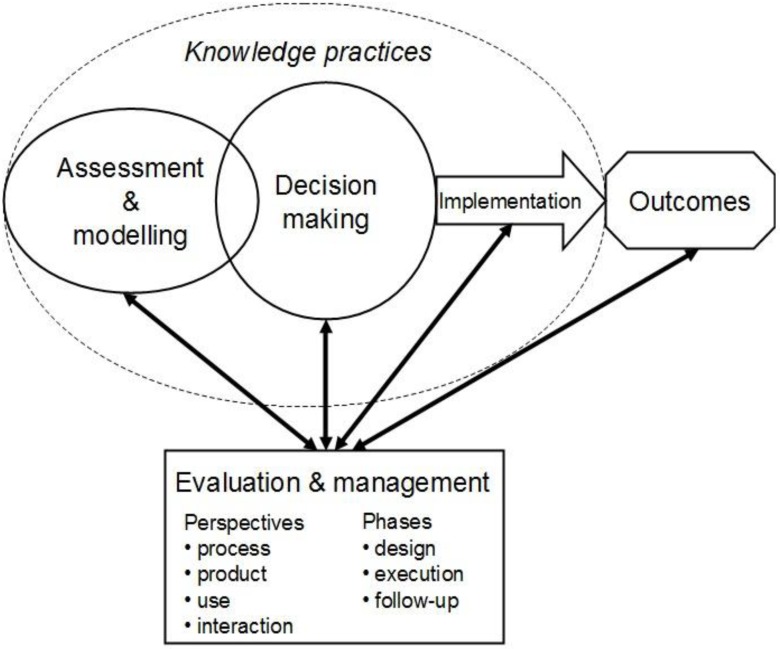

3.2. Towards New Approaches

It appears that more comprehensive approaches that provide a better coverage of the different aspects of assessments and models in their societal context are needed to support evaluation and management of assessment and model performance. In practice, this requires taking account of the making of assessments and models, their use in decision making, practical implementation of the knowledge they deliver, as well as the consequential societal changes they evoke, i.e., outcomes (Figure 2). Such approaches would combine the essential characteristics of the different perspectives reviewed above into one framework, methodology or combination of tools and provide both rigor and better linkage from evaluation and making of assessments and models to the outcomes.

Figure 2.

A framework for comprehensive evaluation and management of assessment and model performance. The chain from assessment and modeling to outcomes mostly consists of production, communication and application of knowledge in a societal context.

However, a mere compilation of features taken from different perspectives would probably not be sufficient. A more thorough account of the mechanisms of collective knowledge creation and the relations between knowledge and action in a societal context is needed in order to bridge assessments and models with their outcomes [115]. Unfortunately, these aspects are barely even recognized in most current approaches to assessment and model performance.

The need to span the whole chain from knowledge creation to outcomes and to bring the producers and users of knowledge into closer interaction for solving practical problems is recognized in some new approaches to assessment, modeling and their evaluation and management (e.g., [2,116,117]). The new approaches can be seen as attempts to address the challenges of societal decision making which the more conventional approaches have not succeeded in resolving [12]. Most significantly, the new approaches are different in the sense that they consider knowledge and knowledge-based action as their output, and software and information as means for their delivery. The pragmatic and socio-technical approaches also draw attention to the practices of the collectives involved in intentional creation and use of knowledge in networks consisting of human actors and non-human objects (e.g., tools, models, information) mediating their interaction (see Figure 2 and [118]). Perceiving assessments and models interacting with their contexts as socio-material entities also promotes a multi-perspective approach to their evaluation (cf. [116]).

However, the complexity of evaluating the outcomes remains a challenge. In the eyes of an evaluator, the relative simplicity of considering only processes, outputs or direct impacts in tightly bound settings of expert activities may still appear inviting in comparison to attempting to account for complex indirect impacts within the broader social context. Unfortunately, this would not be adequate for serving the purposes of assessment, models and their evaluation.

In order to overcome this challenge, the new comprehensive approaches should not only focus on either processes, outputs, uses or outcomes of assessments and models, but particularly consider and address the knowledge that is created, transferred and applied within the intertwined processes of modeling, assessment and decision making (see Figure 2). This means that the evaluation and management should be a continuous counterpart of designing and making assessments and models and applying their outputs in practice. After all, assessments and models can only be evaluated in relative terms, and their primary value is heuristic [119]. Correspondingly, the use of assessments and models, as advocated by the effectiveness perspective, appears to be the most critical link in the chain from assessment and modeling to outcomes. The approaches to communication, participation and particularly decision process facilitation hold a lot of potential for developing the practices of evaluating and managing collective knowledge creation in decision making by means of assessments and models.

4. Conclusions

Altogether, the findings of the review can be briefly summarized as follows:

Conventional evaluation of assessments and models focuses on processes and outputs;

Recently also societal outcomes of assessments and models have been emphasized;

Effectiveness of assessments and models can be considered as their likelihood of delivering intended outcomes;

An outcome-oriented turn is taking place in assessment, modeling and their evaluation;

New approaches merge design, making and evaluation of assessments and models;

Assessments and models are useful means for facilitating collective knowledge creation, e.g., in societal decision making.

Acknowledgments

This review builds on research done in several projects that have received funding from various sources: the EU projects INTARESE (Integrated Assessment of Health Risks of Environmental Stressors in Europe, 2005–2011, GOCE-CT-2005-018385), and BENERIS (Benefit-Risk Assessment of Food: An iterative Value-of-Information approach, 2006–2009, FOOD-CT-2006-022936), Safefoodera project BEPRARIBEAN (Best Practices for Risk-Benefit Analysis of Foods, project ID 08192), the Academy of Finland (Grants 218114 and 126532), the SYTYKE doctoral programme in environmental health at the University of Eastern Finland, and Ministry of Social Affairs and Health (Tekaisu-project). The authors would also like to thank all the anonymous reviewers for their fruitful comments during different phases of development of this article.

Conflict of Interest

The authors declare no conflict of interest.

References

- 1. Pohjola M. University of Eastern Finland; Kuopio, Finland: May, 2013. Assessments are to Change the World—Prerequisites for Effective Environmental Health Assessment.

- 2. McIntosh B.S., Alexandrov G., Matthews K., Mysiak J., van Ittersum M. Thematic issue on the assessment and evaluation of environmental models and software. Environ. Model. Software. 2011;26:245–336. doi: 10.1016/j.envsoft.2010.08.008.

- 3. Matthews K.B., Rivington M., Blackstock K.L., McCrum G., Buchan K., Miller D.G. Raising the bar?—The challenges of evaluating the outcomes of environmental modeling and software. Environ. Model. Software. 2011;26:247–257. doi: 10.1016/j.envsoft.2010.03.031.

- 4. Van Der Sluijs J.P. Integrated Assessment. Responding to Global Environmental Change. In: Munn T., editor. Encyclopedia of Global Environmental Change. John Wiley & Sons Ltd; Chichester, UK: 2002. pp. 250–253.

- 5. Health Impact Assessment (HIA), main concepts and suggested approach. Gothenburg consensus paper. World Health Organization; Brussels, Belgium: 1999.

- 6. NRC. Risk Assessment in the Federal Government: Managing the Process. The National Research Council National Academy Press; Washington, DC, USA: 1983.

- 7. NRC. Understanding Risk: Informing Decisions in a Democratic Society. The National Research Council National Academy Press; Washington, DC, USA: 1996.

- 8. NRC. Science and Decisions: Advancing Risk Assessment. The National Research Council National Academy Press; Washington, DC, USA: 2009.

- 9. ECHA. Guidance on Information Requirements and Chemical Safety Assessment. Guidance for the implementation of REACH. [(accessed on 18 June 2013)]. Available online: echa.europa.eu/guidance-documents/guidance-on-information-requirements-and-chemical-safety-assessment.

- 10. Wood C. Environmental Impact Assessment: A Comparative Review. Longman Scientific & Technical; New York, NY, USA: 1995.

- 11. Briggs D.J. A framework for integrated environmental health impact assessment of systemic risks. Environ. Health. 2008;7:61. doi: 10.1186/1476-069X-7-61.

- 12. Pohjola M.V., Leino O., Kollanus V., Tuomisto J.T., Gunnlaugsdóttir H., Holm F., Kalogeras N., Luteijn J.M., Magnusson S.H., Odekerken G., et al. State of the art in benefit–risk analysis: Environmental health. Food Chem. Toxicol. 2012;50:40–55. doi: 10.1016/j.fct.2011.06.004.

- 13. McIntosh B.S., Alexandrov G., Matthews K., Mysiak J., van Ittersum M. Preface: Thematic issue on the assessment and evaluation of environmental models and software. Environ. Model. Software. 2011;26:245–246. doi: 10.1016/j.envsoft.2010.08.008.

- 14. Hummel J., Huitt W. GaASCD Newsletter; The Reporter: 1994. [(accessed on 18 June 2013)]. What you measure is what you get. Available online: www.edpsycinteractive.org/papers/wymiwyg.html.

- 15. Norton B.G. Integration or Reduction: Two Approaches to Environmental Values. In: Norton B.G., editor. Searching for Sustainability, Interdisciplinary Essays in the Philosophy of Conservation Biology. Cambridge University Press; Cambridge, UK: 2002.

- 16. Jakeman A.J., Letcher R.A. Integrated assessment and modeling: Features, principles and examples for catchment management. Environ. Model. Software. 2003;18:491–501. doi: 10.1016/S1364-8152(03)00024-0.

- 17. Rizzoli A.E., Leavesley G., Ascough II J.C., Argent R.M., Athanasiadis I.N., Brilhante V., Claeys F.H.A., David O., Donatelli M., Gijsbergs P., et al. Integrated Modeling Frameworks for Environmental Assessment Decision Support. In: Jakeman A.J., Voinov A.A., Rizzoli A.E., Chen S.H., editors. Environmental Modeling, Software and Decision Support. Elsevier; Amsterdam, The Netherlands: 2008. pp. 101–118.

- 18. Pope J., Annandale D., Morrison-Saunders A. Conceptualising sustainability assessment. Environ. Impact Assess. Rev. 2004;24:595–616. doi: 10.1016/j.eiar.2004.03.001.

- 19. Blackstock K.L., Kelly G.J., Horsey B.L. Developing and applying a framework to evaluate participatory research for sustainability. Ecol. Econ. 2007;60:726–742. doi: 10.1016/j.ecolecon.2006.05.014.

- 20. Bina O. Context and systems: Thinking more broadly about effectiveness in strategic environmental assessments in China. Environ. Manage. 2008;42:717–733. doi: 10.1007/s00267-008-9123-5.

- 21. Leviton L.C. Evaluation use: Advances, challenges and applications. Am. J. Eval. 2003;24:525–535.

- 22. Patton M.Q. Utilization-Focused Evaluation. SAGE Publications Inc; Thousand Oaks, CA, USA: 2008.

- 23. Reeves C.A., Bednar D.A. Defining quality: Alternatives and implications. Acad. Manage. Rev. 1994;19:419–445.

- 24. Harteloh P.P.M. Quality systems in health care: A sociotechnical approach. Health Policy. 2002;64:391–398. doi: 10.1016/S0168-8510(02)00183-5.

- 25. Guyatt G.H., Sackett D.L., Sinclair J.C., Hayward R., Cook D.J., Cook R.J. Users’ guides to the medical literature. IX. A method for grading health care recommendations. JAMA. 1995;274:1800–1804. doi: 10.1001/jama.1995.03530220066035.

- 26. Cartwright N. Are RCTs the gold standard? BioSocieties. 2007;2:11–20.

- 27. Jakeman A.J., Letcher R.A., Norton J.P. Ten iterative steps in development and evaluation of environmental models. Environ. Model. Software. 2006;21:602–614. doi: 10.1016/j.envsoft.2006.01.004.

- 28. Refsgaard J.C., Henriksen H.J., Harrar W.G., Scholten H., Kassahun A. Quality assurance in model-based water management—Review of existing practice and outline of new approaches. Environ. Model. Software. 2005;20:1201–1215. doi: 10.1016/j.envsoft.2004.07.006.

- 29. van Delden H., Seppelt R., White R., Jakeman A.J. A methodology for the design and development of integrated models for policy support. Environ. Model. Software. 2011;26:266–279. doi: 10.1016/j.envsoft.2010.03.021.

- 30. Hoekstra J., Hart A., Boobis A., Claupein E., Cockburn A., Hunt A., Knudsen I., Richardson D., Schilter B., Schütte K., et al. BRAFO tiered approach for benefit-risk assessment of foods. Food Chem. Toxicol. 2012;50:S684–S698. doi: 10.1016/j.fct.2010.05.049.

- 31. Liu Y., Gupta H., Springer E., Wagener T. Linking science with environmental decision making: Experiences from an integrated modeling approach to supporting sustainable water resources management. Environ. Model. Software. 2008;23:846–858. doi: 10.1016/j.envsoft.2007.10.007.

- 32. Mahmoud M., Liu Y., Hartmann H., Stewart S., Wagener T., Semmens D., Stewart R., Gupta H., Dominguez D., Dominguez F., et al. A formal framework for scenario development in support of environmental decision-making. Environ. Model. Software. 2009;24:798–808. doi: 10.1016/j.envsoft.2008.11.010.

- 33. Granger Morgan M., Dowlatabadi H. Learning from integrated assessment of climate change. Climatic Change. 1996;34:337–368. doi: 10.1007/BF00139297.

- 34. Risbey J., Kandlikar M., Patwardhan A. Assessing integrated assessment. Climatic Change. 1996;34:369–395. doi: 10.1007/BF00139298.

- 35. Forristal P.M., Wilke D.L., McCarthy L.S. Improving the quality of risk assessments in Canada using a principle-based approach. Regul. Toxicol. Pharmacol. 2008;50:336–344. doi: 10.1016/j.yrtph.2008.01.013.

- 36. Risbey J., van der Sluijs J.P., Kloprogge P., Ravetz J., Funtowicz S., Corral Quintana S. Application of a checklist for quality assistance in environmental modeling to an energy model. Environ. Model. Assess. 2005;10:63–79. doi: 10.1007/s10666-004-4267-z.

- 37. van der Sluijs J.P., Craye M., Funtowicz S., Kloprogge P., Ravetz J., Risbey J. Combining quantitative and qualitative measures of uncertainty in model-based environmental assessment: The NUSAP system. Risk Anal. 2005;25:481–492. doi: 10.1111/j.1539-6924.2005.00604.x.

- 38. Brown J.D., Heuvelink G.B., Refsgaard J.C. An integrated methodology for recording uncertainties about environmental data. Water Sci. Technol. 2005;52:153–160.

- 39. Kloprogge P., van der Sluijs J.P., Petersen A.C. A method for the analysis of assumptions in model-based environmental assessments. Environ. Model. Software. 2011;26:289–301. doi: 10.1016/j.envsoft.2009.06.009.

- 40. O’Hagan A. Probabilistic uncertainty specification: Overview, elaboration techniques and their application to a mechanistic model of carbon flux. Environ. Model. Software. 2012;36:35–48. doi: 10.1016/j.envsoft.2011.03.003.

- 41. Colyvan M. Is probability the only coherent approach to uncertainty? Risk Anal. 2008;28:645–652. doi: 10.1111/j.1539-6924.2008.01058.x.

- 42. Chutia R., Mahanta S., Datta D. Non-probabilistic sensitivity and uncertainty analysis of atmospheric dispersion. AFMI. 2013;5:213–228.

- 43. Moens D., Vandepitte D. A survey of non-probabilistic uncertainty treatment in finite element analysis. Comput. Method. Appl. Mech. Eng. 2005;194:1527–1555. doi: 10.1016/j.cma.2004.03.019.

- 44. Walker W.E., Harremoës P., Rotmans J., van der Sluijs J.P., van Asselt M.B.A., Janssen P., Krayer von Krauss M.P. Defining uncertainty: A conceptual basis for uncertainty management in model-based decision support. Integrated Assess. 2003;4:5–17. doi: 10.1076/iaij.4.1.5.16466.

- 45. Briggs D.J., Sabel C.E., Lee K. Uncertainty in epidemiology and health risk and impact assessment. Environ. Geochem. Health. 2009;31:189–203. doi: 10.1007/s10653-008-9214-5.

- 46. van Asselt M.B.A., Rotmans J. Uncertainty in integrated assessment modeling: From positivism to pluralism. Climatic Change. 2002;54:75–105. doi: 10.1023/A:1015783803445.

- 47. van der Sluijs J.P., Petersen A.C., Janssen P.H.M., Risbey J.S., Ravetz J.R. Exploring the quality of evidence for complex and contested policy decisions. Environ. Res. Lett. 2008;3:024008. doi: 10.1088/1748-9326/3/2/024008.

- 48. Blind M.W., Refsgaard J.C. Operationalising uncertainty in data and models for integrated water resource management. Water Sci. Technol. 2007;56:1–12. doi: 10.2166/wst.2007.593.

- 49. Refsgaard J.C., van der Sluijs J.P., Lajer Højberg A., Vanrolleghem P.A. Uncertainty in the environmental modeling process—A framework and guidance. Environ. Model. Software. 2007;22:1543–1556. doi: 10.1016/j.envsoft.2007.02.004.

- 50. Kann A., Weyant J.P. Approaches for performing uncertainty analysis in large-scale energy/economic policy models. Environ. Model. Assess. 2000;5:29–46. doi: 10.1023/A:1019041023520.

- 51. Brouwer R., De Blois C. Integrated modeling of risk and uncertainty underlying the cost and effectiveness of water quality measures. Environ. Model. Software. 2008;23:922–937. doi: 10.1016/j.envsoft.2007.10.006.

- 52. Basson L., Petrie J.G. An integrated approach for the consideration of uncertainty in decision making supported by Life Cycle Assessment. Environ. Model. Software. 2007;22:167–176. doi: 10.1016/j.envsoft.2005.07.026.

- 53. Borgonovo E. Sensitivity analysis of model output with input constraints: A generalized rationale for local methods. Risk Anal. 2008;28:667–680. doi: 10.1111/j.1539-6924.2008.01052.x.

- 54. Beck B. Model Evaluation and Performance. In: El-Shaarawi A.H., Piegorsch W.W., editors. Encyclopedia of Environmetrics. Volume 3. John Wiley & Sons Ltd; Chichester, UK: 2002. pp. 1275–1279.

- 55. Sargent R. Verification and validation of simulation models. J. Simulat. 2013;7:12–24. doi: 10.1057/jos.2012.20.

- 56. Thacker B.H., Doebling S.W., Hemez F.M., Anderson M.C., Pepin J.E., Rodriguez E.A. Concepts of Model Verification and Validation. Los Alamos National Laboratory; Los Alamos, NM, USA: 2004.

- 57. Oreskes N. Evaluation (not validation) of quantitative models. Environ. Health Perspect. 1998;106:1453–1458. doi: 10.1289/ehp.98106s61453.

- 58. Van Delden H. Lessons Learnt in the Development, Implementation and Use of Integrated Spatial Decision Support Systems. In: Anderssen R.S., Braddock R.D., Newham L.T.H., editors. Proceedings of 18th World IMACS Congress and MODSIM09 International Congress on Modelling and Simulation; Cairns, Australia. 13–17 July 2009; Cairns, Australia: Modelling and Simulation Society of Australia and New Zealand and International Association for Mathematics and Computers in Simulation; 2009. pp. 2922–2928.

- 59. Alexandrov G.A., Ames D., Bellocchi G., Bruen M., Crout N., Erechtchoukova M., Hildebrandt A., Hoffman F., Jackisch C., Khaiter P., et al. Technical assessment and evaluation of environmental models and software: Letter to the editor. Environ. Model. Software. 2011;26:328–336. doi: 10.1016/j.envsoft.2010.08.004.

- 60. Refsgaard J.C., Henriksen H.J. Modeling guidelines—Terminology and guiding principles. Adv. Water Resour. 2004;27:71–82. doi: 10.1016/j.advwatres.2003.08.006.

- 61. Bai Y., Wagener T., Reed P. A top-down framework for watershed model evaluation and selection under uncertainty. Environ. Model. Software. 2009;24:901–916. doi: 10.1016/j.envsoft.2008.12.012.

- 62. Wyat Appel K., Gilliam R.C., Davis N., Howard S.C. Overview of the atmospheric model evaluation tool (AMET) v1.1 for evaluating meteorological and air quality models. Environ. Model. Software. 2011;26:434–443. doi: 10.1016/j.envsoft.2010.09.007.

- 63. Xu Y., Booij M.J., Mynett A.E. An appropriateness framework for the Dutch Meuse decision support system. Environ. Model. Software. 2007;22:1667–1678. doi: 10.1016/j.envsoft.2007.01.002.

- 64. Sojda R.S. Empirical evaluation of decision support systems: Needs, definitions, potential methods, and an example pertaining to waterfowl management. Environ. Model. Software. 2007;22:269–277. doi: 10.1016/j.envsoft.2005.07.023.

- 65. Wagener T., Kollat J. Numerical and visual evaluation of hydrological and environmental models using the Monte Carlo analysis toolbox. Environ. Model. Software. 2007;22:1021–1033. doi: 10.1016/j.envsoft.2006.06.017.

- 66. Mo X., Liu S., Lin Z. Evaluation of an ecosystem model for a wheat-maize double cropping system over the North China Plain. Environ. Model. Software. 2012;32:61–73. doi: 10.1016/j.envsoft.2011.07.002.

- 67. Pollino C.A., Woodberry O., Nicholson A., Korb K., Hart B.T. Parameterisation and evaluation of a Bayesian network for use in an ecological risk assessment. Environ. Model. Software. 2007;22:1140–1152. doi: 10.1016/j.envsoft.2006.03.006.

- 68. Sonneveld B.G.J.S., Keyzer M.A., Stroosnijder L. Evaluating quantitative and qualitative models: An application for nationwide water erosion assessment in Ethiopia. Environ. Model. Software. 2011;26:1161–1170. doi: 10.1016/j.envsoft.2011.05.002.

- 69. Aertsen W., Kint V., van Orshoven J., Muys B. Evaluation of modeling techniques for forest site productivity prediction in contrasting ecoregions using stochastic multicriteria acceptability analysis (SMAA). Environ. Model. Software. 2011;26:929–937. doi: 10.1016/j.envsoft.2011.01.003.

- 70. Nilsen T., Aven T. Models and model uncertainty in the context of risk analysis. Reliab. Eng. Syst. Safety. 2003;79:309–317. doi: 10.1016/S0951-8320(02)00239-9.

- 71. Moschandreas D.J., Karuchit S. Scenario-model-parameter: A new method of cumulative risk uncertainty analysis. Environ. Int. 2002;28:247–261. doi: 10.1016/S0160-4120(02)00025-9.

- 72. Refsgaard J.C., van der Sluijs J.P., Brown J., van der Keur P. A framework for dealing with uncertainty due to model structure error. Adv. Water Resour. 2006;29:1586–1597. doi: 10.1016/j.advwatres.2005.11.013.

- 73. Hokkanen P., Kojo M. How Environmental Impact Assessment Influences Decision-Making (in Finnish). Ympäristöministeriö; Helsinki, Finland: 2003.

- 74. Kauppinen T., Nelimarkka K., Perttilä K. The effectiveness of human impact assessment in the Finnish Healthy Cities Network. Public Health. 2006;120:1033–1041. doi: 10.1016/j.puhe.2006.05.028.

- 75. Ekboir J. Why impact analysis should not be used for research evaluation and what the alternatives are. Agr. Syst. 2003;78:166–184. doi: 10.1016/S0308-521X(03)00125-2.

- 76. Quigley R.J., Taylor L.C. Evaluating health impact assessment. Public Health. 2004;118:544–552. doi: 10.1016/j.puhe.2003.10.012.

- 77. Clark W.C., Majone G. The critical appraisal of scientific inquiries with policy implications. Sci. Technol. Hum. Val. 1985;10:6–19. doi: 10.1177/016224398501000302.

- 78. Hildén M., Furman E., Kaljonen M. Views on planning and expectations of SEA: The case of transport planning. Environ. Impact Assess. Rev. 2004;24:519–536. doi: 10.1016/j.eiar.2004.01.003.

- 79. Baker D.C., McLelland J.N. Evaluating the effectiveness of British Columbia’s environmental assessment process for first nations’ participation in mining development. Environ. Impact Assess. Rev. 2003;23:581–603. doi: 10.1016/S0195-9255(03)00093-3.

- 80. Pohjola M.V., Tuomisto J.T. Openness in participation, assessment, and policy making upon issues of environment and environmental health: A review of literature and recent project results. Environ. Health. 2011;10:58. doi: 10.1186/1476-069X-10-58.

- 81. Sandström V., Tuomisto J.T., Majaniemi S., Rintala T., Pohjola M.V. Evaluating Effectiveness of Open Assessments on Alternative Biofuel Sources. [(accessed on 18 June 2013)]. Available online: www.julkari.fi/bitstream/handle/10024/104443/URN_ISBN_978-952-245-883-4.pdf?sequence=1#page=179.

- 82. Wismar M., Blau J., Ernst K., Figueras J. The Effectiveness of Health Impact Assessment: Scope and Limitations of Supporting Decision-Making in Europe. WHO; Copenhagen, Denmark: 2007.

- 83. Fischer T.B., Gazzola P. SEA effectiveness criteria—Equally valid in all countries? The case of Italy. Environ. Impact Assess. Rev. 2006;26:396–409. doi: 10.1016/j.eiar.2005.11.006.

- 84. Leu W., Williams W.P., Bark A.W. Development of an environmental impact assessment evaluation model and its application: Taiwan case study. Environ. Impact Assess. Rev. 1996;16:115–133. doi: 10.1016/0195-9255(95)00107-7.

- 85. Pölönen I., Hokkanen P., Jalava K. The effectiveness of the Finnish EIA system—What works, what doesn’t, and what could be improved? Environ. Impact Assess. Rev. 2011;31:120–128. doi: 10.1016/j.eiar.2010.06.003.

- 86. Van Delden H., Phyn D., Fenton T., Huser B., Rutledge D., Wedderburn L. User interaction during the development of the Waikato Integrated Scenario Explorer. In: Swayne D.A., Yang W., Rizzoli A., Voinov A., Filatova T., editors. Proceedings of the iEMSs Fifth Biennial Meeting: “Modelling for Environment’s sake”; Ottawa, Canada. 5–8 July 2008; Ottawa, Canada: International Environmental Modelling and Software Society; 2008.

- 87. Diez E., McIntosh B.S. A review of the factors which influence the use and usefulness of information systems. Environ. Model. Software. 2009;24:588–602. doi: 10.1016/j.envsoft.2008.10.009.

- 88. Vonk G., Geertman S., Schot P. Bottlenecks blocking widespread usage of planning support systems. Environ. Plan. A. 2005;37:909–924. doi: 10.1068/a3712.

- 89. Larocque G.R., Bhatti J.S., Ascough J.C., II, Liu J., Luckai N., Mailly D., Archambault L., Gordon A.M. An analytical framework to assist decision makers in the use of forest ecosystem model predictions. Environ. Model. Software. 2011;26:280–288. doi: 10.1016/j.envsoft.2010.03.009.

- 90. Sterk B., van Ittersum M.K., Leeuwis C. How, when, and for what reasons does land use modeling contribute to societal problem solving? Environ. Model. Software. 2011;26:310–316. doi: 10.1016/j.envsoft.2010.06.004.

- 91. Diez E., McIntosh B.S. Organisational drivers for, constraints on and impacts of decision and information support tool use in desertification policy and management. Environ. Model. Software. 2011;26:317–327. doi: 10.1016/j.envsoft.2010.04.003.

- 92. McIntosh B.S., Giupponi C., Voinov A.A., Smith C., Matthews K.B., Monticino M., Kolkman M.J., Crossman N., van Ittersum M., Haase D., et al. Bridging the Gaps between Design and Use: Developing Tools to Support Environmental Management and Policy. In: Jakeman A.J., Voinov A.A., Rizzoli A.E., Chen S.H., editors. Environmental Modeling, Software and Decision Support. Elsevier; Amsterdam, The Netherlands: 2008. pp. 33–48.

- 93. Siebenhüner B., Barth V. The role of computer modeling in participatory integrated assessments. Environ. Impact Assess. Rev. 2005;25:367–389. doi: 10.1016/j.eiar.2004.10.002.

- 94. Inman D., Blind M., Ribarova I., Krause A., Roosenschoon O., Kassahun A., Scholten H., Arampatzis G., Abrami G., McIntosh B., Jeffrey P. Perceived effectiveness of environmental decision support systems in participatory planning: Evidence from small groups of end-users. Environ. Model. Software. 2011;26:302–309. doi: 10.1016/j.envsoft.2010.08.005.

- 95. Dewar J.A., Bankes S.C., Hodges J.S., Lucas T., Saunders-Newton D.K., Vye P. Credible Uses of the Distributed Interactive Simulation (DIS) System. RAND; Santa Monica, CA, USA: 1996.

- 96. Funtowicz S.O., Ravetz J.R. Uncertainty and Quality in Science for Policy. Kluwer Academic Publishers; Dordrecht, The Netherlands: 1990.

- 97. Food Safety Risk Analysis: A Guide for National Food Safety Authorities. World Health Organization, Food and Agriculture Organization of the United Nations; Rome, Italy: 2006.

- 98. Wang R.Y., Strong D.M. Beyond accuracy: What data quality means to data consumers. J. Manage. Inform. Syst. 1996;12:5–34.

- 99. Moody D., Walsh P. Measuring the Value of Information: An Asset Valuation Approach; Proceedings of the Seventh European Conference on Information Systems (ECIS’99); Copenhagen Business School, Frederiksberg, Denmark. 23–25 June 1999.

- 100. Skyrme D.J. Ten ways to add value to your business. Manag. Inform. 1994;1:20–25.

- 101. Tongchuay C., Praneetpolgrang P. Knowledge Quality and Quality Metrics in Knowledge Management Systems; Proceedings of the Fifth International Conference on ELearning for Knowledge-Based Society; Bangkok Metro, Thailand. 11–12 December 2008.

- 102. Aumann C.A. Constructing model credibility in the context of policy appraisal. Environ. Model. Software. 2011;26:258–265. doi: 10.1016/j.envsoft.2009.09.006.

- 103. Cash D.W., Clark W., Alcock F., Dickson N., Eckley N., Jäger J. Salience, Credibility, Legitimacy and Boundaries: Linking Research, Assessment and Decision Making. Harvard University; Boston, MA, USA: 2002.

- 104. Wardekker J.A., van der Sluijs J.P., Janssen P.H.M., Kloprogge P., Petersen A.C. Uncertainty communication in environmental assessments: Views from the Dutch science-policy interface. Environ. Sci. Policy. 2008;11:627–641. doi: 10.1016/j.envsci.2008.05.005.

- 105. Janssen P.H.M., Petersen A.C., van der Sluijs J.P., Risbey J.S., Ravetz J.R. A guidance for assessing and communicating uncertainties. Water Sci. Technol. 2005;52:125–131.

- 106. Covello V.T., Peters R.G., Wojtecki J.G., Hyde R.C. Risk communication, the West Nile virus epidemic, and bioterrorism: Responding to the communication challenges posed by the intentional or unintentional release of a pathogen in an urban setting. J. Urban Health: Bull. N. Y. Acad. Med. 2001;78:382–391. doi: 10.1093/jurban/78.2.382.

- 107. Bischof N., Eppler M.J. Caring for clarity in knowledge communication. J. Univers. Comput. Sci. 2011;17:1455–1473.

- 108. O’Faircheallaigh C. Public participation and environmental impact assessment: Purposes, implications, and lessons for public policy making. Environ. Impact Assess. Rev. 2010;30:19–27. doi: 10.1016/j.eiar.2009.05.001.

- 109. OECD. Stakeholder Involvement Techniques—Short Guide and Annotated Bibliography. [(accessed on 18 June 2013)]. Available online: www.oecd-nea.org/rwm/reports/2004/nea5418-stakeholder.pdf.

- 110. MNP. Stakeholder Participation Guide for the Netherlands Environmental Assessment Agency: Main Document, Practice Guide & Checklist. Netherlands Environmental Assessment Agency and Radboud University; Nijmegen, The Netherlands: 2008.

- 111. Rosenhead J., Mingers J. Rational Analysis for a Problematic World Revisited: Problem Structuring Methods for Complexity, Uncertainty and Conflict. Wiley; Chichester, UK: 2001.

- 112. University of Cambridge. Institute for Manufacturing. Decision Support Tools (Website) [(accessed on 18 June 2013)]. Available online: www.ifm.eng.cam.ac.uk/research/dstools/

- 113. Narayan S.M., Corcoran-Perry S., Drew D., Hoyman K., Lewis M. Decision analysis as a tool to support an analytical pattern-of-reasoning. Nurs. Health Sci. 2003;5:229–243. doi: 10.1046/j.1442-2018.2003.00157.x.

- 114. Harris G. Integrated assessment and modeling: An essential way of doing science. Environ. Model. Software. 2002;17:201–207. doi: 10.1016/S1364-8152(01)00058-5.

- 115. Pohjola M.V., Pohjola P., Paavola S., Bauters M., Tuomisto J.T. Pragmatic Knowledge Services. J. Univers. Comput. Sci. 2011;17:472–497.

- 116. Koivisto J., Pohjola P. Practices, modifications and generativity—REA: A practical tool for managing the innovation processes of practices. Syst. Signs Actions. 2012;5:100–116.

- 117. Tijhuis M.J., Pohjola M.V., Gunnlaugsdóttir H., Kalogeras N., Leino O., Luteijn J.M., Magnússon S.H., Odekerken G., Poto M., Tuomisto J.T., et al. Looking beyond Borders: Integrating best practices in benefit-risk analysis into the field of food and nutrition. Food Chem. Toxicol. 2012;50:77–93. doi: 10.1016/j.fct.2011.11.044.

- 118. Miles S.B. Towards Policy Relevant Environmental Modeling: Contextual Validity and Pragmatic Models. U.S. Department of the Interior, U.S. Geological Survey. [(accessed on 18 June 2013)]. Available online: geopubs.wr.usgs.gov/open-file/of00-401/of00-401.pdf.

- 119. Oreskes N., Shrader-Frechette K., Belitz K. Verification, validation, and confirmation of numerical models in the earth sciences. Science. 1994;263:641–646. doi: 10.1126/science.263.5147.641.