Abstract

Knowledge about regularities in the environment can be used to facilitate perception, memory, and language acquisition. Given this usefulness, we hypothesized that statistically structured sources of information receive attentional priority over noisier sources, independent of their intrinsic salience or goal relevance. We report three experiments that support this hypothesis. Experiment 1 shows that regularities bias spatial attention: Visual search was facilitated at a location containing temporal regularities, even though these regularities did not predict target location, timing, or identity. Experiments 2 and 3 show that regularities bias feature attention: Attentional capture doubled in magnitude when singletons appeared, respectively, in a color or dimension with temporal regularities among task-irrelevant stimuli. Prioritization of the locations and features of regularities is not easily accounted for in the conventional dichotomy between stimulus-driven and goal-directed attention. This prioritization may in turn promote further statistical learning, helping the mind to acquire knowledge about stable aspects of the environment.

Keywords: statistical learning, attentional capture, cognitive control, feature-based attention, spatial attention, visual search

The environment is highly structured, containing widespread regularities in terms of how objects co-occur over space and time. The mind extracts regularities via statistical learning (Fiser & Aslin, 2001; Perruchet & Pacton, 2006; Saffran, Aslin, & Newport, 1996; Turk-Browne, Scholl, Chun, & Johnson, 2009) and can use them to perceive and interact with the environment more efficiently. For example, exposure to regularities improves learning of object labels (Graf Estes, Evans, Alibali, & Saffran, 2007), facilitates object categorization (Turk-Browne, Scholl, Johnson, & Chun, 2010), and expands visual short-term memory capacity (Brady, Konkle, & Alvarez, 2009; Umemoto, Scolari, Vogel, & Awh, 2010). Given the prevalence and usefulness of regularities, we hypothesized that structured sources of information receive attentional priority in the context of other, noisier sources of information.

Attention is known to be controlled by two factors (Chun, Golomb, & Turk-Browne, 2011; Corbetta & Shulman, 2002; Jonides, 1981; Pashler, Johnston, & Ruthruff, 2001; Posner, 1980; Yantis, 2000). First, attention can be driven exogenously by salient external stimuli (Itti & Koch, 2001; Wolfe & Horowitz, 2004), such as unique features (Theeuwes, 1992), abrupt onsets (Yantis & Jonides, 1984), new and looming motion (Abrams & Christ, 2003; Franconeri & Simons, 2003), and novelty (Johnston, Hawley, Plew, Elliott, & DeWitt, 1990). Second, attention can be directed endogenously by internal goals or task rules (Hopfinger, Buonocore, & Mangun, 2000; Miller & Cohen, 2001), which enhances the processing of goal-relevant stimuli irrespective of salience (Bacon & Egeth, 1994; Folk, Remington, & Johnston, 1992; cf. Theeuwes, 2004).

We propose that attention is also biased by statistical regularities, in a way that is not cleanly accounted for by the stimulus-driven vs. goal-directed framework. Prioritization of regularities is not stimulus driven, in the sense that regularities reflect stable relationships learned over time. That is, the presence of regularities depends only on internal representations of prior experience and not on the salience of any given stimulus. Prioritization of regularities is also not goal directed, in the sense that statistical learning can occur incidentally during other tasks (Turk-Browne et al., 2010). Moreover, this learning can be expressed even when not required by the current task (Zhao, Ngo, McKendrick, & Turk-Browne, 2011).

This form of prioritization is reminiscent of cases in which learning of regularities helps guide attention to expected locations (Chun & Jiang, 1998; Summerfield, Lepsien, Gitelman, Mesulam, & Nobre, 2006) and features (Chalk, Seitz, & Seriès, 2010; Chun & Jiang, 1999). The critical difference between such contextual cuing and the hypothesized prioritization of regularities is that attention can be biased by regularities even when they do not provide information that is helpful for task performance (e.g., information about target location or identity). That is, we tested the more radical claim that regularities among task-irrelevant stimuli spontaneously establish implicit attentional biases that influence performance in other tasks.

In three experiments, we examined the attentional prioritization of locations and features containing regularities. Participants viewed multiple streams of objects while monitoring for occasional visual search arrays. The stream in one “structured” location (Experiment 1), color (Experiment 2), or feature dimension (Experiment 3) was generated from temporal regularities. The remaining “random” streams were generated by shuffling the order of objects. The structured and random streams (and regularities) were irrelevant to the primary visual search task, in terms of both when and where the target appeared and which response was needed. Nevertheless, we hypothesized that responses would be faster when targets happened to appear in the location of the structured stream than in the location of a random stream, and that singletons in the structured color or dimension would capture attention more strongly than singletons in a random color or dimension.

Experiment 1

The goal of this experiment was to test whether visual search is facilitated at a spatial location containing temporal regularities.

Participants

Twenty-five undergraduates (16 female, 9 male; mean age = 20.7 years) from Princeton University participated in the experiment for course credit. Participants reported normal or corrected-to-normal visual acuity and color vision and provided informed consent. The protocol was approved by the Princeton University Institutional Review Board (IRB).

Stimuli

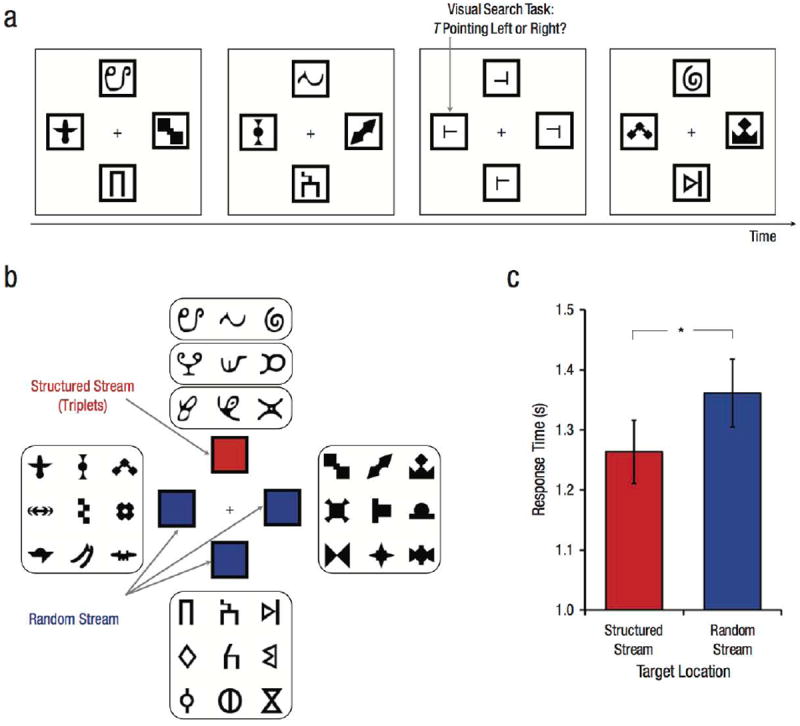

Displays consisted of black shapes subtending 3.3° on a white background in four locations: top, bottom, left, and right of a central fixation cross. The stimuli in each stream were selected from four separate sets of nine shapes (Fig. 1b). The location of each stream was centered 5.1° from fixation and marked by a black outline. The stream in one location (counterbalanced across participants) was structured, whereas the streams in the three remaining locations were random. In the structured stream, the nine shapes were grouped arbitrarily for each participant into three “triplets” that remained constant throughout the experiment (e.g., ABC, DEF, GHI). Each triplet was repeated 50 times, and the order of triplets was randomized, with the constraint that no triplet could repeat back to back (e.g., DEFGHIDEFABCGHI…). In each random stream, the nine shapes were sequenced individually (i.e., not in triplets). Each shape was repeated 50 times, and the order of shapes was randomized, with the constraint that no shape could repeat back to back. Shapes from each stream appeared one at a time and synchronously, such that four shapes appeared per trial—one in each of the top, bottom, left, and right positions on the screen (Fig. 1a). This procedure resulted in 450 trials (9 unique shapes per stream × 50 repetitions), with the frequency of each individual shape equated within and across streams.
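The sequencing constraints described above can be sketched in Python. This is a minimal illustration, not the authors' MATLAB code: the function names, the letter labels standing in for shapes, the seed, and the weighted-draw-with-restart strategy are all assumptions introduced here. The source specifies only the constraints (50 repetitions, no back-to-back repeats).

```python
import random


def sequence_without_repeats(items, repetitions, rng):
    """Order `repetitions` copies of each item with no immediate repeats.

    Items are drawn one at a time, weighted by how many copies remain, and
    never equal to the previous item. If a draw hits a dead end (only the
    previous item is left), the whole sequence is rebuilt from scratch.
    """
    total = len(items) * repetitions
    while True:
        remaining = {item: repetitions for item in items}
        order = []
        while len(order) < total:
            candidates = [
                item for item in items
                if remaining[item] > 0 and (not order or item != order[-1])
            ]
            if not candidates:
                break  # dead end: discard this attempt and start over
            weights = [remaining[item] for item in candidates]
            choice = rng.choices(candidates, weights=weights)[0]
            remaining[choice] -= 1
            order.append(choice)
        else:
            return order  # inner loop completed without a dead end


def structured_stream(triplets, repetitions, rng):
    """Sequence whole triplets (no triplet twice in a row), then flatten."""
    triplet_order = sequence_without_repeats(triplets, repetitions, rng)
    return [shape for triplet in triplet_order for shape in triplet]


rng = random.Random(1)                 # arbitrary seed, for reproducibility only
shapes = list("ABCDEFGHI")             # placeholder labels for the nine shapes
rng.shuffle(shapes)                    # arbitrary grouping for one participant
triplets = [tuple(shapes[i:i + 3]) for i in range(0, 9, 3)]

structured = structured_stream(triplets, 50, rng)               # 450 shapes
noise = sequence_without_repeats(list("JKLMNOPQR"), 50, rng)    # one random stream
```

Both streams contain 450 items with every shape appearing 50 times, so only the transitions between shapes distinguish the structured stream from a random one.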

Fig. 1.

Sample trial sequence, stimuli, and results from Experiment 1. Streams of shapes were shown at the top, bottom, left, and right of central fixation (a). These streams were interrupted occasionally by a visual search array that contained one T target (indicated here by an arrow) and three L distractors in the four stream locations. The task was to indicate whether the target pointed to the left or right. Each stream was generated from a different set of nine shapes (b). The stream in one location contained temporal regularities (the structured stream). The nine shapes in this stream were grouped into three triplets, whose members always appeared in the same order. The streams in the other locations did not contain temporal regularities (the random streams), with the nine shapes appearing in a shuffled order. The graph shows mean response time in the visual search task as a function of whether the target appeared at the location of the structured stream or at the location of a random stream (c). Error bars indicate ±1 SEM. The asterisk indicates a significant difference between conditions (*p < .05).

Shape streams were interrupted occasionally by a task-relevant visual search array. Each array contained one target and three distractors in the four stream locations, with the location of the target determined randomly. The target was a T shape rotated 90° (i.e., pointing left) or −90° (i.e., pointing right). The distractors were L shapes rotated 0° (i.e., pointing right) or 180° (i.e., pointing left), with the horizontal line offset 20% from either the bottom or top of the vertical line to increase discrimination difficulty. The pointing directions of the target and distractors were counterbalanced in each array. For example, if the target pointed right, then two distractors pointed left and one distractor pointed right. This ensured that any given distractor did not predict target orientation. The target appeared with equal frequency at each of the four locations. Thus, there was no benefit of attending to the structured stream for finding the target, nor was the target location informative about which stream was structured.

Apparatus

Participants were seated 70 cm from a CRT monitor (refresh rate = 100 Hz). Stimuli were presented using MATLAB (The MathWorks, Natick, MA) and the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997).

Procedure

The experiment consisted of an exposure phase that included the shape streams and visual search trials, followed by a test phase to assess whether participants learned regularities. During exposure, each shape trial contained one shape from each of the four streams for 750 ms followed by an interstimulus interval (ISI) of 750 ms. Participants were instructed to fixate while attending to the four locations in order to complete occasional visual search trials. There were 80 search trials interspersed randomly into the 450 shape trials, which resulted in an average of 1 search trial for every 5.6 shape trials (no search trials appeared back to back). Search arrays appeared for 750 ms, followed by an ISI that lasted a minimum of 750 ms. Participants indicated as quickly and accurately as possible whether the target pointed left or right (by pressing the “1” or “0” key, respectively). If they did not respond during the array presentation or ISI, the screen remained blank until response. Participants were first shown 5 random-shape trials and 1 search trial for practice and to clarify the instructions. Unlike many studies of statistical learning, in which the task during exposure is passive viewing, the search task in the present experiment provided a useful cover story for participants that helped obscure the purpose of the study.

To verify that participants were sensitive to regularities, we followed the exposure phase with a surprise two-alternative forced-choice test (Fiser & Aslin, 2002). In each trial, participants viewed two sequences of three shapes presented at fixation. Each shape appeared for 750 ms followed by a 750-ms ISI, and each sequence was separated by a 1,000-ms pause. Participants judged whether the first or second sequence seemed more familiar based on what they saw during exposure. One sequence was a triplet that had appeared repeatedly in the structured stream, and the other sequence was a “foil” composed of three shapes from the structured stream that never appeared sequentially. The foils were constructed by choosing one shape from each of the three triplets, which preserved their position in the original triplets (i.e., AEI, DHC, GBF). Each triplet was tested against each foil twice, which resulted in a total of 18 trials (3 triplets × 3 foils × 2 repetitions). This testing procedure equated the frequency of every triplet and foil at test, as well as the frequency of every individual shape. Thus, to discriminate triplets from foils, participants needed to know which particular shapes followed each other during exposure. The order of trials was randomized, and whether the triplet or foil appeared first was counterbalanced across trials.
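The foil construction and trial list can be sketched as follows. The function names and letter labels are hypothetical. The order counterbalancing shown here (each triplet-foil pairing presented once in each order) is an assumption about how "counterbalanced across trials" was implemented.

```python
import itertools
import random


def make_foils(triplets):
    """Build position-preserving foils: foil k takes position j from
    triplet (k + j) mod n, so ABC/DEF/GHI yields AEI, DHC, GBF."""
    n = len(triplets)
    return [
        tuple(triplets[(k + j) % n][j] for j in range(n))
        for k in range(n)
    ]


def make_test_trials(triplets, foils, rng):
    """Pair every triplet with every foil twice, once in each order."""
    trials = []
    for triplet, foil in itertools.product(triplets, foils):
        trials.append({"first": triplet, "second": foil, "correct": 1})
        trials.append({"first": foil, "second": triplet, "correct": 2})
    rng.shuffle(trials)  # randomize trial order
    return trials


triplets = [("A", "B", "C"), ("D", "E", "F"), ("G", "H", "I")]
foils = make_foils(triplets)
test_trials = make_test_trials(triplets, foils, random.Random(0))
print(["".join(f) for f in foils])  # ['AEI', 'DHC', 'GBF']
print(len(test_trials))             # 18
```

Because every triplet and every foil appears in exactly six test trials, and every shape occupies the same serial position in both, familiarity with individual shapes or positions cannot support above-chance performance.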

After the test, an extensive debriefing was conducted. Participants were told about the triplets in the exposure phase and asked to report whether they had noticed any such regularities. Demand characteristics might encourage an affirmative response, so participants who reported awareness were additionally asked to identify which location contained regularities. Participants were asked several other questions, including how confident they were in the familiarity test, whether they used a strategy of attending to a specific location (and, if so, which one), and what they thought the experiment was testing.

Results and discussion

Triplets were chosen over foils on 54.0% (SD = 7.9%) of test trials, which reveals that statistical learning occurred (chance = 50%), t(24) = 2.52, p = .02, d = 0.50. This finding demonstrates, for the first time, that temporal regularities at one spatial location can be learned when embedded among other random sources of input at different locations.
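As a rough check, the test statistic and effect size can be approximated from the reported summary values alone. The sketch below assumes a one-sample t test against chance and Cohen's d computed as the mean difference divided by the sample standard deviation. The function name is introduced here for illustration.

```python
from math import sqrt


def one_sample_summary(mean, sd, n, chance):
    """Return (t, d) for a one-sample test against `chance`,
    computed from summary statistics only."""
    t = (mean - chance) / (sd / sqrt(n))
    d = (mean - chance) / sd
    return t, d


t, d = one_sample_summary(mean=54.0, sd=7.9, n=25, chance=50.0)
print(f"t({25 - 1}) = {t:.2f}, d = {d:.2f}")
```

The result lands within about .01 of the reported t and d. A small discrepancy is expected because the inputs are the rounded mean and standard deviation rather than the raw data.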

The critical test of our hypothesis was whether regularities were preferentially attended during the exposure phase. If they were, target discrimination should have been facilitated at the structured location compared with the random locations. The task used in this experiment elicited high accuracy, so we focused on response time (RT) as a more sensitive measure (accuracy data are reported in the Supplemental Material available online). Only trials with correct responses were included in RT analyses, and RTs greater than 3 standard deviations from the mean in each condition (1.2% of all trials) were excluded.
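The exclusion rule can be illustrated with a short Python sketch. The data below are invented purely for demonstration, the field names are hypothetical, and the rule is interpreted here as excluding RTs more than 3 standard deviations from the condition mean in either direction, which is an assumption about the original analysis.

```python
from statistics import mean, stdev


def trim_rts(trials, n_sd=3.0):
    """Keep correct trials whose RT lies within `n_sd` standard deviations
    of the mean RT of their own condition."""
    by_condition = {}
    for trial in trials:
        if trial["correct"]:  # incorrect responses are dropped first
            by_condition.setdefault(trial["condition"], []).append(trial)

    kept = []
    for condition_trials in by_condition.values():
        rts = [t["rt"] for t in condition_trials]
        m, s = mean(rts), stdev(rts)
        kept.extend(t for t in condition_trials if abs(t["rt"] - m) <= n_sd * s)
    return kept


# Invented RTs (ms): one extreme value and one error trial to be removed.
base = [480, 490, 500, 510, 520] * 4
trials = (
    [{"condition": "structured", "rt": rt, "correct": True} for rt in base]
    + [{"condition": "structured", "rt": 3000, "correct": True}]
    + [{"condition": "random", "rt": rt, "correct": True} for rt in base]
    + [{"condition": "random", "rt": 505, "correct": False}]
)
kept = trim_rts(trials)
print(len(trials), len(kept))  # 42 40
```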

Target-discrimination RTs (Fig. 1c) were faster for targets in the structured location than in the random locations, t(24) = 2.31, p = .03, d = 0.46. In debriefing, 7 participants reported noticing regularities during the exposure phase, but only 1 of these participants correctly identified which location contained regularities (chance = 25%; binomial test: p = .87). Regardless, the RT difference was reliable without these 7 participants, t(17) = 2.80, p = .01, d = 0.66.
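The binomial probability for the awareness check can be reproduced with a few lines of Python. The sketch assumes the reported value is the upper-tail probability of at least the observed number of correct identifications under chance. The function name is introduced here.

```python
from math import comb


def binomial_upper_tail(k, n, p):
    """Probability of k or more successes in n independent trials,
    each succeeding with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# 1 of 7 aware participants named the correct location (chance = 1 in 4).
p_exp1 = binomial_upper_tail(1, 7, 0.25)
print(round(p_exp1, 2))  # 0.87
```

A probability this high means that a single correct identification among 7 participants is entirely consistent with guessing.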

Although shape streams were task irrelevant, search items appearing in the location of the structured stream were preferentially attended. These results suggest that attention is biased toward the locations of regularities.

Experiment 2

The goal of this experiment was to generalize the findings of Experiment 1 from spatial attention to feature attention by testing whether temporal regularities among shapes in a color enhance attentional capture by that color.

Participants

Twenty new Princeton University undergraduates (12 female, 8 male; mean age = 20.1 years) participated in the experiment for course credit. Participants reported normal or corrected-to-normal visual acuity and color vision and provided informed consent. The protocol was approved by the Princeton University IRB.

Stimuli

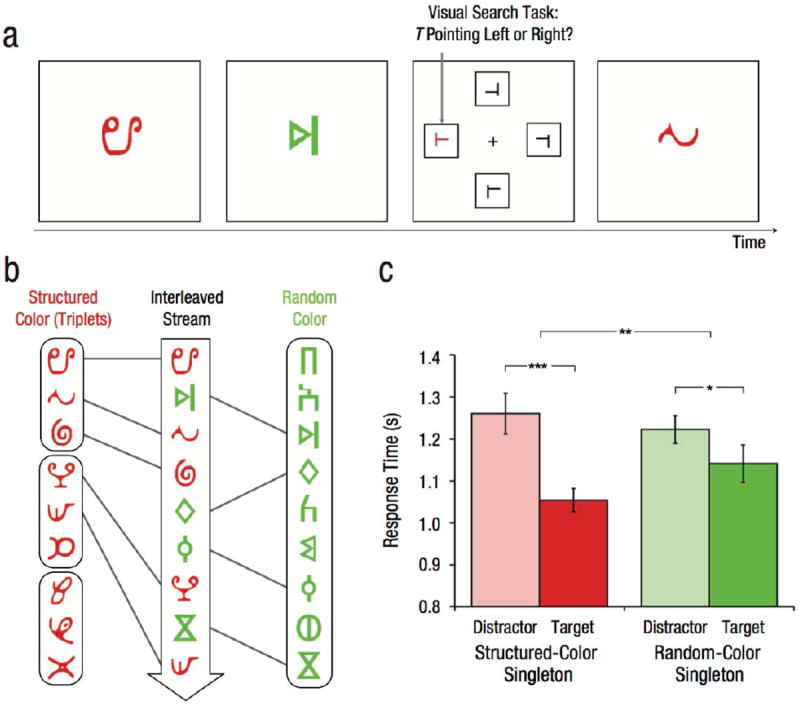

Two sets of shapes were randomly selected for each participant from the sets used in Experiment 1. The shapes in one set were colored red (red, green, blue values: 255, 0, 0, respectively) and the shapes in the other set were colored green (red, green, blue values: 0, 255, 0, respectively). One color was chosen for the structured set and the other color for the random set, and this color assignment was counterbalanced across participants (Fig. 2b). Shapes in the structured set were grouped into three triplets, and a temporal stream was generated by pseudorandomly sequencing 40 repetitions of each triplet. Shapes in the random set were sequenced individually by pseudorandomizing 40 repetitions of each shape. The two streams were then interleaved into one temporal stream of 720 shapes (9 shapes × 40 repetitions × 2 colors). This interleaving was done by randomly sampling shapes in each color stream in order and without replacement, with the constraint that the difference in the number of remaining shapes per stream could not exceed 6. Shapes in the interleaved stream appeared one at a time in the center of the display (Fig. 2a).
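One way to implement the interleaving constraint is sketched below. The function name and placeholder streams are hypothetical, and choosing uniformly among the permitted streams at each step is an assumption. The source states only that sampling was random, in order, without replacement, and limited to a difference of 6 remaining shapes.

```python
import random


def interleave(stream_a, stream_b, max_diff, rng):
    """Merge two streams, preserving the order within each, such that the
    numbers of remaining items in the two streams never differ by more
    than `max_diff`. Assumes the streams start within `max_diff` of each
    other in length and that max_diff >= 1."""
    ia = ib = 0
    merged = []
    while ia < len(stream_a) or ib < len(stream_b):
        rem_a = len(stream_a) - ia
        rem_b = len(stream_b) - ib
        options = []
        # A draw is allowed only if the gap after the draw stays in bounds.
        if rem_a > 0 and abs((rem_a - 1) - rem_b) <= max_diff:
            options.append("a")
        if rem_b > 0 and abs(rem_a - (rem_b - 1)) <= max_diff:
            options.append("b")
        if rng.choice(options) == "a":
            merged.append(stream_a[ia])
            ia += 1
        else:
            merged.append(stream_b[ib])
            ib += 1
    return merged


# Placeholder streams: indices stand in for the already sequenced shapes.
red = [("red", i) for i in range(360)]
green = [("green", i) for i in range(360)]
merged = interleave(red, green, max_diff=6, rng=random.Random(2))

# Track the largest imbalance reached anywhere in the merged stream.
gap, max_gap = 0, 0
for color, _ in merged:
    gap += 1 if color == "red" else -1
    max_gap = max(max_gap, abs(gap))
print(len(merged), max_gap <= 6)  # 720 True
```

Because order is preserved within each color, triplets in the structured color remain intact as a subsequence even though shapes of the other color can intervene between their members.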

Fig. 2.

Sample trial sequence, stimuli, and results from Experiment 2. A single stream of red and green shapes appeared at central fixation (a). This stream was interrupted occasionally by a visual search array that contained one T target (indicated here by an arrow) and three L distractors. One of the items in the array was a red or green color singleton, which could appear as either the target or one of the distractors. Regardless, the task was to indicate whether the target pointed to the left or right. The central stream was created by randomly interleaving shapes from separate red and green streams (b), each generated from a different set of nine shapes. One of these color streams contained temporal regularities (the structured color). The nine shapes in this stream were grouped into three triplets, whose members always appeared in the same order. The other stream did not contain temporal regularities (the random color), with the nine shapes appearing in a random order. The graph shows mean response time in the visual search task as a function of whether the singleton was the target or a distractor, and whether its color matched the structured or random stream (c). Error bars indicate ±1 SEM. Asterisks indicate significant differences between conditions (*p < .05, **p < .01, ***p < .001).

The shape stream was interrupted occasionally by a task-relevant visual search array. Each search array consisted of one rotated T target and three L distractors (with the horizontal line offset 20% from either the bottom or top of the vertical line) in the four locations from Experiment 1, with the location of the target determined randomly. To assess attention to color, we included one item in every search array (the singleton) that was colored differently than the remaining three black items. This singleton appeared with equal frequency in the color of the structured or random set. The target and each distractor served as the singleton with equal frequency, and the target appeared at each location with equal frequency. Thus, the shapes were not predictive of which item would be the singleton, what color the singleton would be, where the target would appear, or which response was correct.

Apparatus

The apparatus was the same as in Experiment 1.

Procedure

As in Experiment 1, participants completed exposure and test phases. During exposure, each shape trial contained one shape for 500 ms followed by an ISI of 500 ms. Participants were instructed to fixate and passively view shapes while waiting for visual search arrays. The 720 shape trials were randomly interrupted by 128 visual search trials (none back to back), which resulted in an average of 1 search trial for every 5.6 shape trials. The search arrays appeared for 750 ms, followed by a minimum ISI of 750 ms. Participants indicated whether the target pointed left or right (by pressing the “1” or “0” key, respectively)—regardless of its color and location (accuracy data are again reported in the Supplemental Material). Prior to exposure, participants were shown 5 random shape trials and 1 search trial for practice and to clarify the instructions. The test phase was identical to that in Experiment 1, with triplets and foils drawn from the structured color set. After the test phase, an extensive debriefing was conducted.

Results and discussion

Triplets were chosen over foils on 61.1% (SD = 14.9%) of test trials, which reveals that statistical learning occurred (chance = 50%), t(19) = 3.34, p = .003, d = 0.75. Thus, temporal regularities of shapes in one color can be learned despite interruptions by random stimuli in another color.

Target-discrimination RTs (Fig. 2c) were analyzed with a 2 (singleton type: target, distractor) × 2 (singleton color: structured, random) repeated measures analysis of variance (ANOVA). As a manipulation check that color singletons captured attention, we analyzed the speed of target responses and found that they were indeed faster for target singletons than for distractor singletons: main effect of singleton type, F(1, 19) = 17.33, p < .001, ηp² = .48. Planned comparisons revealed that this occurred for singletons in both the structured color, t(19) = 4.26, p < .001, d = 0.95, and the random color, t(19) = 2.60, p = .02, d = 0.58. There was no main effect of singleton color, F(1, 19) = 1.61, p = .22, ηp² = .08.

The critical test of our hypothesis was whether attentional capture (defined as the difference between target-discrimination RTs for distractor and for target singletons) was enhanced for the structured color. Indeed, there was an interaction between singleton type and color, with greater attentional capture for the structured than for the random color, F(1, 19) = 8.34, p = .009, ηp² = .30. In debriefing, 6 participants reported noticing regularities during exposure, but only 2 of these participants correctly identified which color contained regularities (chance = 50%; binomial test: p = .89). Regardless, the interaction remained reliable without these 6 participants, F(1, 13) = 4.97, p = .04, ηp² = .28.

Although shapes were task irrelevant, search items appearing in the color of shapes with temporal regularities were preferentially attended. These results suggest that attention is biased toward the features of regularities.

Experiment 3

The goal of this experiment was to extend the findings of Experiment 2 by testing whether temporal regularities in a feature dimension enhance capture by a singleton in that dimension.

Participants

Thirty new Princeton University undergraduates (20 female, 10 male; mean age = 19.9 years) participated in the experiment for course credit. Participants reported normal or corrected-to-normal visual acuity and color vision and provided informed consent. The protocol was approved by the Princeton University IRB.

Stimuli

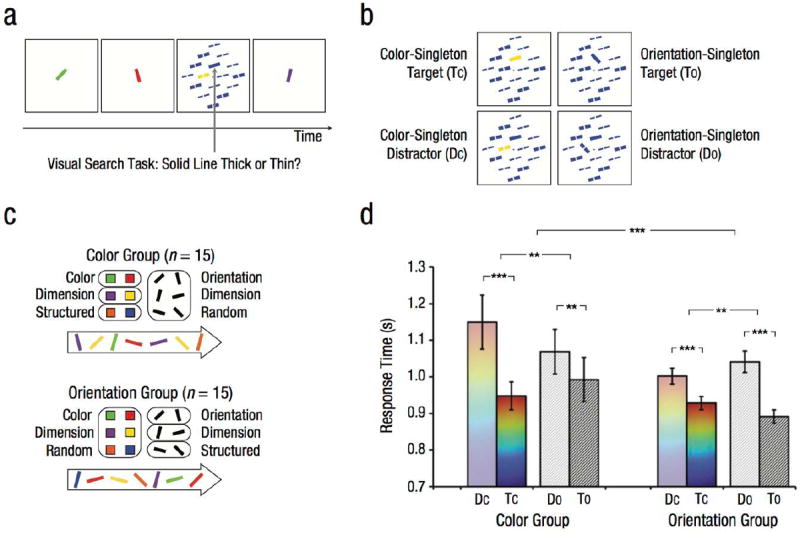

Displays consisted of lines varying in two feature dimensions: color (red, green, blue, yellow, orange, or purple) and orientation (15°, 45°, 75°, 105°, 135°, or 165°). We used six values per dimension to ensure that values were discriminable. One dimension was chosen as structured and the other as random (Fig. 3c). In the color group (n = 15), the six colors were randomly assigned to three “pairs” for each participant, and a temporal stream of colored lines was generated by pseudorandomly sequencing 60 repetitions of each pair. The orientations of these lines were sequenced individually by pseudorandomizing 60 repetitions of each orientation. These structured (color) and random (orientation) feature sequences were overlaid to create a single stream of 360 lines (6 lines × 60 repetitions). This process was reversed for the orientation group (n = 15), with orientations being structured (sequenced in pairs) and colors being random (sequenced individually). For both groups, the lines subtended 2.4° and appeared one at a time in the center of the display (Fig. 3a).
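The overlay of a structured and a random feature sequence can be sketched as follows for the color group. The function name is hypothetical, and plain shuffles are used because the exact pseudorandomization constraints for this experiment are not spelled out in the text. Any additional constraints (such as no back-to-back repeats) are therefore omitted.

```python
import random


def overlay_stream(structured_values, random_values, repetitions, rng):
    """Return (pairs, stream). Each stream element is one line, given as
    (structured feature value, random feature value)."""
    values = list(structured_values)
    rng.shuffle(values)  # random assignment of values to pairs
    pairs = [tuple(values[i:i + 2]) for i in range(0, len(values), 2)]

    # Structured dimension: shuffle whole pairs, then flatten.
    pair_order = pairs * repetitions
    rng.shuffle(pair_order)
    structured_seq = [value for pair in pair_order for value in pair]

    # Random dimension: shuffle individual values.
    random_seq = list(random_values) * repetitions
    rng.shuffle(random_seq)

    return pairs, list(zip(structured_seq, random_seq))


rng = random.Random(3)  # arbitrary seed, for reproducibility only
colors = ["red", "green", "blue", "yellow", "orange", "purple"]
orientations = [15, 45, 75, 105, 135, 165]

# Color group: colors are paired, orientations are shuffled individually.
pairs, lines = overlay_stream(colors, orientations, 60, rng)
print(len(lines))  # 360
```

Swapping the first two arguments yields the orientation group's stream, so the two groups differ only in which dimension carries the pair structure.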

Fig. 3.

Sample trial sequence, conditions, stimuli, and results from Experiment 3. A single stream of oriented colored lines appeared at central fixation (a). This stream was interrupted occasionally by a visual search array that contained one solid-line target (indicated here by an arrow) among 15 broken-line distractors. The task was to indicate whether the target was a thick or thin line. One of the items in the array was a singleton (b), which differed in either color or orientation from all other items and appeared as either the target or one of the distractors. Participants were assigned to two groups (c). In the color group, the colors of the lines contained temporal regularities, but the orientations did not. The six colors were combined into three pairs, whose members always appeared in the same order; in contrast, the six orientations appeared in a random order. In the orientation group, the orientations of the lines contained temporal regularities, but the colors did not. The six orientations were combined into three pairs, whose members always appeared in the same order; in contrast, the six colors appeared in a random order. The graph shows mean response time in the visual search task as a function of group and type of search array (d). Error bars indicate ±1 SEM. Asterisks indicate significant differences between conditions and groups (*p < .05, **p < .01, ***p < .001).

The line stream was interrupted occasionally by a task-relevant visual search array. Each search array contained either a color or an orientation singleton. The four search locations from previous experiments were surrounded by 12 additional locations, which yielded arrays of 16 lines in a diamond arrangement (18.2° × 18.2°). The larger set size was used to enhance pop-out for orientation singletons because a pilot study with four lines did not lead to a baseline capture effect. The search target was a solid line, whereas distractor lines contained a 0.2° gap (creating a form of serial search; Treisman & Souther, 1985). Because orientation was a dimension of interest, we avoided the orientation-discrimination task from Experiments 1 and 2. Instead, participants judged whether the target line was thick or thin. Half of the lines in each array were thick (0.20°), and the other half of the lines were thin (0.16°). All but one line had the same color and orientation (chosen randomly for each array). The singleton differed in either color or orientation (equated for frequency), with its value in the other dimension matching the other lines (Fig. 3b).

The target and singleton appeared randomly in the four locations used in Experiments 1 and 2, although participants were not informed about this. The singleton was the target on 50% of search trials. This change from Experiment 2 (in which 25% of singletons were targets) was made to increase statistical power in light of the smaller overall number of trials in this experiment. It is important to note that although the singleton was partly informative about the target location, this was true for singletons in both the structured and random dimensions. Therefore, this predictiveness cannot explain differences between the color and orientation groups—the search arrays were identical for all participants. The line trials were not predictive of which search item would be the singleton, what dimension the singleton would appear in, what feature value it would have in that dimension, where the target would appear, or which response was correct.

Apparatus

The apparatus was the same as in Experiments 1 and 2.

Procedure

The exposure phase was similar to that used in Experiment 2 (with colored and oriented lines appearing instead of colored shapes), except that search arrays appeared for 1,000 ms with a minimum ISI of 500 ms. The array duration was extended because of the larger set size. The 360 line trials were interrupted by 80 visual search trials (none back to back), which resulted in an average of 1 search trial for every 4.5 line trials (accuracy data are again reported in the Supplemental Material). Prior to exposure, participants were shown 5 random line trials and 1 search trial for practice and to clarify the instructions. The test phase was similar to that used in Experiment 2, except that pairs were tested rather than triplets, and test lines appeared with only the structured dimension varying (all horizontal in the color group, and all black in the orientation group). Each pair was tested twice against three two-line foils. After the test phase, an extensive debriefing was conducted.

Results and discussion

Pairs were chosen over foils on 66.3% (SD = 13.4%) and 72.6% (SD = 15.9%) of test trials by the color and orientation groups, respectively, which revealed that statistical learning occurred in both dimensions (chance = 50%): color group, t(14) = 4.73, p < .001, d = 1.22; orientation group, t(14) = 5.50, p < .001, d = 1.42. These levels did not differ between groups, t(28) = 1.17, p = .25, d = 0.43. This replicates findings that regularities in one feature dimension can be learned despite randomness in another feature dimension (Turk-Browne, Isola, Scholl, & Treat, 2008).

Target-discrimination RTs (Fig. 3d) were analyzed with a 2 (group: color, orientation; between subjects) × 2 (singleton type: target, distractor; within subjects) × 2 (singleton dimension: color, orientation; within subjects) mixed-effects ANOVA. We observed a robust main effect of singleton type across both groups, F(1, 28) = 70.01, p < .001, ηp² = .71, and separately within the color group, F(1, 14) = 27.01, p < .001, ηp² = .66, and the orientation group, F(1, 14) = 68.32, p < .001, ηp² = .83, which confirms that our manipulation of attentional capture was successful. Separate mixed-effects ANOVAs within each singleton dimension across groups revealed this main effect of singleton type for both the color dimension, F(1, 28) = 38.17, p < .001, ηp² = .58, and the orientation dimension, F(1, 28) = 59.43, p < .001, ηp² = .68. Planned comparisons further revealed this capture effect for both dimensions within both groups (ps < .002, ds > 1.00).

The critical test of our hypothesis was whether attentional capture was stronger for singletons in the structured dimension than in the random dimension. Indeed, there was a three-way crossover interaction between group, singleton type, and singleton dimension, F(1, 28) = 19.18, p < .001, ηp² = .41, with greater attentional capture for color than orientation singletons in the color group, F(1, 14) = 9.56, p = .008, ηp² = .41, and greater attentional capture for orientation than color singletons in the orientation group, F(1, 14) = 12.28, p = .003, ηp² = .47. This interaction was found separately within each singleton dimension, with greater attentional capture in the color than orientation group for color singletons, F(1, 28) = 8.20, p = .007, ηp² = .23, and greater attentional capture in the orientation than color group for orientation singletons, F(1, 28) = 6.22, p = .02, ηp² = .18. No other main effects or interactions reached significance (ps > .23, ηp²s < .06). In debriefing, 6 participants in each of the color and orientation groups reported noticing regularities, but only 2 participants per group correctly identified which dimension contained regularities (chance = 50%; binomial test: p = .93). Regardless, the three-way interaction remained reliable without the inclusion of these 12 participants, F(1, 16) = 13.36, p = .002, ηp² = .46, as did all two-way interactions (ps < .04).

Although the line stream was task irrelevant, search items that were unique in the dimension with temporal regularities were preferentially attended. These results suggest that attention is biased toward feature dimensions that contain regularities.

General Discussion

Across three studies, we found that the locations and features of objects embedded in temporal regularities received priority in visual search. We interpret this effect as reflecting an implicit attentional bias for regularities during statistical learning—that is, an increased likelihood of attending to the structured stream just before the search array appeared. This bias may combine with other orthogonal cues to determine the allocation of attention at any given moment, such as the salience of particular stimuli and the goal of finding the search target.

One explanation for this bias focuses on randomness. Because participants could not know in advance which stream was structured, they may have initially sampled all locations and features. When attempts to learn in random streams failed, attention may have been redirected to other streams, eventually settling on the structured stream. Indeed, encountering randomness prevents subsequent learning about the same input (Gebhart, Aslin, & Newport, 2009; Jungé, Scholl, & Chun, 2007).

An alternative explanation focuses on structure. Because regularities in the structured stream matched prior experience, they may have either attracted attention that was initially allocated elsewhere or held attention that was allocated to this stream already on the basis of other cues. Indeed, input that matches prior experience can incidentally cue memory retrieval and reflexively engage attention networks (Ciaramelli, Grady, & Moscovitch, 2008). Repetition biases often appear during initial learning: Infants look longer at repeated stimuli until fully habituated, and neural responses are enhanced for repeated stimuli that are degraded (Turk-Browne, Scholl, & Chun, 2008). Thus, extensive exposure to regularities may dissipate or reverse the bias for structure; in other words, having strong expectations after learning might release attention elsewhere. The current experiments may not have been long enough to produce such a reversal, though all experiments showed a numerically weaker effect in the second half than in the first half (ps > .11).

On the surface, the notion that regularities might attract attention seems incompatible with demonstrations that attention is necessary for statistical learning (Baker, Olson, & Behrmann, 2004; Campbell, Zimerman, Healey, Lee, & Hasher, 2012; Turk-Browne, Jungé, & Scholl, 2005). However, these findings can be reconciled if attention and learning interact in a closed-loop manner: In contrast to tasks in prior studies that required selective attention to one stream, the tasks in the current experiments left participants free to attend broadly, and they could thus learn a little from all streams. When subsequent input matched what had been learned, attention may have been drawn toward or held on the spatial location or features of this regular information. This more selective attentional focus would help promote further statistical learning, and in turn, more sophisticated learning would lead to better matches and more attention, and so on. Although this possibility requires further investigation, such an interaction would be highly ecological, with prior experience helping shape attentional priorities and these priorities ensuring the acquisition of knowledge about stable aspects of the environment.
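
The closed-loop idea described above can be made concrete with a toy simulation. This sketch is purely illustrative: it is not a model that was proposed or tested in these experiments, and every name and parameter in it (the four-symbol alphabet, the fixed cycle standing in for temporal regularities, the smoothing, and the attention update rate) is an assumption made for the example. Two streams are observed, attention starts out evenly divided, learning about each stream is weighted by the attention it receives, and attention drifts toward whichever stream better matches what has been learned so far.

```python
import random

N_SYMBOLS = 4  # hypothetical alphabet size per stream


def predicted_prob(counts, prev, nxt):
    """Learned P(nxt | prev) with add-one smoothing over N_SYMBOLS outcomes."""
    row = counts[prev]
    return (row[nxt] + 1.0) / (sum(row) + N_SYMBOLS)


def simulate(n_steps=400, attention_rate=0.1, seed=0):
    """Return the share of attention on the structured stream at each step."""
    rng = random.Random(seed)
    counts = {
        name: [[0.0] * N_SYMBOLS for _ in range(N_SYMBOLS)]
        for name in ("structured", "random")
    }
    prev = {"structured": 0, "random": 0}
    attention = 0.5  # attend broadly at first: equal weight on both streams
    history = []
    for step in range(1, n_steps + 1):
        # Structured stream repeats a fixed cycle; random stream is uniform noise.
        current = {
            "structured": step % N_SYMBOLS,
            "random": rng.randrange(N_SYMBOLS),
        }
        # How well does each stream's input match what has been learned so far?
        match = {
            name: predicted_prob(counts[name], prev[name], current[name])
            for name in counts
        }
        # Attention drifts toward the stream whose input matches better.
        target = match["structured"] / (match["structured"] + match["random"])
        attention += attention_rate * (target - attention)
        # Learning is weighted by attention, which closes the loop.
        weight = {"structured": attention, "random": 1.0 - attention}
        for name in counts:
            counts[name][prev[name]][current[name]] += weight[name]
            prev[name] = current[name]
        history.append(attention)
    return history


history = simulate()
print(f"attention on structured stream: start {history[0]:.2f}, end {history[-1]:.2f}")
```

In this sketch, a small amount of learning from broad attention is enough to tilt attention toward the structured stream, which then speeds learning about that stream. The sketch does not capture the possibility, raised above, that extensive exposure might eventually release attention elsewhere.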

In contrast to implicit accounts, our results could in principle be explained if participants became explicitly aware of regularities and intentionally oriented to them. This is unlikely for three reasons. First, most participants reported no awareness, the attentional bias was robust in just these participants, and those who did report awareness performed at chance in identifying the location, color, or dimension of regularities. Second, only 8 out of 75 participants across the three experiments reported using a strategy of selectively attending to one stream; among them, only 1 participant reported attending to the stream with regularities. Third, unlike findings that regularities among task-relevant stimuli can affect performance on the same task (Brady et al., 2009; Chun & Jiang, 1998; Smyth & Shanks, 2008; Umemoto et al., 2010; Zhao et al., 2011), the present regularities were defined over task-irrelevant stimuli and did not provide predictive information about the visual search task. Thus, there was no evidence that intentional orienting to regularities occurred, nor would it have been a useful strategy for improving task performance. The discovery of robust orienting based on a completely task-irrelevant “summoning signal” distinguishes our findings from prior work.

To conclude, the current findings are significant in several ways. We uncovered a novel factor in the control of attention driven neither by intrinsic salience (stimuli were randomly assigned to streams) nor by intentional goals (participants were not aware of regularities, and regularities were task irrelevant). Moreover, the current paradigm provides a novel implicit and online measure of statistical learning, moving beyond the conventional use of familiarity to measure a process that is often implicit (Turk-Browne et al., 2009). Finally, recent work has shown that statistical learning interacts with several other cognitive processes (Brady et al., 2009; Graf Estes et al., 2007; Turk-Browne et al., 2010; Umemoto et al., 2010; Zhao et al., 2011), and the current findings show that statistical learning, even of task-irrelevant stimuli, can control the allocation of spatial and feature attention.

Acknowledgments

For helpful conversations, we thank Ed Awh, Floris de Lange, Dan Osherson, Aaron Seitz, and the Turk-Browne Lab.

Funding

This work was supported by a Postdoctoral Fellowship from the Natural Sciences and Engineering Research Council of Canada (to N. A.-A.) and by National Institutes of Health Grant No. R01-EY021755 (to N. B. T.-B.).

Footnotes

Supplemental Material

Additional supporting information may be found at http://pss.sagepub.com/content/by/supplemental-data

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

References

- Abrams RA, Christ SE. Motion onset captures attention. Psychological Science. 2003;14:427–432. doi: 10.1111/1467-9280.01458.

- Bacon WF, Egeth H. Overriding stimulus-driven attentional capture. Perception & Psychophysics. 1994;55:485–496. doi: 10.3758/bf03205306.

- Baker CI, Olson CR, Behrmann M. Role of attention and perceptual grouping in visual statistical learning. Psychological Science. 2004;15:460–466. doi: 10.1111/j.0956-7976.2004.00702.x.

- Brady TF, Konkle T, Alvarez GA. Compression in visual short-term memory: Using statistical regularities to form more efficient memory representations. Journal of Experimental Psychology: General. 2009;138:487–502. doi: 10.1037/a0016797.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Campbell KL, Zimerman S, Healey MK, Lee MM, Hasher L. Age differences in visual statistical learning. Psychology and Aging. 2012;27:650–656. doi: 10.1037/a0026780.

- Chalk M, Seitz A, Seriès P. Rapidly learned stimulus expectations alter perception of motion. Journal of Vision. 2010;10(8), Article 2. doi: 10.1167/10.8.2. Retrieved from http://www.journalofvision.org/content/10/8/2.abstract.

- Chun MM, Golomb JD, Turk-Browne NB. A taxonomy of external and internal attention. Annual Review of Psychology. 2011;62:73–101. doi: 10.1146/annurev.psych.093008.100427.

- Chun MM, Jiang Y. Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology. 1998;36:28–71. doi: 10.1006/cogp.1998.0681.

- Chun MM, Jiang Y. Top-down attentional guidance based on implicit learning of visual covariation. Psychological Science. 1999;10:360–365.

- Ciaramelli E, Grady CL, Moscovitch M. Top-down and bottom-up attention to memory: A hypothesis (AtoM) on the role of the posterior parietal cortex in memory retrieval. Neuropsychologia. 2008;46:1828–1851. doi: 10.1016/j.neuropsychologia.2008.03.022.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:215–229. doi: 10.1038/nrn755.

- Fiser J, Aslin RN. Unsupervised statistical learning of higher-order spatial structures from visual scenes. Psychological Science. 2001;12:499–504. doi: 10.1111/1467-9280.00392.

- Fiser J, Aslin RN. Statistical learning of higher-order temporal structure from visual shape sequences. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:458–467. doi: 10.1037//0278-7393.28.3.458.

- Folk CL, Remington RW, Johnston JC. Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:1030–1044.

- Franconeri SL, Simons DJ. Moving and looming stimuli capture attention. Perception & Psychophysics. 2003;65:999–1010. doi: 10.3758/bf03194829.

- Gebhart AL, Aslin RN, Newport EL. Changing structures in midstream: Learning along the statistical garden path. Cognitive Science. 2009;33:1087–1116. doi: 10.1111/j.1551-6709.2009.01041.x.

- Graf Estes KM, Evans J, Alibali MW, Saffran JR. Can infants map meaning to newly segmented words? Statistical segmentation and word learning. Psychological Science. 2007;18:254–260. doi: 10.1111/j.1467-9280.2007.01885.x.

- Hopfinger JB, Buonocore MH, Mangun GR. The neural mechanisms of top-down attentional control. Nature Neuroscience. 2000;3:284–291. doi: 10.1038/72999.

- Itti L, Koch C. Computational modelling of visual attention. Nature Reviews Neuroscience. 2001;2:194–203. doi: 10.1038/35058500.

- Johnston WA, Hawley KJ, Plew SH, Elliott JM, DeWitt MJ. Attention capture by novel stimuli. Journal of Experimental Psychology: General. 1990;119:397–411. doi: 10.1037//0096-3445.119.4.397.

- Jonides J. Voluntary vs. automatic control over the mind’s eye’s movement. In: Long JB, Baddeley AD, editors. Attention and performance. Vol. 9. Hillsdale, NJ: Erlbaum; 1981. pp. 187–203.

- Jungé JA, Scholl BJ, Chun MM. How is spatial context learning integrated over signal versus noise? A primacy effect in contextual cueing. Visual Cognition. 2007;15:1–11. doi: 10.1080/13506280600859706.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Pashler H, Johnston J, Ruthruff E. Attention and performance. Annual Review of Psychology. 2001;52:629–651. doi: 10.1146/annurev.psych.52.1.629.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Perruchet P, Pacton S. Implicit learning and statistical learning: Two approaches, one phenomenon. Trends in Cognitive Sciences. 2006;10:233–238. doi: 10.1016/j.tics.2006.03.006.

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32:3–25. doi: 10.1080/00335558008248231.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Smyth AC, Shanks DR. Awareness in contextual cuing with extended and concurrent explicit tests. Memory & Cognition. 2008;36:403–415. doi: 10.3758/mc.36.2.403.

- Summerfield JJ, Lepsien J, Gitelman DR, Mesulam MM, Nobre AC. Orienting attention based on long-term memory experience. Neuron. 2006;49:905–916. doi: 10.1016/j.neuron.2006.01.021.

- Theeuwes J. Perceptual selectivity for color and form. Perception & Psychophysics. 1992;51:599–606. doi: 10.3758/bf03211656.

- Theeuwes J. Top-down search strategies cannot override attentional capture. Psychonomic Bulletin & Review. 2004;11:65–70. doi: 10.3758/bf03206462.

- Treisman A, Souther J. Search asymmetry: A diagnostic for preattentive processing of separable features. Journal of Experimental Psychology: General. 1985;114:285–310. doi: 10.1037//0096-3445.114.3.285.

- Turk-Browne NB, Isola PJ, Scholl BJ, Treat TA. Multidimensional visual statistical learning. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2008;34:399–407. doi: 10.1037/0278-7393.34.2.399.

- Turk-Browne NB, Jungé J, Scholl BJ. The automaticity of visual statistical learning. Journal of Experimental Psychology: General. 2005;134:552–564. doi: 10.1037/0096-3445.134.4.552.

- Turk-Browne NB, Scholl BJ, Chun MM. Babies and brains: Habituation in infant cognition and functional neuroimaging. Frontiers in Human Neuroscience. 2008;2:16. doi: 10.3389/neuro.09.016.2008. Retrieved from http://www.frontiersin.org/Human_Neuroscience/10.3389/neuro.09.016.2008/full.

- Turk-Browne NB, Scholl BJ, Chun MM, Johnson MK. Neural evidence of statistical learning: Efficient detection of visual regularities without awareness. Journal of Cognitive Neuroscience. 2009;21:1934–1945. doi: 10.1162/jocn.2009.21131.

- Turk-Browne NB, Scholl BJ, Johnson MK, Chun MM. Implicit perceptual anticipation triggered by statistical learning. Journal of Neuroscience. 2010;30:11177–11187. doi: 10.1523/JNEUROSCI.0858-10.2010.

- Umemoto A, Scolari M, Vogel EK, Awh E. Statistical learning induces discrete shifts in the allocation of working memory resources. Journal of Experimental Psychology: Human Perception and Performance. 2010;36:1419–1429. doi: 10.1037/a0019324.

- Wolfe JM, Horowitz TS. What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience. 2004;5:495–501. doi: 10.1038/nrn1411.

- Yantis S. Goal-directed and stimulus-driven determinants of attentional control. In: Monsell S, Driver J, editors. Attention and performance. Vol. 18. Cambridge, MA: MIT Press; 2000. pp. 73–103.

- Yantis S, Jonides J. Abrupt visual onsets and selective attention: Evidence from visual search. Journal of Experimental Psychology: Human Perception and Performance. 1984;10:601–621. doi: 10.1037//0096-1523.10.5.601.

- Zhao J, Ngo N, McKendrick R, Turk-Browne NB. Mutual interference between statistical summary perception and statistical learning. Psychological Science. 2011;22:1212–1219. doi: 10.1177/0956797611419304.
