Abstract

There has recently been an increase in interest in the effects of visual interference on memory processing, with the aim of elucidating the role of the perirhinal cortex (PRC) in recognition memory. One view argues that the PRC processes highly complex conjunctions of object features, and recent evidence from rodents suggests that these representations may be vital for buffering against the effects of pre-retrieval interference on object recognition memory. To investigate whether PRC-dependent object representations play a similar role in humans, we used functional magnetic resonance imaging to scan neurologically healthy participants while they performed a novel interference-match-to-sample task. This paradigm was specifically designed to concurrently assess the impact of object versus spatial interference, on recognition memory for objects or scenes, while keeping constant the amount of object and scene information presented across all trials. Activity at retrieval was examined, within an anatomically defined PRC region of interest, according to the demand for object or scene memory, following a period of object compared with spatial interference. Critically, we found greater PRC activity for object memory following object interference, compared with object memory following scene interference, and no difference between object and scene interference for scene recognition. These data demonstrate a role for the human PRC during object recognition memory, following a period of object, but not scene interference, and emphasize the importance of representational content to mnemonic processing.

Introduction

Despite abundant evidence implicating the perirhinal cortex (PRC) in recognition memory, its precise role remains unclear. Existing theoretical accounts characterize PRC function from differing (but not necessarily contradictory) perspectives, including the types of mnemonic processes [e.g., familiarity: Brown et al. (2010), Yonelinas et al. (2010)], computational operations [e.g., pattern generalization: Norman (2010)], and/or representational content [e.g., items/objects: Davachi (2006), Saksida and Bussey (2010), Ranganath (2010)] for which the PRC may be critical.

According to one representational account, PRC forms the apex of the ventral visual stream and processes complex conjunctions of object features (Murray et al., 2007; Saksida and Bussey, 2010). These object representations are necessary for successful object recognition memory since they buffer against the effects of object-based interference (Cowell et al., 2010). This interference, which arises following perceived or imagined objects before retrieval, can share many individual features with a target object, as well as foil stimuli. PRC complex feature conjunctions may resolve such interference and enable target recognition. In two recent experiments the performance of PRC-lesioned rats on object recognition was linked to the degree of interference presented after, or even before the stimuli to-be-remembered, with the presence or absence of interference leading to impaired or intact performance, respectively (Bartko et al., 2010; McTighe et al., 2010).

Although recent work has demonstrated the detrimental impact of visual interference on object perception in individuals with PRC damage (Barense et al., 2012), no study has yet, to our knowledge, systematically examined the effects of interference on human PRC contributions to recognition memory, which could offer valuable insight into why PRC damage impairs memory (Bowles et al., 2007). Using functional magnetic resonance imaging (fMRI), we scanned participants during a novel interference-match-to-sample (IMTS) task in which they studied an object-in-scene image, underwent a period of directed object or spatial interference, and then, at retrieval, were shown target and foil object-in-scene images, which assessed either object or scene memory. Crucially, during encoding/interference, participants were unaware whether object or scene memory would be assessed. This paradigm enabled us to address whether the PRC is associated with object, but not other types of interference (e.g., spatial) for object memory. Moreover, given the scene component, we could test whether PRC interference effects also apply to scene recognition following spatial interference. Since the PRC is critical for object but not scene representations (Lee et al., 2005), we predicted significant PRC activity during object recognition after object, but not spatial, interference, and no effect of spatial or object interference on PRC activity during scene recognition.

Although our focus was on PRC, this paradigm also allowed us to explore interference-related activity in the parahippocampal cortex (PHC) and hippocampus (HC). Based on evidence that the PHC represents spatial contextual information (Staresina et al., 2011), one would predict greater activity following scene, compared with object, interference during scene recognition in this region. Predictions for the HC were less clear. Considering its role in spatial processing (Bird and Burgess, 2008), interference effects in the HC could be scene-specific. Alternatively, in light of other stimulus nonspecific HC processes [e.g., pattern completion: Norman and O'Reilly (2003); mismatch detection: Kumaran and Maguire (2007)], interference effects in this region could be more general.

Materials and Methods

Participants.

Twenty-five volunteers (13 female), aged between 19 and 30 years, took part. According to self-report, all were right-handed native English speakers with normal or corrected-to-normal vision and no neurological and/or psychiatric disorders. All participants gave written informed consent before the experiment and were paid £10/h. The data from one subject (female) were removed from the analyses due to failure to complete all three scan runs, as well as poor behavioral performance (z < −2.5). The mean age of the remaining 24 participants was 22.87 years. The experiment and its procedures received ethical approval from the Oxfordshire Research Ethics Committee A (Ref: 07/H0604/115).

Materials and Methods.

In this experiment, participants were scanned while they conducted an IMTS task across three scanning runs, separated by a short break to stop and start the scanner. Every IMTS trial comprised a study phase, an interference phase, and a test phase, in which the stimuli were color pictures of real-world objects superimposed onto snapshots of three-dimensional virtual reality scenes (Fig. 1). At study, a stimulus was presented onscreen for 2800 ms, during which participants were instructed to intentionally encode both the object and scene. Following the 2800 ms study phase and a fixed interstimulus interval (ISI) of 200 ms, the interference phase began. During interference, seven stimuli appeared sequentially onscreen for 350 ms each and were separated by a fixed ISI of 250 ms. To investigate the effects of object versus scene interference (see Introduction), these interfering stimuli were designed to differ from the study item, and from each other, by presenting (1) the same scene with a novel, visually similar object, termed object interference (OI); or (2) the same object with a novel, visually similar scene, termed scene interference (SI). To ensure the participants paid attention to the items during the interference phase, one of the interference stimuli (one of items 3–6) appeared twice in a row. Participants were asked to signal this repetition by using the right index finger button on a button box (i.e., a one-back task). A third type of interference (scrambled images) was also included but, as this condition does not directly address our current goal of comparing spatial and object interference, these trials were excluded from the behavioral and imaging analyses in the present manuscript.

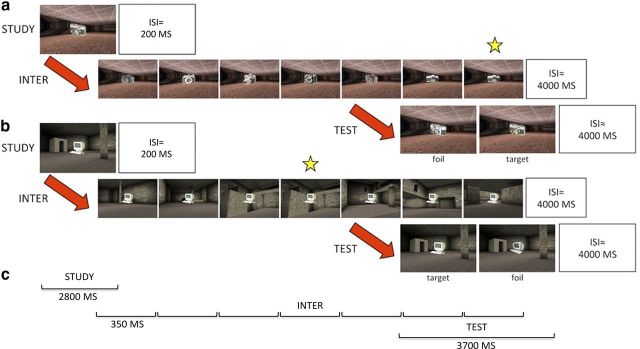

Figure 1.

Example trial types for OROI (a) and ORSI (b). All IMTS trials comprised a study, interference (inter), and test phase. The individual timings for each phase are shown in c. During the study phase an object-in-scene image was presented. Interference either comprised novel objects (object interference; a) or novel scenes (b). In both cases, participants were instructed to detect a repeating item (as indicated by the star) within the 7 presentations. At test, participants had to choose the target stimulus from a similar foil, which either tested object recognition (i.e., the target and foil contain different objects; a, b) or scene recognition (i.e., the target and foil contain different scenes; example not shown).

The end of the interference phase was marked by a variable ISI (mean 4000 ms), which was followed by the test phase. At test, two stimuli were presented side by side onscreen for 3700 ms, one of which comprised the study object/scene pair (target) and the other a novel object-scene image (foil). To assess object or spatial memory, the foil consisted of either the same scene as the target with a novel, but visually similar, object [i.e., object recognition (OR)] or the same object with a novel, visually similar scene [i.e., scene recognition (SR)]. Using the right index and middle finger buttons, participants indicated whether the left (index) or right (middle) image was the target. The presentation of the target on the left or right side of the screen and the location of the repeating object during the interference phase (i.e., fourth–seventh item) were pseudo-random (≤3 in a row) and fully counterbalanced across IMTS trials. At the end of the test phase there was a variable intertrial interval (ITI; mean 4000 ms), marking the end of each IMTS trial and the beginning of the next.
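As a rough check on the trial structure described above, the nominal duration of one IMTS trial can be tallied from the stated timings. This is only a sketch and not the authors' presentation script: it assumes that each of the seven interference items is followed by one 250 ms ISI, and it uses the mean value of the two variable intervals. The constant and function names are illustrative.

```python
# Nominal IMTS trial timeline in milliseconds (values taken from the text).
STUDY_MS = 2800
STUDY_ISI_MS = 200
N_INTERFERENCE_ITEMS = 7
ITEM_MS = 350
ITEM_ISI_MS = 250             # assumption: one ISI follows every interference item
MEAN_PRE_TEST_ISI_MS = 4000   # variable interval; only the mean is reported
TEST_MS = 3700
MEAN_ITI_MS = 4000            # variable interval; only the mean is reported


def interference_phase_ms() -> int:
    """Duration of the interference phase under the one-ISI-per-item assumption."""
    return N_INTERFERENCE_ITEMS * (ITEM_MS + ITEM_ISI_MS)


def mean_trial_ms() -> int:
    """Mean duration of a full study/interference/test trial, including the ITI."""
    return (STUDY_MS + STUDY_ISI_MS + interference_phase_ms()
            + MEAN_PRE_TEST_ISI_MS + TEST_MS + MEAN_ITI_MS)


if __name__ == "__main__":
    print(interference_phase_ms())  # 4200
    print(mean_trial_ms())          # 18900
```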

Permutations of the two interference conditions of interest (OI, SI), and two test scenarios (OR and SR) resulted in four different IMTS trial types: (1) object recognition, object interference (OROI); (2) object recognition, scene interference (ORSI); (3) scene recognition, object interference (SROI); and finally (4) scene recognition, scene interference (SRSI). There were 36 IMTS trials for each of these conditions (12 per run), resulting in a total of 144 trials (48 per run) across the entire experiment. The presentation order of trials within runs was based on a Latin matrix design to ensure that no trial type preceded/followed another for an unequal number of times.
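The factorial structure and trial counts above can be written out explicitly. The sketch below also shows one standard way to build a first-order balanced ordering of four conditions (a Williams-type balanced Latin square); the text does not specify how the authors constructed their ordering, so this construction is an assumption used purely for illustration.

```python
from itertools import product

RECOGNITION = ("OR", "SR")     # object / scene recognition at test
INTERFERENCE = ("OI", "SI")    # object / scene interference
TRIALS_PER_CONDITION_PER_RUN = 12
N_RUNS = 3

# The four trial types named in the text: OROI, ORSI, SROI, SRSI.
CONDITIONS = [rec + intf for rec, intf in product(RECOGNITION, INTERFERENCE)]


def total_trials() -> int:
    """Trials of interest across the whole experiment."""
    return len(CONDITIONS) * TRIALS_PER_CONDITION_PER_RUN * N_RUNS


def balanced_latin_square(n: int) -> list[list[int]]:
    """For even n, each item immediately precedes every other item exactly once."""
    if n % 2:
        raise ValueError("this construction needs an even n")
    first, low, high = [0], 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Remaining rows are cyclic shifts of the first row.
    return [[(x + shift) % n for x in first] for shift in range(n)]


if __name__ == "__main__":
    print(CONDITIONS, total_trials())
    for row in balanced_latin_square(len(CONDITIONS)):
        print([CONDITIONS[i] for i in row])
```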

To make the stimuli, 648 images of objects were taken from the Hemera Photo Objects database (Volumes I and II), and 648 virtual reality scenes were created using a commercially available game (Deus Ex, Ion Storm L.P.) and a freeware software editor (Deus Ex Software Development Kit v.1112f). Of the 648 objects and 648 scenes, 144 were study items and targets at test, 72 were used as foils at test, and 72 sets of 6 (432) comprised distractor items for the interference phase. Notably, to maximize the likelihood of observing interference effects, all the objects within each object interference trial (i.e., the target, foil, and interfering items of OROI and SROI) belonged to the same semantic category and were specifically chosen to possess many overlapping visual features (Fig. 1). Likewise, all the scenes within each spatial interference trial (i.e., the target, foil, and interfering items of ORSI and SRSI) comprised the same features (e.g., floor/wall/ceiling color/texture, door, window or floor cavity) rearranged into distinct spatial arrays.
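The stimulus counts can be checked with simple arithmetic. In the sketch below the breakdown itself comes from the text, whereas two readings are our own inference: that six unique distractors plus one repeated item yield the seven interference presentations, and that 72 foils and 72 distractor sets suffice per stimulus class because only half of the 144 trials test that class and only half use it for interference.

```python
# Stimulus accounting for one stimulus class (objects or scenes).
N_TRIALS = 144
N_STUDY_ITEMS = 144        # study items, reused as targets at test
N_FOILS = 72               # inference: one foil per trial that tests this class
N_DISTRACTOR_SETS = 72     # inference: one set per trial that interferes with this class
ITEMS_PER_SET = 6          # inference: six unique items, one repeated, gives seven presentations


def stimuli_per_class() -> int:
    """Total unique stimuli needed for one class under the stated breakdown."""
    return N_STUDY_ITEMS + N_FOILS + N_DISTRACTOR_SETS * ITEMS_PER_SET


if __name__ == "__main__":
    print(stimuli_per_class())  # 648
```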

An additional 36 objects and scenes were used to make 8 practice trials (2 for each of the 4 conditions). These were administered in a random order to the participants before scanning to ensure that they fully understood the task instructions.

Scanning procedure.

Scanning was performed using a Siemens Magnetom Verio 3T MRI system. The same gradient-echo echo planar imaging sequence was used for all subjects in all three scanning runs. Forty-three slices were collected per image volume and, because temporal lobe regions were the primary area of interest, thinner slices (2.0 mm thickness) were acquired to reduce susceptibility artifacts [interslice distance 0.5 mm, in-plane resolution 3*3 mm, repetition time (TR) = 2400 ms, echo time (TE) = 30 ms, flip angle (FA) = 78°, field of view (FOV) = 192*192*107 mm]. The slices were acquired in a descending order, with an oblique axial tilt relative to the anterior–posterior commissure line (posterior downward) to prevent image ghosting and to maximize coverage of the temporal lobe. Each EPI session was ∼20 min in duration, which included four dummy scans at the start of the scanning run to allow the MR signal to reach equilibrium. To correct for geometrical distortions in the EPI data due to magnetic field inhomogeneity, a map of the magnetic field was produced from two 3D FLASH images acquired during the scanning session (Jezzard and Balaban, 1995). The FLASH acquisitions were prescribed using the same slice orientation as the EPI data to be unwarped. Parameters for the FLASH acquisitions were as follows: TE = 5.19 ms and 7.65 ms, TR = 430 ms, and FA = 60°. Anatomical images were acquired using a standard T1-weighted sequence comprising 178 axial slices (3D FSPGR). Scanning parameters were as follows: TR = 2040 ms, TE = 4.7 ms, FA = 8°, FOV = 192*174*192 mm, and 1 mm isotropic resolution.

FMRI data preprocessing.

Image data preprocessing and analyses were performed using FEAT (FMRI Expert Analysis Tool) Version 5.98, which is part of FSL (FMRIB Software Library; www.fmrib.ox.ac.uk/fsl) (Smith et al., 2004). The following preprocessing steps were applied to each of the three runs of single subject data: (1) motion correction using MCFLIRT, which realigns all images using rigid body registration to a central volume (Jenkinson et al., 2002), (2) unwarping of the EPI data using the acquired fieldmaps and PRELUDE+FUGUE (Jenkinson, 2003) to correct for image distortions arising from magnetic field inhomogeneities, (3) segmentation of brain from nonbrain matter using a mesh deformation approach (BET) (Smith, 2002), (4) applying spatial smoothing using a Gaussian kernel of FWHM 6.0 mm, (5) grand mean intensity normalization of the entire 4D data by a single multiplicative factor, (6) high-pass temporal filtering using Gaussian-weighted least-squares straight line fitting with σ = 50 s, (7) independent component analysis to investigate and remove any noise artifacts (MELODIC) (Beckmann and Smith, 2004), and finally, (8) registration of the EPI data to high-resolution 3D anatomical T1 scans (per participant) and to a standard Montreal Neurological Institute (MNI-152) template image (for group average) was performed using a combination of FLIRT and FNIRT (Jenkinson and Smith, 2001; Jenkinson et al., 2002). As such, coordinates (x, y, z) of activity are reported in MNI space.

FMRI data statistical analysis.

After preprocessing the three experimental runs for each fMRI time series for each participant, the data were submitted to a general linear model, with one predictor that was convolved with a double-gamma model of the human hemodynamic response function (HRF) for each event-type. The specification of the event types (or explanatory variables, EVs) was guided by the phase of the IMTS trials (e.g., encoding, interference and test: E/I/T), type of interference (object or scene interference: OI/SI), and the type of memory being assessed (object or scene recognition: OR/SR). There were 12 EVs in total: from the encoding phase (1) subsequent object recognition, object interference (OROI-E), (2) subsequent object recognition, scene interference (ORSI-E), (3) subsequent scene recognition, object interference (SROI-E), and (4) subsequent scene recognition, scene interference (SRSI-E); from the interference phase (5) subsequent object recognition, object interference (OROI-I), (6) subsequent object recognition, scene interference (ORSI-I), (7) subsequent scene recognition, object interference (SROI-I), and (8) subsequent scene recognition, scene interference (SRSI-I); and finally from the test phase, (9) object recognition, object interference (OROI-T), (10) object recognition, scene interference (ORSI-T), (11) scene recognition, object interference (SROI-T), and (12) scene recognition, scene interference (SRSI-T). The described encoding, interference, and test phase EVs only comprise the trials in which participants made an accurate match-to-sample judgment at retrieval. As match-to-sample accuracy was high (Fig. 2, left), however, errors from the test phase were not modeled.
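The 12 EVs are simply the crossing of three trial phases with the four recognition/interference conditions. A minimal sketch of that enumeration follows; the hyphenated labels mirror those used in the text, but the function name is illustrative and this is not the authors' FEAT design file.

```python
from itertools import product

PHASES = ("E", "I", "T")                         # encoding, interference, test
CONDITIONS = ("OROI", "ORSI", "SROI", "SRSI")    # recognition type + interference type


def ev_names() -> list[str]:
    """EV labels in the order listed in the text (phase varies slowest)."""
    return [f"{cond}-{phase}" for phase, cond in product(PHASES, CONDITIONS)]


if __name__ == "__main__":
    for number, name in enumerate(ev_names(), start=1):
        print(number, name)
```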

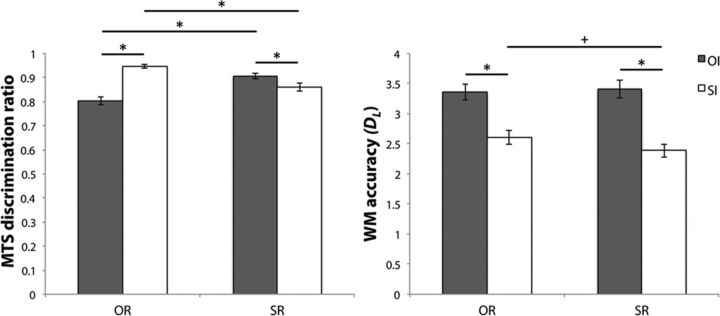

Figure 2.

MTS (left) and one-back WM (right) discrimination accuracy for OI (dark gray) and SI (white), classified according to OR (left side) and SR (right side) recognition. Error bars represent SEM. *p < 0.05, Bonferroni corrected; +p < 0.05, uncorrected.

Parameter estimates relating the height of the HRF response to each of the 12 EVs were calculated on a voxel by voxel basis, via a multiple linear regression of the response time course, to create one β image for each regressor/EV per run, per participant. In the light of our predictions (see Introduction), we identified any fMRI signal changes between the OROI-T and ORSI-T EVs as being critical for examining the effects of object interference on PRC contributions to object memory. As such, parameter estimate images were created for contrasts between the OR test EVs: OROI-T>ORSI-T and ORSI-T>OROI-T. Similarly, as any differences between the SRSI-T and SROI-T EVs were critical for investigating the effect of spatial interference during scene recognition memory, there were also contrasts between the SR test EVs: SROI-T>SRSI-T and SRSI-T>SROI-T. Using FEAT, a mean dataset was created per participant from the individual runs in a fixed effects analysis, and the subsequent parameter estimate images were combined in a higher-level (group) mixed effects FLAME analysis (FMRIB's Local Analysis of Mixed Effects) (Beckmann et al., 2003; Woolrich et al., 2004, 2009). Patterns of activity were investigated using a method of permutation-based nonparametric inference, applying a cluster extent threshold of Z > 2.3 (Randomise function of FSL: http://www.fmrib.ox.ac.uk/fsl/randomise, version 2.1). To correct for multiple comparisons, a multi-threshold meta-analysis of random field theory cluster p values was applied within preselected regions of interest (ROI; see later for further details), with clusters that survived a small-volume corrected family-wise (svc-FWE) p-value of <0.05 being considered statistically significant.
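Conceptually, each of the four retrieval contrasts is a weight vector over the 12 EVs with +1 on one test-phase EV and −1 on another. The sketch below builds those vectors in plain Python under the EV ordering given earlier; it is an illustration of the idea only and does not reproduce the FEAT design files, which are not given in the text.

```python
# EV order as listed in the text: encoding EVs, then interference EVs, then test EVs.
EVS = [f"{cond}-{phase}"
       for phase in ("E", "I", "T")
       for cond in ("OROI", "ORSI", "SROI", "SRSI")]


def contrast(positive: str, negative: str) -> list[int]:
    """Weight vector testing positive > negative; all other EVs get zero weight."""
    weights = [0] * len(EVS)
    weights[EVS.index(positive)] = 1
    weights[EVS.index(negative)] = -1
    return weights


# The four directional retrieval contrasts described in the text.
RETRIEVAL_CONTRASTS = {
    "OROI-T>ORSI-T": contrast("OROI-T", "ORSI-T"),
    "ORSI-T>OROI-T": contrast("ORSI-T", "OROI-T"),
    "SROI-T>SRSI-T": contrast("SROI-T", "SRSI-T"),
    "SRSI-T>SROI-T": contrast("SRSI-T", "SROI-T"),
}

if __name__ == "__main__":
    for name, weights in RETRIEVAL_CONTRASTS.items():
        print(name, weights)
```

Because each vector sums to zero, a contrast is insensitive to activity that is common to both conditions being compared.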

Before the planned critical computations described above, analyses were conducted to reinforce our focal interest on the retrieval conditions, and to ensure that any patterns of activity observed for object/scene retrieval (i.e., OROI-T vs ORSI-T; SRSI-T vs SROI-T) did not mutually apply during encoding and interference. In other words, for any effects to be considered relevant to overcoming memory interference during recognition they would need to apply uniquely to processing at the time of memory retrieval. For instance, if the same pattern of activity (e.g., OROI>ORSI=SROI=SRSI) were to occur at both encoding and retrieval, it would be unclear whether this effect pertains to overcoming interference during retrieval and/or as a result of an encoding effect that renders items more (or less) resistant to subsequent interference between encoding and retrieval.

For these initial analyses we ran a within-subjects factorial ANOVA across the 12 EVs to identify fluctuations between the different phases of the IMTS trials and the different types of interference and recognition memory. Specifically, averaged per-subject images for each retrieval/interference condition (i.e., OROI, ORSI, SROI and SRSI), from each phase of the IMTS trial (i.e., E, I, and T) were entered in a higher-level mixed effects FLAME analysis, modeling factors of phase (E/I/T), recognition (OR/SR), and interference (OI/SI). An F test was conducted on these data to test for interactions between phase, recognition, and interference. In the presence of significant interactions with phase at this level, two follow-up 2*2*2 ANOVAs were conducted testing for phase by recognition and/or interference interactions in the data from encoding and test, and from interference and test. If interactions with phase occurred in one (or both) of these follow-up ANOVAs, patterns of activity across conditions from the retrieval phase were investigated further and considered reliably different from those from the encoding and/or interference phases.

As we were specifically interested in the contribution of the PRC to our task we focused our analyses in this region. First, the left and right PRC were hand drawn in accordance with published guidelines for each participant based on individual neuroanatomy (Insausti et al., 1998). Second, these masks were registered from subject space to MNI space (using FLIRT) (Jenkinson et al., 2002), combined, thresholded to reflect a 50% probability of being the left and right PRC in our subjects, and then binarised. In total, these left and right PRC masks comprised 494 and 435 voxels, respectively.
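The combine, threshold, and binarise step amounts to computing, for every voxel, the proportion of participants whose registered mask includes it, and keeping voxels at or above 50%. The sketch below illustrates this on flat lists of 0/1 values as a stand-in for registered 3D mask images. Whether the authors' threshold was inclusive is not stated, so the `>=` comparison is an assumption, as are the function and variable names.

```python
def group_roi(subject_masks: list[list[int]], threshold: float = 0.5) -> list[int]:
    """Binarised group mask from per-subject binary masks in a common space.

    Each inner list holds one participant's mask, flattened to voxels (1 = in ROI).
    """
    n_subjects = len(subject_masks)
    n_voxels = len(subject_masks[0])
    # Per-voxel probability of belonging to the ROI across participants.
    probability = [sum(mask[v] for mask in subject_masks) / n_subjects
                   for v in range(n_voxels)]
    # Assumption: inclusive threshold (>=); the text does not say which was used.
    return [1 if p >= threshold else 0 for p in probability]


if __name__ == "__main__":
    toy_masks = [
        [1, 1, 0],
        [1, 0, 0],
        [1, 1, 0],
        [1, 0, 1],
    ]
    group = group_roi(toy_masks)
    print(group, "voxels in ROI:", sum(group))
```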

On finding any significant interactions between the encoding/interference and test IMTS phases in the group level PRC ROIs, the contrasts OROI-T>ORSI-T, ORSI-T>OROI-T and SROI-T>SRSI-T, SRSI-T>SROI-T were conducted (p < 0.05, FWE-svc). Then, we asked whether any resulting clusters identified from these retrieval comparisons were also pertinent to the other stimulus-type (e.g., OROI-T>ORSI-T; SROI-T>SRSI-T), or were stimulus-specific (e.g., OROI-T>ORSI-T; SROI-T=SRSI-T). Stimulus-specific clusters were statistically defined as those voxels passing a high threshold for a given recognition/interference contrast (p < 0.05, FWE-svc), which were nonsignificant for another recognition/interference contrast (i.e., a masking analysis), even at an extremely low inclusion threshold (p < 0.05, uncorrected).
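The masking logic for stimulus specificity can be expressed as a voxelwise conjunction: a voxel counts as stimulus-specific if it lies in a cluster that survives the corrected threshold for one contrast and does not reach even uncorrected p < 0.05 for the contrast from the other stimulus type. The following sketch uses toy per-voxel values; the input names are hypothetical and no real data are shown.

```python
def stimulus_specific(in_significant_cluster: list[bool],
                      other_p_uncorrected: list[float],
                      liberal_alpha: float = 0.05) -> list[bool]:
    """Voxels significant for the primary contrast and null for the other contrast."""
    return [primary and other_p >= liberal_alpha
            for primary, other_p in zip(in_significant_cluster, other_p_uncorrected)]


if __name__ == "__main__":
    # Toy example: four voxels; values are made up for illustration only.
    primary_cluster = [True, True, False, True]      # e.g., survives FWE-svc for OROI-T>ORSI-T
    other_contrast_p = [0.40, 0.03, 0.60, 0.05]      # e.g., uncorrected p for SROI-T>SRSI-T
    print(stimulus_specific(primary_cluster, other_contrast_p))
```

Note that the absence of an effect at a liberal threshold is taken here as supporting specificity; it does not by itself establish that the two contrasts differ.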

Although not the central focus of the current investigation, comparable analyses were performed to investigate the effects of memory interference in the HC and the PHC. As with the PRC, two group level ROIs that comprised >50% probability of being the left and right HC (left: 364 voxels; right: 392 voxels) and PHC (left: 198 voxels; right: 193 voxels) in our subjects were created from hand-drawn left and right HC and PHC masks for each participant based on existing methods [HC: Watson et al. (1992), PHC: Lee and Rudebeck (2010)]. Subsequent to any interactions between phase and recognition/interference, results from the four critical retrieval recognition/interference contrasts (OROI-T vs ORSI-T; SROI-T vs SRSI-T) were investigated with a small volume correction within these ROIs (p < 0.05, FWE-svc). Significant clusters were also interrogated for stimulus-specificity by assessing the presence of contrasting recognition/interference effects using the liberal threshold contrast masking approach described previously (p < 0.05, uncorrected).

Results

Behavior

For ease of exposition, interference conditions and object/scene recognition will be generally referred to as “interference” and “recognition,” and in all reported factorial ANOVAs the Greenhouse–Geisser (Greenhouse and Geisser, 1959) correction for nonsphericity was applied, where necessary, and is indicated by adjusted degrees of freedom.

Target versus foil match-to-sample (MTS) discrimination was above chance for all four recognition/interference IMTS conditions (all t(23) > 17.81, Fig. 2, left). To investigate possible main effects and interactions between recognition and interference in MTS accuracy, discrimination ratios for the IMTS conditions were entered into a 2*2 ANOVA with factors of recognition (scene vs object) and interference (scene vs object). Results from this ANOVA indicated a significant recognition*interference interaction (F(1,23) = 87.37, p < 0.01). Follow-up Bonferroni corrected planned pairwise comparisons (4 comparisons, adjusted p < 0.013) revealed better memory for objects following scene versus object interference (t(23) = 9.56, p < 0.013), whereas for scene recognition, the reverse was true (t(23) = 4.26, p < 0.013). When comparing across the recognition conditions, MTS accuracy was superior for scene, compared with object recognition following object interference (t(23) = 6.70, p < 0.013), whereas performance was better for object versus scene recognition, following scene interference (t(23) = 6.21, p < 0.013).

To ensure participants attended to the stimuli, they conducted a one-back task during the interference phase. The proportions of correctly identified repeating stimuli, or hits (H), and of new stimuli incorrectly identified as repeats, or false alarms (FA), were calculated for each of the recognition/interference conditions. In accordance with signal detection theory (Snodgrass and Corwin, 1988), values for H and FA were used to calculate d-prime (d′) scores as a measure of working memory (WM) accuracy for each recognition/interference condition (i.e., d′ = Z(H) − Z(FA); Fig. 2, right). These d′ scores were submitted to a 2*2 ANOVA with factors of recognition (scene vs object) and interference (scene vs object), which also indicated a recognition*interference interaction (F(1,23) = 4.47, p < 0.05). Follow-up tests (4 comparisons, adjusted p < 0.013) indicated reliably superior WM performance for object compared with scene interference for both object and scene recognition (t(23) > 6.38, p < 0.013). There was also a trend for higher WM accuracy across the object and scene memory conditions for scene interference (t(23) = 2.13, p < 0.05).
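For readers less familiar with signal detection measures, the d-prime formula above, Z(H) − Z(FA), can be computed with the inverse of the standard normal cumulative distribution. The sketch below uses only the Python standard library. Hit or false-alarm rates of exactly 0 or 1 have no finite z-score and need a correction, and since the text does not say which correction (if any) was applied, none is implemented here.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Sensitivity index d' = Z(H) - Z(FA) for rates strictly between 0 and 1."""
    z = NormalDist().inv_cdf   # inverse standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)


if __name__ == "__main__":
    # Illustrative rates only; these are not values from the study.
    print(round(d_prime(0.90, 0.10), 3))
    print(d_prime(0.50, 0.50))
```

Equal hit and false-alarm rates give a d-prime of zero (no discrimination), and the score grows as hits increase or false alarms decrease.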

As the one-back task was implemented to ensure participants paid attention to the interfering items, it is possible that WM accuracy (d′) correlated with MTS accuracy. For instance, one may predict a negative correlation between WM and MTS accuracy when the type of interference and recognition were congruent (i.e., OROI, SRSI), reflecting the possibility that greater attention to the interfering items resulted in greater interference and thus, poorer recognition memory. In contrast, the presence of a negative correlation between WM and MTS accuracy when interference and recognition type were incongruent (i.e., ORSI, SROI) would suggest that better MTS accuracy during these conditions compared with the interference-recognition congruent conditions (i.e., OROI<ORSI, SROI>SRSI) simply reflected poorer attention to the intervening WM task. Alternatively, the absence of any consistent relationship between WM and MTS accuracy may suggest that processes that contribute to successful one-back performance are unlikely to overlap entirely with those that are necessary for successful recognition memory performance and that the MTS behavioral effects we observed are related primarily to the type of interference presented. To investigate this, we ran four paired correlations between the WM and MTS accuracy scores for each of the recognition/interference conditions, applying the Bonferroni correction for multiple comparisons (4 comparisons, adjusted p < 0.013). Notably, there was no evidence that better WM performance was associated with reduced MTS accuracy in any condition, and the only significant relationship observed was a positive correlation between WM and MTS performance for ORSI (r(24) = 0.52, p < 0.013; all else: r(24) < 0.21).

Last, it is possible that there was a relationship between the serial position of the one-back repetition during the interference phase and MTS performance. Specifically, an earlier repetition in the interference phase may have resulted in more time to rehearse the study item, thus leading to better recognition at retrieval. As a result, we calculated MTS accuracy for each recognition/interference condition separately for trials with the earliest (third item position repeats) and latest (sixth item position repeats) serial repetition at interference (across conditions, the difference between early and late repetition MTS accuracy ranged from 0.07 to −0.02). These early and late repetition MTS discriminations were then entered into a 2*2*2 ANOVA (factors of repetition: early vs late; recognition: object vs scene; interference: object vs scene), which revealed a main effect of repetition (F(1,23) = 5.71, p < 0.05), in addition to an overall interaction between recognition and interference (F(1,23) = 42.92, p < 0.01). This main effect of repetition reflected an average early versus late difference in MTS accuracy of 0.03 (SE = 0.01) across all conditions. Notably, as there were no interactions between repetition and recognition, and/or interference (F(2,48) < 2.49, p > 0.13), one can assume that the advantage of early WM repetition on MTS accuracy was equally pertinent to all of the object and scene interference/recognition conditions.

In sum, the recognition*interference interactions in MTS accuracy (e.g., OROI<ORSI; SROI>SRSI) were not reflected in the pattern of findings from (1) correlational analyses between WM and MTS accuracy; or (2) the analysis of the impact of early versus late serial position effects on MTS discrimination. Thus, the observed MTS behavioral effects can be attributed to the type of interfering information present during the delay, as opposed to the amount of attention paid to the interfering items and/or performance on the WM task.

FMRI data

In the first stage of our analyses, encoding (E) versus interference (I) versus test (T) phase-related differences in PRC activity across the four object/scene recognition (OR/SR) and interference conditions (OI/SI) were assessed via a 3*2*2 (phase vs recognition vs interference) within-subjects ANOVA comprising average per-subject images for each of the 12 conditions of interest (see Materials and Methods). Results from this ANOVA revealed a significant interaction between phase and interference in the right PRC (peak: 28, −8, −44; p < 0.005, FWE-svc; 244 voxels), and a significant interaction between phase and interference in the left PRC (peak: −24, −10, −42; p < 0.01, FWE-svc; 186 voxels; Fig. 3). When activity in the right hemisphere was compared separately across encoding versus test, and interference versus test in two follow-up 2*2*2 ANOVAs, a phase*interference interaction was apparent in both tests (encoding vs test peak: 28, −8, −44; p < 0.05, FWE-svc; 188 voxels; interference vs test peak: 28, −8, −44; p < 0.01, FWE-svc; 290 voxels). In the left PRC, these 2*2*2 analyses indicated a two-way phase*interference interaction for encoding versus test (peak: −24, −10, −42; p < 0.05, FWE-svc; 146 voxels), and a three-way phase*recognition*interference interaction for interference versus test (peak: −26, −8, −42; p < 0.05, FWE-svc; 133 voxels).

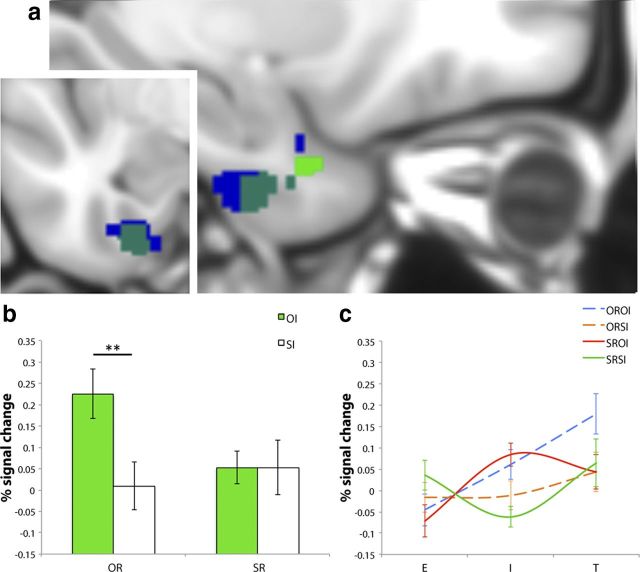

Figure 3.

a, Sagittal and coronal views of significant clusters for overall phase*interference interaction (blue), OROI-T>ORSI-T (green), and where these effects overlap (turquoise) in the left PRC (at −26, −8, −42). b, Average percentage signal change values extracted from the OROI-T>ORSI-T cluster for OI (green) and SI (white), classified according to OR (left side) and SR (right side). c, Average percentage signal change values from the left PRC ROI plotted for the E, I, and T phases of the OROI (blue dashed), ORSI (orange dashed), SROI (red solid), and SRSI (green solid) IMTS trials. Error bars represent SEM. **p < 0.005, FWE-svc.

In accordance with our specific hypothesis, we followed up the interactions between phase and recognition and/or interference in the left and right PRC via pairwise comparisons between OROI-T and ORSI-T, and between SROI-T and SRSI-T. The results from these four contrasts revealed significant effects for OROI-T>ORSI-T in the left PRC only, in the same location for which significant interactions between phase and interference (overall; encoding vs test) and phase, interference, and recognition (interference vs test) were observed (peak: −26, −8, −42; p < 0.005, FWE-svc; 99 voxels; Fig. 3). Interestingly, subsequent masking analyses revealed that this OROI>ORSI activity did not apply to scene recognition, as characterized by no equivalent interference effect between the SROI and SRSI conditions, even at an extremely low threshold for inclusion (p > 0.05, uncorrected). Neither the OROI-T versus ORSI-T, nor the SROI-T versus SRSI-T pairwise comparisons reached significance in the right PRC (all p > 0.18, FWE-svc).

Next, an identical four-step analysis was conducted to test for effects of object and spatial interference on object and scene memory in our group-level HC ROIs. Overall interactions in these ROIs were for phase*interference bilaterally (left peak: −22, −10, −30; p < 0.001; 282 voxels; right peak: 26, −8, −30; p < 0.001, FWE-svc; 310 voxels; Fig. 4). The subsequent 2*2*2 ANOVAs indicated a marginal phase*recognition interaction for encoding versus test in the right HC (peak: 20, −8, −28; p = 0.08, FWE-svc; 109 voxels), and significant phase*interference interactions for interference versus test in the left and right HC (left peak: −22, −10, −30; p < 0.001, FWE-svc; 306 voxels; right peak: 26, −10, −30; p < 0.001, FWE-svc; 347 voxels). Follow-up tests on the retrieval data further revealed that, in contrast to left PRC, there was a significant effect of SRSI-T>SROI-T in the right HC, in a region similar to that in which the significant interaction effects were observed (peak: 28, −14, −26; p < 0.01, FWE-svc; 76 voxels; Fig. 4). Masking analyses indicated that this SRSI-T>SROI-T right HC activity did not apply to object recognition, as there were no significant differences between OROI-T and ORSI-T even at a liberal threshold (p > 0.07, uncorrected). Notably, there was a trend for SRSI-T>SROI-T in the left HC (peak: −28, −12, −24; p = 0.08, FWE-svc; 21 voxels). As this effect failed to reach significance, however, subsequent masking analyses were not conducted.

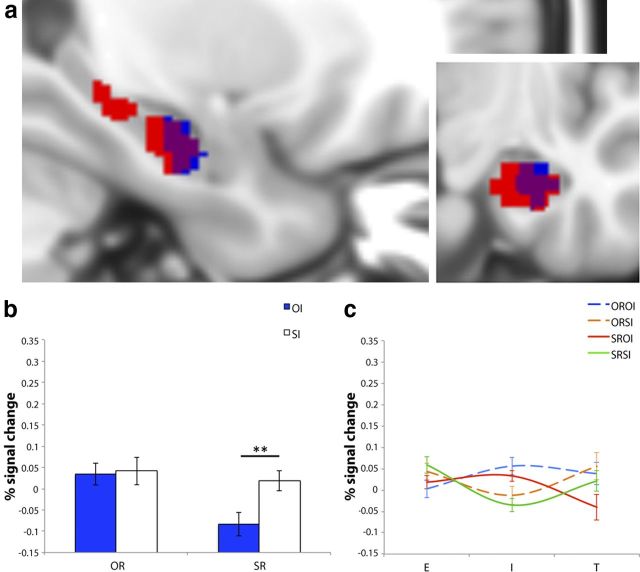

Figure 4.

a, Sagittal and coronal views of significant clusters for overall phase*interference interaction (red), SRSI-T>SROI-T (blue), and where these effects overlap (purple) in the right HC (at 28, −14, −26). b, Average percentage signal change values extracted from the SRSI-T>SROI-T cluster for OI (blue) and SI (white), classified according to OR (left side) and SR (right side). c, Average percentage signal change values from the right HC ROI plotted for the E, I, and T phases of the OROI (blue dashed), ORSI (orange dashed), SROI (red solid), and SRSI (green solid) IMTS trials. Error bars represent SEM. **p < 0.01, FWE-svc.

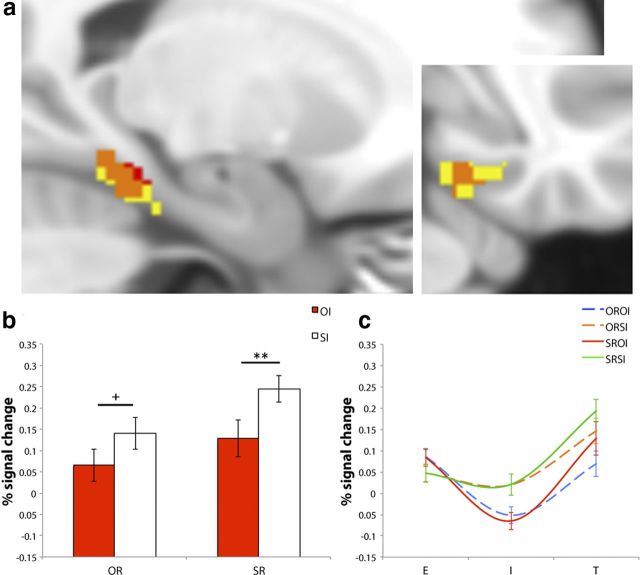

Finally, we also investigated differences in activity in the PHC. The initial 3*2*2 ANOVA indicated that there was an overall phase*interference interaction in the right PHC ROI only (peak: 26, −28, −26; p < 0.01, FWE-svc; 119 voxels; Fig. 5). Because there were no interactions with phase at this level in the left PHC ROI (all p > 0.27 FWE-svc), follow-up analyses (e.g., 2*2*2 ANOVAs) were not conducted for this region (see Materials and Methods). The 2*2*2 ANOVAs in right PHC indicated reliable and marginal (respectively) two-way phase*interference interactions for encoding versus test (peak: 26, −28, −26; p < 0.01, FWE-svc; 125 voxels) and interference versus test (peak: 22, −32, −22; p = 0.06, FWE-svc; 71 voxels). Pairwise comparisons between the test conditions in this region revealed a significant cluster for SRSI-T>SROI-T in the same location (peak: 20, −34, −20; p < 0.005, FWE-svc; 52 voxels; Fig. 5). In contrast to the right HC, however, masking analyses indicated the presence of an ORSI-T>OROI-T effect (peak: 18, −32, −20; p < 0.01, uncorrected; 13 voxels).

Figure 5.

a, Sagittal and coronal views of significant clusters for overall phase*interference interaction (yellow), SRSI-T>SROI-T (red), and where these effects overlap (orange) in the right PHC (at 20, −34, −20). b, Average percentage signal change values extracted from SRSI-T>SROI-T for OI (red) and SI (white), classified according to OR (left side) and SR (right side). c, Average percentage signal change values from the right PHC ROI plotted for the E, I, and T phases of the OROI (blue dashed), ORSI (orange dashed), SROI (red solid), and SRSI (green solid) IMTS trials. Error bars represent SEM. **p < 0.01, FWE-svc; +p < 0.01, uncorrected.

Discussion

Using a novel IMTS paradigm, we have demonstrated that the human PRC is sensitive to memory interference. Critically, this effect applies particularly to the object domain, where PRC involvement is greatest when object information is relevant during both interference and memory retrieval. Motivated by previous work highlighting a role for the PRC in processing complex objects (Bussey et al., 2002; Lee et al., 2005; Barense et al., 2007), our task was specifically designed to examine the effects of interference (in the form of successive presentations of novel, but visually similar, objects or scenes) on object versus scene recognition memory. Since objects and scenes were always presented simultaneously, but only memory for one was assessed, participants had to process both object and scene details throughout the IMTS trials. Furthermore, in contrast to previous experiments in which different stimuli were often presented separately (Preston et al., 2010), our paradigm enabled us to compare memory for objects and scenes, while holding the other type of information constant.

Using this task, we observed greater PRC activity when object memory was assessed following object interference, compared with spatial interference (OROI-T>ORSI-T). Importantly, follow-up inclusive masking analyses indicated no significant differences in activity within these PRC voxels during scene recognition following object or spatial interference (SRSI-T=SROI-T), even at a liberal inclusion threshold (p < 0.05, uncorrected).

The reported pattern of PRC activity during retrieval supports a hierarchical–representational account of PRC function, which suggests that PRC-dependent complex object representations contribute to object recognition memory by buffering against the effects of visual interference at the object featural level (Saksida and Bussey, 2010). Thus, greater PRC activity for OROI-T, compared with ORSI-T, could reflect increased demand for these PRC-dependent representations because MTS performance could no longer be supported via single object features due to the interfering objects sharing individual features with the object memoranda. When scene memory was assessed (SRSI-T, SROI-T) or when object memory was tested after spatial interference (ORSI-T), these representations were less critical for recognition performance, leading to reduced levels of PRC activity.

Critically, the observed pattern of PRC activity during test was not evident during the encoding or interference phases (as indicated by significant phase*interference and phase*recognition*interference interactions, respectively). First, this indicates that the effects at retrieval cannot be explained easily by pre-existing differences in fMRI activity at earlier stages of the IMTS trial. In particular, although encoding efficacy at study may influence the resistance of memories to visual interference (Bartko et al., 2010), our current PRC findings apply uniquely to the test phase of the paradigm. Second, these interactions with phase, in combination with an activity increase for object interference during the delay (OROI-I>ORSI-I; SROI-I>SRSI-I) that markedly alters due to the demand for object versus scene retrieval at test (Fig. 3c), provide further support for the representational account of object interference and memory put forward here.

As there were insufficient trials to model forgotten items, our study cannot speak directly to the relationship between retrieval success and the role of PRC-dependent object representations for interference resolution. Nevertheless, considering reports that object recognition deficits in rodents with PRC damage are exacerbated by increasing visual interference before retrieval (Bartko et al., 2010) or ameliorated by reducing interference (McTighe et al., 2010), it is evident that PRC object representations contribute significantly to successful object recognition following interference. What is unclear, however, is whether this PRC involvement is predictive of retrieval success, or whether PRC representations that are elicited following sufficient levels of interference are passed forward to higher-order executive regions responsible for overcoming the interference (i.e., ventrolateral prefrontal cortex) (Badre and Wagner, 2007).

Although our study is, to our knowledge, the first to demonstrate the contribution of the human PRC to resolving object interference during object recognition, a handful of fMRI experiments have similarly investigated the neural correlates of interference or distraction using different stimulus materials (Sakai and Passingham, 2004; Schultz et al., 2012). Schultz et al. (2012) observed increased PRC activity for face, but not scene, memory following a period of high interference/distraction, compared with no interference/distraction. Notably, however, the interfering/distracting information comprised a combination of novel faces and scenes, and thus, the effect of stimulus-type could not be ascertained. Moreover, since the type of distracting information was not controlled, it is unclear whether the pattern of PRC activity observed by Schultz et al. reflects fluctuations in retrieval demands due to the global effects of distraction (Sakai et al., 2002), or the type and amount of interfering information. Our study adds significantly to these findings by demonstrating that the PRC specifically activates following object, but not scene, interference during object recognition memory. Importantly, our PRC findings are unlikely to reflect increased retrieval demands. First, although there was a difference in the type of information, the amount of interference across IMTS trials was equivalent. Second, no interference effects were observed in PRC for scenes.

It is conceivable that the enhanced PRC activity for OROI-T could, in fact, reflect the presentation of valid attentional cues (in the form of the interfering objects) before object memory retrieval. This is because classic cued attention paradigms have demonstrated that the presentation of a valid, but not invalid, cue leads to increased visual cortex and extrastriate activity during visual target search (Moran and Desimone, 1985; Hopfinger et al., 2000; Corbetta et al., 2005; Munneke et al., 2008). In our opinion, this alternative interpretation of our data is unlikely. As cued attention typically has a facilitatory effect on task performance (Spitzer et al., 1988; Posner and Petersen, 1990), an attentional explanation would predict superior MTS accuracy when the domain of the interfering items matches that of the memory assessed at retrieval (i.e., memory performance for OROI>ORSI; SRSI>SROI). Contrary to this, however, we found that object and scene interference had a detrimental impact on object and scene memory performance, respectively (ORSI>OROI; SROI>SRSI; Fig. 2), in accordance with a representational-interference interpretation of our data.

Finally, the observed pattern of PRC activity at retrieval (Fig. 3) could also reflect the presentation of repeating objects during ORSI-I compared with novel objects during OROI-I, leading to reduced PRC activation for ORSI-T compared with OROI-T (a repetition suppression effect) (Henson et al., 2003). Although this account is plausible, considering the overall phase*interference (3*2) and interference versus test-phase*recognition*interference (2*2*2) interactions in the left PRC, as well as the profile of activity across IMTS trials for ORSI/OROI, we argue that it cannot explain our data entirely (see Results; Fig. 3c). More specifically, ORSI is characterized by little or no fluctuation in activity from encoding through to interference, and a slight increase from interference to test. Under this alternative explanation, however, activity for ORSI should decrease during interference because of the presence of repeating objects.

The incorporation of scene stimuli allowed us to investigate the relationship between spatial memory interference and fMRI activity in the HC and PHC, regions suggested to process spatial/contextual information (Staresina et al., 2011). In contrast to PRC, analyses revealed greater HC and PHC activity for scene recognition following scene interference, compared with scene recognition following object interference (SRSI-T>SROI-T). Interestingly, although the inclusive masking analyses in PHC also indicated an effect of ORSI-T>OROI-T (at an uncorrected threshold), the same analysis revealed no difference between OROI-T and ORSI-T in HC.

At first glance, this pattern of activity in the right HC at retrieval is not inconsistent with the possibility that this region contributes to resolving scene-based interference during scene recognition. A closer inspection of the profiles of activity in HC, however, reveals a number of complications that make this straightforward interpretation difficult. The SRSI-T>SROI-T effect in HC stemmed from a decrease for SROI trials from interference to retrieval, with activity at retrieval for these trial types being lower than that for the other conditions of interest (Fig. 4c). The reason for this profile of activity is not immediately clear. Broadly speaking, as the HC has been implicated in a number of interrelated, domain-general processes, such as match-mismatch detection, associative/relational binding, and pattern completion (Norman and O'Reilly, 2003; Kumaran and Maguire, 2007; Ranganath, 2010), our paradigm may have placed a demand on some or all of these processes to varying degrees across the different trial types given the nature of the stimuli used (i.e., objects presented with scenes). In short, further research is necessary to clarify how memory retrieval in the HC is influenced by visual interference and whether the interference-related processes in this region are comparable to or distinct from those postulated for the PRC.

The pattern in the PHC (SRSI-T>SROI-T; ORSI-T>OROI-T) suggests a general response to scene interference, as well as to scene recognition. Considering the activity in PHC across the MTS paradigm (Fig. 5c), all conditions exhibited substantial activity increases from interference to retrieval, with this difference being greatest for SRSI and SROI. Notably, however, the object, but not scene, interference conditions were associated with sizeable PHC activity decreases during the interference phase (SROI-I; OROI-I), perhaps reflecting a suppression effect of presenting repeating scenes. As such, it may be more appropriate to describe the current PHC effect as an interplay among spatial repetition suppression, spatial interference, and spatial memory. Nevertheless, these data are consistent with views proposing dissociable roles for the PRC and PHC in item/object and context/spatial memory, respectively (Ranganath, 2010; Staresina et al., 2011).

In conclusion, we have provided novel fMRI evidence that sheds light on the contribution of the PRC to recognition memory. As our data indicate that activity in the PRC during object recognition is sensitive to visual object, but not spatial interference, they support a hierarchical–representational account, which characterizes PRC function according to the type and complexity of the memoranda, as opposed to the underlying processes that support remembering. Finally, our findings are consistent with the suggestion that amnesia after brain damage may be better understood, not only in terms of the loss of specific mnemonic processes (e.g., encoding/retrieval) but also in terms of the type of visual information that may be represented in the damaged region and the degree to which relevant visual interference has occurred (Cowell et al., 2010).

Footnotes

This work was funded by the Wellcome Trust (#802315) and the Natural Sciences and Engineering Research Council of Canada (#412309-2011, #402651-2011). We thank our colleagues at the Oxford Centre for Functional MRI of the Brain for help and support during data acquisition.

References

- Badre D, Wagner AD. Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia. 2007;45:2883–2901. doi: 10.1016/j.neuropsychologia.2007.06.015.

- Barense MD, Gaffan D, Graham KS. The human medial temporal lobe processes online representations of complex objects. Neuropsychologia. 2007;45:2963–2974. doi: 10.1016/j.neuropsychologia.2007.05.023.

- Barense MD, Groen II, Lee ACH, Yeung LK, Brady SM, Gregori M, Kapur N, Bussey TJ, Saksida LM, Henson RN. Intact memory for irrelevant information impairs perception in amnesia. Neuron. 2012;75:157–167. doi: 10.1016/j.neuron.2012.05.014.

- Bartko SJ, Cowell RA, Winters BD, Bussey TJ, Saksida LM. Heightened susceptibility to interference in an animal model of amnesia: impairment in encoding, storage, retrieval—or all three? Neuropsychologia. 2010;48:2987–2997. doi: 10.1016/j.neuropsychologia.2010.06.007.

- Beckmann CF, Smith SM. Probabilistic independent component analysis for functional magnetic resonance imaging. IEEE Trans Med Imaging. 2004;23:137–152. doi: 10.1109/TMI.2003.822821.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in FMRI. Neuroimage. 2003;20:1052–1063. doi: 10.1016/S1053-8119(03)00435-X.

- Bird CM, Burgess N. The hippocampus and memory: insights from spatial processing. Nat Rev Neurosci. 2008;9:182–194. doi: 10.1038/nrn2335.

- Bowles B, Crupi C, Mirsattari SM, Pigott SE, Parrent AG, Pruessner JC, Yonelinas AP, Köhler S. Impaired familiarity with preserved recollection after anterior temporal-lobe resection that spares the hippocampus. Proc Natl Acad Sci U S A. 2007;104:16382–16387. doi: 10.1073/pnas.0705273104.

- Brown MW, Warburton EC, Aggleton JP. Recognition memory: Material, processes and substrates. Hippocampus. 2010;20:1228–1244. doi: 10.1002/hipo.20858.

- Bussey TJ, Saksida LM, Murray EA. Perirhinal cortex resolves feature ambiguity in complex visual discriminations. Eur J Neurosci. 2002;15:365–374. doi: 10.1046/j.0953-816x.2001.01851.x.

- Corbetta M, Tansy AP, Stanley CM, Astafiev SV, Snyder AZ, Shulman GL. A functional MRI study of preparatory signals for spatial location and objects. Neuropsychologia. 2005;43:2041–2056. doi: 10.1016/j.neuropsychologia.2005.03.020.

- Cowell RA, Bussey TJ, Saksida LM. Functional dissociations within the ventral object processing pathway: cognitive modules or a hierarchical continuum? J Cogn Neurosci. 2010;22:2460–2479. doi: 10.1162/jocn.2009.21373.

- Davachi L. Item, context and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Greenhouse SW, Geisser S. On methods in the analysis of profile data. Psychometrika. 1959;24:95–112.

- Henson RN, Cansino S, Herron JE, Robb WG, Rugg MD. A familiarity signal in human anterior medial temporal cortex? Hippocampus. 2003;13:259–262. doi: 10.1002/hipo.10117.

- Hopfinger JB, Buonocore MH, Mangun GR. The neural mechanisms of top–down attentional control. Nat Neurosci. 2000;3:284–291. doi: 10.1038/72999.

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen K, Vainio P, Laakso MP, Pitkänen A. MR volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. AJNR Am J Neuroradiol. 1998;19:659–671.

- Jenkinson M. Fast, automated, N-dimensional phase-unwrapping algorithm. Magn Reson Med. 2003;49:193–197. doi: 10.1002/mrm.10354.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Jezzard P, Balaban RS. Correction for geometric distortion in echo planar images from B0 field variations. Magn Reson Med. 1995;34:65–73. doi: 10.1002/mrm.1910340111.

- Kumaran D, Maguire EA. Match mismatch processes underlie human hippocampal responses to associative novelty. J Neurosci. 2007;27:8517–8524. doi: 10.1523/JNEUROSCI.1677-07.2007.

- Lee ACH, Rudebeck SR. Human medial temporal lobe damage can disrupt the perception of single objects. J Neurosci. 2010;30:6588–6594. doi: 10.1523/JNEUROSCI.0116-10.2010.

- Lee ACH, Buckley MJ, Pegman SJ, Spiers H, Scahill VL, Gaffan D, Bussey TJ, Davies RR, Kapur N, Hodges JR, Graham KS. Specialization in the medial temporal lobe for processing of objects and scenes. Hippocampus. 2005;15:782–797. doi: 10.1002/hipo.20101.

- McTighe SM, Cowell RA, Winters BD, Bussey TJ, Saksida LM. Paradoxical false memory for objects after brain damage. Science. 2010;330:1408–1410. doi: 10.1126/science.1194780.

- Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229:782–784. doi: 10.1126/science.4023713.

- Munneke J, Heslenfeld DJ, Theeuwes J. Directing attention to a location in space results in retinotopic activation in primary visual cortex. Brain Res. 2008;1222:184–191. doi: 10.1016/j.brainres.2008.05.039.

- Murray EA, Bussey TJ, Saksida LM. Visual perception and memory: a new view of medial temporal lobe function in primates and rodents. Annu Rev Neurosci. 2007;30:99–122. doi: 10.1146/annurev.neuro.29.051605.113046.

- Norman KA. How hippocampus and cortex contribute to recognition memory: revisiting the complementary learning systems model. Hippocampus. 2010;20:1217–1227. doi: 10.1002/hipo.20855.

- Norman KA, O'Reilly RC. Modeling hippocampal and neocortical contributions to recognition memory: a complementary learning-systems approach. Psychol Rev. 2003;110:611–646. doi: 10.1037/0033-295X.110.4.611.

- Posner MI, Petersen SE. The attention system of the human brain. Annu Rev Neurosci. 1990;13:25–42. doi: 10.1146/annurev.ne.13.030190.000325.

- Preston AR, Bornstein AM, Hutchinson JB, Gaare ME, Glover GH, Wagner AD. High-resolution fMRI of content-sensitive subsequent memory responses in human medial temporal lobe. J Cogn Neurosci. 2010;22:156–173. doi: 10.1162/jocn.2009.21195.

- Ranganath C. A unified framework for the functional organization of the medial temporal lobes and the phenomenology of episodic memory. Hippocampus. 2010;20:1263–1290. doi: 10.1002/hipo.20852.

- Sakai K, Passingham RE. Prefrontal selection and medial temporal lobe reactivation in retrieval of short term verbal information. Cereb Cortex. 2004;14:914–921. doi: 10.1093/cercor/bhh050.

- Sakai K, Rowe JB, Passingham RE. Parahippocampal reactivation signal at retrieval after interruption of rehearsal. J Neurosci. 2002;22:6315–6320. doi: 10.1523/JNEUROSCI.22-15-06315.2002.

- Saksida LM, Bussey TJ. The representational–hierarchical view of amnesia: Translation from animal to human. Neuropsychologia. 2010;48:2370–2384. doi: 10.1016/j.neuropsychologia.2010.02.026.

- Schultz H, Sommer T, Peters J. Direct evidence for domain-sensitive functional subregions in human entorhinal cortex. J Neurosci. 2012;32:4716–4723. doi: 10.1523/JNEUROSCI.5126-11.2012.

- Smith SM. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy R, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Snodgrass JG, Corwin J. Pragmatics of measuring recognition memory: applications to dementia and amnesia. J Exp Psychol Gen. 1988;117:34–50. doi: 10.1037//0096-3445.117.1.34.

- Spitzer H, Desimone R, Moran J. Increased attention enhances both behavioral and neuronal performance. Science. 1988;240:338–340. doi: 10.1126/science.3353728.

- Staresina BP, Duncan KD, Davachi L. Perirhinal and parahippocampal cortices differentially contribute to later recollection of object- and scene-related event details. J Neurosci. 2011;31:8739–8747. doi: 10.1523/JNEUROSCI.4978-10.2011.

- Watson C, Andermann F, Gloor P, Jones-Gotman M, Peters T, Evans A, Olivier A, Melanson D, Leroux G. Anatomic basis of amygdaloid and hippocampal volume measurement by magnetic resonance imaging. Neurology. 1992;42:1743–1750. doi: 10.1212/wnl.42.9.1743.

- Woolrich MW, Behrens TE, Beckmann CF, Jenkinson M, Smith SM. Multilevel linear modelling for FMRI group analysis using Bayesian inference. Neuroimage. 2004;21:1732–1747. doi: 10.1016/j.neuroimage.2003.12.023.

- Woolrich MW, Jbabdi S, Patenaude B, Chappell M, Makni S, Behrens T, Beckmann C, Jenkinson M, Smith SM. Bayesian analysis of neuroimaging data in FSL. Neuroimage. 2009;45:S173–S186. doi: 10.1016/j.neuroimage.2008.10.055.

- Yonelinas AP, Aly M, Wang WC, Koen JD. Recollection and familiarity: Examining controversial assumptions and new directions. Hippocampus. 2010;20:1178–1194. doi: 10.1002/hipo.20864.