Abstract

Operators of a pair of robotic hands report a sense of ownership over those hands when they hold an image of a grasp motion and watch the robot perform it. We present a novel body ownership illusion that is induced merely by watching and controlling a robot's motions through a brain-machine interface (BMI). In past studies, body ownership illusions were induced by the correlation of sensory inputs such as vision, touch and proprioception. In the presented illusion, however, no sensory modality other than vision is integrated. Our results show that during BMI-operation of robotic hands, the interaction between motor commands and visual feedback of the intended motions is sufficient to incorporate the non-body limbs into one's own body. Our discussion focuses on the role of proprioceptive information in the mechanism of agency-driven illusions. We believe that our findings will contribute to the improvement of tele-presence systems in which operators incorporate BMI-operated robots into their body representations.

The mind-body relationship has always been an appealing question to human beings. How we identify our body and use it to perceive our “self” is an issue that has fascinated many philosophers and psychologists throughout history. But only in the past few decades has it become possible to empirically investigate the mechanism of self-perception.

Our recently developed human-like androids have become new tools for investigating how humans perceive their own “body” and relate it to their “self”. Operators of these tele-operated androids report unusual feelings of being transformed into the robot's body1. This sensation of owning a non-body object, generally called the “illusion of body ownership transfer”, was first scientifically reported as the “rubber hand illusion” (RHI)2. Following RHI, many researchers have studied the conditions under which the illusion of body ownership transfer can be induced3,4. In these works, the feeling of owning a non-body object was mainly examined by manipulating sensory inputs (vision, touch, proprioception) that are congruently supplied to the subject from his or her own body and a fake body. In previously reported illusions, the correlation of at least two channels of sensory information was indispensable. The illusion was either passively evoked by synchronized visuo-tactile2,3,4 or tactile-proprioceptive5 stimulation, or evoked by synchronized visuo-proprioceptive6 stimulation in voluntarily performed actions.

However, the question remains whether body-ownership illusions can be induced without the correlation of multiple sensory modalities. We are specifically interested in the role of sensory inputs in evoking motion-involved illusions. In such illusions, which are aroused by triggering a sense of agency toward the actions of a fake body, at least two afferent signals (vision and proprioception) need to be integrated with efferent signals to generate a coherent self-representation. Walsh et al. recently discussed the contribution of proprioceptive feedback to the induction of the ownership illusion for an anesthetized moving finger6. They focused on the exclusive role of the sensory receptors in muscles by eliminating the cutaneous signals from skin and joints. Unlike that study, in this work we hypothesized that even in the absence of proprioceptive feedback, the match between efferent signals and visual feedback alone can trigger a sense of agency toward the robot's motion and therefore induce the illusion of body ownership for the robot's body.

In this study, we employed a BMI system to translate the operator's thoughts into the robot's motions and removed the proprioceptive updates of real motions from the operators' sensations. BMIs were primarily developed as a promising technology for future prosthetic limbs. To that end, the incorporation of these devices into a patient's body representation also becomes an important consideration. Investigation on monkeys has shown that bidirectional communication in a brain-machine-brain interface contributes to the incorporation of a virtual hand in a primate's brain circuitry7. Such closed-loop multi-channel BMIs, which deliver visual feedback together with other sensory information such as cortically microstimulated tactile or proprioceptive-like signals, are conceived as the future generation of prosthetics that feel and act like a human body8. Unfortunately, such designs demand invasive approaches that are risky and costly with human subjects. We must therefore explore, at the level of experimental studies, how non-invasive operation can incorporate human-like limbs into the body representation.

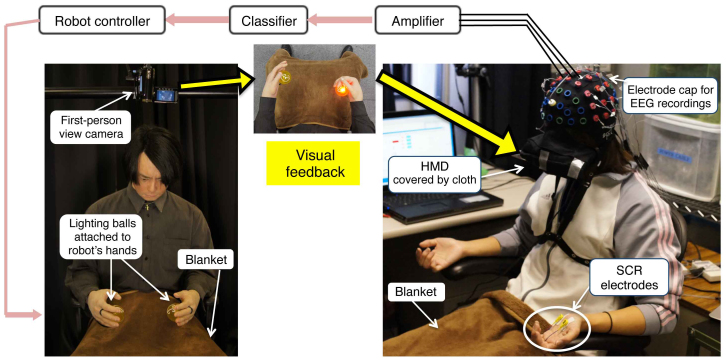

In our experiments, subjects conducted a set of motor imagery tasks of right or left grasp, and their online EEG signals were classified into two classes of right or left hand motions performed by a robot. During tele-operation, they wore a head-mounted display and watched real time first-perspective images of the robot's hands (Figure 1).

Figure 1. Experiment setup.

EEG electrodes placed over the subject's sensorimotor cortex recorded brain activity during motor imagery tasks. Subjects wore a head-mounted display through which they had a first-person view of the robot's hands. They received cues from balls that lit up in front of the robot's hands and held grasp images for their own corresponding hands. The classifier detected two classes of results (right or left) and sent a motion command to the robot's hand. SCR electrodes, attached to the subject's left hand, measured physiological arousal during the session. Identical blankets were laid on both the robot's and the subject's legs so the background views were the same.

The aroused sense of body ownership in the operators was evaluated in terms of subjective assessments and physiological reactions to an injection given to the robot's body at the end of tele-operation sessions.

Results

Forty subjects participated in our BMI-operation experiment. They operated a robot's hands while watching them through a head-mounted display. Each participant performed the following two randomly conditioned sessions:

1. Still condition: The robot's hands did not move at all, even though subjects performed the imagery task and tried to operate the hands. (This was the control condition).

2. Match condition: Based on the classification results, the robot's hands performed a grasp motion, but only when the result was correct. If the subject missed a trial, the hands did not move.

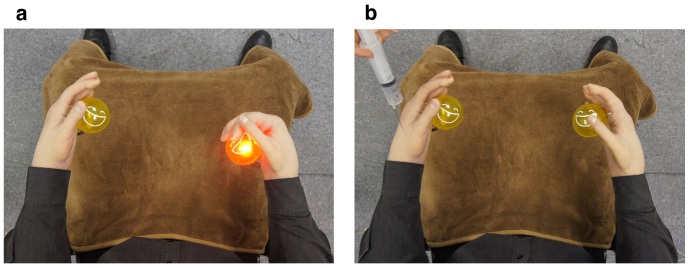

The subjects were unaware of the condition setups. In both sessions, they were told that precise performance of the motor imagery would produce a robot motion. To ease imagination and give a visual cue for the motor imagery tasks, two balls were placed in front of the robot's hands and lit up randomly to indicate which hand the subjects were required to move (Figure 2a). At the end of each test session, a syringe was inserted into the thenar muscle of the robot's left hand (Figure 2b). Immediately after the injection, the session was terminated and participants were asked the following questions: Q1) When the robot's hand was injected, did it feel as if your own hand was receiving the injection? Q2) Throughout the entire session while you were operating the robot's hands, did it feel as if they were your own hands? Participants' answers to Q1 and Q2 were scored on a seven-point Likert scale, where 1 denoted “didn't feel anything at all” and 7 denoted “felt very strongly”.

Figure 2. Participant's view in HMD.

(a) Robot's right hand grasped lighted ball based on classification results of subject's EEG patterns. (b) Robot's left hand received injection at the end of each test session, and subject reactions were subjectively and physiologically evaluated.

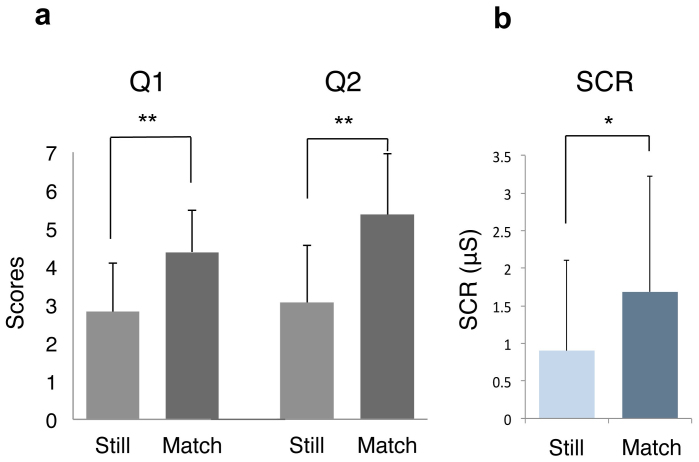

The acquired scores for each condition were averaged and compared within subjects by paired t-test (Figure 3a). The Q1 scores differed significantly between the Match (M = 4.38, SD = 1.51) and Still (M = 2.83, SD = 1.43) conditions [Match > Still, p = 0.0001, t(39) = −4.33]. Similarly, there was a significant difference in the Q2 scores between Match (M = 5.15, SD = 1.10) and Still (M = 2.93, SD = 1.25) [Match > Still, p = 2.75 × 10^−12, t(39) = −9.97].

Figure 3. Evaluation results.

(a) Participants answered Q1 and Q2 immediately after watching injections. Q1) When robot's hand was given a shot, did it feel as if your own hand was being injected? Q2) Throughout the session while you were performing the task, did it feel as if the robot's hands were your own hands? Mean score values and standard deviations for each condition were plotted. Significant difference between conditions (**p < 0.001; paired t-test) was confirmed. (b) SCR peak value after injection was assigned as reaction value. Mean reaction values and standard deviations were plotted, and results show significant differences between conditions (*p < 0.01; paired t-test).

In addition to the self-assessment, we physiologically measured the body ownership illusion by recording skin conductance responses (SCR). We evaluated the SCR recordings of only 34 participants; six participants were excluded from the analysis because their responses remained unchanged throughout the experiment. The peak response value9 within a 6-second interval was selected as the SCR reaction value [see Methods]. The SCR reaction values differed significantly between Match (M = 1.68, SD = 1.98) and Still (M = 0.90, SD = 1.42) [Match > Still, p = 0.002, t(33) = −3.29], although the subject responses were spread over a large range of values (Figure 3b).

Discussion

From both the questionnaire and SCR results, we can conclude that the operator reactions to a painful stimulus (injection) were significantly stronger in the Match condition, in which the robot's hands followed the operator's intentions. This reaction is evidence for the body ownership illusion and supports our hypothesis. We showed that body ownership transfer to the robot's moving hands can be induced without the proprioceptive feedback from an operator's actual movements. This is the first report of body ownership transfer to a non-body object that is induced without integration among multiple afferent inputs. In the presented illusion, a correlation between efferent information (the operator's plan of motion) and a single channel of sensory input (visual feedback of the intended motion) was enough to trigger the illusion of body ownership. Since this illusion occurs in the context of action, we presume that the sense of ownership for the robot's hand is modulated by the sense of agency generated for the robot hand motions. Although all participants were perfectly aware that the congruently placed hands they were watching through the HMD were non-body human-like objects, the explicit sense that “I am the one causing the motions of these hands” and the life-long experience of performing motions with their own bodies modulated the sense of body ownership toward the robot's hands and provoked the illusion.

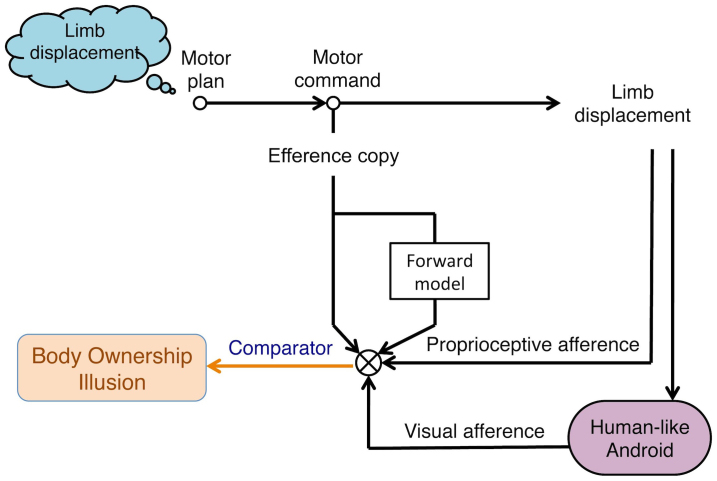

The original ownership illusion for tele-operated androids1 (mentioned at the beginning of this paper) was a visuo-proprioceptive illusion due to motion synchronization between the operator and the robot's body10. The mechanism behind this illusion can be explained based on a cognitive model that integrates one's body to oneself in a self-generated action11. When operators move their bodies and watch the robot copying them, the match between the motion commands (efferent signals carrying both raw and processed predictive information) and the sensory feedback from the motion (visual afference from the robot's body and proprioceptive afference from the operator's body) modulates a sense of agency over the robot's actions and ultimately results in the integration of the robot's body into the operator's sense of self-body (Figure 4). In this paper, we particularly targeted the role of proprioception in this model and showed that our presented mechanism remains valid even when the proprioceptive feedback channel is blocked from being updated.

Figure 4. Body recognition mechanism.

Explanation of mechanism of body ownership illusion to an operated robotic body is based on Tsakiris cognitive model for self-recognition. During tele-operation of a very human-like android, match between efferent signals of a motor intention and afferent feedback of the performed motion (proprioceptive feedback from operator's body and visual feedback from robot's body) yields illusion that robot's body belongs to the operator. However, the role of proprioceptive feedback in modulation of such feelings has never been completely clarified. This work confirms that the body ownership illusion was elicited without proprioceptive feedback and by modulation of only motor commands and visual inputs.

An important element associated with the occurrence of the illusion in the new paradigm is probably the attention subjects paid to the operation task. Motor imagery is a difficult process that requires increased attention and concentration. Subjects focus on picturing a grasp motion to cause action, and their selective attention to the visual feedback of movement can play a noticeable role in filtering out such distracting information as the different size and texture of the visible hands, the delay between the onset of motor imagery and the robot's motions, and the subconscious sense of the real hand positions.

On the other hand, the exclusively significant role of visual feedback in the process of body attribution is disputable, since the cutaneous signals of the subject's real body were not blocked. This may reflect the effect of the movement-related gating of sensory signals before and during the execution of self-generated movements. In a voluntary movement, feed-forward efference copy signals predict the expected outcome of the movement. This expectation modulates the incoming sensory input and reduces the transmission of tactile inputs12. Such attenuation of sensory inputs has also been reported at the motor planning stage13, and has been found in the neural patterns of primates prior to the actual onset of voluntary movements14,15. These findings support the idea of the premovement elevation of a tactile perception threshold during motor imagery tasks. Therefore, the suppression or the gating of peripheral signals may have enhanced the relative salience of other inputs, which in this case are visual signals.

Although in this work we discuss motor-visual interaction based on a previously introduced cognitive mechanism of body recognition, evidence exists that the early stages of movement preparation not only occur in the brain's motor centers but may also occur simultaneously in the spinal levels16. This suggests that even in the absence of a subject's movement, further sensory circuitry may in fact be engaged in the presented mechanism at the level of motor planning.

Correspondingly, although subjects were strictly instructed not to perform grasp motions with their own hands, it is probable that a few of them occasionally and involuntarily contracted their upper arm muscles. Such a possibility calls into question the complete cancellation of proprioceptive feedback. In the future, further experiments with EMG recordings are required to improve the consistency of our results by excluding participants whose performance involved muscle activity.

Finally, from the observations of this experiment and many other studies, we conclude that for inducing illusions of body transfer, the congruence between only two channels of information, either efferent or afferent, is sufficient to integrate a non-body part to one's own body, regardless of the context in which the body transfer experience occurs. In passive or externally generated experiences, the integration of two sensory modalities from both non-body and body parts was indispensable. However, in voluntary actions, since efferent signals play a critical role in the recognition of one's own motions, their congruence with only a single channel of visual feedback from non-body part motions was adequate to override the internal mechanism of body ownership.

Methods

This experiment was conducted with the approval of the Ethics Review Board of Advanced Telecommunications Research Institute International (ATR), Kyoto, Japan. Approval ID: 11-506-1.

Subjects

We selected 40 healthy participants (26 males, 14 females) aged 18 to 28 (M = 21.13, SD = 1.92), most of whom were university students. Thirty-eight were right-handed and two were left-handed. All were naïve to the research topic. In accordance with the instructions approved by the ethical review, the subjects received an explanation of the experiment and signed a consent form. At the end, all participants were paid for their participation.

EEG recording

Subjects' cerebral activities were recorded by g.USBamp biosignal amplifiers developed at Guger Technologies (Graz, Austria). Subjects wore an electrode cap, and 27 EEG electrodes were installed over their primary sensorimotor cortex. The electrode placement was based on the 10–20 system. The reference electrode was placed on the right ear and the ground electrode on the forehead.

Classification

The acquired data were processed online under Simulink/MATLAB (Mathworks) for real-time parameter extraction. This process included bandpass filtering between 0.5 and 30 Hz, sampling at 128 Hz, removing power-line artifacts with a notch filter at 60 Hz, and applying the common spatial pattern (CSP) algorithm to discriminate the Event Related Desynchronization (ERD) and Event Related Synchronization (ERS) patterns associated with the motor imagery task17. Results were classified with weight vectors that weighted each electrode based on its importance for the discrimination task and suppressed the noise in individual channels by using the correlations between neighboring electrodes. During each right or left imagery movement, the decomposition of the associated EEG led to a new time series, which was optimal for the discrimination of the two populations. The patterns were designed such that the signal obtained by filtering the EEG with CSP had maximum variance for the left trials and minimum variance for the right trials, and vice versa. In this way, the difference between the left and right populations was maximized, and the only information contained in these patterns was where the EEG variance fluctuated the most between the two conditions. Finally, once the discrimination between left and right imagery was made, the classification block output a linear array signal in the range of [−1,1], where −1 denotes the extreme left and 1 denotes the extreme right.
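The variance contrast described above can be illustrated with a minimal Python sketch. It does not compute the CSP filters themselves and is not the Simulink/MATLAB pipeline used in the experiment; it assumes that two spatially filtered time series are already available, one tuned to have high variance for left trials and one for right trials. The function names and the use of `tanh` to bound the score to [−1, 1] are illustrative assumptions.

```python
import math
from statistics import pvariance


def log_variance(series):
    """Log of the variance of one spatially filtered time series.

    Assumes the series is not constant (variance > 0).
    """
    return math.log(pvariance(series))


def lateralized_output(left_tuned, right_tuned):
    """Map two filtered series to a score in [-1, 1].

    left_tuned:  output of a (hypothetical) spatial filter whose variance
                 is largest during left-hand imagery.
    right_tuned: output of a filter whose variance is largest during
                 right-hand imagery.
    Negative scores point toward "left", positive toward "right".
    The tanh squashing is an assumption made for this sketch only.
    """
    contrast = log_variance(right_tuned) - log_variance(left_tuned)
    return math.tanh(contrast)


if __name__ == "__main__":
    strong = [-2.0, 2.0, -2.0, 2.0]
    weak = [-0.5, 0.5, -0.5, 0.5]
    print(lateralized_output(strong, weak))  # negative: left-tuned series dominates
    print(lateralized_output(weak, strong))  # positive: right-tuned series dominates
```

The sign convention follows the text: values near −1 indicate left and values near 1 indicate right.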

Motor imagery task

Participants imagined a grasp or squeeze motion for their own hand. In both the training and experiment sessions, a visual cue specified the timing and the hand for which they were supposed to hold the image.

Training

Participants practiced the motor imagery task to move a feedback bar on a computer screen to the left or right. They sat in a comfortable chair in front of a 15-inch laptop computer and remained motionless. The first run consisted of 40 trials conducted without feedback. They watched a cue of an arrow randomly pointing to the left or right, and imagined a gripping or squeezing motion for the corresponding hand. Each trial lasted 7.5 seconds and started with the presentation of a fixation cross on the display. Two seconds later an acoustic warning “beep” was given. From 3 to 4.25 seconds, an arrow pointing to the left or right was shown. Depending on its direction, the participants were instructed to perform motor imagery. They continued the imagery task until the screen content was erased (7.5 seconds). After a short pause the next trial started. The recorded brain activities in this non-feedback run were used to set up a subject-specific classifier for the following feedback runs. In the feedback runs, participants performed similar trials; however, after the appearance of the arrow and the execution of the motor imagery task, the classification results were shown as a horizontal feedback bar on the screen. The subjects' task was to start the imagery immediately after the arrow appeared and to keep the feedback bar extended in the same direction for as long as possible. Both the feedback and non-feedback runs consisted of 40 randomly presented trials with 20 trials per class (left/right).
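As an illustration of the run structure described above, the following Python sketch builds a balanced, randomly ordered run of 40 cues and lists the timed events within one 7.5-second trial. The timing constants come from the text; the function names, event labels and the seeded random generator are assumptions made for the sketch, and the length of the pause between trials is not specified in the paper, so it is left out.

```python
import random

# Timing of one training trial, in seconds, as stated in the text.
TRIAL_DURATION_S = 7.5
BEEP_S = 2.0
CUE_ON_S = 3.0
CUE_OFF_S = 4.25


def make_run(n_trials=40, seed=None):
    """Return a shuffled list of cue labels with equal counts per class."""
    if n_trials % 2 != 0:
        raise ValueError("n_trials must be even to balance left/right")
    cues = ["left"] * (n_trials // 2) + ["right"] * (n_trials // 2)
    random.Random(seed).shuffle(cues)
    return cues


def trial_events(cue):
    """Return (time_s, event) pairs for a single trial with the given cue."""
    return [
        (0.0, "fixation cross shown"),
        (BEEP_S, "warning beep"),
        (CUE_ON_S, f"arrow pointing {cue} shown"),
        (CUE_OFF_S, "arrow removed"),
        (TRIAL_DURATION_S, "screen cleared, imagery ends"),
    ]


if __name__ == "__main__":
    run = make_run(seed=0)
    for time_s, event in trial_events(run[0]):
        print(f"{time_s:5.2f} s  {event}")
```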

Participants performed two training sessions with feedback until they became familiar with the motor imagery task. We recorded the subject performances during each session to evaluate their improvement. At the end of the training sessions, most participants reached a performance of 60 to 100%.

Experiment setup

Subjects wore a head-mounted display (Vuzix iWear VR920) through which they had a first-person view of the robot's hands. Since this HMD design does not shield the eyes from ambient light, we wrapped a piece of cloth around the HMD frame to block the surrounding light. Two balls that could be lit were placed in front of the robot's hands to simplify the imagery task during the experiment sessions. Participants received visual cues when the balls lit up randomly and held grasp images for their corresponding hand. The classifier detected two classes of results (right or left) from the EEG patterns and sent a motion command to the robot's hand. Identical blankets were laid on both the robot's and the subject's legs so that the background view of the robot and subject bodies was identical.

We attached SCR electrodes to the subject's hand and measured the physiological arousal during each session. A bio-amplifier recording device (Polymate II AP216, TEAC, Japan) with a sampling rate of 1000 Hz was used for the SCR measurements. Participants rested their hands with palms up on the chair arms, and SCR electrodes were placed on the thenar and hypothenar eminences of their left palm. Since the robot's hands were shaped in an inward position for the grasping motion, the participants tended to alter their hand position to an inward posture that resembled the robot's hands. However, since this might complicate the SCR readings and give the participants the space and comfort to perform unconscious gripping motions during the task, we asked them to keep their hands and elbows motionless on the chair arms with their palms up.

Testing

Participants practiced operating the robot's hand by motor imagery in one session and then performed two test sessions. All sessions consisted of 20 imagery trials. Test sessions were randomly conditioned as “Still” and “Match”. In the former condition, the robot's hands did not move at all, even though the subjects performed imagery tasks based on the cue stimulus and expected the robot to move. In the Match condition, the robot's hands performed a grasp motion, but only in those trials whose classification results were correct, that is, identical to the cue. If subjects made a mistake during a trial, the robot's hands did not move.
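The rule that decides whether the robot moves in each trial can be summarized in a few lines of Python. This is only a sketch of the logic stated above, not the control software used in the experiment; the function name and the string labels are illustrative assumptions.

```python
def robot_command(condition, cue, classified):
    """Return the hand the robot should grasp with, or None for no motion.

    condition:  "Still" or "Match" (the two test conditions).
    cue:        "left" or "right", the hand indicated by the lit ball.
    classified: "left" or "right", the classifier's result for the trial.
    """
    if condition == "Still":
        # Control condition: the hands never move.
        return None
    if condition == "Match":
        # The hand moves only when the classification agrees with the cue.
        return cue if classified == cue else None
    raise ValueError(f"unknown condition: {condition!r}")


if __name__ == "__main__":
    print(robot_command("Match", "left", "left"))   # left
    print(robot_command("Match", "left", "right"))  # None
    print(robot_command("Still", "left", "left"))   # None
```

Under this rule the operator never sees the robot perform a motion that contradicts the cued intention; a misclassified trial simply produces no movement.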

At the end of each test session, a syringe was inserted into the thenar muscle of the robot's left hand, which corresponded to the subject's hand on which the SCR electrodes had been placed. We slowly moved the syringe toward the robot's hand, taking about two seconds from the moment it appeared in the participant's view until it touched the robot's skin. Immediately after the injection, the session was terminated and participants were orally asked the following two questions: Q1) When the robot's hand was given a shot, did it feel as if your own hand was being injected? Q2) Throughout the session while you were performing the task, did it feel as if the robot's hands were your own hands? They scored each question on a seven-point Likert scale, where 1 was “didn't feel at all” and 7 was “felt very strongly”.

SCR measurements

The peak value of the responses to injection was selected as a reaction value. Generally SCRs start to rise 1 ~ 2 seconds after a stimulus and end 5 seconds after that9. The moment at which the syringe appeared in the participant's view was selected as the starting point for the evaluations, because some participants reacted to the syringe itself even before it was inserted into the robot's hands as a result of the body ownership illusion10. Therefore, SCR peak values were sought within an interval of 6 seconds: 1 second after the syringe appeared in the participant's view (1 second before it was inserted) to 5 seconds after the injection was actually made.
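The 6-second search window can be sketched in Python as follows. The window boundaries (1 second before insertion to 5 seconds after it) follow the text; everything else is an assumption made for illustration, including the function name, the representation of the recording as a plain list of samples, and taking the raw maximum as the peak, since the text does not state how a baseline was handled.

```python
def scr_peak(samples, fs_hz, t_insert_s, pre_s=1.0, post_s=5.0):
    """Return the largest sample in the window around the injection.

    samples:    SCR recording as a sequence of numbers.
    fs_hz:      sampling rate in Hz (1000 Hz in the experiment).
    t_insert_s: time of syringe insertion, in seconds from recording start.
    pre_s:      window start, seconds before insertion (1 s in the text).
    post_s:     window end, seconds after insertion (5 s in the text).
    """
    start = max(0, round((t_insert_s - pre_s) * fs_hz))
    stop = min(len(samples), round((t_insert_s + post_s) * fs_hz))
    if start >= stop:
        raise ValueError("window lies outside the recording")
    return max(samples[start:stop])


if __name__ == "__main__":
    fs = 10  # low rate to keep the toy example small
    recording = [0.0] * 200
    recording[30] = 5.0   # 3.0 s: before the window, ignored
    recording[55] = 2.0   # 5.5 s: inside the window
    recording[120] = 9.0  # 12.0 s: after the window, ignored
    print(scr_peak(recording, fs, t_insert_s=5.0))  # 2.0
```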

Data analysis

We averaged and compared the acquired scores and the SCR peak values for each condition within subjects. Statistical analysis was carried out by paired t-test. A significant difference between two conditions was revealed in Q1 and Q2 (Match > Still, p < 0.001), and the SCR responses (Match > Still, p < 0.01).
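For reference, the paired t statistic used in this comparison can be computed as in the Python sketch below. It uses only the standard library and returns the t value and degrees of freedom; the p-value is omitted because the standard library has no t distribution. The function name and the toy scores are illustrative and are not data from the experiment.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Return (t, degrees_of_freedom) for a paired t-test.

    t is computed on the per-subject differences first[i] - second[i].
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long samples of at least 2 values")
    diffs = [a - b for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


if __name__ == "__main__":
    still = [1, 2, 3, 4]   # toy scores, not experimental data
    match = [2, 4, 5, 5]
    t, df = paired_t(still, match)
    print(f"t({df}) = {t:.2f}")
```

With the differences taken as Still minus Match, higher Match scores give a negative t. This would be consistent with the negative t values reported in the Results, although the paper does not state the subtraction order.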

Author Contributions

M.A. wrote the main manuscript text, S.N. and H.I. reviewed the manuscript.

Acknowledgments

This work was supported by Grant-in-Aid for Scientific Research, KAKENHI (25220004) and KAKENHI (24650114).

References

1. Nishio S. & Ishiguro H. Android science research for bridging humans and robots. Trans IEICE. 91, 411–416 (2008) (in Japanese).

2. Botvinick M. Rubber hands feel touch that eyes see. Nature 391, 756 (1998).

3. Ehrsson H. H. The experimental induction of out-of-body experiences. Science 317, 1048 (2007).

4. Petkova V. I. & Ehrsson H. H. If I were you: perceptual illusion of body swapping. PLoS One 3, e3832 (2008).

5. Ehrsson H. H., Holmes N. P. & Passingham R. E. Touching a rubber hand: feeling of body ownership is associated with activity in multisensory brain areas. J Neurosci. 25, 10564–10573 (2005).

6. Walsh L. D., Moseley G. L., Taylor J. L. & Gandevia S. C. Proprioceptive signals contribute to the sense of body ownership. J Physiol. 589, 3009–3021 (2011).

7. O'Doherty J. E. et al. Active tactile exploration using a brain-machine-brain interface. Nature 479, 228–231 (2011).

8. Lebedev M. A. & Nicolelis M. A. Brain-machine interfaces: past, present and future. Trends Neurosci. 29, 536–546 (2006).

9. Armel K. C. & Ramachandran V. S. Projecting sensations to external objects: Evidence from skin conductance response. Proc Biol Sci. 270, 1499–1506 (2003).

10. Watanabe T., Nishio S., Ogawa K. & Ishiguro H. Body ownership transfer to android robot induced by teleoperation. Trans IEICE. 94, 86–93 (2011) (in Japanese).

11. Tsakiris M., Haggard P., Frank N., Mainy N. & Sirigu A. A specific role for efferent information in self-recognition. Cognition 96, 215–231 (2007).

12. Chapman C. E., Jiang W. & Lamarre Y. Modulation of lemniscal input during conditioned arm movements in the monkey. Exp Brain Res. 72, 316–334 (1988).

13. Voss M., Ingram J. N., Wolpert D. M. & Haggard P. Mere Expectation to Move Causes Attenuation of Sensory Signals. PLoS ONE 3, e2866 (2008).

14. Lebedev M. A., Denton J. M. & Nelson R. J. Vibration-entrained and premovement activity in monkey primary somatosensory cortex. J Neurophysiol. 72, 1654–1673 (1994).

15. Seki K. & Fetz E. E. Gating of sensory input at spinal and cortical levels during preparation and execution of voluntary movement. J Neurosci. 32, 890–902 (2012).

16. Prut Y. & Fetz E. E. Primate spinal interneurons show pre-movement instructed delay activity. Nature 401, 590–594 (1999).

17. Neuper C., Muller-Putz G. R., Scherer R. & Pfurtscheller G. Motor imagery and EEG-based control of spelling devices and neuroprostheses. Progress in Brain Research 159, 393–409 (2006).