Abstract

Objective

The aim of this study was to assess the benefit of having preserved acoustic hearing in the implanted ear for speech recognition in complex listening environments.

Design

The current study used a within-subjects, repeated-measures design including 21 English-speaking and 17 Polish-speaking cochlear implant recipients with preserved acoustic hearing in the implanted ear. The patients were implanted with electrodes that varied in insertion depth from 10 to 31 mm. Mean preoperative low-frequency thresholds (average of 125, 250 and 500 Hz) in the implanted ear were 39.3 and 23.4 dB HL for the English- and Polish-speaking participants, respectively. In one condition, speech perception was assessed in an 8-loudspeaker environment in which the speech signals were presented from one loudspeaker and restaurant noise was presented from all loudspeakers. In another condition, the signals were presented in a simulation of a reverberant environment with a reverberation time of 0.6 sec. The response measures included speech reception thresholds (SRTs) and percent correct sentence understanding for two test conditions: cochlear implant (CI) plus low-frequency hearing in the contralateral ear (bimodal condition) and CI plus low-frequency hearing in both ears (best aided condition). A subset of 6 English-speaking listeners was also assessed on measures of interaural time difference (ITD) thresholds for a 250-Hz signal.

Results

Small but significant improvements in performance (1.7–2.1 dB and 6–10 percentage points) were found for the best aided condition relative to the bimodal condition. Postoperative thresholds in the implanted ear were correlated with the degree of electric and acoustic stimulation (EAS) benefit for speech recognition in diffuse noise. Improvement in speech understanding in reverberation was not reliably related to either the audiometric threshold in the implanted ear or the elevation in threshold following surgery. There was a significant correlation between ITD threshold at 250 Hz and EAS-related benefit for the adaptive SRT.

Conclusions

Our results suggest that (i) preserved low-frequency hearing improves speech understanding for CI recipients, (ii) testing in complex listening environments, in which binaural timing cues differ for signal and noise, may best demonstrate the value of having two ears with low-frequency acoustic hearing, and (iii) preservation of binaural timing cues, albeit poorer than observed for individuals with normal hearing, is possible following unilateral cochlear implantation with hearing preservation and is associated with EAS benefit. Our results demonstrate significant communicative benefit for hearing preservation in the implanted ear and provide support for the expansion of cochlear implant criteria to include individuals with low-frequency thresholds in even the normal to near-normal range.

Keywords: reverberation, noise, cochlear implant, EAS, hearing preservation, bimodal, hybrid, interaural time difference (ITD)

Introduction

There is increasing interest in preservation of acoustic hearing with cochlear implantation, and multiple reports have demonstrated that it is feasible both with short electrodes and shallow insertion (e.g., Buchner et al., 2009; Gantz et al., 2009; Lenarz et al., 2009; Woodson et al., 2010) and with longer electrodes and deeper insertion (e.g., Gstoettner et al., 2008, 2009; Arnolder et al., 2010; Carlson et al., 2011; Helbig et al., 2011; Skarzyński et al., 2009, 2011; Obholzer and Gibson, 2011). The degree of hearing preservation has been shown to depend somewhat on the insertion depth of the array. Mean threshold elevation following cochlear implantation ranges from 10 to 25 dB for shorter electrode arrays (10 mm, Gantz et al., 2005, 2009; 16 mm, Lenarz et al., 2009) and from 10 to 40+ dB for longer electrode arrays (16 to 30+ mm, Gstoettner et al., 2008, 2009; Arnolder et al., 2010; Carlson et al., 2011; Helbig et al., 2011; Skarzyński et al., 2009, 2011; Obholzer and Gibson, 2011).

In the papers referenced above, however, there are reports of both complete hearing preservation and complete loss of residual hearing with both long and short electrodes. Many variables are thought to be associated with hearing preservation, including drug delivery, surgical approach, electrode array design and dimensions, and individual inflammatory response to trauma (e.g., Eshraghi, 2006; van de Water et al., 2010). It is still not clear which of these variables, in isolation or in combination, yields the highest rate of hearing preservation.

Individuals with hearing preservation have demonstrated performance comparable to that of bimodal listeners on tasks including monosyllabic word recognition as well as sentence recognition in quiet and in noise (Dorman et al., 2009). The reason is that in most clinical settings, including the conditions tested as part of the US FDA clinical trials of both the Nucleus Hybrid and the Med El Electric and Acoustic Stimulation (EAS) systems, speech and noise are presented from a single loudspeaker (Gantz et al., 2009; Woodson et al., 2010). Binaural acoustic hearing would provide little to no benefit in such listening conditions. On the other hand, if speech is presented from one loudspeaker in an array and noise is presented from the other loudspeakers that surround the listener, then hearing preservation patients should have an advantage over bimodal patients. This is because when speech and noise originate from different spatial locations, hearing preservation patients have the potential to use both interaural time difference (ITD) and interaural level difference (ILD) cues to separate the target from the noise. Unilaterally implanted patients with preserved acoustic hearing in the implanted ear will most likely be using bilateral low-frequency amplification. This is not thought to be problematic, as bilateral hearing aids, even when not synchronized, have been shown to transmit ITD cues and, to a lesser extent, ILD cues (Musa-Shufani et al., 2006).

Dunn et al. (2010) also reported significant benefit from adding acoustic hearing in the implanted ear for 11 recipients of the Hybrid S8 (10 mm, 6 electrodes). Spondee word recognition was assessed with an array of eight loudspeakers arranged in a 108-degree arc in front of the listener, in three conditions: bimodal (CI + contralateral acoustic), hybrid (CI + ipsilateral acoustic) and combined (CI + bilateral acoustic). Relative to the standard bimodal condition, adding acoustic hearing in the ipsilateral, implanted ear produced a significant 2-dB improvement in the SRT. That is, the best performance was observed with bilateral acoustic hearing in combination with the CI. The subjects in their study had short electrodes and considerable low-frequency acoustic hearing in the implanted ear.

Dorman and Gifford (2010) and Gifford et al. (2010) also reported significant benefit of ipsilateral acoustic hearing for 8 hearing preservation patients listening in a restaurant simulation with a high-level, diffuse noise (see also Rader et al., 2009). However, as in Dunn et al. (2010), the sample size was small, the patients had very good pre- and post-implant hearing thresholds, and all were implanted with a 10-mm electrode. Thus it is not clear whether patients with longer electrode arrays (up to 31 mm) and different levels of pre- and post-implant hearing would also benefit from preservation of acoustic hearing in the implanted ear.

In the two experiments reported here, we evaluated the speech recognition abilities of 38 hearing preservation patients in two complex listening environments. In the first, speech was presented from one loudspeaker of an 8-loudspeaker array that surrounded the patient, and restaurant noise was presented from all 8 loudspeakers at a level commonly found in restaurants (72 dBA). The second experiment used a similar loudspeaker configuration, but the sentences were processed to have a reverberation time (RT) of 0.6 seconds. We hypothesized that hearing preservation patients with binaural acoustic hearing would demonstrate significantly higher levels of speech perception in the best aided EAS condition (CI + bilateral acoustic hearing) than in the bimodal condition (CI + contralateral acoustic hearing, with the ipsilateral ear occluded), both in diffuse noise and for reverberant speech. For speech recognition in diffuse restaurant noise, preserved hearing in the implanted ear may provide access to interaural time difference (ITD) cues, allowing listeners to squelch the noise: the noise reaches the two ears with a range of interaural delays, whereas the speech signal reaches the two ears with a single, consistent interaural delay. For reverberant speech recognition, the direct sound likewise arrives at the two ears with a single interaural delay, whereas the reflections arrive with various interaural delays. Since hearing preservation in the implanted ear may give the listener access to ITD cues, we hypothesized that listeners would be able to squelch the reflections, yielding higher levels of speech perception in the best aided EAS condition.
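The interaural-delay argument can be made concrete with a rough calculation. The sketch below estimates, for each of the eight loudspeaker positions (45-degree spacing), the ITD predicted by Woodworth's classic spherical-head approximation, ITD ≈ (a/c)(θ + sin θ), where θ is the source's lateral angle. The head radius a = 8.75 cm, the speed of sound c = 343 m/s, and the function name are illustrative assumptions; the model treats the source as distant (the loudspeakers in this study are only 60 cm away) and ignores the frequency dependence of real ITDs, so the values are approximate.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average adult head radius (illustrative)
SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C

def woodworth_itd(azimuth_deg: float) -> float:
    """Approximate ITD in seconds for a distant source (Woodworth spherical-head model).

    Azimuth is measured clockwise from straight ahead (0 deg). Positive values mean
    the sound reaches the right ear first. Rear positions are folded onto the
    front hemifield (lateral angle), because a sphere cannot tell front from back.
    """
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))  # in [-pi/2, pi/2]
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (lateral + math.sin(lateral))

if __name__ == "__main__":
    for az in range(0, 360, 45):  # the eight loudspeaker positions
        print(f"{az:3d} deg: {woodworth_itd(az) * 1e6:7.1f} microseconds")
```

Under these assumptions the target loudspeaker at 0° gives an ITD of zero, whereas noise from the other positions spans roughly ±656 µs (about ±381 µs at ±45°), which is the kind of target-versus-noise interaural difference the hypotheses rely on.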

Experiment 1: Sentence recognition in a restaurant-noise environment

Participants

Our test sample included 21 English-speaking participants and 17 Polish-speaking participants. All participants had preserved acoustic hearing in the implanted ear. In addition to the 38 listeners with cochlear implants, data for 16 listeners with normal hearing were also obtained for normative purposes. Of the listeners with normal hearing, 10 were English speaking (mean age = 28.8 years) and 6 were Polish speaking (mean age = 32.0 years).

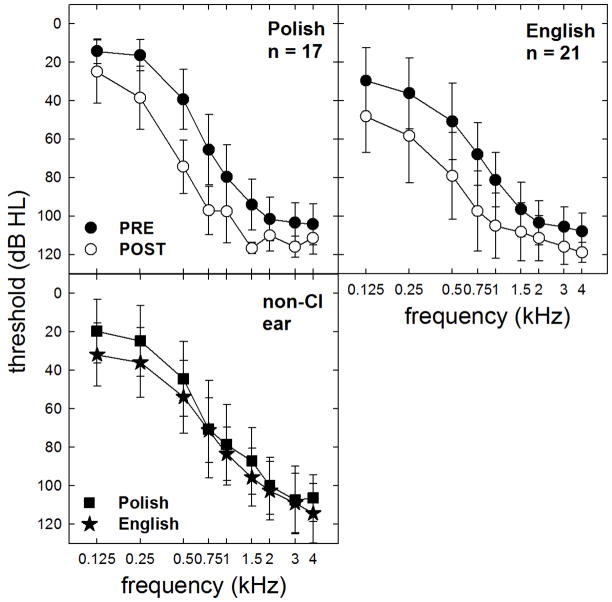

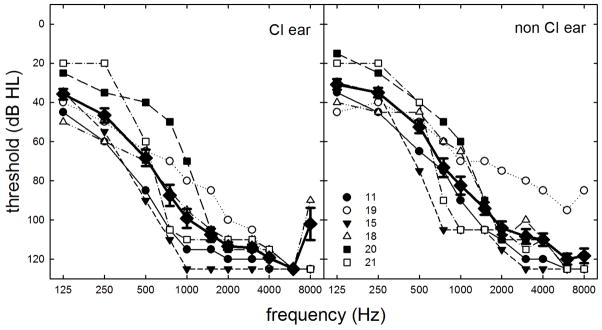

The pre- and post-operative audiometric thresholds for the implanted ear as well as the non-implanted ear are shown in Figure 2. The mean degree of low-frequency threshold shift averaged across 125, 250 and 500 Hz was 22.0 and 22.6 dB for the English- and Polish-speaking participants, respectively. Statistical analysis revealed no difference in the degree of postoperative threshold elevation between the Polish- and English-speaking groups (F(1, 36) = 0.31, p = 0.60), though the Polish participants did have lower (i.e., better) preoperative audiometric thresholds in the implanted ear than the English-speaking participants (F(1, 36) = 11.6, p = 0.002). Thresholds in the non-implanted ear, however, were not significantly different between the Polish- and English-speaking groups on the date of testing (F(1, 36) = 2.7, p = 0.12).

FIGURE 2.

Mean pre- and post-implant audiometric thresholds for the implanted ears of the Polish- and English-speaking participants are shown as filled and unfilled circles, respectively. Mean thresholds for the non-implanted ears of the Polish (filled squares) and English (filled stars) participants are also displayed. Error bars represent +/− 1 standard deviation.

Table 1 displays demographic and device information for all 38 participants. In addition, CNC (Peterson and Lehiste, 1962) monosyllabic word recognition performance for the 21 English-speaking participants is shown for the bimodal and best aided EAS conditions. As shown in Table 1, the 21 English-speaking participants were recipients of the Nucleus Hybrid S8 (10 mm, n = 6), Hybrid L24 (16 mm, n = 3), Nucleus 24 (CI24RCA, 17.8 mm, n = 3), Nucleus Freedom (CI24RE, 17.8 mm, n = 2), Nucleus 5 (CI512, 17.8 mm, n = 2), Med El Sonatati100 (31 mm, n = 3) and Med El FLEXeas (20.9 mm, n = 2). The mean age of the English-speaking participants at testing was 59.9 years (SD = 11.8 years), with a range of 34 to 77 years. Ten participants were female and eleven were male. Mean CNC word recognition scores were 77 and 79 percent correct for the bimodal and best aided EAS conditions, respectively (Table 1). Thus, on a standard clinical metric of speech perception, the bimodal and best aided EAS conditions yielded approximately equivalent performance. At the individual level, only one subject (E10) demonstrated a significant difference between CNC word scores in the bimodal and best aided EAS conditions, based on a binomial model for a 50-word list (Thornton and Raffin, 1978).

Table 1.

Participant demographic information, including age at testing, months of electric experience, device type, degree of low-frequency (LF) threshold shift (125, 250, and 500 Hz) in dB, processor, insertion depth, number of active electrodes, and CNC monosyllabic word recognition (% correct) in the bimodal and best aided EAS conditions. The five listeners who did not wear a hearing aid in either the implanted or non-implanted ear are denoted with an asterisk next to the participant label. DNT = did not test.

| Subject | Age (years) | Months of CI experience | Device | LF threshold shift (dB) | Processor | Insertion depth (mm) | Active electrodes | CNC % correct (bimodal, best aided EAS) |
|---|---|---|---|---|---|---|---|---|
| E1 | 71.2 | 13.5 | L24 | 3.3 | Hybrid SP | 16 | 22 | 74, 78 |
| E2 | 70.5 | 56.0 | CI24RCA | 45.0 | Freedom | 18 | 22 | 98, 94 |
| E3 | 69.6 | 12.0 | S8 | 11.7 | Freedom | 10 | 6 | 80, 82 |
| E4 | 52.1 | 7.3 | CI512 | 50.0 | CP810 | 18 | 22 | 98, 98 |
| E5 | 49.7 | 54.2 | CI24RE(CA) | 33.3 | Freedom | 18 | 22 | 96, 98 |
| E6 | 79.3 | 20.5 | CI24RE(CA) | 11.7 | Freedom | 18 | 22 | 96, 94 |
| E7 | 61.2 | 25.3 | L24 | 11.7 | Hybrid SP | 16 | 18 | 90, 88 |
| E8 | 61.9 | 12.0 | CI512 | 23.3 | CP810 | 18 | 22 | 80, 84 |
| E9 | 45.0 | 16.5 | Sonata, H | 36.7 | Opus2 | 31 | 10 | 82, 84 |
| E10 | 53.3 | 48.4 | S8 | 5.0 | Freedom | 10 | 6 | 62, 80 |
| E11 | 58.3 | 76.7 | CI24RCA | 20.8 | Freedom | 18 | 22 | 84, 86 |
| E12 | 52.8 | 24.2 | S8 | 62.5 | Freedom | 10 | 6 | 86, 86 |
| E13 | 34.4 | 49.0 | CI24RCA | 10.0 | Freedom | 18 | 22 | 88, 90 |
| E14 | 52.1 | 16.6 | L24 | 11.7 | Hybrid SP | 16 | 18 | 88, 86 |
| E15 | 47.4 | 70.9 | S8 | 36.7 | Freedom | 10 | 6 | 52, 52 |
| E16 | 77.5 | 6.9 | Sonata, H | 6.7 | Opus2 | 31 | 12 | 46, 56 |
| E17 | 61.3 | 20.7 | Sonata, H | 10.0 | Opus2 | 31 | 12 | 58, 56 |
| E18 | 67.2 | 10.0 | Sonata, FLEXeas | 18.3 | Duet | 21 | 12 | 60, 54 |
| E19 | 69.4 | 58.1 | Sonata, FLEXeas | 11.7 | Duet | 21 | 12 | 80, 72 |
| E20 | 54.7 | 79.9 | S8 | 0.0 | Hybrid SP | 10 | 6 | 48, 68 |
| E21 | 61.9 | 70.9 | S8 | 10.0 | Hybrid SP | 10 | 6 | 78, 76 |
| P1 | 44.1 | 35.4 | Pulsar, M | 13.3 | Duet | 20 | 12 | DNT |
| P2 | 55.4 | 83.9 | C40+, H | 41.7 | Duet | 20 | 8 | DNT |
| P3 | 57.8 | 86.3 | C40+, H | 30.0 | Duet2 | 20 | 8 | DNT |
| P4 | 29.5 | 37.1 | Pulsar, H | 23.3 | Duet2 | 20 | 7 | DNT |
| P5* | 17.2 | 9.0 | Pulsar, M | 25.0 | Opus2 | 20 | 12 | DNT |
| P6 | 73.0 | 84.7 | C40+, H | 25.0 | Duet2 | 20 | 8 | DNT |
| P7 | 58.1 | 54.8 | C40+, H | 21.7 | Duet | 28 | 8 | DNT |
| P8 | 32.9 | 92.6 | C40+, H | 20.0 | Duet2 | 20 | 8 | DNT |
| P9 | 21.7 | 24.9 | Pulsar, H | 26.7 | Duet2 | 28 | 10 | DNT |
| P10 | 15.8 | 56.3 | C40+, M | 15.0 | Duet | 20 | 12 | DNT |
| P11* | 48.8 | 27.0 | Pulsar, H | 13.3 | Opus2 | 28 | 12 | DNT |
| P12* | 45.9 | 5.0 | Pulsar, FLEXeas | 20.0 | Opus2 | 21 | 12 | DNT |
| P13* | 35.0 | 26.1 | Pulsar, H | 21.7 | Opus2 | 28 | 11 | DNT |
| P14 | 41.3 | 35.5 | Pulsar, H | 23.3 | Duet2 | 20 | 8 | DNT |
| P15 | 15.5 | 51.5 | Pulsar, M | 16.7 | Duet2 | 20 | 12 | DNT |
| P16 | 44.0 | 24.7 | Pulsar, H | 43.3 | Duet2 | 28 | 10 | DNT |
| P17* | 28.0 | 8.8 | Pulsar, H | 3.3 | Opus2 | 20 | 8 | DNT |
| MEAN | 50.4 | 39.3 | N/A | 21.4 | N/A | 19.7 | 12.4 | 77, 79 |
| STDEV | 17.2 | 27.0 | N/A | 14.11 | N/A | 6.1 | 5.8 | 16, 15 |

The electrode was inserted via cochleostomy in all cases for the English-speaking participants. The cochleostomy was drilled just anteroinferior to the round window, beginning with a 1.5-mm diamond burr to expose the endosteum. The last remaining bone and endosteum were opened with a 1.0-mm diamond burr (at low speed). Intraoperative intravenous steroids were provided in all cases.

As shown in Table 1, all Polish-speaking participants were recipients of either the Pulsar (n = 11) or Combi40+ (n = 6) device. The electrodes used were standard H (31 mm, n = 11), medium M (24 mm, n = 3) or FLEXeas (20.9 mm, n = 3). For patients implanted with a standard H array, the electrode was inserted to various depths. The patients had anywhere from 10 to 12 electrodes inserted and 8 to 12 activated in their map. Table 1 displays the insertion depth and number of active electrodes for each of the subjects. The mean age of the Polish participants at testing was 39.1 years (SD = 16.7 years), with a range of 15 to 58 years. Nine subjects were female and eight were male.

The electrode was inserted via round window in all cases for the Polish-speaking subjects using the technique described in Skarzynski et al. (2007, 2011). The demographic information for all subjects including age, months of implant experience, device and electrode type, processor, and electrode insertion depth is displayed in Table 1.

All but 5 listeners wore hearing aids in both the implanted and non-implanted ears. The subjects who did not wear hearing aids in either ear are denoted in Table 1 with an asterisk next to the subject label. For those five participants, the mean preoperative LF PTA was 20 dB HL and the degree of postoperative threshold shift was 16.7 dB. For these same five participants, the mean LF PTA in the non-implanted ear was 17.3 dB HL. For all other subjects, hearing aid settings were verified prior to testing using probe microphone measurements to match output to NAL-NL1 targets (Dillon et al., 1998) for 60-dB-SPL speech. In cases where target audibility was not being met by the listener’s current hearing aid settings, the hearing aid(s) were reprogrammed and output verified before testing began.

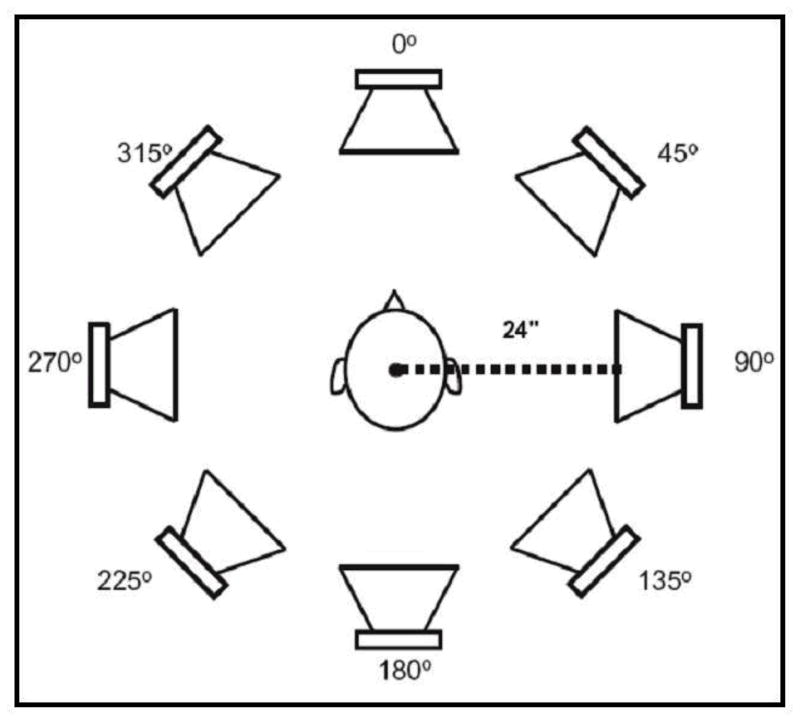

Test environment and stimuli

Sentence recognition experiments in noise were conducted using the Revitronix R-SPACE™ sound simulation system. This system consists of eight loudspeakers placed in a circle around the subject, each at a distance of 60 cm from the listener’s head and separated from its neighbors by 45 degrees. A schematic of the loudspeaker array is shown in Figure 1.

FIGURE 1.

R-SPACE™ 8-loudspeaker system.

The restaurant stimuli were recorded using eight microphones arranged in the same circular pattern around a Knowles Electronics Mannequin for Acoustic Research (KEMAR), as shown in Figure 1. The eight tracks captured in the restaurant were fed to eight loudspeakers at the corresponding positions in the R-SPACE playback system. The speech stimuli always originated from the loudspeaker at 0° azimuth, and the noise originated from all 8 loudspeakers, as might occur at a large social gathering or in a noisy restaurant. For additional detail regarding the recording of the stimuli, see Compton-Conley et al. (2004).

The restaurant noise was fixed at a level of 72 dBA which matched the physical level of the restaurant noise from which the stimuli were recorded. Though this level may seem high at face value, Lebo et al. (1994) showed that the mean noise level for 27 restaurants surveyed in the San Francisco area was 71 dBA and the median level was 72 dBA. Thus the presentation level of the restaurant noise used in the R-SPACE system would be considered representative of real-world restaurant environments.

The speech stimuli were presented 1) adaptively, with a one-down, one-up stepping rule to track the signal-to-noise ratio (SNR) required for 50% correct, and 2) at fixed SNRs of +6 and +2 dB. For the English-speaking participants, the adaptive speech reception threshold (SRT) was obtained by concatenating two 10-sentence HINT lists into a single run. The presentation levels of the last six sentences (sentences 15 through 20) were averaged to provide an SRT for that run. Two runs were completed per condition, and the two SRTs were averaged to yield a final SRT for each listening condition. Prior to data collection, every subject completed a 20-sentence practice run in both the bimodal and best aided EAS listening conditions for task familiarization. The sentence lists and the condition order were randomized to counterbalance order effects. The randomization was set prior to subject enrollment and ensured that an equal number of participants were initially tested in the bimodal and best aided EAS conditions, as well as in restaurant noise (Experiment 1) versus reverberation (Experiment 2). HINT sentence recognition in quiet was obtained for all listeners to ensure that at least 50% correct performance could be achieved in quiet before the adaptive test was administered.
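The adaptive procedure can be sketched as a small simulation. This is not the HINT software: the simulated listener's logistic psychometric function, the starting SNR, and the fixed 2-dB step size are assumptions (the step sizes used in the study are not stated here), and all function names are hypothetical. The tracking rule (SNR goes down after a correct sentence and up after an incorrect one) and the SRT calculation (mean of the last six presentation levels, averaged over two runs) follow the description above.

```python
import math
import random
from statistics import mean

def srt_from_track(levels_db, n_last=6):
    """SRT for one run: mean SNR of the last n_last presented sentences."""
    if len(levels_db) < n_last:
        raise ValueError("run is shorter than the averaging window")
    return mean(levels_db[-n_last:])

def run_one_down_one_up(p_correct, n_sentences=20, start_snr=10.0, step_db=2.0, seed=None):
    """Simulate one adaptive run and return the SNR presented on each sentence.

    p_correct(snr) is the probability that the whole sentence is repeated correctly.
    """
    rng = random.Random(seed)
    snr, levels = start_snr, []
    for _ in range(n_sentences):
        levels.append(snr)
        correct = rng.random() < p_correct(snr)
        snr += -step_db if correct else step_db  # one-down, one-up
    return levels

def hypothetical_listener(snr, midpoint=5.0, slope=0.5):
    """Hypothetical logistic psychometric function with its 50% point at `midpoint` dB SNR."""
    return 1.0 / (1.0 + math.exp(-slope * (snr - midpoint)))

# Two 20-sentence runs per condition, averaged.
runs = [run_one_down_one_up(hypothetical_listener, seed=s) for s in (1, 2)]
final_srt = mean(srt_from_track(levels) for levels in runs)
print(f"final SRT = {final_srt:.1f} dB SNR")
```

A one-down, one-up rule converges on the SNR at which the listener is equally likely to get a sentence right or wrong, which is why it tracks the 50% point. The same `srt_from_track` call with `n_sentences=30` would cover a 30-sentence run whose last six sentences are 25 through 30.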

The adaptive SRT for the Polish-speaking participants was based on the average of two 30-sentence lists from the Polish sentence matrix test (PSMT; Ozimek et al., 2010). As for the English-speaking participants, the presentation levels of the last six sentences (sentences 25 through 30) were averaged to provide the SRT for each run, and the mean of two runs yielded the final SRT. Prior to data collection, all Polish participants completed a practice run of one full 30-sentence list for task familiarization. The same randomization and counterbalancing of conditions was used for the Polish participants as for the English-speaking participants.

As defined in the original description of the HINT adaptive task (Nilsson et al., 1994), the listener was required to repeat all words in the sentence correctly for the SNR to decrease. Thus the actual percent correct, if scored per word repeated correctly at that SNR, would be expected to exceed 50%. For this reason, the developers of the HINT have recommended a modified adaptive rule that allows different points on the performance-intensity (PI) function to be tracked, based on the number of errors allowed for a sentence to be counted as a “correct” repetition (Chan et al., 2008). Because data collection began before the modified adaptive rule was released, the original rule was followed in the current study for the sake of consistency.
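The effect of the scoring rule can be illustrated with hypothetical data. In the sketch below, a sentence counts as correct only when the number of missed words does not exceed `allowed_errors`; `allowed_errors=0` corresponds to the original all-words rule, and larger values mimic the spirit (not the exact specification) of the modified rule of Chan et al. (2008). The function names, sentence lengths, and word counts are invented for illustration.

```python
def sentence_correct(words_correct: int, words_total: int, allowed_errors: int = 0) -> bool:
    """True if the sentence counts as a correct repetition under the given error allowance."""
    return (words_total - words_correct) <= allowed_errors

def sentence_vs_word_scores(trials, allowed_errors=0):
    """Return (% sentences scored correct, % words correct) for (words_correct, words_total) pairs."""
    n_sent = sum(sentence_correct(c, t, allowed_errors) for c, t in trials)
    words_correct = sum(c for c, _ in trials)
    words_total = sum(t for _, t in trials)
    return 100.0 * n_sent / len(trials), 100.0 * words_correct / words_total

# Hypothetical responses near the tracked SNR: half the sentences are fully correct,
# but most of the "incorrect" ones are missing only one or two words.
trials = [(5, 5), (6, 6), (4, 5), (5, 6), (6, 6), (3, 6), (5, 5), (4, 6)]
print(sentence_vs_word_scores(trials))                    # strict all-words rule
print(sentence_vs_word_scores(trials, allowed_errors=1))  # one missed word tolerated
```

With these made-up responses the strict rule scores 50% of sentences correct while about 84% of the words were repeated correctly; tolerating one missed word raises the sentence score to 75%, i.e., the track would settle at a different point on the PI function.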

For the fixed-SNR conditions, two 10-sentence lists were presented per listening condition to the English-speaking participants to yield a percent correct score. For the Polish-speaking participants, one 30-sentence PSMT list was presented per listening condition to yield a percent correct score.

Speech recognition was assessed for all 38 participants in the best aided EAS condition (cochlear implant + binaural acoustic hearing) and in the bimodal condition, with the ipsilateral ear occluded with a foam EAR plug. In addition, all 21 English-speaking and 10 of the 17 Polish-speaking participants were assessed in the binaural acoustic-only listening condition for the adaptive SRT. For all listening conditions, processor volume and sensitivity were held constant at the subject’s everyday settings. Participants were not permitted to switch processor settings during testing or between conditions.

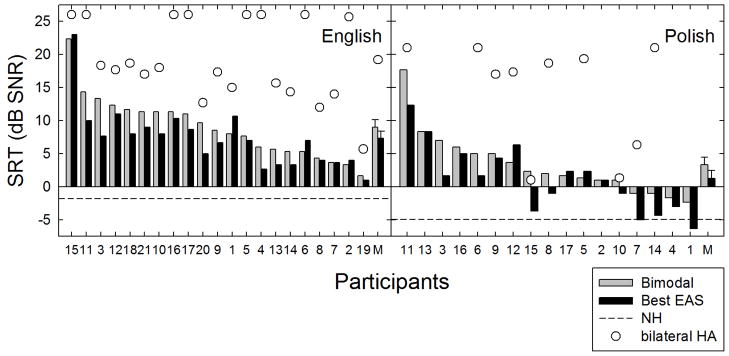

Results for Experiment 1

Adaptive SRT

Individual and mean results for the adaptive SRT experiment are shown in Figure 3. The SRT in dB SNR is plotted as a function of subject number, with gray bars representing performance in the bimodal condition (ipsilateral ear occluded) and black bars representing performance in the best aided EAS condition. Lower SRTs represent better performance. SRTs have been rank ordered from poorest to best performance along the abscissa.

FIGURE 3.

Individual and mean speech reception thresholds (SRT) in dB SNR are shown for the bimodal (gray bars) and best aided EAS (black bars) listening conditions. Unfilled circles represent SRT data for the binaural aided condition. Error bars represent +/− 1 standard error.

Twenty-eight of the 38 participants demonstrated either equivalent or better performance in the best aided condition with binaural acoustic hearing than in the bimodal condition, in which acoustic hearing was available only from the contralateral ear. Among the participants whose SRT improved in the best aided condition, the improvement ranged from 0.3 to 6.0 dB. The mean SRT for all 38 participants was 6.3 and 4.5 dB SNR for the bimodal and best aided EAS conditions, respectively. Thus the mean EAS-related benefit in the SRT (bimodal minus best aided EAS, since a lower SRT is better) was 1.8 dB.

For the English-speaking listeners, the mean SRT for the bimodal and best aided EAS conditions was 9.0 and 7.3 dB SNR, respectively. For the Polish-speaking listeners, the mean SRT for the bimodal and best aided EAS conditions was 3.3 and 1.2 dB SNR, respectively. A repeated-measures analysis of variance (ANOVA) completed on data for all 38 subjects revealed a significant effect of listening condition [F(1, 36) = 21.1, p < 0.001]. Thus, preserved hearing in the implanted ear significantly improved performance in this test environment.
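Because a lower SRT is better while a higher percent correct score is better, the sign convention for EAS-related benefit differs between the adaptive and fixed-SNR measures. The hypothetical helper below expresses both so that a positive value always means the best aided EAS condition outperformed the bimodal condition; the inputs are group means reported in this paper.

```python
def eas_benefit(bimodal: float, best_aided: float, lower_is_better: bool) -> float:
    """EAS-related benefit, signed so that positive = better with ipsilateral acoustic hearing."""
    return bimodal - best_aided if lower_is_better else best_aided - bimodal

# Adaptive SRT group means (dB SNR): lower is better.
print(round(eas_benefit(9.0, 7.3, lower_is_better=True), 1))     # English-speaking: 1.7
print(round(eas_benefit(3.3, 1.2, lower_is_better=True), 1))     # Polish-speaking: 2.1
# Fixed +6 dB SNR group means (% correct): higher is better.
print(round(eas_benefit(48.7, 58.3, lower_is_better=False), 1))  # English-speaking: 9.6
```

Rounding to one decimal place hides floating-point residue such as 9.0 − 7.3 evaluating to 1.7000000000000002.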

Given that this population of EAS patients, particularly the Polish-speaking participants, had considerable low-frequency acoustic hearing in both ears, one might ask whether comparable performance could have been obtained in the acoustic-only condition. That is, did the implant provide benefit beyond that afforded by good binaural acoustic hearing? To address this, all 21 English-speaking and 10 of the 17 Polish participants were also tested in the binaural acoustic condition without the cochlear implant. Time did not allow for assessment of this condition for the remaining seven Polish participants.

The binaural acoustic SRTs are shown in Figure 3 as unfilled circles. Considering only the listeners for whom binaural acoustic performance was obtained, the mean SRTs for the acoustic-only, bimodal and best aided conditions for the English-speaking listeners were 19.2, 9.0 and 7.3 dB SNR, respectively. For the ten Polish participants who completed the binaural acoustic condition, the mean SRTs for the acoustic-only, bimodal and best aided EAS conditions were 14.4, 3.6, and 1.2 dB SNR, respectively. These data demonstrate the effectiveness of the cochlear implant even for patients who have considerable binaural low-frequency acoustic hearing.

Fixed SNR

In addition to the adaptive presentation in the simulated restaurant noise environment, 15 of the 17 Polish participants and 15 of the 21 English-speaking participants were also tested at a fixed SNR to obtain a percent correct score. This condition was added after the first 6 English-speaking participants had already completed testing, and time did not allow for completion of this condition for 2 of the 17 Polish-speaking participants. All individuals scoring above 30% in the bimodal condition at +6 dB SNR were also tested at +2 dB SNR.

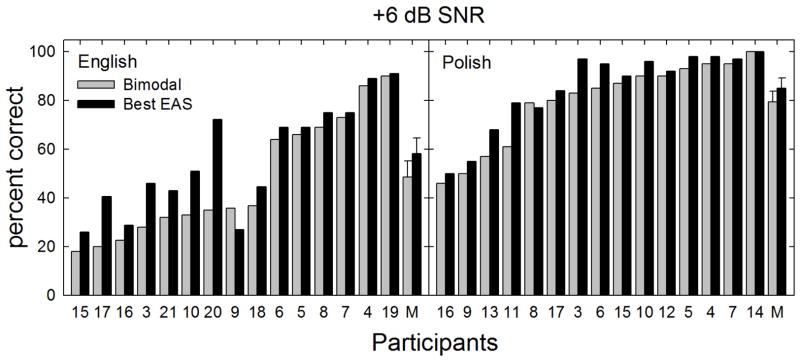

The results for the fixed SNR testing at +6 dB SNR are shown in Figure 4. For the Polish-speaking participants, mean performance was 79.4% for the bimodal and 85.1% for the best aided condition. For the English-speaking participants, mean performance was 48.7% for the bimodal and 58.3% for the best aided EAS conditions. The mean EAS-related benefit (best aided EAS – bimodal) was 5.7 and 9.6 percentage points for the Polish and English participants, respectively.

FIGURE 4.

Individual and mean speech recognition scores in percent correct are shown for fixed level SNR of +6 dB. The bimodal and best aided EAS listening conditions are represented by gray and black bars, respectively. Error bars represent +/− 1 standard error.

Visual inspection of the data indicated that many patients were near ceiling performance in the bimodal test condition, which likely restricted the benefit shown in the best aided condition. Nonetheless, when the scores from the Polish- and English-speaking listeners were pooled, statistical analysis revealed a significant difference between performance in the bimodal and best aided conditions [χ2(1) = 16.9, p < 0.001].

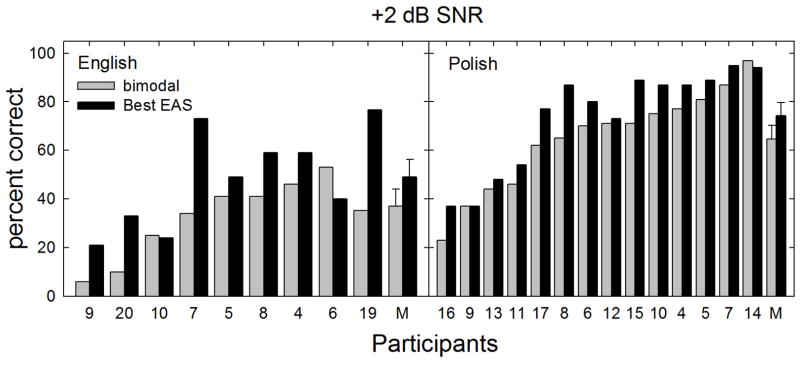

The results for the fixed SNR testing at +2 dB SNR are shown in Figure 5. Visual inspection indicated that fewer patients were near ceiling in the bimodal condition than at +6 dB SNR. The mean scores for all listeners in the bimodal and best aided EAS conditions at +2 dB SNR were 54.9 and 65.1%, respectively. For the Polish participants, mean performance was 64.7% for the bimodal and 74.2% for the best aided EAS condition. For the English-speaking participants, mean performance was 40.0% for the bimodal and 50.2% for the best aided EAS condition. Thus the EAS-related benefit at +2 dB SNR was 9.5 and 10.2 percentage points for the Polish and English participants, respectively. Because these data did not meet the assumption of equal variance, a χ2 analysis was completed, which revealed a significant difference between performance in the bimodal and best aided conditions [χ2(1) = 8.0, p = 0.005].

FIGURE 5.

Individual and mean speech recognition scores in percent correct are shown for fixed level SNR of +2 dB. The bimodal and best aided EAS listening conditions are represented by gray and black bars, respectively. Error bars represent +/− 1 standard error.

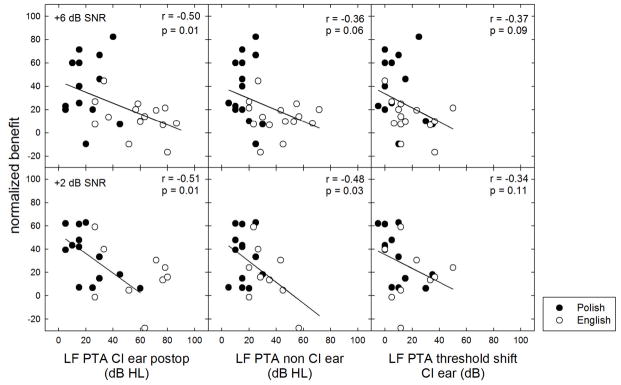

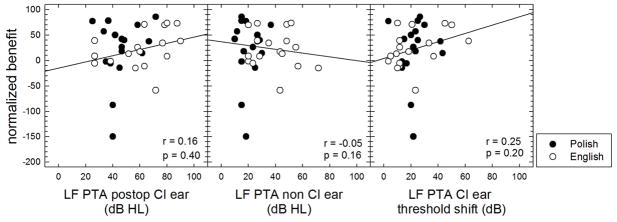

Benefit and residual hearing

In this section we ask whether the benefit seen in the best aided condition relative to the bimodal condition, at +6 and +2 dB SNR, is predicted by (i) the low-frequency pure tone average (LF PTA, mean of 125, 250 and 500 Hz) in dB HL for the implanted ear, (ii) the LF PTA in the non-CI ear, or (iii) the degree of LF PTA threshold elevation in dB for the implanted ear.
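The audiometric predictors reduce to simple arithmetic on the audiogram. A sketch with hypothetical threshold values (dB HL, keyed by frequency in Hz) and hypothetical function names: the LF PTA is the mean of the thresholds at 125, 250 and 500 Hz, and the postoperative threshold elevation is the postoperative LF PTA minus the preoperative LF PTA.

```python
LF_FREQS_HZ = (125, 250, 500)

def lf_pta(thresholds_db_hl: dict) -> float:
    """Low-frequency pure tone average: mean threshold at 125, 250 and 500 Hz."""
    return sum(thresholds_db_hl[f] for f in LF_FREQS_HZ) / len(LF_FREQS_HZ)

def lf_threshold_shift(pre: dict, post: dict) -> float:
    """Postoperative LF PTA elevation in dB (positive = thresholds got worse)."""
    return lf_pta(post) - lf_pta(pre)

# Hypothetical implanted-ear audiograms in dB HL; 1000 Hz is ignored by the LF PTA.
pre_op = {125: 20, 250: 25, 500: 30, 1000: 70}
post_op = {125: 40, 250: 50, 500: 60, 1000: 90}
print(lf_pta(pre_op), lf_pta(post_op), lf_threshold_shift(pre_op, post_op))  # 25.0 50.0 25.0
```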

Benefit in speech understanding was calculated as [(best aided score – bimodal score)/(100 – bimodal score)] × 100. This normalizes for the starting point of the bimodal score by expressing benefit as a percentage of the headroom remaining above the bimodal score, so that a small gain from a high bimodal score can be equivalent to a large gain from a low bimodal score.
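The normalization can be written as a small function (the name is hypothetical). The two calls below use hypothetical scores to show the property just described: a 5-point gain from a bimodal score of 90% and a 30-point gain from a bimodal score of 40% both correspond to 50% normalized benefit, i.e., half of the remaining headroom. The function treats a 100% bimodal score as undefined, since there is no headroom left to normalize by.

```python
def normalized_benefit(best_aided: float, bimodal: float) -> float:
    """Benefit as a percentage of the headroom left above the bimodal score."""
    if bimodal >= 100.0:
        raise ValueError("normalized benefit is undefined when the bimodal score is 100%")
    return (best_aided - bimodal) / (100.0 - bimodal) * 100.0

print(normalized_benefit(95.0, 90.0))  # small gain near ceiling -> 50.0
print(normalized_benefit(70.0, 40.0))  # large gain from a low starting point -> 50.0
```

A score that drops in the best aided condition yields a negative normalized benefit.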

Figure 6 displays normalized benefit as a function of (i) the postoperative LF PTA in dB HL for the implanted ear, (ii) the LF PTA for the non-implanted ear, and (iii) the degree of postoperative LF PTA threshold elevation for the implanted ear. The top row of Figure 6 displays data for +6 dB SNR and the bottom row displays data for +2 dB SNR. Significant correlations were found between normalized benefit at both +6 and +2 dB SNR and postoperative LF PTA for the implanted ear, and between normalized benefit at +2 dB SNR and LF PTA for the non-implanted ear. These data suggest (i) that better low-frequency thresholds in the implanted ear yield greater benefit at both +6 and +2 dB SNR, and (ii) that better low-frequency thresholds in both the implanted and non-implanted ears yield greater benefit in the most challenging listening condition, +2 dB SNR.

FIGURE 6.

Normalized EAS benefit for speech recognition at +6 and +2 dB SNR as a function of low-frequency pure tone average (LF PTA) in dB HL in the implanted ear postoperatively, in the non-CI ear, as well as the degree of LF PTA elevation. Polish and English subject data are shown by filled and unfilled circles, respectively.

Additional Pearson correlation analyses were completed relating duration of CI experience (Table 1), electrode insertion depth (Table 1), and degree of LF PTA threshold shift to the degree of EAS benefit for the adaptive SRT, raw fixed-SNR scores, and normalized benefit at both +6 and +2 dB SNR. None of these correlations was significant.

Experiment 2: Reverberant speech perception

Introduction

Reverberation refers to the collection of sounds reflected from the surfaces of an enclosed space such as a classroom, chapel, or auditorium. Reverberation time (RT) is typically characterized by RT60, the time required for the sound level to decay by 60 dB after the source is switched off. The effects of reverberation have been largely ignored in the cochlear implant literature, primarily because many implant recipients have traditionally performed only moderately well on measures of speech understanding even in standard, low-reverberation environments. Several studies have documented the effects of varying amounts of reverberation on speech understanding in cochlear implant simulations (e.g., Qin and Oxenham, 2005; Poissant et al., 2006; Whitmal and Poissant, 2009; Drgas and Blaszak, 2010). To date, the only published study examining the effects of reverberation on speech recognition by cochlear implant recipients is that of Kokkinakis et al. (2011). Although they showed that speech recognition decreased as RT increased from 0.3 to 1.0 sec, they did not examine the effects of reverberation across different groups of cochlear implant recipients or listening conditions.
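The RT60 definition can be illustrated with a generic textbook technique: exponentially decaying Gaussian noise as a crude stand-in for a diffuse room impulse response, checked with Schroeder backward integration. This is not the simulation method used in this study, which is not described in this section; the sampling rate, impulse-response length, fit range (−5 to −25 dB, extrapolated to −60 dB), and all function names are assumptions. Convolving a dry sentence with such a response is one way to produce speech with a target RT60, although real rooms also have early reflections, frequency-dependent decay and, for a listener, a separate response at each ear, all of which this single-channel sketch ignores.

```python
import numpy as np

def synthetic_rir(rt60, fs=16000, duration=None, seed=0):
    """Exponentially decaying Gaussian noise as a crude diffuse-field impulse response."""
    if duration is None:
        duration = 2.0 * rt60  # energy has fallen by about 120 dB by the end
    n = int(round(duration * fs))
    t = np.arange(n) / fs
    rng = np.random.default_rng(seed)
    # Amplitude falls by a factor of 1000 (energy by 60 dB) every rt60 seconds.
    envelope = 10.0 ** (-3.0 * t / rt60)
    return rng.standard_normal(n) * envelope

def estimate_rt60(h, fs, upper_db=-5.0, lower_db=-25.0):
    """Schroeder backward integration, then a line fit between upper_db and lower_db, extrapolated to -60 dB."""
    edc = np.cumsum(h[::-1] ** 2)[::-1]  # energy remaining from each sample onward
    edc_db = 10.0 * np.log10(edc / edc[0])
    t = np.arange(len(h)) / fs
    fit = (edc_db <= upper_db) & (edc_db >= lower_db)
    slope_db_per_s, _ = np.polyfit(t[fit], edc_db[fit], 1)
    return -60.0 / slope_db_per_s

def reverberate(dry, rt60, fs=16000, seed=0):
    """Convolve a dry (anechoic) signal with a unit-energy synthetic impulse response."""
    h = synthetic_rir(rt60, fs, seed=seed)
    h = h / np.sqrt(np.sum(h ** 2))
    return np.convolve(dry, h)

h = synthetic_rir(0.6)
print(f"estimated RT60 = {estimate_rt60(h, 16000):.2f} s")
```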

There are no published reports on the effects of room reverberation on EAS listeners with binaural low-frequency hearing compared with bimodal listeners with only one acoustic-hearing ear. A classic study by Hawkins and Yacullo (1984) reported a significant binaural advantage over monaural hearing for monosyllabic word recognition in all reverberant conditions tested. Thus, there is reason to believe that EAS listeners with two acoustic-hearing ears would outperform bimodal listeners on measures of speech identification in reverberant conditions. The rationale is that the direct (source) sound arrives at the two ears with no interaural delay, provided that the listener is facing the source, whereas the reflections arrive at the two ears with varying interaural delays. Because hearing preservation in the implanted ear may give the listener access to ITD cues, we hypothesized that listeners with hearing preservation in the implanted ear would be able to squelch the reflections, yielding higher levels of speech perception in the best aided EAS condition.
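The contrast between the direct sound and the reflections can be made concrete with a textbook approximation that the paper does not use: the Woodworth spherical-head formula, ITD = (a/c)(θ + sin θ). It is substituted here to show the size of the interaural delays involved. The head radius and speed of sound below are assumed typical values, and the function name is hypothetical.

```python
import math

HEAD_RADIUS_M = 0.0875  # ASSUMED average adult head radius (m)
SPEED_OF_SOUND = 343.0  # m/s, air at roughly room temperature


def woodworth_itd_us(azimuth_deg):
    """Approximate ITD in microseconds for a distant source (spherical-head model).

    azimuth_deg: 0 = straight ahead, 90 = directly to one side (valid for 0..90;
    rear azimuths behave like their front mirror image, e.g. 135 like 45).
    """
    theta = math.radians(azimuth_deg)
    return 1e6 * (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


for az in (0, 45, 90):
    print(az, round(woodworth_itd_us(az)))
```

Under these assumptions a source straight ahead produces no ITD. Reflections arriving from 45° or 90° carry ITDs of roughly 380 and 660 microseconds. This spread of delays, absent from the direct sound, is what a binaural squelch mechanism could exploit.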

METHODS

Participants

Reverberant speech recognition was assessed for all 17 Polish-speaking participants, 19 of the 21 English-speaking participants, and all 16 subjects with normal hearing. English-speaking listeners 15 and 16 were tested, but neither was able to score above 0% correct during multiple training sessions; their data were therefore excluded from the analysis. Subject demographic information is included in Table I.

Stimuli

The English-speaking listeners were tested with the AzBio sentences (Spahr et al., 2012). The AzBio sentence corpus comprises 33 lists of 20 sentences spoken by 2 male and 2 female talkers. Two 20-sentence lists were presented for each condition, and the mean percent-correct score across the two lists was calculated for each listening condition.

The Polish-speaking listeners were tested with the Polish sentence matrix test (PSMT), the same test used in Experiment 1. One 30-sentence list was presented for each condition.

Stimuli were presented using the same Revitronix R-SPACE™ sound simulation system used in Experiment 1 (Figure 1). Reverberation was imposed on the audio files using eVerb reverberation processing within Digital Performer software. A closed-circuit recording of an impulse noise (click) was fed through the eVerb reverberation setting and then transferred to Sound Forge 9 professional audio editing software. The reverberant stimuli were calibrated both broadband and in 1/3-octave bands. The dry, or source, component was presented at 0° azimuth at a calibrated level of 60 dBA. The wet, or reflected/reverberant, components were presented from 0°, 45°, 90°, 135°, 180°, 225°, 270°, and 315° azimuth. The RT60 was 0.6 seconds for both the English- and Polish-speaking listeners, a value considered acoustically similar to that of an empty classroom.
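The internals of the eVerb processing used here are not described. As a generic illustration only, and not eVerb's algorithm, the sketch below expresses the dry/wet decomposition in terms of convolution with an impulse response. The direct sound forms the dry component and the reflected tail forms the wet component. Because convolution is linear, the dry and wet signals sum to the fully reverberant signal. The toy sequences, the cut point `direct_len`, and the function names are hypothetical.

```python
def convolve(x, h):
    """Direct-form linear convolution of two finite sequences."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def split_dry_wet(h, direct_len):
    """Split an impulse response into a direct ('dry') part and a reverberant ('wet') tail.

    direct_len is a HYPOTHETICAL cut point (in samples) just after the direct sound;
    both parts keep the full length so their outputs can be summed sample by sample.
    """
    dry = [v if i < direct_len else 0.0 for i, v in enumerate(h)]
    wet = [0.0 if i < direct_len else v for i, v in enumerate(h)]
    return dry, wet


speech = [0.0, 1.0, 0.5, -0.25]   # toy stand-in for a speech waveform
rir = [1.0, 0.0, 0.3, 0.2, 0.1]   # toy impulse response: direct sound, then reflections
dry_rir, wet_rir = split_dry_wet(rir, direct_len=1)
dry = convolve(speech, dry_rir)   # would feed the 0-degree loudspeaker
wet = convolve(speech, wet_rir)   # would be distributed over the surrounding loudspeakers
full = convolve(speech, rir)
```

A real system would use FFT-based convolution and a separate wet impulse response per loudspeaker. The direct form is used here only to keep the sketch dependency-free.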

Sentence lists and condition order were randomized to counterbalance order effects. The randomization was set prior to enrollment and ensured that an equal number of participants were initially tested in the bimodal and best aided EAS conditions. Prior to data collection, one complete list of sentences was presented to each listener for training.
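The counterbalancing described above can be pictured as a schedule generated before enrollment. The sketch below shows one way such a schedule could be built. It is an assumption, not the authors' procedure, and the function names and condition labels are hypothetical. A fixed seed stands in for "set prior to enrollment". With an odd group size an exact split is impossible, so the sketch lets the two starting conditions differ by one.

```python
import random


def assign_start_conditions(participant_ids, seed=None):
    """Randomly assign each participant a starting condition with balanced counts."""
    rng = random.Random(seed)
    n = len(participant_ids)
    half = n // 2
    starts = ["bimodal"] * half + ["best_aided"] * half
    if n % 2:  # odd group: the extra slot goes to a randomly chosen condition
        starts.append(rng.choice(["bimodal", "best_aided"]))
    rng.shuffle(starts)
    return dict(zip(participant_ids, starts))


def draw_sentence_lists(n_available, n_needed, rng):
    """Draw distinct 1-based list numbers without replacement (e.g., from 33 AzBio lists)."""
    return rng.sample(range(1, n_available + 1), n_needed)


schedule = assign_start_conditions(list(range(1, 22)), seed=2012)
lists = draw_sentence_lists(33, 5, random.Random(2012))
```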

Conditions

For all participants, speech understanding was assessed in the best aided EAS condition and in the bimodal condition, with the ipsilateral ear occluded with a foam plug. Twenty of the 21 English-speaking and 13 of the 17 Polish-speaking participants were additionally tested in the bilateral acoustic-only condition. English-speaking participant 12 was not tested in the bilateral acoustic-only condition because of time constraints. The first four Polish-speaking participants were not tested in the acoustic-only condition because it was added to the protocol after they had completed the experiment. For all listening conditions, processor volume and sensitivity were held constant at the subject’s everyday-use settings. Listeners were not permitted to change processor settings during testing or between conditions.

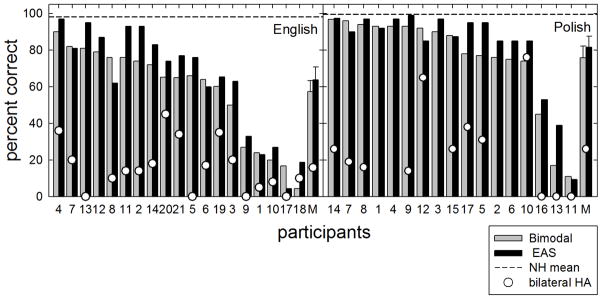

Results

Individual and mean scores are shown in Figure 7. Sentence recognition, in percent correct, is plotted as a function of subject number, with gray bars representing the bimodal condition and black bars representing the best aided EAS condition. Mean performance for listeners with normal hearing is shown as a horizontal dashed line in each panel. Implant participants’ scores have been rank ordered from highest to lowest along the abscissa.

FIGURE 7.

Individual and mean reverberant speech recognition, in percent correct, for a reverberation time of 0.6 seconds. The bimodal and best aided EAS listening conditions are represented by gray and black bars, respectively. Unfilled circles represent data obtained in the bilateral acoustic-only condition (without the CI). Horizontal dashed lines represent mean performance for the listeners with normal hearing. Error bars represent +/− 1 standard error.

Twenty-nine of the 36 participants demonstrated equivalent or better performance in the best aided EAS condition compared with the bimodal condition. Improvement ranged from less than 1 to over 22 percentage points. The overall mean scores in the bimodal and best aided conditions were 66.1 and 72.2 percent correct, respectively. For the English-speaking listeners, mean scores were 57.3 and 63.8 percent correct, respectively; for the Polish-speaking listeners, they were 75.8 and 81.6 percent correct. Statistical analysis revealed a significant effect of listening condition [F(1, 35) = 15.5, p < 0.001]. As in Experiment 1 with restaurant noise, these results demonstrate significant benefit from hearing preservation.
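With only two within-subject conditions (bimodal vs. best aided EAS), a one-factor repeated-measures F test is equivalent to a paired t test, with F(1, n − 1) = t². With 36 participants, this gives the 1 and 35 degrees of freedom reported above. The sketch below illustrates that equivalence. It assumes a one-factor analysis with complete pairs, as the degrees of freedom suggest. It is not the authors' statistics code, and the function names are hypothetical.

```python
import math


def paired_t(a, b):
    """Paired-samples t statistic for the differences b - a, with n - 1 degrees of freedom."""
    d = [y - x for x, y in zip(a, b)]
    n = len(d)
    mean_d = sum(d) / n
    var_d = sum((v - mean_d) ** 2 for v in d) / (n - 1)
    return mean_d / math.sqrt(var_d / n), n - 1


def repeated_measures_f(a, b):
    """For one within-subject factor with two levels, F(1, n - 1) equals t squared."""
    t, df = paired_t(a, b)
    return t * t, (1, df)


bimodal = [50, 60, 70, 80]     # toy scores, not study data
best_aided = [55, 62, 78, 83]
f_value, dfs = repeated_measures_f(bimodal, best_aided)
```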

Eighteen of the nineteen English-speaking participants and thirteen of the seventeen Polish-speaking participants were also tested in the binaural acoustic-only condition, without the cochlear implant. Performance in this condition is shown in Figure 7 as unfilled circles. In all cases, the acoustic-only condition yielded poorer performance than the bimodal or best aided conditions. Thus, as in Experiment 1, a CI is effective even for patients who have considerable low-frequency acoustic hearing in both ears.

Figure 8 displays normalized EAS benefit as a function of (i) the low-frequency pure tone average (LF PTA, mean of 125, 250 and 500 Hz) in dB HL for the implanted ear, (ii) the LF PTA for the non-implanted ear, and (iii) the degree of LF PTA threshold elevation for the implanted ear. Unlike the results for sentence recognition at fixed SNRs (Experiment 1), none of the correlations reached statistical significance. The likely reason is that more individuals exhibited near-ceiling performance for reverberant speech recognition in the reference bimodal condition than for recognition in noise at a fixed SNR. Eight of the seventeen Polish-speaking participants (1, 3, 4, 7, 8, 9, 12 and 14) scored above 90% correct in the reference bimodal condition, so their scores were potentially confounded by ceiling effects. In contrast, only 3 of the Polish-speaking participants scored above 90% correct in the bimodal condition at the fixed SNR shown in Figure 6.

FIGURE 8.

Normalized EAS benefit for reverberant speech recognition as a function of low-frequency pure tone average (LF PTA) in dB HL in the implanted ear postoperatively, in the non-CI ear, as well as the degree of LF PTA elevation. Polish and English participant data are shown by filled and unfilled circles, respectively.

Discussion

There is ample evidence demonstrating that electrodes can be inserted into the scala tympani without destroying residual hearing and that patients can combine information delivered via EAS. At issue in this report is whether or not benefit is gained from having two acoustic-hearing ears vs. one. Our results in complex listening environments document that preserved acoustic hearing in the implanted ear contributes significantly to speech understanding. This was true for patients tested with both English and Polish test materials and for patients with short (10 mm), medium (16 to 20 mm) and long (> 20 mm) electrode insertions.

We found a statistically significant, though relatively small, benefit of 1.7 dB for adaptive sentence recognition in our ‘restaurant’ environment. In the same environment we also found statistically significant, but small, best-aided benefits of 7.6 percentage points for speech at +6 dB SNR and 10.2 percentage points for speech at +2 dB SNR. In the reverberant environment, the benefit was 6.2 percentage points. The benefit of hearing preservation was largest in the most difficult listening condition, speech recognition at +2 dB SNR. A likely reason is that at the higher SNR tested, +6 dB, many participants exhibited ceiling-level performance in the bimodal condition and thus had little or no room for further improvement. These results agree with the best-aided benefit reported by Lorens et al. (2008), who compared having two acoustic-hearing ears with the ipsilateral EAS listening condition and reported a benefit of 6.4 percentage points for speech recognition at +10 dB SNR in a steady-state noise background.

The degree of normalized EAS benefit was also significantly correlated with postoperative LF PTA in the implanted ear at +6 and +2 dB SNR, and with LF PTA in the non-implanted ear at +2 dB SNR. As stated in the introduction, it has been hypothesized that the preservation of ITD cues may be responsible, at least in part, for such a finding. Hawkins and Wightman (1981) reported a correlation between degree of hearing loss and sensitivity to interaural timing cues. Our data are consistent with their results, in that the individuals with the best LF PTA exhibited the greatest normalized EAS benefit.

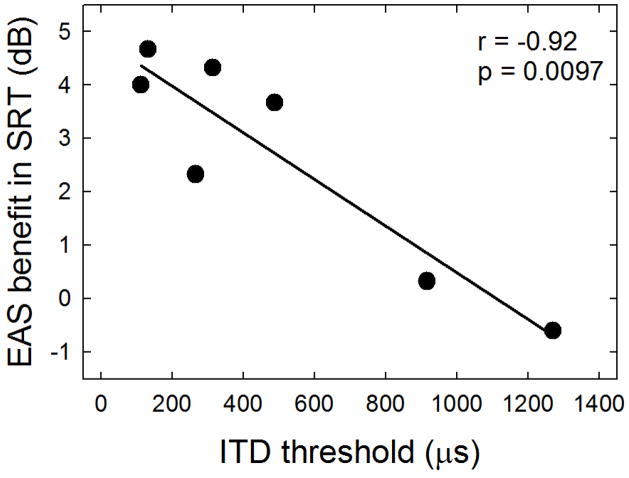

We were able to recruit 6 of the original 21 English-speaking participants (11, 15, 18, 19, 20 and 21) for additional testing to investigate 1) whether ITD cues might be available to unilaterally implanted listeners with binaural acoustic hearing, and 2) whether ITD thresholds are correlated with the degree of EAS benefit. ITD thresholds were obtained under headphones for a 200-ms, 250-Hz signal presented at 90 dB SPL; additional experimental details and figures are provided in Appendix A. ITD thresholds ranged from 131 to 1271 microseconds for the 6 participants with hearing preservation. As seen in Appendix Figure A2, the degree of EAS-related benefit for the SRT task was significantly correlated with ITD threshold (r = −0.92, p = 0.0097). This is not to say that preserved ITD cues are the sole mechanism underlying the EAS-related benefit observed in Experiments 1 and 2. These data do, however, suggest that binaural timing cues are associated with the degree of EAS benefit.

Another possibility is that the listeners in the current study took advantage of the head shadow effect in the best aided condition. Although the literature has reported limited spatial release from masking in listeners with hearing loss (e.g., Duquesnoy, 1983; Bronkhorst and Plomp, 1992; Marrone et al., 2008; Best et al., 2011; Ching et al., 2011), many of the participants in the current study had normal to near-normal hearing in the low-frequency region. In fact, the majority of the Polish-speaking participants had an LF PTA of 40 dB HL or less in both the implanted and non-implanted ears; thus their low-to-mid frequency thresholds were generally lower (i.e., better) than those of listeners in past studies. Although head and torso shadow is generally thought to affect higher-frequency stimuli, head-related transfer function (HRTF) research has provided evidence of head and torso shadow for frequencies below 1000 to 1500 Hz, albeit smaller in magnitude than at higher frequencies (e.g., Kulkarni et al., 1999; Avendano et al., 1999; Shinn-Cunningham et al., 2000). Thus, for individuals with normal to near-normal hearing in the low-to-mid frequency region, it is reasonable to hypothesize that head shadow contributed to the EAS-related benefit seen in the present study, particularly given the listener-to-speaker distance shown in Figure 1. On the other hand, because the noise originated from multiple sources surrounding the listener, the current experimental design may not have allowed listeners to take much advantage of head shadow.

Another possibility is that these listeners took advantage of ILD cues in the low-frequency region. Although ILDs are generally regarded as high-frequency cues, they are also present for lower-frequency stimuli, though generally only in the range of 2 dB or less (e.g., Yost and Dye, 1988). Given the magnitude of the effect in Experiment 1, it is possible that ILD cues were present and used by the unilaterally implanted listeners with binaural acoustic hearing, although this possibility was not directly assessed in the current study.
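To make the 2 dB figure concrete, an ILD is the ratio of the RMS levels at the two ears expressed in dB. The sketch below uses hypothetical function names and an assumed 250-Hz toy tone. It shows that attenuating one ear's signal to 80% of the other's amplitude already produces an ILD of about 1.9 dB, the order of magnitude cited for low frequencies.

```python
import math


def rms(x):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def ild_db(left, right):
    """Interaural level difference in dB (positive = left ear more intense)."""
    return 20.0 * math.log10(rms(left) / rms(right))


fs = 16000                                                       # assumed sample rate (Hz)
tone = [math.sin(2 * math.pi * 250 * n / fs) for n in range(1600)]  # 100 ms of a 250-Hz tone
right = [0.8 * v for v in tone]                                  # right ear 20% lower in amplitude
print(round(ild_db(tone, right), 2))
```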

Aside from preservation of ITD cues and/or head shadow, another possible explanation is binaural summation. CNC word recognition scores for the bimodal and best aided EAS conditions, however, were essentially equivalent (Table I). This finding suggests that binaural summation from two acoustic-hearing ears played little to no role in the EAS-related benefit reported in the current study.

Binaural masking level difference (BMLD) may also have contributed to the EAS benefit. The paradigm of Experiment 1 could have yielded a BMLD benefit, because the signal and masker were spatially separated, approximating a condition labeled NuS0 (although some noise also originated from 0° azimuth). Quaranta and Cervellera (1974) reported an average BMLD of 4 dB in the NuS0 condition for listeners with varying degrees of sensory hearing loss. Further research directly assessing BMLD as a potential contributor to EAS benefit is required.

Another possible explanation is that, because the participants in the current study routinely used bilateral hearing aids in combination with the implant processor, the forced bimodal condition represented an unfamiliar, acute listening condition. Thus, the EAS effect reported here may have resulted, at least in part, from testing in an acute bimodal condition to which the participants were not accustomed. Future work could evaluate this possibility by asking participants to forgo use of ipsilateral acoustic amplification for a period of time and then testing them in the chronic bimodal condition.

The degree of EAS benefit reported here was considerably less than that reported by Dorman and Gifford (2010) and Gifford et al. (2010). The most likely reason is the one noted in the introduction: prior studies examined only individuals with the shortest electrodes (10-mm insertion) and high levels of preserved hearing. The current dataset includes a much larger sample and a broader range of electrode insertions and degrees of hearing preservation. Despite this diversity of subjects, devices, and surgical approaches, significant benefit from hearing preservation was still observed.

Although we report a statistically significant improvement in speech recognition with preserved hearing in the implanted ear, one might question the utility of what may appear to be small improvements (1.7 dB to 10.2 percentage points). Notably, nearly all participants commented on how much easier the task was when allowed to use binaural acoustic hearing than in the bimodal condition (with the ipsilateral acoustic hearing occluded). Given the magnitude and consistency of this feedback, it is likely that the measures used in the current study were not sensitive enough to fully capture the extent to which preserved acoustic hearing in the implanted ear aids speech understanding. Binaural acoustic hearing in combination with unilateral electric hearing may also afford benefit in the form of greater ease of listening or reduced listening effort and/or fatigue. Studies using measures of ‘listening effort’ such as pupillometry (e.g., Kramer et al., in press; Zekveld et al., 2010, 2011), electroencephalography (Strauss et al., 2008), or heart rate (e.g., Mackersie and Cones, 2011) may reveal a larger benefit of hearing preservation than studies using more traditional performance measures.

The preoperative low-frequency thresholds of many participants in the current study would have excluded them from cochlear implant candidacy under the current FDA-labeled criteria for adult cochlear implantation in the U.S. Yet electric stimulation yielded considerably higher levels of performance than the binaural acoustic-only condition for every participant tested in that condition, including all of the Polish-speaking participants (Figures 3 and 7). Thus these data support not only the functional efficacy of preserving hearing in the implanted ear, but also the expansion of cochlear implant criteria to include individuals with low-frequency thresholds even in the normal to near-normal range.

Because high levels of hearing preservation are possible following EAS and/or conventional cochlear implantation, this outcome is likely to be emphasized when patients inquire about surgical options. It is critically important that patients understand the expected benefit of preserving hearing in the implanted ear in complex listening environments, which we encounter throughout the day. The current dataset provides evidence that hearing preservation in the implanted ear yields significantly higher levels of speech recognition in complex listening environments than the bimodal condition, in which acoustic hearing is available in only one ear. It follows that attempts at minimally traumatic surgery for hearing preservation, followed by postoperative amplification of acoustic hearing in the implanted ear, should produce the best outcomes for speech recognition in the complex listening environments that characterize most communication settings.

Summary

The aim of this study was to assess the benefit of preserved acoustic hearing in the implanted ear for speech recognition in complex listening environments. Data from 38 hearing preservation patients showed a small, but significant, mean improvement in performance (1.7 – 2.1 dB and 6 – 10 percentage points) in the best-aided EAS condition vs. the bimodal condition. Postoperative thresholds in the implanted ear were correlated with the degree of EAS benefit for speech recognition in restaurant noise. Neither audiometric threshold in the implanted ear nor threshold elevation following surgery was reliably related to improvement in reverberant speech recognition. Our results suggest that (i) preserved low-frequency hearing in the implanted ear improves speech understanding for CI recipients in realistic restaurant-noise and reverberant situations; (ii) testing in complex listening environments, in which binaural level and timing cues differ for signal and noise, may best show the value of having two ears with low-frequency acoustic hearing; (iii) those with better post-implant thresholds show a wider range and higher maximum possible performance than those with poorer thresholds; and (iv) preservation of binaural timing cues is possible following unilateral cochlear implantation with hearing preservation and is associated with the degree of EAS benefit. Our results support the expansion of cochlear implant criteria to include individuals with low-frequency thresholds even in the normal to near-normal range, as well as attempts at hearing preservation for individuals with considerable low-frequency hearing.

Acknowledgments

The research reported here was supported by grant R01 DC009404 from the NIDCD to the first author and by a research grant provided by Med El Corporation to the Institute of Physiology and Pathology of Hearing, Warsaw, Poland. We thank Amy Olund, AuD, Sterling Sheffield, AuD, Bob Dwyer, Louise Loiselle, MS, Malgorzata Zgoda, MA, and Bartosz Trzaskowski, MSc for their assistance with data collection and subject recruitment, and Dariusz Kutzner and Pawel Libiszewski for editing the Polish test materials. We thank two anonymous reviewers for their helpful comments and suggestions on an earlier version of this manuscript. Portions of these data were presented at the 2010 Hearing Preservation Workshop in Miami, FL, the 3rd International Electric-Acoustic Cochlear Implant Workshop in Iowa City, IA, the 2011 European Federation of Audiology Societies (EFAS) meeting in Warsaw, Poland, and the 2012 IHCON meeting in Tahoe City, CA.

APPENDIX A. Interaural time difference (ITD) thresholds

Participants

As mentioned in the Discussion section, 6 of the 21 English-speaking listeners (11, 15, 18, 19, 20 and 21) were recruited for a supplemental experiment examining interaural time difference (ITD) thresholds for a 200-ms, 250-Hz signal. The participants’ ages ranged from 47 to 69 years with a mean age of 59.5 years. The individual and mean postoperative audiograms for the implanted and non-implanted ears are shown in Figure A1.

Methods

An adaptive two-interval forced-choice procedure was used in which the stimulus was presented bilaterally in each of two intervals separated by 400 ms. In the first interval an ITD favored one side, and in the second interval an ITD of the same magnitude favored the opposite side, with order randomized on each trial. Participants indicated whether the sound image moved from left to right or from right to left by pressing a button on a response box. Correct-answer feedback was provided via an LED on the response box. All listeners received at least 45 to 60 minutes of training on the ITD task before data collection began.

Thresholds were tracked using a 2-down, 1-up stepping rule to track 70.7% correct (Levitt, 1971). That is, after two consecutive correct responses, the task was made more difficult and after one incorrect response, the task was made easier. The initial step size was set to a large value so that the listeners could clearly detect the lateral position change. Following listener training during the practice runs, step sizes were set individually to allow for efficient threshold tracking. Each threshold run was terminated following eight reversals with the ITD threshold computed as the mean of the last six reversals in a given run. Each reported threshold was based on at least three runs obtained for each listener within a single 2- to 3-hour session.
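The adaptive rule and stopping criteria above can be sketched as follows. The `respond` callable stands in for one two-interval trial and returns True for a correct left/right judgment. Several details are assumptions, because the text states only that step sizes were set individually after training: the fixed additive step size, the floor at 0 microseconds, the trial cap, and the deterministic observer in the usage example. The function name is hypothetical.

```python
def run_staircase(respond, start_us, step_us, n_reversals=8, n_average=6,
                  floor_us=0.0, max_trials=2000):
    """2-down, 1-up adaptive track (Levitt, 1971), converging near 70.7% correct.

    Two consecutive correct responses make the task harder (smaller ITD);
    one incorrect response makes it easier. The run stops after n_reversals
    reversals and returns the mean of the last n_average reversal levels.
    """
    level = start_us
    correct_in_row = 0
    direction = 0  # -1 = getting harder, +1 = getting easier, 0 = no step taken yet
    reversals = []
    for _ in range(max_trials):
        if respond(level):
            correct_in_row += 1
            if correct_in_row < 2:
                continue  # need a second consecutive correct response before stepping down
            correct_in_row = 0
            new_direction = -1
        else:
            correct_in_row = 0
            new_direction = +1
        if direction != 0 and new_direction != direction:
            reversals.append(level)  # the track changed direction at this level
            if len(reversals) == n_reversals:
                last = reversals[-n_average:]
                return sum(last) / len(last), reversals
        direction = new_direction
        level = max(floor_us, level + new_direction * step_us)
    raise RuntimeError("staircase did not reach the required number of reversals")


# Hypothetical observer: always correct at ITDs of 200 us or more, always wrong below.
threshold_us, reversal_levels = run_staircase(lambda itd: itd >= 200, start_us=1000, step_us=50)
```

For this idealized observer, reversals alternate between 150 and 200 microseconds, so the estimate is 175 microseconds. A real listener responds probabilistically, and the 2-down, 1-up rule then converges on the ITD yielding about 70.7% correct.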

The 250-Hz signal was presented at 90 dB SPL to each ear via Sennheiser HD 250 Linear II headphones. Stimuli were calibrated before testing each enrolled participant using a Fluke 8050A digital multimeter. Given the absolute thresholds measured for the 200-ms, 250-Hz signal in each ear, the sensation level of the signal ranged from 12 to 40 dB SL across the 6 participants.
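For readers unfamiliar with lateralization stimuli, the sketch below generates a 200-ms, 250-Hz tone pair in which the right-ear waveform lags by a given ITD. Several parameters are assumptions not stated in the text: the 48-kHz sample rate, the 10-ms raised-cosine ramps, and the choice to apply the ITD to the ongoing fine structure while gating both ears simultaneously. Level calibration (90 dB SPL) is left to the playback hardware, and the function name is hypothetical.

```python
import math


def itd_tone(itd_us, freq_hz=250.0, dur_s=0.2, fs=48000, ramp_s=0.01):
    """Left/right sample lists for a tone whose right-ear waveform lags by itd_us.

    ASSUMPTIONS: the ITD is applied to the ongoing fine structure only, and both
    ears share the same raised-cosine on/off ramps, so onsets carry no ITD.
    """
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    itd_s = itd_us * 1e-6

    def ramp(i):
        if i < n_ramp:
            return 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        if i >= n - n_ramp:
            return 0.5 * (1.0 - math.cos(math.pi * (n - 1 - i) / n_ramp))
        return 1.0

    left = [ramp(i) * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]
    right = [ramp(i) * math.sin(2 * math.pi * freq_hz * (i / fs - itd_s)) for i in range(n)]
    return left, right


left, right = itd_tone(500)  # 500 us lag = 24 samples at 48 kHz
```

Applying the delay to the fine structure rather than shifting the whole waveform keeps onset cues identical in the two ears. In the steady-state portion, the right channel is then the left channel delayed by exactly the ITD.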

Results

ITD thresholds ranged from 131 to 1271 microseconds, with a mean of 556 microseconds. Mean ITD thresholds for highly trained listeners with normal hearing at 250 Hz are in the range of 30 to 60 microseconds (Klumpp and Eady, 1956; Hafter et al., 1979). Thus the listeners in the current study exhibited elevated ITD thresholds relative to young, highly trained listeners with normal hearing. There are reports in the literature of ITD thresholds increasing with age, even independent of hearing loss (e.g., Strouse et al., 1998; Kubo et al., 1998; Babkoff et al., 2002).

Pearson product-moment correlation analyses were completed between ITD threshold and EAS benefit for the adaptive SRT, fixed-level SNR, and reverberation tasks. There was a highly significant correlation (r = −0.92, p = 0.0097) between EAS benefit for the SRT and ITD threshold (Figure A2). Correlations between ITD threshold and normalized EAS benefit were not significant for speech recognition at +6 dB SNR or for reverberant speech recognition.
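For a Pearson correlation, the parametric p-value comes from t = r·√(n − 2)/√(1 − r²) with n − 2 degrees of freedom. The stdlib-only sketch below integrates the Student t density numerically with Simpson's rule rather than calling a statistics library. The function names are hypothetical, and the sketch requires |r| < 1. For r = −0.92 with n = 6 it returns p ≈ 0.009, in line with the value reported above; the small difference reflects rounding of r.

```python
import math


def t_pdf(x, df):
    """Student t probability density with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def pearson_p_two_sided(r, n, steps=20000):
    """Two-sided p-value for Pearson r from n pairs (requires |r| < 1).

    Uses t = |r| * sqrt(n - 2) / sqrt(1 - r^2) and p = 1 - 2 * integral of the
    t density over [0, t], evaluated with Simpson's rule (steps must be even).
    """
    df = n - 2
    t = abs(r) * math.sqrt(df / (1.0 - r * r))
    h = t / steps
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for k in range(1, steps):
        area += (4 if k % 2 else 2) * t_pdf(k * h, df)
    area *= h / 3.0
    return 1.0 - 2.0 * area


print(round(pearson_p_two_sided(-0.92, 6), 4))
```

With only 6 listeners, the p-value is very sensitive to r. For example, r = −0.80 would give p ≈ 0.06. This is one reason the text treats the ITD result as an association that calls for larger samples.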

Pearson product-moment correlation analyses were also completed between audiometric threshold (in dB HL) at the 250-Hz signal frequency and ITD threshold (in microseconds), separately for the implanted and non-implanted ears. Audiometric threshold at the signal frequency was not significantly correlated with ITD threshold for either the implanted ear (p = 0.29) or the non-implanted ear (p = 0.18). Although the two listeners with the best audiometric thresholds also had the lowest ITD thresholds, the remaining four listeners had very similar audiometric thresholds but ITD thresholds ranging from 314 to 1271 microseconds.

Discussion and Summary

The results of this supplemental experiment can be summarized as three main findings. First, ITD thresholds for 200-ms, 250-Hz signals were generally poor, ranging from 131 to 1271 microseconds. Second, ITD thresholds were not correlated with audiometric threshold at the test frequency for either the implanted or the non-implanted ear. Third, there was a statistically significant correlation between ITD threshold and the degree of EAS-related benefit for speech recognition in diffuse noise (adaptive SRT). These results suggest that binaural timing cues can be preserved, to some extent, with hearing preservation cochlear implantation, and that the availability of ITD cues is associated with greater EAS-related benefit for speech recognition in complex listening environments. Given the small sample size and the single frequency tested, more research is needed to determine whether ITD cues are the primary mechanism underlying the EAS benefit seen in unilaterally implanted patients with binaural acoustic hearing.

FIGURE A1.

Individual and mean audiometric thresholds, in dB HL, as a function of signal frequency, in Hz, for the implanted and non-implanted ears, obtained on the date of testing. Error bars represent +/− 1 standard error.

FIGURE A2.

EAS benefit for the adaptive SRT, in dB, as a function of ITD threshold, in microseconds.

APPENDIX REFERENCES

- Babkoff H, Muchnik C, Ben-David N, Furst M, Even-Zohar S, Hildesheimer M. Mapping lateralization of click trains in younger and older populations. Hear Res. 2002;165(1–2):117–27. doi: 10.1016/s0378-5955(02)00292-7.

- Hafter ER, Dye RH, Gilkey RH. Lateralization of tonal signals which have neither onsets nor offsets. J Acoust Soc Am. 1979;65:471–77. doi: 10.1121/1.382346.

- Kubo T, Sakashita T, Kusuki M, Kyunai K, Ueno K, Hikawa C, Wada T, Shibata T, Nakai Y. Sound lateralization and speech discrimination in patients with sensorineural hearing loss. Acta Otolaryngol Suppl. 1998;538:63–69.

- Klumpp RG, Eady HR. Some measurements of interaural time difference thresholds. J Acoust Soc Am. 1956;28:859–860.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1971;49:467–477.

- Strouse A, Ashmead DH, Ohde RN, Grantham DW. Temporal processing in the aging auditory system. J Acoust Soc Am. 1998;104:2385–99. doi: 10.1121/1.423748.

- Yost WA, Dye RH Jr. Discrimination of interaural differences of level as a function of frequency. J Acoust Soc Am. 1988;83:1846–51. doi: 10.1121/1.396520.

References

- Anderson Gosselin P, Gagné JP. Older adults expend more listening effort than young adults recognizing speech in noise. J Speech Lang Hear Res. 2011;54(3):944–58. doi: 10.1044/1092-4388(2010/10-0069).

- Arnoldner C, Helbig S, Wagenblast J, Baumgartner WD, Hamzavi JS, Riss D, Gstoettner W. Electric acoustic stimulation in patients with postlingual severe high-frequency hearing loss: clinical experience. Adv Otorhinolaryngol. 2010;67:116–24. doi: 10.1159/000262603.

- Avendano C, Algazi VR, Duda RO. A head-and-torso model for low-frequency binaural elevation effects. Proc IEEE App Sig Proc Audio Acoustic. 1999;1999:179–182.

- Baumann U, Rader T, Fastl H, Helbig S. Speech perception in complex noise: Combined electric-acoustic stimulation (EAS) outperforms bilateral cochlear implant. Paper presented at the Hearing Preservation Workshop VIII; Vienna, Austria. 2009.

- Best V, Mason CR, Kidd G. Spatial release from masking in normally hearing and hearing-impaired listeners as a function of the temporal overlap of competing talkers. J Acoust Soc Am. 2011;129(3):1616–25. doi: 10.1121/1.3533733.

- Bronkhorst AW, Plomp R. Effect of multiple speechlike maskers on binaural speech recognition in normal and impaired hearing. J Acoust Soc Am. 1992;92:3132–3139. doi: 10.1121/1.404209.

- Büchner A, Schüssler M, Battmer RD, Stöver T, Lesinski-Schiedat A, Lenarz T. Impact of low-frequency hearing. Audiol Neurootol. 2009;14(Suppl 1):8–13. doi: 10.1159/000206490.

- Carlson MC, Driscoll CLW, Gifford RH, Service GJ, Tombers NM, Hughes-Borst RJ, Neff BA, Beatty CW. Implications of minimizing trauma during conventional length cochlear implantation. Otol Neurotol. 2011;32(6):962–8. doi: 10.1097/MAO.0b013e3182204526.

- Chan JC, Freed DJ, Vermiglio AJ, Soli SD. Evaluation of binaural functions in bilateral cochlear implant users. Int J Audiol. 2008;47:296–310. doi: 10.1080/14992020802075407.

- Ching TY, van Wanrooy E, Dillon H, Carter L. Spatial release from masking in normal-hearing children and children who use hearing aids. J Acoust Soc Am. 2011;129:368–375. doi: 10.1121/1.3523295.

- Compton-Conley CL, Neuman AC, Killion MC, Levitt H. Performance of directional microphones for hearing aids: real-world versus simulation. J Am Acad Audiol. 2004;15(6):440–55. doi: 10.3766/jaaa.15.6.5.

- Dillon H, Katsch R, Byrne D, Ching T, Keidser G, Brewer S. National Acoustics Laboratories Research and Development, Annual Report 1997/98. Sydney: National Acoustics Laboratories; 1998. The NAL-NL1 prescription procedure for non-linear hearing aids; pp. 4–7.

- Dorman MF, Gifford RH, Lewis K, McKarns S, Ratigan J, Spahr A, Shallop JK, Driscoll CLW, Luetje C, Thedinger BS, Beatty CW, Syms M, Novak M, Barrs D, Cowdrey L, Black J, Loiselle L. Word recognition following implantation of conventional and 10 mm Hybrid electrodes. Audiol Neurotol. 2009;14:181–189. doi: 10.1159/000171480. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Intl J Audiol. 2010;49:912–9. doi: 10.3109/14992027.2010.509113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48(3):668–80. doi: 10.1044/1092-4388(2005/046).

- Dunn CC, Perreau A, Gantz BJ, Tyler RS. Benefits of localization and speech perception with multiple noise sources in listeners with a short-electrode cochlear implant. J Am Acad Audiol. 2010;21:44–51. doi: 10.3766/jaaa.21.1.6.

- Duquesnoy AJ. The intelligibility of sentences in quiet and in noise in aged listeners. J Acoust Soc Am. 1983;74:1136–44. doi: 10.1121/1.390037.

- Gantz BJ, Hansen MR, Turner CW, Oleson JJ, Reiss LA, Parkinson AJ. Hybrid 10 clinical trial: preliminary results. Audiol Neurootol. 2009;14(Suppl 1):32–8. doi: 10.1159/000206493.

- Gifford RH, Dorman MF, Brown CB. Psychophysical properties of low-frequency hearing: implications for perceiving speech and music via electric and acoustic stimulation. Adv Otorhinolaryngol. 2010;67:51–60. doi: 10.1159/000262596.

- Gifford RH, Dorman MF, Spahr AJ, Bacon SP, Lorens A, Skarzyński H. Hearing preservation surgery: Psychophysical estimates of cochlear damage in recipients of a short electrode array. J Acoust Soc Am. 2008;124:2164–2173. doi: 10.1121/1.2967842.

- Grantham DW, Ashmead DH, Ricketts TA, Haynes DS, Labadie RF. Interaural time and level difference thresholds for acoustically presented signals in post-lingually deafened adults fitted with bilateral cochlear implants using CIS+ processing. Ear Hear. 2008;29:33–44. doi: 10.1097/AUD.0b013e31815d636f.

- Grantham DW, Ashmead DH, Ricketts TA, Labadie RF, Haynes DS. Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear Hear. 2007;28:524–41. doi: 10.1097/AUD.0b013e31806dc21a.

- Gstoettner WK, van de Heyning P, O’Connor AF, Morera C, Sainz M, Vermeire K, Mcdonald S, Cavallé L, Helbig S, Valdecasas JG, Anderson I, Adunka OF. Electric acoustic stimulation of the auditory system: results of a multi-centre investigation. Acta Otolaryngol. 2008;128(9):968–75. doi: 10.1080/00016480701805471.

- Gstoettner W, Helbig S, Settevendemie C, Baumann U, Wagenblast J, Arnoldner C. A new electrode for residual hearing preservation in cochlear implantation: first clinical results. Acta Otolaryngol. 2009;129(4):372–9. doi: 10.1080/00016480802552568.

- Hawkins DB, Yacullo WS. Signal-to-noise ratio advantage of binaural hearing aids and directional microphones under different levels of reverberation. J Speech Hear Disord. 1984;49:278–286. doi: 10.1044/jshd.4903.278.

- Helbig S, Van de Heyning P, Kiefer J, Baumann U, Kleine-Punte A, Brockmeier H, Anderson I, Gstoettner W. Combined electric acoustic stimulation with the PULSARCI(100) implant system using the FLEX(EAS) electrode array. Acta Otolaryngol. 2011;131:585–95. doi: 10.3109/00016489.2010.544327.

- Kokkinakis K, Hazrati O, Loizou PC. A channel-selection criterion for suppressing reverberation in cochlear implants. J Acoust Soc Am. 2011;129(5):3221–32. doi: 10.1121/1.3559683.

- Kramer SE, Lorens A, Coninx F, Zekveld AA, Piotrowska A, Skarzynski H. Processing load during listening: the influence of task characteristics on the pupil response. Lang Cogn Process (in press).

- Kulkarni A, Isabelle SK, Colburn HS. Sensitivity of human subjects to head-related transfer function phase spectra. J Acoust Soc Am. 1999;105:2821–2840. doi: 10.1121/1.426898.

- Lebo CP, Smith MFW, Mosher ER, Jelonek SL, Schwind DR, Decker KE, Krusemark HJ, Kurz PL. Restaurant noise, hearing loss, and hearing aids. West J Med. 1994;161:45–9.

- Lenarz T, Stöver T, Buechner A, Lesinski-Schiedat A, Patrick J, Pesch J. Hearing conservation surgery using the Hybrid-L electrode. Results from the first clinical trial at the Medical University of Hannover. Audiol Neurootol. 2009;14(Suppl 1):22–31. doi: 10.1159/000206492.

- Litovsky RY, Parkinson A, Arcaroli J. Spatial hearing and speech intelligibility in bilateral cochlear implant users. Ear Hear. 2009;30(4):419–31. doi: 10.1097/AUD.0b013e3181a165be.

- Lorens A, Polak M, Piotrowska A, Skarzynski H. Outcomes of treatment of partial deafness with cochlear implantation: a DUET study. Laryngoscope. 2008;118:288–294. doi: 10.1097/MLG.0b013e3181598887.

- Mackersie CL, Cones H. Subjective and psychophysiological indexes of listening effort in a competing-talker task. J Am Acad Audiol. 2011;22(2):113–22. doi: 10.3766/jaaa.22.2.6.

- Marrone N, Mason CR, Kidd G. Tuning in the spatial dimension: evidence from a masked speech identification task. J Acoust Soc Am. 2008;124:1146–58. doi: 10.1121/1.2945710.

- Musa-Shufani S, Walger M, von Wedel H, Meister H. Influence of dynamic compression on directional hearing in the horizontal plane. Ear Hear. 2006;27(3):279–85. doi: 10.1097/01.aud.0000215972.68797.5e.

- Nilsson M, Soli S, Sullivan J. Development of the hearing in noise test for the measurement of speech reception thresholds in quiet and in noise. J Acoust Soc Am. 1994;95:1085–1099. doi: 10.1121/1.408469.

- Obholzer RJ, Gibson WP. Cochlear function following implantation with a full electrode array. Cochlear Implants Int. 2011;12(1):44–7. doi: 10.1179/146701010X486525.

- Ozimek E, Warzybok A, Kutzner D. Polish sentence matrix test 20 for speech intelligibility measurement in noise. Int J Audiol. 2010;49:444–454. doi: 10.3109/14992021003681030.

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Poissant SF, Whitmal NA, Freyman RL. Effects of reverberation and masking on speech intelligibility in cochlear implant simulations. J Acoust Soc Am. 2006;119:1606–1615. doi: 10.1121/1.2168428.

- Potts LG, Skinner MW, Litovsky RA, Strube MJ, Kuk F. Recognition and localization of speech by adult cochlear implant recipients wearing a digital hearing aid in the nonimplanted ear (bimodal hearing). J Am Acad Audiol. 2009;20(6):353–73. doi: 10.3766/jaaa.20.6.4.

- Qin MK, Oxenham AJ. Effects of envelope-vocoder processing on F0 discrimination and concurrent-vowel identification. Ear Hear. 2005;26:451–460. doi: 10.1097/01.aud.0000179689.79868.06.

- Sarampalis A, Kalluri S, Edwards B, Hafter E. Objective measures of listening effort: effects of background noise and noise reduction. J Speech Lang Hear Res. 2009;52(5):1230–40. doi: 10.1044/1092-4388(2009/08-0111).

- Shinn-Cunningham BG, Santarelli S, Kopco N. Tori of confusion: binaural localization cues for sources within reach of a listener. J Acoust Soc Am. 2000;107:1627–1636. doi: 10.1121/1.428447.

- Skarzyński H, Lorens A, Piotrowska A, Podskarbi-Fayette R. Results of partial deafness cochlear implantation using various electrode designs. Audiol Neurootol. 2009;14(Suppl 1):39–45. doi: 10.1159/000206494.

- Skarzyński H, Lorens A, Matusiak M, Porowski M, Skarzyński PH, James CJ. Partial Deafness Treatment with the Nucleus Straight Research Array Cochlear Implant. Audiol Neurootol. 2011;17(2):82–91. doi: 10.1159/000329366.

- Skarzyński H, Lorens A. Partial deafness treatment. Cochlear Implants Int. 2010;11(Suppl 1):29–41. doi: 10.1179/146701010X12671178390799.

- Skarzynski H, Lorens A, Piotrowska A, Anderson I. Preservation of low frequency hearing in partial deafness cochlear implantation (PDCI) using the round window surgical approach. Acta Otolaryngol. 2007;127:41–48. doi: 10.1080/00016480500488917.

- Skarzynski H, Lorens A, Zgoda M, Piotrowska A, Skarzynski PH, Szkielkowska A. Atraumatic round window deep insertion of cochlear electrodes. Acta Otolaryngol. 2011. doi: 10.3109/00016489.2011.557780. Epub ahead of print.

- Spahr AJ, Dorman MF, Litvak LL, Van Wie S, Gifford RH, Loizou PC, Loiselle LM, Oakes T, Cook S. Development and Validation of the AzBio Sentence Lists. Ear Hear. 2012;33:112–7. doi: 10.1097/AUD.0b013e31822c2549.

- Strauss DJ, Corona-Strauss FI, Trenado C, Bernarding C, Reith W, Latzel M, Froehlich M. Electrophysiological correlates of listening effort: neurodynamical modeling and measurement. Cogn Neurodyn. 2010;4(2):119–31. doi: 10.1007/s11571-010-9111-3.

- van Hoesel RJ, Tyler RS. Speech perception, localization, and lateralization with bilateral cochlear implants. J Acoust Soc Am. 2003;113:1617–30. doi: 10.1121/1.1539520.

- Woodson EA, Reiss LA, Turner CW, Gfeller K, Gantz BJ. The Hybrid cochlear implant: a review. Adv Otorhinolaryngol. 2010;67:125–34. doi: 10.1159/000262604.

- Zekveld AA, Kramer SE, Festen JM. Cognitive load during speech perception in noise: the influence of age, hearing loss, and cognition on the pupil response. Ear Hear. 2011;32(4):498–510. doi: 10.1097/AUD.0b013e31820512bb.

- Zekveld AA, Kramer SE, Festen JM. Pupil response as an indication of effortful listening: the influence of sentence intelligibility. Ear Hear. 2010;31(4):480–90. doi: 10.1097/AUD.0b013e3181d4f251.