Abstract

Objectives

Electronic laboratory reporting (ELR) reduces the time between communicable disease diagnosis and case reporting to local health departments (LHDs). However, it also imposes burdens on public health agencies, such as increases in the number of unique and duplicate case reports. We assessed how ELR affects the timeliness and accuracy of case report processing within public health agencies.

Methods

Using data from May–August 2010 and January–March 2012, we assessed timeliness by calculating the time between receiving a case at the LHD and reporting the case to the state (first stage of reporting) and between submitting the report to the state and submitting it to the Centers for Disease Control and Prevention (second stage of reporting). We assessed accuracy by calculating the proportion of cases returned to the LHD for changes or additional information. We compared timeliness and accuracy for ELR and non-ELR cases.

Results

ELR was associated with decreases in case processing time (median = 40 days for ELR cases vs. 52 days for non-ELR cases in 2010; median = 20 days for ELR cases vs. 25 days for non-ELR cases in 2012; both p<0.001). ELR also freed staff time that could be used to reduce the backlog of unreported cases. Finally, ELR was associated with higher case reporting accuracy (in 2010, 2% of ELR case reports vs. 8% of non-ELR case reports were returned; in 2012, 2% of ELR case reports vs. 6% of non-ELR case reports were returned; both p<0.001).

Conclusion

Increased ELR was associated with more efficient case processing at both local and state levels.

Electronic laboratory reporting (ELR) has been shown to reduce the time interval between diagnosis of reportable communicable diseases and reporting these cases to public health agencies.1–4 In addition, some data fields are more likely to be completed when reports are made via ELR.1–3 Ideally, increases in electronic data transfer would decrease the processing burden within public health agencies; at the very least, the burden of data entry should be reduced. However, automated reporting increases the total number of cases reported1–3,5 and can increase the number of reports not meeting reportable disease case definitions,5,6 thereby potentially increasing the time required for case processing for local health department (LHD) staff. In addition, ELR does not capture important case information, such as treatment details, which need to be added to case reports following investigation by local or state personnel.

The proportion of case reports received by ELR is increasing with the support of the 2009 Health Information Technology for Economic and Clinical Health (HITECH) Act.7 Increases in the use of electronic health records8,9 will also increase the potential for electronic transfer of laboratory testing data to public health. However, public health funding is not increasing.10 There is little published information on whether the increasing number of cases will require additional processing time and resources; therefore, it is difficult to predict the impact of increased ELR on the public health infrastructure.

North Carolina implemented the North Carolina Electronic Disease Surveillance System (NC EDSS) during 2007–2008, including ELR from the state public health laboratory and one large private laboratory. The increased case volume from these laboratories, in addition to the limited workforce available to process cases, resulted in a backlog of approximately 18,000 cases. From 2010 to 2012, the North Carolina Division of Public Health (NCDPH) staff made a concerted effort to address the backlog of reportable disease cases accumulated at health departments at the local and state levels. During this period, the North Carolina Preparedness and Emergency Response Research Center was conducting an evaluation of electronic disease surveillance systems used in North Carolina.

To assess the effect of ELR on case reporting, we evaluated data from the NC EDSS. We evaluated these data for two periods: 2010, when the backlog created by the addition of ELR was large; and 2012, when the system was more mature and the backlog had been eliminated. We used these data to test the hypothesis that ELR decreases the case-processing burden by comparing the timeliness and accuracy of case report processing for reportable disease cases delivered to North Carolina LHDs by ELR and non-ELR methods during these two periods.

METHODS

In North Carolina, reportable communicable disease case data are captured in the NC EDSS using the Maven software system.11 Data on cases submitted by ELR and non-ELR means were received from NCDPH surveillance staff. During the analysis period, ELR cases entered the NC EDSS by electronic transmission from two laboratories: the State Laboratory of Public Health and the Laboratory Corporation of America (LabCorp). Non-ELR cases, submitted by faxed and/or mailed reports from laboratories and clinicians, were entered in the system by local and state public health agency staff. We analyzed NC EDSS data from May–August 2010 and January–March 2012 from all North Carolina counties. Data on tuberculosis, syphilis, and human immunodeficiency virus cases were excluded from analysis because these cases are handled differently.

Using these data, we assessed timeliness by calculating the time between receiving a case at the LHD and reporting the case to NCDPH (first stage of reporting) and between submitting the case report to NCDPH and submitting the report to the Centers for Disease Control and Prevention (CDC) (second stage of reporting). We assessed reporting accuracy by calculating the proportion of cases returned from NCDPH to the LHD for correction or additional data collection. We compared ELR and non-ELR reporting by disease, restricting these comparisons to diseases with more than 10 reported cases and with cases reported by both methods. We used Mann-Whitney tests to identify significant differences in median time intervals and two-proportion z-tests to identify significant differences in the proportions of cases returned to LHDs. All data analyses were performed using SAS® version 9.2 software.12
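The Mann-Whitney comparison of time intervals can be illustrated with a short sketch. The authors used SAS 9.2; the Python below is not their code but a minimal stand-in, assuming the normal approximation with a tie correction and no continuity correction. The function names and the processing times in the usage example are hypothetical, not study data.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks of `values`; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the normal approximation with a
    tie correction (no continuity correction). Returns (U for x, z, p)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2  # rank sum of x minus its minimum
    n = n1 + n2
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u1 - n1 * n2 / 2) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u1, z, p


# Hypothetical processing times in days (not study data).
elr_days = [18, 20, 21, 19, 22, 20, 17]
non_elr_days = [25, 24, 27, 23, 26, 25, 28]
u, z, p = mann_whitney_u(elr_days, non_elr_days)
print(u, round(z, 2), p < 0.01)
```

Here every hypothetical ELR time is shorter than every non-ELR time, so U is 0 and the two-sided p-value falls well below 0.01. For real analyses, SciPy's `scipy.stats.mannwhitneyu` offers a more complete implementation (exact p-values, continuity correction).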

RESULTS

Data

The analysis datasets contained 11,560 cases reported in 2010 and 16,095 cases reported in 2012. While data from 2010 were obtained 6–9 months after cases were reported to public health, data from 2012 were obtained closer to the date of initial report to public health. (The longest processing period possible for 2012 cases in this dataset was 105 days.) We created a comparison 2010 dataset similarly limited to 105 days by excluding cases that took longer than 105 days to process (n=4,419 cases, 28%). Most cases in both datasets were sexually transmitted disease (STD) cases (94% of the 2012 dataset, 91% of the 2010 comparison dataset, and 86% of the cases excluded from the 2010 comparison dataset). Cases reported by ELR comprised 38% of total cases in the 2010 dataset (n=4,345) and 38% of cases in the 2012 dataset (n=6,129).
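The dataset proportions above follow from simple arithmetic on the reported counts. The sketch below rechecks them, assuming the 28% exclusion figure is computed over the full 2010 extract (11,560 retained plus 4,419 excluded cases). The helper `split_by_window` is a hypothetical illustration of the 105-day restriction, not the authors' procedure.

```python
# Counts reported in the Data paragraph of this article.
RETAINED_2010 = 11_560   # 2010 comparison dataset (105-day window)
EXCLUDED_2010 = 4_419    # 2010 cases that took longer than 105 days
TOTAL_2012 = 16_095
ELR_2010 = 4_345
ELR_2012 = 6_129


def pct(part, whole):
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


def split_by_window(processing_days, max_days=105):
    """Hypothetical helper: separate cases completed within the window
    from those that took longer than `max_days`."""
    kept = [d for d in processing_days if d <= max_days]
    dropped = [d for d in processing_days if d > max_days]
    return kept, dropped


print(pct(EXCLUDED_2010, RETAINED_2010 + EXCLUDED_2010))  # 28
print(pct(ELR_2010, RETAINED_2010))                       # 38
print(pct(ELR_2012, TOTAL_2012))                          # 38
```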

Overall timeliness

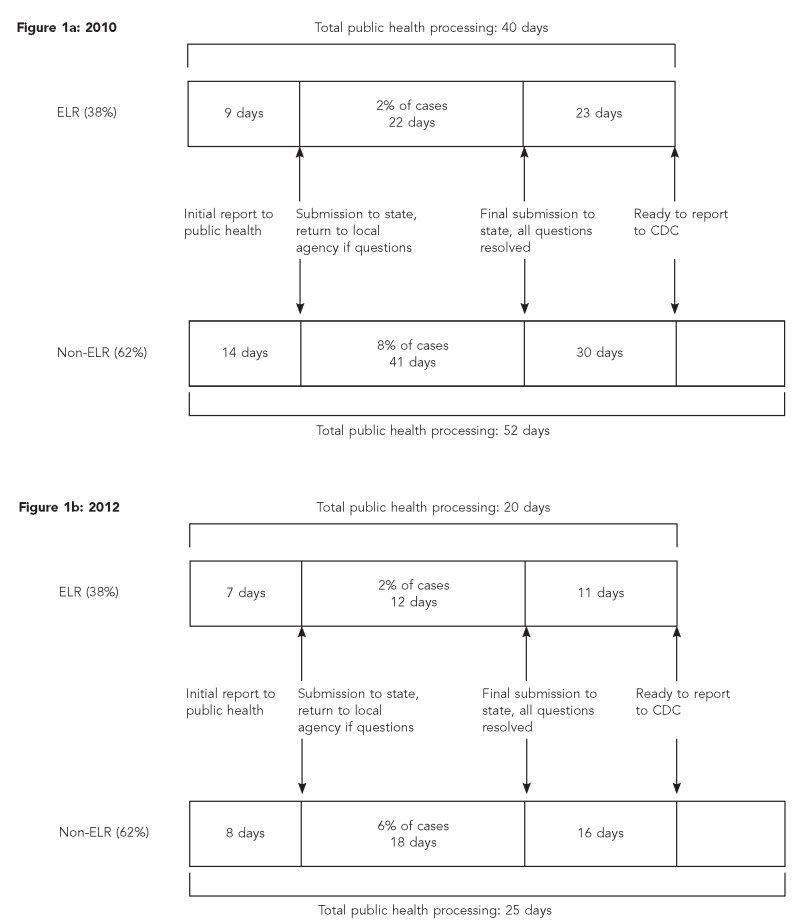

As shown in Figure 1a, in 2010 the median number of days required for all public health processing was lower for ELR cases (median = 40 days) than for non-ELR cases (median = 52 days) (p<0.001). In 2012, overall case-processing time was greatly reduced. These gains in timeliness were due to the combination of ELR, elimination of the backlog of cases waiting to be processed, and a technical assistance effort focused on improving LHD case processing. ELR cases reported in 2012 were also processed more quickly than non-ELR cases (median = 20 vs. 25 days, respectively, p<0.001) (Figure 1b).

Figure 1.

Timeline (median days) of public health processing of a reportable disease or condition at state and local public health agencies by ELR and non-ELR reporting: North Carolina, May–August 2010 and January–March 2012

ELR = electronic laboratory reporting

CDC = Centers for Disease Control and Prevention

Local and state health department processing timeliness and accuracy

Overall processing time can be separated into LHD processing and state processing periods. In 2010, ELR cases tended to require less LHD processing time than non-ELR cases: the median time before reporting to the state health department was nine days for ELR cases and 14 days for non-ELR cases, although this difference was not statistically significant (p=0.08) (Figure 1a). In 2012, there was no difference in LHD case-processing time (median = 7 and 8 days for ELR and non-ELR cases, respectively, p=0.31) (Figure 1b).
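To make the stage decomposition concrete, the following sketch computes per-stage and total intervals from case dates. The record layout, the dates, and the `stage_medians` function are hypothetical. The sketch treats each case as passing once through each stage and does not model records returned to the LHD for correction.

```python
from datetime import date
from statistics import median

# Hypothetical records: (method, LHD receipt, report to NCDPH, report to CDC).
cases = [
    ("ELR", date(2012, 1, 3), date(2012, 1, 10), date(2012, 1, 20)),
    ("ELR", date(2012, 1, 5), date(2012, 1, 12), date(2012, 1, 25)),
    ("non-ELR", date(2012, 1, 4), date(2012, 1, 12), date(2012, 1, 30)),
    ("non-ELR", date(2012, 1, 6), date(2012, 1, 15), date(2012, 2, 1)),
]


def stage_medians(records, method):
    """Median days for the LHD stage, the state stage, and the total,
    for one reporting method. Returned records are not modeled."""
    rows = [r for r in records if r[0] == method]
    lhd = [(to_state - received).days for _, received, to_state, _ in rows]
    state = [(to_cdc - to_state).days for _, _, to_state, to_cdc in rows]
    total = [(to_cdc - received).days for _, received, _, to_cdc in rows]
    # Medians are computed per interval; in general the median total need
    # not equal the sum of the two stage medians.
    return median(lhd), median(state), median(total)


print(stage_medians(cases, "ELR"))      # (7.0, 11.5, 18.5)
print(stage_medians(cases, "non-ELR"))  # (8.5, 17.5, 26.0)
```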

Following LHD processing, cases were submitted to the state public health agency. After receipt at the state, some records were returned to the LHD because of incorrect or missing information. ELR records were less likely than non-ELR records to be returned: in 2010, 2% of ELR records vs. 8% of non-ELR records were returned (Figure 1a); in 2012, 2% and 6% of ELR and non-ELR records, respectively, were returned (both p<0.001) (Figure 1b). Returned ELR records were also resubmitted to the state more quickly than returned non-ELR records (2010 medians: 22 days for ELR vs. 41 days for non-ELR, p=0.33; 2012 medians: 12 days for ELR vs. 18 days for non-ELR, p=0.31), although neither difference was statistically significant (Figures 1a and 1b). We also assessed accuracy in the full 2010 dataset. In this dataset, which allowed more time for case follow-up, 4% of ELR cases vs. 14% of non-ELR cases were returned (p<0.001) (data not shown).
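The comparison of return proportions relied on z-scores. A standard pooled two-proportion z-test, one common way to obtain them, is sketched below. The counts in the example (20 of 1,000 vs. 80 of 1,000 records returned) are hypothetical, chosen only to mirror the scale of a 2% vs. 8% difference; they are not the study's counts.

```python
import math


def two_proportion_z(x1, n1, x2, n2):
    """Pooled two-proportion z-test. x = records returned, n = records
    submitted. Returns (z, two-sided p)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion under the null
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p


# Hypothetical counts: 20 of 1,000 ELR vs. 80 of 1,000 non-ELR records returned.
z, p = two_proportion_z(20, 1000, 80, 1000)
print(round(z, 2), p < 0.001)
```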

The median interval between the state health department's receipt of a case report with correct information and submission of that report to CDC was also shorter for ELR records than for non-ELR records in 2010 (median = 23 days vs. 30 days, p<0.001) (Figure 1a) and in 2012 (median = 11 days vs. 16 days, p<0.001) (Figure 1b).

Case processing by disease

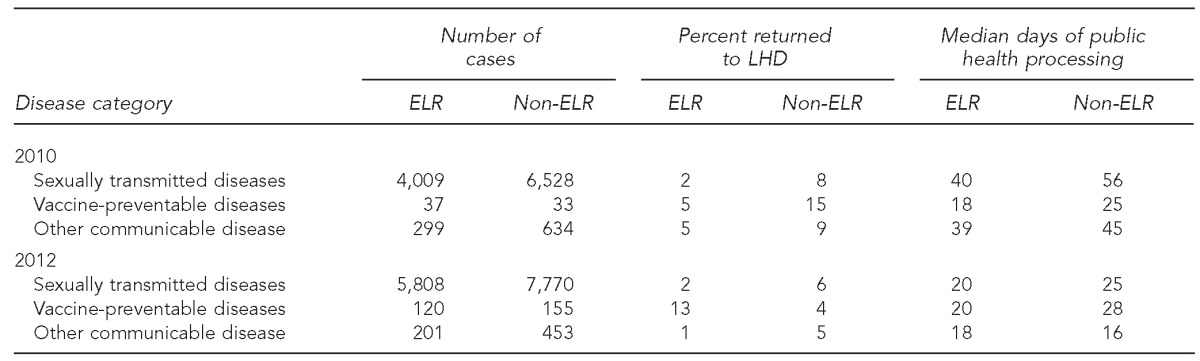

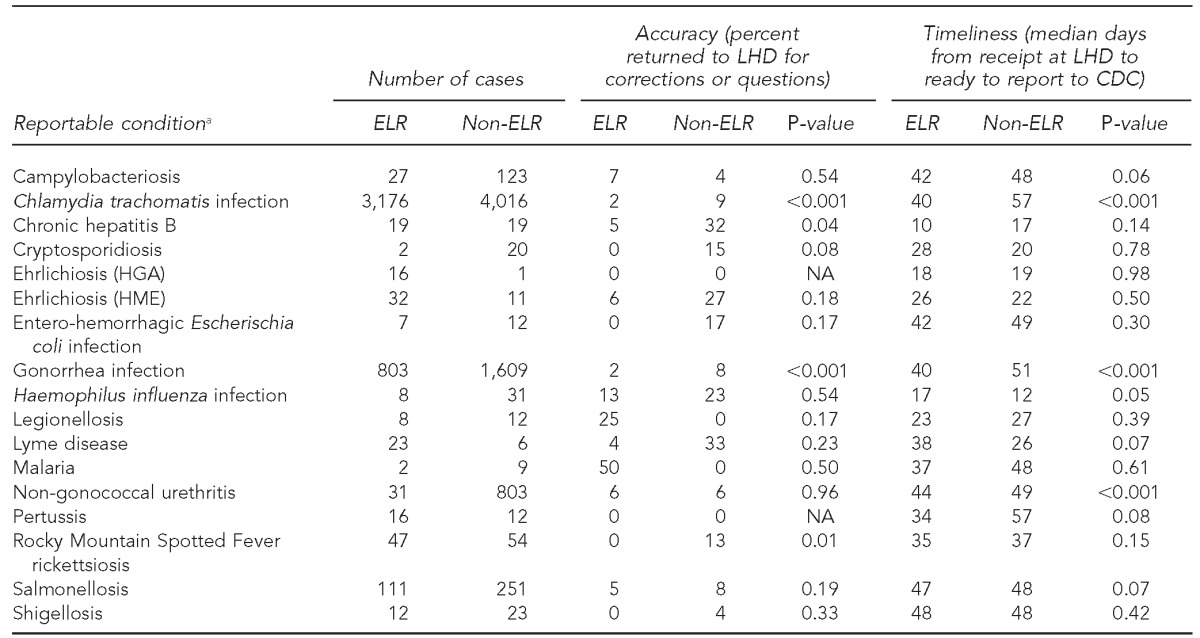

The major gains in case-processing timeliness for ELR cases were for STD case reports (Table 1). In 2010, chlamydia and gonorrhea cases reported by ELR required a median of 40 days for processing vs. 56 days for non-ELR cases (p<0.0001). In 2012, the pattern was similar (20 days vs. 25 days for ELR and non-ELR cases, respectively, p<0.0001). However, processing time for vaccine-preventable diseases did not differ significantly by reporting method (ELR vs. non-ELR) (Table 1). Total case-processing time was actually longer for ELR reports for two diseases with complex case definitions, Haemophilus influenzae infection (p=0.05) and Lyme disease (p=0.07), although these differences were of borderline statistical significance (Table 2).
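The disease-level comparisons were restricted to diseases with more than 10 reported cases and with cases reported by both methods. A minimal filter implementing that rule is sketched below. It assumes the 10-case threshold applies to each disease's total rather than to each reporting method, and the disease labels and counts are hypothetical.

```python
from collections import Counter


def eligible_diseases(cases, min_cases=10):
    """cases: list of (disease, method) pairs, method 'ELR' or 'non-ELR'.
    Keep diseases with more than `min_cases` total cases and at least one
    case reported by each method (threshold on the total is an assumption)."""
    totals = Counter(disease for disease, _ in cases)
    methods = {}
    for disease, method in cases:
        methods.setdefault(disease, set()).add(method)
    return sorted(
        d for d, n in totals.items()
        if n > min_cases and methods[d] >= {"ELR", "non-ELR"}
    )


# Hypothetical counts for four unnamed diseases.
cases = (
    [("disease A", "ELR")] * 30 + [("disease A", "non-ELR")] * 40
    + [("disease B", "ELR")] * 6 + [("disease B", "non-ELR")] * 8
    + [("disease C", "non-ELR")] * 15  # never reported by ELR
    + [("disease D", "ELR")] * 3 + [("disease D", "non-ELR")] * 2  # too few cases
)
print(eligible_diseases(cases))  # ['disease A', 'disease B']
```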

Table 1.

Timeliness and accuracy of electronic laboratory reporting by reportable disease category: North Carolina, May–August 2010 and January–March 2012

LHD = local health department

ELR = electronic laboratory reporting

Table 2.

Timeliness and accuracy of electronic laboratory reporting by reportable disease: North Carolina, January–March 2012

a Conditions for which cases were present in the NC EDSS database but not reported by ELR were arboviral encephalitis (non-West Nile or La Crosse), brucellosis, Clostridium perfringens infections, Creutzfeldt-Jakob disease, dengue fever, diphtheria, hepatitis A, perinatal hepatitis B, acute hepatitis, influenza, La Crosse (California) encephalitis, Hansen disease (leprosy), leptospirosis, measles, pelvic inflammatory disease, pneumococcal meningitis, Q fever, rubella, Staphylococcus aureus with reduced susceptibility to vancomycin, invasive group A streptococcal infection, streptococcal toxic shock syndrome, acute typhoid fever, vaccinia, and West Nile virus encephalitis. Some conditions, such as hepatitis A, are submitted by ELR but are also submitted by fax and are prioritized for rapid hand entry when the fax arrives (generally before the ELR record is received). Therefore, ELR is not the initial method of receipt for these cases.

LHD = local health department

CDC = Centers for Disease Control and Prevention

ELR = electronic laboratory reporting

HGA = human granulocytic anaplasmosis

HME = human monocytic ehrlichiosis

NA = not applicable

NC EDSS = North Carolina Electronic Disease Surveillance System

DISCUSSION

These findings suggest that ELR was associated with reduced time for both stages of case processing: reporting from LHDs to the state and submitting the case report to CDC. Cases submitted by ELR were also processed with fewer inaccuracies, resulting in fewer cases being returned to the LHD. Our data suggest that, despite the increase in cases handled in 2012, ELR contributed to an overall improvement in timeliness for all cases.

Following implementation of ELR in 2007, the number of cases reported to the state increased.13 Because the staff time available for surveillance duties was limited, in the years immediately following implementation it was not possible to process all case reports in a timely manner, and lower-priority cases accumulated. However, over time, and as efficiency with NC EDSS improved, NCDPH was able to direct staff resources toward working with LHD staff to enter these cases from 2010 to 2011; as a result, case reports were processed much closer to the initial date of report to public health. Therefore, although the increase in cases initially slowed case reporting, gains in efficiency attributed in part to ELR were seen at both local and state levels, and case reporting timeliness improved greatly (Personal communication, Emily Lamb, NCDPH, December 2012).

Conversations with staff participating in the implementation of the electronic system suggest that timeliness gains were due to two major factors. First, case reports received by ELR were more accurate; therefore, they required less follow-up to obtain correct information. Second, the electronic system allowed review of any case during processing and embedded comment fields in case reports. These capabilities improved insight into case processing and allowed state and local health department staff to target subsets of case reports for which efficiency could be improved.

The time required to process some diseases with complex case definitions was increased for cases reported by ELR. These diseases were characterized by a requirement for multiple test results to make a case reportable. However, receipt of a single laboratory test result by ELR resulted in a database record requiring attention from LHD communicable disease staff (i.e., an open case). These cases would then remain open until all results were assembled. In contrast, non-ELR reports were more likely to include the results of more than one test; therefore, they required less processing time. This issue could be addressed by modifications to the electronic surveillance system to limit notification of LHD communicable disease staff (that is, limit “opening a case”) to cases with sufficient information assembled.
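The proposed modification, opening a case only once sufficient results are assembled, can be sketched as a buffer keyed by patient and disease. The `REQUIRED_RESULTS` rule table, the test names, and the `PendingResults` class are hypothetical illustrations; they do not describe an existing feature of NC EDSS or the Maven software.

```python
from collections import defaultdict

# Hypothetical rule table: results required before a case is opened.
REQUIRED_RESULTS = {
    "disease X": {"screening test", "confirmatory test"},
    "disease Y": {"culture"},
}


class PendingResults:
    """Buffer ELR results per (patient, disease) and report when a case
    has enough information to be opened for LHD staff."""

    def __init__(self, required):
        self.required = required
        self.received = defaultdict(set)

    def add(self, patient_id, disease, test):
        """Record one result; return True if the case should now be opened."""
        key = (patient_id, disease)
        self.received[key].add(test)
        # Diseases without a rule open on the first result, as described
        # above for the current system.
        needed = self.required.get(disease, set())
        return needed <= self.received[key]


buffer = PendingResults(REQUIRED_RESULTS)
print(buffer.add("p1", "disease X", "screening test"))     # False: still waiting
print(buffer.add("p1", "disease X", "confirmatory test"))  # True: open the case
```

Keeping the rules in a table rather than in code would let surveillance staff adjust case-opening criteria as case definitions change.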

Limitations

Our analysis of ELR was subject to three major limitations. First, data were captured during the summer months in 2010 and the winter months in 2012; given seasonal patterns of disease, some diseases for which ELR altered reporting, such as Lyme disease, represented different proportions of total cases in the two datasets. However, because improvements in timeliness were seen for most reportable diseases, these differences are unlikely to account for the overall findings. Second, because ELR in North Carolina is limited to two laboratories, ELR results reflect the tests performed at these facilities. However, all reportable diseases are reported by these laboratories. Finally, timeliness comparisons were based on a limited time window. Although the same 105-day window was applied to both periods, so the comparison between them is valid, the results are likely to underestimate the true processing times.
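Why a fixed observation window biases processing times downward can be shown with a toy example: dropping cases that exceed the window removes only the slowest cases, so the median of the remaining cases cannot exceed the median of the full set. The processing times below are hypothetical.

```python
from statistics import median

WINDOW_DAYS = 105  # observation window used for the comparison datasets

# Hypothetical processing times (days); two cases exceed the window.
processing_days = [10, 20, 30, 40, 50, 120, 150]

observed = [d for d in processing_days if d <= WINDOW_DAYS]

print(median(processing_days))  # 40 (all cases)
print(median(observed))         # 30 (cases finished inside the window)
```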

CONCLUSION

ELR implementation is associated with meaningful decreases in case report processing time. These findings suggest that the expansion of ELR statewide and nationally to include hospitals and commercial laboratories may support more efficient case processing at local and state health departments.

Footnotes

The authors thank Lauren DiBiase of the University of North Carolina (UNC) and Robert G. Pace of the North Carolina Division of Public Health for their valuable insight into these data. This research was carried out by the North Carolina Preparedness and Emergency Response Research Center (NCPERRC), which is part of the UNC Center for Public Health Preparedness at the UNC-Chapel Hill Gillings School of Global Public Health and was supported by the Centers for Disease Control and Prevention (CDC) grant #1P01 TP 000296. The contents of this article are solely the responsibility of the authors and do not necessarily represent the official views of CDC. Additional information can be found at http://cphp.sph.unc.edu/ncperrc.

This work was exempted from review by the Institutional Review Board of UNC-Chapel Hill.

REFERENCES

- 1. Effler P, Ching-Lee M, Bogard A, Leong MC, Nekomoto T, Jernigan D. Statewide system of electronic notifiable disease reporting from clinical laboratories: comparing automated reporting with conventional methods [published erratum appears in JAMA 2000;283:2937]. JAMA. 1999;282:1845–50. doi: 10.1001/jama.282.19.1845.

- 2. Nguyen TQ, Thorpe L, Makki HA, Mostashari F. Benefits and barriers to electronic laboratory results reporting for notifiable diseases: the New York City Department of Health and Mental Hygiene experience. Am J Public Health. 2007;97(Suppl 1):S142–5. doi: 10.2105/AJPH.2006.098996.

- 3. Overhage JM, Grannis S, McDonald CJ. A comparison of the completeness and timeliness of automated electronic laboratory reporting and spontaneous reporting of notifiable conditions. Am J Public Health. 2008;98:344–50. doi: 10.2105/AJPH.2006.092700.

- 4. Panackal AA, M'ikanatha NM, Tsui FC, McMahon J, Wagner MM, Dixon BW, et al. Automatic electronic laboratory-based reporting of notifiable infectious diseases at a large health system. Emerg Infect Dis. 2002;8:685–91. doi: 10.3201/eid0807.010493.

- 5. Effect of electronic laboratory reporting on the burden of Lyme disease surveillance—New Jersey, 2001–2006. MMWR Morb Mortal Wkly Rep. 2008;57(2):42–5.

- 6. Macomber KE, Lewis E. The implications of syphilis electronic laboratory reporting on a morbidity-based disease reporting system. Presented at the 2008 National STD Prevention Conference; 2008 Mar 10–13; Chicago.

- 7. Public Law 111-5, 123 Stat 226 (2009).

- 8. Jha AK, DesRoches CM, Kralovec PD, Joshi MS. A progress report on electronic health records in U.S. hospitals. Health Aff (Millwood). 2010;29:1951–7. doi: 10.1377/hlthaff.2010.0502.

- 9. Department of Health and Human Services (US). More than 100,000 health care providers sign up to adopt electronic health records through their regional extension centers [press release]. 2011 Nov 17 [cited 2012 Jun 20]. Available from: URL: http://www.hhs.gov/news/press/2011pres/11/20111117a.html.

- 10. National Association of County and City Health Officials. Local health department job losses and program cuts: findings from January/February 2010 survey. [cited 2013 Mar 22]. Available from: URL: http://www.naccho.org/topics/infrastructure/lhdbudget/upload/Job-Losses-and-Program-Cuts-5-10.pdf.

- 11. Consilience Software. Maven. Austin (TX): Consilience Software; 2007.

- 12. SAS Institute, Inc. SAS®: Version 9.2. Cary (NC): SAS Institute, Inc.; 2012.

- 13. North Carolina Department of Health and Human Services. Facts and figures: North Carolina communicable disease reports. [cited 2012 Jul 10]. Available from: URL: http://epi.publichealth.nc.gov/cd/figures.html#cds.