Abstract

We investigated the possibility that a range of social stimuli capture the attention of 6-month-old infants when in competition with other non-face objects. Infants viewed a series of six-item arrays in which one target item was a face, body part, or animal as their eye movements were recorded. Stimulus arrays were also processed for relative salience of each item in terms of color, luminance, and amount of contour. Targets were rarely the most visually salient items in the arrays, yet infants' first looks toward all three target types were above chance, and dwell times for targets exceeded other stimulus types. Girls looked longer at faces than did boys, but there were no sex differences for other stimuli. These results are interpreted in a context of learning to discriminate between different classes of animate stimuli, perhaps in line with affordances for social interaction, and origins of sex differences in social attention.

Keywords: face perception, sex differences, infant development, attention, saliency map

Humans are a highly social species. A rich body of evidence highlights the importance of faces to social interactions (Farah et al., 1998; Haxby et al., 2002), and faces are an especially important class of visual stimulus for infants. Building on observations of early developments in visual scanning of faces within the eye region (Haith et al., 1977), researchers have established that faces and face-like configurations attract infants' attention to a greater extent than foil stimuli, as revealed by experiments employing preferential looking (Mondloch et al., 1999), preferential tracking (Johnson et al., 1991) and electrophysiological measures (de Haan et al., 2002). Infants are sensitive to eye contact (Farroni et al., 2002), direction of gaze (Farroni et al., 2004), and emotional expression (Walker, 1982). Infants discriminate between infant- and adult-directed dynamic faces (Kim and Johnson, submitted), and modulate their behavior when engaged in live face-to-face interactions in response to facial movements (Meltzoff and Moore, 1977; Murray and Trevarthen, 1985). These behaviors are subserved by early-developing mechanisms supporting efficient detection and identification of faces and their properties.

The extent to which infants' attention to faces stems from specialized or general-purpose mechanisms is a matter of dispute (e.g., Morton and Johnson, 1991; Valenza et al., 1996; cf. Bukach et al., 2006; McKone et al., 2007). An important approach employed to adjudicate these views addresses the possibility that stimulus attributes unique to faces attract infants' gaze when embedded in complex scenes. Current evidence for this possibility, however, is mixed. On the one hand, when watching a cartoon stimulus, 6-month-old infants' attention was drawn similarly to visually salient regions of the display (defined in terms of motion, color, and luminance) and to faces (Frank et al., 2009), implying that attention to faces is not obligatory, and calling into question the extent to which faces necessarily may be considered a “special” class of stimulus. On the other hand, 6-month-olds' attention was captured more effectively by static faces than by distracters consisting of pictures of common objects arranged in six-item arrays (Gliga et al., 2009; Di Giorgio et al., 2012; see Schietecatte et al., 2011, for a similar demonstration in a more naturalistic setting). Attentional capture persisted when faces were inverted, suggesting that attraction to faces did not likely stem from their configural properties, given the disruptive effects of inversion on face recognition (Turati et al., 2004). Gliga et al. also tested effects of “scrambled” faces to evaluate the possibility that low-level stimulus attributes, present in upright and inverted faces but not in distracters, were responsible for capture; scrambled faces preserved color and contrast of intact faces but disrupted their phase spectra (i.e., featural and configural information). Under these conditions, scrambled faces did not capture attention.

By 6 months, therefore, faces often attract infants' attention in complex scenes, but this effect may not hold across all contexts, and the nature and mechanisms of attentional capture remain unclear. We consider two possibilities. First, faces may attract attention due to an unlearned propensity to orient to face-like visual stimuli, observed in neonates (Valenza et al., 1996; Johnson et al., 1991), and perhaps augmented by a stored representation of their social benefits (e.g., affordances for social interactions, importance for identification of conspecifics, and relevance for discriminating humans from other animate entities) that builds over the first several months after birth. On this account, faces may have captured attention in the Di Giorgio et al. (2012) and Gliga et al. (2009) experiments due to a propensity to seek social information. Second, faces may attract attention due to low-level salience of key facial features, most notably the high-contrast eye region, which computational image processing models have established as an especially salient part of the human face (e.g., Li and Ngan, 2008; Khan et al., 2011), and which attracts infants' gaze even in inverted faces (Gallay et al., 2006). On this account, faces captured attention due to an intrinsic propensity to direct gaze toward salient portions of a visual scene (cf. Frank et al., 2009). Note that unlearned mechanisms for detecting faces and eyes (Farroni et al., 2002, 2004) are consistent with each of these accounts, yet play different roles in guiding visual attention: On a face-specific account, an inherent sensitivity to eye contact provides opportunities for social exchange, facilitating acquisition of socially relevant information. On a salience account, attention is drawn to the eye region due to its low-level properties.

As noted, extant evidence from infants is compatible with both face-specific and salience accounts of attentional capture by faces. Evidence from adults, too, is mixed. Experiments have examined the possibility that faces “pop out” preattentively in multi-element arrays, operationalized as response times to detect faces that are independent of the number of distracters. An early attempt to establish facial popout (Nothdurft, 1993) revealed positive evidence when the target was a drawing of an upright face set amongst inverted faces, but the effect remained even when facial features were omitted, implying that the contours of the hairline were responsible. Hansen and Hansen (1988) reported popout of an angry face set amongst happy faces, though this result may have stemmed from luminance differences between target and distracters (Purcell et al., 1996). Kuehn and Jolicoeur (1994) observed facial popout when distracters contained no facial features; face detection was impaired, however, when distracters consisted of rearranged facial features. Likewise, Herschler and Hochstein (2005) found that facial popout was most robust when distracters were visually distinct non-face objects and when inner facial features were clearly visible, either in photographs or drawings, but there was no evidence for popout of other common items, such as cars, houses, or animal faces. Other experiments revealed a processing advantage for faces when adults were asked to detect rapid (“flickered”) changes in item identity (Ro et al., 2001), or to match item identity with a briefly primed category name (Ro et al., 2007), in six-item arrays (faces, appliances, clothing, foods, musical instruments, or plants). The matching study found a similar effect for body parts (e.g., hands), but not for animal faces. 
In an identification task, however, human and animal faces were both detected rapidly (less than 400 ms) and accurately (greater than 95% correct) when viewed in photographs depicting natural scenes (Rousselet et al., 2003). Inversion impaired performance with human faces to a greater extent than animal faces, consistent with past research demonstrating important contributions of configural information to face recognition (e.g., Farah et al., 1995). Taken together, these studies provide evidence that for adults, as for infants, faces capture attention in complex arrays of distracter objects under some circumstances, yet they fail to reveal the sources of these effects: experience, familiarity, and expertise, or visual properties of the objects themselves.

To examine in greater detail the face-specific account as it pertains to infancy, we investigated 6-month-olds' attentional capture by three types of stimulus. We reasoned that a face-specific propensity to seek social information would lead to rapid attentional capture by full, upright faces, and might extend as well to stimuli that depart to varying extents from this “ideal” stimulus. This included human body parts that were either parts of faces (eyes, noses, and mouths) or were independent of faces (hands and feet). We also examined effects of animals as targets, all of which included faces. Animal faces did not capture adults' attention in a visual search task (Ro et al., 2007), but were detected with brief exposures (Rousselet et al., 2003). Additionally, experience with pets influences infants' visual inspection and categorization of animal pictures (Kovack-Lesh et al., 2008), implying that infants are interested in and learn rapidly about animals. If, however, social stimuli attract attention principally by virtue of their visual salience (perhaps due to regions of high contrast, or combinations of color and luminance), then computer-generated salience maps should reveal that faces and other kinds of social content are typically characterized by these visual attributes more than the distracters with which they were paired in our study.

In addition, we addressed the possibility of sex differences in attentional capture by comparing girls' and boys' performance. Connellan et al. (2000) and Alexander et al. (2008) reported greater interest in faces by newborn and 6-month-old girls, respectively, relative to boys, and there is evidence of “female advantage” in face processing in adults, as discussed subsequently. In contrast, Weinberg et al. (1999) reported that 6-month-old boys were more socially oriented than girls when engaged in face-to-face interactions with their mothers. However, the majority of the face perception literature does not report analysis of sex differences, leaving open the question of a female advantage in attentional capture by social stimuli.

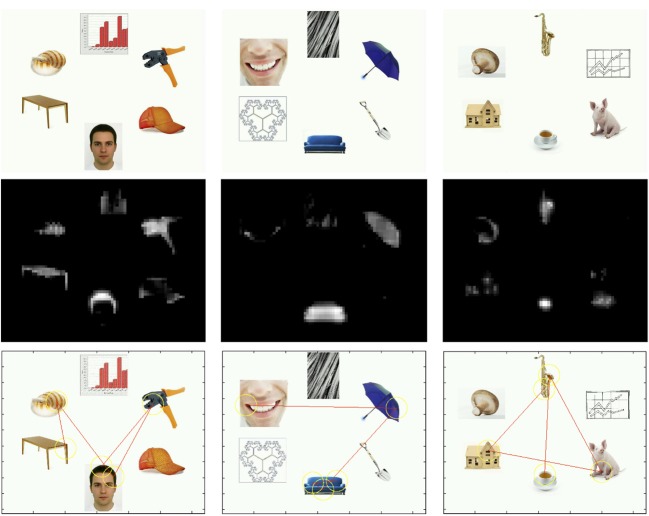

We adapted the visual search method first described by Langton et al. (2008) and Ro et al. (2007) with adults and later employed by Di Giorgio et al. (2012) and Gliga et al. (2009) with infants. Infants viewed a series of six-item arrays (Figure 1) in which one item was either a face, body part, or animal. The other five items consisted of a variety of distracters, described subsequently. We observed 6-month-olds due to conflicting evidence at this age for (a) the extent to which visual attention is drawn by faces (Frank et al., 2009; Gliga et al., 2009), and (b) sex differences in social attention (Weinberg et al., 1999; Alexander et al., 2008). We reasoned that attentional capture by social content would be revealed as looking at faces, body parts, and animals at levels greater than might be expected by chance—that is, infants' initial looks at trial onset and overall attention would tend to be directed at the social stimuli. In addition, we obtained salience measures of all stimuli to assess the possibility that the social stimuli may have been the most salient in terms of low-level visual attributes.

Figure 1.

Top row: Examples of stimulus arrays with a face (left), body part (center), or animal (right). Center row: Saliency maps of stimulus arrays in the top row. Bottom row: Modeled visual scanning based on saliency maps. For the face, saliency ranking = 1 (i.e., a region within the face AOI was determined as most salient). For the body part, saliency ranking = 5. For the animal, saliency ranking = 3.

Methods

Participants

The final sample consisted of thirty-two 6-month-old infants (16 girls and 16 boys), ranging from 5.5 to 6.6 months (M = 6.0 months). Five infants were observed but excluded from analysis because they provided data in fewer than half the trials (48 possible). All infants were full-term with no known developmental difficulties. Infants were selected from a public database of new parents and were recruited by letters and telephone calls.

Apparatus and Procedure

A Tobii 1750 eye tracker with 17-inch monitor (screen resolution 1280 × 1024; refresh rate 60 Hz) was used to collect eye movement data. Stimuli were presented and data collected with Clearview software.

Infants were seated in a parent's lap ~60 cm from the monitor. Each infant's point of gaze was first calibrated with a standard five-point calibration scheme, wherein gaze was directed toward five coordinates on the screen in sequence. All infants provided at least four acceptable calibration points.

Each trial was preceded by an attention-getter to re-center the infant's point of gaze. An experimenter commenced each trial when the infant was determined to fixate on the attention-getter. Following this, infants viewed each stimulus array in turn for 4 s. Infants were tested until completion of 48 trials or until the experimenter determined that the infant's state would not permit further data collection due to excessive fussiness.

Stimuli

Stimulus arrays consisted of six photographs placed approximately 11.0 cm (10.5° visual angle at the infant's 60-cm viewing distance) from the center of the screen (see Figure 1). Stimulus dimensions varied between ~2.0 and 12.0 cm (1.9–11.4°). Prior to analysis we marked the location and identity of each item with an “area of interest” (AOI) that continued 1 cm (0.96°) past its maximum horizontal and vertical extent.
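The visual angles given above agree, to within rounding, with the standard conversion θ = 2·arctan(s / 2d) for an extent s viewed at distance d. The Python sketch below is purely illustrative: the function name is hypothetical, and the 60-cm distance is the approximate value stated in the text rather than a measured quantity.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

VIEWING_DISTANCE_CM = 60.0  # approximate viewing distance reported in the text

# Extents mentioned in the text: AOI margin, smallest/largest stimulus, eccentricity.
for size_cm in (1.0, 2.0, 11.0, 12.0):
    angle = visual_angle_deg(size_cm, VIEWING_DISTANCE_CM)
    print(f"{size_cm:4.1f} cm -> {angle:.2f} deg")
```

Because infants sat on a parent's lap, the actual viewing distance (and hence the angles) would have varied somewhat from trial to trial.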

One “target” photograph in each array contained one of eight faces (two infants, two adult males, four adult females, all presented en face), eight body parts (two eyes, three hands, one pair of feet, one nose, one mouth), or eight animals (rabbit, cat, chicken, cow, frog, pig, raccoon, dog). That is, each array contained one target (the face, body part, or animal) for purposes of analysis. The other five distracter items consisted of (a) household items or other artifacts (e.g., battery, globe, lamp, chair, hairbrush), (b) mechanical objects (e.g., bicycle, drill, electric fan, screw), (c) natural objects (e.g., tree, apple, mushroom, seashell), (d) musical instruments (e.g., saxophone, drum, piano, violin) and (e) abstract images or graphs. Photographs were gathered from the internet; each was presented twice over the course of the experiment. One target (face, body part, or animal) was shown during each trial. Stimulus locations were randomly determined. Trial order was pseudorandom such that there were no more than two consecutive trials of each type of target. Trial duration was 4 s.

We used the Saliency Toolbox (www.saliencytoolbox.net) to identify the most salient regions in each stimulus array. The Saliency Toolbox is a set of Matlab functions and scripts that can compute a salience map and model visual scanning based on relative salience of regions within the image (Walther and Koch, 2006). Salience within an image is determined by distinctions in color, luminance, and contour. (Frequency of distinct orientations in an image has been considered a proxy for its complexity; Escalera et al., 2007.) This is accomplished with separate feature maps each tuned to a single elementary attribute in each image (i.e., color, luminance, and contour). These feed into a “winner-take-all” neural network that pools inputs and determines the most salient location by emulating the inhibitory-excitatory organization found in early visual processing (i.e., retinal ganglion cells and the lateral geniculate nucleus), enhancing feature contrast and guiding visual attention. Identification of saliency is not tantamount to detection of objects, instead corresponding to a process of highlighting regions of a visual array that may merit attention and further processing by an attentive observer, regardless of acuity, contrast sensitivity, and so forth.
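To make the winner-take-all idea concrete, the sketch below ranks locations in a toy salience map by repeatedly selecting the current maximum and then suppressing its neighborhood (often called inhibition of return). It is a deliberately simplified Python illustration, not the Saliency Toolbox's Matlab implementation; the map values, the suppression radius, and the function name are all hypothetical.

```python
from typing import List, Tuple

def rank_salient_locations(
    salience: List[List[float]], n_winners: int, radius: int
) -> List[Tuple[int, int]]:
    """Return (row, col) of successive winners, most salient first."""
    grid = [row[:] for row in salience]  # copy so the input is not modified
    n_rows, n_cols = len(grid), len(grid[0])
    winners = []
    for _ in range(n_winners):
        # Winner-take-all: pick the location with the highest current value.
        r, c = max(
            ((i, j) for i in range(n_rows) for j in range(n_cols)),
            key=lambda rc: grid[rc[0]][rc[1]],
        )
        winners.append((r, c))
        # Inhibition of return: suppress the winner's square neighborhood.
        for i in range(max(0, r - radius), min(n_rows, r + radius + 1)):
            for j in range(max(0, c - radius), min(n_cols, c + radius + 1)):
                grid[i][j] = float("-inf")
    return winners

# Toy 4 x 4 salience map (made-up values, not derived from any stimulus).
toy_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.3, 0.0],
    [0.1, 0.3, 0.1, 0.6],
    [0.0, 0.0, 0.5, 0.4],
]
print(rank_salient_locations(toy_map, n_winners=2, radius=1))
```

Under this simplification, an item's salience ranking would be the position in the winner sequence at which a winner first falls inside that item's AOI.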

Results

We conducted three sets of analysis: first looks, dwell times, and salience. First looks and dwell times correspond to “attention-getting” and “attention-holding” properties of visual stimuli described by Cohen (1972), and analyzed by Gliga et al. (2009). For first looks, the dependent variable was the proportion of trials in which each infant's initial gaze shifts landed in the target AOI (face, body part, or animal). For dwell times, the dependent variable was the accumulation of fixations (expressed in ms) within target AOIs for each infant on each trial. For analyses of first looks and dwell times, we conducted planned comparisons of sex differences for the three target types, or categories of social content (faces, body parts, and animals) separately to ascertain the extent to which girls and boys may have distinct patterns of allocation of social attention. As noted, few studies of social attention report analysis for sex differences, and those that did yielded conflicting results (e.g., Weinberg et al., 1999; Alexander et al., 2008). A second motivation for our approach stems from the possibility of sex differences in social attention to non-face stimuli.
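As a rough illustration of how the two gaze measures could be derived, the Python sketch below computes a first-look proportion and a mean per-trial dwell time for one infant. The data layout (each trial as a time-ordered list of AOI label and fixation duration), the function names, the example values, and the choice to skip trials without any AOI fixation are all assumptions made for the example, not details of the study's pipeline.

```python
from typing import List, Tuple

Fixation = Tuple[str, float]  # (AOI label, duration in ms), in temporal order

def first_look_proportion(trials: List[List[Fixation]], target: str) -> float:
    """Proportion of trials whose first AOI fixation landed on the target."""
    valid = [t for t in trials if t]  # assumption: skip trials with no AOI fixations
    hits = sum(1 for t in valid if t[0][0] == target)
    return hits / len(valid)

def mean_dwell_ms(trials: List[List[Fixation]], target: str) -> float:
    """Mean per-trial accumulated fixation time (ms) within the target AOI."""
    valid = [t for t in trials if t]
    totals = [sum(d for label, d in t if label == target) for t in valid]
    return sum(totals) / len(totals)

# Made-up data for one infant and one target type (not from the study).
trials = [
    [("face", 400.0), ("lamp", 250.0), ("face", 600.0)],
    [("drum", 300.0), ("face", 500.0)],
    [("face", 800.0)],
]
print(first_look_proportion(trials, "face"))
print(mean_dwell_ms(trials, "face"))
```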

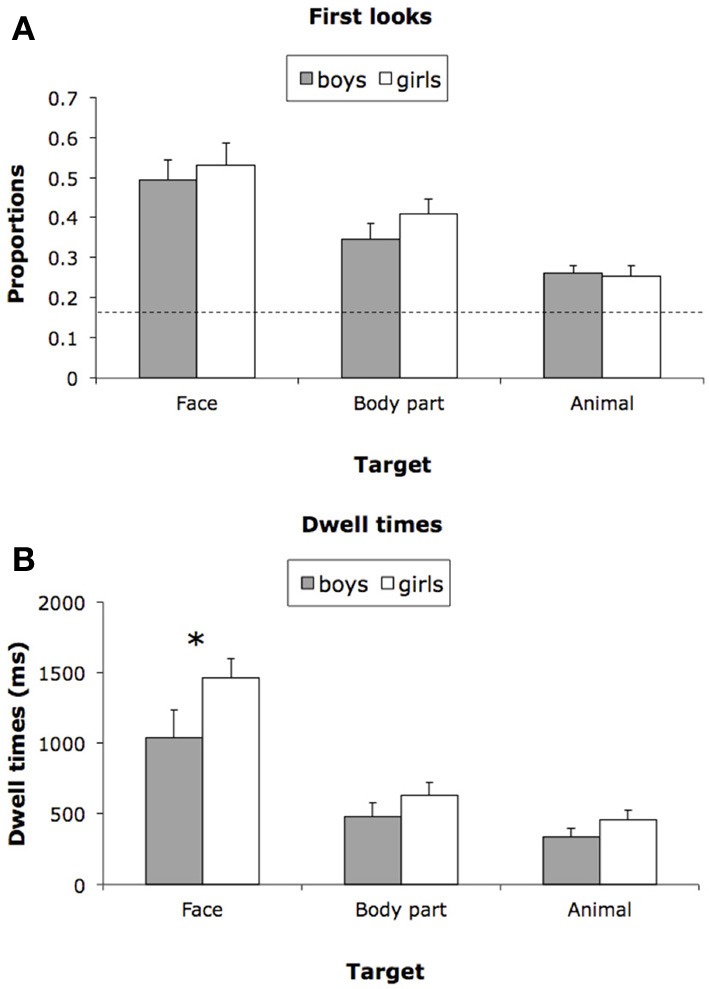

First looks

Figure 2A shows first look proportions for girls and boys for each target type. To address the possibility that targets attract visual attention more than distracters, first look proportions were compared to chance level performance (0.167). First looks toward all three types of target (faces, body parts, animals) were well above chance, ts(31) = 9.33, 7.75, and 5.42, respectively, ps < 0.0001, ds = 3.35, 2.78, and 1.95; first looks toward body parts in faces (eyes, noses, and mouths) were not reliably greater than first looks toward non-face body parts (feet and hands), t(29) = 1.56, ns. A 2 (Sex: girls vs. boys) × 3 (Target: face, body part, or animal) mixed ANOVA, with repeated measures on the second factor, yielded a significant main effect of Target, F(2, 60) = 21.09, p < 0.0001, partial η2 = 0.41, and no other reliable effects. Tests of simple effects revealed that faces attracted first looks more frequently than body parts, F(1, 30) = 8.78, p < 0.01, d = 0.73, and body parts in turn attracted more first looks than animals, F(1, 30) = 16.82, p < 0.001, d = 0.95. Planned comparisons (simple effects) revealed no sex differences in first looks toward any of the three target types, Fs < 1.5, ps > 0.26, ns. No other item category attracted first looks greater than chance levels, and there were no sex differences in first looks to non-social categories, ts < 1.5, ps > 0.24, ns.
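For readers who wish to check the arithmetic, the sketch below shows a one-sample t statistic against chance (1/6 for one target among six items) and an effect-size conversion. The reported ds for the comparisons against chance match d = 2t/√df; that conversion is inferred from the reported numbers and is not stated in the text. The function names and the example proportions are hypothetical.

```python
import math
import statistics
from typing import Sequence

CHANCE = 1 / 6  # one target among six items

def one_sample_t(values: Sequence[float], mu: float) -> float:
    """One-sample t statistic for the mean of values against mu."""
    se = statistics.stdev(values) / math.sqrt(len(values))
    return (statistics.mean(values) - mu) / se

def d_from_t(t: float, df: int) -> float:
    """Effect size via the conversion d = 2t / sqrt(df) (assumed, see lead-in)."""
    return 2 * t / math.sqrt(df)

# Reported t values for first looks vs. chance, each with df = 31.
for label, t in (("faces", 9.33), ("body parts", 7.75), ("animals", 5.42)):
    print(f"{label}: d = {d_from_t(t, 31):.2f}")

# Made-up first-look proportions for five infants, for illustration only.
example = [0.30, 0.40, 0.50, 0.20, 0.35]
print(one_sample_t(example, CHANCE))
```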

Figure 2.

(A) M first look proportions for girls and boys for each target type; chance level performance = 0.167. (B) M dwell times for girls and boys for each target type. *p < 0.05.

Dwell times

Figure 2B shows dwell times for girls and boys for each target type. A Sex × Target mixed ANOVA yielded a significant main effect of Target, F(2, 60) = 60.45, p < 0.0001, partial η2 = 0.67, and a marginally significant main effect of Sex, F(1, 30) = 3.88, p = 0.058, partial η2 = 0.11. The interaction was not statistically significant. Tests of simple effects revealed longer dwell times for face stimuli than for body parts, F(1, 30) = 57.76, p < 0.0001, d = 1.22, and longer dwell times for body parts than for animals, F(1, 30) = 4.60, p < 0.05, d = 0.47. Planned comparisons (simple effects) revealed a sex difference in dwell times for face stimuli, F(1, 30) = 5.32, p < 0.05, d = 0.61, but not for body parts or animals, Fs < 0.2, ps > 0.20, ns. Dwell times for all other item categories were reliably lower, and there were no sex differences in dwell times for any non-social category, ts < 1, ps > 0.39, ns.
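The partial η2 values reported for the ANOVAs can be recovered from the F ratios and their degrees of freedom with the standard identity partial η2 = (F × df1) / (F × df1 + df2). The Python sketch below applies it to the reported main effects; the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared computed from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

reported_effects = {
    "Target, first looks": (21.09, 2, 60),
    "Target, dwell times": (60.45, 2, 60),
    "Sex, dwell times": (3.88, 1, 30),
}
for name, (f_value, df1, df2) in reported_effects.items():
    print(f"{name}: partial eta squared = {partial_eta_squared(f_value, df1, df2):.2f}")
```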

Salience

Each of the 48 stimulus arrays was processed with the Saliency Toolbox, yielding a salience map and rank ordering of salient regions in the image (see Figure 1 for examples). For each stimulus array we recorded rankings of the most salient regions (a value of 1 = most salient). For faces, M rank = 3.31; for body parts, M rank = 3.63; and for animals, M rank = 3.56. Of the 16 arrays with each type of target (face, body part, and animal), the target was the most salient item in two. In other words, targets were the most salient item in terms of low-level visual attributes in only six of the 48 trials (sign test p > 0.9999), suggesting that these properties alone, distinct from other stimulus properties (e.g., social affordances), are not likely to have captured infants' visual attention consistently.
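The sign-test value is consistent with a one-sided binomial test of whether targets were the most salient item in more than half of the arrays (null proportion 0.5). That reading is an assumption on our part, since the text does not spell out the null hypothesis. The Python sketch below computes the exact upper-tail probability; the function name is hypothetical.

```python
from math import comb

def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), computed exactly from the pmf."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Targets were the most salient item in 6 of 48 arrays.
p_sign = binomial_upper_tail(6, 48, 0.5)
print(p_sign > 0.9999)
```

Passing p = 1/6 instead would test the observed count against the rate expected if each of the six items were equally likely to be the most salient.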

Discussion

We presented six-item arrays of common objects and social stimuli (faces, body parts, and animals) to 6-month-old infants as we recorded their patterns of visual attention. All three types of target attracted infants' attention initially, but face stimuli were best able to maintain attention, especially for girls. These results clarify our understanding of the means by which social content attracts and holds young infants' attention, and they bear important implications for possible sex differences in social development. Each issue is addressed in turn.

Several prominent theories have posited a role for an innate representation of faces, perhaps taking the form of a schematic “template” for facial structure, that guides visual orienting in infants and contributes to formation of cortical mechanisms for face recognition in adults (e.g., Morton and Johnson, 1991; McKone et al., 2007; Sugita, 2009). Such a representation could underlie the effects of face stimuli on the attentional capture we observed. However, it seems unlikely that unlearned representations are the best explanation for similar effects of the other targets, given the number of structural templates (for several distinct body parts and animals) that might be required to account for their attractive properties. We can also rule out a strong contribution from low-level visual attributes (color, luminance, and contour) as being principally responsible for these findings, because faces, body parts, and animals were rarely the most salient stimuli in the multi-item arrays the infants viewed.

In a previous comparison of attentional capture by faces vs. salience in cartoon stimuli, Frank et al. (2009) found no reliable differences between the two for 6-month-olds, but the stimuli contained motion (of the characters and of background elements as the camera tracked across the scene). Motion can be detected even by very young infants (Banton and Bertenthal, 1997), perhaps due to the relative maturity at birth of low-level motion detection mechanisms in retinal and early cortical areas. Therefore, motion may have been particularly effective in capturing attention at the expense of faces. Gliga et al. (2009) reported infants' attentional capture by upright and inverted but not scrambled faces, concluding that configural aspects of faces are not necessary to attract infants' attention and favoring an account based on faces' color or amplitude spectra. Gliga et al. did not, however, test attentional capture in arrays without faces (nor did Di Giorgio et al., 2012), as we did, nor did they test effects of scrambling other stimulus categories. Therefore, the extent to which color or amplitude spectra can be accepted as the principal determinant of infant performance remains an open question.

Instead, we prefer an account of attentional capture by social stimuli that acknowledges a strong contribution of learning the importance of distinguishing between different animate entities, presumably for their social affordances. Young infants are inclined to respond to all three types of target we tested—faces, body parts, and animals—and such predispositions may form the basis for developmental trajectories promoting optimal social contact with conspecifics and other species. Three independent lines of evidence support this view. First, neonates recognize the correspondence between others' faces and their own (proposed as an important means of differentiating others; Meltzoff and Moore, 1997), and face discrimination skills are refined across the first year after birth through experience-dependent mechanisms (e.g., Pascalis et al., 2002). Second, infants are sensitive to eye contact (Farroni et al., 2004) and recognize aspects of goal-directedness in observed hand motions (Woodward, 1998). Goal detection may have roots in an unlearned capacity to discriminate simple motions of limbs and extremities, such as those that move toward or away from the body (Craighero et al., 2011), and further developments are facilitated by infants' own manual action experience (Sommerville et al., 2005). Third, young infants discriminate animate from inanimate motions (Frankenhuis et al., 2013) and attend to features that differentiate categories of animals (Mareschal and Quinn, 2001). These skills, in turn, may stem from intrinsic biases to attend to animals (Simion et al., 2008) and are facilitated by infants' continued exposure to other species (Kovack-Lesh et al., in press). Taken together, these studies and the present research support a view of development of social preferences built on predispositions to orient toward a limited set of features of animate stimuli, elaborated by the accrual of experience with their defining characteristics. 
Yet the present results remain compatible with theoretical views of the face as “special,” given their greater attention-getting and attention-holding properties relative to other social content. And our results demonstrate that these properties extend beyond en face presentations of wholly visible human faces characteristic of the majority of the literature, including the studies cited here, because the body part and animal stimuli we used contain part-faces in some instances and animal faces, respectively. These effects were diminished, but still greater than for any other stimulus class we tested.

Finally, consider the sex difference in face dwell times that we observed. When sex differences in social attention are reported, they are often consistent with a female advantage (but not always; Pascalis et al., 1998). For example, early reports documented greater interest in infants by women than by men (e.g., Feldman and Nash, 1978; Frodi and Lamb, 1978). More recently, there have been reports of superior performance by females in detection of gaze direction (Bayliss et al., 2005) and face recognition, in particular for female (own-sex) faces (Lewin and Herlitz, 2002), as well as sex differences in hemispheric specialization for face processing (Fischer et al., 2004; Proverbio et al., 2006). The female advantage may have its source in intrinsic differences between girls and boys in spontaneous attraction to faces (Connellan et al., 2000); this, combined with greater exposure to female faces during infancy (Quinn et al., 2002), may help explain the same-sex recognition advantage observed in adults (Ramsey-Rennels and Langlois, 2006).

Notably, however, many published reports of face perception in adults and infants do not mention sex differences. The reasons for this are unknown, but for many such reports, including most cited in this article, no analyses for sex differences are provided. It may be that the methods used in the present study are more sensitive than other paradigms (e.g., ERPs) in revealing performance differences between girls and boys, but this possibility remains speculative. Nevertheless, our results can help clarify the female advantage in social attention seen in adults. Our results reveal no sex differences in initial “attention-getting” attraction to social stimuli, but rather in the ensuing “attention-holding” properties of faces, in particular for girls. By 6 months, therefore, girls and boys are equally liable to show immediate interest in faces, body parts, and animals, but only faces hold girls' interest. Further investigations are necessary to examine the possibility of an “own-sex” face processing advantage in infancy, and the developmental consequences of such an advantage.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was supported by NIH Grants R01-HD40432 and R01-HD73535. We thank the UCLA Baby Lab crew for assistance with data collection and coding. We also thank the parents and infants who participated in this study.

References

- Alexander G. M., Wilcox T., Woods R. (2008). Sex differences in infants' visual interest in toys. Arch. Sex. Behav. 38, 427–433 10.1007/s10508-008-9430-1 [DOI] [PubMed] [Google Scholar]

- Banton T., Bertenthal B. I. (1997). Multiple developmental pathways for motion processing. Optom. Vis. Sci. 74, 751–760 10.1097/00006324-199709000-00023 [DOI] [PubMed] [Google Scholar]

- Bayliss A. P., di Pellegrino G., Tipper S. P. (2005). Sex differences in eye gaze and symbolic cuing of attention. Q. J. Exp. Psychol. A 58, 631–650 [DOI] [PubMed] [Google Scholar]

- Bukach C. M., Gauthier I., Tarr M. J. (2006). Beyond faces and modularity: the power of an expertise framework. Trends Cogn. Sci. 10, 159–166 10.1016/j.tics.2006.02.004 [DOI] [PubMed] [Google Scholar]

- Cohen L. B. (1972). Attention-getting and attention-holding processes of infant visual preferences. Child Dev. 43, 869–879 10.2307/1127638 [DOI] [PubMed] [Google Scholar]

- Connellan J., Baron-Cohen S., Wheelwright S., Batki A., Ahluwalia J. (2000). Sex differences in human neonatal social perception. Infant Behav. Dev. 23, 113–118 10.1016/S0163-638300032-1 [DOI] [Google Scholar]

- Craighero L., Leo I., Umiltà C., Simion F. (2011). Newborns' preference for goal-directed actions. Cognition 120, 26–32 10.1016/j.cognition.2011.02.011 [DOI] [PubMed] [Google Scholar]

- de Haan M., Pascalis O., Johnson M. H. (2002). Specialization of neural mechanisms underlying face recognition in human infants. J. Cogn. Neurosci. 14, 199–209 10.1162/089892902317236849 [DOI] [PubMed] [Google Scholar]

- Di Giorgio E., Turati C., Altoè G., Simion F. (2012). Face detection in complex visual displays: an eye-tracking study with 3- and 6-month-old infants and adults. J. Exp. Child Psychol. 113, 66–77 10.1016/j.jecp.2012.04.012 [DOI] [PubMed] [Google Scholar]

- Escalera S., Radeva P., Pujol O. (2007). “Complex salient regions for computer vision problems,” in IEEE Conference on Computer Vision and Pattern Recognition, (Minneapolis, MN: IEEE Computer Society; ), 1–8 [Google Scholar]

- Farah M. J., Tanaka J. W., Drain H. M. (1995). What causes the face inversion effect? J. Exp. Psychol. Hum. Percept. Perform. 21, 628–634 10.1037/0096-1523.21.3.628 [DOI] [PubMed] [Google Scholar]

- Farah M. J., Wilson K. D., Drain M., Tanaka J. N. (1998). What is “special” about face perception. Psychol. Rev. 105, 482–498 10.1037/0033-295X.105.3.482 [DOI] [PubMed] [Google Scholar]

- Farroni T., Csibra G., Simion T., Johnson M. H. (2002). Eye contact detection in humans from birth. Proc. Natl. Acad. Sci. U.S.A. 99, 9602–9605 10.1073/pnas.152159999 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farroni T., Massaccesi S., Pividori D., Johnson M. H. (2004). Gaze following in newborns. Infancy 5, 39–60. doi: 10.1207/s15327078in0501_2

- Feldman S. S., Nash S. C. (1978). Interest in babies during young adulthood. Child Dev. 49, 617–622. doi: 10.2307/1128228

- Fischer H., Sandblom J., Herlitz A., Fransson P., Wright C. I., Bäckman L. (2004). Sex-differential brain activation during exposure to female and male faces. Neuroreport 15, 235–238. doi: 10.1097/00001756-200402090-00004

- Frank M. C., Vul E., Johnson S. P. (2009). Development of infants' attention to faces during the first year. Cognition 110, 160–170. doi: 10.1016/j.cognition.2008.11.010

- Frankenhuis W. E., House B., Barrett C., Johnson S. P. (2013). Infants' perception of chasing. Cognition 126, 224–233. doi: 10.1016/j.cognition.2012.10.001

- Frodi A. M., Lamb M. E. (1978). Sex differences in responsiveness to infants: a developmental study of psychophysiological and behavioral responses. Child Dev. 49, 1182–1188. doi: 10.2307/1128758

- Gallay M., Baudoin J., Durand K., Lemoine C., Lécuyer R. (2006). Qualitative differences in the exploration of upright and upside-down faces in four-month-old infants: an eye-movement study. Child Dev. 77, 984–996. doi: 10.1111/j.1467-8624.2006.00914.x

- Gliga T., Elsabbagh M., Andravizou A., Johnson M. (2009). Faces attract infants' attention in complex displays. Infancy 14, 550–562. doi: 10.1080/15250000903144199

- Haith M. M., Bergman M. J., Moore M. J. (1977). Eye contact and face scanning in early infancy. Science 198, 853–855. doi: 10.1126/science.918670

- Hansen C. H., Hansen R. D. (1988). Finding the face in the crowd: an anger superiority effect. J. Pers. Soc. Psychol. 54, 917–924. doi: 10.1037/0022-3514.54.6.917

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2002). Human neural systems for face recognition and social communication. Biol. Psychiatry 51, 59–67. doi: 10.1016/S0006-3223(01)01330-0

- Hershler O., Hochstein S. (2005). At first sight: a high-level pop out effect for faces. Vision Res. 45, 1707–1724. doi: 10.1016/j.visres.2004.12.021

- Johnson M. H., Dziurawiec S., Ellis H., Morton J. (1991). Newborns' preferential tracking of face-like stimuli and its subsequent decline. Cognition 40, 1–19. doi: 10.1016/0010-0277(91)90045-6

- Khan R. A., Meyer A., Konik H., Bouakaz S. (2011). “Facial expression recognition using entropy and brightness features,” in Paper Presented at the 11th International Conference on Intelligent Systems Design and Applications (Córdoba, Spain)

- Kovack-Lesh K. A., Horst J. S., Oakes L. M. (2008). The cat is out of the bag: the joint influence of previous experience and looking behavior on infant categorization. Infancy 13, 285–307. doi: 10.1080/15250000802189428

- Kovack-Lesh K. A., McMurray B., Oakes L. M. (in press). Four-month-old infants' visual investigation of cats and dogs: relations with pet experience and attentional strategy. Dev. Psychol. doi: 10.1037/a0033195

- Kuehn S. M., Jolicoeur P. (1994). Impact of quality of the image, orientation, and similarity of the stimuli on visual search for faces. Perception 23, 95–122. doi: 10.1068/p230095

- Langton S. R. H., Law A. S., Burton A. M., Schweinberger S. R. (2008). Attentional capture by faces. Cognition 107, 330–342. doi: 10.1016/j.cognition.2007.07.012

- Lewin C., Herlitz A. (2002). Sex differences in face recognition: women's faces make the difference. Brain Cogn. 50, 121–128. doi: 10.1016/S0278-2626(02)00016-7

- Li H., Ngan K. N. (2008). Saliency model-based face segmentation and tracking in head-and-shoulder visual sequences. J. Vis. Commun. Image Rep. 19, 320–333. doi: 10.1016/j.jvcir.2008.04.001

- Mareschal D., Quinn P. C. (2001). Categorization in infancy. Trends Cogn. Sci. 5, 443–450. doi: 10.1016/S1364-6613(00)01752-6

- McKone E., Kanwisher N., Duchaine B. (2007). Can generic expertise explain special processing for faces? Trends Cogn. Sci. 11, 8–15. doi: 10.1016/j.tics.2006.11.002

- Meltzoff A. N., Moore M. K. (1977). Imitation of facial and manual gestures by human neonates. Science 198, 75–78. doi: 10.1126/science.198.4312.75

- Meltzoff A. N., Moore M. K. (1997). Explaining facial imitation: a theoretical model. Early Dev. Parenting 6, 179–192

- Mondloch C. J., Lewis T. L., Budreau R., Maurer D., Dannemiller J. L., Stephens B. R., et al. (1999). Face perception during early infancy. Psychol. Sci. 10, 419–422. doi: 10.1111/1467-9280.00179

- Morton J., Johnson M. H. (1991). CONSPEC and CONLERN: a two-process theory of infant face recognition. Psychol. Rev. 98, 164–181. doi: 10.1037/0033-295X.98.2.164

- Murray L., Trevarthen C. (1985). “Emotional regulation of interactions between two-month-olds and their mothers,” in Social Perception in Infants, eds Field T. M., Fox N. A. (Norwood, NJ: Ablex Publishers), 177–197

- Nothdurft H. C. (1993). Faces and facial expressions do not pop out. Perception 22, 1287–1298. doi: 10.1068/p221287

- Pascalis O., de Haan M., Nelson C. A. (2002). Is face processing species-specific during the first year of life? Science 296, 1321–1323. doi: 10.1126/science.1070223

- Pascalis O., de Haan M., Nelson C. A., de Schonen S. (1998). Long-term recognition memory for faces assessed by visual paired comparison in 3- and 6-month-old infants. J. Exp. Psychol. Learn. Mem. Cogn. 24, 249–260. doi: 10.1037/0278-7393.24.1.249

- Proverbio A. M., Brignone V., Matarazzo S., Del Zotto M., Zani A. (2006). Gender differences in hemispheric asymmetry for face processing. BMC Neurosci. 7:44. doi: 10.1186/1471-2202-7-44

- Purcell D. G., Stewart A. L., Skov R. B. (1996). It takes a confounded face to pop out of a crowd. Perception 25, 1091–1108. doi: 10.1068/p251091

- Quinn P. C., Yahr J., Kuhn A., Slater A. M., Pascalis O. (2002). Representation of the gender of human faces by infants: a preference for female. Perception 31, 1109–1121. doi: 10.1068/p3331

- Ramsey-Rennels J. L., Langlois J. H. (2006). Infants' differential processing of female and male faces. Curr. Dir. Psychol. Sci. 15, 59–62. doi: 10.1111/j.0963-7214.2006.00407.x

- Ro T., Friggel A., Lavie N. (2007). Attentional biases for faces and body parts. Vis. Cogn. 15, 322–348. doi: 10.1080/13506280600590434

- Ro T., Russell C., Lavie N. (2001). Changing faces: a detection advantage in the flicker paradigm. Psychol. Sci. 12, 94–99. doi: 10.1111/1467-9280.00317

- Rousselet G. A., Macé M. J., Fabre-Thorpe M. (2003). Is it an animal? Is it a human face? Fast processing in upright and inverted natural scenes. J. Vis. 3, 440–456. doi: 10.1167/3.6.5

- Schietecatte I., Roeyers H., Warreyn P. (2011). Can infants' orientation to social stimuli predict later joint attention skills? Br. J. Dev. Psychol. 30, 267–282. doi: 10.1111/j.2044-835X.2011.02039.x

- Simion F., Regolin L., Bulf H. (2008). A predisposition for biological motion in the newborn baby. Proc. Natl. Acad. Sci. U.S.A. 105, 809–813. doi: 10.1073/pnas.0707021105

- Sommerville J. A., Woodward A. L., Needham A. (2005). Action experience alters 3-month-old infants' perception of others' actions. Cognition 96, B1–B11. doi: 10.1016/j.cognition.2004.07.004

- Sugita Y. (2009). Innate face processing. Curr. Opin. Neurobiol. 19, 39–44. doi: 10.1016/j.conb.2009.03.001

- Turati C., Sangrigoli S., Ruel J., de Schonen S. (2004). Evidence of the face inversion effect in 4-month-old infants. Infancy 6, 275–297. doi: 10.1207/s15327078in0602_8

- Valenza E., Simion F., Macchi Cassia V., Umiltà C. (1996). Face preference at birth. J. Exp. Psychol. Hum. Percept. Perform. 22, 892–903

- Walker A. S. (1982). Intermodal perception of expressive behaviors by human infants. J. Exp. Child Psychol. 33, 514–535. doi: 10.1016/0022-0965(82)90063-7

- Walther D., Koch C. (2006). Modeling attention to salient proto-objects. Neural Netw. 19, 1395–1407. doi: 10.1016/j.neunet.2006.10.001

- Weinberg M. K., Tronick E. Z., Cohn J. F., Olson K. L. (1999). Gender differences in emotional expressivity and self-regulation during early infancy. Dev. Psychol. 35, 175–188. doi: 10.1037/0012-1649.35.1.175

- Woodward A. L. (1998). Infants selectively encode the goal object of an actor's reach. Cognition 69, 1–34. doi: 10.1016/S0010-0277(98)00058-4