Abstract

Neurons in the dorsal frontal and parietal cortex are thought to transform incoming visual signals into the spatial goals of saccades, a process known as target selection. Here, we used functional magnetic resonance imaging (fMRI) to test how target selection may generalize beyond visual transformations when auditory and semantic information is used for selection. We compared activity in the frontal and parietal cortex when subjects made visually, aurally, and semantically guided saccades to one of four dots. Selection was based on a visual cue (i.e., one of the dots blinked), an auditory cue (i.e., a white noise burst was emitted at one of the dots’ locations), or a semantic cue (i.e., the color of one of the dots was spoken). Although neural responses in frontal and parietal cortex were robust, they were non-specific with regard to the type of information used for target selection. However, decoders trained on the patterns of activity in the intraparietal sulcus could classify both the type of cue used for target selection and the direction of the saccade. Therefore, we find evidence that the posterior parietal cortex is involved in transforming multimodal inputs into general spatial representations that can be used to guide saccades.

Keywords: saccades, auditory, semantic, spatial cognition, fMRI

1. INTRODUCTION

We navigate a world of sensory stimuli by selecting among objects or specific locations that are most relevant to our current behavioral goals. Saccades, which are rapid ballistic eye movements, are the most common means by which we select, in this case foveate, a portion of the visual field for further processing. Saccades are typically directed towards salient visual objects or the abrupt onset of visual stimuli (Goldberg et al., 2006; Gottlieb et al., 1998; Schall and Hanes, 1993). In addition to visual information, the brain also uses acoustic information to guide saccades (Kikuchi-Yorioka and Sawaguchi, 2000; Mazzoni et al., 1996; Mullette-Gillman et al., 2005).

The frontal and parietal cortices are thought to play an important role, not only in visually guided saccades, but also in aurally guided saccades. Anatomically, neurons in dorsal frontal cortex and parietal cortex receive visual inputs from neurons in extrastriate areas and auditory spatial inputs from the caudal belt in temporoparietal cortex (Hackett et al., 1999; Romanski et al., 1999a; Romanski et al., 1999b). Spatial information is thus transmitted from perceptual areas to dorsal frontal and parietal cortices, and neurons in these regions may represent the spatial goals used for planning potential gaze shifts by combining signals from multiple input channels. Non-human primate studies have demonstrated that neuronal activity in frontal and parietal cortices may index the locus of visual attention (Bisley and Goldberg, 2003; Thompson et al., 2005), which is a reliable predictor of natural gaze. Within frontoparietal areas, sensory inputs may be transformed into the spatial coordinates useful for planning saccades, a process known as target selection.

Neuronal activity in frontal and parietal cortex increases when subjects make a saccade to an auditory target as well as a visual target, suggesting perhaps that neurons in frontoparietal regions represent spatial information in a supramodal way (Ahveninen et al., 2006; Bremmer et al., 2001a; Bremmer et al., 2001b; Bushara et al., 1999; Butters et al., 1970; Cohen and Andersen, 2000; Cohen et al., 2004; Cusack et al., 2000; Deouell and Soroker, 2000; Mazzoni et al., 1996; Mullette-Gillman et al., 2005; Stricanne et al., 1996; Warren et al., 2002). Consistent with non-human primate research, imaging studies with human subjects have shown that the activity in frontal and parietal regions increases when subjects make saccades towards visual targets (Beurze et al., 2009; Curtis and Connolly, 2008; Levy et al., 2007) and when they represent auditory space (Bushara et al., 1999; Tark and Curtis, 2009; Zatorre et al., 2002).

Indeed, neurons in frontal and parietal cortex are thought to represent the spatial positions of salient visual stimuli in maps of eye-centered space (Fecteau and Munoz, 2006; Gottlieb, 2007; Gottlieb et al., 1998; Kusunoki et al., 2000; Thompson and Bichot, 2005). It is not clear, however, how these regions in the human brain represent the salience of non-visual signals and convert those signals into motor commands. In the present study, we investigated whether regions in frontal and parietal cortex that have been associated with the planning of visually guided saccades are also involved in transforming auditory and semantic signals into saccade goals. We employed rapid event-related functional magnetic resonance imaging (fMRI) while subjects performed visually, aurally and semantically guided saccades. Based on previous electrophysiological findings (Mazzoni et al., 1996; Mullette-Gillman et al., 2005; Russo and Bruce, 1994; Stricanne et al., 1996) and theory about the roles of the dorsal frontoparietal network in attention (Bisley and Goldberg, 2010; Corbetta and Shulman, 2002; Ikkai and Curtis, 2011), we predicted that the frontoparietal network, particularly human frontal eye field (FEF) and intraparietal sulcus (IPS), would respond in a supramodal manner to the various saccade cues.

2. RESULTS

To test our hypothesis, we measured eye position and blood oxygen level-dependent (BOLD) activity in humans while they performed three types of saccade tasks that differed in the type of cue that guided the saccade. Subjects made saccades to either visually (i.e., one of the dots blinked), aurally (i.e., a white noise burst was emitted at one of the dots’ location), or semantically (i.e., the color of one of the dots was spoken) cued locations.

2.1. Behavioral results

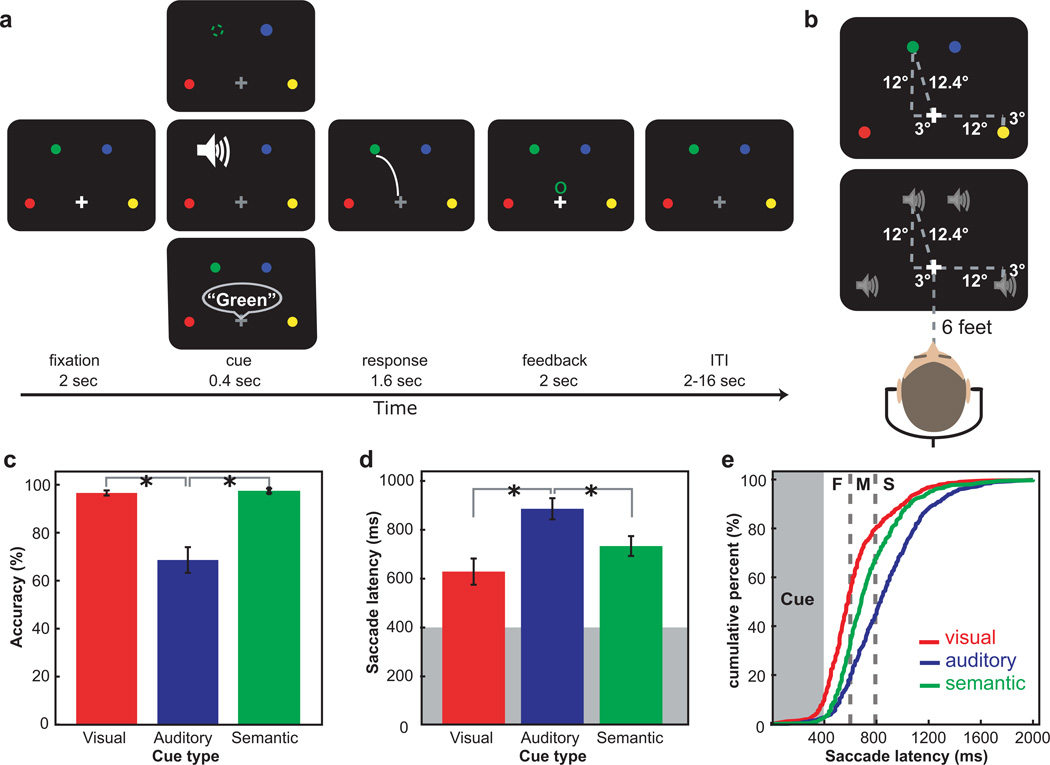

The accuracy of aurally guided saccades was significantly lower than that of the other two cue types (F(2, 22) = 24.894, P < 0.01), suggesting that the cue types were not equally difficult. The accuracy of visually and semantically guided saccades did not differ (t(11) = −0.615, P > 0.05) (Fig. 1c). Aurally guided saccades had longer latencies than visually (t(11) = −5.618, P < 0.01) and semantically guided saccades (t(11) = −2.146, P < 0.05). The latency of visually guided saccades did not differ from that of semantically guided saccades (t(11) = 2.678, P > 0.05) (Fig. 1d). Visually guided saccades were often initiated earlier than the other two types of saccades (Fig. 1e). Overall, the cue type influenced both the speed and the accuracy of target selection. To deal with the accuracy differences, we limited all further analyses to correct trials. Next, we asked what effect, if any, differences in saccade latency had on BOLD activity.

Figure 1.

a. The procedure of the saccade tasks. Each trial began with a white fixation cross and four colored dots. On visual trials (top), one of the dots briefly disappeared. On auditory trials (middle), a sound was presented at one of the dots’ locations. On semantic trials (bottom), the color of one of the dots was spoken. Subjects made a saccade to the indicated dot, after which feedback was given, followed by an inter-trial interval (ITI). b. Stimulus and speaker locations in the recording session prior to imaging. c. The accuracy of aurally guided saccades was significantly lower than that of visually and semantically guided saccades. d. Saccade latency was shorter for visually guided saccades than for the other two types. Error bars in (c) and (d) indicate the standard error of the mean. e. Cumulative distribution of saccade latency for each cue type. The gray box indicates the duration of stimulus presentation, and dashed lines indicate the cutoffs for the fast, medium and slow saccade latency bins (‘F’, ‘M’ and ‘S’, respectively). Visually guided saccades were initiated earlier than semantically and aurally guided saccades.

2.2. Surface-based statistical tests

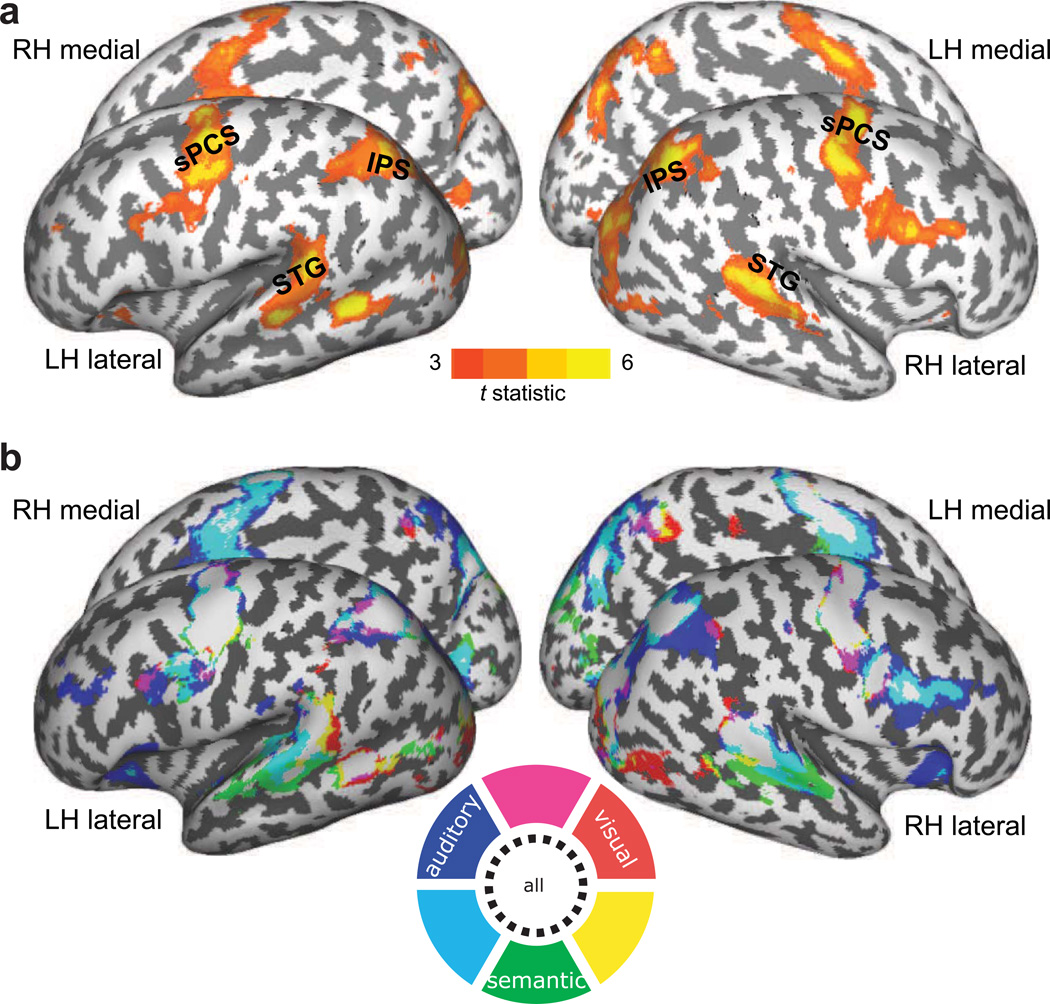

To quantitatively compare the cortical activations that correlated with saccade latency and cue type, we normalized each subject’s parameter maps and projected them onto a common cortical surface (see Methods). In general, as saccade latency increased, BOLD responses increased bilaterally in the superior precentral sulcus (sPCS), IPS, superior temporal gyrus (STG) and the right middle temporal gyrus across all cue types; no region was negatively correlated with saccade latency (Fig. 2a). The BOLD signal in the same portions of the frontal and parietal lobes correlated positively with latency for all three cue types, but activity in the right inferior parietal lobule correlated with latency only for aurally guided saccades. Activity in anterior STG correlated with latency only for semantically guided saccades, and activity in ventral lateral prefrontal regions correlated with latency for both aurally and semantically guided saccades (Fig. 2b). These latency effects are a potential confound when interpreting the main effects of cue type on BOLD responses. Therefore, we computed another GLM that orthogonalized saccade latency to the cue type covariate. This removes the variance of saccade latency from the parameter estimates associated with cue type.
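The latency-removal step can be illustrated with a minimal sketch. It assumes trial-level covariates (a real fMRI design matrix would first convolve them with a hemodynamic response function) and implements one way of meeting the stated aim: the cue-type covariate is made orthogonal to saccade latency by subtracting its projection onto latency, so that any variance the two share is credited to latency. The function name and simulated data are hypothetical; the exact procedure used in the study is described in Methods.

```python
import numpy as np


def orthogonalize(target, reference):
    """Return mean-centered `target` with its projection onto `reference` removed.

    In a GLM (with an intercept) containing both regressors, variance that
    `target` shares with `reference` is then attributed to `reference` alone.
    """
    ref = reference - reference.mean()
    tgt = target - target.mean()
    beta = (ref @ tgt) / (ref @ ref)
    return tgt - beta * ref


rng = np.random.default_rng(0)
n_trials = 120
# Hypothetical trial-level covariates: an indicator for aurally cued trials
# and a latency (s) that is, on average, longer on those trials.
auditory = (np.arange(n_trials) % 3 == 1).astype(float)
latency = 0.5 + 0.3 * auditory + rng.normal(0.0, 0.1, n_trials)

auditory_orth = orthogonalize(auditory, latency)
print("r(cue, latency) before:", round(float(np.corrcoef(auditory, latency)[0, 1]), 2))
print("r(cue, latency) after: ", round(float(np.corrcoef(auditory_orth, latency)[0, 1]), 2))
```

Before orthogonalization the two covariates are strongly correlated; afterwards the cue covariate carries only variance that latency cannot explain.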

Figure 2.

a. Cortical areas in which BOLD activity correlated with trial-to-trial saccade latency. Across cue types, activity in bilateral sPCS, IPS, posterior STG and the right inferior frontal sulcus was greater on trials in which saccades were slower to initiate. b. Cortical regions whose activity correlated with trial-to-trial saccade latency, shown separately for each cue type. Activity in bilateral sPCS, IPS and posterior STG increased with saccade latency for all cue types. Activity in anterior STG correlated with latency only for semantically guided saccades, and activity in the right inferior parietal lobule only for aurally guided saccades. Red, blue and green indicate visually, aurally and semantically guided saccades, respectively, and overlapping areas are marked in magenta, cyan, yellow or white, as illustrated in the color wheel. The map is thresholded at t >= 3.0.

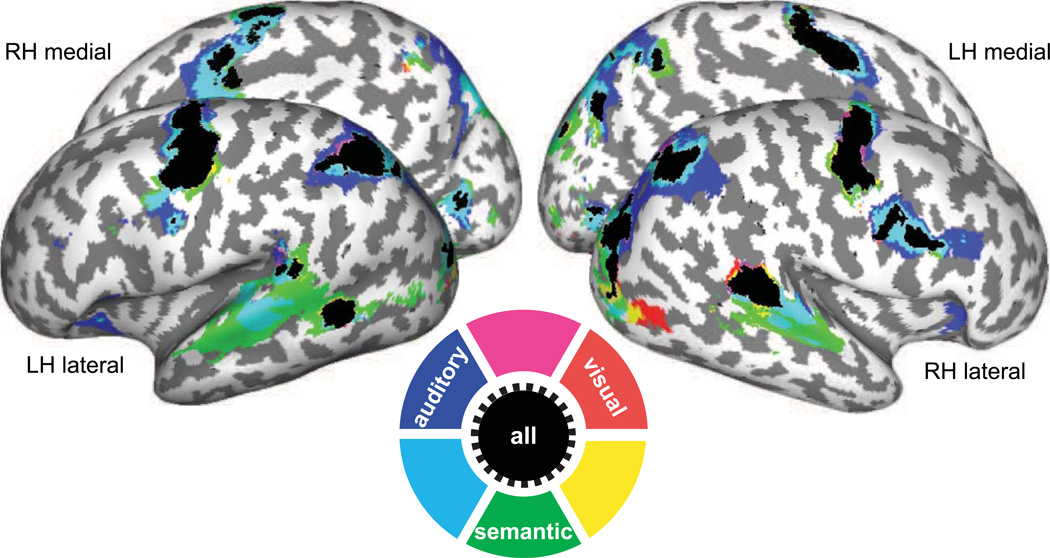

After removing the effects of saccade latency (see Methods), we still observed robust activations in bilateral sPCS, IPS, calcarine sulcus and left middle temporal gyrus for all cue types (Fig. 3). However, the activation maps in dorsal frontal and parietal cortex were indistinguishable across cue types; no differences were found between cue types, including between externally guided (visual, auditory) and internally guided (semantic) saccades. Consistent with its presumed role in auditory perception, activity in STG, as well as in the right inferior frontal sulcus, was significantly greater during aurally and semantically guided saccades than during visually guided saccades. These results suggest that the same portions of dorsal frontal and parietal cortex were equally active during target selection, regardless of the type of information that guided the saccade.

Figure 3.

Surface activation maps across cue types. Red, blue and green indicate areas that showed increased BOLD responses to visually, aurally and semantically guided saccades, respectively, and overlapping areas are marked in magenta, cyan, yellow or black, as illustrated in the color wheel. Significant activations are overlaid on a cortical surface rendering in which dark gray indicates sulci and light gray indicates gyri. Increased BOLD responses were found bilaterally in sPCS and IPS for all cue types and in the bilateral superior parietal lobule (SPL) during aurally and semantically guided saccades. The map is thresholded at t >= 3.0.

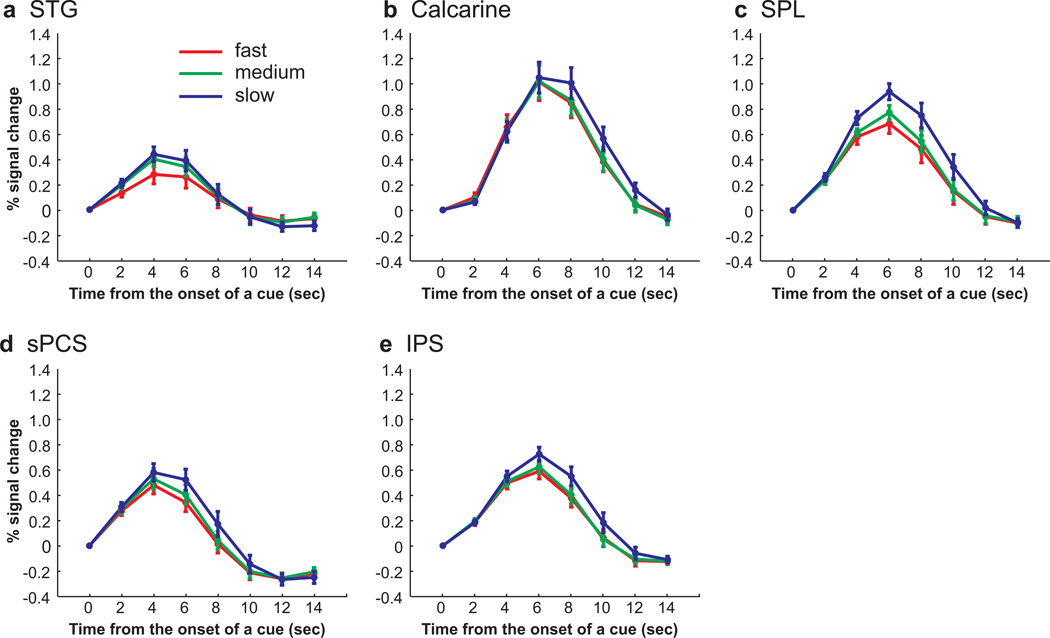

2.3. ROI time series analysis

To increase our sensitivity to detect differences, we followed up with an ROI-based approach that makes fewer assumptions about individual differences in the spatial distribution of brain activity (see Methods). We extracted the time series from several ROIs to test the hypothesis that BOLD responses in specific regions differed according to the information used to guide the saccade. To quantitatively evaluate differences in BOLD time courses across cue types, we averaged, for each subject, the signal from the three time points around the peak of the hemodynamic response (see Methods).
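A minimal sketch of this peak-window summary, assuming one sample per TR and assuming the window is centered on each time course's own maximum (the peak could equally be fixed from the group-average response). The function name and the time course are hypothetical.

```python
import numpy as np


def peak_window_amplitude(timecourse, half_width=1):
    """Mean of the peak sample and its `half_width` neighbors on each side."""
    tc = np.asarray(timecourse, dtype=float)
    peak = int(np.argmax(tc))
    # Clip the window at the edges of the time course.
    start, stop = max(peak - half_width, 0), min(peak + half_width + 1, tc.size)
    return float(tc[start:stop].mean())


# Hypothetical cue-locked time course for one subject and ROI
# (% signal change, one value per TR).
timecourse = [0.00, 0.05, 0.20, 0.35, 0.30, 0.15, 0.02, -0.05]
print(round(peak_window_amplitude(timecourse), 3))  # → 0.283
```

With `half_width=1` the window spans three samples, matching the three time points described above.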

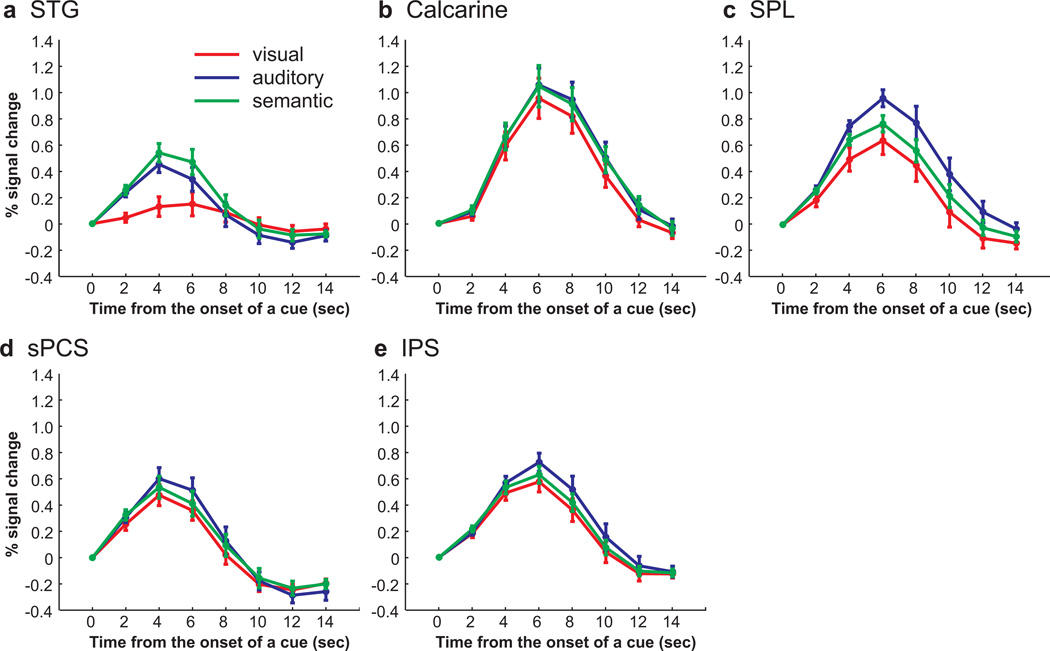

Although we observed large BOLD responses time-locked to the cue in the calcarine sulcus, presumably the substrate for early visual areas, there were no differences among the three cue types (F(2, 22) = 2.987, P > 0.05) (Fig. 4b). The lack of an effect in visual cortex is presumably the result of several factors. The four colored dots were on the screen continuously, and the visual offset lasted only 400 ms on visually cued trials. Such small stimuli, combined with such a brief transient, might be difficult to detect unless one uses ROIs limited to the retinotopic subregions corresponding to the location of the visual cue. The BOLD responses in STG differed according to cue type (F(2, 22) = 67.166, P < 0.001). A phasic response was observed when subjects made saccades to an aurally or semantically cued location, but not when they made visually guided saccades (Fig. 4a). Activity during aurally guided saccades was greater than during visually guided saccades (t(11) = −8.854, P < 0.001), and semantically guided saccades evoked greater activity than both aurally guided saccades (t(11) = −3.402, P < 0.01) and visually guided saccades (t(11) = −9.458, P < 0.001). These differences are not surprising, given the increased demands on auditory perceptual processing during aurally and semantically guided saccades, but they do confirm the sensitivity of our method and approach.

Figure 4.

Group-averaged BOLD time courses for each cue type in each ROI, time-locked to cue onset. a. In STG, semantically and aurally guided saccades evoked larger BOLD responses than visually guided saccades. b. No differences were found across cue types in the calcarine sulcus. c. In SPL, aurally cued saccades evoked larger responses than visually or semantically cued saccades. d. In sPCS, equally strong responses were evoked by all cue types. e. In IPS, activity during aurally guided saccades was greater than during visually guided saccades.

We next tested whether responses in parietal (i.e., SPL, IPS) and frontal (i.e., sPCS) cortex differed according to the cue type used for target selection. In these ROIs, we observed robust phasic responses time-locked to the cue that differed according to cue type (SPL, F(2, 22) = 9.001, P < 0.001; IPS, F(2, 22) = 3.823, P < 0.05; sPCS, F(2, 22) = 4.427, P < 0.05) (Fig. 4c–e). Activity during aurally guided saccades was greater than during visually guided saccades in all three ROIs (SPL, t(11) = −3.694, P < 0.01; IPS, t(11) = −3.461, P < 0.01; sPCS, t(11) = −2.477, P < 0.05). In SPL and sPCS, aurally guided saccades also evoked greater responses than semantically guided saccades (t(11) = 3.230, P < 0.01 and t(11) = 2.557, P < 0.05, respectively), but in IPS there was no significant difference between aurally and semantically guided saccades (t(11) = 1.688, P > 0.05). Finally, activity did not differ between visually and semantically guided saccades in any of the three ROIs (SPL, t(11) = −1.680, P > 0.05; IPS, t(11) = −0.9511, P > 0.05; sPCS, t(11) = −0.917, P > 0.05).

Our time-course analyses of the frontal and parietal ROIs suggested that BOLD responses were greater on aurally cued trials than on visually and semantically cued trials. However, our surface-based statistical parametric mapping analyses failed to detect any reliable differences across subjects. This could mean that the time-course analyses were more sensitive, or less affected by individual differences in the spatial distribution of brain activity. Alternatively, the differences between the time courses for each cue type could be confounded by differences in saccade latency across cue types. Recall that the BOLD signal correlated with saccade latency in many cortical areas (Fig. 2a), and that the variance due to saccade latency was removed from the GLMs that yielded no differences in cue type (Fig. 3; see Methods). To test whether differences in the BOLD time series across cue types can be accounted for by saccade latency, we sorted all trials into three bins according to their saccade latency (i.e., fast, medium and slow) and analyzed the time series from the same voxels in each ROI. The fast bin contained 54.8% of visually guided, 18.1% of aurally guided and 26.5% of semantically guided saccade trials; the medium bin contained 30.5%, 30.7% and 40% of these trials, respectively; and the slow bin contained 14.7%, 51.2% and 33.5%, respectively. The mean saccade latency from cue onset was 502 ms, 693 ms and 1032 ms for the fast, medium and slow bins, respectively. In all latency bins, the BOLD responses were phasic and time-locked to the cue (Fig. 5). Moreover, ANOVAs confirmed that these responses showed a significant linear effect (i.e., fast < medium < slow) in all ROIs except the calcarine sulcus (sPCS, F_linear(1, 11) = 7.111, P < 0.05; IPS, F_linear(1, 11) = 9.546, P < 0.05; SPL, F_linear(1, 11) = 17.843, P < 0.05; STG, F_linear(1, 11) = 6.691, P < 0.05; calcarine sulcus, F_linear(1, 11) = 1.902, P > 0.05). These results, combined with those depicted in Figs. 2 and 3, suggest that the larger responses to aurally guided saccades in frontal and parietal ROIs can be explained by longer saccade latencies, or longer time-on-task. We therefore conclude that activity in frontal and parietal regions does not differ according to cue type.
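The binning and trend test can be sketched as follows. The sketch assumes that the bins are pooled terciles of latency across all correct trials (the text does not state the cutoffs, but the reported percentages average to roughly one third per bin) and that the linear effect is a within-subject contrast with weights (−1, 0, 1), whose F(1, n − 1) equals the squared one-sample t statistic of the contrast scores. Function names and simulated data are hypothetical.

```python
import numpy as np


def tercile_bins(latencies):
    """Label trials 0 (fast), 1 (medium) or 2 (slow) using pooled tercile cutoffs."""
    lat = np.asarray(latencies, dtype=float)
    cutoffs = np.percentile(lat, [100 / 3, 200 / 3])
    return np.digitize(lat, cutoffs)


def percent_per_bin(bins, cue):
    """Percentage of each cue type's trials that fall into each latency bin."""
    return {str(c): [float(100.0 * np.mean(bins[cue == c] == k)) for k in range(3)]
            for c in np.unique(cue)}


def linear_trend_F(amplitudes):
    """F(1, n - 1) for a within-subject linear contrast over fast/medium/slow.

    `amplitudes` is (n_subjects, 3). For a single-df within-subject contrast,
    F equals the squared one-sample t statistic of the contrast scores.
    """
    scores = np.asarray(amplitudes, dtype=float) @ np.array([-1.0, 0.0, 1.0])
    n = scores.size
    t = scores.mean() / (scores.std(ddof=1) / np.sqrt(n))
    return float(t ** 2)


rng = np.random.default_rng(1)
# Hypothetical correct trials: latencies (s) shifted by cue type.
cue = np.repeat(np.array(["visual", "auditory", "semantic"]), 100)
mean_latency = {"visual": 0.45, "auditory": 0.85, "semantic": 0.65}
latency = np.array([mean_latency[c] for c in cue]) + rng.normal(0.0, 0.15, cue.size)
bins = tercile_bins(latency)
print(percent_per_bin(bins, cue))

# Hypothetical peak amplitudes (% signal change) for 12 subjects x 3 bins.
amps = np.array([0.30, 0.34, 0.40]) + rng.normal(0.0, 0.05, (12, 3))
print("F_linear(1, 11) =", round(linear_trend_F(amps), 2))
```

Because each cue type's percentages are computed over its own trials, each list sums to 100, which is also how the percentages in the text are organized.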

Figure 5.

Group-averaged BOLD time courses binned by saccade latency in each ROI, time-locked to cue onset. a–e. STG, calcarine sulcus, SPL, sPCS and IPS. BOLD responses increased linearly with saccade latency in all ROIs except the calcarine sulcus.

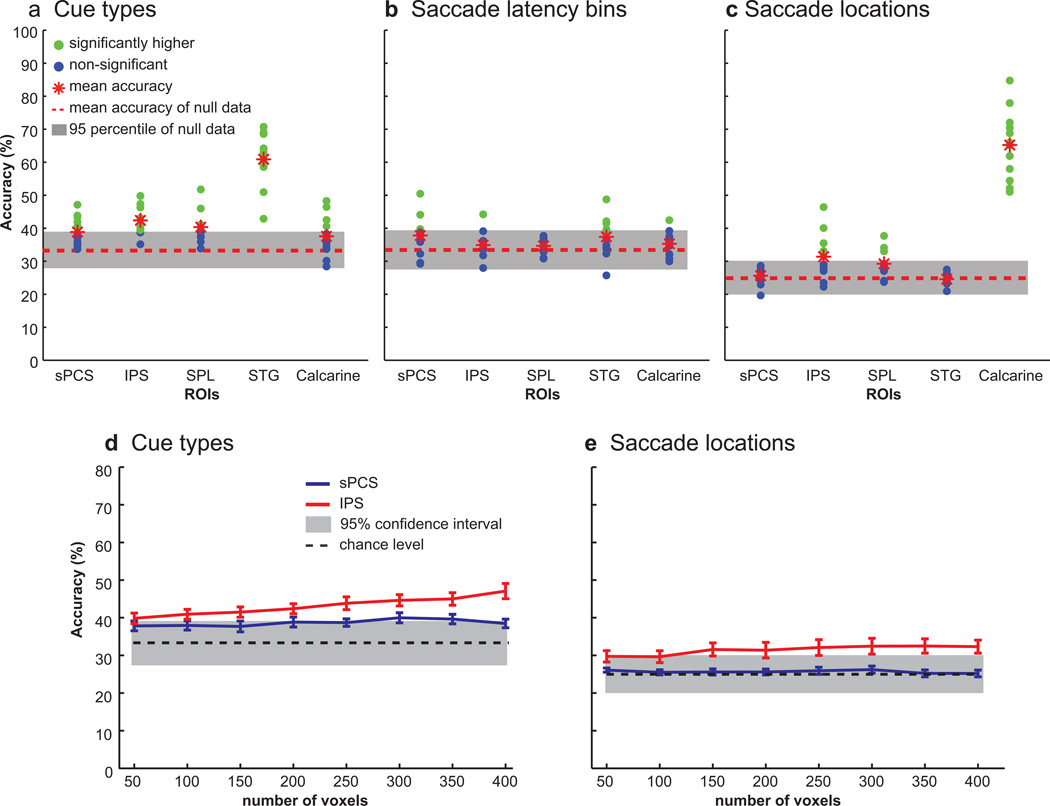

2.4. Multi-voxel pattern analysis (MVPA) results

The surface-based and time-course analyses did not reveal any gross patterns of activity indicative of cue-type specificity in frontal or parietal cortex. We therefore asked whether multi-voxel patterns of activity contain information about the cue type, about the location of the saccade goal and, as a control, about the latency of saccade onset. We used MVPA to assess whether multi-voxel patterns of activity could predict the type of input used for saccade selection and the location to which a saccade was made (see Methods). Multi-voxel patterns of activity in STG and IPS could be decoded to predict the type of cue used to guide saccades (mean accuracy in STG = 61%, IPS = 43%; chance = 33%) (Fig. 6a). It is not surprising that multi-voxel patterns of activity discriminated cue type in STG, since our univariate analyses also showed differences according to cue type. In IPS, however, we did not observe any differences in the surface-based or time-course analyses above, yet the multi-voxel patterns of activity could reliably predict the type of cue used to guide the saccade. Decoding was not significant in any other ROI, where significance was determined by comparison with permuted null distributions of decoding accuracies (mean accuracy = 33%; 5th percentile = 27%; 95th percentile = 39%).
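The decoding-plus-permutation logic can be sketched with NumPy alone. For illustration we swap in a correlation-based nearest-centroid classifier with leave-one-run-out cross-validation; the classifier and cross-validation scheme used in the study are given in Methods and may differ. As in the text, significance is judged against a null distribution of accuracies obtained by shuffling labels (here, within each run). Run counts, voxel counts and the simulated patterns are hypothetical.

```python
import numpy as np


def nearest_centroid_cv(patterns, labels, runs):
    """Leave-one-run-out accuracy of a correlation-based nearest-centroid classifier."""
    correct = 0
    for run in np.unique(runs):
        train, test = runs != run, runs == run
        classes = np.unique(labels[train])
        centroids = [patterns[train & (labels == c)].mean(axis=0) for c in classes]
        for x, y in zip(patterns[test], labels[test]):
            # Pearson correlation between the test pattern and each class centroid.
            r = [np.corrcoef(x, m)[0, 1] for m in centroids]
            correct += int(classes[int(np.argmax(r))] == y)
    return correct / labels.size


def permutation_null(patterns, labels, runs, n_perm=100, seed=0):
    """Null accuracies obtained by shuffling labels within each run."""
    rng = np.random.default_rng(seed)
    null = np.empty(n_perm)
    for i in range(n_perm):
        shuffled = labels.copy()
        for run in np.unique(runs):
            idx = np.flatnonzero(runs == run)
            shuffled[idx] = rng.permutation(labels[idx])
        null[i] = nearest_centroid_cv(patterns, shuffled, runs)
    return null


# Hypothetical data: 6 runs x 12 trials (4 per cue type) x 50 voxels.
rng = np.random.default_rng(42)
n_runs, n_per_cue, n_vox = 6, 4, 50
cue_types = np.array(["visual", "auditory", "semantic"])
labels = np.tile(np.repeat(cue_types, n_per_cue), n_runs)
runs = np.repeat(np.arange(n_runs), n_per_cue * cue_types.size)
cue_signal = {c: rng.normal(0.0, 1.0, n_vox) for c in cue_types}
patterns = (np.array([0.5 * cue_signal[c] for c in labels])
            + rng.normal(0.0, 1.0, (labels.size, n_vox)))

accuracy = nearest_centroid_cv(patterns, labels, runs)
null = permutation_null(patterns, labels, runs)
low, mid, high = np.percentile(null, [5, 50, 95])
print(f"accuracy = {accuracy:.2f}; null 5th/50th/95th = {low:.2f}/{mid:.2f}/{high:.2f}")
print("above the 95th percentile of the null:", accuracy > high)
```

Shuffling within runs keeps the run structure of the data intact, so the null distribution reflects only the loss of the label-pattern correspondence.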

Figure 6.

Decoding accuracies in each ROI. a. Decoding accuracy for cue type (i.e., visual, auditory or semantic cue). Multi-voxel patterns of activity in IPS and STG could reliably predict which information led to a saccade. b. Patterns of activity did not predict saccade latency bin (i.e., fast, medium or slow) in any ROI. c. Multi-voxel patterns of activity in IPS and calcarine sulcus could predict the location to which a saccade was made. In (a)–(c), gray boxes indicate the 95th percentile of the null distribution estimated with randomization tests. Red dashed lines indicate the mean accuracy of the null data, which is chance level (33.3% for cue type and saccade latency bin, and 25% for saccade location); green dots indicate subjects whose decoding accuracy was higher than chance in a randomization test, and blue dots indicate subjects whose decoding accuracy was not. Red asterisks indicate mean decoding accuracy across subjects. d–e. Decoding accuracy of sPCS and IPS (blue and red lines, respectively) for discriminating (d) cue types and (e) saccade locations with different numbers of voxels. Accuracy did not increase appreciably as the number of voxels was increased from 50 to 400.

Since we observed significantly longer saccade latencies on aurally guided saccade trials, we could not exclude the possibility that the classifier was using BOLD patterns associated with different latencies to predict the type of cue used to guide the saccade. To test this possibility, we asked whether multi-voxel patterns of activity could predict saccade latency, binned into the fast, medium and slow categories. Decoders could not predict saccade latency in any ROI (all mean accuracies < 39%) (Fig. 6b). Therefore, the above-chance decoding of cue type in IPS is unlikely to be the result of unequal saccade latencies across cue types.

Next, we tested whether multi-voxel patterns of activity could predict the direction of the saccade, regardless of cue type. Decoding was significantly above chance in both the IPS and calcarine sulcus ROIs (mean accuracy in IPS = 32%, calcarine sulcus = 65%; chance = 25%) (Fig. 6c). Furthermore, location decoding accuracy remained above chance in IPS and calcarine sulcus when classifiers were trained on any two cue types and tested on the remaining cue type (all mean accuracies > 30%). In no other ROI was decoding significantly different from the null distribution (mean accuracy = 25%; 5th percentile = 20%; 95th percentile = 30%).
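The cross-cue generalization test can be sketched in a similar hypothetical setting: a classifier is trained on location labels from two cue types and tested on the third, so above-chance accuracy requires a location code shared across cue types. The correlation-based nearest-centroid classifier and the simulated data (a location pattern common to all cue types plus a cue-specific offset) are assumptions for illustration, not the study's pipeline.

```python
import numpy as np


def _center_unit_rows(a):
    """Center and unit-normalize rows so that row dot products are Pearson correlations."""
    a = a - a.mean(axis=1, keepdims=True)
    return a / np.linalg.norm(a, axis=1, keepdims=True)


def cross_cue_accuracy(patterns, location, cue):
    """Location decoding trained on two cue types and tested on the held-out one."""
    scores = {}
    for held_out in np.unique(cue):
        train = cue != held_out
        classes = np.unique(location[train])
        centroids = np.array([patterns[train & (location == k)].mean(axis=0)
                              for k in classes])
        similarity = _center_unit_rows(patterns[~train]) @ _center_unit_rows(centroids).T
        predicted = classes[np.argmax(similarity, axis=1)]
        scores[str(held_out)] = float(np.mean(predicted == location[~train]))
    return scores


# Hypothetical data: 3 cue types x 4 locations x 15 trials x 60 voxels.
rng = np.random.default_rng(7)
n_vox, n_rep = 60, 15
cue_types = np.array(["visual", "auditory", "semantic"])
cue = np.repeat(cue_types, 4 * n_rep)
location = np.tile(np.repeat(np.arange(4), n_rep), cue_types.size)
loc_signal = rng.normal(0.0, 1.0, (4, n_vox))  # shared across cue types
cue_signal = {c: rng.normal(0.0, 1.0, n_vox) for c in cue_types}
patterns = (0.6 * loc_signal[location]
            + np.array([0.5 * cue_signal[c] for c in cue])
            + rng.normal(0.0, 1.0, (cue.size, n_vox)))
print(cross_cue_accuracy(patterns, location, cue))  # chance = 0.25
```

If the simulated location patterns differed between cue types, held-out accuracy would fall toward 0.25, which is what makes this a test of a cue-general spatial code.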

To see whether the number of voxels influenced our results, we reran the same analyses in sPCS and IPS with the number of voxels varying from 50 to 400. Decoding accuracies did not differ appreciably across numbers of voxels (Fig. 6d–e).

3. DISCUSSION

In the present study, we investigated whether regions in frontal and parietal cortex that have been associated with the planning of visually guided saccades are also involved in transforming auditory and semantic signals into saccade goals. We found that during visually, aurally and semantically guided saccades, the same portions of dorsal frontal and parietal cortex were significantly, but equally, active. Time courses from these areas suggested that aurally guided saccades evoked greater activity than visually and semantically guided saccades. However, since saccade latencies were longer in the auditory condition and the BOLD signal increased with saccade latency, this difference is likely due to longer time-on-task (Yarkoni et al., 2009). Indeed, when we removed the variance due to saccade latency, the increased BOLD response in the auditory condition, compared to the visual and semantic conditions, disappeared. Although these univariate analyses revealed no differences in frontal and parietal activity, the multi-voxel patterns of activity in IPS could be decoded to predict both the type of cue used to guide the saccade and the location to which the saccade was made.

3.1. BOLD responses scale with saccade latency

We found that BOLD responses across the cortex tended to be greater as saccade onset times lengthened. This effect held both within each cue type and across cue types. There are several potential causes of this correlation. First, the processing time required to encode the cue likely varied across cue types. The location of the visual cue could be detected the instant the dot disappeared. The encoding of the auditory cues, both the spoken words and the noise bursts, required longer integration windows. For instance, the spoken color word could not be interpreted until at least the end of the first phoneme was heard (e.g., “gr” for green; “bl” for blue), which took 80–100 ms for the words used here. Since the locations of the colored targets did not change during the experiment, once the color word was recognized, the matching target could be rapidly selected. These differences could account for why saccades to visually cued locations were faster than saccades to semantically cued locations.

Why were saccades faster to semantically than to aurally cued locations? Sound location must be inferred by analyzing binaural and spectral cues (Blauert, 1997; Cohen and Knudsen, 1999), a comparative process that must integrate acoustic information over longer epochs of time. Given that the sound stimulus was presented in a noisy scanner environment, sound localization may have been particularly taxing. Even in a quiet environment, shifting gaze towards a sound source is more difficult than shifting gaze towards a visual source, especially in the current case, where subjects were required to discriminate closely spaced sounds because of the environmental constraints of the MRI setting. Indeed, previous studies have demonstrated that saccades to an aurally cued location take longer to initiate than visually guided saccades (Yao and Peck, 1997; Zahn et al., 1978). The longer latency of aurally guided saccades, then, may reflect the difficulty of separating the sound signal from the scanner noise, combined with the longer integration time of stimulus processing.

It is unlikely that an area like the FEF, for example, contains neurons that support computations related to sound localization or semantic decomposition. So why does its activity scale with saccade latency? One probable explanation is that neurons that code for the locations of the four potential saccade targets increase their activity at the beginning of each trial, in advance of selection (Cisek, 2007; Curtis and Connolly, 2008; Lee et al., 2006; Lee and Keller, 2008). The increased BOLD signal may reflect the integration of this elevated activity over the period until the target is finally selected, a period whose length depends on the durations of computations performed in other brain areas.
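The integration account can be made concrete with a toy simulation. It assumes that activity steps up at cue onset and stays at a constant level until the saccade, and that the BOLD response is this boxcar convolved with a single-gamma hemodynamic response function; neither assumption is the study's model. Using the mean latencies of the fast, medium and slow bins reported in Section 2.3 as boxcar durations, the predicted peak grows almost in proportion to latency even though the level of activity is identical.

```python
import numpy as np
from math import factorial

DT = 0.01                      # simulation step (s)
T = np.arange(0.0, 30.0, DT)   # 30 s of simulated time


def gamma_hrf(t, shape=6):
    """Single-gamma HRF, t**(shape-1) * exp(-t) / (shape-1)!, peaking at t = shape - 1 s."""
    return t ** (shape - 1) * np.exp(-t) / factorial(shape - 1)


def predicted_bold_peak(duration_s, level=1.0):
    """Peak of a boxcar of elevated activity (cue onset to saccade) convolved with the HRF."""
    neural = np.where(T < duration_s, level, 0.0)
    bold = np.convolve(neural, gamma_hrf(T))[: T.size] * DT
    return float(bold.max())


# Mean cue-to-saccade latencies (s) of the fast, medium and slow bins (Section 2.3).
for name, latency in [("fast", 0.502), ("medium", 0.693), ("slow", 1.032)]:
    print(f"{name:>6}: predicted peak = {predicted_bold_peak(latency):.3f}")
```

For activity lasting much less than the time to peak of the HRF, the convolution is close to duration times the HRF, which is why the predicted peaks scale nearly linearly with latency.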

3.2. Acoustic signal processing in STG

Cue types differentially modulated activity in STG: semantically guided saccades elicited greater responses than aurally guided saccades, and aurally guided saccades evoked greater responses than visually guided saccades. Additionally, the multi-voxel patterns of activity in STG could be decoded to predict cue type. Given that STG neurons are believed to process acoustic signals (Alain et al., 2001; Binder et al., 2000; Bushara et al., 1999; Zatorre et al., 2002), it is not surprising that responses were higher when the cue was presented aurally (i.e., noise bursts and spoken color words) than when it was presented visually. Similarly, the greater STG response to semantically than to aurally guided saccades is consistent with past findings that speech sounds evoke greater activity than non-speech sounds (Price et al., 2005; Uppenkamp et al., 2006; Vouloumanos et al., 2001). Moreover, neurons in monkey caudal STG represent auditory spatial information (Benson et al., 1981; Hackett et al., 1999; Leinonen et al., 1980; Rauschecker and Tian, 2000; Romanski et al., 1999b; Tian et al., 2001). Therefore, the enhanced BOLD responses associated with aurally and semantically guided saccades may reflect the complexity of the acoustic analysis, the spatial perception of the acoustic stimulus, or both.

3.3. Target selection in the frontal and parietal cortex

Our main aim was to test whether BOLD activity in portions of the dorsal frontoparietal attention network (Corbetta and Shulman, 2002) was sensitive to the type of cue used to guide target selection, in this case the selection of saccade goals. We found that the type of cue, whether visual, auditory, or semantic, did not modulate the strength of BOLD responses in frontal or parietal cortex once the latency differences associated with each cue type were removed. BOLD activity did increase with saccade latency, even within each cue type, as discussed above. However, these differences are likely to be related to time-on-task differences in perceptual processing and not to target selection per se.

Nonetheless, we cannot rule out the possibility that different subsets of neurons in frontal and parietal cortex select saccade goals depending on the type of relevant information. If such modality-dependent neurons were not spatially segregated within sPCS and IPS, our pooling of responses over voxels and individuals would obscure any differences in cortical activation maps. To test this possibility, we asked whether each subject's multi-voxel patterns of activity were predictive of the different cue types. In the IPS, but not the sPCS, the type of cue used to guide the saccade could be decoded from the multi-voxel pattern of activity. Thus, despite no differences in the overall level of BOLD activity, the spatial pattern of BOLD responses contained information about the type of saccade cue. This suggests that the population of neurons from which we are sampling contains neurons with preferences for visual, auditory, or semantic cues. Indeed, electrophysiological studies in non-human primates have demonstrated that some prefrontal and parietal neurons respond selectively to either visually or aurally guided saccades (Azuma and Suzuki, 1984; Kikuchi-Yorioka and Sawaguchi, 2000; Mullette-Gillman et al., 2005; Mullette-Gillman et al., 2009; Russo and Bruce, 1994). Alternatively, since BOLD signals may reflect inputs from neuronal activity elsewhere in the brain (Heeger and Ress, 2002; Logothetis et al., 2001), the classifiers may be sensitive to these disparate input signals from the parts of the brain that analyze visual, auditory, and semantic information. In that case, patterns of activity would differ according to the distribution of neurons receiving the cue-specific inputs. Another possibility is that the classifiers were sensitive to differences in the ways that the cues must be transformed into spatial goals. Visual cues are already in retinal, or eye-centered, coordinates and need not be transformed into another frame of reference. Aural cues, in contrast, are initially coded in cranial, or head-centered, coordinates. The transformation from a head-centered to an eye-centered frame of reference has been linked to neural activity in the monkey parietal cortex (Andersen and Zipser, 1988; Mazzoni et al., 1996; Mullette-Gillman et al., 2005; Stricanne et al., 1996).

It is not clear why we were not able to reliably decode cue type from the multi-voxel pattern of activity in the sPCS. The pattern was predictive of the saccade cue in only half of the subjects, and therefore we must be tentative in our conclusions regarding the role of the sPCS in the multimodal transformation of sensory cues into saccade plans.
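The head- to eye-centered transformation discussed in this section can be written, to a first approximation, as a subtraction: the eye-centered location of a sound equals its head-centered location minus the current eye-in-head position. The sketch uses the four target offsets from Section 4.2 and assumes the head faces the fixation cross; it shows that the two frames coincide during central fixation but diverge as soon as gaze deviates. The function name and the additive-angle approximation are illustrative assumptions, not a model of parietal computation.

```python
import numpy as np

# Target offsets from straight ahead in degrees (x rightward, y upward), from the
# locations described in Section 4.2, assuming the head faces the fixation cross.
TARGETS_HEAD = {
    "lower left": (-12.0, -3.0),
    "lower right": (12.0, -3.0),
    "upper left": (-3.0, 12.0),
    "upper right": (3.0, 12.0),
}


def head_to_eye(target_head, eye_in_head):
    """Eye-centered target position = head-centered position minus gaze direction.

    First-order approximation: angles are treated as additive, ignoring ocular
    torsion and the non-commutativity of 3-D rotations.
    """
    return tuple(np.subtract(target_head, eye_in_head).tolist())


for gaze in [(0.0, 0.0), (5.0, 0.0)]:  # central fixation vs. gaze 5 deg to the right
    eye_centered = {name: head_to_eye(pos, gaze) for name, pos in TARGETS_HEAD.items()}
    print(gaze, eye_centered)
```

With gaze at fixation, the eye-centered coordinates equal the head-centered ones; with gaze 5° to the right, every target shifts 5° leftward in eye-centered coordinates while its head-centered position is unchanged.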

We also found that the pattern of BOLD activity in IPS could be decoded to predict the location of the saccade target. Remarkably, these patterns of activity in IPS were general in nature, as they could discriminate saccade goals within and even across cue types (e.g., a classifier trained on visually and aurally guided saccade trials could predict the saccade goal on semantically guided saccade trials). In this case, the classifier may be sensitive to differences in the activity of populations of neurons organized in a topographic map of space, in which the spatial goal of the saccade is represented. The human IPS contains at least four retinotopically organized maps of contralateral space (Jerde et al., 2012; Kastner et al., 2007; Schluppeck et al., 2005; Silver et al., 2005; Swisher et al., 2007). Theoretically, the activity of topographically organized populations of neurons in posterior parietal cortex may represent a map of prioritized space whose readout could be used to guide target selection (Bisley and Goldberg, 2010; Colby and Goldberg, 1999; Fecteau and Munoz, 2006; Gottlieb, 2007; Serences and Yantis, 2006).

3.4 General conclusions

Here, we show that the same portions of the frontal and parietal cortex that are known to play important roles in visually guided saccade planning are also involved in planning saccades to sounds and to semantically cued locations. BOLD responses increased in many cortical areas as a function of saccade latency, challenging simple interpretations based solely on BOLD amplitude. However, multi-voxel patterns of activity in IPS predicted both the type of information used to select among saccade goals and the spatial goal of the saccade. These results suggest that a frontoparietal network transforms visual and non-visual inputs into saccade goals, possibly through the establishment of prioritized maps of space.

4. EXPERIMENTAL PROCEDURES

4.1. Subjects

Twelve neurologically healthy human subjects (7 females; ages 22–41) were recruited and paid for their participation. All subjects had normal hearing and normal or corrected-to-normal vision. All subjects gave written informed consent according to procedures approved by the human subjects Institutional Review Board at New York University.

4.2. Recording procedures

The day before scanning, we recorded white-noise bursts (400-ms duration, Polk Audio RM2350 speaker) emitted from four locations 6 feet in front of the subjects, using small microphones placed in their ear canals (KE 4-211-1 microphones, Sennheiser; Firewire 410 MIDI amplifier/digitizer, M-Audio). Playback of these custom recordings via stereo headphones preserves the perceived spatial quality of the emitted sounds, because the interaural level and timing differences, and the distortions caused by each individual's head shadow and pinna shape, are preserved. The four sound locations were each 12.4° from the center, as illustrated in Fig. 1b: two locations were 12° to the right or left of the center and 3° below fixation, and the other two were 3° to the right or left and 12° above fixation. Thus, the distance from the fixation cross to each target location was equated. These four recording locations corresponded to the locations of the visual stimuli in the experiment, so that subjects perceived each acoustic stimulus as emitted from a location occupied by a visual stimulus. In the scanner, these sounds were replayed via MRI-compatible stereo headphones (MR Confon GmbH) during the saccade tasks.
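Because each target lies 12° along one axis and 3° along the other, its distance from fixation is sqrt(12² + 3²) = sqrt(153) ≈ 12.37°, which rounds to the 12.4° reported. The snippet below checks this for all four locations, assuming the horizontal and vertical offsets combine as a flat-screen Euclidean distance (a small-angle approximation). The dictionary of coordinates is a hypothetical encoding of the layout described above, with positive y above fixation.

```python
import math

# Target positions (x = horizontal, y = vertical) in degrees of visual angle,
# as described in the text; positive y is above fixation.
TARGETS_DEG = {
    "lower left": (-12.0, -3.0),
    "lower right": (12.0, -3.0),
    "upper left": (-3.0, 12.0),
    "upper right": (3.0, 12.0),
}

def eccentricity(x_deg, y_deg):
    """Straight-line distance from fixation, treating the screen as flat."""
    return math.hypot(x_deg, y_deg)

for name, (x, y) in TARGETS_DEG.items():
    print(f"{name}: {eccentricity(x, y):.2f} deg")
```

Every location prints 12.37 deg, confirming that the four targets are equidistant from fixation.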

4.3. Tasks

The experimental stimuli were controlled by Matlab (MathWorks) and MGL (available at http://justingardner.net/mgl) and projected (Eiki LCXG100) onto a screen in the bore of the scanner, which subjects viewed through an angled mirror. We measured BOLD activity while subjects performed three types of saccade tasks (Fig. 1a). Because most subjects reported that the aurally guided saccade task was harder than the others, we increased the number of aurally guided saccade trials. Thus, each subject performed 180 trials, consisting of 52 visually guided, 74 aurally guided, and 52 semantically guided saccade trials; the mean number of correct trials was approximately equal across the three cue types (50.96 for visually guided, 51.15 for aurally guided, and 51.51 for semantically guided saccades). At the beginning of each trial, a white fixation cross (2 sec) indicated that a stimulus would be presented soon. When the fixation cross turned gray, a visual, auditory, or semantic cue was presented (400 ms), with cue types in pseudo-random order across trials. In visually guided saccade trials, one of the four colored dots disappeared for 400 ms and then reappeared; in aurally guided saccade trials, a white-noise burst from the subject's recording session was replayed so that the subject perceived it as emitted from one of the four locations; in semantically guided saccade trials, one of the color names (i.e., 'red', 'green', 'blue', or 'yellow') was spoken. As soon as the cue was given, subjects made an eye movement to the corresponding dot as fast as they could. Trials on which subjects did not respond within 1.6 sec were excluded from analysis. Feedback (a green 'o' for correct and a red 'x' for incorrect responses) was given (2 sec), followed by an inter-trial interval (ITI, 2–16 sec).
The three cue types and the cue locations were randomly intermixed within a block, and subjects did not know which type of cue would be given on the current trial until it was presented. To allow subjects to adapt to auditory space within the magnet despite the scanner noise, each subject practiced one block of the aurally guided saccade task in the scanner during the anatomical scans that preceded the experiment.
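A minimal sketch of how an intermixed trial list of this kind could be generated, and how the 1.6-sec response criterion could be applied. The per-type trial counts are passed in as parameters; drawing each trial's location and ITI uniformly at random is an assumption (the text specifies only pseudo-random intermixing and a 2–16 sec ITI range, and in practice ITIs are often multiples of the TR). The `Trial` record and both functions are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import Optional

LOCATIONS = ("lower left", "lower right", "upper left", "upper right")
RESPONSE_WINDOW_SEC = 1.6  # trials without a saccade within this window are excluded

@dataclass
class Trial:
    cue_type: str
    location: str
    iti_sec: float
    latency_sec: Optional[float] = None  # filled in after the trial is run

def make_trial_list(counts, seed=0):
    """Build trials for each cue type, then shuffle so cue types are intermixed.

    Locations and ITIs are drawn uniformly at random (an assumption).
    """
    rng = random.Random(seed)
    trials = [
        Trial(cue, rng.choice(LOCATIONS), rng.uniform(2.0, 16.0))
        for cue, n in counts.items()
        for _ in range(n)
    ]
    rng.shuffle(trials)
    return trials

def is_valid_response(trial):
    """True if a saccade was recorded within the response window."""
    return trial.latency_sec is not None and trial.latency_sec <= RESPONSE_WINDOW_SEC

trials = make_trial_list({"visual": 52, "auditory": 74, "semantic": 52})
```

Because the list is shuffled after it is built, the subject cannot anticipate the upcoming cue type, which is the property the design relies on.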

4.4. Oculomotor procedures

Eye position was monitored in the scanner at 1000 Hz with an infrared videographic camera equipped with a telephoto lens (Eyelink 1000, SR Research Ltd., Kanata, Ontario, Canada). Nine-point calibrations were performed at the beginning of the session and between runs when necessary. Eye movement data were transformed to degrees of visual angle, calibrated using a third-order polynomial algorithm that fit eye positions to known spatial positions, and scored offline with in-house software (GRAPES). Incorrect trials and trials in which subjects did not comply with task instructions (e.g., breaking fixation, excessive blinks) were excluded from further analysis. Most often, subjects made a single saccade; when they made saccades to more than one target before the feedback was presented, we used the last target fixated.
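The calibration and scoring steps can be sketched as a least-squares fit of a third-order polynomial in raw tracker coordinates (one output column per axis), followed by assigning the last saccade endpoint to the nearest target. A full bivariate cubic has 10 coefficients per axis, so it cannot be uniquely determined from nine calibration points alone; the term set actually used by GRAPES is not specified in the text. The sketch therefore fits the full cubic to a denser synthetic grid, and the 4° scoring tolerance and all function names are hypothetical.

```python
import numpy as np

def cubic_terms(x, y):
    """All monomials x**i * y**j with i + j <= 3 (10 columns)."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    return np.column_stack([x**i * y**j for i in range(4) for j in range(4 - i)])

def fit_calibration(raw_xy, target_deg):
    """Least-squares map from raw tracker units to degrees of visual angle."""
    design = cubic_terms(raw_xy[:, 0], raw_xy[:, 1])
    coefs, *_ = np.linalg.lstsq(design, target_deg, rcond=None)
    return coefs  # shape (10, 2): one column per output axis

def apply_calibration(coefs, raw_xy):
    return cubic_terms(raw_xy[:, 0], raw_xy[:, 1]) @ coefs

def last_fixated_target(endpoints_deg, targets_deg, tolerance_deg=4.0):
    """Index of the target nearest the last endpoint that lands within tolerance."""
    for point in endpoints_deg[::-1]:  # walk backwards from the final saccade
        dists = np.linalg.norm(targets_deg - np.asarray(point, float), axis=1)
        if dists.min() <= tolerance_deg:
            return int(dists.argmin())
    return None

# Synthetic check: 25 raw positions (normalized tracker units) mapped to degrees
# through a known, hypothetical cubic distortion that the fit should recover.
g = np.linspace(-1.0, 1.0, 5)
rx, ry = [a.ravel() for a in np.meshgrid(g, g)]
raw = np.column_stack([rx, ry])
deg = np.column_stack([15 * rx + 0.8 * rx**2 * ry, 15 * ry - 1.2 * ry**3 + 0.3 * rx])
coefs = fit_calibration(raw, deg)

# Targets ordered lower left, lower right, upper left, upper right.
targets = np.array([[-12.0, -3.0], [12.0, -3.0], [-3.0, 12.0], [3.0, 12.0]])
choice = last_fixated_target([(-11.5, -2.8), (2.7, 11.6)], targets)  # upper right
```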

4.5. Neuroimaging methods

We used fMRI at 3T (Allegra; Siemens, Erlangen, Germany) to measure BOLD changes in cortical activity. During each fMRI scan, a time series of volumes was acquired using a T2*-sensitive echo planar imaging pulse sequence (repetition time, 2000 ms; echo time, 30 ms; flip angle, 80°; 32 slices; 3-mm isotropic voxels; in-plane field of view, 192 × 192 mm; bandwidth, 2112 Hz). Images were acquired using a custom radio-frequency coil (NOVA Medical). High-resolution (1-mm isotropic voxels) magnetization-prepared rapid gradient echo three-dimensional T1-weighted scans were acquired for anatomical registration, segmentation, and display. To minimize head motion, subjects' heads were stabilized with foam padding.

4.6. fMRI data preprocessing and surface based statistical analysis

Post hoc image registration was used to correct for residual head motion (motion correction using the Linear Image Registration Tool from Oxford University's Center for Functional MRI of the Brain; Jenkinson et al., 2002). The time series of each voxel was band-pass filtered (0.01 to 0.25 Hz) to compensate for the slow drift that is typically seen in fMRI measurements; it was then divided by its mean intensity to convert it to percent signal modulation and to compensate for the decrease in mean image intensity with distance from the receive coil. Finally, the data were spatially smoothed to 6 mm full-width at half-maximum (FWHM).
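A minimal numpy sketch of the temporal steps, under stated assumptions: an ideal (brick-wall) FFT band-pass stands in for whatever filter the authors used, and the voxel mean is stored before filtering, because band-pass filtering removes the 0-Hz component. With TR = 2 sec the sampling rate is 0.5 Hz, so the 0.25-Hz upper cutoff coincides with the Nyquist frequency and the filter effectively acts as a 0.01-Hz high-pass. The helper `fwhm_to_sigma` converts a FWHM into a Gaussian standard deviation, sigma = FWHM / (2 sqrt(2 ln 2)), treating 6 mm as the kernel width (an assumption); function names are hypothetical.

```python
import numpy as np

TR_SEC = 2.0

def bandpass_percent_signal(ts, tr_sec=TR_SEC, low_hz=0.01, high_hz=0.25):
    """Ideal FFT band-pass of one voxel time series, returned as percent signal."""
    ts = np.asarray(ts, float)
    mean = ts.mean()  # store before filtering removes the DC term
    spectrum = np.fft.rfft(ts)
    freqs = np.fft.rfftfreq(ts.size, d=tr_sec)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    filtered = np.fft.irfft(spectrum * keep, n=ts.size)
    return 100.0 * filtered / mean

def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian with the given full width at half maximum."""
    return fwhm / (2.0 * np.sqrt(2.0 * np.log(2.0)))

sigma_vox = fwhm_to_sigma(6.0) / 3.0  # 6-mm FWHM on 3-mm voxels: about 0.85 voxels

# Synthetic voxel: baseline 100, a slow drift (one cycle per 600-sec run,
# about 0.0017 Hz) and a 0.05-Hz task-like component of amplitude 2.
n = 300
t = np.arange(n) * TR_SEC
drift = 5.0 * np.sin(2 * np.pi * t / 600.0)
task = 2.0 * np.sin(2 * np.pi * 0.05 * t)
pct = bandpass_percent_signal(100.0 + drift + task)
```

The drift falls below the 0.01-Hz cutoff and is removed, while the task component survives and, on a baseline of 100, is expressed directly as a 2% modulation.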

The fMRI responses were modeled with impulses time-locked to the onsets of the cues and convolved with a canonical hemodynamic response function (HRF) (Polonsky et al., 2000). Each cue type was modeled separately in the design matrix and entered into a modified general linear model (GLM) for statistical analysis using Voxbo (http://www.voxbo.org). To test for differences in BOLD signal among cue types (i.e., visual, auditory, semantic), we compared the BOLD responses to each cue type. Behaviorally, saccade latencies differed consistently across cue types and therefore pose a potential confound: time-on-task, or the duration of neural processing, rather than the type of cue used to guide the saccade, could drive BOLD differences. We dealt with this in two ways. First, to examine the relationship between saccade latency and BOLD signal, we computed correlations between the two on a trial-by-trial basis. For this analysis, incorrect trials were excluded, and each trial's normalized saccade latency was convolved with a canonical HRF and regressed against the BOLD activity. Second, to estimate cue type differences independent of saccade latency, we removed the potential effects of the different saccade latencies associated with the different cue types. To do so, we orthogonalized the saccade latency and cue type covariates so that variance shared between them was attributed to latency, essentially removing the variance of saccade latency from the parameter estimates associated with the cue type covariates. This procedure is analogous to a stepwise regression in which the variance accounted for by latency is entered first, followed by any additional variance that can be accounted for by cue type.
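The latency control can be illustrated with a small simulated design. The sketch builds cue-onset regressors by convolving impulses with a double-gamma HRF (SPM-style parameters, an assumption; the HRF of Polonsky et al., 2000, is not reproduced here), builds a latency regressor from z-scored trial latencies (one reading of "normalized"), and then residualizes each cue-type regressor against the latency regressor and a constant. Variance shared between cue type and latency is thereby credited to latency, matching the stepwise logic described above. Ordinary least squares in numpy stands in for Voxbo's modified GLM, and all function names and simulated values are hypothetical.

```python
import math
import numpy as np

def double_gamma_hrf(tr_sec, duration_sec=32.0):
    """SPM-style canonical HRF sampled every TR (peak near 5 s, undershoot near 15 s)."""
    t = np.arange(0.0, duration_sec, tr_sec)

    def gamma_pdf(x, shape):
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()

def convolve_regressor(onset_scans, amplitudes, n_scans, hrf):
    """Place (possibly scaled) impulses at onset scans and convolve with the HRF."""
    sticks = np.zeros(n_scans)
    np.add.at(sticks, onset_scans, amplitudes)
    return np.convolve(sticks, hrf)[:n_scans]

def residualize(target, basis):
    """Remove from `target` everything a least-squares fit on `basis` explains."""
    coefs, *_ = np.linalg.lstsq(basis, target, rcond=None)
    return target - basis @ coefs

rng = np.random.default_rng(0)
n_scans, tr = 300, 2.0
hrf = double_gamma_hrf(tr)
cues = rng.integers(0, 3, size=60)  # 0 visual, 1 auditory, 2 semantic (simulated)
onsets = np.sort(rng.choice(np.arange(5, n_scans - 20), size=60, replace=False))
latency = 0.3 + 0.1 * cues + rng.normal(0, 0.03, 60)  # latency differs by cue type
latency_z = (latency - latency.mean()) / latency.std()

latency_reg = convolve_regressor(onsets, latency_z, n_scans, hrf)
cue_regs = np.column_stack([
    convolve_regressor(onsets[cues == k], np.ones((cues == k).sum()), n_scans, hrf)
    for k in range(3)
])
# Latency (with a constant) enters first; cue regressors keep only what it cannot explain.
basis = np.column_stack([np.ones(n_scans), latency_reg])
cue_orth = np.column_stack([residualize(cue_regs[:, k], basis) for k in range(3)])
design = np.column_stack([np.ones(n_scans), latency_reg, cue_orth])
```

Fitting `design` to a voxel's time series would then yield cue type betas that reflect only variance not already attributable to latency.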

For each subject, we used Caret (http://brainmap.wustl.edu/caret) for anatomical segmentation, gray–white matter surface generation, flattening, and multi-fiducial deformation mapping to the PALS atlas (Van Essen, 2005). Registering subjects in surface space using precise anatomical landmark constraints (e.g., central sulcus, sylvian and calcarine fissures) yields more precise alignment than standard volumetric normalization methods (Van Essen, 2005). Statistical maps for contrasts of interest were created using the beta weights estimated from each subject's GLM. For overall task-related activity, we contrasted all cue types with the ITI baseline. These parameter maps were then deformed into the same atlas space, and t-statistics were computed for each contrast across subjects in spherical atlas space. We used a nonparametric statistical approach based on permutation tests to address the problem of multiple comparisons. First, we constructed a permuted distribution of clusters of neighboring surface nodes with t values > 3.0. We chose a primary t statistic cutoff of 3.0 because it is strict enough that intense, focal clusters of activity are not lost. For a one-sample comparison, in which measured values are compared with the test value of 0, the signs of the beta values at each node were randomly permuted for each subject's surface before computing the statistic. This procedure was repeated N = 1000 times to compute a permutation distribution for each statistical test. We then ranked the resulting suprathreshold clusters by their area. Finally, corrected p values at α = 0.05 for each suprathreshold cluster were obtained by comparing its area with the areas of the top 5% of clusters in the permuted distribution. With C = Nα + 1 (here, C = 51), we considered clusters ranked Cth or smaller significant at t = 3.0.
The permutation tests controlled for type I error by allowing us to formally compute the probability that an activation of a given magnitude could cluster together by chance (Holmes et al., 1996; Nichols and Holmes, 2002).
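The sign-flipping permutation test can be sketched for a one-sample comparison. To keep the example self-contained, the cortical surface is replaced by a one-dimensional chain of nodes (neighbors are adjacent indices), cluster area is approximated by node count, and the null distribution is built from the largest suprathreshold cluster in each permutation, the max-statistic approach described by Nichols and Holmes (2002), which may differ in detail from the pooled-cluster ranking used in the text. The simulated data and all function names are hypothetical.

```python
import numpy as np

def one_sample_t(data):
    """t statistic at each node; data has shape (n_subjects, n_nodes)."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))

def cluster_sizes(tmap, threshold=3.0):
    """Sizes of runs of adjacent nodes with t > threshold (1-D neighborhood)."""
    sizes, run = [], 0
    for above in tmap > threshold:
        if above:
            run += 1
        elif run:
            sizes.append(run)
            run = 0
    if run:
        sizes.append(run)
    return sizes

def cluster_threshold(data, n_perm=1000, alpha=0.05, threshold=3.0, seed=0):
    """Critical cluster size from the max-cluster-size null under random sign flips."""
    rng = np.random.default_rng(seed)
    max_sizes = np.empty(n_perm)
    for i in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(data.shape[0], 1))  # one flip per subject map
        max_sizes[i] = max(cluster_sizes(one_sample_t(data * signs), threshold), default=0)
    return np.quantile(max_sizes, 1.0 - alpha)

rng = np.random.default_rng(1)
betas = rng.normal(0.0, 1.0, size=(12, 200))  # 12 subjects, 200 nodes of noise
betas[:, 80:100] += 2.0                       # an injected effect spanning 20 nodes
crit = cluster_threshold(betas)
observed = cluster_sizes(one_sample_t(betas))
significant = [size for size in observed if size > crit]
```

Flipping the sign of each subject's whole map, rather than of individual nodes, preserves the spatial correlation within each map, which is what makes the cluster-size null valid.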

4.7. Region-of-Interest (ROI) time series procedures

We used ROI-based analyses of the time courses of BOLD signal change. First, on each subject's high-resolution anatomical scans, we traced the gray matter of several a priori ROIs motivated by past studies and preliminary inspection of single-subject activations, including sPCS (the dorsal segment of the precentral sulcus at its junction with the superior frontal sulcus), IPS (extending from the junction with the postcentral sulcus to the junction with the parieto-occipital sulcus and lateral to the horizontal segment of IPS), superior parietal lobule (SPL; the gyrus posterior to the postcentral sulcus, anterior to the parieto-occipital sulcus, lateral to the interhemispheric sulcus, and medial to the horizontal segment of IPS), superior temporal gyrus (STG; extending from the posterior bank of the superior temporal gyrus to its middle part), and calcarine sulcus (Curtis and Connolly, 2008; Ikkai and Curtis, 2008; Srimal and Curtis, 2008; Tark and Curtis, 2009). To identify the most task-related voxels within each ROI, we selected the 20 voxels (540 mm³) with the strongest main effect of the linear combination of all cue type covariates (F-test). These voxels deviated consistently from baseline during the task without being biased toward any specific cue type. To estimate ROI time courses, BOLD data were smoothed over two voxels and converted into percent signal change for deconvolution using AFNI (Cox, 1996). Eight regressors, each corresponding to one time point, were used to estimate the hemodynamic response time-locked to the onset of the cue. The linear combination of these regressors was used to estimate the evoked hemodynamic response for each trial type without any assumption about its shape. We plotted the time series of BOLD responses, averaged across voxels within an ROI and across subjects from analogous ROIs, time-locked to the presentation of the cue.
Because we found no marked differences between the right and left hemispheres in any ROI, we combined data from homologous left and right ROIs. Error bars denote between-subject standard errors at each time point. For each subject, the average of three TRs around the peak of the impulse response function (time points 4, 6, and 8 sec) in each condition was extracted from each ROI and used as the dependent variable in the statistical analysis of the time course. To test whether differences in BOLD signal across cue types could be accounted for by systematic differences in processing duration, we binned the trials of each cue type (i.e., visual, auditory, semantic) by saccade latency into fast, medium, and slow bins. Within each cue type, the number of trials was approximately equal across latency bins. We then reanalyzed the BOLD time courses separately for each latency bin.
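The shape-free deconvolution can be sketched as a finite impulse response (FIR) model: one regressor per post-cue time point, solved by least squares. Here the eight lags are assumed to start at cue onset (0 to 14 sec at TR = 2 sec); this numpy version is a stand-in for AFNI's deconvolution, not a reproduction of it. The peak-window average (4, 6, and 8 sec) and the split of each cue type's trials into fast, medium, and slow bins of near-equal size follow the text; the simulated response and all function names are hypothetical.

```python
import numpy as np

def fir_design(onset_scans, n_scans, n_lags=8):
    """One column per post-onset lag; column k is 1 at scan onset + k for every trial."""
    X = np.zeros((n_scans, n_lags))
    for onset in onset_scans:
        for k in range(n_lags):
            if onset + k < n_scans:
                X[onset + k, k] += 1.0
    return X

def deconvolve(ts, onset_scans, n_lags=8):
    """Least-squares estimate of the evoked response at each lag (plus a constant)."""
    X = np.column_stack([fir_design(onset_scans, len(ts), n_lags), np.ones(len(ts))])
    betas, *_ = np.linalg.lstsq(X, ts, rcond=None)
    return betas[:n_lags]

def peak_window_mean(response, tr_sec=2.0, window_sec=(4.0, 6.0, 8.0)):
    """Average of the estimated response at the 4-, 6- and 8-sec time points."""
    idx = [int(round(t / tr_sec)) for t in window_sec]
    return float(np.mean(response[idx]))

def latency_terciles(latencies):
    """Label trials 0 (fast), 1 (medium), 2 (slow) in bins of near-equal size."""
    order = np.argsort(latencies)
    labels = np.empty(len(latencies), dtype=int)
    for label, chunk in enumerate(np.array_split(order, 3)):
        labels[chunk] = label
    return labels

# Simulated ROI time series: jittered cue onsets whose responses overlap in time.
rng = np.random.default_rng(0)
true_response = np.array([0.0, 0.2, 0.6, 0.9, 0.7, 0.3, 0.0, -0.1])  # % signal
onsets = np.cumsum(rng.integers(3, 9, size=35))
ts = np.zeros(300)
for onset in onsets:
    n = min(8, ts.size - onset)
    ts[onset:onset + n] += true_response[:n]
estimate = deconvolve(ts, onsets)
```

Jittering the intervals between cues is what makes the overlapping responses separable, which is why the design's variable ITI matters for this analysis.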

4.8. MVPA

We used the Princeton MVPA Toolbox (www.pni.princeton.edu/mvpa) to test the hypothesis that multi-voxel patterns of BOLD response in ROIs (i.e., sPCS, IPS, SPL, STG, and calcarine sulcus) could predict which type of cue guided the saccade and the location of the saccade. In addition, we tested whether the patterns of activity could predict saccade latency, to rule out the possibility that high accuracy for predicting cue type was driven by the unequal saccade latencies associated with the cue types. Incorrect trials were excluded from the analysis, and the time series of each voxel was motion corrected (see Section 4.6) and normalized by subtracting its mean and dividing by its standard deviation, yielding a mean of 0 and a standard deviation of 1. The BOLD epoch used for analysis was shifted by 4 sec to adjust for the hemodynamic lag. To identify the most task-related voxels in each ROI (see Section 4.7), we selected the 200 voxels with the strongest main effect of the linear combination of all cue type covariates (F-test). Classifiers were trained with sparse multinomial logistic regression to discriminate the cue type used for target selection (i.e., visual, auditory, semantic), saccade latency (i.e., fast, medium, slow), or saccade location (i.e., lower left, upper left, lower right, upper right). The classifier was repeatedly trained on data from all but two trials per condition and then tested on the left-out trials. We repeated this procedure 500 times, randomly leaving out different trials on each iteration, to obtain average classification accuracies for each subject. We also trained classifiers across cue types to discriminate saccade locations (e.g., a classifier was trained on data from visually and aurally guided saccades and then tested on two randomly selected semantically guided saccade trials).
The significance of the decoder's accuracy was estimated by comparison with a null distribution formed by repeating the classification on shuffled data 6000 times, in which the trial order, and thus the cue type, saccade location, and saccade latency labels, was randomly shuffled. If the accuracy obtained from the real (non-shuffled) data fell outside the 95% confidence interval of this null distribution, it was deemed significant at the P < 0.05 level.
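The cross-validation and permutation logic can be sketched as follows. Because sparse multinomial logistic regression is not part of the standard scientific Python stack, the sketch swaps in a simple correlation-based nearest-centroid classifier; that substitution, the synthetic location-specific patterns, and the reduced iteration counts (the text uses 500 resamples and 6000 shuffles) are assumptions for illustration. Each voxel is z-scored, two trials per class are held out at random on each iteration, and significance is judged against the 95th percentile of accuracies obtained with shuffled labels. The cross-cue test (train on two cue types, test on the third) reuses the same classifier. All function names are hypothetical.

```python
import numpy as np

def zscore_voxels(patterns):
    """Z-score each voxel (column) across trials."""
    return (patterns - patterns.mean(axis=0)) / patterns.std(axis=0)

def train_centroids(X, y):
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])

def predict(classes, centroids, X):
    """Assign each trial to the class whose centroid it correlates with most."""
    Xc = X - X.mean(axis=1, keepdims=True)
    Cc = centroids - centroids.mean(axis=1, keepdims=True)
    r = (Xc @ Cc.T) / np.outer(np.linalg.norm(Xc, axis=1), np.linalg.norm(Cc, axis=1))
    return classes[np.argmax(r, axis=1)]

def leave_two_out_accuracy(X, y, n_iter=100, rng=None):
    """Mean accuracy over random splits that hold out two trials per class."""
    rng = rng if rng is not None else np.random.default_rng(0)
    accs = []
    for _ in range(n_iter):
        test = np.concatenate([rng.choice(np.flatnonzero(y == c), 2, replace=False)
                               for c in np.unique(y)])
        train = np.setdiff1d(np.arange(len(y)), test)
        classes, cents = train_centroids(X[train], y[train])
        accs.append(np.mean(predict(classes, cents, X[test]) == y[test]))
    return float(np.mean(accs))

def permutation_threshold(X, y, n_shuffles=200, n_iter=20, seed=1):
    """95th percentile of accuracies obtained after shuffling the labels."""
    rng = np.random.default_rng(seed)
    null = [leave_two_out_accuracy(X, rng.permutation(y), n_iter, rng)
            for _ in range(n_shuffles)]
    return float(np.quantile(null, 0.95))

def cross_cue_accuracy(X, location, cue, test_cue):
    """Train on trials of the other cue types, test on trials of `test_cue`."""
    train, test = cue != test_cue, cue == test_cue
    classes, cents = train_centroids(X[train], location[train])
    return float(np.mean(predict(classes, cents, X[test]) == location[test]))

# Synthetic data: 4 saccade locations x 3 cue types (0 visual, 1 auditory,
# 2 semantic) x 15 trials, 200 voxels; location patterns are shared across cues.
rng = np.random.default_rng(0)
n_per, n_vox = 15, 200
location = np.repeat(np.arange(4), 3 * n_per)
cue = np.tile(np.repeat(np.arange(3), n_per), 4)
loc_patterns = rng.normal(0, 1, size=(4, n_vox))
X = zscore_voxels(loc_patterns[location] + rng.normal(0, 2.0, size=(location.size, n_vox)))
acc = leave_two_out_accuracy(X, location)
chance_95 = permutation_threshold(X, location)
generalization = cross_cue_accuracy(X, location, cue, test_cue=2)
```

Because the simulated location patterns are identical across cue types, training on the visual and auditory trials generalizes to the semantic ones; a cue-specific code would instead drive `generalization` toward chance.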

Highlights.

Saccades made to visual, auditory, and semantic cues were compared with fMRI.

Activity in frontoparietal areas correlated with saccade latency.

Activity did not distinguish between the cues used for target selection.

Decoders of activity patterns, however, predicted cue and saccade direction in IPS.

Therefore, frontoparietal cortex converts multimodal inputs to saccade goals.

ACKNOWLEDGEMENTS

We thank Adam Riggall and Keith Sanzenbach for technical support. Funded by US National Institutes of Health R01 EY016407.


REFERENCES

- Ahveninen J, et al. Task-modulated "what" and "where" pathways in human auditory cortex. Proc Natl Acad Sci U S A. 2006;103:14608–14613. doi: 10.1073/pnas.0510480103.

- Alain C, et al. "What" and "where" in the human auditory system. Proc Natl Acad Sci U S A. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- Andersen RA, Zipser D. The role of the posterior parietal cortex in coordinate transformations for visual-motor integration. Can J Physiol Pharmacol. 1988;66:488–501. doi: 10.1139/y88-078.

- Azuma M, Suzuki H. Properties and distribution of auditory neurons in the dorsolateral prefrontal cortex of the alert monkey. Brain Res. 1984;298:343–346. doi: 10.1016/0006-8993(84)91434-3.

- Benson DA, Hienz RD, Goldstein MH Jr. Single-unit activity in the auditory cortex of monkeys actively localizing sound sources: spatial tuning and behavioral dependency. Brain Res. 1981;219:249–267. doi: 10.1016/0006-8993(81)90290-0.

- Beurze SM, et al. Spatial and effector processing in the human parietofrontal network for reaches and saccades. J Neurophysiol. 2009;101:3053–3062. doi: 10.1152/jn.91194.2008.

- Binder JR, et al. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003;299:81–86. doi: 10.1126/science.1077395.

- Bisley JW, Goldberg ME. Attention, intention, and priority in the parietal lobe. Annu Rev Neurosci. 2010;33:1–21. doi: 10.1146/annurev-neuro-060909-152823.

- Blauert J. Spatial hearing: the psychophysics of human sound localization. MIT Press; Cambridge, Mass: 1997.

- Bremmer F, et al. Space coding in primate posterior parietal cortex. Neuroimage. 2001a;14:S46–S51. doi: 10.1006/nimg.2001.0817.

- Bremmer F, et al. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001b;29:287–296. doi: 10.1016/s0896-6273(01)00198-2.

- Bushara KO, et al. Modality-specific frontal and parietal areas for auditory and visual spatial localization in humans. Nat Neurosci. 1999;2:759–766. doi: 10.1038/11239.

- Butters N, Barton M, Brody BA. Role of the right parietal lobe in the mediation of cross-modal associations and reversible operations in space. Cortex. 1970;6:174–190. doi: 10.1016/s0010-9452(70)80026-0.

- Cisek P. Cortical mechanisms of action selection: the affordance competition hypothesis. Philos Trans R Soc Lond B Biol Sci. 2007;362:1585–1599. doi: 10.1098/rstb.2007.2054.

- Cohen YE, Knudsen EI. Maps versus clusters: different representations of auditory space in the midbrain and forebrain. Trends Neurosci. 1999;22:128–135. doi: 10.1016/s0166-2236(98)01295-8.

- Cohen YE, Andersen RA. Reaches to sounds encoded in an eye-centered reference frame. Neuron. 2000;27:647–652. doi: 10.1016/s0896-6273(00)00073-8.

- Cohen YE, Cohen IS, Gifford GW 3rd. Modulation of LIP activity by predictive auditory and visual cues. Cereb Cortex. 2004;14:1287–1301. doi: 10.1093/cercor/bhh090.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Curtis CE, Connolly JD. Saccade preparation signals in the human frontal and parietal cortices. J Neurophysiol. 2008;99:133–145. doi: 10.1152/jn.00899.2007.

- Cusack R, Carlyon RP, Robertson IH. Neglect between but not within auditory objects. J Cogn Neurosci. 2000;12:1056–1065. doi: 10.1162/089892900563867.

- Deouell LY, Soroker N. What is extinguished in auditory extinction? Neuroreport. 2000;11:3059–3062. doi: 10.1097/00001756-200009110-00046.

- Fecteau JH, Munoz DP. Salience, relevance, and firing: a priority map for target selection. Trends Cogn Sci. 2006;10:382–390. doi: 10.1016/j.tics.2006.06.011.

- Goldberg ME, et al. Saccades, salience and attention: the role of the lateral intraparietal area in visual behavior. Prog Brain Res. 2006;155:157–175. doi: 10.1016/S0079-6123(06)55010-1.

- Gottlieb J. From thought to action: the parietal cortex as a bridge between perception, action, and cognition. Neuron. 2007;53:9–16. doi: 10.1016/j.neuron.2006.12.009.

- Gottlieb JP, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature. 1998;391:481–484. doi: 10.1038/35135.

- Hackett TA, Stepniewska I, Kaas JH. Prefrontal connections of the parabelt auditory cortex in macaque monkeys. Brain Res. 1999;817:45–58. doi: 10.1016/s0006-8993(98)01182-2.

- Heeger DJ, Ress D. What does fMRI tell us about neuronal activity? Nat Rev Neurosci. 2002;3:142–151. doi: 10.1038/nrn730.

- Holmes AP, et al. Nonparametric analysis of statistic images from functional mapping experiments. J Cereb Blood Flow Metab. 1996;16:7–22. doi: 10.1097/00004647-199601000-00002.

- Ikkai A, Curtis CE. Cortical activity time locked to the shift and maintenance of spatial attention. Cereb Cortex. 2008;18:1384–1394. doi: 10.1093/cercor/bhm171.

- Ikkai A, Curtis CE. Common neural mechanisms supporting spatial working memory, attention and motor intention. Neuropsychologia. 2011;49:1428–1434. doi: 10.1016/j.neuropsychologia.2010.12.020.

- Jenkinson M, et al. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Jerde TA, et al. Prioritized maps of space in human frontoparietal cortex. J Neurosci. 2012;32:17382–17390. doi: 10.1523/JNEUROSCI.3810-12.2012.

- Kastner S, et al. Topographic maps in human frontal cortex revealed in memory-guided saccade and spatial working-memory tasks. J Neurophysiol. 2007;97:3494–3507. doi: 10.1152/jn.00010.2007.

- Kikuchi-Yorioka Y, Sawaguchi T. Parallel visuospatial and audiospatial working memory processes in the monkey dorsolateral prefrontal cortex. Nat Neurosci. 2000;3:1075–1076. doi: 10.1038/80581.

- Kusunoki M, Gottlieb J, Goldberg ME. The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vision Res. 2000;40:1459–1468. doi: 10.1016/s0042-6989(99)00212-6.

- Lee KM, Wade AR, Lee BT. Differential correlation of frontal and parietal activity with the number of alternatives for cued choice saccades. Neuroimage. 2006;33:307–315. doi: 10.1016/j.neuroimage.2006.06.039.

- Lee KM, Keller EL. Neural activity in the frontal eye fields modulated by the number of alternatives in target choice. J Neurosci. 2008;28:2242–2251. doi: 10.1523/JNEUROSCI.3596-07.2008.

- Leinonen L, Hyvarinen J, Sovijarvi AR. Functional properties of neurons in the temporo-parietal association cortex of awake monkey. Exp Brain Res. 1980;39:203–215. doi: 10.1007/BF00237551.

- Levy I, et al. Specificity of human cortical areas for reaches and saccades. J Neurosci. 2007;27:4687–4696. doi: 10.1523/JNEUROSCI.0459-07.2007.

- Logothetis NK, et al. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- Mazzoni P, et al. Spatially tuned auditory responses in area LIP of macaques performing delayed memory saccades to acoustic targets. J Neurophysiol. 1996;75:1233–1241. doi: 10.1152/jn.1996.75.3.1233.

- Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

- Mullette-Gillman OA, Cohen YE, Groh JM. Motor-related signals in the intraparietal cortex encode locations in a hybrid, rather than eye-centered reference frame. Cereb Cortex. 2009;19:1761–1775. doi: 10.1093/cercor/bhn207.

- Nichols TE, Holmes AP. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum Brain Mapp. 2002;15:1–25. doi: 10.1002/hbm.1058.

- Polonsky A, et al. Neuronal activity in human primary visual cortex correlates with perception during binocular rivalry. Nat Neurosci. 2000;3:1153–1159. doi: 10.1038/80676.

- Price C, Thierry G, Griffiths T. Speech-specific auditory processing: where is it? Trends Cogn Sci. 2005;9:271–276. doi: 10.1016/j.tics.2005.03.009.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of "what" and "where" in auditory cortex. Proc Natl Acad Sci U S A. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- Romanski LM, Bates JF, Goldman-Rakic PS. Auditory belt and parabelt projections to the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1999a;403:141–157. doi: 10.1002/(sici)1096-9861(19990111)403:2<141::aid-cne1>3.0.co;2-v.

- Romanski LM, et al. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999b;2:1131–1136. doi: 10.1038/16056.

- Russo GS, Bruce CJ. Frontal eye field activity preceding aurally guided saccades. J Neurophysiol. 1994;71:1250–1253. doi: 10.1152/jn.1994.71.3.1250.

- Schall JD, Hanes DP. Neural basis of saccade target selection in frontal eye field during visual search. Nature. 1993;366:467–469. doi: 10.1038/366467a0.

- Schluppeck D, Glimcher P, Heeger DJ. Topographic organization for delayed saccades in human posterior parietal cortex. J Neurophysiol. 2005;94:1372–1384. doi: 10.1152/jn.01290.2004.

- Serences JT, Yantis S. Selective visual attention and perceptual coherence. Trends Cogn Sci. 2006;10:38–45. doi: 10.1016/j.tics.2005.11.008.

- Silver MA, Ress D, Heeger DJ. Topographic maps of visual spatial attention in human parietal cortex. J Neurophysiol. 2005;94:1358–1371. doi: 10.1152/jn.01316.2004.

- Srimal R, Curtis CE. Persistent neural activity during the maintenance of spatial position in working memory. Neuroimage. 2008;39:455–468. doi: 10.1016/j.neuroimage.2007.08.040.

- Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- Swisher JD, et al. Visual topography of human intraparietal sulcus. J Neurosci. 2007;27:5326–5337. doi: 10.1523/JNEUROSCI.0991-07.2007.

- Tark KJ, Curtis CE. Persistent neural activity in the human frontal cortex when maintaining space that is off the map. Nat Neurosci. 2009;12:1463–1468. doi: 10.1038/nn.2406.

- Thompson KG, Bichot NP. A visual salience map in the primate frontal eye field. Prog Brain Res. 2005;147:251–262. doi: 10.1016/S0079-6123(04)47019-8.

- Thompson KG, Biscoe KL, Sato TR. Neuronal basis of covert spatial attention in the frontal eye field. J Neurosci. 2005;25:9479–9487. doi: 10.1523/JNEUROSCI.0741-05.2005.

- Tian B, et al. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- Uppenkamp S, et al. Locating the initial stages of speech-sound processing in human temporal cortex. Neuroimage. 2006;31:1284–1296. doi: 10.1016/j.neuroimage.2006.01.004.

- Van Essen D. A Population-Average, Landmark- and Surface-based (PALS) atlas of human cerebral cortex. Neuroimage. 2005;28:635–662. doi: 10.1016/j.neuroimage.2005.06.058.

- Vouloumanos A, et al. Detection of sounds in the auditory stream: event-related fMRI evidence for differential activation to speech and nonspeech. J Cogn Neurosci. 2001;13:994–1005. doi: 10.1162/089892901753165890.

- Warren JD, et al. Perception of sound-source motion by the human brain. Neuron. 2002;34:139–148. doi: 10.1016/s0896-6273(02)00637-2.

- Yao L, Peck CK. Saccadic eye movements to visual and auditory targets. Exp Brain Res. 1997;115:25–34. doi: 10.1007/pl00005682.

- Yarkoni T, et al. BOLD correlates of trial-by-trial reaction time variability in gray and white matter: a multi-study fMRI analysis. PLoS One. 2009;4:e4257. doi: 10.1371/journal.pone.0004257.

- Zahn JR, Abel LA, Dell'Osso LF. Audio-ocular response characteristics. Sens Processes. 1978;2:32–37.

- Zatorre RJ, et al. Where is 'where' in the human auditory cortex? Nat Neurosci. 2002;5:905–909. doi: 10.1038/nn904.