Abstract

This special series focuses upon the ways in which research on treatment integrity, a multidimensional construct including assessment of the content and quality of a psychosocial treatment delivered to a client as well as relational elements, can inform dissemination and implementation science. The five articles for this special series illustrate how treatment integrity concepts and methods can be applied across different levels of the mental health service system to advance dissemination and implementation science. In this introductory article, we provide an overview of treatment integrity research and describe three broad conceptual models that are relevant to the articles in the series. We conclude with a brief description of each of the five articles in the series.

Keywords: children’s mental health, dissemination and implementation research, evidence-based treatments, treatment integrity

To draw valid inferences from clinical research, treatments must be well specified, well tested, and carried out as intended (Kazdin, 1994). Steps taken to ensure treatment integrity are therefore a necessary part of clinical research conducted in research and practice settings (Hagermoser Sanetti & Kratochwill, 2009; McLeod, Southam-Gerow, & Weisz, 2009; Perepletchikova, 2011). Despite the importance of establishing treatment integrity in clinical research, the science and measurement of treatment integrity is underdeveloped (McLeod et al., 2009; Perepletchikova, Treat, & Kazdin, 2007), and few clinical trials adequately assess for treatment integrity (Hagermoser Sanetti, Gritter, & Dobey, 2011; Perepletchikova et al., 2007; Weisz, Jensen-Doss, & Hawley, 2005). Recently, however, treatment integrity has received renewed attention, an interest driven in part by dissemination and implementation (D&I) researchers’ emphasis on the importance of treatment integrity for efforts to span the gap between the science and practice of child psychotherapy (Schoenwald, Garland, Southam-Gerow, Chorpita, & Chapman, 2011). Throughout this article, the term child refers to both children and adolescents.

This special issue of Clinical Psychology: Science and Practice focuses upon this emerging area of science, with five main articles each focused on a different way in which treatment integrity research can be used to inform D&I research. Additionally, there are four commentaries for the issue, each written by leading scientists who integrate these new scientific directions into the broader fields of services and treatment research. In this introductory article, we outline several definitional issues and discuss frameworks and models that are represented across the five articles. Specifically, we (a) define the conceptual basis for treatment integrity research, (b) briefly review research on treatment integrity and its applications, (c) briefly define D&I research goals, and (d) describe three different health care and mental health care frameworks related to D&I research and locate treatment integrity within those frameworks. We conclude this article with a brief introduction to each of the five articles in the issue.

TREATMENT INTEGRITY RESEARCH

Treatment integrity (also referred to as treatment fidelity, treatment adherence, and intervention integrity) is a broad term used to mean the degree to which a treatment was delivered as intended (McLeod et al., 2009; Perepletchikova & Kazdin, 2004, 2005). The field has yet to coalesce around a unified definition of treatment integrity (Hagermoser Sanetti & Kratochwill, 2009), as a variety of definitions and conceptual models have been proposed (e.g., Dane & Schneider, 1998; Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005; Hagermoser Sanetti & Kratochwill, 2009; Jones, Clarke, & Power, 2008; Perepletchikova & Kazdin, 2005; Waltz, Addis, Koerner, & Jacobson, 1993). There is some overlap and consensus across the different definitions and models proposed to date. For example, most definitions highlight the importance of measuring therapist adherence to the treatment model. However, important differences exist across the various definitions, which suggests that the field has yet to reach consensus on the important facets that comprise treatment integrity.

According to our definition, treatment integrity is composed of four components—treatment adherence, treatment differentiation, therapist competence, and relational elements (Perepletchikova & Kazdin, 2004, 2005; McLeod et al., 2009; Waltz et al., 1993). Treatment adherence refers to the extent to which the therapist delivers the treatment as designed. Treatment differentiation refers to the extent to which treatments under study differ along appropriate lines, often defined by the treatment manual. Therapist competence refers to the level of skill and degree of responsiveness demonstrated by the therapist when delivering the technical and relational elements of a treatment. And the relational elements refer to the quality of the client–therapist alliance and level of client involvement. Each component is thought to capture a unique aspect of the content and quality of treatment that together, and/or in isolation, may be responsible for therapeutic change (Perepletchikova & Kazdin, 2005).

Treatment integrity is an important methodological factor in clinical research. Establishing, maintaining, and measuring treatment integrity are essential for interpreting findings generated by clinical trials. A number of factors are hypothesized to influence treatment integrity. For example, therapist and client factors have been found to account for significant variance in treatment adherence and competence in a clinical trial (Barber, Foltz, Crits-Christoph, & Chittams, 2004). Accordingly, investigators take steps to establish and maintain treatment integrity in clinical research (Carroll & Nuro, 2002; Gresham, 1997; Perepletchikova et al., 2007; Schoenwald et al., 2011). To establish treatment integrity, investigators can provide an operational definition of the treatment (i.e., a treatment manual) as well as train therapists. To help maintain treatment integrity over the course of a clinical trial, investigators can provide supervision to therapists. Together, the steps taken to establish and maintain treatment integrity have been called “quality control procedures” (Schoenwald et al., 2011), connecting this strand of clinical research with quality of care models from business and health care, a topic to which we return shortly.

CURRENT STATUS

Despite the importance of establishing, maintaining, and measuring treatment integrity for interpreting findings generated by clinical trials, the science and measurement of treatment integrity is in its infancy in child psychotherapy (McLeod et al., 2009; Perepletchikova et al., 2007). Most treatment integrity measure development, and research conducted to date, has been in the adult psychotherapy field (see Webb, DeRubeis, & Barber, 2010). Moreover, recent reviews have concluded that few randomized clinical trials adequately measure treatment integrity in child psychotherapy (Hagermoser Sanetti et al., 2011; McLeod & Weisz, 2004; Perepletchikova et al., 2007; Weisz et al., 2005). Clearly, more measure development and treatment integrity research are needed in the child field.

Next, we briefly define the four components of treatment integrity—adherence, differentiation, competence, and relational factors (alliance, client involvement)—and describe the state of the science for each. We also provide a short overview of the quality control methods used to bolster treatment integrity in research and clinical applications.

Treatment Adherence

To date, treatment adherence has received the most attention in the child field, with treatment differentiation and competence remaining relatively unstudied (Hagermoser Sanetti et al., 2011; Perepletchikova et al., 2007). Unfortunately, despite the relative strength of adherence research over other integrity components, treatment adherence measurement remains rare in child therapy (Hagermoser Sanetti et al., 2011; Weisz et al., 2005). A few exemplar research programs do, however, exist (e.g., Forgatch, Bullock, Patterson, & Steiner, 2004; Hogue et al., 2008; Schoenwald, Carter, Chapman, & Sheidow, 2008). For example, Schoenwald, Sheidow, and Letourneau (2004) have developed parent-report measures of therapist adherence for multisystemic treatment, and across a program of research have found important relations among therapist adherence, supervisor adherence, parenting behaviors, and client outcomes (e.g., Huey, Henggeler, Brondino, & Pickrel, 2000).

Treatment Differentiation

Whereas treatment adherence assesses whether a therapist follows a particular approach, treatment differentiation evaluates whether (and “to where”) therapists deviate from that approach (Kazdin, 1994). Measuring treatment differentiation therefore provides the means to understand whether and/or how the use of proscribed interventions influences clinical outcomes. To date, most treatment differentiation measurement has been performed in treatment–treatment comparisons to check for contamination and/or to ensure that treatment implementation is consistent across treatment sites (e.g., Hill, O’Grady, & Elkin, 1992; Hogue et al., 1998). Such checks provide valuable information regarding patterns of treatment implementation that might influence study findings, such as differences in implementation across sites. Treatment differentiation checks can also aid understanding of whether and/or how protocol violations influence treatment effects (e.g., Perepletchikova, 2011; Waltz et al., 1993). For example, research has found that cases receiving treatment with greater “purity” had better long-term outcomes (Frank, Kupfer, Wagner, & McEachran, 1991).

Therapist Competence

Therapist competence pertains to the quality of treatment implementation from both technical and relational standpoints. Although therapist competence is considered an important treatment integrity component, few studies have found a relation between therapist competence and outcomes in clinical trials (see Webb et al., 2010, for a review). To our knowledge, only two competence measures have been developed for child therapy (see Chu & Kendall, 2009; Hogue et al., 2008). The dearth of therapist competence measures developed specifically for child psychotherapy is a notable gap in the field given the differences between adult and child psychotherapy.

Investigators interested in developing competence measures for child psychotherapy must wrangle with several thorny definitional issues concerning measurement of competence. First, how broadly should competence be defined? Is it better to define competence specific to individual treatments (i.e., “technical” or “limited domain” competence; Barber, Sharpless, Klostermann, & McCarthy, 2007) or in terms of common factors (also called “nonspecific” or “global” competence; Asay, Lambert, Hubble, Duncan, & Miller, 1999; Barber et al., 2007)? Extant studies have mostly focused on skill in the application of prescribed interventions (e.g., assigning homework; see Barber et al., 2007; Webb et al., 2010).

A focus on technical competence is consistent with the field’s emphasis on evidence-based treatments (EBTs), which have been primarily technical in their focus. However, technical competence is only one way of conceptualizing therapist competence. Some scientists have maintained that common competence (also called “global” competence; Barber et al., 2007) also represents an important dimension and thus warrants study. However, few studies have focused on common competence, that is, competence in the nonspecific elements of psychotherapy that are common across treatments (e.g., alliance building; Carroll et al., 2000), and (almost) none of that work has occurred in the context of child therapy.

Relational Factors

Most definitions of treatment integrity have focused upon the quantity and quality of treatment as implemented by a therapist; however, a few definitions have included relational factors such as alliance or client involvement in treatment (e.g., Dane & Schneider, 1998; McLeod et al., 2009). The alliance and client involvement have both been associated with positive clinical outcomes in child psychotherapy (e.g., Chu & Kendall, 2004; Karver, Handelsman, Fields, & Bickman, 2006; McLeod, 2011; Shirk & Karver, 2011) and are considered by some to be elements of evidence-based practice (e.g., Karver, Handelsman, Fields, & Bickman, 2005; Norcross, 2011). Relational factors therefore represent an important component of a treatment and thus should be measured as part of treatment integrity.

Quality Control Methods

Measuring the four components of treatment integrity is critical to interpreting study findings (Perepletchikova, 2011), but efforts to establish and maintain treatment integrity also play an important role in clinical research. Researchers are increasingly acknowledging the role quality control methods (i.e., treatment manuals, therapist training, therapist supervision) play in ensuring treatment integrity in clinical research projects conducted in research and practice settings (e.g., Beidas & Kendall, 2010; Herschell, Kolko, Baumann, & Davis, 2010). For example, there are recent efforts to understand how different methods of therapist training may improve treatment integrity (e.g., Fairburn & Cooper, 2011; Herschell et al., 2010; Simons et al., 2010). Similarly, recent research has demonstrated a clear connection between methods of supervision and consultation and treatment integrity (e.g., Schoenwald, Sheidow, & Chapman, 2009). Although quality control methods have long been considered an important part of clinical research, D&I researchers have refocused attention upon this area of treatment integrity research.

Treatment integrity represents a multidimensional construct that, while critical to understanding clinical treatment research, remains under-researched, particularly with regard to child psychotherapy. As the five articles in the issue will illustrate, treatment integrity research has multiple applications relevant for D&I research. Before describing those articles, we turn first to some conceptual background on D&I research and then describe several models that provide useful frameworks to consider when reading the articles in the special issue.

THE ROLE OF TREATMENT INTEGRITY IN DISSEMINATION AND IMPLEMENTATION RESEARCH

Children’s mental health care is a public health concern for the United States. Large numbers of children with mental health needs do not receive adequate psychosocial treatments (e.g., Tang, Hill, Boudreau, & Yucel, 2008). Even though there are literally hundreds of EBTs studied for over 30 years (e.g., Chorpita et al., 2011), progress disseminating those treatments has been slow (e.g., Aarons, Hurlburt, & Horwitz, 2011; McGlynn, Norquist, Wells, Sullivan, & Liberman, 1988). Underscoring this point, an Institute of Medicine (2001) report found that it takes approximately 17 years for evidence-based practices to be disseminated to practice settings. Dissemination and implementation research developed, in part, to help remedy this problem. Primary goals of D&I research are to (a) identify mechanisms to increase the speed of information transmission and (b) optimize psychosocial treatments for multiple contexts. Interestingly, D&I research has some of its roots in treatment development and evaluation research.

The stage model of treatment development (Carroll & Rounsaville, 2007; Rounsaville, Carroll, & Onken, 2001) is one reason that we have made so much progress in developing and establishing potent psychosocial treatments. The stage model and similar approaches (e.g., the efficacy–effectiveness progression) that focus upon the treatment development and evaluation process have driven the federal treatment research agenda for many years (Chambless & Ollendick, 2001). The initial model included three stages (Rounsaville et al., 2001). The first stage focused on treatment development and early clinical testing. Assuming a treatment passes this “test” (i.e., produces replicable and positive effects for clients and is safe for clients), the next stage generally involves efficacy studies that utilize randomized clinical trial methodology. Finally, the third stage involves effectiveness studies that evaluate a treatment in community contexts. Ideally, the third stage ends when a treatment has been tested in a variety of community contexts and has been proven successful.

Until recently, Stage III represented the terminus of the model. An implicit assumption was that a treatment, once successful in efficacy and effectiveness tests, was deemed ready for widespread dissemination (i.e., the targeted distribution of an EBT; Chambers, Ringeisen, & Hickman, 2005; Fixsen et al., 2005). Some researchers have argued that psychosocial treatment development and evaluation requires more than three stages (e.g., Chorpita & Nakamura, 2004; Hogue, 2010; Schoenwald & Hoagwood, 2001), an assertion supported by the fact that some EBTs have progressed to the third stage but have not been successful in effectiveness tests (e.g., Clarke et al., 2005; Southam-Gerow et al., 2010; Weisz et al., 2009). Thus, an emerging consensus is that treatment development and evaluation models need additional stages that assess fit between EBTs and different practice contexts (Schoenwald & Hoagwood, 2001).

Two stages that have received attention in D&I research are (a) transportability studies and (b) dissemination studies. Transportability studies focus on the processes involved in moving an EBT from a research setting into a community setting, with the key being the elucidation of strategies needed to encourage the adoption and the effective execution of an EBT in new practice settings (e.g., training and supervision procedures for therapists and supervisors; Schoenwald & Hoagwood, 2001). Dissemination studies, on the other hand, focus upon how to distribute the treatment and its training and support “package,” with the primary outcome being the sustainability of adoption (Southam-Gerow, Marder, & Austin, 2008). Thus, a primary focus of transportability and dissemination studies is how to adapt an EBT to maximize the effectiveness and sustainability of the program in practice settings.

It is important to note that a critical shift in focus occurs with D&I research. Transportability and dissemination research shift away from an exclusive focus upon clinical outcomes. In fact, as discussed elsewhere (e.g., Schoenwald & Hoagwood, 2001; Southam-Gerow et al., 2008), the methods needed to properly implement a treatment in a new setting become a central focus. Because a key part of implementation involves training and supervising therapists to deliver a specific set of treatment procedures, the extent to which the elements of that intervention are delivered according to the original treatment model becomes of critical importance (Center for Substance Abuse Prevention, 2001; Schoenwald et al., 2011). In short, D&I research places considerable emphasis on the importance of assessing the integrity of treatment implementation (i.e., degree to which an intervention is delivered according to the original treatment model). The measurement of treatment integrity is important because it can help D&I researchers to determine whether “failure” to produce a desired clinical outcome was due to the EBT (i.e., implementation was sufficient, so the treatment is not effective; thus, adapt the EBT or select an alternative intervention) or its implementation (i.e., implementation was insufficient; thus, engage in staff training; e.g., Schoenwald et al., 2011).

TREATMENT INTEGRITY AS VIEWED FROM CLINICAL SERVICES FRAMEWORKS

Another result of the emergence of D&I research has been the identification of new frameworks from which to consider important research questions, and thus to design the next generation of clinical studies. Next, we outline three frameworks that are relevant to treatment integrity research and its applications for D&I research and that are highlighted throughout the special issue: (a) the mental health systems ecological model, (b) the quality of care framework, and (c) the evidence-based services system model.

Mental Health Systems Ecological Model

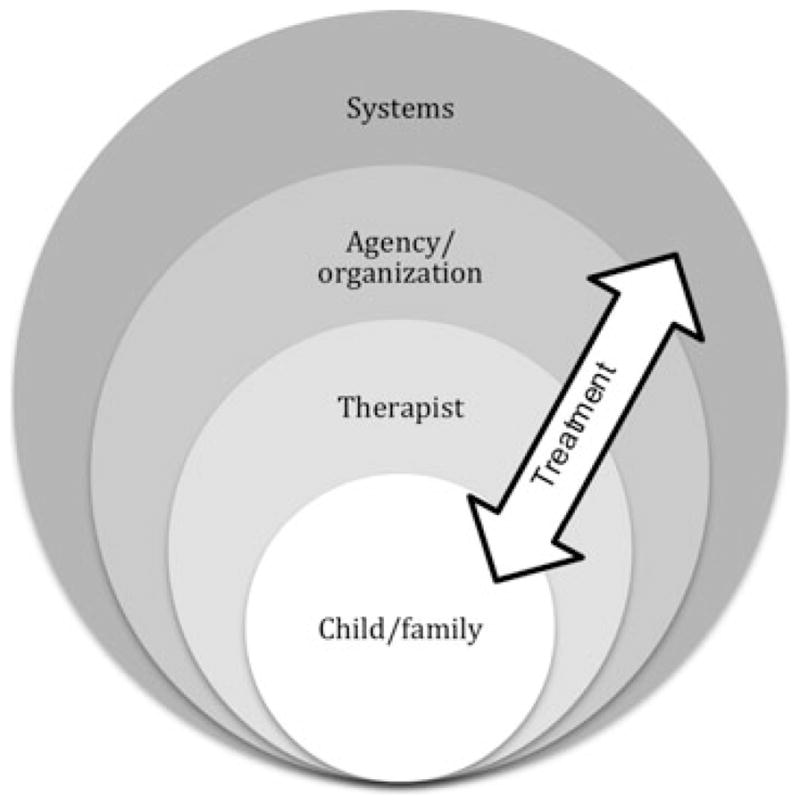

Interventions and services research occurs within the multilayered and dynamic context of mental health service delivery (see Figure 1, adapted from Schoenwald & Hoagwood, 2001; cf. Damschroder & Hagedorn, 2011; Fixsen et al., 2005; Proctor et al., 2009). This context has been described as consisting of client- and family-level factors (e.g., symptoms, functioning), therapist-level factors (e.g., level of professional experience, attitudes), intervention-specific characteristics (e.g., therapeutic modality), organizational influences (e.g., culture and climate), and systems-level factors (e.g., service system financing policies). The traditions of interventions and services research have led to differences in their relative emphases on the various aspects of this multilayered context. Interventions research has traditionally focused on client, therapist, and intervention characteristics, whereas services research has focused primarily on service delivery parameters, organizational characteristics, and environmental factors (e.g., Southam-Gerow, Ringeisen, & Sherrill, 2006). D&I research seeks to understand how the different levels of the mental health systems ecological (MHSE) model interact to influence treatment implementation.

Figure 1. Mental health systems ecological model.

The MHSE model is reflected in all of the articles in the series, although each article has a somewhat different emphasis. For example, the Garland and Schoenwald (2013) and McLeod, Southam-Gerow, Tully, Rodríguez, and Smith (2013) articles primarily emphasize the intervention and, to a lesser extent, therapist levels; the Schoenwald, Mehta, Frazier, and Shernoff (2013) and Hogue, Ozechowski, Robbins, and Waldron (2013) articles emphasize the therapist, intervention, and organization levels; and the Regan, Daleiden, and Chorpita (2013) article highlights the organization and systems levels.

Quality of Care Framework

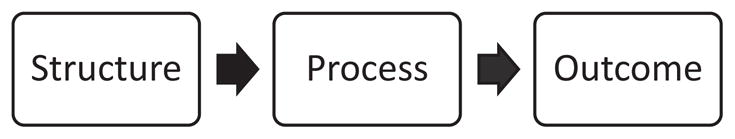

D&I researchers have turned to diverse fields like health care, business, education, public health, and industrial/organizational psychology for research models that provide the means to study how factors that operate at the multiple levels of the mental health service system influence treatment implementation (Aarons et al., 2011). One such model is the quality of care framework, an approach used to understand how the context in which care is provided influences treatment implementation and outcome (McGlynn et al., 1988; Mendel et al., 2008). With its roots in health care (Donabedian, 1988), quality of care research seeks to improve the outcomes of individuals who access care across a variety of health care settings (Burnam, Hepner, & Miranda, 2009; Donabedian, 1988; McGlynn et al., 1988). To achieve this goal, quality of care research seeks to understand how the structural elements of health care settings (e.g., contextual elements of where care is provided, including attributes of settings, clients, and providers) and the processes of care (e.g., activities and behaviors associated with delivering and receiving care) influence patient outcomes (e.g., symptom reduction, client satisfaction, client functioning; Donabedian, 1988). This basic model is depicted in Figure 2.

Figure 2. Quality of care framework (based on Donabedian, 1988).

One important goal of quality of care research is to identify quality indicators. Health care quality indicators are structural or process elements that are proven to lead to improvements in patient outcomes (Agency for Healthcare Research & Quality, 2006). Typically identified through literature reviews and expert consensus, quality indicators are based upon evidence demonstrating causal links between (a) the structural elements and processes of care and (b) processes of care and outcomes (Burnam et al., 2009; Donabedian, 1988). Once identified, quality indicators (e.g., specific evidence-based practices proven to improve outcomes) are used to generate clinical practice guidelines defining what constitutes appropriate care for particular problems. Importantly, quality indicators provide stakeholders with the means to assess, track, and monitor provider performance relative to current “best practices” (Hussey, Mattke, Morse, & Ridgely, 2007) and are a necessary prerequisite for quality improvement efforts (Garland, Bickman, & Chorpita, 2010; Pincus, Spaeth-Rublee, & Watkins, 2011).

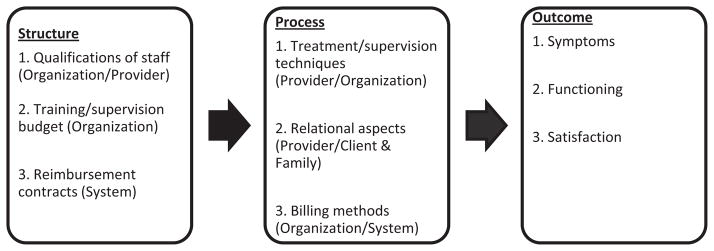

Because the quality of care framework provides the means to understand the interplay between the different levels of the health care system, D&I researchers have used this model to guide efforts to span the science-practice gap in mental health (see, e.g., Garland et al., 2010; Knox & Aspy, 2011; McGlynn et al., 1988; Seidman et al., 2010). Moreover, this framework can help researchers investigate how factors, present at different levels of the service system, influence treatment implementation. Figure 3 replicates Figure 2, with children’s mental health services examples included at each step, highlighting structural and process elements that have been linked to outcomes in child mental health care.

Figure 3. Example of quality of care framework applied to children’s mental health.

Like the MHSE model, the quality of care model is reflected in all of the articles in the series. For instance, the McLeod et al. (2013) article explicitly proposes using the quality of care model as a basis for developing treatment integrity measures as quality indicators. The Garland and Schoenwald (2013) article focuses on the state of the science of quality control procedures, a critical component of efforts to assess and ensure quality mental health care. Hogue et al. (2013) also focus on quality of care, proposing a different way to develop and implement quality assurance procedures when implementing treatments in diverse community settings. Finally, the Regan et al. (2013) article presents a quality model that considers a broad array of quality indicators using their expansive conceptualization of integrity.

Evidence-Based Services System Model

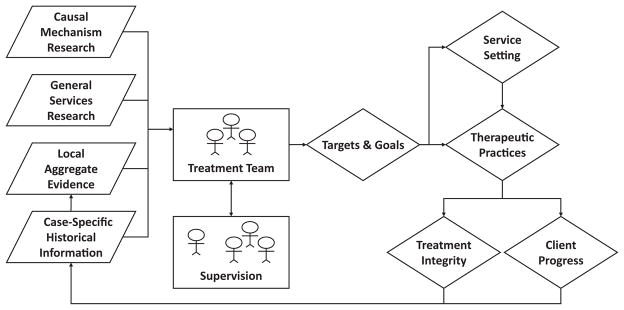

The last of the three conceptual models relevant for this series is the evidence-based services (EBS) system model described by Daleiden and Chorpita (2005). This model demonstrates how individual, agency-wide, and systemic decisions related to the selection of specific treatments can be made based upon several evidence bases. Developed as a means to reorganize mental health services in the wake of the Felix Consent Decree (e.g., Chorpita & Donkervoet, 2005; Nakamura et al., 2011), the model has broad application for mental health services and, most relevant to the current issue, depicts the important role of treatment integrity (see Figure 4).

Figure 4. The evidence-based services system model (Daleiden & Chorpita, 2005).

On the left side of the model are the four evidence bases upon which clinical services can be grounded. First is causal mechanism research, the science and theory related to the causes of mental health problems. Second is what is called general services research, basically the various clinical studies of specific EBTs, including the many randomized controlled trials most often cited as the gold standard. The third box represents local aggregate evidence, essentially those systematically collected data on practices and outcomes aggregated at a team, agency, or system level. Finally, there is case-specific historical information, those data collected on the outcomes and practices for a specific client. The model specifies that these four evidence bases influence choices made moving left to right across the model, such as (a) who will provide and supervise the treatment; (b) what specific problems will be targeted; and, most relevant to this issue, (c) several specific aspects of the services delivered, depicted on the far right side of the model. These include the therapeutic practices delivered (i.e., the specific psychological treatments provided) and the treatment integrity with which the therapeutic practices are delivered.

All five articles in the series reflect aspects of the EBS system model as here described. The Regan et al. (2013) article directly cites the EBS model, whereas the other four describe the importance of gathering evidence (the left side of the model) and using that evidence to inform the decisions depicted on the right side of the model. For example, Hogue et al. (2013) describe leveraging and building multiple evidence bases as a means to develop quality assurance processes. Other articles focus on the decisions to be made, as depicted on the right side of the model. For example, Schoenwald et al. (2013) focus somewhat on the supervisory decision, whereas McLeod et al. (2013) emphasize the feedback loop to the treatment team from data on integrity and outcome.

Summary

These three frameworks represent different and complementary perspectives on mental health care. The mental health systems ecological model takes the broadest perspective, emphasizing how different levels of the ecology can influence how a treatment is implemented. One could hypothesize, for example, that treatment integrity is influenced by factors at all levels of the ecology. The quality of care framework provides a structure for studying how the different structural and process factors interact and influence clinical outcomes. From this perspective, treatment integrity is viewed as a measure of a key process (i.e., the treatment provided to the client). Finally, the EBS system model focuses specifically on how treatment teams plan and execute services. Treatment integrity is specifically included in the EBS model as an important indicator of both the quality of treatment provided and the performance of individual therapists and/or teams of therapists. As described, these frameworks are reflected throughout the five articles of the series, either explicitly or implicitly. We now turn to a summary of each of the five articles.

ARTICLES IN THE SERIES

In the first article, McLeod et al. make the case for using the components of treatment integrity to identify quality indicators for children’s mental health services. Relying heavily on conceptual models drawn from health care and business, including application of the quality of care framework, they demonstrate how one could use treatment integrity measures to inform the establishment of feedback systems designed to optimize treatment integrity in clinical services settings, particularly through a novel application of benchmarking strategies.

In the second article, Garland and Schoenwald present a meta-analytic review of what they call quality control methods (including therapist training and supervision procedures). Their meta-analysis catalogues the use of various quality control methods across more than 300 studies of evidence-based psychosocial treatments. An important goal of their article was to delineate whether clinical trials had used the most effective and efficient methods as well as the extent to which there was variability in how the different methods were used for different treatment approaches, populations, or settings.

In the third article, Schoenwald et al. shift the emphasis of the issue from treatment to clinical supervision, underscoring the importance of supervision to treatment integrity. Specifically, they present data from a novel school-based intervention study called Links to Learning, in which the authors have adapted a model of supervision used in a successfully disseminated treatment, Multisystemic Therapy. They present quantitative and qualitative data relevant to adherence to the supervision model, with their findings highlighting some of the challenges inherent in bringing evidence-based practices to scale in diverse community settings.

In the fourth article, Hogue et al. argue that the approach to treatment integrity may need to shift to help promote sustainability of EBTs, a primary goal of D&I science. Specifically, they suggest that instead of purveyor-driven integrity measurement (treatment developer defines integrity and develops measurement tools), the field could develop and adopt localized approaches to measuring integrity. In the article, they present three different pathways to this outcome. First, they describe the adaptation of observational methods for therapists and/or supervisors to report integrity. Second, they suggest applying statistical process control methods to develop a benchmarking approach to integrity and quality assurance. Third, in a manner akin to the flexibility within fidelity approach for training therapists (see Kendall, Gosch, Furr, & Sood, 2008), they encourage purveyors to permit local customization of integrity procedures.

In the fifth article, Regan et al. provide a broad and systemic view of integrity. They do so first by clarifying the definition of integrity, simplifying it to refer to discrepancies between observed and expected values. With this conceptualization as a background, they introduce some relatively novel concepts into the treatment integrity scientific conversation. For example, they describe the traditional model of integrity measurement as monitoring a clinical episode to determine whether the expected ingredients (i.e., those suggested by the clinical evidence for the client’s target problems) are present. They also describe how the order of elements within a clinical episode could constitute integrity. In other words, some treatment practices may be optimal when they are used in conjunction with (or after) another practice. As an example, exposure may work better when preceded by psychoeducation. A third manner of integrity described by Regan et al. concerns the application of multiple treatment approaches across multiple episodes of care. In the end, the authors link these concepts to the activities of an organization working toward the most efficient and evidence-based approach to providing care to children and families.

CONCLUSIONS

In this article, we introduced the special issue on applications of treatment integrity research for dissemination and implementation science. In doing so, we provided definitions of key terms as well as a description of three different frameworks that influenced the five articles in the issue. Our aim in organizing this special issue was to inform readers of the journal about innovative advances in D&I science specifically related to treatment integrity. We hope that the issue will serve as an inspiration for a new generation of studies to move the field forward in its effort to provide scientific guidance for systems seeking to deliver the best possible services to as many children and families as possible.

Acknowledgments

Preparation of this article was supported in part by a grant from the National Institute of Mental Health (R01 MH086529; McLeod & Southam-Gerow).

References

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- Agency for Healthcare Research and Quality. AHRQ Publication No 07-0012. Rockville, MD: U.S. Department of Health and Human Services; 2006. National healthcare disparities report.

- Asay TP, Lambert MJ, Hubble MA, Duncan BL, Miller SD. The empirical case for the common factors in therapy: Quantitative findings. In: Hubble MA, Duncan BL, Miller SD, editors. The heart and soul of change: What works in therapy. Washington, DC: American Psychological Association; 1999. pp. 23–55.

- Barber JP, Foltz C, Crits-Christoph P, Chittams J. Therapists’ adherence and competence and treatment discrimination in the NIDA Collaborative Cocaine Treatment Study. Journal of Clinical Psychology. 2004;60(1):29–41. doi: 10.1002/jclp.10186.

- Barber JP, Sharpless BA, Klostermann S, McCarthy KS. Assessing intervention competence and its relation to therapy outcome: A selected review derived from the outcome literature. Professional Psychology: Research and Practice. 2007;38(5):493–500. doi: 10.1037/0735-7028.38.5.493.

- Beidas R, Kendall PC. Training therapists in evidence-based practice: A critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice. 2010;17(1):1–30. doi: 10.1111/j.1468-2850.2009.01187.x.

- Burnam MA, Hepner KA, Miranda J. Future research on psychotherapy practice in usual care. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36:1–5. doi: 10.1007/s10488-009-0254-7.

- Carroll KM, Nich C, Sifty RL, Nuro KF, Frankfurter TL, Ball SA, Rounsaville BJ. A general system for evaluating therapist adherence and competence in psychotherapy research. Drug and Alcohol Dependence. 2000;57(3):225–238. doi: 10.1016/S0376-8716(99)00049-6.

- Carroll KM, Nuro KF. One size cannot fit all: A stage model for psychotherapy manual development. Clinical Psychology: Science and Practice. 2002;9(4):396–406. doi: 10.1093/clipsy/9.4.396.

- Carroll KM, Rounsaville BJ. A vision of the next generation of behavioral therapies research in the addictions. Addiction. 2007;102(6):850–869. doi: 10.1111/j.1360-0443.2007.01798.x.

- Center for Substance Abuse Prevention. Finding the balance: Program fidelity and adaptation in substance abuse. Rockville, MD: SAMHSA, U.S. Department of Health and Human Services; 2001.

- Chambers DA, Ringeisen H, Hickman EE. Federal, state, and foundation initiatives around evidence-based practices for child and adolescent mental health. Child and Adolescent Psychiatric Clinics of North America. 2005;14(2):307–327. doi: 10.1016/j.chc.2004.04.006.

- Chambless DL, Ollendick TH. Empirically supported psychological interventions: Controversies and evidence. Annual Review of Psychology. 2001;52:685–716. doi: 10.1146/annurev.psych.52.1.685.

- Chorpita BF, Daleiden EL, Ebesutani C, Young J, Becker KD, Nakamura BJ, Starace N. Evidence-based treatments for children and adolescents: An updated review of indicators of efficacy and effectiveness. Clinical Psychology: Science and Practice. 2011;18(2):154–172. doi: 10.1111/j.1468-2850.2011.01247.x.

- Chorpita BF, Donkervoet C. Implementation of the Felix Consent Decree in Hawaii: The impact of policy and practice development efforts on service delivery. In: Steele RG, Roberts MC, editors. Handbook of mental health services for children, adolescents, and families. New York, NY: Kluwer; 2005. pp. 317–322.

- Chorpita BF, Nakamura BJ. Four considerations for dissemination of intervention innovations. Clinical Psychology: Science and Practice. 2004;11:364–367. doi: 10.1093/clipsy/bph093.

- Chu BC, Kendall PC. Positive association of child involvement and treatment outcome within a manual-based cognitive-behavioral treatment for children with anxiety. Journal of Consulting and Clinical Psychology. 2004;72(5):821–829. doi: 10.1037/0022-006X.72.5.821.

- Chu BC, Kendall PC. Therapist responsiveness to child engagement: flexibility within manual-based CBT for anxious youth. Journal of Clinical Psychology. 2009;65(7):736–755. doi: 10.1002/jclp.

- Clarke G, Debar L, Lynch F, Powell J, Gale J, O’Connor E, Hertert S. A randomized effectiveness trial of brief cognitive-behavioral therapy for depressed adolescents receiving antidepressant medication. Journal of the American Academy of Child and Adolescent Psychiatry. 2005;44(9):888–898. doi: 10.1097/01.chi.0000171904.23947.54.

- Daleiden EL, Chorpita BF. From data to wisdom: quality improvement strategies supporting large-scale implementation of evidence-based services. Child and Adolescent Psychiatric Clinics of North America. 2005;14:329–349. doi: 10.1016/j.chc.2004.11.002.

- Damschroder LJ, Hagedorn HJ. A guiding framework and approach for implementation research in substance use disorders treatment. Psychology of Addictive Behaviors. 2011;25(2):194–205. doi: 10.1037/a0022284.

- Dane AV, Schneider BH. Program integrity in primary and early secondary prevention: Are implementation effects out of control? Clinical Psychology Review. 1998;18:23–45. doi: 10.1016/S0272-7358(97)00043-3.

- Donabedian A. The quality of care: How can it be assessed? Journal of the American Medical Association. 1988;260(12):1743–1748. doi: 10.1001/jama.1988.03410120089033.

- Fairburn CG, Cooper Z. Therapist competence, therapy quality, and therapist training. Behaviour Research and Therapy. 2011;49(6–7):373–378. doi: 10.1016/j.brat.2011.03.005.

- Fixsen DL, Naoom SF, Blasé KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. (FMHI Publication #231)

- Forgatch MS, Bullock BM, Patterson GR, Steiner H. From theory to practice: Increasing effective parenting through role-play. In: Steiner H, editor. Handbook of mental health interventions in children and adolescents: An integrated developmental approach. San Francisco, CA: Jossey-Bass; 2004. pp. 782–813.

- Frank E, Kupfer DJ, Wagner EF, McEachran AB. Efficacy of interpersonal psychotherapy as a maintenance treatment of recurrent depression: Contributing factors. Archives of General Psychiatry. 1991;48:1053–1059. doi: 10.1001/archpsyc.1991.01810360017002.

- Garland AF, Bickman L, Chorpita BF. Change what? Identifying quality improvement targets by investigating usual mental health care. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37(1–2):15–26. doi: 10.1007/s10488-010-0279-y.

- Garland A, Schoenwald SK. Use of effective and efficient quality control methods to implement psychosocial interventions. Clinical Psychology: Science and Practice. 2013;20(1):33–43.

- Gresham FM. Treatment integrity in single-subject research. In: Franklin RD, Allison DB, Gorman BS, editors. Design and analysis of single-case research. Mahwah, NJ: Erlbaum; 1997. pp. 93–117.

- Hagermoser Sanetti LM, Gritter KL, Dobey LM. Treatment integrity of interventions with children in the school psychology literature from 1995 to 2008. School Psychology Review. 2011;40(1):72–84.

- Hagermoser Sanetti LM, Kratochwill TR. Treatment integrity assessment in the schools: An evaluation of the treatment integrity planning protocol. School Psychology Quarterly. 2009;24(1):24–35. doi: 10.1037/a0015431.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010;30(4):448–466. doi: 10.1016/j.cpr.2010.02.005.

- Hill CE, O’Grady KE, Elkin I. Applying the Collaborative Study Psychotherapy Rating Scale to therapist adherence in cognitive-behavior therapy, interpersonal therapy, and clinical management. Journal of Consulting and Clinical Psychology. 1992;60(1):73–79. doi: 10.1037/0022-006X.60.1.73.

- Hogue A. When technology fails: Getting back to nature. Clinical Psychology: Science and Practice. 2010;17(1):77–81. doi: 10.1111/j.1468-2850.2009.01196.x.

- Hogue A, Henderson CE, Dauber S, Barajas PC, Fried A, Liddle HA. Treatment adherence, competence, and outcome in individual and family therapy for adolescent behavior problems. Journal of Consulting and Clinical Psychology. 2008;76(4):544–555. doi: 10.1037/0022-006X.76.4.544.

- Hogue A, Liddle HA, Rowe C, Turner RM, Dakof GA, LaPann K. Treatment adherence and differentiation in individual vs. family therapy for adolescent substance abuse. Journal of Counseling Psychology. 1998;45(1):104–114. doi: 10.1037/0022-0167.45.1.104.

- Hogue A, Ozechowski TJ, Robbins MS, Waldron HB. Making fidelity an intramural game: Localizing quality assurance procedures to promote sustainability of evidence-based practices in usual care. Clinical Psychology: Science and Practice. 2013;20(1):60–77.

- Huey SJ, Henggeler SW, Brondino MJ, Pickrel SG. Mechanisms of change in multisystemic therapy: Reducing delinquent behavior through therapist adherence and improved family and peer functioning. Journal of Consulting and Clinical Psychology. 2000;68:451–467. doi: 10.1037/0022-006X.68.3.451.

- Hussey PS, Mattke S, Morse L, Ridgely MS. Evaluation of the use of AHRQ and other quality indicators. Prepared for the Agency for Healthcare Research and Quality. Rockville, MD: RAND Health; 2007.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- Jones HA, Clarke AT, Power TJ. Expanding the concept of intervention integrity: A multidimensional model of participant engagement. Balance, Newsletter of Division 53 (Clinical Child and Adolescent Psychology) of the American Psychological Association. 2008;23(1):4–5.

- Karver MS, Handelsman JB, Fields S, Bickman L. A theoretical model of common process factors in youth and family therapy. Mental Health Services Research. 2005;7:35–51. doi: 10.1007/s11020-005-1964-4.

- Karver MS, Handelsman JB, Fields S, Bickman L. Meta-analysis of therapeutic relationship variables in youth and family therapy: The evidence for different relationship variables in the child and adolescent treatment outcome literature. Clinical Psychology Review. 2006;26(1):50–65. doi: 10.1016/j.cpr.2005.09.001.

- Kazdin A. Methodology, design, and evaluation in psychotherapy research. In: Bergin AE, Garfield SL, editors. Handbook of psychotherapy and behavior change. 4th ed. Oxford, UK: John Wiley & Sons; 1994. pp. 19–71.

- Kendall PC, Gosch E, Furr JM, Sood E. Flexibility within fidelity. Journal of the American Academy of Child and Adolescent Psychiatry. 2008;47(9):987–993. doi: 10.1097/CHI.0b013e31817eed2f.

- Knox LM, Aspy CB. Quality improvement as a tool for translating evidence based interventions into practice: What the youth violence prevention community can learn from healthcare. American Journal of Community Psychology. 2011;48(1–2):56–64. doi: 10.1007/s10464-010-9406-x.

- McGlynn EA, Norquist GS, Wells KB, Sullivan G, Liberman RP. Quality-of-care research in mental health: Responding to the challenge. Inquiry: A Journal of Medical Care Organization, Provision and Financing. 1988;25(1):157–170. Retrieved from http://www.jstor.org/stable/29771940.

- McLeod BD. Relation of the alliance with outcomes in youth psychotherapy: A meta-analysis. Clinical Psychology Review. 2011;31(4):603–616. doi: 10.1016/j.cpr.2011.02.001.

- McLeod BD, Southam-Gerow MA, Tully CB, Rodríguez A, Smith MM. Making a case for treatment integrity as a psychosocial treatment quality indicator for youth mental health care. Clinical Psychology: Science and Practice. 2013;20(1):14–32. doi: 10.1111/cpsp.12020.

- McLeod BD, Southam-Gerow MA, Weisz JR. Conceptual and methodological issues in treatment integrity measurement. School Psychology Review. 2009;38(4):541–546.

- McLeod BD, Weisz JR. Using dissertations to examine potential bias in child and adolescent clinical trials. Journal of Consulting and Clinical Psychology. 2004;72(2):235–251. doi: 10.1037/0022-006X.72.2.235.

- Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, Wells KB. Interventions in organizational and community context: a framework for building evidence on dissemination and implementation in health services research. Administration and Policy in Mental Health. 2008;35:21–37. doi: 10.1007/s10488-007-0144-9.

- Nakamura BJ, Chorpita BF, Hirsch M, Daleiden E, Slavin L, Amundson MJ, Vorsino WM. Large-scale implementation of evidence-based treatments for children 10 years later: Hawaii’s evidence-based services initiative in children’s mental health. Clinical Psychology: Science and Practice. 2011;18(1):24–35. doi: 10.1111/j.1468-2850.2010.01231.x.

- Norcross JC, editor. Psychotherapy relationships that work: Evidence-based responsiveness. 2nd ed. New York, NY: Oxford University Press; 2011.

- Perepletchikova F. On the topic of treatment integrity. Clinical Psychology: Science and Practice. 2011;18(2):148–153. doi: 10.1111/j.1468-2850.2011.01246.x.

- Perepletchikova F, Kazdin AE. Assessment of parenting practices related to conduct problems: Development and validation of the management of children. Journal of Child and Family Studies. 2004;13(4):385–403. doi: 10.1023/B:JCFS.0000044723.45902.70.

- Perepletchikova F, Kazdin AE. Treatment integrity and therapeutic change: Issues and research recommendations. Clinical Psychology: Science and Practice. 2005;12:365–383. doi: 10.1093/clipsy/bpi045.

- Perepletchikova F, Treat TA, Kazdin AE. Treatment integrity in psychotherapy research: Analysis of the studies and examination of the associated factors. Journal of Consulting and Clinical Psychology. 2007;75:829–841. doi: 10.1037/0022-006X.75.6.829.

- Pincus HA, Spaeth-Rublee B, Watkins KE. Analysis and commentary: The case for measuring quality in mental health and substance abuse care. Health Affairs (Project Hope). 2011;30(4):730–736. doi: 10.1377/hlthaff.2011.0268.

- Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- Regan J, Daleiden EL, Chorpita BF. Integrity in mental health systems: An expanded framework for managing uncertainty in clinical care. Clinical Psychology: Science and Practice. 2013;20(1):78–98.

- Rounsaville BJ, Carroll KM, Onken LS. A stage model of behavioral therapies research: Getting started and moving on from Stage I. Clinical Psychology: Science and Practice. 2001;8(2):133–142. doi: 10.1093/clipsy/8.2.133.

- Schoenwald SK, Carter RE, Chapman JE, Sheidow AJ. Therapist adherence and organizational effects on change in youth behavior problems one year after multisystemic therapy. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35(5):379–394. doi: 10.1007/s10488-008-0181-z.

- Schoenwald SK, Garland AF, Southam-Gerow MA, Chorpita BF, Chapman JE. Adherence measurement in treatments for disruptive behavior disorders: Pursuing clear vision through varied lenses. Clinical Psychology: Science and Practice. 2011;18(4):331–341. doi: 10.1111/j.1468-2850.2011.01264.x.

- Schoenwald SK, Hoagwood K. Effectiveness, transportability, and dissemination of interventions: What matters when? Psychiatric Services. 2001;52(9):1190–1197. doi: 10.1176/appi.ps.52.9.1190.

- Schoenwald SK, Mehta TG, Frazier SL, Shernoff ES. Clinical supervision in effectiveness and implementation research. Clinical Psychology: Science and Practice. 2013;20(1):44–59.

- Schoenwald SK, Sheidow AJ, Chapman JE. Clinical supervision in treatment transport: Effects on adherence and outcomes. Journal of Consulting and Clinical Psychology. 2009;77:410–421. doi: 10.1037/a0013788.

- Schoenwald SK, Sheidow AJ, Letourneau EJ. Toward effective quality assurance in evidence-based practice: Link between expert consultation, therapist fidelity, and child outcomes. Journal of Clinical Child and Adolescent Psychology. 2004;33:94–104. doi: 10.1207/S15374424JCCP3301_10.

- Seidman E, Chorpita BF, Reay WE, Stelk W, Garland AF, Kutash K, Ringeisen H. A framework for measurement feedback to improve decision-making in mental health. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37(1–2):128–131. doi: 10.1007/s10488-009-0260-9.

- Shirk SR, Karver MS. Alliance in child and adolescent psychotherapy. In: Norcross JC, editor. Psychotherapy relationships that work: Evidence-based responsiveness. 2nd ed. New York, NY: Oxford University Press; 2011. pp. 70–91.

- Simons AD, Padesky CA, Montemorano J, Lewis CC, Marukami J, Lamb K, Beck AT. Training and dissemination of cognitive behavior therapy for depression in adults: A preliminary examination of therapist competence and client outcomes. Journal of Consulting and Clinical Psychology. 2010;78(5):751–756. doi: 10.1037/a0020569.

- Southam-Gerow MA, Marder AM, Austin AA. Transportability and dissemination of evidence-based manualized treatments in clinical settings. In: Steele RG, Elkin TD, Roberts MC, editors. Handbook of evidence based therapies for children and adolescents. New York, NY: Springer; 2008. pp. 447–469.

- Southam-Gerow MA, Ringeisen HL, Sherrill JT. Integrating interventions and services research: Progress and prospects. Clinical Psychology: Science and Practice. 2006;13(1):1–8. doi: 10.1111/j.1468-2850.2006.00001.x.

- Southam-Gerow MA, Weisz JR, Chu BC, McLeod BD, Gordis EB, Connor-Smith JK. Does cognitive behavioral therapy for youth anxiety outperform usual care in community clinics? An initial effectiveness test. Journal of the American Academy of Child and Adolescent Psychiatry. 2010;49(10):1043–1052. doi: 10.1016/j.jaac.2010.06.009.

- Tang MH, Hill KS, Boudreau AA, Yucel RM. Medicaid managed care and the unmet need for mental health care among children with special health care needs. Health Services Research. 2008;43(3):882–900. doi: 10.1111/j.1475-6773.2007.00811.x.

- Waltz J, Addis ME, Koerner K, Jacobson NS. Testing the integrity of a psychotherapy protocol: Assessment of adherence and competence. Journal of Consulting and Clinical Psychology. 1993;61(4):620–630. doi: 10.1037/0022-006X.61.4.620.

- Webb CA, DeRubeis RJ, Barber JP. Therapist adherence/competence and treatment outcome: A meta-analytic review. Journal of Consulting and Clinical Psychology. 2010;78(2):200–211. doi: 10.1037/a0018912.

- Weisz JR, Jensen-Doss A, Hawley KM. Youth psychotherapy outcome research: A review and critique of the literature. Annual Review of Psychology. 2005;56:337–363. doi: 10.1146/annurev.psych.55.090902.141449.

- Weisz JR, Southam-Gerow MA, Gordis EB, Connor-Smith JK, Chu BC, Langer DA, Weiss B. Cognitive-behavioral therapy versus usual clinical care for youth depression: An initial test of transportability to community clinics and clinicians. Journal of Consulting and Clinical Psychology. 2009;77(3):383–396. doi: 10.1037/a0013877.