Abstract

Objective. To incrementally create and embed biannual integrated knowledge and skills examinations into final examinations of the pharmacy practice courses offered in the first 3 years of the pharmacy curriculum that would account for 10% of each course’s final course grade.

Design. An ad hoc integrated examination committee was formed and tasked with addressing 4 key questions. Integrated examination committees for the first, second, and third years of the curriculum were established and tasked with identifying the most pertinent skills and knowledge-based content from each required course in the curriculum, developing measurable objectives addressing the pertinent content, and creating or revising multiple-choice and performance-based questions derived from integrated examination objectives. An Integrated Examination Review Committee evaluated all test questions, objectives, and student performance on each question, and revised the objectives and questions as needed for the following year’s iteration. Eight performance objectives for the examinations were measured.

Assessment. All 8 performance objectives were achieved. Sixty-four percent of the college’s faculty members participated in the integrated examination process, improving the quality of the examination. The incremental development and implementation of the examinations over a 3-year period minimized the burden on faculty time while engaging them in the process. Student understanding of expectations for knowledge and skill retention in the curriculum also improved.

Conclusions. Development of biannual integrated examinations in the first 3 years of the classroom curriculum enhanced the college’s culture of assessment and addressed accreditation guidelines for formative and summative assessment of students’ knowledge and skills. The examinations will continue to be refined each year.

Keywords: assessment, evaluation, integrated, progress exams, milestone exams

INTRODUCTION

Ensuring that all students build and retain a core foundation of knowledge and skills is a requirement and growing concern for doctor of pharmacy (PharmD) programs.1 Annual progress examinations (eg, milestone, mile-marker or benchmark examinations) have received increasing support from many colleges and schools of pharmacy as the tool for measuring students’ retention of core knowledge and skills for future clinical application.2-7 Despite the attention annual progress examinations have received in the academy and in accreditation standards, questions remain about what test to use and how and when to use it. After reviewing the literature2-7 about these progress examinations and evaluating the Accreditation Council for Pharmacy Education (ACPE)1 Standards and Guidelines for guidance, the University of Oklahoma College of Pharmacy incrementally created biannual integrated knowledge and skills examinations and embedded them into the final examinations of pharmacy practice courses (I – VI) offered in the first 3 years. The development of these examinations was based on ACPE Guideline 15.1, which calls for “periodic, psychometrically sound, comprehensive, knowledge-based, and performance-based formative and summative assessments,” and Standard 13, which requires programs to integrate, apply, reinforce and advance knowledge, skills and attitudes throughout the curriculum.1 However, the ACPE standards offer little guidance regarding which examination to use and how and when to offer the examination. This paper describes the development and implementation of the integrated examinations for the first 3 years of the classroom curriculum, embedding each integrated examination into the final examinations of the pharmacy practice course series (I – VI). 
To ease the stress of change in the college’s assessment program, these examinations were developed and implemented incrementally over a 3-year period, minimizing the burden on faculty members’ time and engaging them in the development of a process that requires a sustained effort.

DESIGN

The incremental development of the examination began in the summer of 2008 when the college charged an ad hoc assessment committee of faculty members and preceptors to explore the feasibility and sustainability of biannual progress examinations. The committee was presented with 4 specific questions to address that would impact how the college created its examinations: (1) Should the examinations be locally developed or nationally developed to enable benchmarking?2-5 (2) Should colleges or schools offer the examination every year, every semester, or immediately prior to advanced pharmacy practice experiences, or would a different timetable be better? The answer to this question impacts the use and frequency of cumulative examination questions; specifically, should these questions be cumulative per year or cumulative across all years of the professional program?6-7 (3) Which question type should be used: multiple-choice, written essay or case-based, objective structured clinical examinations (OSCEs), or other performance-based assessments? (4) Should the examination be high stakes and affect progression in the program or a course grade, or low stakes and have no impact on grades or progression?6-7 If the examination is low stakes, students may not study and may subsequently perform poorly, impacting the ability to make interpretations about the results. In contrast, high-stakes examinations carry their own implications.6,7 For example, what if students fail the examination but are in good academic standing?

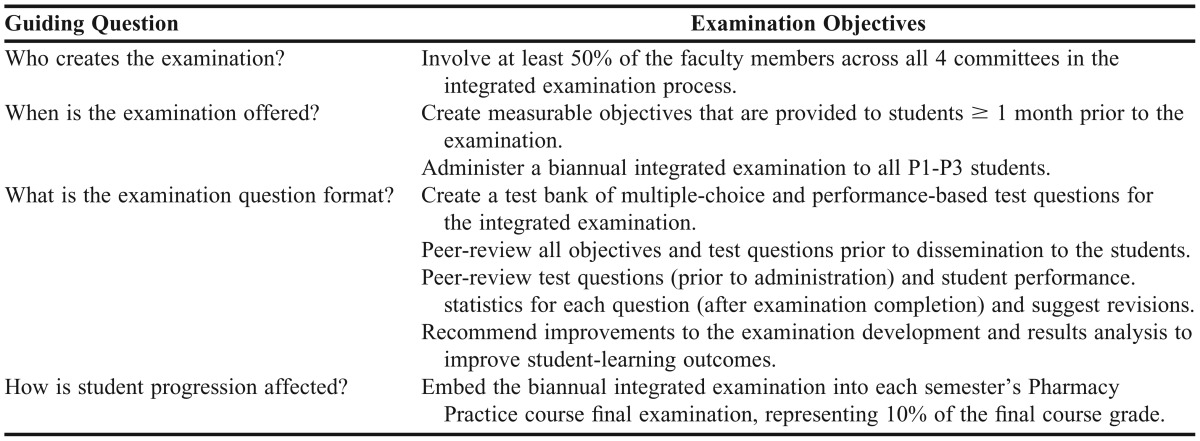

The 4 guiding questions were addressed with 8 integrated examination objectives (Table 1). The committee concluded that embedding knowledge- and skills-based progress examinations into the final examinations of the college’s existing P1-P3 pharmacy practice course series (I-VI) was feasible and sustainable.

Table 1.

Guiding Questions and Associated Objectives for the Integrated Examination

The college began its work of incrementally creating integrated examinations for use in the classroom curriculum by first focusing on committee formation. A first-year (P1) integrated examination committee was formed in fall 2008 that included the course coordinators within the specific year; assessment and curriculum committee members; and basic, clinical, and administrative sciences faculty members. This committee was charged with identifying the most pertinent knowledge and skills for each course for each semester and then developing measurable objectives with corresponding multiple-choice and performance-based test questions that would account for 10% of the final course grade in the pharmacy practice course. A second-year (P2) integrated examination committee was formed in 2009 and a third-year (P3) integrated examination committee was formed in 2010, each with a composition similar to that of the P1 committee and the same charges. In 2010, an Integrated Examination Review Committee, composed of curriculum and assessment committee members with training in writing items for national examinations, was established. The review committee was charged with peer-reviewing all objectives and test questions before and after each integrated examination, reviewing test statistics, and suggesting changes to objectives and test questions when needed.

EVALUATION AND ASSESSMENT

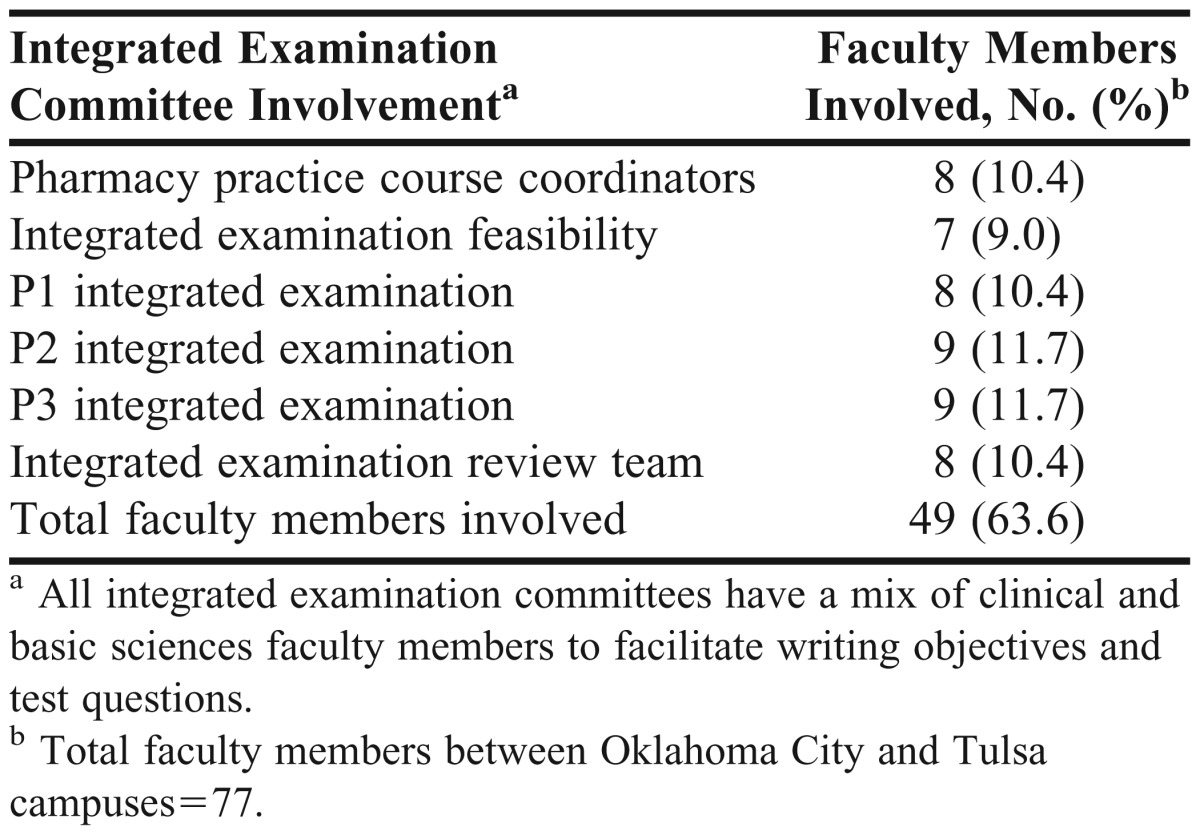

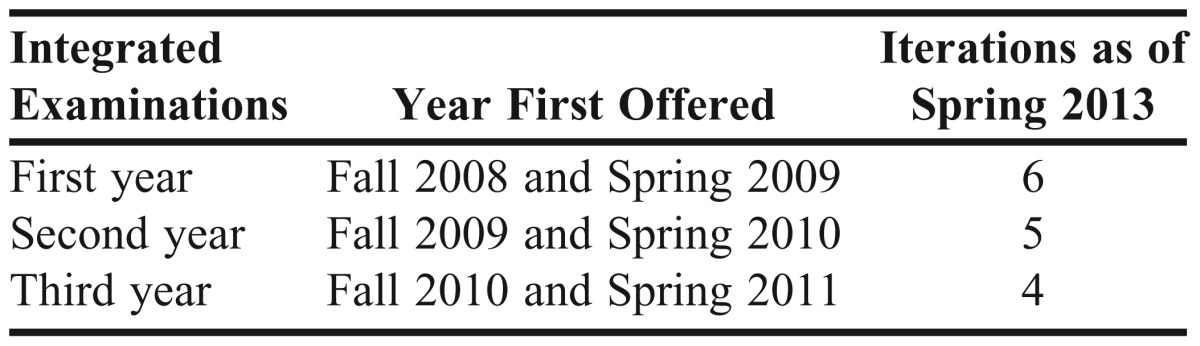

Sixty-four percent of the college’s faculty members participated in the integrated examination process (Table 2) between 2008 and 2013, meeting objective 1 of having at least 50% of faculty members involved (Table 1). Students received a list of examination objectives covering all of their courses for that given semester at least 1 month prior to the examination to assist them in their examination preparation, meeting objective 2. Two examples of the objectives students received for the third-year fall examination are: (1) given a patient case, select the best therapeutic plan for prevention or treatment of osteoporosis, and (2) select an appropriate statistical test after considering the level of data measurement and type of research design. Beginning in fall 2010, a biannual examination was administered to students each semester in the first 3 years of the professional curriculum, achieving objective 3 (Table 3).

Table 2.

Faculty Members Involved in the Integrated Examination Process

Table 3.

Number of Iterations of Examinations for Each Year of the Pharmacy Curriculum

To address objective 4, multiple-choice and written case-based test questions were created and used on the integrated examination, and a test bank of multiple-choice and case-based questions was continually updated and expanded. All P1-P3 objectives and test questions were reviewed prior to objective dissemination and test administration each semester, meeting the requirements of objective 5. The review process yielded an additional benefit: it provided faculty development in objective and test-item writing.

For objective 6, the Integrated Examination Review Committee reviewed student performance statistics for each question and suggested revisions. For test-question review, point biserial correlations of 0.2 and higher were considered desirable; other factors included percentage of students answering each question correctly and the overall percentage of the class selecting the correct answer. On questions for which all students (100%) provided a correct answer or a majority (>50%) answered incorrectly, the Integrated Examination Review Committee consulted with the integrated examination committee for that specific class year, as well as the faculty member(s) teaching the related content, for insight about the results. In some instances, no changes were made to the question, although the faculty members who teach the content were made aware of the results so that content could be clarified or emphasized as needed. This feedback was important because each integrated examination committee and the course faculty members had determined the most relevant content for each course.
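
The item-review criteria described above can be illustrated with a minimal sketch. It is not the committee's actual procedure or software: the function names are hypothetical, the point biserial is computed against the uncorrected total score (the item itself is included in the total), and treating a point biserial below 0.2 as a trigger for follow-up is an assumption, since the text only describes values of 0.2 and higher as desirable.

```python
from statistics import mean, pstdev


def item_statistics(item_scores, total_scores):
    """Return (proportion_correct, point_biserial) for one right/wrong item.

    item_scores  -- 1 if the student answered correctly, else 0
    total_scores -- each student's total examination score (same order)
    """
    n = len(item_scores)
    p = sum(item_scores) / n
    sd = pstdev(total_scores)  # population standard deviation of totals
    # The correlation is undefined if everyone answered the same way
    # or if the total scores do not vary.
    if p in (0.0, 1.0) or sd == 0:
        return p, None
    mean_correct = mean(t for i, t in zip(item_scores, total_scores) if i == 1)
    mean_incorrect = mean(t for i, t in zip(item_scores, total_scores) if i == 0)
    r_pb = (mean_correct - mean_incorrect) / sd * (p * (1 - p)) ** 0.5
    return p, r_pb


def needs_review(p, r_pb, min_r_pb=0.2):
    """Flag an item for follow-up (thresholds are illustrative assumptions)."""
    if p == 1.0 or p < 0.5:  # all correct, or a majority incorrect
        return True
    return r_pb is None or r_pb < min_r_pb


# Hypothetical data: 5 students, one item, and their total scores.
p, r_pb = item_statistics([1, 1, 1, 0, 0], [9, 8, 7, 5, 4])
print(round(p, 2), round(r_pb, 2), needs_review(p, r_pb))
```

A flag in this sketch would only prompt the kind of consultation described above; it would not by itself mean that a question is changed.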

During the college’s experience with integrated examinations, continuous improvements were made to the examination development process and results analysis to improve student-learning outcomes (objective 7). For instance, guidelines were created to standardize administration and review of each examination (data analysis) to ensure consistency among examinations and years. The Integrated Examination Review Committee specifically analyzed how students’ performance on the course-specific integrated examination questions correlated with specific course performance (final percentage grade received). The correlation analysis for 1 course and the integrated examination questions affiliated with that course revealed a variable association between how well students did in a particular course and how well the students performed on the course-related integrated-examination questions. The variability in these results revealed the need for an increased number of test questions offered from each course so that the college could better associate specific integrated examination results with the related course grades.
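
As a rough illustration of the correlation analysis described above, the sketch below computes a Pearson correlation between students' scores on the integrated examination questions tied to one course and their final percentage grades in that course. The source does not state which correlation coefficient the committee used, so the choice of Pearson's r, the function name, and the data are all assumptions for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical data: number correct out of 3 course-related questions,
# and the same students' final course percentage grades.
course_question_scores = [3, 2, 2, 1, 3, 0]
final_course_grades = [91.0, 84.5, 78.0, 80.0, 88.0, 72.5]
print(round(pearson_r(course_question_scores, final_course_grades), 2))
```

A subscore built from only a few questions can take only a few distinct values, which is one reason a correlation of this kind may be unstable when each course contributes few questions.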

To achieve objective 8, the biannual integrated examination was embedded into the final examination of each semester’s Pharmacy Practice course and accounted for 10% of the final course grade, giving this assessment method substantial weight and making it possible to manage poor performances within the college’s existing academic standing policies. This approach avoided the problems of a high-stakes examination yet raised the stakes for student preparation and accountability throughout the curriculum.
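
The effect of the 10% weighting can be shown with a small worked sketch. The source states only that the integrated examination accounted for 10% of the final course grade; treating the remaining 90% as a single composite percentage for all other course components is an assumption, and the function name is hypothetical.

```python
def final_course_grade(other_components_pct, integrated_exam_pct, exam_weight=0.10):
    """Combine the integrated examination with the rest of the course grade.

    other_components_pct -- composite percentage for everything else in the
                            course (assumed to carry the remaining weight)
    integrated_exam_pct  -- percentage score on the integrated examination
    exam_weight          -- share of the final grade (10% in the source)
    """
    return (1 - exam_weight) * other_components_pct + exam_weight * integrated_exam_pct


# Hypothetical student: 85% on other course components, 60% on the
# integrated examination.
print(round(final_course_grade(85.0, 60.0), 1))
```

Under this assumption, even a score of 0% on the integrated examination lowers the final grade by no more than 10% of the composite score, which is consistent with the description of raised, but not high, stakes.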

DISCUSSION

Using an examination created by the college’s faculty members has been a successful strategy because faculty members are charged with determining the important knowledge and skills within the courses they teach in the professional curriculum. This process involves well over 50% of the faculty members in the college in the complexities of developing, administering, and assessing measures of student performance across the professional curriculum. All 3 committees, along with the help of the review committee, continue to meet and revise the examinations. Peer review of objectives and test questions by senior-level faculty members with national test-writing experience improves the quality of the overall assessment, and the critiques offered contribute to important faculty development in writing effective objectives and test questions. Having the college’s faculty members create the integrated examination allows them to emphasize the learning and retention expectations for core knowledge and skills in the professional curriculum. Broad faculty involvement is important in creating and sustaining a culture of assessment, as involvement by only a few faculty members would perpetuate a “silo” mentality of focusing only on the delivery and assessment of individual courses. Broad involvement contributes to faculty understanding of the curriculum as an organic whole, with each component course supporting knowledge, skill, and attitude development for the broad curricular outcomes. Additionally, broad faculty involvement supports our college’s value of continual quality improvement of the curriculum. It is hard to have a meaningful and informed discussion about the effectiveness of the curriculum when a majority of faculty members can speak only about the effectiveness of the course(s) they teach and even then do so in isolation from the rest of the curriculum.
The college’s emphasis on broad involvement has helped faculty members see the whole curriculum and understand how their collective efforts contribute to the terminal outcomes of our program. Faculty members’ appreciation for broad involvement has evolved over time. In the beginning, they contributed to the examination creation by articulating and creating questions to assess the most relevant content. Now faculty members are asking questions about the results in relation to the curriculum and are interested in the examination process, such as the timing of the objectives and examination creation during a semester. As a result of their involvement, the process of creating objectives and test questions will move from the fall and spring semesters to the preceding summer timeframe to better accommodate faculty members’ schedules.

Distributing the examination objectives to students 1 month in advance gives them time to prepare. Although student motivation to prepare for the examination has not been evaluated, embedding the integrated examination into the final examination of each semester’s Pharmacy Practice course successfully increases the stakes of the examination. We find that embedding the examination into an existing course also facilitates examination administration and allows poor performance to be managed within the college’s recognized academic standing policies. In contrast, some programs use high-stakes examinations on which failure could interrupt students’ progression in the program. Justification and enforcement of this outcome could be difficult, especially if the student has passed all courses and has a grade-point average above 2.0. Other programs make the examinations low stakes, wherein there is no penalty for failure, which may affect student motivation for preparation.

One challenge with the integrated examination is creating new questions and psychometrically evaluating them for inclusion in the test bank. The college would like to find an efficient yet cost-effective way to enter the test questions in a database and is evaluating tools such as ExamSoft (ExamSoft Worldwide Inc., Boca Raton, FL) testing software to facilitate this process. The challenges related to test-bank creation and management highlight the limitations of using a college-specific integrated examination compared with using a nationally standardized examination, such as the Pharmacy Curriculum Outcomes Assessment, for which teams of item writers and statisticians are available to build and refine the test bank, and benchmarking of items is available. Despite these limitations, the college still embraces the advantages of college-created examinations for which faculty members who are teaching the curriculum determine vital content, write questions directly based on that content, receive feedback about students’ retention of the content, and manage poor performance within academic standing policies. The benefits of this approach outweigh the limitations because the integrated examination process facilitates the college’s quality-improvement efforts directly related to its curriculum, allowing refinement of its teaching and assessment programs, as needed.

The main focus of the integrated examination process to date has been on process, specifically creation and delivery of the examinations. Next, the college will focus on student learning outcomes, such as ensuring that questions and objectives are tagged to our curricular outcomes, tracking student performance on these outcomes, evaluating how well the students are meeting the outcomes, and creating examination report cards so students can receive feedback about areas of strength and those in need of improvement. The college is also expanding the scope and range of questions used on the integrated examination. Efforts are under way to make each examination cumulative by including test questions from the previous semester or year on future iterations of the examination to evaluate students’ long-term retention of material. The cumulative questions will be incrementally introduced by first making the examinations cumulative within a year; then the examinations will become cumulative across years. The college item-writers are constructing more integrated test questions based on a patient case, and the integrated examination committees are exploring the development of a more performance-based or OSCE-type component to the examination in the P3 spring semester. Students not performing at expected levels could be required to complete a brief remediation program prior to beginning advanced pharmacy practice experiences, but the administration and implications of this process are still under review.

SUMMARY

The development of biannual integrated examinations for the first 3 years of the classroom curriculum at the University of Oklahoma College of Pharmacy involves a majority of faculty members, is incrementally implemented, and includes peer review of test questions, examination objectives, and test results. Incorporation of the integrated examination enhanced the college’s culture of assessment by facilitating faculty members’ understanding of the complexities of writing objectives and test items and reviewing examinations and by helping students better understand expectations for knowledge and skill retention in the curriculum. The incremental development and implementation of the examinations over a 3-year period minimized the burden on faculty time while engaging them in the development of an ongoing process. The examinations will continue to be refined.

ACKNOWLEDGEMENTS

The authors thank the integrated examination, assessment, and curriculum committee members at the University of Oklahoma College of Pharmacy for their time and contributions to implementation and refinement of the integrated examination. The authors also thank the American Association of Colleges of Pharmacy for their recognition of this work with the 2012 Assessment Award.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. 2007. http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.DOC. Accessed August 24, 2012.

- 2. Szilagyi JE. Curricular progress assessments: the Milemarker. Am J Pharm Educ. 2008;72(5):Article 101. doi: 10.5688/aj7205101.

- 3. Sansgiry SS, Chanda S, Lemke TL, Szilagyi JE. Effect of incentives on student performance on Milemarker examinations. Am J Pharm Educ. 2006;70(5):Article 103. doi: 10.5688/aj7005103.

- 4. Sansgiry SS, Nadkarni A, Lemke T. Perceptions of PharmD students towards a cumulative examination: the Milemarker process. Am J Pharm Educ. 2004;68(4):Article 93.

- 5. Alston GL, Love BL. Development of a reliable, valid annual skills mastery assessment examination. Am J Pharm Educ. 2010;74(5):Article 80. doi: 10.5688/aj740580.

- 6. Kirschenbaum HL, Brown ME, Kalis MM. Programmatic curricular outcomes assessment at colleges and schools of pharmacy in the United States and Puerto Rico. Am J Pharm Educ. 2006;70(1):Article 08. doi: 10.5688/aj700108.

- 7. Plaza CM. Progress examinations in pharmacy education. Am J Pharm Educ. 2007;71(4):Article 66. doi: 10.5688/aj710466.