Abstract

We consider median regression with a LASSO-type penalty term for variable selection. With a fixed number of variables in the regression model, a two-stage method is proposed for simultaneous estimation and variable selection in which the degree of penalty is chosen adaptively. A Bayesian information criterion type approach is proposed and used to obtain a data-driven procedure that is proved to select asymptotically optimal tuning parameters automatically. It is shown that the resultant estimator achieves the so-called oracle property. The combination of median regression and the LASSO penalty is computationally easy to implement via standard linear programming. A random perturbation scheme can be used to obtain a simple estimator of the standard error. Simulation studies are conducted to assess the finite-sample performance of the proposed method. We illustrate the methodology with a real example.

Keywords: Variable selection, Median regression, Least absolute deviations, Lasso, Perturbation, Bayesian information criterion

1 Introduction

In the general linear model with independent and identically distributed errors, the Least Absolute Deviation (LAD) or L1 method has been a viable alternative to the least squares method especially for its superior robustness properties. Consider the linear regression model

$$Y_i = x_i^T \beta_0 + e_i, \quad i = 1, \ldots, n, \tag{1}$$

where xi are known p-vectors, β0 the unknown p-vector of regression coefficients, and ei the i.i.d random errors with a common distribution F.

The L1 estimator β̂L1 is defined as a minimizer of the L1 loss function

$$L_n(\beta) = \sum_{i=1}^n |Y_i - x_i^T \beta|. \tag{2}$$

Although there is no explicit analytic form for β̂L1, the minimization may be carried out easily via linear programming (see for example, Koenker and D’Orey 1987). The more recent paper by Portnoy and Koenker (1997) gives speedy ways to compute the L1 minimization, even for very large problems.
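The reduction of L1 regression to linear programming can be sketched as follows. This is a minimal illustration, not the algorithm of Koenker and D'Orey (1987) or Portnoy and Koenker (1997): it assumes SciPy's general-purpose `linprog` solver, and the function name `lad_fit` is hypothetical. Each residual is split into nonnegative parts u_i and v_i, so that minimizing the sum of u_i + v_i minimizes the sum of absolute residuals.

```python
import numpy as np
from scipy.optimize import linprog


def lad_fit(X, y):
    """Least absolute deviations fit via a generic LP solver (illustrative sketch)."""
    n, p = X.shape
    # Decision variables: beta (p, free), u (n, >= 0), v (n, >= 0),
    # linked by the constraint X beta + u - v = y, so |residual_i| = u_i + v_i.
    cost = np.concatenate([np.zeros(p), np.ones(2 * n)])
    A_eq = np.hstack([X, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * p + [(0, None)] * (2 * n)
    res = linprog(cost, A_eq=A_eq, b_eq=y, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]
```

With a single constant covariate the fit reduces to the sample median, which gives a quick sanity check of the formulation.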

An important aspect of (regression) model building is model (variable) selection. For least squares-based regression, there are a number of well-established methods, including the Akaike Information Criterion (AIC), the Bayesian Information Criterion (BIC), Mallows's Cp, and others. These approaches are characterized by two basic elements: a goodness-of-fit measure and a complexity index. The selection criteria are typically based upon various ways of balancing the two elements so that the resultant prediction errors are minimized. Remarkably, for L1-based regression, there has been very little work on model selection. Hurvich and Tsai (1990) proposed a (double-exponential) likelihood function-based criterion and studied its small-sample properties via simulations. A robust modification of Mallows's Cp was proposed by Ronchetti and Staudte (1994) under the framework of M-estimation. Developing general variable selection methods with a sound theoretical foundation and feasible implementation for L1-based regression remains a great challenge.

An intriguing and novel recent advancement in variable selection is known as Basis Pursuit, proposed by Chen and Donoho (1994), or the Least Absolute Shrinkage and Selection Operator (LASSO), proposed by Tibshirani (1996). In it, estimation and model selection are treated simultaneously as a single minimization problem. Knight and Fu (2000) established some asymptotic results for LASSO-type estimators. Fan and Li (2001) introduced the Smoothly Clipped Absolute Deviation (SCAD) approach and proved its optimal properties. Efron et al. (2004) introduced the Least Angle Regression (LARS) algorithm and discussed its close connection to LASSO extensively.

With fixed p and invertible XT X, the least squares estimate β̂LS = (XT X)−1XT Y uniquely minimizes the squared loss

$$\sum_{i=1}^n (Y_i - x_i^T \beta)^2.$$

The LASSO estimate is defined as the minimizer of

$$\sum_{i=1}^n (Y_i - x_i^T \beta)^2 \quad \text{subject to} \quad \sum_{j=1}^p |\beta_j| \le s \sum_{j=1}^p |\hat\beta_{LS,j}|,$$

where 0 ≤ s ≤ 1 controls the amount of shrinkage that is applied to the estimates.

LASSO is similar in form to ridge regression, where the term in the constraint is $\beta_j^2$ rather than |βj|. A remarkable feature of LASSO, as a result of the L1 constraint, is that the fitted values of some βj are exactly 0. In fact, as the shrinkage parameter s goes from 1 to 0, the estimates go from none being 0 to all being 0. LASSO can also be regarded as a penalized least squares estimator with an L1 penalty, that is, a minimizer of the objective function

$$\sum_{i=1}^n (Y_i - x_i^T \beta)^2 + \lambda_n \sum_{j=1}^p |\beta_j|,$$

where λn is the tuning parameter.

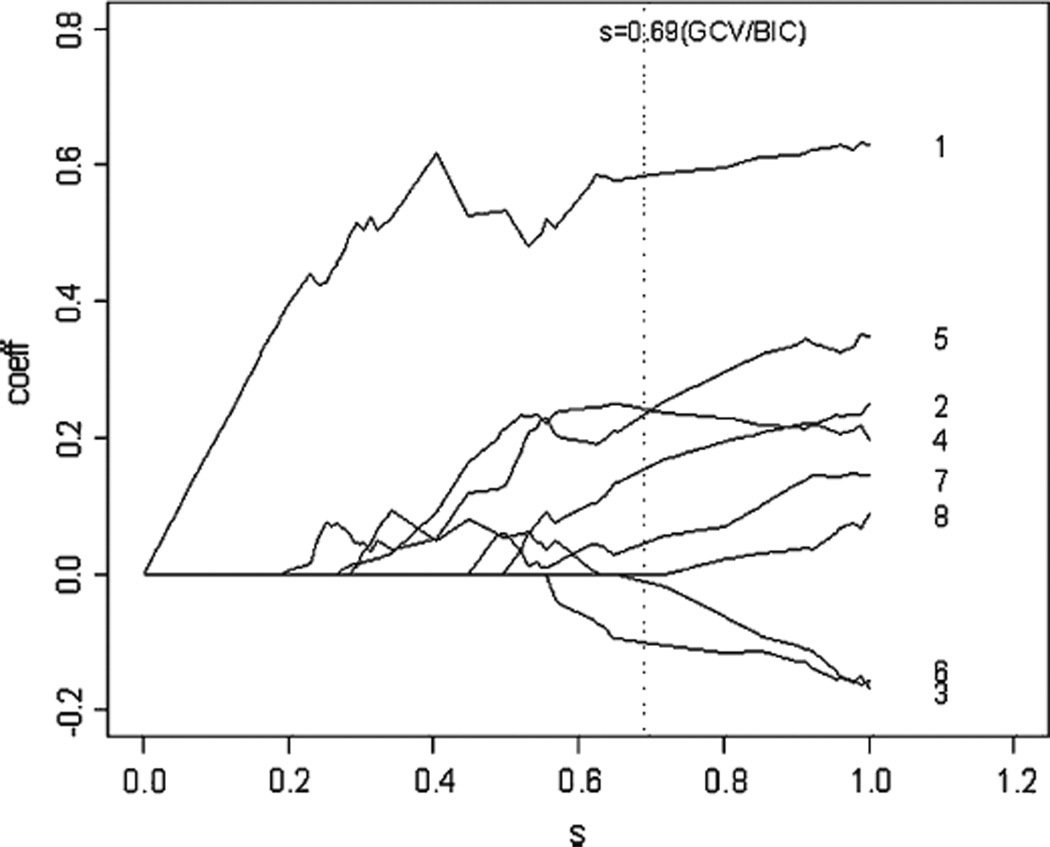

In the present paper, we propose a parallel approach that borrows the ideas of LASSO by using the L1 penalty, but with the least squares loss replaced by the L1 loss. In doing so, we gain advantages on two fronts. First, it allows us to address the difficult problem of variable selection for L1 regression. Appealingly, the shrinkage property of the LASSO estimator continues to hold in L1 regression; see Fig. 1. Second, because both components of the single criterion function are of L1 type, the minimization reduces numerically to a linear programming problem, making any resulting methodology extremely easy to implement.

Fig. 1.

Graphical display of LASSO shrinkage of eight coefficients as a function of shrinkage parameter s in the prostate cancer example. The broken line s = 0.69 is selected by both BIC and GCV criterion

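To be specific, our proposed estimator is a minimizer of the following criterion function

$$\sum_{i=1}^n |Y_i - x_i^T \beta| + \lambda_n \sum_{j=1}^p |\beta_j|.$$

It can be equivalently defined as a minimizer of the objective function

$$\sum_{i=1}^n |Y_i - x_i^T \beta| \quad \text{subject to} \quad \sum_{j=1}^p |\beta_j| \le s \sum_{j=1}^p |\hat\beta_{L1,j}|,$$

where β̂L1 is the usual L1 estimator.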

As pointed out by Fan and Li (2001), LASSO does not possess the so-called oracle property, in the sense that it cannot simultaneously attain the best rate of convergence and, with probability tending to one as the sample size increases, correctly set all unnecessary coefficients to 0. With this in mind, they proposed a variant of the penalty called SCAD (smoothly clipped absolute deviation). Using this penalty, they were able to achieve the oracle property for the resulting estimator. Unfortunately, if we modify our approach by replacing the L1 penalty function with the SCAD function, then the resultant minimization becomes much more complicated. In particular, it is no longer numerically solvable by linear programming.

To maintain the numerical simplicity and uniqueness of solution of linear programming, and to achieve the desirable oracle property, it is necessary for us to modify and extend the LASSO-type objective function. The tuning parameter λn there plays the crucial role of striking a balance between estimation of β and variable selection. Large values of λn tend to remove variables and increase bias in the estimation, while small values tend to retain variables. Thus it would be ideal to use a large λn if a regression parameter is 0 (to be removed) and a small value if it is not 0. To this end, it becomes clear that we need a separate λn for each parameter component βj. In other words, we need to consider the estimator as a minimizer of the objective function

$$\sum_{i=1}^n |Y_i - x_i^T \beta| + \sum_{j=1}^p \lambda_{nj} |\beta_j|.$$

In particular, we are interested in the case λnj = ηnξnj, where the ξnj are fixed weighting parameters. Likewise, the estimator can be regarded as a minimizer of

In the Bayesian view, the βj have independent double exponential prior distributions

With a proper choice of tuning parameters, we will show that the resultant penalized estimator exhibits optimal properties. After we obtained our results (Xu 2005), we noticed the recent work on the Adaptive LASSO by Zou (2006), which has the same spirit in proposing different scaling parameters in LASSO for fixed p and squared loss. However, our work is motivated by a unified L1-based approach for simultaneous estimation and variable selection and focuses on theoretical investigation of the resultant data-driven procedure for the absolute deviation loss.

The rest of the paper is organized as follows. In Sect. 2, we introduce some notation for L1 regression, list the conditions under which our main results hold and establish a useful proposition. In Sect. 3, asymptotics for the estimator are considered. The conditions under which consistency or $\sqrt{n}$-consistency holds are given and limiting distribution results are proved. In Sect. 4, for properly chosen tuning parameters, we establish the oracle property of the estimator and use the perturbation method to estimate the standard error of the estimator. A two-stage data-driven procedure is also provided and proved to select asymptotically optimal tuning parameters automatically. In Sect. 5, a simulation study and a real data application are conducted to examine the performance of the proposed approach.

2 Differentially penalized L1 estimator

We define the differentially penalized L1 estimator β̂ as a minimizer of the objective function

$$\sum_{i=1}^n |Y_i - x_i^T \beta| + \sum_{j=1}^p \lambda_{nj} |\beta_j|, \tag{3}$$

where λnj, 1 ≤ j ≤ p are regularization parameters.
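Because both the loss and the penalty are sums of absolute values, the differentially penalized objective can be minimized as an ordinary L1 regression on augmented data: appending p pseudo-observations with response 0 and covariate row λnj times the jth unit vector contributes exactly λnj|βj| to the loss. The sketch below illustrates this device under stated assumptions; the function name `penalized_lad` is hypothetical, SciPy's generic `linprog` is used rather than any routine named in the paper, and the regularization parameters are taken as given.

```python
import numpy as np
from scipy.optimize import linprog


def penalized_lad(X, y, lam):
    """Minimize sum_i |y_i - x_i' b| + sum_j lam_j |b_j| (illustrative sketch)."""
    n, p = X.shape
    lam = np.asarray(lam, dtype=float)
    # Augment the data: pseudo-row j has covariates lam_j * e_j and response 0,
    # so its absolute residual equals lam_j * |b_j|.
    X_aug = np.vstack([X, np.diag(lam)])
    y_aug = np.concatenate([y, np.zeros(p)])
    m = n + p
    # LP form of L1 regression: residual = u - v with u, v >= 0.
    cost = np.concatenate([np.zeros(p), np.ones(2 * m)])
    A_eq = np.hstack([X_aug, np.eye(m), -np.eye(m)])
    bounds = [(None, None)] * p + [(0, None)] * (2 * m)
    res = linprog(cost, A_eq=A_eq, b_eq=y_aug, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]
```

Passing a large λnj for one coordinate and 0 for the others shrinks only that coordinate to zero, which is the differential behavior the section is after.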

We need to make the following assumptions on the error distribution and the covariates. These assumptions are essentially the same as those made in Pollard (1991) and Rao and Zhao (1992).

- (C.1) {ei} are i.i.d. with median 0 and a density function f(·) which is continuous and strictly positive in a neighborhood of 0.

- (C.2) {xi} is a deterministic sequence and there exists a positive definite matrix V for which $n^{-1} \sum_{i=1}^n x_i x_i^T \to V$.

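Now we introduce some notation. Let the true coefficient vector be $\beta_0 = (\beta_{10}^T, \beta_{20}^T)^T$, where $\beta_{10}$ is an s-vector and $\beta_{20}$ is a (p − s)-vector. Without loss of generality, assume 1 ≤ s < p and $\beta_{20} = 0$. Considering only the first s covariates, by (C.2), we have $n^{-1} \sum_{i=1}^n x_{i1} x_{i1}^T \to V_{11}$, where $x_{i1}$ is the subvector of xi which contains the first s components and $V_{11}$ is the leading s × s block of V.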

Denote , then

In order to study the asymptotic properties of the penalized estimator, we need to establish the Local Asymptotic Quadratic (LAQ) property of the loss function (2).

Proposition 1 Under (C.1)–(C.2), for every sequence dn > 0 with dn → 0 in probability, we have

| (4) |

holds uniformly in ‖β − β0‖ ≤ dn.

Proof It is easy to see that

so

Since for any compact set B, the class of functions , is Euclidean with an integrable envelope in the sense of Pakes and Pollard (1989), we can apply the maximal inequality of Pollard (1990, p. 38) to get, for some C > 0,

as n → ∞ and dn → 0. Thus uniformly in ‖β − β0‖ ≤ dn,

| (5) |

and

where . Since Gn(β) is a convex function, it has derivative 0 at β0, and its second derivative at β0 is , by Taylor expansion,

| (6) |

3 Large sample properties

Knight and Fu (2000) studied the limiting distributions of LASSO-type estimator in least squares setting. In this section, we establish similar large sample properties for the proposed estimator β̂n. The key tools we use are the LAQ property of the loss function and a novel inequality. The following result shows that β̂n is consistent provided λnj = op(n).

Theorem 1 Under (C.1)–(C.2) and , 1 ≤ j ≤ p, β̂n → argmin (Z), where

In particular, since β0 is the minimizer of Gn (β), β̂n is consistent, provided λnj = op(n).

Proof By the uniform law of large numbers (Pollard 1990), n−1Ln(β) − n−1Ln(β0) − Gn(β) = o(1) uniformly for β in any compact set K, hence Zn(β) − Z(β) = op(1). Since and argmin (Ln) = Op(1), we know that argmin (Zn) = Op(1). It follows that β̂n → argmin (Z).

In order to establish the root-n consistency of β̂n, we need to study the following objective function:

where u ∈ Rp, D is a positive definite matrix, λ1, …, λs are constants, and λs+1, …, λp are nonnegative constants. Suppose that û is a minimizer of C(u). Then we have the following proposition.

Proposition 2 For any u, we have C(u) − C(û) ≥ (u − û)T D(u − û)/2

Proof First, consider the case s = 0 and, without loss of generality, assume $\hat u = (\hat u_1^T, 0^T)^T$, where û1 ∈ Rr and û1i ≠ 0, 1 ≤ i ≤ r.

Denote

$$D = \begin{pmatrix} D_{11} & D_{12} \\ D_{21} & D_{22} \end{pmatrix},$$

where D11, D12, D21, D22 are r × r, r × (p − r), (p − r) × r, (p − r) × (p − r) matrices, and $D_{21} = D_{12}^T$.

denote , a1, a2 are r, (p − r) dimensional vectors respectively.

Since û is a minimizer of C(u), we have

| (7) |

| (8) |

the first equality holds because û1 is not zero componentwise and is the minimizer of the objective function

so the derivative of the objective function at the minimizer will be 0. The second inequality holds because generally 0 ∈ Rp is the minimizer of

is equivalent to say that

To show the proposition holds, we need to prove the inequality

equivalent to

by (7)

and

so we only need to prove that

which is apparent by (8).

Now we consider the general case s > 0. Denote

$$D = \begin{pmatrix} C & B \\ B^T & A \end{pmatrix},$$

where C, B, BT, A are s × s, s × (p − s), (p − s) × s, (p − s) × (p − s) matrices.

denote , a1, a2 are s, (p − s) dimensional vectors respectively.

Denote b = (λ1, …, λs)T. Taking the transformation υ1 = u1 + C−1Bu2, the objective function becomes

and, when minimizing this function, u2 and υ1 can be separated. For the function of u2 we apply the result just obtained, and rewriting the function of υ1 in its quadratic form, it is straightforward to obtain

Hence the proposition holds in the general case.

Suppose that ûn is a minimizer of the objective function

| (9) |

The following result shows that β̂n is $\sqrt{n}$-consistent provided $\lambda_{nj} = O_p(\sqrt{n})$.

Theorem 2 Under (C.1)–(C.2) and , 1 ≤ j ≤ p, then , where

and W has a N(0, V2) distribution. In particular if , the penalized estimator behaves like the full L1 estimator β̂L1.

Proof It follows from Theorem 1 that, β̂n is consistent. By (4)

let , then

It is easy to see that . So converges to 0 in probability, again by (4), we have .

Furthermore, since β̂n is a minimizer of Zn(β), we have

by the Proposition 2, we have,

This shows that $\sqrt{n}(\hat\beta_n - \beta_0)$ and ûn have the same asymptotic distribution, which completes the proof.

4 Adaptive two-stage procedure

4.1 Oracle property

In this section, we show that for properly chosen tuning parameters, the resultant penalized estimator exhibits the so-called oracle property. Suppose the {λnj} satisfy the following conditions:

- (C.3) $n^{-1/2} \lambda_{nj} \to 0$ for 1 ≤ j ≤ s, and $n^{-1/2} \lambda_{nj} \to \infty$ for s < j ≤ p.

The first part of (C.3) preserves the $\sqrt{n}$-consistency of the estimator, and the second part shrinks the zero coefficients directly to zero. Notice that the rates of the regularization parameters differ between zero coefficients and nonzero ones. In practice, we do not know beforehand which coefficients are zero; this is exactly the task that the variable selection procedure is trying to accomplish. However, since we can estimate the coefficients with some precision, we can choose data-driven tuning parameters with asymptotically correct rates, and then the penalized estimator can exhibit the same asymptotic properties as the one with ideal tuning parameters. An approach based on this idea is given and illustrated in Sect. 4.3.

Perturbation methods are used to estimate the covariance matrix. Define a new loss function

where ωi (i = 1, …, n) are independent positive random variables with E(ωi) = Var(ωi) = 1 that are independent of the data (Yi, xi) (i = 1, …, n), and let $\hat\beta_n^*$ be a minimizer of this perturbed loss. We will show in Sect. 4.2 that, conditional on the data (Yi, xi) (i = 1, …, n), $\sqrt{n}(\hat\beta_n^* - \hat\beta_n)$ has the same asymptotic distribution as $\sqrt{n}(\hat\beta_n - \beta_0)$. Hence the realizations of $\hat\beta_n^*$ obtained by repeatedly generating the random sample (ω1, …, ωn) can be used to estimate the covariance matrix.
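The perturbation scheme can be sketched as follows. Since the display defining the perturbed loss is not reproduced in this text, the sketch assumes that only the absolute-deviation terms are multiplied by ωi and that the penalty terms are left unweighted; that choice, the function names `weighted_penalized_lad` and `perturbation_se`, and the use of SciPy's `linprog` are all assumptions made for illustration. The weights are drawn from the standard exponential distribution, which has mean and variance 1.

```python
import numpy as np
from scipy.optimize import linprog


def weighted_penalized_lad(X, y, lam, w):
    """Minimize sum_i w_i |y_i - x_i' b| + sum_j lam_j |b_j| by LP (sketch)."""
    n, p = X.shape
    lam = np.asarray(lam, dtype=float)
    # Penalty terms enter as pseudo-observations with unit weight (assumption).
    X_aug = np.vstack([X, np.diag(lam)])
    y_aug = np.concatenate([y, np.zeros(p)])
    w_aug = np.concatenate([np.asarray(w, dtype=float), np.ones(p)])
    m = n + p
    cost = np.concatenate([np.zeros(p), w_aug, w_aug])
    A_eq = np.hstack([X_aug, np.eye(m), -np.eye(m)])
    bounds = [(None, None)] * p + [(0, None)] * (2 * m)
    res = linprog(cost, A_eq=A_eq, b_eq=y_aug, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]


def perturbation_se(X, y, lam, n_rep=200, seed=0):
    """Standard errors from repeated minimization with Exp(1) weights (sketch)."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    draws = np.array([
        weighted_penalized_lad(X, y, lam, rng.exponential(1.0, size=n))
        for _ in range(n_rep)
    ])
    # Empirical standard deviation of the perturbed estimates, per coefficient.
    return draws.std(axis=0, ddof=1)
```

Each replication costs one linear program, so the number of replications `n_rep` is the main driver of running time.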

We need to establish the following $\sqrt{n}$-consistency for later use.

Proposition 3 ($\sqrt{n}$-consistency) Under (C.1)–(C.3), we have $\hat\beta_n - \beta_0 = O_p(n^{-1/2})$.

Proof We only need to show that for any given ε > 0, there exists a large constant C such that

| (10) |

together with the convexity of Zn, this implies that .

And

| (11) |

For sufficiently large C, the second term of (11) dominates the remaining terms; hence (10) holds. This completes the proof.

The following Proposition is needed to establish the oracle property of the estimator.

Proposition 4 Under conditions (C.1)–(C.3), with probability tending to one, for any given β1 satisfying that and any constant C,

Proof Denote the gradient of Ln(β) by . It is sufficient to show that with probability tending to one as n → ∞, for any β1 satisfying that , and ‖β2‖ ≤ Cn−1/2, Unj (β) + λnj sgn(βj) and βj have the same signs for βj ∈ (−Cn−1/2, Cn−1/2) for j = s + 1, …, p.

Similarly as in Proposition 1, we have

| (12) |

it follows that

since , for j = s+1, …, p, the sign of the Uj (β)+λnj sgn(βj) is completely determined by the sign of the βj, this completes the proof.

Now, we can establish the following main theorem. The first component is exactly zero and the second component is estimated as well as if the correct model were known. This is the so-called oracle property.

Theorem 3 (Oracle property) Under (C.1)–(C.3), with probability tending to one, the penalized estimator has the following properties:

.

Proof It follows from Proposition 4 that part (a) holds. To prove part (b), notice that is the minimizer of the objective function

| (13) |

it is the penalized estimator considering only the first s covariates. Since is the minimizer and λnj = o(n1/2), 1 ≤ j ≤ s, by Theorem 2, we know that , where , and W1 has a distribution. . It completes the proof.

Remark 1 By Theorem 2, we can see that although the LASSO estimate shrinks some coefficients to zero with positive probability, it does not necessarily shrink the true zero coefficients and thus may erroneously retain insignificant variables in the model while increasing the bias in the estimation of the true nonzero coefficients. However, by Theorem 3 (b), with differentially scaled tuning parameters, the MLASSO performs the correct variable selection and achieves asymptotic efficiency.

4.2 Distributional approximation

Now we establish the asymptotic properties of the perturbed penalized estimator, is a minimizer of the loss function

| (14) |

We are able to show conditional on data, the randomly perturbed estimator can be used to approximate the distribution of the estimator. To be more specific, we have the following theorem:

Theorem 4 Under conditions (C.1)–(C.3), with probability tending to one, conditional on the data (Yi, xi)(i = 1, …, n), has the following properties:

.

Proof Using the same arguments as in proving Proposition 1, denote , for every sequence dn > 0 with dn → 0 in probability, we can prove

| (15) |

holds uniformly in ‖β − β0‖ ≤ dn, Then as in Proposition 3, we can prove that conditionally on the original data, , and as in Proposition 4, for any given β1 satisfying that and any constant C, conditionally on the original data, we have

By Theorem 1, with probability tending to one, , it follows that conditionally on the original data, , then considering only the first s covariates, is a minimizer of function

By Proposition 1 and Theorem 2, we have

| (16) |

and similarly we have

Thus,

It suffices to show conditionally on (Yi, xi), i = 1, …, n,

| (17) |

Since and , by the central limit theorem, (17) follows, it completes the proof.

4.3 Selection of tuning parameters

Although the theoretical results in Sects. 4.1 and 4.2 are interesting, they are of no practical use unless we can find λnj which satisfy C.3. Noticing that the rates of the tuning parameters for nonzero and zero coefficients are different, we must base our selection of λnj on some preliminary estimation procedure. Denote the components of the L1 estimator β̂L1 by aj, j = 1, …, p, and the estimates of their standard errors by bj, j = 1, …, p. Let

| (18) |

For the λnj defined in (18), we have the following proposition:

Proposition 5 For a fixed (η, γ), the λnj defined in (18) satisfy C.3.

Proof (i) If the jth component of β0 is zero, then aj/bj converges in distribution to a N(0, 1) random variable. Denoting the sequence by Zn and the limit by Z, we have

(ii) If the jth component of the β0 is non-zero, denote it by θ, it is known that , where σ is a fixed positive constant, denote the sequence by Zn and the latter by Z. So and , then .

Now if the λnj are chosen as above to estimate β0, we also have to select the parameters η and γ. Resampling-based model selection methods such as cross-validation or generalized degrees of freedom (Shen and Ye 2002) can be employed, but they are computationally intensive. Tibshirani (1996) constructed a generalized cross-validation style statistic to select the tuning parameter. A key statistic therein is the number of effective parameters, or the degrees of freedom. An interesting property of the LASSO-type procedure is that the number of nonzero coefficients of the estimator is an unbiased estimate of its degrees of freedom; see, for example, Efron et al. (2004) or Zou et al. (2004). In this connection, generalized cross-validation (GCV) can be modified to choose η and γ, where the residual sum of squares is replaced with the sum of absolute residuals and the degrees of freedom d is taken as the number of nonzero coefficients of the differentially penalized L1 estimator. This allows us to reduce the computational burden greatly. In traditional subset selection, the GCV criterion is not consistent in the sense of choosing the true subset model with probability tending to 1 as the sample size goes to infinity, while the Bayesian information criterion (BIC) is. To come up with a consistent data-driven variable selection procedure, a BIC-type criterion seems necessary. For traditional subset selection in L1 regression, the BIC is defined as

where is the maximum likelihood estimate of scale parameter σ in the full model with all the candidate variables and α is the size of subset model. The size of subset model is its degrees of freedom while the degrees of freedom of the Lasso-type procedure can be unbiasedly estimated by the number of nonzero coefficients of the estimator. Thus the number of nonzero coefficients is denoted by d and the following BIC-type criterion is proposed for the selection of tuning parameters η and γ.

The selected (ηn, γn) minimizes the BIC function in the region (η, γ) ∈ (0, ∞) × (1, ∞).

The proposed selection criterion has at least two advantages. First, since the optimal tuning parameters are found by a grid search, it is computationally feasible. Second, asymptotic optimality such as the oracle property is usually established for the penalized estimator with fixed tuning parameters (Fan and Li 2002), whereas it is of greater theoretical interest to investigate the properties of the penalized estimator with data-driven tuning parameters. Interestingly, as the following theorem shows, the differentially penalized L1 estimator with the BIC-based data-driven tuning parameters indeed exhibits the oracle property.

Theorem 5 If we define the tuning parameters {λnj} as in (18), and select (η, γ) by the BIC function defined above, the resultant penalized estimator exhibits the oracle property.

Proof It suffices to prove that the selected tuning parameters satisfy C.3 or that the penalized estimator is asymptotically equivalent to the estimator with tuning parameters satisfying C.3. Without loss of generality, we assume that and . In the case where the limits do not exist, similar arguments can be applied to subsequences of λnj. It is easy to see that the tuning parameters {λnj} fall into one of the following five cases.

,

,

,

,

- ,

- For tuning parameters in case 1, the estimator has no zero components; denote it by β̂(p). The penalized estimator with tuning parameters in case 3 has only the s true nonzero coefficients; denote it by β̂(s). Denote the usual L1 estimators with all p covariates and with only the first s covariates by β̂L1 (p) and β̂L1 (s), respectively. Hence

and

since β̂L1 (s) and β̂(s) are both $\sqrt{n}$-consistent and satisfy C.3, . And by Proposition 1, we know that

and

are both Op(1), so with probability tending to 1, BIC(β̂(p)) > BIC(β̂(s)); hence the selected tuning parameters are not in case 1.

- For tuning parameters in case 2, by consistency, the true nonzero components of the estimator remain nonzero, and some of the true zero components might be zero. If all true zero components are zero, then the estimator is asymptotically equivalent to the penalized estimator with tuning parameters in case 3; otherwise, by the same argument as before, with probability tending to 1, those tuning parameters are not chosen.

Ln(β) = Op(n), with probability tending to 1, the true zero components of the estimator are zero, while some of the true nonzero components might be zero. If all true nonzero components are nonzero, then its degrees of freedom is the same as in case 3. Suppose that the estimator is β̃(s) and the estimator corresponding to tuning parameters in case 3 is β̂(s). Since its tuning parameters are larger than the ones in case 3, if the two estimators are not asymptotically equivalent, Ln(β̃(s)) is strictly larger than Ln(β̂(s)) and these tuning parameters are not chosen. In the other situation, the subset of nonzero components of the resultant estimator is a proper subset of {1, 2, …, s}. Without loss of generality, assume that this subset is {1, 2, …, s − 1}, and denote the estimator by β̂(s − 1). To show that these tuning parameters are not chosen, it suffices to prove that, with probability tending to 1, BIC(β̂(s − 1)) > BIC(β̂(s)). Denoting the L1 estimator with only the first s − 1 covariates by β̂L1 (s − 1), we only need to prove that .

Actually, we will show that for sufficiently large n,

| (19) |

where δ is a positive number.

Denote , let . By the uniform law of large numbers, we have

| (20) |

For large n, , thus there exists a point β̂(s) ∈ ℬ satisfying,

Also there exists β̄(s) such that

So

Thus (19) holds, which concludes the proof.

Remark 2 Other model selection criteria such as GCV and AIC can also be modified accordingly to choose the parameters η and γ. However, as the GCV and AIC criteria are not consistent in the sense of choosing the true model with probability tending to 1 as n goes to infinity, the GCV- or AIC-based differentially scaled L1 penalized estimator does not enjoy the desired oracle property.

Simulation results in Sect. 5 show that the finite-sample performance of the estimates does not vary much with different values of γ. As a referee pointed out, it is worthwhile to explore how the tuning parameter γ changes the behavior of the estimator. To gain some insight into this, we study how the estimate changes with γ in the orthonormal case. Denote by znj and . In the orthonormal case, the covariate vectors x1, …, xp are mutually orthogonal, and . For true zero coefficients, ; for true nonzero coefficients, znj = Op(1). The magnitudes of znj for true zero coefficients are much larger than those for true nonzero coefficients, and this contrast is raised to the power γ in λnj to shrink the coefficients differentially. A large γ itself leads to large tuning parameters for nonzero coefficients, although it magnifies the difference between the tuning parameters for true zero and nonzero coefficients. Thus the ideal γ should be slightly larger than 1, which not only assures the correct asymptotic rate but also minimizes its impact on inflating the tuning parameters. From the explicit formula for the estimates, we see that a small change in γ will not in general have much influence on the behavior of the estimates.

5 Numerical studies

We have conducted extensive numerical studies to compare our proposed method with LASSO, traditional subset selection methods and the oracle least absolute deviations estimates. We denote our method by MLASSO since it is the natural generalization of LASSO by considering multiple tuning parameters. All simulations are conducted using IMSL’s routine for L1 regression RLAV in Fortran. We select the tuning parameter of LASSO and MLASSO by BIC or GCV function. For MLASSO, the results between selecting both η and γ and selecting only η with a fixed γ = 1.5 are very similar. So we let γ = 1.5 in most of the examples. For subset selection methods, the best subset is chosen as the one which minimizes BIC or GCV function. Following Tibshirani (1996) and Fan and Li (2001), we report simulation results in terms of model error instead of prediction error (PE). In the setting of linear models, suppose that

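$$Y = \beta^T X + \varepsilon.$$

From (Y, X) we obtain β̂ as an estimate of β and use β̂T Xfuture to predict the future response Yfuture, where (Yfuture, Xfuture) is an independent copy of (Y, X). The model error (ME) is defined by

$$\mathrm{ME}(\hat\beta) = (\hat\beta - \beta)^T R (\hat\beta - \beta).$$

The prediction error (PE) is defined as

$$\mathrm{PE}(\hat\beta) = E\{(Y_{\mathrm{future}} - \hat\beta^T X_{\mathrm{future}})^2\} = \mathrm{ME}(\hat\beta) + \sigma^2,$$

where R is the population covariance matrix of X, and σ² is the variance of the error.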
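As a small illustration of these two quantities, the sketch below assumes the usual linear-model forms, in which the model error is the quadratic form of the estimation error in R and the prediction error adds the error variance. The function names are hypothetical, and the forms are stated as assumptions because the displayed definitions are not reproduced in this text.

```python
import numpy as np


def model_error(beta_hat, beta, R):
    """Assumed form: (beta_hat - beta)' R (beta_hat - beta)."""
    d = np.asarray(beta_hat, dtype=float) - np.asarray(beta, dtype=float)
    return float(d @ np.asarray(R, dtype=float) @ d)


def prediction_error(beta_hat, beta, R, sigma):
    """Assumed form: model error plus the error variance sigma^2."""
    return model_error(beta_hat, beta, R) + sigma ** 2
```

Because the two quantities differ only by the constant σ², ranking procedures by model error or by prediction error gives the same ordering.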

5.1 Normal error case

Considering the simulation scenario of Fan and Li (2001), we simulate 100 datasets. Each of them consists of n observations from the model

$$Y = x^T \beta + \sigma \varepsilon, \tag{21}$$

where β = (3, 1.5, 0, 0, 2, 0, 0, 0)T and the components of x and ε are standard normal. The correlation between xi and xj is ρ^|i−j| with ρ = 0.5. We compare the model error of each variable selection procedure to that of the full L1 estimator. The ratio is called the relative model error (RME). We run the simulations for different sample sizes and σ values. In Table 1, we summarize the results in terms of the median of relative model errors (MRME), the average number of correct 0 coefficients and the average number of incorrect 0 coefficients over 100 simulated datasets. Our resampling procedure uses 1,000 random samples from the standard exponential distribution. The results are similar for other distributions. From Table 1, we see that with a small sample size and large noise, Best subset performs best in reducing the model error, while LASSO tends to produce the fewest incorrect zero components. When the noise level is decreased, even with a small sample size, no procedure sets any nonzero component to zero, and in terms of both the number of correctly identified zero components and the reduction of the model error, MLASSO and Best subset perform much better than LASSO. When the sample size is increased, MLASSO tends to perform better than Best subset and closer to the true oracle estimator. It is also interesting to notice that Best subset and MLASSO perform very similarly when based on the same criterion (BIC or GCV). In all the simulations, fixing γ = 1.5 and selecting γ give almost identical performance. Hence in the later examples, we let γ = 1.5.
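The simulation design described above can be sketched as follows. This is an illustrative data generator, not the Fortran code used for the reported results; the function name `simulate_dataset` and the seeding scheme are assumptions.

```python
import numpy as np


def simulate_dataset(n, sigma, rho=0.5, seed=None):
    """Draw one dataset with AR(1)-type covariate correlation rho^|i-j| (sketch)."""
    rng = np.random.default_rng(seed)
    beta = np.array([3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0])
    p = beta.size
    idx = np.arange(p)
    # Correlation matrix of the covariates: R[i, j] = rho ** |i - j|.
    R = rho ** np.abs(idx[:, None] - idx[None, :])
    X = rng.multivariate_normal(np.zeros(p), R, size=n)
    # Standard normal errors scaled by sigma.
    y = X @ beta + sigma * rng.standard_normal(n)
    return X, y, beta, R
```

For example, `simulate_dataset(40, 3.0, seed=0)` corresponds to the first setting of Table 1 (n = 40, σ = 3.0).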

Table 1.

Variable selection in normal error case

| Method | MRME (%) | Avg. no. of 0 coefficients: Correct | Avg. no. of 0 coefficients: Incorrect |
|---|---|---|---|
| n = 40, σ = 3.0 | | | |
| LASSO (BIC) | 72.49 | 3.34 | 0.15 |
| LASSO (GCV) | 75.76 | 3.55 | 0.17 |
| LASSO (AIC) | 75.69 | 2.60 | 0.15 |
| MLASSO1 (BIC) | 81.12 | 3.85 | 0.32 |
| MLASSO1 (GCV) | 78.81 | 4.21 | 0.42 |
| MLASSO1 (AIC) | 77.33 | 3.20 | 0.13 |
| MLASSO2 (BIC) | 80.87 | 3.90 | 0.32 |
| MLASSO2 (GCV) | 79.54 | 4.21 | 0.41 |
| MLASSO2 (AIC) | 76.22 | 3.24 | 0.13 |
| Subset (BIC) | 69.40 | 4.11 | 0.34 |
| Subset (GCV) | 65.09 | 4.44 | 0.40 |
| Subset (AIC) | 68.52 | 3.44 | 0.29 |
| Oracle | 37.95 | 5 | 0 |
| n = 40, σ = 1.0 | | | |
| LASSO (BIC) | 72.49 | 3.25 | 0 |
| LASSO (GCV) | 72.49 | 3.46 | 0 |
| LASSO (AIC) | 73.85 | 2.60 | 0 |
| MLASSO1 (BIC) | 59.63 | 4.19 | 0 |
| MLASSO1 (GCV) | 51.83 | 4.51 | 0 |
| MLASSO1 (AIC) | 73.58 | 3.46 | 0 |
| MLASSO2 (BIC) | 59.63 | 4.19 | 0 |
| MLASSO2 (GCV) | 50.92 | 4.52 | 0 |
| MLASSO2 (AIC) | 72.40 | 3.48 | 0 |
| Subset (BIC) | 56.45 | 4.16 | 0 |
| Subset (GCV) | 52.21 | 4.51 | 0 |
| Subset (AIC) | 68.86 | 3.40 | 0 |
| Oracle | 37.95 | 5 | 0 |
| n = 60, σ = 1.0 | | | |
| LASSO (BIC) | 69.19 | 3.45 | 0 |
| LASSO (GCV) | 68.53 | 3.53 | 0 |
| LASSO (AIC) | 73.82 | 2.38 | 0 |
| MLASSO1 (BIC) | 53.30 | 4.35 | 0 |
| MLASSO1 (GCV) | 52.13 | 4.44 | 0 |
| MLASSO1 (AIC) | 72.79 | 3.28 | 0 |
| MLASSO2 (BIC) | 54.35 | 4.37 | 0 |
| MLASSO2 (GCV) | 54.06 | 4.42 | 0 |
| MLASSO2 (AIC) | 71.60 | 3.32 | 0 |
| Subset (BIC) | 65.07 | 4.26 | 0 |
| Subset (GCV) | 55.33 | 4.39 | 0 |
| Subset (AIC) | 81.48 | 3.48 | 0 |
| Oracle | 33.63 | 5 | 0 |

The value of γ in MLASSO1 is selected, whereas the value of γ in MLASSO2 is 1.5

We also use the simulations to test the accuracy of the estimated standard error of the estimator via the perturbation method. The standard error of 100 estimates (SD) is regarded as the true standard error of the estimator. The mean and the standard error of 100 estimated standard errors of the estimator via the perturbation method (SDm, SDs) are used to assess the performance of the perturbation method. In Table 2, we summarize the results for the situation of n = 60, σ = 1.0. From Table 2, it can be seen that SD and SDm are very close and hence the perturbation method performs very well.

Table 2.

Estimation in normal error case (n = 60, σ = 1.0)

| Method | β̂1: SD | β̂1: SDm | β̂1: (SDs) | β̂2: SD | β̂2: SDm | β̂2: (SDs) | β̂5: SD | β̂5: SDm | β̂5: (SDs) |

|---|---|---|---|---|---|---|---|---|---|

| LASSO (BIC) | 0.208 | 0.224 | 0.055 | 0.216 | 0.230 | 0.050 | 0.193 | 0.231 | 0.058 |

| LASSO (GCV) | 0.207 | 0.225 | 0.055 | 0.214 | 0.231 | 0.050 | 0.191 | 0.231 | 0.058 |

| LASSO (AIC) | 0.207 | 0.224 | 0.056 | 0.215 | 0.230 | 0.049 | 0.191 | 0.232 | 0.058 |

| MLASSO1 (BIC) | 0.200 | 0.205 | 0.053 | 0.225 | 0.206 | 0.051 | 0.191 | 0.191 | 0.054 |

| MLASSO1 (GCV) | 0.195 | 0.206 | 0.054 | 0.209 | 0.205 | 0.051 | 0.193 | 0.190 | 0.055 |

| MLASSO1 (AIC) | 0.196 | 0.205 | 0.053 | 0.217 | 0.205 | 0.050 | 0.192 | 0.190 | 0.054 |

| MLASSO2 (BIC) | 0.198 | 0.205 | 0.053 | 0.224 | 0.207 | 0.050 | 0.191 | 0.190 | 0.053 |

| MLASSO2 (GCV) | 0.198 | 0.206 | 0.055 | 0.211 | 0.205 | 0.050 | 0.190 | 0.189 | 0.053 |

| MLASSO2 (AIC) | 0.199 | 0.206 | 0.054 | 0.212 | 0.206 | 0.049 | 0.190 | 0.190 | 0.053 |

| Subset (BIC) | 0.199 | 0.201 | 0.050 | 0.226 | 0.201 | 0.054 | 0.181 | 0.182 | 0.044 |

| Subset (GCV) | 0.194 | 0.202 | 0.051 | 0.215 | 0.201 | 0.051 | 0.181 | 0.182 | 0.041 |

| Subset (AIC) | 0.195 | 0.203 | 0.052 | 0.217 | 0.203 | 0.055 | 0.183 | 0.182 | 0.042 |

| Oracle | 0.192 | 0.209 | 0.052 | 0.196 | 0.205 | 0.051 | 0.155 | 0.180 | 0.039 |

The value of γ in MLASSO1 is selected, whereas the value of γ in MLASSO2 is 1.5

To demonstrate the consistency of the BIC-type MLASSO, we increase the sample size. Table 3 reports the proportion of replications in which each procedure selects the true model. For BIC-based MLASSO and BIC-based best subset, this proportion tends to increase toward 1 as the sample size increases. The other procedures do not exhibit this property. BIC-based MLASSO also performs better than BIC-based best subset when the sample size is large.

Table 3.

Performance on consistency

| sample size (n) | 60 | 100 | 200 | 500 | 1,000 | 2,000 |

|---|---|---|---|---|---|---|

| Subset (BIC) | 0.45 (4.26) | 0.66 (4.58) | 0.64 (4.61) | 0.81 (4.79) | 0.81 (4.80) | 0.80 (4.77) |

| Subset (GCV) | 0.53 (4.39) | 0.60 (4.52) | 0.56 (4.44) | 0.62 (4.54) | 0.56 (4.42) | 0.52 (4.34) |

| Subset (AIC) | 0.16 (3.48) | 0.19 (3.55) | 0.18 (3.50) | 0.23 (3.60) | 0.21 (3.54) | 0.22 (3.56) |

| LASSO (BIC) | 0.24 (3.45) | 0.27 (3.83) | 0.28 (3.75) | 0.38 (4.11) | 0.44 (3.97) | 0.19 (3.53) |

| LASSO (GCV) | 0.29 (3.53) | 0.27 (3.79) | 0.20 (3.53) | 0.25 (3.65) | 0.29 (3.47) | 0.17 (3.18) |

| LASSO (AIC) | 0.05 (2.38) | 0.06 (2.39) | 0.08 (2.42) | 0.07 (2.40) | 0.10 (2.50) | 0.10 (2.52) |

| MLASSO (BIC) | 0.65 (4.35) | 0.68 (4.59) | 0.83 (4.78) | 0.84 (4.80) | 0.85 (4.83) | 0.90 (4.90) |

| MLASSO (GCV) | 0.67 (4.44) | 0.67 (4.57) | 0.67 (4.52) | 0.68 (4.51) | 0.71 (4.53) | 0.61 (4.40) |

| MLASSO (AIC) | 0.25 (3.28) | 0.26 (3.29) | 0.28 (3.35) | 0.29 (3.36) | 0.32 (3.38) | 0.32 (3.34) |

The number in parentheses is the average number of correctly identified zero coefficients
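The quantities in Table 3 can be computed from the fitted coefficient vectors along the following lines. This is a sketch under stated assumptions: `true_beta` is a hypothetical eight-coefficient vector with three nonzero entries (chosen to agree with the oracle row, which reports 5 zero coefficients), the fitted vectors are toy values, and a coefficient counts as zero when its absolute value is below a small tolerance.

```python
def selection_summary(fits, true_beta, tol=1e-8):
    """Summarize variable selection over simulation replicates.

    Returns (proportion of replicates selecting exactly the true model,
             average number of correctly identified zero coefficients,
             average number of nonzero coefficients wrongly set to zero).
    """
    true_zero = [abs(b) <= tol for b in true_beta]
    n_exact = 0
    correct_zeros = 0
    incorrect_zeros = 0
    for beta_hat in fits:
        est_zero = [abs(b) <= tol for b in beta_hat]
        n_exact += est_zero == true_zero
        correct_zeros += sum(e and t for e, t in zip(est_zero, true_zero))
        incorrect_zeros += sum(e and not t for e, t in zip(est_zero, true_zero))
    m = len(fits)
    return n_exact / m, correct_zeros / m, incorrect_zeros / m

# Hypothetical true coefficients and toy fits (illustration only)
true_beta = [3.0, 1.5, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0]
fits = [
    [2.9, 1.4, 0.0, 0.0, 2.1, 0.0, 0.0, 0.0],  # true model recovered
    [3.1, 1.6, 0.2, 0.0, 1.9, 0.0, 0.0, 0.0],  # one zero coefficient missed
    [3.0, 0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0],  # one nonzero wrongly dropped
    [2.8, 1.5, 0.0, 0.0, 2.2, 0.0, 0.0, 0.0],  # true model recovered
]
prop, avg_correct, avg_incorrect = selection_summary(fits, true_beta)
```

A consistent procedure should drive the first quantity toward 1 and the second toward the number of true zero coefficients as n grows.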

5.2 Laplace error case

In this example and the next, we change the error distribution in model (21) to explore the robustness of the proposed estimator. We simulate 100 datasets of 60 observations each from model (21), with the errors now drawn from the standard double exponential (Laplace) distribution and σ set to 1.0. Tables 4 and 5 summarize the results of the simulations. Table 4 shows that the MLASSO performs favorably compared with the other methods. From Table 5, we see that the perturbation method gives an accurate estimate of the standard error of the estimator.

Table 4.

Variable selection in Laplace error case

| Method | MRME (%) | Avg. no. of 0 coefficients: Correct | Avg. no. of 0 coefficients: Incorrect |

|---|---|---|---|

| LASSO (BIC) | 61.17 | 3.67 | 0 |

| LASSO (GCV) | 60.94 | 3.81 | 0 |

| LASSO (AIC) | 58.55 | 2.71 | 0 |

| MLASSO (BIC) | 42.89 | 4.64 | 0 |

| MLASSO (GCV) | 41.20 | 4.74 | 0 |

| MLASSO (AIC) | 61.32 | 3.88 | 0 |

| Subset (BIC) | 43.73 | 4.69 | 0 |

| Subset (GCV) | 42.59 | 4.71 | 0 |

| Subset (AIC) | 59.32 | 3.89 | 0 |

| Oracle | 25.32 | 5 | 0 |

Table 5.

Estimation in Laplace error case

| Method | β̂1: SD | β̂1: SDm | β̂1: (SDs) | β̂2: SD | β̂2: SDm | β̂2: (SDs) | β̂5: SD | β̂5: SDm | β̂5: (SDs) |

|---|---|---|---|---|---|---|---|---|---|

| LASSO (BIC) | 0.213 | 0.252 | 0.059 | 0.225 | 0.270 | 0.072 | 0.214 | 0.257 | 0.069 |

| LASSO (GCV) | 0.212 | 0.253 | 0.060 | 0.222 | 0.270 | 0.072 | 0.213 | 0.258 | 0.068 |

| LASSO (AIC) | 0.212 | 0.253 | 0.060 | 0.224 | 0.271 | 0.071 | 0.214 | 0.259 | 0.068 |

| MLASSO (BIC) | 0.212 | 0.222 | 0.060 | 0.203 | 0.229 | 0.068 | 0.191 | 0.204 | 0.058 |

| MLASSO (GCV) | 0.213 | 0.222 | 0.0584 | 0.203 | 0.229 | 0.067 | 0.183 | 0.204 | 0.0580 |

| MLASSO (AIC) | 0.212 | 0.222 | 0.059 | 0.202 | 0.228 | 0.065 | 0.183 | 0.205 | 0.057 |

| Subset (BIC) | 0.210 | 0.217 | 0.064 | 0.218 | 0.217 | 0.056 | 0.162 | 0.187 | 0.046 |

| Subset (GCV) | 0.210 | 0.218 | 0.0638 | 0.218 | 0.217 | 0.056 | 0.164 | 0.188 | 0.046 |

| Subset (AIC) | 0.211 | 0.217 | 0.064 | 0.219 | 0.218 | 0.057 | 0.164 | 0.189 | 0.047 |

| Oracle | 0.201 | 0.224 | 0.067 | 0.210 | 0.221 | 0.057 | 0.153 | 0.190 | 0.047 |

5.3 Mixed error case

In this example, we repeat the simulations of the previous example, except that the errors are now drawn from the standard normal distribution with 30% outliers from the standard Cauchy distribution. Tables 6 and 7 summarize the simulation results. The MLASSO performs the best in this situation, and the perturbation method still performs well.
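One way to generate such contaminated errors is sketched below. It assumes that each error is independently drawn from the standard Cauchy distribution with probability 0.3 and from the standard normal distribution otherwise; the text does not say whether the 30% is applied per observation or as a fixed count, so this reading is an assumption, and the function name `draw_mixed_errors` is hypothetical.

```python
import math
import random

def draw_mixed_errors(n, outlier_prob=0.3, rng=None):
    """Draw n errors from N(0, 1) contaminated by standard Cauchy outliers."""
    rng = rng or random.Random()
    errors = []
    for _ in range(n):
        if rng.random() < outlier_prob:
            # Standard Cauchy via the inverse CDF: tan(pi * (U - 1/2))
            errors.append(math.tan(math.pi * (rng.random() - 0.5)))
        else:
            errors.append(rng.gauss(0.0, 1.0))
    return errors

# One simulated error vector for a dataset of 60 observations
errors = draw_mixed_errors(60, rng=random.Random(0))
```

Because the Cauchy distribution has no finite mean or variance, a few of these errors can be extremely large, which is what makes this setting a stress test for least squares-type criteria and a natural case for the L1 loss.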

Table 6.

Variable selection in mixed error case

| Method | MRME (%) | Avg. no. of 0 coefficients: Correct | Avg. no. of 0 coefficients: Incorrect |

|---|---|---|---|

| LASSO (BIC) | 71.35 | 3.90 | 0.00 |

| LASSO (GCV) | 71.29 | 4.02 | 0.00 |

| LASSO (AIC) | 56.43 | 3.24 | 0.00 |

| MLASSO (BIC) | 37.84 | 4.76 | 0.00 |

| MLASSO (GCV) | 36.38 | 4.80 | 0.00 |

| MLASSO (AIC) | 45.45 | 4.28 | 0.00 |

| Subset (BIC) | 41.28 | 4.82 | 0.03 |

| Subset (GCV) | 41.00 | 4.86 | 0.03 |

| Subset (AIC) | 29.02 | 4.09 | 0.00 |

| Oracle | 37.27 | 5 | 0 |

Table 7.

Estimation in mixed error case

| Method | β̂1: SD | β̂1: SDm | β̂1: (SDs) | β̂2: SD | β̂2: SDm | β̂2: (SDs) | β̂5: SD | β̂5: SDm | β̂5: (SDs) |

|---|---|---|---|---|---|---|---|---|---|

| LASSO (BIC) | 0.241 | 0.272 | 0.091 | 0.239 | 0.271 | 0.072 | 0.222 | 0.269 | 0.085 |

| LASSO (GCV) | 0.251 | 0.274 | 0.090 | 0.237 | 0.273 | 0.072 | 0.213 | 0.268 | 0.085 |

| LASSO (AIC) | 0.247 | 0.274 | 0.090 | 0.236 | 0.272 | 0.073 | 0.214 | 0.269 | 0.086 |

| MLASSO (BIC) | 0.231 | 0.236 | 0.067 | 0.244 | 0.233 | 0.063 | 0.171 | 0.204 | 0.051 |

| MLASSO (GCV) | 0.230 | 0.236 | 0.067 | 0.245 | 0.234 | 0.064 | 0.170 | 0.204 | 0.050 |

| MLASSO (AIC) | 0.234 | 0.238 | 0.066 | 0.247 | 0.233 | 0.064 | 0.171 | 0.204 | 0.050 |

| Subset (BIC) | 0.267 | 0.245 | 0.066 | 0.322 | 0.234 | 0.075 | 0.280 | 0.202 | 0.053 |

| Subset (GCV) | 0.267 | 0.245 | 0.066 | 0.322 | 0.234 | 0.074 | 0.279 | 0.202 | 0.053 |

| Subset (AIC) | 0.269 | 0.245 | 0.067 | 0.322 | 0.233 | 0.074 | 0.280 | 0.203 | 0.054 |

| Oracle | 0.215 | 0.245 | 0.064 | 0.239 | 0.242 | 0.069 | 0.188 | 0.203 | 0.050 |

5.4 Prostate cancer example

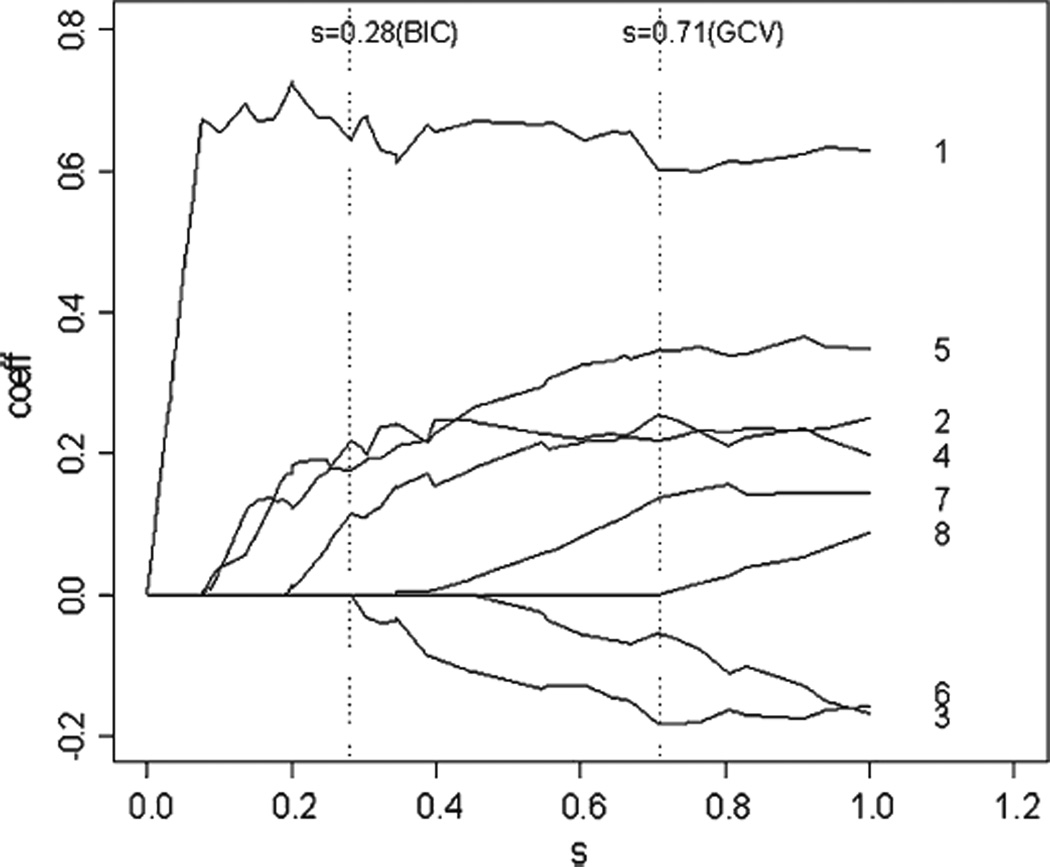

In this example, we apply the proposed approach to the prostate cancer data. The dataset comes from a study by Stamey et al. (1989). It consists of 97 patients who were about to receive a radical prostatectomy. A number of clinical measures were recorded for each patient. The purpose of the study was to examine the correlation between the level of prostate specific antigen and eight factors: log (cancer volume) (lcavol), log (prostate weight) (lweight), age, log (benign prostatic hyperplasia amount) (lbph), seminal vesicle invasion (svi), log (capsular penetration) (lcp), Gleason score (gleason) and percentage of Gleason scores 4 or 5 (pgg45). We first standardize the predictors and center the response variable, and then fit a linear model that relates log (prostate specific antigen) (lpsa) to the predictors.

We use the full LAD, the LASSO and the MLASSO methods to estimate the coefficients in the model. The results are summarized in Table 8. With BIC or GCV, LASSO and best subset select the same set of variables, both excluding lcp and pgg45. With AIC, best subset excludes only pgg45, while LASSO selects η = 0 and reduces to the full LAD. With GCV, MLASSO selects η = 0.16 and excludes only pgg45, while with BIC it selects η = 0.84 and yields a very parsimonious model that retains only four variables (lcavol, lweight, lbph and svi). With AIC, η is again selected to be 0 and MLASSO reduces to the full LAD.

Fig. 1 shows the LASSO estimates as a function of the shrinkage parameter s; the BIC-based and GCV-based approaches both select s = 0.69. Fig. 2 shows the MLASSO estimates as a function of s; the BIC-based and GCV-based approaches select s = 0.28 and s = 0.71, respectively.
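The preprocessing step (standardizing the predictors and centering the response) can be sketched as follows. This is a minimal illustration on toy data, assuming that standardization means subtracting the column mean and dividing by the sample standard deviation (n − 1 denominator); the text does not specify the denominator, and the helper names are hypothetical.

```python
import statistics

def standardize_columns(rows):
    """Scale each predictor column to mean 0 and sample SD 1."""
    cols = list(zip(*rows))
    means = [statistics.mean(c) for c in cols]
    sds = [statistics.stdev(c) for c in cols]  # assumes the n - 1 denominator
    return [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]

def center(values):
    """Subtract the mean so that the response has mean 0."""
    m = statistics.mean(values)
    return [v - m for v in values]

# Toy design matrix and response (hypothetical values, not the prostate data)
X = [[1.0, 10.0], [2.0, 20.0], [3.0, 60.0]]
y = [1.0, 2.0, 6.0]
Xs = standardize_columns(X)
yc = center(y)
```

Putting the predictors on a common scale matters here because an L1 penalty acts on the raw magnitude of each coefficient, so the selection would otherwise depend on the units of measurement.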

Table 8.

Prostate cancer example

| Predictor | 1 lcavol | 2 lweight | 3 age | 4 lbph | 5 svi | 6 lcp | 7 gleason | 8 pgg45 |

|---|---|---|---|---|---|---|---|---|

| LAD | 0.63 (0.13) | 0.25 (0.12) | −0.16 (0.08) | 0.20 (0.11) | 0.35 (0.12) | −0.17 (0.14) | 0.14 (0.11) | 0.09 (0.13) |

| Subset (BIC) | 0.58 (0.11) | 0.23 (0.11) | −0.19 (0.08) | 0.25 (0.11) | 0.32 (0.13) | 0.00 (−) | 0.12 (0.09) | 0.00 (−) |

| Subset (GCV) | 0.58 (0.11) | 0.23 (0.11) | −0.19 (0.08) | 0.25 (0.11) | 0.32 (0.13) | 0.00 (−) | 0.12 (0.09) | 0.00 (−) |

| Subset (AIC) | 0.61 (0.12) | 0.22 (0.11) | −0.17 (0.09) | 0.21 (0.10) | 0.38 (0.12) | −0.15 (0.08) | 0.19 (0.11) | 0.00 (−) |

| LASSO (BIC) | 0.59 (0.11) | 0.24 (0.11) | −0.11 (0.08) | 0.17 (0.11) | 0.23 (0.13) | 0.00 (0.07) | 0.04 (0.07) | 0.00 (0.09) |

| LASSO (GCV) | 0.59 (0.11) | 0.24 (0.11) | −0.11 (0.08) | 0.17 (0.11) | 0.23 (0.13) | 0.00 (0.07) | 0.04 (0.07) | 0.00 (0.09) |

| LASSO (AIC) | 0.63 (0.13) | 0.25 (0.12) | −0.16 (0.08) | 0.20 (0.11) | 0.35 (0.12) | −0.17 (0.14) | 0.14 (0.11) | 0.09 (0.13) |

| MLASSO (BIC) | 0.64 (0.12) | 0.18 (0.10) | 0.00 (0.05) | 0.11 (0.07) | 0.22 (0.11) | 0.00 (0.04) | 0.00 (0.03) | 0.00 (0.00) |

| MLASSO (GCV) | 0.60 (0.12) | 0.22 (0.11) | −0.18 (0.08) | 0.25 (0.11) | 0.35 (0.12) | −0.06 (0.09) | 0.14 (0.08) | 0.00 (0.05) |

| MLASSO (AIC) | 0.63 (0.13) | 0.25 (0.12) | −0.16 (0.08) | 0.20 (0.11) | 0.35 (0.12) | −0.17 (0.14) | 0.14 (0.11) | 0.09 (0.13) |

Fig. 2.

Graphical display of MLASSO shrinkage of eight coefficients as a function of shrinkage parameter s in the prostate cancer example. The broken line s = 0.28 is selected by BIC and s = 0.71 is selected by GCV

6 Discussion

Variable selection is a fundamental problem in statistical modeling. A variety of methods are well developed for least squares-based regression, while their counterparts in median regression are much less understood. With recent advances in linear programming techniques for L1 minimization, numerical simplicity is now also an attractive property of methods for median regression. In this article, we consider simultaneous estimation and variable selection in the median regression model by penalizing the L1 loss function with an L1 (LASSO) penalty. Because both the loss and the penalty are piecewise linear, the penalized estimator can be computed easily with standard linear programming packages. Differentially scaled L1 penalties are used to achieve desirable properties in terms of both identifying zero coefficients and estimating nonzero coefficients. Large sample properties of the proposed estimator are established by using the local asymptotic quadratic property of the L1 loss function and a novel inequality. The standard error of the estimator is obtained by the random perturbation method. It is shown that, for properly chosen tuning parameters, the differentially penalized L1 estimator exhibits the oracle property. More interestingly, a modified BIC function is employed to obtain data-driven tuning parameters, and the resultant two-stage procedure is proved to enjoy optimal properties. Extensive numerical studies show that the unified L1 method performs well in terms of simultaneous estimation and variable selection and retains the appealing robustness of the L1 estimator. The numerical simplicity of the proposed methodology is an additional benefit in real data analysis.
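To make the linear programming remark concrete, suppose the penalized objective takes the weighted form below, where the λ_j ≥ 0 are generic per-coefficient penalty levels standing in for the differentially scaled tuning parameters (the notation λ_j, u_i^±, b_j^± is introduced here for illustration only). Splitting each residual and each coefficient into its positive and negative parts gives an equivalent linear program:

```latex
\begin{aligned}
\min_{\beta}\quad & \sum_{i=1}^{n} \bigl| y_i - x_i^{\top}\beta \bigr|
                    + \sum_{j=1}^{p} \lambda_j \,|\beta_j| \\[4pt]
\Longleftrightarrow\quad
\min_{u^{+},\,u^{-},\,b^{+},\,b^{-}}\quad
                  & \sum_{i=1}^{n} \bigl(u_i^{+} + u_i^{-}\bigr)
                    + \sum_{j=1}^{p} \lambda_j \bigl(b_j^{+} + b_j^{-}\bigr) \\
\text{subject to}\quad
                  & y_i - x_i^{\top}\bigl(b^{+} - b^{-}\bigr) = u_i^{+} - u_i^{-},
                    \qquad i = 1,\dots,n, \\
                  & u_i^{+},\, u_i^{-} \ge 0, \qquad b_j^{+},\, b_j^{-} \ge 0,
\end{aligned}
```

with β recovered as b⁺ − b⁻. Equivalently, the same problem can be seen as an unpenalized L1 regression on an augmented dataset with p extra observations whose responses are 0 and whose covariate vectors are λ_j times the j-th unit vector, which is why existing L1 regression software can be reused directly.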

In spirit, the differentially scaled L1 penalty is similar to the Adaptive Lasso proposed by Zou (2006), though the latter is developed for the squared loss while our investigation is conducted under the L1 loss. A practical issue for both the differentially scaled L1 penalty and the Adaptive Lasso is the construction of tuning parameters that behave differently for the true nonzero coefficients and the true zero coefficients. A slight difference between our construction and the Adaptive Lasso is that we standardize the preliminary coefficient estimates by their standard errors, whereas the Adaptive Lasso uses the unstandardized estimates. Because the standard errors of the least squares or median regression estimates may differ substantially in practice, especially when the predictor variables are highly correlated, the differential or adaptive weights should be standardized to reduce the impact of these differences on the tuning parameters.
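The difference between standardized and unstandardized weights can be illustrated with a short sketch. The weight form used here, |β̃_j / se_j|^(−γ), is an assumption made for illustration and is not necessarily the exact construction used in the paper; γ = 1.5 is borrowed from the MLASSO2 setting only as an example value, and the inputs are the full LAD estimates and standard errors reported in Table 8.

```python
import math

def standardized_weights(coefs, ses, gamma=1.5):
    """Penalty weights from standardized preliminary estimates.

    Assumed form: w_j = |coef_j / se_j| ** (-gamma), so that weakly
    supported coefficients receive a heavier penalty.
    """
    weights = []
    for b, s in zip(coefs, ses):
        z = abs(b / s)
        weights.append(math.inf if z == 0.0 else z ** (-gamma))
    return weights

# Full LAD estimates and standard errors from Table 8
names = ["lcavol", "lweight", "age", "lbph", "svi", "lcp", "gleason", "pgg45"]
coefs = [0.63, 0.25, -0.16, 0.20, 0.35, -0.17, 0.14, 0.09]
ses = [0.13, 0.12, 0.08, 0.11, 0.12, 0.14, 0.11, 0.13]
weights = dict(zip(names, standardized_weights(coefs, ses)))
```

Under this assumed form, lcavol (large estimate relative to its standard error) receives a much smaller weight than pgg45, so the penalty falls mainly on coefficients that are not clearly distinguishable from zero.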

Acknowledgments

This research was supported by grants from the U.S. National Institutes of Health, the U.S. National Science Foundation and the National University of Singapore (R-155-000-075-112). We are very grateful to the editor, the associate editor and the referees for their helpful comments, which have greatly improved the paper.

Contributor Information

Jinfeng Xu, Email: staxj@nus.edu.sg, Department of Statistics and Applied Probability, National University of Singapore, Singapore 117546, Singapore.

Zhiliang Ying, Email: zying@stat.columbia.edu, Department of Statistics, Columbia University, New York, NY 10027, USA.

References

- Chen S, Donoho D. Basis pursuit. 28th Asilomar Conference on Signals, Systems and Computers; Asilomar. 1994.

- Efron B, Johnstone I, Hastie T, Tibshirani R. Least angle regression (with discussions). Annals of Statistics. 2004;32:407–499.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.

- Fan J, Li R. Variable selection for Cox’s proportional hazards model and frailty model. Annals of Statistics. 2002;30:74–99.

- Hurvich CM, Tsai CL. Model selection for Least absolute Deviations Regressions in Small Samples. Statistics and Probability Letters. 1990;9:259–265.

- Knight K, Fu WJ. Asymptotics for Lasso-type estimators. Annals of Statistics. 2000;28:1356–1378.

- Koenker R, D’Orey V. Computing regression quantiles. Applied Statistics. 1987;36:383–393.

- Shen X, Ye J. Adaptive model selection. Journal of the American Statistical Association. 2002;97:210–221.

- Pakes A, Pollard D. Simulation and the asymptotics of optimization estimators. Econometrica. 1989;57:1027–1057.

- Pollard D. Empirical Processes: Theory and Applications. Regional Conference Series in Probability and Statistics. Hayward: Institute of Mathematical Statistics; 1990.

- Pollard D. Asymptotics for least absolute deviation regression estimators. Econometric Theory. 1991;7:186–199.

- Portnoy S, Koenker R. The Gaussian hare and the Laplacian tortoise: Computability of squared-error versus absolute-error estimators. Statistical Science. 1997;12:279–296.

- Rao CR, Zhao LC. Approximation to the distribution of M-estimates in linear models by randomly weighted bootstrap. Sankhyā A. 1992;54:323–331.

- Ronchetti E, Staudte RG. A Robust Version of Mallows’s Cp. Journal of the American Statistical Association. 1994;89:550–559.

- Stamey T, Kabalin J, McNeal J, Johnstone I, Freiha F, Redwine E, Yang N. Prostate specific antigen in the diagnosis and treatment of adenocarcinoma of the prostate, ii: Radical prostatectomy treated patients. Journal of Urology. 1989;16:1076–1083. doi: 10.1016/s0022-5347(17)41175-x.

- Tibshirani RJ. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B. 1996;58:267–288.

- Xu J. Parameter estimation, model selection and inferences in L1-based linear regression. PhD dissertation. Columbia University; 2005.

- Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101:1418–1429.

- Zou H, Hastie T, Tibshirani R. On the “degrees of freedom” of the LASSO. Annals of Statistics. 2007;35:2173–2192.