Abstract

The developing brain responds to the environment by using statistical correlations in its input to guide functional and structural changes; that is, the brain displays neuroplasticity. Experience shapes brain development throughout life, but neuroplasticity varies from one brain system to another. How does the early loss of a sensory modality affect this complex process? We examined cross-modal neuroplasticity in anatomically defined subregions of Heschl's gyrus, the site of human primary auditory cortex, in congenitally deaf humans by measuring the fMRI signal change in response to spatially coregistered visual, somatosensory, and bimodal stimuli. In deaf Heschl's gyrus, signal change was greater for somatosensory and bimodal stimuli than in hearing participants. Visual responses in Heschl's gyrus, although larger in deaf than in hearing participants, were smaller than those elicited by somatosensory stimulation. In contrast to Heschl's gyrus, in superior-temporal cortex the visual signal was comparable to the somatosensory signal. In addition, deaf adults perceived bimodal stimuli differently: in contrast to hearing adults, they were susceptible to a double-flash visual illusion induced by two touches to the face. Somatosensory and bimodal signal change in rostrolateral Heschl's gyrus predicted the strength of the visual illusion in the deaf adults, in line with the interpretation that the illusion is a functional consequence of the altered cross-modal organization observed in deaf auditory cortex. Our results demonstrate that congenital and profound deafness alters how vision and somatosensation are processed in primary auditory cortex.

Introduction

A central goal of research on neuroplasticity is to understand the interacting roles of genetic and experiential factors in sculpting brain function and structure. Brain development can be characterized as the gradual unfolding of a powerful, self-organizing network of processes with complex interactions between genes and environment (Johnson, 2001; Johnson and Munakata, 2005). In this context, cross-modal neuroplasticity refers to sensory-specific cortex adapting to respond to an alternative sensory modality after a prolonged absence of the default sensory modality. There are limits to brain plasticity (Bavelier and Neville, 2002; Stevens and Neville, 2006), and little is known about plasticity of the primary auditory cortex in congenitally deaf individuals.

Cross-modal neuroplasticity within auditory cortex is an important area of active research. While some animal studies indicate that both vision and somatosensation play a role in the altered cross-modal organization of primary auditory cortex or closely related regions (Allman et al., 2009; Meredith et al., 2009; Lomber et al., 2010; Meredith and Lomber, 2011), other studies report that deaf primary auditory cortex does not respond to vision (Kral et al., 2003). Comparisons of auditory cortex across species suggest common principles of auditory cortical organization across mammals (Woods et al., 2010; Hackett, 2011), but a one-to-one correspondence between auditory cortical areas in humans and those in different animal models has not been established, which complicates comparisons between humans and animal models.

Evidence of cross-modal neuroplasticity in human primary auditory cortex is limited. To our knowledge, no study to date has used a precise anatomical delineation of Heschl's gyrus, the site of human primary auditory cortex, to measure cross-modal neuroplasticity of primary auditory cortex. In past fMRI studies of deaf adults (Finney et al., 2001), standard practice was to localize primary auditory cortex using coordinates from the Talairach atlas (Talairach and Tournoux, 1988), a histological atlas based on a single elderly human brain; even so, most visual studies report visual responses caudal to, rather than overlapping, Heschl's gyrus (Bavelier et al., 2006). The somatosensory modality has been studied less extensively. Only two human neuroimaging studies have reported somatosensory responses in auditory cortex of congenitally deaf participants. The first, a magnetoencephalography (MEG) study of one deaf person, showed that a source in auditory cortex responded to vibrotactile stimulation (Levänen et al., 1998). The second, a vibrotactile fMRI study of six deaf adults with extensive hearing aid use, was analyzed as a spatial average across participants and found responses to vibrotactile stimulation in deaf Heschl's gyrus (Auer et al., 2007). Both studies provide evidence that deaf auditory cortex may respond to somatosensory stimulation, but both are limited in anatomical precision. A third study, combining MEG and fMRI in a single congenitally deaf individual, found neither visual nor somatosensory responses in deaf auditory cortex (Hickok et al., 1997).

We examined whether visual, somatosensory, and bimodal processing are altered in congenitally deaf adult humans, both in a group analysis and by quantifying fMRI signal change within superior-temporal cortex and Heschl's gyrus subregions. A further critical question is whether cross-modal neuroplasticity has functional consequences, such as altered perception, in deaf individuals. Only the congenitally deaf adults in our study reported a somatosensory double-flash illusion (a visual percept induced by a somatosensory stimulus), and we examined which regions of auditory cortex had signal that correlated with the illusion response rate across deaf participants.

Materials and Methods

Participants

All participants were healthy, were not taking psychoactive medications, and did not have a history of neurological or psychiatric conditions. Procedures were approved by the Institutional Review Board of the University of Oregon. Participants gave their informed and written consent and were paid for their participation.

Deaf participants.

Thirteen congenitally deaf adults (mean age, 27.7; range, 20–40 years; 10 female) were recruited via deaf community organizations and electronic bulletin boards. Participants had been profoundly deaf in both ears since birth (bilateral attenuation >90 dB), had minimal past hearing aid use, had a family history of congenital deafness, and reported learning American Sign Language (ASL) to fluency in childhood. Consent was obtained with a written consent form, and a certified ASL interpreter was present throughout the experiment. We assessed nonverbal reasoning using a timed (30 min), 12-question short form of Raven's Advanced Progressive Matrices (Bors and Stokes, 1998); scores ranged from 2 to 9, with an average score of 5.2.

Hearing participants.

Twelve hearing adults (mean age, 30.8; range, 19–48 years; 7 female) were recruited via community electronic bulletin boards. Scores on Raven's Advanced Progressive Matrices for hearing adults ranged from 0 to 12, with an average score of 7.8. Neither age (t(23) = −1.1, p = 0.3) nor Raven's score (t(20) = −2, p = 0.06) differed significantly between groups.

Stimuli and procedures

Apparatus.

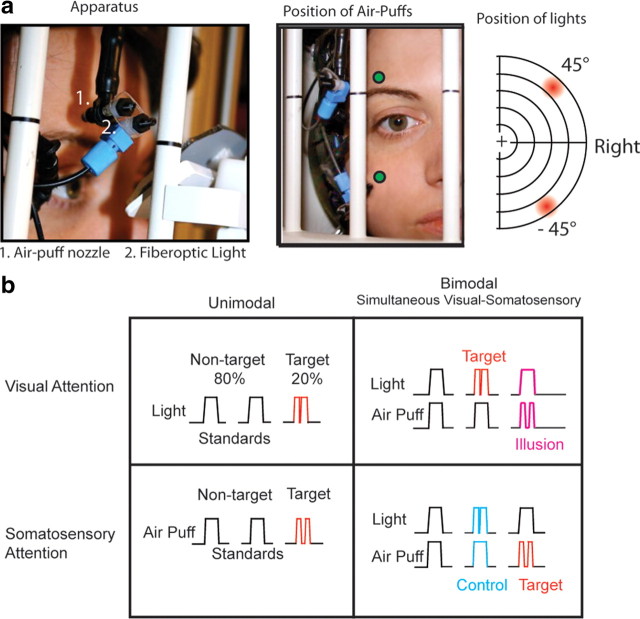

An fMRI-compatible apparatus was constructed to allow precise positioning of somatosensory and visual stimuli for each participant in the MRI scanner (Fig. 1a). The somatosensory component of the device was based on previous somatosensory studies (Huang and Sereno, 2007; Smith et al., 2009). Somatosensory stimuli were “air puffs” above and below the right eye delivered via flexible tubing connected to high-speed solenoids with an adjustable-flow valve (NVKF334; SMC Pneumatics). A compressor provided pressurized air to the solenoids, which can be operated at frequencies of up to 10 Hz by an NI DAQCard and in-house software (PCI-6229 and LabView software; National Instruments). Solenoids and the compressor were in a separate room, with tubing entering the scanning room through a wall portal (waveguide). Inside the MRI bore, the flexible tubing (one-quarter inch inner diameter, one-sixteenth inch wall) was connected to a jointed rig of stiff plastic tubing mounted on the MRI-compatible headset. The jointed rig was adjusted for each participant to deliver air puffs above the right eyebrow and to the cheek or below the right eye. The nozzle was located 0.25 cm from the skin. The air puffs were angled away from the eye and did not cause any blinking or drying of the eye. Visual stimuli, “lights,” were delivered via fiberoptic cables with a 3 mm diameter diffuser mounted directly below the air puff nozzle, connected to red light-emitting diodes in the console room and controlled by the LabView program and the NI DAQCard. Lights were positioned individually for each subject to be at a radial location of 45° above or below the horizontal meridian and at 45° eccentricity to the right of the vertical meridian. 
Bimodal stimuli were simultaneous presentations of the visual and somatosensory stimuli from the same location on the apparatus (air puffs to the cheek with visual stimuli below the horizontal meridian; air puffs above the eyebrow with visual stimuli above the meridian). The distance between the tip of the air puff tubing and the light source was 8 mm. In accordance with the safety protocols of the MRI center, both hearing and deaf participants wore sound-attenuating earplugs and a sound-attenuating headset. Before initiating the scanning session, we verified that participants could easily detect all standard stimuli from within the MRI bore, and we made minor adjustments to the position of the apparatus until participants reported a subjective match between the strength of the visual and somatosensory standards. A projector positioned at the back of the MRI bore displayed a central fixation cross on a black background and an instruction cue (a letter), controlled by Presentation software (Neurobehavioral Systems). The projector brightness was decreased to 50%. Participants viewed the projection with a custom mirror that did not interfere with the visual-somatosensory apparatus.

Figure 1.

a, Apparatus. Somatosensory stimuli were air puffs to the right side of the face, and visual stimuli were presented with fiberoptics attached to the air puff nozzles. These lights were at 45° eccentricity in the right visual field. In each block, visual, somatosensory, or combined bimodal stimuli were presented in one field, either upper or lower. b, Stimulus types in each block. Nontarget standard stimuli (80% of the stimuli per block) lasted 300 ms, whereas target stimuli (20%) were interrupted by an off gap (40 ms for lights, 120 ms for puffs), producing a double light or a double puff. The interval between stimuli was 700–900 ms. In each bimodal block, visual and somatosensory stimuli were presented simultaneously in the same field, either above or below the horizontal meridian, with attention and target detection in one sensory modality. For example, in a Bimodal Visual Attention block the target was a double light, regardless of whether it was paired with a single or a double puff. The illusory stimulus and the nonillusory control stimulus are labeled in magenta and cyan, respectively.

We monitored participants from the console room via an infrared video camera and used an eye-tracker to monitor central fixation, blinking, and participant alertness. For the deaf participants, a video camera displayed the console room and the ASL interpreter between scans to allow deaf participants to communicate with research staff; the hearing participants used an intercom. Participants had access to a “squeeze-ball” alert button to terminate a scan.

Stimuli and task.

Blocks were 20 to 24 s in duration. Each run consisted of 16 randomly ordered “task blocks” with central fixation, interspersed with 5 “resting fixation blocks” in which participants maintained central fixation and were instructed to rest and be ready for the next block of task trials. The total duration of each run was 5 to 5.5 min. A total of 8 randomized runs were available for the experiment. Runs were excluded for drowsiness or excessive motion, or were omitted if time did not allow for all 8 runs. For one deaf and one hearing participant, the first run of the session included saccades; fixation instructions were then repeated to the participant, and the first run was excluded. The number of runs analyzed did not differ significantly between deaf and hearing groups (hearing, 7.1 runs; deaf, 6.4 runs; t(23) = 1.37, p = 0.18), and each participant completed at least 4 runs. A sample run is depicted in Table 1. Visual stimuli were lights in the peripheral visual field (45°), and somatosensory stimuli were air puffs to the face; both were presented via a custom fiberoptic/pneumatic apparatus (Fig. 1a). Stimuli were presented in blocks of visual stimuli alone (Unimodal Visual), somatosensory stimuli alone (Unimodal Somatosensory), or simultaneous visual-somatosensory “bimodal” stimuli with attention to either the visual or the somatosensory stimuli in separate blocks (Bimodal Visual Attention or Bimodal Somatosensory Attention) (Fig. 1b). Each block stimulated only one field, either upper or lower. Participants detected target stimuli, which made up 20% of the stimuli in each block. Targets were “double lights” (two brief flashes of light) or “double puffs” (two brief air puffs). Standard light flashes lasted 300 ms, whereas infrequent targets had a 40 ms gap centered at 150 ms. Standard air puffs also lasted 300 ms, whereas infrequent targets had a 120 ms gap centered at 150 ms. The interval between stimuli was 700–900 ms. A cue letter at fixation, present during the entire block, instructed participants either to attend to and detect targets in the visual or the somatosensory modality or to rest with eyes on the fixation cross while no additional stimuli were presented. The result was a crossed design in which stimuli were either unimodal or bimodal, with a target-detection attention task for either the visual or the somatosensory modality.
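The standard and target timing rules can be made concrete with a short sketch that builds a nominal 1 ms on/off trace for each stimulus and recovers its pulses. The function names are hypothetical, and the trace reflects only the nominal timing stated above, not the actual solenoid or LED response.

```python
import numpy as np

def stimulus_trace(duration_ms=300, gap_ms=0, gap_center_ms=150):
    """Nominal on/off trace of one stimulus, sampled every 1 ms.

    gap_ms=0 gives a standard (one continuous 300 ms pulse); a positive
    gap_ms gives a target whose off gap is centered at gap_center_ms.
    """
    t = np.arange(duration_ms)                  # 0, 1, ..., duration_ms - 1 (ms)
    on = np.ones(duration_ms, dtype=int)
    if gap_ms > 0:
        gap_start = gap_center_ms - gap_ms / 2
        gap_stop = gap_center_ms + gap_ms / 2
        on[(t >= gap_start) & (t < gap_stop)] = 0
    return t, on

def pulses(t, on):
    """Return (onset_ms, offset_ms) for each contiguous 'on' run."""
    edges = np.diff(np.concatenate(([0], on, [0])))
    onsets = np.flatnonzero(edges == 1)
    offsets = np.flatnonzero(edges == -1)
    return [(int(t[a]), int(t[b - 1]) + 1) for a, b in zip(onsets, offsets)]

standard = pulses(*stimulus_trace())               # [(0, 300)]
double_light = pulses(*stimulus_trace(gap_ms=40))  # [(0, 130), (170, 300)]
double_puff = pulses(*stimulus_trace(gap_ms=120))  # [(0, 90), (210, 300)]
```

Under these rules a double light consists of two 130 ms flashes and a double puff of two 90 ms puffs, each pair spanning the same 300 ms as a standard.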

Table 1.

Example of a single run of 16 blocks used to create the design matrix

| Regressor (block no.) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Visual | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | | | | | | | |
| Somatosensory | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | | | | | | | |
| Bimodal interaction visual | 1 | 1 | 1 | | | | | | | | | | | | | |
| Bimodal interaction somatosensory | 1 | 1 | 1 | 1 | | | | | | | | | | | | |
| Fixation | _ | _ | _ | _ | _ | | | | | | | | | | | |

1, Location of a boxcar that was then convolved with a canonical hemodynamic response function to yield the task-related regressor; _, Block in which participants maintained fixation and waited for the next task block.
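The footnote's construction (a boxcar convolved with a canonical hemodynamic response function) can be sketched as follows. This is an illustration, not FSL code: it assumes an SPM-style double-gamma HRF (peak gamma shape 6, undershoot shape 16, undershoot ratio 1/6) as a stand-in that may differ from FSL's default kernel, and it assumes fixed 20 s blocks sampled at the 2 s TR. The helper names are hypothetical.

```python
import numpy as np
from scipy.stats import gamma

TR = 2.0  # s, repetition time used for acquisition

def double_gamma_hrf(tr=TR, length_s=32.0):
    """Assumed SPM-style double-gamma HRF sampled at the TR, normalized to unit sum."""
    t = np.arange(0.0, length_s, tr)
    peak = gamma.pdf(t, a=6)          # main response, peaking about 5 s after onset
    undershoot = gamma.pdf(t, a=16)   # late undershoot
    h = peak - undershoot / 6.0
    return h / h.sum()

def block_regressor(block_on, block_s=20.0, tr=TR):
    """Boxcar (1 in flagged blocks, 0 elsewhere) convolved with the HRF.

    block_on holds one 0/1 flag per block, like a row of Table 1.
    Assumes every block lasts block_s seconds; actual blocks were 20-24 s.
    """
    vols_per_block = int(round(block_s / tr))
    boxcar = np.repeat(np.asarray(block_on, dtype=float), vols_per_block)
    # Truncate the full convolution to the length of the run.
    return np.convolve(boxcar, double_gamma_hrf(tr))[: boxcar.size]

# Hypothetical three-block run: rest, task, rest.
visual_regressor = block_regressor([0, 1, 0])
```

Because the HRF is normalized to unit sum, the regressor rises toward 1 during a sustained task block and lags the boxcar by a few seconds, which is why the regressor rather than the raw boxcar is fit to the BOLD time series.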

Our main behavioral measures were the overall response rate (hit rate) to true targets in Unimodal Visual and Unimodal Somatosensory blocks; the percentage of responses to illusory bimodal stimuli in Bimodal Visual Attention blocks; the percentage of responses to control bimodal stimuli in Bimodal Somatosensory Attention blocks; and the false alarm rate, defined as the percentage of responses to nontargets excluding the illusory and control stimuli.
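The four behavioral measures can be computed from per-trial records, as in the minimal sketch below. The `Trial` record, its block and trial-type labels, and the helper names are hypothetical; the sketch assumes each trial has already been labeled as a target, standard, illusory, or control stimulus.

```python
import math
from dataclasses import dataclass

@dataclass
class Trial:
    block: str       # hypothetical labels: "unimodal_visual", "unimodal_somato",
                     # "bimodal_visual_attn", "bimodal_somato_attn"
    kind: str        # "target", "standard", "illusory", or "control"
    responded: bool  # True if the participant pressed the response button

def percent_responded(trials, keep):
    """Percentage of selected trials with a response (NaN if none are selected)."""
    selected = [t for t in trials if keep(t)]
    if not selected:
        return math.nan
    return 100.0 * sum(t.responded for t in selected) / len(selected)

def behavioral_measures(trials):
    return {
        # Hits: true targets in the unimodal blocks.
        "hit_rate": percent_responded(
            trials, lambda t: t.block.startswith("unimodal") and t.kind == "target"),
        # Single light + double puff while attending to vision.
        "illusion_rate": percent_responded(
            trials, lambda t: t.block == "bimodal_visual_attn" and t.kind == "illusory"),
        # Double light + single puff while attending to touch.
        "control_rate": percent_responded(
            trials, lambda t: t.block == "bimodal_somato_attn" and t.kind == "control"),
        # False alarms: nontargets, excluding illusory and control stimuli.
        "false_alarm_rate": percent_responded(trials, lambda t: t.kind == "standard"),
    }
```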

The sound-induced double-flash illusion, a phenomenon wherein a single flash of light paired with two or more brief auditory events is perceived as multiple flashes (Shams et al., 2000; Mishra et al., 2007), has also been reported for the somatosensory modality (Violentyev et al., 2005); perception of the touch-induced illusion correlates with activity in visual and somatosensory areas (Lange et al., 2011). In our paradigm (Fig. 1b), double lights and double puffs were the target stimuli to which participants responded. In a Bimodal Visual Attention block, a single light paired with a double puff is not a target; however, if a participant is susceptible to the touch-induced double-flash illusion, this single flash will be perceived as the target “double flash.” Thus, the response rate to these nontarget stimuli indicates the strength of the illusory percept. To ensure that an increased response rate to these stimuli did not simply reflect an increased response rate in the presence of competing stimuli, we included a “nonillusory control” condition (double lights paired with single puffs) in the Bimodal Somatosensory Attention blocks, because double lights do not induce an illusory percept of two sounds or touches. This asymmetry in double-flash illusions is often interpreted as the sensory modality with greater temporal precision (e.g., audition) influencing the perceived timing of a less precise modality (vision) (for review, see Shams et al., 2004). We reasoned that if deaf auditory cortex is engaged in somatosensory and bimodal visual-somatosensory processing to a greater degree than hearing auditory cortex, deaf participants would perceive a stronger touch-induced double-flash illusion but would not differ in the nonillusory control condition.
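The labeling rules of the illusion paradigm amount to a lookup from the attended modality and the stimulus composition to a trial type. The hypothetical helper below encodes the rules stated above for bimodal blocks, with 1 denoting a single and 2 a double light or puff.

```python
def classify_bimodal(attend, light, puff):
    """Label a bimodal stimulus given the attended modality.

    attend: "visual" or "somatosensory"; light, puff: 1 (single) or 2 (double).
    """
    if attend == "visual":
        if light == 2:
            return "target"        # double light, regardless of the puff
        return "illusory" if puff == 2 else "standard"
    if attend == "somatosensory":
        if puff == 2:
            return "target"        # double puff, regardless of the light
        return "control" if light == 2 else "standard"
    raise ValueError(f"unknown attended modality: {attend!r}")

# A response to the single light paired with a double puff counts as an illusion.
label = classify_bimodal("visual", light=1, puff=2)
```

Under these rules, the illusory and control stimuli are mirror images of each other (one single and one double component), so any difference in response rates between them reflects the direction of cross-modal influence rather than the mere presence of a competing double stimulus.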

Statistical analyses for behavior.

For the main behavioral analysis of the illusion, we performed a repeated measures ANOVA on the percentage of responses to the illusion and control conditions [2 Conditions (Visual Bimodal [Illusion], Somatosensory Bimodal [Control]) × 2 Groups (Deaf, Hearing)] with an α level of 0.05 and group as a between-subjects factor. In a separate analysis, we tested whether hit rates for nonillusory true targets differed by condition or group, using a repeated measures ANOVA on the percentage of responses to true targets [2 Attention Modes (Visual, Somatosensory) × 2 Stimulus Types (Unimodal, Bimodal) × 2 Groups (Deaf, Hearing)] with group as a between-subjects factor. We used the same ANOVA structure to test for differences in false alarm rates by group or condition. Pearson's correlations were calculated, separately for each group, between the signal change in each region of interest (ROI; all voxels in the region, not prethresholded) and the difference between the response rates to illusory and control stimuli.
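The brain-behavior correlation can be sketched with SciPy's `pearsonr`. The helper names are hypothetical, and the sketch assumes per-participant ROI percentage signal change and response rates (in percent) are already available as equal-length lists; it would be run once per group.

```python
import numpy as np
from scipy.stats import pearsonr

def illusion_index(illusion_rate, control_rate):
    """Illusion minus control response rate (percentage points), per participant."""
    return np.asarray(illusion_rate, dtype=float) - np.asarray(control_rate, dtype=float)

def roi_illusion_correlation(signal_change, illusion_rate, control_rate):
    """Pearson r and two-sided p between ROI signal change and the illusion index."""
    x = np.asarray(signal_change, dtype=float)
    y = illusion_index(illusion_rate, control_rate)
    r, p = pearsonr(x, y)
    return float(r), float(p)

# Hypothetical values for illustration only (not data from this study).
groups = {
    "deaf": {"psc": [0.3, 0.5, 0.2, 0.6, 0.4],
             "illusion": [40, 60, 20, 70, 45], "control": [5, 10, 5, 10, 5]},
}
results = {name: roi_illusion_correlation(g["psc"], g["illusion"], g["control"])
           for name, g in groups.items()}
```

Subtracting the control rate removes each participant's general tendency to respond in the presence of a competing stimulus, so the index isolates the illusion itself.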

fMRI procedures and analysis.

We used a 3 T Siemens Allegra head-only MRI system to collect whole-brain gradient echo EPI images (TR, 2 s; TE, 30 ms; flip angle, 80°). We collected 32 axial slices with a thickness of 3.125 mm in interleaved acquisition order. We used the Siemens Prospective Acquisition Correction (PACE) protocol to compensate for head motion in real time before the acquisition of each whole-brain image, and we excluded any run with significant motion not accounted for by PACE. fMRI data processing was carried out using FEAT (FMRI Expert Analysis Tool) version 5.98, part of FSL (FMRIB Software Library, www.fmrib.ox.ac.uk/fsl; Smith et al., 2004). The following prestatistics processing was applied: slice-timing correction; spatial smoothing using a Gaussian kernel of FWHM 4 mm; grand mean intensity normalization; and high-pass temporal filtering (cutoff = 0.0125 Hz). The task-related regressors were modeled as boxcars convolved with the FSL default canonical hemodynamic response function. Separate boxcars represented visual and somatosensory blocks, and interaction terms represented bimodal blocks (Table 1). Time-series statistical analysis using the GLM was carried out using FILM with local autocorrelation correction (Woolrich et al., 2001). Functional images were coregistered to each participant's T1-weighted structural image, which was then coregistered and resampled to the FSL standard 2 × 2 × 2 mm brain (MNI/ICBM 152 template; Mazziotta et al., 2001) using FLIRT with 12 degrees of freedom (Jenkinson and Smith, 2001; Jenkinson et al., 2002). Higher-level analysis was carried out using FLAME (FMRIB Local Analysis of Mixed Effects) stage 1 and stage 2 (Beckmann et al., 2003; Woolrich et al., 2004). For group analyses, individual structural and functional volumes were coregistered to a common stereotaxic template. Z (Gaussianized T/F) statistical images were thresholded using clusters determined by Z > 2.8 and a corrected cluster significance threshold of p < 0.01 for comparisons between deaf and hearing groups (Worsley, 2001). The coordinates of local peaks within significant clusters are reported relative to the Jülich and Harvard-Oxford atlases in FSL. The superior-temporal ROI was based on the Harvard-Oxford atlas (25% threshold) and included the anterior and posterior divisions of the superior temporal gyrus and the planum temporale.
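Several of the preprocessing and thresholding parameters above are related by simple conversions, worked through below. This is an arithmetic sketch, not FSL code. The FWHM-to-sigma relation is the standard Gaussian identity; converting the high-pass cutoff into a filter sigma in volumes assumes FEAT's convention of cutoff period / (2 × TR), which should be checked against the FSL version in use.

```python
import math
from scipy.stats import norm

# Spatial smoothing: for a Gaussian, FWHM = 2 * sqrt(2 ln 2) * sigma.
FWHM_MM = 4.0
sigma_mm = FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # about 1.70 mm

# Temporal filtering: a 0.0125 Hz cutoff is an 80 s period, well above the
# 20-24 s block length, so block-related signal is not removed.
CUTOFF_HZ = 0.0125
cutoff_period_s = 1.0 / CUTOFF_HZ                             # 80 s

# Assumed FEAT convention: high-pass sigma (volumes) = period / (2 * TR).
TR_S = 2.0
highpass_sigma_vols = cutoff_period_s / (2.0 * TR_S)         # 20 volumes

# Cluster-forming threshold Z > 2.8 as a one-tailed voxelwise p value,
# and the lenient Z > 1.65 threshold used for individual maps.
p_cluster_forming = norm.sf(2.8)                              # about 0.0026
p_lenient = norm.sf(1.65)                                     # about 0.05
```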

Individual Heschl's gyrus ROIs.

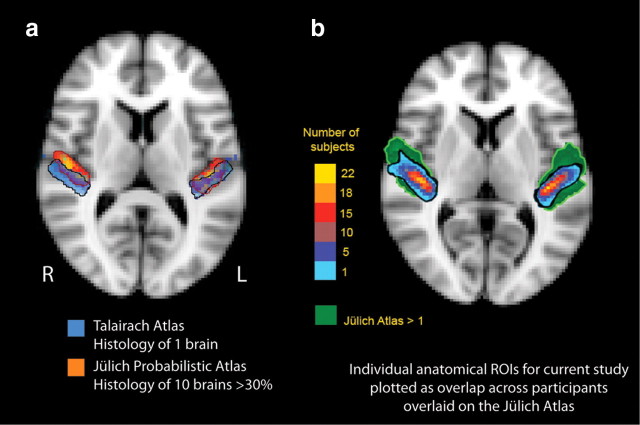

There is considerable variability in individual Heschl's gyri. For example, when individual brains are coregistered to a template brain, Heschl's gyrus in one person may partially overlap the inferior frontal gyrus of another person. Nonlinear coregistration methods may also fail to align a single Heschl's gyrus in one participant with a partially or completely duplicated Heschl's gyrus in another. For this reason, we defined Heschl's gyrus anatomically in each individual. To further illustrate individual variability, Figure 2a shows the probabilistic location of primary auditory cortex in the Jülich histological atlas, which is based on microscopic and quantitative histological examination of 10 human postmortem brains that were 3D reconstructed and linearly transformed into MNI152 space (Eickhoff et al., 2005, 2006, 2007). Primary auditory cortex is shown thresholded at 30% probability (3 of 10 brains). The overlay is a digitized version of the Talairach atlas location of area 41 (primary auditory cortex) registered to MNI152 space (Talairach and Tournoux, 1988; Lancaster et al., 2000, 2007; Lacadie et al., 2008). Note that the Talairach atlas is based on a single elderly individual and that its right primary auditory cortex extends posterior to the probabilistic primary auditory cortex defined by the Jülich histological atlas.

Figure 2.

a, Variability of atlas-defined primary auditory cortex. Primary auditory cortex in the Talairach atlas, which is based on a single elderly brain (Talairach and Tournoux, 1988), is shown with the corresponding Jülich probabilistic atlas region thresholded at 30% overlap across the 10 brains. Both are displayed on the MNI152 template brain (an average of 152 individual brains). Note that on the right, Talairach-defined primary auditory cortex is more caudal than in the Jülich atlas. b, Heschl's gyrus ROIs for the current study overlaid on atlas-defined primary auditory cortex. Cytoarchitectonic borders of primary auditory cortex are well approximated by the macroanatomic borders of Heschl's gyrus (Morosan et al., 2001), but Heschl's gyrus is variable across individuals. The individual ROIs for our study were drawn based on Heschl's gyrus anatomical landmarks and are shown here as overlap across all participants (color bar), coregistered into a common stereotaxic space and overlaid on the standard MNI152 brain. The green underlay is the histologically defined primary auditory cortex of the Jülich probabilistic atlas thresholded at n = 1; that is, at least one of the 10 brains in the histological study had primary auditory cortex in a given voxel of standardized space. We chose an anatomical ROI approach for Heschl's gyrus because of the marked individual variability across participants.

Blind to each participant's group status, we parcellated each Heschl's gyrus by hand on a structural volume (T1-weighted MPRAGE) coregistered and resampled to the 2 × 2 × 2 mm FSL standard brain (MNI 152); ROIs were drawn using Space software (http://lcni.uoregon.edu/∼dow/Space_program.html). An initial boundary of Heschl's gyrus was drawn on sagittal planes. These boundaries were projected onto coronal planes and adjusted if the gyrus was visible in cross section in either the sagittal or the coronal orientation. The boundaries were also checked in projection on axial planes, where voxels with low neighborhood support were excluded and voxels with high neighborhood support were included. All voxels within the boundary were included. Figure 2b shows that our anatomically defined Heschl's gyri fall within reasonable boundaries for primary auditory cortex, as defined by the Jülich histological atlas.

The individual Heschl's gyri were partitioned into anterior and posterior subdivisions by a plane oriented along the first principal component of the voxel centers, to allow comparison with recent tonotopic functional neuroimaging demonstrating that human primary cortical areas A1 and R respect anatomical boundaries of anterior and posterior Heschl's gyrus, respectively (Da Costa et al., 2011). In a second parcellation, we divided each individual Heschl's gyrus ROI into three subregions along its length (central, caudomedial, and rostrolateral) to approximate the cytoarchitectonic divisions Te1.0, Te1.1, and Te1.2, respectively, of human primary auditory cortex. The central region, Te1.0, is the most granular, with the thickest layer IV and small layer IIIc pyramidal cells; the medial area, Te1.1, has less distinct layers with medium-sized layer IIIc pyramidal cells; and the lateral area, Te1.2, has a thick layer III with medium-sized layer IIIc pyramidal cells (Morosan et al., 2001). The subregion boundaries were planes at one-third and two-thirds of the distance between the extreme caudomedial and rostrolateral positions along the first principal component axis. Parameter estimates for each model term were extracted from all unthresholded voxels within each boundary and scaled to percentage signal change.
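The principal-component parcellation can be sketched with NumPy. This is an illustration under stated assumptions, not the procedure's original code: voxel centers are an N × 3 array of MNI coordinates in millimeters; the caudomedial end of the axis is assumed to be the end whose extreme voxel has the smaller |x|; and the anterior/posterior plane is assumed to pass through the centroid, contain the first principal component, and have as its normal the part of the anterior (+y) direction orthogonal to that component. All function names are hypothetical.

```python
import numpy as np

def pca_axis(coords):
    """Centroid and unit first principal component of N x 3 voxel centers (mm)."""
    centroid = coords.mean(axis=0)
    _, _, vt = np.linalg.svd(coords - centroid, full_matrices=False)
    return centroid, vt[0]

def split_thirds(coords):
    """Label voxels caudomedial / central / rostrolateral by position along PC1."""
    centroid, axis = pca_axis(coords)
    proj = (coords - centroid) @ axis
    # Orient the axis so that projections increase toward the lateral (larger |x|) end.
    if abs(coords[proj.argmin()][0]) > abs(coords[proj.argmax()][0]):
        proj = -proj
    lo, hi = proj.min(), proj.max()
    cut1 = lo + (hi - lo) / 3.0
    cut2 = lo + 2.0 * (hi - lo) / 3.0
    return np.where(proj < cut1, "caudomedial",
                    np.where(proj < cut2, "central", "rostrolateral"))

def split_anterior_posterior(coords):
    """Split by an assumed plane through the centroid that contains PC1."""
    centroid, axis = pca_axis(coords)
    anterior = np.array([0.0, 1.0, 0.0])
    normal = anterior - (anterior @ axis) * axis   # +y with the PC1 part removed
    normal /= np.linalg.norm(normal)
    side = (coords - centroid) @ normal
    return np.where(side >= 0, "anterior", "posterior")

# Synthetic gyrus running from medial-posterior to lateral-anterior (not real data).
s = np.linspace(0.0, 1.0, 30)
base = np.array([35.0, -30.0, 8.0]) + np.outer(s, [20.0, 20.0, 0.0])
offsets = np.array([[0.0, 0.0, 0.0], [-2.0, 2.0, 0.0], [2.0, -2.0, 0.0]])
coords = (base[:, None, :] + offsets[None, :, :]).reshape(-1, 3)
thirds = split_thirds(coords)
ap = split_anterior_posterior(coords)
```

Using the principal axis rather than a fixed atlas direction lets each participant's gyrus define its own long axis, which matters because Heschl's gyrus orientation varies across individuals.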

Statistical analyses within ROIs.

There were four experimental block types: unimodal visual, unimodal somatosensory, bimodal visual-somatosensory with visual attention, and bimodal visual-somatosensory with somatosensory attention. These formed a 2 × 2 design with two manipulations: stimulus type (unimodal or bimodal) and attention modality (visual or somatosensory). The dependent measure was percentage signal change in the BOLD signal relative to the fixation baseline, extracted as the mean parameter estimate using Featquery in FSL; because our GLM modeled the bimodal blocks as contributions from the visual and somatosensory modalities plus an interaction term (Table 1), these three terms were summed to obtain percentage signal change in bimodal blocks. Normality of each variable was tested with a Kolmogorov-Smirnov test, and none violated the assumption of normality (p > 0.5). For the ROI analyses, we performed an ANOVA [2 Stimulus Types (Unimodal, Bimodal) × 2 Attention Modes (Visual, Somatosensory) × 2 Hemispheres (Contralateral, Ipsilateral)] with Group (Deaf, Hearing) as a between-subjects factor; Greenhouse-Geisser corrections were applied. The Heschl's gyrus analyses also included a Subregion factor (Rostrolateral, Central, Caudomedial; or Anterior, Posterior). All variables were repeated measures except group, which was a between-subjects factor. The p values for follow-up t test contrasts were multiplied by the number of comparisons (Bonferroni correction) to control type I error.
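Two of the computations above are simple enough to state as code: summing the visual, somatosensory, and interaction terms to obtain bimodal percentage signal change, and the Bonferroni adjustment of follow-up p values. The sketch assumes the three terms are already expressed in percentage signal change; capping adjusted p values at 1 is a common convention, not something stated in the text. Function names are hypothetical.

```python
import numpy as np

def bimodal_psc(visual_psc, somato_psc, interaction_psc):
    """Bimodal-block percentage signal change as the sum of the three model terms.

    Works on scalars or on per-participant arrays of equal shape.
    """
    return np.asarray(visual_psc) + np.asarray(somato_psc) + np.asarray(interaction_psc)

def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capping at 1."""
    p = np.asarray(p_values, dtype=float)
    return np.minimum(p * p.size, 1.0)

# Hypothetical example: three follow-up contrasts.
adjusted = bonferroni([0.01, 0.02, 0.5])
```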

Results

Group analysis

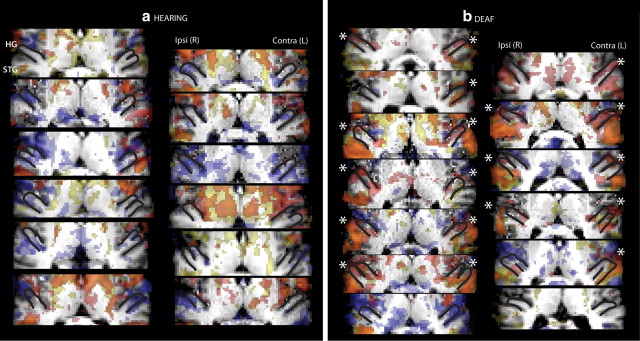

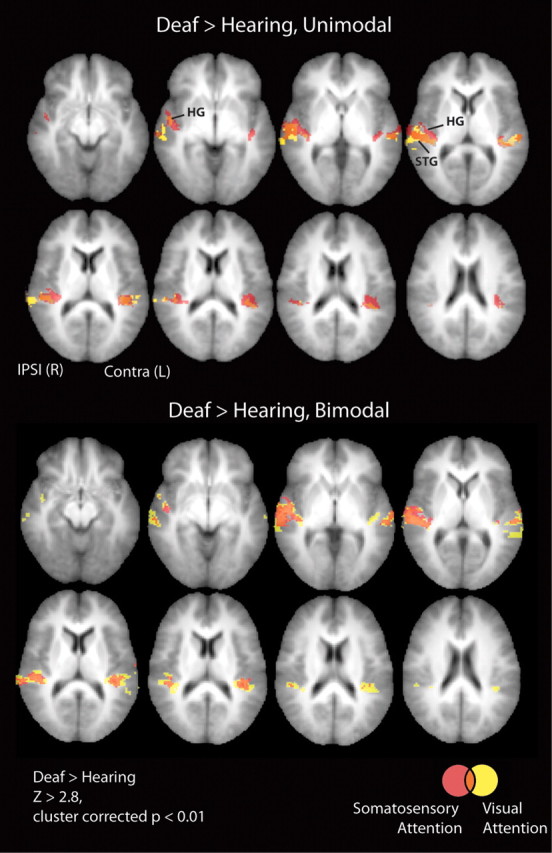

Although group analyses spatially averaged across participants are less anatomically specific than individual ROI analyses, we performed a whole-brain group analysis in standard MNI152 stereotaxic space so that our results could be compared with the existing literature. The statistical threshold was Z > 2.8, cluster corrected at two-tailed p < 0.01. There were no regions in which signal was significantly greater for hearing than for deaf adults, and there were no differences between upper- and lower-field stimulation. Figure 3 illustrates group-level contrasts between deaf and hearing adults for visual and somatosensory unimodal and bimodal responses in all slices with a significant difference between groups. Deaf participants had larger visual and somatosensory responses than hearing participants in superior-temporal regions. Signal in the anterior/rostral aspect of atlas-defined Heschl's gyrus was significantly larger in deaf than in hearing participants for unimodal somatosensory stimulation, whereas unimodal visual stimulation elicited greater signal in deaf than in hearing participants only in the posterior/caudal portion of atlas-defined Heschl's gyrus. The coordinates of local peaks within significant clusters, with probabilistic atlas descriptions of their locations, are summarized in Tables 2 and 3. To illustrate that the group effect does not depend on a few individuals, Figure 4 shows that, in contrast to the majority of deaf participants, individual hearing adults had little positive signal change in Heschl's gyrus, even at a low statistical threshold of Z > 1.65. This qualitative result was quantified by extracting signal change estimates from individual ROIs.

Figure 3.

Whole-brain analysis of group differences. Group-level differences between deaf and hearing adults provided a whole-brain view of the results, which we then analyzed in more detail with ROI analyses. Heschl's gyrus, planum temporale, and superior-temporal cortex are sites where deaf participants had larger signal than hearing participants for the contrasts of stimulation versus resting fixation (see Tables 2 and 3); these sites are shown overlaid on axial slices of the participants' average structural MRI for slices where signal in deaf participants was greater than in hearing participants (Z > 2.8, cluster corrected p < 0.01). Slices inferior and superior to those shown had no significant clusters, and no slice had clusters with significantly greater activation for hearing than deaf participants. Top, Unimodal somatosensory and visual stimulation. Bottom, Bimodal stimulation with somatosensory or visual attention. Red, Somatosensory; yellow, visual; orange, overlap of visual and somatosensory. Signal in the anterior aspect of atlas-defined Heschl's gyrus was significantly larger in deaf than hearing participants only for unimodal somatosensory stimulation, whereas unimodal visual stimulation elicited greater signal in deaf than hearing participants only in the posterior portion of atlas-defined Heschl's gyrus. For bimodal stimulation, both visual and somatosensory attention elicited signal in atlas-defined Heschl's gyrus. Ipsi, Ipsilateral to stimulation; Contra, contralateral to stimulation.

Table 2.

Unimodal stimulation: deaf > hearing

| Stimulation | Hemi | MNI x (mm) | MNI y (mm) | MNI z (mm) | Zᵃ | Harvard-Oxford atlasᵇ | Jülich atlasᵇ |
|---|---|---|---|---|---|---|---|
| Unimodal somatosensory | R | 40 | −32 | 8 | 5.5 | | 14% Prim Aud (TE1.1) |
| | R | 52 | −16 | −6 | 4.86 | 34% Post STG | |
| | R | 34 | −30 | 6 | 4.66 | 17% Insula | 17% Optic radiation |
| | R | 50 | −12 | −6 | 4.54 | 17% Plan Pol | 8% Acoustic radiation |
| | R | 48 | −10 | −12 | 4.42 | 20% Post STG | 12% Insula (Id1) |
| | R | 40 | −28 | 2 | 4.4 | | 20% Insula (Id1) |
| | L | −40 | −40 | 16 | 6.39 | 22% PT | 7% SII/OP1 |
| | L | −46 | −38 | 16 | 4.9 | 42% PT | 44% PFcm |
| | L | −40 | −32 | 10 | 4.83 | 54% PT | 58% Prim Aud (TE1.1) |
| | L | −34 | −30 | 16 | 4.7 | 42% PT | 46% Prim Aud (TE1.1) |
| | L | −40 | −26 | −6 | 4.49 | | 68% Optic radiation |
| | L | −32 | −34 | 16 | 4.45 | 20% PT | 33% Prim Aud (TE1.1) |
| Unimodal visual | R | 52 | −18 | −6 | 4.53 | 30% Post STG | |
| | R | 52 | −14 | −4 | 4.51 | 18% Post STG | 10% Acoustic radiation |
| | R | 42 | −32 | 4 | 4.48 | 6% Post STG | |
| | R | 52 | −10 | −8 | 4.41 | 20% Post STG | |
| | R | 66 | −18 | −4 | 4.33 | 35% Post STG | |
| | R | 56 | −14 | 0 | 4.27 | 11% PT | |
| | L | −40 | −32 | 12 | 4.42 | 63% PT | 62% Prim Aud (TE1.1) |
| | L | −64 | −16 | −4 | 4.42 | 21% Post STG | |
| | L | −40 | −40 | 16 | 4.39 | 22% PT | 7% SII/OP1 |
| | L | −38 | −30 | 4 | 4.36 | | 32% Acoustic radiation |
| | L | −56 | −30 | 6 | 4.27 | 23% PT | 10% SII/OP1 |
| | L | −40 | −38 | 12 | 3.99 | 45% PT | 14% Prim Aud (TE1.1); 14% SII/OP1 |

ᵃSurvives cluster correction (Z > 2.8, p < 0.01).

ᵇMost probable location description listed. Hemi, Hemisphere; L, left; PFcm, inferior parietal; Plan Pol, planum polare; Post STG, posterior superior temporal gyrus; Prim Aud, primary auditory cortex; PT, planum temporale; R, right; SII/OP1, secondary somatosensory/parietal operculum.

Table 3.

Bimodal stimulation: deaf > hearing

| Condition | Hemi | x | y | z | Zᵃ | Harvard-Oxford Atlasᵇ | Jülich Atlasᵇ |
|---|---|---|---|---|---|---|---|
| Bimodal somatosensory attention | R | 62 | −14 | −4 | 4.94 | 18% Post STG | |
| | R | 54 | −14 | 2 | 4.88 | 17% HG | 46% Prim Aud (TE1.0) |
| | R | 60 | −14 | 2 | 4.7 | 20% PT | |
| | R | 38 | −22 | 0 | 4.6 | 20% Insula | 40% Acoustic radiation |
| | R | 42 | −22 | −6 | 4.39 | | 41% Optic radiation |
| | R | 42 | −28 | 10 | 4.31 | 29% PT | 51% Prim Aud (TE1.1) |
| | L | −42 | −38 | 10 | 4.96 | 31% PT | 13% SII/OP1; 13% Prim Aud (TE1.1) |
| | L | −40 | −34 | 10 | 4.58 | 61% PT | 46% Prim Aud (TE1.1) |
| | L | −64 | −14 | 0 | 4.5 | 23% Post STG | |
| | L | −34 | −34 | 10 | 4.07 | 7% PT | 32% Acoustic radiation |
| | L | −66 | −18 | 4 | 3.89 | 38% Post STG | |
| | L | −46 | −32 | 12 | 3.84 | 54% PT | 26% SII/OP1 |
| Bimodal visual attention | R | 36 | −38 | 16 | 4.9 | | 29% Optic radiation |
| | R | 40 | −28 | −4 | 4.37 | | 78% Optic radiation |
| | R | 52 | −12 | −4 | 4.13 | 11% Post STG | 10% Acoustic radiation |
| | R | 36 | −38 | 12 | 4.12 | | 49% Optic radiation |
| | R | 52 | −12 | −8 | 4.09 | 41% HG | 90% Prim Aud (TE1.0) |
| | R | 54 | −26 | 2 | 4.08 | 27% STG | |
| | L | −38 | −32 | 8 | 4.59 | 25% PT | |
| | L | −42 | −24 | −8 | 4.53 | | 39% Optic radiation |
| | L | −30 | −36 | 16 | 4.42 | 47% Post TF | |
| | L | −36 | −32 | 4 | 4.34 | | 29% Optic radiation |
| | L | −32 | −32 | 8 | 4.27 | | 17% Optic radiation |
| | L | −34 | −28 | 4 | 4.16 | 7% Insula | 23% Acoustic radiation |

ᵃSurvives cluster correction (Z > 2.8, p < 0.01).

ᵇMost probable location description listed. Hemi, Hemisphere; HG, Heschl's gyrus; L, left; PFcm, inferior parietal; Plan Pol, planum polare; Post STG, posterior superior temporal gyrus; Prim Aud, primary auditory cortex; PT, planum temporale; R, right; SII/OP1, secondary somatosensory/parietal operculum; STG, superior temporal gyrus.

Figure 4.

Task-related signal in individual hearing and deaf participants. a, b, Each panel shows the response for stimulation relative to resting fixation overlaid on each individual's brain for hearing participants (a) and deaf participants (b). Heschl's gyrus (HG) outlined in black shows the characteristic anatomical variability across individuals with single, double, or partial duplication of HG. Red voxels represent regions where somatosensory task-related signal was greater than fixation (Z > 1.65), yellow voxels represent regions where the visual task-related signal was greater than fixation (Z > 1.65), and orange voxels represent areas of overlap between vision and somatosensation. Blue voxels represent areas where signal was lower during visual or somatosensory stimulation than at fixation (Z < −1.65). Note that even at this liberal threshold, task-positive voxels spare Heschl's gyrus in hearing participants but overlap HG in the majority of deaf participants (*). This qualitative result was quantified by extracting signal change estimates from ROIs (see Figs. 5–7). Ipsi, Ipsilateral to stimulation; Contra, contralateral to stimulation.
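The Z thresholds quoted in the figure captions (Z > 1.65 for the individual maps, Z > 2.8 for the voxel threshold of the group maps) are easier to interpret as tail probabilities. The minimal Python sketch below assumes one-tailed tests against a standard normal distribution, which is an assumption on our part since only Z values are reported; it uses the standard library's `statistics.NormalDist`. The cluster-level correction (p < 0.01) is a separate step that this sketch does not model.

```python
from statistics import NormalDist


def one_sided_p(z: float) -> float:
    """Upper-tail probability that a standard normal variate exceeds z."""
    return 1.0 - NormalDist().cdf(z)


# Liberal display threshold used for the individual maps (Z > 1.65).
display_p = one_sided_p(1.65)
# Voxel-level threshold applied before cluster correction (Z > 2.8).
cluster_forming_p = one_sided_p(2.8)

print(f"Z > 1.65 -> one-tailed p = {display_p:.4f}")
print(f"Z > 2.8  -> one-tailed p = {cluster_forming_p:.4f}")
```

Under this assumption, Z > 1.65 corresponds to an uncorrected one-tailed p of roughly 0.05, and Z > 2.8 to roughly 0.003, which is why the former is described as liberal.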

Heschl's Gyrus ROIs

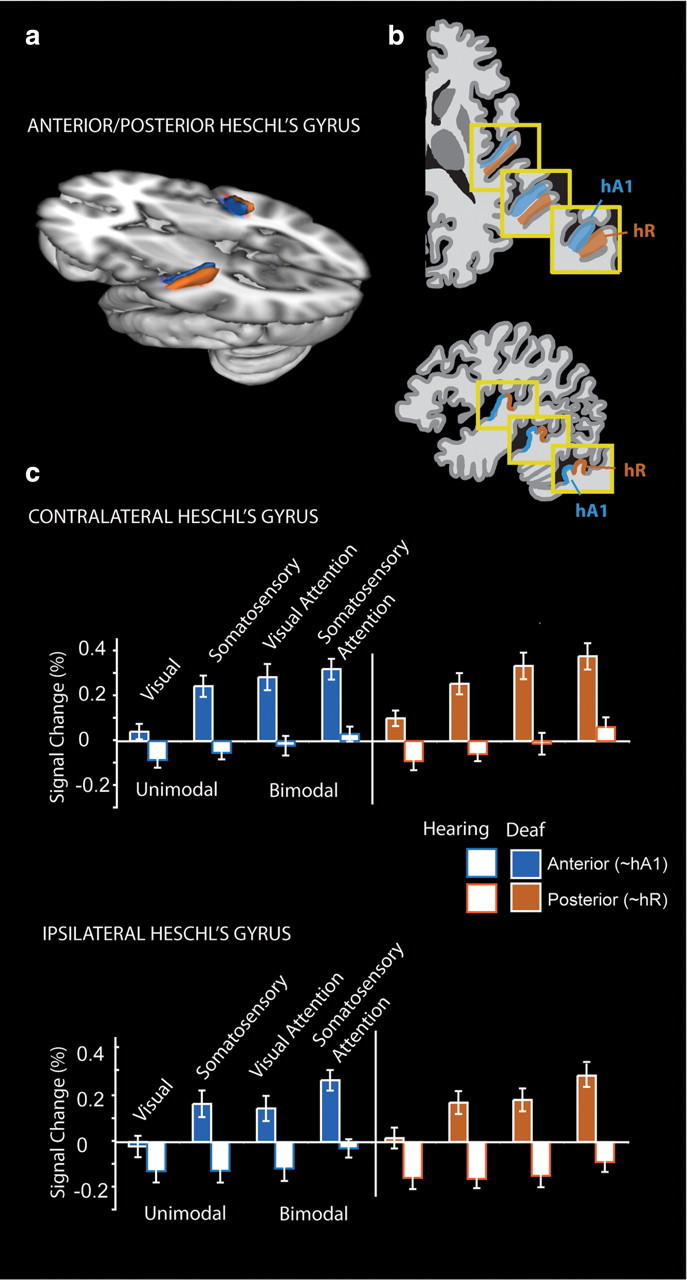

Anterior and posterior subregions

Figure 5 illustrates the signal change in the anterior and posterior subregions of Heschl's gyrus for deaf and hearing participants. According to a recent tonotopic fMRI study (Da Costa et al., 2011), the anterior aspect of Heschl's gyrus likely corresponds mainly to human A1, while the posterior aspect corresponds to human area R. Both A1 and R are core auditory regions in the macaque monkey (Hackett, 2011). Deaf participants had a larger response across both regions (Main Effect of Group: F(1,23) = 29.9, p < 0.001). The difference between deaf and hearing participants was larger for somatosensory and bimodal stimuli than for visual stimuli (Group × Stimulus: F(1,23) = 5.7, p = 0.025; Group × Attention: F(1,23) = 7.5, p = 0.012), and group differences did not interact with hemisphere (p > 0.10). Group differences did interact with the anterior/posterior subdivision (F(1,23) = 4.6, p = 0.042), and because hemisphere tended to interact with subregion (F(1,23) = 3.8, p = 0.06), we performed follow-up t test contrasts between deaf and hearing participants in the anterior (Ant) and posterior (Post) subregions, separately in the contralateral (Contra) and ipsilateral (Ipsi) hemispheres, for a total of 16 contrasts (four conditions × two subregions × two hemispheres), corrected for multiple comparisons.
Deaf participants had larger responses than hearing participants in each subregion for each condition, with the exception of unimodal vision, which differed significantly between groups only in the contralateral posterior subregion [Unimodal Somatosensory (Ant Contra: t(23) = 5.2, p < 0.01; Post Contra: t(23) = 5.4, p < 0.01; Ant Ipsi: t(23) = 3.7, p = 0.017; Post Ipsi: t(23) = 5.1, p < 0.01), Bimodal Somatosensory Attention (Ant Contra: t(23) = 5.0, p < 0.01; Post Contra: t(23) = 4.3, p < 0.01; Ant Ipsi: t(23) = 4.9, p < 0.01; Post Ipsi: t(23) = 5.7, p < 0.01), Bimodal Visual Attention (Ant Contra: t(23) = 4.1, p < 0.01; Post Contra: t(23) = 4.5, p < 0.01; Ant Ipsi: t(23) = 3.4, p = 0.04; Post Ipsi: t(23) = 4.7, p < 0.01), and Unimodal Visual Stimulation (Ant Contra: t(23) = 2.6, p = 0.26; Post Contra: t(23) = 3.6, p = 0.023; Ant Ipsi: t(23) = 1.6, p = 1.0; Post Ipsi: t(23) = 2.6, p = 0.25)].
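The corrected p-values reported in this paragraph appear consistent with a Bonferroni adjustment over the 16 contrasts, capped at 1: for example, t(23) = 2.6 has an uncorrected two-sided p of about 0.016, and 16 × 0.016 ≈ 0.26. The sketch below reproduces that arithmetic in plain Python, assuming Bonferroni was the correction used (the text says only "corrected for multiple comparisons"). The Simpson's-rule integration of the t density is an illustrative stand-in for a library routine such as `scipy.stats.t.sf`.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p = 1 - 2 * (area under the pdf from 0 to |t|), via Simpson's rule.

    steps must be even for the composite Simpson's rule.
    """
    a, b = 0.0, abs(t)
    h = (b - a) / steps
    total = t_pdf(a, df) + t_pdf(b, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(a + i * h, df)
    area = total * h / 3
    return max(0.0, 1.0 - 2.0 * area)


def bonferroni(p: float, n_tests: int) -> float:
    """Bonferroni-adjusted p-value, capped at 1."""
    return min(1.0, p * n_tests)


# Unimodal visual group contrasts from the text: t(23), 16 contrasts in total.
for label, t in [("Ant Contra", 2.6), ("Post Contra", 3.6),
                 ("Ant Ipsi", 1.6), ("Post Ipsi", 2.6)]:
    p = two_sided_p(t, df=23)
    print(f"{label}: uncorrected p = {p:.4f}, corrected p = {bonferroni(p, 16):.3f}")
```

Because the reported t values are rounded to one decimal, the recomputed corrected values match the reported ones only approximately (for example, about 0.024 versus the reported 0.023 for Post Contra).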

Figure 5.

Anterior to posterior subdivisions of Heschl's gyrus. a, Anatomical Heschl's gyrus ROIs drawn on individual structural brain images were parcellated along the first principal component of voxel centers into an anterior and a posterior subdivision. The divisions are summarized here as a three-dimensional representation at 30% overlap between participants. b, Da Costa et al. (2011) defined human primary auditory cortical areas A1 and R using tonotopy in hearing adults, shown in diagram form here in three examples of anatomical variation. c, Signal change relative to the resting fixation baseline was extracted from each participant's Heschl's gyrus subregions, ipsilateral and contralateral to stimulation, for each block type. Deaf participants had larger responses than hearing participants across both regions, and the difference was larger for somatosensory and bimodal stimuli than for visual stimuli. Error bars represent ± SEM.
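The caption describes splitting each participant's Heschl's gyrus ROI along the first principal component of its voxel centers. A minimal plain-Python sketch of that idea follows. The equal-count binning, the power-iteration estimate of the principal axis, the orientation of that axis toward +y (anterior in MNI space), and the function names `first_principal_axis` and `parcellate` are all assumptions for illustration, not details given in the text. With k = 2 the sketch yields posterior and anterior halves; k = 3 shows one plausible way to obtain three caudal-to-rostral divisions like those in Figure 6, although how those were defined is not specified here.

```python
import math


def first_principal_axis(coords, iters=200):
    """Mean and unit first principal axis of 3-D voxel centers (power iteration)."""
    n = len(coords)
    mean = [sum(c[i] for c in coords) / n for i in range(3)]
    centered = [[c[i] - mean[i] for i in range(3)] for c in coords]
    cov = [[sum(p[i] * p[j] for p in centered) / n for j in range(3)]
           for i in range(3)]
    v = [1.0, 1.0, 1.0]  # assumed not orthogonal to the leading eigenvector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(3)) for i in range(3)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # degenerate ROI: all voxel centers identical
            break
        v = [x / norm for x in w]
    if v[1] < 0:  # orient the axis so that higher scores are more anterior (+y)
        v = [-x for x in v]
    return mean, v


def parcellate(coords, k=2):
    """Label voxels 0..k-1 by equal-count bins along the first principal axis.

    Hypothetical convention: 0 is the most posterior bin, k-1 the most anterior.
    """
    mean, axis = first_principal_axis(coords)
    scores = [sum((c[i] - mean[i]) * axis[i] for i in range(3)) for c in coords]
    order = sorted(range(len(coords)), key=lambda idx: scores[idx])
    labels = [0] * len(coords)
    for rank, idx in enumerate(order):
        labels[idx] = rank * k // len(coords)
    return labels


# Hypothetical toy ROI: ten voxel centers (mm) along an oblique line.
toy_roi = [(40.0 + i, -30.0 + 2.0 * i, 6.0) for i in range(10)]
print(parcellate(toy_roi, k=2))
print(parcellate(toy_roi, k=3))
```

Projecting onto the principal axis, rather than slicing at a fixed y coordinate, lets the split follow each individual gyrus's oblique orientation, which matters given the anatomical variability shown in Figure 4.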

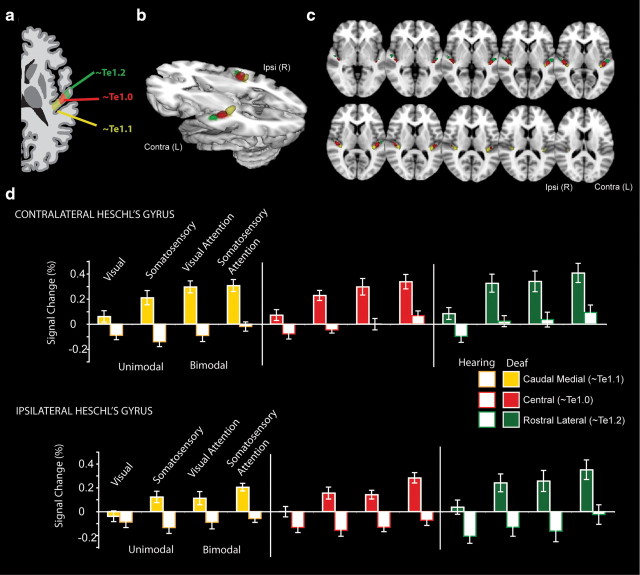

Caudal to rostral subregions

Figure 6 illustrates the signal change in three Heschl's gyrus subregions (caudomedial, central, and rostrolateral) for deaf and hearing participants; these subregions approximate cytoarchitectonic areas Te1.1, Te1.0, and Te1.2 (Morosan et al., 2001). As shown in Figure 6d, the deaf had a larger response across all Heschl's gyrus regions (Group main effect: F(1,23) = 29.3, p < 0.001). The difference between deaf and hearing adults was larger for somatosensory and bimodal blocks than for visual blocks (Group × Stimulus: F(1,23) = 5.34, p = 0.03; Group × Attention: F(1,23) = 6.98, p = 0.015), but group did not interact with subregion or hemisphere (p > 0.10). There was a main effect of Heschl's gyrus subregion, with the largest signal, on average, in the rostrolateral region (Region Effect: F(2,46) = 4.39, p = 0.018). Bimodal stimuli elicited a larger response than unimodal stimuli across both deaf and hearing groups (Main Effect of Stimulus Type: F(1,23) = 36.8, p < 0.001). Since group status did not interact with subregion or hemisphere, we averaged across Heschl's gyrus regions to perform 10 follow-up t test contrasts, corrected for multiple comparisons. Deaf adults had larger responses than hearing adults to unimodal somatosensory stimulation (t(23) = 5.3, p < 0.01), bimodal stimulation with visual attention (t(23) = 4.6, p < 0.01), and bimodal stimulation with somatosensory attention (t(23) = 5.5, p < 0.01), with a trend toward larger responses to unimodal visual stimulation (t(23) = 2.9, p = 0.08). Comparing conditions within the deaf participants, we found that somatosensory stimuli elicited larger activations than visual stimuli (t(12) = 4.76, p < 0.01) and that bimodal visual signal was larger than unimodal visual signal (t(12) = 4.86, p < 0.01), but the contrast between bimodal somatosensory and unimodal somatosensory signal was not significant (t(12) = 2.3, p = 0.4).
In the hearing participants, bimodal stimuli with somatosensory attention elicited larger signal than unimodal somatosensory stimuli (t(11) = 3.6, p = 0.04), but there was no difference between unimodal visual and unimodal somatosensory stimuli (t(11) = 0.44, p = 1.0) or between unimodal visual stimuli and bimodal stimuli with visual attention (t(11) = 1.19, p = 1.0).

Figure 6.

Caudal-to-rostral subdivisions of anatomically defined Heschl's gyrus. a, Human primary auditory cortical areas Te1.2, Te1.0, and Te1.1 along the rostrolateral to caudomedial extent of Heschl's gyrus, shown in diagram form (Morosan et al., 2001). b, To approximate these regions, anatomical Heschl's gyrus ROIs drawn on individual structural brain images were parcellated into three rostral-to-caudal divisions, illustrated here at 30% overlap between participants as a three-dimensional representation. c, Axial slices illustrating subdivisions at 30% overlap across participants. d, Signal change relative to the resting fixation baseline was extracted from each individual participant's Heschl's gyrus subregion, contralateral (Contra) and ipsilateral (Ipsi) to stimulation, for each block type. The deaf had a larger somatosensory and bimodal response across all Heschl's gyrus regions, and the difference between deaf and hearing adults was larger for somatosensory and bimodal stimuli than for visual stimuli. Error bars represent ± SEM.

Superior-temporal ROI

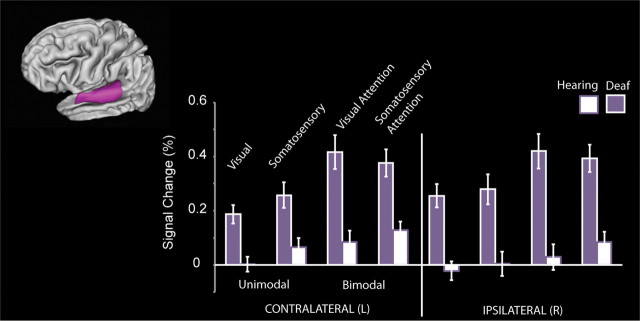

For comparison to Heschl's gyrus and to complement the group analysis, we performed a superior-temporal ROI analysis (Fig. 7). The deaf had a larger superior-temporal response overall (Group main effect: F(1,23) = 24.7, p < 0.001), and the increase in signal for bimodal versus unimodal stimulation was larger in deaf than in hearing participants (Group × Stimulus: F(1,23) = 6.923, p = 0.015). There were no significant interactions between group and hemisphere or attention modality. Collapsing across hemispheres, we performed 10 follow-up contrasts corrected for multiple comparisons. Deaf participants had significantly greater superior-temporal signal for each condition (Unimodal Visual, t(23) = 5.0, p < 0.01; Unimodal Somatosensory, t(23) = 3.7, p < 0.01; Bimodal Visual Attention, t(23) = 4.8, p < 0.01; Bimodal Somatosensory Attention, t(23) = 4.8, p < 0.01). Comparing conditions within the deaf participants, we found that the contrast between bimodal visual and unimodal visual stimuli was significant (t(12) = 4.68, p < 0.01) and that the contrast between unimodal somatosensory and bimodal stimuli tended toward significance (t(12) = 3.1, p = 0.09). In the hearing participants, the contrast between unimodal visual and bimodal stimuli was not significant (t(11) = 2.3, p > 0.10), but the contrast between unimodal somatosensory and bimodal stimuli was significant (t(11) = 4.1, p = 0.02). Importantly, within each group, there was no difference between unimodal visual and unimodal somatosensory signal in the superior-temporal region for either the deaf (t(12) = 2.0, p > 0.65) or the hearing (t(11) = 1.72, p = 1.0) participants.

Figure 7.

Superior-temporal ROI. Signal change relative to the resting fixation baseline was extracted from the superior-temporal region for deaf and hearing participants. The deaf participants had larger signal than hearing participants for each condition and had a larger difference between unimodal and bimodal signal. In contrast to Heschl's gyrus, where somatosensory signal was greater than visual signal in the deaf participants, in the superior-temporal region there was no significant difference between unimodal visual and unimodal somatosensory signal change. Error bars represent ± SEM.

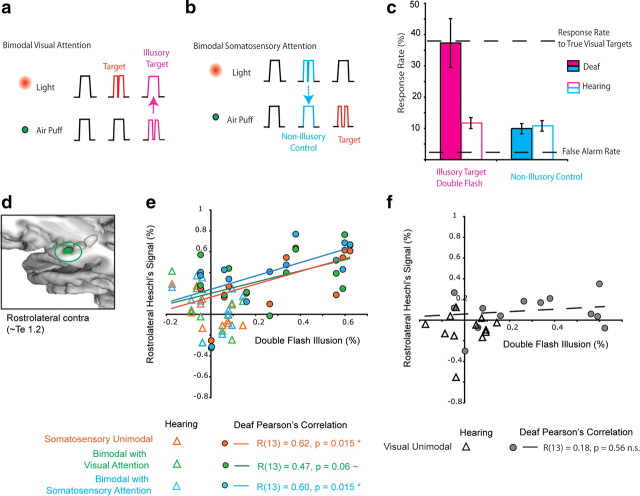

Visual-somatosensory double-flash illusion

A central question is whether the altered organization of primary auditory cortex in the deaf is related to altered perception. We investigated this question with a somatosensory variant of the double-flash illusion (Fig. 8). Behavioral measures are reported in Tables 4 and 5. Overall hit rates were higher for somatosensory attention (57% ± 3% SEM) than for visual attention (37% ± 5% SEM; F(1,23) = 52.3, p < 0.001) and did not differ by or interact with group. False alarm rates to standard stimuli were low but were significantly higher for unimodal (4.0% ± 0.2% SEM) than for bimodal (3.2% ± 0.3% SEM) blocks (F(1,23) = 10.8, p = 0.003). There tended to be fewer false alarms in the deaf group for somatosensory stimuli (Group × Attention modality interaction: F(1,23) = 4.2, p = 0.051). The hit and false alarm rates indicated that target detection was well above the perceptual threshold and that the response criterion was conservative [somatosensory: d-prime 2.1, criterion (C) 0.88; visual: d-prime 1.6, C 1.1]. In the main comparison, illustrated in Figure 8c, deaf and hearing participants responded differently to the illusion and control stimuli (Condition × Group Interaction, F(1,21) = 9.08, p = 0.007). Deaf participants responded on average to 37% (± 7.8% SEM) of the illusory double-flash stimuli, similar to their response rate for true visual targets in bimodal blocks (36% ± 5% SEM). In contrast, the responses of hearing participants indicate that they were not susceptible to the illusion: hearing participants responded to only 12% (± 1.8% SEM) of the illusory double-flash stimuli, while their response rate to true visual targets was 39% (± 4% SEM). In the nonillusory control condition, deaf adults responded to 9.8% (± 1.6%) and hearing adults to 10.8% (± 1.7%) of these stimuli.
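The d-prime and criterion values above come from standard signal detection theory. A minimal sketch under the equal-variance Gaussian model, assuming that is the model used (the text does not say), computes d′ = z(H) − z(FA) and C = −(z(H) + z(FA))/2 with `statistics.NormalDist().inv_cdf`; a positive C indicates a conservative bias toward withholding a response. The inputs are illustrative: the overall somatosensory hit rate from the text (57%) and a false-alarm rate of 3.35%, taken as the unweighted mean of the four somatosensory cells in Table 5. These group-level inputs give d′ ≈ 2.0 and C ≈ 0.83, close to but not identical with the reported 2.1 and 0.88, which may have been computed per participant and then averaged; the exact aggregation is not stated here.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate: float, fa_rate: float):
    """Equal-variance Gaussian SDT: d' = z(H) - z(FA), C = -(z(H) + z(FA)) / 2.

    Rates must lie strictly between 0 and 1 (inv_cdf is undefined at 0 and 1).
    """
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(fa_rate)
    return z_hit - z_fa, -(z_hit + z_fa) / 2


# Illustrative inputs: 57% somatosensory hit rate (text) and a 3.35%
# false-alarm rate (unweighted mean of the somatosensory cells in Table 5).
d, c = dprime_and_criterion(0.57, 0.0335)
print(f"d' = {d:.2f}, C = {c:.2f}")
```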

Figure 8.

Somatosensory-induced double-flash illusion. a, In bimodal blocks, lights and puffs were presented simultaneously. Targets were the double stimuli in the attended modality (double flash for visual attention, double puff for somatosensory attention), while double stimuli in the unattended modality were to be ignored. In Visual Attention blocks, a double light was the target whether paired with a single or a double puff, but if participants were susceptible to a somatosensory-induced double-flash illusion, a single light paired with a double puff (illustrated in magenta) appeared to flash twice, eliciting a button press to the illusory target. b, In Somatosensory Attention blocks, a double puff was the target. A single somatosensory stimulus paired with a double visual stimulus does not generally elicit an illusory double-touch sensation. The paired single-puff and double-light stimulus (illustrated in cyan) is perceived as a single puff and is considered a nonillusory control stimulus. c, The ignored double puff in the visual attention condition (magenta) elicited a double-flash illusion in deaf but not hearing participants, while the double light in the somatosensory condition (cyan) did not elicit an illusory double puff in either group. In the deaf, average response rates (± SEM) to the illusory stimuli were equal to their response rates to true visual targets. d, The rostrolateral subregion of Heschl's gyrus is shown highlighted in green. e, Signal change in the rostrolateral Heschl's gyrus for each block containing a somatosensory stimulus predicts the strength of the illusion in deaf adults. Pearson's correlations were calculated for the deaf participants only, separately for the ROI measures from each block containing a somatosensory stimulus. The relationship between signal change and the illusion was similar in all three blocks.
All voxels in the anatomically defined region were included, not only those voxels thresholded for statistical significance. f, Signal change in the rostrolateral Heschl's gyrus for unimodal visual stimulation was not related to the strength of the illusion in deaf adults. Contra, Contralateral to stimulation.

Table 4.

Mean response rates for true targets and the illusion stimuli by group

| Group | Unimodal hit rate, visual | Unimodal hit rate, somatosensory | Bimodal hit rate, visual attention | Bimodal hit rate, somatosensory attention | Double-flash illusion, illusion | Double-flash illusion, nonillusory control |
|---|---|---|---|---|---|---|
| Deaf (%) | 36 (4.7) | 58 (2.7) | 36 (5.0) | 59 (3.3) | 37 (7.8) | 9.8 (1.6) |
| Hearing (%) | 44 (4.3) | 56 (1.7) | 39 (5.0) | 55 (3.8) | 12 (1.8) | 10.8 (1.7) |

SEM is in parentheses. There were no significant group differences in hit rates to true unimodal or bimodal targets; response rates to the illusion condition were larger in the deaf group.

Table 5.

False alarms, responses to standards, for each condition by group

| Group | Unimodal false alarms, visual | Unimodal false alarms, somatosensory | Bimodal false alarms, visual attention | Bimodal false alarms, somatosensory attention |
|---|---|---|---|---|
| Deaf (%) | 4.1 (0.3) | 3.4 (0.5) | 3.5 (0.6) | 2.3 (0.3) |
| Hearing (%) | 3.8 (0.3) | 4.5 (0.5) | 3.6 (0.5) | 3.2 (0.3) |

SEM is in parentheses.

We expressed the illusion as the difference between the response rates to the illusory target and to the nonillusory control, and then used Pearson's correlation to test whether this metric was predicted by signal change in the Heschl's gyrus subregions and the superior-temporal regions. Signal change in contralateral rostrolateral Heschl's gyrus predicted the strength of the illusion in deaf participants for the somatosensory and bimodal blocks, but not for the unimodal visual block (Fig. 8e,f). In other words, deaf participants whose Heschl's gyrus was more responsive in blocks with somatosensory stimulation (either unimodal or bimodal) had a stronger illusion. Correlations in other ROIs were not significant (p > 0.05).
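The illusion metric and the brain-behavior test described above can be sketched in a few lines of plain Python. Applied to the group means in Table 4, the metric is 37 − 9.8 = 27.2 percentage points for the deaf group and 12 − 10.8 = 1.2 for the hearing group. The per-participant arrays below are hypothetical placeholders, not study data, and the significance test of r is omitted; the sketch only shows how a Pearson correlation between ROI signal change and illusion strength would be computed.

```python
import math


def illusion_strength(illusion_rate: float, control_rate: float) -> float:
    """Illusion metric: response rate to illusory targets minus nonillusory control."""
    return illusion_rate - control_rate


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Group means from Table 4 (percent responses).
print(round(illusion_strength(37, 9.8), 1))   # deaf
print(round(illusion_strength(12, 10.8), 1))  # hearing

# HYPOTHETICAL per-participant values, for illustration only.
signal_change = [0.1, 0.3, 0.2, 0.5, 0.4]   # % signal change in an ROI
strength = [5.0, 20.0, 12.0, 41.0, 30.0]    # illusion metric per participant
print(round(pearson_r(signal_change, strength), 3))
```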

Discussion

We demonstrated that, as measured by fMRI signal change, Heschl's gyrus, the site of human primary auditory cortex, responded to unimodal somatosensory stimuli in congenitally deaf adults but not in hearing adults. Bimodal stimuli elicited a larger response than unimodal stimuli in Heschl's gyrus in both deaf and hearing adults, but only in deaf adults did this response represent a robust increase from the fixation baseline. In deaf Heschl's gyrus, visual responses were weaker than responses to somatosensory or bimodal stimulation. For unimodal vision, group differences were significant only in the contralateral posterior subregion of Heschl's gyrus, a likely homolog of primate area R (Da Costa et al., 2011). In contrast to Heschl's gyrus, the deaf superior-temporal region (auditory association and multisensory cortex) showed no difference between unimodal somatosensation and vision. A key finding was that deaf and hearing adults differed markedly in perception: deaf, but not hearing, adults were susceptible to an illusory percept of a double flash of light when a single flash was paired with a double touch to the face. In the deaf adults, the strength of the illusion was predicted by signal change in the contralateral rostrolateral subregion of Heschl's gyrus (approximately Te1.2; Morosan et al., 2001).

A limitation of previous studies of cross-modal neuroplasticity of auditory cortex in deaf humans is the spatial resolution afforded by the techniques that were used. Individual Heschl's gyri vary in morphology and position (Morosan et al., 2001; Da Costa et al., 2011), and analyses that spatially average across individual brains can misattribute activity from the planum temporale or from somatosensory regions of the parietal operculum to Heschl's gyrus, or can miss true activity in Heschl's gyrus because of low spatial concordance across participants. To our knowledge, no previous study of congenitally deaf humans has used an ROI approach to identify Heschl's gyrus or has subdivided this region to test whether cross-modal neuroplasticity differs among subregions approximating human primary auditory cortical areas. In past fMRI studies of deaf adults, such as Finney et al. (2001), standard practice was to localize primary auditory cortex with Talairach coordinates, which come from an atlas based on a single elderly brain. As we illustrate (Fig. 2), the Talairach coordinates for primary auditory cortex on the right are more posterior than those of recent probabilistic atlases (Eickhoff et al., 2007). Even so, most studies of altered visual organization in deaf participants report cross-modal reorganization caudal to, rather than overlapping, the posterior aspect of Heschl's gyrus (Bavelier et al., 2006).

Our results suggest that cross-modal neuroplasticity in deaf primary auditory cortex is greater for the somatosensory than the visual modality. This could be explained by stimulus intensity differences between visual and somatosensory stimuli, but this is unlikely in our experiment. Although somatosensory targets were more readily distinguished from somatosensory standards than visual targets from visual standards, the standard stimuli (80% of the trials) were easily detected for both modalities, and in the superior-temporal region there was no significant difference between visual and somatosensory signal amplitudes. Another possible explanation for the disparity between modalities in primary auditory cortex could be that a different stimulus type, such as peripheral visual motion, is more suited to elicit responses in deaf primary auditory cortex. Future studies with more diverse visual stimulation and parametric manipulation of stimulus strength with anatomical ROIs are needed to definitively address this issue.

Our results demonstrating robust responses in Heschl's gyrus for the somatosensory modality are consistent with the two previous reports of somatosensory responses in the auditory cortex of deaf humans. Auer et al. (2007) reported somatosensory activation overlapping atlas-defined Heschl's gyrus in a deaf group relative to fixation, but they did not perform an ROI analysis, which makes it difficult to determine whether the responses were in primary auditory cortex. A magnetoencephalography (MEG) study using source modeling in a single elderly deaf person reported that somatosensory responses were accounted for by a source in auditory cortex (Levänen et al., 1998), although the spatial precision of MEG is lower than that of fMRI, which makes precise localization problematic. However, in a different MEG and fMRI study of a 28-year-old congenitally deaf man, no visual or somatosensory responses were found in auditory cortex (Hickok et al., 1997). In our sample of 13 congenitally deaf adults, there were individual differences in the cross-modal responses in deaf auditory cortex, and these differences were correlated with altered perception.

An interesting point to consider is whether group differences are influenced by qualitatively different experiences of background sounds in an MRI experiment. For example, the negative response of Heschl's gyrus in hearing participants could be elicited by overt attention to MRI scanner sounds during resting fixation. However, this interpretation is not supported by results in the superior-temporal region, which were positive or near zero for hearing participants. It seems unlikely that this auditory and multisensory region is less responsive with overt attention to the MRI scanner noise than primary auditory cortex. In addition, group differences in the resting fixation condition do not account for differential signal change between conditions. Unfortunately, MRI background sounds are inherent to the MRI technique and cannot be matched between deaf and hearing groups.

We found that the deaf participants perceived a somatosensory-induced double-flash illusion while hearing participants did not. The absence of any illusion in hearing participants is surprising in light of previous reports that have shown that this double-flash illusion may be observed for either auditory or somatosensory stimulation in hearing adults (Violentyev et al., 2005; Lange et al., 2011). This may be due to stimulus differences; our somatosensory stimuli were air puffs to the face and were spatially coregistered with the lights in the far visual periphery while previous studies used vibrotactile stimulation to the fingertips. We positioned the lights in the far periphery because previous studies have shown that visual enhancements in the deaf are strongest in the visual periphery (Neville and Lawson, 1987; Bavelier et al., 2000) and this factor, combined with increased deaf tactile sensitivity (Levänen and Hamdorf, 2001), may be what led to a robust illusory percept only in the deaf participants. Across deaf individuals, there was variability in the susceptibility to the illusion, and the response in rostrolateral Heschl's gyrus predicted the strength of the illusion in the deaf participants. This finding is consistent with our hypothesis that cross-modal neuroplasticity in primary auditory cortex contributes to altered perceptions in deaf people.

Notably, although somatosensory responses were robust in each subregion of Heschl's gyrus, it was the rostrolateral region (Te1.2) that predicted the strength of the somatosensory-induced double-flash illusion and had the largest overall signal change. The functional specialization of different regions of human primary auditory cortex is not currently known, but our results suggest that altered cross-modal organization of primary auditory cortex in deaf people is not uniform. In addition, while visual responses in deaf Heschl's gyrus were weak compared to somatosensory and bimodal responses, they were equal to somatosensory responses in the superior-temporal region. These findings are interesting in light of evidence from animal studies of visual-somatosensory cross-modal plasticity in auditory cortex. In cats, there are dissociations between auditory cortical regions; a core auditory area, the anterior auditory field (AAF), in cats responds to somatosensory stimulation (Meredith and Lomber, 2011) and shows different cross-modal responses than the auditory field of the anterior ectosylvian sulcus (fAES) (Meredith et al., 2011). In addition, multisensory visual-somatosensory neurons are prevalent in the primary auditory cortex of congenitally deaf mice (Hunt et al., 2006). Research addressing which human auditory areas are likely homologs of regions in animal models would allow for more direct comparisons across models.

An important question is how altered organization of the auditory cortex arises. One possibility is the developmental stabilization of cross-modal connections that occur even in typically developing individuals. Primate studies indicate that multisensory interactions between hearing and touch occur in the auditory cortex of hearing individuals (Kayser et al., 2005), with some very early somatosensory responses to median nerve electrical stimulation in primary auditory cortex (Lakatos et al., 2007). In addition, visual stimuli influence auditory cortex (Bizley et al., 2007; Kayser et al., 2007), and multisensory interactions occur in low-level auditory cortex (Musacchia and Schroeder, 2009). In our study, even the hearing participants had increased signal in Heschl's gyrus and superior-temporal cortex for bimodal stimuli relative to unimodal stimuli. If cross-modal connections are typical in the auditory cortex of hearing individuals, it is reasonable to speculate that these connections increase in number and strength when acoustic input is reduced. The receptive fields for these cross-modal inputs into deaf auditory cortex may be large and extend bilaterally (Meredith and Lomber, 2011; Meredith et al., 2011).

Future research using methods sensitive to the timing of multisensory responses in auditory cortex, such as EEG and MEG, may elucidate whether these signals occur early in the sensory processing hierarchy or are due to later feedback from other cortical areas (e.g., subcortical connectivity, corticocortical feedback, or feedforward pathways between primary cortices). Future studies using event-related designs or block designs with alternating rest (Kayser et al., 2005, 2007) could address whether time-series differ between regions and conditions. Another important question for future research is whether altered organization and altered perception have a sensitive period leading to different plasticity for individuals who become deaf later in childhood or adulthood and how it is affected by later reintroduction of auditory nerve input through cochlear implantation; for example, deafness in adulthood induces somatosensory conversion of ferret auditory cortex (Allman et al., 2009). It is important to understand how the age of onset of deafness, sign language learning, and degree of deafness influence cross-modal neuroplasticity of auditory cortex and perceptual changes such as the somatosensory-induced double-flash illusion. Together, our results highlight the central role of experiential factors in driving brain development and function, even at the level of primary sensory cortices, and have practical implications for educational and rehabilitative programs for both typically and nontypically developing individuals.

Footnotes

This research was supported by the National Institute on Deafness and Other Communication Disorders Grant R01 DC000128 (H.J.N.). We thank the volunteers who participated and our American Sign Language interpreter Candice Kingrey for her important assistance. Scott Frey, Jolinda Smith, Bill Troyer, Tara Armstrong, and Tony Mecum provided technical contributions to the experimental apparatus. Joseph Wekselblatt assisted with data analysis. Scott Watrous and Tara Armstrong assisted with data collection.

References

- Allman BL, Keniston LP, Meredith MA. Adult deafness induces somatosensory conversion of ferret auditory cortex. Proc Natl Acad Sci U S A. 2009;106:5925–5930. doi: 10.1073/pnas.0809483106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Auer ET, Jr, Bernstein LE, Sungkarat W, Singh M. Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport. 2007;18:645–648. doi: 10.1097/WNR.0b013e3280d943b9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bavelier D, Neville HJ. Cross-modal plasticity: where and how? Nat Rev Neurosci. 2002;3:443–452. doi: 10.1038/nrn848. [DOI] [PubMed] [Google Scholar]

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Liu G, Neville H. Visual attention to the periphery is enhanced in congenitally deaf individuals. J Neurosci. 2000;20:RC93. doi: 10.1523/JNEUROSCI.20-17-j0001.2000. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bavelier D, Dye MW, Hauser PC. Do deaf individuals see better? Trends Cogn Sci. 2006;10:512–518. doi: 10.1016/j.tics.2006.09.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in FMRI. Neuroimage. 2003;20:1052–1063. doi: 10.1016/S1053-8119(03)00435-X. [DOI] [PubMed] [Google Scholar]

- Bizley JK, Nodal FR, Bajo VM, Nelken I, King AJ. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb Cortex. 2007;17:2172–2189. doi: 10.1093/cercor/bhl128. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bors D, Stokes T. Raven's advanced progressive matrices: norms for first-year university students and the development of a short form. Educ Psychol Meas. 1998;58:382–398. [Google Scholar]

- Da Costa S, van der Zwaag W, Marques JP, Frackowiak RS, Clarke S, Saenz M. Human primary auditory cortex follows the shape of Heschl's gyrus. J Neurosci. 2011;31:14067–14075. doi: 10.1523/JNEUROSCI.2000-11.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Eickhoff SB, Heim S, Zilles K, Amunts K. Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. Neuroimage. 2006;32:570–582. doi: 10.1016/j.neuroimage.2006.04.204.

- Eickhoff SB, Paus T, Caspers S, Grosbras MH, Evans AC, Zilles K, Amunts K. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage. 2007;36:511–521. doi: 10.1016/j.neuroimage.2007.03.060.

- Finney EM, Fine I, Dobkins KR. Visual stimuli activate auditory cortex in the deaf. Nat Neurosci. 2001;4:1171–1173. doi: 10.1038/nn763.

- Hackett TA. Information flow in the auditory cortical network. Hear Res. 2011;271:133–146. doi: 10.1016/j.heares.2010.01.011.

- Hickok G, Poeppel D, Clark K, Buxton RB, Rowley HA, Roberts TP. Sensory mapping in a congenitally deaf subject: MEG and fMRI studies of cross-modal non-plasticity. Hum Brain Mapp. 1997;5:437–444. doi: 10.1002/(SICI)1097-0193(1997)5:6<437::AID-HBM4>3.0.CO;2-4.

- Huang RS, Sereno MI. Dodecapus: an MR-compatible system for somatosensory stimulation. Neuroimage. 2007;34:1060–1073. doi: 10.1016/j.neuroimage.2006.10.024.

- Hunt DL, Yamoah EN, Krubitzer L. Multisensory plasticity in congenitally deaf mice: how are cortical areas functionally specified? Neuroscience. 2006;139:1507–1524. doi: 10.1016/j.neuroscience.2006.01.023.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Johnson MH. Functional brain development in humans. Nat Rev Neurosci. 2001;2:475–483. doi: 10.1038/35081509.

- Johnson MH, Munakata Y. Processes of change in brain and cognitive development. Trends Cogn Sci. 2005;9:152–158. doi: 10.1016/j.tics.2005.01.009.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Integration of touch and sound in auditory cortex. Neuron. 2005;48:373–384. doi: 10.1016/j.neuron.2005.09.018.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Functional imaging reveals visual modulation of specific fields in auditory cortex. J Neurosci. 2007;27:1824–1835. doi: 10.1523/JNEUROSCI.4737-06.2007.

- Kral A, Schröder JH, Klinke R, Engel AK. Absence of cross-modal reorganization in the primary auditory cortex of congenitally deaf cats. Exp Brain Res. 2003;153:605–613. doi: 10.1007/s00221-003-1609-z.

- Lacadie CM, Fulbright RK, Rajeevan N, Constable RT, Papademetris X. More accurate Talairach coordinates for neuroimaging using non-linear registration. Neuroimage. 2008;42:717–725. doi: 10.1016/j.neuroimage.2008.04.240.

- Lakatos P, Chen CM, O'Connell MN, Mills A, Schroeder CE. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach atlas labels for functional brain mapping. Hum Brain Mapp. 2000;10:120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Lancaster JL, Tordesillas-Gutiérrez D, Martinez M, Salinas F, Evans A, Zilles K, Mazziotta JC, Fox PT. Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Hum Brain Mapp. 2007;28:1194–1205. doi: 10.1002/hbm.20345.

- Lange J, Oostenveld R, Fries P. Perception of the touch-induced visual double-flash illusion correlates with changes of rhythmic neuronal activity in human visual and somatosensory areas. Neuroimage. 2011;54:1395–1405. doi: 10.1016/j.neuroimage.2010.09.031.

- Levänen S, Hamdorf D. Feeling vibrations: enhanced tactile sensitivity in congenitally deaf humans. Neurosci Lett. 2001;301:75–77. doi: 10.1016/s0304-3940(01)01597-x.

- Levänen S, Jousmäki V, Hari R. Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr Biol. 1998;8:869–872. doi: 10.1016/s0960-9822(07)00348-x.

- Lomber SG, Meredith MA, Kral A. Cross-modal plasticity in specific auditory cortices underlies visual compensations in the deaf. Nat Neurosci. 2010;13:1421–1427. doi: 10.1038/nn.2653.

- Mazziotta J, Toga A, Evans A, Fox P, Lancaster J, Zilles K, Woods R, Paus T, Simpson G, Pike B, Holmes C, Collins L, Thompson P, MacDonald D, Iacoboni M, Schormann T, Amunts K, Palomero-Gallagher N, Geyer S, Parsons L, et al. A probabilistic atlas and reference system for the human brain: International Consortium for Brain Mapping (ICBM). Philos Trans R Soc Lond B Biol Sci. 2001;356:1293–1322. doi: 10.1098/rstb.2001.0915.

- Meredith MA, Lomber SG. Somatosensory and visual crossmodal plasticity in the anterior auditory field of early deaf cats. Hear Res. 2011;280:38–47. doi: 10.1016/j.heares.2011.02.004.

- Meredith MA, Allman BL, Keniston LP, Clemo HR. Auditory influences on non-auditory cortices. Hear Res. 2009;258:64–71. doi: 10.1016/j.heares.2009.03.005.

- Meredith MA, Kryklywy J, McMillan AJ, Malhotra S, Lum-Tai R, Lomber SG. Crossmodal reorganization in the early deaf switches sensory, but not behavioral roles of auditory cortex. Proc Natl Acad Sci U S A. 2011;108:8856–8861. doi: 10.1073/pnas.1018519108.

- Mishra J, Martinez A, Sejnowski TJ, Hillyard SA. Early cross-modal interactions in auditory and visual cortex underlie a sound-induced visual illusion. J Neurosci. 2007;27:4120–4131. doi: 10.1523/JNEUROSCI.4912-06.2007.

- Morosan P, Rademacher J, Schleicher A, Amunts K, Schormann T, Zilles K. Human primary auditory cortex: cytoarchitectonic subdivisions and mapping into a spatial reference system. Neuroimage. 2001;13:684–701. doi: 10.1006/nimg.2000.0715.

- Musacchia G, Schroeder CE. Neuronal mechanisms, response dynamics and perceptual functions of multisensory interactions in auditory cortex. Hear Res. 2009;258:72–79. doi: 10.1016/j.heares.2009.06.018.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. II. Congenitally deaf adults. Brain Res. 1987;405:268–283. doi: 10.1016/0006-8993(87)90296-4.

- Shams L, Kamitani Y, Shimojo S. Illusions: what you see is what you hear. Nature. 2000;408:788. doi: 10.1038/35048669.

- Shams L, Kamitani Y, Shimojo S. Modulations of visual perception by sound. In: Calvert G, Spence C, Stein B, editors. Handbook of multisensory processes. London: MIT; 2004. pp. 27–34.

- Smith J, Bogdanov S, Watrous S, Frey S. Rapid digit mapping in the human brain at 3T. Proc Int Soc Magn Reson Med. 2009;34:3714.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Stevens C, Neville H. Neuroplasticity as a double-edged sword: deaf enhancements and dyslexic deficits in motion processing. J Cogn Neurosci. 2006;18:701–714. doi: 10.1162/jocn.2006.18.5.701.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical Publishers; 1988.

- Violentyev A, Shimojo S, Shams L. Touch-induced visual illusion. Neuroreport. 2005;16:1107–1110. doi: 10.1097/00001756-200507130-00015.

- Woods DL, Herron TJ, Cate AD, Yund EW, Stecker GC, Rinne T, Kang X. Functional properties of human auditory cortical fields. Front Syst Neurosci. 2010;4:155. doi: 10.3389/fnsys.2010.00155.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.

- Woolrich MW, Behrens TE, Beckmann CF, Jenkinson M, Smith SM. Multilevel linear modelling for FMRI group analysis using Bayesian inference. Neuroimage. 2004;21:1732–1747. doi: 10.1016/j.neuroimage.2003.12.023.

- Worsley K. Statistical analysis of activation images. In: Functional MRI: an introduction to methods. 2001. pp. 251–270.