Abstract

The May 2012 Sackler Colloquium on “The Science of Science Communication” brought together scientists with research to communicate and scientists whose research could facilitate that communication. The latter include decision scientists who can identify the scientific results that an audience needs to know, from among all of the scientific results that it would be nice to know; behavioral scientists who can design ways to convey those results and then evaluate the success of those attempts; and social scientists who can create the channels needed for trustworthy communications. This overview offers an introduction to these communication sciences and their roles in science-based communication programs.

Keywords: decision making, environment, judgment, risk, uncertainty

We need science for many things, the most profound of which is supporting our sense of wonder about the world around us. Everyone has had moments in which science has solved or created a mystery. For me, one of those moments was as an undergraduate in Introductory Geology at Wayne State University, when Prof. (Joseph) Mozola explained the origins of the Harrisburg surface, which gives central Pennsylvania its distinctive lattice pattern of rivers and gaps. At that moment, I realized that all landforms, even the flats of Detroit, were created by processes that science could decode. Another such moment was reading Joanna Burger’s The Parrot Who Owns Me (1) and getting some insight into how our white-crested cockatoo, Big Bird, appeared to have such human emotions, despite our species having diverged so long ago (even if he was born on Pittsburgh’s South Side). A third was following a proposal from our son Ilya, an evolutionary biologist, to take out our lawn and let our yard go feral with native Pennsylvania flora—after which he taught us how to observe the seasons. Now, I can wonder why the first grackles arrived 10 days early this year, even though the main flock took over the yard on March 7th as usual. Was it a sign of climate change—or of my own improved powers of observation?

Science provides a sense of wonder not just from revealing the world, but also from showing that the world can be revealed. For that reason, I tell my advisees, in our Decision Science major, that they should join a research laboratory, any research laboratory, just to see how science is done. They may discover that they love the life, including the camaraderie of the graduate students and postdocs who are the engines of much science. Or, they may find the constant fussing over details to be “mind-numbingly dull” (to use the phrase that Calvin, of Calvin and Hobbes, applied to archaeology) and then choose to get their science from places like Nova, Frontline, the New York Times, and Wired. Either way, they will have seen the pursuit of uncertainty that distinguishes science from other forms of knowing and the scientific turn of mind: trying to get to the bottom of things, knowing that one never will.

Decisions That Require Understanding Science

Although people can choose not to do science, they cannot choose to ignore it. The products of science permeate their lives. Nuclear power, genetically modified organisms, nanotechnology, geoengineering, and xenotransplantation are just a few of today’s realities that would have been impossible, even inconceivable, without scientific advances. Their very existence should be a source of wonder, even for people in whom they evoke a sense of terror (“we can do that?!”).

The same mixed emotions may accompany phenomena that arise from the confluence of technology and society. How is it that human actions can both discover antibiotics and encourage microbial resistance to them, create “smart” electricity grids and threaten them with cyberattacks, and produce a food supply whose sheer abundance undermines the health of some people, while others still go hungry?

Without some grasp of the relevant science, it is hard to make informed decisions about these issues. Those include private decisions, such as whether to choose fuel-efficient vehicles, robot-guided surgery, or dairy products labeled as “produced by animals not treated with bovine growth hormone—not known to cause health effects.” And they include public decisions, such as whether to support politicians who favor fuel efficiency, lobby for disclosing the risks of medical devices, or vote for referenda limiting agricultural biotechnology.

Effective science communications inform people about the benefits, risks, and other costs of their decisions, thereby allowing them to make sound choices. However, even the most effective communication cannot guarantee that people will agree about what those choices should be. Reasonable people may disagree about whether expecting X days less convalescence time is worth expecting Y% more complications, whether the benefits of organic produce justify Z% higher prices, or whether their community has been offered fair compensation for having a hazardous facility sited in its midst. Reasonable people may also disagree about what time frame to consider when making a decision (the next year? the next decade? the next century?), about whose welfare matters (their own? their family’s? their country’s? all humanity’s?), or about whether to consider the decision-making process as well as its outcomes (are they being treated respectfully? are they ceding future rights?).

The goal of science communication is not agreement, but fewer, better disagreements. If that communication affords people a shared understanding of the facts, then they can focus on value issues, such as how much weight to give to nature, the poor, future generations, or procedural issues in a specific decision. To realize that potential, however, people need a venue for discussing value issues. Otherwise, those issues will percolate into discussions of science (2). For example, if health effects have legal standing in a facility-siting decision, but compensation does not, then local residents may challenge health effect studies, when their real concern is money. Science communication cannot succeed when people feel that attacking its message is the only way to get redress for other concerns (3).

Because science communication seeks to inform decision making, it must begin by listening to its audience, to identify the decisions that its members face—and, therefore, the information that they need. In contrast, science education begins by listening to scientists and learning the facts that they wish to convey (4). Science education provides the foundation for science communication. The more that people know about a science (e.g., physics), the easier it will be to explain the facts that matter in specific decisions (e.g., energy policy). The more that people know about the scientific process, per se, the easier it will be for science communications to explain the uncertainties and controversies that science inevitably produces.

Adapting terms coined by Baddeley (5), the sciences of science communication include both applied basic science (seeing how well existing theories address practical problems) and basic applied science (pursuing new issues arising from those applications). In Baddeley’s view, these pursuits are critical to the progress of basic science, by establishing the boundaries of its theories and identifying future challenges.

Science communications that fulfill this mission must perform four interrelated tasks:

Task 1: Identify the science most relevant to the decisions that people face.

Task 2: Determine what people already know.

Task 3: Design communications to fill the critical gaps (between what people know and need to know).

Task 4: Evaluate the adequacy of those communications.

Repeat as necessary.

The next four sections survey the science relevant to accomplishing these tasks, followed by consideration of the institutional support needed to accomplish them. Much more detail can be found in the rest of this special issue and at the Colloquium Web site (www.nasonline.org/programs/sackler-colloquia/completed_colloquia/science-communication.html).

Task 1: Identify the Science Relevant to Decision Making

Although advances in one science (e.g., molecular biology) may provide the impetus for a decision (e.g., whether to use a new drug), informed choices typically require knowledge from many sciences. For example, predicting a drug’s risks and benefits for any patient requires behavioral science research, extrapolating from the controlled world of clinical trials to the real world in which people sometimes forget to take their meds and overlook the signs of side effects (6). Indeed, every science-related decision has a human element, arising from the people who design, manufacture, inspect, deploy, monitor, maintain, and finance the technologies involved. As a result, predicting a technology’s costs, risks, and benefits requires social and behavioral science knowledge, just as it might require knowledge from seismology, meteorology, metrology, physics, mechanical engineering, or computer science.

No layperson could understand all of the relevant sciences to any depth. Indeed, neither could any scientist. Nor need they have such vast knowledge. Rather, people need to know the facts that are “material” to their choices (to use the legal term) (7). That knowledge might include just summary estimates of expected outcomes (e.g., monetary costs, health risks). Or, it might require enough knowledge about the underlying science to understand why the experts make those estimates (8). Knowing the gist of that science could not only increase trust in those claims, but also allow members of the public to follow future developments, see why experts disagree, and have a warranted feeling of self-efficacy, from learning—and being trusted to learn—about the topic (9, 10).

Thus, the first science of communication is analysis: identifying those few scientific results that people need to know among the myriad scientific facts that it would be nice to know (11, 12). The results of that analysis depend on the decisions that science communications seek to inform. The scientific facts critical to one decision may be irrelevant to another. Decision science formalizes the relevance question in value-of-information analysis, which asks, “When deciding what to do, how much difference would it make to learn X, Y, or Z?” Messages should begin with the most valuable fact and then proceed as long as the benefits of learning more outweigh its costs, or until recipients reach their absorptive capacity (13). Although one can formalize such analyses (7, 11, 12), just asking the question should reduce the risk of assuming that the facts that matter to scientists also matter to their audiences (2, 6, 8, 9, 11).
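The value-of-information question can be illustrated with a minimal sketch. It assumes a decision maker with a few discrete options, a prior over a few discrete states, and numeric utilities; the function names, the patient example, and all numbers are hypothetical, chosen only to show the arithmetic, and the stopping rule uses a single assumed cost per fact.

```python
def value_of_information(prior, utilities):
    """Expected gain from learning the true state before choosing.

    prior: dict mapping each possible state to its probability.
    utilities: dict mapping each option to a dict of state -> utility.
    """
    # Best expected utility when choosing on the prior alone.
    best_uninformed = max(
        sum(prior[state] * payoff[state] for state in prior)
        for payoff in utilities.values()
    )
    # Expected utility when the state is learned first, so the best
    # option can be picked separately for each state.
    best_informed = sum(
        prior[state] * max(payoff[state] for payoff in utilities.values())
        for state in prior
    )
    return best_informed - best_uninformed


def order_message(facts, cost_per_fact, capacity):
    """Pick facts for a message, most valuable first.

    facts: dict mapping a fact's label to its value of information.
    Stops when a fact's value no longer exceeds the (assumed uniform)
    cost of conveying it, or when the recipient's capacity is reached.
    """
    message = []
    ranked = sorted(facts.items(), key=lambda item: item[1], reverse=True)
    for label, value in ranked:
        if len(message) >= capacity or value <= cost_per_fact:
            break
        message.append(label)
    return message


# Hypothetical patient deciding whether to take a new drug.
prior = {"responds": 0.4, "does_not_respond": 0.6}
utilities = {
    "take": {"responds": 10, "does_not_respond": -5},
    "skip": {"responds": 0, "does_not_respond": 0},
}
voi_response = value_of_information(prior, utilities)

# Hypothetical values for two other facts, on the same utility scale.
facts = {
    "whether the patient will respond": voi_response,
    "typical side effects": 1.5,
    "the drug's molecular pathway": 0.1,
}
message = order_message(facts, cost_per_fact=0.5, capacity=3)
```

In this illustration, the molecular pathway is dropped from the message because learning it would change too little to justify the recipient's effort, even though it may be the fact of greatest interest to the scientists involved.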

For example, in the context of medical decisions (6, 7, 11), such analyses ask when it is enough to describe a procedure’s typical effects and when decision makers need to know the distribution of outcomes (e.g., “most patients get mild relief; for some, though, it changes their lives”). Such analyses can also ask when uncertainties matter (e.g., “because this is a new drug, it will take time for problems to emerge”) and how precise to be (e.g., will patients make similar choices if told that “most people get good results” and “researchers are 90% certain that between 30% and 60% of patients who complete the treatment will be disease-free a year later”) (12, 14–16). Thus, such analyses, like other aspects of the communication process, must consider the range of recipients’ circumstances, paying particular attention to vulnerable populations, both as an ethical duty and because they are most likely to have unique information needs.

Because decisions are defined by decision makers’ goals, as well as by their circumstances, different scientific facts may be relevant to different decision makers. For example, in research aimed at helping women reduce their risk of sexual assault, we found that communications often ignored outcomes on some women’s minds, such as legal hassles and risks to others (17). In research aimed at helping young women reduce their risk of sexually transmitted infections, we found that communications often ignored outcomes important to many of them, such as relationships and reputations (18).

As elsewhere, such applied basic science can reveal basic applied science opportunities. For example, we found the need for additional research regarding the effectiveness of self-defense strategies in reducing the risk of sexual assault, studies that are rare compared with the many studies on how victims can be treated and stigmatized. More generally, the methodological challenges of asking people what matters to them, when forced to choose among risky options, have changed scientific thinking about the nature of human values. Rather than assuming that people immediately know what they want, when faced with any possible choice, decision scientists now recognize that people must sometimes construct their preferences, to determine the relevance of their basic values for specific choices (19, 20). As a result, preferences may evolve, as people come to understand the implications of novel choices (such as those posed by new technologies). When that happens, the content of science communications must evolve, too, in order to remain relevant.

Task 2: Determine What People Already Know

Having identified the scientific facts that are worth knowing, communication researchers can then identify the subset of those facts that are worth communicating—namely, those facts that people do not know already. Unless communications avoid repeating facts that go without saying, they can test their audience’s patience and lose its trust (“Why do they keep saying that? Don’t they think that I know anything?”). That can happen, for example, when the same communication tries to meet both the public’s right to know, which requires saying everything, and its need to know, which requires saying what matters. Full disclosure is sometimes used in hopes of hiding inconvenient facts in plain sight, by burying them in irrelevant ones (6, 7, 10).

Identifying existing beliefs begins with formative research, using open-ended, one-on-one interviews, allowing people to reveal whatever is on their minds in their normal ways of expressing themselves (8, 21). Without such openness, communicators can miss cases where people have correct beliefs, but formulate them in nonscientific terms, meaning that tests of their “science literacy” may underestimate their knowledge. Conversely, communicators could neglect lay misconceptions that never occurred to them (7, 8). Focus groups provide an open-ended forum for hearing many people at once. However, for reasons noted by their inventor, Robert Merton, focus groups cannot probe individuals’ views in depth, shield participants from irrelevant group pressure, or distinguish the beliefs that people bring to a group session from those that they learn in it (22). As a result, focus groups are much more common in commercial research than in scientific studies.

Having understood the range and language of lay beliefs, researchers can then create structured surveys for estimating their prevalence. Such surveys must pose their questions precisely enough to assess respondents’ mastery of the science. Thus, they should not give people credit for having a superficial grasp of a science’s gist nor for being sophisticated guessers. Nor should they deduct credit for not using scientific jargon or not parsing clumsily written questions (10, 16, 23).

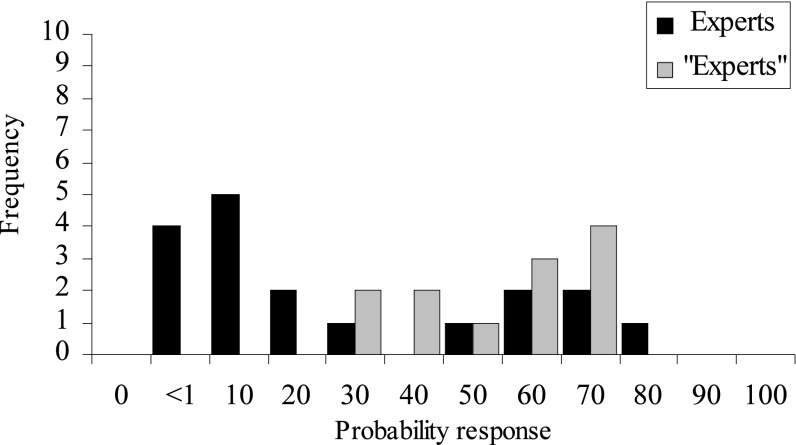

Fig. 1 illustrates such a structured question, based on formative research into how people think about one decision-relevant scientific issue (24). In Fall 2005, as avian flu loomed, we had the opportunity to survey the beliefs of both public health experts, who could assess the threat, and technology experts, who might create options for keeping society going, should worst come to worst. When asked how likely the virus was to become an efficient human-to-human transmitter in the next 3 years, most public health experts saw a probability around 10%, with a minority seeing a much higher one. The technology experts all saw relatively high probabilities (median = 60%). Perhaps they had heard the range of views among public health experts and then sided with the more worried ones. More likely, though, they had heard the great concern among the experts and then assumed that the probability was high (“otherwise, why would they be so alarmed?”). Given that the public health experts anticipated a 7% case-fatality rate (their median response to another question), a 10% chance of efficient transmission provides ample reason for great concern. However, such numeric judgments were nowhere to be found in the voluminous coverage of the threat. Even though the ambiguity of verbal quantifiers (e.g., “likely”) has long been known, experts are often reluctant to express themselves in clear numeric terms (14–16, 25–27).

Fig. 1.

Response by public health experts (black bars) and technology experts (gray bars) to the following question: “What is the probability that H5N1 will become an efficient human-to-human transmitter (capable of being propagated through at least two epidemiological generations of affected humans) sometime during the next 3 years?” Reproduced with permission from ref. 24.

When decision makers are forced to read between the lines of experts’ statements, they cannot make full use of scientific knowledge. Nor can they fairly evaluate experts’ performance. For example, if public health experts seemed to be thinking 60% in 2005, then they might have appeared alarmist, once the pandemic failed to materialize—especially if they also seemed to be thinking 60% during the subsequent (2009) H1N1 scare. Thus, by communicating vaguely, experts make themselves needlessly vulnerable to second guessing. Perhaps they omit quantitative assessments because they do not realize their practical importance (Task 1). Perhaps they exaggerate how well others could infer those probabilities from everything else that they said. Perhaps they do not think that the public can handle such technical information. Whatever its source, vagueness undermines the precision essential to all science.

Such communication lapses are not surprising. Behavioral research has identified many ways in which people misread others’ minds (28, 29). For example, the common knowledge effect arises when people exaggerate how widely their beliefs are shared (30). It can lead people to confuse others by failing to say things that are seemingly, but not actually, obvious. The false consensus effect arises when people exaggerate how widely their attitudes are shared (31). It can leave people surprised when others see the same facts but make different choices, because they have different goals. The myth of panic arises when people misinterpret others’ intense responses to disasters as social disintegration, rather than as mobilization (e.g., the remarkable, if tragically incomplete, evacuation of the World Trade Center on 9/11) (32, 33). The myth of adolescents’ unique sense of invulnerability arises when adults assume that teens misperceive risks, without recognizing the factors that can lead teens to act against their own better judgment (e.g., social pressure, poor emotional regulation) (34, 35).

Thus, because intuition cannot be trusted, communicators must study what people are thinking. Otherwise, they choose to fly blind when creating their messages (36). Given the stakes riding on effective communication, these studies should be conducted to a publication standard, even if their topics lack the theoretical interest needed for actual publication. That standard includes testing the validity of the elicitation procedures. One class of tests examines the consistency of beliefs elicited in different ways. For example, the question in Fig. 1 opened a survey whose final question reversed the conditionality of the judgment. Instead of asking about the probability of efficient transmission in 3 years, it asked for the time until the probability reached several values (10%, 50%, 90%). Finding consistent responses to the two formulations suggested that respondents had succeeded in translating their beliefs into these numeric terms. A second class of tests examines construct validity, asking whether responses to different measures are correlated in predicted ways. For example, in a study of teens’ predictions for significant life events, we found that they gave higher probabilities of getting work, becoming pregnant, and being arrested if they also reported higher rates of educational participation, sexual activity, and gang activity, respectively, in response to questions in other parts of the survey. That study also found evidence of predictive validity, such that the events were more likely for teens who assigned them higher probabilities. Whether these probabilities were over- or underestimated seemed to depend on teens’ beliefs about the events, rather than on their ability to express themselves numerically (34).

Task 3: Design Communications to Fill the Critical Gaps

A century-plus of social, behavioral, and decision science research has revealed many principles that can be used in communication design. Table 1 shows some of the principles that govern judgment (assessing states of the world) and choice (deciding what to do, given those assessments). For example, the first judgment principle is that people automatically keep track of how often they have observed an event (e.g., auto accident, severe storm) (37), providing an estimate that they can use for predicting future events, by applying the availability heuristic (13). Although often useful, relying on such automatic observation can lead people astray when an event is disproportionately visible (e.g., because of media reporting practices) and they cannot correct for the extent to which appearances are deceiving. For example, people may think that (widely reported) homicides are more common than (seldom reported) suicides, even though these two causes of death occur about as often (38). The first choice principle (in Table 1) is that people consider the return on investing in decision-making processes (39). Applying that principle could lead them to disregard communications that seem irrelevant, incomprehensible, or untrustworthy (40).

Table 1.

Partial list of behavioral principles

| Judgment | Choice |
| --- | --- |
| People are good at tracking what they see, but not at detecting sample bias. | People consider the return on their investment in making decisions. |
| People have limited ability to evaluate the extent of their own knowledge. | People dislike uncertainty, but can live with it. |
| People have difficulty imagining themselves in other visceral states. | People are insensitive to opportunity costs. |
| People have difficulty projecting nonlinear trends. | People are prisoners to sunk costs, hating to recognize losses. |
| People confuse ignorance and stupidity. | People may not know what they want, especially with novel questions. |

Each of these principles was a discovery in its time. Each is supported by theories, explicating its underlying processes. Nonetheless, each is also inconclusive. Applying a principle requires additional (auxiliary) assumptions regarding its expression in specific circumstances. For example, applying the first judgment principle to predicting how people estimate an event’s frequency requires assumptions about what they observe, how they encode those observations, how hard they work to retrieve relevant memories, whether the threat of biased exposure occurs to them, and how they adjust for such bias. Similarly, applying the first choice principle to predicting how people estimate the expected return on their investment in reading a science communication requires assumptions about how much they think that they know already, how trustworthy the communication seems, and how urgent such learning appears to be.

Moreover, behavioral principles, like physical or biological ones, act in combination. Thus, although behavior may follow simple principles, there are many of them (as suggested by the incomplete lists in Table 1), interacting in complex ways. For example, dual-process theories detail the complex interplay between automatic and deliberate responses to situations, as when people integrate their feelings and knowledge about a risk (9, 10, 13, 35, 38). Getting full value from behavioral science requires studying not only how individual principles express themselves in specific settings, but also how they act in concert (41). Otherwise, communicators risk grasping at the straws that are offered by simplistic single-principle, “magic bullet” solutions, framed as “All we need to do is X, and then the public will understand,” where X might be “appeal to their emotions,” “tell them stories,” “speak with confidence,” “acknowledge uncertainties,” or “emphasize opportunities, rather than threats” (42). Indeed, a comprehensive approach to communication would consider not only principles of judgment and choice, but also behavioral principles identified in studies of emotion, which find that feelings can both aid communication, by orienting recipients toward message content, and undermine it, as when anger increases optimism and diminishes the perceived value of additional learning (43, 44). A comprehensive approach would also consider the influences of social processes and culture on which information sources people trust and consult (45).

Whereas the principles governing how people think may be quite general (albeit complex in their expression and interaction), what people know naturally varies by domain, depending on their desire and opportunity to learn. People who know a lot about health may know little about physics or finance, and vice versa. Even within a domain, knowledge may vary widely by topic. People forced to learn about cancer may know little about diabetes. People adept at reading corporate balance sheets may need help with their own tax forms. As a result, knowing individuals’ general level of knowledge (sometimes called their “literacy”) in a domain provides little guidance for communicating with them about specific issues. For that, one needs to know their mental model for that issue, reflecting what they have heard or seen on that topic and inferred from seemingly relevant general knowledge.

The study of mental models has a long history in cognitive psychology, in research that views people as active, if imperfect, problem solvers (46–48). Studies conducted with communication in mind often find that people have generally adequate mental models, undermined by a few critical gaps or misconceptions (8, 21). For example, in a study of first-time mothers’ views of childhood immunization (49), we found that most knew enough for future communications to focus on a few missing links, such as the importance of herd immunity to people who cannot be immunized and the effectiveness of postlicensing surveillance for vaccine side effects. A study of beliefs about hypertension (50) found that many people know that high blood pressure is bad, but expect it to have perceptible symptoms, suggesting messages about a “silent killer.” A classic study found that many people interpret “once in 100 years” as a recurrence period, rather than as an annual probability, showing the importance of expressing hydrologic forecasts in lay terms (51).

At other times, though, the science is so unintuitive that people have difficulty creating the needed mental models. In such cases, communications must provide answers, rather than asking people to infer them. For example, risks that seem negligible in a single exposure (e.g., bike riding, car driving, protected sex) can become major concerns through repeated exposure (52, 53). Invoking a long-term perspective might help some (“one of these days, not wearing a helmet will catch up with you”) (54). However, when people need more precise estimates of their cumulative risk, they cannot be expected to do the mental arithmetic, any more than they can be expected to project exponential processes, such as the proliferation of invasive species (55), or interdependent nonlinear ones, such as those involved in climate change (56). If people need those estimates, then someone needs to run the numbers for them. Similarly, people cannot be expected to understand the uncertainty in a field, unless scientists summarize it for them (6, 11, 16, 27, 57, 58).
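What “running the numbers” involves can be shown in a minimal sketch. It assumes, purely for illustration, that exposures are independent and equally risky and that growth proceeds at a constant rate per period; the function names and all numbers are hypothetical.

```python
def cumulative_risk(per_exposure_risk, exposures):
    """Chance of at least one bad outcome across repeated exposures.

    Assumes (for illustration) that exposures are independent and
    carry the same risk each time.
    """
    return 1.0 - (1.0 - per_exposure_risk) ** exposures


def exponential_projection(initial, growth_rate, periods):
    """Size after compounding a constant per-period growth rate."""
    return initial * (1.0 + growth_rate) ** periods


# Hypothetical numbers: a 1-in-10,000 risk per trip, over 1,000 trips.
single_trip = 1 / 10_000
many_trips = cumulative_risk(single_trip, 1_000)   # roughly 0.095

# Hypothetical invasive population doubling each season for 10 seasons.
population = exponential_projection(100, 1.0, 10)  # 102400.0
```

Under these assumptions, a risk that looks negligible on any one trip approaches 1 in 10 over a thousand trips, and a population of 100 exceeds 100,000 after ten doublings. Neither result is easy to reach by intuition, which is why the communication should supply it.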

Task 4: Evaluate Their Adequacy and Repeat as Necessary

Poor communications cause immediate damage if they keep people from using available scientific knowledge. They cause lasting damage if they erode trust between scientists and the public. That happens when laypeople see scientists as insensitive to their needs and scientists see laypeople as incapable of grasping seemingly basic facts (2, 9, 24, 50). Such mistrust is fed by the natural tendency to attribute others’ behavior to their essential properties rather than to their circumstances (59). Thus, it may be more natural for laypeople to think, “Scientists don’t care about the public” than “Scientists have little chance to get to know the public.” And it may be more natural for scientists to think, “The public can’t understand our science” than “The public has little chance to learn our science.” Indeed, the very idea of science literacy emphasizes the public’s duty to know over the experts’ duty to inform.

To avoid such misattributions, communication programs must measure the adequacy of their performance. A task analysis suggests the following test: a communication is adequate if it (i) contains the information that recipients need, (ii) in places that they can access, and (iii) in a form that they can comprehend. That test can be specified more fully in the following three standards.

(i) A materiality standard for communication content. A communication is adequate if it contains any information that might affect a significant fraction of users’ choices. Malpractice laws in about half of the U.S. states require physicians to provide any information that is material to their patients’ decisions (60). (The other states have a professional standard, requiring physicians to say whatever is customary in their specialty.) The materiality standard can be formalized in value-of-information analysis (7, 11, 12), asking how much knowing an item of information would affect recipients’ ability to choose wisely. That analysis might find that a few items provide all that most recipients need, or that target audiences have such diverse information needs that they require different brief messages, or that there are too many critical facts for any brief message to suffice. Without analysis, though, one cannot know what content is adequate (61).

(ii) A proximity standard for information accessibility. A communication is adequate if it puts most users within X degrees of separation from the needed information, given their normal search patterns. Whatever its content, a communication has little value unless it reaches its audience, either directly (e.g., through warning labels, financial disclosures, or texted alerts) or indirectly (e.g., through friends, physicians, or journalists). If there are too many bridging links, then messages are unlikely to connect with their targets. If there are too few links, then people will drown in messages that expect them to become experts in everything, while ignoring the social networks that they trust to select and interpret information for them (9, 45, 62, 63). As a result, the proximity standard evaluates the distribution of messages relative to recipients’ normal search patterns.
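One way to make the proximity standard concrete is a breadth-first search over a map of who passes information to whom. The following is a minimal sketch, not a validated method: the network, the names in it, and the reading of “most users” as more than half are all assumptions made for illustration.

```python
from collections import deque


def degrees_from_sources(network, sources):
    """Fewest links from any information source to each reachable node.

    network: dict mapping a node to the nodes it passes information to.
    sources: nodes that hold the needed information.
    """
    distance = {source: 0 for source in sources}
    queue = deque(sources)
    while queue:
        node = queue.popleft()
        for neighbor in network.get(node, ()):
            if neighbor not in distance:  # first visit is the shortest path
                distance[neighbor] = distance[node] + 1
                queue.append(neighbor)
    return distance


def meets_proximity_standard(network, sources, users, max_degrees,
                             required_share=0.5):
    """True if more than required_share of users are within max_degrees.

    required_share=0.5 is an assumed reading of "most users".
    """
    distance = degrees_from_sources(network, sources)
    reached = sum(
        1 for user in users
        if distance.get(user, float("inf")) <= max_degrees
    )
    return reached / len(users) > required_share


# Hypothetical network: an agency reaches people through intermediaries.
network = {
    "agency": ["journalist", "physician"],
    "journalist": ["ann"],
    "physician": ["bob"],
    "ann": ["cy"],
}
users = ["ann", "bob", "cy", "dee"]  # "dee" has no path to the agency
within_two = meets_proximity_standard(network, ["agency"], users, 2)
within_three = meets_proximity_standard(network, ["agency"], users, 3)
```

In practice, the links would have to come from empirical study of recipients’ normal search patterns, rather than from an assumed map like this one.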

As elsewhere, the need for such research is demonstrated by the surprising results that it sometimes produces, such as the seeming “contagion” of obesity and divorce (64) or the ability of messages to reach people through multiple channels (65, 66). Casman et al. (67) found that traditional, trusted distribution systems for “boil water” notices could not possibly reach some consumers in time to protect them from some contaminants. Winterstein and Kimberlin (68) found that the system for distributing consumer medication information sheets puts something in the hands of most patients who pick up their own prescriptions. Downs et al. (49) found that first-time mothers intuitively choose search terms that lead to Web sites maintained by vaccine skeptics, rather than by proponents. In each case, one would not know who gets the messages without such study.

Once users connect with a communication, they must find what they need in it. Research has identified design principles for directing attention, establishing authority, and evoking urgency (41, 69). The adequacy of any specific communication in revealing its content can be studied by direct observation or with models using empirically based assumptions. Using such a model, Riley et al. (70) found that users of several methylene chloride-based paint stripper products who relied on product labels would not find safety instructions if they followed several common search strategies (e.g., read the first five items, read everything on the front, read only warnings).
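A model of this kind can be sketched by treating a label as an ordered list of items and each search strategy as a rule for which items a user reads. The code below is a hypothetical illustration, not the model used by Riley et al.: the label contents, field names, and strategy definitions are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class LabelItem:
    text: str
    panel: str                   # "front" or "back"
    is_warning: bool             # formatted as a warning
    is_safety_instruction: bool  # tells the user what protective action to take


# A strategy maps a label (items in reading order) to the items a user reads.
Strategy = Callable[[List[LabelItem]], List[LabelItem]]

STRATEGIES: Dict[str, Strategy] = {
    "first five items": lambda label: label[:5],
    "everything on the front": lambda label: [i for i in label if i.panel == "front"],
    "only warnings": lambda label: [i for i in label if i.is_warning],
}


def finds_safety_instruction(label: List[LabelItem]) -> Dict[str, bool]:
    """For each strategy, report whether any safety instruction gets read."""
    return {
        name: any(item.is_safety_instruction for item in read(label))
        for name, read in STRATEGIES.items()
    }


# Hypothetical paint stripper label: the only safety instruction is the last
# item on the back panel and is not formatted as a warning.
label = [
    LabelItem("Brand name", "front", False, False),
    LabelItem("Removes paint fast", "front", False, False),
    LabelItem("DANGER: vapor harmful", "front", True, False),
    LabelItem("Net contents", "front", False, False),
    LabelItem("Directions for use", "back", False, False),
    LabelItem("Disposal", "back", False, False),
    LabelItem("Open windows and use a fan", "back", False, True),
]
results = finds_safety_instruction(label)
```

For this invented label, none of the three strategies reaches the safety instruction. Re-running the check on alternative layouts is one way to compare designs before testing them with users.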

(iii) A comprehensibility standard for user understanding. A communication is adequate if most users can extract enough meaning to make sound choices. This standard compares the choices made by people who receive a message with those of fully informed individuals (6, 71). It can find that an otherwise poor communication is adequate, because recipients know its content already, and that an otherwise sound communication is inadequate, because recipients’ mental models are too fragmentary to absorb its content. Applying the standard is conceptually straightforward with persuasive communications (72), designed to encourage desired behaviors (e.g., not smoking, evacuating dangerous places): see whether recipients behave that way. However, with nonpersuasive communications, designed to help people make personally relevant choices (e.g., among careers, public policies, medical procedures), the test is more complicated. It requires taking an “inside view,” identifying recipients’ personal goals and the communication’s contribution to achieving them (11, 13, 17). That view may lead to creating options that facilitate effective decision making, such as subsidizing (or even requiring) sustained commitment to protective behaviors (e.g., flood insurance), signaling its importance and encouraging long-term perspectives, as an antidote to myopic thinking. That view may also require removing barriers, such as the lack of affordable options (73).
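The comparison with fully informed individuals can be sketched as a simple agreement rate. This is a minimal illustration with hypothetical goals, options, and data; in practice, establishing the benchmark choice for each goal is itself a substantial research task.

```python
from typing import Callable, List, Tuple

# One record per recipient: (personal goal, option chosen after reading).
Record = Tuple[str, str]


def share_of_sound_choices(
    records: List[Record], informed_choice: Callable[[str], str]
) -> float:
    """Fraction of recipients choosing what a fully informed person
    with the same goal would choose."""
    matches = sum(choice == informed_choice(goal) for goal, choice in records)
    return matches / len(records)


# Hypothetical benchmark: the option a fully informed person picks for each goal.
BENCHMARK = {"fewest side effects": "drug A", "fastest relief": "drug B"}

# Hypothetical choices observed after two draft messages.
draft_1 = [("fewest side effects", "drug B"), ("fastest relief", "drug B"),
           ("fewest side effects", "drug A"), ("fastest relief", "drug A")]
draft_2 = [("fewest side effects", "drug A"), ("fastest relief", "drug B"),
           ("fewest side effects", "drug A"), ("fastest relief", "drug A")]

score_1 = share_of_sound_choices(draft_1, lambda goal: BENCHMARK[goal])
score_2 = share_of_sound_choices(draft_2, lambda goal: BENCHMARK[goal])
```

Here the second draft leads more recipients to the choice that fits their own goals. Scoring against each recipient's goal, rather than against a single desired behavior, is what distinguishes this test from the evaluation of a persuasive message.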

Each of these three standards includes verbal quantifiers whose resolution requires value judgments. The materiality standard recognizes that users might face such varied decisions that the best single communication omits information that a fraction need; someone must then decide whether that fraction is significant. The proximity standard recognizes that not all users can (or should) be reached directly; someone must then decide whether most users are close enough to someone with the information. The comprehensibility standard recognizes that not everyone will (or must) understand everything; someone must then decide whether most people have extracted enough meaning. Although scientists can estimate these impacts, they alone cannot decide whether communicators’ efforts are good enough.

Organizing for Communication

As seen in the Colloquium and this special issue, scientifically sound science communication demands expertise from multiple disciplines, including (a) subject matter scientists, to get the facts right; (b) decision scientists, to identify the right facts, so that they are not missed or buried; (c) social and behavioral scientists, to formulate and evaluate communications; and (d) communication practitioners, to create trusted channels among the parties.

Recognizing these needs, some large organizations have moved to have such expertise on staff or on call. For example, the Food and Drug Administration has a Strategic Plan for Risk Communication and a Risk Communication Advisory Committee. The Centers for Disease Control and Prevention has behavioral scientists in its Emergency Operations Center. Communications are central to the Federal Emergency Management Agency’s Strategic Foresight Initiative and the National Oceanic and Atmospheric Administration’s Weather-Ready Nation program. The National Academy of Sciences’ Science and Entertainment Exchange answers and encourages media requests for subject matter expertise.

For the most part, though, individual scientists are on their own, forced to make guesses about how to meet their audiences’ information needs. Reading about the relevant social, behavioral, and decision sciences might help them some. Informal discussion with nonscientists might help some more, as might asking members of their audience to think aloud as they read draft communications (41). However, such ad hoc solutions are no substitute for expertise in the sciences of communication and evidence on how to communicate specific topics (74, 75).

The scientific community owes individual scientists the support needed for scientifically sound communication. One might envision a “Communication Science Central,” where scientists can take their problems and get authoritative advice, such as: “here’s how to convey how small risks mount up through repeated exposure”; “in situations like the one that you’re facing, it is essential to listen to local residents’ concerns before saying anything”; “we’ll get you estimates on the economic and social impacts of that problem”; “you might be able to explain ocean acidification by building on your audience’s mental models of acids, although we don’t have direct evidence”; or “that’s a new topic for us; we’ll collect some data and tell you what we learn.” By helping individual scientists, such a center would serve the scientific community as a whole, by protecting the commons of public goodwill that each communication either strengthens or weakens.

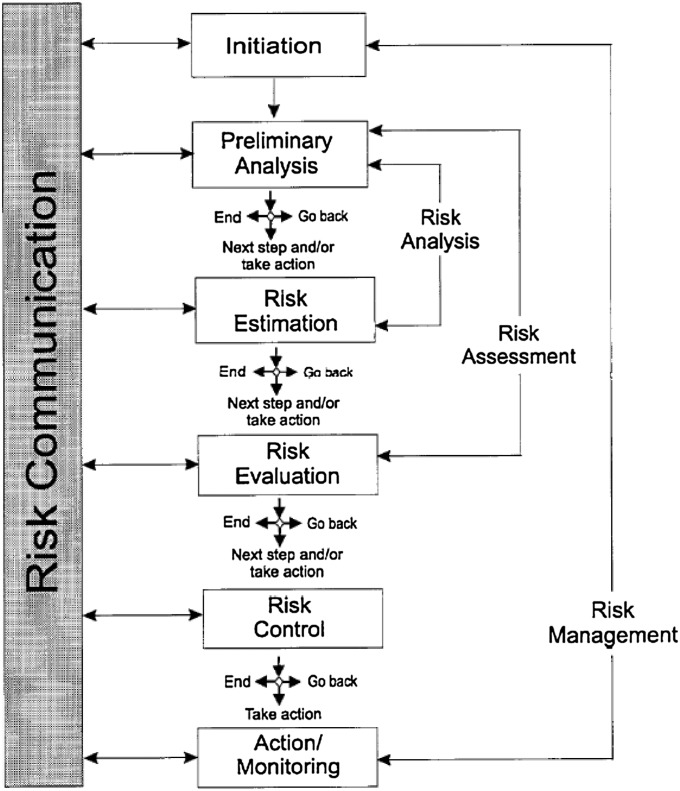

Realizing the value of science communication requires integrating it with the decision-making processes that it is meant to inform. Fig. 2 (76) shows one version of the advice coming from many consultative bodies (2, 77, 78). In the center is a conventional management process, going from initiation to implementation and monitoring. At the left is a commitment to two-way communication at each stage. Thus, a process begins by reporting preliminary plans and hearing the public’s thoughts early enough to incorporate its input. Sustained contact, throughout the process, reduces the chance of any party blindsiding the other with unheard views or unexpected actions. In this view, the process itself represents a communication act, expressing a belief in the public’s right to know and ability to understand.

Fig. 2. A process for integrating communication and decision making. Reproduced with permission from ref. 76.

The specifics of such consultations will depend on the decisions involved. Siting a factory, wind farm, or halfway house may require direct conversation with those affected by it. Informing a national debate may need social scientists to act as intermediaries between the distant parties. The Ocean Health Index (79, 80) uses natural and decision science to identify the impacts that various stakeholders value and then synthesize the evidence relevant to them. The Blue Ribbon Commission on America’s Nuclear Future adapted its ambitious plans for public consultation in response to social science input about how to establish trusted communication channels (81, 82). Its recommendations called for sustained communications, to ensure that proposals for nuclear power and waste disposal receive fair hearings (83).

Conclusion

Communications are adequate if they reach people with the information that they need in a form that they can use. Meeting that goal requires collaboration between scientists with subject matter knowledge to communicate and scientists with expertise in communication processes—along with practitioners able to manage the process. The Sackler Colloquium on the “Science of Science Communication” brought together experts in these fields, to interact with one another and make their work accessible to the general scientific community. Such collaboration affords the sciences the best chance to tell their stories.

It also allows diagnosing what went wrong when communications fail. Did they get the science wrong, and lose credibility? Did they get the wrong science, and prove irrelevant? Did they lack clarity and comprehensibility, frustrating their audiences? Did they travel through noisy channels, and not reach their audiences? Did they seem begrudging, rather than forthcoming? Did they fail to listen, as well as to speak? Did they try to persuade audiences that wanted to be informed, or vice versa?

Correct diagnoses provide opportunities to learn. Misdiagnoses can compound problems if they lead to blaming communication partners, rather than communication processes, for failures (46). For example, scientists may lose patience with a public that cannot seem to understand basic facts, not seeing what little chance the public has had to learn them. Conversely, laypeople may lose faith in scientists who seem distant and uncaring, not appreciating the challenges that scientists face in reaching diverse audiences. When a science communication is successful, members of its audience should agree on what the science says relevant to their decisions. They need not agree about what decisions to make if they value different things. They may not be reachable if issues are so polarized that the facts matter less than loyalty to a cause that interprets them in a specific way (45). However, in such cases, it is social, rather than communication, processes that have failed.

Thus, by increasing the chances of success and aiding the diagnosis of failure, the sciences of communication can protect scientists from costly mistakes, such as assuming that the public can’t handle the truth, and then denying it needed information, or becoming advocates, and then losing the trust that is naturally theirs (9, 24, 72). To these ends, we need the full range of social, behavioral, and decision sciences presented at this Colloquium, coupled with the best available subject matter expertise. When science communication succeeds, science will give society the greatest practical return on its investment—along with the sense of wonder that exists in its own right (84).

Acknowledgments

Preparation of this paper was supported by the National Science Foundation Grant SES-0949710.

Footnotes

The author declares no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “The Science of Science Communication,” held May 21–22, 2012, at the National Academy of Sciences in Washington, DC. The complete program and audio files of most presentations are available on the NAS Web site at www.nasonline.org/science-communication.

This article is a PNAS Direct Submission. D.A.S. is a guest editor invited by the Editorial Board.

References

- 1. Burger J. The Parrot Who Owns Me. New York: Random House; 2002.

- 2. Dietz T. Bringing values and deliberation to science communication. Proc Natl Acad Sci USA. 2013;110:14081–14087. doi: 10.1073/pnas.1212740110.

- 3. Löfstedt R, Fischhoff B, Fischhoff I. Precautionary principles: General definitions and specific applications to genetically modified organisms (GMOs). J Policy Anal Manage. 2002;21(3):381–407.

- 4. Klahr D. What do we mean? On the importance of not abandoning scientific rigor when talking about science education. Proc Natl Acad Sci USA. 2013;110:14075–14080. doi: 10.1073/pnas.1212738110.

- 5. Baddeley AD. Applied cognitive and cognitive applied research. In: Nilsson LG, editor. Perspectives on Memory Research. Hillsdale, NJ: Erlbaum; 1978.

- 6. Schwartz LM, Woloshin S. The Drug Facts Box: Improving the communication of prescription drug information. Proc Natl Acad Sci USA. 2013;110:14069–14074. doi: 10.1073/pnas.1214646110.

- 7. Merz J, Fischhoff B, Mazur DJ, Fischbeck PS. Decision-analytic approach to developing standards of disclosure for medical informed consent. J Toxics Liability. 1993;15(1):191–215.

- 8. Bruine de Bruin W, Bostrom A. Assessing what to address in science communication. Proc Natl Acad Sci USA. 2013;110:14062–14068. doi: 10.1073/pnas.1212729110.

- 9. Lupia A. Communicating science in politicized environments. Proc Natl Acad Sci USA. 2013;110:14048–14054. doi: 10.1073/pnas.1212726110.

- 10. Reyna VF. A new intuitionism: Meaning, memory, and development in Fuzzy-Trace Theory. Judgm Decis Mak. 2012;7(3):332–339.

- 11. von Winterfeldt D. Bridging the gap between science and decision making. Proc Natl Acad Sci USA. 2013;110:14055–14061. doi: 10.1073/pnas.1213532110.

- 12. Raiffa H. Decision Analysis. Reading, MA: Addison-Wesley; 1968.

- 13. Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux; 2011.

- 14. Morgan MG, Henrion M. Uncertainty. New York: Cambridge University Press; 1990.

- 15. O'Hagan A, et al. Uncertain Judgements: Eliciting Expert Probabilities. Chichester, UK: Wiley; 2006.

- 16. Campbell P. Understanding the receivers and the reception of science’s uncertain messages. Philos Trans R Soc. 2011;369:4891–4912. doi: 10.1098/rsta.2011.0068.

- 17. Fischhoff B. Giving advice: Decision theory perspectives on sexual assault. Am Psychol. 1992;47(4):577–588. doi: 10.1037//0003-066x.47.4.577.

- 18. Downs JS, et al. Interactive video behavioral intervention to reduce adolescent females' STD risk: A randomized controlled trial. Soc Sci Med. 2004;59(8):1561–1572. doi: 10.1016/j.socscimed.2004.01.032.

- 19. Fischhoff B. Value elicitation: Is there anything in there? Am Psychol. 1991;46(8):835–847.

- 20. Lichtenstein S, Slovic P, editors. The Construction of Preferences. New York: Cambridge Univ Press; 2006.

- 21. Morgan MG, Fischhoff B, Bostrom A, Atman C. Risk Communication: The Mental Models Approach. New York: Cambridge Univ Press; 2001.

- 22. Merton RK. The focussed interview and focus groups. Public Opin Q. 1987;51(4):550–566.

- 23. Bruine de Bruin W, Downs JS, Fischhoff B, Palmgren C. Development and evaluation of an HIV/AIDS knowledge measure for adolescents focusing on misconceptions. J HIV AIDS Prev Child Youth. 2007;8(1):35–57.

- 24. Bruine de Bruin W, Fischhoff B, Brilliant L, Caruso D. Expert judgments of pandemic influenza risks. Glob Public Health. 2006;1(2):178–193. doi: 10.1080/17441690600673940.

- 25. Kent S. Words of estimative probability. In: Steury DP, editor. Sherman Kent and the Board of National Estimates: Collected Essays. Washington, DC: Central Intelligence Agency; 1994. pp. 151–166. Available at https://www.cia.gov/library/center-for-the-study-of-intelligence/csi-publications/books-and-monographs/sherman-kent-and-the-board-of-national-estimates-collected-essays/6words.html. Accessed July 26, 2013.

- 26. Wallsten T, Budescu D, Rapoport A, Zwick R, Forsyth B. Measuring the vague meanings of probability terms. J Exp Psychol Gen. 1986;115:348–365.

- 27. Fischhoff B. Communicating uncertainty: Fulfilling the duty to inform. Issues Sci Technol. 2012;28(4):63–70.

- 28. Keysar B, Lin S, Barr DJ. Limits on theory of mind use in adults. Cognition. 2003;89(1):25–41. doi: 10.1016/s0010-0277(03)00064-7.

- 29. Pronin E. How we see ourselves and how we see others. Science. 2008;320(5880):1177–1180. doi: 10.1126/science.1154199.

- 30. Nickerson RS. How we know – and sometimes misjudge – what others know. Psychol Bull. 1999;125(6):737–759.

- 31. Dawes RM, Mulford M. The false consensus effect and overconfidence. Organ Behav Hum Decis Process. 1996;65(3):201–211.

- 32. Wessely S. Don't panic! Short and long-term psychological reactions to the new terrorism. J Ment Health. 2005;14(1):1–6.

- 33. Silver RC, editor. Special Issue: 9/11: Ten Years Later. Am Psychol. 2011;66(6).

- 34. Fischhoff B. Assessing adolescent decision-making competence. Dev Rev. 2008;28(1):12–28.

- 35. Reyna V, Farley F. Risk and rationality in adolescent decision making: Implications for theory, practice, and public policy. Psychol Public Interest. 2006;7(1):1–44. doi: 10.1111/j.1529-1006.2006.00026.x.

- 36. Fischhoff B. Communicating about the risks of terrorism (or anything else). Am Psychol. 2011;66(6):520–531. doi: 10.1037/a0024570.

- 37. Hasher L, Zacks RT. Automatic processing of fundamental information: The case of frequency of occurrence. Am Psychol. 1984;39(12):1372–1388. doi: 10.1037//0003-066x.39.12.1372.

- 38. Slovic P, editor. Perception of Risk. London: Earthscan; 2001.

- 39. Kahneman D, Tversky A, editors. Choice, Values, and Frames. New York: Cambridge Univ Press; 2000.

- 40. Löfstedt R. Risk Management in Post-Trust Societies. London: Palgrave/MacMillan; 2005.

- 41. Fischhoff B, Brewer N, Downs JS, editors. Communicating Risks and Benefits: An Evidence-Based User’s Guide. Washington, DC: Food and Drug Administration; 2011.

- 42. Fischhoff B. Risk perception and communication unplugged: Twenty years of process. Risk Anal. 1995;15(2):137–145. doi: 10.1111/j.1539-6924.1995.tb00308.x.

- 43. Lerner JS, Keltner D. Fear, anger, and risk. J Pers Soc Psychol. 2001;81(1):146–159. doi: 10.1037//0022-3514.81.1.146.

- 44. Slovic P, editor. The Feeling of Risk. London: Earthscan; 2010.

- 45. Haidt JH. The Righteous Mind. New York: Pantheon; 2012.

- 46. Bartlett FC. Remembering. Cambridge: Cambridge Univ Press; 1932.

- 47. Gentner D, Stevens AL, editors. Mental Models. Hillsdale, NJ: Erlbaum; 1983.

- 48. Johnson-Laird P. Mental Models. New York: Cambridge Univ Press; 1983.

- 49. Downs JS, Bruine de Bruin W, Fischhoff B. Parents’ vaccination comprehension and decisions. Vaccine. 2008;26(12):1595–1607. doi: 10.1016/j.vaccine.2008.01.011.

- 50. Meyer D, Leventhal H, Gutmann M. Common-sense models of illness: The example of hypertension. Health Psychol. 1985;4(2):115–135. doi: 10.1037//0278-6133.4.2.115.

- 51. Kates RW. Hazard and Choice Perception in Flood Plain Management. Chicago: Univ of Chicago Press; 1962. Department of Geography Research Paper No. 78.

- 52. Cohen J, Chesnick EI, Haran D. Evaluation of compound probabilities in sequential choice. Nature. 1971;232(5310):414–416. doi: 10.1038/232414a0.

- 53. Slovic P, Fischhoff B, Lichtenstein S. Accident probabilities and seat belt usage: A psychological perspective. Accid Anal Prev. 1978;10:281–285.

- 54. Weinstein ND, Kolb K, Goldstein BD. Using time intervals between expected events to communicate risk magnitudes. Risk Anal. 1996;16(3):305–308. doi: 10.1111/j.1539-6924.1996.tb01464.x.

- 55. Wagenaar W, Sagaria S. Misperception of exponential growth. Percept Psychophys. 1975;18(6):416–422.

- 56. Sterman JD, Sweeney LB. Cloudy skies: Assessing public understanding of global warming. Syst Dyn Rev. 2002;18(2):207–240.

- 57. Budescu DV, Broomell S, Por HH. Improving communication of uncertainty in the reports of the intergovernmental panel on climate change. Psychol Sci. 2009;20(3):299–308. doi: 10.1111/j.1467-9280.2009.02284.x.

- 58. Politi MC, Han PKJ, Col NF. Communicating the uncertainty of harms and benefits of medical interventions. Med Decis Making. 2007;27(5):681–695. doi: 10.1177/0272989X07307270.

- 59. Nisbett RE, Ross L. Human Inference. Englewood Cliffs, NJ: Prentice-Hall; 1980.

- 60. Merz J. An empirical analysis of the medical informed consent doctrine. Risk. 1991;2(1):27–76.

- 61. Feldman-Stewart D, et al. A systematic review of information in decision aids. Health Expect. 2007;10(1):46–61. doi: 10.1111/j.1369-7625.2006.00420.x.

- 62. Scheufele DA. Communicating science in social settings. Proc Natl Acad Sci USA. 2013;110:14040–14047. doi: 10.1073/pnas.1213275110.

- 63. Eveland WP, Cooper KE. An integrated model of communication influence on beliefs. Proc Natl Acad Sci USA. 2013;110:14088–14095. doi: 10.1073/pnas.1212742110.

- 64. Christakis NA, Fowler J. Connected. New York: Little, Brown; 2009.

- 65. Brossard D. New media landscapes and the science information consumer. Proc Natl Acad Sci USA. 2013;110:14096–14101. doi: 10.1073/pnas.1212744110.

- 66. Nisbet MC, Kotcher J. A two step flow of influence? Opinion-leader campaigns on climate change. Science Communication. 2009;30:328–358.

- 67. Casman EA, Fischhoff B, Palmgren C, Small MJ, Wu F. An integrated risk model of a drinking-water-borne cryptosporidiosis outbreak. Risk Anal. 2000;20(4):495–511. doi: 10.1111/0272-4332.204047.

- 68. Winterstein AG, Kimberlin CL. Usefulness of consumer medication information dispensed in retail pharmacies. Arch Intern Med. 2010;170:1314–1324. doi: 10.1001/archinternmed.2010.263.

- 69. Wogalter M, editor. The Handbook of Warnings. Hillsdale, NJ: Lawrence Erlbaum Associates; 2006.

- 70. Riley DM, Fischhoff B, Small MJ, Fischbeck P. Evaluating the effectiveness of risk-reduction strategies for consumer chemical products. Risk Anal. 2001;21(2):357–369. doi: 10.1111/0272-4332.212117.

- 71. Eggers SL, Fischhoff B. A defensible claim? Behaviorally realistic evaluation standards. J Public Policy Mark. 2004;23:14–27.

- 72. Fischhoff B. Nonpersuasive communication about matters of greatest urgency: Climate change. Environ Sci Technol. 2007;41(21):7204–7208. doi: 10.1021/es0726411.

- 73. Kunreuther H, Michel-Kerjan E. People get ready: Disaster preparedness. Issues Sci Technol. 2011;28(1):1–7.

- 74. Peters HP. Gap between science and media revisited: Scientists as public communicators. Proc Natl Acad Sci USA. 2013;110:14102–14109. doi: 10.1073/pnas.1212745110.

- 75. Yocco V, Jones EC, Storksdieck M. Factors contributing to amateur astronomers' involvement in education and public outreach. Astronomy Education Review. 2012;11(1):010109.

- 76. Canadian Standards Association. Risk Management: Guidelines for Decision Makers (Q850). Ottawa, Canada: Canadian Standards Association; 1997.

- 77. National Research Council. Understanding Risk. Washington, DC: National Academy Press; 1996.

- 78. Presidential/Congressional Commission on Risk Assessment and Risk Management. Risk Management. Washington, DC: Environmental Protection Agency; 1998.

- 79. Ocean Health Index. Available at www.oceanhealthindex.org/. Accessed July 26, 2013.

- 80. Halpern BS, et al. Elicited preferences for components of ocean health in the California Current. Mar Policy. 2013;42(1):68–73.

- 81. Dietz T, Stern P, editors. Public Participation in Environmental Assessment and Decision Making. Washington, DC: National Academy Press; 2008.

- 82. Rosa EA, et al. Nuclear waste: Knowledge waste? Science. 2010;329(5993):762–763. doi: 10.1126/science.1193205.

- 83. Blue Ribbon Commission on America’s Nuclear Future. Report to the Secretary of Energy. Washington, DC: Department of Energy; 2012.

- 84. Fischhoff B, Kadvany J. Risk: A Very Short Introduction. Oxford: Oxford Univ Press; 2011.