Abstract

This essay examines the societal dynamics surrounding modern science. It first discusses a number of challenges facing any effort to communicate science in social environments: lay publics with varying levels of preparedness for fully understanding new scientific breakthroughs; the deterioration of traditional media infrastructures; and an increasingly complex set of emerging technologies that are surrounded by a host of ethical, legal, and social considerations. Based on this overview, I discuss four areas in which empirical social science helps clarify intuitive but sometimes faulty assumptions about the social-level mechanisms of science communication and outline an agenda for bench and social scientists—driven by current social-scientific research in the field of science communication—to guide more effective communication efforts at the societal level in the future.

Keywords: public opinion, mass media, journalism, communication theory

In 1999, Cornell entomologist John Losey and colleagues (1) published a Scientific Correspondence in the journal Nature outlining results from laboratory studies that suggested that Bacillus thuringiensis (Bt)-transgenic corn might have harmful effects on Monarch butterfly larvae. The report triggered an intense academic debate, including criticism from some of Losey’s own colleagues at Cornell, who raised methodological concerns about the generalizability of laboratory-based findings (2). Other criticisms focused on the fact that an earlier version had been rejected as a research article by the journal Science (3) and was now being published as a Correspondence piece in Nature after potentially much less rigorous peer review (4).

This technical debate among a small group of specialized scientists was largely glossed over by the news outlets covering the Nature piece. Instead, USA Today’s front page made the sweeping announcement that “Engineered corn kills butterflies” (5), and the Washington Post pitted “biotech” researchers against the monarch butterfly, the “‘Bambi’ of insects” (6).

This disconnect between scientific discourse and public debate highlights two important points about the societal dynamics surrounding science communication. First, communication disconnects between science and the public can have immense impacts on markets and policy debates. In fact, a number of scientists argued that the media debate about Bt corn had done irreparable damage to the emerging scientific field of genetic engineering: “[I]mmediately after publication of the Nature correspondence, there was a nearly 10% drop in the value of Monsanto stock, possible trade restrictions by Japan, freezes on the approval process for Bt-transgenic corn by the European Commission (Brussels), and calls for a moratorium on further planting of Bt-corn in the United States” (2).

Second, the way new technologies or scientific breakthroughs are communicated in social settings is at least as important as the scientific content that is being conveyed when lay audiences interpret new technologies or make decisions about public funding for science.

The success of Greenpeace’s “Frankenfood” campaign is a good illustration. The campaign invoked the imagery of Frankenstein’s monster by inventing “Tony the Frankentiger” as a fictitious spokesperson for genetically modified foods—or Frankenfoods, for short. Hearing the term Frankenfood likely triggers a series of socially and culturally shared interpretive schemas in an audience member’s head, ranging from “playing god” to “runaway science” and the notion of “unnatural, artificial” food (7). And the use of metaphors or allegories by journalists, such as the “‘Bambi’ of insects” headline in the Washington Post article, plays to similar culturally shared imagery.

Unfortunately, some of the public statements made by scientists during the Bt corn debate also demonstrated how difficult it can be for scientists to present their work in ways that resonate with lay audiences. When pressed by a journalist about the impacts of Bt-transgenic corn on larvae of monarch butterflies, for example, Cornell entomologist Tony Shelton dismissed the concerns by asking, “[H]ow many monarchs get killed on the windshield of a car?” (as cited in ref. 8). This highly publicized statement unintentionally distilled two competing metaphors: the beloved monarch butterfly (or the “Bambi” of butterflies), on the one hand, and the image of a heartless scientist, on the other hand, who is not concerned at all about the impacts that his or her work has on society.

This paper explores some of the societal complexities that surround science communication, especially during controversies such as the Bt corn debate: an inattentive public, increasingly complex and fast-moving scientific developments, and the decline of science journalism in traditional news outlets. Based on this overview, I outline four areas in which empirical social science has helped clarify sometimes faulty intuitive assumptions about the mechanisms of science communication in societal contexts. I will close with a set of recommendations about building and sustaining better science–society interfaces in the future.

Science in Modern Communication Environments

Disconnects between science and the societal environment within which it operates, of course, are not new. The Roman Inquisition’s prosecution of Galileo Galilei was probably one of the earliest run-ins that modern science had with the values, beliefs, and social norms of its time. In modern democracies, of course, the public plays a central role in determining how science is funded, used, and regulated. This democratic decision making about regulatory and funding infrastructures for science can pose challenges for some issues, such as evolution, where public acceptance lags far behind scientific consensus (9). However, public engagement can also serve as an important regulatory mechanism in instances when scientific recommendations may not serve the larger public good (10). All of these dynamics are indicative of political and social environments that—at least in their current constellation—create new sets of challenges when it comes to the societal debates surrounding complex and sometimes controversial science. Three challenges are particularly worth highlighting.

Preparedness for New Scientific Information.

The first challenge relates to a US citizenry that is less accepting of scientific facts than the citizenries of other nations. Comparative surveys in Europe and the United States, for example, show that “one in three American adults firmly rejects the concept of evolution, a significantly higher proportion than found in any western European country” (9). Data on levels of information about science show similar patterns.

Since 1979, the National Science Board has conducted biennial trend surveys that have tracked, among other variables, knowledge levels, understanding, and attitudes toward science among the American public. Known as the “Science and Engineering Indicators,” these surveys show that levels of knowledge of basic scientific facts among the American public have traditionally been quite low. Between 1992 and 2010, the dates for which comparable data were collected, knowledge levels have stayed fairly stable, with US adults being able to answer an average of 59% of factual knowledge questions correctly in 1992 and 63% in 2010 (11). For example, respondents were asked whether the earth goes around the sun or vice versa, which 73% of respondents were able to answer correctly, and how long it takes for the earth to go around the sun (asked only of people who answered the previous question correctly). Sixty-three percent of respondents answered the second question correctly.

Some scholars have rightfully pointed out that the ability to answer factual questions about scientific topics may not be the best indicator of what lay publics can realistically be expected to know or normatively should know about emerging science (12). However, results from the same survey show that a lack of scientific understanding among many members of the US public goes beyond factual recall. Many members of the public lack the ability to differentiate a sound scientific study from a poorly conducted one and to understand the scientific process more broadly. In the most recent iteration of the Science and Engineering Indicators survey, for example, only two thirds of respondents (66%) had a correct understanding of the concept of probability, 51% were able to pick the correct definition of an experiment, and only 18% could correctly describe the components of a scientific study (11).

Nature of Modern Science.

The lack of scientific literacy among nonexpert publics and their limited frameworks for processing new scientific information are of particular concern, given the scientific and policy uncertainties surrounding many areas of emerging science. In fact, we live in a world of what some have called postnormal science, i.e., technologies and scientific breakthroughs for which scientific “facts are uncertain, values in dispute, stakes high and decisions urgent” (13).

Nanotechnology is just one recent example of postnormal science. The technology involves the observation and modification of materials at the scale of 1–100 nanometers, with a nanometer being a billionth of a meter. Although well over 1,500 nano-based applications are available on the consumer end market today, ranging from cosmetics to automobile products, sporting goods, and foods (14), a recent National Research Council report raised serious concerns about the ongoing uncertainties surrounding engineered nanomaterials. In fact, the report implicitly describes nanotechnology as an example of postnormal science, i.e., a technology that is characterized by significant scientific uncertainties and high-stakes, urgent policy choices: “Despite some progress in assessing research needs and in funding and conducting research,” the report states, “developers, regulators, and consumers of nanotechnology-enabled products remain uncertain about the variety and quantity of nanomaterials in commerce or in development, their possible applications, and any potential risks” (15).

However, nanotechnology is only one part of what has been described as a broader Nano-Bio-Info-Cogno (NBIC) convergence across scientific disciplines (16). This NBIC convergence involves rapidly emerging intersections among fields, such as biology, nanotechnology, or information science. In addition to the scientific complexities surrounding each of their components, NBIC technologies also confront nonexpert publics with an increasingly complex set of decisions about the ethical, legal, and social implications (ELSI) of emerging interdisciplinary research areas, such as Big Data or synthetic biology, but also political programs, such as President Obama’s recent initiative to invent and refine new technologies to understand the human brain.

Given their rapid development and transdisciplinary nature, emerging NBIC technologies have the potential to further complicate the challenges that postnormal science poses for lay audiences (17). The scientific uncertainties surrounding the toxicity of novel nanomaterials, the value-based debates around the potential creation of artificial life in the laboratory, or the urgency of developing policy frameworks for patenting naturally occurring and synthetic human genes are just a few recent examples.

Crumbling Science–Public Infrastructures.

A third challenge relates to the rapid decline of traditional infrastructures for bridging public–science divides. We are in the midst of a tectonic transformation of our traditional media infrastructures and many of the sources for science news that nonexpert audiences have traditionally relied on. Many of these shifts are discussed in greater detail in Dominique Brossard’s article in this colloquium issue (18), but three overall trends are worth highlighting in the context of this broader overview.

A first trend relates to shrinking audiences for traditional print and broadcast media, especially for news about science and technology. Recent studies have shown significant shifts among audiences away from traditional news (mostly television and newspapers) as primary sources for scientific information and toward news diets that are heavily supplemented by or rely exclusively on online sources as the primary source for scientific information. Most of this development is due to cohort shifts, especially among younger audiences, who are growing up without news diets dominated by print newspapers or television and are therefore significantly more likely to develop news use habits based on online-only sources for science news or at least to supplement use of traditional outlets with online sources (11, 19).

In fact, the most recent set of Science and Engineering Indicators data, collected in 2010, marked the first time that Americans were about equally likely to rely on the Internet (35%) and on television (34%) as their primary source for news about science and technology. These results mark an increase of about 6 percentage points for the Internet and a drop of about 5 percentage points for television from 2 y earlier. The increasing importance of the Internet as an everyday source of information becomes even clearer when Americans are asked where they turn when wanting to “learn about scientific issues such as global warming or biotechnology.” Nearly three in five (59%) Americans cite the Internet as their primary source, with television coming in a distant second at 15% (11).

It is difficult to disentangle, of course, whether audiences increasingly migrate to online channels in response to traditional outlets offering less science-related content or vice versa. What is clear, however, is that audience shifts for science and technology news have coincided with a second trend: the shrinking size of news holes devoted to science and technology. The shrinking amount of news in traditional outlets is not a problem that affects only science and technology news. News holes in general, i.e., the number of column inches devoted to news in print or the time available for news on television, are shrinking. Newsweek, for example, published its final print issue in December 2012, and even bigger daily newspapers, such as the Detroit Free Press, have reduced home delivery to three issues a week (20). Some global outlets, such as The Economist, and national papers in countries such as Germany have been less affected by these trends but have also supplemented their print editions with paywalled online and mobile editions. Most newspapers that do continue to publish print editions, however, have had to severely cut back on the amount of science- and technology-related news they are able to print. In 1989, for example, 95 newspapers had weekly science sections. This number dropped to 34 in 2005 and, this year, is down to only 19 newspapers that still publish weekly science sections (21).

The deterioration of traditional media infrastructures also contributes to a third trend: the disappearance of trained science journalists in traditional newsrooms. This trend has affected television outlets, such as CNN, which in 2008 cut its entire science, technology, and environment news staff, including Miles O’Brien, its chief technology and environment correspondent (22), but also print newspapers, such as The New York Times, which earlier this year dismantled its environmental desk and reassigned its seven reporters and two editors to other sections of the newspaper (23).

The trend among many media organizations to no longer use (full-time) science journalists raises a series of concerns, given the important roles that these journalists have traditionally played as translators of complex scientific phenomena into formats that attract interest and are easily digestible by nonexpert audiences. The dwindling number of full-time science journalists is particularly problematic for issues, such as nanotechnology, that combine complex basic research, high levels of scientific uncertainty, and multifaceted policy dilemmas.

A recent content analysis of over 20 y of newspaper coverage of nanotechnology in the United States, for instance, examined the proportion of journalists who wrote regularly about the issue during this time period (24). Among the 656 journalists in the sample, only about 6% (38 journalists) had written at least six articles over the roughly 20-y time period the study covered, and only about 1% (7 journalists) had written at least 25 articles. Seven in ten (70%) journalists identified in the study had written only one nanotechnology article over the roughly 20-y time period analyzed.

Two aspects of these findings warrant particular attention. First, the vast majority of articles on emerging technologies are written by reporters whose primary responsibilities do not involve scientific topics, including fashion editors running stories on nano-based cosmetics and sports writers summarizing the latest nanomaterials used for tennis rackets or downhill skis. Second, even the seven most prolific writers in the area of nanotechnology averaged only a little over one story per year. This number illustrates how small a proportion of the news hole is occupied by scientific breakthroughs, such as nanotechnology. As of today, two of the seven most prolific science journalists identified by the study, the Washington Post’s Rick Weiss and the New York Times’ Barnaby Feder, no longer work as science journalists.

Science–Public Interfaces: Intuition vs. Social Science

Ralph Cicerone, President of the National Academy of Sciences, identified many of these problems facing the science–public interface in an editorial back in 2006. Disappearing news holes for science and the thinning ranks of science journalists led him to attribute some responsibility for bridging science–public divides to scientists themselves who—he argued—“must do a better job of communicating directly to the public” (25). In a 2007 keynote address at the annual meeting of the American Association for the Advancement of Science (AAAS), Google cofounder Larry Page echoed those arguments and bluntly accused science of having a “serious marketing problem” (26).

However, the notion of scientists at least partially filling the void left by traditional news outlets comes with its own set of potential pitfalls. First, the structure and rewards systems of academic research institutions are not particularly conducive to encouraging bench scientists and engineers to engage with nonexpert publics (27, 28). In fact, university tenure and promotion guidelines more often reward securing extramural research funding and publishing in high-impact journals than they promote public scholarship and communication with nonexpert publics. One of Larry Page’s suggestions in his AAAS keynote was therefore to directly tie the awarding of tenure and grant money to the media impact that a scientist’s research program has.

Regardless of the likelihood of academic reward structures changing in the short term, Page’s idea highlights a second complexity of scientists directly engaging with the public: their scientific training and the internalized norms about communicating within their peer communities that result from it. As part of their socialization into the field of science, young scholars are trained to analyze, present, and communicate scientific data to their scientific peers in ways that overcome all of the shortcomings of subjective human inquiry and lay communication (29). As a result, the very same conventions and skill sets that are invaluable for publications in peer-reviewed journals and proposals for extramural research grants become potential liabilities when it comes to scientists communicating with nonexpert audiences whose cognitive frameworks and communication patterns are directly at odds with many of these scientific conventions.

As a result, AAAS, the National Science Foundation, and many universities have begun to implement various practical training programs to teach science, technology, engineering, and math (STEM) scientists how to interact with journalists or other nonacademic audiences. These programs tend to be taught by practitioners and focus on establishing best practices among scientists for interacting with lay audiences or journalists and typically build little capacity for long-term or short-term empirical evaluations of the outcomes of these ad hoc communication efforts. Although these efforts to build practical day-to-day communication skills are laudable, they do not address a third complexity related to scientists engaging in communication with nonexpert publics: lack of interaction between bench scientists and engineers, on the one hand, and social scientists, on the other. As a result, efforts to bridge science–public disconnects are often less informed than they could be by the large body of research on the individual-level mechanisms underlying human decision making about science, the communication dynamics surrounding emerging technologies at both the group and societal level, and the impacts that the various interfaces between mass media, political stakeholders, and the scientific enterprise can have on public opinion.

A Few Areas That Require Us to Rethink Our Assumptions—and the Empirical Social Science That Tells Us Why

The May 2012 Sackler Colloquium that this special issue is based on was a first attempt to provide an overview of this research and to establish a more formal exchange among social scientists, bench scientists, and engineers. To contextualize and highlight the importance of the various review articles in this colloquium issue, I will discuss four areas in which systematic input from the social sciences will be particularly useful for building and sustaining more effective science–public interfaces. Each of the four areas originates from assumptions that make a lot of intuitive sense but are often not supported by empirical social science.

Assumption 1: Knowledge Deficits Are Responsible for a Lack of Public Support of Science.

Many efforts to build bridges between science and nonexpert audiences have focused on what have been labeled “knowledge deficit models” (30, 31). Reinforced by a number of government reports in Europe and the United States in the 1980s and 1990s, knowledge deficit models attribute a lack of public support for emerging technologies to insufficient information (or a knowledge deficit) among nonexpert publics. Effective communication, based on this logic, is about explaining the science better or about “selling science,” as Dorothy Nelkin called it, to ultimately build public support for the scientific enterprise (32). Aside from the obvious normative concerns about scientists engaging in the “selling” or “marketing” of science, however, results from empirical studies raise at least two concerns about the usefulness of knowledge deficit models more broadly.

First, empirical support for the statistical relationship between levels of information among nonexpert publics and their attitudes toward scientific issues is mixed at best. Over time, different researchers found that levels of knowledge can lead to more positive public attitudes toward science or undermine support for science, depending on the particular scientific issue people were debating. In fact, for controversial science topics the relationship between literacy and attitudes approaches zero (33). The most recent updates on this literature suggest that—regardless of issue—the relationship disappears or is significantly weakened after we control for factors such as deference toward scientific authority, trust in scientists, issue involvement (28), and levels of knowledge surrounding the political infrastructures in which science is debated (31).

I do not mean to suggest that higher levels of scientific knowledge among the general public are not inherently desirable or that informal and formal science education efforts are not crucially important for contributing to a more informed citizenry. Previous research does not support the notion, however, that increasing public understanding will also lead to more public “buy-in” for science.

A second concern relates to the potential unintended consequences of narrowly promoting (informal) learning as an outcome variable without taking into account the broader societal infrastructures in which learning takes place. One illustration is trends in attendance levels in science and technology museums, tracked in the Science and Engineering Indicators datasets. Between 2006 and 2008, for example, attendance in science and technology museums stagnated at around 8% among the least educated segment in the US population (respondents who did not finish high school). Attendance among the most highly educated segment (respondents with a BA degree or higher) increased from 37% in 2006 to 43% in 2008 (34, 35).

Given the complex interplay of influences on museum attendance over time, it is important not to overinterpret this finding by itself. It does suggest two things, however. First, even the most well-intended efforts to inform the least-educated segments of citizens will have limited reach unless they are based on empirical data on how to best reach these audiences. In fact, even among respondents with at least a college degree, attendance at least once a year was below 50% on average. Second, the data also show a widening attendance gap between 2006 and 2008, with the least-educated segment staying at 8% and the most highly educated segment increasing attendance by about six percentage points.
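The widening gap can be made explicit with a few lines of arithmetic. The following is only an illustrative sketch: it uses the attendance shares quoted above (8% in both years for respondents who did not finish high school; 37% and 43% for respondents with a BA or higher), and the variable and function names are hypothetical labels chosen for this example, not part of the Science and Engineering Indicators datasets.

```python
# Attendance shares (percent of each education segment), as quoted in the text.
# The dictionary keys are illustrative labels, not official survey variable names.
attendance = {
    2006: {"no_high_school": 8, "ba_or_higher": 37},
    2008: {"no_high_school": 8, "ba_or_higher": 43},
}


def attendance_gap(year_data):
    """Return the gap, in percentage points, between the two segments."""
    return year_data["ba_or_higher"] - year_data["no_high_school"]


# Gap per survey year, and how much it changed between the two years.
gaps = {year: attendance_gap(data) for year, data in attendance.items()}
widening = gaps[2008] - gaps[2006]

print(f"Gap in 2006: {gaps[2006]} percentage points")
print(f"Gap in 2008: {gaps[2008]} percentage points")
print(f"Change in gap: {widening} percentage points")
```

Expressing the difference in percentage points rather than as a relative percentage keeps the comparison between segments unambiguous.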

Education-based gaps in knowledge are a phenomenon that communication researchers have been studying in the fields of health and political communication since the 1970s under the label “knowledge gaps.” When tracking the dissemination and adoption of health information in communities over time, scholars noticed that, “[a]s the infusion of mass media information into a social system increases, segments of the population with higher socioeconomic status tend to acquire this information at a faster rate than the lower status segments, so that the gap in knowledge between these segments tends to increase rather than decrease” (36). In other words, highly educated people are able to extract information they receive from museums, media, or other informational sources more efficiently and therefore learn more quickly than their less-educated counterparts.

And national surveys tracking the US public’s factual knowledge on nanotechnology show patterns directly consistent with knowledge gap phenomena. Although many researchers have bemoaned low and stagnant levels of awareness and knowledge about nanotechnology over time (37–39), recent analyses show that empirical patterns are more complex. In particular, as more and more nanotechnology-based products have arrived on the consumer end market and agencies such as the Food and Drug Administration and Environmental Protection Agency have struggled with developing adequate regulatory models, knowledge levels about nanotechnology, measured as the number of correct responses on a true/false knowledge scale, increased somewhat among the most highly educated segment of the population. Among the least-educated segment, however, knowledge levels dropped, effectively producing a widening informational gap between the already information rich and the information poor (40).

These findings highlight the pitfalls of assuming that simply making scientific information widely available through museums, Web sites, and other tools will attract audiences equally across sociodemographic strata. These results also reinforce the need for scientists and policy makers to understand the large body of literature and empirical findings surrounding the dissemination and uptake of scientific information in different social structures.

Assumption 2: Declining Levels of Trust Threaten Public Support for Science.

A second assumption is based on the important role that public trust in science can play in shaping public attitudes about specific emerging technologies. Levels of trust in scientists and the scientific enterprise have long been shown to be associated with more positive attitudes toward specific technologies (41, 42). More recently, concerns have been raised about potential partisan divides in the United States with respect to confidence in the scientific enterprise. In fact, data from the General Social Survey (GSS) show a widening rift in confidence between Republicans, who showed a significant decline in confidence in science since 1974, and Democrats, whose levels of confidence on average have increased since 1974 (43). However, a more careful look across different studies in communication and political science shows that this phenomenon may be neither surprising nor particularly disconcerting.

First, a recent national survey tracking US opinions on climate change showed that frequent users of partisan media were also more polarized along ideological lines with respect to trust in scientists as information sources. In particular, respondents who regularly turned to Fox News and The Rush Limbaugh Show were significantly less likely to trust scientists as a source of information about global warming. In contrast, frequent audiences of CNN, MSNBC, National Public Radio, and network news were significantly more likely to trust scientists as sources of information about climate change (44). This pattern, of course, directly parallels the widening gaps between liberals and conservatives observed in the GSS data.

And the fact that partisan news outlets (re)shape and polarize confidence in institutions on both sides of the political aisle is not particularly surprising, given the increasingly fragmented news environment in the United States that maximizes profits by tailoring news toward highly partisan audiences (45). Or, as MSNBC talk show host Rachel Maddow put it in a lecture at Harvard: “Opinion-driven media makes the money that politically neutral media loses” (46). For partisan media to attract like-minded audiences and further polarize their perceptions of highly politicized scientific issues, such as climate change or embryonic stem cell research, is therefore not an unintended consequence of this new type of journalism. It is part of its business model.

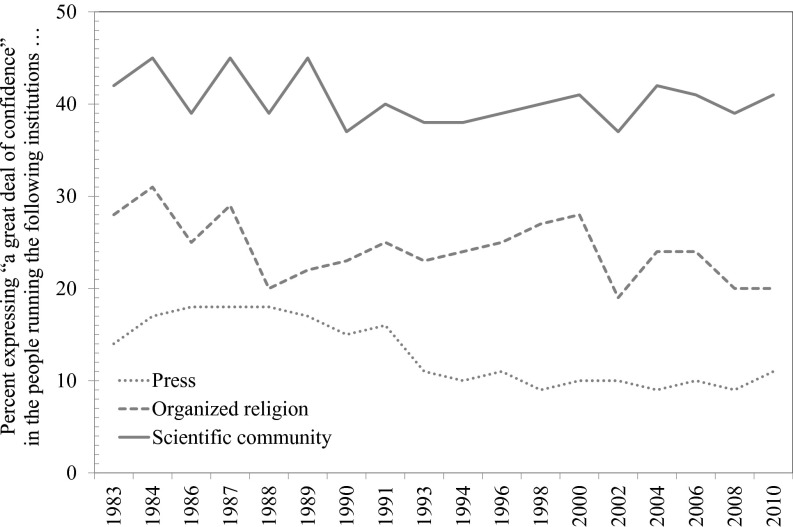

Second, despite this media-driven polarization, levels of trust in science among the general public have remained fairly stable. In fact, national surveys show that, even for postnormal scientific issues, such as nanotechnology, university scientists remain among the most trusted sources of information, ahead of industry scientists, consumer organizations, regulatory agencies, and news media (47). In addition, Fig. 1 shows a comparison of confidence in the people running different institutions, based on the same GSS datasets described earlier. The data plot only those respondents who have expressed “high” (as opposed to “some”) confidence in the people running each institution. The graphs in Fig. 1 show that confidence in science has been fairly stable and even increasing slightly since the early 1990s, with temporary slumps after September 11, 2001, and the banking crash and subsequent recession of 2008. Religious organizations and the press are plotted for comparison purposes. Both institutions enjoy much lower levels of public confidence and, in the case of the press, a significant decline in confidence since the mid-1980s.

Fig. 1. Levels of confidence in US institutions over time. Note: Data are based on National Opinion Research Center in-person interviews with national adult samples, collected as part of a continuing series of social indicators since 1972.

Third, a growing body of research suggests that temporary fluctuations in levels of trust or confidence, potentially driven by events like “Climategate” or highly politicized scientific debates surrounding vaccines, are less important in shaping attitudes than are more stable beliefs in what has been labeled the cultural authority of science (43) or deference toward scientific authority (48). Strongly correlated with formal education, both in general and in science-related fields, deference toward scientific authority represents the belief that the processes, norms, and structures of the scientific enterprise produce outcomes that are, by definition, in the broader public interest and superior to other forms of systematic inquiry. As a stable predisposition toward science as an institution, deference toward scientific authority has been linked to more positive attitudes toward issues like nanotechnology, agricultural biotechnology, and stem cell research both directly (48–51) and indirectly through its influence on less stable dispositions, such as trust in scientists (48, 49).

Assumption 3: (Mass) Media’s Main Function Is to Inform the Public About Science.

The important role that media can play in polarizing audience views on science already highlights the pitfalls of a third assumption: the idea that media’s role in public debates around science is primarily that of a conveyor of scientific information.

This is not to say that news media do not play a crucially important role as informational conduits between complex and often uncertain science, as described earlier, and a public who on average have little formal science training and a limited understanding of the scientific process. Unfortunately, however, only a small minority of the US public takes advantage of media as a conveyor of scientific information. The percentage of Americans who report paying “very close” attention to science and technology news, for example, has dropped from 22% in 1998 to just 13% in 2010 (11). That decline has gone hand in hand with less and less coverage of science by traditional media.

Some scholars argue that it makes rational sense for audiences to limit the amount of effort they invest in seeking and processing information about complex science. In political campaigns, this idea has often been referred to as low information rationality (52). The concept of low information rationality is based on the assumption that human beings are cognitive satisficers and minimize the economic costs of making decisions and forming attitudes. As undesirable as this behavior may be with respect to the ideal of an informed electorate, it is important to keep in mind that these patterns of information processing make perfect sense for citizens who have to deal with thousands of pieces of new information every day and need to establish patterns of doing so quickly and efficiently.

And the less expertise citizens have on an issue initially, the more likely they will be to rely on such shortcuts as imperfect rules for decision making. Examples include religious or ideological predispositions, other affective and emotional responses, such as perceptions of other people’s opinions or trust in scientists, and a variety of cues from mass media about how to interpret scientific issues (53). Many of the more individual-level shortcuts that help audiences make sense of scientific issues, even in the absence of information, will be discussed in other contributions to this colloquium issue. However, recent research has identified two particularly powerful shortcuts provided to nonexpert audiences by mass media when it comes to scientific issues: cultivation and framing.

Cultivation refers to the idea that entertainment media provide us with powerful long-term shortcuts about the societal realities surrounding us, especially for issues and phenomena we cannot observe directly. First introduced by George Gerbner, cultivation theory was based on the idea that media portrayals of social realities are both ubiquitous and consonant. For instance, audiences might be exposed to consistent images of older, white, male scientists, regardless of which media channel they turn to. Over time, Gerbner argued, these consonant portrayals across different channels “cultivate” particular world views.

Early empirical research on cultivation focused on correlations between people’s perceptions of the likelihood of their becoming the victim of a violent crime, for example, and the time they spent viewing entertainment television (54). Gerbner’s assumptions about the effect of television were based on the “mean world syndrome,” i.e., the idea that television inundates viewers with a stream of consonant portrayals of a violent world. As a result, frequent viewers are more likely to see the world as more dangerous than it really is.

Subsequent empirical work in the 1980s extended the idea of cultivation to the realm of science and highlighted the important role that media have in shaping attitudes toward science through entertainment programming, rather than by informing audiences. Gerbner and his team content-analyzed entertainment television programming to determine whether scientists were portrayed positively or negatively, how the proportion of positive and negative portrayals compared with the portrayals of other professions, and how these portrayals mapped onto people’s confidence in science (55, 56). Results showed that scientists were portrayed in an overall positive light, but that the proportion of negative or quirky portrayals of scientists on television was nonetheless much higher than for other professions (56). As a result, frequent TV viewing was related to less favorable views toward science, especially among respondents whose education levels and other demographic characteristics made them initially more likely to support science (55).

Recent analyses of TV content have shown that scientists are portrayed in a much more positive light nowadays, even in comparison with other professions, than was the case during Gerbner’s earlier fieldwork (57). Despite these more positive portrayals of scientists as a profession, however, surveys continue to show a negative link between frequent TV viewing and beliefs in the promise of science, even after controlling for potential sociodemographic confounds and other types of media use (58). More importantly, Gerbner’s assumptions about the mechanisms behind cultivation continue to be highly relevant in a society in which most members of the public never have the opportunity to observe a laboratory scientist at work. Nevertheless, many of us have a mental image of what a typical scientist looks like and how he or she thinks and acts. Those perceived realities continue to be cultivated by media and provide powerful heuristics when we make policy choices about new technologies or form judgments about how much we trust science as an institution.

And the lessons from this empirical work on cultivation for closing science–public divides continue to have applications today. More than 25 y ago, Gerbner wrote: “In an age when a single episode on prime-time television can reach more people than all science and technology promotional efforts put together, scientists must forget their aversion to the mass media and seek stronger ties with those who write, produce, and direct television news and entertainment programs” (55). Today, the National Academy of Sciences’ collaboration with various directors and writers in Hollywood as part of the Science and Entertainment Exchange is just one example of an initiative that continues to capitalize on the mechanisms behind cultivation by connecting entertainment industry professionals with top scientists and engineers. The goal of this collaborative effort between science and media professionals is to create film and TV programming that combines engaging narratives and storylines with accurate portrayals of science.

The politicization of science has also given prominence to a second and more subtle model for media effects on science: framing (59). The term framing goes back to work in sociology (60) and psychology (61) in the 1970s and in cognitive linguistics in the 1980s (62) and assumes that all human perception is dependent on frames of reference that can be established by presenting information in a particular way. Framing is therefore not concerned with presenting different types of information, but with how the same piece of information can be presented in different ways, and how these differences in presentation can influence how well the message resonates with an underlying cognitive schema (63).

Framing effects are particularly relevant for ambiguous stimuli, i.e., issues or objects that can be interpreted in different ways (64). And, for nonexpert audiences, many emerging technologies are the equivalent of an ambiguous stimulus, especially when they involve preliminary findings or a scientific controversy about the validity of research findings (7). As a result, the terminology or imagery that is being used to describe scientific findings can serve as a very powerful heuristic when audiences are being asked to make judgments about the risks associated with emerging technologies or about regulatory policies to attenuate the risks (53).

Greenpeace’s Frankenfood frame, which was discussed at the outset of this article, is a good illustration of this effect. Without providing additional information, the Frankenfood frame shapes audience attitudes simply by tying the issue of genetically modified foods to existing schemas we all share, such as Frankenstein or runaway science (65). Nanotechnology, in a similar fashion, has been framed as the “next plastic” or the “next asbestos” in public debate, implicitly triggering mental connections to a previous health controversy and specifically the absence of adequate regulatory oversight of asbestos. The phrase also activates the notion that emerging nanotechnologies may open a Pandora’s box of long-term effects that will be unknown for years to come.

It is important to keep in mind, however, that frames are not just tools for strategic communication, but are an integral part of our day-to-day communication. They are therefore also important journalistic tools for translating complex science for often inattentive audiences. A well-framed science story helps readers tie complex scientific phenomena to their everyday experiences and therefore make sense of the potential policy choices or funding decisions surrounding them. In this sense, how scientists frame scientific issues for public audiences is less a matter of being persuasive or of “spinning” science than it is a matter of presenting information in a way that makes it accessible to nonexpert publics.

Assumption 4: Science Should Be Debated in Isolation from Personal Values.

As outlined earlier, when NBIC technologies, such as nanotechnology or synthetic biology, enter the public arena, they trigger an almost instant debate about the ethical, legal, and social implications of their application in society. And many of these debates are less concerned with what science can do than with what science should do. This increasing focus on the societal aspects of emerging science has at least two immediate implications.

First, personal value systems become an important basis for decision making when audiences think about these technologies. The importance of values in public debates is partly due to the rapid development and the scientific complexity of many NBIC technologies. Values or religious beliefs provide citizens with convenient mental shortcuts for judging technologies that are surrounded by a significant degree of scientific uncertainty. And, once scientific issues become more politicized, mass media often make values an even more salient part of the debate by focusing on the conflict between competing value systems in society. A study of the issue cycles surrounding stem cell research, for example, shows that print media covered the scientific potential of a wide variety of stem cells before the early 1990s, but then, driven by the emerging political debate around ethical and religious concerns, refocused almost 75% of their coverage narrowly toward embryonic stem cell research beginning in the early 2000s (66).

The use of values and ideological predispositions as shortcuts, however, is a phenomenon that can also be observed in expert audiences. Research has shown that the scientific uncertainties surrounding modern science make it more likely for scientists themselves to rely on their value systems when asked to judge the policy implications of their work. A recent study of highly cited nano scientists in the United States, for instance, showed that, even after controlling for scientific rank, discipline, and judgments about objective risks and benefits, a scientist’s political ideology continued to significantly predict his or her views on the need for more regulations in the field of nanotechnology (67). In other words, the assumption that societal discussion surrounding science can or should occur in isolation from personal value systems is unrealistic, even for expert publics.

Values and other predispositional influences, however, play a second important role in (re)shaping societal debates about science, beyond simply serving as replacements for information. In particular, recent research has examined the role of values as filtering mechanisms that explain why and how different audiences respond differently to new scientific information (68). Different scholars have offered a variety of labels for this phenomenon, including “perceptual filters” and “cultural cognition” (68, 69). They all tap the same underlying mechanism, however: the idea that all human beings engage to varying degrees in biased information processing, motivated by values, worldviews, normative expectations, or religious beliefs, that ultimately favors goal-supportive evidence over contradictory facts when forming attitudes. As a result, the same scientific facts will mean different things to different audiences, depending on which values or beliefs most motivate their information processing (70).

Recent surveys have shown, for instance, that the relationship between levels of scientific understanding and belief in the impacts of climate change was moderated significantly by egalitarian/hierarchical worldviews. In other words, for respondents with egalitarian worldviews, scientific understanding played a significantly stronger positive role in shaping beliefs in the consequences of climate change than for respondents with hierarchical worldviews (71). Similar processes can be found for other areas of science (68, 72). Survey panel data from the 2004 US presidential election show that respondents with higher levels of understanding of embryonic stem cell research, measured through true/false survey questions similar to the Science and Engineering Indicators measures, were also more likely to support embryonic stem cell research. This knowledge–attitude link, however, was significant only for respondents who self-identified as being not or only somewhat religious. Among highly religious respondents, a better understanding of the scientific facts surrounding stem cell research showed no significant relationship to support for embryonic stem cell research (50).
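
The moderation pattern described above is commonly formalized as an interaction term in a regression model. The following is a minimal sketch of that general form and is not necessarily the specification used in the cited studies. The symbols are notation assumed here for illustration: $Y$ is an attitude or belief, $K$ is scientific understanding, $W$ is a value predisposition (such as worldview or religiosity), and $\varepsilon$ is an error term.

\[
Y = \beta_0 + \beta_1 K + \beta_2 W + \beta_3 (K \times W) + \varepsilon
\]

Under this form, the slope of $K$ is $\beta_1 + \beta_3 W$, so the strength of the knowledge–attitude link depends on the level of $W$. A link that is significant only among less religious respondents, for example, would correspond to a positive slope at low values of $W$ and a slope near zero at high values.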

Dynamic Nature of the Science–Society Interface

This essay provided an overview of the societal dynamics surrounding modern science. It highlighted at least three challenges that any effort to communicate science in social environments needs to grapple with: lay publics with varying levels of preparedness for fully understanding new scientific breakthroughs; crumbling media infrastructures, at least as far as traditional media are concerned; and an increasingly complex set of NBIC technologies that are surrounded by a host of ethical, legal, and social considerations.

Given these complexities, it is more important than ever to base any social-level communication effort about science on a firm empirical understanding of what we know about media, audiences, and the interaction between the two. Toward that end, this essay examined four broad assumptions about science–society interfaces that may have some intuitive validity, but that are at least partly at odds with empirical findings from various fields of social science.

The first assumption refers to knowledge deficit models and their simplistic assumption that more knowledgeable citizens are also more supportive of science. It is important to keep in mind, of course, that the lack of empirical support for a link between knowledge and attitudes discussed here in no way diminishes the importance of an informed citizenry in democratic societies. In fact, as some of the research discussed earlier shows, preventing widening knowledge gaps among groups with different socioeconomic status should be a continued focus of communication researchers and professionals.

Previous research has also highlighted the important role that trust in scientists and in science as an institution plays in shaping public attitudes toward science. Two points are particularly worth highlighting. First, most empirical data do not show declining levels of trust in science in recent years, even though some research suggests that an increasingly polarized political and news environment is also mirrored in more pronounced partisan differences related to trust in science. Second, research suggests that more long-term orientations, such as deference toward science, may be more important than relatively short-term fluctuations in trust. As discussed earlier, deference toward scientific authority taps a general buy-in among citizens to the scientific process and a willingness to defer to scientific expertise in areas they know little about. Initial data show that deference toward scientific authority is strongly linked to formal schooling in K–16, but the processes that help create it in various educational settings are much less well understood.

A third assumption discussed earlier referred to the informational mission of mass media. Without a doubt, raising awareness of new technologies and providing information to audiences continue to be important functions of any form of public communication. The ability of media to push scientific issues to the forefront of public debate, for example, has been well documented in countless studies since the 1970s (65). However, we also know from decades of communication research that media influences are multifaceted and go well beyond simply conveying information. Some efforts spearheaded by the National Academy of Sciences already take advantage of media effects models, such as cultivation, that have demonstrated how entertainment media can have long-term influences on the images audiences have of scientists.

A final assumption deals with the potential clash of social values and scientific research. As previous research has shown, values are important influences on attitudes toward emerging technologies, among both nonexpert and expert audiences, which is partly a function of the speed of development or the complexity of NBIC technologies. It is also a result of the particular questions addressed by NBIC technologies and their real-world applications. Should synthetic biologists create life in the laboratory, for example? Is it a good idea to create nanomaterials that do not exist in nature? And what are the moral considerations surrounding de-extinction, i.e., restoring extinct species of plants or animals by using genetic engineering or related techniques? None of these questions has an exclusively scientific answer; all will require careful societal debates about the amalgam of scientific, political, moral, ethical, and religious questions they raise.

All four assumptions and the research behind them highlight the enormous potential and need for scientists, policy makers, and academics to think creatively about new directions for rebuilding science–society interfaces and for participating in the ongoing debates surrounding emerging technologies. These efforts will have to take into account all of the challenges outlined at the outset of this article, including the nature of emerging technologies, the ongoing transformation of our communication infrastructures, and—most importantly—the insights from social science about nonexpert audiences and their interfaces with other societal stakeholders.

Building formal collaborative infrastructures between the bench and social sciences is crucially important at a time when highly diverse sets of NBIC technologies constantly produce new scientific, social, and political challenges. As a result, academic institutions, funding agencies, and the federal government will have to prioritize institutional capacity building and infrastructure at the science–society interface, including (i) sustained social science efforts surrounding emerging technologies and (ii) formalized interfaces between social and natural sciences. Building these sustainable collaborative infrastructures is not a luxury. It is a necessity, especially as issues like global warming, nanotechnology, regenerative medicine, and agricultural biotechnology are increasingly blurring the lines between science, society, and politics. I hope this colloquium issue will be a first step in this direction by providing an initial overview and starting a conversation about the empirical social science that needs to be part of this infrastructure.

Acknowledgments

Preparation of this paper was supported by National Science Foundation Grant SES-0937591.

Footnotes

The author declares no conflict of interest.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “The Science of Science Communication,” held May 21–22, 2012, at the National Academy of Sciences in Washington, DC. The complete program and audio files of most presentations are available on the NAS Web site at www.nasonline.org/science-communication.

This article is a PNAS Direct Submission. B.F. is a guest editor invited by the Editorial Board.

References

- 1. Losey JE, Rayor LS, Carter ME. Transgenic pollen harms monarch larvae. Nature. 1999;399(6733):214. doi: 10.1038/20338.

- 2. Shelton AM, Roush RT. False reports and the ears of men. Nat Biotechnol. 1999;17(9):832. doi: 10.1038/12779.

- 3. Delborne JA (2005) Pathways of scientific dissent in agricultural biotechnology. PhD dissertation (Univ of California, Berkeley, CA)

- 4. Beringer JE. Cautionary tale on safety of GM crops. Nature. 1999;399(6735):405. doi: 10.1038/20784.

- 5. Fackelmann K (May 20, 1999) Engineered corn kills butterflies, study says. USA Today, p 1A.

- 6. Weiss R (May 20, 1999) Biotech vs. ‘Bambi’ of insects? Gene-altered corn may kill Monarchs. The Washington Post, p A3.

- 7. Nisbet MC, Scheufele DA. The future of public engagement. Scientist. 2007;21(10):38–44.

- 8. Fedoroff NV, Brown NM. Mendel in the Kitchen: A Scientist's View of Genetically Modified Food. Washington, DC: National Academies Press/Joseph Henry Press; 2004.

- 9. Miller JD, Scott EC, Okamoto S. Science communication. Public acceptance of evolution. Science. 2006;313(5788):765–766. doi: 10.1126/science.1126746.

- 10. Wynne B. Misunderstood misunderstanding: Social identities and public uptake of science. Public Underst Sci. 1992;1(3):281–304.

- 11. National Science Board (2012) Science and technology: Public attitudes and understanding. Science and Engineering Indicators 2012 (National Science Foundation, Arlington, VA), Chap 7.

- 12. Brossard D, Shanahan J. Do they know what they read? Building a scientific literacy measurement instrument based on science media coverage. Sci Commun. 2006;28(1):47–63.

- 13. Funtowicz SO, Ravetz JR. Science for the post-normal age. Futures. 1993;25(7):739–755.

- 14. Berube DM, Searson EM, Morton TS, Cummings CL. Project on Emerging Nanotechnologies – consumer product inventory evaluated. Nanotechnol Law Bus. 2010;7(2):152–163.

- 15. National Nanotechnology Initiative. Environmental, Health, and Safety Research Strategy. Washington, DC: National Science and Technology Council; 2011.

- 16. Roco MC, Bainbridge WS. Converging Technologies for Improving Human Performance. Dordrecht, The Netherlands: Kluwer; 2003.

- 17. Khushf G. An ethic for enhancing human performance through integrative technologies. In: Bainbridge WS, Roco MC, editors. Managing Nano-Bio-Info-Cogno Innovations: Converging Technologies in Society. Dordrecht, The Netherlands: Springer; 2006. pp. 255–278.

- 18. Brossard D (2013) New media landscapes and the science information consumer. Proc Natl Acad Sci USA 110:14096–14101.

- 19. Anderson AA, Brossard D, Scheufele DA. The changing information environment for nanotechnology: Online audiences and content. J Nanopart Res. 2010;12(4):1083–1094. doi: 10.1007/s11051-010-9860-2.

- 20. Rich F (April 7, 2013) Inky tears. New York Magazine, pp 22–28.

- 21. Morrison S (January 2, 2013) Hard numbers: Weird science. Columbia Journalism Review.

- 22. Brainard C (December 4, 2008) CNN cuts entire science, tech team. Columbia Journalism Review.

- 23. Oremus W (March 4, 2013) The Times kills its environmental blog to focus on horse racing and awards shows. Slate.

- 24. Dudo AD, Dunwoody S, Scheufele DA. The emergence of nano news: Tracking thematic trends and changes in U.S. newspaper coverage of nanotechnology. Journalism Mass Comm. 2011;88(1):55–75.

- 25. Cicerone RJ. Celebrating and rethinking science communication. In Focus. 2006;6(3):3.

- 26. Ham B (2007) Larry Page: Science's “Serious Marketing Problem.” AAAS News Blog.

- 27. Corley EA, Kim Y, Scheufele DA. Leading U.S. nano-scientists’ perceptions about media coverage and the public communication of scientific research findings. J Nanopart Res. 2011;13(12):7041–7055.

- 28. Dunwoody S, Brossard B, Dudo A. Socialization of rewards? Predicting U.S. scientist-media interactions. Journalism Mass Comm. 2009;86(2):299–314.

- 29. Popper K (1994) Logik der Forschung [The Logic of Scientific Discovery] (Mohr, Tübingen, Germany), 10th Ed.

- 30. Brossard D, Lewenstein B, Bonney R. Scientific knowledge and attitude change: The impact of a citizen science project. Int J Sci Educ. 2005;27(9):1099–1121.

- 31. Sturgis P, Allum N. Science in society: Re-evaluating the deficit model of public attitudes. Public Underst Sci. 2004;13(1):55–74.

- 32. Nelkin D (1995) Selling Science: How the Press Covers Science and Technology (Freeman, New York), Rev Ed.

- 33. Bauer MW. Survey research on public understanding of science. In: Bucchi M, Trench B, editors. Handbook of Public Communication of Science and Technology. New York: Routledge; 2006. pp. 111–129.

- 34. National Science Board (2008) Science and technology: Public attitudes and understanding. Science and Engineering Indicators 2008 (National Science Foundation, Arlington, VA), Chap 7.

- 35. National Science Board (2010) Science and technology: Public attitudes and understanding. Science and Engineering Indicators 2010 (National Science Foundation, Arlington, VA)

- 36. Tichenor PJ, Donohue GA, Olien CN. Mass media flow and differential growth in knowledge. Public Opin Q. 1970;34(2):159–170.

- 37. Peter D. Hart Research Associates (2006) Public awareness of nano grows: Majority remain unaware (Woodrow Wilson International Center for Scholars, Washington, DC)

- 38. Peter D. Hart Research Associates (2007) Poll reveals public awareness of nanotech stuck at low level (Woodrow Wilson International Center for Scholars, Washington, DC)

- 39. Satterfield T, Kandlikar M, Beaudrie CEH, Conti J, Herr Harthorn B. Anticipating the perceived risk of nanotechnologies. Nat Nanotechnol. 2009;4(11):752–758. doi: 10.1038/nnano.2009.265.

- 40. Corley EA, Scheufele DA. Outreach gone wrong? When we talk nano to the public, we are leaving behind key audiences. Scientist. 2010;24(1):22.

- 41. Einsiedel EF. Mental maps of science: Knowledge and attitudes among Canadian adults. Int J Public Opin Res. 1994;6(1):35–44.

- 42. Sjoberg L. Attitudes toward technology and risk: Going beyond what is immediately given. Policy Sci. 2002;35(4):379–400.

- 43. Gauchat G. Politicization of science in the public sphere. Am Sociol Rev. 2012;77(2):167–187.

- 44. Hmielowski JD, Feldman L, Myers TA, Leiserowitz A, Maibach E (2013) An attack on science? Media use, trust in scientists, and perceptions of global warming. Public Underst Sci, doi: 10.1177/0963662513480091.

- 45. Nisbet MC, Scheufele DA. The polarization paradox: Why hyperpartisanship strengthens conservatism and undermines liberalism. Breakthrough Journal. 2012;3:55–69.

- 46. Maddow R (2010) Theodore H. White Lecture on Press and Politics [transcript] (Joan Shorenstein Center on the Press, Politics and Public Policy, Harvard University, Cambridge, MA)

- 47. Corley EA, Kim Y, Scheufele DA. Public challenges of nanotechnology regulation. Jurimetrics. 2012;52(3):371–381.

- 48. Brossard D, Nisbet MC. Deference to scientific authority among a low information public: Understanding U.S. opinion on agricultural biotechnology. Int J Public Opin Res. 2007;19(1):24–52.

- 49. Anderson AA, Scheufele DA, Brossard D, Corley EA. The role of media and deference to scientific authority in cultivating trust in sources of information about emerging technologies. Int J Public Opin Res. 2012;24(2):225–237.

- 50. Ho SS, Brossard D, Scheufele DA. Effects of value predispositions, mass media use, and knowledge on public attitudes toward embryonic stem cell research. Int J Public Opin Res. 2008;20(2):171–192.

- 51. Lee CJ, Scheufele DA. The influence of knowledge and deference toward scientific authority: A media effects model for public attitudes toward nanotechnology. Journalism Mass Comm. 2006;83(4):819–834.

- 52. Popkin SL (1994) The Reasoning Voter: Communication and Persuasion in Presidential Campaigns (Univ of Chicago Press, Chicago), 2nd Ed.

- 53. Scheufele DA. Messages and heuristics: How audiences form attitudes about emerging technologies. In: Turney J, editor. Engaging Science: Thoughts, Deeds, Analysis and Action. London: Wellcome Trust; 2006. pp. 20–25.

- 54. Gerbner G, Gross L. System of cultural indicators. Public Opin Q. 1974;38:460–461.

- 55. Gerbner G. Science on television: How it affects public conceptions. Issues Sci Technol. 1987;3(3):109–115.

- 56. Gerbner G, Gross LP, Morgan M, Signorielli N. Scientists on the TV screen. Cult Soc. 1981;42:51–54.

- 57. Dudo A, et al. Science on television in the 21st century: Recent trends in portrayals and their contributions to public attitudes toward science. Communic Res. 2011;48(6):754–777.

- 58. Nisbet MC, et al. Knowledge, reservations, or promise? A media effects model for public perceptions of science and technology. Communic Res. 2002;29(5):584–608.

- 59. Scheufele DA. Framing as a theory of media effects. J Commun. 1999;49(1):103–122.

- 60. Goffman E. Frame Analysis: An Essay on the Organization of Experience. New York: Harper & Row; 1974.

- 61. Kahneman D, Tversky A. Prospect theory: Analysis of decision under risk. Econometrica. 1979;47(2):263–291.

- 62. Lakoff G, Johnson M. Metaphors We Live By. Chicago: Univ of Chicago Press; 1981.

- 63. Price V, Tewksbury D. News values and public opinion: A theoretical account of media priming and framing. In: Barett GA, Boster FJ, editors. Progress in Communication Sciences: Advances in Persuasion. Vol 13. Greenwich, CT: Ablex; 1997. pp. 173–212.

- 64. Kahneman D. Maps of bounded rationality: A perspective on intuitive judgment and choice. In: Frängsmyr T, editor. Les Prix Nobel: The Nobel Prizes 2002. Stockholm, Sweden: Nobel Foundation; 2003. pp. 449–489.

- 65. Nisbet MC, Scheufele DA. What’s next for science communication? Promising directions and lingering distractions. Am J Bot. 2009;96(10):1767–1778. doi: 10.3732/ajb.0900041.

- 66. Nisbet MC, Brossard D, Kroepsch A. Framing science: The stem cell controversy in an age of press/politics. Harv Int J PressPolit. 2003;8(2):36–70.

- 67. Corley EA, Scheufele DA, Hu Q. Of risks and regulations: How leading U.S. nanoscientists form policy stances about nanotechnology. J Nanopart Res. 2009;11(7):1573–1585. doi: 10.1007/s11051-009-9671-5.

- 68. Brossard D, Scheufele DA, Kim E, Lewenstein BV. Religiosity as a perceptual filter: Examining processes of opinion formation about nanotechnology. Public Underst Sci. 2009;18(5):546–558.

- 69. Kahan DM, Braman D, Slovic P, Gastil J, Cohen G. Cultural cognition of the risks and benefits of nanotechnology. Nat Nanotechnol. 2009;4(2):87–90. doi: 10.1038/nnano.2008.341.

- 70. Kunda Z. The case for motivated reasoning. Psychol Bull. 1990;108(3):480–498. doi: 10.1037/0033-2909.108.3.480.

- 71. Kahan DM, et al. The polarizing impact of science literacy and numeracy on perceived climate change risks. Nature Clim. Change. 2012;2(10):732–735.

- 72. Nisbet MC. The competition for worldviews: Values, information, and public support for stem cell research. Int J Public Opin Res. 2005;17(1):90–112.