Abstract

To recognize objects quickly and accurately, mature visual systems build invariant object representations that generalize across a range of novel viewing conditions (e.g., changes in viewpoint). However, the origins of this core cognitive ability have not been established. To examine how invariant object recognition develops in a newborn visual system, I raised chickens from birth for 2 weeks within controlled-rearing chambers. These chambers provided complete control over all visual object experiences. In the first week of life, subjects’ visual object experience was limited to a single virtual object rotating through a 60° viewpoint range. In the second week of life, I examined whether subjects could recognize that virtual object from novel viewpoints. Newborn chickens were able to generate viewpoint-invariant representations that supported object recognition across large, novel, and complex changes in the object’s appearance. Thus, newborn visual systems can begin building invariant object representations at the onset of visual object experience. These abstract representations can be generated from sparse data, in this case from a visual world containing a single virtual object seen from a limited range of viewpoints. This study shows that powerful, robust, and invariant object recognition machinery is an inherent feature of the newborn brain.

Keywords: newborn cognition, animal cognition, avian cognition, imprinting

Human adults recognize objects quickly and accurately, despite the tremendous variation in appearance that each object can produce on the retina (e.g., as a result of changes in viewpoint, scale, and lighting) (1–4). To date, however, little is known about the origins of this ability. Does invariant object recognition have a protracted development, constructed over time from extensive experiences with objects? Or can the newborn brain begin building invariant representations at the onset of visual object experience?

Because of challenges associated with testing newborns experimentally, previous studies of invariant object recognition were forced to test individuals months or years after birth. Thus, during testing, subjects were using visual systems that had already been shaped (perhaps heavily) by their prior experiences. Natural scenes are richly structured and highly predictable across space and time (5), and the visual system exploits these statistical redundancies during development to fine-tune the response properties of neurons (6–8). For example, studies of monkeys and humans show that object recognition machinery changes rapidly in response to statistical redundancies present in the organism’s environment (9, 10), with significant neuronal rewiring occurring in as little as 1 h (11, 12). Furthermore, there is extensive behavioral evidence that infants begin learning statistical redundancies soon after birth (13–15). These findings allow for the possibility that even early-emerging object concepts (e.g., abilities appearing days, weeks, or months after birth) are learned from experience early in postnatal life (16).

Analyzing the development of object recognition therefore requires an animal that can learn to recognize objects and whose visual environment can be strictly controlled and manipulated from birth. Domestic chickens (Gallus gallus) meet both of these criteria. First, chickens can recognize objects, including 2D and 3D shapes (17–21). Second, chickens can be raised from birth in environments devoid of objects (22, 23). Unlike newborn primates and rodents, newborn chickens do not require parental care and, because of early motor development, are immediately able to explore their environment. In addition, newborn chickens imprint to conspicuous objects they see after hatching (24–26). Chickens develop a strong social attachment to their imprinted objects, and will attempt to spend the majority of their time with the objects. This imprinting response can therefore be used to test object recognition abilities without training (18, 19). Together, these characteristics make chickens an ideal animal model for studying the development of core cognitive abilities (for a general review, see ref. 27).

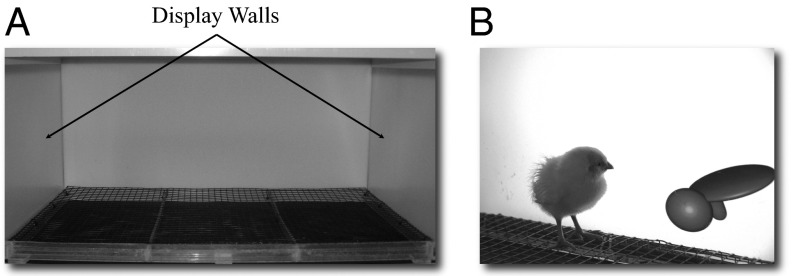

To investigate the origins of invariant object recognition, chickens were raised from birth for 2 wk within novel, specially designed controlled-rearing chambers. These chambers provided complete control over all visual object experiences from birth. Specifically, the chambers contained extended surfaces only (Fig. 1A). Object stimuli were presented to the subjects by projecting virtual objects onto two display walls situated on opposite sides of the chamber (Fig. 1B). Food and water were available within transparent holes in the ground. Grain was used as food because it does not behave like an object (i.e., the grain was nonsolid and did not maintain a rigid, bounded shape). All care of the chickens (i.e., replenishment of food and water) was performed in darkness with the aid of night vision goggles. Thus, subjects’ entire visual object experience was limited to the virtual objects projected onto the display walls.

Fig. 1.

(A) The interior of a controlled-rearing chamber. The front wall (removed for this picture) was identical to the back wall. (B) A young chicken with one of the virtual objects.

The virtual objects were modeled after those used in previous studies that tested for invariant object recognition in adult rats (28, 29). These objects are ideal for studying invariant recognition because changing the viewpoint of an object can produce a greater within-object image difference than changing the identity of the object while maintaining its viewpoint (see SI Appendix, Section 1 for details). Distinguishing between these objects from novel viewpoints therefore requires an invariant representation that can generalize across large, novel, and complex changes in the object’s appearance on the retina.

In the first week of life (the input phase), subjects’ visual object experience was limited to a single virtual object rotating through a 60° viewpoint range. In the second week of life (the test phase), I probed the nature of the object representation generated from that limited input by using a two-alternative forced-choice test. During each test trial, the imprinted object was projected onto one display wall and an unfamiliar object was projected onto the other display wall. If subjects recognize their imprinted object, then they should spend a greater proportion of time in proximity to the imprinted object compared with the unfamiliar object during the test trials (24–26).

All of the subjects’ behavior was tracked by microcameras embedded in the ceilings of the chambers and analyzed with automated animal tracking software. This made it possible to (i) eliminate the possibility of experimenter bias, (ii) sample behavior noninvasively (i.e., the subjects did not need to be moved to a separate testing apparatus and there were no visual interactions between the subjects and an experimenter), and (iii) collect a large number of data points from each newborn subject across multiple testing conditions. Specifically, I was able to collect 168 test trials from each newborn subject across 12 different viewpoint ranges. This process allowed for a detailed analysis of object recognition performance for both the individual subjects and the overall group.

Results

Exp. 1.

Exp. 1 examined whether newborn chickens can generate invariant object representations at the onset of visual object experience. In the first week of life (the input phase), subjects were raised in an environment that contained a single virtual object (Fig. 2A). This object moved continuously, rotating through a 60° frontal viewpoint range about a vertical axis passing through its centroid (see Movies S1 and S2 for animations). The object only moved along this 60° trajectory; subjects never observed the object from any other viewpoint in the input phase.

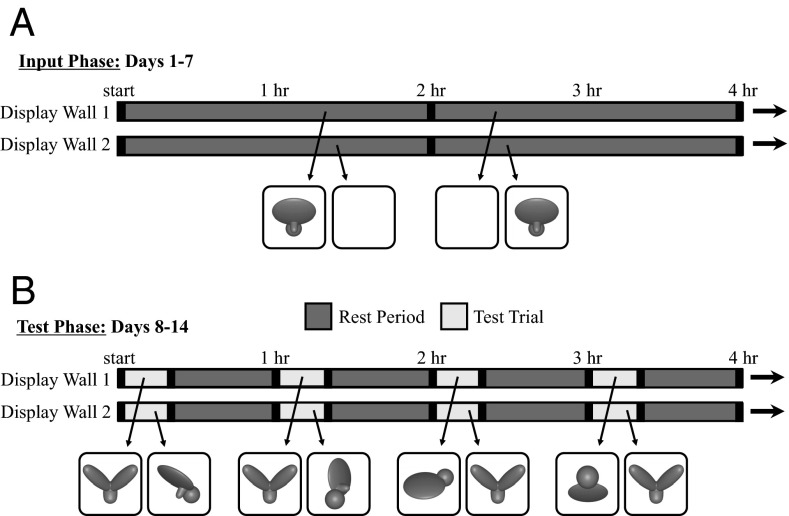

Fig. 2.

A schematic showing the presentation schedule of the virtual objects on the two display walls. This schedule shows a 4-h period from (A) the input phase and (B) the test phase during Exp. 1. These subjects were imprinted to object A in the input phase, with object B serving as the unfamiliar object in the test phase.

In the second week of life (the test phase), I examined whether subjects could recognize their imprinted object across changes in viewpoint. The test phase was identical to the input phase, except that every hour subjects received one 20-min test trial (Fig. 2B). During the test trials, a different virtual object was projected onto each display wall and I measured the amount of time subjects spent in proximity to each object. One object was the imprinted object from the input phase shown from a familiar or novel viewpoint range, and the other object was an unfamiliar object. The unfamiliar object had a size, color, motion speed, and motion trajectory similar to those of the imprinted object from the input phase. Specifically, on all of the test trials, the unfamiliar object was presented from the same frontal viewpoint range as the imprinted object from the input phase (Fig. 3). Consequently, on most of the test trials, the unfamiliar object was more similar to the imprinting stimulus than the imprinted object was to the imprinting stimulus (from a pixel-wise perspective). To recognize their imprinted object, subjects therefore needed to generalize across large, novel, and complex changes in the object’s appearance. Object recognition was tested across 12 viewpoint ranges. Each viewpoint range was tested twice per day.

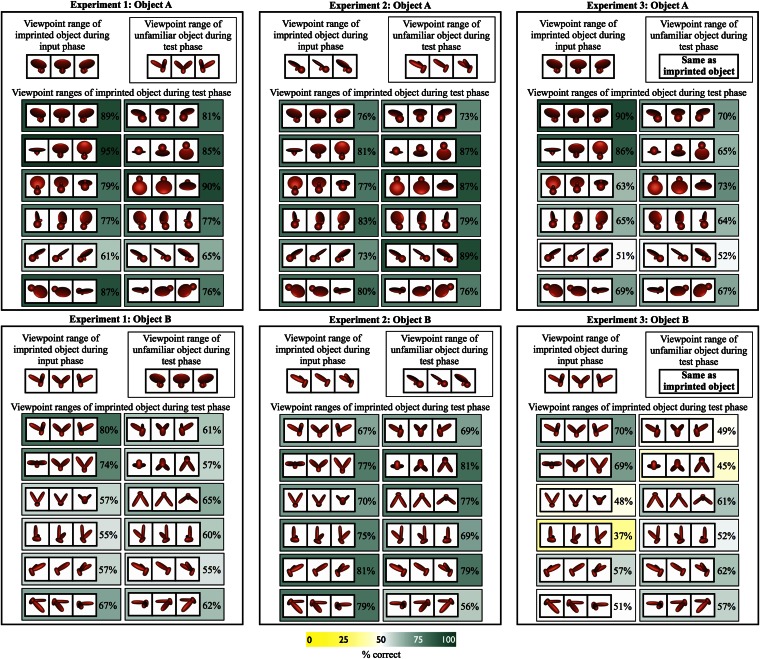

Fig. 3.

Results from Exps. 1–3. The Upper part of each panel shows the viewpoint range of the imprinted object presented during the input phase. The Lower part of the panel shows the viewpoint ranges of the imprinted object presented during the test phase, along with the percentage of test trials in which the subjects successfully distinguished their imprinted object from the unfamiliar object. Chance performance was 50%. To maximize the pixel-level similarity between the unfamiliar object and the imprinted object, the unfamiliar object (Inset) was always presented from the same viewpoint range as the imprinted object from the input phase in Exps. 1 and 2. In Exp. 3, the unfamiliar object and the imprinted object were presented from the same viewpoint range on each test trial to minimize the pixel-wise image differences between the two test objects and to equate the familiarity of the test animations.

Test trials were scored as “correct” when subjects spent a greater proportion of time with their imprinted object and “incorrect” when they spent a greater proportion of time with the unfamiliar object. These responses were then analyzed with hierarchical Bayesian methods (30) that provided detailed probabilistic estimates of recognition performance for both the individual subjects and the overall group (see SI Appendix, Sections 3 and 4 for details).
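The hierarchical Bayesian analysis itself is specified in ref. 30 and SI Appendix and is not reproduced here. As a much simpler illustration of what "the probability that performance was above chance" means, the sketch below treats one subject's test trials as independent correct/incorrect outcomes, assumes a uniform Beta(1, 1) prior on the success rate θ, and returns P(θ > 0.5 | data). The function name `prob_above_chance` is hypothetical, and because this model is not hierarchical (no pooling across subjects or viewpoint ranges), it should not be expected to reproduce the values reported in this section.

```python
from fractions import Fraction
from math import comb


def prob_above_chance(correct: int, total: int) -> float:
    """Posterior P(theta > 0.5) for one subject under a uniform Beta(1, 1) prior.

    With k correct out of n trials, the posterior is Beta(k + 1, n - k + 1).
    For integer Beta parameters the CDF equals a binomial tail, which gives
    P(theta > 0.5) = sum_{j=0}^{k} C(n + 1, j) / 2**(n + 1).
    """
    if not 0 <= correct <= total:
        raise ValueError("require 0 <= correct <= total")
    m = total + 1
    numerator = sum(comb(m, j) for j in range(correct + 1))
    # Exact rational arithmetic avoids underflow for large trial counts.
    return float(Fraction(numerator, 2**m))
```

For example, `prob_above_chance(1, 1)` returns 0.75, the posterior mass above 0.5 for a Beta(2, 1) distribution. The closed form relies on the standard identity linking the Beta CDF (integer parameters) to a binomial tail, so no numerical integration is needed.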

Subjects successfully recognized their imprinted object on 71% (SEM, 5%) of the test trials (Fig. 3). Overall, the probability that performance was above chance was greater than 99.99% (SI Appendix, Fig. S6). Across the 12 subjects, the probability that performance was above chance was 99.99% or greater for nine of the subjects, 97% for one of the subjects, and 89% for another subject. The remaining subject scored below chance level. Across the 24 viewpoint tests (i.e., 12 viewpoint tests for each of the two imprinted objects), the probability that performance was above chance was 99% or greater for 13 of the viewpoint ranges, 90% or greater for 19 of the viewpoint ranges, and 75% or greater for all 24 of the viewpoint ranges (SI Appendix, Figs. S9 and S10). Thus, these newborn subjects were able to recognize their imprinted object with high precision across the majority of the viewpoint ranges, despite never having observed that object (or any other object) move beyond the limited 60° viewpoint range seen in the input phase.

To confirm that subjects generated invariant representations, I computed the pixel-level similarity between the imprinted object presented in the input phase and the test objects presented in the test phase (see SI Appendix, Section 1 for details). Because a retinal image consists of a collection of signals from photoreceptor cells, and each photoreceptor cell registers the brightness value from a particular region in the image, a pixel-level description of a stimulus provides a reasonable first-order approximation of the retinal input (31). The within-object image difference (i.e., the pixel-level difference between the test animation of the imprinted object and the input animation of the imprinted object) was greater than the between-object image difference (i.e., the pixel-level difference between the test animation of the unfamiliar object and the input animation of the imprinted object) on 68% of the novel viewpoint test trials (SI Appendix, Fig. S2). Furthermore, subjects’ object recognition performance did not vary as a function of the pixel-level similarity between the test animation of the imprinted object and the input animation of the imprinted object (Pearson correlation: r = −0.17, P = 0.44). Thus, for the majority of these newborn subjects, the outputs of object recognition generalized well beyond the input coming in through the senses.

Exp. 2.

In Exp. 2 I attempted to replicate and extend the findings from Exp. 1. The methods were identical to those used in Exp. 1, except subjects were presented with a different viewpoint range of the imprinted objects during the input phase. Specifically, subjects viewed a side viewpoint range of the imprinted object rather than a frontal viewpoint range (see Movies S3 and S4 for animations). This side viewpoint range increased the pixel-level similarity between the imprinted object presented during the input phase and the unfamiliar object presented during the test phase. Consequently, the within-object image difference was greater than the between-object image difference on 100% of the novel viewpoint test trials (SI Appendix, Fig. S3). This experiment thus provided a particularly strong test of newborns’ ability to generate invariant object representations from sparse visual input.

Subjects successfully recognized their imprinted object on 76% (SEM, 3%) of the test trials (Fig. 3). Overall, the probability that performance was above chance was greater than 99.99% (SI Appendix, Fig. S7). The probability that performance was above chance was also 99.99% or greater for all of the 11 subjects. Across the 24 viewpoint tests, the probability that performance was above chance was 99% or greater for 21 of the viewpoint ranges, 90% or greater for 23 of the viewpoint ranges, and 75% or greater for all 24 of the viewpoint ranges (SI Appendix, Figs. S11 and S12). Furthermore, as in Exp. 1, subjects’ object recognition performance did not vary as a function of the pixel-level similarity between the test animation of the imprinted object and the input animation of the imprinted object (Pearson correlation: r = −0.07, P = 0.75). These results replicate and extend the findings from Exp. 1. Newborns can generate viewpoint-invariant representations that support object recognition across large, novel, and complex changes in the object’s appearance.

Exp. 3.

In Exps. 1 and 2, the unfamiliar object was always presented from the same viewpoint range in the test phase, whereas the imprinted object was presented from 12 different viewpoint ranges. Thus, subjects might simply have preferred the more novel test animation, without necessarily having generated an invariant representation of the imprinted object. This alternative explanation is unlikely because many studies have shown that chickens prefer to spend time with their imprinted objects rather than novel objects (24–27). Nevertheless, it was important to control for this possibility within the context of the present testing methodology. To do so, I conducted an additional experiment (Exp. 3) in which the unfamiliar object was presented from the same viewpoint range as the imprinted object on each of the test trials (see Movies S5 and S6 for animations). This aspect made the two test animations equally novel to the subjects on each test trial, while also minimizing the pixel-level image difference between the two test animations. The experiment was identical to Exp. 1 in all other respects.

Subjects successfully recognized their imprinted object on 61% (SEM, 4%) of the test trials (Fig. 3). Overall, the probability that performance was above chance was greater than 99% (SI Appendix, Fig. S8). Across the 12 subjects, the probability that performance was above chance was 99% or greater for eight of the subjects and 92% for another subject. The remaining three subjects scored at or below chance level. Across the 24 viewpoint tests, the probability that performance was above chance was 99% or greater for 7 of the viewpoint ranges, 90% or greater for 13 of the viewpoint ranges, and 75% or greater for 16 of the viewpoint ranges (SI Appendix, Figs. S13 and S14). Performance was at or below chance for the remaining eight viewpoint ranges. In general, subjects were able to recognize their imprinted object across novel viewpoint ranges, even when the test animations were equally novel to the subjects. Furthermore, because presenting the two test objects from the same viewpoint range minimized the pixel-level image difference between the two test animations, these results also show that newborn chickens can distinguish between test objects that produce similar retinal projections over time.

Change Over Time Analysis.

Imprinting in chickens is subject to a critical period, which ends approximately 3 d after birth. Thus, subjects’ representation of their imprinted object was not expected to change over the course of the test phase (which began 7 d after birth). To test this assumption, I analyzed the proportion of time subjects spent in proximity to their imprinted object as a function of trial number (e.g., first presentation, second, third, etc.). For all experiments, performance was high and significantly above chance even for the first presentation of the novel stimuli (one-tailed t tests, all P < 0.002), and remained stable over the course of the test phase (SI Appendix, Fig. S4) with little variation as a function of presentation number (one-way ANOVAs, all P > 0.57). The test-retest reliability was also high in all experiments (Cronbach’s α ≥ 0.89). These analyses show that subjects’ recognition behavior was spontaneous and robust, and cannot be explained by learning taking place across the test phase. Newborn chickens immediately achieved their maximal performance and did not significantly improve thereafter.
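The data layout behind the reliability estimate is not given in this section. Assuming a matrix with one row per subject and one column per repeated presentation (each cell being, for instance, the proportion of time spent with the imprinted object), Cronbach's α could be computed as sketched below; both the layout and the function name are assumptions. The formula is the standard one, α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), using sample variances.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a subjects-by-items score matrix.

    Rows are subjects; columns are items (here assumed to be repeated
    presentations). Uses sample variances:
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(row totals)).
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two subjects and two items")
    item_variance_sum = sum(variance(column) for column in zip(*scores))
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)
```

When every presentation ranks the subjects identically, α reaches 1; values of 0.89 or higher indicate that subjects' relative performance was highly consistent across repeated presentations.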

Analysis of Individual Subject Performance.

With this controlled-rearing method it was possible to collect a large number of data points from each newborn subject across multiple testing conditions. This process allowed for a detailed analysis of the performance of each individual subject. I first examined whether all of the subjects were able to build an invariant representation from the sparse visual input provided in the input phase. To do so, I computed whether each subject’s performance across the test trials exceeded chance level. Twenty-nine of the 35 subjects successfully generated an invariant object representation (Fig. 4).

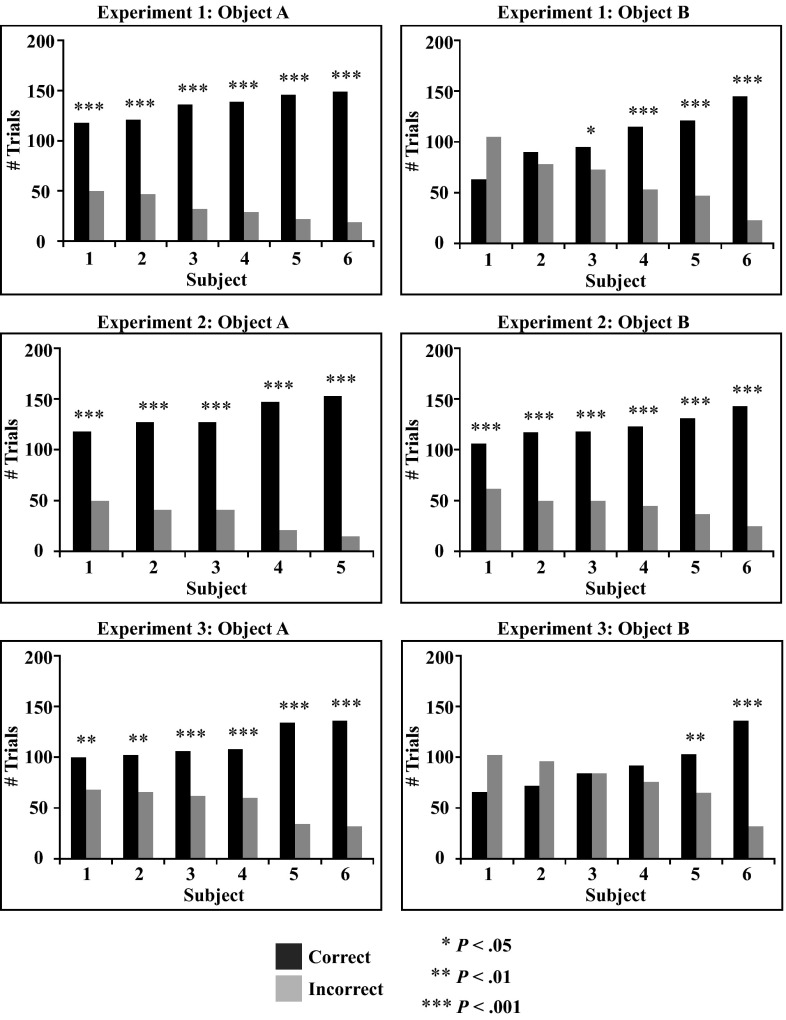

Fig. 4.

Performance of each individual subject (ordered by performance). The graphs show the total number of correct and incorrect test trials for each subject across the test phase. P values denote the statistical difference between the number of correct and incorrect test trials as computed through one-tailed binomial tests.

The six subjects who did not generate an invariant representation could have failed in this task for two reasons. First, the subjects may have failed to imprint to the virtual object presented in the input phase, and thus lacked motivation to approach either of the virtual objects presented in the test phase. Second, the subjects may have successfully imprinted to the virtual object, but nevertheless failed to generate a viewpoint-invariant representation of that object. To distinguish between these possibilities, I examined whether subjects showed a preference for the imprinted object during the rest periods in the test phase. During the rest periods, the input animation was projected onto one display wall and a white screen was projected onto the other display wall (Fig. 2B). All 35 subjects spent the majority of the rest periods in proximity to the imprinting stimulus (mean = 92% of trials; SEM = 1%; one-tailed binomial tests, all P < 10⁻⁷), including the six subjects who failed to generate an invariant representation of the imprinted object. Thus, it is possible to imprint to an object but fail to generate a viewpoint-invariant representation of that object. More generally, these results suggest that there can be significant variation in newborns’ object recognition abilities, even when raised from birth in identical visual environments.
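Both Fig. 4 and the rest-period analysis rely on one-tailed binomial tests against chance (p = 0.5). A minimal exact version of the upper-tail test might look like the sketch below; the function name is hypothetical, and an established routine such as SciPy's `scipy.stats.binomtest` with `alternative="greater"` could be used instead.

```python
from math import comb


def binomial_one_tailed_p(successes: int, trials: int, p: float = 0.5) -> float:
    """Upper-tail P value: P(X >= successes) for X ~ Binomial(trials, p)."""
    if not 0 <= successes <= trials:
        raise ValueError("require 0 <= successes <= trials")
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )
```

For instance, if all 10 of 10 hypothetical trials were correct, `binomial_one_tailed_p(10, 10)` gives 0.5**10, about 0.00098. With the much larger trial counts collected per subject here, even moderate preferences yield very small P values.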

To test for the presence of individual differences more directly, I examined whether the identity of the subject was a predictor of object recognition performance. In all experiments, one-way ANOVAs showed that the identity of the subject was a strong predictor of performance: Exp. 1, F(11, 167) = 29.54, P < 0.001; Exp. 2, F(10, 153) = 8.65, P < 0.001; and Exp. 3, F(11, 167) = 29.54, P < 0.001. Despite being raised in identical visual environments, there were significant individual differences in the object recognition abilities of these newborn subjects.
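These ANOVAs treat subject identity as the grouping factor. The per-trial response variable that entered the analysis is not stated here, so the sketch below simply takes one list of scores per subject (for example, per-trial proportions of time with the imprinted object, which is an assumption) and returns the F statistic with its degrees of freedom. The function name is hypothetical; `scipy.stats.f_oneway` computes the same statistic along with a P value.

```python
def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """F statistic and (between, within) degrees of freedom for a one-way ANOVA.

    groups: one list of scores per subject (the grouping factor).
    """
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more scores than groups")
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    # Variation of subject means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual scores around their own subject's mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

A large F means that differences between subjects' mean performance are large relative to trial-to-trial variability within subjects, which is the sense in which subject identity "predicts" performance.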

All 17 of the subjects who were imprinted to object A successfully generated a viewpoint-invariant representation, whereas only 12 of the 18 subjects imprinted to object B successfully did so (Fig. 4). Furthermore, all six of the unsuccessful subjects were imprinted to the frontal viewpoint range of object B (as opposed to the side viewpoint range). Why did subjects have greater difficulty generating a viewpoint-invariant representation from this particular set of visual input? Although this experiment was not designed to address this question, a study with adult rats who were trained to distinguish between these same two objects indicates that object B contains greater structural complexity than object A (29). Specifically, the frontal viewpoint range of object B presents three fully visible, spatially separated, and approximately equally sized lobes, whereas object A has one large lobe and two smaller, less salient lobes. These feature differences strongly influenced rats’ performance, causing high intersubject variability in recognition strategies for object B and low intersubject variability for object A (for details, see ref. 29). It would be interesting for future research to examine systematically which objects and viewpoint ranges are better and which are worse for generating viewpoint-invariant representations in a newborn visual system, because these input-output patterns could then be used as benchmarks for assessing the accuracy of computational models of invariant object recognition.

Discussion

This study examined whether newborns can generate invariant object representations at the onset of visual object experience. To do so, newborn chickens were raised in environments that contained a single virtual object. The majority of subjects were able to generate a viewpoint-invariant representation of this object. This result shows that newborns can build an invariant representation of the first object they see.

This finding does not necessarily imply that newborns build 3D geometric representations of whole objects (28). Chickens could generate invariant object representations by building invariant representations of subfeatures that are smaller than the entire object. These feature detectors might respond to only a portion of the object, or be sensitive to key 2D, rather than 3D, features. Indeed, many leading computational models of invariant object recognition in humans and monkeys explicitly rely on such subfeatures (32, 33). Remarkably, at least some of these invariant feature detectors appear to be present at the onset of visual object experience. It will be interesting for future studies to examine the specific characteristics of these feature detectors.

This study extends the existing literature concerning chickens’ visual abilities (17–21, 34–36). Although previous studies show that chickens are proficient at using vision to solve a variety of tasks, they did not look at invariant object recognition specifically. Previous studies primarily used 2D shapes or simple 3D objects as stimuli, so there was little variation in the individual appearances of the objects. This aspect makes it difficult to characterize the representations that supported recognition, because subjects could have used either “lower-level” or “higher-level” strategies (28). For example, in many previous studies, chickens could have recognized their imprinted object by encoding and matching patterns of retinal activity. In contrast, in the present study chickens needed to recognize their imprinted object across large, novel, and complex changes in the retinal activity produced by the object.

To what extent do these results illuminate the development of invariant object recognition in humans? The answer to this question depends on whether humans and chickens use similar neural machinery to recognize objects. If humans and chickens use similar machinery, then invariant recognition should have a similar developmental trajectory in both species. Conversely, if humans and chickens use different machinery, then invariant recognition might have distinct developmental trajectories across species. Although additional research is needed to distinguish between these possibilities, there is growing evidence that humans and chickens use similar machinery for processing sensory information. On the behavioral level, many studies have shown that humans and chickens have similar core cognitive abilities (reviewed by ref. 27), such as recognizing partly occluded objects (19), tracking and remembering the locations of objects (34), and reasoning about the physical interactions between objects (37). Similarly, on the neurophysiological level, researchers have identified common “cortical” cells and circuits in mammals and birds (38–40). Specifically, although mammalian brains and avian brains differ in their macroarchitecture (i.e., layered versus nuclear organization, respectively), their basic cell types and connections of sensory input and output neurons are nearly identical (for a side-by-side comparison of avian and mammalian cortical circuitry, see figure 2 in ref. 38). For example, in avian circuitry, sensory inputs are organized in a radial columnar manner, with lamina-specific cell morphologies, recurrent axonal loops, and re-entrant pathways, typical of layers 2–5a of the mammalian neocortex. Similarly, long descending telencephalic efferents in birds contribute to the recurrent axonal connections within the column, akin to layers 5b and 6 of the mammalian neocortex. Studies using molecular techniques to examine gene expression show that these neural circuits are generated by homologous genes in mammals and birds (40). This discovery that mammals and birds share homologous cells and circuits suggests that their brains perform similar, or even identical, computational operations (41).

In sum, this study shows that (i) the first object representation built by a newborn visual system can be invariant to large, novel, and complex changes in an object’s appearance on the retina; and (ii) this invariant representation can be generated from extremely sparse data, in this case from a visual world containing a single virtual object seen from a limited 60° viewpoint range. From a computer vision perspective, this is an extraordinary computational feat. Viewpoint-invariant object recognition is widely recognized to be a difficult computational problem (42, 43), and it remains a major stumbling block in the development of artificial visual systems. While many previous studies have emphasized the importance of visual experience in the development of this ability (44–47), the present experiments indicate that the underlying machinery can be present and functional at birth, in the absence of any prior experience with objects.

Materials and Methods

Subjects.

Thirty-five domestic chickens of unknown sex were tested. The chicken eggs were obtained from a local distributor and incubated in darkness in an OVA-Easy (Brinsea) incubator. For the first 19 d of incubation, the temperature and humidity were maintained at 99.6 °F and 45%, respectively. On day 19, the humidity was increased to 60%. The incubation room was kept in complete darkness. After hatching, the chickens were moved from the incubation room to the controlled-rearing chambers in darkness with the aid of night vision goggles. Each chicken was raised singly within its own chamber.

Controlled-Rearing Chambers and Task.

The controlled-rearing chambers measured 66 cm (length) × 42 cm (width) × 69 cm (height) and were constructed from white, high-density plastic. The display walls were 19-inch liquid crystal display monitors (1,440 × 900 pixel resolution). Food and water were provided within transparent holes in the ground that measured 66 cm (length) × 2.5 cm (width) × 2.7 cm (height). The floors were wire mesh and supported 2.7 cm off the ground by thin, transparent beams; this allowed excrement to drop away from the subject. Subjects’ behavior was tracked by microcameras and Ethovision XT 7.0 software (Noldus Information Technology) that calculated the amount of time chickens spent within zones (22 cm × 42 cm) next to the left and right display walls.
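EthoVision XT performed the zone scoring in this study, and its internals are not described here. The sketch below is a hypothetical re-implementation of the same idea, assuming the tracker yields the chicken's position along the chamber's 66-cm length (in cm from the left display wall) at a fixed sampling interval. Samples within 22 cm of a display wall count toward that wall's zone, and the central 22 cm counts toward neither. A test trial is then scored as described in Results; because both zone times share the same trial duration, comparing times is equivalent to comparing proportions of trial time. How ties and zone-boundary samples were handled is not stated, so the sketch returns `None` for ties and treats positions exactly on a boundary as outside both zones.

```python
from typing import Optional

CHAMBER_LENGTH_CM = 66.0  # distance between the two display walls
ZONE_DEPTH_CM = 22.0      # each zone: 22 cm deep x 42 cm (full chamber width)


def zone_times(x_positions_cm: list[float], sample_interval_s: float) -> tuple[float, float]:
    """Seconds spent in the left and right zones.

    x_positions_cm: assumed tracker output, distance (cm) from the left
    display wall, one sample every sample_interval_s seconds. Samples in
    the central 22 cm, or exactly on a zone boundary, count toward neither zone.
    """
    left = right = 0.0
    for x in x_positions_cm:
        if x < ZONE_DEPTH_CM:
            left += sample_interval_s
        elif x > CHAMBER_LENGTH_CM - ZONE_DEPTH_CM:
            right += sample_interval_s
    return left, right


def score_trial(
    x_positions_cm: list[float],
    sample_interval_s: float,
    imprinted_on_left: bool,
) -> Optional[str]:
    """'correct' if more time was spent near the imprinted object,
    'incorrect' if more was spent near the unfamiliar object, None on a tie."""
    left, right = zone_times(x_positions_cm, sample_interval_s)
    imprinted, unfamiliar = (left, right) if imprinted_on_left else (right, left)
    if imprinted == unfamiliar:
        return None  # tie handling is not specified in the source
    return "correct" if imprinted > unfamiliar else "incorrect"
```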

On average, the virtual objects measured 8 cm (length) × 7 cm (height) and were suspended 3 cm off the floor. Each object rotated through a 60° viewpoint range about an axis passing through its centroid, completing the full back-and-forth rotation every 6 s. The objects were displayed on a uniform white background at the middle of the display walls. All of the virtual object stimuli presented in this study can be viewed in Movies S1–S6.
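The text specifies the range (60°) and the period (one full back-and-forth rotation every 6 s) but not the angular velocity profile. Assuming constant angular speed (a triangle wave, which would imply 20° per second), the object's rotation angle over time can be sketched as follows. `start_deg`, the angle at which each sweep begins, is a hypothetical parameter, since the absolute viewpoint angles are given only in Fig. 3 and the movies.

```python
RANGE_DEG = 60.0  # width of the viewpoint range
PERIOD_S = 6.0    # one full back-and-forth rotation


def rotation_angle(t_s: float, start_deg: float = 0.0) -> float:
    """Rotation angle (degrees) at time t_s, assuming constant angular speed.

    The object sweeps from start_deg to start_deg + 60 in the first 3 s and
    back in the next 3 s, i.e. 20 degrees per second under this assumption.
    """
    phase = (t_s % PERIOD_S) / PERIOD_S  # position within the current cycle, in [0, 1)
    if phase < 0.5:
        offset = 2.0 * phase * RANGE_DEG          # outward sweep
    else:
        offset = 2.0 * (1.0 - phase) * RANGE_DEG  # return sweep
    return start_deg + offset
```

A sinusoidal profile (slowing at the ends of the range) would be an equally plausible reading of the text; only the range and period are constrained by the source.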

In the input phase, the imprinted object was displayed from a single 60° viewpoint range and appeared for an equal amount of time on the left and right display walls. The object switched walls every 2 h, following a 1-min period of darkness. One-half of the subjects were imprinted to object A (with object B serving as the unfamiliar object), and the other one-half of the subjects were imprinted to object B (with object A serving as the unfamiliar object) (Fig. 3). In the test phase, subjects received one 20-min test trial every hour, followed by one 40-min rest period. During each rest period, the input animation from the input phase appeared on one display wall and a white screen appeared on the other display wall. The 12 viewpoint ranges were tested 14 times each within randomized blocks over the course of the test phase.
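The test-phase arithmetic is internally consistent: one test trial per hour gives 24 trials per day, so 7 days yield 168 trials, which matches 12 viewpoint ranges × 14 repetitions and the two tests per viewpoint range per day noted in Results. One reading of "randomized blocks" is that each block presents all 12 viewpoint ranges once in shuffled order; the sketch below generates such a schedule under that assumption. The function name and the seeded `random.Random` are illustrative choices, not details from the study, and the sketch does not model which wall each object appeared on.

```python
import random
from typing import Optional

N_VIEWPOINT_RANGES = 12
N_BLOCKS = 14        # each viewpoint range is tested 14 times
TRIALS_PER_DAY = 24  # one 20-min test trial per hour


def test_schedule(seed: Optional[int] = None) -> list[int]:
    """Return viewpoint-range indices (0-11) in test-trial order.

    Each consecutive block of 12 trials is a random permutation of all
    12 viewpoint ranges (an assumed interpretation of "randomized blocks").
    """
    rng = random.Random(seed)
    schedule: list[int] = []
    for _ in range(N_BLOCKS):
        block = list(range(N_VIEWPOINT_RANGES))
        rng.shuffle(block)
        schedule.extend(block)
    return schedule


schedule = test_schedule(seed=1)
days_of_testing = len(schedule) / TRIALS_PER_DAY  # 168 / 24 = 7.0
```

Because each block contains exactly 24/2 = 12 trials, every two consecutive blocks span one day, so each viewpoint range is tested twice per day under this scheme.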

These experiments were approved by The University of Southern California Institutional Animal Care and Use Committee.


Acknowledgments

I thank Samantha M. W. Wood for assistance on this manuscript and Aditya Prasad, Tony Bouz, Lynette Tan, and Jason G. Goldman for assistance building the controlled-rearing chambers. This work was supported by the University of Southern California.

Footnotes

The author declares no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1308246110/-/DCSupplemental.

References

1. DiCarlo JJ, Zoccolan D, Rust NC. How does the brain solve visual object recognition? Neuron. 2012;73(3):415–434. doi: 10.1016/j.neuron.2012.01.010.

2. Grill-Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

3. Wang G, Obama S, Yamashita W, Sugihara T, Tanaka K. Prior experience of rotation is not required for recognizing objects seen from different angles. Nat Neurosci. 2005;8(12):1768–1775. doi: 10.1038/nn1600.

4. Biederman I, Bar M. One-shot viewpoint invariance in matching novel objects. Vision Res. 1999;39(17):2885–2899. doi: 10.1016/s0042-6989(98)00309-5.

5. Field DJ. What is the goal of sensory coding? Neural Comput. 1994;6(4):559–601.

6. Edelman S, Intrator N. Towards structural systematicity in distributed, statically bound visual representations. Cogn Sci. 2003;27(1):73–109.

7. Olshausen BA, Field DJ. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature. 1996;381(6583):607–609. doi: 10.1038/381607a0.

8. Wallis G, Rolls ET. Invariant face and object recognition in the visual system. Prog Neurobiol. 1997;51(2):167–194. doi: 10.1016/s0301-0082(96)00054-8.

9. Wallis G, Bülthoff HH. Effects of temporal association on recognition memory. Proc Natl Acad Sci USA. 2001;98(8):4800–4804. doi: 10.1073/pnas.071028598.

10. Cox DD, Meier P, Oertelt N, DiCarlo JJ. ‘Breaking’ position-invariant object recognition. Nat Neurosci. 2005;8(9):1145–1147. doi: 10.1038/nn1519.

11. Li N, DiCarlo JJ. Unsupervised natural experience rapidly alters invariant object representation in visual cortex. Science. 2008;321(5895):1502–1507. doi: 10.1126/science.1160028.

12. Li N, DiCarlo JJ. Unsupervised natural visual experience rapidly reshapes size-invariant object representation in inferior temporal cortex. Neuron. 2010;67(6):1062–1075. doi: 10.1016/j.neuron.2010.08.029.

13. Kirkham NZ, Slemmer JA, Johnson SP. Visual statistical learning in infancy: Evidence for a domain general learning mechanism. Cognition. 2002;83(2):B35–B42. doi: 10.1016/s0010-0277(02)00004-5.

14. Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274(5294):1926–1928. doi: 10.1126/science.274.5294.1926.

15. Bulf H, Johnson SP, Valenza E. Visual statistical learning in the newborn infant. Cognition. 2011;121(1):127–132. doi: 10.1016/j.cognition.2011.06.010.

16. Johnson SP, Amso D, Slemmer JA. Development of object concepts in infancy: Evidence for early learning in an eye-tracking paradigm. Proc Natl Acad Sci USA. 2003;100(18):10568–10573. doi: 10.1073/pnas.1630655100.

17. Fontanari L, Rugani R, Regolin L, Vallortigara G. Object individuation in 3-day-old chicks: Use of property and spatiotemporal information. Dev Sci. 2011;14(5):1235–1244. doi: 10.1111/j.1467-7687.2011.01074.x.

18. Bolhuis JJ. Early learning and the development of filial preferences in the chick. Behav Brain Res. 1999;98(2):245–252. doi: 10.1016/s0166-4328(98)00090-4.

19. Regolin L, Vallortigara G. Perception of partly occluded objects by young chicks. Percept Psychophys. 1995;57(7):971–976. doi: 10.3758/bf03205456.

20. Dawkins MS, Woodington A. Pattern recognition and active vision in chickens. Nature. 2000;403(6770):652–655. doi: 10.1038/35001064.

21. Mascalzoni E, Osorio D, Regolin L, Vallortigara G. Symmetry perception by poultry chicks and its implications for three-dimensional object recognition. Proc Biol Sci. 2012;279(1730):841–846. doi: 10.1098/rspb.2011.1486.

22. Chiandetti C, Vallortigara G. Experience and geometry: Controlled-rearing studies with chicks. Anim Cogn. 2010;13(3):463–470. doi: 10.1007/s10071-009-0297-x.

23. Vallortigara G, Sovrano VA, Chiandetti C. Doing Socrates experiment right: Controlled rearing studies of geometrical knowledge in animals. Curr Opin Neurobiol. 2009;19(1):20–26. doi: 10.1016/j.conb.2009.02.002.

24. Bateson P, editor. What Must Be Known in Order to Understand Imprinting? Cambridge, MA: MIT Press; 2000.

25. Horn G. Pathways of the past: The imprint of memory. Nat Rev Neurosci. 2004;5(2):108–120. doi: 10.1038/nrn1324.

26. Johnson MH, Horn G. Development of filial preferences in dark-reared chicks. Anim Behav. 1988;36(3):675–683.

27. Vallortigara G. Core knowledge of object, number, and geometry: A comparative and neural approach. Cogn Neuropsychol. 2012;29(1–2):213–236. doi: 10.1080/02643294.2012.654772.

28. Zoccolan D, Oertelt N, DiCarlo JJ, Cox DD. A rodent model for the study of invariant visual object recognition. Proc Natl Acad Sci USA. 2009;106(21):8748–8753. doi: 10.1073/pnas.0811583106.

29. Alemi-Neissi A, Rosselli FB, Zoccolan D. Multifeatural shape processing in rats engaged in invariant visual object recognition. J Neurosci. 2013;33(14):5939–5956. doi: 10.1523/JNEUROSCI.3629-12.2013.

30. Kruschke JK. What to believe: Bayesian methods for data analysis. Trends Cogn Sci. 2010;14(7):293–300. doi: 10.1016/j.tics.2010.05.001.

31. Seung HS, Lee DD. Cognition. The manifold ways of perception. Science. 2000;290(5500):2268–2269. doi: 10.1126/science.290.5500.2268.

32. Serre T, Oliva A, Poggio T. A feedforward architecture accounts for rapid categorization. Proc Natl Acad Sci USA. 2007;104(15):6424–6429. doi: 10.1073/pnas.0700622104.

33. Ullman S, Vidal-Naquet M, Sali E. Visual features of intermediate complexity and their use in classification. Nat Neurosci. 2002;5(7):682–687. doi: 10.1038/nn870.

34. Rugani R, Fontanari L, Simoni E, Regolin L, Vallortigara G. Arithmetic in newborn chicks. Proc Biol Sci. 2009;276(1666):2451–2460. doi: 10.1098/rspb.2009.0044.

35. Regolin L, Garzotto B, Rugani R, Pagni P, Vallortigara G. Working memory in the chick: Parallel and lateralized mechanisms for encoding of object- and position-specific information. Behav Brain Res. 2005;157(1):1–9. doi: 10.1016/j.bbr.2004.06.012.

36. Mascalzoni E, Regolin L, Vallortigara G. Innate sensitivity for self-propelled causal agency in newly hatched chicks. Proc Natl Acad Sci USA. 2010;107(9):4483–4485. doi: 10.1073/pnas.0908792107.

37. Chiandetti C, Vallortigara G. Intuitive physical reasoning about occluded objects by inexperienced chicks. Proc Biol Sci. 2011;278(1718):2621–2627. doi: 10.1098/rspb.2010.2381.

38. Karten HJ. Neocortical evolution: Neuronal circuits arise independently of lamination. Curr Biol. 2013;23(1):R12–R15. doi: 10.1016/j.cub.2012.11.013.

39. Wang Y, Brzozowska-Prechtl A, Karten HJ. Laminar and columnar auditory cortex in avian brain. Proc Natl Acad Sci USA. 2010;107(28):12676–12681. doi: 10.1073/pnas.1006645107.

40. Dugas-Ford J, Rowell JJ, Ragsdale CW. Cell-type homologies and the origins of the neocortex. Proc Natl Acad Sci USA. 2012;109(42):16974–16979. doi: 10.1073/pnas.1204773109.

41. Karten HJ. Evolutionary developmental biology meets the brain: The origins of mammalian cortex. Proc Natl Acad Sci USA. 1997;94(7):2800–2804. doi: 10.1073/pnas.94.7.2800.

42. Pinto N, Cox DD, DiCarlo JJ. Why is real-world visual object recognition hard? PLOS Comput Biol. 2008;4(1):e27. doi: 10.1371/journal.pcbi.0040027.

43. DiCarlo JJ, Cox DD. Untangling invariant object recognition. Trends Cogn Sci. 2007;11(8):333–341. doi: 10.1016/j.tics.2007.06.010.

44. Sinha P, Poggio T. Role of learning in three-dimensional form perception. Nature. 1996;384(6608):460–463. doi: 10.1038/384460a0.

45. Sigman M, Gilbert CD. Learning to find a shape. Nat Neurosci. 2000;3(3):264–269. doi: 10.1038/72979.

46. Schyns PG, Goldstone RL, Thibaut JP. The development of features in object concepts. Behav Brain Sci. 1998;21(1):1–17, discussion 17–54. doi: 10.1017/s0140525x98000107.

47. Foldiak P. Learning invariance from transformation sequences. Neural Comput. 1991;3(2):194–200. doi: 10.1162/neco.1991.3.2.194.
