Abstract

Maximizing rewards per unit time is ideal for success and survival for humans and animals. This goal can be approached by speeding up behavior aimed at rewards, and this is done most efficiently by acquiring skills. Importantly, reward-directed skills consist of two components: finding a good object (object skill) and acting on the object (action skill), which occur sequentially. Recent studies suggest that object skill is based on high-capacity memory of object-value associations. When a learned object appears, the corresponding memory is quickly expressed as a value-based gaze bias, leading to the automatic acquisition or avoidance of the object. Object skill thus plays a crucial role in increasing rewards per unit time.

Keywords: object-value memory, stable value, reward delay, saccade, gaze, automaticity

Evolutionary advantage of skill

The majority of daily human activities, such as lacing shoes, writing with a pencil, riding a bicycle, and using a computer, involve skilled behavior that is carried out with little or no conscious thought. Each kind of skill may be acquired through prolonged and intensive practice across many years [1, 2]. This is not unique to modern human societies. In less labor-divided societies (e.g., hunter-gatherer societies) hunting is a dominant skill among men. The hunting skill is learned gradually with ample experience until it reaches a plateau level toward the end of the life course [3]. Such a protracted course of skill acquisition makes longevity suitable for maintaining and advancing human society and therefore evolutionarily adaptive [4]. Skill also provides an evolutionary advantage to many animals [5].

The importance of skills in human and animal societies strongly suggests that the brain has a robust mechanism that controls skills. Indeed, many studies have been aimed at revealing the neural mechanism of skills [6–13]. However, how exactly the changes in behavior associated with skill acquisition benefit humans and animals has not been fully addressed. In this article, we focus on one prominent aspect of skill, namely speed, and argue that, owing to its speed, skill is crucial for success and survival.

An earlier reward is more valued

“You can get $10 now, but if you wait until the next week you will get $15. Which would you choose?” Many people would choose the first option even though the outcome is smaller. This phenomenon is common to humans and animals [14, 15] and is often referred to as ‘temporal discounting of rewards’. Why is an early reward more valued than a late reward? A standard answer in economics is that the value of a future reward is discounted because of the risk involved in waiting for it [16]. An additional reason is that one will receive a larger amount of reward per unit time if the reward is delivered earlier (Figure 1). Indeed, this has been recognized as a key factor in the field of animal foraging research [17, 18] and has been implied in operant conditioning research [19]. So for both humans and animals an early reward is more valued.
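The choice above can be written as a short worked inequality. This is only a sketch: the hyperbolic form of discounting and the discount rate k are assumptions introduced here for illustration, not values taken from the studies cited above, and "next week" is taken to mean a delay of 7 days. Let V be the subjective value of an amount A delivered after a delay D:

```latex
V = \frac{A}{1 + kD},
\qquad
\frac{15}{1 + 7k} < 10
\;\Longleftrightarrow\;
k > \frac{1}{14}\ \text{day}^{-1} \approx 0.071\ \text{day}^{-1}
```

Under these assumptions, anyone whose discount rate exceeds roughly 0.07 per day would prefer $10 now to $15 in a week.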

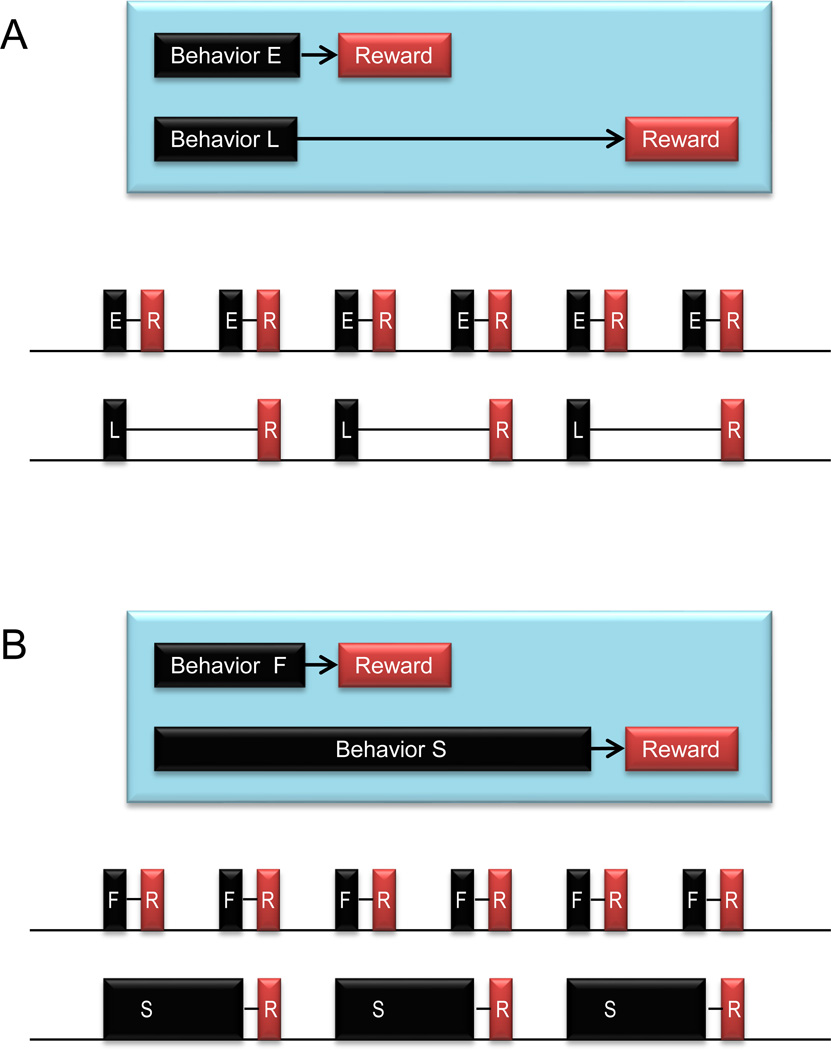

Figure 1.

Two kinds of reward delay affecting reward value – hypothetical tasks. A: External delay. In front of you (as a subject) are two buttons, E and L. If you press button E, you will get a drop of juice 1 second later. If you press button L, you will get a drop of juice 5 seconds later. After you have consumed the drop of juice, you can press the button again. Shown here are two extreme cases: you continue to press button E, or continue to press button L. The amount of reward per unit time is larger in the former case. B: Internal delay. In front of you are two buttons, F and S. They are located differently so that you can reach button F in 1 second and button S in 5 seconds. Once you press either button, you will get a drop of juice 1 second later.

One important question concerns the reasons for the reward delay. In the example shown in Fig. 1A, one would simply have to wait for the reward. But this is certainly not the only situation. Another and perhaps more common type of reward delay derives from one’s own behavior (Fig. 1B). Thus, the duration of the behavior acts as a reward delay [20, 21]. If the behavior takes too long a time, it becomes less useful.

There is an important difference between the two kinds of reward delay: unlike the waiting-based reward delay (Fig. 1A), the behavior-based reward delay (Fig. 1B) can be shortened (Fig. 2A) by modifying one’s behavior. From the viewpoint of foraging theory, a quicker behavior enhances one’s fitness to survive because one can get more energy per unit time [20, 22].
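The hypothetical tasks of Fig. 1 can be turned into a small calculation of reward per unit time. The sketch below is illustrative only: the function name `reward_rate`, the assumption that a button press in Fig. 1A takes no time, and the default consumption time of zero are ours, not part of the original tasks.

```python
def reward_rate(drops_per_trial, behavior_s, wait_s, consume_s=0.0):
    """Drops of juice per second when the same trial is repeated back to back.

    behavior_s: time spent on one's own behavior (internal delay).
    wait_s:     time spent waiting after the behavior (external delay).
    consume_s:  time spent consuming the reward (assumed 0 by default).
    """
    trial_duration = behavior_s + wait_s + consume_s
    if trial_duration <= 0:
        raise ValueError("trial duration must be positive")
    return drops_per_trial / trial_duration


# Fig. 1A, external delay: the press itself is assumed to be instantaneous.
rate_E = reward_rate(1, behavior_s=0.0, wait_s=1.0)  # button E
rate_L = reward_rate(1, behavior_s=0.0, wait_s=5.0)  # button L

# Fig. 1B, internal delay: reaching takes 1 s (F) or 5 s (S), then a 1 s wait.
rate_F = reward_rate(1, behavior_s=1.0, wait_s=1.0)  # button F
rate_S = reward_rate(1, behavior_s=5.0, wait_s=1.0)  # button S

for name, rate in [("E", rate_E), ("L", rate_L), ("F", rate_F), ("S", rate_S)]:
    print(f"button {name}: {rate:.3f} drops per second")
```

Only `behavior_s` is under the subject's control, which is why shortening one's own behavior is the available route to a higher reward rate.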

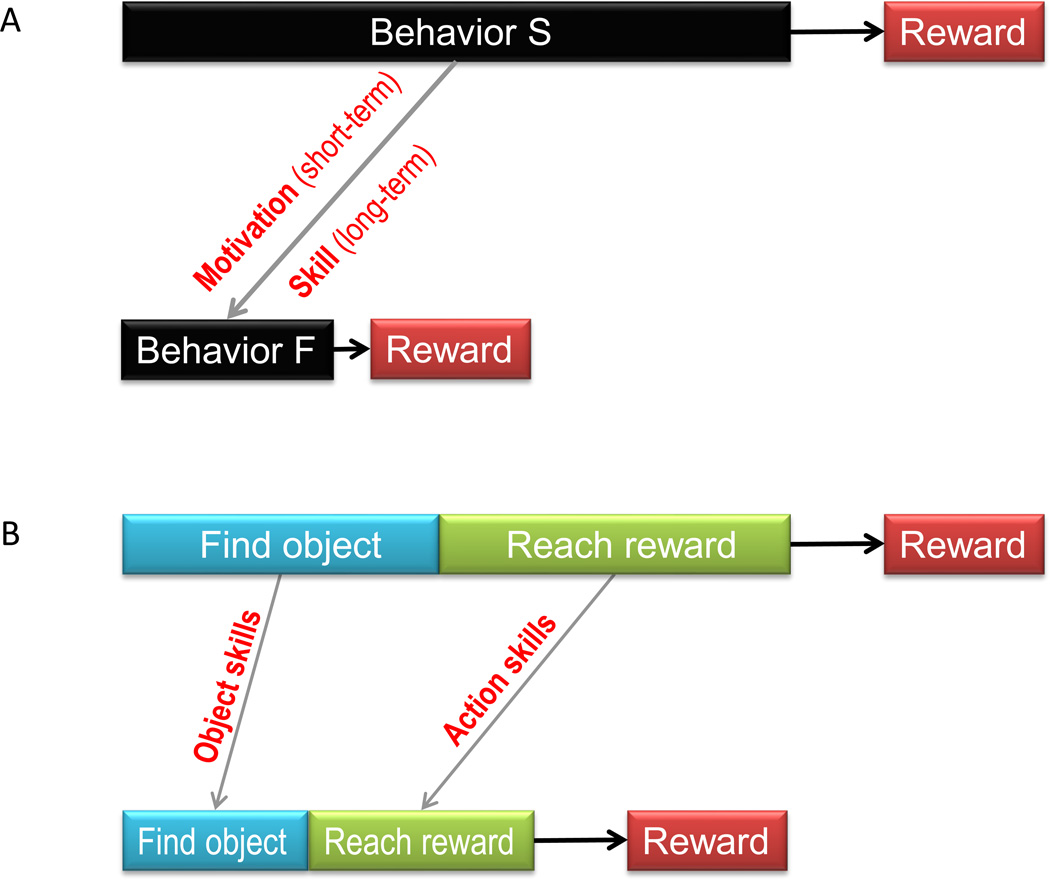

Figure 2.

Internal reward delay can be shortened. A: Motivation or skill shortens internal reward delay by shortening one’s own behavior. B: Two kinds of skill that shorten reward delay.

Motivation and skill reduce the duration of behavior

The speed of behavior (and consequently its value) can be increased temporarily by motivation or permanently by skill (Fig. 2A). Motivation is initiated quickly if a reward is expected, but is dissolved quickly if no reward is expected. Its major advantage is flexibility. Skill is acquired slowly by repeating a behavior, but is maintained for a long time. Its major advantage is stability.

Which one is more effective in reducing reward delay? The duration of a behavior (e.g., saccadic eye movement) is shorter if a reward is expected than if no reward is expected after the behavior [23, 24]. This is due to motivation. A similar but larger bias is seen in reaction time (the time until the behavior starts). However, the difference is usually less than twofold [25–27]. The effect of skill is often much larger. If the duration of a behavior is compared between the initial (novice) stage and the late (skill) stage, the shortening can be nearly tenfold [1, 28]. Therefore, in the long run, skill can reduce reward delay more robustly than motivation. Other features of skill enable individuals to reach a goal easily (by automaticity) and securely (by stereotypy and stability). Therefore, we will focus mainly on skill in the following discussion.

Two kinds of skill

Our main message above was “reward delay can be shortened by accelerating one’s own behavior.” But what exactly does ‘behavior’ mean? Human hunters as well as monkeys spend time in capturing an animal as their food resource [3, 29]. But before that, they need to find the animal using various sensory cues. By repeated experience, the humans and monkeys become proficient in both finding good prey animals and capturing them. Thus, the modifiable reward delay consists of two components: 1) the delay before finding a good object and 2) the delay before reaching a reward by acting on the object (Fig. 2B). If the goal of learning is to maximize reward gain [30], both of these delays need to be shortened. Indeed, the delays become much shorter with learning. The two types of skill that shorten them could be called “object skill” and “action skill.”

A similar distinction has been proposed in foraging research [17, 31]. While foraging, animals spend time in two steps, ‘searching’ for and ‘handling’ food, before consuming it. Since these two kinds of foraging time are usually spent sequentially, any attempt to reduce each of these times should enhance fitness for survival.

Note, however, that abilities equivalent to object skill and action skill have been studied extensively in the laboratory setting. The acquisition of object skill may depend on attention, perceptual learning, and episodic memory [32–37]. The acquisition of action skill may depend on motivation, procedural learning, and motor learning [10, 38–40]. We feel, however, that these mental abilities have rarely been studied in an integrative manner with a common functional basis, as recent opinion articles argue [41, 42]. This is particularly true of the abilities supporting object skill. For both humans and animals, finding good objects can be the most challenging and time-consuming process [3, 29]. We will thus focus on object skill in the rest of this article.

Object skill – stable values

In experiments on attention and perceptual learning, the subjects are usually presented with stimuli or objects and are asked to mentally choose one of them. In everyday life, however, there is often no such instruction. We choose a particular object because, by doing so, we have accomplished something rewarding, probably multiple times. We avoid an object because the outcome was less rewarding or punishing. In other words, the proficiency of finding good objects is the result of value-based learning [41, 42].

What is critical about the development of object skill is consistency: an object needs to be associated with a high value consistently. This has been shown repeatedly in various psychological experiments [32, 43, 44]. Such consistency is not unusual, either in laboratory experiments or in everyday life. As a result, people may develop amazing abilities to find important objects or changes in them [2, 32, 45, 46]. Similar situations are common in everyday life for both humans and animals. For example, the visual appearance of a favorable food may not change during one’s entire life. Its value has been high for many years and will remain high for many years. The object has a stable high value.

Object skill – gaze and attention

There are several ways to find a good object. Animals may use different sensory modalities, such as visual, auditory, somatosensory, olfactory, and electromagnetic senses [47–49]. For primates, including humans, vision is a dominant modality [50, 51]. Indeed, studies on humans and monkeys have suggested that gaze and/or attention is drawn to an object that has a high value [52–58], which is often followed by a manual action performed on the object [59–62]. The values were attached to the objects during previous experiences with the objects. Importantly, such a gaze/attention bias may occur regardless of the subject’s current intention [55, 58]. This implies that the gaze/attention bias can be automatic. Such a bias is distracting in a volatile condition where the values of objects change frequently. In a more stable condition, however, the automatic gaze/attention bias is critical for survival, as we will discuss below.

Hikosaka and colleagues have conducted a series of behavioral and physiological experiments using macaque monkeys to address questions about object skill, using the value-based gaze bias as a behavioral measure [63–65]. Unlike other mammals, but like humans, macaque monkeys have well-developed visual cortices, especially the temporal cortex, where information on visual objects is processed [50]. They make saccadic eye movements vigorously to orient their gaze to surrounding objects [66, 67]. Such saccades are faster than in humans [67, 68].

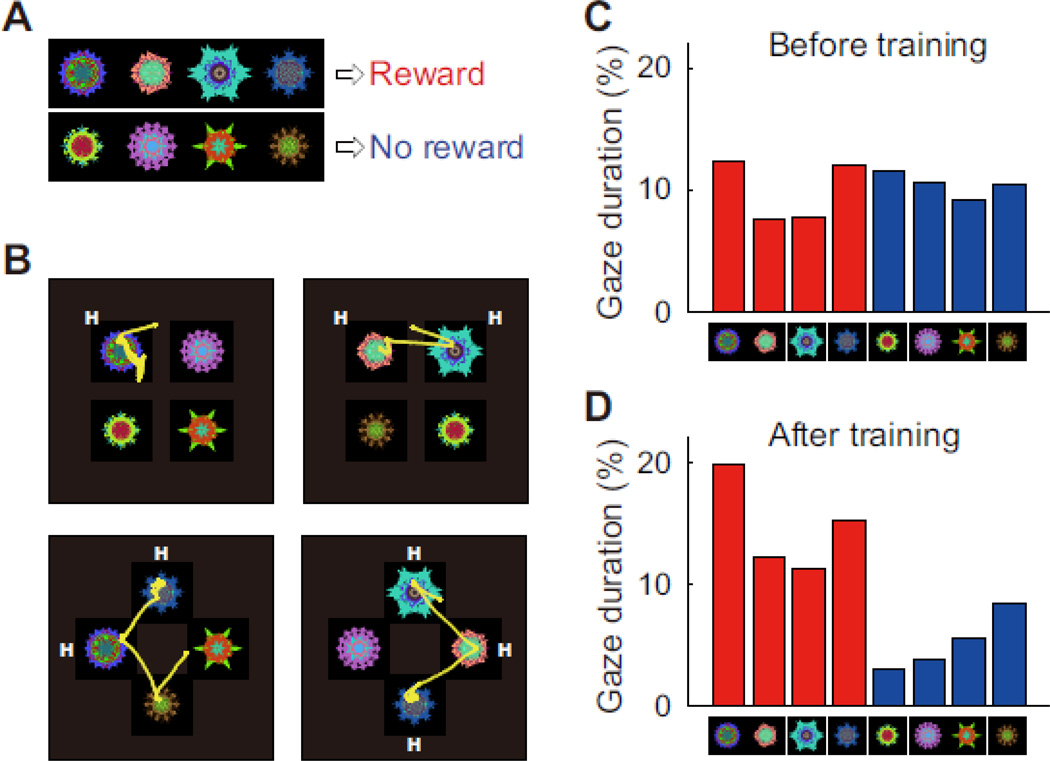

The hypothesis underlying this series of experiments was: Object skill develops if the subject experiences objects repeatedly with stable values. The monkeys in these experiments experienced many fractal objects, each with a large or small (or no) reward (Fig. 3). As a consequence, half of the objects became high-valued, and the other half low-valued. The monkeys’ eye movements were tested using a free viewing condition, as exemplified in Fig. 4. After several daily learning sessions, the monkeys started showing a gaze bias: more frequent saccades to high-valued objects and longer gaze on them [63, 65]. The gaze bias was considered automatic because it occurred reliably and repeatedly even though no reward was ever given after a saccade to any object during free viewing. This is consistent with the data obtained in human subjects described above.

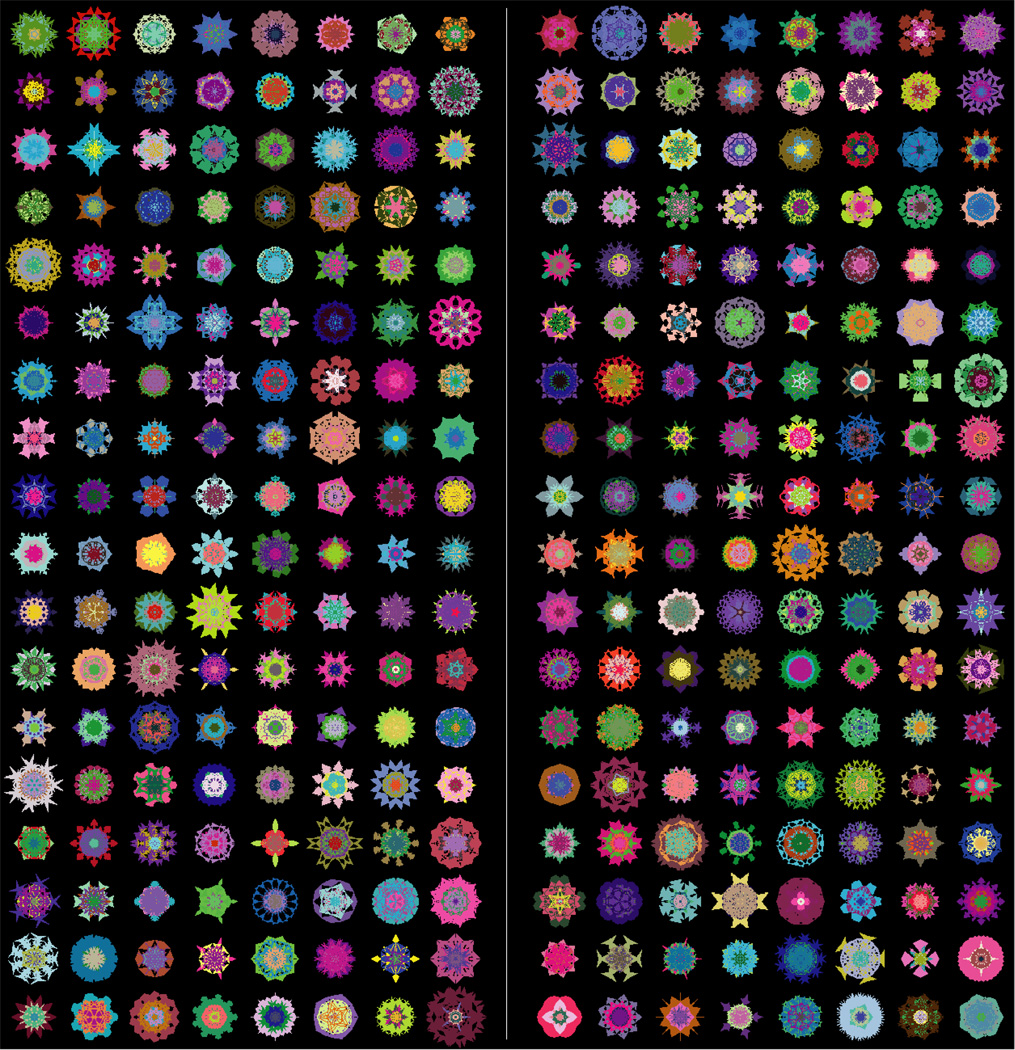

Figure 3.

Fractal objects that one monkey experienced for a long time with biased rewards (n=288). In each row of eight fractals, the left four fractals were high-valued objects (consistently associated with a large reward) and the right four fractals were low-valued objects (consistently associated with a small reward). Reproduced from [65].

Figure 4.

Object skill expressed as automatic gaze bias. A: Procedure for learning stable values of visual objects. Half of the objects were associated with a reward (high-valued objects) and the other half were associated with no (or a small) reward (low-valued objects). B: Free viewing procedure for testing the value-based gaze bias. On each trial, 4 fractal objects were chosen pseudo-randomly from a set of 8 learned objects (A) and presented simultaneously. The monkey freely looked at them, but no reward was delivered. Shown here are examples of saccade trajectories. The monkey tended to look at high-valued objects (denoted as ‘H’). C, D: The percentage of the gaze duration on each object before (C) and after (D) the long-term learning. Red and blue indicate high- and low-valued objects, respectively. Reproduced from [63].

Object skill – automaticity

A skill is characterized by automaticity [2, 7, 69]. When human observers have extensively learned to find particular visual objects, they become unaware of their own perceptual distinction or memory retrieval [32, 33, 70]. The automatic nature of object skill is important for the following reasons. Suppose you have experienced all of the fractals in Fig. 3, half of them with a reward and the other half with no reward, many times. Suppose, then, some of these objects are placed in front of you, as in Fig. 4B.

Can you choose the objects with high values and avoid the objects with low values? In fact, this is similar to what the monkeys did. All of the objects shown in Fig. 3 are what one of the monkeys experienced with high and low values many times, and the monkeys’ choice was tested using the free viewing procedure shown in Fig. 4. For any combination of high- and low-valued objects, the monkeys showed a gaze bias similar to that shown in Fig. 4C and D [63, 65].

Such proficient performance would not be possible if humans or monkeys relied on voluntary (not automatic) search. We would have to rely on search images of desired objects in working memory [71]. Note that we cannot hold many items (say, more than seven) in working memory [72]. If we have experienced many objects with different values (as in Fig. 3), it is unlikely that the objects we encounter (as in Fig. 4B) match the search images held in working memory. Then, we cannot choose high-valued objects right away. We have to retrieve a memory associated with each object and judge its value based on the memory. And we have to repeat this memory retrieval-judgment process for all objects we encounter. This is a serial process and hence time-consuming [73, 74]. In terms of the argument made at the beginning of this article, the amount of reward we get per unit time would then be very small.
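The argument that encountered objects rarely match the search images held in working memory can be made concrete with a rough calculation. The sketch below uses the numbers mentioned in the text (288 learned objects as in Fig. 3, 4 objects shown as in Fig. 4B, about 7 items in working memory). The assumptions are ours: search images and shown objects are drawn uniformly at random from the learned set, and the per-object retrieval time of 0.5 s is a purely hypothetical placeholder.

```python
from math import comb


def p_any_match(n_learned, n_templates, n_shown):
    """Probability that at least one shown object matches a held search image.

    Assumes both the search images and the shown objects are drawn uniformly
    at random, without replacement, from the same set of learned objects.
    """
    if not (0 <= n_templates <= n_learned and 0 <= n_shown <= n_learned):
        raise ValueError("counts must lie between 0 and n_learned")
    # Chance that every shown object falls outside the held search images.
    p_none = comb(n_learned - n_templates, n_shown) / comb(n_learned, n_shown)
    return 1.0 - p_none


def serial_search_time(n_shown, retrieval_s):
    """Time to retrieve and judge the value of each shown object one by one."""
    return n_shown * retrieval_s


p = p_any_match(n_learned=288, n_templates=7, n_shown=4)
t = serial_search_time(n_shown=4, retrieval_s=0.5)  # 0.5 s is hypothetical

print(f"chance that a search image matches a shown object: {p:.3f}")
print(f"serial retrieval time for 4 objects: {t:.1f} s")
```

Under these assumptions a match occurs on fewer than one trial in ten, so the slower serial retrieval would be needed on most encounters.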

What monkeys experienced in the laboratory (Fig. 3 and 4) is probably similar to what they would experience in the wild. A monkey would experience many objects as it forages for food and as it interacts with animate and inanimate objects. On any day, the monkey may encounter some of these objects, but often unexpectedly. The situation may be magnified in human lives. For example, when we read newspapers, there are many pieces of information, but we are quickly drawn to important ones even if we have not expected them.

These considerations suggest that the amazing ability of choosing high-valued objects among many others (i.e., object skill) depends on an automatic mechanism which is different from the mechanisms underlying voluntary choice. Neurophysiological experiments have suggested that the neural mechanism of object skill is, at least partly, located in a restricted part of the basal ganglia (the tail of the caudate nucleus and the substantia nigra pars reticulata) [63–65] (Box 1). This is consistent with a long-standing theory that the medial temporal lobe (including hippocampal areas) is essential for declarative memory whereas the basal ganglia are essential for non-declarative memory, specifically skills or habits [75]. Indeed, it was shown that the tail of the caudate nucleus, which receives inputs from temporal visual cortical areas [76], is necessary for learning to choose high-valued objects [77].

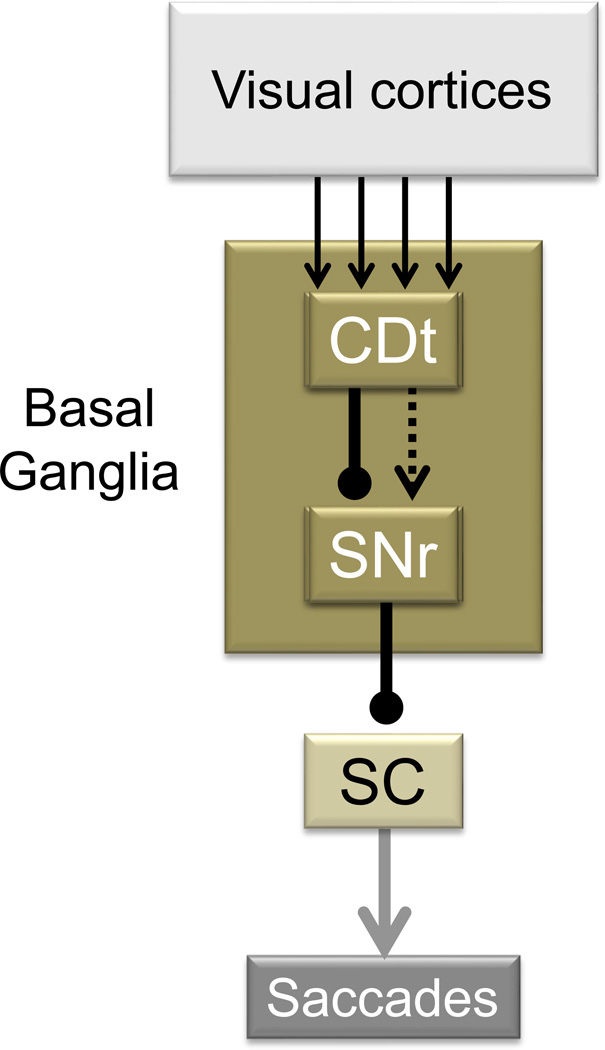

Box 1: Neural mechanism of object skill.

Experiments by Hikosaka and colleagues have suggested that object skills are supported by a neural circuit in the basal ganglia, namely, the connection from the tail of the caudate nucleus (CDt) to the superior colliculus (SC) through the substantia nigra pars reticulata (SNr) (Figure). The CDt-SNr-SC circuit represents the characteristics described in the main text, as shown below.

Stable values: Both CDt and SNr neurons encoded stable values of visual objects. During learning, monkeys looked at many fractal objects repeatedly and consistently in association with high- or low-reward values (see Fig. 3 and 4A). After short-term learning CDt and SNr neurons showed little value-based preference. However, after learning across several days, CDt and SNr neurons started showing differential responses to the high- and low-valued objects. CDt neurons are typically excited more strongly by high-valued objects [63]. SNr neurons are typically inhibited by high-valued objects and excited by low-valued objects [65].

Gaze: Many of the SNr neurons were shown to project their axons to the SC [65] which is the key structure for initiating saccadic eye movements [93]. Weak electrical stimulation in the CDt induced saccades [64]. The CDt-induced saccades are likely caused by the disinhibition of SC neurons, since both the direct CDt-SNr connection and the SNr-SC connection are GABAergic and inhibitory [94]. The CDt-SNr-SC oculomotor circuit is necessary for object skill because reversible inactivation of the CDt with a GABA agonist (muscimol) eliminated the gaze bias during free viewing (Fig. 4), but only for objects presented on the side contralateral to the inactivation [86].

Automaticity: Both CDt and SNr neurons responded to high- and low-valued objects differentially even when no reward was delivered after the object presentation. The differential responses occurred either when a saccade was prohibited [65] or allowed [86]. During the free viewing procedure (Fig. 4), CDt neurons were excited before saccades, and did so more strongly when the saccades were directed to objects with their preferred values [86].

High capacity memory: After the monkeys had experienced many objects (up to 300 for each monkey) with biased values, both CDt and SNr neurons reliably classified them based on their stable values [65].

Long-term retention: The neuronal bias based on stable values remained intact even after >100 days of no training, even though the monkey continued to learn many other objects. This was shown systematically for SNr neurons [65].

Object skill – high capacity memory

It is now clear that object skill requires high capacity memory. The association of an object and its stable value makes up a single memory. The number of memories should correspond to the number of learned objects. This is the minimum requirement, however. If a combination of objects has a different value (which is likely), the number of memories would increase drastically.
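How drastically the number of memories could grow is easy to illustrate. The sketch below counts one memory per object and, as an assumption made here only for illustration, one additional memory for every distinct combination of objects up to a given size. The function name `memories_needed` is hypothetical, and 288 is the number of fractals in Fig. 3.

```python
from math import comb


def memories_needed(n_objects, max_group_size=1):
    """Count memories if every group of 1..max_group_size objects has its own value.

    With max_group_size=1 this is simply one memory per learned object.
    """
    if n_objects < 0 or max_group_size < 1:
        raise ValueError("n_objects must be >= 0 and max_group_size >= 1")
    return sum(comb(n_objects, k) for k in range(1, max_group_size + 1))


singles = memories_needed(288)                      # one memory per object
with_pairs = memories_needed(288, max_group_size=2)  # objects plus all pairs
with_triples = memories_needed(288, max_group_size=3)

print(singles, with_pairs, with_triples)
```

Even when only pairs are added, the count is more than a hundred times the number of single objects, which is the sense in which the requirement grows drastically.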

Moreover, the object-value memory must have a robust access to motor outputs, so that we can physically choose high-valued objects. Importantly, this memory-motor connection must be established for each object. For primates (humans and monkeys), eye movement would be the primary motor output for choice, because a gaze choice precedes or guides a manual choice, as we discussed earlier. Saccadic eye movements during free viewing (Fig. 4) would reflect the robust connection between value memory and eye movement.

In other words, the automatic nature of object skill is enabled only after establishing the memory-motor connections for individual objects, robustly and selectively. This ensures two important aspects of skill: accuracy and speed. Only if the memory-motor connection is unique to individual objects can the choice be accurate. Only if the object-value information is quickly transmitted through the memory-motor connection can the choice be made quickly.

This feature – memory-motor connection – is similar to another popular concept – stimulus-response association – which characterizes skill or habit [78]. In the framework of operant conditioning, a particular response or stimulus-response association is reinforced [78, 79]; ‘stimulus’ itself is assigned no particular value. This may apply to action skill in which the right ‘response’ needs to be chosen and quickened. For object skill, however, it is possible that the representation of ‘stimulus’ is reinforced. According to this scheme, the brain first creates a memory of stimulus-value association, based on which approach or avoid responses are recruited. The neurophysiological data (Box 1) are consistent with this scheme [63, 65]. We thus hypothesize that object skill requires high capacity memory for individual stimuli (i.e., objects), whereas action skill requires high capacity memory for individual responses (i.e., motor actions).

One feature may be missing in the above argument: spatial position. Each object may occupy a particular position and thus the action performed on the object may have a corresponding spatial feature (e.g., saccade with a particular vector). Although it is unclear whether the object-value memory contains spatial information, the neurophysiological data (Box 1) suggest that the basal ganglia system supporting object skill carries spatial information.

Object skill – long-term retention

Human studies on the value-based gaze/attention bias have shown that, once the subjects have acquired a bias, the bias is retained for several days [57, 80]. Studies using monkeys showed that the value-based gaze bias remains robust for more than 100 days [65]. In this specific study [65], the monkeys were not shown some of the value-associated objects for a long time, and then their choices were suddenly tested using free viewing. The monkeys looked at the previously high-valued objects more often than the previously low-valued objects. This was non-trivial because, during the retention period, the monkeys continued to experience many other fractals, several of which were very similar to the retained fractals. This means that the stable object-value memory is highly object-selective and is hardly interfered with by more recently experienced objects.

Conceptually, this endurance of the object-value memory is necessary for its high capacity. High capacity memory is created only if each memory is retained for a long time. Otherwise, as new memories are acquired, old memories are lost; then, memories would not accumulate. On the other hand, the stable object-value memory is not created immediately. It took about 5 daily learning sessions before the monkeys started preferentially looking at objects that have been associated with high values [65]. To summarize, the stable object-value memory as well as its associated object skill is created slowly, but once established it remains for a long time. This is what characterizes ‘skill’ [40, 81].

Object skill – Limitations

We have emphasized mainly the advantage of skill, especially object skill. However, relying on object skill completely is risky because of its automatic nature. Object skill is basically blind to recent changes in the values of individual objects. If a previously high-valued object becomes toxic, we may not be able to stop reaching for it [53]. This poses a serious problem because motor behavior triggered by object skill (e.g., gaze orienting and reaching) occurs quickly and automatically in response to the appearance of high-valued objects. A similar situation happens during drug abuse [82].

A reasonable way to cope with this problem would be to have another system that is sensitive to recent changes in objects’ values and, based on the updated information, change its outputs. This is how voluntary search would work. As we indicated, however, voluntary search itself is slow and capacity-limited. Therefore, the brain should have two systems, one working for voluntary search and the other for automatic search (object skill), as several studies suggest [38, 83–90].

Conclusion

An ultimate advantage of skilled behavior is multi-tasking [91]. Because skills are automatic, more than one can be performed in parallel (including a combination of object and action skills). Object skill enables humans and animals to evaluate objects in any environment and choose a high-valued object quickly and automatically. The choice then allows humans and animals to act on the chosen object quickly so that a rewarding outcome can be obtained as soon as possible. When one is writing a manuscript, one’s gaze and fingers are moving automatically. The automaticity frees up one’s cognitive resources so that one can focus on the conceptual arrangement of the manuscript. This means that cognitive functions heavily rely on one’s ability to utilize skills [92].

Figure Box 1.

Basal ganglia circuit that supports object skill. Neurons in the monkey CDt receive inputs from the temporal visual cortices and respond to visual objects differentially. Their responses are modulated by the stable (not flexible) values of the visual objects. Neurons in the SNr, which receive inputs from the CDt directly or indirectly, categorize visual objects into high- and low-valued objects. Their stable value signals are then sent to the SC, thereby biasing gaze toward high-valued objects. Arrows indicate excitatory connections (or effects). Lines with circular dots indicate inhibitory connections. Solid and hatched lines indicate direct and indirect connections, respectively.

Box 2. Questions for future research.

In addition to reward values, there are several important factors that influence skill acquisition or are influenced by skills, which need to be analyzed in future research. They include cost, uncertainty, and salience.

Cost

The value of a reward is reduced if the cost for obtaining the reward is high [17, 95]. In this sense, skill is beneficial since the metabolic energy cost decreases as a skill is acquired [96]. More important but less well known is whether or how much the energy spent in the brain decreases with skill acquisition. Activations of cortical and striatal areas (measured in fMRI experiments) tend to decrease with practice [11, 97] except for the early visual cortical areas [98], but it is unclear whether this reflects the general decrease in brain energy expenditure.

Uncertainty

There are two aspects of uncertainty that are relevant to skills, but in different ways. 1) Uncertainty in the environment: Reward outcome is often uncertain. Reward uncertainty, which is detected as reward prediction error [99], may drive learning [41, 100, 101], and consequently facilitate skill acquisition. On the other hand, if there is another option that provides certain information or reward, one may choose the certain option [102, 103], thereby suppressing learning and skill acquisition. 2) Uncertainty of one’s own behavior: Behavior is intrinsically variable [104, 105], which may lead to a random delay of reward, or even no reward [106]. However, the variability is reduced by skill acquisition [107, 108]. This may act as an incentive for establishing skills.

Salience

In addition to something rewarding, humans and animals may need to deal efficiently with something harmful. If a potentially harmful object appears, one would first look at it [109]. One then needs to escape from or fight the object [110]. It would be useful to develop skills to detect such harmful objects quickly (object skill) and escape or fight quickly (action skill). In a broad sense, one needs to find and act on both something rewarding and something harmful. Little is known about whether the brain processes these two kinds of salience in the same or a different manner in order to develop skills [111].

Highlights.

Skill makes a behavior quicker and thus increases rewards per unit time.

Skill consists of object skill (find good objects) and action skill (act on them).

Object skill is acquired through repeated experiences of objects with stable values.

Object skill allows automatic and quick choices of good objects among many.

Object skill compensates for the limited capacity of voluntary search.

Acknowledgements

This work was supported by the intramural research program at the National Eye Institute.


References

- 1.Newell A, Rosenbloom PS. Mechanisms of skill acquisition and the law of practice. In: Anderson JR, editor. Cognitive Skills and Their Acquisition. Erlbaum; 1981. pp. 1–55. [Google Scholar]

- 2.Ericsson KA, Lehmann AC. Expert and exceptional performance: evidence of maximal adaptation to task constraints. Annual review of psychology. 1996;47:273–305. doi: 10.1146/annurev.psych.47.1.273. [DOI] [PubMed] [Google Scholar]

- 3.Gurven M, et al. How long does it take to become a proficient hunter? Implications for the evolution of extended development and long life span. Journal of human evolution. 2006;51:454–470. doi: 10.1016/j.jhevol.2006.05.003. [DOI] [PubMed] [Google Scholar]

- 4.Kaplan HS, Robson AJ. The emergence of humans: the coevolution of intelligence and longevity with intergenerational transfers. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:10221–10226. doi: 10.1073/pnas.152502899. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Helton WS. Expertise acquisition as sustained learning in humans and other animals: commonalities across species. Animal cognition. 2008;11:99–107. doi: 10.1007/s10071-007-0093-4. [DOI] [PubMed] [Google Scholar]

- 6.Doyon J. Skill learning. International review of neurobiology. 1997;41:273–294. doi: 10.1016/s0074-7742(08)60356-6. [DOI] [PubMed] [Google Scholar]

- 7.Ashby FG, et al. Cortical and basal ganglia contributions to habit learning and automaticity. Trends Cogn Sci. 2010;14:208–215. doi: 10.1016/j.tics.2010.02.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hikosaka O, et al. Central mechanisms of motor skill learning. Curr Opin Neurobiol. 2002;12:217–222. doi: 10.1016/s0959-4388(02)00307-0. [DOI] [PubMed] [Google Scholar]

- 9.Salmon DP, Butters N. Neurobiology of skill and habit learning. Current Opinion in Neurobiology. 1995;5:184–190. doi: 10.1016/0959-4388(95)80025-5. [DOI] [PubMed] [Google Scholar]

- 10.Willingham DB. A neuropsychological theory of motor skill learning. Psychological Review. 1998;105:558–584. doi: 10.1037/0033-295x.105.3.558. [DOI] [PubMed] [Google Scholar]

- 11.Poldrack RA, et al. The neural correlates of motor skill automaticity. J Neurosci. 2005;25:5356–5364. doi: 10.1523/JNEUROSCI.3880-04.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Yin HH, et al. Dynamic reorganization of striatal circuits during the acquisition and consolidation of a skill. Nat Neurosci. 2009 doi: 10.1038/nn.2261. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Seger CA, Spiering BJ. A critical review of habit learning and the Basal Ganglia. Front Syst Neurosci. 2011;5:66. doi: 10.3389/fnsys.2011.00066. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Rodriguez ML, Logue AW. Adjusting delay to reinforcement: comparing choice in pigeons and humans. Journal of experimental psychology. Animal behavior processes. 1988;14:105–117. [PubMed] [Google Scholar]

- 15.Rosati AG, et al. The evolutionary origins of human patience: temporal preferences in chimpanzees, bonobos, and human adults. Curr Biol. 2007;17:1663–1668. doi: 10.1016/j.cub.2007.08.033.

- 16.Myerson J, Green L. Discounting of delayed rewards: Models of individual choice. Journal of the experimental analysis of behavior. 1995;64:263–276. doi: 10.1901/jeab.1995.64-263.

- 17.Pyke GH, et al. Optimal foraging: a selective review of theory and tests. The Quarterly Review of Biology. 1977;52:137–154.

- 18.Emlen JM. The role of time and energy in food preference. Am Nat. 1966;100:611–617.

- 19.Chung SH, Herrnstein RJ. Choice and delay of reinforcement. Journal of the experimental analysis of behavior. 1967;10:67–74. doi: 10.1901/jeab.1967.10-67.

- 20.Shadmehr R. Control of movements and temporal discounting of reward. Current opinion in neurobiology. 2010;20:726–730. doi: 10.1016/j.conb.2010.08.017.

- 21.Shadmehr R, et al. Temporal discounting of reward and the cost of time in motor control. J Neurosci. 2010;30:10507–10516. doi: 10.1523/JNEUROSCI.1343-10.2010.

- 22.Niv Y, et al. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- 23.Haith AM, et al. Evidence for hyperbolic temporal discounting of reward in control of movements. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2012;32:11727–11736. doi: 10.1523/JNEUROSCI.0424-12.2012.

- 24.Takikawa Y, et al. Modulation of saccadic eye movements by predicted reward outcome. Experimental Brain Research. 2002;142:284–291. doi: 10.1007/s00221-001-0928-1.

- 25.Lauwereyns J, et al. A neural correlate of response bias in monkey caudate nucleus. Nature. 2002;418:413–417. doi: 10.1038/nature00892.

- 26.Bowman EM, et al. Neural signals in the monkey ventral striatum related to motivation for juice and cocaine rewards. Journal of Neurophysiology. 1996;75:1061–1073. doi: 10.1152/jn.1996.75.3.1061.

- 27.Minamimoto T, et al. Complementary process to response bias in the centromedian nucleus of the thalamus. Science. 2005;308:1798–1801. doi: 10.1126/science.1109154.

- 28.Crossman ERFW. A theory of the acquisition of speed-skill. Ergonomics. 1959;2:153–166.

- 29.Gunst N, et al. Development of skilled detection and extraction of embedded prey by wild brown capuchin monkeys (Cebus apella apella). Journal of comparative psychology. 2010;124:194–204. doi: 10.1037/a0017723.

- 30.Dayan P, Balleine B. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- 31.Kamil AC, Roitblat HL. The ecology of foraging behavior: implications for animal learning and memory. Annual review of psychology. 1985;36:141–169. doi: 10.1146/annurev.ps.36.020185.001041.

- 32.Shiffrin RM, Schneider W. Controlled and automatic human information processing: II. Perceptual learning, automatic attending, and a general theory. Psychol Rev. 1977;84:127–190.

- 33.Sigman M, Gilbert CD. Learning to find a shape. Nature neuroscience. 2000;3:264–269. doi: 10.1038/72979.

- 34.Karni A, Bertini G. Learning perceptual skills: behavioral probes into adult cortical plasticity. Current opinion in neurobiology. 1997;7:530–535. doi: 10.1016/s0959-4388(97)80033-5.

- 35.Ahissar M, Hochstein S. Learning pop-out detection: specificities to stimulus characteristics. Vision research. 1996;36:3487–3500. doi: 10.1016/0042-6989(96)00036-3.

- 36.Seitz AR, et al. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61:700–707. doi: 10.1016/j.neuron.2009.01.016.

- 37.Schwartz BL, Evans S. Episodic memory in primates. American journal of primatology. 2001;55:71–85. doi: 10.1002/ajp.1041.

- 38.Hikosaka O, et al. Parallel neural networks for learning sequential procedures. Trends in Neurosciences. 1999;22:464–471. doi: 10.1016/s0166-2236(99)01439-3.

- 39.Krakauer JW, Mazzoni P. Human sensorimotor learning: adaptation, skill, and beyond. Current opinion in neurobiology. 2011;21:636–644. doi: 10.1016/j.conb.2011.06.012.

- 40.Adams JA. Historical Review and Appraisal of Research on the Learning, Retention, and Transfer of Human Motor Skills. Psychol Bull. 1987;101:41–74.

- 41.Gottlieb J. Attention, learning, and the value of information. Neuron. 2012;76:281–295. doi: 10.1016/j.neuron.2012.09.034.

- 42.Awh E, et al. Top-down versus bottom-up attentional control: a failed theoretical dichotomy. Trends in cognitive sciences. 2012;16:437–443. doi: 10.1016/j.tics.2012.06.010.

- 43.Nissen MJ, Bullemer P. Attentional requirements of learning: evidence from performance measures. Cognitive Psychology. 1987;19:1–32.

- 44.Chun MM. Contextual cueing of visual attention. Trends in Cognitive Sciences. 2000;4:170–178. doi: 10.1016/s1364-6613(00)01476-5.

- 45.Williams AM. Perceptual skill in soccer: implications for talent identification and development. Journal of sports sciences. 2000;18:737–750. doi: 10.1080/02640410050120113.

- 46.Dye MW, et al. Increasing Speed of Processing With Action Video Games. Current directions in psychological science. 2009;18:321–326. doi: 10.1111/j.1467-8721.2009.01660.x.

- 47.Rowe C. Receiver psychology and the evolution of multicomponent signals. Animal behaviour. 1999;58:921–931. doi: 10.1006/anbe.1999.1242.

- 48.Laska M, et al. Which senses play a role in nonhuman primate food selection? A comparison between squirrel monkeys and spider monkeys. American journal of primatology. 2007;69:282–294. doi: 10.1002/ajp.20345.

- 49.Kulahci IG, et al. Multimodal signals enhance decision making in foraging bumble-bees. Proceedings. Biological sciences / The Royal Society. 2008;275:797–802. doi: 10.1098/rspb.2007.1176.

- 50.Orban GA, et al. Comparative mapping of higher visual areas in monkeys and humans. Trends in cognitive sciences. 2004;8:315–324. doi: 10.1016/j.tics.2004.05.009.

- 51.Paukner A, et al. Tufted capuchin monkeys (Cebus apella) spontaneously use visual but not acoustic information to find hidden food items. Journal of comparative psychology. 2009;123:26–33. doi: 10.1037/a0013128.

- 52.Hikosaka O, et al. Basal ganglia orient eyes to reward. J Neurophysiol. 2006;95:567–584. doi: 10.1152/jn.00458.2005.

- 53.Peck CJ, et al. Reward modulates attention independently of action value in posterior parietal cortex. J Neurosci. 2009;29:11182–11191. doi: 10.1523/JNEUROSCI.1929-09.2009.

- 54.Theeuwes J, Belopolsky AV. Reward grabs the eye: oculomotor capture by rewarding stimuli. Vision research. 2012;74:80–85. doi: 10.1016/j.visres.2012.07.024.

- 55.Hickey C, et al. Reward guides vision when it's your thing: trait reward-seeking in reward-mediated visual priming. PLoS One. 2010;5:e14087. doi: 10.1371/journal.pone.0014087.

- 56.Kristjansson A, et al. Fortune and reversals of fortune in visual search: Reward contingencies for pop-out targets affect search efficiency and target repetition effects. Atten Percept Psychophys. 2010;72:1229–1236. doi: 10.3758/APP.72.5.1229.

- 57.Anderson BA, et al. Value-driven attentional capture. Proc Natl Acad Sci U S A. 2011;108:10367–10371. doi: 10.1073/pnas.1104047108.

- 58.Chelazzi L, et al. Rewards teach visual selective attention. Vision research. 2012. doi: 10.1016/j.visres.2012.12.005.

- 59.Hayhoe M, Ballard D. Eye movements in natural behavior. Trends Cogn Sci. 2005;9:188–194. doi: 10.1016/j.tics.2005.02.009.

- 60.Miyashita K, et al. Anticipatory saccades in sequential procedural learning in monkeys. J Neurophysiol. 1996;76:1361–1366. doi: 10.1152/jn.1996.76.2.1361.

- 61.Johansson RS, et al. Eye-hand coordination in object manipulation. Journal of Neuroscience. 2001;21:6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001.

- 62.Land MF. Eye movements and the control of actions in everyday life. Progress in retinal and eye research. 2006;25:296–324. doi: 10.1016/j.preteyeres.2006.01.002.

- 63.Yamamoto S, et al. Reward value-contingent changes of visual responses in the primate caudate tail associated with a visuomotor skill. Journal of Neuroscience. 2013. doi: 10.1523/JNEUROSCI.0318-13.2013.

- 64.Yamamoto S, et al. What and where information in the caudate tail guides saccades to visual objects. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2012;32:11005–11016. doi: 10.1523/JNEUROSCI.0828-12.2012.

- 65.Yasuda M, et al. Robust representation of stable object values in the oculomotor Basal Ganglia. The Journal of neuroscience: the official journal of the Society for Neuroscience. 2012;32:16917–16932. doi: 10.1523/JNEUROSCI.3438-12.2012.

- 66.Kato M, et al. Eye movements in monkeys with local dopamine depletion in the caudate nucleus. I. Deficits in spontaneous saccades. Journal of Neuroscience. 1995;15:912–927. doi: 10.1523/JNEUROSCI.15-01-00912.1995.

- 67.Berg DJ, et al. Free viewing of dynamic stimuli by humans and monkeys. Journal of vision. 2009;9(5):19.1–15. doi: 10.1167/9.5.19.

- 68.Fuchs AF. Saccadic and smooth pursuit eye movements in the monkey. J Physiol. 1967;191:609–631. doi: 10.1113/jphysiol.1967.sp008271.

- 69.Logan GD. Skill and automaticity: Relations, implications, and future directions. Can J Psychol. 1985;39:367–386.

- 70.Chun MM, Jiang Y. Contextual cueing: implicit learning and memory of visual context guides spatial attention. Cogn Psychol. 1998;36:28–71. doi: 10.1006/cogp.1998.0681.

- 71.Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- 72.Cowan N. The magical number 4 in short-term memory: a reconsideration of mental storage capacity. The Behavioral and brain sciences. 2001;24:87–114; discussion 114–185. doi: 10.1017/s0140525x01003922.

- 73.Sternberg S. Memory-scanning: mental processes revealed by reaction-time experiments. Am Sci. 1969;57:421–457.

- 74.Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- 75.Squire LR, Zola SM. Structure and function of declarative and nondeclarative memory systems. Proceedings of the National Academy of Sciences of the United States of America. 1996;93:13515–13522. doi: 10.1073/pnas.93.24.13515.

- 76.Saint-Cyr JA, et al. Organization of visual cortical inputs to the striatum and subsequent outputs to the pallido-nigral complex in the monkey. The Journal of comparative neurology. 1990;298:129–156. doi: 10.1002/cne.902980202.

- 77.Fernandez-Ruiz J, et al. Visual habit formation in monkeys with neurotoxic lesions of the ventrocaudal neostriatum. Proc Natl Acad Sci U S A. 2001;98:4196–4201. doi: 10.1073/pnas.061022098.

- 78.Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- 79.Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- 80.Della Libera C, et al. Dissociable effects of reward on attentional learning: from passive associations to active monitoring. PLoS One. 2011;6:e19460. doi: 10.1371/journal.pone.0019460.

- 81.Hikosaka O, et al. Long-term retention of motor skill in macaque monkeys and humans. Exp Brain Res. 2002;147:494–504. doi: 10.1007/s00221-002-1258-7.

- 82.Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci. 2005;8:1481–1489. doi: 10.1038/nn1579.

- 83.Ashby FG, Maddox WT. Human category learning. Annu Rev Psychol. 2005;56:149–178. doi: 10.1146/annurev.psych.56.091103.070217.

- 84.Daw ND, et al. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- 85.Evans JS. Dual-processing accounts of reasoning, judgment, and social cognition. Annu Rev Psychol. 2008;59:255–278. doi: 10.1146/annurev.psych.59.103006.093629.

- 86.Kim HF, Hikosaka O. Distinct basal ganglia circuits controlling behaviors guided by flexible and stable values. Neuron. doi: 10.1016/j.neuron.2013.06.044. (in press)

- 87.Redgrave P, et al. Goal-directed and habitual control in the basal ganglia: implications for Parkinson's disease. Nat Rev Neurosci. 2010;11:760–772. doi: 10.1038/nrn2915.

- 88.Schneider W, Shiffrin RM. Controlled and automatic human information processing: I. Detection, Search, and Attention. Psychol Rev. 1977;84:1–66.

- 89.Seger CA. How do the basal ganglia contribute to categorization? Their roles in generalization, response selection, and learning via feedback. Neurosci Biobehav Rev. 2008;32:265–278. doi: 10.1016/j.neubiorev.2007.07.010.

- 90.Yin HH, Knowlton BJ. The role of the basal ganglia in habit formation. Nat Rev Neurosci. 2006;7:464–476. doi: 10.1038/nrn1919.

- 91.Lee FJ, Taatgen NA. Multi-tasking as Skill Acquisition. Proceedings of the twenty-fourth annual conference of the cognitive science society. 2002:572–577.

- 92.Fischer KW. A theory of cognitive development: The control and construction of hierarchies of skills. Psychol Rev. 1980;87:477–531.

- 93.Sparks DL. The brainstem control of saccadic eye movements. Nat Rev Neurosci. 2002;3:952–964. doi: 10.1038/nrn986.

- 94.Hikosaka O, et al. Role of the basal ganglia in the control of purposive saccadic eye movements. Physiol Rev. 2000;80:953–978. doi: 10.1152/physrev.2000.80.3.953.

- 95.Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648.

- 96.Lay BS, et al. Practice effects on coordination and control, metabolic energy expenditure, and muscle activation. Human movement science. 2002;21:807–830. doi: 10.1016/s0167-9457(02)00166-5.

- 97.Wu T, et al. How self-initiated memorized movements become automatic: a functional MRI study. Journal of neurophysiology. 2004;91:1690–1698. doi: 10.1152/jn.01052.2003.

- 98.Sigman M, et al. Top-down reorganization of activity in the visual pathway after learning a shape identification task. Neuron. 2005;46:823–835. doi: 10.1016/j.neuron.2005.05.014.

- 99.Schultz W, et al. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 100.Behrens TE, et al. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 101.Dayan P, et al. Learning and selective attention. Nat Neurosci. 2000;3(Suppl):1218–1223. doi: 10.1038/81504.

- 102.Bromberg-Martin ES, Hikosaka O. Midbrain dopamine neurons signal preference for advance information about upcoming rewards. Neuron. 2009;63:119–126. doi: 10.1016/j.neuron.2009.06.009.

- 103.Kiani R, Shadlen MN. Representation of confidence associated with a decision by neurons in the parietal cortex. Science. 2009;324:759–764. doi: 10.1126/science.1169405.

- 104.Faisal AA, et al. Noise in the nervous system. Nat Rev Neurosci. 2008;9:292–303. doi: 10.1038/nrn2258.

- 105.Newell KM, Corcos DM. Variability and Motor Control. Human Kinetics Pub; 1993.

- 106.Ratcliff R, Rouder JN. Modeling Response Times for Two-Choice Decisions. Psychol Sci. 1998;9:347–356.

- 107.Dosher BA, Lu ZL. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:13988–13993. doi: 10.1073/pnas.95.23.13988.

- 108.Shmuelof L, et al. How is a motor skill learned? Change and invariance at the levels of task success and trajectory control. Journal of neurophysiology. 2012;108:578–594. doi: 10.1152/jn.00856.2011.

- 109.Mogg K, et al. Anxiety and orienting of gaze to angry and fearful faces. Biol Psychol. 2007;76:163–169. doi: 10.1016/j.biopsycho.2007.07.005.

- 110.Ellis ME. Evolution of aversive information processing: a temporal trade-off hypothesis. Brain, behavior and evolution. 1982;21:151–160. doi: 10.1159/000121623.

- 111.Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature. 2009;459:837–841. doi: 10.1038/nature08028.