Abstract

The present study investigates the relationship between inter-individual differences in fearful face recognition and amygdala volume. Thirty normal adults were recruited, and each completed two identical offline facial expression recognition tests and two magnetic resonance imaging (MRI) scans. Linear regression indicated that left amygdala volume was negatively correlated with the accuracy of recognizing fearful facial expressions. It was positively correlated with the probability of misrecognizing fear as surprise. Further exploratory analyses revealed that no other subcortical or cortical region showed this relationship. Left amygdala volume was also not related to performance in recognizing the other five facial expressions. These mind-brain associations highlight the importance of the amygdala in recognizing fearful faces. They also provide insights into inter-individual differences in sensitivity to fear-relevant stimuli.

Introduction

Fearful faces convey signals of potential threat, and recognizing such facial expressions with precision in conspecifics is evolutionarily advantageous [1], [2]. Within the human brain, the amygdala is presumed to play an essential role in processing such facial expressions [3]. Selective recognition deficits for fearful facial expressions were observed in humans with amygdala lesions [3]–[9]. A disrupted response to fearful faces in conspecifics was also shown in a recent study on amygdala-lesioned monkeys [10]. Converging evidence from functional neuroimaging studies indicated that viewing fearful faces led to increased activation in the amygdala for both normal humans [11]–[17] and monkeys [18].

Inter-individual differences in behavior can be predicted by differences in brain structure, which provides insights into the neural substrates underlying the corresponding behaviors [19], [20]. Reduced amygdala volume has been observed, compared with healthy controls, in patients with spider phobia [21], posttraumatic stress disorder (PTSD) [22], [23], and pediatric anxiety (particularly social phobia) [24]. These patients are thought to have increased sensitivity to specific fear-related stimuli [21]–[25]. For example, patients with social phobia are more sensitive to critical facial expressions [26]. In normal subjects, individuals with smaller amygdala volumes have been found to have smaller social networks [27] and to be less extraverted [28]. Additionally, low extraversion (high introversion) is related to increased levels of fear conditioning and fear sensitivity [29]. These studies of patients and normal adults make two hypotheses reasonable. First, individuals with a smaller amygdala might be more sensitive to fear-relevant stimuli, such as fearful faces [30]. Second, inter-individual variation in amygdala volume could predict performance in recognizing fearful faces.

To date, few studies have directly investigated the relationship between amygdala volume and inter-individual differences in fearful face recognition in normal adults. In the present study, we used a facial expression recognition test to evaluate each individual's performance in recognizing fearful facial expressions. To ensure the reliability of our behavioral data, each participant completed the same offline behavioral test twice, one month apart. MRI scans were also acquired twice, one week apart, to create a single, high signal-to-noise average volume [31]. We then calculated the correlation coefficients between amygdala volume and performance in fearful expression recognition. We also considered the intensity of fear in the presented images and the participants' trait anxiety levels [27], [32]–[35]. This gave a more comprehensive understanding of the relationship between amygdala volume and performance in fearful face recognition.

Methods

Ethics statement

The experimental procedure was approved by the IRB of the Institute of Psychology at the Chinese Academy of Sciences. All participants provided informed written consent before participating in our experiments.

Participants

A total of 30 right-handed normal undergraduates (age: 20.93 ± 1.72 years; 21 females) were recruited from Southwest University in China. Before recruitment, candidates were asked whether they had ever experienced any severe physical or mental injury, and only those who reported none were recruited. In addition, before entering the MRI scanner, participants completed a questionnaire provided by the Southwest University MRI Center. It required them to report honestly their current health status and medical history, including physical injuries and mental disorders. Of the 30 participants, two failed to return their trait anxiety surveys by email, leaving 28 participants who completed all tests and surveys. Two further participants were excluded from all subsequent analyses. The first had fearful face recognition accuracy more than 2.0 standard deviations below the overall mean in both facial expression recognition tests (Test 1: 0.17 < 0.63 − 2 × 0.16 = 0.31; Test 2: 0.20 < 0.63 − 2 × 0.16 = 0.31). The second had fearful face accuracy more than 2.0 standard deviations above the overall mean in a single test (Test 2: 0.97 > 0.63 + 2 × 0.16 = 0.95). This left 26 participants for the final analyses.
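The exclusion rule above can be expressed as a short check. This is a minimal Python sketch. It assumes that each test's group mean and sample standard deviation (ddof = 1) serve as the reference values. The text reports the bounds (0.63 ± 2 × 0.16) but not how the standard deviation was computed. The helper names are hypothetical.

```python
import numpy as np

def outside_bounds(score, mean, sd, n_sd=2.0):
    """True if `score` lies more than `n_sd` standard deviations from `mean`."""
    lower, upper = mean - n_sd * sd, mean + n_sd * sd
    return score < lower or score > upper

def flag_outliers(scores, n_sd=2.0):
    """Boolean mask of group members more than `n_sd` SDs from the group mean.

    Assumption: the sample SD (ddof=1) is used; the text does not state the estimator.
    """
    scores = np.asarray(scores, dtype=float)
    return np.abs(scores - scores.mean()) > n_sd * scores.std(ddof=1)

def exclusion_mask(test1, test2, n_sd=2.0):
    """Exclude a participant whose fear accuracy is an outlier in either test."""
    return flag_outliers(test1, n_sd) | flag_outliers(test2, n_sd)

# Bounds reported in the text: 0.63 +/- 2 x 0.16, i.e., about [0.31, 0.95].
print(outside_bounds(0.17, 0.63, 0.16))  # first excluded participant, Test 1 -> True
print(outside_bounds(0.97, 0.63, 0.16))  # second excluded participant, Test 2 -> True
```

Applying the rule per test and combining the two masks with a logical OR excludes a participant flagged in either test. This matches the two exclusions described above.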

In addition, 34 adults (age: 22.71 ± 1.38 years; 20 females) were recruited to rate the intensity of fear expressed by the model in each image. These 34 randomly recruited participants took part in no other part of this study. Their only task was the 7-point intensity rating survey.

Behavioral tests and measures

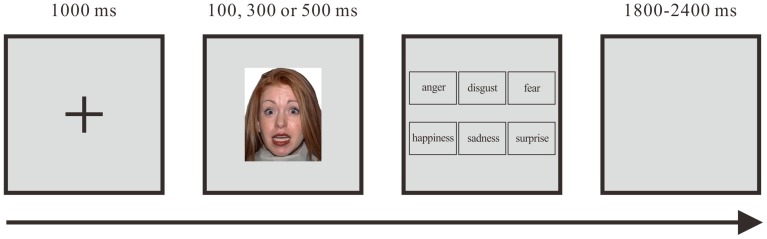

A 17-inch cathode-ray tube (CRT) monitor with a refresh rate of 60 Hz and the E-Prime 2.0 software package were used for stimulus presentation and data collection. The target stimuli were images of six facial expressions (anger, disgust, fear, happiness, sadness, and surprise) posed by 10 models (5 males and 5 females) from the NimStim database [37]. The models came from different ethnic backgrounds to account for effects of the relationship between the perceived race of the model and the race of the participant [36]. A total of 60 images (6 basic expressions × 10 models) were selected from the database and cropped to 192 × 220 pixels. All stimuli were presented on a uniform silver-gray background that remained unchanged throughout the experiment. The protocol was based on Ekman and Friesen's Brief Affect Recognition Test (BART) [38], with minor modifications. In each trial, a black fixation cross was first presented at the center of the screen for 1000 ms. A facial expression image then appeared at the center of the screen for 100, 300, or 500 ms. Six emotion options (anger, disgust, fear, happiness, sadness, and surprise) were then presented, and participants chose the option that best described the facial expression. After each response, an inter-trial interval of randomly chosen duration between 1800 and 2400 ms preceded the next trial (Figure 1). Participants performed 60 trials per display duration (100, 300, or 500 ms), for a total of 180 trials (60 trials × 3 display durations) per test. To assess reliability, each participant completed 2 tests (360 trials in total), one month apart. Before the formal experiment, participants performed 10 practice trials to become familiar with the procedure and task.
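As a sketch of the trial structure of one test, the following Python builds the 180-trial list (10 models × 6 expressions × 3 display durations) with the fixation and inter-trial-interval timings given above. It is not the E-Prime script. Two details are assumptions because the text does not specify them: display durations are intermixed in a fully randomized order, and the ITI is drawn uniformly in whole milliseconds. The field names are hypothetical.

```python
import itertools
import random

EXPRESSIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]
DISPLAY_MS = [100, 300, 500]
N_MODELS = 10                 # 5 male and 5 female NimStim models
FIXATION_MS = 1000
ITI_RANGE_MS = (1800, 2400)

def build_test(seed=None):
    """Return the 180 trials of one test: 60 images x 3 display durations.

    Assumptions (not stated in the text): durations are intermixed, the
    trial order is fully randomized, and the ITI is a uniform integer in ms.
    """
    rng = random.Random(seed)
    trials = [
        {
            "model": model,              # hypothetical index 0..9, not a NimStim ID
            "expression": expression,
            "display_ms": display,
            "fixation_ms": FIXATION_MS,
            "iti_ms": rng.randint(*ITI_RANGE_MS),  # randint includes both bounds
        }
        for model, expression, display in itertools.product(
            range(N_MODELS), EXPRESSIONS, DISPLAY_MS
        )
    ]
    rng.shuffle(trials)
    return trials

trials_test1 = build_test(seed=1)
print(len(trials_test1))  # 180
```

Each image appears exactly once per display duration, which gives the 60 trials per duration described above.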

Figure 1. The procedure for a single trial of the facial expression test.

To measure trait anxiety, we contacted the participants by email and asked them to complete the State-Trait Anxiety Inventory (STAI), a commonly used measure of state and trait anxiety [39]. The trait anxiety subscale contains 20 items, each rated on a 4-point scale ranging from “Almost Never” to “Almost Always”. Higher scores indicate greater anxiety.
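Scoring such a subscale amounts to summing the item ratings after reverse-scoring the items worded in the anxiety-absent direction. The sketch below assumes 20 items rated 1 to 4, for a total range of 20 to 80. The inventory's scoring manual defines which items are reverse-keyed, so the set used here is a hypothetical placeholder, not the actual STAI key.

```python
def score_likert_subscale(responses, reverse_items, n_items=20, n_points=4):
    """Sum a Likert subscale, reverse-scoring the listed items.

    responses     -- ratings in item order, each an int from 1 to n_points
    reverse_items -- 1-based numbers of reverse-keyed items (take these from
                     the inventory's scoring manual; not given in the text)
    """
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if not 1 <= rating <= n_points:
            raise ValueError(f"item {item}: rating {rating} out of range")
        # A reverse-keyed item maps 1 -> n_points, 2 -> n_points - 1, and so on.
        total += (n_points + 1 - rating) if item in reverse_items else rating
    return total

# Hypothetical respondent and a placeholder reverse-key set (NOT the real STAI key).
PLACEHOLDER_REVERSE = {1, 6, 7}
answers = [2] * 20
print(score_likert_subscale(answers, PLACEHOLDER_REVERSE))  # 43
```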

To account for the intensity of fear in each image, we conducted a separate survey. The questionnaire presented the fearful expression images of the 10 models used in our facial expression recognition test (NimStim individual IDs: 01, 07, 11, 17, 19, 27, 34, 38, 42, and 43). Thirty-four randomly recruited Chinese adults rated the intensity of fear expressed by the model in each image on a 7-point scale (“1” = lowest intensity of fear, “7” = highest intensity of fear). After computing the mean intensity score for each image, we sorted the 10 images from lowest to highest intensity. The three lowest-intensity images formed the low-intensity group, and the three highest-intensity images formed the high-intensity group (see Table S1 in File S1).
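The grouping procedure can be sketched in Python as follows: average each image's ratings, sort the images, and take the three lowest and three highest. The ratings in the usage example are synthetic and do not reproduce Table S1. Tie handling is an assumption, since the text does not describe it.

```python
import statistics

# NimStim model IDs listed in the text.
IMAGE_IDS = ["01", "07", "11", "17", "19", "27", "34", "38", "42", "43"]

def split_by_intensity(ratings, n_per_group=3):
    """Split images into low- and high-intensity groups by mean rating.

    ratings -- dict mapping image ID to a list of 1-7 ratings (one per rater)
    Returns (low_group, high_group, mean_by_image). Ties keep input order
    because Python's sort is stable (an assumption about tie handling).
    """
    means = {img: statistics.mean(r) for img, r in ratings.items()}
    ordered = sorted(means, key=means.get)  # lowest to highest mean intensity
    return ordered[:n_per_group], ordered[-n_per_group:], means

# Synthetic ratings from 34 raters whose mean rises along IMAGE_IDS.
synthetic = {img: [1] * (34 - k) + [7] * k for k, img in enumerate(IMAGE_IDS)}
low, high, _ = split_by_intensity(synthetic)
print(low, high)  # ['01', '07', '11'] ['38', '42', '43']
```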

Structural MRI data acquisition and morphometric analysis

During the MRI sessions, two high-resolution structural images of the whole brain were acquired for each participant on a Siemens 3T scanner (Siemens, Erlangen, Germany) with a 12-channel head matrix coil. The images were acquired with a sagittal T1-weighted magnetization-prepared rapid gradient echo (MPRAGE) sequence (TI = 1100 ms, TR/TE = 2530/2.5 ms, FA = 7°, FOV = 256 × 256 mm², voxel size = 1.0 × 1.0 × 1.3 mm³, 128 slices).

All structural image analyses were conducted using the Connectome Computation System (CCS: http://lfcd.psych.ac.cn/ccs.html) pipeline [40]. Each participant's MR images were first denoised using a spatially adaptive non-local means filter [41], [42]. The two denoised scans were then averaged to create a single high signal-to-noise volume [31]. To determine amygdala volume, we performed quantitative morphometric analysis on the averaged T1-weighted images using an automated segmentation and probabilistic region of interest (ROI) labeling technique [43]. The images were corrected for intensity variations due to MR inhomogeneities [44]. As described in the FreeSurfer Wiki (http://surfer.nmr.mgh.harvard.edu/fswiki), a hybrid watershed/surface deformation procedure [45] was used to extract brain tissue. The tissue was then automatically segmented into cerebrospinal fluid (CSF), white matter (WM), and deep gray matter (GM) volumetric structures [44]. To explore the relationship between fearful face recognition performance and other brain areas, we also performed individual cortical surface reconstructions to measure the volumes of cortical regions. Two researchers (Y.M. and L.Y.), blind to the hypotheses, manually inspected the results of the automated brain tissue segmentation. Quality assurance for brain extraction, surface reconstruction, and anatomical image registration followed two criteria. 1) The quality of brain extraction and intensity bias correction was visually assessed and manually corrected if the procedure failed. 2) The brain tissue segmentation was visually checked to ensure good quality. A detailed description of the criteria is available at http://lfcd.psych.ac.cn/ccs/QC.html and in our previous work [40].
After visual inspection, the brainmask volumes of 6 participants were manually edited to achieve better estimates of the pial surfaces. The results of the automated segmentation were verified as accurate and required no correction.
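Averaging the two scans works because independent noise partially cancels. Averaging two acquisitions with equal, independent noise lowers the noise standard deviation by a factor of √2. The following synthetic Python check illustrates this under idealized assumptions: the scans are already co-registered, and the noise is independent and Gaussian, whereas real magnitude MR noise is Rician. It is not the CCS averaging step itself.

```python
import numpy as np

def average_scans(scan_a, scan_b):
    """Voxel-wise mean of two co-registered volumes of identical shape."""
    a = np.asarray(scan_a, dtype=float)
    b = np.asarray(scan_b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("scans must be co-registered to the same grid")
    return (a + b) / 2.0

# Synthetic check: uniform "tissue" of intensity 100 plus independent Gaussian noise.
rng = np.random.default_rng(0)
truth = np.full((32, 32, 32), 100.0)
scan1 = truth + rng.normal(0.0, 10.0, truth.shape)
scan2 = truth + rng.normal(0.0, 10.0, truth.shape)
avg = average_scans(scan1, scan2)

noise_single = (scan1 - truth).std()
noise_avg = (avg - truth).std()
print(noise_avg / noise_single)  # close to 1/sqrt(2), about 0.707
```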

The cortical surface of each hemisphere was parcellated into 34 parcellation elements (parcels) defined by the Desikan-Killiany atlas in FreeSurfer [46], [47]. Subcortical structures were segmented into a total of 17 regions: the brainstem and 8 regions in each hemisphere (amygdala, caudate, hippocampus, accumbens-area, pallidum, putamen, thalamus-proper, and cerebellum-cortex). The volume of each region was then calculated. For each hemisphere, the 41 regions other than the amygdala (34 cortical parcels and 7 subcortical structures) were used in subsequent exploratory analyses.
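Regional volumes of this kind are typically read from FreeSurfer's `aseg.stats` output. The sketch below assumes the standard text layout of that file. It also assumes that the `EstimatedTotalIntraCranialVol` (eTIV) measure serves as ICV, although the text does not state which ICV estimate was used. The sample values are made up. The code also spells out the per-hemisphere count of 41 exploratory regions.

```python
def parse_aseg_stats(text):
    """Return ({structure_name: volume_mm3}, eTIV_mm3 or None) from aseg.stats text.

    Assumes FreeSurfer's usual layout: '#'-prefixed header lines, one of which is
    '# Measure EstimatedTotalIntraCranialVol, eTIV, ..., <value>, mm^3', followed by
    whitespace-separated rows whose 4th and 5th columns are Volume_mm3 and StructName.
    """
    volumes, etiv = {}, None
    for line in text.splitlines():
        if line.startswith("#"):
            if "EstimatedTotalIntraCranialVol" in line:
                etiv = float(line.split(",")[-2])  # value precedes the unit field
            continue
        fields = line.split()
        if len(fields) >= 5:
            volumes[fields[4]] = float(fields[3])
    return volumes, etiv

# Hypothetical excerpt; the numbers are invented for illustration.
SAMPLE = """\
# Measure EstimatedTotalIntraCranialVol, eTIV, Estimated Total Intracranial Volume, 1450000.000000, mm^3
# ColHeaders  Index SegId NVoxels Volume_mm3 StructName normMean normStdDev normMin normMax normRange
  1  18  1600  1600.0  Left-Amygdala   70.0  8.0  40.0  95.0  55.0
  2  54  1650  1650.0  Right-Amygdala  71.0  8.1  41.0  96.0  55.0
"""

# The 8 subcortical structures segmented per hemisphere (FreeSurfer-style names).
SUBCORTICAL = ["Amygdala", "Caudate", "Hippocampus", "Accumbens-area",
               "Pallidum", "Putamen", "Thalamus-Proper", "Cerebellum-Cortex"]

volumes, etiv = parse_aseg_stats(SAMPLE)
print(volumes["Left-Amygdala"], etiv)  # 1600.0 1450000.0
# Per hemisphere: 34 cortical parcels + 7 non-amygdala subcortical structures = 41.
print(34 + len([s for s in SUBCORTICAL if s != "Amygdala"]))  # 41
```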

Mind-Brain Association Analyses

We employed linear regression models to examine the relationships between left and right amygdala volume (independent variables) and accuracy in recognizing fearful faces (dependent variable). Accuracy was defined as the number of correct judgments divided by the total number of judgments. We did not divide amygdala volume by total intracranial volume (ICV). Instead, we controlled for inter-individual variability in brain size by including ICV as a covariate in the regression model, and we calculated the correlation coefficients between the behavioral data and amygdala volume from this model. According to the reliability theory of measurement, ratio measures have reduced test-retest reliability [48]. The approach was also inspired by our recent demonstration of the standardization of functional connectome metrics [49]. We adopted this approach in all following analyses and calculated the statistical power for each correlation coefficient. To illustrate how the two approaches differ in detecting mind-brain associations, we also report results with amygdala volume divided by ICV (Table S2 in File S1). Test-retest reliability is a key concept for mind-brain association studies. To assess it, we computed intraclass correlation coefficients (ICCs) for both amygdala volume and the facial expression test. Further exploratory analyses examined the relationships between fearful face recognition performance and the volumes of the 41 other brain regions (7 subcortical and 34 cortical) in each hemisphere.
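Including ICV as a covariate is equivalent to a partial correlation. Both amygdala volume and accuracy are residualized on ICV, and the residuals are then correlated. A minimal NumPy sketch on synthetic data follows. The function names are hypothetical. The ratio approach appears only for comparison. P-values are left to any t-distribution routine.

```python
import numpy as np

def residualize(y, covariate):
    """Residuals of y after ordinary least squares on an intercept and one covariate."""
    X = np.column_stack([np.ones_like(covariate), covariate])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def icv_adjusted_r(volume, behavior, icv):
    """Partial correlation of volume and behavior controlling for ICV.

    Returns (r, t, df). The t statistic equals the t of the volume coefficient
    in the regression behavior ~ 1 + volume + icv (Frisch-Waugh-Lovell),
    with df = n - 3.
    """
    v, b, c = (np.asarray(a, dtype=float) for a in (volume, behavior, icv))
    r = float(np.corrcoef(residualize(v, c), residualize(b, c))[0, 1])
    df = len(v) - 3
    t = r * np.sqrt(df / (1.0 - r**2))
    return r, t, df

def ratio_r(volume, behavior, icv):
    """The alternative the text compares against: correlate volume/ICV with behavior."""
    return float(np.corrcoef(np.asarray(volume) / np.asarray(icv), behavior)[0, 1])

# Synthetic data (hypothetical, not the study's measurements), n = 26.
rng = np.random.default_rng(1)
n = 26
icv = rng.normal(1500.0, 120.0, n)                          # cm^3
volume = 0.0011 * icv + rng.normal(0.0, 0.15, n)            # cm^3
accuracy = 0.9 - 0.15 * volume + rng.normal(0.0, 0.05, n)
print(icv_adjusted_r(volume, accuracy, icv), ratio_r(volume, accuracy, icv))
```

The covariate version leaves each measured volume unscaled. The ratio version divides two noisy measurements, which is the source of the reduced test-retest reliability mentioned above.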

Results

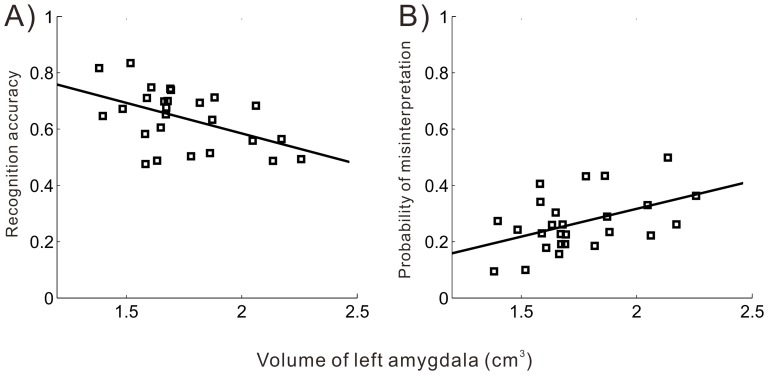

Left amygdala volume was negatively correlated with accuracy in recognizing fearful faces in Test 1 (r = −0.61, p = 0.001, power = 0.94) and Test 2 (r = −0.43, p = 0.03, power = 0.66). It was also negatively correlated with the average of the two tests (r = −0.66, p < 0.001, power = 0.98) (Figure 2 and Table 1). Fisher's z-test showed that the correlation coefficients between recognition accuracy and left amygdala volume did not differ between Test 1 and Test 2 (z = 1.25, p = 0.10). Therefore, data from Tests 1 and 2 were merged, and mean scores were used in all subsequent analyses. To further investigate the specificity of the relationship, we computed each subject's confusion matrix [50]. The matrix contains 36 outcomes (6 presented facial expressions × 6 response options) that describe how the subject judged the presented expressions. The relationships between the presented facial expressions (ground truth) and the participants' judgments (classification results) are shown in Table 2. We found no other consistent correlations (i.e., significant in both Test 1 and Test 2) between amygdala volume and recognition performance for the other facial expressions (Table S3 in File S1). Interestingly, left amygdala volume was positively correlated with the probability of misinterpreting fearful faces as surprised faces. This held in Test 1 (r = 0.57, p = 0.003, power = 0.87), Test 2 (r = 0.45, p = 0.026, power = 0.66), and the average of the two tests (r = 0.63, p = 0.001, power = 0.90).
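Fisher's r-to-z transform underlies both the comparison of correlation coefficients and a common approximation to statistical power. The Python sketch below uses the independent-samples form of the test. The Test 1 and Test 2 coefficients come from the same participants, so the authors may have applied a variant for dependent correlations (e.g., Steiger's test). This sketch is therefore not expected to reproduce the reported z or power values. The power approximation also ignores the extra degree of freedom used by the ICV covariate.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()

def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test of H0: rho1 == rho2 for correlations from independent samples.

    Returns (z, two-sided p).
    """
    z = (math.atanh(r1) - math.atanh(r2)) / math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    p = 2 * (1 - _STD_NORMAL.cdf(abs(z)))
    return z, p

def correlation_power(r, n, alpha=0.05):
    """Approximate two-sided power to detect a population correlation r with n subjects."""
    z_crit = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    delta = abs(math.atanh(r)) * math.sqrt(n - 3)
    return _STD_NORMAL.cdf(delta - z_crit) + _STD_NORMAL.cdf(-delta - z_crit)

# Illustrative inputs echoing the Test 1 / Test 2 coefficients with n = 26.
print(compare_independent_correlations(-0.61, 26, -0.43, 26))
print(correlation_power(-0.61, 26))
```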

Figure 2. The left amygdala volume was correlated with the mean accuracy for recognizing fearful faces and the mean error rates for judging fear as surprise.

A) Scatter plot of recognition accuracy for fearful facial expressions (y-axis) versus the total adjusted left amygdala volume (x-axis, cm³). B) Scatter plot of the probability of misinterpreting fear as surprise (y-axis) versus the total adjusted left amygdala volume (x-axis, cm³). The best-fit lines are based on the average results of the two tests. The correlation coefficients between amygdala volume and performance in fearful face recognition were obtained while controlling for total intracranial volume.

Table 1. Correlation based on linear regression using amygdala and hippocampal volumes as the independent variables and performance in recognizing fearful faces as the dependent variable.

| | Amygdala (left) | Amygdala (right) | Hippocampus (left) | Hippocampus (right) |
|---|---|---|---|---|
| *Test 1* | | | | |
| fear-fear | **−0.606 (0.001)** | −0.270 (0.192) | −0.360 (0.077) | −0.278 (0.178) |
| fear-surprise | **0.571 (0.003)** | −0.051 (0.808) | 0.299 (0.147) | 0.231 (0.267) |
| *Test 2* | | | | |
| fear-fear | **−0.432 (0.031)** | −0.302 (0.142) | −0.194 (0.352) | −0.116 (0.581) |
| fear-surprise | **0.445 (0.026)** | 0.271 (0.191) | 0.217 (0.297) | 0.079 (0.709) |
| *Mean* | | | | |
| fear-fear | **−0.663 (<0.001)** | −0.372 (0.067) | −0.350 (0.086) | −0.246 (0.235) |
| fear-surprise | **0.625 (0.001)** | 0.212 (0.310) | 0.316 (0.124) | 0.184 (0.378) |

The table shows correlation coefficients (p-values). “fear-fear” denotes accuracy in recognizing fearful faces, and “fear-surprise” denotes the probability of judging fearful faces as surprise. Results with p-values < 0.05 are shown in bold. All correlation coefficients were obtained while controlling for total intracranial volume.

Table 2. The confusion matrix of relationships between the presented facial expressions and the participants' judgments.

| Presented / Identified | Anger | Disgust | Fear | Happiness | Sadness | Surprise |
|---|---|---|---|---|---|---|
| Anger | **0.69** | 0.25 | 0.01 | 0.00 | 0.04 | 0.01 |
| Disgust | 0.25 | **0.65** | 0.04 | 0.00 | 0.04 | 0.02 |
| Fear | 0.01 | 0.03 | **0.64** | 0.00 | 0.05 | 0.27 |
| Happiness | 0.00 | 0.00 | 0.00 | **0.99** | 0.00 | 0.00 |
| Sadness | 0.02 | 0.14 | 0.01 | 0.00 | **0.77** | 0.06 |
| Surprise | 0.00 | 0.00 | 0.20 | 0.00 | 0.00 | **0.80** |

Each row gives the probabilities of the participants' judgments for one presented expression, so each row sums to 1. The recognition accuracy for each facial expression (the diagonal) is shown in bold. All trials from Tests 1 and 2 are included.
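A confusion matrix of this kind can be built per subject by counting presented/judged pairs and normalizing each row. The diagonal of that matrix gives the subject's accuracy, and the fear-row, surprise-column cell gives the fear-as-surprise rate. A minimal NumPy sketch with hypothetical trials:

```python
import numpy as np

LABELS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]

def confusion_probabilities(presented, judged, labels=LABELS):
    """Row-normalized confusion matrix: entry [i, j] is the proportion of trials
    showing expression labels[i] that were judged as labels[j].
    Rows for expressions never presented are left as zeros.
    """
    index = {label: i for i, label in enumerate(labels)}
    counts = np.zeros((len(labels), len(labels)))
    for shown, answer in zip(presented, judged):
        counts[index[shown], index[answer]] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # Divide only where a row has trials; other rows stay zero.
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)

# Hypothetical trials for one subject.
presented = ["fear"] * 4 + ["surprise"] * 2
judged = ["fear", "fear", "surprise", "fear", "surprise", "fear"]
P = confusion_probabilities(presented, judged)
fear, surprise = LABELS.index("fear"), LABELS.index("surprise")
print(P[fear, fear], P[fear, surprise])  # accuracy and fear-as-surprise rate: 0.75 0.25
```

Table 2 pools all trials rather than averaging per-subject matrices. Pooling and per-subject averaging give the same values only when every subject saw the same number of trials per expression, which holds for this design.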

Our exploratory analyses showed that, apart from the left amygdala, no cortical or subcortical region had a correlation with fearful face recognition accuracy that was significant in both tests. This held even at a lenient threshold (uncorrected p < 0.05; for details, see Tables S4–S6 in File S1). The distributions of amygdala and other subcortical volumes across the two sessions are reported in Tables S7–S10 in File S1.

We used Fisher's z-test to determine whether the correlation between left amygdala volume and fearful face recognition accuracy differed across the three image display durations (100 ms: r = −0.45, p = 0.026, power = 0.66; 300 ms: r = −0.51, p = 0.009, power = 0.79; 500 ms: r = −0.49, p = 0.014, power = 0.76). The correlation coefficients did not differ significantly between 100 ms and 300 ms (z = 0.390, p = 0.35), between 100 ms and 500 ms (z = 0.256, p = 0.40), or between 300 ms and 500 ms (z = −0.133, p = 0.55). We also tested whether the intensity of fear in the selected images affected the relationship between amygdala volume and fearful face recognition. To do so, we applied Fisher's z-test to the accuracy-volume correlation coefficients of the low-intensity and high-intensity image groups. The correlation in the high-intensity group (r = −0.63, p = 0.001, power = 0.90) was marginally stronger than that in the low-intensity group (r = −0.41, p = 0.04, power = 0.55; z = 1.53, p = 0.06).

We found no correlation between amygdala volume and trait anxiety (left: r = −0.13, p = 0.54, power = 0.09; right: r = −0.007, p = 0.974, power < 0.05). Test-retest reliability was measured by the intraclass correlation coefficient (ICC). It was 0.810 for left amygdala volume, 0.734 for right amygdala volume, and 0.757 for the facial expression test.
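The text does not state which ICC form was computed. As a hedged sketch, the function below implements two common Shrout and Fleiss forms from a two-way ANOVA decomposition. These are ICC(2,1) (absolute agreement) and ICC(3,1) (consistency), with subjects as rows and sessions as columns. The volumes in the usage example are hypothetical.

```python
import numpy as np

def icc(data, form="2,1"):
    """Single-measure ICC from an n-subjects x k-sessions array.

    form "2,1": two-way random effects, absolute agreement.
    form "3,1": two-way mixed effects, consistency.
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * ((x.mean(axis=1) - grand) ** 2).sum()   # between subjects
    ss_cols = n * ((x.mean(axis=0) - grand) ** 2).sum()   # between sessions
    ss_total = ((x - grand) ** 2).sum()
    ss_err = ss_total - ss_rows - ss_cols                 # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    if form == "2,1":
        return (ms_rows - ms_err) / (
            ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
        )
    if form == "3,1":
        return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    raise ValueError(f"unsupported ICC form: {form}")

# Hypothetical left-amygdala volumes (cm^3) from two scans for five subjects.
scans = [[1.52, 1.55], [1.71, 1.69], [1.60, 1.64], [1.83, 1.80], [1.47, 1.50]]
print(icc(scans, "2,1"), icc(scans, "3,1"))
```

As a sanity check, the classic six-subject, four-rater example of Shrout and Fleiss (1979) yields ICC(2,1) ≈ 0.29 and ICC(3,1) ≈ 0.71 with this function. ICC(3,1) ignores a constant shift between sessions, whereas ICC(2,1) penalizes it.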

Discussion

Numerous studies have observed that the amygdala is particularly responsive to fearful facial expressions [8]–[9], [11]–[18], [51]–[54]. Yet few studies have investigated the relationship between amygdala volume and inter-individual differences in performance on tests of fearful face recognition. To our knowledge, this may be the first study to report an association between amygdala volume and fearful face recognition in normal adults. Our results revealed that left amygdala volume was negatively correlated with recognition accuracy for fearful faces. It was positively correlated with the probability of misrecognizing fear as surprise. These findings were based on data from two behavioral tests and two MRI scans per individual. Test-retest reliability was almost perfect for left amygdala volume (ICC = 0.810) and substantial for right amygdala volume (ICC = 0.734) [55]. Among the six facial expressions, exploratory analyses showed that only performance in recognizing fearful expressions correlated with left amygdala volume in both tests. No such relationship was found for the other five expressions. Furthermore, apart from the left amygdala, no subcortical or cortical region correlated with fearful face recognition performance in both tests.

This specific relationship between amygdala volume and fearful face recognition suggests a crucial role for the amygdala in processing fearful faces. Previous studies found that patients with amygdala lesions had impaired recognition of facial expressions, particularly fear [8]. Increased amygdala activation was also observed in both humans [11]–[17] and monkeys [18] when they processed fearful rather than other facial expressions. The present study extends these findings. It shows that inter-individual differences in amygdala volume are associated with performance on tests of fearful face recognition and may predict it.

Among facial expressions, fearful faces may not only be hard to recognize [5]–[6], [56]–[57] but also be the most likely to be misinterpreted as surprised faces [5], [58]–[61]. Consistent with these studies, our behavioral results showed that accuracy was lowest for fearful faces and that participants tended to misinterpret fear as surprise. Confusion between fearful and surprised expressions is universal across cultures [62]. This is possibly because both are “wide-eyed, information gathering” facial expressions. The confusion is also asymmetric. As shown in previous studies [5], [61], [63], recognition accuracy for surprised faces is generally slightly higher than for fearful faces. Surprised faces are rarely misinterpreted as any emotion other than fear. Fearful faces, by contrast, are most often misinterpreted as surprise but are also misinterpreted as anger, disgust, and sadness. Our mind-brain association analysis further showed that individuals with smaller amygdala volumes performed better at recognizing fearful faces and made fewer mistakes overall. Individuals with larger amygdala volumes were more inclined to misinterpret fearful faces as surprise. However, no relationship was found between amygdala volume and recognition accuracy for surprised faces, or between amygdala volume and the probability of misrecognizing surprised faces as fear.

This raises an intriguing question: why does a smaller amygdala predict better performance in fearful face recognition? Our findings may speak to this question, from the relationship between amygdala volume and fearful face recognition to the one-sided confusion of fear with surprise. Studies suggest that the amygdala responds most specifically to fear when subjects attend to the stimuli [64], [65]. They also suggest that it is highly sensitive to fearful faces, showing greater activation for fearful faces than for angry [66], happy [11], and neutral faces [12], [13]. Our results are consistent with both suggestions. It is therefore possible that subjects with smaller amygdala volumes are more sensitive to fear-relevant stimuli and thus achieve higher accuracy in fearful face recognition. Conversely, subjects with larger amygdala volumes may be less sensitive to fear-relevant stimuli and more likely to misrecognize fear as surprise. Previous studies partly support this speculation. They report reduced amygdala volume in patients with spider phobia [21], posttraumatic stress disorder (PTSD) [22], [23], or pediatric anxiety (particularly social phobia) [24]. These patients are thought to have increased sensitivity to specific fear-relevant stimuli [21]–[25]. Lesion studies of patients with amygdala damage (especially patient SM) point the same way. Together, these studies highlight the indispensable role of the amygdala in promoting survival by compelling the organism away from danger [67], [68]. Without the amygdala, the evolutionary value of fear appears to be lost [69].

Our results suggest that neither the intensity of the fearful facial expressions nor trait anxiety significantly influenced the relationship between amygdala volume and fearful face recognition. This is consistent with previous findings that brain regions other than the amygdala respond to increasing intensity of fear, including the left anterior insula, left pulvinar, and right anterior cingulate [14], [70].

Several limitations should be noted when interpreting our findings. First, our study showed an association between amygdala volume and fearful face recognition performance in a small sample. We used test-retest measurements to examine the reproducibility of our findings within this sample. Even so, future work with a larger sample is needed to verify these findings, and it could also examine gender effects on this mind-brain association. Second, because of its small size, the amygdala is relatively difficult to segment accurately compared with other brain structures. However, the test-retest reliability of left amygdala volume was almost perfect (ICC = 0.810), which indicates that the amygdala segmentation in our study was reliable.

Supporting Information

Tables S1–S10.

(DOCX)

Acknowledgments

The authors thank Prof. F. Xavier Castellanos for comments in the early stage of preparing the manuscript.

Funding Statement

This research was supported in part by grants from the 973 Program (No. 2011CB302201) and the National Natural Science Foundation of China (Nos. 61075042, 81171409, 81220108014). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Darwin C (2002) The Expression of The Emotions in Man and Animals. Oxford University Press, New York (Original work published 1872).

- 2.Kling AS, Brothers LA (1992) The Amygdala: Neurobiological Aspects of Emotion, Memory and Mental Dysfunction. Wiley-Liss, New York.

- 3. Adolphs R (2002) Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav Cognit Neurosci Rev 1: 21–62. [DOI] [PubMed] [Google Scholar]

- 4. Anderson AK, Phelps EA (2000) Expression without recognition: contributions of the human amygdala to emotional communication. Psychol Sci 11: 106–111. [DOI] [PubMed] [Google Scholar]

- 5. Broks P, Young AW, Maratos EJ, Coffey PJ, Calder AJ, et al. (1998) Face processing impairments after encephalitis: amygdala damage and recognition of fear. Neuropsychologia 36: 59–70. [DOI] [PubMed] [Google Scholar]

- 6. Calder AJ, Young AW, Rowland D, Perrett DI, Hodges JR, et al. (1996) Facial emotion recognition after bilateral amygdala damage: Differentially severe impairment of fear. Cogn Neuropsychol 13: 699–745. [Google Scholar]

- 7. Sprengelmeyer R, Young AW, Schroeder U, Grossenbacher PG, Federlein J, et al. (1999) Knowing no fear. Proc Roy Soc Lond B Biol Sci 266: 2451–2456. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Adolphs R, Tranel D, Damasio H, Damasio A (1994) Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372: 669–672. [DOI] [PubMed] [Google Scholar]

- 9. Adolphs R, Tranel D, Damasio H, Damasio AR (1995) Fear and the human amygdala. J Neurosci 15: 5879–5891. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Hadj-Bouziane F, Liu N, Bell AH, Gothard KM, Luh WM, et al. (2012) Amygdala lesions disrupt modulation of functional MRI activity evoked by facial expression in the monkey inferior temporal cortex. Proc Nat Acad Sci 109: 3640–3648. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, et al. (1996) A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383: 812–815. [DOI] [PubMed] [Google Scholar]

- 12. Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, et al. (2001) A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion 1: 70. [DOI] [PubMed] [Google Scholar]

- 13. Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, et al. (1996) Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17: 875–887. [DOI] [PubMed] [Google Scholar]

- 14. Morris JS, Friston KJ, Büchel C, Frith CD, Young AW, et al. (1998) A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain 121: 47–57. [DOI] [PubMed] [Google Scholar]

- 15. Van der Zwaag W, Da Costa SE, Zürcher NR, Adams Jr RB, Hadjikhani N (2012) A 7 tesla FMRI study of amygdala responses to fearful faces. Brain Topogr 25: 125–128. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Williams MA, McGlone F, Abbott DF, Mattingley JB (2008) Stimulus-driven and strategic neural responses to fearful and happy facial expressions in humans. Eur J Neurosci 27: 3074–3082. [DOI] [PubMed] [Google Scholar]

- 17. Thomas KM, Drevets WC, Whalen PJ, Eccard CH, Dahl RE, et al. (2001) Amygdala response to facial expressions in children and adults. Biol Psychiatr 49: 309–316. [DOI] [PubMed] [Google Scholar]

- 18. Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RB (2008) Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Nat Acad Sci 105: 5591–5596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19. Kanai R, Rees G (2011) The structural basis of inter-individual differences in human behaviour and cognition. Nat Rev Neurosci 12: 231–242. [DOI] [PubMed] [Google Scholar]

- 20. Honey CJ, Thivierge JP, Sporns O (2010) Can structure predict function in the human brain? NeuroImage 52: 766–776. [DOI] [PubMed] [Google Scholar]

- 21. Fisler MS, Federspiel A, Horn H, Dierks T, Schmitt W, et al. (2013) Spider phobia is associated with decreased left amygdala volume: a cross-sectional study. BMC Psychiatr 13: 70. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Rogers MA, Yamasue H, Abe O, Yamada H, Ohtani T, et al. (2009) Smaller amygdala volume and reduced anterior cingulate gray matter density associated with history of post-traumatic stress disorder. Psychiatr Res 174: 210–216. [DOI] [PubMed] [Google Scholar]

- 23. Woon FL, Hedges DW (2008) Hippocampal and amygdala volumes in children and adults with childhood maltreatment-related posttraumatic stress disorder: A meta-analysis. Hippocampus 18: 729–736. [DOI] [PubMed] [Google Scholar]

- 24. Mueller SC, Aouidad A, Gorodetsky E, Goldman D, Pine DS, et al. (2013) Gray matter volume in adolescent anxiety: an impact of the brain-derived neurotrophic factor val66met polymorphism? J Am Acad Child Adolesc Psychiatry 52: 184–195.

- 25. Park HJ, Kim EJ, Ku JI, Woo JM, Lee SH, et al. (2012) Psychological characteristics of early remitters in patients with panic disorder. Psychiatry Res 197: 237–241.

- 26. Lissek S, Levenson J, Biggs AL, Johnson LL, Ameli R, et al. (2008) Elevated fear conditioning to socially relevant unconditioned stimuli in social anxiety disorder. Am J Psychiatry 165: 124–132.

- 27. Bickart KC, Wright CI, Dautoff RJ, Dickerson BC, Barrett LF (2010) Amygdala volume and social network size in humans. Nat Neurosci 14: 163–164.

- 28. Cremers H, van Tol MJ, Roelofs K, Aleman A, Zitman FG, et al. (2011) Extraversion is linked to volume of the orbitofrontal cortex and amygdala. PLoS ONE 6: e28421.

- 29. Hooker CI, Verosky SC, Miyakawa A, Knight RT, D'Esposito M (2008) The influence of personality on neural mechanisms of observational fear and reward learning. Neuropsychologia 46: 2709–2724.

- 30. Hariri AR, Tessitore A, Mattay VS, Fera F, Weinberger DR (2002) The amygdala response to emotional stimuli: a comparison of faces and scenes. NeuroImage 17: 317–323.

- 31. Milad MR, Quinn BT, Pitman RK, Orr SP, Fischl B, et al. (2005) Thickness of ventromedial prefrontal cortex in humans is correlated with extinction memory. Proc Natl Acad Sci U S A 102: 10706–10711.

- 32. Bickart KC, Hollenbeck MC, Barrett LF, Dickerson BC (2012) Intrinsic amygdala-cortical functional connectivity predicts social network size in humans. J Neurosci 32: 14729–14741.

- 33. Tsuchiya N, Moradi F, Felsen C, Yamazaki M, Adolphs R (2009) Intact rapid detection of fearful faces in the absence of the amygdala. Nat Neurosci 12: 1224–1225.

- 34. Fox E (2002) Processing emotional facial expressions: The role of anxiety and awareness. Cogn Affect Behav Neurosci 2: 52–63.

- 35. Davis M (1992) The role of the amygdala in fear and anxiety. Annu Rev Neurosci 15: 353–375.

- 36. Hart AJ, Whalen PJ, Shin LM, McInerney SC, Fischer H, et al. (2000) Differential response in the human amygdala to racial outgroup vs ingroup face stimuli. Neuroreport 11: 2351–2354.

- 37. Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, et al. (2009) The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Res 168: 242–249.

- 38. Ekman P, Friesen WV (1974) Detecting deception from the body or face. J Pers Soc Psychol 29: 288.

- 39. Spielberger CD, Gorsuch RL, Lushene R, Vagg PR, Jacobs GA (1983) Manual for the state-trait anxiety inventory. Palo Alto, CA: Consulting Psychologists Press.

- 40. Zuo XN, Xu T, Jiang LL, Yang Z, Cao XY, et al. (2013) Toward reliable characterization of functional homogeneity in the human brain: Preprocessing, scan duration, imaging resolution and computational space. NeuroImage 65: 374–386.

- 41. Xing XX, Zhou YL, Adelstein JS, Zuo XN (2011) PDE-based spatial smoothing: a practical demonstration of impacts on MRI brain extraction, tissue segmentation and registration. Magn Reson Imaging 29: 731–738.

- 42. Zuo XN, Xing XX (2011) Effects of Non-Local Diffusion on Structural MRI Preprocessing and Default Network Mapping: Statistical Comparisons with Isotropic/Anisotropic Diffusion. PLoS ONE 6: e26703. doi:10.1371/journal.pone.0026703

- 43. Fischl B, Salat DH, Busa E, Albert M, Dieterich M, et al. (2002) Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron 33: 341–355.

- 44. Dale AM, Fischl B, Sereno MI (1999) Cortical surface-based analysis: I. Segmentation and surface reconstruction. NeuroImage 9: 179–194.

- 45. Ségonne F, Dale AM, Busa E, Glessner M, Salat D, et al. (2004) A hybrid approach to the skull stripping problem in MRI. NeuroImage 22: 1060–1075.

- 46. Desikan RS, Ségonne F, Fischl B, Quinn BT, Dickerson BC, et al. (2006) An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage 31: 968–980.

- 47. Fischl B, van der Kouwe A, Destrieux C, Halgren E, Ségonne F, et al. (2004) Automatically Parcellating the Human Cerebral Cortex. Cereb Cortex 14: 11–22.

- 48. Arndt S, Cohen G, Alliger RJ, Swayze II VW, Andreasen NC (1991) Problems with ratio and proportion measures of imaged cerebral structures. Psychiatry Res: Neuroimaging 40: 79–89.

- 49. Yan CG, Craddock RC, Zuo XN, Zang YF, Milham MP (2013) Standardizing the Intrinsic Brain: Towards Robust Measurement of Inter-Individual Variation in 1000 Functional Connectomes. NeuroImage (in press).

- 50. Kohavi R, Provost F (1998) Glossary of terms. Mach Learn 30: 271–274.

- 51. Rolls ET (1999) The Brain and Emotion. Oxford University Press.

- 52. Seeck M, Michel CM, Mainwaring N, Cosgrove R, Blume H, et al. (1997) Evidence for rapid face recognition from human scalp and intracranial electrodes. NeuroReport 8: 2749–2754.

- 53. Adams RB, Gordon HL, Baird AA, Ambady N, Kleck RE (2003) Effects of gaze on amygdala sensitivity to anger and fear faces. Science 300: 1536.

- 54. Phillips M, Medford N, Young AW, Williams L, Williams SCR, et al. (2001) Time courses of left and right amygdalar responses to fearful facial expressions. Hum Brain Mapp 12: 193–202.

- 55. Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33: 159–174.

- 56. Rapcsak SZ, Galper SR, Comer JF, Reminger SL, Nielsen L, et al. (2000) Fear recognition deficits after focal brain damage: a cautionary note. Neurology 54: 575–581.

- 57. Costafreda SG, Brammer MJ, David AS, Fu CH (2008) Predictors of amygdala activation during the processing of emotional stimuli: a meta-analysis of 385 PET and fMRI studies. Brain Res Rev 58: 57–70.

- 58. Du S, Martinez AM (2011) The resolution of facial expressions of emotion. J Vis 11: 1425–1433.

- 59. Gosselin P, Simard J (1999) Children's knowledge of facial expressions of emotions: Distinguishing fear and surprise. J Genet Psychol 160: 181–193.

- 60. Jack RE, Blais C, Scheepers C, Schyns PG, Caldara R (2009) Cultural confusions show that facial expressions are not universal. Curr Biol 19: 1543–1548.

- 61. Calder AJ, Keane J, Manly T, Sprengelmeyer R, Scott S, et al. (2003) Facial expression recognition across the adult life span. Neuropsychologia 41: 195–202.

- 62. Ekman P (1992) An argument for basic emotions. Cogn Emot 6: 169–200.

- 63. Ekman P, Friesen WV (1986) A new pan-cultural facial expression of emotion. Motiv Emot 10: 159–168.

- 64. Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JD (2003) Neural correlates of the automatic processing of threat facial signals. J Neurosci 23: 5627–5633.

- 65. Adolphs R (2008) Fear, faces, and the human amygdala. Curr Opin Neurobiol 18: 166.

- 66. Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, et al. (2001) A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion 1: 70.

- 67. Amaral DG (2002) The primate amygdala and the neurobiology of social behavior: implications for understanding social anxiety. Biol Psychiatry 51: 11–17.

- 68. Dicks D, Myers RE, Kling A (1969) Uncus and amygdala lesions: effects on social behavior in the free-ranging rhesus monkey. Science 165: 69–71.

- 69. Feinstein JS, Adolphs R, Damasio A, Tranel D (2011) The human amygdala and the induction and experience of fear. Curr Biol 21: 34–38.

- 70. Whalen PJ (1998) Fear, vigilance, and ambiguity: Initial neuroimaging studies of the human amygdala. Curr Dir Psychol Sci 7: 177–188.

Supplementary Materials

Tables S1–S10.

(DOCX)