Abstract

Attention is intrinsic to our perceptual representations of sensory inputs. Best characterized in the visual domain, it is typically depicted as a spotlight moving over a saliency map that topographically encodes strengths of visual features and feedback modulations over the visual scene. By introducing smells to two well-established attentional paradigms, the dot-probe and the visual-search paradigms, we find that a smell reflexively directs attention to the congruent visual image and facilitates visual search of that image without the mediation of visual imagery. Furthermore, this effect is independent of, and can override, top-down bias. We thus propose that smell quality acts as an object feature whose presence enhances the perceptual saliency of that object, thereby guiding the spotlight of visual attention. Our discoveries provide robust empirical evidence for a multimodal saliency map that weighs not only visual but also olfactory inputs.

Keywords: olfaction, saliency map, attention, multi-sensory integration

1. Introduction

The human senses constantly receive vast amounts of environmental input, which is subjected to an attentional mechanism that selects the more important information for further processing. Attentional selection is both a consequence of perceptual saliency and an expression of cognitive control over perception [1]. Best characterized in the visual domain, the bottom-up, stimulus-driven component is modelled with a ‘saliency map’ that originates from neurons tuned to input features (e.g. orientation and colour) and topographically encodes stimulus conspicuity [2,3], whereas the top-down, goal-directed component selects particular spatial locations, features or objects [4] and modulates the corresponding neural activities [5,6].

Traditional discussions of attention have largely been confined to a single modality, in particular vision or audition [7], even though our perceptual experience is multi-sensory in nature. Because the inputs to different senses are correlated in many situations, providing information about the same external object or event, it is reasonable to assume that an effective attentional mechanism operates beyond a single channel of sensory representation. Indeed, recent studies have reported top-down attentional modulation of audio–visual integration [8,9]. The reverse (i.e. a stimulus-driven influence of multi-sensory integration on attention), however, is less clear outside the scope of spatial interactions [10,11]. Experiments on audio–visual or tactile–visual synchrony seem to find a positive result when the stimulus in the task-irrelevant modality (audition or touch) is highly salient (occurring rarely) [12,13], rather than of low saliency (part of a continuous stream) [14,15]. This raises the possibility that the attentional effect could be due to a stimulus-induced transient boost of general arousal instead of spontaneous multi-sensory integration.

Here, we probe whether olfactory–visual integration drives the deployment of visual attention by introducing spatially uninformative smells to two well-established attentional paradigms, the dot-probe [16] and the visual-search [17] paradigms. As one of the oldest senses in the course of evolution, olfaction has long served the function of object perception, overtaken by vision only in a minority of species, including humans [18], in whom olfactory modulation of visual perception and eye movements has also been observed [19–21]. In a series of experiments, we assess whether a continuously presented smell is automatically bound with the corresponding visual object (or the visual features associated with that object), jointly forming a multimodal saliency code and hence adding to its perceptual conspicuity.

2. Material and methods

(a). Participants

A total of 188 healthy non-smokers participated in the study: 16 (eight males, mean age ± s.e.m. = 21.9 ± 0.59 years) in Experiment 1, 32 (16 males, 22.8 ± 0.35 years) in Experiment 2, 16 (eight males, 23.9 ± 0.63 years) in Experiment 3, 64 (29 males, 22.9 ± 0.26 years) in Experiment 4, 30 (14 males, 23.0 ± 0.28 years) in Experiment 5 and 30 (14 males, 21.8 ± 0.33 years) in Experiment 6. All reported having normal or corrected-to-normal vision, a normal sense of smell and no respiratory allergy or upper respiratory infection at the time of testing. They were naive to the purpose of the experiments. All gave informed consent to participate in procedures approved by the Institutional Review Board at the Institute of Psychology, Chinese Academy of Sciences.

(b). Olfactory stimuli

Smells were presented in identical 280 ml glass bottles. Each bottle contained 10 ml of clear liquid and was connected to two Teflon nosepieces via a Y-structure. Participants were instructed to position the nosepieces inside their nostrils and then press a button to initiate each run of the attention tasks, during which they continuously inhaled through the nose and exhaled through the mouth. The olfactory stimuli consisted of phenyl ethyl alcohol (PEA, a rose-like smell, 1% v/v in propylene glycol) and citral (a lemon-like smell, 0.25% v/v in propylene glycol) in Experiments 1 and 3; PEA (0.5% v/v in propylene glycol) and isoamyl acetate (IA, a banana-like smell, 0.02% v/v in propylene glycol) in Experiment 5; and two bottles of purified water in Experiments 2 and 6, which served as control experiments for Experiments 1 and 5, respectively. In Experiment 4, half of the subjects smelled PEA (1% v/v in propylene glycol) and purified water, whereas the other half smelled citral (0.25% v/v in propylene glycol) and purified water. Except for water, the smells were supra-threshold for all subjects.
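As a quick check on what these dilutions mean in practice, the sketch below converts each reported % v/v concentration into the volume of neat odorant per bottle. It assumes (this is not stated explicitly above) that the 10 ml of liquid is the total volume of the diluted solution, odorant plus propylene glycol; the helper name `odorant_volume_ml` is hypothetical.

```python
# Hypothetical helper: volume of neat odorant in a % v/v dilution.
# Assumption: the 10 ml per bottle is the total volume of the diluted solution
# (odorant plus propylene glycol).

BOTTLE_VOLUME_ML = 10.0


def odorant_volume_ml(percent_v_v: float, total_ml: float = BOTTLE_VOLUME_ML) -> float:
    """Return the odorant volume (ml) in `total_ml` of a `percent_v_v` % v/v solution."""
    return total_ml * percent_v_v / 100.0


# Concentrations as reported in the Olfactory stimuli section.
STIMULI = {
    "PEA 1% (Experiments 1, 3 and 4)": 1.0,
    "citral 0.25% (Experiments 1, 3 and 4)": 0.25,
    "PEA 0.5% (Experiment 5)": 0.5,
    "IA 0.02% (Experiment 5)": 0.02,
}

for name, pct in STIMULI.items():
    odorant = odorant_volume_ml(pct)
    solvent = BOTTLE_VOLUME_ML - odorant
    print(f"{name}: {odorant:.3f} ml odorant + {solvent:.3f} ml propylene glycol")
```

Under this assumption, the weakest stimulus (IA at 0.02%) contains only about 2 µl of odorant per bottle, fifty times less than PEA at 1%.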

(c). Procedure

(i). Odour judgement

Participants sampled each olfactory stimulus and rated, on a 100-unit visual analogue scale, its intensity and pleasantness, with a 1-min break between the samplings. In Experiments 2 and 6, the subjects were told that the two bottles, respectively, contained a low concentration of rose smell and lemon (Experiment 2)/banana (Experiment 6) smell, and rated on a 100-unit visual analogue scale each bottle's similarities to the smells of rose and lemon (Experiment 2)/banana (Experiment 6), in addition to intensity and pleasantness.

(ii). Dot-probe task

Experiments 1–4 employed the dot-probe paradigm. As shown in figure 1a, each trial began with fixation on a central cross (0.4° × 0.4°); 1 s later, images (3.1° × 3.1° each) of a rose and a lemon were presented for 250 ms on either side of fixation, one on each side (the centre of each being 6.7° horizontally from fixation), with sides counterbalanced. Following an ISI of 100 ms, a Gabor patch (1.2° × 1.2°) tilted 1.25° clockwise or counterclockwise appeared randomly on the left- or right-hand side of fixation for 100 ms, serving as a test probe in the position that either the rose or the lemon image had previously occupied. The subjects were asked to press one of two buttons to indicate its orientation. A response received within 3 s after the disappearance of the Gabor target started the next trial. Each participant completed four runs of the task (two runs per olfactory condition; 40 trials per run in Experiments 1–3 and 48 trials per run in Experiment 4), with the order of odour presentation balanced within subjects and counterbalanced across subjects. There was a 5 min break between runs to avoid possible interference.
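The trial structure above can be sketched as a balanced, shuffled trial list for one run of Experiments 1–3, where the images carry no information about where the probe will appear. The fully crossed 2 (rose side) × 2 (probe side) × 2 (tilt) design repeated equally within a run is an assumption, as are the names `DotProbeTrial` and `make_balanced_run`; Experiment 4, with its 75%/25% cue validity, would need different cell proportions.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Optional

# Trial timeline (ms) as described for the dot-probe task.
TIMELINE_MS = {"fixation": 1000, "images": 250, "isi": 100, "probe": 100}
RESPONSE_WINDOW_MS = 3000


@dataclass(frozen=True)
class DotProbeTrial:
    rose_side: str   # side of the rose image; the lemon image takes the other side
    probe_side: str  # side on which the Gabor probe appears
    tilt: str        # Gabor tilt: "cw" or "ccw" (1.25 deg)

    @property
    def probe_at_rose(self) -> bool:
        """True if the probe replaces the rose image rather than the lemon image."""
        return self.probe_side == self.rose_side


def make_balanced_run(n_trials: int = 40, seed: Optional[int] = None) -> list[DotProbeTrial]:
    """Shuffle a fully crossed 2 (rose side) x 2 (probe side) x 2 (tilt) design.

    Equal repetition of the 8 cells is an assumption; it makes the images
    non-predictive, with the probe at the rose location on half of the trials.
    """
    cells = [DotProbeTrial(r, p, t)
             for r in ("left", "right")
             for p in ("left", "right")
             for t in ("cw", "ccw")]
    if n_trials % len(cells):
        raise ValueError("n_trials must be a multiple of 8 for full balance")
    trials = cells * (n_trials // len(cells))
    random.Random(seed).shuffle(trials)
    return trials


run = make_balanced_run(40, seed=1)
print(sum(t.probe_at_rose for t in run), "of", len(run), "probes at the rose location")
```

Balancing within each run, rather than drawing every trial independently at random, guarantees that probe position is exactly uninformative in every run, which is what the smell-congruency comparison relies on.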

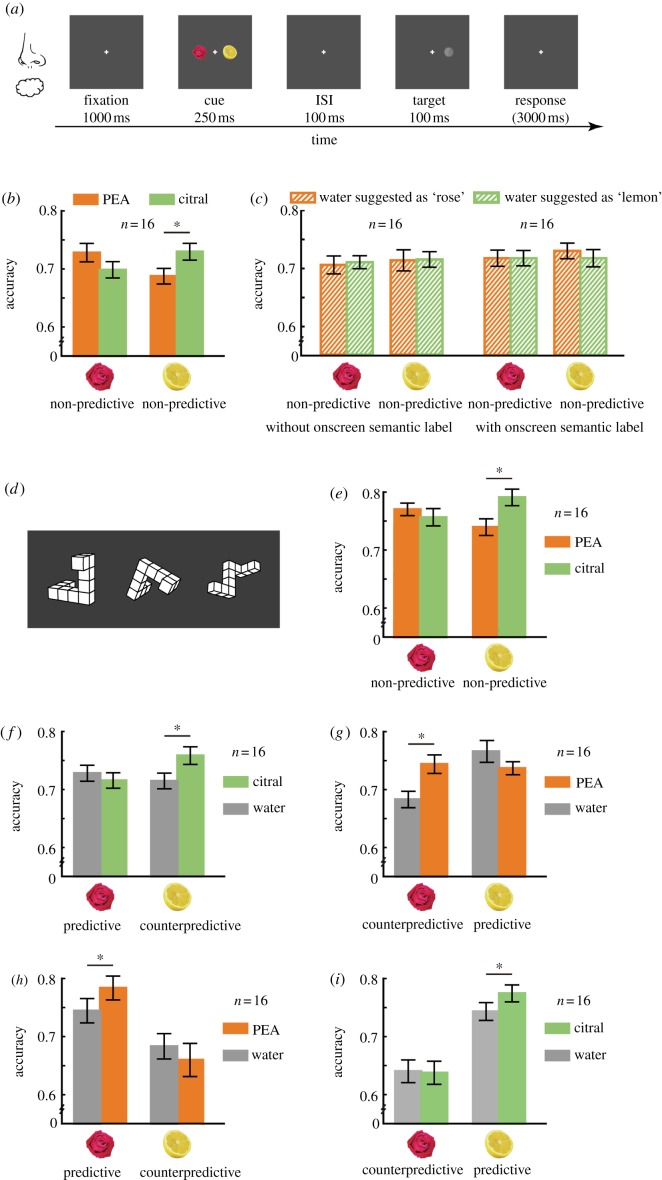

Figure 1.

Olfaction reflexively attracts spatial attention to the congruent visual object. (a) Procedure of the dot-probe task. (b) Overall, the orientation discrimination accuracy was higher when the Gabor targets followed the site of the image that was congruent with the smell they were being exposed to, despite the fact that neither image was of predictive value for the position of the Gabor targets. (c) Suggestion was insufficient to modulate the allocation of attention. The orientation discrimination accuracy remained the same for the Gabor targets following the rose/lemon image under the two conditions where purified water was suggested as containing a rose or lemon smell, irrespective of whether the semantic label of the suggested smell content was constantly presented on screen (right) or not (left). (d) Exemplars of the Shepard–Metzler three-dimensional cube figures used in Experiment 3. (e) Visual imagery load had little impact on the smell-related attentional benefit. A response pattern akin to that depicted in (b) was obtained. (f–i) Olfactory cues modulated the allocation of attention in the presence of top-down biases. When the rose image served as the predictive cue, smelling the lemon-like citral relative to water increased the performance accuracy for the Gabor targets following the counterpredictive lemon image (f), whereas smelling the rose-like PEA relative to water increased the accuracy for those following the predictive rose image (h); similarly, when the lemon image served as the predictive cue, smelling PEA and citral as opposed to water, respectively, boosted the performance accuracies for the Gabor targets following the counterpredictive rose image (g) and the predictive lemon image (i). Error bars represent standard errors of the mean adjusted for individual differences. *p < 0.05.

(iii). Mental-rotation task

Experiment 3 combined a mental-rotation task with the dot-probe task. A Shepard–Metzler three-dimensional cube figure [22] (7.1° × 7.1°, selected in a pseudo-randomized manner from a pool of 24; figure 1d) was centrally presented for 2.5 s at the beginning of each run and immediately after every eight dot-probe trials. The subjects, under continuous exposure to either PEA or citral, had to indicate, as accurately as they could, whether each three-dimensional cube figure except the first depicted an object that could be rotated into congruence with that in the previous figure (a modified 1-back task). The dot-probe trials began immediately after the disappearance of the first three-dimensional cube figure and after a response was made to each subsequent figure except the last. There were a total of four runs, with five mental-rotation trials and 40 dot-probe trials in each run.
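The interleaving described above can be made concrete by generating the event order of one run. This is a sketch of our reading of the description, not the original presentation script; the function name `dual_task_schedule` and the event labels are hypothetical. It confirms that a figure after every eight of 40 dot-probe trials yields six figures, of which the five after the first require a 1-back judgement.

```python
from __future__ import annotations

FIGURE_DURATION_S = 2.5  # each cube figure is shown centrally for 2.5 s


def dual_task_schedule(n_probe_trials: int = 40, block: int = 8) -> list[tuple[str, int]]:
    """Event order for one run of the combined mental-rotation/dot-probe task.

    A cube figure opens the run (memorize only) and another follows every
    `block` dot-probe trials. Each later figure requires a 1-back judgement
    (same object after rotation or not); the run ends on the last figure.
    """
    events: list[tuple[str, int]] = [("figure_memorize", 1)]
    figure_no = 1
    for start in range(0, n_probe_trials, block):
        for trial_no in range(start + 1, min(start + block, n_probe_trials) + 1):
            events.append(("dot_probe", trial_no))
        figure_no += 1
        events.append(("figure_judge_1back", figure_no))
    return events


schedule = dual_task_schedule()
n_judgements = sum(kind == "figure_judge_1back" for kind, _ in schedule)
print(n_judgements, "mental-rotation judgements per run")
```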

(iv). Visual-search task

Experiments 5 and 6 adopted a visual-search task. On each trial, participants fixated a cross (0.5° × 0.5°) at the centre of the screen for 1 s before the search display (figure 2a) appeared. There could be three (one-third of the trials), nine (one-third of the trials) or 18 (one-third of the trials) yellow-coloured items (2.2° × 2.2° each) on display, each at one of 18 evenly spaced locations of equal eccentricity (10.9° from fixation). A banana image served as the target and was present in half of the trials. The distractors were randomly selected from a pool of 40 yellow-coloured images depicting various household items. The subjects were asked to respond (by pressing one of two buttons) as accurately and quickly as possible whether the target was present or not. The next trial started 1 s after a response was made. Each run consisted of 90 such trials in random order, with a short break in the middle. Each subject completed four runs of the visual-search task (two runs per olfactory condition). The order of odour presentation was balanced within subjects and counterbalanced across subjects. There was a 5 min break between runs.
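The display geometry and run composition above can be sketched as follows. The 90 trials are taken to be 3 set sizes × 2 target states × 15 repetitions, which matches the stated thirds and halves but is our assumption; so are the starting angle of the circle, sampling distractors without replacement, and all function names (`location_xy`, `make_display`, `make_search_run`).

```python
from __future__ import annotations

import math
import random
from typing import Optional

N_LOCATIONS = 18         # evenly spaced locations on an imaginary circle
ECCENTRICITY_DEG = 10.9  # distance of every item from fixation
SET_SIZES = (3, 9, 18)
REPS_PER_CELL = 15       # assumption: 3 set sizes x 2 target states x 15 = 90 trials


def location_xy(index: int) -> tuple[float, float]:
    """(x, y) in degrees of visual angle; starting at 0 rad (rightward) is an assumption."""
    angle = 2 * math.pi * index / N_LOCATIONS
    return ECCENTRICITY_DEG * math.cos(angle), ECCENTRICITY_DEG * math.sin(angle)


def make_display(set_size: int, target_present: bool, distractor_pool: list[str],
                 rng: random.Random) -> list[tuple[str, tuple[float, float]]]:
    """Place `set_size` items at distinct, randomly chosen locations."""
    slots = rng.sample(range(N_LOCATIONS), set_size)  # random order, no repeats
    n_distractors = set_size - 1 if target_present else set_size
    items = rng.sample(distractor_pool, n_distractors)  # no repeated distractor
    if target_present:
        items.append("banana")  # its slot is random because `slots` is shuffled
    return [(item, location_xy(slot)) for item, slot in zip(items, slots)]


def make_search_run(distractor_pool: list[str], seed: Optional[int] = None):
    """One run: every set size x target-presence cell repeated, then shuffled."""
    rng = random.Random(seed)
    conditions = [(s, p) for s in SET_SIZES for p in (True, False)] * REPS_PER_CELL
    rng.shuffle(conditions)
    return [make_display(s, p, distractor_pool, rng) for s, p in conditions]
```

Usage: `make_search_run([f"household_item_{i}" for i in range(40)], seed=0)` returns 90 displays, half of which contain the banana.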

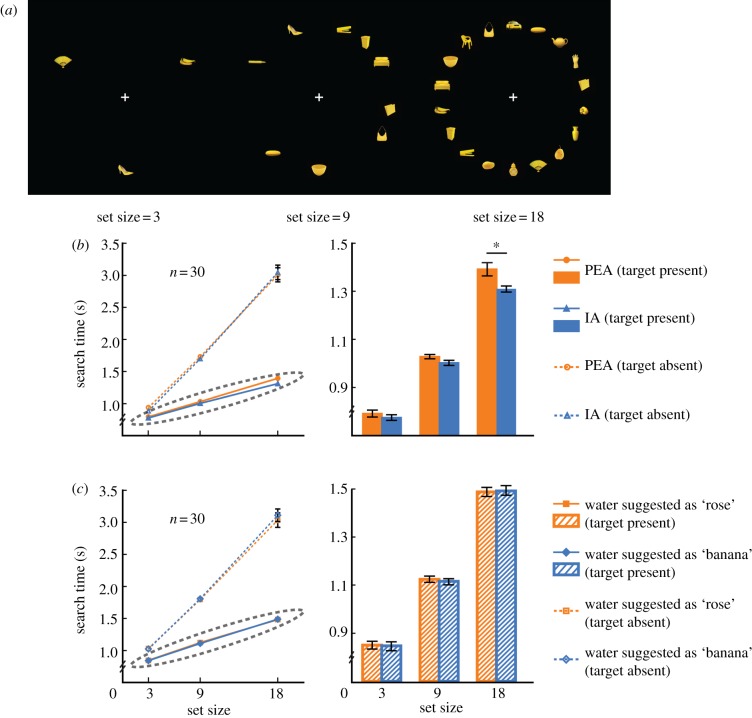

Figure 2.

Olfaction facilitates visual search of the corresponding visual object. (a) Examples of the search displays with 3, 9 and 18 items, respectively. (b) Overall, smelling the banana-like IA when compared with the rose-like PEA accelerated the detection of the target banana image when it was present, but did not significantly affect the correct rejection of its presence when the banana image was absent. (c) Suggestion had no effect on search efficiency. There was no interaction between suggested smell content and set size both when the target banana image was present and absent. In (b,c) the bar graph in the right panel is a blown-up plot of the values highlighted by the dotted ellipse in the left panel. Error bars represent standard errors of the mean adjusted for individual differences. Error bars shorter than the diameter of the markers are not displayed in the line graphs. *p < 0.05.

All participants went through a practice session before starting the actual experiment. Owing to the heavy cognitive load of the combined mental-rotation/dot-probe task, participants in Experiment 3 were allowed a longer practice session, which likely contributed to their overall better performance in the dot-probe task (figure 1e).

(d). Analyses

Response accuracy, calculated as the percentage of correct responses, served as the dependent variable in Experiments 1–4. The data were analysed with repeated measures ANOVA, using olfactory condition and probe position (Gabor patch appearing in the position previously occupied by the rose image versus the lemon image) as the within-subjects factors. To compare the magnitudes of odour-induced attentional bias between Experiments 1 and 3, experiment (Experiment 1 versus 3) was added as a between-subjects factor. In Experiment 4, cue validity (see §3 for details) was also included as a between-subjects factor. Follow-up paired sample t-tests were performed where appropriate.
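For a 2 × 2 within-subjects design such as olfactory condition × probe position, the interaction has one numerator degree of freedom, and its repeated-measures F(1, n − 1) equals the square of a one-sample t computed on each subject's congruency contrast. The sketch below illustrates that equivalence; it is not the authors' analysis code, and the function name and argument layout are hypothetical.

```python
import math
import statistics


def congruency_interaction(pea_rose, pea_lemon, cit_rose, cit_lemon):
    """Odour x probe-position interaction in a 2 x 2 within-subjects design.

    Each argument is a list of per-subject accuracies (same subject order).
    The per-subject contrast is (PEA: rose - lemon) minus (citral: rose - lemon);
    a positive value means better accuracy at the smell-congruent image.
    The repeated-measures interaction F(1, n - 1) equals the squared one-sample t.
    """
    contrast = [(pr - pl) - (cr - cl)
                for pr, pl, cr, cl in zip(pea_rose, pea_lemon, cit_rose, cit_lemon)]
    n = len(contrast)
    t = statistics.mean(contrast) / (statistics.stdev(contrast) / math.sqrt(n))
    f = t ** 2
    partial_eta_sq = f / (f + (n - 1))  # F * df_effect / (F * df_effect + df_error)
    return {"t": t, "F": f, "df": (1, n - 1), "partial_eta_sq": partial_eta_sq}
```

Working with the contrast directly makes the logic of the test explicit: the interaction asks whether accuracy shifts towards whichever image matches the smell currently being inhaled.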

In Experiments 5 and 6, search time served as the main dependent measure. Trials with incorrect responses (less than 1%) were excluded beforehand. We separated the trials with a target from those without and performed two independent repeated measures ANOVAs, using olfactory condition and set size (3, 9 and 18) as the within-subjects factors. In Experiment 5, we also calculated each subject's search slopes (time spent per item to decide whether the target was present or absent) under each combination of olfactory condition and target presence. Paired sample t-tests were then performed to compare the search slopes under IA and PEA exposure, both when the target was present and when it was absent.
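A search slope of this kind is conventionally the least-squares slope of mean correct search time against set size. The sketch below computes such a slope for one subject and condition and then a paired t-test across subjects; it uses only the standard library, assumes per-subject mean search times are already computed, and its function names are hypothetical rather than taken from the authors' pipeline.

```python
import math
import statistics

SET_SIZES = (3, 9, 18)


def search_slope(mean_rts, set_sizes=SET_SIZES):
    """Least-squares slope (ms per item) of mean correct search time vs set size."""
    x_bar = statistics.mean(set_sizes)
    y_bar = statistics.mean(mean_rts)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(set_sizes, mean_rts))
    sxx = sum((x - x_bar) ** 2 for x in set_sizes)
    return sxy / sxx


def paired_t(a, b):
    """Paired-samples t statistic and df for two equal-length lists (same subjects)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

Usage: with one slope per subject under IA and one under PEA (for target-present trials, say), `paired_t(slopes_ia, slopes_pea)` gives a negative t when search is faster per item under IA, the direction reported for Experiment 5.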

Data are available for download from http://zhouw.psych.ac.cn/Smell.Attention.Data.zip.

3. Results

(a). Olfaction reflexively attracts spatial attention to the congruent visual object

In the dot-probe task in Experiment 1, the Gabor target appeared in the position that the rose or the lemon image had previously occupied in 50% of the trials each (figure 1a and see §2). With this in mind, participants indicated its orientation while being continuously exposed to either the rose-like PEA or the lemon-like citral, which were comparable in both perceived intensity (69.6 versus 69.8, t15 = −0.03, p = 0.98) and pleasantness (55.5 versus 56.1, t15 = −0.06, p = 0.95). Using accuracy as the dependent variable, repeated measures ANOVA revealed a significant interaction between olfactory condition and probe position (F1,15 = 12.90, p = 0.003, partial η2 = 0.46; figure 1b), with no significant main effect of either factor (p = 0.71 and 0.81 for olfactory condition and probe position, respectively). In other words, the participants tended to be more accurate at the orientation discrimination task when the Gabor targets followed the site of the image that was congruent with the smell they were being exposed to, even though the image's location had no predictive value for the position of the Gabor targets.

It is possible that the presence of the rose/lemon smell primed the conceptual link between rose/lemon smell and rose/lemon image, which in turn, through top-down attentional modulation, produced the observed smell-related attentional benefit to the corresponding visual object. To examine this alternative, we recruited an independent group of 32 participants and conducted Experiment 2, in which two bottles of purified water were used in place of the actual smells in Experiment 1. The participants were, however, told that one of the bottles contained a low concentration of rose smell and the other contained a low concentration of lemon smell [19]. They were also told which smell they were going to receive before each run, and had no problem recalling it afterwards. Furthermore, for half of the participants, the label of the suggested smell content (i.e. rose smell versus lemon smell) was constantly presented at fixation (1.4° × 1.4°) throughout each run of the dot-probe task, serving as a salient semantic cue. As a result, the purified water was rated as more like the smell of rose (41.3 versus 23.4, t31 = 4.63, p < 0.001), less like the smell of lemon (17.9 versus 34.8, t31 = −4.74, p < 0.001) and more pleasant (51.6 versus 44.6, t31 = 2.31, p = 0.027), but similarly intense (21.6 versus 23.1, t31 = −0.47, p = 0.64), when it was suggested as containing a rose smell compared with a lemon smell. However, despite being susceptible to top-down influences in making olfactory judgements, the participants were not affected by the suggested smell contents in performing the orientation discrimination task. There was no interaction between suggested smell content and Gabor position regardless of whether the semantic label appeared on screen (F1,15 = 0.17, p = 0.68) or not (F1,15 = 0.01, p = 0.92), indicating that semantic bias was insufficient to modulate the distribution of attention in this case (figure 1c).

There remains the possibility that visual imagery could have mediated the smell-related attentional benefit observed in Experiment 1. Instead of being directly integrated with visual computation per se, the rose/lemon smell could have evoked visual imagery of roses/lemons that biased attention to the corresponding image. Although results from Experiment 2 argue against this possibility, one could argue that the visual imagery generated from semantic instruction was not as strong as the sensory-evoked imagery in Experiment 1. Thus, the effect of mental imagery was directly assessed in Experiment 3, which employed a dual-task design (see §2 for details), combining a mental-rotation task (figure 1d) with the dot-probe task used in Experiments 1 and 2. Participants had to actively keep the most recent three-dimensional cube figure in their visual imagery while performing the dot-probe task until the next three-dimensional cube figure appeared. They then indicated whether or not the current and the previous figures portrayed congruent three-dimensional objects. This task heavily taxed visual imagery; participants were on average 68% correct on the mental-rotation task (versus chance = 50%, t15 = 7.55, p < 0.001), with no difference between the olfactory conditions (69% versus 67% for PEA and citral, respectively, t15 = 0.33, p = 0.74). Their performance accuracy on the dot-probe task nevertheless displayed a significant interaction between olfactory condition and probe position (F1,15 = 7.82, p = 0.01, partial η2 = 0.34; figure 1e) comparable with that in Experiment 1 (three-way interaction with experiment as a between-subjects factor, F1,30 = 0.05, p = 0.82), whereas neither factor showed a main effect (p = 0.32 and 0.89 for olfactory condition and probe position, respectively). Hence, visual imagery load had little impact on the smell-related attentional benefit.

We thus inferred that the attentional benefit observed in Experiments 1 and 3 was a consequence of the sensory congruency between olfactory and visual inputs. We speculated that it was the object-based binding of these two channels of information that enhanced the saliency of the bound object and drew the spotlight of attention to it. To further verify the extent to which such bottom-up sensory integration captures attention, we manipulated the validity of the visual cues in Experiment 4, such that for half of the 64 participants the Gabor targets followed the rose image in 75% of the trials (predictive) and the lemon image in 25% of the trials (counterpredictive), whereas for the other half it was the reverse (the Gabor targets followed the rose and lemon images in 25% and 75% of the trials, respectively). Within each half of the participants, 16 performed the task while being exposed to either purified water or PEA, whereas the other 16 smelled either purified water or citral. As a consequence of the validity manipulation, the participants paid more attention to the site of the predictive image when they were exposed to purified water, exhibiting a significant interaction between cue validity and probe position in their performance accuracy (F1,62 = 19.34, p < 0.001, partial η2 = 0.24; figure 1f–i). Nevertheless, this bias was modulated by olfactory inputs: it was overridden by smells congruent with the counterpredictive visual cues (three-way interaction F1,30 = 13.55, p = 0.001, partial η2 = 0.31; figure 1f,g) and enlarged by smells congruent with the predictive visual cues (three-way interaction F1,30 = 4.85, p = 0.035, partial η2 = 0.14; figure 1h,i).
Specifically, when the rose image served as the predictive cue, smelling the lemon-like citral as opposed to water increased the performance accuracy for the Gabor targets following the counterpredictive lemon image (0.77 versus 0.72, t15 = 2.86, p = 0.012; figure 1f), whereas smelling the rose-like PEA relative to water increased the accuracy for those following the predictive rose image (0.78 versus 0.74, t15 = 2.69, p = 0.017, figure 1h). Similarly, when the lemon image served as the predictive cue, smelling PEA and citral as opposed to water, respectively, increased the performance accuracies for the Gabor targets following the counterpredictive rose image (0.74 versus 0.68, t15 = 3.23, p = 0.006, figure 1g) and the predictive lemon image (0.78 versus 0.74, t15 = 2.21, p = 0.043, figure 1i). These findings provide strong evidence that the object-based binding between olfactory and visual inputs is an independent involuntary process, reflexively modulating the allocation of attention even in the presence of opposite top-down biases.
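Assuming the reported effect sizes are partial η² computed in the standard way, each can be recovered from its F statistic and degrees of freedom as η²p = F·df_effect / (F·df_effect + df_error). The sketch below applies this identity to the values reported for Experiments 1, 3 and 4 as a consistency check; the helper name is hypothetical.

```python
def partial_eta_squared(f: float, df_effect: float, df_error: float) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


# (label, F, df_effect, df_error, reported partial eta squared)
REPORTED = [
    ("Exp. 1 odour x probe", 12.90, 1, 15, 0.46),
    ("Exp. 3 odour x probe", 7.82, 1, 15, 0.34),
    ("Exp. 4 validity x probe", 19.34, 1, 62, 0.24),
    ("Exp. 4 counterpredictive three-way", 13.55, 1, 30, 0.31),
    ("Exp. 4 predictive three-way", 4.85, 1, 30, 0.14),
]

for label, f, d1, d2, eta in REPORTED:
    print(f"{label}: {partial_eta_squared(f, d1, d2):.2f} (reported {eta:.2f})")
```

All five recomputed values round to the reported ones, so the effect sizes are internally consistent with the F statistics and their degrees of freedom.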

Based on the prevailing models of bottom-up attention as a saliency map that topographically weighs feature strengths and contextual modulations [2,3], we reasoned that smell quality could act as an object feature and contribute to the perceptual saliency of the corresponding visual object. We next tested this hypothesis in a visual-search task, which is sensitive to features and feature conjunctions [17,23] and better mimics everyday situations where attention is implemented.

(b). Olfaction facilitates visual search of the corresponding visual object

In Experiment 5, participants looked for the presence of a banana image (target, present in 50% of the trials) among an array (set size: 3, 9 or 18) of common household objects of similar colour, size and equal eccentricity while being continuously exposed to either the banana-like IA or the rose-like PEA, which were comparable in perceived intensity (69.3 versus 70.0, t29 = −0.23, p = 0.82) and pleasantness (72.6 versus 70.2, t29 = 0.68, p = 0.50) (figure 2a and see §2 for details). The search was a serial process: whereas accuracy was high and stable (greater than or equal to 99%) across all combinations of target presence, olfactory condition and set size, search time increased linearly with the number of items on display both in the presence and absence of the target (F1,29 > 200, p < 0.0001). Critically, olfactory condition interacted with set size when the target was present (F1.47,42.68 = 3.62, p = 0.048, partial η2 = 0.11) but not when it was absent (F1.52,44.04 = 2.43, p = 0.11) (figure 2b). An examination of the search slopes showed that smelling IA compared with PEA accelerated the detection of the banana image by about 5 ms per item (slope under IA, target present = 35.5 ms per item; slope under PEA, target present = 40.2 ms per item; t29 = −2.17, p = 0.038) but did not significantly affect the determination of its absence (t29 = 1.76, p = 0.089). If anything, the latter tended to be hindered by exposure to IA (slope under IA, target absent = 144.7 ms per item versus slope under PEA, target absent = 138.5 ms per item). Because a smell-induced boost of general arousal should have sped up search both when the target was present and when it was absent, the expedited target detection was not caused by a general arousal specifically linked with IA.
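The fractional degrees of freedom reported for set size, such as F1.47,42.68, look consistent with a Greenhouse–Geisser-type sphericity correction with ε of roughly 0.74 for k = 3 set sizes and n = 30 subjects, although the text does not name the correction used. As an illustration only, the sketch below computes the Greenhouse–Geisser ε from the covariance matrix of the repeated measures (via its double-centred form) and the corresponding corrected degrees of freedom; all function names are hypothetical.

```python
def covariance_matrix(data):
    """Sample covariance (n - 1 denominator); data[s][j] = subject s, level j."""
    n, k = len(data), len(data[0])
    means = [sum(row[j] for row in data) / n for j in range(k)]
    return [[sum((row[i] - means[i]) * (row[j] - means[j]) for row in data) / (n - 1)
             for j in range(k)] for i in range(k)]


def gg_epsilon(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of repeated measures."""
    k = len(cov)
    row_means = [sum(r) / k for r in cov]  # equal to column means: cov is symmetric
    grand = sum(row_means) / k
    # Double-centre: S*_ij = S_ij - mean_i - mean_j + grand mean.
    centred = [[cov[i][j] - row_means[i] - row_means[j] + grand for j in range(k)]
               for i in range(k)]
    trace = sum(centred[i][i] for i in range(k))
    sum_sq = sum(v * v for row in centred for v in row)  # trace(S*^2), S* symmetric
    return trace ** 2 / ((k - 1) * sum_sq)


def gg_corrected_df(epsilon, k, n):
    """Corrected (effect, error) df for a one-way repeated-measures factor."""
    return epsilon * (k - 1), epsilon * (k - 1) * (n - 1)
```

ε ranges from 1/(k − 1) (maximal violation of sphericity) to 1 (sphericity holds). For k = 3 and n = 30, `gg_corrected_df(0.735, 3, 30)` gives about (1.47, 42.6), close to the reported F1.47,42.68.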

To ensure that the above results were not due to semantic-related top-down bias, we tested a new group of participants with the same task in Experiment 6 but replaced IA and PEA with two bottles of purified water, as in Experiment 2. The participants were nevertheless told that one bottle contained a low concentration of banana smell and the other a low concentration of rose smell. They were also told which smell they were going to receive before each run. This manipulation again successfully altered their olfactory perceptions in the direction of the suggested smell content. The participants rated the purified water as more like the smell of banana (42.6 versus 23.8, t29 = 4.05, p < 0.001) and less like the smell of rose (22.5 versus 44.7, t29 = −5.07, p < 0.001), but similarly intense (27.4 versus 32.0, t29 = −1.04, p = 0.31) and pleasant (55.4 versus 57.7, t29 = −0.67, p = 0.51), when it was described as containing a banana smell compared with a rose smell. Despite this, their search efficiency was unaffected. There was no interaction between suggested smell content and set size both when the target banana image was present (F2,58 = 0.25, p = 0.78) and when it was absent (F1.25,36.14 = 1.50, p = 0.23; figure 2c). Meanwhile, their accuracy remained high and stable across all conditions (greater than or equal to 99%).

We thus concluded that the presence of the banana smell added to the saliency of the target banana image in the perceptual saliency map based on their sensory congruency. Put differently, both visual and olfactory inputs contribute to the formation of a multimodal saliency map that guides attention.
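The proposal that smell quality acts as an object feature in a saliency map can be illustrated with a deliberately simplified toy model. Here each item's saliency is the sum of a visual term, a top-down bias term and a weighted olfactory-congruency term, and attention goes to the most salient item. The additive form, the weight `w_olf`, the numerical values and the function names are all hypothetical choices for illustration, not a model fitted by or proposed in this study.

```python
from __future__ import annotations


def combined_saliency(visual: list[float], top_down: list[float],
                      olfactory_match: list[float], w_olf: float = 0.2) -> list[float]:
    """Toy additive saliency per item: visual + top-down bias + weighted smell congruency.

    The additive form and the weight `w_olf` are illustrative assumptions only.
    """
    return [v + t + w_olf * m for v, t, m in zip(visual, top_down, olfactory_match)]


def attended_item(saliency: list[float]) -> int:
    """Winner-take-all: index of the most salient item (first one on ties)."""
    return max(range(len(saliency)), key=saliency.__getitem__)


items = ["rose", "lemon"]
visual = [0.5, 0.5]     # equally conspicuous images
top_down = [0.15, 0.0]  # hypothetical bias towards a predictive rose cue
smell = "lemon"         # continuously presented, task-irrelevant smell
olfactory_match = [1.0 if item == smell else 0.0 for item in items]

winner = attended_item(combined_saliency(visual, top_down, olfactory_match))
print("attention goes to:", items[winner])
```

With these made-up numbers the congruent smell outweighs the top-down bias, mirroring the direction of the Experiment 4 result. The actual effects were graded shifts in accuracy rather than the all-or-none selection of a winner-take-all rule.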

4. Discussion

In a series of six experiments, we have shown that a smell, typically experienced as vague and fuzzy [24], reflexively boosts the saliency of its corresponding visual object and attracts attention. This effect is independent of semantic priming (Experiments 2 and 6), not mediated by visual imagery (Experiment 3) and is separable from and robust enough to override top-down cognitive biases (Experiment 4). As all smells used were task-irrelevant (participants were explicitly told to ignore them), continuously (as opposed to transiently) presented (hence of low conspicuity themselves) and spatially uninformative (see §2 for details), we reason it was the spontaneous binding between congruent olfactory and visual information [25] that formed a multimodal saliency map where the visual object with added olfactory presence gained increased perceptual saliency.

Smells constantly swirl around us and naturally convey object identities. They are the olfactory transcriptions of the intrinsic chemical compositions of objects [26]. In this regard, smell, similar to visual form, is an inherent attribute contributing to the mental construction of an object [19,27]. Whereas saliency has commonly been associated with basic visual features [2,3] and visual context [28], our results indicate that smells are also automatically factored in. Such a mechanism may have facilitated the localization of food as well as predators over the long course of evolution, particularly when inputs from the visual channel are crowded.

Recent neurophysiological studies have linked the computation of a visual object's saliency with V4 [29] and inferior temporal cortex [30]. These regions incorporate both top-down (from the parietal and frontal lobes) and bottom-up (from earlier visual cortices) signals and are involved in selecting the objects to which we attend and foveate. It is not yet clear how olfaction is integrated with this process. We speculate that it could be mediated by perirhinal cortex, which lies close to primary olfactory regions on the ventral–medial aspect of the temporal lobes. Perirhinal cortex is a polymodal structure interconnected with a broad range of subcortical and cortical areas, including frontal structures that have recently been implicated in automatic olfactory attention [31] as well as the inferior temporal cortex [32]. It supports representations of feature conjunctions [33], binds the various sensory attributes of an object into a reified representation [34] and is critically involved in processing meaningful aspects of multimodal object representations [35]. The unified representation of an object defined by both visual and olfactory attributes in this region may thus feed into a multimodal attentional saliency map.

Finally, our findings echo a well-studied phenomenon named ‘attentional spread’, viz. attention to one sensory feature/modality can spread to encompass associated signals from another task-irrelevant feature/modality, as if the latter is pulled into the attentional spotlight [36,37]. This phenomenon has been taken to suggest that the units of implicit attentional selection are object based [36]—whole objects are selected even when only one attribute is relevant [38]. Complementing this notion, our results demonstrate that cross-modal feature binding, in turn, accents the bound object and attracts attention in a multimodal saliency map.

Funding statement

This work was supported by the National Basic Research Programme of China (2011CB711000), the National Natural Science Foundation of China (31070906), the Knowledge Innovation Programme (KSCX2-EW-BR-4 and KSCX2-YW-R-250) and the Strategic Priority Research Programme (XDB02010003) of the Chinese Academy of Sciences, and the Key Laboratory of Mental Health, Institute of Psychology, Chinese Academy of Sciences. The authors declare no competing financial interests.

References

- 1. Driver J. 2001. A selective review of selective attention research from the past century. Br. J. Psychol. 92, 53–78 (doi:10.1348/000712601162103)

- 2. Itti L, Koch C. 2001. Computational modelling of visual attention. Nat. Rev. Neurosci. 2, 194–203 (doi:10.1038/35058500)

- 3. Li Z. 2002. A saliency map in primary visual cortex. Trends Cogn. Sci. 6, 9–16 (doi:10.1016/S1364-6613(00)01817-9)

- 4. Yantis S, Serences JT. 2003. Cortical mechanisms of space-based and object-based attentional control. Curr. Opin. Neurobiol. 13, 187–193 (doi:10.1016/S0959-4388(03)00033-3)

- 5. Reynolds JH, Desimone R. 1999. The role of neural mechanisms of attention in solving the binding problem. Neuron 24, 19–29 (doi:10.1016/S0896-6273(00)80819-3)

- 6. Zhang W, Luck SJ. 2009. Feature-based attention modulates feedforward visual processing. Nat. Neurosci. 12, 24–25 (doi:10.1038/nn.2223)

- 7. Kayser C, Petkov CI, Lippert M, Logothetis NK. 2005. Mechanisms for allocating auditory attention: an auditory saliency map. Curr. Biol. 15, 1943–1947 (doi:10.1016/j.cub.2005.09.040)

- 8. Alsius A, Navarra J, Campbell R, Soto-Faraco S. 2005. Audiovisual integration of speech falters under high attention demands. Curr. Biol. 15, 839–843 (doi:10.1016/j.cub.2005.03.046)

- 9. Talsma D, Doty TJ, Woldorff MG. 2007. Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb. Cortex 17, 679–690 (doi:10.1093/cercor/bhk016)

- 10. Driver J, Spence C. 1998. Attention and the crossmodal construction of space. Trends Cogn. Sci. 2, 254–262 (doi:10.1016/S1364-6613(98)01188-7)

- 11. Macaluso E, Driver J. 2005. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 28, 264–271 (doi:10.1016/j.tins.2005.03.008)

- 12. Van der Burg E, Olivers CN, Bronkhorst AW, Theeuwes J. 2008. Pip and pop: nonspatial auditory signals improve spatial visual search. J. Exp. Psychol. Hum. Percept. Perform. 34, 1053–1065 (doi:10.1037/0096-1523.34.5.1053)

- 13. Van der Burg E, Olivers CN, Bronkhorst AW, Theeuwes J. 2009. Poke and pop: tactile–visual synchrony increases visual saliency. Neurosci. Lett. 450, 60–64 (doi:10.1016/j.neulet.2008.11.002)

- 14. Vroomen J, de Gelder B. 2000. Sound enhances visual perception: cross-modal effects of auditory organization on vision. J. Exp. Psychol. Hum. Percept. Perform. 26, 1583–1590 (doi:10.1037/0096-1523.26.5.1583)

- 15. Fujisaki W, Koene A, Arnold D, Johnston A, Nishida S. 2006. Visual search for a target changing in synchrony with an auditory signal. Proc. R. Soc. B 273, 865–874 (doi:10.1098/rspb.2005.3327)

- 16. MacLeod C, Mathews A, Tata P. 1986. Attentional bias in emotional disorders. J. Abnorm. Psychol. 95, 15–20 (doi:10.1037/0021-843X.95.1.15)

- 17. Treisman AM, Gelade G. 1980. A feature-integration theory of attention. Cogn. Psychol. 12, 97–136 (doi:10.1016/0010-0285(80)90005-5)

- 18. Wyatt TD. 2003. Pheromones and animal behavior: communication by smell and taste. London, UK: Cambridge University Press

- 19. Zhou W, Jiang Y, He S, Chen D. 2010. Olfaction modulates visual perception in binocular rivalry. Curr. Biol. 20, 1356–1358 (doi:10.1016/j.cub.2010.05.059)

- 20.Seo HS, Roidl E, Muller F, Negoias S. 2010. Odors enhance visual attention to congruent objects. Appetite 54, 544–549 (doi:10.1016/j.appet.2010.02.011) [DOI] [PubMed] [Google Scholar]

- 21.Seigneuric A, Durand K, Jiang T, Baudouin JY, Schaal B. 2010. The nose tells it to the eyes: crossmodal associations between olfaction and vision. Perception 39, 1541–1554 (doi:10.1068/p6740) [DOI] [PubMed] [Google Scholar]

- 22.Shepard RN, Metzler J. 1971. Mental rotation of three-dimensional objects. Science 171, 701–703 (doi:10.1126/science.171.3972.701) [DOI] [PubMed] [Google Scholar]

- 23.Wolfe JM, Horowitz TS. 2004. What attributes guide the deployment of visual attention and how do they do it? Nat. Rev. Neurosci. 5, 495–501 (doi:10.1038/nrn1411) [DOI] [PubMed] [Google Scholar]

- 24.Cain WS. 1979. To know with the nose: keys to odor identification. Science 203, 467–470 (doi:10.1126/science.760202) [DOI] [PubMed] [Google Scholar]

- 25.Zhou W, Zhang X, Chen J, Wang L, Chen D. 2012. Nostril-specific olfactory modulation of visual perception in binocular rivalry. J. Neurosci. 32, 17 225–17 229 (doi:10.1523/JNEUROSCI.2649-12.2012) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Buck LB. 2000. The molecular architecture of odor and pheromone sensing in mammals. Cell 100, 611–618 (doi:10.1016/S0092-8674(00)80698-4) [DOI] [PubMed] [Google Scholar]

- 27.Gottfried JA, Dolan RJ. 2003. The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron 39, 375–386 (doi:10.1016/S0896-6273(03)00392-1) [DOI] [PubMed] [Google Scholar]

- 28.Chun MM. 2000. Contextual cueing of visual attention. Trends Cogn. Sci. 4, 170–178 (doi:10.1016/S1364-6613(00)01476-5) [DOI] [PubMed] [Google Scholar]

- 29.Mazer JA, Gallant JL. 2003. Goal-related activity in V4 during free viewing visual search: evidence for a ventral stream visual salience map. Neuron 40, 1241–1250 (doi:10.1016/S0896-6273(03)00764-5) [DOI] [PubMed] [Google Scholar]

- 30.Chelazzi L, Miller EK, Duncan J, Desimone R. 1993. A neural basis for visual search in inferior temporal cortex. Nature 363, 345–347 (doi:10.1038/363345a0) [DOI] [PubMed] [Google Scholar]

- 31.Grabenhorst F, Rolls ET, Margot C. 2011. A hedonically complex odor mixture produces an attentional capture effect in the brain. Neuroimage 55, 832–843 (doi:10.1016/j.neuroimage.2010.12.023) [DOI] [PubMed] [Google Scholar]

- 32.Suzuki WA. 1996. The anatomy, physiology and functions of the perirhinal cortex. Curr. Opin. Neurobiol. 6, 179–186 (doi:10.1016/S0959-4388(96)80071-7) [DOI] [PubMed] [Google Scholar]

- 33.Buckley MJ, Gaffan D. 2006. Perirhinal cortical contributions to object perception. Trends Cogn. Sci. 10, 100–107 (doi:10.1016/j.tics.2006.01.008) [DOI] [PubMed] [Google Scholar]

- 34.Murray EA, Richmond BJ. 2001. Role of perirhinal cortex in object perception, memory, and associations. Curr. Opin. Neurobiol. 11, 188–193 (doi:10.1016/S0959-4388(00)00195-1) [DOI] [PubMed] [Google Scholar]

- 35.Taylor KI, Moss HE, Stamatakis EA, Tyler LK. 2006. Binding crossmodal object features in perirhinal cortex. Proc. Natl Acad. Sci. USA 103, 8239–8244 (doi:10.1073/pnas.0509704103) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Melcher D, Papathomas TV, Vidnyanszky Z. 2005. Implicit attentional selection of bound visual features. Neuron 46, 723–729 (doi:10.1016/j.neuron.2005.04.023) [DOI] [PubMed] [Google Scholar]

- 37.Donohue SE, Roberts KC, Grent-'t-Jong T, Woldorff MG. 2011. The cross-modal spread of attention reveals differential constraints for the temporal and spatial linking of visual and auditory stimulus events. J. Neurosci. 31, 7982–7990 (doi:10.1523/JNEUROSCI.5298-10.2011) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.O'Craven KM, Downing PE, Kanwisher N. 1999. FMRI evidence for objects as the units of attentional selection. Nature 401, 584–587 (doi:10.1038/44134) [DOI] [PubMed] [Google Scholar]